Abstract

The internet has changed the way in which we gather and interpret information. While books were once the exclusive bearers of data, knowledge is now only a keystroke away. The internet has also facilitated the synthesis of new knowledge. Specifically, it has become a tool through which medical research is conducted. A review of the literature reveals that in the past year, over one hundred medical publications have been based on web-based survey data alone.

Due to emerging internet technologies, web-based surveys can now be launched with little computer knowledge. They may also be self-administered, eliminating personnel requirements. Ultimately, an investigator may build, implement, and analyze survey results with speed and efficiency, obviating the need for mass mailings and data processing. All of these qualities have rendered telephone and mail-based surveys virtually obsolete.

Despite these capabilities, web-based survey techniques are not without their limitations, namely recall and response biases. When used properly, however, web-based surveys can greatly simplify the research process. This article discusses the implications of web-based surveys and provides guidelines for their effective design and distribution.

INTRODUCTION

From Google to eMedicine, the internet has changed the way in which medical information is gathered and interpreted. While books were once the exclusive bearers of data, knowledge is now only a keystroke away. The internet has also facilitated the synthesis of new knowledge. Specifically, it has become a tool through which medical research is conducted. Web-based survey tools, as a prime example, facilitate efficient communication between investigators and participants. Accordingly, survey reach and sample size are augmented, and cost is markedly reduced.1

Evidence also suggests that subjects on the web may be more likely to take a survey to completion; this indicates that participants find web-based surveys more appealing.2 Increased appeal is likely due to the ease of submission: clicked responses and a “Submit” button are easier than written responses and postal mail. Accordingly, web-based survey response rates have been shown to be comparable to those of traditional survey methods.3,4,5

As internet technology has emerged, web-based surveys can now be launched with little computer knowledge. The surveys may also be self-administered, eliminating personnel requirements. Ultimately, an investigator may build, implement, and analyze survey results with speed and efficiency, obviating the need for mass mailings and data processing. All of these qualities have rendered telephone and mail-based surveys increasingly outdated.6,7

Web-based survey techniques are not without their limitations.8 The fundamentals of research design should not be lost in a rush to adopt this novel approach. Fastidious adherence to traditional survey principles has particular importance in online surveys, where verbal communication and guidance are noticeably absent.9 Despite these limitations, web-based surveys, when used appropriately, can greatly simplify the research process. This article discusses the implications of web-based surveys and provides guidelines for their effective design and distribution.

DESIGNING A WEB-BASED SURVEY

Web-Based Survey Providers

While there are several online applications dedicated to customized survey solutions, Zoomerang and SurveyMonkey are the leading providers. Their services are nearly identical, each providing a step-by-step approach to customized survey design. A limited account is available for free through either service. Premium plans range from $20 to $350, depending on account requirements. Expert assistance is also available (for an additional fee) in cases of complex survey development.

Creating a Survey

Like any computer interface, web-based surveys must be easy both to navigate and to understand.10 This principle of web design is referred to as usability. Online survey applications solve much of the usability problem by presenting survey questions and responses in a standardized format. Online survey providers allow each question to be customized by type; for example, responses may be displayed as multiple choice, yes/no, or a rating scale. The caveat is that large variations in format may confuse respondents. Graphics, sound, video, colors, and fonts may also be added to improve communication and create a uniquely branded appearance.

Based on established principles, the general language of a survey should mimic a verbal dialogue.11 Schwarz contends that survey respondents conduct themselves as if involved in conversation with the investigator.12 This “dialogue” should be clear, concise, honest, and not repetitive.13 The absence of verbal cues imparts further importance to survey design, with appearance and syntax carrying more weight.14

Deployment and Accessibility

There are two major ways in which to deploy an online survey: via e-mail or on the web. Both of these means may be used concurrently.

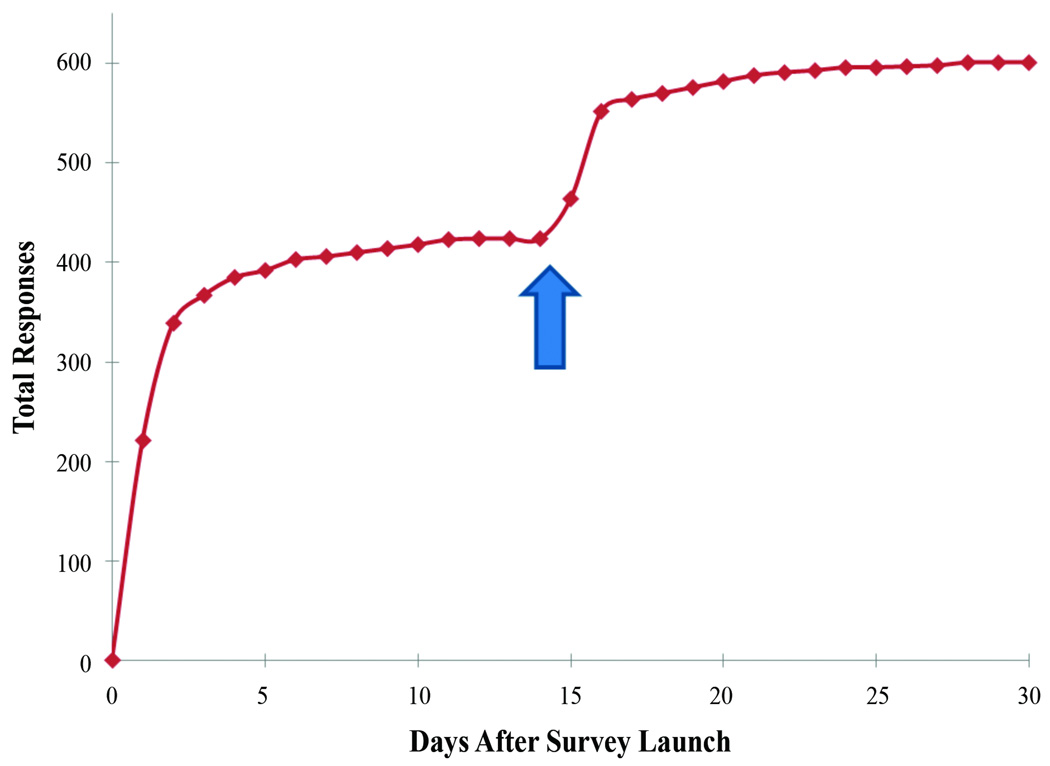

Web-based survey applications enable investigators to reach subjects via mass e-mail. A contact importer allows an investigator to upload subjects’ e-mail addresses to a private account. A survey invitation may then be sent automatically to all subjects simultaneously. Reminder e-mails may also be sent, a practice that has been shown to increase response rates (figure 1).1,25

Figure 1.

Total number of responses to the web-based BreastPE.com survey over time.26 Arrow indicates timing of “reminder” email which was sent to non-respondents fourteen days after the initial survey was launched.
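The reminder step described above can be illustrated with a minimal Python sketch. It is not the mechanism used by any particular survey provider; the function name `due_for_reminder`, the example addresses, and the launch date are hypothetical, and the fourteen-day delay simply mirrors the interval used in the survey shown in figure 1.

```python
from datetime import date, timedelta

# Assumed delay, matching the fourteen-day interval described in the figure legend.
REMINDER_DELAY = timedelta(days=14)


def due_for_reminder(invited, responded, launch_date, today):
    """Return invited addresses with no response once the delay has elapsed."""
    if today - launch_date < REMINDER_DELAY:
        return []  # too early to send a reminder
    responded_set = {addr.lower() for addr in responded}
    return [addr for addr in invited if addr.lower() not in responded_set]


# Hypothetical contact list and response log.
invited = ["a@example.org", "b@example.org", "c@example.org"]
responded = ["B@example.org"]
launch = date(2010, 1, 4)

print(due_for_reminder(invited, responded, launch, date(2010, 1, 10)))
print(due_for_reminder(invited, responded, launch, date(2010, 1, 18)))
```

Addresses are compared without regard to case so that a subject who has already responded is not reminded because of a capitalization difference.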

A unique web address, or URL (Uniform Resource Locator), is generated by the survey application. The URL can then be posted on a website or sent to subjects via e-mail. Subjects may simply click the link to be redirected. This URL is often long and difficult to communicate verbally (e.g. http://www.zoomerang.com/Survey/WEB229R27WJVCD), and respondents may have difficulty retyping it into a web browser. To resolve this problem, a separate web address may be purchased through a domain name registrar, such as Godaddy.com. This simplified domain name (e.g. http://www.PRSsurvey.info) can then forward to your survey. The new domain name is much easier to convey, both verbally and in print. This approach is particularly useful if a mail-based solicitation is desired. Notably, a preliminary mailing prior to web release has been shown to improve survey response rates.15

Before deployment, a small test group should be physically observed taking your survey. This “pilot testing” or “usability testing” can reveal stumbling blocks in design and formatting. Items that are ambiguous can be identified and altered. Changes may then be made without detriment to the survey's integrity. An improved, user-friendly version of the survey can then be sent to the true research population.

Participation Incentives

Researchers commonly use incentives to encourage survey participation and increase the volume of data generated. Singer and Couper contend that incentives are influential but not coercive.16 They found that the willingness of survey respondents to divulge personal information was not related to increasing incentives. The authors further argued that an Institutional Review Board (IRB) should not regulate incentive size, as this methodology does not pertain to the protection of subjects from harm.

Mail surveys commonly provide incentives through cash distribution prior to survey completion. Pre-payment is possible in web-based surveys using money distributed via PayPal. Pre-paid incentives, however, have not been shown to significantly increase participation rates when compared to incentives provided upon survey completion.17 Studies evaluating multiple means of web-based survey incentives have shown that entry into a “prize draw” will significantly increase willingness to participate when compared with a smaller, guaranteed cash incentive.17 Evidence also suggests that monetary compensation may introduce bias, with a disproportionate increase in respondents from lower socioeconomic groups.18

Even more important than financial incentives is conveying the importance of the research to the respondents. Similarly, investigator gratitude should be conveyed. These methods engender trust between the participant and researcher, while creating a sense of cooperation for survey participation.19 Even without a financial incentive, potential respondents may be more likely to participate if they perceive that doing so will have an impact on an issue they find important.

PRIVACY AND CONFIDENTIALITY

Data Security

Medical information is inherently sensitive. Similarly, clinician behaviors, practice patterns and outcomes data should be treated as privileged, confidential information. The movement to health care computing has required an enormous overhaul of hospital information technology (IT) systems in order to keep such information protected. Web-based surveys often require participants to disclose highly private information, and the principal investigator is responsible for upholding the participants’ right to privacy. Zoomerang, for example, uses both physical and technological means to protect survey data. In addition to requiring an individual account password, Zoomerang protects its central servers (upon which data is stored) with card key access and constant video surveillance. The servers are also protected from “hacking” by firewalls and SSL (Secure Sockets Layer) encryption. These means have become the industry standard, and are consistent with the mechanisms presently in place within hospital computer mainframes.

Institutional Review Board (IRB) Approval

Research involving any human subject requires a full IRB application that demonstrates a favorable risk/benefit ratio to study subjects and/or society. As such, all surveys at the authors’ institution are reviewed by the IRB prior to launch. Consultation with an IRB prior to initiating survey research is mandatory. In general, the IRB will require that subject data is de-identified. With de-identification, response data cannot be linked to an individual survey participant.
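As an illustration of de-identification, the following Python sketch removes directly identifying fields from exported responses and labels each record with a random study ID. The field names (`name`, `email`, `ip_address`) and the function `deidentify` are assumptions made for this example; they do not correspond to the export format of any specific survey provider, and removing direct identifiers alone may not satisfy a given IRB's requirements.

```python
import secrets

# Assumed names of identifying fields in an exported response record.
IDENTIFYING_FIELDS = {"name", "email", "ip_address"}


def deidentify(responses):
    """Drop identifying fields and attach a random, non-derivable study ID."""
    cleaned = []
    for response in responses:
        record = {k: v for k, v in response.items() if k not in IDENTIFYING_FIELDS}
        # The ID is random rather than computed from the identity,
        # so it cannot be reversed to recover the participant.
        record["study_id"] = secrets.token_hex(8)
        cleaned.append(record)
    return cleaned


# Hypothetical raw export.
raw = [
    {"name": "Subject One", "email": "one@example.org", "ip_address": "192.0.2.10", "q1": "yes"},
    {"name": "Subject Two", "email": "two@example.org", "ip_address": "192.0.2.11", "q1": "no"},
]
clean = deidentify(raw)
print(clean)
```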

Patient-reported data clearly falls under the realm of protected health information (PHI). Survey data that reflects the attitudes and/or practice patterns of physicians, although not considered PHI, still falls under the purview of the IRB. No distinction between patient and clinician is made during IRB evaluation; each survey respondent is treated as a subject.

DATA ANALYSIS

Response Rates

The existing literature gives conflicting reports on the differential response rates for mailed and web-based surveys.3,4,5 While some authors have demonstrated higher initial survey response rates with mailed versus web-based surveys (58% vs. 45%, respectively),4 other authors have demonstrated the opposite (36% vs. 73%, respectively).5 Recruitment methods (e.g. an advertisement in a print journal) may also affect relative response rates to a web-based survey.20 Regardless of recruitment methods, when regular reminders are sent to subjects, the difference in final survey response rates between mailed and web-based surveys is small.3,4,5 Reminder e-mails to non-respondents have specifically been shown to augment web-based survey response rates.3,4,5,21 The primary authors recently conducted a web-based survey of practice patterns for venous thromboembolism prophylaxis after autogenous tissue breast reconstruction. Through the use of a single reminder e-mail, sent two weeks after initial survey deployment, the total number of responses was increased by over 40% (figure 1).21

Perhaps more important than the actual response rate, however, is whether the respondents comprise a representative sample of the population of interest. If a sufficient number of subjects respond (from a statistical standpoint), and it can be demonstrated that the respondents are representative, then it is reasonable to make generalizations from the data back to the population. Conversely, even with a response rate of 50%, if the respondents are not representative of the population, generalizations are difficult. This underscores the importance of obtaining pertinent demographic information.
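One way to examine whether respondents resemble the population of interest is a chi-square goodness-of-fit test on a demographic variable. The Python sketch below is a minimal example under stated assumptions: the demographic categories, respondent counts, and population shares are hypothetical, and the function name `chi_square_gof` is introduced here for illustration. The critical values are the standard chi-square values at a significance level of 0.05.

```python
# Chi-square critical values at alpha = 0.05, keyed by degrees of freedom.
CHI2_CRITICAL_05 = {1: 3.841, 2: 5.991, 3: 7.815, 4: 9.488, 5: 11.070}


def chi_square_gof(observed, population_share):
    """Goodness-of-fit statistic of respondent counts against population shares."""
    total = sum(observed.values())
    statistic = 0.0
    for group, share in population_share.items():
        expected = total * share  # count expected if respondents were representative
        statistic += (observed.get(group, 0) - expected) ** 2 / expected
    df = len(population_share) - 1
    return statistic, df


# Hypothetical data: respondents by years in practice, and the known
# distribution of the same variable in the target population.
observed = {"<10 years": 90, "10-20 years": 70, ">20 years": 40}
population_share = {"<10 years": 0.30, "10-20 years": 0.35, ">20 years": 0.35}

statistic, df = chi_square_gof(observed, population_share)
differs = statistic > CHI2_CRITICAL_05[df]
print(round(statistic, 3), df, differs)
```

In this hypothetical sample, the statistic exceeds the critical value, indicating that respondents differ from the population on this variable and that generalization would require caution.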

Data Collection

Responses to online surveys may be viewed in real-time. Data may be viewed either in aggregate or individually. Survey results may also be provided to participants on the “thank you” page at the end of a survey. While this would affect the validity of a formal study, the technique may be used in an informal survey as a way to normalize patient experiences with those sharing the same condition.14

To prevent “loading the ballot,” responses from a specific computer (IP address) may be limited to a single survey completion. Alternatively, a computer may be repeatedly used and refreshed, as a kiosk. Kiosks are ideal for recruiting patient participants in clinic or for surveying surgeons at national meetings. Survey results may be exported to almost any statistical software package (e.g. Microsoft Excel, SAS, Stata) for further analysis. Advanced analytical capabilities, such as filtering and cross-tabulation, are also possible online. In general, an unlimited number of survey responses may be interpreted, although the investigator may incur a fee for each respondent after a certain number (usually $0.05 for each response above 1,000).
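The single-completion rule and the kiosk exception can be sketched in a few lines of Python. This is an illustration only: survey providers apply such rules internally, and the record layout (`ip`, `submitted_at`), the function name `first_response_per_ip`, and the example addresses are assumptions. Because many users on one institutional network may share a single IP address, a rule of this kind can also discard legitimate responses.

```python
def first_response_per_ip(responses, kiosk_ips=frozenset()):
    """Keep the earliest response per IP address; keep every response from kiosks."""
    seen = set()
    kept = []
    for response in sorted(responses, key=lambda r: r["submitted_at"]):
        ip = response["ip"]
        if ip in kiosk_ips:
            kept.append(response)  # kiosks are expected to submit repeatedly
        elif ip not in seen:
            seen.add(ip)
            kept.append(response)
    return kept


# Hypothetical response log; timestamps are ISO 8601 strings, which sort correctly as text.
responses = [
    {"ip": "192.0.2.10", "submitted_at": "2010-01-05T09:05"},
    {"ip": "192.0.2.10", "submitted_at": "2010-01-05T09:00"},
    {"ip": "198.51.100.7", "submitted_at": "2010-01-05T10:00"},
    {"ip": "203.0.113.1", "submitted_at": "2010-01-05T11:00"},
    {"ip": "203.0.113.1", "submitted_at": "2010-01-05T11:30"},
]
kept = first_response_per_ip(responses, kiosk_ips={"203.0.113.1"})
print(len(kept))
```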

An advantage of web-based survey methodology is that, depending on the sophistication of the software, the instrument may be designed using “logic,” such that items may be chosen based on responses to previous items. This can certainly be done with paper questionnaires using directions such as “if you answered ‘no’ to this item please proceed to item number X.” However, if these directions become too complicated, dropout is likely. In a web-based instrument, this all occurs “behind the scenes”: the respondent sees only a smooth transition from one item to the next, and a shorter-appearing instrument overall. This may lead to higher completion rates. Qualtrics™ allows for sophisticated logic, with items being chosen randomly from a pool. The particular pool itself is determined by responses to previous items. In this way, data can be gathered across a large number of topics without having any one respondent forced to answer items on all topics. The most sophisticated software systems use item response theory to choose successive items. Based on responses to several initial items with broad subject coverage, the respondent’s awareness of the subject is determined. Subsequent items are then selected, with continual adjustment throughout the remainder of the survey.
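The simplest form of survey “logic,” in which the next item depends on the answer to the current one, can be sketched in Python as follows. The item texts, the `SURVEY` structure, and the function `items_shown` are hypothetical and are not taken from any survey provider's software; the sketch covers only fixed branching, not random selection from a pool or item response theory.

```python
# Each item lists the next item to show for a given answer; "*" is the default branch.
# Item wording is hypothetical.
SURVEY = {
    "q1": {"text": "Do you perform breast reconstruction?", "next": {"yes": "q2", "no": "q4"}},
    "q2": {"text": "Do you use chemoprophylaxis?", "next": {"yes": "q3", "no": "q4"}},
    "q3": {"text": "Which agent do you use most often?", "next": {"*": "q4"}},
    "q4": {"text": "How many years have you been in practice?", "next": {"*": None}},
}


def items_shown(survey, answers, start="q1"):
    """Follow the branching rules and return the item IDs a respondent would see."""
    path = []
    current = start
    while current is not None:
        path.append(current)
        rules = survey[current]["next"]
        answer = answers.get(current)
        # Use the branch for this answer if one exists, otherwise the default.
        current = rules.get(answer, rules.get("*"))
    return path


print(items_shown(SURVEY, {"q1": "no", "q4": "12"}))
print(items_shown(SURVEY, {"q1": "yes", "q2": "yes", "q3": "agent A", "q4": "5"}))
```

A respondent who answers “no” to the first item sees two items rather than four, which is the shorter-appearing instrument described above.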

Bias

Innovation in survey modalities should always be tempered by concerns about bias. Bias is a broad topic that has been extensively reviewed elsewhere.21,22 A brief review of recall and response biases, however, is appropriate for this discussion of survey research.

Recall bias refers to the phenomenon that personal experience may color an individual’s response. If a surgeon has recently experienced a specific complication, for example, he or she may be more likely to report a higher incidence of said complication. Stringent inclusion criteria or stratified analysis, in which similar subjects are grouped prior to analysis, may minimize the influence of recall bias.

Systematic differences may be present between subjects who choose to respond to a survey and those who do not. This is known as response bias. As another example, younger surgeons, who have less experience but are more computer-savvy, may be more likely to respond to a web-based survey. Internet browser compatibility may also be an issue for web-based surveys. Most software allows for tracking of browser data without tracking respondents’ identities. Multiple instances of dropout, particularly at a consistent item, should be investigated for browser compatibility issues. This would best be discovered and corrected in the pilot phase. Response bias can be quantified through collection of demographic data from respondents and non-respondents. A data comparison can help to determine if a representative sample has been obtained. For web-based surveys, consideration of subjects without internet access is intrinsically critical to one’s ability to generalize the results of the study.23
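The search for dropout at a consistent item can be illustrated with a short Python sketch that tallies, by browser, the first item each incomplete response left unanswered. The record layout, the browser labels, and the function `dropout_points` are assumptions made for this example. In a survey that uses branching logic, items skipped by design would need to be excluded before applying this tally.

```python
from collections import Counter


def dropout_points(responses, item_order):
    """Count, per (browser, item), the first unanswered item of each incomplete response."""
    counts = Counter()
    for response in responses:
        answered = response["answers"]
        for item in item_order:
            if item not in answered:
                counts[(response["browser"], item)] += 1
                break  # only the first unanswered item marks the dropout point
    return counts


# Hypothetical pilot data with anonymous browser labels.
item_order = ["q1", "q2", "q3", "q4"]
pilot = [
    {"browser": "BrowserA", "answers": {"q1": "yes", "q2": "no"}},
    {"browser": "BrowserA", "answers": {"q1": "no", "q2": "no"}},
    {"browser": "BrowserB", "answers": {"q1": "yes", "q2": "no", "q3": "a", "q4": "7"}},
    {"browser": "BrowserB", "answers": {"q1": "yes"}},
]
counts = dropout_points(pilot, item_order)
print(counts.most_common())
```

In this hypothetical pilot, two responses from the same browser stop at the same item, which is the pattern that would prompt a check for a compatibility problem.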

DISCUSSION

While the body of research pertaining to web-based surveys is still in its infancy, increasing attention has been given to this modality as a valid means of conducting formal research.24,25 A review of the literature reveals that in the past year, over one hundred medical publications have been based on internet survey data alone. While this article has presented a basic review, establishing guidelines for the use of the internet in medicine is a topic of central interest: the U.S. Department of Health and Human Services has published a treatise on this subject.

Using the principles outlined in this article, the authors have completed survey research on a variety of topics, including practice patterns for venous thromboembolism prophylaxis in breast reconstruction26 and post-bariatric body contouring,30 cost-utility and decision making in rheumatoid hand surgery,27,28 hand transplantation,29 and the management of lower extremity fractures.30 A sample survey for Plastic and Reconstructive Surgery readers has been created at www.PRSsurvey.info. Please explore an example of this exciting new research tool.

CONCLUSION

Web-based surveys can be created, deployed, and analyzed with increasing ease. Here, we have provided clinicians with a practical guide to the design and distribution of web-based surveys for medical research.

Acknowledgments

Dr. Pannucci receives salary support from the NIH T32 grant program (T32 GM-08616).

FINANCIAL DISCLOSURE AND PRODUCTS PAGE

The authors have no conflicts of interest to declare.

Contributor Information

Adam J. Oppenheimer, Section of Plastic Surgery, Department of Surgery, University of Michigan, Ann Arbor, Michigan.

Christopher J. Pannucci, Section of Plastic Surgery, Department of Surgery, University of Michigan, Ann Arbor, Michigan.

Steven J. Kasten, Section of Plastic Surgery, Department of Surgery, University of Michigan, Ann Arbor, Michigan.

Steven C. Haase, Section of Plastic Surgery, Department of Surgery, University of Michigan, Ann Arbor, Michigan.

References

1. Schleyer TK, Forrest JL. Methods for the design and administration of web-based surveys. J Am Med Inform Assoc. 2000;7:416–425. doi: 10.1136/jamia.2000.0070416.

2. Bälter KA, Bälter O. Web-based and mailed questionnaires: a comparison of response rates and compliance. Epid. 2005;16:577–579. doi: 10.1097/01.ede.0000164553.16591.4b.

3. Truell AD, Bartlett JE, Alexander MW. Response rate, speed and completeness: A comparison of internet-based and mail surveys. Behav Res Methods, Instr, & Comp. 2002;34:46–49. doi: 10.3758/bf03195422.

4. Leece P, Bhandari M, Sprague S, Swiontkowski MF, Schemitsch EH, Tornetta P, Devereaux PJ, Guyatt GH. Internet versus mailed questionnaires: a controlled comparison. J Med Internet Res. 2004;6:e39. doi: 10.2196/jmir.6.4.e39.

5. Ritter P, Lorig K, Laurent D, Matthews K. Internet versus mailed questionnaires: a randomized comparison. J Med Internet Res. 2004;6:e29. doi: 10.2196/jmir.6.3.e29.

6. Couper MP. Web surveys: a review of issues and approaches. Public Opin Q. 2000;64:464–494.

7. Groves RM, Kahn RL. Surveys by telephone: a national comparison with personal interviews. New York: Academic Press; 1979.

8. Couper MP. Issues of representation in eHealth research (with a focus on web surveys). Am J Prev Med. 2007;32(5S):S83–S89. doi: 10.1016/j.amepre.2007.01.017.

9. Schwarz N, Grayson CE, Knäuper B. Formal features of rating scales and the interpretation of question meaning. Int J Public Opin Res. 1998;10:177–183.

10. Crawford S, McCabe SE, Pope D. Applying web-based survey design standards. J Prev Interv Community. 2005;29:43–66.

11. Dillman DA, Smyth JD. Design effects in the transition to web-based surveys. Am J Prev Med. 2007;32(5S):S90–S96. doi: 10.1016/j.amepre.2007.03.008.

12. Schwarz N. Cognition and communication: judgmental biases, research methods, and the logic of conversation. Mahwah NJ: Lawrence Erlbaum; 1996.

13. Grice PH. Logic and conversation. In: Cole P, Morgan JL, editors. Syntax and semantics. Vol. 3. Speech acts. New York: Academic Press; 1975. pp. 41–58.

14. Capella J, Kasten SJ, Steinemann S, Torbeck L. Guide for Researchers in Surgical Education. Woodbury: Ciné-Med; 2010.

15. Beebe TJ, Locke GR, Barnes SA, et al. Mixing web and mail methods in a survey of physicians. Health Serv Res. 2007;42:3. doi: 10.1111/j.1475-6773.2006.00652.x. Part I.

16. Singer E, Couper MP. Do incentives exert undue influence on survey participation? Experimental evidence. J Empir Res Hum Res Ethics. 2008;3:49–56. doi: 10.1525/jer.2008.3.3.49.

17. Bosnjak M, Tuten TL. Prepaid and promised incentives in web surveys. Soc Sci Comp Rev. 2003;21(2).

18. Moyer A, Brown M. Effect of participation incentives on the composition of national health surveys. J Health Psychol. 2008;13:870–873. doi: 10.1177/1359105308095059.

19. Kropf ME, Blair J. Eliciting survey cooperation: incentives, self-interest, and norms of cooperation. Eval Rev. 2005;29:559–575. doi: 10.1177/0193841X05278770.

20. Schonlau M. Will web surveys ever become part of mainstream research? J Med Internet Res. 2004;6:e31. doi: 10.2196/jmir.6.3.e31.

21. Pannucci CJ, Wilkins EG. Identifying and avoiding bias in research. Plast Reconstr Surg. 2010;126(2):619–625. doi: 10.1097/PRS.0b013e3181de24bc.

22. Paradis C. Bias in surgical research. Ann Surg. 2008;248:180–188. doi: 10.1097/SLA.0b013e318176bf4b.

23. Kaplowitz MD, Hadlock TD, Levine R. A comparison of web and mail survey response rates. Public Opin Q. 2004;68:91–101.

24. Smyth JD, Dillman DA, Christian LM, Stern MJ. Effects of using visual design principles to group response options in web surveys. Int J Internet Sci. 2005;1:6–16.

25. Couper MP, Traugott MW, Lamias MJ. Web survey design and administration. Public Opin Q. 2001;65:230–254. doi: 10.1086/322199.

26. Pannucci CJ, Oppenheimer AJ, Wilkins EG. Practice Patterns in Venous Thromboembolism Prophylaxis: A Survey of 606 Reconstructive Breast Surgeons. Ann Plast Surg. doi: 10.1097/SAP.0b013e3181ba57a0. (In press)

27. Cavaliere C, Chung KC. Total wrist arthroplasty and total wrist arthrodesis in rheumatoid arthritis: A decision analysis from the hand surgeons’ perspective. J Hand Surg (A) 2008;33:1744–1755. doi: 10.1016/j.jhsa.2008.06.022.

28. Cavaliere CM, Oppenheimer AJ, Chung KC. Reconstructing the Rheumatoid Wrist: A Utility Analysis Comparing Total Wrist Fusion and Total Wrist Arthroplasty from the Perspectives of Rheumatologists and Hand Surgeons. Hand (N Y) 2009 Apr 28; doi: 10.1007/s11552-009-9194-7.

29. Chung KC, Oda T, Saddawi-Konefka D, Shauver MJ. An economic analysis of hand transplantation in the United States. Plast Reconstr Surg. doi: 10.1097/PRS.0b013e3181c82eb6. (In press)

30. Chung KC, Saddawi-Konefka D, Haase SC, Kaul G. A Cost-Utility Analysis of Amputation versus Salvage for Gustilo IIIB and IIIC Open Tibial Fractures. Plast Reconstr Surg. 2009 Dec;124(6):1965–1973. doi: 10.1097/PRS.0b013e3181bcf156.

30. Clavijo-Alvarez JA, Pannucci CJ, Oppenheimer AO, Wilkins EG, Rubin JP. Prevention of venous thromboembolism (VTE) in body contouring surgery. A national survey of ASPS surgeons. Ann Plast Surg. doi: 10.1097/SAP.0b013e3181e35c64. (In press)