Abstract

Objectives

To improve care for benign prostatic hyperplasia (BPH), the American Urological Association (AUA) created best practice guidelines for BPH management. We evaluated trends in the use of BPH-related evaluative tests and the extent to which urologists comply with the guideline recommendations for these tests.

Methods

From a 5% random sample of Medicare claims from 1999 to 2007, we created a cohort of 10,248 patients with new visits for BPH to 748 urologists. Trends in use of BPH related testing were determined. After classifying urologists by compliance with best practice guidelines, models were fit to determine the differences in use of BPH related testing among urologists. Further models defined the extent to which individual BPH related tests influenced guideline compliance.

Results

Use of most BPH testing increased over time (p < 0.001) except PSA (declined; p < 0.001) and ultrasound (p = 0.416). Northeastern and Midwestern urologists were more likely to be in the lowest compliance group compared to Southern and Western urologists (29%, 27%, 13% and 19% respectively; p = 0.01). Testing associated with high guideline compliance included urinalysis and PSA (p < 0.01 for both), while prostate ultrasound (p = 0.03), cystoscopy (p < 0.01), uroflow (p < 0.01), and post void residual (p = 0.02) were associated with low guideline compliance. Urodynamics, cytology, serum creatinine, and upper tract imaging were not strongly associated with guideline compliance.

Conclusion

Despite the AUA guidelines for BPH care, wide variations in evaluation and treatment are seen. Improving guideline adherence and reducing variation could improve BPH care quality.

Keywords: Prostatic Hyperplasia, Practice Guideline, Physician’s Practice Patterns

Introduction

In response to calls for greater consistency and quality in patient care,1 the American Urological Association (AUA) has created, and updated, clinical practice guidelines for benign prostatic hyperplasia (BPH). Within these guidelines, evaluations for men newly presenting with symptomatic BPH are placed into three categories: recommended care, optional care, and care that is not recommended for routine patients. More invasive tests, such as cystoscopy or urodynamics studies, are performed to identify the etiology of symptoms or further direct treatment, and are considered not recommended for routine patients by the AUA guidelines.2

Despite the efforts to develop and promote the BPH practice guidelines, much variation remains in the treatment of men with BPH. The more than twofold differences in rates of surgery for BPH found in the 1980s3 continue to be seen in more recent data.4 Emerging minimally invasive surgical therapies have been associated with even greater geographic and racial variations in use.5 However, little is known about the evaluations men receive to guide their care at the time of presentation to a urologist. Previous work from the Urologic Diseases in America Project showed decreasing rates of upper urinary tract imaging in accordance with guideline recommendations.6 Yet the degree of compliance with the guidelines among urologists is unknown.

As medicine moves into an era with an increased focus on the costs and quality of care,7,8 compliance with guidelines will be further scrutinized.9 In this study we first examined changes in the use of guideline recommended care at the patient level. We then assessed compliance with guideline recommendations among urologists, and the factors influencing this compliance. Finally, we examined which BPH evaluative tests influenced urologists’ compliance with guidelines to gauge the degree of agreement among urologists in the workup of men with BPH.

Methods

Data Source

We created our cohort using a 5% random sample of Medicare claims from 1999 to 2007. The Medicare program provides health care for Americans over age 65, patients with end stage renal disease, and patients with long term disability. Over 95% of Americans over age 65 use Medicare as their primary insurance.10 We limited our study to Medicare beneficiaries over 65 years of age.

Study population

We selected patients with International Classification of Diseases, Ninth Revision (ICD-9) diagnosis codes (Appendix 1) consistent with a BPH diagnosis. We determined the specialty of the physician treating the patient from the Medicare records, and confirmed it with data from the AMA Masterfile. All patients with a visit to a urologist for a BPH diagnosis were included in the initial cohort. We excluded patients who lacked continuous enrollment in Medicare parts A and B, or who were enrolled in a Medicare HMO, at any time from two years before the initial visit with the urologist through one year after the visit. The two year period allowed exclusion of patients who visited a urologist for non-BPH conditions. We also excluded patients with diagnoses suggesting prior surgical BPH therapy, prostate cancer, or neurologic disease that could contribute to lower urinary tract symptoms (LUTS) (Appendix 2). These restrictions resulted in a study population of 40,483 patients. Next, to examine differences in care at the level of the urologist, we restricted the cohort to patients seen by urologists with at least 10 patients between 1999 and 2007. We established the primary urologist responsible for the patient’s care by assessing the Unique Physician Identification Number (UPIN) for the patient visits and laboratory testing. For most patients, a single urologist provided the BPH-related care. In cases with multiple urologists, the primary urologist was classified as the urologist who provided the plurality of patient care services. These restrictions left a study population of 10,248 patients treated by 748 urologists.

Characterization of Compliance with Guidelines

We created an index of compliance with the 2003 AUA guidelines based on a urologist’s average use of recommended, optional, and not-recommended tests. Recommended tests included urinalysis and serum PSA. Optional tests included post void residual urine assessment (PVR), uroflow, and urine cytology. Not-recommended tests included serum creatinine, upper tract imaging, filling cystometrogram, pressure flow urodynamic studies, cystoscopy, and prostatic and kidney ultrasound (Appendix 3). Using ICD-9 and Healthcare Common Procedure Coding System (HCPCS) codes, we assessed BPH-related evaluative testing for the first 3 months after the initial urologist visit. Use of symptom scores and physical exams could not be determined from these data. Each recommended test (PSA or urinalysis) was valued at 1 point, and each not-recommended test at minus 1 point. Optional care received no points because the guidelines consider such care optional. Each type of test was allowed to count more than once in the 3 month period. We then summed the points for the patient and divided by the total amount of care provided in the 3 month period. Each individual patient score was then aggregated at the level of the treating urologist, and the average index of compliance for the urologist was calculated. If a urologist performed only not-recommended care, the index would be −1. Conversely, if a urologist performed only recommended care, the index would be 1. Provision of only optional care would result in an index score of 0. We then categorized providers into quintile groups based on their average compliance scores.
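The scoring rule described above can be illustrated with a minimal Python sketch. It is not the authors' SAS code. The test labels, the function names, the choice to skip patients with no recorded tests, and the rank-based quintile split are all assumptions made for illustration. The sketch also assumes that the "total amount of care" in the denominator counts every test, including optional ones.

```python
from statistics import mean

# Groupings follow the guideline categories described in the text; the string
# labels themselves are hypothetical names chosen for this sketch.
RECOMMENDED = {"urinalysis", "psa"}
OPTIONAL = {"pvr", "uroflow", "cytology"}
NOT_RECOMMENDED = {
    "serum_creatinine", "upper_tract_imaging", "filling_cmg",
    "pressure_flow", "cystoscopy", "ultrasound",
}


def patient_index(tests):
    """Score one patient's tests from the 3 month window.

    `tests` is a list of labels; a repeated test appears more than once.
    Returns (recommended - not_recommended) / total tests, or None when the
    patient had no tests (an assumption; the text does not cover this case).
    """
    if not tests:
        return None
    points = 0
    for test in tests:
        if test in RECOMMENDED:
            points += 1
        elif test in NOT_RECOMMENDED:
            points -= 1
        elif test not in OPTIONAL:
            raise ValueError(f"unknown test: {test}")
        # Optional tests add no points but still count in the denominator.
    return points / len(tests)


def urologist_index(patients):
    """Average the patient-level scores for one urologist."""
    scores = [s for s in map(patient_index, patients) if s is not None]
    return mean(scores) if scores else None


def quintiles(index_by_urologist):
    """Rank-based quintiles: 1 = lowest compliance, 5 = highest (assumed rule)."""
    ranked = sorted(index_by_urologist, key=index_by_urologist.get)
    n = len(ranked)
    return {u: 1 + (5 * rank) // n for rank, u in enumerate(ranked)}
```

For a hypothetical patient who received urinalysis, PSA, cystoscopy, and a PVR check, the score is (1 + 1 − 1) / 4 = 0.25, which illustrates how optional tests dilute the index without changing its sign.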

Urologist characteristics

Using data from the AMA Masterfile and Medicare we described several characteristics of the urologists: practice structure (solo, group, or hospital based), geographic location (census divisions), urban vs. rural practice (urban locations included metropolitan areas of over 100,000 people, rural less than 100,000), and years in practice (categorized as medical school graduation before and after 1985) as explanatory factors for adherence to guidelines.

Outcome

Our primary outcome was the rate of use of evaluative care tests. First we examined the use of these tests over time in the Medicare population. Then we explored the impact of urologists’ characteristics on use of these tests. We examined the use of tests as outlined in the categories of recommended care, optional care, and not-recommended care. We then determined which tests were associated with categorization of urologists into the lowest and highest quintiles of guideline adherence. Testing was determined based on Medicare procedure or laboratory codes as appropriate.

Statistical Analysis

Trends over time in the use of each type of evaluative care test were assessed with the Cochran-Armitage test for trend. We then explored differences in provider characteristics according to quintiles of guideline compliance. Statistical inference was made using the chi-square test for all variables.
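As an illustration of the trend test named above, the following sketch implements the usual two-sided Cochran-Armitage statistic with equally spaced scores using only the Python standard library. The function name, the default scores, and the example counts are assumptions for illustration; the counts are hypothetical and are not data from this study.

```python
import math


def cochran_armitage(events, totals, scores=None):
    """Two-sided Cochran-Armitage test for trend in proportions.

    events[i] / totals[i] is the proportion of patients tested in period i.
    Scores default to 0, 1, 2, ... (equally spaced periods).
    Returns (z, p_value).
    """
    if scores is None:
        scores = list(range(len(events)))
    n_total = sum(totals)
    p_bar = sum(events) / n_total  # pooled proportion under the null
    # Score-weighted sum of observed minus expected events.
    t_stat = sum(s * (r - n * p_bar) for s, r, n in zip(scores, events, totals))
    s1 = sum(n * s for s, n in zip(scores, totals))
    s2 = sum(n * s * s for s, n in zip(scores, totals))
    variance = p_bar * (1 - p_bar) * (s2 - s1 * s1 / n_total)
    if variance <= 0:
        raise ValueError("trend test undefined: no variation in outcome or scores")
    z = t_stat / math.sqrt(variance)
    p_value = math.erfc(abs(z) / math.sqrt(2))  # two-sided normal tail
    return z, p_value


# Hypothetical counts for three consecutive years (not study data).
z, p = cochran_armitage(events=[10, 20, 30], totals=[100, 100, 100])
```

A positive z indicates increasing use over time and a negative z indicates decreasing use.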

Using linear regression, we determined the mean number of each type of evaluative care test by urologist quintile. For each quintile of urologists, we also determined the use of evaluative care by guideline category: recommended, optional, and not recommended. We compared the rates of use of the evaluative care tests among the quintiles of guideline adherence by linear regression. Differences in utilization across the quintiles of guideline adherence were assessed by the F-statistic from linear regression.

We assessed the impact each evaluative care test had on classifying urologists into the highest or lowest quintiles of compliance with guidelines through logistic regression analysis. We adjusted for the urologist’s practice style expenditures (defined in terms of average Medicare expenditures per month for BPH tests performed in the first year after an initial visit to a urologist)11 and for urologist and patient characteristics. Patient factors included age (67–70, 71–74, 75–78, and 79+), socioeconomic status (zip code level using the methodology of Diez-Roux),12 race (white, black, and other), and comorbidity (Klabunde modification of the Charlson comorbidity index).13 These patient factors were modeled as the percentage of a physician’s practice population with the characteristic.

All analyses were carried out with SAS software (Version 9.1, Cary, NC). All statistical tests were two sided, and the probability of a type I error was set at 0.05. This study was granted letters of exemption by the institutional review boards at each of the authors’ institutions.

Results

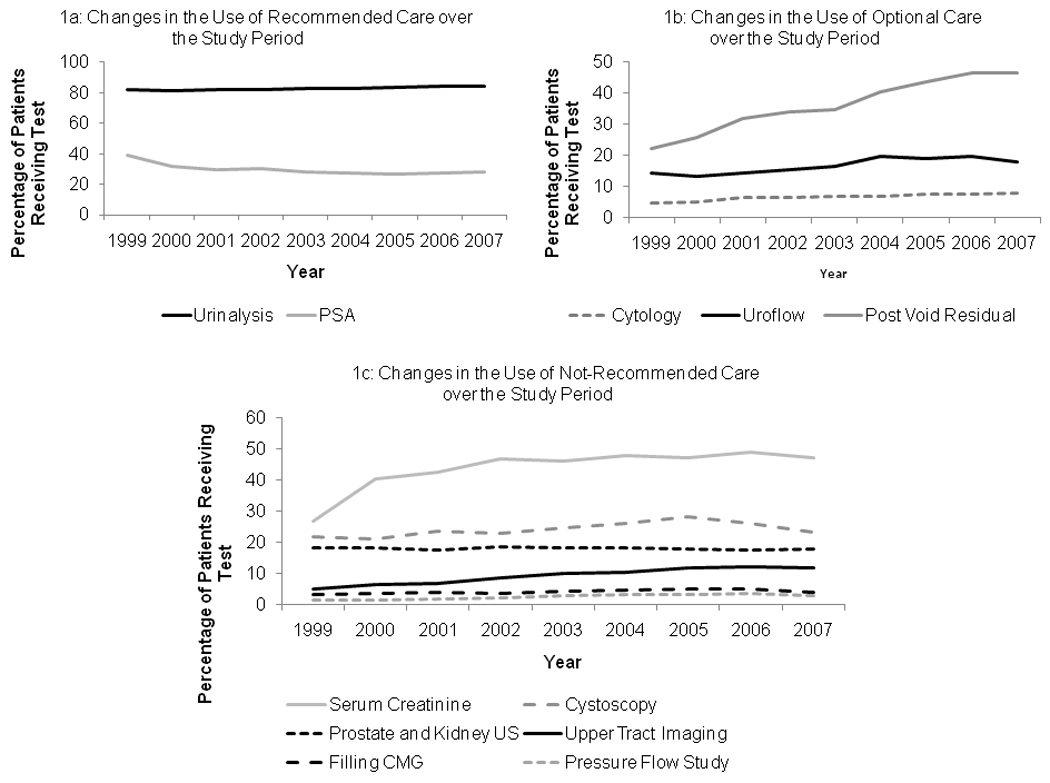

A wide range of evaluative care services are provided to patients within three months of their initial visit to a urologist (Figure 1). Urinalysis was routinely performed, with over 80% of patients receiving the test in each year of the study. Use of serum creatinine measurements and post void residual testing increased over time, with over 40% of patients receiving these tests by 2007. All BPH related testing showed significant increases in use (p < 0.001) except PSA testing where a significant decrease in use occurred (p < 0.001) and renal or prostatic ultrasound which showed no changes in use (p=0.416).

Figure 1. Changes in Proportions of Patients Receiving Evaluative Care tests for BPH over the Study Period.

Among recommended care (Figure 1a) use of testing increased significantly over time for urinalysis (p<0.001), but decreased for PSA testing (p<0.001). Use of optional care (Figure 1b) increased significantly (p < 0.001) for all tests. Use of not-recommended care increased for all testing (p<0.001) except for ultrasonography of the kidney and prostate (stable utilization p = 0.416).

Within a possible range of the guideline compliance index from −1 (no compliance) to 1 (complete compliance), actual levels of compliance ranged from −0.53 to 0.91 (Table 1). The lowest quintile had index values of −0.53 to 0.08, with the low to highest quintiles being 0.09 to 0.22, 0.23 to 0.33, 0.34 to 0.44, and 0.45 to 0.91, respectively. Geographic location was associated with guideline compliance, with urologists in the West evenly distributed among the quintiles (19.7% lowest compliance), urologists in the Northeast and Midwest having lower compliance with guidelines (28.6% and 27.1% lowest compliance, respectively), and urologists in the South showing more guideline compliance than urologists in other regions (13.3% lowest compliance; overall p = 0.01 for comparisons among the regions). Urologists practicing in rural areas were less likely to provide care compliant with the guidelines than urologists in urban areas (26.1% versus 17.8% lowest compliance in rural versus urban; p < 0.01). Type of practice (solo, group, or hospital) and length of time since medical school (before or after 1985) were not associated with compliance with guidelines (p = 0.70 and p = 0.43, respectively).

Table 1.

Urologist Characteristics by Quintile of Guideline Compliance

| Urologist Characteristics | Lowest | Low | Middle | High | Highest | p-value |
|---|---|---|---|---|---|---|
| Compliance Index | −0.53 to 0.08 | 0.09 to 0.22 | 0.23 to 0.33 | 0.34 to 0.44 | 0.45 to 0.91 | |
| Physician Characteristics | | | | | | |
| Employment | | | | | | P = 0.70 |
| Solo | 17.9 | 21.0 | 21.0 | 20.1 | 20.1 | |
| Group | 20.4 | 19.5 | 14.1 | 17.7 | 21 | |
| Hospital | 22.2 | 22.2 | 33.3 | 14.8 | 7.4 | |
| Census Division | | | | | | P = 0.01 |
| Northeast | 28.6 | 15.4 | 20.9 | 23.1 | 12.1 | |
| Midwest | 27.1 | 19.3 | 24.0 | 13.5 | 16.2 | |
| South | 13.3 | 19.9 | 21.4 | 19.0 | 26.5 | |
| West | 19.7 | 21.5 | 19.8 | 20.3 | 18.8 | |
| Location | | | | | | P < 0.01 |
| Urban | 17.8 | 20.8 | 22.4 | 16.6 | 22.4 | |
| Rural | 26.1 | 17.5 | 18.5 | 22.3 | 15.6 | |
| Medical School Graduation Date | | | | | | P = 0.43 |
| Prior to 1985 | 21.0 | 23.6 | 19.3 | 17.6 | 18.5 | |
| 1985 and After | 19.6 | 18.3 | 22.2 | 18.3 | 21.5 | |
| Characteristics of Physicians’ Patients | | | | | | |
| Race | | | | | | |
| > 90% White | 21.3 | 18.6 | 22.3 | 18.3 | 19.6 | P = 0.14 |
| > 10% Black | 19.8 | 23.5 | 18.5 | 18.5 | 19.8 | P = 0.79 |
| > 10% Other | 15.6 | 25.6 | 18.9 | 15.6 | 24.4 | P = 0.61 |
| Comorbidity | | | | | | |
| > 80% 0 | 18.1 | 15.6 | 18.1 | 21.1 | 27.1 | P = 0.06 |
| > 20% 1 | 17.4 | 22.8 | 23.2 | 15.8 | 20.9 | P = 0.39 |
| > 10% 2 or more | 24.0 | 21.5 | 21.5 | 19.0 | 14.0 | P = 0.01 |
| SES | | | | | | |
| > 40% Lowest | 14.2 | 19.3 | 18.8 | 18.3 | 29.4 | P = 0.04 |
| > 40% Middle | 26.6 | 22.8 | 19.5 | 14.5 | 16.6 | P = 0.04 |
| > 70% Upper Middle | 19.7 | 16.1 | 21.9 | 16.8 | 25.6 | P = 0.44 |
| > 10% Highest | 24.7 | 15.1 | 19.2 | 21.9 | 19.2 | P = 0.57 |
| Age | | | | | | |
| > 30% 67–70 | 15.7 | 19.9 | 20.2 | 22.1 | 22.1 | P = 0.10 |
| > 30% 71–74 | 19.9 | 21.1 | 29.1 | 20.3 | 19.5 | P = 0.76 |
| > 30% 75–78 | 11.0 | 23.6 | 20.5 | 16.5 | 28.4 | P = 0.07 |
| > 30% 79+ | 25.5 | 22.2 | 20.9 | 14.1 | 17.3 | P = 0.02 |

Urologists’ patient populations differed among the quintiles of guideline compliance. Patient comorbidity burdens were lower among urologists with high guideline compliance. Among urologists with patient populations consisting of low comorbidity patients (i.e. > 80% of the patients having no comorbid conditions), 27.1% were in the highest quintile of compliance versus 18.1% in the lowest quintile (p = 0.06). Among urologists whose practice included over 10% of patients with two or more comorbid conditions, 14% were highly guideline compliant and 24% were in the lowest guideline compliance category (p = 0.01). Urologists seeing an older patient population were more likely to be in the lowest quintile of guideline compliance than the highest quintile (25.5% lowest versus 17.3% highest; p = 0.02). The racial distribution of a urologist’s practice was not associated with levels of guideline compliance.

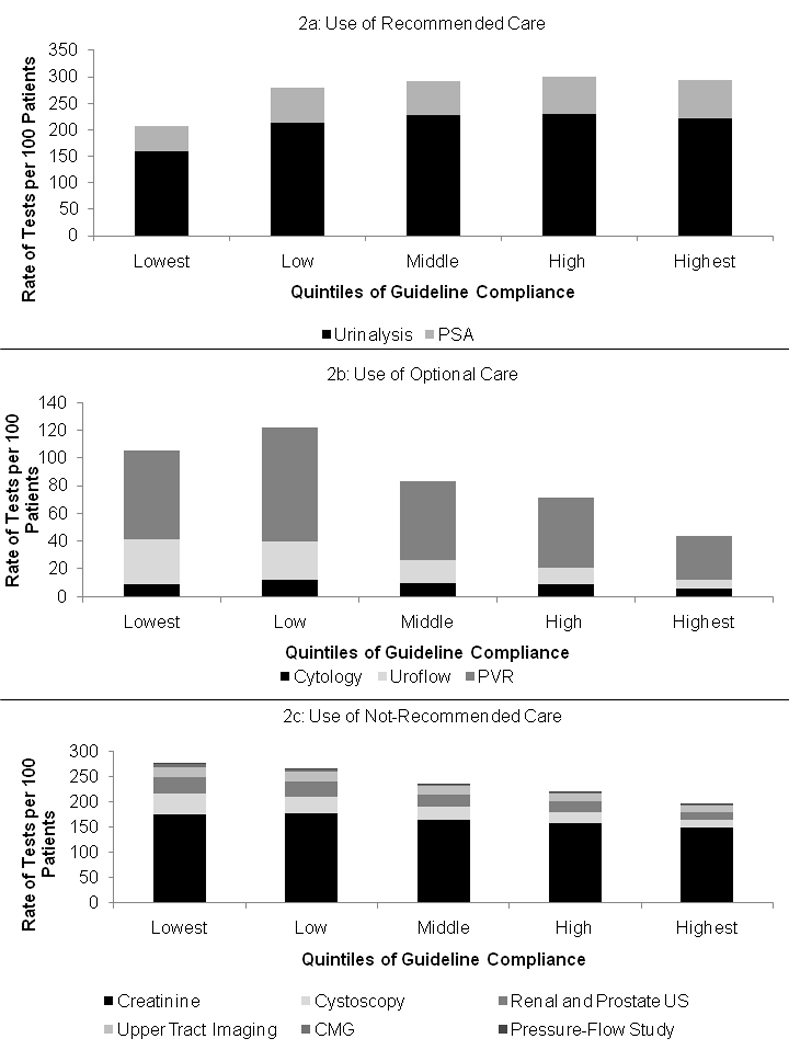

Urologists in the lowest quintile of guideline compliance had the lowest use of recommended care, and the highest use of not-recommended care (Figure 2). Low utilization of both urinalysis and PSA testing was seen (Figure 2a: p < 0.01 for both). Optional care was performed most frequently by urologists in the low guideline compliance quintile (Figure 2b; 122/100 patients). Driving this finding, use of PVR was highest in the low guideline adherence quintile. In contrast, cytology use was relatively flat across the groups (9, 12, 10, 9, and 6 tests per 100 patients for lowest to highest adherence), whereas use of uroflow procedures was high in the lowest quintiles of guidelines adherence (32 and 28 tests per 100 patients), and decreased rapidly in the more adherent groups (16, 12, and 6 tests per 100 patients). None of the not-recommended tests had stable use across quintiles of urologist guideline compliance (Figure 2c), with use of each type of evaluative care higher for the lowest versus the highest quintile of guideline compliance (p < 0.01 for all comparisons).

Figure 2. Use of Recommended, Optional, and Not-Recommended Care.

Urologists in the lowest quintile of guideline compliance had the lowest use of recommended care (Figure 2a), and the highest use of not-recommended care (Figure 2c). Urologists in the low compliance group had the highest use of optional care (Figure 2b). However, extensive variations in the use of individual tests were found. As examples, urinalysis use was stable across the low to highest guideline compliance groups, whereas cystoscopy use declined from the lowest to highest guideline compliance groups.

Individual evaluative care tests were strongly associated with compliance quintiles (Table 2). After adjustment for patient and urologist variables, urinalysis and PSA testing were associated with urologists being in the highest quintile (p < 0.01 for both). Prostate and renal ultrasound (p = 0.03), cystoscopy (p < 0.01), uroflow (p < 0.01), and post void residual (p = 0.02) were associated with a urologist being in the lowest quintile. Filling CMG, pressure-flow studies, and upper tract imaging showed borderline associations with urologists being in the lowest quintile (p = 0.07, p = 0.07, and p = 0.08, respectively). Serum creatinine measurements and urine cytology testing were not associated with a urologist being in the highest or lowest quintiles of guideline compliance (p = 0.44 and p = 0.35, respectively).

Table 2.

Odds Ratios and 95% Confidence Intervals for a Urologist being in the Highest versus Lowest Guideline Compliance Quintile by each Evaluative Care Test

| Variable | OR | 95% Confidence Interval | P-value |
|---|---|---|---|
| Urinalysis | 7.75 | 4.17 – 14.40 | <.0001 |
| PSA | 69.22 | 17.10 – 280.24 | <.0001 |
| Prostate and Kidney Ultrasound | 0.102 | 0.013 – 0.811 | 0.031 |
| Serum Creatinine | 0.796 | 0.443 – 1.429 | 0.4443 |
| Cystoscopy | 0.003 | <0.001 – 0.019 | <.0001 |
| Cytology | 0.276 | 0.019 – 4.095 | 0.3494 |
| Uroflow | 0.033 | 0.006 – 0.172 | <.0001 |
| Filling CMG | 0.002 | <0.001 – 1.73 | 0.0722 |
| Post-Void Residual | 0.419 | 0.205 – 0.856 | 0.0169 |
| Pressure-Flow Study | <0.001 | <0.001 – 1.932 | 0.0652 |
| Upper Tract Imaging | 0.123 | 0.011 – 1.316 | 0.0831 |
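For readers who want to relate the odds ratios, confidence intervals, and p-values in Table 2, the sketch below back-calculates a standard error and p-value from a reported interval. It assumes the intervals are Wald intervals on the log-odds scale, which is the usual default for logistic regression output but is not stated in the text. The function name is hypothetical.

```python
import math


def wald_from_ci(odds_ratio, lower, upper, z_crit=1.959964):
    """Recover the log-scale standard error, z statistic, and two-sided
    p-value implied by an odds ratio and its 95% confidence interval,
    assuming a symmetric Wald interval on the log-odds scale."""
    log_or = math.log(odds_ratio)
    se = (math.log(upper) - math.log(lower)) / (2 * z_crit)
    z = log_or / se
    p_value = math.erfc(abs(z) / math.sqrt(2))
    return se, z, p_value


# Post-void residual row of Table 2: OR 0.419, 95% CI 0.205 to 0.856.
se, z, p = wald_from_ci(0.419, 0.205, 0.856)
```

Under this assumption the post-void residual row gives a p-value of roughly 0.017, in line with the reported 0.0169. An odds ratio below 1 (negative z) means greater use of the test lowers the odds of a urologist being in the highest rather than the lowest compliance quintile.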

Comment

Best practice guidelines are intended to improve and standardize clinical care quality by promoting diagnostic and treatment approaches of demonstrated clinical effectiveness while minimizing those producing little benefit or actual harm. Despite the publication of multiple guidelines since the 1990s, older men in the United States with BPH continue to receive varied care based on the urologist they see for BPH. Variation occurred according to geographic location and urban versus rural setting. Urologists practicing in the South or urban settings provided the highest level of guideline compliant care. Urologists with the lowest guideline compliance used recommended care less commonly and not recommended care more commonly than the other 80% of urologists in our study. However, assessment of individual tests revealed that urologists used urine cytology, urodynamics studies, upper tract imaging, and serum creatinine at similar rates after adjusting for patient and provider characteristics. Differences in guideline compliance were driven largely by use of cystoscopy, PSA testing, urinalysis, uroflow, post void residual urine checks, and ultrasound studies.

Despite the creation and promotion of guidelines, patient and provider compliance with the recommendations is variable. Barriers to physician compliance with guidelines include lack of awareness of the guideline, lack of familiarity with the guideline recommendations, lack of agreement with the recommendations, poor self-efficacy for implementing guideline recommendations, inertia of previous practice, and a belief that the guideline would not improve patient outcomes.14 Further problems with guideline compliance stem from physicians’ beliefs that the recommendations do not apply well to their patients,15 and the lack of opportunity for individualization of care.16 Combined with an often poor evidence base lacking randomized trials to support recommendations,17 the impact of guidelines on eliminating variations in care has been limited.

The variable compliance with guidelines among urologists could result from many of these factors including a poor evidence base on which to base care, the practice of defensive medicine, and different referral patterns by which patients reach the urologist. Although our study cannot assess which of these factors is contributing to varying levels of guideline compliance, it does provide some evidence supporting each contention.

The lack of high quality data on the use of most evaluative care precludes making strong judgments about the value of most evaluative care testing.18 Due to the weak evidence base, many urologists will err on the side of doing more testing. Given the fee-for-service population studied, such testing would also increase practice income. In addition, the comparable European Association of Urology guidelines recommend many procedures that the AUA guidelines consider optional, and classify as optional some procedures that the AUA considers not recommended.19 Since the risk of harm from testing is perceived as low, the added information is potentially useful, and the testing is reimbursed by the Medicare program, optional and not-recommended tests are provided.

Increased use of BPH related testing could be a response to an increasingly litigious society, with urologists protecting themselves from future malpractice actions with ever increasing amounts of testing. Indeed, the secular trends in use of optional and not-recommended care and prior literature support this contention.20 However, all diagnostic tests have the potential for harm including false positive and negative results, and increased expense.

Finally, the types of referrals sent to a urologist might influence practice patterns and guideline compliance. While many patients sent to a urologist for BPH or LUTS have had a limited work up with only some exposure to medical management,21 some urologists may see more refractory patients. This would be especially true if patients are referred from primary care physicians who are experienced in treating men with BPH. Such factors may be contributing to the rise in use of PVR testing, with urologists seeing men who may be considering anticholinergic medications for their symptoms.

Our study also reveals problems that could arise as payers and other organizations attempt to use claims data and guideline compliance as measures of quality. With claims-based studies, patient symptoms and prior therapies cannot be determined. While our study addressed this issue by excluding patients with prior diagnoses consistent with neurologic disease or cancers that could contribute to LUTS, broader attempts to gauge quality using claims and guideline adherence may include such subpopulations or be limited by changes in guidelines over time.22 Also, not all important aspects of care and outcomes can be assessed using only claims data.23,24 For example, in our study the assessment of symptom scores and performance of a physical exam could not be determined. Finally, care may be incorrectly assigned to a physician or condition when actually given for a different indication. This phenomenon may have occurred in our study with serum creatinine measurements, a common aspect of many primary care physicians’ yearly patient assessment. While in our study this testing did not influence the assignment of urologists to a specific level of guideline compliance, it is conceivable that such misallocation of care could substantially impact assessments of quality or compliance with guidelines in other settings.

Certain limitations exist in this study, and warrant consideration. Our results are most applicable to older men treated in a fee-for-service setting. We cannot determine if compliance with guidelines would be the same in younger men, or men treated in health maintenance organizations. However, since Medicare provides the primary health insurance to over 95% of older men in the United States and these are the men most likely to have BPH symptoms, our results have validity for a large population most affected by the treatment decisions in BPH management. Similarly, we have examined care among urologists with higher volumes of BPH patients. We cannot determine if similar variations in guideline compliance occur among urologists who see fewer Medicare patients with BPH. In addition, we group BPH related tests by level of guideline recommendation. In so doing, we provide equal weight to procedures that have varying risks, and costs, to patients.

Conclusion

Despite the availability of AUA practice guidelines for BPH care, wide variations in BPH related test utilization are seen among urologists in the United States. Further study of the effectiveness of evaluative care testing provided to patients is needed, and may help improve compliance with the guidelines.

References

1. Committee on Quality of Health Care in America, Institute of Medicine. Crossing the Quality Chasm: A New Health System for the 21st Century. Washington, D.C.: National Academy Press; 2001.

2. Roehrborn C, McConnell J, Barry M. American Urological Association Guideline on the Management of Benign Prostatic Hyperplasia (BPH). American Urological Association; 2003.

3. Wennberg JE. On the status of the Prostate Disease Assessment Team. Health Serv Res. 1990;25:709–716.

4. Sung JC, Curtis LH, Schulman KA, Albala DM. Geographic variations in the use of medical and surgical therapies for benign prostatic hyperplasia. J Urol. 2006;175:1023–1027. doi: 10.1016/S0022-5347(05)00409-X.

5. Yu X, McBean AM, Caldwell DS. Unequal use of new technologies by race: the use of new prostate surgeries (transurethral needle ablation, transurethral microwave therapy and laser) among elderly Medicare beneficiaries. J Urol. 2006;175:1830–1835; discussion 1835. doi: 10.1016/S0022-5347(05)00997-3.

6. Wei JT, Calhoun E, Jacobsen SJ. Urologic Diseases in America Project: benign prostatic hyperplasia. J Urol. 2008;179:S75–S80. doi: 10.1016/j.juro.2008.03.141.

7. Wharam JF, Sulmasy D. Improving the quality of health care: who is responsible for what? JAMA. 2009;301:215–217. doi: 10.1001/jama.2008.964.

8. Berwick DM. Measuring physicians' quality and performance: adrift on Lake Wobegon. JAMA. 2009;302:2485–2486. doi: 10.1001/jama.2009.1801.

9. Nichol MB, Knight TK, Priest JL, Wu J, Cantrell CR. Nonadherence to clinical practice guidelines and medications for multiple chronic conditions in a California Medicaid population. J Am Pharm Assoc (2003). 2010;50:496–507. doi: 10.1331/JAPhA.2010.09123.

10. Warren JL, Klabunde CN, Schrag D, Bach PB, Riley GF. Overview of the SEER-Medicare data: content, research applications, and generalizability to the United States elderly population. Med Care. 2002;40:IV-3–IV-18. doi: 10.1097/01.MLR.0000020942.47004.03.

11. Strope SA, Elliott SP, Smith A, Wilt TJ, Wei JT, Saigal CS. Urologist Practice Styles in the Initial Evaluation of Elderly Men with BPH. Urology. 2010; in press. doi: 10.1016/j.urology.2010.07.485.

12. Diez Roux AV, Merkin SS, Arnett D, Chambless L, Massing M, Nieto FJ, Sorlie P, Szklo M, Tyroler HA, Watson RL. Neighborhood of residence and incidence of coronary heart disease. N Engl J Med. 2001;345:99–106. doi: 10.1056/NEJM200107123450205.

13. Klabunde CN, Legler JM, Warren JL, Baldwin LM, Schrag D. A refined comorbidity measurement algorithm for claims-based studies of breast, prostate, colorectal, and lung cancer patients. Ann Epidemiol. 2007;17:584–590. doi: 10.1016/j.annepidem.2007.03.011.

14. Cabana MD, Rand CS, Powe NR, Wu AW, Wilson MH, Abboud PA, Rubin HR. Why don't physicians follow clinical practice guidelines? A framework for improvement. JAMA. 1999;282:1458–1465. doi: 10.1001/jama.282.15.1458.

15. Shea AM, DePuy V, Allen JM, Weinfurt KP. Use and perceptions of clinical practice guidelines by internal medicine physicians. Am J Med Qual. 2007;22:170–176. doi: 10.1177/1062860607300291.

16. Shaneyfelt TM, Centor RM. Reassessment of clinical practice guidelines: go gently into that good night. JAMA. 2009;301:868–869. doi: 10.1001/jama.2009.225.

17. Tricoci P, Allen JM, Kramer JM, Califf RM, Smith SC Jr. Scientific evidence underlying the ACC/AHA clinical practice guidelines. JAMA. 2009;301:831–841. doi: 10.1001/jama.2009.205.

18. McConnell JD, Barry MJ, Bruskewitz RC, Bueschen AJ, Denton SE, Holtgrewe HL. Benign Prostatic Hyperplasia: Diagnosis and Treatment. Clinical Practice Guideline. AHCPR publication No. 94-0582. Rockville, Md: Agency for Health Care Policy and Research, Public Health Service, U.S. Department of Health and Human Services; 1994.

19. Madersbacher S, Alivizatos G, Nordling J, Sanz CR, Emberton M, de la Rosette JJ. EAU 2004 guidelines on assessment, therapy and follow-up of men with lower urinary tract symptoms suggestive of benign prostatic obstruction (BPH guidelines). Eur Urol. 2004;46:547–554. doi: 10.1016/j.eururo.2004.07.016.

20. Kessler D, McClellan M. Do Doctors Practice Defensive Medicine? The Quarterly Journal of Economics. 1996;111:353–390.

21. Hollingsworth JM, Hollenbeck BK, Daignault S, Kim SP, Wei JT. Differences in initial benign prostatic hyperplasia management between primary care physicians and urologists. J Urol. 2009;182:2410–2414. doi: 10.1016/j.juro.2009.07.029.

22. Lin GA, Redberg RF, Anderson HV, Shaw RE, Milford-Beland S, Peterson ED, Rao SV, Werner RM, Dudley RA. Impact of Changes in Clinical Practice Guidelines on Assessment of Quality of Care. Med Care. 2010;48:733–738. doi: 10.1097/MLR.0b013e3181e35b3a.

23. Robinson JC, Williams T, Yanagihara D. Measurement of and reward for efficiency in California's pay-for-performance program. Health Aff (Millwood). 2009;28:1438–1447. doi: 10.1377/hlthaff.28.5.1438.

24. Linder JA, Kaleba EO, Kmetik KS. Using electronic health records to measure physician performance for acute conditions in primary care: empirical evaluation of the community-acquired pneumonia clinical quality measure set. Med Care. 2009;47:208–216. doi: 10.1097/MLR.0b013e318189375f.