Abstract

Segregation of information flow along a dorsally directed pathway for processing object location and a ventrally directed pathway for processing object identity is well established in the visual and auditory systems, but is less clear in the somatosensory system. We hypothesized that segregation of location vs. identity information in touch would be evident if texture is the relevant property for stimulus identity, given the salience of texture for touch. Here, we used functional magnetic resonance imaging (fMRI) to investigate whether the pathways for haptic and visual processing of location and texture are segregated, and the extent of bisensory convergence. Haptic texture-selectivity was found in the parietal operculum and posterior visual cortex bilaterally, and in parts of left inferior frontal cortex. There was bisensory texture-selectivity at some of these sites in posterior visual and left inferior frontal cortex. Connectivity analyses demonstrated, in each modality, flow of information from unisensory non-selective areas to modality-specific texture-selective areas and further to bisensory texture-selective areas. Location-selectivity was mostly bisensory, occurring in dorsal areas, including the frontal eye fields and multiple regions around the intraparietal sulcus bilaterally. Many of these regions received input from unisensory areas in both modalities. Together with earlier studies, the activation and connectivity analyses of the present study establish that somatosensory processing flows into segregated pathways for location and object identity information. The location-selective somatosensory pathway converges with its visual counterpart in dorsal frontoparietal cortex, while the texture-selective somatosensory pathway runs through the parietal operculum before converging with its visual counterpart in visual and frontal cortex. 
Both segregation of sensory processing according to object property and multisensory convergence appear to be universal organizing principles.

It is well known that visual perception, in non-human primates (Ungerleider and Mishkin, 1982) as well as humans (Haxby et al., 1994), involves relatively distinct dorsal and ventral streams, specialized for object location and object identity, respectively. Auditory processing is similarly segregated into dorsally and ventrally directed pathways in both non-human primates and humans (Alain et al., 2001; Arnott et al., 2004; Rauschecker and Tian, 2000). Audiovisual information is also processed in distinct regions depending on the aspect being judged: stimulus identity judgments engage regions around the superior temporal sulcus, whereas stimulus location judgments recruit parietal cortical regions (Sestieri et al., 2006). In the somatosensory system, Mishkin (1979) originally postulated the existence of a hierarchically organized processing stream originating in primary somatosensory cortex (S1), located in the postcentral gyrus and sulcus (PoCG, PoCS), and projecting through secondary somatosensory cortex (S2) in the parietal operculum onto medial temporal and inferior frontal cortices. This pathway was proposed to mediate learning of shape discrimination in the somatosensory system, analogous to the ventral visual stream. Tract-tracing methods in macaques provided anatomical evidence for this somatosensory pathway (Friedman et al., 1986). Neurophysiological studies in macaques supported the idea of hierarchical information flow from S1 to S2, since S1 lesions essentially abolished somatosensory responses in S2 (Burton et al., 1990; Pons et al., 1987, 1992). However, this was not true in macaques whose S1 was lesioned in infancy (Burton et al., 1990), and neurophysiological studies in other primate species are inconsistent with the hierarchical model (Nicolelis et al., 1998; Zhang et al., 2001).

The idea of dichotomous pathways subserving processing of stimulus identity and location, analogous to those in the visual and auditory systems, has been pursued in a number of studies of human somatosensory processing. One study found selectivity for haptic object recognition in frontocingulate cortex and for extrapersonal spatial processing in superior parietal cortex (Reed et al., 2005). Another report described hemispheric specialization within posterior parietal cortex: the left anterior intraparietal sulcus (IPS) for tactile discrimination of grating orientation and the right temporoparietal junction for tactile discrimination of grating location on the fingerpad (Van Boven et al., 2005). In another study, location was varied in terms of the hand stimulated, and stimulus identity in terms of vibrotactile frequency: electrophysiologic activity, initially greater in the hemisphere contralateral to the stimulated hand, remained lateralized in parieto-occipito-temporal cortex for frequency but became bilateral for location (De Santis et al., 2007). Finally, one study demonstrated task-selective processing common to the auditory and tactile systems: stimulus identity (auditory chords or vibrotactile frequencies) was processed in inferior frontal and insular cortex, whereas stimulus location (in extrapersonal space for auditory stimuli or on different fingers for tactile stimuli) was processed in inferior and superior parietal cortex (Renier et al., 2009). Thus, while some of these studies point to posterior parietal cortex as being important for tactile location, none provided evidence for tactile identity processing in somatosensory cortex, leaving open the functional role of the pathway studied by Mishkin and colleagues (Friedman et al., 1986; Mishkin, 1979; Pons et al., 1987, 1992).

Selective somatosensory cortical processing of stimulus attributes was first reported in studies of macaques with lesions of different parts of S1: ablating Brodmann's area (BA) 1 impaired haptic perception of surface texture, whereas removal of BA 2 affected haptic perception of object form (Randolph and Semmes, 1974). Contrasting with such selectivity, lesions of BA 3b (Randolph and Semmes, 1974) or S2 (Murray and Mishkin, 1984) impaired perception of both surface texture and object form. Neurophysiological studies in macaques found that neuronal responses in S1 and S2 encode roughness (Jiang et al., 1997; Pruett et al., 2000; Sinclair et al., 1996), an aspect of texture (Hollins et al., 1993), while shape-selective neurons occur in BAs 2 and 5 (Koch and Fuster, 1989). In humans, damage to S1 compromises both roughness and shape discrimination (Roland, 1987), while lesions involving S2 have been associated with tactile agnosia (Caselli, 1993; Reed et al., 1996). Although some functional neuroimaging studies noted common regions of activation in the PoCS (O'Sullivan et al., 1994; Servos et al., 2001) or parietal operculum (O'Sullivan et al., 1994) for judgments of both surface roughness and object form, other studies emphasized segregated processing of these attributes. Shape-selectivity (relative to texture), in both haptic and visual modalities, occurs in multiple posterior parietal foci as well as a ventral visual cortical region known as the lateral occipital complex (LOC) (Amedi et al., 2001; Stilla and Sathian, 2008; Zhang et al., 2004), while parietal opercular cortex is specialized for surface texture (Ledberg et al., 1995; Roland et al., 1998; Stilla and Sathian, 2008; see review by Whitaker et al., 2008). Studies of S2 in the parietal operculum have a long history, as reviewed comprehensively by Burton (1986).
More recent studies in monkeys have identified additional parietal opercular somatosensory fields known as the ventral somatosensory area (VS) (Cusick et al., 1989) and the parietal ventral area (PV) (Krubitzer et al., 1995), and three distinct maps have been demonstrated in the parietal opercular hand representation of macaques (Fitzgerald et al., 2004). Three of four cytoarchitectonic regions in the human parietal operculum, termed OP1, OP3 and OP4, are somatosensory: OP1 appears homologous to S2, OP4 to PV and OP3 to VS (Eickhoff et al., 2007). These three regions were all texture-selective in our prior study (Stilla and Sathian, 2008).

We suggested that our earlier findings of haptic shape-selectivity in the PoCS and IPS but texture-selectivity in OP1, OP3 and OP4 might fit the action-perception dichotomy (Dijkerman and de Haan, 2007; Goodale and Milner, 1992), proposed as an alternative to the location-identity basis for segregation of sensory processing in both vision and touch. According to this view, the pathway comprising the PoCS and IPS would be concerned with grasping, which is closely tied to object shape, while the stream involving parietal opercular somatosensory areas would be related to material object properties such as texture, an object property especially salient to touch (Whitaker et al., 2008). Here, we tested the hypothesis that segregation of location vs. identity information for touch would be evident if texture were used as the relevant property for stimulus identity, which was not the case in any of the earlier studies exploring such segregation. There are a number of reasons to use texture as the variable determining stimulus identity: not only is texture highly salient to touch, but texture judgments are actually better for touch than vision, particularly for fine textures, and touch tends to dominate vision in texture perception (reviewed by Whitaker et al., 2008). Thus, when one perceives an object, its shape is readily assessed visually; its texture, however, is sometimes the only property that distinguishes it from similar objects (e.g. a peach vs. a nectarine, or ripe vs. unripe fruit), and judging texture, though visually possible, benefits from tactile exploration.
This may be because touch and vision, although both capable of extracting texture information, differ substantially in the nature of sensing: vision is largely concerned with spatial patterns and is sensitive to illumination gradients, whereas touch relies on spatial variation as well as vibrations and tangential forces generated by surface exploration and is multidimensional, including at least the rough-smooth and soft-hard continua and possibly additional ones such as sticky-slippery (reviewed by Hollins and Bensmaia, 2007; Sathian, 1989; Whitaker et al., 2008).

As noted above, posterior parietal cortical areas are involved in processing location information for visual (Haxby et al., 1994; Ungerleider and Mishkin, 1982), auditory (Alain et al., 2001; Arnott et al., 2004; Rauschecker and Tian, 2000), tactile (Reed et al., 2005; Van Boven et al., 2005), audiovisual (Sestieri et al., 2006) and audiotactile (Renier et al., 2009) stimuli, but the extent to which spatial processing is shared between vision and touch remains uncertain. Selective haptic processing of texture has been found in the parietal operculum, as outlined above (Ledberg et al., 1995; Roland et al., 1998; Stilla and Sathian, 2008; see review by Whitaker et al., 2008). Visual texture-selectivity has been reported in and around the collateral sulcus (Cant et al., 2009; Cant and Goodale, 2007; Peuskens et al., 2004; Puce et al., 1996) or distributed through visual cortex (Beason-Held et al., 1998). Whether texture processing is separable from location processing has not been studied in either vision or touch, although one study showed a dorso-ventral segregation in vision for motion- vs. texture-selectivity (Peuskens et al., 2004). To our knowledge, our prior study (Stilla and Sathian, 2008) was the only one to investigate texture-selectivity in both vision and touch, with the finding of bisensory overlap in early visual cortex around the presumptive V1–V2 border.

Here we report a functional magnetic resonance imaging (fMRI) study designed to investigate whether the pathways for haptic and visual processing of location and texture are segregated, and the extent to which such selective processing is unisensory or bisensory. The present study investigated not only task-selective activations which were the focus of earlier studies, but also effective connectivity, to better define the relevant pathways.

METHODS

Subjects

Eighteen subjects (11 male, 7 female) took part in the study after giving informed consent. All were right-handed as assessed by the high-validity subset of the Edinburgh handedness questionnaire (Raczkowski et al., 1974), were neurologically normal, and had vision that was normal or corrected to normal with contact lenses. Subjects with callused fingerpads or a history of injury to the upper extremity were excluded. Ages ranged from 18 to 23 years (mean 20.8 years).

Stimuli

HAPTIC

The haptic texture (HT) stimuli were constructed of various fabrics or upholstery glued to both sides of a 4×4×0.3 cm cardboard substrate. The haptic location (HL) stimuli consisted of a cardboard base of similar dimensions, on which was drawn a 4×4 grid. A raised plastic dot, 3 mm in diameter and easily felt, was glued centrally at one of the 16 locations within the grid; the grid itself could not be felt. Subjects were blindfolded and instructed to keep their eyes closed. The right arm was extended and supported by soft foam padding, with the right hand supinated and comfortably placed near the front magnet aperture. Stimuli were presented by an experimenter in a consistent orientation, and subjects were instructed to support each stimulus with the 2nd–5th digits while 'sweeping' the upper surface of the stimulus with the thumb. Consequently, the motor activity involved in evaluating texture or location was very similar.

VISUAL

Visual texture (VT) stimuli were 2.6 cm square black and white photographs of the fabrics/upholstery used to construct the haptic texture stimuli. Visual location (VL) stimuli consisted of a 1.5 mm black dot at one of 16 possible locations within a 4×4 (invisible) grid on a gray 2.6 cm square background. Visual stimuli were centrally displayed on a black background, and projected on a screen at the rear magnet aperture. An angled mirror positioned above the subject's eyes enabled clear visualization of the images. Subjects were instructed to fix their gaze at the center of the screen.

PILOT TESTING

Pilot psychophysical studies were conducted to minimize differences in discriminability among the stimulus sets, as far as possible. Initial testing (n=8) indicated that discriminability was similar for HT, HL and VT stimuli (82.8% correct, 81.0% correct and 80.1% correct, respectively) but better for VL stimuli (94.1% correct). Adjustments were subsequently made to visual stimulus size and intensity to further minimize discriminability differences. Psychophysical measures based on responses collected during scanning indicated that there were some residual differences in discriminability; hence, covariate analysis was conducted on response accuracy to assess its potential impact on task-selective activations.

MR scanning

Scans were performed on a 3 Tesla Siemens Trio whole body scanner (Siemens Medical Solutions, Malvern, PA), using a 12-channel matrix head coil. Functional images with blood oxygenation level-dependent (BOLD) contrast were acquired using a T2*-weighted single-shot gradient-recalled echoplanar imaging (EPI) sequence with the following parameters: twenty-nine axial slices of 4 mm thickness, repetition time (TR) 2000 ms, echo time (TE) 31 ms, flip angle (FA) 90°, in-plane resolution 3.4×3.4 mm, in-plane matrix 64×64. Following the functional imaging runs, a 3D anatomic (MPRAGE) sequence of 176 sagittal slices of 1 mm thickness was obtained with: TR 2600 ms, TE 3.9 ms, inversion time (TI) 1100 ms, FA 8°, in-plane resolution 1×1 mm, in-plane matrix 256×256. Foam padding under the body and arms was used to minimize the transfer of vibration from the gradient coils. Additional foam padding was used within the head coil to minimize movement. Gradient noise attenuation was provided by headphones that also served to convey verbal cues and instructions.

A block design paradigm was utilized, consisting of alternating blocks with and without stimulation. There were 12 stimulation blocks (6 per condition) and 13 rest blocks. Immediately preceding each stimulation block, a verbal cue was presented for 1 s to prepare the subject for the following condition: “rest”, “texture” or “location”. In the inter-stimulus intervals, the subject pressed one of two buttons on a fiberoptic response box, with the second or third digit of the left hand, to indicate whether the stimulus was identical to or different from the immediately preceding stimulus.

For haptic stimulation, each 30 s stimulation block contained five stimulus trials of 6 s each. The timing of stimulus presentations was provided by cues displayed to the experimenter on the wall of the scanner room. An experimenter placed stimuli in the subject's right hand for 5 s, with a 1 s inter-stimulus interval (ISI). The stimulation blocks were interleaved in a predetermined pseudorandom sequence with 20 s rest blocks, using Presentation software (Neurobehavioral Systems, Albany CA) that also controlled stimulus timing and the display of cues to the subject and experimenter. Two runs were completed on each subject.

For visual stimulation, each 18 s stimulation block contained nine stimulus trials of 2 s each: 0.5 s visual display, 1.5 s ISI. Presentation software controlled stimulus timing. Rest blocks were also 18 s long, during which subjects were asked to fixate on a central fixation cross. Two runs were completed per subject.
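The block timing described above determines the length of each run and the shape of the predictors entered into the general linear model. The sketch below assumes that each run starts and ends with a rest block, that the 1 s verbal cue falls within the preceding rest block, and that predictors are formed by convolving condition boxcars with an SPM-style double-gamma haemodynamic response function; BrainVoyager's own HRF parameters may differ. The condition order and the function names (`block_design`, `double_gamma_hrf`) are hypothetical. Under these assumptions a haptic run (12 × 30 s stimulation + 13 × 20 s rest = 620 s) spans 310 volumes at TR = 2 s, and a visual run (25 × 18 s = 450 s) spans 225 volumes.

```python
import math

import numpy as np

TR = 2.0  # repetition time in seconds (TR 2000 ms)


def double_gamma_hrf(tr, length_s=32.0):
    """Assumed canonical double-gamma HRF sampled every `tr` seconds:
    a gamma (shape 6) response minus 1/6 of a gamma (shape 16) undershoot."""
    t = np.arange(0.0, length_s, tr)
    response = t**5 * np.exp(-t) / math.gamma(6)
    undershoot = t**15 * np.exp(-t) / math.gamma(16)
    h = response - undershoot / 6.0
    return h / h.sum()


def block_design(order, stim_s, rest_s, tr=TR):
    """Boxcar and HRF-convolved predictors (one column per condition) for a run
    that begins and ends with rest and alternates rest and stimulation blocks."""
    conditions = sorted(set(order))
    onsets, t = [], 0.0
    for cond in order:
        t += rest_s                      # rest block preceding each stimulation block
        onsets.append((cond, t))
        t += stim_s
    t += rest_s                          # final rest block
    n_vols = int(round(t / tr))
    times = np.arange(n_vols) * tr
    boxcar = np.zeros((n_vols, len(conditions)))
    for cond, onset in onsets:
        in_block = (times >= onset) & (times < onset + stim_s)
        boxcar[in_block, conditions.index(cond)] = 1.0
    hrf = double_gamma_hrf(tr)
    convolved = np.column_stack(
        [np.convolve(boxcar[:, j], hrf)[:n_vols] for j in range(len(conditions))]
    )
    return conditions, boxcar, convolved


# Hypothetical pseudorandom order: 12 stimulation blocks, 6 per condition.
HYPOTHETICAL_ORDER = ["texture", "location", "location", "texture", "location", "texture",
                      "texture", "location", "texture", "location", "location", "texture"]

conds, haptic_box, haptic_pred = block_design(HYPOTHETICAL_ORDER, stim_s=30.0, rest_s=20.0)
_, visual_box, visual_pred = block_design(HYPOTHETICAL_ORDER, stim_s=18.0, rest_s=18.0)
print(conds, haptic_box.shape, visual_box.shape)  # 310 and 225 volumes per run
```

Each haptic stimulation block covers 15 volumes and each visual block 9 volumes, so every condition contributes 90 (haptic) or 54 (visual) stimulation volumes per run.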

The two haptic runs were conducted one after the other, as were the two visual runs. Half the subjects did the haptic runs first while the other half did the visual runs first. A blindfold was placed inside the head coil at the time of initial setup. Immediately before and after the haptic runs, the subject was carefully moved out of the magnet aperture while remaining in position on the scanner table to allow application or removal of the blindfold.

Image processing and analysis

Image processing and analysis were performed using BrainVoyager QX v1.10.4 (Brain Innovation, Maastricht, Netherlands). Each subject's functional runs were real-time motion-corrected utilizing Siemens 3D-PACE (prospective acquisition motion correction). Functional images were preprocessed utilizing sinc interpolation for slice scan time correction, trilinear-sinc interpolation for intra-session alignment of functional volumes, and high-pass temporal filtering to 3 cycles per run to remove slow drifts in the data. Anatomic 3D images were processed, co-registered with the functional data, and transformed into Talairach space (Talairach and Tournoux, 1988). Activations were localized with respect to 3D cortical anatomy with the help of an MRI atlas (Duvernoy, 1999).

For group analysis, the transformed data were spatially smoothed with an isotropic Gaussian kernel (full width at half maximum 4 mm) and normalized across runs and subjects using the “z-baseline” option in BrainVoyager, which normalizes using time points where the predictor values are at or close to zero (the default criterion of ≤ 0.1 was used). Statistical analysis of group data used random-effects general linear models and contrasts of the texture and location conditions to identify haptic or visual texture-selective or location-selective regions, with correction for multiple comparisons (q<0.001) by the false discovery rate (FDR) approach (Genovese et al., 2002).
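The two normalization and thresholding steps just described can be sketched as follows, under stated assumptions. `z_baseline` is our reading of the z-baseline idea (mean and standard deviation estimated only from time points at which every convolved predictor is ≤ 0.1), not BrainVoyager's code. `fdr_threshold` implements the Benjamini–Hochberg step-up rule, i.e. the variant of the Genovese et al. (2002) procedure that assumes independence or positive dependence among tests. Both function names are hypothetical.

```python
import numpy as np


def z_baseline(ts, predictors, criterion=0.1):
    """Z-normalize a time series using only 'baseline' time points, i.e. those
    at which every predictor (shape: n_timepoints x n_predictors) is <= criterion."""
    ts = np.asarray(ts, dtype=float)
    predictors = np.asarray(predictors, dtype=float)
    baseline = np.all(predictors <= criterion, axis=1)
    mu = ts[baseline].mean()
    sd = ts[baseline].std()
    return (ts - mu) / sd


def fdr_threshold(p_values, q):
    """Benjamini-Hochberg threshold: the largest sorted p(i) with p(i) <= q*i/m,
    or 0.0 if no p-value survives (so nothing is declared significant)."""
    p = np.sort(np.asarray(p_values, dtype=float))
    m = p.size
    passes = p <= q * np.arange(1, m + 1) / m
    if not passes.any():
        return 0.0
    return float(p[np.nonzero(passes)[0].max()])


# Example with made-up p-values: voxels with p <= the returned value survive.
p_vals = [0.001, 0.008, 0.039, 0.041, 0.042, 0.06, 0.074, 0.205, 0.212, 0.216]
print(fdr_threshold(p_vals, q=0.05))  # 0.008
```

Because the step-up rule adapts to the whole distribution of p-values, the resulting threshold lies between the uncorrected level and a Bonferroni cut-off.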

Unisensory activations common to location and texture tasks were identified by computing modality-specific contrasts for each task and taking their conjunction (FDR-corrected, q<0.001), i.e., (HL–VL) + (HT–VT) for the haptic modality and (VL–HL) + (VT–HT) for the visual modality. The purpose of this contrast was to find regions in classic unimodal somatosensory or visual areas that were non-selective, but modality-specific, for the tasks used here. Such regions would be expected to be cortical sources of projections to task-selective regions.
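A conjunction of two contrasts, such as (HL–VL) + (HT–VT), requires a voxel to pass threshold in both contrasts. One common way to implement this is the minimum-statistic approach sketched below, where a voxel's conjunction value is its smallest t across the component contrasts. We assume, without having verified it, that this corresponds to BrainVoyager's conjunction option; the function names and the t maps are hypothetical, and `t_crit` would in practice be the FDR-derived critical t.

```python
import numpy as np


def conjunction_min_t(*t_maps):
    """Minimum-statistic conjunction: each voxel's smallest t across contrasts."""
    return np.min(np.stack([np.asarray(t, dtype=float) for t in t_maps]), axis=0)


def conjunction_mask(*t_maps, t_crit):
    """Voxels at which every component contrast exceeds t_crit (positive direction)."""
    return conjunction_min_t(*t_maps) > t_crit


# Hypothetical t values for three voxels in the (HL-VL) and (HT-VT) contrasts.
t_hl_vl = np.array([3.0, 5.0, -1.0])
t_ht_vt = np.array([4.0, 2.0, 6.0])
print(conjunction_mask(t_hl_vl, t_ht_vt, t_crit=2.5))
```

Only the first voxel survives here: the second is strongly haptic for location but not for texture, and the third only for texture, so neither qualifies as a task-non-selective haptic region.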

Activations were characterized as bisensory using the conjunction of haptic and visual texture-selective regions or haptic and visual location-selective regions, FDR corrected (q<0.05). Such bisensory regions could simply reflect intermingled populations of modality-specific neurons, or actual bisensory neurons. We have previously argued (Stilla and Sathian, 2008) that if such regions show correlations across individuals in the magnitude of activity evoked in the haptic and visual modalities, the probability that they actually house bisensory neurons is higher than in the absence of such correlations. Therefore, the “hot-spots” of these bisensory, task-selective regions were interrogated for the presence of these inter-sensory correlations in activation magnitude across individuals; Bonferroni corrections were applied for the number of regions so tested for each task.
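The inter-sensory correlation test can be sketched as a Pearson correlation, across subjects, between haptic and visual activation magnitudes at each bisensory hot-spot, with a Bonferroni threshold of α divided by the number of hot-spots tested. The helper `p_from_r` converts a correlation into a two-tailed p-value through the t distribution with n − 2 degrees of freedom. Applying it to the r values in Table 6 (n = 18, five regions, so a threshold of 0.01) gives p-values close to those tabulated and flags L LG and L PMv as significant. The function names and the layout of the input arrays (subjects × regions) are assumptions; SciPy is assumed to be available.

```python
import numpy as np
from scipy import stats


def p_from_r(r, n):
    """Two-tailed p-value for a Pearson correlation r computed across n individuals."""
    df = n - 2
    t = r * np.sqrt(df / (1.0 - r**2))
    return float(2.0 * stats.t.sf(abs(t), df))


def intersensory_correlations(haptic, visual, labels, alpha=0.05):
    """Correlate haptic vs. visual activation magnitudes (subjects x regions)
    for each hot-spot and flag those surviving Bonferroni correction."""
    threshold = alpha / len(labels)
    results = {}
    for j, label in enumerate(labels):
        r, p = stats.pearsonr(haptic[:, j], visual[:, j])
        results[label] = (float(r), float(p), bool(p < threshold))
    return results


# Re-checking the r values reported in Table 6 (n = 18 subjects, 5 regions).
table6_r = {"R MOG": -0.51, "L MOG": 0.15, "L LG": 0.67, "L PMv": 0.68, "L IFG/TFS": 0.53}
bonferroni = 0.05 / len(table6_r)
significant = sorted(k for k, r in table6_r.items() if p_from_r(r, 18) < bonferroni)
print(significant)
```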

Further, ANCOVAs were used to find voxels showing correlations across individuals between activation magnitude and behavioral accuracy, using the ANCOVA module in BrainVoyager, with cluster correction within the whole brain (corrected p<0.05). These correlational analyses were used to assess the possible contribution of residual discriminability differences among stimulus sets to the observed activations.
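With a single behavioral covariate, testing the covariate's slope across subjects is equivalent to testing the Pearson correlation between activation magnitude and accuracy, so the voxelwise part of this analysis can be sketched as a vectorized correlation over all voxels at once. The sketch omits the cluster-level correction used in the study, and the function name and array layout (subjects × voxels) are hypothetical.

```python
import numpy as np


def voxelwise_correlation(betas, accuracy):
    """Pearson r, for every voxel, between activation magnitude and accuracy
    across subjects. betas: (n_subjects, n_voxels); accuracy: (n_subjects,)."""
    b = np.asarray(betas, dtype=float)
    a = np.asarray(accuracy, dtype=float)
    b_c = b - b.mean(axis=0)          # center each voxel across subjects
    a_c = a - a.mean()                # center the behavioral covariate
    num = a_c @ b_c
    den = np.sqrt((a_c**2).sum() * (b_c**2).sum(axis=0))
    return num / den


# Toy example: 4 subjects, 3 voxels (positively, negatively and un-correlated).
acc = np.array([1.0, 2.0, 3.0, 4.0])
betas = np.column_stack([acc, -acc, [1.0, -1.0, -1.0, 1.0]])
print(voxelwise_correlation(betas, acc))
```

A negative r at a voxel whose group BOLD signal is below baseline corresponds to the pattern reported in the Results: greater suppression accompanying better accuracy.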

Eye movement measurement

Eye movements were measured using predictive eye estimation regression (PEER), a novel method developed by some of the authors and their colleagues (LaConte et al., 2006, 2007). PEER is a simple, image-based approach utilizing the MR signal from the orbits that changes as a function of eye position. Specifically, PEER uses multivariate regression based on the support vector machine (SVM) to model the relationship between the EPI images and direction of gaze (LaConte et al., 2006, 2007). Three PEER calibration runs were used – at the beginning, middle, and end of each subject's functional session. These calibration runs matched the scanning parameters of the haptic and visual runs and consisted of a fixation circle that rastered twice through nine positions on a 3×3 grid, its position changing every 6 s (3 TRs). The grid locations were at the center and ±25% of the visual field, which measured approximately 20° horizontally and 15° vertically. Based on the averages across the three calibration runs, multivariate SVM regression was used to model eye position along the horizontal and vertical axes, on a TR-by-TR basis. These estimates of eye position were averaged across TRs within each condition to yield subject-specific values for net eye movement; their absolute values were treated similarly to yield subject-specific values for total eye movement irrespective of directions along each axis.
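The calibration schedule and the two eye-movement summaries described above can be sketched as follows. We assume that "±25% of the visual field" means ±5° horizontally (25% of 20°) and ±3.75° vertically (25% of 15°), and that the fixation circle visits the 3×3 grid row by row starting at the top left; both are assumptions, as are the function names. The support vector regression from orbital EPI voxels to gaze position is not shown; one possible choice would be scikit-learn's `SVR` with a linear kernel, fitted separately for each axis.

```python
import numpy as np


def peer_calibration_targets(width_deg=20.0, height_deg=15.0, frac=0.25,
                             trs_per_position=3, n_passes=2):
    """Per-TR fixation targets (horizontal, vertical) in degrees for a 3x3 grid
    at the centre and +/- frac of the field, visited row by row (assumed order)."""
    xs = np.array([-frac, 0.0, frac]) * width_deg
    ys = np.array([frac, 0.0, -frac]) * height_deg
    grid = [(x, y) for y in ys for x in xs]
    per_pass = [pos for pos in grid for _ in range(trs_per_position)]
    return np.array(per_pass * n_passes)


def eye_movement_summary(estimates, labels, condition):
    """Net (signed mean) and total (mean absolute) estimated eye position per
    axis, over the TRs labelled with one condition."""
    est = np.asarray(estimates, dtype=float)[np.asarray(labels) == condition]
    return est.mean(axis=0), np.abs(est).mean(axis=0)


targets = peer_calibration_targets()
print(targets.shape)  # 9 positions x 3 TRs x 2 passes = 54 TRs, 2 axes
```

The distinction between the two summaries matters because leftward and rightward excursions cancel in the net value but not in the total.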

Effective connectivity analysis

To test whether somatosensory and visual information processing segregated into distinct pathways for texture and location, effective connectivity analyses were performed. Regions of interest (ROIs) were selected from the unisensory non-selective, the unisensory task-selective and the bisensory task-selective regions, yielding one ROI set for vision and one for touch. The foci selected are indicated by asterisks in Tables 1–6 and Supplementary Tables 1 and 2.

Table 1.

HL-selective activations. x,y,z = Talairach coordinates; tmax = peak t value.

| Region | x | y | z | tmax |
|---|---|---|---|---|
| L FEF | −18 | −9 | 54 | 13.9 |
| R FEF (2 foci) | 22 | −6 | 55 | 10.8 |
| | 33 | −10 | 50 | 9.5 |
| L PrCG* | −31 | −31 | 51 | 6.5 |
| L smIPS (3 foci) | −28 | −45 | 52 | 10.5 |
| | −35 | −43 | 52 | 10.3 |
| | −27 | −54 | 56 | 9.3 |
| L pIPS | −10 | −72 | 41 | 14.1 |
| R smIPS | 25 | −52 | 52 | 8.7 |
| R pIPS | 15 | −67 | 50 | 9.6 |
| R aIPS* | 31 | −44 | 40 | 9.6 |
| R SMG | 50 | −40 | 37 | 10.6 |
| R MFG* | 34 | 29 | 35 | 11.6 |
| L mid CiS* | −11 | −6 | 43 | 7.4 |
| R pCiS* | 13 | −32 | 37 | 6.6 |
| R pvIPS* | 32 | −73 | 29 | 6.3 |
| R PMv* | 49 | 6 | 16 | 6.9 |
| L thalamus | −15 | −24 | 10 | 7.6 |
| R thalamus | 16 | −25 | 14 | 8.6 |
| cerebellar vermis | 3 | −53 | −13 | 7 |
| R supr cerebellum (2 foci) | 30 | −34 | −26 | 7 |
| | 30 | −42 | −26 | 7.8 |
| L supr cerebellum (2 foci) | −34 | −36 | −28 | 8.1 |
| | −25 | −55 | −25 | 6.2 |

Table 6.

Bisensory texture-selective activations from the conjunction contrast of (HT–HL) + (VT–VL). x,y,z = Talairach coordinates; tmax = peak t value; r(VH) and p values refer to inter-sensory (visual vs. haptic) correlations in activation magnitude across individuals (significant values, Bonferroni-corrected, in bold).

| Region | x | y | z | tmax | r (VH) | p |
|---|---|---|---|---|---|---|
| R MOG* | 23 | −93 | 7 | 3.8 | −0.51 | 0.03 |
| L MOG* | −21 | −94 | 12 | 3.9 | 0.15 | 0.56 |
| L LG* | −19 | −52 | 0 | 3.5 | **0.67** | **0.002** |
| L PMv* | −43 | 8 | 28 | 4.2 | **0.68** | **0.002** |
| L IFG/TFS* | −41 | 32 | 20 | 3.8 | 0.53 | 0.02 |

The task-specific time series data from these ROIs were extracted and subjected to multivariate Granger causality analyses (GCA) using methods elaborated previously (Deshpande et al., 2008, 2010a,b). Briefly, the approach involves assessing the extent to which future values of one time series can be predicted from past values of another (Granger, 1969), explicitly factoring out instantaneous correlations, implemented in a multivariate framework (Deshpande et al., 2010a,b). A network reduction procedure was employed, starting with a relatively large initial set of ROIs selected as outlined above (25 ROIs for the haptic conditions and 20 for the visual conditions), followed by iterative elimination of redundant ROIs until no redundant ROIs remained, as described previously (Deshpande et al., 2008).
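The core idea of Granger causality can be illustrated with a deliberately simplified, bivariate sketch: fit autoregressive models by ordinary least squares and compare the residual variance of predicting y from its own past with that obtained when the past of x is added, reporting the log ratio as the influence index. Unlike the multivariate method of Deshpande et al. (2010a,b), this sketch neither conditions on the other ROIs nor models zero-lag (instantaneous) terms, and it performs no significance testing. The function names and the lag order are hypothetical choices.

```python
import numpy as np


def _residual_variance(target, regressors):
    """Residual variance of an ordinary least-squares fit (with intercept)."""
    X = np.column_stack([np.ones(len(target))] + regressors)
    beta, *_ = np.linalg.lstsq(X, target, rcond=None)
    return (target - X @ beta).var()


def granger_index(x, y, order=1):
    """Log ratio of residual variances for predicting y from its own past,
    without vs. with the past of x (larger = stronger x -> y influence)."""
    x = np.asarray(x, dtype=float)
    y = np.asarray(y, dtype=float)
    n = len(y)
    target = y[order:]
    own_past = [y[order - k:n - k] for k in range(1, order + 1)]
    other_past = [x[order - k:n - k] for k in range(1, order + 1)]
    restricted = _residual_variance(target, own_past)
    full = _residual_variance(target, own_past + other_past)
    return float(np.log(restricted / full))


# Simulated pair in which x drives y with a one-sample lag.
rng = np.random.default_rng(0)
n = 2000
x = rng.standard_normal(n)
y = np.zeros(n)
y[1:] = 0.8 * x[:-1] + 0.2 * rng.standard_normal(n - 1)
print(granger_index(x, y), granger_index(y, x))  # large for x -> y, near zero for y -> x
```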

A simulation study found that a Bayesian approach involving computation of a statistic known as Patel's tau (Patel et al., 2006) gave better estimates of directionality, but not of magnitude, than GCA (Smith et al., 2011). On the other hand, GCA has been shown to be helpful and fairly accurate in exploratory effective connectivity analysis (Rogers et al., 2010; Schippers et al., 2011), and Bayesian approaches in general do not lend themselves well to exploratory analyses of large ROI sets. Therefore, at each iterative step of network reduction, we used GCA as an exploratory method, and subjected the directionality of significant paths emerging from this analysis to confirmatory testing using Patel's tau method (Patel et al., 2006). Thus, the GCA-determined magnitudes of significant paths whose directionality was subsequently confirmed using Patel's tau were included in the redundancy analysis during the network reduction process. This approach allowed us to exploit the advantages of each method, using one to compensate for limitations of the other.
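Patel's tau operates on binarized time series. With θ1 = P(a and b active), θ2 = P(a active, b inactive) and θ3 = P(a inactive, b active), τ = 1 − (θ1 + θ3)/(θ1 + θ2) when θ2 ≥ θ3, and τ = (θ1 + θ2)/(θ1 + θ3) − 1 otherwise. Following Patel et al. (2006), as summarized by Smith et al. (2011) and as we understand it, a positive τ is read as a being upstream of b. The sketch below covers only τ: it omits the companion κ dependence measure and any Bayesian inference on the θ values. The percentile binarization and the function names are hypothetical choices.

```python
import numpy as np


def binarize(ts, percentile=90.0):
    """Hypothetical binarization: 1 where the signal exceeds a chosen percentile."""
    ts = np.asarray(ts, dtype=float)
    return (ts > np.percentile(ts, percentile)).astype(int)


def patel_tau(a, b):
    """Patel's tau for two binary series (positive => a read as upstream of b)."""
    a = np.asarray(a, dtype=bool)
    b = np.asarray(b, dtype=bool)
    theta1 = np.mean(a & b)          # both active
    theta2 = np.mean(a & ~b)         # only a active
    theta3 = np.mean(~a & b)         # only b active
    p_a, p_b = theta1 + theta2, theta1 + theta3
    if p_a == 0 or p_b == 0:
        raise ValueError("both series need at least one active time point")
    if theta2 >= theta3:
        return float(1.0 - p_b / p_a)
    return float(p_a / p_b - 1.0)


# Toy series: whenever b is active, a is active too (b's activity is a subset of a's).
a = [1, 1, 1, 1, 0, 0, 0, 0]
b = [1, 1, 0, 0, 0, 0, 0, 0]
print(patel_tau(a, b), patel_tau(b, a))
```

The measure is antisymmetric (τ(a, b) = −τ(b, a)), which is what makes it usable for confirming the direction of a path that GCA has already identified.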

RESULTS

Behavioral

Accuracy (mean % correct ± SEM) was 81.4 ± 2.8% in the visual texture (VT) task, 91.1 ± 1.9% in the visual location (VL) task, 87.7 ± 2.0% in the haptic texture (HT) task and 84.4 ± 1.9% in the haptic location (HL) task. A two-way (modality × task) ANOVA showed no main effect of either modality (F(1,17) = 0.01; p = 0.91) or task (F(1,17) = 3.3; p = 0.09), but there was a significant interaction between these two factors (F(1,17) = 761.2; p < 0.001). Paired t-tests (Bonferroni-corrected) showed significant differences between VT and VL (t(17) = −3.8; p = 0.001) and between VL and HL (t(17) = 3.4; p = 0.003), but not between HT and HL (t(17) = 2.1; p = 0.06) or between VT and HT (t(17) = −2.5; p = 0.02).
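The pattern of significance above is consistent with the reported p-values being uncorrected and compared against a Bonferroni threshold of 0.05/4 = 0.0125 for the four paired comparisons; under that reading, p = 0.02 does not survive correction. The short check below makes this arithmetic explicit, assuming the correction family is exactly the four comparisons listed.

```python
import math

ALPHA = 0.05
# p-values as reported for the four paired comparisons (assumed uncorrected).
reported = {"VT vs VL": 0.001, "VL vs HL": 0.003, "HT vs HL": 0.06, "VT vs HT": 0.02}
threshold = ALPHA / len(reported)  # Bonferroni: 0.05 / 4 comparisons
significant = sorted(k for k, p in reported.items() if p < threshold)
print(f"threshold = {threshold:.4f}; significant: {significant}")
```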

Imaging

UNISENSORY ACTIVATIONS COMMON TO TASKS

Haptic

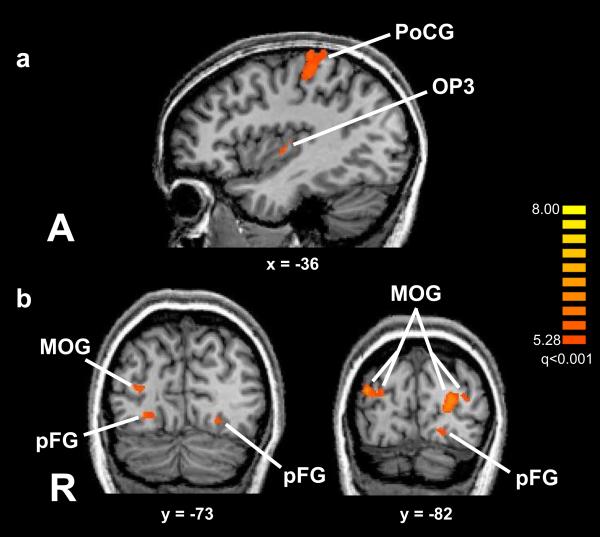

Unisensory haptic activations common to both location and texture tasks were identified using a conjunction of (HL–VL) and (HT–VT) contrasts. As illustrated in Figure 1a and detailed in Supplementary Table 1, these activations were in left S1, including the PoCG and extending anteriorly into the precentral gyrus (PrCG), in the parietal opercular field OP3, in the left mid-cingulate sulcus and in the right superior cerebellum. The OP3 focus was part of a larger texture-selective region (see below): the larger region containing this focus was texture-selective as a whole, but this particular focus also showed a significant response in the HL condition (albeit smaller in magnitude than in the HT condition).

Figure 1.

Unisensory, non-selective activations, (a) haptic, (b) visual, displayed on slices through MR images (Talairach plane is indicated below each slice). Color t-scale on right; abbreviations as in text.

Visual

Unisensory visual activations common to both location and texture tasks were identified using a conjunction of (VL–HL) and (VT–HT) contrasts. These activations (Figure 1b; Supplementary Table 2) were in bilateral, contiguous sectors of visual cortex, the inferior (occipitotemporal) parts of which exhibited texture-selectivity while the remaining parts were either non-selective, or only weakly selective for either task.

UNISENSORY TASK-SPECIFIC ACTIVATIONS

Haptic

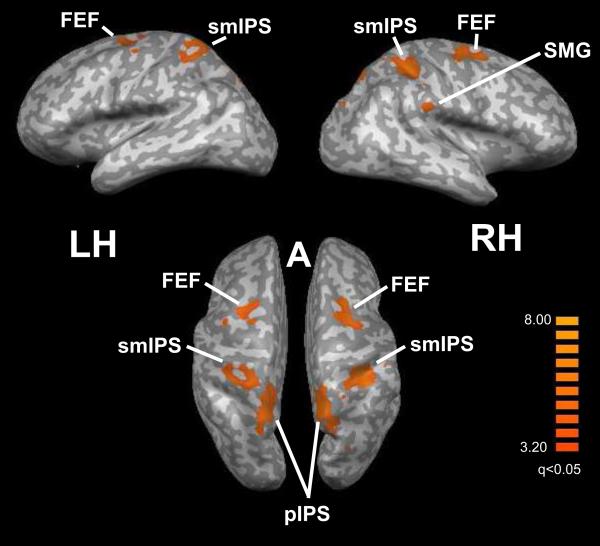

Haptic location-selective

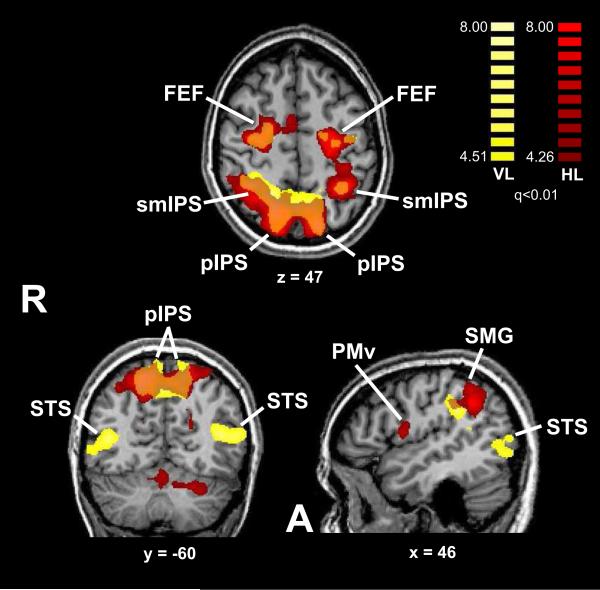

HL-selective activations were identified using the HL–HT contrast. Table 1 lists these activations, of which the major ones are shown in Figure 2 (red). These activations were found bilaterally in the frontal eye field (FEF), along extensive sectors of the IPS, in the ventral posterolateral (VPL) nucleus of the thalamus bilaterally, and in some motor areas. Typical time courses are shown in Figure 3. ANCOVAs were used to test for correlations across individuals between activation magnitude and behavioral accuracy: no positive correlations were found, but a number of regions exhibited significant negative correlations (Supplementary Table 3). These regions were distributed through frontal and occipitotemporal cortex and the cerebellum, but also included parts of right OP3. All these regions were independent of the activation map, except for a focus in the left superior cerebellum, and examination of the BOLD signal changes in these regions in the entire group revealed that they were predominantly negative with respect to baseline, i.e., greater suppression of the BOLD signal in these regions correlated with better accuracy.

Figure 2.

Location-selective activations displayed on slices through MR images (Talairach plane is indicated below each slice). Color t-scales on right (yellow: visual; red: haptic); note display threshold q<0.01; abbreviations as in text.

Figure 3.

Representative BOLD signal time courses; error bars: SEM.

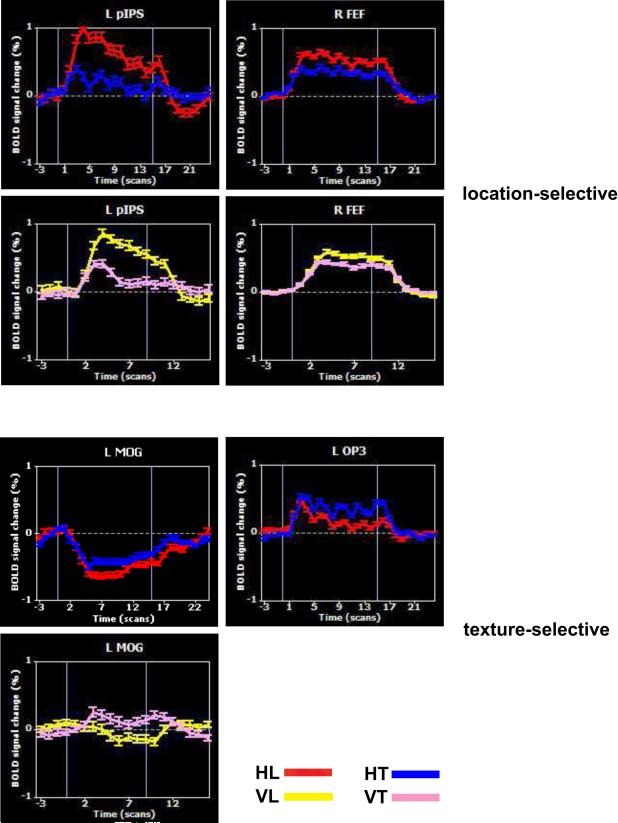

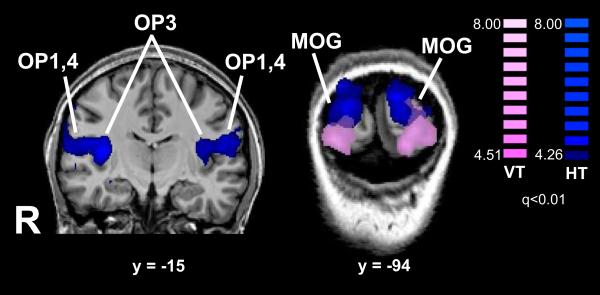

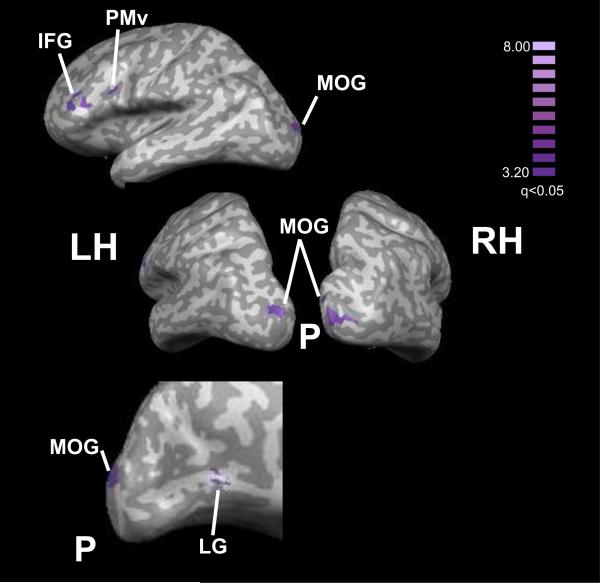

Haptic texture-selective

The HT–HL contrast was used to reveal regions with HT-selectivity (Table 2; Figure 4, blue). These regions included a swath of cortex extending across the parietal opercular fields OP1, OP3, and OP4 bilaterally; parts of visual cortex in the middle occipital gyri (MOG) bilaterally; and a number of left inferior frontal foci: in ventral premotor cortex (PMv), the inferior frontal gyrus (IFG) and orbitofrontal cortex (OFC). The visual cortical and some of the frontal cortical regions showed negative BOLD signals, i.e. the “activations” here actually reflected differential deactivation with respect to baseline (e.g., Figure 3, L MOG). The parietal opercular regions, however, were truly differentially activated (e.g., Figure 3, L OP3). Again, ANCOVAs showed no positive correlations with behavioral accuracy, with significant negative correlations in parts of visual cortex and the cerebellum (Supplementary Table 4) that were largely independent of the activations and again had negative BOLD signals in the subject group.

Table 2.

HT-selective activations. x,y,z = Talairach coordinates; tmax = peak t value.

| Region | x | y | z | tmax |
|---|---|---|---|---|
| L PMv | −44 | 3 | 25 | 7.7 |
| L IFG | −39 | 31 | 17 | 6.7 |
| L OP3* | −35 | −10 | 13 | 9.7 |
| L OP4/1* | −47 | −11 | 16 | 8.2 |
| R OP3* | 37 | −10 | 13 | 12.3 |
| R OP4/1* | 53 | −10 | 16 | 7.8 |
| L OFC* | −24 | 30 | −2 | 6.7 |
| R MOG (2 foci) | 18 | −79 | 13 | 8.5 |
| | 15 | −91 | 19 | 8.5 |
| L MOG (3 foci) | −8 | −91 | 25 | 7.8 |
| | −3 | −87 | 26 | 7.3 |
| | −10 | −94 | 22 | 8.7 |

Figure 4.

Texture-selective activations displayed on slices through MR images (Talairach plane is indicated below each slice). Color t-scales on right (pink: visual; blue: haptic); note display threshold q<0.01; abbreviations as in text.

Visual

Visual location-selective

Location-selectivity in the visual modality (VL–VT contrast) was found bilaterally in the FEF, along and near the IPS, and in the superior temporal sulcus (STS), as detailed in Table 3 and shown in Figure 2 (yellow). Typical time courses are shown in Figure 3. ANCOVAs showed significant positive correlations with behavioral accuracy at some parietal and temporal cortical foci and in parts of the cerebellum, independent of the activations (Supplementary Table 5). No negative correlations were found.

Table 3.

VL-selective activations. x,y,z = Talairach coordinates; tmax = peak t value.

| Region | x | y | z | tmax |
|---|---|---|---|---|
| R FEF (2 foci) | 27 | −10 | 46 | 9.1 |
| | 22 | −4 | 49 | 7.8 |
| L pIPS (2 foci) | −14 | −58 | 55 | 7.9 |
| | −14 | −71 | 49 | 8.8 |
| R precuneus* | 1 | −58 | 47 | 7.4 |
| R poCS* | 35 | −42 | 53 | 9.1 |
| R smIPS | 18 | −48 | 50 | 8.1 |
| R pIPS | 18 | −61 | 50 | 9.1 |
| R aIPS* | 32 | −36 | 37 | 8.6 |
| R SMG | 46 | −31 | 30 | 8.9 |
| L pvIPS* | −28 | −79 | 29 | 9 |
| L STS* | −43 | −64 | 13 | 9.4 |
| R STS* | 39 | −57 | 11 | 8.8 |

Visual texture-selective

Table 4 and Figure 4 (pink) show that VT-selectivity, from the VT–VL contrast, was found in parts of visual cortex: in the left MOG and the right posterior fusiform gyrus (pFG). Unlike HT-selectivity in visual cortex, VT-selectivity reflected true differential activation (Figure 3, L MOG). ANCOVAs revealed no positive correlations with behavioral accuracy; a significant negative correlation was found at a single focus in left lateral OFC, where group activation was minimal and non-selective.

Table 4.

VT-selective activations. x,y,z = Talairach coordinates; tmax = peak t value.

| Region | x | y | z | tmax |
|---|---|---|---|---|
| L MOG (2 foci) | −32 | −88 | −1 | 8.3 |
| | −18 | −91 | −5 | 9.1 |
| R pFG* | 26 | −73 | −13 | 8.3 |

Order effects

Since half the subjects underwent haptic scans first and the other half, visual scans first, we tested for activation differences as a consequence of this order of modalities, using interaction contrasts between order and the within-modal task-specific contrasts just described. No significant differences survived correction for multiple comparisons at the q<0.001 threshold; this was true even at a more liberal threshold (FDR q<0.05). Thus, the observed task-selective activations are not attributable to either the sub-group who experienced visual stimulation first or the sub-group who experienced haptic stimulation first.
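The thresholds quoted in this section are false discovery rate (FDR) q values. As a generic illustration of how such a threshold behaves, the sketch below applies the standard Benjamini-Hochberg step-up procedure to a list of p values. It assumes that procedure and does not reproduce the software or correction actually used in the study; the p values are invented.

```python
def bh_threshold(p_values, q):
    """Largest p value surviving Benjamini-Hochberg FDR control at level q,
    or None if no test survives."""
    m = len(p_values)
    cutoff = None
    # Step-up rule: find the largest rank k with p_(k) <= (k / m) * q.
    for k, p in enumerate(sorted(p_values), start=1):
        if p <= k / m * q:
            cutoff = p
    return cutoff


def surviving(p_values, q):
    """Indices of the tests declared significant at FDR level q."""
    cutoff = bh_threshold(p_values, q)
    if cutoff is None:
        return []
    return [i for i, p in enumerate(p_values) if p <= cutoff]


# Hypothetical p values for a handful of voxels (illustration only).
p = [0.00001, 0.0004, 0.003, 0.02, 0.04, 0.3, 0.7]
print(surviving(p, 0.001))  # [0]
print(surviving(p, 0.05))   # [0, 1, 2, 3]
```

Raising q from 0.001 to 0.05 admits more tests, which is why finding no order effects even at q<0.05 is the stronger evidence against such effects.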

BISENSORY TASK-SPECIFIC ACTIVATIONS

Location-selective

Bisensory location-selective activations were revealed by the conjunction of the HL–HT and VL–VT contrasts. These regions, shown in Figure 5 and listed in Table 5, were mainly in the FEF and along the IPS bilaterally. Among these regions, three showed significant positive correlations across subjects between the magnitudes of activation in the HL and VL tasks. Two foci were in the right FEF; the third was in the left posterior IPS (pIPS), probably corresponding to the caudal intraparietal area (CIP) (Shikata et al., 2008).
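One common way to implement a conjunction of two contrasts is the minimum-statistic approach, in which a voxel counts as bisensory only if it passes threshold in both contrasts, i.e. if the smaller of its two t values exceeds the threshold. The sketch below assumes that rule; the text does not specify the exact conjunction implementation, and the voxel names, t values and threshold are hypothetical.

```python
def conjunction(t_haptic, t_visual, t_crit):
    """Minimum-statistic conjunction: keep voxels where both contrasts
    (e.g. HL-HT and VL-VT) exceed t_crit; report min(t) as the conjunction t."""
    result = {}
    for voxel, t_h in t_haptic.items():
        t_v = t_visual.get(voxel)
        if t_v is None:  # voxel missing from one map: cannot be bisensory
            continue
        t_min = min(t_h, t_v)
        if t_min > t_crit:
            result[voxel] = t_min
    return result


# Hypothetical t values for three voxels (illustration only).
t_hl_minus_ht = {"v1": 5.1, "v2": 6.0, "v3": 2.0}
t_vl_minus_vt = {"v1": 4.4, "v2": 1.5, "v3": 7.2}
print(conjunction(t_hl_minus_ht, t_vl_minus_vt, t_crit=3.5))  # {'v1': 4.4}
```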

Figure 5.

Bisensory location-selective activations displayed on inflated hemispheric representations. Color t-scale on right; abbreviations as in text.

Table 5.

Bisensory location-selective activations from the conjunction contrast of (HL-HT) + (VL-VT). x,y,z = Talairach coordinates; tmax = peak t value; r(VH) and p values refer to inter-task correlations (significant values, Bonferroni-corrected, in bold).

| Region | x | y | z | tmax | r (VH) | p |
|---|---|---|---|---|---|---|
| R FEF (2 foci)* | 28 | −12 | 48 | 4.5 | **0.63** | **0.0048** |
| | 21 | −4 | 50 | 4.6 | **0.8** | **0.00007** |
| L FEF (2 foci)* | −22 | −11 | 58 | 4.3 | 0.51 | 0.03 |
| | −34 | −11 | 48 | 4.2 | 0.5 | 0.04 |
| R smIPS* | 34 | −42 | 49 | 4.7 | 0.26 | 0.3 |
| R pIPS* | 15 | −61 | 49 | 4.6 | 0.56 | 0.02 |
| L smIPS* | −31 | −45 | 48 | 4.5 | −0.02 | 0.94 |
| L pIPS* | −13 | −70 | 48 | 4.8 | **0.78** | **0.0001** |
| R SMG* | 53 | −31 | 33 | 4.2 | 0.55 | 0.02 |
| cerebellar vermis | 1 | −64 | −20 | 3.7 | 0.44 | 0.07 |
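The inter-task correlations in Table 5 can be checked with a short calculation. Assuming n = 18 subjects (the group size given in the Discussion), a Pearson r converts to a two-tailed p value through Student's t distribution with n − 2 = 16 degrees of freedom; for even degrees of freedom this has an exact finite series, used below so that only plain Python is needed. The Bonferroni family size of 10 (one test per focus in Table 5) and the "a"/"b" labels for paired foci are our assumptions. Because the tabulated r values are rounded to two decimals, recomputed p values differ slightly from the reported ones, so the Bonferroni step is applied to the reported p values.

```python
def pearson_p_two_tailed(r, n):
    """Two-tailed p value for a Pearson correlation r across n subjects.

    With df = n - 2 and t = r * sqrt(df / (1 - r**2)), the angle theta with
    tan(theta) = t / sqrt(df) has sin(theta) = |r| and cos(theta)**2 = 1 - r**2.
    For even df, P(|T| < t) = sin(theta) * sum_k c_k * cos(theta)**(2k),
    with c_0 = 1 and c_k = c_{k-1} * (2k - 1) / (2k) for 0 <= k < df / 2.
    """
    df = n - 2
    if df <= 0 or df % 2:
        raise ValueError("this sketch handles only even df = n - 2")
    cos2 = 1.0 - r * r
    term, series = 1.0, 1.0
    for k in range(1, df // 2):
        term *= (2 * k - 1) / (2 * k) * cos2
        series += term
    return 1.0 - abs(r) * series


N_SUBJECTS = 18  # group size stated in the Discussion

# Reported (rounded) values from Table 5: (focus, r, p).
TABLE5 = [
    ("R FEF a", 0.63, 0.0048), ("R FEF b", 0.80, 0.00007),
    ("L FEF a", 0.51, 0.03), ("L FEF b", 0.50, 0.04),
    ("R smIPS", 0.26, 0.3), ("R pIPS", 0.56, 0.02),
    ("L smIPS", -0.02, 0.94), ("L pIPS", 0.78, 0.0001),
    ("R SMG", 0.55, 0.02), ("cerebellar vermis", 0.44, 0.07),
]

alpha = 0.05 / len(TABLE5)  # assumed Bonferroni family of 10 foci -> 0.005
for name, r, p in TABLE5:
    print(f"{name}: reported p = {p}, recomputed p = {pearson_p_two_tailed(r, N_SUBJECTS):.4f}")

significant = [name for name, _, p in TABLE5 if p < alpha]
print(significant)  # ['R FEF a', 'R FEF b', 'L pIPS']
```

Under these assumptions exactly the three foci named in the text (two in the right FEF, one in the left pIPS) pass the corrected threshold.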

Texture-selective

The conjunction of the HT–HL and VT–VL contrasts was used to identify bisensory texture-selective regions (Table 6; Figure 6), which lay in the MOG bilaterally, in the left lingual gyrus (LG), in the left PMv and in the left IFG extending into the inferior frontal sulcus (IFS). Although the frontal cortical foci did not feature in the list of VT-selective regions, they were present in the HT-selective set as well as in the bisensory texture-selective set; this discrepancy probably reflects statistical threshold effects. Of the bisensory regions, the left LG and left PMv foci showed significant positive correlations across subjects between the magnitudes of activation in the HT and VT tasks.

Figure 6.

Bisensory texture-selective activations displayed on inflated hemispheric representations. Color t-scale on right; abbreviations as in text.

EYE MOVEMENTS

Even though participants were required to fixate during visual presentations, and were blindfolded with their eyes closed during haptic presentations, location-selective activations in either modality, especially in the FEF and IPS, could have been due to greater eye movements in the location conditions than in the texture conditions (Corbetta and Shulman, 2002). To assess this possibility, eye movements were monitored using a novel method that can record eye movements even when the eyes are closed (see Methods). Table 7 shows that although total eye movements (cumulative transient deviations of eye position, regardless of sign) were nonzero, net eye movements (drift over time from the initial position) were negligible in all conditions, because movements in opposite directions along each axis cancelled out. Critically, both measures were very similar for the location and texture conditions within each modality, and t-tests revealed no significant differences between these conditions in either modality (Table 7). Thus, there were no detectable differences in eye movements between location and texture conditions that could account for location-selective activations in regions known to be associated with eye movements.
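The distinction between net and total eye movement can be made concrete. Assuming a sampled eye-position trace along one axis (in degrees), net movement is the signed sum of sample-to-sample displacements, which reduces to final minus initial position, whereas total movement sums the absolute displacements. The trace below is invented for illustration, and the exact definitions used in the study may differ in detail.

```python
def net_and_total(positions):
    """Net (signed drift) and total (unsigned, cumulative) movement of a
    1-D eye-position trace in degrees."""
    steps = [b - a for a, b in zip(positions, positions[1:])]
    net = sum(steps)                    # equals positions[-1] - positions[0]
    total = sum(abs(s) for s in steps)  # every excursion counts, whatever its sign
    return net, total


# Hypothetical horizontal trace: small excursions that largely cancel out.
trace = [0.0, 0.5, -0.3, 0.4, -0.2, 0.1]
net, total = net_and_total(trace)
print(round(net, 3), round(total, 3))  # 0.1 2.9
```

Oscillations around fixation therefore inflate total movement while leaving net drift near zero, the pattern seen in every condition of Table 7.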

Table 7.

Net eye movement and total eye movement (°) in horizontal and vertical directions during each condition; p value refers to t-tests (two-tailed) contrasting location and texture for each set of measures.

| Condition | Net: location | Net: texture | p | Total: location | Total: texture | p |
|---|---|---|---|---|---|---|
| Haptic: horizontal | 0.089 | 0.084 | 0.94 | 3.959 | 3.843 | 0.78 |
| Haptic: vertical | −0.058 | 0.003 | 0.3 | 2.424 | 2.160 | 0.44 |
| Visual: horizontal | 0.055 | 0.017 | 0.35 | 1.443 | 1.445 | 0.99 |
| Visual: vertical | −0.072 | 0.021 | 0.18 | 1.412 | 1.310 | 0.59 |

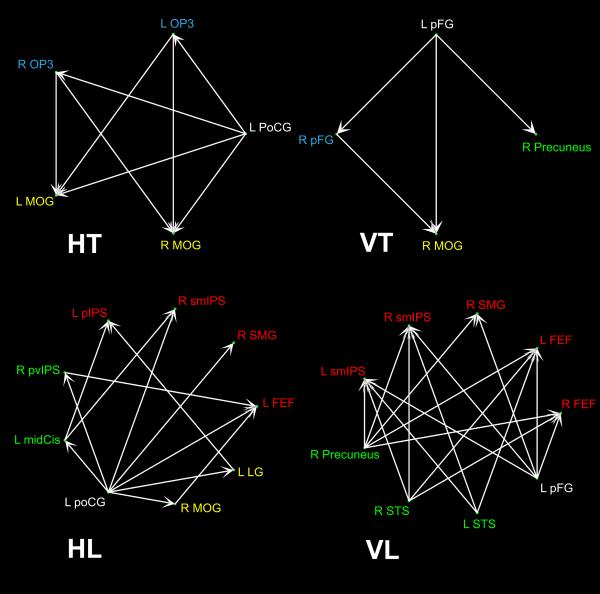

CONNECTIVITY ANALYSES

As shown in Supplementary Table 6 and Figure 7, there was clear segregation of pathways between texture and location processing in each modality. As outlined in the Methods, the ROIs for connectivity analyses were chosen to represent unisensory non-selective, unisensory task-selective and bisensory task-selective areas. For HT, the left PoCG projected to OP3 in the parietal operculum bilaterally; in turn, these projected to the MOG bilaterally. This implies that unisensory haptic regions non-selective for task, in the PoCG of S1, projected to HT-selective parietal opercular cortex (OP3), and from there on to bisensory texture-selective occipital cortex (MOG). For VT, there was a similar pattern of information flow: the left pFG, a visual non-selective area, projected to VT-selective cortex in the right pFG, which in turn projected to bisensory texture-selective cortex in the right MOG.

The connectivity patterns for the location tasks were more complex than those for the texture tasks. For HL, the left PoCG, located in S1 and haptically non-selective, projected directly or indirectly to bisensory location-selective areas: the left FEF or various regions in and around the IPS. The IPS regions included the right supramarginal gyrus (SMG), a focus in the right superomedial IPS (smIPS) that probably corresponds to the medial intraparietal area (MIP) (Shikata et al., 2008), and the left pIPS. For VL, both visual non-selective (left pFG) and VL-selective (right precuneus, bilateral STS) regions projected to bisensory location-selective regions in the FEF and IPS bilaterally; the IPS regions included the right SMG and bilateral smIPS. Thus, overall, the connectivity patterns were consistent with serial flow of information from unisensory regions lacking task-selectivity to bisensory, task-selective regions, reflecting segregation of task-associated pathways but convergence across vision and touch.
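The serial-flow interpretation can be stated as a simple check on the accepted paths: label each ROI by processing stage (0, unisensory non-selective; 1, unisensory task-selective; 2, bisensory task-selective, following the colour key of Figure 7) and verify that no path runs from a later stage back to an earlier one. The sketch encodes only the texture paths described above and pools left and right OP3 and MOG into single nodes, because the text does not specify which hemisphere projects to which; the node names and this pooling are our simplifications.

```python
# Stage labels: 0 = unisensory non-selective, 1 = unisensory task-selective,
# 2 = bisensory task-selective (hypothetical node names, hemispheres pooled).
STAGE = {
    "L PoCG": 0, "OP3 (bilateral)": 1, "MOG (bilateral)": 2,  # haptic texture
    "L pFG": 0, "R pFG": 1, "R MOG": 2,                       # visual texture
}

# Texture paths as summarised in the text.
PATHS = [
    ("L PoCG", "OP3 (bilateral)"),
    ("OP3 (bilateral)", "MOG (bilateral)"),
    ("L pFG", "R pFG"),
    ("R pFG", "R MOG"),
]


def is_feedforward(paths, stage):
    """True if every path goes from a lower to a strictly higher stage."""
    return all(stage[src] < stage[dst] for src, dst in paths)


def chains(paths, start):
    """Enumerate maximal chains starting at `start` (graph assumed acyclic)."""
    nexts = [dst for src, dst in paths if src == start]
    if not nexts:
        return [[start]]
    return [[start] + rest for n in nexts for rest in chains(paths, n)]


print(is_feedforward(PATHS, STAGE))  # True
print(chains(PATHS, "L pFG"))        # [['L pFG', 'R pFG', 'R MOG']]
```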

Figure 7.

Significant paths identified by connectivity analyses (see text for details and abbreviations). Color key for foci: white, unisensory non-selective; blue, unisensory texture-selective; yellow, bisensory texture-selective; green, unisensory location-selective; red, bisensory location-selective.

DISCUSSION

Analysis of the behavioral data obtained during scanning showed that subjects performed at a high level of accuracy, i.e. above 80% correct in all tasks. Although accuracy on the VL task was significantly higher than on either the VT or HL task, this did not affect the activations, since voxels independently identified as showing correlations (positive or negative) between activation magnitude and accuracy in each task were essentially non-overlapping with the corresponding activation maps. As expected, unisensory activations common to location and texture tasks were found in somatosensory cortical regions and parts of the motor circuit for haptic stimulation, and in visual cortical regions for visual stimulation. Note that the selectivity observed for either task was relative rather than absolute in most regions, as noted earlier (Stilla and Sathian, 2008).

Texture-selectivity

HT-selectivity, without accompanying VT-selectivity or visual responsiveness, was found in the parietal operculum, including OP4, OP1 and OP3. This replicates our previous report of HT-selectivity in this region (Stilla and Sathian, 2008). In this earlier study, HT-selectivity was observed relative to haptic shape in a small number of participants (n=6) whereas in the present study, HT-selectivity was found relative to HL in a larger participant group (n=18). Therefore, taking the present study together with our earlier study (Stilla and Sathian, 2008), the somatosensory fields of the parietal operculum can be regarded as unisensory tactile areas with specialization for processing surface texture. This accords with some earlier studies showing parietal opercular selectivity for perception of roughness (Ledberg et al., 1995; Roland et al., 1998) or hardness (Servos et al., 2001), and with findings that S2 neuronal responses encode roughness (Jiang et al., 1997; Pruett et al., 2000; Sinclair et al., 1996). As pointed out earlier, texture is an object property that is highly salient to touch; moreover, touch excels at extracting fine texture information and can dominate the visual percept (reviewed by Whitaker et al., 2008). Thus, the finding of HT-selectivity in the parietal operculum is consistent with Mishkin's (1979) postulate that “what” information acquired through touch is directed into a somatosensory pathway involving the parietal operculum. Note that HT-selectivity in the present study as well as in our previous study (Stilla and Sathian, 2008) was present in all three somatosensory fields of the parietal operculum. Eickhoff and colleagues (Eickhoff et al., 2007) have provided good evidence that OP1 is the probable human homolog of macaque second somatosensory cortex (SII), OP3 of the macaque ventral somatosensory area (VS) and OP4 of the macaque parietal ventral area (PV).

HT-selectivity was also found in parts of left frontal cortex (PMv, IFG and OFC) and in visual cortex in the MOG bilaterally. In most of these foci, the BOLD signals were negative relative to baseline for both HT and HL conditions, but less negative for HT than HL. This was not the case for HT-selective visual cortical activity in our prior study (Stilla and Sathian, 2008). Thus, the interpretation of HT-selectivity in these regions is less clear than in the parietal operculum. One possibility is that some of these regions were active during the rest state in the present study, an idea supported by the observation that visual cortical activity during rest is correlated with that in part of posteromedial cortex (Cauda et al., 2010). This requires further evaluation. In contrast, VT-selectivity in visual cortex, in the left MOG and right pFG, did reflect truly differential activation. VT-selectivity in these parts of visual cortex is generally consistent with previous reports of similar selectivity in and around the collateral sulcus (Cant et al., 2009; Cant and Goodale, 2007; Peuskens et al., 2004; Puce et al., 1996) as well as distributed through visual cortex (Beason-Held et al., 1998).

There was bisensory texture-selectivity at some of these sites: in posterior visual cortex in the MOG bilaterally, in inferotemporal visual cortex in the left LG, and frontally in the left PMv and left IFS. The right MOG site was near a site showing bisensory overlap of texture-selectivity relative to shape in our prior study (Stilla and Sathian, 2008), although a conjunction analysis was not performed in that study and its results are therefore less robust. Among the bisensory texture-selective sites in the present study, the left LG and left PMv showed significant correlations across individuals between HT and VT activation magnitudes, pointing to these regions as potentially housing multisensory texture-selective neurons. Parts of inferotemporal cortex around the LG focus were previously suggested to be bisensory on the basis of similar object category-specific responses across visual and haptic presentation (Pietrini et al., 2004). In the PMv, we previously reported bisensory shape-selectivity (Stilla and Sathian, 2008); a number of multisensory responses have been found in this region in macaques (Graziano et al., 1997; Rizzolatti and Craighero, 2004) and humans (Ehrsson et al., 2004).

The connectivity analyses demonstrated, in each modality, flow of information from unisensory non-selective areas (left PoCG for touch, left pFG for vision) to modality-specific texture-selective areas (bilateral OP3 for touch, right pFG for vision) and further to bisensory texture-selective areas (MOG). Haptic information flow from the PoCG (S1) to OP3 in parietal opercular cortex thus reinforces the activation findings in supporting Mishkin's (1979) suggestion of a somatosensory “what” pathway involving projections from S1 to S2, in accord with anatomical and physiological studies that bolstered this concept (Burton et al., 1990; Friedman et al., 1986; Pons et al., 1987, 1992). The present human study is therefore more in keeping with findings from macaques (Burton et al., 1990; Friedman et al., 1986; Mishkin, 1979; Pons et al., 1987, 1992) than with findings from other non-human primate species, which emphasized parallel processing in S1 and S2 rather than serial information flow from S1 to S2 (Nicolelis et al., 1998; Zhang et al., 2001). Hierarchical information flow from S1 to S2 is also supported by human studies using magnetoencephalography during electrocutaneous stimulation (Inui et al., 2004) or tactile pattern discrimination (Reed et al., 2009), or electroencephalography during tactile shape discrimination (Lucan et al., 2010). It is unclear why VT information flow appeared to originate in the FG and end in early visual cortex, in contrast to HT information streaming from S1 through parietal opercular cortex to the MOG. This may reflect differences in texture processing between vision and touch (Whitaker et al., 2008), with predominantly bottom-up information flow in touch but possibly top-down processing in vision; however, the limitations of effective connectivity analysis based on the slow hemodynamic response mean that further work is needed to settle this issue.

The flow of HT information into visual cortical areas suggests that these areas are likely to be functionally involved in processing haptic texture, consistent with findings that transient interference with, or permanent abolition of, occipital cortical function disrupts haptic roughness perception (Merabet et al., 2004). However, the evidence is inconsistent on several points: (i) the activations found here and previously (Stilla and Sathian, 2008) implicate both the MOG and the LG in bisensory texture perception; (ii) the connectivity analyses of the present study implicate the MOG, whereas the inter-modal correlations point to the LG; and (iii) the HT-specificity suggested by the earlier transcranial magnetic stimulation and lesion study (Merabet et al., 2004) was not corroborated by a matched fMRI study, which found only weak, non-selective visual cortical activation (Merabet et al., 2007). Thus, further investigation of unisensory and multisensory texture-selectivity is warranted, particularly in relation to intersensory differences and somatosensory interactions with vision (Klatzky and Lederman, 2010; Whitaker et al., 2008), hearing (Guest et al., 2002) and taste (Auvray and Spence, 2008).

Location-selectivity

As expected, VL-selectivity was found in a number of dorsal areas, including the FEF and multiple regions around the IPS bilaterally. Posterior parietal cortical regions in and around the IPS have been repeatedly shown to be selective for a variety of visuo-spatial tasks, e.g., locating stimuli in extrapersonal space (Haxby et al., 1994; Ungerleider and Mishkin, 1982), determining spatial relationships between objects (Amorapanth et al., 2010; Ellison and Cowey, 2006) and mental rotation (Gauthier et al., 2002). Interestingly, the present study shows that many of these regions were also location-selective in touch and received input from unisensory areas in both modalities, indicating that they process location independently of stimulus modality. Specifically, these regions appear to be involved in processing the location of a feature on an object, since this was the nature of the location task used in the present study.

The HL-selectivity in posterior parietal regions is consistent with prior reports (Reed et al., 2005; Renier et al., 2009). These regions also appear to be location-selective for auditory (Arnott et al., 2004), audiotactile (Renier et al., 2009) and audiovisual (Sestieri et al., 2006) stimuli, in addition to tactile and visual stimuli, although to the best of our knowledge all three modalities have not yet been tested in the same study to confirm the existence of trisensory location-selective regions in humans. The FEF or nearby regions are also implicated in auditory spatial localization (Arnott et al., 2004), and our group has previously reported FEF activation during a variety of somatosensory tasks (Stilla and Sathian, 2008; Stilla et al., 2007; Zhang et al., 2005). Thus, the FEF can be appropriately regarded as a region that processes multisensory inputs, as is the case for other premotor areas, e.g. PMv (see above). Both the FEF and the IPS are importantly involved in directing visuospatial attention, constituting the “dorsal attention network” (Corbetta and Shulman, 2002), which also appears to be multisensory (Macaluso, 2010). One obvious question is whether eye movements could have been responsible for these location-specific activations, especially in the FEF and IPS, which are known to be involved in eye movements (Corbetta and Shulman, 2002). However, analysis of eye movements revealed no significant differences between conditions, arguing against this possibility.

The patterns of location information flow were somewhat less clear than those for texture, although an overall flow of information from unisensory regions without task-selectivity to bisensory, task-selective regions in the FEF and around the IPS was still discernible, reflecting segregation of task-associated pathways but convergence across vision and touch. For HL, the flow began in the left PoCG of S1, a haptically non-selective region. For VL, by contrast, early visual cortical areas (e.g. in the MOG) did not feature in the connectivity matrix; the flow originated instead in higher-order areas: the left pFG (a visual non-selective area), and the right precuneus and bilateral STS (VL-selective regions). The reason for this is not clear. As for texture, it is premature to infer functional differences between the modalities in these pathways, given the limitations of effective connectivity analysis based on the BOLD signal.

Among the bisensory location-selective regions identified in the present study, the right FEF and left pIPS demonstrated significant correlations across individuals between activation magnitudes in the VL and HL tasks, suggesting that these regions are the most likely candidates for housing multisensory, location-selective neurons. Interestingly, the left pIPS region also showed significant inter-modal correlations during visual and haptic shape perception in our previous study (Stilla and Sathian, 2008), and has been implicated in cross-modal shape matching (Saito et al., 2003). Note that visual shape-selective responses occur in parietal cortex (e.g., Denys et al., 2004; Konen and Kastner, 2008; Sereno and Maunsell, 1998) in addition to the ventral stream. The shapes used in our prior study were unfamiliar and meaningless; thus, apprehension of their global shape probably involves computing spatial relations between parts. In our model of multisensory object recognition (Lacey et al., 2009), we suggested that this computation is important for recruiting parietal cortical regions, perhaps involving spatial imagery, and may be an especially important aspect of shape processing for unfamiliar objects. The co-localization of intersensory correlations in the left pIPS for shape (Stilla and Sathian, 2008) and location (present study) points to the possibility of a multisensory neuronal pool that mediates the spatial processing of relations between object parts to arrive at global shape.

Dual somatosensory and visual pathways and bisensory convergence

The present study, together with our previous study (Stilla and Sathian, 2008), provides clear support for Mishkin's (1979) conjecture that somatosensory processing flows from S1 into S2 as part of a pathway for object identity information, when texture is used as the key identifying stimulus property. This is consistent with our hypothesis, predicated on the high saliency of texture for touch and the importance of touch for texture perception (Whitaker et al., 2008). This haptic pathway projects further to multisensory regions in early visual cortex and is relatively segregated from the haptic location-selective pathway, which is directed into multisensory regions in dorsal frontoparietal cortex. Thus, the duality of processing streams for location and identity observed in other sensory systems (Arnott et al., 2004; Haxby et al., 1994; Ungerleider and Mishkin, 1982) and multisensory combinations (Renier et al., 2009; Sestieri et al., 2006) also holds for touch and visuo-haptic convergence. The present findings, however, do not rule out the applicability of the alternative account based on the action-perception dichotomy in touch (Dijkerman and de Haan, 2007; Stilla and Sathian, 2008), in which IPS regions are involved in grasping while the parietal operculum mediates object identification with special reference to texture; this account parallels a similar model for vision (Goodale and Milner, 1992). The projections from somatosensory areas to classic visual and well-known multisensory areas, revealed by the connectivity analyses of the present study, have precedents in earlier studies using varying methods (Deshpande et al., 2008, 2010b; Lucan et al., 2010; Peltier et al., 2007; Reed et al., 2009).

In the case of visual processing, the findings of the present study corroborate many earlier studies demonstrating a dorsoventral segregation of location and identity information (Haxby et al., 1994; Ungerleider and Mishkin, 1982), and complement these earlier studies in revealing segregated processing of visual texture and location information in ventral and dorsal pathways, respectively. Although we have emphasized segregation of these pathways, it is clear that the selectivity in most regions is relative rather than absolute, and obviously, interaction between these pathways is critical for integrated perception and action (Ellison and Cowey, 2009; Konen and Kastner, 2008).

The present study also adds to the rapidly growing body of evidence for widespread multisensory processing, in the present case, of texture and location information across vision and touch. While the somatosensory location-selective pathway converges directly with its visual counterpart in dorsal frontoparietal cortex, the somatosensory texture-selective pathway runs through the somatosensory parietal operculum before converging with its visual counterpart in visual and frontal cortex. Thus, many cortical areas formerly considered to be primarily associated with vision are in fact multisensory and may perform modality-independent operations on particular aspects of stimuli (reviewed by Sathian, in press). This is also true of the bisensory regions found in the present study: e.g., the FEF and parts of the IPS for location processing, and parts of posterior occipital, inferotemporal and inferior frontal cortices for texture processing. The extent to which multisensory convergence regions found in the present and prior studies actually integrate multisensory stimuli remains uncertain. A recent fMRI study used cross-modal adaptation to suggest multisensory processing of shape at the neuronal level in the LOC, anterior IPS, precentral sulcus and insular cortex (Tal and Amedi, 2009). This study relied on the popular fMRI-adaptation method whereby adaptation is taken to imply recruitment of a common neuronal pool (Grill-Spector and Malach, 2001); however, this interpretation may not always be secure (Krekelberg et al., 2006; Mur et al., 2010). In the present study, the subset of regions receiving bisensory input where activation magnitudes were correlated across individuals between the visual and haptic tasks offers candidates to be tested for the occurrence of multisensory neurons (Stilla and Sathian, 2008): the right FEF and left pIPS for location processing; the left LG and left PMv for texture processing. 
The extent and nature of multisensory integrative processing in these various regions merits further study.

Methodological considerations

EYE MOVEMENT RECORDING

The novel method of eye movement recording used here, predictive eye estimation regression (PEER), uses the changes in MR signal generated by known eye movements in calibration runs to estimate eye movements during task performance. This method has been shown to be reliable (LaConte et al., 2006, 2007) and has several advantages over conventional eye tracking, although its temporal resolution is lower. Not only is PEER less cumbersome, but it is also applicable when the eyes are closed or subjects are blindfolded, both of which were true of the haptic runs here.
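The calibrate-then-predict logic of PEER can be sketched in a few lines. Here a single summary feature of the MR signal from the orbits is regressed onto known fixation positions during a calibration run, and the fitted model then predicts eye position for each task volume. The published method (LaConte et al., 2006, 2007) models many voxels jointly; the one-feature least-squares fit, the feature itself and all numbers are hypothetical simplifications, not the published PEER model.

```python
def fit_line(x, y):
    """Ordinary least-squares fit y = a + b*x; returns (a, b)."""
    n = len(x)
    mx, my = sum(x) / n, sum(y) / n
    sxx = sum((xi - mx) ** 2 for xi in x)
    sxy = sum((xi - mx) * (yi - my) for xi, yi in zip(x, y))
    b = sxy / sxx
    return my - b * mx, b


# Calibration run (hypothetical): subject fixates known horizontal positions (deg)
# while a summary orbital MR signal (arbitrary units) is recorded per volume.
calib_signal = [100.0, 102.0, 104.0, 106.0, 108.0]
calib_position = [-8.0, -4.0, 0.0, 4.0, 8.0]

a, b = fit_line(calib_signal, calib_position)

# Task run: predict eye position for each volume from the same signal feature.
# The MR signal is acquired whether or not the eyes are open.
task_signal = [104.0, 104.5, 103.0]
estimates = [a + b * s for s in task_signal]
print([round(e, 2) for e in estimates])  # [0.0, 1.0, -2.0]
```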

CONNECTIVITY ANALYSES

There is now considerable interest in investigating functional and effective connectivity in relation to various regions and tasks. Granger causality analysis (GCA) is a method for effective connectivity analysis (Roebroeck et al., 2005) for which a multivariate formulation was developed, refined and applied by our group in a number of fMRI studies (Deshpande et al., 2008, 2009, 2010a,b; Hampstead et al., 2011; Lacey et al., 2011; Stilla et al., 2007, 2008). Here, we used the version of the method that excludes zero-lag correlations (Deshpande et al., 2010a,b) and also applied a network reduction procedure described earlier (Deshpande et al., 2008). In response to simulation-based arguments that GCA can sometimes yield incorrect directionality estimates (Smith et al., 2011), we introduced here a combined approach, in which GCA was used as an initial exploratory method to investigate interactions among a large number of ROIs, followed by the application of Patel's tau method, a Bayesian approach, to verify the direction of significant paths yielded by GCA. Only paths surviving both analyses were accepted, yielding a conservative estimate of effective connectivity that accorded with a priori hypotheses. We suggest this as a viable, albeit computationally intensive, approach going forward that combines the merits of GCA and Bayesian analysis: the former is well suited to exploratory analyses of large ROI sets that can then be subjected to iterative network reduction, while the latter can be used to verify the directionality of paths at each step of network reduction.
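The two-stage logic described above (GCA to screen paths, then Patel's tau to confirm their direction) can be sketched in a simplified form. This is not the multivariate, zero-lag-excluding GCA used in the study: it substitutes a bivariate lag-1 Granger index, ln(RSS_restricted / RSS_full), and a version of Patel's tau computed on series min-max rescaled to [0, 1] and read as activation probabilities, following our understanding of the published definitions. All function names, the threshold `gc_min` and the simulated data are hypothetical.

```python
import math
import random


def _rss_ols(target, regressors):
    """Residual sum of squares of an OLS fit without intercept
    (series are assumed zero-mean). Supports 1 or 2 regressors."""
    if len(regressors) == 1:
        (u,) = regressors
        b = sum(a * t for a, t in zip(u, target)) / sum(a * a for a in u)
        return sum((t - b * a) ** 2 for a, t in zip(u, target))
    u, v = regressors
    suu = sum(a * a for a in u)
    svv = sum(c * c for c in v)
    suv = sum(a * c for a, c in zip(u, v))
    sut = sum(a * t for a, t in zip(u, target))
    svt = sum(c * t for c, t in zip(v, target))
    det = suu * svv - suv * suv  # Cramer's rule for the 2x2 normal equations
    bu = (sut * svv - suv * svt) / det
    bv = (suu * svt - suv * sut) / det
    return sum((t - bu * a - bv * c) ** 2 for a, c, t in zip(u, v, target))


def granger_1lag(x, y):
    """Bivariate lag-1 Granger index for x -> y: ln(RSS_restricted / RSS_full)."""
    mx, my = sum(x) / len(x), sum(y) / len(y)
    xc = [a - mx for a in x]
    yc = [a - my for a in y]
    target, y_past, x_past = yc[1:], yc[:-1], xc[:-1]
    rss_restricted = _rss_ols(target, [y_past])        # y's own past only
    rss_full = _rss_ols(target, [y_past, x_past])      # plus x's past
    return math.log(rss_restricted / rss_full)


def patel_tau(x, y):
    """Patel's tau on series rescaled to [0, 1] (must not be constant);
    tau > 0 suggests x is ascendant to (upstream of) y."""
    def scale(s):
        lo, hi = min(s), max(s)
        return [(v - lo) / (hi - lo) for v in s]
    x, y = scale(x), scale(y)
    n = len(x)
    t1 = sum(a * b for a, b in zip(x, y)) / n        # both active
    t2 = sum(a * (1 - b) for a, b in zip(x, y)) / n  # x active, y not
    t3 = sum((1 - a) * b for a, b in zip(x, y)) / n  # y active, x not
    if t2 >= t3:
        return 1 - (t1 + t3) / (t1 + t2)
    return (t1 + t2) / (t1 + t3) - 1


def accept_path(gc_xy, gc_yx, tau_xy, gc_min):
    """Keep x -> y only if GCA favours that direction above gc_min
    and Patel's tau agrees on the direction."""
    return gc_xy > gc_min and gc_xy > gc_yx and tau_xy > 0


# Simulated data: y copies x with a one-sample lag plus small noise.
rng = random.Random(0)
x = [rng.gauss(0, 1) for _ in range(500)]
y = [0.0] + [x[t - 1] + 0.1 * rng.gauss(0, 1) for t in range(1, 500)]
print(granger_1lag(x, y) > granger_1lag(y, x))                   # True
print(round(patel_tau([1, 1, 1, 0, 0], [1, 0, 0, 0, 0]), 3))     # 0.667
```

Requiring agreement between the two measures trades sensitivity for robustness, in line with the conservative intent described in the text.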

Conclusions

The present study establishes the existence of dual pathways for processing location and texture in both the somatosensory and the visual system. The texture pathways proceed from modality-specific regions without task-selectivity through regions specific for both modality and texture to texture-selective bisensory regions. The location pathways also originate in modality-specific regions lacking task-selectivity, but project directly to location-selective unisensory and bisensory regions. Both segregation of sensory processing according to object property and multisensory convergence appear to be universal organizing principles.

Supplementary Material

RESEARCH HIGHLIGHTS

Dual pathways for processing of location and texture identified in somatosensory and visual systems by activation and connectivity analyses

Somatosensory pathway for location converges with dorsal visual pathway in dorsal frontoparietal cortex

Somatosensory pathway for texture runs through parietal operculum before converging with visual counterpart in visual and frontal cortex

ACKNOWLEDGEMENTS

This work was supported by research grants from the NIH (R01 EY12440 and K24 EY17332 to KS, and R01 EB002009 to XH). Support to KS from the Veterans Administration is also gratefully acknowledged.

Footnotes

Publisher's Disclaimer: This is a PDF file of an unedited manuscript that has been accepted for publication. As a service to our customers we are providing this early version of the manuscript. The manuscript will undergo copyediting, typesetting, and review of the resulting proof before it is published in its final citable form. Please note that during the production process errors may be discovered which could affect the content, and all legal disclaimers that apply to the journal pertain.

REFERENCES

- Alain C, Arnott SR, Hevenor S, Graham S, Grady CL. “What” and “where” in the human auditory system. Proc. Natl. Acad. Sci. USA. 2001;98:12301–12306. doi: 10.1073/pnas.211209098.

- Amedi A, Malach R, Hendler T, Peled S, Zohary E. Visuo-haptic object-related activation in the ventral visual pathway. Nat. Neurosci. 2001;4:324–330. doi: 10.1038/85201.

- Amorapanth PX, Widick P, Chatterjee A. The neural basis for spatial relations. J. Cogn. Neurosci. 2010;22:1739–1753. doi: 10.1162/jocn.2009.21322.

- Arnott SR, Binns MA, Grady CL, Alain C. Assessing the auditory dual-pathway model in humans. NeuroImage. 2004;22:401–408. doi: 10.1016/j.neuroimage.2004.01.014.

- Auvray M, Spence C. The multisensory perception of flavor. Conscious. Cogn. 2008;17:1016–1031. doi: 10.1016/j.concog.2007.06.005.

- Beason-Held LL, Purpura KP, Krasuski JS, Maisog JM, Daly EM, Mangot DJ, Desmond RE, Optican LM, Schapiro MB, VanMeter JW. Cortical regions involved in visual texture perception: a fMRI study. Cognit. Brain Res. 1998;7:111–118. doi: 10.1016/s0926-6410(98)00015-9.

- Burton H. Second somatosensory cortex and related areas. In: Jones EG, Peters A, editors. Cerebral Cortex. Vol. 5. Plenum Publishing Corporation; New York: 1986. pp. 31–98.

- Burton H, Sathian K, Dian-Hua S. Altered responses to cutaneous stimuli in the second somatosensory cortex following lesions of the postcentral gyrus in infant and juvenile macaques. J. Comp. Neurol. 1990;291:395–414. doi: 10.1002/cne.902910307.

- Cant JS, Arnott SR, Goodale MA. fMR-adaptation reveals separate processing regions for the perception of form and texture in the human ventral stream. Exp. Brain Res. 2009;192:391–405. doi: 10.1007/s00221-008-1573-8.

- Cant JS, Goodale MA. Attention to form or surface properties modulates different regions of human occipitotemporal cortex. Cereb. Cortex. 2007;17:713–731. doi: 10.1093/cercor/bhk022.

- Caselli RJ. Ventrolateral and dorsomedial somatosensory association cortex damage produces distinct somesthetic syndromes in humans. Neurology. 1993;43:762–771. doi: 10.1212/wnl.43.4.762.

- Cauda F, Geminiani G, D'Agata F, Sacco K, Duca S, Bagshaw AP, Cavanna AE. Functional connectivity of the posteromedial cortex. PLoS ONE. 2010;5:e13107. doi: 10.1371/journal.pone.0013107.

- Corbetta M, Shulman GL. Control of goal-directed and stimulus-driven attention in the brain. Nat. Rev. Neurosci. 2002;3:201–215. doi: 10.1038/nrn755.

- Cusick CG, Wall JT, Felleman DJ, Kaas JH. Somatotopic organization of the lateral sulcus of owl monkeys: area 3b, S-II, and a ventral somatosensory area. J. Comp. Neurol. 1989;282:169–190. doi: 10.1002/cne.902820203.

- De Santis L, Spierer L, Clarke S, Murray MM. Getting in touch: Segregated somatosensory what and where pathways in humans revealed by electrical neuroimaging. NeuroImage. 2007;37:890–903. doi: 10.1016/j.neuroimage.2007.05.052.

- Denys K, Vanduffel W, Fize D, Nelissen K, Peuskens H, Van Essen D, Orban GA. The processing of visual shape in the cerebral cortex of human and nonhuman primates: a functional magnetic resonance imaging study. J. Neurosci. 2004;24:2551–2565. doi: 10.1523/JNEUROSCI.3569-03.2004.

- Deshpande G, Sathian K, Hu X. Assessing and compensating for zero-lag correlation effects in time-lagged Granger causality analysis of fMRI. IEEE Trans. Biomed. Eng. 2010a;57:1446–1456. doi: 10.1109/TBME.2009.2037808.

- Deshpande G, Hu X, Lacey S, Stilla R, Sathian K. Object familiarity modulates effective connectivity during haptic shape perception. NeuroImage. 2010b;49:1991–2000. doi: 10.1016/j.neuroimage.2009.08.052.

- Deshpande G, Hu X, Stilla R, Sathian K. Effective connectivity during haptic perception: A study using Granger causality analysis of functional magnetic resonance imaging data. NeuroImage. 2008;40:1807–1814. doi: 10.1016/j.neuroimage.2008.01.044.

- Deshpande G, LaConte S, James GA, Peltier S, Hu X. Multivariate Granger causality analysis of fMRI data. Hum. Brain Mapp. 2009;30:1361–1373. doi: 10.1002/hbm.20606.

- Dijkerman HC, de Haan EHF. Somatosensory processes subserving perception and action. Behav. Brain Sci. 2007;30:189–239. doi: 10.1017/S0140525X07001392.

- Duvernoy HM. The human brain: surface, blood supply and three-dimensional sectional anatomy. 2nd ed. Springer; New York: 1999.

- Ehrsson HH, Spence C, Passingham RE. That's my hand! Activity in premotor cortex reflects feeling of ownership of a limb. Science. 2004;305:875–877. doi: 10.1126/science.1097011.

- Eickhoff SB, Grefkes C, Zilles K, Fink GR. The somatotopic organization of cytoarchitectonic areas on the human parietal operculum. Cereb. Cortex. 2007;17:1800–1811. doi: 10.1093/cercor/bhl090.

- Ellison A, Cowey A. TMS can reveal contrasting functions of the dorsal and ventral visual processing streams. Exp. Brain Res. 2006;175:618–625. doi: 10.1007/s00221-006-0582-8.

- Ellison A, Cowey A. Differential and co-involvement of areas of the temporal and parietal streams in visual tasks. Neuropsychologia. 2009;47:1609–1614. doi: 10.1016/j.neuropsychologia.2008.12.013.

- Fitzgerald PJ, Lane JW, Thakur PH, Hsiao SS. Receptive field properties of the macaque second somatosensory cortex: evidence for multiple functional representations. J. Neurosci. 2004;24:11193–11204. doi: 10.1523/JNEUROSCI.3481-04.2004.

- Friedman DP, Murray EA, O'Neill JB, Mishkin M. Cortical connections of the somatosensory fields of the lateral sulcus of macaques: evidence for a corticolimbic pathway for touch. J. Comp. Neurol. 1986;252:323–347. doi: 10.1002/cne.902520304.

- Gauthier I, Hayward WG, Tarr MJ, Anderson AW, Skudlarski P, Gore JC. BOLD activity during mental rotation and viewpoint-dependent object recognition. Neuron. 2002;34:161–171. doi: 10.1016/s0896-6273(02)00622-0.

- Genovese CR, Lazar NA, Nichols T. Thresholding of statistical maps in functional neuroimaging using the false discovery rate. NeuroImage. 2002;15:870–878. doi: 10.1006/nimg.2001.1037.

- Goodale MA, Milner AD. Separate visual pathways for perception and action. Trends Neurosci. 1992;15:20–25. doi: 10.1016/0166-2236(92)90344-8.

- Granger CWJ. Investigating causal relations by econometric models and cross-spectral methods. Econometrica. 1969;37:424–438.

- Graziano MSA, Hu XT, Gross CG. Visuospatial properties of ventral premotor cortex. J. Neurophysiol. 1997;77:2268–2292. doi: 10.1152/jn.1997.77.5.2268.

- Grill-Spector K, Malach R. fMR-adaptation: a tool for studying the functional properties of human cortical neurons. Acta Psychol. 2001;107:293–321. doi: 10.1016/s0001-6918(01)00019-1.

- Guest S, Catmur C, Lloyd D, Spence C. Audiotactile interactions in roughness perception. Exp. Brain Res. 2002;146:161–171. doi: 10.1007/s00221-002-1164-z.

- Hampstead BM, Stringer AY, Stilla RF, Deshpande G, Hu X, Moore AB, Sathian K. Activation and effective connectivity changes following explicit-memory training for face-name pairs in patients with mild cognitive impairment: a pilot study. Neurorehabil. Neural Repair. 2011;25:210–222. doi: 10.1177/1545968310382424.

- Haxby JV, Horwitz B, Ungerleider LG, Maisog JM, Pietrini P, Grady CL. The functional organization of human extrastriate cortex: a PET-rCBF study of selective attention to faces and locations. J. Neurosci. 1994;14:6336–6353. doi: 10.1523/JNEUROSCI.14-11-06336.1994.

- Hollins M, Bensmaia SJ. The coding of roughness. Can. J. Exp. Psychol. 2007;61:184–195. doi: 10.1037/cjep2007020.

- Hollins M, Faldowski R, Rao S, Young F. Perceptual dimensions of tactile surface texture: a multidimensional scaling analysis. Percept. Psychophys. 1993;54:697–705. doi: 10.3758/bf03211795.

- Inui K, Wang X, Tamura Y, Kaneoke Y, Kakigi R. Serial processing in the human somatosensory system. Cereb. Cortex. 2004;14:851–857. doi: 10.1093/cercor/bhh043.

- Jiang W, Tremblay F, Chapman CE. Neuronal encoding of texture changes in the primary and the secondary somatosensory cortical areas of monkeys during passive texture discrimination. J. Neurophysiol. 1997;77:1656–1662. doi: 10.1152/jn.1997.77.3.1656.

- Klatzky RL, Lederman SJ. Multisensory texture perception. In: Naumer MJ, Kaiser J, editors. Multisensory object perception in the primate brain. Springer Science+Business Media; New York: 2010. pp. 211–230.

- Koch KW, Fuster JM. Unit activity in monkey parietal cortex related to haptic perception and temporary memory. Exp. Brain Res. 1989;76:292–306. doi: 10.1007/BF00247889.

- Konen CS, Kastner S. Two hierarchically organized neural systems for object information in human visual cortex. Nat. Neurosci. 2008;11:224–231. doi: 10.1038/nn2036.

- Krekelberg B, Boynton GM, van Wezel RJA. Adaptation: from single cells to BOLD signals. Trends Neurosci. 2006;29:250–256. doi: 10.1016/j.tins.2006.02.008.

- Krubitzer L, Clarey J, Tweedale R, Elston G, Calford M. A redefinition of somatosensory areas in the lateral sulcus of macaque monkeys. J. Neurosci. 1995;15:3821–3839. doi: 10.1523/JNEUROSCI.15-05-03821.1995.

- Lacey S, Hagtvedt H, Patrick VM, Anderson A, Stilla R, Deshpande G, Hu X, Sato JR, Reddy S, Sathian K. Art for reward's sake: Visual art recruits the ventral striatum. NeuroImage. 2011;55:420–433. doi: 10.1016/j.neuroimage.2010.11.027.

- Lacey S, Tal N, Amedi A, Sathian K. A putative model of multisensory object representation. Brain Topogr. 2009;21:269–274. doi: 10.1007/s10548-009-0087-4.

- LaConte S, Glielmi C, Heberlein K, Hu X. Verifying visual fixation to improve fMRI with predictive eye estimation regression (PEER). Proc. Intl. Soc. Magn. Reson. Med. 2007;3438.

- LaConte S, Peltier S, Heberlein K, Hu X. Predictive eye estimation regression (PEER) for simultaneous eye tracking and fMRI. Proc. Intl. Soc. Magn. Reson. Med. 2006;2808.

- Ledberg A, O'Sullivan BT, Kinomura S, Roland PE. Somatosensory activations of the parietal operculum of man. A PET study. Eur. J. Neurosci. 1995;7:1934–1941. doi: 10.1111/j.1460-9568.1995.tb00716.x.

- Lucan JN, Foxe JJ, Gomez-Ramirez M, Sathian K, Molholm S. Tactile shape discrimination recruits human lateral occipital cortex during early perceptual processing. Hum. Brain Mapp. 2010. Published online before print. doi: 10.1002/hbm.20983.

- Macaluso E. Orienting of spatial attention and the interplay between the senses. Cortex. 2010;46:282–297. doi: 10.1016/j.cortex.2009.05.010.

- Merabet L, Thut G, Murray B, Andrews J, Hsiao S, Pascual-Leone A. Feeling by sight or seeing by touch? Neuron. 2004;42:173–179. doi: 10.1016/s0896-6273(04)00147-3.

- Merabet LB, Swisher JD, McMains SA, Halko MA, Amedi A, Pascual-Leone A, Somers DC. Combined activation and deactivation of visual cortex during tactile sensory processing. J. Neurophysiol. 2007;97:1633–1641. doi: 10.1152/jn.00806.2006.

- Mishkin M. Analogous neural models for tactual and visual learning. Neuropsychologia. 1979;17:139–151. doi: 10.1016/0028-3932(79)90005-8.

- Mur M, Ruff DA, Bodurka J, Bandettini PA, Kriegeskorte N. Face-identity change activation outside the face system: “release from adaptation” may not always indicate neuronal selectivity. Cereb. Cortex. 2010;20:2027–2042. doi: 10.1093/cercor/bhp272.

- Murray EA, Mishkin M. Relative contributions of SII and area 5 to tactile discrimination in monkeys. Behav. Brain Res. 1984;11:67–83. doi: 10.1016/0166-4328(84)90009-3.

- Nicolelis MAL, Ghazanfar AA, Stambaugh CR, Oliveira LMO, Laubach M, Chapin JK, Nelson RJ, Kaas JH. Simultaneous encoding of tactile information by three primate cortical areas. Nat. Neurosci. 1998;1:621–630. doi: 10.1038/2855.

- O'Sullivan BT, Roland PE, Kawashima R. A PET study of somatosensory discrimination in man. Microgeometry versus macrogeometry. Eur. J. Neurosci. 1994;6:137–148. doi: 10.1111/j.1460-9568.1994.tb00255.x.

- Patel RS, Bowman FD, Rilling JK. A Bayesian approach to determining connectivity of the human brain. Hum. Brain Mapp. 2006;27:267–276. doi: 10.1002/hbm.20182.

- Peltier S, Stilla R, Mariola E, LaConte S, Hu X, Sathian K. Activity and effective connectivity of parietal and occipital cortical regions during haptic shape perception. Neuropsychologia. 2007;45:476–483. doi: 10.1016/j.neuropsychologia.2006.03.003.

- Peuskens H, Claeys KG, Todd JT, Norman JF, Van Hecke P, Orban GA. Attention to 3-D shape, 3-D motion, and texture in 3-D structure from motion displays. J. Cogn. Neurosci. 2004;16:665–682. doi: 10.1162/089892904323057371.

- Pietrini P, Furey ML, Ricciardi E, Gobbini MI, Wu W-HC, Cohen L, Guazzelli M, Haxby JV. Beyond sensory images: object-based representation in the human ventral pathway. Proc. Natl. Acad. Sci. USA. 2004;101:5658–5663. doi: 10.1073/pnas.0400707101.

- Pons TP, Garraghty PE, Friedman DP, Mishkin M. Physiological evidence for serial processing in somatosensory cortex. Science. 1987;237:417–420. doi: 10.1126/science.3603028.

- Pons TP, Garraghty PE, Mishkin M. Serial and parallel processing of tactual information in somatosensory cortex of rhesus monkeys. J. Neurophysiol. 1992;68:518–527. doi: 10.1152/jn.1992.68.2.518.