Abstract

Patients with damage to the prefrontal cortex (PFC), especially the ventral and medial parts of PFC, often show a marked inability to make choices that meet their needs and goals. These decision-making impairments often reflect both a deficit in learning about the consequences of a choice and a deficit in the ability to adapt future choices based on the experienced value of the current choice. Thus, areas of PFC must support some value computations that are necessary for optimal choice. However, recent frameworks of decision making have highlighted that optimal and adaptive decision making does not rest on a single computation; rather, a number of different value computations may be necessary. Using these frameworks as a guide, we summarize evidence from both lesion studies and single-neuron physiology for the representation of different value computations across PFC areas.

Keywords: reward, decision making, learning, orbitofrontal cortex (OFC), anterior cingulate cortex (ACC)

It has been over 150 years since the case of Phineas Gage (the rail worker who suffered a traumatic accident in which an iron rod was propelled through the front of his brain) highlighted the importance of the ventral and medial parts of the prefrontal cortex in decision making. Damasio and colleagues (Bechara, Damasio, Damasio, & Anderson, 1994; Damasio, 1994; Damasio & Van Hoesen, 1983; Eslinger & Damasio, 1985) have since documented the cases of several more patients with damage similar to Gage's, affecting the ventromedial prefrontal cortex (VMPFC), the orbitofrontal cortex (OFC), and the peri- and subgenual anterior cingulate cortex (ACC). They demonstrated that in most cases, perhaps surprisingly, these patients perform within the normal range on neuropsychological tests of intelligence, memory, and cognitive function. However, in real life, patients with such frontal lobe damage are far from normal: they live disorganized lives, tend to be impatient, vacillate when making decisions, often invest their money in risky ventures, and exhibit socially inappropriate behavior (e.g., they are grossly profane or indifferent to the feelings of others). In other words, their defining feature is that they consistently make poor choices.

One notable finding is that such dramatic decision-making deficits are seldom found after damage outside the PFC. In fact, these functions seem to depend largely on the orbital and medial parts of PFC (OFC and VMPFC) and on ACC, as damage to the lateral prefrontal cortex (LPFC) does not appear to cause similarly profound impairments in value-guided decisions unless correct behavior is determined by rules (Baxter, Gaffan, Kyriazis, & Mitchell, 2008; Bechara, Damasio, Tranel, & Anderson, 1998; Buckley et al., 2009; Fellows, 2006; Fellows & Farah, 2003; Fellows & Farah, 2007; Wheeler & Fellows, 2008). This implies that we should be able to discover something fundamentally special about the functions of these three areas that allows them to support appropriate decision making.

Perhaps the first insight into this question came in the 1970s, when Niki and Watanabe (1976, 1979) recorded from single neurons in ACC and later in LPFC. They found that neurons in both regions were modulated by whether the animal received a reward, and that some were modulated only when the reward followed a correct response. In the 1980s, the work of Rolls and colleagues (Rolls, Sienkiewicz, & Yaxley, 1989; Rolls, Yaxley, & Sienkiewicz, 1990; Thorpe, Rolls, & Maddison, 1983) began to focus on the functions of OFC and found evidence that neurons in this part of PFC encoded the sensory properties of reward, encoded links between stimuli and reward, and seemed to encode information about satiety state. Years later it was found that LPFC neurons not only differentiated multiple rewards but also encoded a value scale that reflected the subjective reward preferences of the animal (Watanabe, 1996). Similarly, OFC neurons were shown to encode reward preference, but to adjust their responses according to the relative value of the rewards available (Tremblay & Schultz, 1999).

Still, in all of these cases, neuronal activity was recorded in the absence of any direct choice between possible outcomes of positive value (i.e., only one available option was rewarded); rather, activity was correlated with conditioned stimuli that predicted rewards or with the rewards themselves. It was not until Shima and Tanji (1998) documented that single neurons in ACC were sensitive to reductions in reinforcement value concomitant with a change in behavioral response that we had clear evidence of a neuronal correlate in PFC or ACC that might reflect a decision-making process. Nonetheless, by the very end of the 20th century we had evidence that single neurons in PFC encoded the value of outcomes and conveyed information about when to adapt decision making, representations that might provide a functional explanation for the decision-making deficits present in patients with ventral and medial PFC damage.

However, as interest in this field grew in the 21st century, a new confounding issue emerged: many (if not all) areas within the frontal cortex encode, to some degree, a signal that correlates with decision value. This was elegantly demonstrated by Roesch and Olson (2003), who recorded from multiple brain areas spanning both the prefrontal and premotor cortices and found that the encoding of reward value was most common in premotor areas. What was notable about this finding was that damage to premotor cortex does not typically induce decision-making deficits per se. This emphasized the importance of having converging evidence from both correlative and interference techniques when trying to infer function. Moreover, this study highlighted that processes such as attention, arousal, motivation, and motor preparation all correlate strongly with value, meaning that it is seldom straightforward to determine whether neurons are actually encoding the subjective value of a decision or some other related variable (Roesch & Olson, 2007). We now know that value signals are ubiquitous in the brain, evident not just in PFC areas but also in the amygdala, striatum, and premotor, parietal, posterior cingulate, and visual areas, to name a few (McCoy, Crowley, Haghighian, Dean, & Platt, 2003; Paton, Belova, Morrison, & Salzman, 2006; Platt & Glimcher, 1999; Roesch & Olson, 2003; Shuler & Bear, 2006).

So we are now at a new juncture: neuropsychological studies suggest that decision making occurs in the PFC, yet neurophysiological studies implicate almost the whole brain in representing a value signal. How do we reconcile the findings from these different methodologies, and how do we determine whether different brain areas support specialized functions in decision making? The strategy we use in the current review is to look for converging evidence from neurophysiological and neuropsychological studies of the same frontal lobe areas to address the issue of functional specialization among PFC areas. The former has unique spatial and temporal resolution, allowing us to identify not only where value is represented but also its time course, how it changes with learning or behavioral state, and the encoding scheme in which it is represented. This is particularly important in PFC because many neurons encode value with opposing encoding schemes (some increasing and others decreasing their firing rate as value increases), which might not be detected when activity is averaged across populations of neurons, as in functional MRI and event-related potentials (cf. Kennerley, Dahmubed, Lara, & Wallis, 2009). The latter methodology provides a powerful tool to draw causal inferences between physiology and behavior and allows us to address the question of the “functional importance” of a value representation. Indeed, as will become clear below, restricted frontal lobe lesions often cause surprisingly selective impairments in value-based decision making, suggesting that these regions may be required for only a subset of such choices. As focal lesions of single PFC regions are relatively rare in humans, we mainly concentrate on the effects of circumscribed lesions of specific regions of ACC and OFC in animal models.

Our review is necessarily not an exhaustive survey of the whole of PFC. This is partly because we have very little evidence, from either neurophysiology or lesion studies, about the “reward” functions of some PFC areas. For example, there have been few recording studies to date in areas 9, 10, 14, 32, and 25 (cf. Bouret & Richmond, 2010; Rolls, Inoue, & Browning, 2003; Tsujimoto, Genovesio, & Wise, 2010), sometimes for methodological reasons (Mitz, Tsujimoto, Maclarty, & Wise, 2009), and selective lesions that include these regions are also rare (cf. Buckley et al., 2009; Piekema, Browning, & Buckley, 2009). We also do not give the neuroimaging literature the attention it deserves, and instead direct the interested reader toward reviews on this topic (e.g., Knutson & Wimmer, 2007; O'Doherty, Hampton, & Kim, 2007; Rangel & Hare, 2010). Rather, we review ACC, LPFC, and OFC function based on single-neuron electrophysiology and focal lesion studies and attempt to place these functions into recent frameworks of decision making.

We use an anatomical nomenclature to help synthesize results across studies and techniques. In ACC, we largely focus on the cingulate sulcus, as this is the area in which most neurophysiological recordings have taken place. There is some confusion about the classification of the more rostral aspect of the dorsal bank of the ACC sulcus, stemming partly from the fact that early anatomical diagrams labeled this region as 24c or did not label it at all (Devinsky & Luciano, 1993; Dum & Strick, 1996; He, Dum, & Strick, 1995; Rizzolatti, Luppino, & Matelli, 1996). More recent anatomical studies classify the dorsal bank of the ACC sulcus as area 9v and part of the PFC, whereas the ACC begins in the ventral bank of the cingulate sulcus and includes areas 24, 25, and 32 of the cingulate gyrus (Vogt, Vogt, Farber, & Bush, 2005). Nevertheless, we include studies that have recorded from or lesioned the dorsal bank of the cingulate sulcus in our ACC nomenclature, even though they may include parts of areas 9 and 6. For simplicity, OFC will largely be taken together as areas 11, 13, and 14, despite recent evidence that there may be functional differences between lateral OFC and medial OFC/VMPFC regions (Bouret & Richmond, 2010; Noonan et al., 2010). LPFC will include studies of areas 9, 46, and 45 (Petrides & Pandya, 2002). To aid cross-species comparisons, we use the above common nomenclature to describe studies in both primates and rodents, as there is functional and anatomical evidence that rodent OFC, infralimbic and prelimbic cortex (often termed together as medial frontal cortex), and ACC may be functionally equivalent to, respectively, the agranular parts of OFC (posterior parts of areas 14 and 13), subgenual ACC (area 25), rostral ACC (pregenual area 32), and dorsal ACC (dorsal area 24) in primates (Balleine & O'Doherty, 2010; Rushworth, Walton, Kennerley, & Bannerman, 2004; Wise, 2008). However, it is important to note that there may be no clear rodent homologues of the granular regions of the primate frontal lobe, many of which are the targets of the lesion and recording studies that we discuss.

A Decision-Making Framework for Understanding Frontal Lobe Functions

Many neurons in the brain are modulated by the value of an outcome. This is interesting because such a signal might contribute to the process of decision making. But which decision-making process? Many of the decisions that humans and animals face daily require consideration of multiple decision variables, each of which may have separate effects on the neural representation of value in a particular brain region. Even the routine decision of where to shop (or forage) for food is likely to be influenced by a number of disparate factors. First, humans and animals might evaluate their current internal state (thirst/appetite); the decision whether to expend energy foraging for food will be influenced by one's current (or future) nutritional needs. Assuming food is desired, one's choice might be influenced by how hungry one is (how much should I eat?), what type of food one currently prefers (which is likely to be influenced by one's recent history of food choices), what one's goals are (save money, eat less or more healthily, explore new foraging environments), nonphysical costs associated with each food option (e.g., uncertainty about how palatable the food may be, the delay before the food will be obtained, potential risks), and physical costs, such as the energy that must be expended to obtain the food. And the choices we make today may differ substantially from the ones we made yesterday (or will make tomorrow), as our current needs and goals change and as we learn from our past choices. Thus, optimal decision making is likely to rest on a number of valuation processes, and it is critical that we develop a framework of possible valuation processes so that we can begin to identify the unique functional roles that different areas contribute to decision making.

Recently, Rangel and colleagues (Rangel, Camerer, & Montague, 2008; Rangel & Hare, 2010) devised just such a framework, which highlights several processes necessary for optimal goal-directed choice. First, the brain needs to represent what alternatives are available and determine the current and predicted future internal state (e.g., hunger level, subjective preference) that may inform the valuation of those alternatives. Second, given these states, the brain must evaluate the external variables that will influence the value of the outcome (e.g., risk, probability, delay). Third, the brain needs to determine the value of the action that would obtain each outcome and discount that outcome by the expected physical costs (e.g., effort). Optimal selection would then be based on a comparison of these action values. Finally, once the choice has been made, the brain must compute the value of the obtained outcome. The discrepancy between the obtained outcome and the predicted value of the chosen alternative generates a prediction error signal that can modify the value of the alternatives, thereby ensuring that future choices are adaptive. Although some of the functions associated with ACC, LPFC, and OFC cannot necessarily be ascribed to any single level in this framework (e.g., the role of OFC in reversal learning), we use this framework as a general guide to describe the specialized (or nonspecialized) contributions of ACC, LPFC, and OFC to decision-making processes. It should be noted that our discussion of PFC function focuses largely on goal-directed behavior. However, choices are likely to be shaped by several other influences, such as habitual stimulus-response or Pavlovian associations, which we will not consider in this review (see Balleine & O'Doherty, 2010, or Rangel et al., 2008, for further discussion of the role of multiple valuation systems during decision making).
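The four stages of this framework can be made concrete with a toy calculation. The Python sketch below is our own illustration under stated assumptions, not Rangel and colleagues' formal model: the multiplicative `hunger` weight standing in for internal state, the hyperbolic delay discount (parameter `k`), the linear effort cost (`effort_cost`), the greedy choice rule, and the delta-rule update with learning rate `alpha` are all hypothetical choices made for clarity.

```python
from dataclasses import dataclass


@dataclass
class Option:
    name: str
    magnitude: float    # reward size (arbitrary units)
    probability: float  # chance that the reward is delivered
    delay: float        # time until delivery (arbitrary units)
    effort: float       # physical cost, e.g. number of lever presses


def outcome_value(opt: Option, hunger: float = 1.0, k: float = 0.1) -> float:
    """Stages 1-2: weight the reward by internal state (hypothetical `hunger` factor)
    and by probability, then discount it hyperbolically by delay (assumed form)."""
    return hunger * opt.magnitude * opt.probability / (1.0 + k * opt.delay)


def action_value(opt: Option, hunger: float = 1.0, effort_cost: float = 0.2) -> float:
    """Stage 3: subtract an assumed linear cost of the effort needed to obtain the outcome."""
    return outcome_value(opt, hunger) - effort_cost * opt.effort


def choose(options: list[Option], hunger: float = 1.0) -> Option:
    """Compare action values and select the highest (greedy choice rule)."""
    return max(options, key=lambda o: action_value(o, hunger))


def update(predicted: float, obtained: float, alpha: float = 0.3) -> tuple[float, float]:
    """Stage 4: prediction error delta = obtained - predicted, then a delta-rule update."""
    delta = obtained - predicted
    return predicted + alpha * delta, delta


if __name__ == "__main__":
    options = [Option("A", magnitude=4, probability=0.5, delay=0, effort=2),
               Option("B", magnitude=3, probability=0.9, delay=2, effort=1)]
    chosen = choose(options)  # A: 2.0 - 0.4 = 1.6; B: 2.25 - 0.2 = 2.05
    new_value, delta = update(outcome_value(chosen), obtained=3.0)
    print(chosen.name, round(delta, 2), round(new_value, 3))  # B 0.75 2.475
```

With these illustrative numbers, option B wins the comparison of action values even though A offers the larger reward, because A is less likely and more effortful. When B then delivers 3 units against a prediction of 2.25, the positive prediction error (0.75) raises B's learned value for future choices.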

Representation of Internal States

Representation of Incentive Value

Arguably, the starting point of any decision for an organism is an evaluation of its internal needs given the current state of the environment, as well as an evaluation of what outcomes might satisfy those needs. These parameters are implicitly present in almost all behavioral experiments, as most tasks with animals and many with humans involve an element of incentive to motivate the subjects to attend and work. In the case of decision making, the requirement to use reward as a reinforcer has been exploited to allow investigators to probe (i) how humans and animals make choices to satisfy internal needs, such as metabolic state, (ii) how value is represented (whether outcome specific or as an abstract “cached” value), and (iii) how these parameters can be incorporated into models of the computation of value (Doya, 2008; Rangel et al., 2008). Several studies have shown that neuronal activity in OFC (Critchley & Rolls, 1996; Nakano et al., 1984; Rolls, Critchley, Browning, Hernadi, & Lenard, 1999; Rolls et al., 1989) and VMPFC (Bouret & Richmond, 2010) changes as subjects become sated, possibly implemented through interactions with the lateral hypothalamus (de Araujo et al., 2006; Floyd, Price, Ferry, Keay, & Bandler, 2001; Ongur, An, & Price, 1998).

The OFC is also closely connected with regions such as the anterior insula and frontal operculum that receive inputs from gustatory-responsive parts of the thalamus, and it has been proposed to contain an area of secondary taste cortex in caudolateral OFC (Cavada, Company, Tejedor, Cruz-Rizzolo, & Reinoso-Suarez, 2000; Rolls et al., 1990). Thus, OFC is in a particularly strong anatomical position to evaluate the incentive value, or preference, of different rewards. Studies that manipulate gustatory rewards have found encoding of these rewards within OFC (Lara, Kennerley, & Wallis, 2009; Rolls, 2000; Rolls et al., 1990), which often reflects a preference ranking of different rewards (Hikosaka & Watanabe, 2000, 2004; Tremblay & Schultz, 1999) and the combination of reward preference and magnitude (Padoa-Schioppa & Assad, 2006, 2008). Damage to OFC and VMPFC impairs the ability to establish relative and consistent preferences when offered novel foods (Baylis & Gaffan, 1991), and humans with OFC and VMPFC damage show inconsistent preferences (Fellows & Farah, 2007). Interestingly, however, in a task that required the animal to remember the sensory characteristics and identity of different rewards across delays to make a subsequent discrimination (rather than use reward preference to guide decision making), we found little evidence that OFC neurons encoded reward preference (Lara et al., 2009). This emphasizes a common feature of PFC function: behavioral context often influences the type of information encoded, thereby facilitating flexible behavior (Kennerley & Wallis, 2009c; Miller & Cohen, 2001; Rainer, Asaad, & Miller, 1998; Wallis & Kennerley, 2010).

Both medial and lateral parts of OFC, along with the ACC and subcortical regions such as the amygdala and ventral striatum, also seem to carry representations of the qualities of foods such as the texture, temperature, and flavor (Rolls, 2010). Although there is some evidence that LPFC (Hikosaka & Watanabe, 2000; Watanabe, 1996) and ACC (Luk & Wallis, 2009) neurons also encode gustatory rewards as if encoding reward preference, the OFC may have a specialized role in encoding the incentive value of a reward, especially with respect to current internal state.

Adaptive Coding of Incentive Value

OFC therefore has a potentially rich representation of the incentive value of an anticipated outcome associated with predictive stimuli. However, given the potential range of reinforcers in a natural environment and the limited firing range of neurons (typically < 50 Hz), a question arises as to how such information is encoded to allow appropriate decisions to be made. One solution would be a contextually based relative valuation scale. Some OFC neurons, particularly in area 13, have been shown to adjust their firing rate to the range of values offered (Kobayashi, Pinto de Carvalho, & Schultz, 2010; Padoa-Schioppa, 2009; Tremblay & Schultz, 1999). Such adaptive coding is efficient because it allows maximal discrimination within each distribution of possible outcomes, and it provides the flexibility to encode values across decision contexts that may differ substantially in value (e.g., a choice between dinner entrees on a menu compared with a choice of which car to buy).

However, it is also important not to judge value solely on the basis of relative context. Interestingly, the majority of outcome-sensitive OFC neurons do not adapt their firing rates to the range (or type) of outcomes available, instead encoding value on a fixed scale (Kobayashi et al., 2010; Padoa-Schioppa & Assad, 2008). The fact that these OFC neurons are not range adaptive, and are thus invariant to the set of offers available, indicates value transitivity, a key characteristic of economic choice (Padoa-Schioppa & Assad, 2008). Thus, as a population, OFC expresses both range adaptation and value transitivity, two fundamental traits necessary for optimal choice. Although some evidence suggests that ACC neurons may adapt their firing rate to the distribution of values available (Hillman & Bilkey, 2010; Sallet et al., 2007), reports of range adaptation in single neurons have typically focused either on OFC or on areas outside the frontal cortex, such as parts of the striatum and the midbrain dopaminergic nuclei (Cromwell, Hassani, & Schultz, 2005; Tobler, Fiorillo, & Schultz, 2005). It therefore remains an open question whether range adaptation in frontal cortex is unique to OFC neurons. However, neuroimaging studies have shown relative value activation in ACC (Coricelli et al., 2005; Fujiwara, Tobler, Taira, Iijima, & Tsutsui, 2009) and LPFC (Coricelli et al., 2005; Fujiwara et al., 2009; Lohrenz, McCabe, Camerer, & Montague, 2007).
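The contrast between range-adaptive and fixed-scale coding can be illustrated with two idealized response functions. The Python sketch below assumes simple linear tuning, a 50 Hz ceiling taken from the firing range mentioned above, and made-up value sets for two "menus"; real OFC neurons are not claimed to follow these exact functions, and the midpoint response when only one value is on offer is an arbitrary choice.

```python
MAX_RATE_HZ = 50.0  # assumed ceiling, echoing the "typically < 50 Hz" range in the text


def range_adaptive_rate(value: float, context: list[float], max_rate: float = MAX_RATE_HZ) -> float:
    """Rescale value to [0, max_rate] using the lowest and highest value on offer in this context."""
    lo, hi = min(context), max(context)
    if hi == lo:  # only one value on offer: no range to adapt to (arbitrary midpoint response)
        return max_rate / 2
    return max_rate * (value - lo) / (hi - lo)


def fixed_scale_rate(value: float, global_max: float, max_rate: float = MAX_RATE_HZ) -> float:
    """Rescale value to [0, max_rate] using one scale shared by every context."""
    return max_rate * value / global_max


if __name__ == "__main__":
    small_menu, large_menu = [1.0, 2.0, 3.0], [1.0, 5.0, 10.0]  # illustrative value sets
    for v in small_menu:
        print(v, range_adaptive_rate(v, small_menu), fixed_scale_rate(v, global_max=10.0))
    # The same value (3.0) offered within the larger menu:
    print(range_adaptive_rate(3.0, large_menu), fixed_scale_rate(3.0, global_max=10.0))
```

Within the small menu, the adaptive unit spans its full range (0, 25, and 50 Hz), whereas the fixed-scale unit uses only 5 to 15 Hz, so the adaptive code discriminates the options more finely. The cost is that the adaptive unit's response to a value of 3 drops to about 11 Hz in the larger menu, whereas the fixed-scale unit fires at 15 Hz in both. This menu-invariant response is the property that supports transitive comparisons across contexts.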

Updating Value Representations Through Internal State-Based Manipulations

One of the cardinal tasks used to demonstrate how changes in internal state modify the values of potential appetitive outcomes is known as reinforcer devaluation (Holland & Straub, 1979). Subjects are taught that each of two distinct reward types (e.g., peanuts or chocolate) is associated with particular neutral objects or can be obtained only by making particular actions. Following this training, one of the rewards is “devalued,” either by feeding the reward to satiety or by pairing it with chemically induced illness. Subsequently, in a test session, subjects are presented with pairs of options, each associated with one of the reward types, meaning that they have to make their decisions based on the updated values of the expected outcomes before re-experiencing those outcomes (i.e., the decision is goal directed). It has been suggested, however, that this type of decision may be guided not simply by a change in the incentive value of the outcome but also by the contingent link between a stimulus and a particular outcome type (Ostlund & Balleine, 2007a).
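The logic of the devaluation test can be illustrated by comparing two toy agents. In the Python sketch below, which is our own illustration rather than a model from the studies cited, one agent retrieves the outcome each stimulus predicts and values it by that outcome's current incentive value, whereas the other consults stimulus values cached during training. All stimulus names, foods, and numbers are hypothetical.

```python
# Hypothetical stimulus-outcome pairings established during training.
STIMULUS_OUTCOME = {"object_A": "peanut", "object_B": "chocolate"}


def cache_values(incentive: dict[str, float]) -> dict[str, float]:
    """Store each stimulus's value as experienced in training, without keeping the outcome link."""
    return {stim: incentive[food] for stim, food in STIMULUS_OUTCOME.items()}


def goal_directed_choice(pair: tuple[str, str], incentive: dict[str, float]) -> str:
    """Retrieve each stimulus's predicted outcome and value it by its *current* incentive."""
    return max(pair, key=lambda stim: incentive[STIMULUS_OUTCOME[stim]])


def cached_choice(pair: tuple[str, str], cached: dict[str, float]) -> str:
    """Choose from values frozen at training time, blind to any later change in incentive."""
    return max(pair, key=lambda stim: cached[stim])


if __name__ == "__main__":
    training = {"peanut": 1.0, "chocolate": 0.8}          # illustrative incentive values
    cached = cache_values(training)
    sated_on_peanuts = {"peanut": 0.1, "chocolate": 0.8}  # peanut devalued by selective satiety
    pair = ("object_A", "object_B")
    print(goal_directed_choice(pair, sated_on_peanuts))  # object_B: avoids the devalued food
    print(cached_choice(pair, cached))                   # object_A: still picks the devalued food
```

Only the first agent shifts away from the stimulus paired with the devalued food without re-experiencing that food, which is the behavior the test session is designed to detect.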

In a stimulus-based version of this task, both rats and monkeys with damage to OFC (but not to LPFC or VMPFC) continued to select the option associated with the devalued reward (Baxter et al., 2008; Baxter, Gaffan, Kyriazis, & Mitchell, 2009; Gallagher, McMahan, & Schoenbaum, 1999; Izquierdo, Suda, & Murray, 2004; Pickens et al., 2003). This is true whether lesions were made before initial stimulus-outcome training or after it. Importantly, this impairment is present only when subjects select between objects associated with the rewards, not when they select between the two foods directly (Izquierdo et al., 2004). These findings suggest that OFC, in conjunction with the amygdala (Baxter, Parker, Lindner, Izquierdo, & Murray, 2000), has an important role in updating stimulus-outcome associations based on current internal state. However, it has been shown in rodents that OFC is not required for appropriate state-based decision making when choices are guided by associations with particular actions (Ostlund & Balleine, 2007b). Instead, only lesions of prelimbic cortex affect response-based reinforcer devaluation, and only if the lesions are made before training on the response-outcome associations, not if these associations have already been learned before surgery (Ostlund & Balleine, 2005).

Schoenbaum and colleagues (Schoenbaum, Roesch, Stalnaker, & Takahashi, 2009) used such evidence, along with that from a series of their own elegant recording and interference studies in rodents, to present a strong case that the main function of OFC was to signal specific stimulus-based outcome expectancies. Without such associative representations, they argued, it was not possible to generate appropriate error signals following breaks of expectation to guide learning.

Representation of External Variables That Influence Outcome Value

Encoding of Decision Variables That Determine Expected Value

A decision's expected value is based not only on one's internal state but also on a number of variables that influence the value of the expected outcome. It is important to distinguish neurons that encode information about “expected” outcomes from neurons that encode information after the actual outcome has been received. The former may contribute to making the current decision, whereas the latter may have multiple functions, which will be addressed in later sections of this review. Importantly, these two representations often occur in very close temporal proximity (i.e., <1 s) around outcome onset, so it is important to use methodological techniques that can distinguish between them. Although we showed that some PFC neurons encode information about decision variables (e.g., reward size) both at the time of choice (i.e., prior to outcome onset) and at the time of the experienced outcome (Kennerley et al., 2009; Kennerley & Wallis, 2009b), this is clearly not the case for many other PFC neurons. Thus, it is not simply what value information is encoded (e.g., reward size) but also when it is encoded (pre- or post-outcome) that reflects the type of valuation process represented by different neurons.

At first glance it would appear as though there is no particular specialization within PFC for the encoding of decision value. Single neurons across most of the brain—but especially within ACC, LPFC, and OFC—are modulated by almost every decision variable investigators have used to manipulate the expected value of an outcome. Neurons in these frontal areas are sensitive to sensory cues that indicate the presence, size and/or probability of an expected positive or negative outcome (Amiez, Joseph, & Procyk, 2006; Hosokawa, Kato, Inoue, & Mikami, 2007; Kennerley et al., 2009; Kennerley & Wallis, 2009a; Kennerley & Wallis, 2009c; Kobayashi et al., 2006; Kobayashi et al., 2010; Morrison & Salzman, 2009; O'Neill & Schultz, 2010; Roesch & Olson, 2003; Roesch & Olson, 2004; Rolls, 2000; Sallet et al., 2007; Schoenbaum, Chiba, & Gallagher, 1998; Seo & Lee, 2009; Wallis & Miller, 2003; Watanabe, Hikosaka, Sakagami, & Shirakawa, 2002), size and temporal proximity to reward (Kim, Hwang, & Lee, 2008; Roesch & Olson, 2005; Roesch, Taylor, & Schoenbaum, 2006), reward preference (Hikosaka & Watanabe, 2000; Luk & Wallis, 2009; Padoa-Schioppa & Assad, 2006, 2008; Tremblay & Schultz, 1999; Watanabe et al., 2002), effort required to harvest reward (Hillman & Bilkey, 2010; Kennerley et al., 2009), and one's confidence in the choice outcome (Kepecs, Uchida, Zariwala, & Mainen, 2008). This ubiquity of value coding across different brain areas has not only made it difficult to understand the specialized functions of different PFC areas (Wallis & Kennerley, 2010) but also has raised speculation that value coding in some brain areas may represent other functions beyond contributing to the decision-making process.

With respect to single neuron electrophysiology studies, the overlap of these signals across frontal areas highlights the difficulty in inferring functional specialization by comparing across studies. For example, neurons in ACC, LPFC, and OFC all encode reward magnitude but it is very difficult to infer a functional hierarchy by simply comparing which area has more neurons that encode reward magnitude because different investigators use different paradigms, record activity in different training or behavioral states and often use very different analytical methods.

One resolution to this issue is to examine the activity of neurons in multiple brain areas simultaneously. We (Kennerley et al., 2009; Kennerley & Wallis, 2009b) designed an experiment with the precise goal of determining whether neurons in different frontal areas exhibit preferences for encoding different variables related to a decision's value (Figure 1A, B). Monkeys were trained to make choices based on conditioned stimuli that indicated behavioral outcomes varying in reward probability, reward magnitude, or physical effort (Kennerley et al., 2009). We recorded from OFC, ACC, and LPFC simultaneously, which allowed us to compare these three frontal areas directly in animals in the same behavioral state using the same analytical methods. We found that neurons encoded value across the different decision variables in diverse ways. For example, some neurons encoded the value of just a single decision variable, others encoded the value of choices for two of the variables but not the third, and still others encoded value across all three decision variables (Figure 1C, D). We found no evidence that any of the three frontal areas was specialized for encoding any particular decision variable (Figure 1E). Instead, ACC neurons were significantly more likely than LPFC or OFC neurons to encode the value of any of the decision variables (Figure 1E), and significantly more likely to encode two or three decision variables (Figure 1F). Thus, single ACC neurons are capable of encoding the value of multiple decision variables across trials, a type of multiplexed value representation that may allow the individual components of a decision to be integrated into a common value signal (see The Encoding of Action Value section for further discussion of this topic) and may underlie ACC's critical contribution to decision making.
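A tally of the kind summarized in Figure 1F can be sketched in a few lines. The Python example below assumes that each neuron has already been tested for value selectivity separately under each decision variable; how that test is performed, and the `alpha` threshold, are assumptions here rather than the study's exact procedure. It then counts, per area, how many neurons encode value for zero, one, two, or three variables. The neuron records in the usage example are placeholders invented to exercise the code, not data from the study.

```python
from collections import Counter

VARIABLES = ("probability", "payoff", "effort")


def n_variables_encoded(pvalues: dict[str, float], alpha: float = 0.05) -> int:
    """Number of decision variables for which this neuron's value selectivity is significant."""
    return sum(pvalues[var] < alpha for var in VARIABLES)


def tally_by_area(neurons: list[tuple[str, dict[str, float]]], alpha: float = 0.05) -> dict[str, Counter]:
    """For each area, count neurons encoding value for 0, 1, 2, or 3 decision variables."""
    tallies: dict[str, Counter] = {}
    for area, pvalues in neurons:
        tallies.setdefault(area, Counter())[n_variables_encoded(pvalues, alpha)] += 1
    return tallies


def percent_multiplexing(tally: Counter) -> float:
    """Percentage of an area's neurons that encode value for two or more variables."""
    total = sum(tally.values())
    return 100.0 * (tally[2] + tally[3]) / total if total else 0.0


if __name__ == "__main__":
    # Placeholder p-values, invented only to exercise the code.
    neurons = [
        ("ACC", {"probability": 0.20, "payoff": 0.01, "effort": 0.02}),
        ("ACC", {"probability": 0.01, "payoff": 0.01, "effort": 0.03}),
        ("OFC", {"probability": 0.50, "payoff": 0.01, "effort": 0.90}),
        ("LPFC", {"probability": 0.40, "payoff": 0.30, "effort": 0.60}),
    ]
    for area, tally in tally_by_area(neurons).items():
        print(area, dict(tally), percent_multiplexing(tally))
```

Keeping the per-variable test separate from the tally means the same counting step can be reused whatever selectivity criterion is adopted.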

Figure 1. Single neurons encode value and actions in a multivariable decision-making task. (A) Subjects made choices between pairs of presented pictures. (B) There were six sets of pictures, each associated with a specific outcome. We varied the value of the outcome by manipulating either the amount of reward the subject would receive (payoff), the likelihood of receiving a reward (probability), or the number of times the subject had to press a lever to earn the reward (effort). We manipulated one parameter at a time, holding the other two fixed. Presented pictures were always adjacent to one another in terms of value; that is, choices were 1 versus 2, 2 versus 3, 3 versus 4, or 4 versus 5. (C and D) Spike density histograms illustrating the activity recorded from single neurons under three different types of value manipulation (probability, payoff, or effort). The vertical lines indicate the onset of the pictures indicating the value of the choice (left) and the time at which the animal was able to make his choice (right). The different colored lines indicate the value of the choice under consideration or which action the subject would select. (C) Anterior cingulate cortex (ACC) neuron encodes payoff and effort but not probability. (D) ACC neuron encodes the value and action of all three decision variables. (E) Percentage of all neurons selective for value for each decision variable. All variables are predominantly coded in ACC. (F) Percentage of all neurons selective for value as a function of the number of decision variables encoded. ACC neurons tend to multiplex decision value across two (as in C) and three (as in D) decision variables. (G) Percentage of all neurons selective for action for each decision variable. Orbitofrontal cortex (OFC) neurons are less likely to encode action information than lateral prefrontal cortex (LPFC) and ACC neurons. χ2 test, * p < .05. From “Neurons in the Frontal Lobe Encode the Value of Multiple Decision Variables,” by S. W. Kennerley, A. F. Dahmubed, A. H. Lara, and J. D. Wallis, 2009, Journal of Cognitive Neuroscience, 21, Figure 1. Copyright 2008 by the Massachusetts Institute of Technology. Adapted with permission.

Reward Modulation of Cognitive Processes

Thus far we have argued that the evidence from both neurophysiological and neuropsychological studies implicates both the OFC and ACC in the representation of reward to guide decision making. Although the encoding of reward in many areas is likely to reflect a role in guiding decision making, neuronal encoding of reward also may reflect a change in attentional processes, rather than reward coding per se, because one is likely to direct attention to features or locations predictive of greater reward (Bendiksby & Platt, 2006; Maunsell, 2004). A possible candidate for the encoding of reward to influence attention is LPFC, as this area of PFC has a role in a number of executive control processes including working memory, strategy implementation, shifts of attention, representation of rules/categories/objects, and response inhibition among other functions (for detailed reviews, see Mansouri, Tanaka, & Buckley, 2009; Miller & Cohen, 2001; Petrides, 2005; Tanji & Hoshi, 2008; Wise, 2008). However, evidence from both neurophysiological and neuropsychological studies argues that rather than simply maintaining information in working memory (which could potentially be subserved by sensory areas), the crucial role of LPFC appears to be guiding attentional processes of behavioral relevance (Buckley et al., 2009; Fellows & Farah, 2005; Lebedev, Messinger, Kralik, & Wise, 2004; Mansouri, Buckley, & Tanaka, 2007; Petrides, 2000; Rushworth, Nixon, Eacott, & Passingham, 1997; Wise, 2008), which includes the representation of value (Hikosaka & Watanabe, 2000; Kim et al., 2008; Roesch & Olson, 2003; Seo, Barraclough, & Lee, 2007; Seo & Lee, 2009; Wallis & Miller, 2003). Yet LPFC lesions do not appear to cause severe decision-making deficits, so it remains unclear how reward representations in LPFC contribute to goal-directed behavior.

One possibility we tested is that value encoding in LPFC reflects a mechanism for prioritizing behaviorally relevant information (Wallis & Kennerley, 2010). Our logic was twofold. First, we know that cognitive processes have capacity constraints (e.g., the limit and precision of information stored in working memory; Bays & Husain, 2008), so there must be a mechanism by which the brain allocates these resources in an efficient and behaviorally relevant manner. Second, it is well established that increasing the reward value of an outcome improves behavioral performance (Roesch & Olson, 2007). Given that LPFC neurons encode information about the direction of attention that can be held in working memory (Funahashi, Bruce, & Goldman-Rakic, 1989; Lebedev et al., 2004; Rao, Rainer, & Miller, 1997), and that performance improves when a subject expects a larger reward, we reasoned that increasing reward might provide a means of directing attentional resources to modulate the fidelity of goal-relevant information, thereby improving behavioral performance.
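The prioritization argument can be made concrete with a deliberately simple toy model. The sketch below assumes (our illustrative assumption, not a model fitted in the studies cited) that a fixed processing budget is shared between the goal-relevant item and all competing demands in proportion to priority weights, and that the item's weight equals its expected reward. `task_share` and its parameters are hypothetical names.

```python
# Hypothetical toy model (an assumption, not a model from the cited studies):
# a fixed processing budget is shared between the goal-relevant item and all
# competing demands in proportion to their priority weights.

def task_share(expected_reward: float, competing_weight: float = 1.0,
               budget: float = 1.0) -> float:
    """Return the part of `budget` devoted to the goal-relevant item.

    The item's priority weight is assumed to equal its expected reward;
    every other demand is lumped into a single `competing_weight`.
    """
    if expected_reward < 0 or competing_weight <= 0:
        raise ValueError("need expected_reward >= 0 and competing_weight > 0")
    return budget * expected_reward / (expected_reward + competing_weight)


for reward in (1.0, 3.0):  # small vs. large expected reward (arbitrary units)
    print(f"expected reward {reward}: share of budget {task_share(reward):.2f}")
# expected reward 1.0: share of budget 0.50
# expected reward 3.0: share of budget 0.75
```

Because the budget is fixed, raising the weight of one item necessarily lowers what remains for everything else. In this toy model, that is the sense in which reward could act as a prioritization signal under capacity limits.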

To examine this, we trained monkeys to attend to spatial locations and to maintain this information in working memory (Kennerley & Wallis, 2009a; Kennerley & Wallis, 2009c). On each trial, a picture informed the subject how much juice they would receive for making a saccade to the remembered location. However, in one set of trials (see Figure 2), the reward cue was presented before the spatial cue (RS), and in the other set of trials the spatial cue was presented before the reward cue (SR), allowing us to compare the effects of reward on the spatial tuning of neurons both independently and conjointly. Figure 2 illustrates an example of reward modulating the spatial selectivity of an LPFC neuron. We recorded from neurons in dorsolateral prefrontal cortex (DLPFC), ventrolateral prefrontal cortex (VLPFC), OFC, and ACC. Many neurons in VLPFC, OFC, and ACC (but not DLPFC) encoded reward information, but spatial information was predominantly encoded in VLPFC. Moreover, reward information was encoded earlier in OFC and VLPFC than in ACC and DLPFC, consistent with previous reports that OFC is one of the first PFC areas to represent the rewarding value predicted by a conditioned stimulus (Kennerley & Wallis, 2009a; Kennerley & Wallis, 2009c; Wallis & Miller, 2003). Importantly, although some neurons exhibited a change in spatial tuning as a function of reward, these neurons were found only in VLPFC (Kennerley & Wallis, 2009c). This is consistent with previous reports that have failed to find strong evidence that reward shapes the direction of attention in neurons in DLPFC, frontal eye field, or premotor cortex (Leon & Shadlen, 1999; Roesch & Olson, 2003), although recent evidence suggests the supplementary eye field may play some role in this function (So & Stuphorn, 2010).

Figure 2. The influence of reward on spatial tuning in a delayed response task. (A) In the reward-space (RS) task, the subject sees two cues separated by a delay. The first cue indicates the amount of juice to expect for successful performance of the task, and the second cue indicates the location the subject must maintain in spatial working memory. The subject indicates his response by making a saccade to the location of the mnemonic cue 1 s later. The fixation cue changes to yellow to tell the subject to initiate his saccade. The space-reward (SR) task is identical except the cues appear in the opposite order. There are five different reward amounts, each predicted by one of two cues, and 24 spatial locations. (B and C) Spike density histograms of single neurons illustrating how the size of an expected reward can modulate spatial tuning of information held in working memory. The graphs illustrate neuronal activity as animals remember different locations on a computer screen under the expectancy of receiving either a small or a large reward for correct performance. The gray bar indicates the presentation of the mnemonic spatial cue. To enable clear visualization, the spatial data are collapsed into four groups, each consisting of six of the 24 possible spatial locations tested. The inset indicates the mean standardized firing rate of the neuron across the 24 spatial locations. (B) When the subject expected a small reward, the neuron showed little spatial selectivity, consisting of an increase in firing rate when the subject was remembering locations in the top left of the screen. When the subject expected a large reward for correct performance, spatial selectivity dramatically increased, with a high firing rate for locations in the top left of the screen and a low firing rate for locations in the bottom right. Spatial selectivity was evident primarily during cue presentation. (C) A neuron that showed moderate spatial selectivity when the subject expected a small reward, but a dramatic increase in spatial selectivity for targets in the top right when the subject expected a large reward. This reward modulation of spatial selectivity persisted into the delay period, indicating a reward modulation of the information contained in working memory. Panel A from “Reward-Dependent Modulation of Working Memory in Lateral Prefrontal Cortex,” by S. W. Kennerley and J. D. Wallis, 2009, Journal of Neuroscience, 29, p. 3260, Figure 1. Panels B and C from J. D. Wallis and S. W. Kennerley, 2010, Figure 3.

Although only VLPFC neurons exhibited a reward-dependent modulation of spatial selectivity, the animals' behavioral performance in the two sets of trials indicated that the encoding of reward in VLPFC neurons reflected an attentional function rather than a reward function. A larger expected reward led to better behavioral performance and increased spatial selectivity when the reward cue was presented before the spatial cue, but to decreased spatial selectivity and no improvement in performance when the reward cue was presented after the spatial cue. This suggested that the reward cue competes for limited attentional resources, interfering with ongoing spatial attention processes that are attempting to maintain the location of the peripherally presented mnemonic cue (Awh & Jonides, 2001; Duncan, 2001). This interpretation of our results is also compatible with recent results from neurophysiology (Lebedev et al., 2004) and neuropsychology (Rushworth et al., 2005) studies that suggest a primary role for VLPFC in attentional control, and is consistent with the idea that VLPFC is important for the maintenance of task-relevant information and the filtering of irrelevant information (Petrides, 1996).

Reward-dependent modulation of cognitive resources is not limited to spatial attention; reward can also modulate LPFC encoding of high-level information, such as categories (Pan, Sawa, Tsuda, Tsukada, & Sakagami, 2008). Furthermore, the modulation may be bidirectional: Attentional allocation also may modulate the reward signal based on behavioral goals. In dieters exercising self-control regarding choices involving healthy and unhealthy foods, increased LPFC activation is associated with a concomitant decrease in areas representing value information (e.g., OFC), as though LPFC is dampening the value signal (Hare, Camerer, & Rangel, 2009).

The Encoding of Action Value

Representing the Action Costs of a Decision

Most studies of decision making have tended to focus on variables that influence the rewarding value of an outcome, such as magnitude, probability, and delay, as discussed above. However, in natural environments, the distance and terrain that one might encounter in obtaining food (or traveling to work) produce energetic costs (e.g., effort), which are a critical component of optimal choice (Rangel & Hare, 2010; Stephens & Krebs, 1986; Stevens, Rosati, Ross, & Hauser, 2005; Walton, Kennerley, Bannerman, Phillips, & Rushworth, 2006). Growing evidence suggests that ACC may have a specialized role in effort-based decision making. Damage to ACC biases animals toward actions that are associated with less effort even when a more rewarding option is available (Floresco & Ghods-Sharifi, 2007; Schweimer & Hauber, 2005; Walton, Bannerman, Alterescu, & Rushworth, 2003; Walton, Bannerman, & Rushworth, 2002). In contrast, OFC lesions impair delay-based decision making, but not effort-based decision making (Rudebeck, Walton, Smyth, Bannerman, & Rushworth, 2006).

Relative to reward processing, very few studies have directly manipulated physical effort while recording the activity of frontal neurons. Neurons in ACC increase their activity as monkeys work through multiple actions toward reward (Shidara & Richmond, 2002). Although neurons in ACC are significantly more likely to encode effort than neurons in LPFC or OFC, this is also true for the reward variables as seen in Figure 1E (Kennerley et al., 2009; Kennerley & Wallis, 2009b). Thus, although ACC may have a specialized role in encoding effort costs, this may be part of its broader role in integrating the costs and benefits of a choice option in the determination of which action is optimal.

Nonetheless, the precise role of these regions in allowing animals to overcome such costs is not yet fully understood. For example, when animals are given a choice option that would minimize effort or delay, ACC and OFC lesions make them averse to effort costs or delay costs, respectively (Rudebeck, Walton, et al., 2006; Walton et al., 2002). Yet damage to either region has no effect on an animal's basic willingness to work or tolerate delays for reward when there are no other potential rewarding alternatives, as shown by normal performance in progressive ratio tests that require animals to work progressively harder and wait progressively longer for reward (Baxter et al., 2000; Izquierdo et al., 2004; Pears, Parkinson, Hopewell, Everitt, & Roberts, 2003). This implies that neither ACC nor OFC encodes a generalized motivational signal that drives behavior in all contexts. Instead, as we will discuss in later sections, these effects likely reflect more general roles for ACC and OFC in maintaining and updating outcome expectancies associated with actions or stimuli, respectively, especially in situations where outcomes are separated from choices either in terms of action steps or in time (Rushworth & Behrens, 2008; Schoenbaum et al., 2009).

Integration or Specialization of Decision Variable Representations

Despite the variety of decision alternatives available at any given moment, the brain must somehow determine which option best meets our current needs and goals. Although some decisions can be evaluated using objective measures along a single dimension (e.g., choosing between identical items that differ only in price), many of the decisions humans and animals face have incommensurate consequences (e.g., should I spend my extra money on a much-needed vacation or on much-needed home repairs?). In this latter example, there is no straightforward way to compare the outcomes because each option is associated with very different benefits.

Formal decision models suggest that the determination of an action's overall value rests on the integration of both the costs and benefits of a decision, thus generating a single value estimate for each decision alternative (Montague & Berns, 2002; Rangel & Hare, 2010). The product of such a calculation can be thought of as a type of neuronal currency (equivalent to net utility in economic terms), which can then be used to compare very disparate outcomes (Montague & Berns, 2002). An abstract value signal would be computationally efficient, as it would allow optimal choice by simple identification of the outcome with the maximal value. However, such a system operating in isolation would make learning about individual decision variables problematic: if the only signal an organism received was in terms of overall utility, it would not be possible to update our estimates of, for example, the effort costs independently of the reward benefits.
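A minimal sketch of this trade-off follows. It assumes a hypothetical integration rule (expected reward, computed as magnitude times probability, minus an effort cost expressed in the same units). The `Option` type, the rule, and the numbers are illustrative assumptions, not a model proposed in the cited work.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Option:
    magnitude: float    # reward size (arbitrary units)
    probability: float  # probability that the reward is delivered
    effort: float       # effort cost, expressed in the same units as reward


def net_value(option: Option) -> float:
    """Hypothetical integration rule: expected reward minus effort cost."""
    return option.magnitude * option.probability - option.effort


options = {
    "A": Option(magnitude=4.0, probability=0.5, effort=1.0),
    "B": Option(magnitude=2.0, probability=1.0, effort=1.0),
    "C": Option(magnitude=6.0, probability=0.5, effort=2.0),
    "D": Option(magnitude=3.0, probability=1.0, effort=1.0),
}
values = {name: net_value(opt) for name, opt in options.items()}
best = max(values, key=values.get)  # choice reduces to picking the maximum

print(values)  # {'A': 1.0, 'B': 1.0, 'C': 1.0, 'D': 2.0}
print(best)    # D
```

Once the scalar exists, choosing is trivial. However, options A, B, and C share a net value of 1.0 despite having very different components. If one of them yielded less than expected, the scalar error alone would not reveal whether magnitude, probability, or effort had been misestimated.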

Whether a brain area is capable of integrating value across decision variables is a topic of great interest. However, it is important to distinguish between a neuron (or voxel) that encodes two different decision variables when they are tested independently (or across studies) and a neuron that encodes the sum or difference of the two variables when they are assessed conjointly in the same testing session (integration). The former case can inform whether a region is capable of encoding the components necessary to form an integrated value signal, whereas only the latter case shows whether a single neuron integrates across decision variables. Nonetheless, at the population level, we know that areas of the frontal cortex (especially ACC and OFC) are particularly good candidates for representing multiple (or integrating across) decision variables, because damage here impairs decisions that require a consideration of multiple decision variables (Amiez et al., 2006; Bechara et al., 1994; Fellows, 2006; Fellows & Farah, 2005; Rudebeck et al., 2008; Rudebeck, Buckley, Walton, & Rushworth, 2006; Walton, Behrens, Buckley, Rudebeck, & Rushworth, 2010; Walton et al., 2006).
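A two-regressor linear model can illustrate the distinction between conjoint integration and separate encoding. The sketch below is a simplification with several assumptions: firing rates are hypothetical and noise-free, reward and effort are varied in a fully crossed design within one session, and a real analysis would additionally need noise, significance testing, and controls. A neuron whose reward and effort coefficients have opposite signs and matched magnitudes behaves like a benefit-minus-cost (net value) signal. A neuron with a nonzero coefficient for only one variable does not integrate.

```python
from itertools import product
from statistics import fmean


def fit_two_regressors(x1, x2, y):
    """Least-squares slopes (b1, b2) for y = b0 + b1*x1 + b2*x2."""
    m1, m2, my = fmean(x1), fmean(x2), fmean(y)
    c1 = [v - m1 for v in x1]
    c2 = [v - m2 for v in x2]
    cy = [v - my for v in y]
    s11 = sum(a * a for a in c1)
    s22 = sum(b * b for b in c2)
    s12 = sum(a * b for a, b in zip(c1, c2))
    s1y = sum(a * t for a, t in zip(c1, cy))
    s2y = sum(b * t for b, t in zip(c2, cy))
    det = s11 * s22 - s12 * s12
    if det == 0:
        raise ValueError("regressors are collinear; vary them independently")
    # Cramer's rule on the 2x2 normal equations of the centered data.
    return (s22 * s1y - s12 * s2y) / det, (s11 * s2y - s12 * s1y) / det


# Fully crossed design: every reward level is paired with every effort level.
trials = list(product([1.0, 2.0, 3.0], [1.0, 2.0, 3.0]))
reward = [r for r, _ in trials]
effort = [e for _, e in trials]

# Hypothetical noise-free neurons (spikes/s).
integrating = [10.0 + 2.0 * (r - e) for r, e in trials]  # net value code
reward_only = [10.0 + 2.0 * r for r, _ in trials]         # ignores effort

print(fit_two_regressors(reward, effort, integrating))  # (2.0, -2.0)
print(fit_two_regressors(reward, effort, reward_only))  # (2.0, 0.0)
```

The fit raises an error when the two variables are perfectly collinear. This is a reminder that integration can only be assessed when both variables vary independently within the same trials.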

At the level of single neurons, some neurons in ACC, LPFC, and OFC appear to integrate across decision variables. OFC neurons integrate reward preference and magnitude as if reflecting the economic value of the different “goods” or outcomes available (Padoa-Schioppa & Assad, 2006, 2008). OFC neurons are also sensitive to both reward size and delay (Roesch & Olson, 2005), or to both positive and negative events (Hosokawa et al., 2007; Morrison & Salzman, 2009; Rolls, 2000), as if coding value on a common scale. In ACC, neurons integrate both size and probability of reward (Amiez et al., 2006; Sallet et al., 2007), multiplex reward size and probability with effort cost (Kennerley et al., 2009), and integrate the costs and benefits of a decision in a manner indicative of net value (Hillman & Bilkey, 2010). Neurons in LPFC encode both positive and negative events (Kobayashi et al., 2006) and integrate reward and delay information to form a temporally discounted value signal (Kim et al., 2008).
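Temporal discounting is often modeled with a hyperbolic function, V = A / (1 + kD), where A is reward amount, D is delay, and k sets how steeply value falls with delay. Exponential discounting is a common alternative. We use the hyperbolic form below only for illustration and do not imply that it is the function fit by Kim et al. (2008). The option values and k are hypothetical.

```python
def hyperbolic_value(amount: float, delay: float, k: float) -> float:
    """Discounted value A / (1 + k*D); larger k means steeper discounting."""
    if k < 0 or delay < 0:
        raise ValueError("k and delay must be non-negative")
    return amount / (1.0 + k * delay)


def choose(options: dict, k: float) -> str:
    """Label of the option whose discounted value is highest."""
    return max(options, key=lambda label: hyperbolic_value(*options[label], k))


# (amount, delay) pairs in arbitrary units; values are illustrative only.
options = {"small_soon": (2.0, 0.0), "large_late": (5.0, 4.0)}
print(choose(options, k=0.5))  # small_soon (2.0 vs. about 1.67)
print(choose(options, k=0.1))  # large_late (2.0 vs. about 3.57)
```

A single integrated quantity combines amount and delay, and the same pair of options can be ranked differently depending on the discount parameter.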

However, variables such as size and delay to reward (Roesch et al., 2006), risk and reward size (O'Neill & Schultz, 2010), or rewarding and aversive events (Morrison & Salzman, 2009) are often encoded by largely separate populations of OFC neurons. It should be noted, however, that in many studies different decision variables have been examined independently (i.e., only a single decision variable was examined within a trial). Thus, the degree to which neurons encode multiple decision variables or integrate across variables might depend on the task requirements. Nonetheless, one interpretation of these results is that many OFC neurons encode individual value parameters associated with a stimulus (as opposed to an abstract value signal) because this representation is key for learning and representing why a particular decision option is valuable (see above). If so, these variable-specific neurons in OFC may play a larger role in the calculation of decision value and in updating these individual parameters following discrepancies, whereas neurons that encode an integrated representation of decision value might reflect the output of the decision process.

Linking Value to Action

Although neurons throughout the frontal cortex encode information about the expected value of an outcome, how these neurons influence action selection clearly differentiates frontal areas. Both ACC and LPFC send projections to the premotor areas, whereas OFC receives strong sensory input but weakly connects with motor areas (Carmichael & Price, 1995; Cavada et al., 2000; Croxson et al., 2005; Dum & Strick, 1993; Wang, Shima, Isoda, Sawamura, & Tanji, 2002). Consistent with this anatomy, neurophysiological studies have reported that ACC neurons tend to encode the value of the outcome and the action that led to the outcome (Figure 1E, 1G; Hayden & Platt, 2010; Kennerley et al., 2009; Luk & Wallis, 2009; K. Matsumoto, Suzuki, & Tanaka, 2003), although studies using eye movements also have found less prominent representation of actions than in adjacent dorsomedial prefrontal structures (Seo & Lee, 2007, 2009; So & Stuphorn, 2010). LPFC neurons also encode information about actions, but neurons here tend to encode associations between the stimulus and action, whereas ACC neurons preferentially encode information about the action and outcome (Luk & Wallis, 2009; K. Matsumoto et al., 2003; Wallis & Miller, 2003). In contrast, OFC neurons encode the value of sensory stimuli with little encoding of motor responses (Kennerley et al., 2009; Kennerley & Wallis, 2009b; Morrison & Salzman, 2009; Padoa-Schioppa & Assad, 2006, 2008; Wallis & Miller, 2003). Some recent reports suggested that OFC neurons may encode action information but typically after the action has been performed and the outcome is experienced (Bouret & Richmond, 2010; Tsujimoto, Genovesio, & Wise, 2009).

Few electrophysiology experiments to date have manipulated only an action's value. However, there is evidence that ACC neurons detect changes in action value—or how preferable one action is relative to another—to directly influence adaptive action selection, but show less sensitivity to changes in value cued by nonappetitive sensory stimuli (Luk & Wallis, 2009; M. Matsumoto, Matsumoto, Abe, & Tanaka, 2007; Shima & Tanji, 1998).

The Comparison of Action Values: Making a Choice

It may seem that, once stimulus and action values have been computed, the process of making a decision itself should be relatively straightforward, requiring a simple comparison of the different integrated values of the available alternatives. However, the role of frontal cortex neurons in allowing certain choices to be made is arguably the least well-understood component of decision making. As discussed above, there are multiple influences on the value of an option, any of which might be influenced by external factors such as rules or attention. Decision making is also not simply a readout of current action values, but instead is a stochastic process that likely uses something equivalent to a logistic function to translate between available options and a selected response (Rangel & Hare, 2010; Sutton & Barto, 1998). In some situations, a decision may not require a direct comparison of action values at all; instead, actions may be chosen via a decision policy (Fleming, Thomas, & Dolan, 2010). Moreover, it is sometimes unclear at what point a particular decision is made, given that the evidence for a particular choice can be accumulated over a relatively large number of trials and long time periods. There is increasing evidence that persistent activity between and across trials may be important for choice behavior (Curtis & Lee, 2010).
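The stochastic, logistic-like readout mentioned above is often formalized as a softmax choice rule (Sutton & Barto, 1998). The following minimal sketch assumes option values on an arbitrary common scale and a single inverse-temperature parameter `beta`; both are illustrative choices.

```python
import math
import random


def softmax_probs(values, beta):
    """Choice probabilities from option values; beta is the inverse temperature."""
    scaled = [beta * v for v in values]
    top = max(scaled)  # subtract the maximum to avoid overflow in exp()
    weights = [math.exp(s - top) for s in scaled]
    total = sum(weights)
    return [w / total for w in weights]


def sample_choice(values, beta, rng):
    """Draw the index of one option according to the softmax probabilities."""
    return rng.choices(range(len(values)), weights=softmax_probs(values, beta))[0]


values = [2.0, 1.0]  # two options, arbitrary value units
for beta in (0.0, 1.0, 5.0):
    print(beta, [round(p, 3) for p in softmax_probs(values, beta)])
# 0.0 [0.5, 0.5]
# 1.0 [0.731, 0.269]
# 5.0 [0.993, 0.007]
```

For two options, the softmax reduces to the logistic function of the scaled value difference, beta * (V1 - V2). Setting `beta` to 0 gives random choice, whereas large values approach always selecting the higher-valued option. `sample_choice` turns these probabilities into an actual, variable choice on each call.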

It has been argued that of all the frontal lobe regions, LPFC is best placed to control and implement choice behavior through its connections with parietal and premotor cortices as well as with OFC and ACC (Kable & Glimcher, 2009). Some directionally tuned cells in LPFC have been shown to dynamically increase their firing rates during choices based on the integrated value of a target presented within their preferred direction (Kim et al., 2008). Electrophysiology studies have reported chosen value signals in OFC (Kepecs et al., 2008; Padoa-Schioppa & Assad, 2006; Sul, Kim, Huh, Lee, & Jung, 2010). Again, however, whether such signals reflect the process of a decision or the readout from a comparison process made elsewhere is a matter of debate.

Given the range of possible sources of evidence to be considered during a decision, one potentially important function of the frontal lobe would be to focus attention on the variables pertinent to the current decision. Patients with large lesions encompassing OFC and VMPFC change the way in which they acquire information when making complex decisions: they prefer to compare all the attributes of a single available option (in this case, an apartment) rather than to evaluate all available options on a selected set of attributes (for instance, the price or number of bedrooms; Fellows, 2006). Recently, Noonan and colleagues (Noonan et al., 2010) showed that monkeys with selective medial OFC lesions failed to choose the highest value option in a three-armed bandit decision-making task when the value of the next best option was itself much greater than the value of the worst option. One interpretation of these data is that medial OFC is required to focus attention on the relevant variables prior to making a decision.

There have been a number of influential theoretical models to explain how response selection might occur. These models typically describe a process by which sensory information about potential actions “accumulates” and potentially competes until a “threshold” is reached, at which point a response is selected (Beck et al., 2008; Bogacz, 2007; Cisek, 2007; Cisek, Puskas, & El-Murr, 2009; Gold & Shadlen, 2002; Krajbich, Armel, & Rangel, 2010; Ratcliff & McKoon, 2008; Usher & McClelland, 2001; Wang, 2008). These models often explain the firing patterns of neurons in the superior colliculus (Munoz & Wurtz, 1995; Ratcliff, Hasegawa, Hasegawa, Smith, & Segraves, 2007), the lateral intraparietal area (Leon & Shadlen, 2003; Roitman & Shadlen, 2002), the frontal eye fields (Gold & Shadlen, 2000; Gold & Shadlen, 2003), the dorsal premotor cortex (Cisek & Kalaska, 2005), and the LPFC (Kim & Shadlen, 1999).
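The following minimal sketch illustrates the accumulate-to-threshold idea. It assumes a single accumulator whose drift is proportional to the difference between two option values, which is a drift-diffusion-style simplification of the models cited. The function name, gain, noise level, and threshold are hypothetical and not fitted to any of the data cited above.

```python
import random


def accumulate_to_bound(value_a, value_b, gain=0.1, noise_sd=0.1,
                        threshold=1.0, max_steps=10_000, rng=None):
    """Simulate one decision with a drift-diffusion-style accumulator.

    Evidence starts at 0 and on each step moves by gain * (value_a - value_b)
    plus Gaussian noise. Reaching +threshold selects A; reaching -threshold
    selects B. Returns (choice, number_of_steps).
    """
    if rng is None:
        rng = random.Random()
    evidence = 0.0
    drift = gain * (value_a - value_b)
    for step in range(1, max_steps + 1):
        evidence += drift + rng.gauss(0.0, noise_sd)
        if evidence >= threshold:
            return "A", step
        if evidence <= -threshold:
            return "B", step
    return None, max_steps  # no commitment within the time limit


rng = random.Random(0)
summary = {}
for diff in (0.5, 2.0):
    runs = [accumulate_to_bound(1.0 + diff, 1.0, rng=rng) for _ in range(500)]
    frac_a = sum(choice == "A" for choice, _ in runs) / len(runs)
    mean_steps = sum(steps for _, steps in runs) / len(runs)
    summary[diff] = (frac_a, mean_steps)
    print(f"value difference {diff}: P(choose A) = {frac_a:.2f}, "
          f"mean steps = {mean_steps:.1f}")
```

In this toy version, a larger value difference produces faster commitments to the better option. The drift is computed from values already attached to actions A and B, which is the action frame of reference that such models typically assume.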

However, in most cases, these models account for patterns of activity in neurons that have defined receptive fields; in other words, where the value of a decision is coded in an action frame of reference. In contrast, as we described earlier, many neurons in PFC encode aspects of decision value that are independent of an action frame of reference, such as current motivational state or preference for particular rewards. Moreover, even when particular actions are associated with different value parameters (e.g., magnitude of reward), many neurons in ACC, LPFC, and OFC encode the value parameter without encoding any information about the response, as if value is coded in “goods” rather than “action” space (Kennerley et al., 2009; Kennerley & Wallis, 2009a; Padoa-Schioppa & Assad, 2006, 2008; Seo & Lee, 2009; Wallis & Miller, 2003). Thus, value coding in many PFC neurons lacks a clear frame of reference with which to bias action selection. It therefore remains a challenge to develop theoretical models that explain precisely how effector-independent value representations in areas such as OFC and ACC ultimately bias the accumulation of evidence in favor of selecting a particular action.

The Encoding of Experienced Outcomes

The Encoding of Outcome Value

Within the frontal cortex, neurons are particularly sensitive to the experienced outcome of a choice. Neurons in ACC, LPFC, and OFC are modulated by the presence and absence of reward or by unexpected outcomes (Amiez, Joseph, & Procyk, 2005; Ito, Stuphorn, Brown, & Schall, 2003; Kennerley & Wallis, 2009b; Niki & Watanabe, 1979; Quilodran, Rothe, & Procyk, 2008; Sallet et al., 2007; Seo et al., 2007; Seo & Lee, 2007; Seo & Lee, 2009; Simmons & Richmond, 2008; Tremblay & Schultz, 2000b; Watanabe, 1989; Watanabe et al., 2002) and by the experience of different magnitudes or types of outcomes (Amiez et al., 2006; Hikosaka & Watanabe, 2000; Kennerley & Wallis, 2009b; Roesch et al., 2006; Rolls, 2000; Rolls et al., 1990; Tremblay & Schultz, 1999; Watanabe, 1996).

We examined neuronal activity at the time of outcome in our multivariable choice task and found that many frontal neurons encoded reward presence, especially in ACC, where approximately 60% of neurons differentiated whether a trial was rewarded (Kennerley & Wallis, 2009b). However, we found no evidence that ACC neurons were simply detecting errors, because approximately as many neurons increased their firing rate to reward presence as to reward absence; rather, ACC neurons appeared to be encoding the value of the outcome. Although we also found evidence that frontal neurons encoded reward prediction errors (see below), the encoding of reward presence/absence was much more common, as has been reported in other studies (Amiez et al., 2006; M. Matsumoto et al., 2007; Seo & Lee, 2007). Moreover, our results also suggest that an explanation of outcome activity solely in terms of expectancy violation or error monitoring is overly simplistic. Many of the neurons that responded to the presence/absence of the reward encoded other aspects of the outcome, such as the magnitude of the reward and the physical effort necessary to earn the reward. This suggests that for many frontal neurons, their role in outcome evaluation generalizes across different types of outcomes rather than being specific to monitoring for the presence of reward or errors. Thus, frontal neurons contain a particularly rich representation of the behavioral outcome that reflects not only the received reward but also why the reward is valuable.

Outcome information can also serve as feedback about the relevance of a response or as a cue to guide future decisions. Neurons in several frontal lobe regions are sensitive to feedback indicating when a change in response is necessary to obtain a reward or avoid reward omission (Johnston, Levin, Koval, & Everling, 2007; Mansouri et al., 2007; K. Matsumoto et al., 2003; Wallis & Miller, 2003). In fact, in the majority of animal neuroscience tasks, outcome value directly informs subsequent responding, meaning that the contribution of the value of a received outcome cannot be dissociated from that of the information obtained from such feedback. One exception came from a series of studies employing an instructed strategy task in which a cue on each trial instructed the animal whether to switch from its previous required response, so that the task could not be solved using a reward-stay/no reward-shift strategy (Tsujimoto et al., 2009; Tsujimoto et al., 2010). At the time of the outcome, LPFC neurons encoded both the response and whether it was rewarded, but OFC neurons only carried information about what response had been chosen (Tsujimoto et al., 2009). Such signals in OFC therefore appear not to represent outcome value per se, but instead to indicate the appropriateness of a choice.

Adaptive Decision Making

Reward Prediction Errors

Dopamine neurons in the ventral tegmental area and substantia nigra have been suggested to encode a prediction error, which is the discrepancy between a predicted and actual outcome (Bayer & Glimcher, 2005; Fiorillo, Tobler, & Schultz, 2003; M. Matsumoto & Hikosaka, 2009; Montague, Dayan, & Sejnowski, 1996; Roesch, Calu, & Schoenbaum, 2007; Satoh, Nakai, Sato, & Kimura, 2003; Schultz, Dayan, & Montague, 1997; Tobler et al., 2005). The frontal cortex is a major recipient of dopaminergic input (Berger, Trottier, Verney, Gaspar, & Alvarez, 1988; Williams & Goldman-Rakic, 1998), and several studies have shown that neurons in PFC, especially within ACC, encode a type of reward prediction error signal, although not necessarily qualitatively identical to the signal evident in dopamine neurons. For example, in a task in which monkeys have to learn which of two actions is the correct (rewarded) action within a block of trials, many ACC (but fewer LPFC) neurons responded to either positive or negative feedback, especially on the first trial of a block (when the feedback is particularly instructive as to which action to select on subsequent trials). In some neurons, the magnitude of the neuronal response to positive feedback decreased over the next few trials (as the animal learned which action would be rewarded) in correlation with decreases in the prediction error (M. Matsumoto et al., 2007). Yet, although neurons in ACC encode a reward prediction error signal, positive and negative prediction errors seem to be encoded by largely separate populations of neurons. Other studies have identified neuronal activity in ACC that resembles a prediction error (Amiez et al., 2005; Seo & Lee, 2007).
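The decline in feedback responses over trials parallels the way a prediction error shrinks as an expectation is learned. The following minimal sketch uses a simple delta-rule (Rescorla-Wagner-style) update as a stand-in for learning. The learning rate `alpha` and the outcome sequences are illustrative assumptions, not parameters estimated by M. Matsumoto et al. (2007).

```python
def delta_rule_errors(outcomes, alpha=0.3, initial_expectation=0.0):
    """Return the prediction error (outcome - expectation) on each trial.

    After each trial the expectation moves a fraction alpha toward the outcome.
    """
    expectation = initial_expectation
    errors = []
    for outcome in outcomes:
        error = outcome - expectation
        errors.append(error)
        expectation += alpha * error
    return errors


# The correct action is rewarded (1) on five consecutive trials ...
print([round(e, 3) for e in delta_rule_errors([1, 1, 1, 1, 1])])
# [1.0, 0.7, 0.49, 0.343, 0.24]
# ... and in a second sequence an expected reward is omitted (0) on trial five.
print(round(delta_rule_errors([1, 1, 1, 1, 0])[-1], 3))
# -0.76
```

The positive error is largest on the first, most informative trial and then shrinks geometrically. Omitting a well-predicted reward produces a negative error of corresponding size.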

In contrast, most neurons in OFC do not exhibit a prediction error signal. Instead, OFC neurons signal expected outcomes: they exhibit the same response at the time of outcome to both expected and omitted rewards, and do not respond more strongly to unexpected rewards than to expected rewards. Their signal is therefore qualitatively different from the prediction error signal evident in dopamine neurons (Schoenbaum et al., 2009; Schoenbaum, Setlow, Saddoris, & Gallagher, 2003; Takahashi et al., 2009), although there is some disagreement in the literature (Sul et al., 2010).

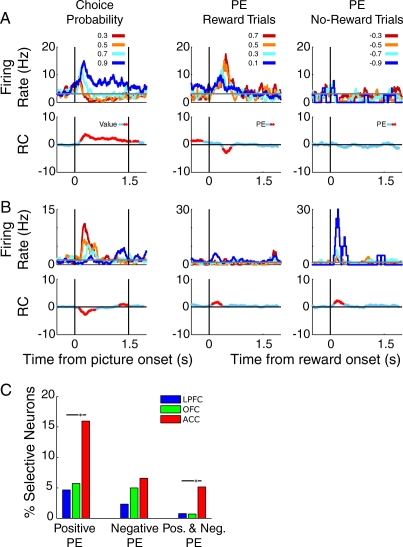

We examined the activity of neurons in ACC, OFC, and LPFC at the time when animals viewed a conditioned stimulus that indicated the probability of receiving a reward, as well as at the time of the outcome. We found that reward prediction error activity was evident only in ACC (Figure 3C), and that positive prediction errors (response on rewarded trials; Figure 3A) were more common than negative prediction errors (response on nonrewarded trials; Figure 3C). We also showed that focal ACC lesions caused reward-based, but not error-based, learning and decision-making impairments (Kennerley, Walton, Behrens, Buckley, & Rushworth, 2006), consistent with a functional link between dopamine and ACC for learning from positive prediction errors. Moreover, the negative prediction error activity rarely took the form of a depression of activity below baseline (e.g., Figure 3B), as has been reported in dopamine neurons (Fiorillo et al., 2003; Schultz et al., 1997). However, this finding may be attributable to the fact that PFC neurons have heterogeneous baseline firing rates that typically lie in the lower half of their dynamic range. A significant effect can therefore be more difficult to detect as a depression from baseline firing rate than as an excitation, simply because the range between baseline and minimum firing rate is often much smaller than the range between baseline and maximum firing rate. In sum, although some PFC neurons (especially in ACC) encode the difference between expected and actual reward, this prediction error activity tends to be qualitatively different from what is evident in dopamine neurons.
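The floor-effect argument can be illustrated with a hypothetical neuron whose firing rate rises linearly with prediction error but cannot fall below zero. The baseline (5 spikes/s), gain, and prediction error values are illustrative assumptions, not measurements.

```python
def firing_rate(pe: float, baseline: float = 5.0, gain: float = 20.0) -> float:
    """Hypothetical neuron: linear in prediction error, floored at 0 spikes/s."""
    return max(0.0, baseline + gain * pe)


for pe in (-0.9, -0.5, -0.1, 0.1, 0.5, 0.9):
    print(f"PE {pe:+.1f}: {firing_rate(pe):.1f} spikes/s")
# PE -0.9: 0.0 spikes/s
# PE -0.5: 0.0 spikes/s
# PE -0.1: 3.0 spikes/s
# PE +0.1: 7.0 spikes/s
# PE +0.5: 15.0 spikes/s
# PE +0.9: 23.0 spikes/s
```

A positive error of +0.9 raises the rate by 18 spikes/s, whereas the neuron can fall only 5 spikes/s before reaching the floor. As a result, negative errors of -0.5 and -0.9 produce identical (zero) rates, and a depression-based code for negative errors is compressed and harder to detect.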

Figure 3. Single neurons encode reward prediction errors. (A and B) Spike density histograms illustrating the activity of single neurons aligned to the presentation of conditioned stimuli associated with different probabilities of reward delivery (left column), to the onset of reward on rewarded trials (middle column), or to the expected onset of reward on nonrewarded trials (right column). The vertical lines in the left column indicate the onset of the choice stimuli (left line) and the time at which the animal was able to make his choice (right line); the vertical lines in the middle and right columns indicate the time at which the reward was (rewarded trials) or would have been (nonrewarded trials) delivered following the choice. The different colored lines indicate the value of the chosen probability stimulus, which also determines the size of the prediction error (PE): PE = 1 − chosen probability on rewarded trials, and PE = 0 − chosen probability on nonrewarded trials. The lower row of plots shows the regression coefficients (RC) from a sliding linear regression testing the relationship between the neuron's firing rate and the probability of reward delivery. Red data points indicate time points at which the probability of reward delivery (or size of prediction error) significantly predicted the neuron's firing rate. (A) Anterior cingulate cortex (ACC) neuron that encodes expected probability at the time of choice (left column) and a positive prediction error at the time of reward onset (middle column), but is insensitive to negative prediction errors (right column). (B) ACC neuron that encodes expected probability at the time of choice (left column), positive prediction errors on rewarded trials (middle column), and negative prediction errors on nonrewarded trials (right column).
(C) Percentage of all neurons selective for positive prediction errors only, negative prediction errors only, or both positive and negative prediction errors. ACC neurons are more likely to encode positive prediction errors or both positive and negative prediction errors relative to lateral prefrontal cortex (LPFC) and OFC. OFC = orbitofrontal cortex. χ² test; *p < .05.
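The prediction error definition in the Figure 3 caption can be written out directly. The sketch below is a minimal illustration, not the analysis pipeline used in the study: it computes PE for each trial and estimates one least-squares regression coefficient of firing rate on PE, separately for rewarded and nonrewarded trials. The function names, the chosen probabilities, and the toy firing rates are hypothetical, and the original analysis used a sliding regression across time bins rather than a single window.

```python
def prediction_error(chosen_probability: float, rewarded: bool) -> float:
    """Return PE = outcome - chosen probability, where outcome is 1 (reward) or 0 (no reward)."""
    if not 0.0 <= chosen_probability <= 1.0:
        raise ValueError("chosen_probability must lie in [0, 1]")
    outcome = 1.0 if rewarded else 0.0
    return outcome - chosen_probability


def regression_coefficient(xs: list[float], ys: list[float]) -> float:
    """Ordinary least-squares slope of ys (firing rates) on xs (prediction errors)."""
    if len(xs) != len(ys) or len(xs) < 2:
        raise ValueError("need at least two paired observations")
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    sxx = sum((x - mean_x) ** 2 for x in xs)
    if sxx == 0.0:
        raise ValueError("prediction errors have no variance")
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    return sxy / sxx


# Hypothetical chosen probabilities, one trial of each outcome type per probability.
probabilities = [0.1, 0.3, 0.5, 0.7, 0.9]
pe_rewarded = [prediction_error(p, True) for p in probabilities]     # all positive
pe_unrewarded = [prediction_error(p, False) for p in probabilities]  # all negative

# Toy neuron resembling Figure 3A: scales with positive PEs, flat for negative PEs.
rates_rewarded = [10.0 + 20.0 * pe for pe in pe_rewarded]
rates_unrewarded = [10.0 for _ in pe_unrewarded]

slope_positive = regression_coefficient(pe_rewarded, rates_rewarded)
slope_negative = regression_coefficient(pe_unrewarded, rates_unrewarded)
print(round(slope_positive, 6), round(slope_negative, 6))
```

For this toy neuron the positive-PE coefficient is 20 and the negative-PE coefficient is 0, the signature of a cell that signals positive but not negative prediction errors.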

Learning From Outcomes and the Guidance of Subsequent Choices

To be able to survive in a changeable world, animals need to be able not only to keep track of whether expected outcomes were received, but also then to use this information to decide whether to persist with the current response or adjust their behavior accordingly. One of the most long-standing and oft-replicated findings is that frontal lobe lesions can cause a variety of behavioral problems when it is necessary to update response rules, alter attentional focus, change associations, or explore alternative options. Although LPFC has been more commonly associated with situations in which a change in context is explicitly cued (Aron, Robbins, & Poldrack, 2004; Stuss et al., 2000), parts of OFC, VMPFC, and ACC appear particularly important when changes in outcome signal a requirement to switch behavior (see reviews in Murray, O'Doherty, & Schoenbaum, 2007; Walton, Rudebeck, Behrens, & Rushworth, 2011).

The most commonly used task to probe this type of flexible decision making has been reward-guided reversal learning (Figure 4A, B). Typically in such tasks, subjects choose between two options, only one of which is consistently rewarded. At certain points during a session, once the rewarded option is being reliably selected, the reward contingencies are reversed, so that subjects have to switch to the alternative option to continue gaining rewards. Importantly, no cue other than the receipt or absence of reward signals when to switch between options.
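To make the task structure concrete, here is a minimal simulation under stated assumptions: reward is deterministic, there are two options, and contingencies reverse without any cue after a fixed number of rewards (loosely modeled on the 25-reward criterion of the action-based task described in the Figure 4 caption). The agent follows a win-stay, lose-switch rule. The function name and its parameters are hypothetical.

```python
def run_reversal_session(n_trials: int, rewards_to_reverse: int, first_choice: int = 0):
    """Simulate a win-stay, lose-switch agent on a deterministic two-option reversal task.

    Returns the (choice, rewarded) history and the number of errors made in each block.
    """
    if rewards_to_reverse < 1:
        raise ValueError("rewards_to_reverse must be at least 1")
    rewarded_option = 0  # option currently paying out; the agent never observes this
    choice = first_choice
    rewards_in_block = 0
    errors_per_block = [0]
    history = []
    for _ in range(n_trials):
        rewarded = choice == rewarded_option
        history.append((choice, rewarded))
        if rewarded:
            rewards_in_block += 1
        else:
            errors_per_block[-1] += 1
        # Win-stay, lose-switch: repeat a rewarded choice, otherwise take the other option.
        next_choice = choice if rewarded else 1 - choice
        # Uncued reversal once this block's reward criterion is met.
        if rewards_in_block == rewards_to_reverse:
            rewarded_option = 1 - rewarded_option
            rewards_in_block = 0
            errors_per_block.append(0)
        choice = next_choice
    return history, errors_per_block


history, errors = run_reversal_session(n_trials=103, rewards_to_reverse=25)
print(errors)
```

Here the first block has no errors because the agent happens to start on the rewarded option, every later block costs exactly one error (the trial on which the uncued reversal is discovered), and the trailing 0 is a block that has only just begun. If reward on the correct option were probabilistic, the same rule would also abandon the correct option after every unrewarded trial.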

Figure 4. Use of reward information as evidence for selecting the correct response. (A and B) Schematics of the stimulus-based (panel A) and action-based (panel B) reversal learning tasks. For the stimulus-based task, animals chose between two stimuli presented on the left and right of a touchscreen, only one of which was associated with reward across a block of trials. Stimulus-outcome contingencies reversed (i.e., the other stimulus became the rewarded stimulus) after animals had performed at 90% correct (27/30) across 2 days of testing. For the action-based task, animals chose between two joystick movements, only one of which was associated with reward across a block of trials. Action-outcome contingencies reversed (i.e., the other action became the rewarded action) after animals had gained 25 rewards for a particular action. (C) Effect of orbitofrontal cortex (OFC) lesions on using reinforcement information in the stimulus-based reversal task (from “Amygdala and Orbitofrontal Cortex Lesions Differentially Influence Choices During Object Reversal Learning,” by P. H. Rudebeck and E. A. Murray, 2008, Journal of Neuroscience, 28, Figure 5. Copyright 2008 by E. A. Murray. Adapted with permission). (D) Effect of OFC lesions on using reinforcement information in the action-based joystick reversal task (from “Frontal cortex subregions play distinct roles in choices between actions and stimuli,” by P. H. Rudebeck, T. E. Behrens, S. W. Kennerley, M. G. Baxter, M. J. Buckley, M. E. Walton, and M. F. Rushworth, 2008, Journal of Neuroscience, 28, Figure 5. Copyright 2008 by S. W. Kennerley. Adapted with permission). (E) Effect of anterior cingulate cortex (ACC) lesions on using reinforcement information in the stimulus-based reversal task. (F) Effect of ACC lesions on using reinforcement information in the action-based joystick reversal task (from “Optimal Decision Making and the Anterior Cingulate Cortex,” by S. W. Kennerley, M. E. Walton, T. E. Behrens, M. J. Buckley, and M. F. Rushworth, 2006, Nature Neuroscience, 9, Figure 3. Copyright 2006 by S. W. Kennerley. Adapted with permission). E + 1 = performance on a trial after an error; EC + 1 = performance on a trial following a single correct response after an error; EC (N) + 1 = performance on a trial following N correct responses after an error. Data are included for each trial type for which every animal had at least 10 instances (which is why there are fewer trial types in the analysis of the stimulus-based reversal task in Rudebeck & Murray, 2008).

As observed with previous tasks, it seems that the nature of the value representation determines which regions are implicated in these types of behavior. Animals with OFC lesions had been shown in previous studies to be impaired on a stimulus reversal task when associations between visual stimuli and reward changed (Izquierdo et al., 2004; Rudebeck & Murray, 2008). Rudebeck and colleagues found, however, that such animals were just as able as control animals to update their choices on a comparable joystick-based response reversal task, in which behavior was guided by associations between instrumental actions and reward (Rudebeck et al., 2008). This suggests that the OFC is not required to detect and respond to changes in reinforcement in general, but is instead needed when stimulus-reward associations must be updated. Conversely, ACC sulcus lesions impair instrumental reversals but do not affect the speed of reversal on a stimulus-based version of the task, as measured by the number of errors made before reaching a behavioral criterion (though see below for subtle effects on performance based on reinforcement history). A similar double dissociation between the effects of OFC and ACC lesions has been observed in stimulus- and action-based dynamic matching tasks, respectively (Kennerley et al., 2006; Rudebeck et al., 2008). Taken together, these findings reinforce the notion that the OFC and ACC play vital roles not merely when reward contingencies change but during all types of choice-outcome associative learning.

Reversal tasks have been important tools for investigating flexible behavior. Although such tasks could in principle be solved with a win-stay, lose-switch strategy, animals nonetheless appear to treat each receipt or absence of reward as a piece of evidence, in an uncertain environment, favoring one response option or the alternative. The importance of this becomes clear when OFC- or ACC-lesioned animals' performance is examined as a function of their recent history of reinforcement following a change in reward contingencies (Izquierdo et al., 2004; Kennerley et al., 2006; Murray et al., 2007; Rudebeck et al., 2008; Rudebeck & Murray, 2008; see Figure 4). Control animals show an increasing likelihood of continuing to choose the correct option as they gather more rewards for the now-correct response. Both OFC- and ACC sulcus-lesioned animals, by contrast, have a marked impairment in persisting with the correct response during a stimulus- or action-based reversal task, respectively: after having received several rewards for selecting the currently rewarded option, they fail to continue selecting it at the same rate as control animals (Figure 4C, 4F). Nonetheless, both sets of animals do show an initial increased tendency to switch to the rewarded option following a reversal, demonstrating that they are not entirely insensitive to errors or changes in reward information.
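The reinforcement-history measure plotted in Figure 4 (E + 1, EC + 1, EC (N) + 1) can be sketched as follows, assuming a per-trial record of whether each choice was correct. Key 0 corresponds to E + 1, key 1 to EC + 1, and key N to EC (N) + 1; trials before the first error are not classified. The function name and the `min_count` threshold are hypothetical. The caption's inclusion rule (at least 10 instances for every animal) applies across animals, whereas this sketch filters a single sequence.

```python
from collections import defaultdict


def proportion_correct_by_history(correct: list[bool], min_count: int = 1) -> dict[int, float]:
    """Proportion correct on trials that follow an error plus N consecutive correct responses.

    Key 0 = E + 1 (trial right after an error), key N = EC (N) + 1.
    Trial types observed fewer than `min_count` times are dropped.
    """
    tallies = defaultdict(lambda: [0, 0])  # N -> [number correct, number of trials]
    run = None  # correct responses since the last error; None until the first error
    for outcome in correct:
        if run is not None:
            tallies[run][0] += int(outcome)
            tallies[run][1] += 1
        if not outcome:
            run = 0  # an error resets the count
        elif run is not None:
            run += 1
    return {n: hits / total for n, (hits, total) in sorted(tallies.items()) if total >= min_count}


# Hypothetical sequence of correct (True) and incorrect (False) choices.
session = [False, True, True, False, False, True, True, True]
print(proportion_correct_by_history(session))
```

In control animals this curve rises with N; the lesion effects described above appear as a curve that stays low after several rewarded correct choices.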

These results may at first seem at odds with the previous discussion of the prevalence of ACC value encoding in stimulus-based decision tasks (e.g., Figure 1). Although there may be a clear functional bias, with OFC and ACC specialized for decisions based on values assigned to stimuli and actions, respectively, ACC-lesioned animals are also initially less willing to persist with a correct response even on a stimulus-based task (Figure 4E). Inactivation of ACC has also been shown to disrupt learning about stimulus-outcome associations (Amiez et al., 2006). However, the effect of ACC lesions here is qualitatively different from that seen on the action-based reversal task or in OFC-lesioned animals, because ACC-lesioned animals behave comparably to controls after making more than three correct responses. This might relate to the importance of ACC for representing the volatility of the reward environment, which suggests that ACC may play a general role in dictating how much influence individual outcomes should be given to guide adaptive behavior and how much effort might need to be expended to gain new information (Behrens, Woolrich, Walton, & Rushworth, 2007; Jocham, Neumann, Klein, Danielmeier, & Ullsperger, 2009). In contrast, there is little evidence to date that OFC damage causes action-based decision-making impairments.
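One common way to formalize how much influence an individual outcome should have is the learning rate of a delta-rule (Rescorla-Wagner-style) update. This is a simplification introduced here for illustration: Behrens et al. (2007) used a Bayesian learner that estimates volatility, not a fixed-rate delta rule. With a fixed rate α and a starting value of 0, the outcome k trials back carries weight α(1 − α)^k, so a higher rate, of the kind a more volatile environment calls for, concentrates influence on the most recent outcomes. The function names and example values are hypothetical.

```python
def delta_rule_value(outcomes: list[float], alpha: float, v0: float = 0.0) -> float:
    """Apply V <- V + alpha * (r - V) to each outcome in order and return the final value."""
    if not 0.0 < alpha <= 1.0:
        raise ValueError("alpha must lie in (0, 1]")
    value = v0
    for reward in outcomes:
        value += alpha * (reward - value)
    return value


def outcome_weights(alpha: float, n_back: int) -> list[float]:
    """Weight of the outcome k trials back (k = 0 is the most recent): alpha * (1 - alpha) ** k."""
    return [alpha * (1.0 - alpha) ** k for k in range(n_back)]


outcomes = [1.0, 0.0, 1.0, 1.0]  # hypothetical reward history, oldest first
for alpha in (0.2, 0.8):         # slow (stable) versus fast (volatile) learner
    weights = outcome_weights(alpha, len(outcomes))
    # With v0 = 0, the final value equals the weighted sum of past outcomes, newest first.
    reconstructed = sum(w * r for w, r in zip(weights, reversed(outcomes)))
    print(alpha, [round(w, 3) for w in weights],
          round(delta_rule_value(outcomes, alpha), 4), round(reconstructed, 4))
```

The two printed values agree for each learning rate, and the weight list shows how sharply the fast learner discounts older outcomes.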

Multiple Learning Systems and Credit Assignment

It has been known for many years that the brain contains multiple parallel learning systems. In complex situations, with multiple stimuli and outcome possibilities and with the precise spatial and temporal structure of the environment unknown, it may not be possible to rely on a system that requires contingent predictions to drive learning (the “credit assignment” problem). Thorndike (1933) described an alternative system that would assign the weight of influence of an outcome not only to the immediately preceding choice that led to that outcome, but also to temporally contiguous choices made in the recent past or even closely following the outcome (“spread-of-effect”). Such a learning system may be adequate when choice and/or reward histories are relatively uniform. In rapidly changing or low-reward environments in which these conditions are not met, however, the mixed pattern of choice and reward histories will compromise the accuracy of the resulting stimulus value approximations, producing a specific pattern of behavioral impairments. Although some prefrontal cells are tuned for specific choice-outcome conjunctions (Seo & Lee, 2009; Uchida, Lu, Ohmae, Takahashi, & Kitazawa, 2007) and others encode an extended history of past choices or outcomes (Seo et al., 2007; Seo & Lee, 2007, 2009; Sul et al., 2010), little is known about which type of learning such neurons might support.
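The difference between contingent learning and a spread-of-effect system can be illustrated with a small delta-rule sketch, under stated assumptions: the outcome on trial t updates the option chosen on trial t and, with smaller hypothetical weights, options chosen on preceding trials. Thorndike's forward spread to choices made just after an outcome is omitted for simplicity. The function name, kernel values, and learning rate are hypothetical, not taken from the studies cited.

```python
def learn_values(choices: list[int], rewards: list[float], n_options: int,
                 alpha: float, kernel: list[float]) -> list[float]:
    """Delta-rule values where the outcome on trial t also updates choices made before t.

    kernel[lag] scales the update for the choice made `lag` trials before the outcome.
    kernel = [1.0] is contingent learning; a longer kernel spreads credit backward in time.
    """
    if len(choices) != len(rewards):
        raise ValueError("choices and rewards must have equal length")
    values = [0.0] * n_options
    for t, reward in enumerate(rewards):
        for lag, weight in enumerate(kernel):
            if t - lag < 0:
                break
            option = choices[t - lag]
            values[option] += alpha * weight * (reward - values[option])
    return values


# Hypothetical session: options 0 and 1 alternate, and only option 0 is ever rewarded.
choices = [0, 1] * 10
rewards = [1.0, 0.0] * 10
contingent = learn_values(choices, rewards, n_options=2, alpha=0.2, kernel=[1.0])
spread = learn_values(choices, rewards, n_options=2, alpha=0.2, kernel=[1.0, 0.5])
print([round(v, 3) for v in contingent], [round(v, 3) for v in spread])
```

With alternating choices, the spread-of-effect learner credits the never-rewarded option with value it did not earn and undervalues the rewarded one, the kind of misattribution that mixed choice and reward histories produce.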

To investigate the role of OFC in guiding different learning systems in complex, changeable environments, Walton, Rudebeck, and colleagues (Rudebeck et al., 2008; Walton et al., 2010) tested macaque monkeys on a series of changeable three-armed bandit tasks, in which the reward probability associated with each stimulus changed dynamically over the course of the session, so that at some points the identity of the highest-value option also changed. Following OFC lesions, the animals were markedly impaired when a change in stimulus value coincided with a change in the identity of the highest-value stimulus (Figure 5A). Nonetheless, these same animals were just as able as controls to track local changes in the reward likelihood of the currently chosen option when the identity of the highest-value stimulus stayed the same (Figure 5B).

Figure 5. Choices on a changeable three-armed bandit task and the influences on current behavior. (A) Two example predetermined reward schedules. The schedules determined whether reward was delivered for selecting a stimulus (stimulus A to C) on a particular trial. Dashed black lines represent the reversal point in the schedule when the identity of the highest value stimulus changes. (B) Average likelihood of choosing the highest value stimulus in the two schedules in the control (solid black line) and orbitofrontal cortex (OFC) groups (dashed black line). SEMs are shown as filled gray and blue areas, respectively, for the two groups. Colored points represent the reward probability of the highest value stimulus. (C) Matrix of components included in the logistic regression and influence of (i) recent choices and their specific outcomes (red Xs, bottom right graph); (ii) the previous choice and each recent past outcome (blue Xs, top right graph); and (iii) the previous outcome and each recent past choice (green Xs, bottom left graph), on current behavior. Green area represents influence of associations between choices and rewards received in the past; blue area represents the influence of associations between past rewards and choices made in the subsequent trials. The data for the first trial in the past in the three plots are identical. Controls, solid black lines; OFCs, dashed gray lines. (From “Separable Learning Systems in the Macaque Brain and the Role of Orbitofrontal Cortex in Contingent Learning,” by M. E. Walton, T. E. Behrens, M. J. Buckley, P. H. Rudebeck, and M. F. Rushworth, 2010, Neuron, 65, Figures 1 and 2.)
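The component matrix in Figure 5C can be read as a grid of choice-by-outcome conjunctions. A minimal sketch, assuming that entry (i, j) indicates whether a given stimulus was chosen i trials back and a reward was delivered j trials back, builds these regressors for one trial and extracts the three families named in the caption: the diagonal (a choice and its own outcome), the first row (the previous choice paired with each past outcome), and the first column (the previous outcome paired with each past choice). Under this assumption the three families share the entry i = j = 1, which would account for the identical first point in the three plots. The helper names are hypothetical, and fitting the logistic regression itself is omitted.

```python
def conjunction_matrix(choices: list[str], rewards: list[int], t: int,
                       stimulus: str, n_back: int) -> list[list[int]]:
    """Entry [i-1][j-1] is 1 if `stimulus` was chosen on trial t-i and reward arrived on trial t-j."""
    if len(choices) != len(rewards) or t - n_back < 0 or t > len(choices):
        raise ValueError("need n_back completed trials before trial t")
    return [
        [int(choices[t - i] == stimulus and bool(rewards[t - j])) for j in range(1, n_back + 1)]
        for i in range(1, n_back + 1)
    ]


def components(matrix: list[list[int]]) -> dict[str, list[int]]:
    """Split the matrix into the three regressor families described in the Figure 5C caption."""
    n = len(matrix)
    return {
        "choice_and_its_own_outcome": [matrix[k][k] for k in range(n)],       # diagonal
        "previous_choice_x_past_outcomes": list(matrix[0]),                   # row i = 1
        "previous_outcome_x_past_choices": [matrix[k][0] for k in range(n)],  # column j = 1
    }


# Hypothetical history of four completed trials; trial index 4 is the choice to be predicted.
choices = ["B", "A", "A", "C"]
rewards = [0, 1, 0, 1]
m = conjunction_matrix(choices, rewards, t=4, stimulus="A", n_back=3)
print(m)
print(components(m))
```

Only the diagonal corresponds to contingent credit assignment; the off-diagonal row and column capture the spread-of-effect associations that, in this framework, remained intact after OFC lesions.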