Abstract

When estimating the position of one hand for the purpose of reaching to it with the other, humans have visual and proprioceptive estimates of the target hand's position. These are thought to be weighted and combined to form an integrated estimate in such a way that variance is minimized. If visual and proprioceptive estimates are in disagreement, it may be advantageous for the nervous system to bring them back into register by spatially realigning one or both. It is possible that realignment is determined by weights, in which case the lower-weighted modality should always realign more than the higher-weighted modality. An alternative possibility is that realignment and weighting processes are controlled independently, and either can be used to compensate for a sensory misalignment. Here, we imposed a misalignment between visual and proprioceptive estimates of target hand position in a reaching task designed to allow simultaneous, independent measurement of weights and realignment. In experiment 1, we used endpoint visual feedback to create a situation where task success could theoretically be achieved with either a weighting or realignment strategy, but vision had to be regarded as the correctly aligned modality to achieve success. In experiment 2, no endpoint visual feedback was given. We found that realignment operates independently of weights in the former case but not in the latter case, suggesting that while weighting and realignment may operate in conjunction in some circumstances, they are biologically independent processes that give humans behavioral flexibility in compensating for sensory perturbations.

Keywords: adaptation, sensory integration, multisensory, proprioception, vision

Estimating the hand's position in space is an important computation for humans. We often have to reach to one hand with the other, e.g., when placing a teacup on a saucer. The true position (Y) of the hand holding the saucer can be estimated by two independent sensory modalities: vision and proprioception. The image of the hand on the retina provides the brain with a visual estimate of hand position (ŶV), which is equal to Y plus errors introduced by visual processing (EV), e.g., due to noise in neural transmission, coordinate transformation, sensory receptors, and the environment, as follows:

$$\hat{Y}_V = Y + E_V \tag{1}$$

Sensory receptors in the muscles and joints of the arm provide a proprioceptive estimate of hand position (ŶP). This is equal to Y plus errors introduced by proprioceptive processing (EP), e.g., due to noise in neural transmission, coordinate transformation, sensory receptors, and the environment, as follows:

$$\hat{Y}_P = Y + E_P \tag{2}$$

ŶV and ŶP are likely to differ in both variance and bias, as the independent processing of each modality introduces different noise and biases to each final estimate. To form a single estimate of hand position with which to guide behavior, the brain is thought to weight and combine the available estimates into a single, integrated estimate of hand position (Ghahramani et al. 1997).

If ŶVP is the brain's estimate of hand position that integrates both vision and proprioception, and WV is the weight of vision versus proprioception (i.e., a WV of 0.5 implies equal reliance on vision and proprioception), then:

$$\hat{Y}_{VP} = W_V \hat{Y}_V + (1 - W_V)\,\hat{Y}_P \tag{3}$$

Visual and proprioceptive estimates of target hand position are unlikely to be in perfect agreement normally (Smeets et al. 2006). If ŶV and ŶP are substantially misaligned (e.g., wearing glasses with prisms causes the visual estimate of hand position to be offset from the proprioceptive estimate), we initially have difficulty making accurate movements, e.g., reaching to that hand. However, the brain can compensate for such misalignments, enabling us to move accurately once more (e.g., Welch 1978).

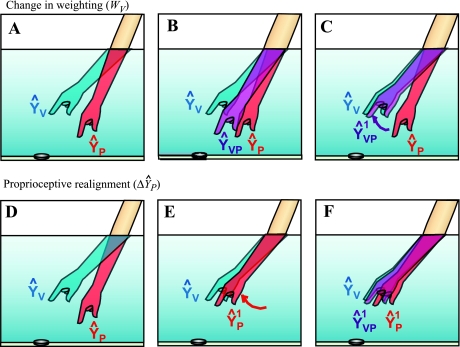

In theory, there are two distinct ways for the brain to resolve such a discrepancy between ŶV and ŶP (Fig. 1). A realignment strategy (Fig. 1E) would entail shifting the proprioceptive estimate (ŶP) closer to the visual estimate (ŶV), shifting the visual estimate closer to the proprioceptive estimate, or both (e.g., Welch 1978). In other words, the discrepancy between ŶV and ŶP would be resolved directly, by remapping the relationship between vision and proprioception. Alternatively, the brain could use a weighting strategy (Fig. 1C) and alter the weight of vision versus proprioception without any realignment (i.e., a change in WV rather than in ŶV or ŶP). For example, if proprioception is misaligned relative to vision, and vision is deemed to be the more reliable modality, then proprioception could simply be ignored (and WV increased to 100%).

Fig. 1.

Sensory weighting and realignment could each be used to compensate for a visuoproprioceptive misalignment. A: when reaching into a fountain to pick up a coin, for example, the visual estimate of hand position (ŶV) will be offset from the proprioceptive estimate (ŶP). B: the brain is thought to weight and combine the available estimates into a single, integrated estimate of hand position (ŶVP) (Ghahramani et al. 1997). However, relying on this integrated estimate will result in missing the coin, which is most closely lined up with ŶV. C: to bring the integrated estimate closer to ŶV, the brain could increase the contribution of ŶV (i.e., up weight vision), yielding ŶVP1. D–F: alternatively, the brain could realign proprioception, bringing ŶP closer to ŶV (E). When the brain integrates ŶP1 and ŶV (F), ŶVP1 is obtained, and the person can accurately reach the coin.
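As a rough numerical illustration of the two strategies, the sketch below applies the weighted combination of Eq. 3 to made-up values: a visual estimate 70 mm away from the proprioceptive estimate and an initial weight of vision of 0.5. The function name `integrate` and all numbers are illustrative assumptions, not values or code from the study.

```python
def integrate(y_v, y_p, w_v):
    """Eq. 3: weighted combination of visual and proprioceptive estimates."""
    return w_v * y_v + (1.0 - w_v) * y_p

# Hypothetical misalignment: vision reports 70 mm, proprioception 0 mm.
y_v, y_p = 70.0, 0.0

baseline = integrate(y_v, y_p, w_v=0.5)    # no compensation
weighting = integrate(y_v, y_p, w_v=1.0)   # weighting strategy: rely on vision only
realigned = integrate(y_v, y_v, w_v=0.5)   # realignment strategy: y_p shifted onto y_v

print(baseline, weighting, realigned)  # 35.0 70.0 70.0
```

Both strategies bring the integrated estimate onto the visual estimate in this toy case, which is why the two cannot be told apart from the integrated estimate alone.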

A realignment strategy has the advantage of preserving information from both vision and proprioception. Integrating the two inputs, rather than ignoring one, confers a benefit in terms of variance: the integrated estimate has a smaller variance than either unimodal estimate (Maybeck 1979; Ghahramani et al. 1997). However, there may be situations when a weighting strategy is more beneficial. For example, if the misalignment will be of brief duration (e.g., compensating for the visual distortion when reaching into a tub of water to retrieve a dropped object), an accurate reach may be achieved most quickly by simply relying more on vision, i.e., a weighting strategy. Realignment, conversely, requires experience with the sensory misalignment (e.g., Welch 1978) and so may be more time consuming.
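The variance benefit can be made concrete under the standard minimum-variance (inverse-variance) rule for combining two independent, unbiased estimates, which is the usual reading of the cited work. The sketch below is a minimal illustration under that assumption; the function names and the variances are invented for the example.

```python
def min_variance_weight(var_v, var_p):
    """Weight of vision that minimizes the variance of the combined estimate,
    assuming independent noise in the two modalities."""
    return var_p / (var_v + var_p)

def integrated_variance(var_v, var_p):
    """Variance of the minimum-variance combination of the two estimates."""
    return (var_v * var_p) / (var_v + var_p)

var_v, var_p = 4.0, 12.0  # hypothetical variances (mm^2)
w_v = min_variance_weight(var_v, var_p)
var_vp = integrated_variance(var_v, var_p)

print(w_v, var_vp)  # 0.75 3.0
```

With these numbers the integrated variance (3.0 mm²) is below the smaller unimodal variance (4.0 mm²), whereas ignoring proprioception altogether would leave the variance at 4.0 mm².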

Weightings of vision versus proprioception have been studied extensively (e.g., Ghahramani et al. 1997; van Beers et al. 1999), but it is not known how or whether weights are related to realignment. Smeets et al. (2006) raised the possibility that in studies of visuoproprioceptive realignment, the process taking place is actually visuoproprioceptive reweighting. Adams et al. (2001) demonstrated that people adjust to visual magnification through a remapping, rather than a weighting, process; however, only visual submodalities were considered. The bottom line is that it is not known if the brain uses a weighting strategy, either alone or in combination with realignment, in a situation where vision and proprioception are spatially misaligned with each other. Furthermore, it is not known if there is any biological or functional relationship between visuoproprioceptive weighting and realignment.

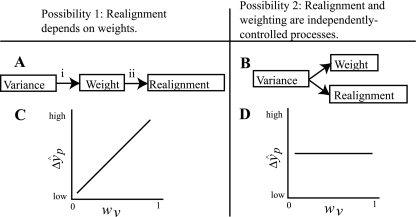

One possible relationship between these two processes is that realignment is determined by weights. If two sensory inputs are misaligned relative to each other, the brain must decide which input is less trustworthy and therefore should be realigned to match the other, more trustworthy input: the more variable (and therefore lower weighted) input would be the obvious choice. In other words, the relative weights of integrated sensory estimates depend on their relative variances, and the relative realignment of these estimates depends on their relative weights (Ghahramani et al. 1997). Relying on this possible relationship, sensory weights have been inferred from sensory realignment in experimental situations, i.e., if realignment is determined by weights, then the sensory input that realigns more must have been weighted less (van Beers et al. 2002). However, while the relationship between variance and weights (Fig. 2A, i) has been demonstrated in a number of circumstances (e.g., Ernst and Banks 2002; Ghahramani et al. 1997; van Beers et al. 1999), the dependence of realignment on weights (Fig. 2A,ii) has never been directly tested.

Fig. 2.

Views of the relationship between sensory weights and realignment. A: possibility 1. The sensory modality with greater variance will be weighted less in an integrated sensory estimate (i) and will realign more if realignment takes place (ii). The connection between variance and weights (i) has been demonstrated in a number of human behaviors (e.g., Ghahramani et al. 1997; van Beers et al. 1999; Ernst and Banks 2002), but the relationship between sensory realignment and weights (ii) has been studied only indirectly (van Beers et al. 2002). B: possibility 2. In the present study, we found evidence to support an alternative view, in which weighting and realignment are two independently controlled processes, either of which can be used to compensate for a sensory misalignment. It should be noted that while weights (Ghahramani et al. 1997; van Beers et al. 1999; Ernst and Banks 2002) and realignment (Ghahramani et al. 1997; van Beers et al. 2002; Burge et al. 2008) have each been linked to the relative variances in sensory estimates, we do not suggest that either weights or realignment is wholly dependent on variance; other influences, such as attention/conscious effort, have been shown to affect both weights (Warren and Schmitt 1978) and realignment (Kelso et al. 1975). Accuracy may also be important (Di Luca et al. 2009). C and D: experimental predictions. C: if possibility 1 is correct and sensory realignment depends on weights, we predicted that in both experiments, subjects with a higher weight of vision (wV) would show a greater realignment of proprioception (ΔŷP). D: if possibility 2 is correct and sensory realignment is controlled independently of weights, we expected wV and ΔŷP to be unrelated in at least one of the two experiments.

An alternative possibility is that realignment is not determined by weights but rather that the two processes are controlled independently by the brain (Welch et al. 1979). In this case, we would expect to find situations where realignment occurs independently of weights, reflecting greater behavioral flexibility than the first possibility.

Discriminating between these two possibilities is important to our understanding of human multisensory processing and may, in turn, improve our ability to help neurological patient populations who are impaired at compensating for sensory misalignments. To examine the relationship between sensory weights and realignment experimentally, we gradually imposed a misalignment between visual and proprioceptive estimates of a static hand position. Weighting of vision versus proprioception was measured independently of proprioceptive realignment, which allowed us to examine the relationship between the two processes.

In experiment 1, we used endpoint visual feedback to create a situation where the subject could only “succeed” (i.e., hit the target) by regarding vision as correct and proprioception as misaligned, but realignment and weighting strategies would in theory lead to equal success. In experiment 2, no endpoint visual feedback was given, allowing us to test whether sensory realignment could occur in the absence of an explicit error signal and whether weights and realignment are related when the constraint of regarding vision as correct is removed (predictions in Fig. 2, C and D). We found that realignment operates independently of weights in experiment 1 but not in experiment 2, suggesting that while weighting and realignment may operate in conjunction in circumstances such as those in experiment 2, they are biologically independent processes (Fig. 2B). This independence gives humans behavioral flexibility in compensating for sensory perturbations.

MATERIALS AND METHODS

We conducted two experiments to determine if sensory weighting and realignment are independent processes. Subjects in both experiments reached with one hand to a target. In different trials, the target was either a visual target (V); their other hand's position, which could be estimated by proprioception (P); or both (VP). We imposed a misalignment between visual and proprioceptive estimates of target position by gradually shifting the V component away from the P component of VP targets and measured sensory weights and realignment. Subjects in experiment 1 received endpoint visual feedback on VP reaches; subjects in experiment 2 did not.

Subjects

We studied 53 individuals in total. Thirty-nine subjects did experiment 1 (16 women and 23 men; mean age: 35.6 yr). Nineteen subjects did experiment 2 (12 women and 7 men; mean age: 35.8 yr). Five subjects participated in both experiments. Of the 39 experiment 1 subjects, 18 subjects also performed a sensory subexperiment. All subjects stated that they were neurologically healthy, had normal or corrected-to-normal vision, and gave informed consent. Protocols were approved by the Institutional Review Board of The Johns Hopkins University.

Experimental Design

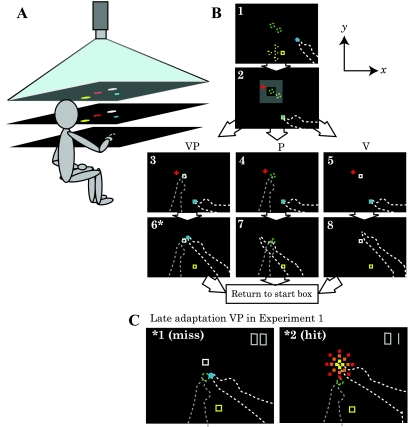

Setup.

Subjects sat at a reflected rear projection apparatus (Fig. 3A) and reached to V, P, or VP targets with no vision of either arm. The V target was a 12 × 12-mm white box projected on the display, and the P target was the index finger of the nondominant hand positioned on a tactile marker beneath the reaching surface (Fig. 3B). Infrared-emitting markers were placed on each index fingertip, and an Optotrak 3020 (Northern Digital) was used to record three-dimensional position data at 100 Hz. Black fabric obscured the subject's view of both arms and the room beyond the apparatus. The three target types repeated in the order of VP-P-VP-V throughout the baseline (28 reaches) and adaptation (84 reaches) blocks, which together took 30–40 min. Every reach began with the reaching finger in a start box in one of five positions (Fig. 3B, screen 1). Targets were in one of two locations centered 30–40 cm (mean: 36 cm) in front of the subject's chest at midline (Fig. 3B, screens 3–5).

Fig. 3.

Experimental setup for experiments 1 and 2. A: the subject looked down into a horizontal mirror (middle) and saw targets and cursors indicating hand position reflected from a horizontal rear projection screen (top). The (dominant) reaching hand rested on a hard acrylic reaching surface (bottom) below the mirror. The (nondominant) target hand remained below the reaching surface at all times. The mirror was positioned midway between screen and reaching surface, such that images in the mirror appeared to be in the plane of the reaching surface. Not pictured: black drapes that obscured the subject's vision of his/her arms and the room outside the apparatus. B: timeline of a single reach in the baseline block and bird's eye view of the display. Dashed lines were not visible to the subject. The total display area was 75 × 100 cm. Schematic is not shown to scale. V, visual target; P, proprioception target; VP, combination of V and P targets. Screen 1: subjects placed their reaching finger (white dashed line) in the yellow start box, which appeared in one of five possible positions (yellow dashed squares; the central position was 20 mm from each of the other positions), with the aid of an 8-mm-diameter blue cursor indicating reaching finger position [veridical to minimize proprioceptive drift between reaches (Wann and Ibrahim 1992)]. Screen 2: a red fixation cross appeared in a random location within an invisible zone (gray), and subjects were instructed to fixate on it for the duration of the reach. Screens 3–5: subjects positioned their target finger (dashed gray line) as instructed, on one of the two tactile markers stuck to the bottom of the reaching surface ∼40 mm apart (green dashed circles) for a P or VP target or down in their lap for a V target, which appeared as a white box in one of the two possible target locations.
For VP reaches, the V target was projected on the P target during the baseline block but was gradually offset in the y direction during the adaptation block. Once both hands were correctly positioned, subjects reached toward the target, with the cursor disappearing at movement initiation. Movement speed was not restricted, and subjects were permitted to make adjustments. Screens 6–8: when the reaching finger had not moved more than 1 mm for 2 consecutive seconds, the endpoint position was recorded and the red fixation cross disappeared. In experiment 2, subjects were immediately instructed to lower their target hand to rest in their lap and return the reaching finger to the start box. In experiment 1 only, endpoint visual feedback (blue dot) was displayed for 2 s at the movement endpoint location after reaches to VP targets. After this, subjects were instructed to lower their target hand and return the reaching finger to the start box. C: bird's eye view of an unsuccessful (screen 1) and successful (screen 2) reach to a late adaptation VP target in experiment 1. The illustration is to scale. The V component (white box) of VP targets was gradually displaced up to 70 mm away from the P component (green dashed circle) in the positive y direction. Subjects were not aware of this manipulation, but reaching endpoints close to the V component (screen 2) counted as a hit: subjects viewed an animated explosion, heard sound effects, and were awarded a point (top right corner). Reaching endpoints farther than 10 mm from the V component (screen 1) resulted in a negative sound effect and no point was awarded. In other words, the visual estimate of target hand position had to be regarded as the “good” estimate (and proprioception the “bad” estimate) to succeed in experiment 1. In experiment 2, no endpoint visual feedback was given (no blue dot, no explosions, and no points awarded). *Endpoint visual feedback was given in experiment 1 only and only for VP targets.

To prevent arm length from constraining reaching endpoints, the entire display was scaled to arm length such that if the elbow was fully extended, the reaching finger could be placed ∼20 cm past the farthest target, well outside the maximum distance subjects typically reached at any point in the experiment. We randomized start positions and target positions so that it was unlikely that a subject could memorize a reach direction or extent. For a participant of average arm length, reach distance (from start box to target location) averaged 14 cm.

Targets and perturbation.

VP targets represented the situation of interest, i.e., the subject was estimating the position of his/her target hand with both visual and proprioceptive estimates available. We imposed a misalignment between these estimates: after a veridical baseline period, the V component gradually shifted away from the P component in the forward direction (i.e., positive y direction) such that, by the end of the adaptation block, the V component was 70 mm farther away from the subject than the P component (Fig. 3C). The unimodal V and P targets were used to assess proprioceptive realignment and sensory weights as discussed below. During the adaptation block, the unimodal V target shifted away from the subject at the same rate as the V component of VP targets (1.67-mm shift added every VP reach). Every subject was questioned at the end of the experiment about whether they felt that the V component of VP targets was always on top of the P component. If a subject felt that the V component was displaced from the P component in the direction of the 70-mm perturbation (straight ahead), even if they only estimated it to be a 1-mm displacement, we discarded that subject's data. This occurred for six subjects who were excluded (additional to the 53 subjects in the final sample).
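The perturbation schedule can be sketched from the numbers given in the text: 84 adaptation reaches in the repeating order VP-P-VP-V, with 1.67 mm added on every VP reach. The code below is only an illustration; it assumes that the increment is applied starting with the first adaptation VP reach and that unimodal V targets use the most recent offset, neither of which is spelled out in the text. The names `perturbation_schedule`, `ORDER`, and `STEP_MM` are hypothetical.

```python
STEP_MM = 1.67                   # shift added on every VP reach (from the text)
ORDER = ["VP", "P", "VP", "V"]   # repeating target order (from the text)
N_ADAPT = 84                     # reaches in the adaptation block (from the text)

def perturbation_schedule(n_reaches=N_ADAPT, step=STEP_MM):
    """Return a list of (target_type, v_offset_mm) for each adaptation reach.

    Assumption: the offset grows on each VP reach and V targets reuse the
    current offset. P targets have no visual component, so offset is None.
    """
    offset = 0.0
    schedule = []
    for i in range(n_reaches):
        kind = ORDER[i % len(ORDER)]
        if kind == "VP":
            offset += step
        schedule.append((kind, None if kind == "P" else round(offset, 2)))
    return schedule

schedule = perturbation_schedule()
n_vp = sum(1 for kind, _ in schedule if kind == "VP")
final_offset = schedule[-1][1]
print(n_vp, final_offset)
```

Under these assumptions there are 42 VP reaches, so the accumulated offset comes to about 70 mm, consistent with the stated final displacement.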

Fixation.

Before every reach, a red fixation cross appeared in a random location (constant across subjects) within an invisible 10-cm zone centered on the target (Fig. 3B, screen 2). Subjects were instructed to look at the cross for the duration of the reach. To prevent subjects from noticing that the V targets were shifting during the adaptation block, the fixation cross zone shifted at the same rate as the V target. We discarded subjects from the final sample whose reaching endpoints were influenced by the position of the fixation cross (Appendix A).

Visual feedback.

A finger cursor was shown so that subjects could put it in the start box. The cursor disappeared when they reached to the target. In experiment 1, subjects were shown the cursor at the end of the reaches to VP targets only. The target exploded if they were within 10 mm of the center of the white box. Subjects were awarded a point for hitting the target and the score was displayed at all times. They were told that the goal was to earn as many points as possible. In experiment 2, they were not shown the cursor at the end of any reaches, and there were no explosions or points awarded.

Task Measures of Experiment 1

The purpose of experiment 1 was to impose a misalignment between visual and proprioceptive estimates of the target hand position such that success could theoretically be attained with either a weighting strategy (weighting vision heavily, i.e., high WV in Eq. 3) or a realignment strategy (realigning the proprioceptive estimate to match the visual estimate, i.e., change ŶP in Eq. 3).

We specifically evaluated the following four measures in experiment 1: 1) success at the task, 2) weighting of vision versus proprioception, 3) realignment of proprioception, and 4) the amount of motor adaptation. These measures are described below and are shown in Table 1.

Table 1.

Summary of task measures

| Task Measures | Equation | Symbol | Relation to Eq. 3 | n | Group Mean |
|---|---|---|---|---|---|
| Experiment 1 | | | | | |
| Success | 4 | N/A | N/A | 39 | 69% |
| Weight of vision | 5 | wV | ≈WV | 39 | 0.59 |
| P endpoint shift | 7 | ΔŷP | ≈ΔŶP + motor adaptation | 39 | 27.5 mm |
| Sensory subexperiment | N/A | ΔŷP | ≈ΔŶP | 11* | 18.6 mm* |
| Experiment 2 | | | | | |
| Weight of vision | 5 | wV | ≈WV | 19 | 0.53 |
| P endpoint shift | 8 | ΔŷP | ≈ΔŶP | 19 | 17.4 mm |

ΔŷP represents the observed change in behavior when subjects indicated the position of proprioceptive (P) targets; wV represents the measured weight of vision relative to proprioception. All task measures are related to elements of Eq. 3. N/A, not applicable.

*Data given only for 11 of 18 subjects whose change in P reach endpoints was significant.

Success.

We wanted to determine the role of weighting versus realignment in task success: if weighting and realignment operate independently, then knowing which process contributes to success could be important for future study of patients who are impaired at one or both processes. From the subject's perspective, “success” in experiment 1 meant hitting the V component of a VP target. By our approximation of a subject's success, a 100% successful subject was one whose VP reaching endpoints reached the position of the displaced V targets (70 mm displaced from the P targets) by the end of the adaptation block. A 50% successful subject is one whose VP reaching endpoints shifted only half of the distance. If ŷVPα is the mean of the first four VP endpoint y-coordinates in the adaptation block and ŷVPβ is the mean of the last four VP endpoint y-coordinates, then:

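$$\text{Success} = \frac{\hat{y}_{VP\beta} - \hat{y}_{VP\alpha}}{70\ \text{mm} - \hat{y}_{VP\alpha}} \times 100\% \tag{4}$$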

The denominator serves to normalize success to VP endpoints in early adaptation. This is important because not all subjects began the adaptation block with zero bias on VP reaches.

Weight of vision.

To calculate weights, we relied on the fact that reaches to targets of different modalities are biased in different directions, even in the absence of a perturbation (e.g., Crowe et al. 1987; Foley and Held 1972; Haggard et al. 2000; Smeets et al. 2006). As we have done previously (Block and Bastian 2010), we reasoned that on VP reaches, subjects would point closer to their mean V endpoint position if they were assigning more weight to vision and closer to their mean P endpoint position if they were assigning more weight to proprioception. If wV is the experimental weight of vision, wP is the experimental weight of proprioception, and the “P to VP distance” is the linear distance between mean P endpoints and mean VP endpoints, then:

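$$w_V = \frac{\text{P to VP distance}}{\text{P to VP distance} + \text{V to VP distance}} \tag{5}$$

$$w_P = 1 - w_V \tag{6}$$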

For simplicity, we will refer to weights only in terms of vision (wV). We computed a separate wV for every VP reach to minimize interference from any sensory drift or recalibration over time. For the ith VP reach (VPi), we used the mean position of the four V endpoints and four P endpoints occurring closest in time and compared these two positions to the mean position of VPi, VPi − 1, and VPi + 1 (using VPi alone did not alter the results, but it did make wV noisier). Thus, we could estimate the weight of vision on a short time scale. To estimate the initial weight of vision, the first four wV values in the experiment were averaged. To estimate weight of vision late in the adaptation block, the last four wV values in adaptation were averaged.

It should be noted that this method of weight calculation is meaningful only if the sets of V and P endpoints are distinct from one another. We therefore discarded any wV values that resulted from a calculation where the V to P separation was smaller than half a SD of either the V or P endpoint distributions (a cutoff of 1 SD yielded similar results but caused more wV values to be discarded). Van Beers et al. (1999, 2002) have shown that weights are matrices rather than scalars, i.e., the brain uses one WV to estimate hand position in azimuth and a different WV for depth. Our calculation of a single wV value using two-dimensional distances is therefore a simplification, but this was necessary here because the visual and proprioceptive covariance matrices were not known, and V and P endpoints were often not separated enough in depth to calculate wV for that dimension. Subjects' weights were not affected by the endpoint error feedback or the modality of the previous target (Appendix A).
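The weight calculation and the discard rule can be sketched as follows. This is a minimal illustration, not the authors' code: it assumes that wV is the P to VP distance divided by the sum of the P to VP and V to VP distances (so that VP endpoints nearer the V mean give a larger wV), and it reads "half a SD of either distribution" as half of the larger of two scalar SDs supplied by the caller. The function name and the example coordinates are hypothetical.

```python
import math

def weight_of_vision(v_mean, p_mean, vp_mean, sd_v, sd_p):
    """Estimate w_V from two-dimensional mean endpoints (x, y) in mm.

    Returns None when the V and P means are too close for the estimate to
    be meaningful (separation < half of the larger SD; this reading of the
    discard rule is an assumption).
    """
    if math.dist(v_mean, p_mean) < 0.5 * max(sd_v, sd_p):
        return None
    p_to_vp = math.dist(p_mean, vp_mean)
    v_to_vp = math.dist(v_mean, vp_mean)
    total = p_to_vp + v_to_vp
    if total == 0:  # all three means coincide; weight is undefined
        return None
    return p_to_vp / total

# Hypothetical means: VP endpoints lie two-thirds of the way from P to V.
w_v = weight_of_vision(v_mean=(0.0, 30.0), p_mean=(0.0, 0.0),
                       vp_mean=(0.0, 20.0), sd_v=8.0, sd_p=10.0)
discarded = weight_of_vision(v_mean=(0.0, 3.0), p_mean=(0.0, 0.0),
                             vp_mean=(0.0, 2.0), sd_v=8.0, sd_p=10.0)
print(w_v, discarded)
```

In the second call the V and P means are only 3 mm apart, less than half of the larger SD, so the value is discarded rather than reported.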

P endpoint shift.

We measured the shift in P reaching endpoints in the y dimension from the beginning to the end of the adaptation block. This shift was not unduly influenced by the shifting visual target, fixation cross position, or subjects' memory of reaching distance (Appendix A); however, we did find that some of the P endpoint shift could be accounted for by reaching arm motor adaptation rather than pure proprioceptive realignment per se (Table 1). Here, we defined motor adaptation as a systematic change in the reach motor command in response to explicit feedback about reach errors. Proprioceptive realignment of the target hand and motor adaptation of the reaching hand could each contribute to the reaching hand moving further than the proprioceptive estimate of target position (i.e., a shift in P endpoints). If ŷPα is the mean of the first four P endpoint y-coordinates in the adaptation block and ŷPβ is the mean of the last four P endpoint y-coordinates, then:

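$$\Delta\hat{y}_P = \hat{y}_{P\beta} - \hat{y}_{P\alpha} \approx \Delta\hat{Y}_P + \text{motor adaptation} \tag{7}$$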

Here, ΔŷP (our measured change in P endpoints) is equivalent to ΔŶP (change in the brain's proprioceptive estimate of target hand position) plus motor adaptation.

To get a qualitative estimate of which strategies lead to the greatest task success, we divided subjects into four groups according to their use of weighting and P endpoint shift strategies. Subjects who shifted P reach endpoints ≥ 35 mm (half the total misalignment) but did not rely heavily on vision (last four wV in the adaptation block < 0.5) were classified as using the P endpoint strategy. Subjects who relied heavily on vision but did not shift P endpoints at least 35 mm were classified as using a weighting strategy. Subjects who shifted P endpoints ≥ 35 mm and also relied heavily on vision (last four wV in the adaptation block ≥ 0.5) were classified as using a combined strategy. If neither P endpoint shift nor weight of vision met the cutoff, the subject was classified as using an insufficient strategy.
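The four-way grouping described above amounts to two threshold tests. The sketch below restates it in code; the function name and argument names are hypothetical, while the cutoffs (35 mm and 0.5) are the ones given in the text.

```python
def classify_strategy(p_shift_mm, w_v_late, shift_cutoff=35.0, weight_cutoff=0.5):
    """Assign a subject to one of the four strategy groups.

    p_shift_mm: P endpoint shift over the adaptation block (mm).
    w_v_late:   mean of the last four w_V values in the adaptation block.
    """
    shifted = p_shift_mm >= shift_cutoff     # at least half of the 70-mm misalignment
    relied_on_vision = w_v_late >= weight_cutoff
    if shifted and relied_on_vision:
        return "combined"
    if shifted:
        return "P endpoint"
    if relied_on_vision:
        return "weighting"
    return "insufficient"

# Values reported for the example subject in Fig. 4A.
print(classify_strategy(57.4, 0.55))  # combined
```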

Sensory subexperiment.

To estimate ΔŶP without the interference of motor adaptation, 18 participants in experiment 1 performed an additional task that did not involve reaching movements. Instead, they used arrow keys on a keypad to move a white cursor from the upper right corner of the display until they felt it was sitting on top of their target finger. The final position of the cursor in relation to the target finger was recorded. Subjects did six proprioceptive estimates (ŷP) before adaptation and six ŷP after adaptation; any change in the median cursor position (ΔŷP) was interpreted to reflect sensory realignment of the proprioceptive estimate of target hand position (ΔŶP).

Task Measures of Experiment 2

In experiment 1, we constrained the situation so that vision had to be regarded as the correct modality, and proprioception as misaligned, to achieve success. In experiment 2, we removed this constraint by giving no endpoint visual feedback at the reaching fingertip (no explosions or dots) and no points were awarded. Thus, subjects never found out anything about the accuracy of their reaches. We asked the following two questions: 1) whether sensory realignment takes place without explicit error feedback and 2) whether weights and realignment operate in conjunction in this situation (i.e., higher visual weights are associated with greater realignment of proprioception). wV and P endpoint shift were calculated the same as in experiment 1. Unlike experiment 1, however, P endpoint shift was not likely affected by motor adaptation (see Appendix C for the reasons behind this assumption). We will therefore consider the P endpoint shift in experiment 2 (ΔŷP) to be an approximation of ΔŶP. Thus, for experiment 2, if ŷPα is the mean of the first four P endpoint y-coordinates in the adaptation block and ŷPβ is the mean of the last four P endpoint y-coordinates, then:

$$\Delta\hat{y}_P = \hat{y}_{P\beta} - \hat{y}_{P\alpha} \approx \Delta\hat{Y}_P \tag{8}$$

Statistical Analysis

To evaluate the relationships among weighting (wV), P endpoint shift (ΔŷP), and success in experiment 1, we calculated the correlation between wV in late adaptation and P endpoint shift, between P endpoint shift and success, and between wV in late adaptation and success. We also calculated a stepwise multiple regression with wV and P endpoint shift as the independent variables and success as the dependent variable.

To determine if an individual subject's P endpoint shift was significant, we used the Mann-Whitney U-test (rank sum test) to compare the y-coordinates of the first and last four P reach endpoints in the adaptation block. For group data, we used t-tests to determine if P endpoint shifts in experiments 1 and 2 were different from zero. Two-sided values are reported for all hypothesis tests. In the sensory subexperiment, we also used a t-test to determine if group ΔŷP was significantly different from zero. Finally, we tested whether weighting was related to proprioceptive realignment by calculating correlation coefficients between wV and ΔŷP for experiments 1 and 2.
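For a single subject, the P endpoint shift and its rank sum test can be sketched with the standard library alone. The code below is an illustration under stated assumptions, not the authors' analysis code: it computes the shift as the mean of the last four minus the mean of the first four y-coordinates, and it obtains an exact two-sided p value by enumerating every way of splitting the eight pooled values into two groups of four. The function names and the endpoint values are hypothetical.

```python
from itertools import combinations
from statistics import mean

def endpoint_shift(first, last):
    """Mean of the last endpoints minus mean of the first endpoints (mm)."""
    return mean(last) - mean(first)

def u_statistic(x, y):
    """Mann-Whitney U: number of (x, y) pairs with x > y; ties count 0.5."""
    return sum((a > b) + 0.5 * (a == b) for a in x for b in y)

def exact_rank_sum_p(first, last):
    """Exact two-sided p value by enumerating all relabelings of the pooled data."""
    pooled = list(first) + list(last)
    n1, n = len(first), len(pooled)
    center = n1 * (n - n1) / 2               # expected U under the null
    observed = abs(u_statistic(first, last) - center)
    extreme = total = 0
    for idx in combinations(range(n), n1):
        chosen = set(idx)
        x = [pooled[i] for i in idx]
        y = [pooled[i] for i in range(n) if i not in chosen]
        total += 1
        if abs(u_statistic(x, y) - center) >= observed - 1e-12:
            extreme += 1
    return extreme / total

first_four = [1.0, 2.0, 3.0, 4.0]      # hypothetical early P endpoints (mm)
last_four = [10.0, 11.0, 12.0, 13.0]   # hypothetical late P endpoints (mm)
shift = endpoint_shift(first_four, last_four)
p_value = exact_rank_sum_p(first_four, last_four)
print(shift, round(p_value, 4))
```

With four values per group there are only 70 possible splits, so even complete separation of the early and late endpoints gives an exact two-sided p of 2/70 (about 0.029); this is the smallest value such a comparison can produce when computed exactly.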

RESULTS

Experiment 1

Success (i.e., hitting the visual target and earning points) could theoretically be achieved by relying heavily on the visual estimate of target hand position (weighting strategy), shifting the proprioceptive estimate to more closely match the visual estimate of target hand position (realignment strategy), or by adapting the motor command so that the reaching hand moved further than the sensory estimate of target position. Use of a weighting strategy would be apparent in the wV values we measured, but proprioceptive realignment and motor adaptation would each lead to a P endpoint shift (motor adaptation would lead to a shift in reach endpoints for all target types). We will first describe the variety of behaviors exhibited by subjects in experiment 1 with regard to weight of vision and P endpoint shift and then, using the sensory subexperiment, consider the proprioceptive versus motor components of the P endpoint shift.

Subjects can be successful using weighting or P endpoint shift strategies.

We wanted to know if subjects who were highly successful at the task (i.e., had reach endpoints near the V component of VP targets; see materials and methods) primarily used a weighting strategy, P endpoint strategy, or a combination of both. Subjects used weighting and P endpoint strategies in a variety of ways, so we divided subjects into four groups according to their use of the strategies (see materials and methods). We found that 28% of subjects used a combined strategy (Fig. 4, A and E) where they shifted P reach endpoints at least 50% (red dashed line in Fig. 4A, i) and also weighted vision over 50% in late adaptation (Fig. 4A, ii). Ten percent of subjects used a P endpoint strategy only (Fig. 4, B and E). The largest percentage, 44% of subjects, used a weighting strategy only (Fig. 4, C and E). Finally, 18% of subjects made insufficient changes in either parameter (Fig. 4, D and E) and were not successful.
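The grouping rule can be sketched in Python as follows. The thresholds are those given in the Fig. 4 legend (P endpoint shift ≥ 35 mm, i.e., 50% of the 70-mm maximum offset, and late adaptation wV ≥ 0.5), and the four example values are the subjects shown in Fig. 4, A–D. The function and variable names are hypothetical.

```python
MAX_OFFSET_MM = 70.0                      # maximum V-P offset in the adaptation block
SHIFT_THRESHOLD_MM = 0.5 * MAX_OFFSET_MM  # 35 mm, i.e., a 50% shift
WV_THRESHOLD = 0.5                        # late adaptation weight of vision

def classify_strategy(p_shift_mm, late_wv):
    """Assign a subject to one of the four strategy groups."""
    uses_shift = p_shift_mm >= SHIFT_THRESHOLD_MM
    uses_weighting = late_wv >= WV_THRESHOLD
    if uses_shift and uses_weighting:
        return "combined"
    if uses_shift:
        return "P endpoint only"
    if uses_weighting:
        return "weighting only"
    return "neither"

# (P endpoint shift in mm, late adaptation wV) for the Fig. 4 example subjects.
examples = {
    "A": (57.4, 0.55),
    "B": (38.2, 0.29),
    "C": (-7.3, 0.94),
    "D": (9.9, 0.30),
}
labels = {name: classify_strategy(*values) for name, values in examples.items()}
```

Under these thresholds the four example subjects fall into the combined, P endpoint only, weighting only, and neither groups, respectively.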

Fig. 4.

Individuals used different strategies in experiment 1. When visual-proprioceptive alignment is perturbed and vision is constrained to be the “good” modality, subjects compensate by weighting vision heavily, shifting P reach endpoints in the direction of the perturbation, or both. A: subject who used a combined weighting and P endpoint strategy. i, Targets and reach endpoints in the adaptation block. V targets (solid gray line) were gradually displaced from P targets (dashed gray line) in the positive y direction. This displacement applied to both V and VP targets and reached a maximum of 70 mm by the end of the 84 reaches. V endpoints (solid blue line) tended to follow V targets. Because endpoint visual feedback was given only for VP targets, VP endpoints (dashed purple line) approximate the subject's success: if VP endpoints closely followed the V component of the target (solid gray line), subjects were scoring more points. This subject was 79% “successful.” A change in the position of P endpoints (ΔŷP = 57.4 mm, dotted red line) suggests that proprioceptive realignment may have taken place, but motor adaptation of the reaching hand could also have contributed. ii, wV in the adaptation block. A separate wV value was calculated for each VP reach. The line represents the best fit but was not used in any calculations. This subject relied more on vision by the end of the adaptation block (wV = 0.55). iii and iv, Example wV calculations. wV was calculated by comparing the mean of three VP endpoints (purple circle) to the means of the four V endpoints and four P endpoints (blue and red circles) occurring closest in time. Two-dimensional distances were used. P targets were at the origin. iii, It is apparent that early in adaptation (fourth wV is illustrated in this case), VP endpoints are closer to the P estimate than the V estimate, reflecting a high weight of proprioception and low weight of vision. iv, The situation was reversed later in adaptation (second-last wV is illustrated in this case). B: subject who used the P endpoint strategy alone. i, P endpoints shifted substantially during adaptation (38.2 mm). Success was 66%. ii, However, vision was down-weighted, and the subject relied more on proprioception by late adaptation (wV = 0.29). C: subject who used the weighting strategy alone. i, No P endpoint shift in the direction of the misalignment occurred (−7.3 mm, not significantly different from zero; P > 0.4 by rank sum test). Nonetheless, success was 79%, because vision was weighted heavily throughout (late adaptation wV = 0.94; ii). D: subject who did not use either strategy. i and ii, Little P endpoint shift took place (9.9 mm; i), and the subject did not rely heavily on vision (late adaptation wV = 0.30; ii). This subject had limited success (44%). E: group data (n = 39 subjects). For the purpose of dividing subjects into categories, we counted P endpoint shift ≥ 35 mm as using the P endpoint strategy and late adaptation wV ≥ 0.5 as using the weighting strategy.
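The wV comparison described in panels iii and iv can be sketched in Python. The legend states which endpoint means are compared and that two-dimensional distances are used, but the exact formula is given in materials and methods; the ratio below (P-to-VP distance divided by the sum of the P-to-VP and V-to-VP distances) is an assumed form chosen to be consistent with that description. The function names and endpoint coordinates are hypothetical.

```python
from math import dist
from statistics import fmean

def mean_point(points):
    """Mean (x, y) position of a list of reach endpoints."""
    xs, ys = zip(*points)
    return (fmean(xs), fmean(ys))

def weight_of_vision(vp_endpoints, v_endpoints, p_endpoints):
    """Assumed form: wV = d(P, VP) / (d(P, VP) + d(V, VP)), using 2-D distances."""
    vp = mean_point(vp_endpoints)
    v = mean_point(v_endpoints)
    p = mean_point(p_endpoints)
    d_p = dist(p, vp)  # VP endpoints far from the P estimate imply reliance on vision
    d_v = dist(v, vp)  # VP endpoints far from the V estimate imply reliance on proprioception
    return d_p / (d_p + d_v)

# Hypothetical endpoints (mm), with the P target at the origin.
vp_reaches = [(0.0, 9.0), (1.0, 10.0), (-1.0, 11.0)]             # mean (0, 10)
v_reaches = [(-2.0, 38.0), (2.0, 42.0), (1.0, 41.0), (-1.0, 39.0)]  # mean (0, 40)
p_reaches = [(-1.0, 1.0), (1.0, -1.0), (2.0, 0.0), (-2.0, 0.0)]     # mean (0, 0)
w_v = weight_of_vision(vp_reaches, v_reaches, p_reaches)
```

In this hypothetical case the mean VP endpoint lies 10 mm from the P estimate and 30 mm from the V estimate, giving wV = 0.25, i.e., greater reliance on proprioception, as in panel iii.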

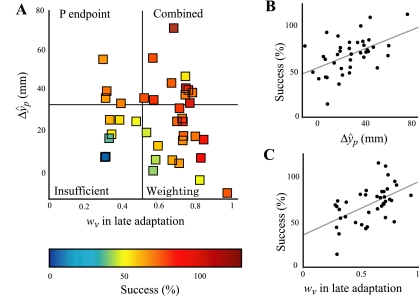

Figure 5 shows a scatterplot of ΔŷP versus wV, with color codes indicating our approximation of the success of each subject. The strategies fell in different quadrants as indicated. First, there was no clear relationship between ΔŷP and wV; individual subjects were scattered in all quadrants. Second, ΔŷP and high wV both led to success; together, they explained 62% of the success variance (stepwise multiple regression R2 = 0.62, F = 29.3, P < 0.0001). ΔŷP and weighting alone were each significantly correlated with our measure of success (Fig. 5B, r = 0.49, P = 0.0014 for ΔŷP; and Fig. 5C, r = 0.53, P = 0.0005 for wV). Weighting and P endpoint strategies thus appeared to operate independently in this task. Finally, using the combined strategy was not necessary for success; subjects who used only the P endpoint or weighting strategy were also successful (Fig. 5A). This implies a greater behavioral flexibility than if the most successful subjects had all used the same strategy.
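As a rough consistency check on these values, the variance explained by two predictors can be computed from the three pairwise correlations, and the F statistic from R2 and the sample size. The Python sketch below assumes an ordinary two-predictor least-squares regression, which may differ in detail from the stepwise procedure, and it works only from the rounded values reported here. The function names are hypothetical.

```python
def r_squared_from_correlations(r_y1, r_y2, r_12):
    """R^2 of y regressed on two predictors, from the pairwise correlations."""
    return (r_y1 ** 2 + r_y2 ** 2 - 2 * r_y1 * r_y2 * r_12) / (1 - r_12 ** 2)

def f_statistic(r_squared, n, k):
    """Overall F statistic for a regression with k predictors and n subjects."""
    return (r_squared / k) / ((1 - r_squared) / (n - k - 1))

# Reported values: r = 0.49 (P endpoint shift vs. success),
# r = 0.53 (wV vs. success), r = -0.15 (wV vs. P endpoint shift), n = 39.
r2_check = r_squared_from_correlations(0.49, 0.53, -0.15)
f_check = f_statistic(0.62, n=39, k=2)
```

The rounded correlations give R2 of about 0.61, and R2 = 0.62 with 39 subjects and 2 predictors gives F of about 29.4, both close to the reported values of 0.62 and 29.3. Because the two predictors are nearly uncorrelated, their individual contributions to the explained variance are almost additive.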

Fig. 5.

wV and ΔŷP are each related to success in experiment 1. A: each square represents a single subject. Color reflects success. Subjects who rely heavily on vision are not more or less inclined to shift P reach endpoints in the direction of the perturbation (no significant correlation between wV and ΔŷP: r = −0.15, P = 0.37, n = 39 subjects). Subjects could succeed at the task by shifting P endpoints in the direction of the misalignment (ΔŷP, reflecting some combination of proprioceptive realignment and motor adaptation), weighting vision high, or both. Together, wV and ΔŷP account for 62% of the variance in success (stepwise multiple regression R2 = 0.62, F = 29.3, P < 0.0001). B: ΔŷP is related to success for subjects in experiment 1 (r = 0.49, P = 0.0014). C: wV is related to success for subjects in experiment 1 (r = 0.53, P = 0.0005). Overall, these results suggest P endpoint and weighting strategies operate independently in this task and, furthermore, that either strategy can lead to success at hitting targets. This implies greater behavioral flexibility than if only one strategy led to success.

P endpoint shifts have both sensory and motor components.

The shift in P reach endpoints could reflect proprioceptive realignment of the target hand (ΔŶP), motor adaptation of the reaching hand, or both. Motor adaptation could be driven by errors on VP targets, which would tend to indicate undershoot errors due to the direction of V target displacement. If the brain attributes these errors to not reaching far enough, it may adjust the motor command so that the reaching arm will reach farther.

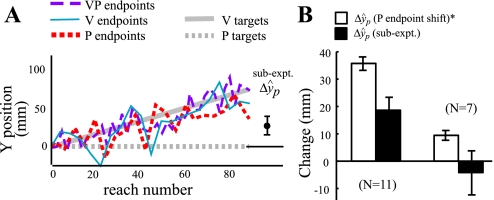

In a subset of experiment 1, we determined how much of the P endpoint shift reflects true proprioceptive realignment of the target hand versus motor adaptation affecting the reaching arm (n = 18). Subjects estimated P target position before and after the adaptation block by moving a cursor with a keypad rather than reaching to the target. Figure 6A shows an example subject for this experiment, who displayed a large shift of P endpoints (red dotted line), and the keypad estimate was more than half of that (filled circle = ΔŷP). The group data from the subset of subjects that showed a significant shift in P endpoints (n = 11) demonstrated that half of the P endpoint shift was likely due to proprioceptive realignment of the target hand (Fig. 6B). There were seven subjects who did not show a shift in P endpoints, and their sensory component (i.e., subexperiment ΔŷP) was not significantly different from zero (t6 = −0.52, P > 0.6; Fig. 6B), demonstrating that subexperiment ΔŷP is not simply large no matter what the P endpoint shift is.

Fig. 6.

Experiment 1 sensory subexperiment. A: sensory subexperiment example subject. Before and after the adaptation block, 18 subjects estimated the location of the P target by pressing buttons on a keypad rather than reaching to it. The change in these estimates (filled circle, ΔŷP) reflects sensory realignment of the proprioceptive estimate of target hand position (ΔŶP) rather than adaptation of the motor command to the reaching hand. B: sensory subexperiment group data. For the 11 subjects with a significant P endpoint shift (first two bars), mean subexperiment ΔŷP (solid bars) was 18.6 mm, or 52% of the P endpoint shift (open bars), which includes motor adaptation. This difference was significant (P = 0.002 by Wilcoxon rank sum test), suggesting that the P endpoint shift we measured in experiment 1 had approximately equal sensory and motor components. To verify that subexperiment ΔŷP is not the same no matter what the P endpoint shift is, we also looked at the seven subjects whose P endpoint change was not significant (second two bars) and found that subexperiment ΔŷP was not significantly different from zero or from P endpoint change. Furthermore, we found a significant correlation between P endpoint shift ΔŷP and subexperiment ΔŷP for these 18 subjects (correlation r = 0.54, P = 0.021, slope of the fit line: 0.77), supporting the idea that the P endpoint shift in experiment 1 comprised both sensory and motor components across subjects. Error bars represent SEs. *Includes a motor adaptation component in addition to sensory realignment.
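The sensory and motor split reported for the 11 subjects in B can be worked through with simple arithmetic. The Python sketch below assumes that the 52% figure is the ratio of the group means, which the legend does not state explicitly; if it is instead the mean of individual ratios, the implied totals would differ. The function name is hypothetical.

```python
def split_endpoint_shift(sensory_mm, sensory_fraction):
    """Return the implied total P endpoint shift and the motor remainder (mm)."""
    total_mm = sensory_mm / sensory_fraction
    motor_mm = total_mm - sensory_mm
    return total_mm, motor_mm

# Reported: mean subexperiment shift of 18.6 mm, or 52% of the P endpoint shift.
implied_total_mm, implied_motor_mm = split_endpoint_shift(18.6, 0.52)
```

Under this assumption the implied mean P endpoint shift is about 35.8 mm, leaving about 17.2 mm that would be attributed to motor adaptation of the reaching arm.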

Experiment 2

Proprioceptive realignment occurs without explicit error feedback.

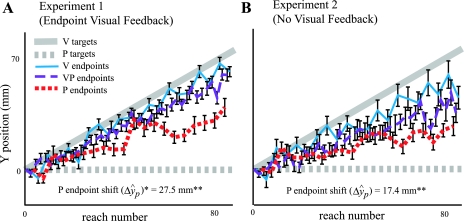

In experiment 1, we constrained the task so that vision had to be regarded as the correct modality, and proprioception as the misaligned modality, to achieve success. In experiment 2, we removed this constraint by giving no endpoint visual feedback at all. We first asked whether sensory realignment still occurs when there is no explicit error signal to drive the process. Figure 7A shows group data from subjects in experiment 1, who shifted their P endpoints 27.5 mm on average, which was significantly different from zero (t38 = 9.99, P < 0.001). Figure 7B shows that even without an explicit error signal, subjects in experiment 2 shifted their P endpoints 17.4 mm on average, which was also significantly different from zero (t18 = 5.28, P < 0.0001). This can be interpreted as a purely sensory shift (i.e., reflecting ΔŶP only) since there was no knowledge of results to drive motor adaptation (see appendix c and Howard and Templeton 1966). These results demonstrate that a misalignment between vision and proprioception is sufficient to drive sensory realignment; no explicit error signal is needed.

Fig. 7.

The proprioceptive estimate of target hand position is significantly realigned even without explicit error feedback. A: reaching endpoints averaged across subjects in experiment 1 (n = 39 subjects) with SEs. Subjects had an explicit error signal (endpoint visual feedback on VP reaches) to drive motor adaptation in addition to the VP misalignment to drive proprioceptive realignment. With both signals, P endpoint shift (ΔŷP, includes motor adaptation) was substantial. B: reaching endpoints averaged across subjects in experiment 2 (n = 19 subjects) with SEs. Even without an explicit error signal, significant shifting of P endpoints occurred (ΔŷP), which here reflects purely sensory realignment since there was no explicit error signal to drive motor adaptation (see appendixes). *Includes both motor adaptation and proprioceptive realignment. **Significantly different from zero.

Shift of P endpoints was larger in experiment 1 than in experiment 2 (t56 = 2.21, P < 0.04). This does not mean that subjects realign more in general with endpoint visual feedback (experiment 1) than without (experiment 2). Our measure of realignment includes proprioceptive realignment but not visual realignment (shifting the visual estimate to more closely match the proprioceptive estimate). Visual realignment is unlikely to be substantial in experiment 1, since task success depends on the visual estimate remaining close to the visual target. In experiment 2, however, visual realignment is likely to be substantial, especially since the visuoproprioceptive perturbation was in depth rather than azimuth [van Beers et al. (2002) found that vision adapted more than proprioception in this dimension].

Do sensory weighting and alignment operate together?

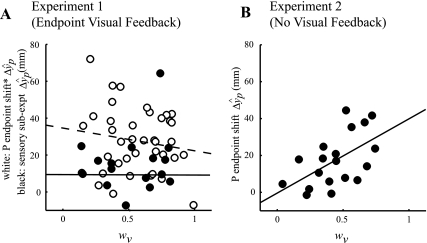

Does an individual's initial weight of vision predict how much they will realign? One hypothesis is that people with a high wV in the early part of the experiment (i.e., baseline) will regard vision as dominant and will realign proprioception to a greater extent. Yet, in experiment 1, we found no relationship between the initial wV and P endpoint shift (Fig. 8A, open circles). Furthermore, we found no relationship between wV and the sensory component of P endpoint shift from the sensory subexperiment (Fig. 8A, solid circles).

Fig. 8.

Sensory realignment can operate independently of sensory weights. A: in experiment 1, there was no relationship between P endpoint shift (ΔŷP, includes motor adaptation; open circles and dashed line) or proprioceptive realignment (ΔŷP from the sensory subexperiment; solid circles and solid line) and wV early in the baseline block, suggesting that sensory weighting and realignment are separable processes that can operate independently. B: in experiment 2, without endpoint visual feedback to necessitate that proprioception be regarded as the misaligned modality to succeed at the task, P endpoint shift (ΔŷP, motor adaptation component = 0, i.e., proprioceptive realignment) was strongly correlated with early baseline wV (correlation r = 0.55, P = 0.01), suggesting that weighting and realignment do operate in conjunction (high wV associated with large ΔŷP) in this situation. *Includes both motor adaptation and proprioceptive realignment.

Interestingly, we found that in experiment 2, without endpoint visual error feedback, the initial wV correlated with proprioceptive realignment (correlation r = 0.55, P = 0.01; Fig. 8B). This implies that sensory weighting and realignment can operate in conjunction in these circumstances. However, the relationship was nonsignificant for wV in late adaptation and realignment (correlation r = 0.27, P > 0.25). We also looked at whether the change in wV during adaptation is related to proprioceptive realignment. We (Block and Bastian 2010) have previously found that subjects who start with a very low wV tend to up-weight vision over time and subjects who start with a very high wV tend to down-weight vision over time. While we observed a similar trend in the subjects of both experiments in the present study, there was no relationship of P endpoint shift to the change in wV during adaptation in either experiment 1 (correlation r = −0.08, P = 0.64) or experiment 2 (r = −0.15, P = 0.51). This suggests that sensory weighting and realignment operated independently in both experiments: although proprioceptive realignment was related to initial wV in experiment 2, wV fluctuated throughout the experiment, independently of proprioceptive realignment. Taken together, these results suggest that sensory weighting and realignment are separable processes that can operate independently (Fig. 2B).
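To illustrate how the significance of such correlations depends on sample size, a correlation coefficient can be converted to a t statistic with n − 2 degrees of freedom. The Python sketch below uses the two experiment 2 correlations reported above with n = 19 subjects; the critical value of 2.110 (two-sided, α = 0.05, 17 degrees of freedom) is taken from standard t tables, and the function name is hypothetical.

```python
from math import sqrt

def t_from_r(r, n):
    """t statistic (n - 2 degrees of freedom) for a Pearson correlation r."""
    return r * sqrt(n - 2) / sqrt(1 - r * r)

N_EXPERIMENT_2 = 19        # subjects in experiment 2
T_CRITICAL_DF17 = 2.110    # two-sided, alpha = 0.05, from standard tables

t_initial_wv = t_from_r(0.55, N_EXPERIMENT_2)  # initial wV vs. realignment
t_late_wv = t_from_r(0.27, N_EXPERIMENT_2)     # late adaptation wV vs. realignment
```

The correlation with initial wV gives t of about 2.72, which exceeds the critical value, whereas the correlation with late adaptation wV gives t of about 1.16, which does not.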

DISCUSSION

Here, we have shown that sensory weighting and realignment strategies may operate in conjunction in some circumstances, but they are biologically independent processes. This allows humans behavioral flexibility in compensating for sensory perturbations. Our experiments demonstrated two relationships between these processes: first, realignment can be determined by weights under some circumstances (i.e., each modality realigns in proportion to its relative weight); second, in other circumstances, the two processes can operate independently (i.e., realignment can occur independently of weights).

The Relationship Between Weights and Realignment Has Been Uncertain

The variance associated with a given sensory modality depends in part on the situation and can change rapidly (e.g., visual variance increases when lighting is decreased). By manipulating variance experimentally, a relationship between variance and sensory weights has been demonstrated in a variety of human behaviors (e.g., Ernst and Banks 2002; Ghahramani et al. 1997; van Beers et al. 1999). Likewise, several studies have found a relationship between variance and sensory realignment. The spatial pattern of auditory adaptation is affected by auditory localization variance when estimating the position of a misaligned visuoauditory target (Ghahramani et al. 1997). Vision and proprioception have been found to realign in proportion to their relative variances in each dimension, with vision adapting more in depth and proprioception in azimuth (van Beers et al. 2002).

Given that weights and realignment are each related to variance, it seems fair to predict they are related to each other, i.e., the lower-weighted modality will realign more (Ghahramani et al. 1997; van Beers et al. 2002). Indeed, the idea of realignment depending on weights is not a new one (Welch and Warren 1980). It has been proposed that weighting and realignment are aspects of the same phenomenon occurring on different time scales, with vision being up-weighted immediately when prisms are placed in front of the eyes and proprioception gradually realigning (e.g., Hay et al. 1965; Kelso et al. 1975). Hay et al. (1965) suggested that visual capture of proprioception serves as the basis of proprioceptive realignment, and Kelso et al. (1975) proposed that sensory weights determine the extent to which each modality realigns.

The other possibility considered in the present study is that sensory realignment is not determined by weights, i.e., the amount of realignment a sensory modality undergoes is not simply a function of its relative weight. Although weights and realignment have both been found to be related to variance, variance is not necessarily the only determinant of either process. It is known that sensory weights can be influenced by other factors such as attention (Kelso et al. 1975; Warren and Schmitt 1978) and conscious effort (Block and Bastian 2010). Attention has also been found to influence sensory realignment: the unattended modality adapts more than the attended modality (Kelso et al. 1975). Furthermore, it has been suggested that variance determines the rate, not the extent, of recalibration (Burge et al. 2008) and that it is avoidance of bias, rather than variance, that determines sensory realignment (Di Luca et al. 2009). The relationship between visuoproprioceptive weights and realignment has not previously been studied directly, as weights have not been experimentally measured independently of realignment during a visuoproprioceptive misalignment task.

There Are Situations Where Realignment Does Not Depend on Weights

In experiment 1, success on VP targets could be achieved by realigning the proprioceptive estimate of the target hand, adapting the motor command to the reaching hand (both of which would cause a shift in reach endpoints on P targets), or weighting the visual estimate of target hand position heavily. We evaluated subjects' success at hitting targets to determine whether one strategy led to greater success. We found that subjects used these strategies in varying combinations, and success was not associated with one strategy more than another. This suggests the brain has flexibility in how it compensates for a sensory misalignment. However, the weighting strategy was used most frequently. This may be because changing sensory weights is a faster process or perhaps because the task was set up to reward reliance on vision, a modality that subjects are accustomed to relying on consciously.

It may seem counterintuitive that so many subjects dealt with a visuoproprioceptive misalignment primarily by using a weighting strategy. For one thing, a weighting strategy is only useful when the target is multimodal; when these subjects reached to unimodal targets, their behavior was similar to baseline, which may be disadvantageous in real life. However, in the present study, subjects never received feedback on their performance with unimodal targets, so there was no negative consequence for the subject. While much research has focused on sensory realignment, there is some evidence that changes in weighting may play an important role when compensating for sensory misalignments (Smeets et al. 2006). Indeed, a weighting strategy may be advantageous if, e.g., the misalignment is going to be very brief, such as when reaching into a tub of water to retrieve a dropped object. Simply giving more weight to vision may be faster than spatially recalibrating proprioception.

Why did some subjects use other strategies? One reason may be that we made the various strategies equally valid in this task: there was no predetermined advantage to using a weighting strategy over a realignment strategy. A second reason may be due to subjects' previous multisensory experiences. In real life, there are some circumstances where realignment is more advantageous and others where a weighting strategy is a better choice. Individual subject idiosyncrasies may depend on their past experience.

In experiment 1, we also found no relationship between weight of vision and proprioceptive realignment or motor adaptation. This does not support the conclusion that realignment is determined by weights; in that case, the lower-weighted modality should realign more (Ghahramani et al. 1997), leading to a positive correlation between weight of vision and realignment of proprioception. Our results are also inconsistent with a negative correlation between the two processes, i.e., if the brain can resolve the misalignment with a weighting strategy, realignment is less likely to occur (Welch and Warren 1980).

In contrast, experiment 2 showed that weight of vision could be positively correlated with realignment of proprioception: subjects who began the experiment with a high weight of vision tended to realign proprioception more than subjects who began with a low weight of vision. In this experiment, there was no visual feedback and no explicit definition of success, so subjects were theoretically free to weight vision high or low and to realign proprioception or not. The only possible error signal was the gradual offset of the visual target from the proprioceptive target.

Weighting and Realignment Are Functionally Independent Processes

Because we were able to create a situation in experiment 1 where weight and realignment were not related at any time point, we conclude that realignment and weighting are independent processes that can be used, alone or in combination, to compensate for a visuoproprioceptive misalignment. We have therefore failed to support the view that weights determine realignment (Fig. 2A). Instead, we found that, although weighting and realignment are each affected by variance in the available sensory modalities, they are biologically distinct, independently controlled processes (Fig. 2B). It is possible that a top-down cognitive phenomenon such as attention plays a role when these processes do not operate in conjunction as in experiment 1; although subjects were not aware of the misalignment, the constant emphasis on a visual goal (making the visual component of VP targets “explode”) could draw attention to, and focus independently controlled compensatory processes on, vision.

In summary, our results suggest that visuoproprioceptive weighting and realignment are functionally independent processes that give humans behavioral flexibility in compensating for sensory perturbations. This has implications for our understanding of how multimodal sensory processing is organized in the brain. Since weighting and realignment processes can operate independently, it is possible they require different neural circuits. This could have important implications for specific neurological patient groups, i.e., brain damage that impairs one process may not affect the other. For example, sensory realignment may depend on the cerebellum, which has been implicated in adapting to prism offsets (Martin et al. 1996), whereas sensory weights may depend on the posterior parietal cortex, a multimodal area of the cortex thought to be important in sensory integration (Andersen et al. 1997) with reciprocal connections to the cerebellum. If this is the case, patients may be able to compensate for sensory misalignments by relying on the unimpaired process.

GRANTS

This work was supported by National Institutes of Health Grants R01-HD-040289 and 1-F31-NS-061547-01.

DISCLOSURES

No conflicts of interest, financial or otherwise, are declared by the author(s).

ACKNOWLEDGMENTS

The authors thank Christina Fuentes and Nasir Bhanpuri for helpful comments on the manuscript.

APPENDIX A: POSSIBLE EXTRANEOUS INFLUENCES ON OUR DETERMINATION OF WEIGHTING AND REALIGNMENT

Sensory Weights Were Not Affected by Previous Target Modality or Reaching Error

We wondered whether wV calculated for a given VP reach could be affected by the modality of the previous target, i.e., whether wV was higher on VP reaches that followed V reaches and lower on VP reaches that followed P reaches. To find out, we divided VP endpoints into those that occurred after a V reach and those that occurred after a P reach. For both sets, we did an alternate wV calculation using only one VP endpoint instead of the mean of three (see materials and methods). For each subject, we tested to see if the two populations of wV were significantly different (two-sample t-tests with n1 = n2 = 22 VP reaches/subject). We found that wV was significantly higher (∼0.1 greater) after V reaches than after P reaches for only 4 of the total 53 subjects. Removing these subjects did not alter the pattern of results, so they were left in.

With regard to experiment 1 only, there is a question of whether the errors subjects saw on VP reaches (endpoint visual feedback in relation to the V component of the target) substantially influenced the subsequent V or P reach. The random changes in start position, target position, and fixation cross position between every reach were intended to minimize this possibility. However, to make sure, we divided VP reaches into those that occurred before a V reach and those that occurred before a P reach. We used the VP endpoint error seen by subjects (distance from reach endpoint to the V component of the target) and calculated the correlation with the reach error on the subsequent V or P reach (actual reach endpoint, not seen by subjects). We calculated separate correlations for errors in x and y. Of the 39 experiment 1 subjects, 5 subjects had P endpoints that were significantly influenced by the preceding VP error (1 in x and 4 in y). A sixth subject had V endpoints that were significantly influenced by the preceding VP error in the y dimension. Results were not substantially altered by the removal of these six subjects. We therefore do not think the VP reaching error subjects observed was an important influence on subsequent reaching endpoints to unimodal targets or, by extension, on our calculation of weighting and realignment.

The Presence of Endpoint Visual Feedback on VP Reaches in Experiment 1 Did Not Improve the Accuracy on V and P Reaches

Because our weighting calculation depends on V and P reach endpoints being spatially separated, we wondered whether the presence of feedback on VP reaches caused V and P reach endpoints to be more accurate (and less spatially separated), which could make our calculated wV noisier. Thus, if reaches to V and P targets were more accurate in experiment 1, that could account for the lack of correlation between P endpoint shift and early baseline wV. However, reach endpoint accuracy for V and P targets in early baseline was not significantly different in experiment 1 versus experiment 2 (P = 0.31 for V targets and 0.84 for P targets). It therefore does not appear that the presence of visual endpoint feedback in experiment 1 made our weight calculation noisier.

P Endpoint Shift Was Not Influenced by Fixation Cross or Remembered V Target Position or Distance

Since the fixation cross, on average, was shifting position in the positive y direction along with the V target, any influence of fixation cross position on subjects' reaching endpoints could cause a shift in P endpoints independent of sensory realignment of the proprioceptive estimate of target hand position. To rule out this possibility, we calculated the regression coefficient between fixation cross x-coordinates and P endpoint x-coordinates (fixation cross R2) as a way to estimate the influence of fixation cross position on P reaching endpoints. We considered only the x-coordinates because the y-coordinates should be related whether or not P endpoints are influenced by the fixation cross, since both shift in the positive y direction during the adaptation block. We discarded the data of the nine subjects with the highest fixation cross R2. Mean fixation cross R2 was 0.07 for the remaining 39 subjects in experiment 1 and 0.08 for the 19 subjects in experiment 2. We also checked to see if fixation cross R2 was correlated with the P endpoint shift for the remaining subjects (i.e., do subjects with higher fixation cross R2 have larger P endpoint shifts) and found no correlation (r = −0.169 and P > 0.30 for experiment 1 and r = 0.38 and P > 0.38 for experiment 2), indicating that the P endpoint shift was not an artifact of the shifting fixation cross zone.
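A per-subject measure of this kind can be sketched in Python as the squared correlation between two sets of x-coordinates, which for a single predictor equals the R2 of the linear regression. The function name and the coordinate values are hypothetical, and the sketch is not the analysis code used in the study.

```python
def r_squared(x, y):
    """Squared Pearson correlation, i.e., R^2 of a one-predictor linear fit."""
    n = len(x)
    mean_x = sum(x) / n
    mean_y = sum(y) / n
    sxx = sum((a - mean_x) ** 2 for a in x)
    syy = sum((b - mean_y) ** 2 for b in y)
    sxy = sum((a - mean_x) * (b - mean_y) for a, b in zip(x, y))
    return (sxy * sxy) / (sxx * syy)

# Hypothetical fixation cross x-coordinates (mm) across four P reaches.
fixation_x = [1.0, 2.0, 3.0, 4.0]

# Hypothetical P endpoint x-coordinates with no linear dependence on fixation.
unrelated_r2 = r_squared(fixation_x, [1.0, -1.0, -1.0, 1.0])

# Hypothetical P endpoint x-coordinates that follow the fixation cross exactly.
coupled_r2 = r_squared(fixation_x, [2.0 * v + 5.0 for v in fixation_x])
```

Values near zero, as in the first hypothetical case, indicate that fixation cross position explains little of the variation in P endpoint position; values near one, as in the second, would indicate that endpoints track the fixation cross.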

A second possible explanation for the P endpoint shift is that on reaches to P targets, subjects were simply reaching the remembered distance to the most recent (shifted) V target. We do not think this could be an influence, however, because 1) no subject was consciously aware of any shift in V targets in the direction of the misalignment and 2) the arrangement of start and target positions was such that the direction and distance between start position and target was highly variable throughout the experiment and did not change significantly from early to late adaptation (P > 0.1 by rank sum test).

A third explanation might be that on P reaches, subjects were pointing to the remembered location of the most recent V target (or V component of VP target), causing P endpoints to shift along with the V target. We thought this possibility unlikely because of the random changes in target position, starting position, and fixation position between every reach. However, to be certain, we examined the relationship between P endpoint x-coordinates and the x-coordinate of the V component of the VP target immediately preceding throughout baseline and adaptation blocks (recall that P targets are always preceded by VP targets). The mean regression coefficient for this relationship (with P endpoint x-coordinates as the dependent variable) was very small in both experiments (0.046 in experiment 1 and 0.042 in experiment 2), and no subject had a significant positive correlation between the two variables, signifying that subjects did not point to the remembered location of V targets on P reaches.

APPENDIX B: SUBJECTS WHO REPEATED EXPERIMENT 2 SHOWED SOME CONSISTENCY IN wV BUT NOT IN ΔŷP (CONSISTENCY STUDY)

We wanted to find out how consistent subjects' behavior would be if tested a second time. In experiment 1, we found that when subjects received endpoint visual feedback on reaches to misaligned VP targets, they used the weighting strategy and the P endpoint strategy in varying combinations, and wV late in the adaptation block was not related to either P endpoint shift ΔŷP or sensory subexperiment ΔŷP (Figs. 5A and 8A). We wondered if the apparent idiosyncrasy was due to some influence of subjects' past sensory experience or development (in which case we would expect subjects to behave similarly if tested again) or if, given that the two strategies yielded equal probability of success (hitting the target), the choice of strategy was essentially random (in which case we would expect no relationship between subjects' behavior when tested on two separate occasions).

Because of the explicit visual endpoint feedback in experiment 1, we were concerned that subjects repeating that experiment might be more likely to notice the manipulation or that learning in the first session might affect performance in the second session. We therefore recruited eight new subjects to perform experiment 2 (no endpoint visual feedback) two times each. The two experimental sessions were at least 1 day apart. There was no significant correlation between ΔŷP in the first and second sessions (r = 0.39, P = 0.34), but there was a trend for late adaptation wV in the two sessions to be correlated (r = 0.69, P = 0.06).

This result supports the idea that wV, at least, is not determined randomly but may rather be somewhat idiosyncratic. We could speculate that individuals who have spent more time relying on their proprioceptive sense, such as musicians or athletes, may tend to rely less heavily on vision than other subjects, but such questions are beyond the scope of this study. Interestingly, we did not find a similar correlation when looking at ΔŷP. This supports our conclusion that ΔŷP is not determined by wV and suggests that ΔŷP is not idiosyncratic.
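As a rough plausibility check on the between-session correlations reported above, the sketch below converts a correlation coefficient and sample size into a two-tailed P value. It assumes a Pearson correlation tested with a t statistic on n - 2 degrees of freedom and n = 8 subjects; the source does not state which correlation test was used, so this is an assumption, and the function names are illustrative.

```python
import math

def t_pdf(x, df):
    """Probability density of Student's t distribution."""
    c = math.gamma((df + 1) / 2) / (math.sqrt(df * math.pi) * math.gamma(df / 2))
    return c * (1 + x * x / df) ** (-(df + 1) / 2)

def correlation_p_value(r, n, steps=2000):
    """Return (t, two-tailed P) for a Pearson r from n paired observations."""
    if abs(r) >= 1:
        return math.inf, 0.0
    df = n - 2
    t = abs(r) * math.sqrt(df / (1 - r * r))
    # Simpson's rule (steps must be even) for the density's area between 0 and t.
    h = t / steps
    total = t_pdf(0.0, df) + t_pdf(t, df)
    for i in range(1, steps):
        total += (4 if i % 2 else 2) * t_pdf(i * h, df)
    area = total * h / 3
    return t, 1 - 2 * area

t_w, p_w = correlation_p_value(0.69, 8)   # late-adaptation wV across sessions
t_d, p_d = correlation_p_value(0.39, 8)   # proprioceptive shift across sessions
print(f"r = 0.69: t = {t_w:.2f}, P = {p_w:.2f}")
print(f"r = 0.39: t = {t_d:.2f}, P = {p_d:.2f}")
```

Under these assumptions the P values round to 0.06 and 0.34, in line with the values reported for the two correlations.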

APPENDIX C: REASONS FOR THE ASSUMPTION THAT MOTOR ADAPTATION DID NOT PLAY A ROLE IN EXPERIMENT 2

Our interpretation of the results of experiment 2 relies on the assumption that without explicit error feedback, motor adaptation could not occur, and, therefore, any P endpoint shift can be considered a purely sensory adaptation, i.e., ΔŶP. In other words, if a series of reaches is performed and the brain receives no information to suggest that the motor command to the reaching arm was systematically wrong, then there should be no systematic change in the motor command (i.e., no motor adaptation). This assumption is based on the idea that some knowledge of the results of movement is required for sensorimotor adaptation to occur (e.g., Templeton et al. 1966; Howard and Templeton 1966). In experiment 2, subjects had no knowledge of the result of their reaches (no feedback dots, no target explosions, and no points awarded), so we feel our assumption of no motor adaptation is reasonable.

In addition, some subjects in experiment 2 performed the sensory subexperiment (see materials and methods) in which subjects used a keypad to align a visual cursor with their felt target finger position. This was done before and after the adaptation block, with the change representing a purely sensory rather than motor adaptation since no reaching was involved. We expected that if motor adaptation played a role in P endpoint shift for experiment 2, then P endpoint shift would be substantially larger than the subexperiment proprioceptive realignment, as is the case for experiment 1 (Fig. 6B, first two bars: 35.7 vs. 18.6 mm, respectively; P = 0.002 by Wilcoxon rank sum test). However, for the five experiment 2 subjects with the largest P endpoint shift (mean: 32.1 mm), the mean change in the keypad estimate was 27.3 mm, which was not significantly different (P = 0.7 by Wilcoxon rank sum test). Thus, we found no evidence that motor adaptation played a role in experiment 2.
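For readers unfamiliar with the rank sum comparison used above, the following is a minimal sketch of an exact two-sided Wilcoxon rank sum test for small samples. It enumerates every way of splitting the pooled observations, which is feasible for five values per group. The function names and the toy numbers are hypothetical and are not the study's data; the source does not say whether an exact or approximate P value was computed.

```python
from itertools import combinations

def midranks(values):
    """Rank the values from 1..N, giving tied values their average rank."""
    order = sorted(range(len(values)), key=lambda i: values[i])
    ranks = [0.0] * len(values)
    i = 0
    while i < len(order):
        j = i
        while j + 1 < len(order) and values[order[j + 1]] == values[order[i]]:
            j += 1
        average = (i + j) / 2 + 1
        for k in range(i, j + 1):
            ranks[order[k]] = average
        i = j + 1
    return ranks

def exact_rank_sum_p(a, b):
    """Two-sided exact P value for the rank sum of sample a versus sample b."""
    ranks = midranks(list(a) + list(b))
    n, total_n = len(a), len(ranks)
    expected = n * (total_n + 1) / 2            # mean rank sum under the null
    observed = abs(sum(ranks[:n]) - expected)
    extreme = splits = 0
    for idx in combinations(range(total_n), n):
        splits += 1
        if abs(sum(ranks[i] for i in idx) - expected) >= observed - 1e-9:
            extreme += 1
    return extreme / splits

# Toy values only (arbitrary units), chosen to show the two extremes.
p_separated = exact_rank_sum_p([1, 2, 3, 4, 5], [6, 7, 8, 9, 10])
p_interleaved = exact_rank_sum_p([1, 4, 5, 8, 9], [2, 3, 6, 7, 10])
print(f"fully separated groups: P = {p_separated:.4f}")
print(f"interleaved groups:     P = {p_interleaved:.4f}")
```

Completely separated groups give the smallest possible P value for this sample size (2 of the 252 splits), whereas well-interleaved groups give a P value of 1.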

REFERENCES

- Adams WJ, Banks MS, van Ee R. Adaptation to three-dimensional distortions in human vision. Nat Neurosci 4: 1063–1064, 2001

- Andersen RA, Snyder LH, Bradley DC, Xing J. Multimodal representation of space in the posterior parietal cortex, and its use in planning movements. Annu Rev Neurosci 20: 303–330, 1997

- Block HJ, Bastian AJ. Sensory reweighting in targeted reaching: effects of conscious effort, error history, and target salience. J Neurophysiol 103: 206–217, 2010

- Burge J, Ernst MO, Banks MS. The statistical determinants of adaptation rate in human reaching. J Vis 8: 1–19, 2008

- Crowe A, Keessen W, Kuus W, van VR, Zegeling A. Proprioceptive accuracy in two dimensions. Percept Mot Skills 64: 831–846, 1987

- Di Luca M, Machulla TK, Ernst MO. Recalibration of multisensory simultaneity: cross-modal transfer coincides with a change in perceptual latency. J Vis 9: 1–16, 2009

- Ernst MO, Banks MS. Humans integrate visual and haptic information in a statistically optimal fashion. Nature 415: 429–433, 2002

- Foley JM, Held R. Visually directed pointing as a function of target distance, direction, and available cues. Percept Psychophys 12: 263–268, 1972

- Ghahramani Z, Wolpert DM, Jordan MI. Computational models for sensorimotor integration. In: Self-Organization, Computational Maps and Motor Control, edited by Morasso PG, Sanguineti V. Amsterdam: North-Holland, 1997, p. 117–147

- Haggard P, Newman C, Blundell J, Andrew H. The perceived position of the hand in space. Percept Psychophys 62: 363–377, 2000

- Hay JC, Pick HL, Jr, Ikeda K. Visual capture produced by prism spectacles. Psychon Sci 2: 215–216, 1965

- Howard IP, Templeton WB. Human Spatial Orientation. London: Wiley, 1966

- Kelso JA, Cook E, Olson ME, Epstein W. Allocation of attention and the locus of adaptation to displaced vision. J Exp Psychol Hum Percept Perform 1: 237–245, 1975

- Martin TA, Keating JG, Goodkin HP, Bastian AJ, Thach WT. Throwing while looking through prisms. I. Focal olivocerebellar lesions impair adaptation. Brain 119: 1183–1198, 1996

- Smeets JB, van den Dobbelsteen JJ, de Grave DD, van Beers RJ, Brenner E. Sensory integration does not lead to sensory calibration. Proc Natl Acad Sci USA 103: 18781–18786, 2006

- Templeton WB, Howard IP, Lowman AE. Passively generated adaptation to prismatic distortion. Percept Mot Skills 22: 140–142, 1966

- van Beers RJ, Sittig AC, Gon JJ. Integration of proprioceptive and visual position-information: an experimentally supported model. J Neurophysiol 81: 1355–1364, 1999

- van Beers RJ, Wolpert DM, Haggard P. When feeling is more important than seeing in sensorimotor adaptation. Curr Biol 12: 834–837, 2002

- Wann JP, Ibrahim SF. Does limb proprioception drift? Exp Brain Res 91: 162–166, 1992

- Warren DH, Schmitt TL. On the plasticity of visual-proprioceptive bias effects. J Exp Psychol Hum Percept Perform 4: 302–310, 1978

- Welch RB. Perceptual Modification. New York: Academic, 1978

- Welch RB, Warren DH. Immediate perceptual response to intersensory discrepancy. Psychol Bull 88: 638–667, 1980

- Welch RB, Widawski MH, Harrington J, Warren DH. An examination of the relationship between visual capture and prism adaptation. Percept Psychophys 25: 126–132, 1979