Abstract

Face processing relies on a distributed, patchy network of cortical regions in the temporal and frontal lobes that respond disproportionately to face stimuli, other cortical regions that are not even primarily visual (such as somatosensory cortex), and subcortical structures such as the amygdala. Higher-level face perception abilities, such as judging identity, emotion and trustworthiness, appear to rely on an intact face-processing network that includes the occipital face area (OFA), whereas lower-level face categorization abilities, such as discriminating faces from objects, can be achieved without OFA, perhaps via the direct connections to the fusiform face area (FFA) from several extrastriate cortical areas. Some lesion, transcranial magnetic stimulation (TMS) and functional magnetic resonance imaging (fMRI) findings argue against a strict feed-forward hierarchical model of face perception, in which the OFA is the principal and common source of input for other visual and non-visual cortical regions involved in face perception, including the FFA, face-selective superior temporal sulcus and somatosensory cortex. Instead, these findings point to a more interactive model in which higher-level face perception abilities depend on the interplay between several functionally and anatomically distinct neural regions. Furthermore, the nature of these interactions may depend on the particular demands of the task. We review the lesion and TMS literature on this topic and highlight the dynamic and distributed nature of face processing.

Keywords: faces, lesion studies, transcranial magnetic stimulation, fusiform face area

1. Introduction

Studies of people with brain damage have revealed a great deal about the functional architecture of face perception (e.g. [1]), and will continue to do so [2], providing an important complement to functional neuroimaging and electrophysiological studies. The majority of this work has examined the patterns of impaired and spared behavioural performance within and across individuals who have a variety of brain lesions (typically confirmed with structural brain imaging), allowing inferences to be drawn about the processes underlying those face perception abilities and the brain regions that implement them (e.g. [3]).

Cognitive psychology is replete with examples of how behavioural evidence alone, even from people without brain damage, can reveal useful insights about the functional architecture of face perception (e.g. [1]). Localization of these processes to specific regions of the undamaged brain was made possible by the introduction of functional neuroimaging techniques, particularly functional magnetic resonance imaging (fMRI) and positron emission tomography (PET), and determination of the timings of these different processes in different parts of the undamaged brain was made possible by the introduction of electroencephalography (EEG) and magnetoencephalography (MEG). The important contribution of these imaging techniques to our understanding of face perception has been widely documented elsewhere (e.g. [4–6]). Here, we discuss how recent research in cognitive neuroscience is providing a richer picture of face processing than that hitherto provided by ‘simple’ dissociations in task performance that result from brain damage and by enhanced responses to faces in specific regions of the undamaged brain. We show how the findings from such lesion and imaging studies are being complemented, refined, extended and, in some cases overturned, by findings from studies using transcranial magnetic stimulation (TMS) or new methods for analysing fMRI data, and from functional imaging studies of people with brain damage, as well as from studies of face perception in monkeys that combine high-definition fMRI with single-cell recordings. We argue that face perception is underpinned by a neural system that is more interactive and flexible than is typically captured by existing models of face perception.

2. Models of face processing and its neural substrates

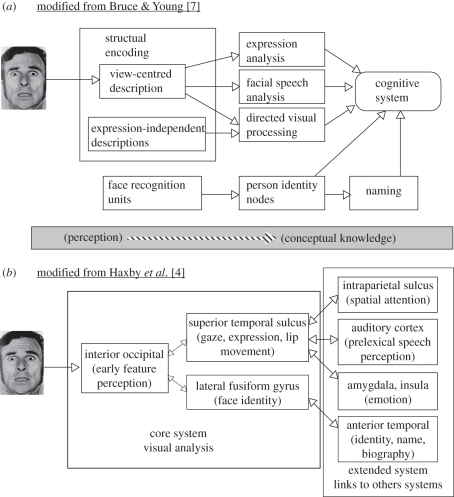

Early models of face perception, exemplified by Bruce & Young's [7] influential model (see figure 1a), featured distinct processing routes for the perception and identification of different person characteristics from the face, notably identity, emotion and facial speech. The model focused principally on identity processing. Thus, emotional expression processing, for example, was represented in the model by a single box; future work went on to unpack that box (for reviews see, e.g. [8,9]). A central feature of Bruce & Young's model was the idea that certain component processes of face perception are functionally independent of each other, or more specifically, that the processing of one type of social information from faces, such as identity or sex, is not influenced by the processing of a different type of social information, such as emotional expression. This feature of early models of face perception was based on several sources of evidence, particularly dissociations in task performance following brain injury (e.g. [10–14]) and behavioural dissociations in speeded judgement tasks in healthy individuals (e.g. [15–18]). Additional evidence was provided by studies of non-human primates: specifically, behavioural dissociations following brain lesions (e.g. [19,20]) and single cell responses (e.g. [21–23]). Nonetheless, other non-human primate studies demonstrated the existence of neurons, particularly in the amygdala, that responded both to emotional expression and to identity [24,25]. Indeed, Gothard et al. [24] demonstrated that some amygdala neurons responded to emotions expressed by some individuals but not by others, or responded with different firing rates and duration of response for emotions expressed by different individuals. Thus, these amygdala neurons seem to be able to specify unique combinations of facial expression and identity.

Figure 1.

The face processing models of (a) Bruce & Young [7] and (b) Haxby et al. [4].

As a functional account of the processes underlying face perception, Bruce & Young's [7] model did not make any specific proposals about the neural localization of those processes. It was not long before the newly developing functional brain-imaging techniques began to shed light on the brain mechanisms underlying face perception, in concert with improved structural brain imaging techniques and additional lesion evidence. Throughout the mid–late 1990s, this early imaging evidence was mostly concerned with the localization of functions, functions that were, for the most part, identified by the lesion and behavioural evidence. Thus, for example, the processing of facial identity, or of eye and mouth movements, was localized to distinct anatomical locations in inferior and superior regions of the temporal lobes, respectively (e.g. [26,27]). Another notable discovery was that there are at least three principal cortical regions in the human brain whose activity is greatest for faces relative to other, non-face objects. These face-selective regions are found in the fusiform gyrus (the fusiform face area or FFA), lateral inferior and sometimes middle occipital gyrus (the occipital face area or OFA), and in and around superior temporal sulcus (STS, the face-selective region of which we denote as fSTS) (e.g. [28,29]; for a review, see [5]). It is probable that there are other regions in more anterior regions of the human brain that are responsive to face stimuli, similar to the ‘face patches’ that have been discovered in macaque monkeys [30,31].

Much of the early imaging work helped inform Haxby et al.'s [4] development of a new model of face processing (figure 1b), which shares some elements of Bruce & Young's model but locates the various components of face processing in specific neural regions. In Haxby et al.'s model, face perception involves the coordinated participation of multiple neural regions, broadly delineated into the ‘core’ and ‘extended’ systems. The core system itself consists of two processing streams: one leading from inferior occipital cortex to inferotemporal cortex, in which relatively invariant aspects of faces are represented, and another leading from inferior occipital cortex to superior temporal cortex, in which changeable aspects of faces resulting from movement of the facial features are represented.

3. Recent developments

(a). Non-human primate studies

As noted in §2, early studies in monkeys showed face-selective responses of single neurons located in both superior and inferior aspects of temporal cortex (e.g. [21,23,32]). However, the relevance of such findings for our understanding of human face perception would be limited if humans and monkeys process faces differently. Some early studies did indeed suggest that monkeys process faces in a qualitatively different manner than humans (e.g. [33–35]), although subsequent work demonstrated a closer correspondence between monkey and human face-processing mechanisms than these earlier studies indicated (e.g. [36]). More recent research indicates marked similarities in face-processing strategies between macaques and humans, particularly as revealed by measures of eye-gaze behaviour on tasks for which the macaques' responses are not explicitly reinforced [37,38]. There may still be relatively subtle differences in the way that macaques and humans process faces, however (e.g. [39,40]). Nonetheless, as Dahl et al. [37,38] point out, some of the findings showing such differences may be due to the use of explicit reinforcement for behavioural discrimination, which can result in idiosyncratic response strategies and can make it difficult to disentangle what monkeys are capable of learning from what they would do naturally.

Recent research has also shown a close anatomical correspondence in the cortical regions selective for faces between macaques and humans [30]. Functional neuroimaging studies have confirmed not only that monkeys have a small number of discrete face-selective regions similar in relative size to those in humans [41,42], but also that the great majority of neurons in at least one of these face-selective regions (one of the face patches in STS) are themselves face selective [43]. Furthermore, face-responsive cells in this face-selective region responded to distinct constellations of face parts and were tuned to the geometry of facial features [44]. At least two of the face-selective patches in macaques are located in regions of inferior temporal cortex that correspond topographically to face-selective regions in humans. One of these regions is the FFA [42] whereas the other is a more anterior temporal face-selective region [45] that is less often observed in humans, either because it represents subtle differences between individual faces rather than faces per se [46] or because of technical reasons related to magnetic resonance (MR) signal drop-out in the anterior temporal lobes [45]. An unresolved question is whether the FFA and the anterior temporal face patch in humans are homologues or analogues of the posterior and anterior temporal face patches in macaques, that is, whether these mechanisms evolved from a common ancestor or evolved separately. On the basis of evidence that the relations between neighbouring specific cortical areas are typically evolutionarily conserved across cortical grey matter in monkeys and humans (e.g. [47–49]), Rajimehr et al. [45] suggest that these posterior and anterior temporal face patches in humans and macaques are homologous.

Strong evidence that these distributed face patches constitute a functional system for face processing comes from a study using direct electrical stimulation of face-selective patches [50]. Stimulation of individual face patches induced strong activation within a subset of the other face patches (as measured with simultaneous fMRI); direct stimulation of sites just outside these face patches induced activity elsewhere in ventral temporal cortex, including STS, but not in the face patches. These findings support a view of face processing according to which there is a sequence of functionally distinct processing stages within discrete patches of cortex dedicated to a particular aspect of face perception, rather than more diffuse coding of facial information in ventral visual cortex such that object-selective regions contribute to face perception and face-selective regions contribute to object perception, for example. (For discussion of these contrasting views, see [5,51].)

(b). Human lesion studies

Several fMRI studies have established that face-selective activity in lateral occipital gyri, revealing the OFA, reflects processing of the shape and features of faces (e.g. [4,52,53]). The OFA may also be involved in computing the relationships between facial features. There is fMRI evidence that inferior occipital gyrus or OFA is involved in processing second-order relational or configural cues (the metric distances between features [54,55]), but not first-order configural cues (which encode the relative positions of facial features [56]). The fusiform gyrus is also involved in processing second-order relational cues from faces [54,55], although not necessarily the region that corresponds to FFA [57]. Consistent with these neuroimaging findings, lesion evidence shows that impaired processing of spatial relations between facial features is characteristic of acquired prosopagnosia whether as the result of damage principally to right fusiform in the region of FFA [58] or to right occipital gyrus in the region of OFA and left fusiform [59]. The latter case of prosopagnosia, patient P.S., also showed deficient use of diagnostic information from the eye region to identify familiar faces; instead she relied on information from the mouth and external facial contours, similar to the information that normal observers use when processing unfamiliar faces [60]. On the basis of these findings, Rossion and co-workers argue that acquired prosopagnosia arises because of a deficit in holistic processing, that is, in the ability to perceive multiple elements of a face as a single global representation [59], a suggestion for which they also provide direct evidence in patient P.S. [61].

Evidence that the OFA or the lateral occipital gyrus more generally is necessary for the successful performance of a variety of face perception tasks comes from both human lesion and TMS studies (the latter evidence will be discussed in §3c). A critical role for right OFA in judging the identity of faces was shown by studies of two individuals with prosopagnosia, P.S. and D.F. As noted above, P.S. is unable to recognize the identity of faces as the result of lesions encompassing left mid-ventral and right inferior occipital cortex [62]. Two aspects of this case make it particularly interesting with respect to models of face processing. First, P.S. is a rare case of someone who has a profound problem in recognizing and naming faces but not non-face objects, thus supporting the selectivity or ‘specialness’ of face processing in the brain. Second, P.S.'s right fusiform gyrus, left lateral occipital cortex and bilateral STS are intact and normal face-selective fMRI activation is evident in the regions corresponding to right FFA, left OFA and right fSTS, whereas her right occipital cortex corresponding to OFA and left fusiform gyrus are lesioned [62–64]. Thus it appears that P.S.'s prosopagnosia is a result particularly of the lesion in right OFA. This suggestion is consistent with the finding that right OFA was the principal face-selective region implicated in a meta-analysis of the lesion overlaps in several prosopagnosics [65]. That study found that face-selective peaks of activation corresponding to right OFA reported by several fMRI studies fell closer to regions of maximum lesion overlap than did peaks corresponding to FFA or STS; moreover, there was very little lesion overlap in left lateral occipital cortex. That P.S. was also significantly impaired but nevertheless above chance in discriminating faces on the basis of their sex and emotional expression [62] also suggests a role for right OFA in allowing observers to judge the sex and emotion of faces.

Studies of another patient, D.F., also demonstrate a critical role for OFA in various face perception tasks. D.F. has visual form agnosia as well as prosopagnosia; that is, she is severely impaired in recognizing and naming non-face objects from their shape [66], as well as faces. D.F. has substantial lesions to lateral occipital cortex in both hemispheres, and showed no face-selective activation in the regions that correspond to OFA bilaterally in neurologically intact individuals, yet showed the typical face-selective activity in bilateral fusiform gyrus (i.e. the FFA) and bilateral STS [67]. While D.F. could differentiate faces from non-face objects, principally on the basis of configural cues, she was at or near chance in discriminating the identity, sex and emotional expression of faces, suggesting that the functional integrity of FFA and STS alone is not sufficient for these abilities.

Interestingly, whereas the fMRI signal in the right FFA of both P.S. and D.F. shows a normal range of preferential activation to faces compared with non-face objects and scenes, sensitivity to individual face representations in right FFA was abnormal in both these patients [63,68,69]. Specifically, a lack of sensitivity to changes in facial identity in right FFA of both patients was indicated by fMR adaptation. In neurologically intact individuals, the fMRI response is larger for repeated presentations of faces of different identities compared with the same identity (e.g. [28,70,71]); this release from adaptation in right fusiform for different face identities has even been reported in patients with congenital (developmental) prosopagnosia [72]. Yet neither D.F. nor P.S. showed any such evidence of sensitivity to changes in facial identity in right fusiform, suggesting that right OFA is necessary to individualize faces, perhaps through re-entrant interactions with FFA [73].
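The adaptation logic described above amounts to a simple contrast between two conditions. The following is a minimal sketch of that contrast, not the analysis used in the cited studies: the function name `release_from_adaptation` and all response values are hypothetical and serve only to illustrate the direction of the effect.

```python
from statistics import mean


def release_from_adaptation(different_identity, same_identity):
    """Mean response to different-identity blocks minus mean response
    to same-identity blocks.

    A positive value indicates release from adaptation, i.e. the region
    is sensitive to a change in facial identity.
    """
    return mean(different_identity) - mean(same_identity)


# Hypothetical per-block response amplitudes (arbitrary units), for illustration only.
sensitive_region = release_from_adaptation([1.2, 1.1, 1.3], [0.8, 0.7, 0.9])
insensitive_region = release_from_adaptation([1.0, 1.1, 0.9], [1.0, 1.1, 0.9])

print(sensitive_region > 0)      # response recovers when identity changes
print(insensitive_region == 0)   # no difference between the two conditions
```

On this sketch, the pattern reported for right FFA in P.S. and D.F. corresponds to the second case: a region can respond preferentially to faces overall and still show no difference between same-identity and different-identity presentations.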

In Haxby et al.'s [4] model of face processing, OFA (or inferior occipital cortex more generally) is the principal and common source of input for FFA and STS (see figure 1b). The findings discussed in this section challenge a particular interpretation of Haxby et al.'s model advocated by others (e.g. [74–77]), according to which face processing proceeds in a hierarchical feed-forward manner from OFA to other regions such as FFA. First, FFA can be activated without ipsilateral OFA inputs, perhaps through direct functional connections originating from extrastriate cortical areas that are not explicit in the Haxby et al. model ([62,63,73]; see below for further discussion). Second, OFA's involvement in ‘higher-level’ face-perception abilities, such as identity, sex and emotion judgements, may not be limited to an early processing stage at which a fixed set of visual representations is encoded and passed forward to other regions such as FFA and STS (e.g. [4,78]). (We here distinguish such higher-level face-perception abilities from ‘lower-level’ abilities such as distinguishing faces from non-face objects, judging the spacing between facial features or making same–different judgements on faces that differ in the spacing between or shape of features.) In addition to an early role in extracting features, second-order configural and holistic cues, OFA might be recruited at later points in time and in a more interactive manner. Interestingly, Haxby et al. themselves propose ‘that many face perception functions are accomplished by the coordinated participation of multiple regions’ [4, p. 231]; moreover, the arrows between the different components of their model are bidirectional (see figure 1b), indicating feedback as well as feed-forward interactions. Presumably any relatively late involvement of OFA in higher-level face perception reflects ongoing extraction of relatively simple visual cues such as the shape of features and of the face. Towards the end of §3c we shall discuss some evidence indicating that OFA's involvement in higher-level face perception and, relatedly, what particular visual cues it processes, can depend on the nature of the stimuli and the demands of the task.

(c). Transcranial magnetic stimulation studies

TMS is a non-invasive technique that allows focal cortical regions near the lateral surface of the skull to be stimulated for short, precisely controlled periods of time. One or more brief magnetic pulses are applied via a coil positioned on the scalp over the selected cortical region, which effectively induces electrical noise into a small region of the underlying neural tissue, transiently altering neural processing in that region [79–84]. Importantly, TMS allows researchers to make causal inferences about the involvement of neural regions in perceptual and cognitive processes, in contrast to the correlational nature of functional neuroimaging; and it also has the advantage of greater spatial resolution than neurological lesion analyses. The critical contribution of a cortical region to a task is typically established either with high-frequency repetitive TMS ‘online’ (i.e. around the same time as the event of interest, such as the presentation of a visual stimulus) or with longer periods of low-frequency repetitive TMS ‘off-line’ (i.e. within several minutes prior to the events of interest).

Another benefit of TMS is its temporal resolution. Because the local effects on neural activity of single pulses are very transient (for discussion, see [83,85]), TMS can be used to measure with a good deal of precision the critical period during which a small region of cortex (approx. 1 cm, depending on coil size and shape) is involved in a task, as well as the relative timings of the involvement of different regions in the same task. To measure the critical period for the involvement of a neural region, single or short trains of two or three briefly interspersed TMS pulses are delivered over the region of interest at varying delays with respect to a particular event such as the onset of a stimulus (e.g. [77,86–88]; for discussion, see [80,81,83,85]). For at least several brain regions, including primary visual and dorsal premotor cortices, the period during which one or more TMS pulses are most effective in disrupting performance on a task tends to correspond with the time at which responses of single cells are recorded from the homologous area of the macaque brain (e.g. [83,89]). This critical period for TMS delivery is sometimes earlier than the peak of the signal recorded over that brain region using EEG or MEG [82,83]. To measure when two brain regions are critically involved in a task relative to each other, single or short trains of briefly interspersed pulses are applied over each of those two regions at varying intervals, typically with the use of two coils (e.g. [90,91]). Such dual-site or paired-pulse TMS allows experimenters to investigate the dynamic interaction between brain areas (for discussion see, e.g. [81,82]).

There are also some limitations of TMS, however. One is that TMS is ineffective for directly investigating the functions of neural regions that do not lie near the externally accessible parts of the skull, which include some regions discussed in this article, such as the fusiform gyrus and amygdala. This is because the intensity of the electric field decreases rapidly as a function of tissue depth. The depth of stimulation can be increased, but with a concomitant decrease in the spatial resolution of stimulation. (For discussion, see [92].) It can also be difficult to stimulate some superficial cortical regions, such as those in anterior temporal and lateral frontal lobes, without introducing participant discomfort or artefacts in behavioural measurements (or both) due to stimulation of superficial muscles in the face and head. Another limitation is that TMS can have either facilitatory or inhibitory effects depending on a variety of factors, including the duration of inter-pulse intervals (e.g. [87,93]). Finally, we note that TMS applied over one region of interest can have effects on neighbouring regions because of spreading of the induced current, as well as effects on more remote regions via anatomical connections with the region of interest. If one is interested in the functional contributions of neighbouring cortical regions, then accurate localization of stimulation sites and use of subtraction methodology can help to alleviate problems associated with spreading of induced current between those regions (e.g. [82]). The spreading of induced current via anatomical connections from a region of interest to more remote regions can, of course, be turned to the advantage of the investigator interested in functional relationships between these regions and in this way underpins the dual-site or paired-pulse paradigm (for discussion see [79,81–83,92]).

In §3b we noted that human neuroimaging and lesion evidence implicates a role for the OFA in processing second-order relational or other, higher-order configural cues from faces. Using TMS, however, Pitcher et al. [77] found that right OFA was not critically involved in the discrimination of faces on the basis of the spacing between facial features. Nonetheless, that same study did confirm a critical early role (around 60–100 ms post-stimulus onset) for the right (but not left) OFA in the discrimination of faces on the basis of differences in individual features alone [77]. A subsequent TMS study confirmed a critical role for the right OFA in allowing observers to distinguish between faces on the basis of a combination of featural cues and cues more related to differences in face shape [94]. Moreover, this contribution of right OFA to face perception was found to be functionally distinct from the contributions of right extrastriate body area to the discrimination of bodies and of right lateral occipital area to the discrimination of objects, as evidenced by a TMS triple dissociation [94].

Other TMS studies have confirmed roles for OFA and other neural regions in higher-level face-perception abilities. For example, Pitcher et al. [86] demonstrated a critical role for right OFA in the discrimination of facial expressions of emotion at an early stage of processing, between 60 and 100 ms from stimulus onset. In this study, repetitive TMS (rTMS) applied over either right OFA or right somatosensory cortex disrupted the ability of observers to match successively presented faces with respect to emotional expression, as assessed with a measure of accuracy, irrespective of the particular emotion. These effects were evident relative to two control TMS conditions (stimulation over a control site and sham stimulation). Moreover, emotion discrimination accuracy was impaired relative to a matched identity discrimination task when rTMS was applied over either right OFA or right somatosensory cortex, while identity discrimination accuracy itself was not impaired relative to the control conditions, thus suggesting that neither region has a critical role in identity discrimination. Using double-pulse TMS delivered at different times to right OFA and right somatosensory cortex, this same study also demonstrated different critical periods for the involvement of these regions in emotion discrimination: right OFA's involvement was pinpointed to a window of 60–100 ms from stimulus onset, whereas the involvement of right somatosensory cortex was pinpointed to a window of 100–170 ms from stimulus onset [86]. Given that TMS-induced interference at right OFA preceded the TMS interference at right somatosensory cortex, these findings are consistent with hierarchical feed-forward models of face perception.

Pitcher et al.'s [86] finding that right somatosensory cortex is critically involved in enabling observers to discriminate faces on the basis of emotional expressions replicates and extends the findings of an earlier TMS study [95] and is consistent with evidence from previous lesion [96] and functional imaging [97] studies. In Pourtois et al.'s [95] study, participants were required to judge whether the second of two successively presented faces had either the same emotion or the same gaze direction as the first face. Single-pulse TMS was delivered either 100 or 200 ms following the presentation of the second face to either right somatosensory or right superior lateral temporal cortex. Reaction times for emotion judgements were increased by stimulation of right somatosensory cortex compared with stimulation of superior lateral temporal cortex, whereas the converse pattern was observed for the eye gaze judgement. Moreover, stimulation of right somatosensory cortex increased reaction times for judgements of fearful but not happy expressions, which contrasts with the lack of emotion-specific effects for somatosensory cortex in Pitcher et al.'s [86] study and in the lesion and neuroimaging studies. Together, the findings discussed in this and the previous paragraph are consistent with embodied cognition or simulation models of emotion recognition, according to which recognizing another's emotional expression recruits neural mechanisms in the perceiver corresponding to those responsible for generating the other's emotional experience and behaviour (e.g. [98–100]). Interestingly, Pitcher et al. [86] found that TMS targeted at the face representation of somatosensory cortex impaired emotion discrimination accuracy relative to TMS targeted at the finger region. This finding is consistent with a role for facial mimicry in facial emotion perception, with the face region of somatosensory cortex representing the consequent changes in facial muscle activity. However, this finding alone cannot rule out an alternative explanation, namely, that the role of somatosensory cortex in emotion recognition is in the simulation of more widespread somatosensory states associated with the viewed emotion, one of which includes changes in facial muscle activity (but is much less likely to include finger muscle activity).

The TMS findings discussed so far are consistent with the interpretation of Haxby et al.'s [4] model that maintains OFA's involvement in face processing is restricted to the initial extraction of visual cues such as the shape of features and of the face and in making this information available to other, downstream face-processing regions such as FFA and STS. We now consider some additional TMS and fMRI evidence suggestive of a more flexible, interactive relationship between OFA and other face-processing regions that can vary depending on the nature of the stimuli and the demands of the task.

A recent TMS study from our own laboratory (A.P.A.) confirms critical roles for right OFA in allowing observers to discriminate the sex of faces and for right and left posterior STS regions in allowing observers to judge the trustworthiness of faces [101]. This study also suggests a possible role for right OFA in trustworthiness judgements, but only for those faces whose trustworthiness observers find it more difficult to judge and thus perhaps only at a later processing stage. Participants judged the sex of individually presented faces and, in a separate session, whether those same faces were trustworthy or not. During the presentation of the faces, rTMS was delivered over right OFA or right or left face-selective posterior STS (fSTS). There was also a sham TMS condition as a baseline, in which participants heard the same TMS pulses and had the same feeling of a coil on the head, but in which no pulse was administered into the brain. Distinct roles for right OFA and fSTS in sex and trustworthiness judgements were hypothesized on the basis of evidence that these regions process distinct sets of visual cues: judging the sex of faces relies on cues related to facial morphology and spatial relations between features (e.g. [102–106]), the processing of at least some of which involves the OFA (e.g. [52–55]). In contrast, judging the trustworthiness of faces relies on both structural and expressive cues that signal affective valence (e.g. [107–109]), the processing of which is thought to involve posterior STS more than OFA [4]. The principal results of Dzhelyova et al.'s [101] study confirmed these predictions: participants were significantly impaired in (showed longer reaction times for) judging sex when rTMS was delivered over right OFA, relative to sham stimulation, but not when it was delivered over right or left fSTS. In contrast, trustworthiness judgements were significantly impaired when rTMS was delivered over right or left fSTS relative to sham stimulation, but not when it was delivered over right OFA.

So far, these findings of Dzhelyova et al. [101] are consistent with OFA and fSTS being functionally distinct face-processing regions. However, for trustworthiness judgements the effect of rTMS over right OFA relative to sham stimulation did not differ significantly from the effects of rTMS over right or left posterior STS relative to sham stimulation. This result was to be expected given that the face-selective cortical regions are not entirely functionally independent of each other. As we have mentioned, in Haxby et al.'s [4] model, processing begins in OFA (or inferior occipital gyrus (IOG) more generally) and bifurcates into two principally parallel but potentially interacting streams, one to STS and one to FFA. Given that STS is downstream of OFA and that judging the sex of faces relies little if at all on the processing of expressive cues, Dzhelyova et al. [101] predicted that sex judgements would not be impaired by the application of rTMS over right or left STS, which their results confirmed. For judgements of trustworthiness, by contrast, OFA's role might be limited to an initial structural encoding of faces (e.g. [4,78]), the outputs of which are fed forward to STS, or OFA might play a critical role in trustworthiness judgements also or only at a later processing stage, subsequent to an initial involvement of STS. If OFA's critical involvement in trustworthiness judgements is limited to an early feed-forward processing stage, then application of rTMS over right OFA would be expected either to produce a general delay in reaction times for trustworthiness judgements across the whole reaction-time distribution, or to have no effect. The latter result would be expected if the relevant visual information is able to reach STS bypassing right OFA, such as via left OFA, other regions of IOG, or other cortical or subcortical routes (for discussion of relevant evidence see, e.g. [6,73]).
But if OFA's critical involvement in trustworthiness judgements is at a later processing stage, then application of rTMS over right OFA would be expected either to prolong reaction times across the whole reaction-time distribution or to prolong only the relatively longer reaction times. The latter result would be expected if the first feed-forward volley of processing to STS (whether it originates from OFA or not) provides sufficient information for the observer to reach a decision about the trustworthiness of some faces, with other faces requiring subsequent additional involvement of right OFA. Dzhelyova et al.'s [101] analysis of the reaction-time distributions supports this latter proposal: the effect of rTMS over right OFA for trustworthiness judgements was limited to faces with longer reaction times, which were also the faces for which participants were generally less accurate. An important goal for future research will be to confirm the involvement of OFA in trustworthiness judgements and other face-perception tasks at relatively late as well as (or instead of) early processing stages by, for example, using dual-site or paired-pulse TMS methods. If confirmed, a later critical role for OFA in trustworthiness judgements might reflect the use of cues related to facial morphology and spatial relations between features, or perhaps even the implicit use of sex information in this task.
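
The difference between these two predicted patterns can be illustrated with a simple quantile-based comparison of reaction-time distributions. The sketch below is not the analysis reported by Dzhelyova et al. [101]; the reaction times are made up for illustration, and the function name `quantile_differences` and the choice of five bins are assumptions.

```python
from statistics import quantiles

def quantile_differences(sham_rts, tms_rts, n_bins=5):
    """Return TMS-minus-sham differences at matching reaction-time quantiles."""
    sham_q = quantiles(sham_rts, n=n_bins, method="inclusive")
    tms_q = quantiles(tms_rts, n=n_bins, method="inclusive")
    return [t - s for s, t in zip(sham_q, tms_q)]

# Made-up reaction times in ms (illustrative only, not data from any study).
sham = [520, 560, 600, 640, 680, 720, 760, 800, 840, 880]

# Pattern 1: a general delay adds the same amount to every response.
uniform_delay = [rt + 40 for rt in sham]

# Pattern 2: only the slower responses are prolonged.
slow_only = [rt + 40 if rt > 700 else rt for rt in sham]

uniform_diffs = quantile_differences(sham, uniform_delay)
slow_diffs = quantile_differences(sham, slow_only)

print("general delay:", uniform_diffs)
print("slow responses only:", slow_diffs)
```

With these illustrative values, a general delay yields the same difference at every quantile, whereas an effect confined to slower responses yields differences only at the upper quantiles.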

Another recent TMS study suggests a critical role for right OFA in processing configural cues common to both expression and identity changes in faces. Cohen Kadosh et al. [110] used a task in which participants had to judge whether a face was the same as or different from the previously presented face. The faces in a pair could differ with respect to identity, emotion, or gaze direction alone, or a combination of any two or all three of these dimensions. Repetitive TMS to the right OFA caused participants to be less accurate in matching faces on the basis of both identity and expression, but did not disrupt their ability to match the faces on the basis of identity, emotion, or gaze direction alone. This finding suggests that right OFA integrates information across identity and expression; moreover, Cohen Kadosh et al. [110] suggested that these findings imply that right OFA has a role in computing the overall configural relations between facial features, given that this is one class of information on which the encoding of both identity and expression depends [111–114]. In a second experiment using double-pulse TMS, Cohen Kadosh et al. [110] found that impaired matching of faces on the basis of combined identity and expression was evident when TMS was applied over right OFA from 170 ms after stimulus onset, indicating that right OFA's role in integrating these two stimulus dimensions occurs at a mid-latency rather than early processing stage.

We noted in §2 the discovery of neurons in the monkey, particularly in the amygdala, that are sensitive both to facial identity and expression and may even specify unique combinations of identity and expression [24,25]. An interesting issue for future research is thus whether OFA's involvement in integrating identity and expression information is a function of the activity of neurons in OFA that are similarly sensitive both to facial identity and expression, or instead a function of it receiving inputs from regions such as the amygdala that contain neurons sensitive to both dimensions.

To round off this section, we return to consider an fMRI study, the findings of which suggest that the involvement of face-selective regions in processing featural and configural cues depends on task demands. Cohen Kadosh et al.'s [115] participants were presented with streams of individually presented faces in which they were required to detect, in different stimulus blocks, an occasional specific identity, emotional expression or gaze direction. On the basis of previous research, the identity task was supposed to engender configural more than featural processing and the gaze task more featural than configural processing, whereas the expression task was supposed to engender both configural and featural processing. Mini-blocks of stimuli consisted in repeated presentations of the same identity in the identity task, the same emotional expression in the expression task, and the same eye gaze in the gaze task. The task-irrelevant face properties varied independently; for example, faces in the identity task varied in their emotional expression, gaze direction, or both, or remained the same. An fMRI adaptation or repetition suppression paradigm was used, the logic of which is that if neurons in a region of interest are sensitive to a particular stimulus property, then activity in that region should be greater for conditions in which that stimulus property varies as compared with a condition in which that property remains constant. Activity in the occipital and fusiform gyri (OFA and FFA) was found not to differ as a function of changes in the stimulus per se, such as identity or expression changes. Rather, relative to the condition in which the faces did not change within a mini-block, changes in expression during the identity task and changes in identity during the expression task increased activity in OFA and FFA; the activity of OFA and FFA was also increased by changes in gaze direction in the expression task, independent of concurrent identity changes. 
Activity in the STS was increased only to gaze changes in the expression task. Cohen Kadosh et al.'s [115] assumption was that face-selective STS is more involved in processing featural than configural cues whereas OFA and FFA are more involved in processing configural cues, although as our review of the research indicates, this functional division of labour might not be so clear-cut. Indeed, Cohen Kadosh et al.'s [115] explanation for why they also observed increased responses in OFA and FFA to changes in gaze direction in the expression task suggests a way of reconciling the partly conflicting findings in the literature: the perception of emotional expressions relies on featural as well as configural cues (see [54–56,77,94]) and the OFA and FFA, which as we have noted are closely functionally interlinked, may process either featural or configural cues, depending on task demands.
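
The logic of the adaptation paradigm described above can be sketched as a simple contrast between conditions in which a stimulus property changes and a condition in which it repeats. The values below are hypothetical percent-signal-change figures invented for illustration, not data from Cohen Kadosh et al. [115], and the function name `release_from_adaptation` is an assumption.

```python
from statistics import mean

def release_from_adaptation(changed, unchanged):
    """Mean response when a property varies minus mean response when it repeats."""
    return mean(changed) - mean(unchanged)

# Hypothetical percent-signal-change values for one region during one task.
no_change = [0.40, 0.42, 0.38, 0.41]
responses = {
    "expression_changes": [0.55, 0.58, 0.52, 0.57],
    "gaze_changes": [0.41, 0.39, 0.42, 0.40],
}

# A clearly positive value suggests sensitivity to the property that changed;
# a value near zero suggests the region adapted regardless of the change.
release = {name: release_from_adaptation(values, no_change)
           for name, values in responses.items()}

for name, value in release.items():
    print(f"{name}: {value:+.3f}")
```

In an actual study, such contrasts would be evaluated statistically across participants rather than read off from raw means.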

4. Implications for hierarchical feed-forward models of face perception

Some but not all of the findings discussed in §3b,c argue against a strict feed-forward hierarchical model of face perception, in which the OFA is the principal and common source of input for other visual and non-visual cortical regions involved in face perception, including the FFA, fSTS and somatosensory cortex. Instead, these findings point to a more interactive model in which higher-level face-perception abilities depend on the interplay between several functionally and anatomically distinct neural regions. Furthermore, some of this evidence suggests that the nature of these interactions and the computations performed by the different components of the core face-processing network may depend on the particular demands of the task.

We have seen that two patients with lesions encompassing much of lateral occipital cortex (one in the right hemisphere, one bilateral) with consequent lack of the normal face-selective fMRI response in this region nevertheless showed face-selective responses in spared regions of fusiform cortex and STS [62,67,69]. Both patients are able to discriminate faces from non-face objects, which is reflected in normal face-selectivity in right FFA of both patients despite the lateral occipital lesions, implying that the relevant information must be reaching FFA via a processing route that bypasses OFA. Both P.S. and D.F. are severely impaired in recognizing the identity of faces and also perform significantly below the level of matched comparison individuals when required to discriminate or identify faces on the basis of emotional expression and sex. Patient D.F.'s performance on these latter two tasks was at or near chance, whereas P.S.'s performance was well above chance, and she discriminated the age of faces normally. For P.S., it is possible that her intact left OFA is adequate to help drive regions further up the processing hierarchy, such as STS and right fusiform, thus underpinning her residual (but nevertheless impaired) higher-level face processing abilities. D.F., on the other hand, shows no face-selective activity corresponding to OFA in either hemisphere and is unable to perform higher-level face processing tasks at above-chance levels. Furthermore, neither D.F. nor P.S. showed any such evidence of sensitivity to changes in facial identity in right fusiform [63,68,69], suggesting that right OFA is necessary to individualize faces, perhaps through re-entrant interactions with FFA [73]. 
More generally, this finding illustrates the caution that is required when interpreting the effects of lesions to individual nodes of interconnected networks: brain regions that may appear structurally intact may nevertheless not be receiving their normal inputs and so may be functionally depressed [73,116].

Thus, higher-level face-perception abilities, such as judging identity, emotion and trustworthiness, seem to rely on an intact face-processing network that includes OFA, whereas lower-level face categorization abilities, such as discriminating faces from objects, can be achieved without OFA, perhaps via the direct connections to FFA from several extrastriate cortical areas [73]. Support for this idea comes from a diffusion tensor imaging study, which has revealed anatomical connections from several extrastriate cortical areas to FFA [117] and from a study employing a functional connectivity analysis [118]. The latter study showed a direct influence of middle occipital gyrus (outside the typical location for OFA) on fusiform activity for low spatial frequency information related to facial identity, as well as a distinct influence of inferior occipital gyrus on fusiform activity for high spatial frequency information related to facial identity, with no evidence for direct functional connections between middle and inferior occipital gyri [118].

Much of the TMS evidence discussed in §3c corroborates the lesion evidence for a critical role for OFA in a network of neural regions that underpins higher-level face perception abilities. There was, however, one anomaly in this regard. Why did rTMS applied to right OFA not disrupt the ability to discriminate faces on the basis of their identity [86]? Pitcher et al. [86] suggest that this might be because OFA is particularly critical for discriminating faces on the basis of differences in the shape of individual face parts and that the faces in their 2008 study always differed in their expressions and hence the shape of their face parts. Consequently, they suggest, their participants may have been forced to rely on information other than the shape of face parts in order to successfully discriminate the facial identities. This may have involved the recruitment of or a greater reliance on other face-processing regions, such as the FFA, fSTS or both.

Some of the TMS findings discussed in §3c are consistent with the interpretation of Haxby et al.'s [4] model that maintains OFA's involvement in face processing is restricted to the initial encoding of information about certain structural properties of faces, information that is then fed forward to other face-processing regions such as FFA and STS. Nonetheless, we also considered two TMS studies [101,110] and one fMRI study [115] the results of which suggest a more flexible, interactive relationship between OFA and other face-processing regions. Thus, the TMS findings in themselves do not conclusively argue against a feed-forward hierarchical model of face perception in favour of the more interactive model that we have been advocating. However, the weight of evidence provided by the lesion and fMRI studies together with the two aforementioned TMS studies is, we believe, sufficient to call into question hierarchical feed-forward models. Further research is required to uncover how and under what circumstances the different components of the core and extended face-processing networks interact and what computations they each perform.

5. Conclusions and future directions

Some clear caveats are in order with respect to the lesion method, since we have highlighted it here. Two obvious limitations are that the lesions, if neurological and in humans, are not anatomically precise or controlled; and that there is substantial reorganization following chronic lesions. These limitations are well known, but they do pose serious issues. With respect to the first, it becomes critical to have high-resolution structural (and possibly also functional) MRI data in order to delineate the boundaries of the lesion. It also becomes critical to conduct group analyses, ideally on sample sizes in excess of 100 patients or so, such that deficits can be traced reliably to shared damage to a particular region, rather than attributed to the idiosyncrasies of any individual lesion. One approach to this problem, the classic one, has been to study patients with highly selective lesions. But such patients are exceedingly rare and still suffer from the fact that each is essentially unique. We need to know whether the results generalize, and we need to know this about neuroanatomical regions so specific that they are almost never lesioned selectively. Current approaches are using voxel-based lesion–symptom mapping with samples of several hundred to address these issues.
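
The basic idea behind voxel-based lesion–symptom mapping can be sketched as follows: at each voxel, patients are divided into those whose lesion includes that voxel and those whose lesion spares it, and the behavioural scores of the two groups are compared. The sketch below is a toy illustration under stated assumptions: the lesion maps and scores are invented, the function names are hypothetical, Welch's t statistic is used as one possible comparison, and no correction for multiple comparisons is applied, which a real analysis would require.

```python
from math import sqrt
from statistics import mean, variance

def welch_t(a, b):
    """Welch's t statistic for two independent samples."""
    return (mean(a) - mean(b)) / sqrt(variance(a) / len(a) + variance(b) / len(b))

def lesion_symptom_map(lesion_maps, scores, min_patients=2):
    """Compare scores of patients spared vs. lesioned at each voxel.

    lesion_maps: one list of 0/1 values per patient (1 = voxel lesioned).
    Returns one t value per voxel, or None where either group is too small.
    """
    n_voxels = len(lesion_maps[0])
    t_map = []
    for v in range(n_voxels):
        lesioned = [s for m, s in zip(lesion_maps, scores) if m[v] == 1]
        spared = [s for m, s in zip(lesion_maps, scores) if m[v] == 0]
        if min(len(lesioned), len(spared)) < min_patients:
            t_map.append(None)  # too few patients to compare at this voxel
        else:
            t_map.append(welch_t(spared, lesioned))
    return t_map

# Invented data: six patients, three voxels, one face-task score per patient.
lesion_maps = [
    [1, 0, 0],
    [1, 1, 0],
    [1, 0, 0],
    [0, 1, 0],
    [0, 0, 0],
    [0, 1, 1],
]
scores = [52, 55, 50, 88, 91, 85]

t_map = lesion_symptom_map(lesion_maps, scores)
print(t_map)
```

In this toy example only damage to the first voxel is associated with markedly lower scores, and the third voxel is skipped because it is lesioned in a single patient, which illustrates why large samples with overlapping lesions are needed.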

The issue of plasticity and reorganization is even more difficult to address. The simplest way is by combining neurological lesions with transient lesions induced by TMS. Indeed, a major thrust of current studies has been to combine methods, since all of them suffer severe limitations when used in isolation (see our discussion of the limitations of TMS in §3c). Combining electrophysiological, fMRI, TMS and lesion studies will produce results that are much more reliable than those which can be obtained from any one of these approaches in isolation (e.g. [119–121]).

One important feature of the majority of studies we have reviewed here is that they have examined face processing under conditions in which observers are able to free-view either whole faces or one or more isolated facial features, and are thus able to make (one or more) fixations on facial features. However, in everyday life faces often appear in our visual periphery, yet very little is currently known about peripheral visual processing of faces or about the processes that subsequently direct our gaze and attention to those faces, despite the well-established differences between peripheral and central vision. Moreover, in everyday face-to-face interactions it is common for another person's face to occupy a sufficiently large region of the visual field that not all facial features can fall within the fovea at once. Yet, at any given moment, those features falling outside the fovea nevertheless receive some visual processing. Relatively little is known about how facial features are processed in the visual periphery and parafovea. Nor is much known about the processes that allow us to seek out, fixate, pay attention to and make use of facial features that are initially visible only in the periphery. Yet vision is an active process [122], and understanding how peripheral vision contributes to the perception of facially expressed emotions may provide important insights into the larger issue of normal and abnormal human social interactions.

We have reviewed evidence supporting the idea that the neural substrates of face perception do not simply implement passive, bottom-up processing of the retinal images of faces and their features, but rather, their operation reflects a complex interplay between mechanisms that extract different types of information from the retinal image at different points in time, the mechanisms that direct eye gaze and attention, and thus the demands of the task and environmental and social context. Future studies should pay close attention to the dynamic effects of task demands, and to the active nature of vision. The eventual goal of vision science is to go from an understanding of how we process static images of single faces to how we see people in the real world: interactively, dynamically, under a variety of different informational demands, in cluttered environments, and of course multimodally.

Footnotes

One contribution of 10 to a Theme Issue ‘Face perception: social, neuropsychological and comparative perspectives’.

References

- 1. Young A. W. 1998. Face and mind. Oxford, UK: Oxford University Press

- 2. Fox C. J., Iaria G., Barton J. J. S. 2008. Disconnection in prosopagnosia and face processing. Cortex 44, 996–1009 (doi:10.1016/j.cortex.2008.04.003)

- 3. Adolphs R. 2007. Investigating human emotion with lesions and intracranial recording. In Handbook of emotion elicitation and assessment (eds Allen J., Coan J.), pp. 426–439 New York, NY: Oxford University Press

- 4. Haxby J. V., Hoffman E. A., Gobbini M. I. 2000. The distributed human neural system for face perception. Trends Cogn. Sci. 4, 223–233 (doi:10.1016/S1364-6613(00)01482-0)

- 5. Kanwisher N., Yovel G. 2006. The fusiform face area: a cortical region specialized for the perception of faces. Phil. Trans. R. Soc. B 361, 2109–2128 (doi:10.1098/rstb.2006.1934)

- 6. Vuilleumier P., Pourtois G. 2007. Distributed and interactive brain mechanisms during emotion face perception: evidence from functional neuroimaging. Neuropsychologia 45, 174–194 (doi:10.1016/j.neuropsychologia.2006.06.003)

- 7. Bruce V., Young A. 1986. Understanding face recognition. Br. J. Psychol. 77, 305–327

- 8. Adolphs R. 2002. Recognizing emotion from facial expressions: psychological and neurological mechanisms. Behav. Cogn. Neurosci. Rev. 1, 21–62 (doi:10.1177/1534582302001001003)

- 9. Calder A. J., Young A. W. 2005. Understanding the recognition of facial identity and facial expression. Nat. Rev. Neurosci. 6, 641–651 (doi:10.1038/nrn1724)

- 10. Campbell R., Landis T., Regard M. 1986. Face recognition and lipreading: a neurological dissociation. Brain 109, 509–521 (doi:10.1093/brain/109.3.509)

- 11. Humphreys G. W., Donnelly N., Riddoch M. J. 1993. Expression is computed separately from facial identity, and it is computed separately for moving and static faces: neuropsychological evidence. Neuropsychologia 31, 173–181 (doi:10.1016/0028-3932(93)90045-2)

- 12. Parry F. M., Young A. W., Saul J. S., Moss A. 1991. Dissociable face processing impairments after brain injury. J. Clin. Exp. Neuropsychol. 13, 545–558 (doi:10.1080/01688639108401070)

- 13. Tranel D., Damasio A. R., Damasio H. 1988. Intact recognition of facial expression, gender, and age in patients with impaired recognition of face identity. Neurology 38, 690–696

- 14. Young A. W., Newcombe F., de Haan E. H., Small M., Hay D. C. 1993. Face perception after brain injury. Selective impairments affecting identity and expression. Brain 116, 941–959 (doi:10.1093/brain/116.4.941)

- 15. Bruce V., Ellis H., Gibling F., Young A. 1987. Parallel processing of the sex and familiarity of faces. Can. J. Psychol. 41, 510–520 (doi:10.1037/h0084165)

- 16. Campbell R., Brooks B., de Haan E., Roberts T. 1996. Dissociating face processing skills: decision about lip-read speech, expression, and identity. Q. J. Exp. Psychol. A 49, 295–314 (doi:10.1080/027249896392649)

- 17. Pizzamiglio L., Zoccolotti P., Mammucari A., Cesaroni R. 1983. The independence of face identity and facial expression recognition mechanisms: relationship to sex and cognitive style. Brain Cogn. 2, 176–188 (doi:10.1016/0278-2626(83)90007-6)

- 18. Young A. W., McWeeny K. H., Hay D. C., Ellis A. W. 1986. Matching familiar and unfamiliar faces on identity and expression. Psychol. Res. 48, 63–68 (doi:10.1007/BF00309318)

- 19. Campbell R., Heywood C. A., Cowey A., Regard M., Landis T. 1990. Sensitivity to eye gaze in prosopagnosic patients and monkeys with superior temporal sulcus ablation. Neuropsychologia 28, 1123–1142 (doi:10.1016/0028-3932(90)90050-X)

- 20. Heywood C. A., Cowey A. 1992. The role of the ‘face-cell’ area in the discrimination and recognition of faces by monkeys. Phil. Trans. R. Soc. Lond. B 335, 31–37 (doi:10.1098/rstb.1992.0004)

- 21. Hasselmo M. E., Rolls E. T., Baylis G. C. 1989. The role of expression and identity in the face-selective responses of neurons in the temporal visual cortex of the monkey. Behav. Brain Res. 32, 203–218 (doi:10.1016/S0166-4328(89)80054-3)

- 22. Perrett D. I., Smith P. A., Potter D. D., Mistlin A. J., Head A. S., Milner A. D., Jeeves M. A. 1984. Neurones responsive to faces in the temporal cortex: studies of functional organization, sensitivity to identity and relation to perception. Hum. Neurobiol. 3, 197–208

- 23. Rolls E. T., Cowey A., Bruce V. 1992. Neurophysiological mechanisms underlying face processing within and beyond the temporal cortical visual areas. Phil. Trans. R. Soc. Lond. B 335, 11–21 (doi:10.1098/rstb.1992.0002)

- 24. Gothard K. M., Battaglia F. P., Erickson C. A., Spitler K. M., Amaral D. G. 2007. Neural responses to facial expression and face identity in the monkey amygdala. J. Neurophysiol. 97, 1671–1683 (doi:10.1152/jn.00714.2006)

- 25. Leonard C. M., Rolls E. T., Wilson F. A. W., Baylis G. C. 1985. Neurons in the amygdala of the monkey with responses selective for faces. Behav. Brain Res. 15, 159–176 (doi:10.1016/0166-4328(85)90062-2)

- 26. Hoffman E. A., Haxby J. V. 2000. Distinct representations of eye gaze and identity in the distributed human neural system for face perception. Nat. Neurosci. 3, 80–84 (doi:10.1038/71152)

- 27. Puce A., Allison T., Bentin S., Gore J. C., McCarthy G. 1998. Temporal cortex activation in humans viewing eye and mouth movements. J. Neurosci. 18, 2188–2199

- 28. Gauthier I., Tarr M. J., Moylan J., Skudlarski P., Gore J. C., Anderson A. W. 2000. The fusiform ‘face area’ is part of a network that processes faces at the individual level. J. Cogn. Neurosci. 12, 495–504 (doi:10.1162/089892900562165)

- 29. Kanwisher N., McDermott J., Chun M. M. 1997. The fusiform face area: a module in human extrastriate cortex specialized for face perception. J. Neurosci. 17, 4302–4311

- 30. Tsao D. Y., Moeller S., Freiwald W. A. 2008. Comparing face patch systems in macaques and humans. Proc. Natl Acad. Sci. USA 105, 19 514–19 519 (doi:10.1073/pnas.0809662105)

- 31. Tsao D. Y., Schweers N., Moeller S., Freiwald W. A. 2008. Patches of face-selective cortex in the macaque frontal lobe. Nat. Neurosci. 11, 877–879 (doi:10.1038/nn.2158)

- 32. Desimone R., Albright T. D., Gross C. G., Bruce C. 1984. Stimulus-selective properties of inferior temporal neurons in the macaque. J. Neurosci. 4, 2051–2062

- 33. Bruce C. 1982. Face recognition by monkeys: absence of an inversion effect. Neuropsychologia 20, 515–521 (doi:10.1016/0028-3932(82)90025-2)

- 34. Overman W. H., Jr, Doty R. W. 1982. Hemispheric specialization displayed by man but not macaques for analysis of faces. Neuropsychologia 20, 113–128 (doi:10.1016/0028-3932(82)90002-1)

- 35. Swartz K. B. 1983. Species discrimination in infant pigtail macaques with pictorial stimuli. Dev. Psychobiol. 16, 219–231 (doi:10.1002/dev.420160308)

- 36. Perrett D. I., Mistlin A. J., Chitty A. J., Smith P. A., Potter D. D., Broennimann R., Harries M. 1988. Specialized face processing and hemispheric asymmetry in man and monkey: evidence from single unit and reaction time studies. Behav. Brain Res. 29, 245–258 (doi:10.1016/0166-4328(88)90029-0)

- 37. Dahl C. D., Logothetis N. K., Hoffman K. L. 2007. Individuation and holistic processing of faces in rhesus monkeys. Proc. R. Soc. B 274, 2069–2076 (doi:10.1098/rspb.2007.0477)

- 38. Dahl C. D., Wallraven C., Bülthoff H. H., Logothetis N. K. 2009. Humans and macaques employ similar face-processing strategies. Curr. Biol. 19, 509–513 (doi:10.1016/j.cub.2009.01.061)

- 39. Parr L. A., Heintz M. 2008. Discrimination of faces and houses by rhesus monkeys: the role of stimulus expertise and rotation angle. Anim. Cogn. 11, 467–474 (doi:10.1007/s10071-008-0137-4)

- 40. Parr L. A., Heintz M., Pradhan G. 2008. Rhesus monkeys (Macaca mulatta) lack expertise in face processing. J. Comp. Psychol. 122, 390–402 (doi:10.1037/0735-7036.122.4.390)

- 41. Pinsk M. A., DeSimone K., Moore T., Gross C. G., Kastner S. 2005. Representations of faces and body parts in macaque temporal cortex: a functional MRI study. Proc. Natl Acad. Sci. USA 102, 6996–7001 (doi:10.1073/pnas.0502605102)

- 42. Tsao D. Y., Freiwald W. A., Knutsen T. A., Mandeville J. B., Tootell R. B. H. 2003. Faces and objects in macaque cerebral cortex. Nat. Neurosci. 6, 989–995 (doi:10.1038/nn1111)

- 43. Tsao D. Y., Freiwald W. A., Tootell R. B. H., Livingstone M. S. 2006. A cortical region consisting entirely of face-selective cells. Science 311, 670–674 (doi:10.1126/science.1119983)

- 44. Freiwald W. A., Tsao D. Y., Livingstone M. S. 2009. A face feature space in the macaque temporal lobe. Nat. Neurosci. 12, 1187–1196 (doi:10.1038/nn.2363)

- 45. Rajimehr R., Young J. C., Tootell R. B. H. 2009. An anterior temporal face patch in human cortex, predicted by macaque maps. Proc. Natl Acad. Sci. USA 106, 1995–2000 (doi:10.1073/pnas.0807304106)

- 46. Kriegeskorte N., Formisano E., Sorger B., Goebel R. 2007. Individual faces elicit distinct response patterns in human anterior temporal cortex. Proc. Natl Acad. Sci. USA 104, 20 600–20 605 (doi:10.1073/pnas.0705654104)

- 47. Sereno M. I., Tootell R. B. H. 2005. From monkeys to humans: what do we now know about brain homologies? Curr. Opin. Neurobiol. 15, 135–144 (doi:10.1016/j.conb.2005.03.014)

- 48. Tootell R. B. H., Tsao D., Vanduffel W. 2003. Neuroimaging weighs in: humans meet macaques in ‘primate’ visual cortex. J. Neurosci. 23, 3981–3989

- 49. Van Essen D. C., Lewis J. W., Drury H. A., Hadjikhani N., Tootell R. B. H., Bakircioglu M., Miller M. I. 2001. Mapping visual cortex in monkeys and humans using surface-based atlases. Vis. Res. 41, 1359–1378 (doi:10.1016/S0042-6989(01)00045-1)

- 50. Moeller S., Freiwald W. A., Tsao D. Y. 2008. Patches with links: a unified system for processing faces in the macaque temporal lobe. Science 320, 1355–1359 (doi:10.1126/science.1157436)

- 51. Cohen J. D., Tong F. 2001. Neuroscience: the face of controversy. Science 293, 2405–2407 (doi:10.1126/science.1066018)

- 52. Fox C. J., Moon S. Y., Iaria G., Barton J. J. S. 2009. The correlates of subjective perception of identity and expression in the face network: an fMRI adaptation study. NeuroImage 44, 569–580 (doi:10.1016/j.neuroimage.2008.09.011)

- 53. Rotshtein P., Henson R. N., Treves A., Driver J., Dolan R. J. 2005. Morphing Marilyn into Maggie dissociates physical and identity face representations in the brain. Nat. Neurosci. 8, 107–113 (doi:10.1038/nn1370)

- 54. Rhodes G., Michie P. T., Hughes M. E., Byatt G. 2009. The fusiform face area and occipital face area show sensitivity to spatial relations in faces. Eur. J. Neurosci. 30, 721–733 (doi:10.1111/j.1460-9568.2009.06861.x)

- 55. Rotshtein P., Geng J. J., Driver J., Dolan R. J. 2007. Role of features and second-order spatial relations in face discrimination, face recognition, and individual face skills: behavioral and functional magnetic resonance imaging data. J. Cogn. Neurosci. 19, 1435–1452 (doi:10.1162/jocn.2007.19.9.1435)

- 56. Liu J., Harris A., Kanwisher N. 2010. Perception of face parts and face configurations: an fMRI study. J. Cogn. Neurosci. 22, 203–211 (doi:10.1162/jocn.2009.21203)

- 57. Maurer D., O'Craven K. M., Le Grand R., Mondloch C. J., Springer M. V., Lewis T. L., Grady C. L. 2007. Neural correlates of processing facial identity based on features versus their spacing. Neuropsychologia 45, 1438–1451 (doi:10.1016/j.neuropsychologia.2006.11.016)

- 58. Barton J. J. S., Press D. Z., Keenan J. P., O'Connor M. 2002. Lesions of the fusiform face area impair perception of facial configuration in prosopagnosia. Neurology 58, 71–78

- 59. Ramon M., Rossion B. 2010. Impaired processing of relative distances between features and of the eye region in acquired prosopagnosia: two sides of the same holistic coin? Cortex 46, 374–389 (doi:10.1016/j.cortex.2009.06.001)

- 60. Caldara R., Schyns P., Mayer E., Smith M. L., Gosselin F., Rossion B. 2005. Does prosopagnosia take the eyes out of face representations? Evidence for a defect in representing diagnostic facial information following brain damage. J. Cogn. Neurosci. 17, 1652–1666 (doi:10.1162/089892905774597254)

- 61. Ramon M., Busigny T., Rossion B. 2010. Impaired holistic processing of unfamiliar individual faces in acquired prosopagnosia. Neuropsychologia 48, 933–944 (doi:10.1016/j.neuropsychologia.2009.11.014)

- 62. Rossion B., Caldara R., Seghier M., Schuller A. M., Lazeyras F., Mayer E. 2003. A network of occipito-temporal face-sensitive areas besides the right middle fusiform gyrus is necessary for normal face processing. Brain 126, 2381–2395 (doi:10.1093/brain/awg241)

- 63. Schiltz C., Sorger B., Caldara R., Ahmed F., Mayer E., Goebel R., Rossion B. 2006. Impaired face discrimination in acquired prosopagnosia is associated with abnormal response to individual faces in the right middle fusiform gyrus. Cereb. Cortex 16, 574–586 (doi:10.1093/cercor/bhj005)

- 64. Sorger B., Goebel R., Schiltz C., Rossion B. 2007. Understanding the functional neuroanatomy of acquired prosopagnosia. NeuroImage 35, 836–852 (doi:10.1016/j.neuroimage.2006.09.051)

- 65. Bouvier S. E., Engel S. A. 2006. Behavioral deficits and cortical damage loci in cerebral achromatopsia. Cereb. Cortex 16, 183–191 (doi:10.1093/cercor/bhi096)

- 66. Milner A. D., et al. 1991. Perception and action in ‘visual form agnosia’. Brain 114, 405–428 (doi:10.1093/brain/114.1.405)

- 67. Steeves J. K. E., Culham J. C., Duchaine B. C., Pratesi C. C., Valyear K. F., Schindler I., Humphrey G. K., Milner A. D., Goodale M. A. 2006. The fusiform face area is not sufficient for face recognition: evidence from a patient with dense prosopagnosia and no occipital face area. Neuropsychologia 44, 594–609 (doi:10.1016/j.neuropsychologia.2005.06.013)

- 68. Dricot L., Sorger B., Schiltz C., Goebel R., Rossion B. 2008. The roles of ‘face’ and ‘non-face’ areas during individual face perception: evidence by fMRI adaptation in a brain-damaged prosopagnosic patient. NeuroImage 40, 318–332 (doi:10.1016/j.neuroimage.2007.11.012)

- 69. Steeves J. K. E., Dricot L., Goltz H. C., Sorger B., Peters J., Milner A. D., Goodale M. A., Goebel R., Rossion B. 2009. Abnormal face identity coding in the middle fusiform gyrus of two brain-damaged prosopagnosic patients. Neuropsychologia 47, 2584–2592 (doi:10.1016/j.neuropsychologia.2009.05.005)

- 70. Andrews T. J., Ewbank M. P. 2004. Distinct representations for facial identity and changeable aspects of faces in the human temporal lobe. NeuroImage 23, 905–913 (doi:10.1016/j.neuroimage.2004.07.060)

- 71. Grill-Spector K., Malach R. 2001. fMR-adaptation: a tool for studying the functional properties of human cortical neurons. Acta Psychologica 107, 293–321 (doi:10.1016/S0001-6918(01)00019-1)

- 72. Avidan G., Hasson U., Malach R., Behrmann M. 2005. Detailed exploration of face-related processing in congenital prosopagnosia: 2. Functional neuroimaging findings. J. Cogn. Neurosci. 17, 1150–1167 (doi:10.1162/0898929054475145)

- 73. Rossion B. 2008. Constraining the cortical face network by neuroimaging studies of acquired prosopagnosia. NeuroImage 40, 423–426 (doi:10.1016/j.neuroimage.2007.10.047)

- 74. Fairhall S. L., Ishai A. 2007. Effective connectivity within the distributed cortical network for face perception. Cereb. Cortex 17, 2400–2406 (doi:10.1093/cercor/bhl148)

- 75. Ishai A. 2008. Let's face it: it's a cortical network. NeuroImage 40, 415–419 (doi:10.1016/j.neuroimage.2007.10.040)

- 76. Jiang X., Rosen E., Zeffiro T. A., VanMeter J., Blanz V., Riesenhuber M. 2006. Evaluation of a shape-based model of human face discrimination using fMRI and behavioral techniques. Neuron 50, 159–172 (doi:10.1016/j.neuron.2006.03.012)

- 77. Pitcher D., Walsh V., Yovel G., Duchaine B. 2007. TMS evidence for the involvement of the right occipital face area in early face processing. Curr. Biol. 17, 1568–1573 (doi:10.1016/j.cub.2007.07.063)

- 78. Eimer M. 2000. Event-related brain potentials distinguish processing stages involved in face perception and recognition. Clin. Neurophysiol. 111, 694–705 (doi:10.1016/S1388-2457(99)00285-0)

- 79. Bolognini N., Ro T. 2010. Transcranial magnetic stimulation: disrupting neural activity to alter and assess brain function. J. Neurosci. 30, 9647–9650 (doi:10.1523/JNEUROSCI.1990-10.2010)

- 80. Pascual-Leone A., Bartres-Faz D., Keenan J. P. 1999. Transcranial magnetic stimulation: studying the brain–behaviour relationship by induction of ‘virtual lesions’. Phil. Trans. R. Soc. Lond. B 354, 1229–1238 (doi:10.1098/rstb.1999.0476)

- 81. Pascual-Leone A., Walsh V., Rothwell J. 2000. Transcranial magnetic stimulation in cognitive neuroscience: virtual lesion, chronometry, and functional connectivity. Curr. Opin. Neurobiol. 10, 232–237 (doi:10.1016/S0959-4388(00)00081-7)

- 82. Walsh V., Cowey A. 2000. Transcranial magnetic stimulation and cognitive neuroscience. Nat. Rev. Neurosci. 1, 73–79 (doi:10.1038/35036239)

- 83. Walsh V., Rushworth M. 1999. A primer of magnetic stimulation as a tool for neuropsychology. Neuropsychologia 37, 125–135

- 84. Ziemann U. 2010. TMS in cognitive neuroscience: virtual lesion and beyond. Cortex 46, 124–127 (doi:10.1016/j.cortex.2009.02.020)

- 85. Robertson E. M., Theoret H., Pascual-Leone A. 2003. Studies in cognition: the problems solved and created by transcranial magnetic stimulation. J. Cogn. Neurosci. 15, 948–960 (doi:10.1162/089892903770007344)

- 86. Pitcher D., Garrido L., Walsh V., Duchaine B. C. 2008. Transcranial magnetic stimulation disrupts the perception and embodiment of facial expressions. J. Neurosci. 28, 8929–8933 (doi:10.1523/JNEUROSCI.1450-08.2008)

- 87. Sacco P., Turner D., Rothwell J., Thickbroom G. 2009. Corticomotor responses to triple-pulse transcranial magnetic stimulation: effects of interstimulus interval and stimulus intensity. Brain Stimul. 2, 36–40 (doi:10.1016/j.brs.2008.06.255)

- 88. Silvanto J., Lavie N., Walsh V. 2005. Double dissociation of V1 and V5/MT activity in visual awareness. Cereb. Cortex 15, 1736–1741 (doi:10.1093/cercor/bhi050)

- 89. Corthout E., Uttl B., Ziemann U., Cowey A., Hallett M. 1999. Two periods of processing in the (circum)striate visual cortex as revealed by transcranial magnetic stimulation. Neuropsychologia 37, 137–145 (doi:10.1016/S0028-3932(98)00088-8)

- 90. Ellison A., Lane A. R., Schenk T. 2007. The interaction of brain regions during visual search processing as revealed by transcranial magnetic stimulation. Cereb. Cortex 17, 2579–2584 (doi:10.1093/cercor/bhl165)

- 91. Pascual-Leone A., Walsh V. 2001. Fast backprojections from the motion to the primary visual area necessary for visual awareness. Science 292, 510–512 (doi:10.1126/science.1057099)

- 92. Wagner T., Rushmore J., Eden U., Valero-Cabre A. 2009. Biophysical foundations underlying TMS: setting the stage for an effective use of neurostimulation in the cognitive neurosciences. Cortex 45, 1025–1034 (doi:10.1016/j.cortex.2008.10.002)

- 93. Nakamura H., Kitagawa H., Kawaguchi Y., Tsuji H. 1997. Intracortical facilitation and inhibition after transcranial magnetic stimulation in conscious humans. J. Physiol. 498, 817–823

- 94. Pitcher D., Charles L., Devlin J. T., Walsh V., Duchaine B. 2009. Triple dissociation of faces, bodies, and objects in extrastriate cortex. Curr. Biol. 19, 319–324 (doi:10.1016/j.cub.2009.01.007)

- 95. Pourtois G., Sander D., Andres M., Grandjean D., Reveret L., Olivier E., Vuilleumier P. 2004. Dissociable roles of the human somatosensory and superior temporal cortices for processing social face signals. Eur. J. Neurosci. 20, 3507–3515 (doi:10.1111/j.1460-9568.2004.03794.x)

- 96. Adolphs R., Damasio H., Tranel D., Cooper G., Damasio A. R. 2000. A role for somatosensory cortices in the visual recognition of emotion as revealed by three-dimensional lesion mapping. J. Neurosci. 20, 2683–2690

- 97. Winston J. S., O'Doherty J., Dolan R. J. 2003. Common and distinct neural responses during direct and incidental processing of multiple facial emotions. NeuroImage 20, 84–97 (doi:10.1016/S1053-8119(03)00303-3)

- 98. Adolphs R. 2006. How do we know the minds of others? Domain-specificity, simulation, and enactive social cognition. Brain Res. 1079, 25–35 (doi:10.1016/j.brainres.2005.12.127)

- 99. Heberlein A. S., Atkinson A. P. 2009. Neuroscientific evidence for simulation and shared substrates in emotion recognition: beyond faces. Emotion Rev. 1, 162–177 (doi:10.1177/1754073908100441)

- 100. Niedenthal P. M. 2007. Embodying emotion. Science 316, 1002–1005 (doi:10.1126/science.1136930)

- 101. Dzhelyova M. P., Ellison A., Atkinson A. P. In press. Event-related repetitive TMS reveals distinct, critical roles for right OFA and bilateral posterior STS in judging the trustworthiness and sex of faces. J. Cogn. Neurosci. (doi:10.1162/jocn.2011.21604)

- 102. Brown E., Perrett D. I. 1993. What gives a face its gender? Perception 22, 829–840 (doi:10.1068/p220829)

- 103. Bruce V., Burton A. M., Hanna E., Healey P., Mason O., Coombes A., Fright R., Linney A. 1993. Sex discrimination: how do we tell the difference between male and female faces? Perception 22, 131–152 (doi:10.1068/p220131)

- 104. Burton A. M., Bruce V., Dench N. 1993. What's the difference between men and women? Evidence from facial measurement. Perception 22, 153–176 (doi:10.1068/p220153)

- 105. Calder A. J., Burton A. M., Miller P., Young A. W., Akamatsu S. 2001. A principal component analysis of facial expressions. Vis. Res. 41, 1179–1208 (doi:10.1016/S0042-6989(01)00002-5)

- 106. Campbell R., Wallace S., Benson P. J. 1996. Real men don't look down: direction of gaze affects sex decisions on faces. Vis. Cogn. 3, 393–412 (doi:10.1080/135062896395643)