Abstract

Objective

To understand mammographers’ perception of individual women’s breast cancer risk.

Materials and Methods

Radiologists interpreting screening mammography examinations completed a mailed survey consisting of questions pertaining to demographic and clinical practice characteristics, as well as 2 vignettes describing different risk profiles of women. Respondents were asked to estimate the probability of a breast cancer diagnosis in the next 5 years for each vignette. Vignette responses were plotted against mean recall rates in actual clinical practice.

Results

The survey was returned by 77% of eligible radiologists. Ninety-three percent of radiologists overestimated risk in the vignette involving a 70-year-old woman; 96% overestimated risk in the vignette involving a 41-year-old woman. Radiologists who more accurately estimated breast cancer risk were younger, worked full-time, were affiliated with an academic medical center, had fellowship training, had fewer than 10 years experience interpreting mammograms, and worked more than 40% of the time in breast imaging. However, only age was statistically significant. No association was found between radiologists’ risk estimate and their recall rate.

Conclusion

U.S. radiologists have a heightened perception of breast cancer risk.

Keywords: perception, risk, pretest probability

Clinicians routinely use information about patient risk when making medical decisions.1–4 This risk assessment is based on their understanding of disease prevalence and the impact of patient history. Bayes’ theorem, used intuitively by many clinicians, combines sensitivity, specificity, and pretest probability to determine the positive predictive value, that is, the probability that a patient has a particular disease given a positive test for that disease.5,6 As a result, a clinician’s estimate of pretest probability for an individual patient can have a significant effect on the interpretation and use of tests.
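To make the role of pretest probability concrete, the following minimal Python sketch applies Bayes’ theorem to a positive test result. The function name and the sensitivity and specificity values (0.85 and 0.90) are illustrative assumptions, not figures from this study; the two pretest probabilities borrow the 1.4% Gail model estimate for vignette A and one of the mean responses reported in Table 2 (8.3%) purely as example inputs.

```python
def positive_predictive_value(sensitivity, specificity, pretest_probability):
    """Bayes' theorem: probability of disease given a positive test."""
    true_positive = sensitivity * pretest_probability
    false_positive = (1.0 - specificity) * (1.0 - pretest_probability)
    return true_positive / (true_positive + false_positive)


# Hypothetical test characteristics, chosen only for illustration.
SENSITIVITY = 0.85
SPECIFICITY = 0.90

# Same test, two different pretest probabilities (as fractions, not percent).
ppv_with_model_estimate = positive_predictive_value(SENSITIVITY, SPECIFICITY, 0.014)
ppv_with_inflated_estimate = positive_predictive_value(SENSITIVITY, SPECIFICITY, 0.083)

print(f"PPV at 1.4% pretest probability: {ppv_with_model_estimate:.3f}")
print(f"PPV at 8.3% pretest probability: {ppv_with_inflated_estimate:.3f}")
```

With test characteristics held fixed, a higher assumed pretest probability yields a higher positive predictive value, which is why an inflated risk estimate could in principle change how a positive finding is acted on.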

Unfortunately, clinicians may not always understand specific quantitative information.7–10 Breast cancer risk is one area where data about risk factors are readily available; however, we found no published studies on how radiologists assess the risk of individual women undergoing breast cancer screening. Published studies indicate US women generally have a heightened perception of their own risk of breast cancer.11 Furthermore, it is commonly stated that women have a 1 in 8 chance (12.5%) of developing invasive breast cancer over their lifetime.12 This statistic may be somewhat misleading, however, as it is not an estimate for a short time interval (1–5 years) but a lifetime risk, and it is a risk of a diagnosis, not a death.

US radiologists have a higher recall rate than do their counterparts in other countries.13,14 Thus it is reasonable to ask if heightened perception of risk exists among US radiologists, and whether this heightened perception is associated with higher recall rates among radiologists. In this study, we describe radiologists’ perception of breast cancer risk at the level of an individual woman. We then use a unique link with actual clinical practice in the community to see if perception of breast cancer risk is associated with recall rate (the percentage of screening mammograms interpreted as abnormal and requiring additional evaluation).

METHODS

Study Population

This community-based, multicenter observational study utilizes a unique collaboration among 3 geographically and clinically distinct breast cancer screening programs: a nonprofit health plan in the Pacific Northwest, Group Health Cooperative Breast Cancer Surveillance System;15,16 the New Hampshire Mammography Network,17 which captures >85% of women having mammography in the state of New Hampshire; and the Colorado Mammography Program, which captures approximately 50% of the women in the 6-county metropolitan area of Denver, CO. These 3 screening programs are members of the federally funded Breast Cancer Surveillance Consortium.18 The study was approved by the institutional review boards of the University of Washington School of Medicine, Group Health Cooperative of Puget Sound, Dartmouth College, and the Cooper Institute.

Data Collection

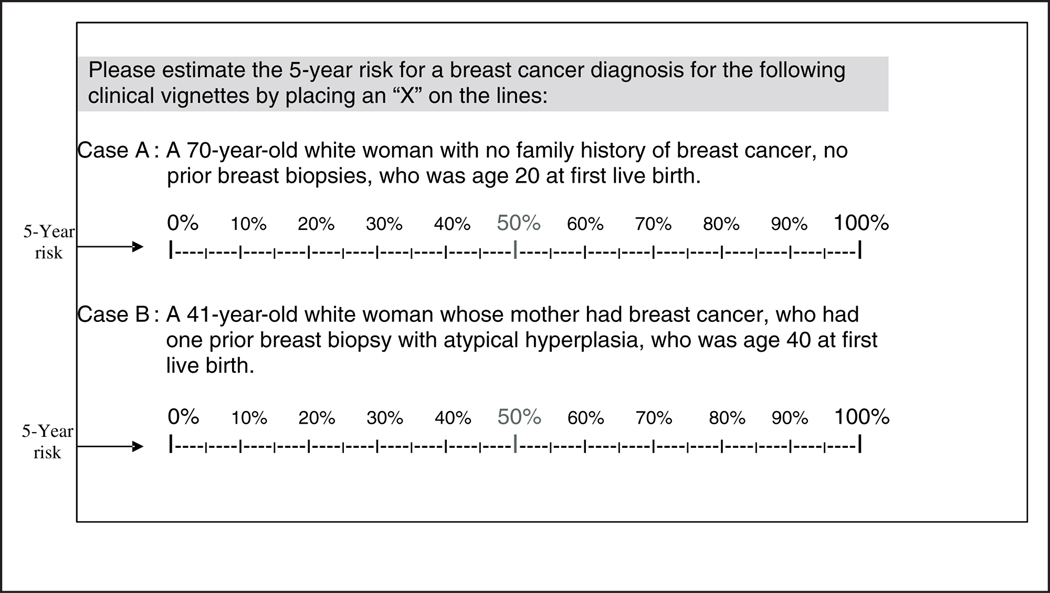

The self-administered survey was developed among national experts and extensively pilot-tested with community radiologists not in the study cohort. Survey questions determined basic demographics, mammography practice environment, and extent and experience working in mammography interpretation. Survey questions presented clinical vignettes involving 2 different risk profiles of women and asked respondents to estimate the 5-year risk of a breast cancer diagnosis using a visual analog scale for each (Figure 1). The respondents were asked to mark their answers with an X on a linear, 100-point scale, with values ranging from 0% to 100%, with 50% written in red. The words 5-year risk were written in both the instructions and to the immediate left of each scale.

Figure 1.

Survey questions asking radiologists, who are actively interpreting mammography examinations, to estimate the 5-year risk of breast cancer diagnosis for two different risk profiles of women.

Eligible radiologists included those interpreting mammograms at 1 of the 3 breast cancer screening programs between 1 January 2001 and 31 December 2001. Surveys were sent with informed consent materials and returned via regular mail. To enhance our response rate, we resent the survey, followed up with a personal telephone call, or both, for nonresponders after 3 weeks (a total of 3 attempts). Survey mailings and follow-up calls were administered directly from each of the 3 mammography registries.

Completed survey data were double entered into a relational database at each site by 2 different individuals blinded to the other’s entry. The entry program included data checks at the time of data entry, and any discrepancies between the 2 data entries were resolved by the respective site coordinator. Standardized methods were developed to interpret markings on the vignette analog scale and translate them into quantitative data. For example, the value for the X response was determined to be the point whereby a vertical line, drawn through the center of the X, perpendicularly crossed the scale. If a response was marked with a circle, the value was determined to be the point whereby a vertical line, drawn through the center of the circle, perpendicularly crossed the scale (complete methods for interpreting responder’s intent are available upon request). All data were sent password-protected via FTP (file transfer protocol) to the central analytic center in Seattle, WA.

Survey data were abstracted using standardized methods, and the abstractors were blinded to linked Breast Cancer Surveillance Consortium data. Data were merged with computerized files available from the 3 registries that included interpretations and recommendations for each screening mammogram performed between 1 January 1996 and 31 December 2001. Specific variables included date, BI-RADS® interpretation and recommendation categories (Table 1),19 patient clinical characteristics, and breast pathology. New identification codes were created prior to data transfer from the local sites for each radiologist to preserve confidentiality.

Table 1.

Adaptation of BI-RADS® Classification System

| Category | Comments |
|---|---|
| BI-RADS 0 | Incomplete study, additional imaging needed |
| BI-RADS 1 | Negative |
| BI-RADS 2 | Benign finding(s): Examination is normal, but one or more clearly benign findings are identified that require no specific follow-up |
| BI-RADS 3 | Probably benign finding: Initial short-interval follow-up is suggested. Probability of malignancy or other life-threatening illness should be less than 2% for findings in this category. Initial follow-up within 6 months is suggested to ensure stability. |
| BI-RADS 4 | Suspicious abnormality: Biopsy or further immediate evaluation is recommended. This includes abnormalities with likelihood of cancer or other life-threatening illness greater than described for category 3. Immediate evaluation recommendations could include further imaging, laboratory investigation, or physical examination. |
| BI-RADS 5 | Highly suggestive of malignancy or life-threatening illness: Appropriate action should be taken. Appropriate action might include surgery, further imaging, or other diagnostic procedures. |
| BI-RADS 6 | Known biopsy-proven malignancy |

Analytic Definitions

Responses to the 2 vignettes were compared to the Gail risk prediction model estimate, as calculated by the National Cancer Institute Web site.20 Age at menarche was not specified in the vignettes to keep the survey brief; therefore, an assumed mean age at menarche of 12 years21 was used in both calculations.

Standard definitions of recall rate developed by the Breast Cancer Surveillance Consortium22 using BI-RADS19 categories were used. The recall rate was defined as the number of exams interpreted as positive divided by the total number of exams. Screening mammograms obtained on women 40 years and older were included in the analysis. If additional nonscreening imaging was done on the same day as the screening mammogram, the original BI-RADS assessment was recoded to 0 (needs additional assessment). Screening mammography examinations were considered positive if interpreted as BI-RADS category 0, 4, or 5, or as category 3 with a recommendation for immediate workup. If a woman had different assessments for each breast, the higher assessment level was used according to the following hierarchy (1 < 2 < 3 < 0 < 4 < 5).
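The recall-rate definition above can be sketched in a few lines of Python. This is only an illustration of the stated rules, not the consortium’s actual code: the function names, the tuple layout for an exam, and the simplification that the immediate-workup recommendation is recorded once per exam (rather than per breast) are all assumptions. The age restriction (women 40 years and older) is assumed to have been applied before these functions are called.

```python
# Assessment hierarchy stated in the text: 1 < 2 < 3 < 0 < 4 < 5.
# Category 6 is not part of the stated hierarchy and is not handled here.
HIERARCHY_RANK = {1: 0, 2: 1, 3: 2, 0: 3, 4: 4, 5: 5}


def exam_assessment(left_breast, right_breast):
    """Return the higher of the two per-breast assessments under the hierarchy."""
    return max(left_breast, right_breast, key=HIERARCHY_RANK.__getitem__)


def is_positive(category, immediate_workup=False, same_day_imaging=False):
    """Apply the positivity rule: 0, 4, 5, or 3 with immediate workup."""
    if same_day_imaging:
        category = 0  # recoded to "needs additional assessment"
    if category in (0, 4, 5):
        return True
    return category == 3 and immediate_workup


def recall_rate(exams):
    """exams: iterable of (left, right, immediate_workup, same_day_imaging)."""
    exams = list(exams)
    if not exams:
        raise ValueError("recall rate is undefined for zero exams")
    positives = sum(
        is_positive(exam_assessment(left, right), workup, same_day)
        for left, right, workup, same_day in exams
    )
    return positives / len(exams)


# Hypothetical exams for illustration only.
example_exams = [
    (1, 1, False, False),  # negative
    (0, 2, False, False),  # positive: category 0 outranks 2
    (3, 1, True, False),   # positive: category 3 with immediate workup
    (2, 2, False, False),  # negative
]
print(recall_rate(example_exams))
```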

Analysis

Radiologists who responded to the vignettes and had 480 or more screening mammograms (the minimum number of mammograms required by MQSA)23 in the Surveillance Consortium data were included in the analysis (N = 123). Univariate analyses examined the relationship between radiologist characteristics (demographics, practice type, breast imaging experience) and their responses to each vignette. An analysis of variance was used to compare vignette scores across all categories for each survey item. When a survey item had more than 2 categories, Tukey’s correction for multiple comparisons was used to keep the overall α = 0.05 for all pairwise comparisons. Mean recall rates and 95% confidence intervals were computed for each physician. A univariate analysis also examined the relationship between radiologist mean recall rate and their response to vignette A and vignette B. These relationships were also examined after adjusting for potential confounders. For both models, recall rate was weighted by the number of screening mammography examinations performed by each physician to ensure that each mammogram would have the same weight. A radiologist with fewer mammograms may provide unstable performance measures, and thus an unweighted analysis at the radiologist level may not adequately characterize the overall effect. Furthermore, a Pearson correlation coefficient was generated to further assess the relationship between vignette score and recall rate. For all analyses, 2-sided tests were done and a P-value < 0.05 was considered to be statistically significant.
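As a rough illustration of the weighting idea, the sketch below fits a weighted least-squares line of recall rate on vignette response, with each radiologist weighted by exam volume, and computes an unweighted Pearson correlation. The text does not name the exact model or software used, so weighted least squares is an assumption here, and the function names and the four data points are hypothetical.

```python
import math


def weighted_linear_fit(x, y, weights):
    """Weighted least-squares slope and intercept for y regressed on x."""
    total_weight = sum(weights)
    mean_x = sum(w * xi for w, xi in zip(weights, x)) / total_weight
    mean_y = sum(w * yi for w, yi in zip(weights, y)) / total_weight
    sxy = sum(w * (xi - mean_x) * (yi - mean_y) for w, xi, yi in zip(weights, x, y))
    sxx = sum(w * (xi - mean_x) ** 2 for w, xi in zip(weights, x))
    slope = sxy / sxx
    return slope, mean_y - slope * mean_x


def pearson_r(x, y):
    """Unweighted Pearson correlation coefficient."""
    n = len(x)
    mean_x, mean_y = sum(x) / n, sum(y) / n
    sxy = sum((xi - mean_x) * (yi - mean_y) for xi, yi in zip(x, y))
    sxx = sum((xi - mean_x) ** 2 for xi in x)
    syy = sum((yi - mean_y) ** 2 for yi in y)
    return sxy / math.sqrt(sxx * syy)


# Hypothetical radiologists: vignette response (%), recall rate (%), exam count.
vignette_response = [10.0, 25.0, 40.0, 60.0]
recall_rate_pct = [12.0, 10.5, 9.0, 8.0]
screening_exams = [480, 1500, 3200, 900]

slope, intercept = weighted_linear_fit(vignette_response, recall_rate_pct, screening_exams)
r = pearson_r(vignette_response, recall_rate_pct)
print(f"weighted slope = {slope:.3f}, unweighted r = {r:.3f}")
```

Weighting by exam count lets high-volume radiologists, whose recall rates are more stable, contribute more to the fitted slope than low-volume readers.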

RESULTS

Of 181 eligible radiologists, 139 (77%) returned the survey with full consent, 2 (1%) returned without consent, 3 (2%) declined to complete the survey, and 37 (20%) did not respond. We found no significant differences by gender, year of medical school graduation, recall rate, sensitivity, or specificity between the responders and nonresponders.

The radiologists interpreted mammograms at 81 facilities in 3 states. Physician characteristics are shown in Table 2. The majority of the radiologists were male (77%), worked full-time (74%), had no affiliation with an academic medical center (84%), had more than 10 years experience interpreting mammograms (77%), and spent less than 40% of the time working in breast imaging (88%).

Table 2.

Radiologist Demographic and Clinical Practice Characteristics and Associated Perception of Breast Cancer Risk

| Characteristic | N (total = 123) | (%) | Vignette A (70-year-old woman; estimated risk from Gail model = 1.4%): mean response | Vignette A: SD | Vignette B (41-year-old woman; estimated risk from Gail model = 3.4%): mean response | Vignette B: SD |
|---|---|---|---|---|---|---|
| Demographics | | | | | | |
| Gender (1 missing) | | | | | | |
| Male | 95 | (77) | 8.3 | 9.4 | 22.4 | 18.6 |
| Female | 28 | (23) | 8.5 | 9.1 | 27.3 | 17.4 |
| Age (1 missing) | | | | | | |
| 34–44 | 36 | (29) | 6.5 | 4.4 | 19.8 | 15.2 |
| 45–54 | 48 | (39) | 10.4 | 12.6 | 21.5 | 18.9 |
| 55+ | 39 | (32) | 7.6 | 7.4 | 29.5 | 19.3 |
| History of breast cancer | | | | | | |
| None | 20 | (16) | 7.1 | 7.0 | 29.5 | 24.5 |
| Colleague or friend | 58 | (47) | 8.3 | 11.3 | 21.0 | 17.4 |
| Self/spouse or partner/relative | 45 | (37) | 9.0 | 7.3 | 24.1 | 16.2 |
| Practice | | | | | | |
| Work full-time (1 missing) | | | | | | |
| Yes | 91 | (74) | 8.1 | 9.0 | 22.3 | 17.7 |
| No | 32 | (26) | 9.3 | 10.4 | 26.9 | 20.1 |
| Academic affiliation (1 missing) | | | | | | |
| Yes, primary/adjunct/affiliate | 20 | (16) | 5.4 | 6.8 | 21.3 | 21.3 |
| No | 103 | (84) | 9.0 | 9.6 | 24.0 | 17.9 |
| General experience in breast imaging | | | | | | |
| Fellowship training in breast imaging (1 missing) | | | | | | |
| Yes | 6 | (5) | 5.9 | 4.5 | 14.5 | 13.9 |
| No | 117 | (95) | 8.5 | 9.5 | 24.0 | 18.5 |
| No. of years interpreting mammograms (1 missing) | | | | | | |
| <1 year | — | — | — | — | — | — |
| 1–4 years | — | — | — | — | — | — |
| 5–9 years | 28 | (23) | 5.8 | 4.0 | 18.5 | 16.0 |
| 10–19 years | 57 | (46) | 9.9 | 11.8 | 23.1 | 18.8 |
| ≥20 years | 38 | (31) | 8.0 | 7.3 | 27.8 | 18.9 |
| Percentage time working in breast imaging (1 missing) | | | | | | |
| <20% | 55 | (45) | 7.1 | 5.8 | 22.8 | 16.0 |
| 20%–39% | 52 | (43) | 10.0 | 12.2 | 22.6 | 18.8 |
| 40%+ | 15 | (12) | 5.7 | 4.8 | 26.9 | 23.6 |
| Work reported in 2001 | | | | | | |
| No. of mammograms interpreted (1 missing) | | | | | | |
| < 1000 | 31 | (25) | 7.2 | 4.6 | 24.0 | 12.5 |
| 1001–2000 | 45 | (37) | 8.4 | 7.3 | 24.1 | 18.5 |
| > 2000 | 46 | (38) | 9.2 | 12.9 | 22.6 | 21.8 |
| Percentage of mammograms that were screening (1 missing) | | | | | | |
| 0–50 | 10 | (8) | 7.8 | 5.7 | 24.0 | 16.5 |
| 51–75 | 52 | (43) | 8.1 | 8.1 | 23.5 | 18.1 |
| 76–100 | 60 | (49) | 8.4 | 10.4 | 22.8 | 18.6 |

Note: The P value for the association between age and vignette B is 0.0429.

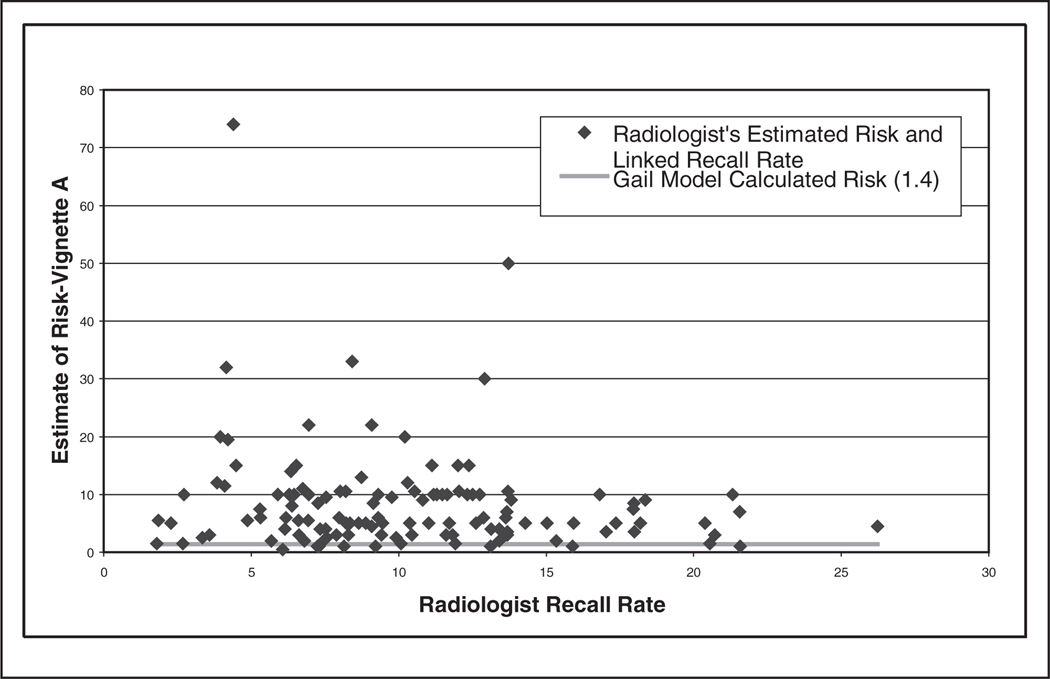

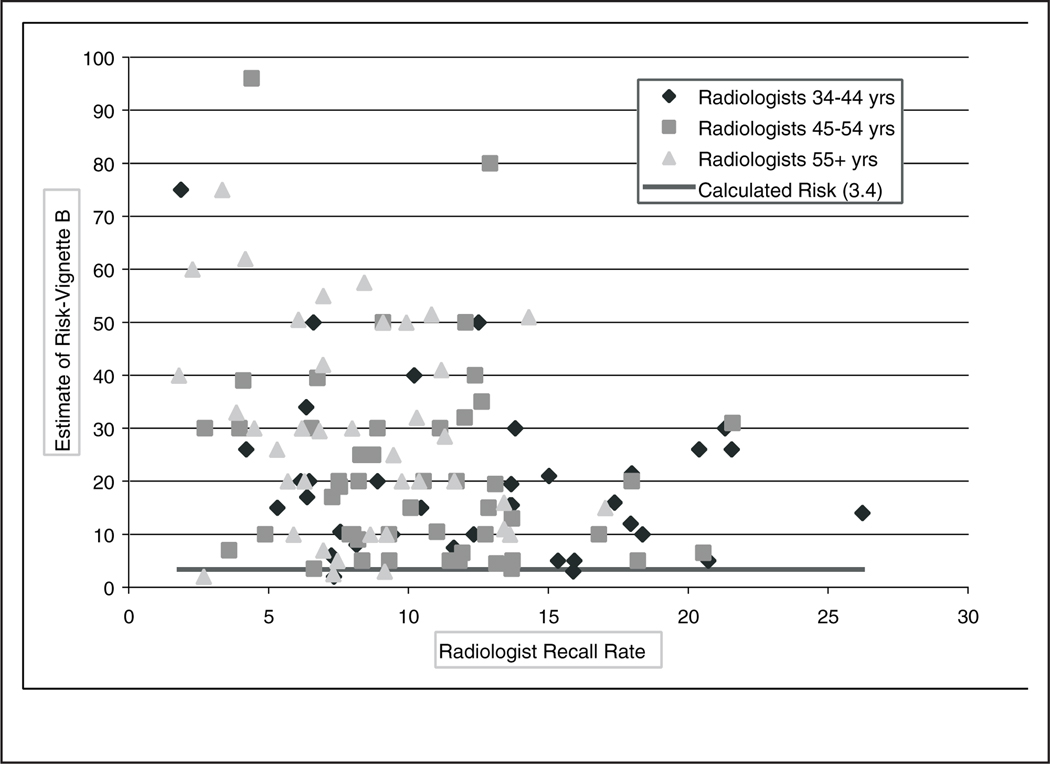

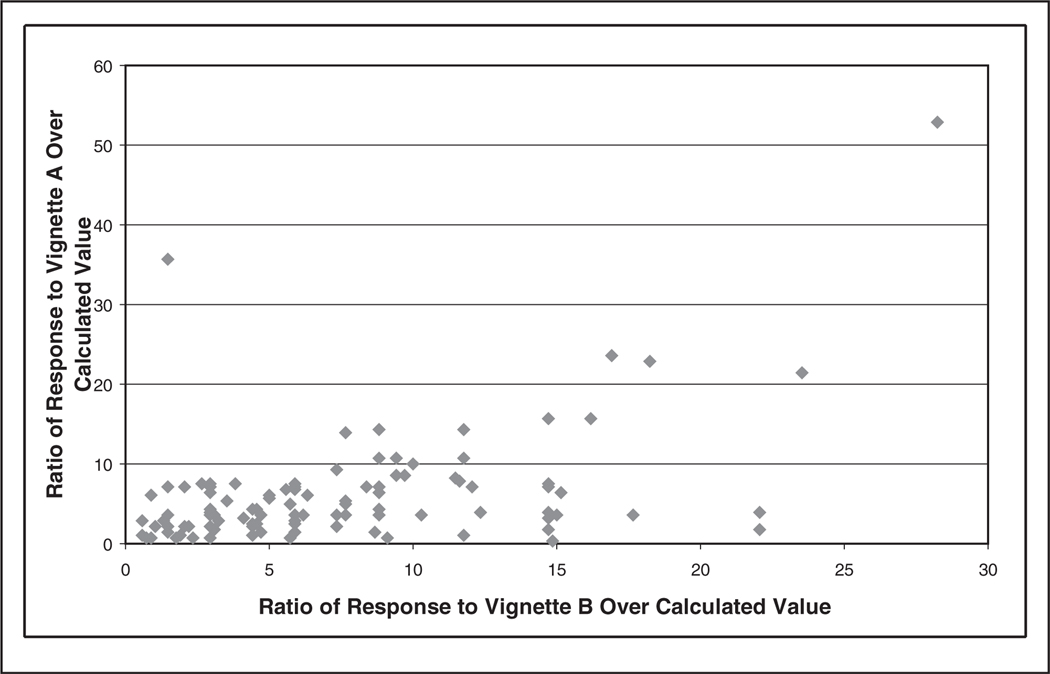

Radiologists’ perception of the women’s 5-year risk ranged from 0.5% to 74% for vignette A (Gail model estimate = 1.4%) and 2% to 96% for vignette B (Gail model estimate = 3.4%). Ninety-three percent of the radiologists in our study overestimated the 5-year risk of breast cancer for vignette A, and 96% overestimated for vignette B (Figures 2 and 3). Figure 4 plots, for each radiologist, the ratio of the vignette A response to the Gail model estimate against the corresponding ratio for vignette B. When comparing individual responses for both vignettes, radiologists who overestimated on vignette A also tended to overestimate on vignette B (Figure 4, r = 0.483, P < 0.001); however, radiologists tended to overestimate more on vignette B. Radiologists who were younger (vignette B only), worked full time, were affiliated with an academic medical center, had fellowship training, had fewer than 10 years’ experience interpreting mammograms, and worked greater than 40% of the time in breast imaging (vignette A only) tended to provide 5-year breast cancer risk estimates closer to the Gail risk model results (Table 2). Statistical significance (P < 0.05) was obtained only for the age variable.

Figure 2.

Radiologist’s 5-year estimate of breast cancer diagnosis for vignette A (70-year-old woman with value from Gail model = 1.4%) linked to the radiologist’s mean recall rate.

Figure 3.

Radiologist’s 5-year estimate of breast cancer diagnosis for vignette B (41-year-old woman with value from Gail model = 3.4%) linked to the radiologist’s mean recall rate.

Figure 4.

Individual response to vignette A/calculated value of vignette A using the Gail model plotted against the individual response to vignette B/calculated value of B using the Gail model.
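The quantity on each axis of Figure 4 is a simple ratio of a radiologist’s response to the Gail model estimate for that vignette. A minimal sketch follows; the function name is hypothetical, the Gail estimates are the 5-year values reported in this article, and the example inputs are the upper ends of the response ranges reported in the Results.

```python
# Five-year Gail model estimates reported in this article, in percent.
GAIL_FIVE_YEAR_PCT = {"A": 1.4, "B": 3.4}


def overestimation_ratio(response_pct, vignette):
    """Ratio of a radiologist's response to the Gail estimate (1.0 = exact match)."""
    return response_pct / GAIL_FIVE_YEAR_PCT[vignette]


# Highest responses reported in the Results: 74% (vignette A) and 96% (vignette B).
highest_ratio_a = overestimation_ratio(74.0, "A")
highest_ratio_b = overestimation_ratio(96.0, "B")
print(f"vignette A: {highest_ratio_a:.1f}x, vignette B: {highest_ratio_b:.1f}x")
```

A ratio above 1.0 indicates overestimation relative to the model, which makes responses to two vignettes with different baseline risks comparable on one scale.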

Responses to vignettes A and B linked with radiologists’ recall rate (ordered from lowest to highest) from their community practices are also shown in Figures 2 and 3, respectively. For vignette B, the majority (13/19) of radiologists who estimated >40% risk were in the oldest age category. Furthermore, the majority (12/19) of those with a recall rate >15% were in the youngest age category. No positive association was found between vignette response and recall rate; indeed, the correlation coefficients and slope estimates were negative (Table 3). Although statistical significance was obtained for vignette B, no clinically significant difference was noted. Similar results were obtained in an analysis in which recall rate was not weighted by the number of screening mammography examinations performed by each physician (results not shown).

Table 3.

Models Examining the Association between Radiologist Responses to Vignette Questions and Mean Recall Rate in Screening Mammography

| Model | Estimate | SE | P value | Pearson Correlation Coefficient† (P value) |
|---|---|---|---|---|
| Vignette A (unadjusted) | −0.03 | 0.03 | 0.3329 | −0.147 (0.104) |
| Vignette B (unadjusted) | −0.06 | 0.02 | 0.0005 | −0.277 (0.002) |
| Vignette B (adjusted for age of radiologist) | −0.05 | 0.02 | 0.0046 | |
| Age group (ref = 34–44) | | | | |
| 45–54 | −1.37 | 0.87 | 0.1171 | |
| 55+ | −3.02 | 0.93 | 0.0014 | |

Note: Radiologist’s recall rate was weighted by the number of screening mammography examinations performed.

† The correlation coefficient was not weighted by the number of screening mammography examinations.

DISCUSSION

Radiologists in this study, who were all actively interpreting screening mammography in community settings, significantly overestimated the 5-year risk of breast cancer diagnosis for both clinical vignettes. A more accurate estimation of breast cancer risk was seen in radiologists who were younger, were associated with academic medical centers, worked full time, had fellowship training in breast imaging, had interpreted mammograms for fewer years, and worked greater than 40% of the time in breast imaging. However, only radiologist age was statistically significant.

We hypothesized that radiologists who had high estimates of breast cancer risk would also have a higher recall rate. Interestingly, our results do not directly support this hypothesis. It is nonetheless possible that the heightened estimate of pretest probability is associated with the high recall rates noted in the United States. Radiologists in the United States have higher recall rates and false-positive rates compared with radiologists from other countries.13,14 It is also possible that radiologists do not use pretest probability to influence the decision-making process when interpreting screening mammograms. Due to time constraints or perhaps due, in part, to the isolation from patients when interpreting screening mammography examinations (batch reading), radiologists may not view mammographic films in the context of a specific woman. Radiologists need to be adept at recognizing patterns on film and may feel that it is unnecessary to use quantitative notions of risk when interpreting screening results. Radiologists may pay more attention to a woman’s individual risk factors when interpreting diagnostic mammograms and would be more inclined to use Bayes’ theorem when deciding whether to recall a woman for further testing. It would therefore be interesting to perform this study with linkage to diagnostic mammography.

This study provides a unique link to practice-based behavior and is composed of radiologists working in a community setting. To the authors’ knowledge, no prior data of this type have been reported. One prior publication in a test situation (not community practice) found that radiologists are influenced by the clinical history when interpreting mammograms.24 Also, the survey response rate was 77%, which is excellent compared with other national studies.25

No study is without limitations. Surveyed radiologists were not a random sample of all US radiologists but an inclusive sample of those interpreting mammograms in 3 distinct geographic locations. It is therefore possible that these radiologists may not be completely representative of US mammography radiologists. However, these radiologists practice in facilities that participate in these breast cancer screening registries, which makes them a less selective cohort than in many surveys. Although age at menarche was not included in the vignette questions to keep the survey brief, its impact as a risk factor on the Gail model is minimal. No adjustments were made for possible differences in characteristics of patients seen by these radiologists. Furthermore, asking responders to place an X on the graphic scale, instead of providing a numeric answer, may have led to an inaccurate measure of the physician’s intent. However, it seems highly unlikely that such errors would always be biased upward.

Even though the words 5-year risk were written in the directions, and also on each response line, radiologists still may not have realized that the question was asking for 5-year risk and potentially provided a risk estimate based on another time interval (e.g., lifetime risk). However, if this were the case, then when comparing survey responses to lifetime risk, 66% of radiologists still provided an estimate greater than lifetime risk for the vignette of the 70-year-old woman (lifetime risk estimated using Gail model = 4.5%) and 19% provided an estimate greater than lifetime risk for the vignette of the 41-year-old woman (lifetime risk estimated using Gail model = 39.4%).

In conclusion, radiologists in our study estimated an individual woman’s 5-year breast cancer risk to be significantly higher than the risk estimated from the Gail model. When linking the radiologists’ estimate of breast cancer risk with their corresponding recall rate in practice, no positive correlation between the 2 was noted. This study, therefore, suggests that radiologists may not consider patient risk factors when determining whether to recall an individual woman for further testing after a screening mammogram. It is also possible that the inflated estimate may be, in part, leading to the higher recall rate in the United States compared with other countries. It also suggests that clinicians, like patients, have a challenging time understanding numeric concepts. Because of the dearth of literature on this subject and our relatively surprising results, further studies should be done to understand how radiologists interpret mammography examinations and whether education about the true risk of breast cancer may help to decrease false-positive recall rates.

ACKNOWLEDGMENT

This project was supported by grants from the Agency for Healthcare Research and Quality and the National Cancer Institute (Grant # HS10591 and CA1040699) and grants from the National Cancer Institute (U01 CA63731 GHC Surveillance, 5 U01 CA 63736-09 Colorado Surveillance, 1 U01 CA86082-01 New Hampshire Surveillance). This work was primarily conducted while Dr. Taplin was at Group Health Cooperative, though final writing was done while he was at the National Cancer Institute. Although the NCI funded this work, all opinions are those of the coauthors and do not imply agreement or endorsement by the federal government or the National Cancer Institute. We appreciate the dedication of participating radiologists and project support staff.

REFERENCES

- 1. Deber RB, Blidner IN, Carr LM, Barnsley JM. The impact of selected patient characteristics on practitioners’ treatment recommendations for end-stage renal disease. Med Care. 1985;23(2):95–109. doi: 10.1097/00005650-198502000-00001.

- 2. McKinlay JB, Burns RB, Durante R, et al. Patient, physician and presentational influences on clinical decision making for breast cancer: results from a factorial experiment. J Eval Clin Pract. 1997;3(1):23–57. doi: 10.1111/j.1365-2753.1997.tb00067.x.

- 3. Bogart LM, Catz SL, Kelly JA, Benotsch EG. Factors influencing physicians’ judgments of adherence and treatment decisions for patients with HIV disease. Med Decis Making. 2001;21(1):28–36. doi: 10.1177/0272989X0102100104.

- 4. Shetty V, Atchison K, Der-Martirosian C, Wang J, Belin TR. Determinants of surgical decisions about mandible fractures. J Oral Maxillofac Surg. 2003;61(7):808–813. doi: 10.1016/s0278-2391(03)00156-3.

- 5. Weiss NS. Monographs in Epidemiology and Biostatistics. 2nd ed. v. 27. New York: Oxford University Press; 1996. Clinical Epidemiology: The Study of the Outcome of Illness; p. 163. viii.

- 6. Jekel J, Elmore J, Katz D. Epidemiology, Biostatistics and Preventive Medicine. Philadelphia: WB Saunders; 1996.

- 7. Kong A, Barnett GO, Mosteller F, Youtz C. How medical professionals evaluate expressions of probability. N Engl J Med. 1986;315(12):740–744. doi: 10.1056/NEJM198609183151206.

- 8. Feinstein A. A bibliography of publications on observer variability. J Chron Dis. 1985;38(8):619–632. doi: 10.1016/0021-9681(85)90016-5.

- 9. Poses RM, Cebul RD, Centor RM. Evaluating physicians’ probabilistic judgments. Med Decis Making. 1988;8(4):233–240. doi: 10.1177/0272989X8800800403.

- 10. Heckerling PS, Tape TG, Wigton RS. Relation of physicians’ predicted probabilities of pneumonia to their utilities for ordering chest x-rays to detect pneumonia. Med Decis Making. 1992;12(1):32–38. doi: 10.1177/0272989X9201200106.

- 11. Woloshin S, Schwartz LM, Black WC, Welch HG. Women’s perceptions of breast cancer risk: how you ask matters. Med Decis Making. 1999;19(3):221–229. doi: 10.1177/0272989X9901900301.

- 12. American Cancer Society. Atlanta, GA: Author; 2003. Cancer Facts and Figures, 2002–2003.

- 13. Smith-Bindman R, Chu PW, Miglioretti DL, et al. Comparison of screening mammography in the United States and the United Kingdom. JAMA. 2003;290(16):2129–2137. doi: 10.1001/jama.290.16.2129.

- 14. Elmore JG, Nakano CY, Koepsell TD, Desnick LM, D’Orsi CJ, Ransohoff DF. International variation in screening mammography interpretations in community-based programs. J Natl Cancer Inst. 2003;95(18):1384–1393. doi: 10.1093/jnci/djg048.

- 15. Carter AP, Thompson RS, Bourdeau RV, Andenes J, Mustin H, Straley H. A clinically effective breast cancer screening program can be cost-effective, too. Prev Med. 1987;16(1):19–34. doi: 10.1016/0091-7435(87)90003-x.

- 16. Taplin SH, Mandelson MT, Anderman C, et al. Mammography diffusion and trends in late-stage breast cancer: evaluating outcomes in a population. Cancer Epidemiol Biomarkers Prev. 1997;6(8):625–631.

- 17. Carney P, Poplack S, Wells W, Littenberg B. Development and Design of a Population-Based Mammography Registry: The New Hampshire Mammography Network. Am J Roentgenol. 1996;167:367–372. doi: 10.2214/ajr.167.2.8686606.

- 18. Ballard-Barbash R, Taplin SH, Yankaskas BC, et al. Breast Cancer Surveillance Consortium: a national mammography screening and outcomes database. AJR Am J Roentgenol. 1997;169(4):1001–1008. doi: 10.2214/ajr.169.4.9308451.

- 19. American College of Radiology. Reston, VA: Author; 1993. Breast Imaging Reporting and Data System (BI-RADS®)

- 20. Models for Prediction of Breast Cancer Risk: Gail Model. [Accessed April 16, 2003]; Available from: http://www.nci.nih.gov/cancerinfo/pdq/genetics/Section_73.

- 21. Anderson SE, Dallal GE, Must A. Relative weight and race influence average age at menarche: results from two nationally representative surveys of US girls studied 25 years apart. Pediatrics. 2003;111(4 Pt 1):844–850. doi: 10.1542/peds.111.4.844.

- 22. Ballard-Barbash R, Taplin SH, Yankaskas BC, et al. Breast Cancer Surveillance Consortium: A national mammography screening and outcomes database. Am J Roentgenol. 1997;169:1001–1008. doi: 10.2214/ajr.169.4.9308451.

- 23. Department of Health and Human Services. F., 21 CFR Part 900 Mammography Facilities—Requirements for Accrediting Bodies and Quality Standards and Certification Requirements: Interim Rules. Federal Register. 1993;58:67557–67572.

- 24. Elmore J, Wells CK, Howard DH, et al. The impact of clinical history on mammographic interpretations. JAMA. 1997;277(1):49–52.

- 25. Asch DA, Jedrziewski MK, Christakis NA. Response rates to mail surveys published in medical journals. J Clin Epidemiol. 1997;50(10):1129–1136. doi: 10.1016/s0895-4356(97)00126-1.