Abstract

Despite the existence of speech errors, verbal communication is successful because speakers can detect (and correct) their errors. The standard theory of speech-error detection, the perceptual-loop account, posits that the comprehension system monitors production output for errors. Such a comprehension-based monitor, however, cannot explain the double dissociation between comprehension and error-detection ability observed in aphasic patients. We propose a new theory of speech-error detection that is instead based on the production process itself. The theory borrows from studies of forced-choice-response tasks the notion that error detection is accomplished by monitoring response conflict via a frontal brain structure, such as the anterior cingulate cortex. We adapt this idea to the two-step model of word production and test the model-derived predictions on a sample of aphasic patients. Our results show a strong correlation between patients’ error-detection ability and the model’s characterization of their production skills, and no significant correlation between error detection and comprehension measures, thus supporting a production-based monitor in general and the implemented conflict-based monitor in particular. The successful application of the conflict-based theory to error detection in linguistic as well as non-linguistic domains points to a domain-general monitoring system.

Keywords: Speech monitoring, Speech errors, Error detection, Aphasia, Computational models

Introduction

The fact that we survive and even thrive in spite of the error-prone nature of our cognitive systems makes the study of error processing crucial to the understanding of human cognition. Most probably, the reason that we function well despite erring often is that we have the ability to detect our own errors and counteract their effects, either by correcting them or by catching them before they cause trouble. It is the error-detection process that we target in this paper.

There are two types of errors (Reason, 1990) with distinct properties. The first type, classically labeled “mistakes”, comprises errors that result from a lack of the information necessary to arrive at the correct response (e.g. you might call a yellow-jacket a bee if you do not know the difference between the two). In contrast, some errors result from a hasty response or a momentary lapse of attention rather than from a lack of knowledge (e.g. confusing left and right when giving someone directions to your home). This second type of error, referred to as “slips”, is particularly important, because slips are common, correctable, and in principle preventable.

In the current paper, we address the question of error detection in speech by adults using their native language. These errors are thus most likely to be “slips” in Reason’s terminology, although we often use the term “error” to label them. Our goal is twofold. First, we review the problems with the existing account of error detection in speech production and propose an alternative account that is not subject to the same criticisms. To develop our model, we exploit the similarity between speech errors and slips in other tasks, which brings us to the second goal of the paper: to provide support for a central, generic error-detection system (e.g. Miltner, Braun, & Coles, 1997) by showing that a central mechanism previously shown to explain error detection in forced-choice response tasks is equally plausible and effective when applied to a natural task such as speech production.

We start by discussing the “perceptual loop” theory (Levelt, 1983, 1989), the most widely accepted account of speech monitoring. We review the evidence against this theory and introduce a new account, in which conflict (Botvinick, Braver, Carter, Barch, & Cohen, 2001; Yeung, Botvinick, & Cohen, 2004) is used as a signal for error detection. We then implement our theory in the interactive two-step model of word production (Dell & O’Seaghdha, 1991) and establish its predictions with two computational simulations. Next, we assess the explanatory power of our account against the perceptual loop theory by analyzing the error-detection performance of a group of aphasic patients. We conclude by proposing the conflict-based model as the default mechanism of error detection in language production and discuss the similarities between this account and the monitoring of motor movements, thus pointing to the possibility of a domain-general monitoring mechanism.

The perceptual loop account of monitoring

The perceptual loop theory (Levelt, 1983, 1989) is an elegant account of speech monitoring because it assumes no specialized device or mechanism for error detection. According to this theory, speakers detect errors in their speech by listening to themselves. Error detection then boils down to comprehending that the produced utterance is different from the intended one. Since humans need the comprehension system to process the speech of others anyway, using this system for self-monitoring sounds plausible and is parsimonious. Similar to detecting errors in other people’s speech by listening to them, speakers can detect errors in their own speech through an “external channel” (implying that the spoken utterance is processed by the auditory system).

There is some support for the perceptual loop theory’s claim that error detection in one’s own and in others’ speech is similar. Although normal speakers (as well as patients with Broca’s aphasia) detect more errors in others’ speech than in their own (Oomen, Postma & Kolk, 2001), the relative detectability of different error types is similar in monitoring self and others’ speech. For both semantically related errors (e.g. “dog” for “cat”) and form-related errors (e.g. “mat” for “cat”), people detect the same proportion when they monitor their own speech (when auditory feedback is not blocked by noise; Postma & Noordanus, 1996) as when they monitor the speech of others, at least under conditions where the intended meaning is known to both speaker and listener (Oomen & Postma, 2002; but see Tent & Clark, 1980). Such similarity in the pattern of error detection can be taken as evidence that a similar mechanism underlies the detection of both one’s own and others’ speech errors.

However, the external channel is not sufficient to explain all the empirical findings concerning error detection. An important finding in this regard is the timeline of detection. We illustrate this with the classic example of the corrected error “v-horizontal” (Levelt, 1989), in which production of the word “vertical” is halted as soon as its first phoneme is produced. The latency between the initiation of the erroneous utterance (the onset of “v” in “vertical”) and the halting of articulation (stopping after “v-”) is reported to be shorter than 150 ms in about 15%, and shorter than 100 ms in about 5%, of overt errors (Blackmer & Mitton, 1991). Given that at least 200 ms is needed for word recognition (Marslen-Wilson & Tyler, 1980) and on average 150–200 ms is required to halt ongoing behavior (Hartsuiker & Kolk, 2001; Logan & Cowan, 1984), such short latencies between error onset and cutoff (error-to-cutoff time) are incompatible with error detection through the external channel. Therefore, a second channel of processing, in which inner speech is monitored, has been proposed within the perceptual loop theory. Like the external channel, this “internal channel” is monitored by the comprehension system; the only difference is the level at which the speech representation is monitored. The representation is thought to be more abstract in the case of inner speech (phonological representations; Wheeldon & Levelt, 1995; Oppenheim & Dell, 2008).
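The timing argument above is simple arithmetic, sketched below in Python. The sketch assumes (our simplification, not a claim of the cited studies) that word recognition and halting run strictly in series, so detection through the external channel needs at least the sum of their lower bounds; the constant and function names are hypothetical.

```python
# Lower bounds taken from the text. Treating them as strictly serial and
# additive is an assumption made for this illustration.
MIN_WORD_RECOGNITION_MS = 200  # Marslen-Wilson & Tyler (1980)
MIN_HALT_MS = 150              # lower end of the 150-200 ms range for halting


def min_external_channel_ms() -> int:
    """Shortest error-to-cutoff time compatible with external-channel detection."""
    return MIN_WORD_RECOGNITION_MS + MIN_HALT_MS


def external_channel_possible(error_to_cutoff_ms: float) -> bool:
    """True if an observed error-to-cutoff interval leaves enough time to
    hear and recognize the erroneous word and then halt articulation."""
    return error_to_cutoff_ms >= min_external_channel_ms()


if __name__ == "__main__":
    # Blackmer & Mitton (1991): about 15% of overt errors are cut off within
    # 150 ms and about 5% within 100 ms; neither interval reaches the floor.
    for interval in (100, 150):
        print(interval, external_channel_possible(interval))
```

Even with the most favorable bounds, the floor is 350 ms, more than twice the 150 ms cutoff observed for a substantial minority of errors.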

The combination of the internal and external channels provided a simple and plausible account of monitoring, which succeeded in explaining the nature and time course of error-detection behavior in normal speakers (but see Oomen & Postma, 2001). Thus, since its introduction, the perceptual loop theory has maintained its status as the leading theory of speech monitoring despite a number of alternative accounts (e.g., Laver, 1973; MacKay, 1987, 1992; Schlenck, Huber, & Willmes, 1987). The theory, however, has not gone without criticism. Two of its major assumptions have been called into question: (1) Do speakers routinely monitor their inner speech even while producing overt speech? (2) Is comprehension really the basis of error detection? In addition, even if both assumptions hold, it is doubtful that the perceptual loop account can explain the pattern of detection and repair at speech rates faster than normal (Oomen & Postma, 2001). We review these criticisms below and reevaluate the viability of the perceptual loop account of error detection in speech production.

Problems with the perceptual loop account

Do speakers routinely monitor their inner speech while producing overt speech?

There is little doubt that humans are capable of monitoring their inner speech when they are not speaking aloud. Empirical evidence for this claim comes from a variety of tasks, ranging from phoneme monitoring (e.g. judging whether the name of a pictured nose contains /l/; Ganushchak & Schiller, 2006, 2008a, 2009; Wheeldon & Levelt, 1995; Wheeldon & Morgan, 2002) to reporting errors while silently reciting tongue-twisters (Oppenheim & Dell, 2008). This, however, does not necessarily imply that people routinely monitor inner speech (or even that they can do so) while overtly articulating. Vigliocco and Hartsuiker (2002) raise a theoretical problem with simultaneous monitoring of inner and overt speech. Since inner speech precedes the articulation of overt speech by one or a few words (buffering of inner speech), listening to both would be like constantly listening to an echo of your own voice, which would make comprehension difficult. Moreover, these echoed signals would have to be monitored while attending to the main task, which is the production of speech.

Recently, Huettig and Hartsuiker (2010) directly tested the hypothesis that inner speech is monitored when there is overt production. They registered the eye movements of their participants while they named a picture (e.g. a heart) in the presence of a phonological competitor (e.g. the word “harp”) and two unrelated words. Regardless of whether the subjects monitor their inner speech or their overt utterance during picture naming, the phonological similarity of the competitor to the target is expected to cause temporary fixation on the competitor. The critical point is the timing of this fixation. If inner speech is perceived during overt production, one would expect the competitor picture (harp) to attract more looks than unrelated pictures at an early time window (−50 ms to +55 ms, based on different estimations of the head-start of inner-speech perception on overt word onset; Indefrey & Levelt, 2004; Hartsuiker & Kolk, 2001; Levelt, 1989). But if only overt speech is monitored, the earliest time point at which the participants fixate the phonological competitor would be around 300 ms post-onset of speech (the same as if they heard the word “heart” spoken to them). The data were compatible with the second possibility. Subjects began fixating the phonological competitor 350–500 ms after they started naming the target. There was thus no indication that they monitored their inner speech during overt production.

Is comprehension really the basis of error detection?

For now, let us assume that monitoring inner speech during overt production is in fact possible and that speakers routinely do it. The perceptual loop theory’s second main assumption is that monitoring is carried out by the comprehension system. A corollary of this assumption is that the ability to comprehend should correlate with the ability to detect errors. Doubts about the existence of such a correlation were first raised by Schlenck et al. (1987), and these doubts were later supported by Nickels and Howard (1995; but see Özdemir & Roelofs, 2007), who failed to find a correlation between aphasic patients’ error-detection performance and three measures of their input processing abilities: lexical decision, nonword minimal-pair judgment, and synonym judgment. The lack of correlation held even when the authors included only those patients who showed intact inner-speech processing ability, as defined by good performance on rhyme and homophone judgment tasks.

More support for the dissociation between comprehension and error detection came from studies of individual aphasic patients, some of whom showed poor error detection despite good comprehension (Butterworth & Howard, 1987; J. Marshall, Robson, Pring, & Chiat, 1998; Liss, 1998). McNamara, Obler, Au, Durso and Albert (1992) reported a similar finding in patients with Parkinson’s disease, who missed 75% of their errors (a rate comparable to that of Alzheimer’s patients with poor comprehension) despite having good comprehension. However, it is not entirely clear whether a monitoring deficit despite good comprehension is problematic for the perceptual loop theory. Hartsuiker and Kolk (2001) argue that comprehension might be necessary but not sufficient for error detection. Patients who have detection problems despite good comprehension might thus have trouble with the comparison process required for detection, either in storing the intended and comprehended representations or in determining whether and how they differ.

Interestingly, many patients with good comprehension and poor self-correction detect errors in other people’s speech perfectly (e.g. Kinsbourne & Warrington, 1963; Maher, Rothi, & Heilman, 1994; J. Marshall et al., 1998). One account of the dissociation between detecting errors in self and others’ speech is that these patients are in denial; they avoid acknowledging their disorder by not reacting to their errors. This view does not seem very convincing for two reasons: first, some patients show cross-modal differences in error detection, meaning that they can detect errors in their writing (e.g., patient RMM; J. Marshall et al., 1998) while they fail to detect errors in their oral speech. There is no compelling reason why patients should be in denial about the problem in only one mode of production. Second, even within the same modality, error detection differs across tasks. Patient CM (J. Marshall et al., 1998) fails to detect most of his errors in picture naming, but does so perfectly in auditory word repetition. Again, a denial account is inconsistent with this finding.

An alternative explanation for poor self-monitoring despite good comprehension extends the capacity-limitation account of why normal speakers detect errors more frequently in others’ speech than in their own. It has been proposed that aphasic patients might suffer from greater capacity limitations, so that the advantage of monitoring others’ speech is even greater in this population. There is evidence to doubt this assumption as well. J. Marshall et al. (1998) asked patient CM (who could detect his auditory-repetition errors but not his picture-naming errors) to listen to a spoken word, choose the correct picture over a semantic competitor, repeat the name, and then judge the accuracy of his response. Contrary to the prediction of the limited-capacity account, he did quite well on this task. It therefore seems that, at least in some patients, the denial and capacity-limitation accounts of poor detection can be refuted. We are thus left with the conclusion that, although good comprehension is associated with good detection of errors in others’ speech (e.g. Kinsbourne & Warrington, 1963), it is less strongly associated with detection of one’s own errors.

An even stronger case against the assumption that comprehension is the basis of error detection could be made if good error detection were shown despite poor comprehension. R. Marshall, Rappaport, and Garcia-Bunuel (1985) report a patient with auditory agnosia who fails to understand spoken language but has a better-than-expected ability to detect her speech errors. Hartsuiker and Kolk (2001) rightly point out that the patient had good reading comprehension, so although the external loop was absent, comprehension through the internal loop could have been responsible for her successful monitoring. But recall that monitoring inner speech during overt production is suspect (Vigliocco & Hartsuiker, 2002; Huettig & Hartsuiker, 2010).

Moreover, R. Marshall et al.’s (1985) patient shows differential monitoring ability for different error types. She makes many semantic errors and fails to detect them, whereas her less frequent phonological errors are almost always repaired. This pattern of error detection is the opposite of what an internal-channel-only monitor would predict, because studies of error detection under noise-masked conditions have shown that the external channel contributes minimally to the detection of semantic errors (Postma & Noordanus, 1996; also see Hartsuiker, Kolk, & Martensen, 2005, for a model of the division of labor between the internal and external monitoring channels). A similar error-type-specific pattern of detection was reported in a transcortical sensory aphasic (with poor comprehension and fluent grammatical speech) who corrected all her phonological errors but failed to acknowledge her semantic errors, even her semantic jargon, which was her dominant error type (Stark, 1988). Stark proposed that the patient’s trouble with detection may in fact arise from trouble with production rather than with comprehension.

But before these cases can be taken as evidence against a comprehension-based monitor, an alternative explanation must be ruled out. It is possible that semantic errors are in fact detected, but that the patients’ awareness of how difficult the repair would be keeps them from acknowledging the error (Oomen, Postma, & Kolk, 2005). Countering this argument, Stark (1988) found no behavior suggesting that her patient had noticed the semantic errors she left uncorrected, and a later, more objective approach confirmed that other patients who do not acknowledge their errors truly do not notice them. J. Marshall et al. (1998) compared the percentage of pauses in two jargon aphasics to that of normal speakers. If the lack of overt error detection reflected a conscious decision to ignore the error because of the anticipated difficulty of repair, the patients’ speech should contain more hesitations. The results were the opposite: the two patients were marginally more fluent than normal speakers and showed no sign of covert monitoring behavior. Considering all of the data from aphasic speakers together, there appears to be evidence for a dissociation between comprehension and the monitoring of one’s own speech, which contradicts a major assumption of the perceptual loop theory.

To summarize, we have reviewed evidence questioning the two core assumptions of the perceptual loop account: that inner speech can be monitored during overt production, and that error-detection abilities are predicted by comprehension abilities. Nevertheless, there is no doubt that monitoring overt speech through comprehension occurs and is useful for a variety of purposes. For one thing, detecting errors in other people’s speech is certainly carried out, at least in part, by means of comprehension. Furthermore, the increased rate of detection of phonological errors in one’s own speech when auditory feedback is present (Lackner & Tuller, 1979) also points to a contribution of comprehension to error detection. In the same vein, the role of other monitoring processes, such as that of proprioceptive receptors in calibrating articulation (Postma, 2000), is undeniable. Given these points, the goal of this paper is not to deny the contribution of the perceptual loop to speech monitoring altogether, but to question its role as the primary mechanism for error detection in one’s own speech.

An alternative class of monitors: the production-based monitors

Note that the main source of failure of the perceptual loop account is its dependence on comprehension. The argument against monitoring inner speech does not question the existence of inner speech, but rather whether it can be profitably processed by the comprehension system during overt production. Thus, to avoid these problems, a monitoring theory should not rely on the comprehension system for error detection. Monitors of this kind have been proposed (De Smedt & Kempen, 1987; Laver, 1973, 1980; Schlenck et al., 1987; Van Wijk & Kempen, 1987). The main feature of this class, which we refer to as “production-based” monitors¹, is that they treat error detection as largely independent of comprehension and as relying instead on information generated by the production system itself.

Of these, Laver’s (1980) model is the most detailed. It assumes multiple independent monitors between the layers of the production system and a final sensory loop, which is the equivalent of the external channel in the perceptual loop account. According to this account, the production process is held up while monitoring is taking place (unlike the perceptual loop account, in which the production process and monitoring are carried out in parallel). This theory has been criticized, because the hold-up nature of multiple monitors is bound to interfere with the fluency of speech (Postma, 2000). Moreover, since the production process is put on hold at the time of monitoring, the only errors ever to become overt are the ones missed by all the production-based monitors. Such errors can only be detected by the sensory loop, which takes a few hundred milliseconds to detect them and plan a repair. So the theory fails to explain very short error-to-cutoff intervals in overt errors such as “v-horizontal”.

An important objection to multiple specialized monitors (and, more generally, to any specialized monitor that compares the actual output against the “correct” output) is the reduplication of knowledge: “If an editor knows the correct output all along, one wonders why the correct output wasn’t generated in the first place.” (MacKay, 1987, p. 167). This problem motivated a new idea of monitoring, in which error detection does not consist of a comparison between the actual and the correct response but is based on the patterns of information flow in the production system. One such proposal is MacKay’s Node Structure Theory (NST; MacKay, 1987, 1992). A crucial mechanism of error detection in the NST is the detection of new patterns not previously experienced by the speaker. Given the evidence that speakers are highly sensitive to the statistical patterns of the information they receive (Saffran, Newport, Aslin, Tunick, & Barrueco, 1997; Warker & Dell, 2006), it is quite conceivable that an unfamiliar pattern will raise a red flag in the system. In NST’s terms, this red flag triggers the speaker’s awareness, which is proposed as the mechanism of error detection. Although the NST should be credited with pioneering the idea that a speech error can be detected without the system already having the correct response at its disposal, it assumes that nodes are shared between the production and comprehension systems. It is therefore open to the criticism raised earlier against the perceptual-loop account, namely that comprehension and error-detection abilities are dissociable.

Another mechanism for a monitor that uses the patterns of information flow in production is a comparison between the amount of activation a node sends to its connected nodes and the amount of feedback it receives (Postma & Kolk, 1993). Obviously, this proposal hinges on the interactive nature of the production system. In an interactive network, such as Dell’s (1986) model, when the word node “cat” becomes activated, it sends activation to phonemes /k/, /æ/ and /t/. By virtue of feedback, these phonemes send activation back to the word node “cat”. Now, if by some mistake, instead of the onset /k/ another onset (e.g. /b/) becomes activated, the amount of feedback that the word node “cat” receives will be lower than the amount it would have received if the correct phoneme had been activated. In addition, a different node (e.g. “bat”) receives feedback without having had much activation previously. It is possible that a monitor would use such discrepancies as a signal that an error must have occurred. Finally, it has been proposed that error detection might be achieved by a competition detector, which measures the total activation of the nodes in a pool and generates a signal if the total activation exceeds a preset threshold (Mattson & Baars, 1992; Schade & Laubenstein, 1993). Some have likened this theory to conflict theories of error detection in action monitoring (Huettig & Hartsuiker, 2010).
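Both mechanisms can be made concrete with a toy network. The Python sketch below is our illustration, not Postma and Kolk’s (1993) or Mattson and Baars’s (1992) implementation: it assumes all-or-none phoneme selection, unit connection weights, and a two-word lexicon, and the names (`LEXICON`, `feedback_discrepancy`, `competition_detector`) and the threshold value are hypothetical.

```python
from typing import Dict, List, Set

# Hypothetical toy lexicon (word node -> its phoneme nodes), following the
# "cat"/"bat" example in the text. All connection weights are assumed to be 1.
LEXICON: Dict[str, List[str]] = {
    "cat": ["k", "ae", "t"],
    "bat": ["b", "ae", "t"],
}


def feedback(word: str, selected: Set[str]) -> int:
    """Feedback a word node receives: one unit from each of its phonemes that was selected."""
    return sum(1 for p in LEXICON[word] if p in selected)


def feedback_discrepancy(target: str, selected: Set[str]) -> int:
    """Activation the target sent (one unit per phoneme) minus the feedback it got back.
    A positive value is the kind of mismatch a feedback-comparison monitor could flag."""
    sent = len(LEXICON[target])
    return sent - feedback(target, selected)


def competition_detector(pool: Dict[str, float], threshold: float) -> bool:
    """Signal when the summed activation of a pool of nodes exceeds a preset threshold."""
    return sum(pool.values()) > threshold


if __name__ == "__main__":
    correct, slip = {"k", "ae", "t"}, {"b", "ae", "t"}
    print(feedback_discrepancy("cat", correct))  # "cat" gets back all it sent
    print(feedback_discrepancy("cat", slip))     # "cat" gets back less than it sent
    print(feedback("bat", slip))                 # "bat" is fed back although it sent nothing
    print(competition_detector({"cat": 0.9, "bat": 0.2}, threshold=1.2))
    print(competition_detector({"cat": 0.7, "bat": 0.6}, threshold=1.2))
```

The feedback monitor needs no stored copy of the correct output; it only compares two quantities the network already computes, whereas the competition detector ignores the identity of the nodes and responds only to how much activation the pool holds.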

Although production-based monitors hold promise, they have not supplanted the perceptual loop theory as the most widely accepted account of monitoring, because they have either not been laid out (and tested) in sufficient detail (e.g. Postma & Kolk’s proposed model), or face empirical findings that contradict their assumptions (e.g. MacKay’s NST or Laver’s monitor). Below, we propose a new monitoring mechanism, production-based in nature, that avoids the problems of comprehension-based monitors such as the perceptual loop, yet is detailed enough in its assumptions and predictions to be testable.

The conflict-based account of error detection

Conflict can, in principle, arise at any point during the performance and aftermath of an information processing task. In this paper we target a specific type of conflict, which emerges from the competition of several alternatives at the time of response selection, and propose that this conflict can be a basis for speech-error detection. The notion of conflict monitoring in the service of error detection is not new. Conflict was originally proposed as a signal to recruit and regulate cognitive control (Botvinick et al., 2001). If control is defined as an interaction between an executive system and a subordinate system (Logan & Cowan, 1984), conflict within the subordinate system (e.g. the motor system) can serve as a signal for the executive system to increase the amount of control and thereby resolve the conflict. The evidence leading to this proposal came from neuroimaging data on a medial frontal brain structure, the anterior cingulate cortex (ACC). Although the ACC is active during a wide range of tasks, from visual and motor processing to language and memory, it has been argued that most tasks in which ACC activity has been documented fall into three categories: overriding a prepotent response (e.g. naming the ink color of the word RED printed in green), generating a response from a host of equally possible responses (e.g. completing the stem ST- with any word that comes to mind), and committing errors (see Botvinick et al., 2001, for a review). The common element in these categories is argued to be a high degree of conflict at the response level. Why there is response conflict in the first two categories is obvious. In Stroop-like tasks, the natural tendency is to generate the prepotent response, but the correct response is in fact the subordinate one, which leads to conflict. In stem-completion tasks, no single response is salient enough, so to produce a single-word response the participant must suppress a number of equally strong alternatives and resolve the conflict. But the most interesting of the three is the ACC’s activity during error commission. Does error commission also indicate response conflict?
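One way to quantify response conflict, in the spirit of the energy-like measure used by Botvinick et al. (2001), is to sum the products of the activations of mutually inhibiting response units, weighted by the inhibition between them. The Python sketch below assumes a single uniform inhibitory weight and activations in [0, 1]; the activation values are invented for illustration, not data. It shows why both the Stroop and the stem-completion situations yield high conflict, whereas a single dominant response does not.

```python
from itertools import combinations
from typing import Sequence


def response_conflict(activations: Sequence[float], inhibition: float = 1.0) -> float:
    """Energy-style conflict over mutually inhibiting response units.

    Each unordered pair (i, j) is assumed to share an inhibitory weight
    w_ij = -inhibition, so its contribution -a_i * a_j * w_ij equals
    inhibition * a_i * a_j. Activations are assumed to lie in [0, 1].
    """
    return sum(inhibition * a_i * a_j for a_i, a_j in combinations(activations, 2))


if __name__ == "__main__":
    # Illustrative activations only.
    print(round(response_conflict([0.9, 0.1]), 2))  # one response clearly dominates
    print(round(response_conflict([0.6, 0.8]), 2))  # Stroop: prepotent word competes with ink color
    print(round(response_conflict([0.5] * 4), 2))   # stem completion: many equally strong candidates
```

Because conflict grows with the product of co-active responses, it is low whenever one candidate dominates and high whenever two or more are strongly active, regardless of which one is correct.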

Botvinick, Nystrom, Fissell, Carter, and Cohen (1999) specifically tested for the correlation between error commission and conflict by measuring ACC activity in a variant of the Eriksen flanker task. In this task, targets were arrows pointing left or right, with the direction defining the binary response to be made. Flankers consisted of surrounding arrows that pointed either in the same direction as the central target or in the opposite direction. The functional magnetic resonance imaging (fMRI) findings showed that this brain region was activated both on error trials and on correct trials with high conflict (e.g. <<><<; see also Carter, Braver, Barch, Botvinick, Noll, & Cohen, 1998), thus hinting at a possible relationship between high conflict and error commission.
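Why incongruent flankers raise conflict can be seen in a small deterministic simulation. In the Python sketch below, two response units (“<” and “>”) receive input from the central arrow and, more weakly, from the flankers, and compete through lateral inhibition; conflict is taken as the product of their activations. This is a toy of our own, not the model used by Botvinick et al. (1999) or Yeung et al. (2004): all parameter values and function names are arbitrary, hypothetical choices, and without noise the model never errs. It only shows that conflict is elevated on incongruent trials even when the response is correct.

```python
from typing import Dict, Tuple


def flanker_drive(stimulus: str, target_w: float = 1.0,
                  flanker_w: float = 0.6) -> Dict[str, float]:
    """External input to the left ('<') and right ('>') response units.
    The central arrow contributes target_w; the flankers share flanker_w."""
    center = len(stimulus) // 2
    share = flanker_w / (len(stimulus) - 1)
    drive = {"<": 0.0, ">": 0.0}
    for i, arrow in enumerate(stimulus):
        drive[arrow] += target_w if i == center else share
    return drive


def run_trial(stimulus: str, cycles: int = 30, rate: float = 0.2,
              inhibition: float = 0.8, decay: float = 0.2) -> Tuple[str, float]:
    """Let the two response units compete via lateral inhibition.
    Returns the winning response and the peak conflict (product of activations)."""
    drive = flanker_drive(stimulus)
    act = {"<": 0.0, ">": 0.0}
    peak_conflict = 0.0
    for _ in range(cycles):
        # Synchronous update from the previous activations, clipped to [0, 1].
        act = {
            unit: min(1.0, max(0.0, act[unit] + rate * (
                drive[unit] - inhibition * act[other] - decay * act[unit])))
            for unit, other in (("<", ">"), (">", "<"))
        }
        peak_conflict = max(peak_conflict, act["<"] * act[">"])
    return max(act, key=act.get), peak_conflict


if __name__ == "__main__":
    for display in (">>>>>", "<<><<"):
        response, conflict = run_trial(display)
        print(display, response, round(conflict, 2))
```

On the congruent display the wrong unit never becomes active, so conflict stays at zero; on the incongruent display both units are briefly co-active before the target wins, which is the high-conflict correct trial described above.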

Another source of valuable information about error processing has been electrophysiological (event-related potential, ERP) studies. Below, we review findings concerning the ERP component known as the error-related negativity (ERN), with the aim of identifying certain properties that a model of monitoring must have. The ERN is a negative deflection in the ERP whose onset coincides with (Holroyd & Coles, 2002) or precedes (Gehring, Goss, Coles, Meyer, & Donchin, 1993) the onset of the EMG activity giving rise to the erroneous response in speeded tasks, and whose peak is observed about 80–100 ms after the response (e.g. Dehaene, Posner, & Tucker, 1994; Falkenstein, Hohnsbein, Hoormann, & Blanke, 1990; Gehring et al., 1993). The ERN’s origin has been traced to frontocentral regions, particularly the ACC and the SMA (Gehring et al., 1993), or more precisely to the inferior ACC (Dehaene et al., 1994), a localization corroborated by MEG findings (Miltner, Lemke, Weiss, Holroyd, Schevers, & Coles, 2003).

There is strong evidence that the ERN reflects the operation of a generic error-detection system. First, it is independent of the modality of error commission: errors committed not only with the hands, but also with the feet (Holroyd, Dien, & Coles, 1998), eyes (Nieuwenhuis, Ridderinkhof, Blow, Band, & Kok, 2001; Van’t Ent & Apkarian, 1999), and voice (Masaki, Tanaka, Takasawa, & Yamazaki, 2001) all elicit ERNs. Moreover, this negativity has been traced to the same brain region regardless of whether errors were committed by hand or by foot (Holroyd et al., 1998). In addition, ERP studies have provided evidence for a central detection-correction loop involving frontal structures. Some of these studies have shown a correlation between ERN amplitude and a variety of post-error adjustment behaviors (e.g., Debener, Ullsperger, Siegel, Fiehler, von Cramon, & Engel, 2005; Dehaene et al., 1994; Gehring et al., 1993), implying that the ERN-generating center is involved in a cycle of error detection and cognitive regulation that helps avoid further errors (but see Gehring & Fencsik, 2001; Núñez-Castellar, Kühn, Fias, & Notebaert, 2010; van Meel, Heslenfeld, Oosterlaan, & Sergeant 2007). Other studies have focused on pathologies of frontal structures, showing that patients with damage to the ACC have error rates comparable to those of normal subjects but generate no ERNs (Stemmer et al., 2004; Swick & Turken, 2002). Abnormal ERNs are also well documented in obsessive-compulsive adults (Endrass, Schuermann, Kaufmann, Spielberg, Kniesche, & Kathmann, 2010; Gehring, Himle, & Nisenson, 2000; Hajcak & Simons, 2002; Ruchsow, Gron, Reuter, Spitzer, Hermle, & Kiefer, 2005) and children (Santesso, Segalowitz, & Schmidt, 2006), as well as in adults with Gilles de la Tourette syndrome (Johannes, Wieringa, Müller-Vahl, Dengler, & Münte, 2002). These abnormalities have been ascribed to the malfunction of a central error-processing system involving the ACC and the basal ganglia (Devinsky, Morrell, & Vogt, 1995; Holroyd, Nieuwenhuis, Mars, & Coles, 2004). Together, these pieces of evidence point to a central loop involved in the detection and correction of errors, part of which generates the ERN.

In addition to suggesting a central monitoring mechanism, research findings on the ERN have defined specific properties of the monitor. Of particular importance among these findings is the independence of the ERN from awareness. The very early onset of this negativity relative to the onset of the motor response suggests that it is unlikely to result from conscious processing of the response. Also, direct empirical evidence shows that the magnitude of the ERN is unaffected by whether participants are aware of the error (Endrass, Franke, & Kathmann, 2005; O’Connell, Dockree, Bellgrove, Kelly, Hester, Garavan et al., 2007; Nieuwenhuis et al., 2001; but see Steinhauser & Yeung, 2010). In fact, many errors that participants declared “unperceived” were followed by corrections (Nieuwenhuis et al., 2001; Postma, 2000; Ullsperger & von Cramon, 2006), sometimes with significantly shorter latencies than corrections of “perceived” errors (Nieuwenhuis et al., 2001). The seemingly automatic nature of error detection (and of at least some corrections), together with neuroimaging findings showing ACC activity during both error commission and high-conflict situations, naturally led to the proposal that monitoring for conflict might be the basis of error detection (Yeung et al., 2004). The theory was further corroborated by the presence of a pre-response ERN analogue (N2) on correct but high-conflict trials (Nieuwenhuis, Yeung, van den Wildenberg, & Ridderinkhof, 2003; Pritchard, Shappell, & Brandt, 1991; Smith, Smith, Provost, & Heathcote, 2010; Van Veen & Carter, 2002; Yeung et al., 2004; Yeung & Nieuwenhuis, 2009; but see Holroyd & Coles for a different view on the ERN).

Although there are relatively few studies of the ERN in language, the findings clearly show that the negativity is present during uncertain or errorful language processing. Sebastián-Gallés, Rodríguez-Fornells, De Diego-Balaquer, and Díaz (2006) showed that Catalan-dominant (but not Spanish-dominant) Spanish-Catalan bilinguals generated ERNs when they made a mistake in a lexical decision task involving Catalan words and nonwords. On the production side, Ganushchak and Schiller (2006, 2008a, 2009) used a button-push go/no-go version of the phoneme monitoring task to investigate the ERN in verbal self-monitoring. The task requires participants to monitor for a certain phoneme (e.g., /l/) in the name of a pictured object (e.g., a nose) and to push a button only if the name contains that phoneme. With this task, Ganushchak and Schiller (2006) demonstrated ERNs on error trials whose amplitude decreased under severe time pressure. This result agrees with that of Gehring et al. (1993), who found smaller ERNs when participants were asked to sacrifice accuracy for speed in the Flanker task. Ganushchak and Schiller (2008a) tested phoneme monitoring in the presence of semantic (e.g., “ear” for the picture of a nose) or neutral (semantically irrelevant) distractors. They found the largest-amplitude ERNs following errors that occurred after semantic distractors. The authors interpreted these results in terms of a monitor that is sensitive not only to conflict at the lower-level motor representations of the response, but also to the more abstract steps of lexical access, such as semantic processing. Finally, Ganushchak and Schiller (2009) demonstrated that German-Dutch bilinguals performing the phoneme monitoring task in Dutch showed an ERN on error trials, just as the Dutch speakers did. 
However, they showed higher-amplitude ERNs under time pressure compared to the control condition, the opposite of the finding in the Dutch speakers.

The ERN has also been found following verbal responses. Masaki et al. (2001) were the first to show this negativity following spoken responses in a Stroop task. Later, Möller, Jansma, Rodríguez-Fornells, and Münte (2007) showed negativities with a frontocentral distribution on speech errors arising from the SLIP task (Baars, Motley, & MacKay, 1975). Ganushchak and Schiller (2008b) recorded ERN-like components in a semantic-blocking paradigm, in which participants named pictures in semantically related or unrelated blocks. In addition, they replicated with spoken responses the button-push finding that the amplitude of the ERN increases when a monetary reward is offered for higher accuracy (Gehring et al., 1993; Hajcak, Moser, Yeung, & Simons, 2005; Pailing & Segalowitz, 2004), thus adding to the growing body of evidence that the ERN reflects an error-monitoring system that operates regardless of the specific domain that generated the error. Furthermore, they showed larger ERNs in the semantically related than in the semantically unrelated blocks, which they attributed to the greater amount of response conflict in the semantically related blocks.

Most recently, Riès, Janssen, Dufau, Alario, and Burle (2011) asked participants to determine the grammatical gender of the name of a pictured object, and in a second experiment, to name the object itself. Both experiments found a negativity, with the time-course and scalp distribution previously described for the ERN, not only on the incorrect, but also on the correct trials. However, the amplitude of the negativity was larger for the incorrect trials. The authors argued that the presence of the negative potential on both correct and incorrect trials in speech production, in agreement with the findings in the visuomotor literature, points towards an online monitor that is shared, at least in part, between the speech-production system and the other cognitive systems.

To summarize, converging evidence from linguistic and non-linguistic studies, using neuroimaging and electrophysiological measures, supports the idea that a generic monitoring system is in place, and that this system consists of a frontal brain region (most likely the ACC) which might use response conflict as a signal for error detection. An example of such a monitoring model has been detailed and shown to explain the data in speeded forced-choice tasks (Yeung et al., 2004). We propose a speech monitoring model, in which, similar to Yeung et al.’s model, measuring conflict between response options and relaying that conflict to an executive center is the basis for error detection. Although the model builds on this domain-general approach to monitoring, it is not simply an application of Yeung et al.’s model to speech. Our model’s properties have been tailored to the task of unprompted error-detection in natural speech production, which is quite different from the forced-choice laboratory tasks. Moreover, the model is based on a previously existing model of lexical access in production and this further constrains how conflict is defined and used. In the simulation section below, we outline the principles of our proposed monitor and its predictions. Using an implemented production model not only helps us explain the details of the theory in a precise fashion, but also allows us to generate concrete and testable predictions, which will guide our patient-data analysis.

Model simulations

We used the interactive two-step model of word production (Figure 1) in which semantic features in a semantic layer are connected to lemmas (Kempen & Huijbers, 1983) in a word layer and those lemmas, in turn, are connected to their relevant phonemes. The strength of the connections between the semantic and the word layer is defined by the s (semantic) weight and the strength of the connections between the word and the phoneme layer by the p (phonological) weight. The values of these parameters determine how strongly the information is transferred from one layer to another, and therefore, determine how well the system functions. In order to simulate a damaged system (such as an aphasic production system), the value of s or p or both weights is decreased (see Foygel & Dell, 2000, for the details of this lesioning).

Figure 1.

The interactive two-step model of word production. Boxes indicate the places where conflict was measured, once at the word layer at the time of lemma selection (the upper box), and once at the phoneme layer at the time of onset selection (the lower box).

This model names an object in two steps. Imagine the target word is “cat”. In the first step, the semantic features pertaining to cat become activated. Activation spreads in the network, activating the lemma “cat”, but also its competitors in the word layer, such as “dog”. Each node’s activation is determined linearly from the sum of activations received from the nodes connected to it and is subject to decay and random noise. Cascading in the model allows activation to spread further down to the phoneme layer, and the interactive nature of the model causes nodes in the higher layers to receive feedback from the nodes in the lower layers. After 8 time-steps the most active node in the word layer is selected. When describing the simulations, we will call this selected node the “word-layer response” to describe the outcome of the first step of lexical retrieval, although no overt response is made at this stage.

The second step starts by giving a jolt of activation (100 units) to the word-layer response (e.g., “cat”), creating a non-linearity in the process of mapping semantics to phonology. Activation spreads for another 8 time-steps, at the end of which the most active node in each phoneme cluster (e.g., /k/ in onset, /æ/ in vowel and /t/ in coda) is selected and the model’s “final response” is generated by combining these phonemes.
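The two retrieval steps described above can be sketched as a small simulation. This is a minimal illustration under stated assumptions, not the published implementation: the six-word lexicon, the ten semantic features per word (with “cat” sharing three features with “dog” and three with “rat”), the decay rate q = 0.6, the noise terms and the 10-unit jolt to the target’s features are all hypothetical choices made for readability. Only the two 8-step phases, the 100-unit jolt to the word-layer response, the six onset phonemes and the s and p weights come from the text.

```python
import numpy as np

# Hypothetical toy lexicon: target "cat", semantic "dog", formal "mat",
# mixed "rat", unrelated "fog" and "log" (not the published model's vocabulary).
WORDS = ["cat", "dog", "mat", "rat", "fog", "log"]
ONSETS, VOWELS, CODAS = ["k", "d", "m", "r", "f", "l"], ["ae", "o"], ["t", "g"]
PHONEMES = ONSETS + VOWELS + CODAS
SPELLING = {"cat": "k ae t", "dog": "d o g", "mat": "m ae t",
            "rat": "r ae t", "fog": "f o g", "log": "l o g"}
SHARED = {("cat", "dog"): 3, ("cat", "rat"): 3}  # assumed semantic overlap


def build_features(n_per_word=10):
    """Give each word n_per_word semantic features; related words share some."""
    feats, next_id = {w: [] for w in WORDS}, 0
    for (a, b), k in SHARED.items():
        for _ in range(k):
            feats[a].append(next_id)
            feats[b].append(next_id)
            next_id += 1
    for w in WORDS:
        while len(feats[w]) < n_per_word:
            feats[w].append(next_id)
            next_id += 1
    return feats, next_id


FEATURES, N_SEM = build_features()


def weights(s, p):
    """Symmetric connections: s between features and words, p between words and phonemes."""
    w_sw = np.zeros((N_SEM, len(WORDS)))
    w_wp = np.zeros((len(WORDS), len(PHONEMES)))
    for j, w in enumerate(WORDS):
        w_sw[FEATURES[w], j] = s
        for ph in SPELLING[w].split():
            w_wp[j, PHONEMES.index(ph)] = p
    return w_sw, w_wp


def spread(sem, word, phon, w_sw, w_wp, steps, rng, q=0.6, noise=(0.01, 0.16)):
    """Linear, interactive spreading with decay q and Gaussian noise
    (an intrinsic SD plus an activation-proportional SD; values assumed)."""
    for _ in range(steps):
        new_sem = sem * (1 - q) + w_sw @ word                   # feedback from words
        new_word = word * (1 - q) + w_sw.T @ sem + w_wp @ phon  # top-down input + feedback
        new_phon = phon * (1 - q) + w_wp.T @ word               # cascade from words
        sem, word, phon = [x + rng.normal(0.0, noise[0] + noise[1] * np.abs(x))
                           for x in (new_sem, new_word, new_phon)]
    return sem, word, phon


def name(target, s, p, rng, steps=8, feature_jolt=10.0, word_jolt=100.0):
    """Return the word-layer response, word-layer activations at lemma selection,
    the final response, and onset activations at onset selection."""
    w_sw, w_wp = weights(s, p)
    sem, word, phon = np.zeros(N_SEM), np.zeros(len(WORDS)), np.zeros(len(PHONEMES))
    sem[FEATURES[target]] = feature_jolt            # step 1 starts at the target's features
    sem, word, phon = spread(sem, word, phon, w_sw, w_wp, steps, rng)
    word_acts = word.copy()
    chosen = int(np.argmax(word))                   # most active word = word-layer response
    word[chosen] += word_jolt                       # step 2: 100-unit jolt to that word
    sem, word, phon = spread(sem, word, phon, w_sw, w_wp, steps, rng)
    n_on, n_v = len(ONSETS), len(VOWELS)
    onset_acts = phon[:n_on].copy()
    final = " ".join([ONSETS[int(np.argmax(onset_acts))],
                      VOWELS[int(np.argmax(phon[n_on:n_on + n_v]))],
                      CODAS[int(np.argmax(phon[n_on + n_v:]))]])
    return WORDS[chosen], word_acts, final, onset_acts
```

For example, `name("cat", 0.04, 0.04, np.random.default_rng(0))` returns the word-layer response, the word-layer activations, the final response (e.g., a string such as “k ae t”) and the onset activations; lowering `s` or `p` mimics the lesioning described above. A final response that matches no entry in `SPELLING` is a nonword.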

The “word-layer response” can be correct (e.g. “cat” for “cat”), or a semantic (e.g. “dog” for “cat”), formal (e.g. “cap” for “cat”) or unrelated-word (e.g. “fog” for “cat”) error. In a normal speaker, nearly all responses at this layer are correct. If an error is made, it is almost exclusively of semantic type, because the error receives activation from the semantic features that it shares with the target. Most semantic errors in the model are in fact first-step errors. The final response of the model can belong to all the above categories but also to a unique category of nonword errors (e.g. “lat” for “cat”). The reason that nonwords are limited to the second step of lexical retrieval is that whichever node is selected in the first step will be, by definition, a word. Therefore, it is only through an error in retrieving the phonemes (i.e. the second step) that a nonword can be created.
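The response categories listed above can be made explicit with a small classifier. This is a sketch under stated assumptions: the lexicon and semantic-neighbor table are hypothetical, letters stand in for phonemes, and “formal” is operationalized (as an assumption) as a real word that shares at least two positional segments with the target. Mixed (semantic and formal) errors are not treated separately here.

```python
# Hypothetical toy lexicon; letters stand in for phonemes.
LEXICON = {"cat", "dog", "cap", "fog"}
SEMANTIC_NEIGHBORS = {"cat": {"dog"}}


def positional_overlap(a, b):
    """Number of slots in which two forms share the same segment."""
    return sum(x == y for x, y in zip(a, b))


def classify(target, response, lexicon=LEXICON, neighbors=SEMANTIC_NEIGHBORS, min_overlap=2):
    """Assign one of the response categories described in the text."""
    if response == target:
        return "correct"
    if response not in lexicon:
        return "nonword"      # can only arise in the second (phonological) step
    if response in neighbors.get(target, set()):
        return "semantic"
    if positional_overlap(target, response) >= min_overlap:
        return "formal"
    return "unrelated"
```

Because every word-layer response is by construction a member of the lexicon, applying `classify` to word-layer responses can never yield “nonword”, which mirrors the argument that nonwords are second-step errors.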

If detecting conflict is useful for error detection in a language production system, that system should exhibit three principles:

Detection Sensitivity. The amount of conflict must be predictive of the probability of error occurrence. In other words, the distribution of correct and incorrect responses must be distinguishable by a quantified measure of conflict.

Layer Specificity. Conflict at each layer of the system should specifically predict the error type arising from that step. Recall that semantic errors are common first-step errors, while nonword errors are second-step errors. Therefore, high conflict at the word layer should be predictive of the occurrence of a semantic (but not a nonword) error. Likewise, high conflict at the phoneme layer must signal a nonword (but not a semantic) error.

Integrity Contingency. Finally, the conflict-based monitor is a production-based monitor, meaning that it relies on the information generated in the process of production to detect errors. Thus, the reliability of conflict between the model’s nodes as an error signal should be directly related to the strength of the production weights. When these weights are strong, correct trials will generally be associated with low conflict. So when conflict is high (due to noise factors), the system can use this information as a signal that something must have gone wrong. On the other hand, when the weights are weak, transmission of information between layers suffers, with noise playing a large role and thus creating conflict on all trials, regardless of whether they end in the correct response or not. In this case, conflict should no longer be a useful signal to discern error trials. Thus, the more degraded the system, the less reliable the error signal.

In the simulations that follow, we show that the model exhibits detection sensitivity and layer specificity in a simulation of a normal speaker. To investigate integrity contingency in the model, we simulate 5 aphasic patients, and demonstrate how gradual decrease of the s and p weights affects the reliability of the error signal derived from the amount of conflict between the competing nodes.

Simulation I

In the two-step model of word production, the s and p parameters were both set to 0.04 to simulate the picture-naming performance of a normal individual. With these parameters, when the model is run for 10000 trials, the final response is correct about 98% of the time. The other 2% consist mostly of semantic errors, with a few nonword and formal errors (these results match data from control subjects performing a picture-naming task with high name-agreement pictures; Dell, Schwartz, Martin, Saffran, & Gagnon, 1997). For each of the 10000 trials, the following information was recorded: the word-layer response, conflict at the word layer (see below for how conflict was measured), the final response and conflict at the phoneme layer. For the latter, we measured conflict among the model’s six possible onset phonemes.

Conflict was quantified using two measures. The first measure was simply the difference between the two nodes with the highest levels of activation in a layer, which we call the difference between the maximums, or diff(max). For example, if activations in the word layer were cat = 0.099, dog = 0.018, hat = 0.008, mat = 0.006 and fog = 0.008, then diff(max) = 0.099 − 0.018 = 0.081. The second measure was the standard deviation (sd) of the activations of all nodes in the layer at which conflict was to be determined, $sd = \sqrt{\frac{1}{N-1}\sum_{i=1}^{N}(x_i - \bar{x})^2}$, where $x_i$ is the activation of each node in the layer, $\bar{x}$ is the mean and $N$ is the total number of nodes in that layer; in the above example, sd = 0.04. Note that both measures reflect the difficulty with which a “winner” is selected. The first measure considers only the strongest competitor, while the second takes into account competition from all the competitors. In both cases, the measures were converted into −ln(diff(max)) and −ln(sd), because the transformed measures are easier to interpret (higher values represent more conflict) and they generate distributions with less skew. The pattern of results obtained with the −ln(diff(max)) and the −ln(sd) measures was similar, but −ln(sd) was a noisier measure of conflict. Ultimately, though, the choice of conflict measure is an empirical question (see Botvinick et al., 2001, for a full discussion of this issue).
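Both conflict measures, and their −ln transformation, can be checked against the worked example of the word layer given above. A minimal sketch, assuming a layer’s activations arrive as a plain list of floats; `statistics.stdev` uses the N − 1 denominator, which reproduces the sd = 0.04 of the example.

```python
import math
import statistics


def diff_max(activations):
    """Difference between the two most active nodes in a layer."""
    top, runner_up = sorted(activations, reverse=True)[:2]
    return top - runner_up


def conflict(activations):
    """Return (-ln diff(max), -ln sd); higher values mean more conflict.
    Raises ValueError (math domain error) if the top two nodes are exactly tied."""
    return (-math.log(diff_max(activations)),
            -math.log(statistics.stdev(activations)))


word_layer = [0.099, 0.018, 0.008, 0.006, 0.008]  # cat, dog, hat, mat, fog
print(round(diff_max(word_layer), 3), round(statistics.stdev(word_layer), 3))  # 0.081 0.04
print([round(c, 2) for c in conflict(word_layer)])                            # [2.51, 3.22]
```

The sign flip makes both scores grow as the winner becomes harder to pick: a narrow gap between the top two nodes, or a flat activation profile, yields a large value.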

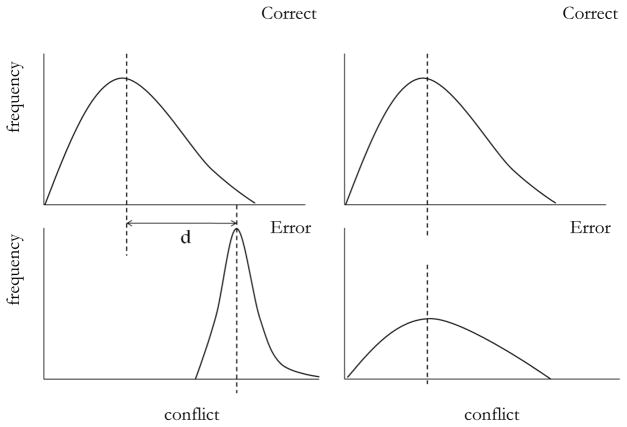

The 10000 values (one per trial) were gathered for each measure of conflict at each layer. These values were then categorized according to whether they belonged to a correct or an incorrect trial (determined by the model’s final response), and for each measure, separate distributions were built for correct and incorrect responses. If conflict is a useful measure for error detection, the distributions of conflict for correct and incorrect responses should overlap little. If not, these distributions should be hard to distinguish (Figure 2). To quantify the overlap of the two distributions, we calculated Cohen’s $d = \frac{m_e - m_c}{S_p}$, where $m_e$ is the mean of the conflict distribution for error responses, $m_c$ is the mean of the conflict distribution for correct responses and $S_p$ is the pooled standard deviation of the two distributions, calculated as $S_p = \sqrt{\frac{(n_c - 1)s_c^2 + (n_e - 1)s_e^2}{n_c + n_e - 2}}$, where $s_c$ and $s_e$ are the standard deviations of the correct and error distributions, respectively, and $n_c$ and $n_e$ are the numbers of trials in those distributions (Hartung, Knapp, & Sinha, 2008). A larger Cohen’s d means less overlap, that is, the two response types are well differentiated by the conflict measure (detection sensitivity; see Figure 2, left panels). If the distributions of correct and error responses are very similar with regard to the amount of conflict, Cohen’s d will be close to zero (Figure 2, right panels). When discussing Cohen’s d values, we report those based on the −ln(diff(max)) measure of conflict (which proved to be the more sensitive measure) and put the −ln(sd)-based values in parentheses. To check the reliability of our estimates of Cohen’s d, we calculated 95% CIs. The large number of trials led to confidence intervals so narrow that in most cases the upper and lower bounds were the same as the reported value when rounded to two decimals. 
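The Cohen’s d computation described above can be written directly from its definition. A minimal sketch, assuming the conflict scores of each response type are held in plain lists and that the pooled standard deviation uses the usual n − 1 weighting; the example values are toy numbers, not model output.

```python
import math
import statistics


def pooled_sd(correct, errors):
    """Pooled standard deviation S_p of the correct and error distributions."""
    n_c, n_e = len(correct), len(errors)
    s_c, s_e = statistics.stdev(correct), statistics.stdev(errors)
    return math.sqrt(((n_c - 1) * s_c ** 2 + (n_e - 1) * s_e ** 2) / (n_c + n_e - 2))


def cohens_d(correct, errors):
    """(m_e - m_c) / S_p: positive when error trials carry more conflict than correct ones."""
    return (statistics.mean(errors) - statistics.mean(correct)) / pooled_sd(correct, errors)


# Toy conflict values (not model output)
print(cohens_d(correct=[1.0, 2.0, 3.0], errors=[3.0, 4.0, 5.0]))  # 2.0
```

Identical distributions give d = 0, matching the right-hand panels of Figure 2; well-separated distributions give large positive values, as in the left-hand panels.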
For this reason, we do not report the confidence intervals for each Cohen’s d.

Figure 2.

Demonstration of the principle of detection sensitivity using hypothetical distributions. The graphs plot the number of trials showing a certain amount of conflict, and are categorized based on whether the trial ended in the correct or incorrect response. The two panels on the left show the case where detection sensitivity is high. If conflict correctly signals error occurrence, errors should on average show higher conflict compared to correct trials. On the other hand, if conflict is not a useful signal for error detection, the distribution of correct and incorrect responses should not differ with regard to measures of conflict (right panels). d = Cohen’s d (see text).

Of the 10000 trials, 209 resulted in incorrect responses and 9791 in correct responses at the phoneme layer (final response). When conflict was measured at the word layer, Cohen’s d was 3.11 (1.15 based on the −ln(sd)), which indicates good discriminability, particularly for the −ln(diff(max)) measure. However, when conflict was measured at the phoneme layer, Cohen’s d was only 0.24 (0.19 based on the −ln(sd)), which indicates low discriminability. Although at first glance this finding looks problematic for the theory, it is in fact exactly what the model’s second principle predicts: conflict at each layer must specifically predict the type of error arising from that layer. In the normal speaker simulated here, the majority of errors are semantic (199 semantic, 2 formal and 8 nonword errors in the final responses). The error distribution, therefore, contains mainly first-step errors, which according to layer specificity must be detectable by conflict at the word layer and not at the phoneme layer; this is precisely what the Cohen’s d values show.

To test whether this interpretation was correct, we built separate distributions for semantic and nonword errors. Of the 10000 word-layer responses, 199 were semantic errors. This number was unchanged in the model’s final responses, confirming our assumption that semantic errors are predominantly (and in this case, exclusively) first-step errors. Distributions of the two measures of conflict were then generated for correct responses vs. semantic errors at the word layer. Cohen’s d was 3.26 (1.18 based on the −ln(sd)) for the detection of semantic errors. The 8 nonword errors in the model, as discussed earlier, must be second-step errors. When distributions of the conflict measures were built for nonword errors vs. correct responses at the phoneme layer, Cohen’s d was 2.83 (1.52 for −ln(sd)), much higher than the value obtained at the phoneme layer when all errors were lumped together.

As a control, we also did the reverse pairing. The distributions of correct and error responses for the semantic errors were paired with conflict measured at the phoneme layer, and distributions of correct and error responses for nonword errors were paired with conflict measured at the word layer. Recall that this is incorrect pairing of error type and the level at which conflict is measured, and detection sensitivity is expected to be low. Cohen’s d was 0.09 (0.11) for the semantic and 0.57 (0.50) for the nonword errors. The low values of the Cohen’s d’s for the reverse pairing, together with large values when the correct pairing of error type and conflict-layer was made, confirm the principle of layer specificity, which entails a more specific definition of detection sensitivity.
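The correct and reverse pairings of error type and layer amount to choosing which conflict score is compared between correct and error trials. A sketch, assuming each simulated trial is stored as a record holding its final-response type and its conflict score at both layers; the record layout (`Trial`, `layer_d`) is hypothetical, and the values below are toy numbers chosen only to illustrate the pattern, not simulation results.

```python
import math
import statistics
from dataclasses import dataclass


@dataclass
class Trial:
    final_type: str        # "correct", "semantic", "nonword", ...
    word_conflict: float   # conflict at lemma selection
    phon_conflict: float   # conflict at onset selection


def cohens_d(correct, errors):
    """(m_e - m_c) / pooled SD, with n - 1 weighting."""
    n_c, n_e = len(correct), len(errors)
    s_p = math.sqrt(((n_c - 1) * statistics.variance(correct)
                     + (n_e - 1) * statistics.variance(errors)) / (n_c + n_e - 2))
    return (statistics.mean(errors) - statistics.mean(correct)) / s_p


def layer_d(trials, error_type, layer):
    """Cohen's d for one error type vs. correct trials, using conflict at one layer."""
    field = "word_conflict" if layer == "word" else "phon_conflict"
    correct = [getattr(t, field) for t in trials if t.final_type == "correct"]
    errors = [getattr(t, field) for t in trials if t.final_type == error_type]
    return cohens_d(correct, errors)


toy = ([Trial("correct", w, p) for w, p in [(1.0, 1.0), (1.2, 0.9), (0.8, 1.1), (1.1, 1.2), (0.9, 0.8)]]
       + [Trial("semantic", w, p) for w, p in [(3.0, 1.0), (3.2, 1.1), (2.8, 0.9)]]
       + [Trial("nonword", w, p) for w, p in [(1.0, 3.1), (0.9, 2.9), (1.1, 3.0)]])
for err in ("semantic", "nonword"):
    print(err, {layer: round(layer_d(toy, err, layer), 2) for layer in ("word", "phoneme")})
```

With these toy records, semantic errors separate from correct trials only at the word layer and nonword errors only at the phoneme layer, which is the qualitative signature of layer specificity.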

Simulation II

One of the objections raised against the comprehension-based monitor was that some patients with poor comprehension did not have much trouble detecting their own errors. The logic was that if the monitoring device receives its information from the comprehension system, then it should not operate well if the source of information is malfunctioning. The same rationale applies to a production-based monitor. We claim that error detection uses the information (in the case of our model, the amount of conflict) generated by the production system. If the production system does not function properly, the information being used for error detection should be unreliable. Under such circumstances, the speaker either continues to monitor using the unreliable information or stops monitoring altogether. In the former case, the monitor will likely generate many false alarms, while, in the latter, there will necessarily be many misses. In our modeling terms, both of these scenarios would manifest as lower Cohen’s d’s.

In Simulation II we lesioned the model systematically to create patients with different degrees of damage to their s and p weights. From previous data-fitting studies (Dell et al., 1997; Dell, Martin & Schwartz, 2007; Schwartz, Dell, Martin, Gahl, & Sobel, 2006) we know that a weight value of 0.04 indicates normal production, and values lower than 0.01 indicate severe damage. A value of 0.02 lies in between, indicating mild to moderate damage. Six speakers (one normal speaker, as in Simulation I, and five simulated aphasic patients) were created using combinations of these three values of s and p. Table 1 shows that of the simulated aphasic patients (second to sixth entries), the first two have one normal weight and one moderately damaged (but still functioning) weight. The next two have one normal weight and one severely damaged weight. The last patient’s production system is degraded to the degree that its production is close to random (the weights are too low for systematic mapping from one layer to another).

Table 1.

Detection sensitivity and layer specificity in the 6 simulated speakers with normal to severely damaged production systems. The last two columns show the Cohen’s d values for semantic and nonword errors at the word and phoneme layers, calculated using the −ln(diff(max)) measure of conflict, with the −ln(sd)-based values reported in parentheses. Bold numbers identify cases where there is some detection sensitivity. Note that as the weights decrease, detection sensitivity decreases as well. When the production system is completely distorted (s = p = 0.008), detection sensitivity is very low.

| s | p | Level of conflict measurement | Cohen’s d: semantic errors | Cohen’s d: nonword errors |
|---|---|---|---|---|
| 0.04 | 0.04 | Word layer | 3.26 (1.18) | 0.57 (0.50) |
| | | Phoneme layer | 0.09 (0.11) | 2.83 (1.52) |
| 0.02 | 0.04 | Word layer | 1.41 (0.76) | 0.55 (0.33) |
| | | Phoneme layer | 0 (0) | 3.05 (1.95) |
| 0.04 | 0.02 | Word layer | 2.81 (1.18) | 0.05 (0) |
| | | Phoneme layer | 0.04 (0.04) | 1.42 (0.95) |
| 0.008 | 0.04 | Word layer | 0.37 (0.34) | 0.03 (0.37) |
| | | Phoneme layer | 0.09 (0.20) | 3.58 (2.18) |
| 0.04 | 0.008 | Word layer | 2.03 (0.82) | 0.29 (0.29) |
| | | Phoneme layer | 0.09 (0) | 0.55 (0.43) |
| 0.008 | 0.008 | Word layer | 0.25 (0.19) | 0.25 (0.18) |
| | | Phoneme layer | 0.12 (0.04) | 0.31 (0.26) |

Table 1 also shows the Cohen’s d’s for each simulated speaker, calculated at the word and phoneme layers, using the amount of conflict measured for semantic and nonword error trials respectively. The numbers show that when layer specificity is taken into account, detection sensitivity is high when the relevant weights are strong. As the weights become weaker, the sensitivity decreases (e.g. a decrease in the value of the s weight from 0.04 to 0.02 causes a drop in detection sensitivity from 3.26 to 1.41), until the weight value becomes so low that there is little connectivity between the nodes in the production network. In this case, detection sensitivity becomes so low that the error signal is almost meaningless (e.g., 0.37 when the s weight drops to 0.008).

One might wonder why the Cohen’s d values for the detection of semantic errors differ so much between the normal speaker and the speakers in the third and fifth entries of Table 1, despite all three having s weights of 0.04. The reason is that the relationship between semantic errors generated at the word layer and semantic errors that emerge as the final response changes as a function of the p weights. When p weights are strong, the numbers of word-layer and final-response semantic errors in the model are very similar, because the incorrectly chosen word at the word layer is correctly pronounced, creating a semantic error as the final response. The simulation of the normal speaker shows 199 semantic errors at the word layer, all of which turned into semantic errors at the phoneme layer. Moreover, no new semantic errors were created during phonological encoding, so there is a one-to-one relationship between semantic errors at the word layer and in the final response profile of this speaker.

The speaker in the third entry (s = 0.04, p = 0.02) makes 292 semantic errors at the word layer (on 292 trials “dog” is chosen at that layer), but the final response profile of this patient contains only 275 semantic errors. Of these, 274 come from the “dog” node, and one is a new error, created by the misselection of phonemes for the node “cat”, which was correctly chosen at the word layer. So, in this patient, 18 of the word-layer semantic errors are converted to other words during phonological encoding, and one correct word-layer response is turned into a semantic error because of the lesioned p weights. Thus the relationship between word-layer and final semantic responses is not as perfect as in the normal speaker. For the speaker in the fifth entry (s = 0.04, p = 0.008), the p weights are very small, and the association between word-layer and final semantic errors is further undermined. The simulations of this patient show 265 word-layer, but only 164 final-response, semantic errors, of which only 124 come from the word-layer “dog” node. Therefore, 141 of the word-layer semantic errors were converted to other response types, and 20 new semantic errors were created from responses other than “dog” at the word layer.

In summary, our simulations exhibited the three principles deemed necessary for the conflict-based monitor to be a plausible mechanism for error detection: Conflict is a good predictor for the occurrence of an error (detection sensitivity), when measured at the layer from which the error originates (layer specificity). Since this monitoring mechanism relies on the information produced by the speech production system, it functions best when the production system is healthy, and becomes increasingly less accurate as the production system becomes more degraded (integrity contingency).

Throughout the modeling section we have reported Cohen’s d as a measure of the usefulness of conflict in signaling that an error is probable. Certainly the high level of discriminability shown by the normal model’s values of Cohen’s d supports an effective detection mechanism, but ultimately the model must simulate natural error detection in human speakers. Estimates from connected-speech corpora place the natural detection rate of a neurologically healthy adult speaker at around 50% (Nooteboom, 2005; slightly higher in Nooteboom, 1980; note that there are no comparable data for single-word utterances). By “natural” detection rate, we mean the rate at which errors are followed by corrections or other acknowledgment of error, in the absence of any explicit instruction to detect or report errors.

Why are the real-life hit rates only moderately good, and does the model simulate this? To address these questions we augmented the model with a criterion that governs whether or not a certain degree of conflict indicates an error. Specifically, we used a model of incremental criterion placement in signal detection proposed by Kac (1962), which appears to account for detection data about as well as other models (Thomas, 1973). People learn to set their criteria by making detection responses and getting feedback on their accuracy. In our case, speakers determine whether or not they made a speech error, and then determine whether that assessment was correct or not (presumably by using the external channel for feedback). In Kac’s model, the criterion is initially placed in some neutral location. Whenever a signal is not detected, the criterion is lowered by a small amount, and whenever a false alarm occurs, it is raised by a similar amount. No adjustments are made on hits or correct rejections. After many trials, the criterion will hover around a location that reflects a probability matching strategy. The reason that Kac’s model yields probability matching is that, at equilibrium, the chance of a criterion increase due to a false alarm must equal the chance of a decrease due to a miss. From this, it follows that the overall probability of a “detect” response (in our case, detect that an error has occurred) will match the overall probability of a signal (in our case, the occurrence of an actual error).
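Kac’s incremental rule, as summarized above, is simple enough to state in a few lines. The sketch below is illustrative only: the step size, the starting criterion, the 5% error rate and the two Gaussian conflict distributions are assumptions, not the model’s conflict scores, so the resulting hit rate is not meant to reproduce the value reported for the model. What it does show is the probability-matching property: after many trials the proportion of “detect” responses approaches the error rate.

```python
import random


def simulate_trials(n, p_error, rng, mu_correct=3.0, mu_error=6.0, sd=1.0):
    """Yield (conflict, is_error) pairs from two assumed Gaussian conflict distributions."""
    for _ in range(n):
        is_error = rng.random() < p_error
        yield rng.gauss(mu_error if is_error else mu_correct, sd), is_error


def kac_criterion(trials, start=0.0, step=0.01):
    """Kac-style criterion placement: lower after a miss, raise after a false alarm,
    leave unchanged after hits and correct rejections."""
    criterion = start
    for conflict, is_error in trials:
        detect = conflict > criterion
        if is_error and not detect:       # miss
            criterion -= step
        elif detect and not is_error:     # false alarm
            criterion += step
    return criterion


rng = random.Random(42)
c = kac_criterion(simulate_trials(200_000, 0.05, rng))
fresh = list(simulate_trials(100_000, 0.05, rng))
p_detect = sum(conflict > c for conflict, _ in fresh) / len(fresh)
print(f"criterion = {c:.2f}, P(detect) = {p_detect:.3f}")  # P(detect) close to 0.05
```

Because the rule moves only on misses and false alarms, it comes to rest where the two are equally likely, which is exactly the condition under which the overall “detect” rate equals the error rate.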

Figure 3 shows the distributions of the −ln(diff(max)) measure of conflict for correct responses and semantic errors of the simulated normal speaker (Simulation I). We applied Kac’s model to these distributions and found the criterion to fall at a conflict level of 5.20. When this criterion is applied to the error distribution, the hit rate is 47%. Thus, the combination of the conflict model and the incremental criterion-setting model mimics the fact that normal speakers detect only about half of their errors. The reason for the moderate hit rate, in spite of the large difference between the error and correct conflict distributions, is the probability-matching property of the criterion placement algorithm. When errors are uncommon, criterion placement will be conservative; that is, the model will miss many errors but rarely generate false alarms. It must be noted, though, that Kac’s model only estimates how a criterion is derived when there are no specific demands influencing criterion placement. Speakers may (and most probably do) change their criteria for self-monitoring under different circumstances; the criterion is most probably lowered (to increase hit rates) when detection is important.
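Once a criterion is fixed, the hit and false-alarm rates are simply the proportions of error and correct trials whose conflict exceeds it. A minimal sketch; only the criterion of 5.20 is taken from the text, and the conflict values are toy numbers rather than the simulated distributions.

```python
def detection_rates(criterion, error_conflicts, correct_conflicts):
    """Return (hit rate, false-alarm rate) for a given conflict criterion."""
    hits = sum(x > criterion for x in error_conflicts) / len(error_conflicts)
    false_alarms = sum(x > criterion for x in correct_conflicts) / len(correct_conflicts)
    return hits, false_alarms


# Toy values (not the simulated distributions)
print(detection_rates(5.20, [4.0, 5.5, 6.0, 5.0], [1.0, 2.0, 5.3, 3.0, 4.0]))  # (0.5, 0.2)
```

The same function applied with a higher criterion would lower both rates together, which is the trade-off a speaker faces when adjusting the criterion to task demands.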

Figure 3.

Distribution of the −ln(diff(max)) measure of conflict for semantic errors and correct trials in a simulated normal speaker. The dashed line indicates the criterion derived from Kac’s (1962) model. The area to the right of the criterion consists of conflict values translated into error signals. This area indicates false alarms (≈ 1%) in the correct distribution (upper panel), and detected errors or hits (≈47%) in the error distribution (lower panel).

Just as hit rates were derived by applying the criterion to the error distributions, false alarms can be calculated by applying the criterion to the distribution of correct responses. This yields a 1% false-alarm rate for a normal speaker. Although 1% seems like a reasonably low value, correct trials vastly outnumber error trials, so one may doubt whether this rate is in fact realistic. Healthy adult speakers rarely have the impression of having erred when they have not. False alarms, however, may manifest as disfluencies. Hesitations and other breaks in the flow of speech are common, with an estimated rate of 6 (Bortfeld, Leon, Bloom, Schober, & Brennan, 2001) to 26 (Fox Tree, 1995) per 100 spoken words. These include overt repairs, disfluencies which clearly reflect error detection and correction, and covert repairs, disfluencies that may reflect monitoring and repair, but without any audible reparandum (Postma & Kolk, 1992). Levelt (1983) categorizes repeats (e.g., “I … I went…”) and filled pauses (e.g., “I…uh…went”) as covert repairs and raises the possibility that some of these are in fact false alarms generated by the monitoring system. Bortfeld et al. (2001) report average rates of 1.47 repeats and 2.56 filled pauses per 100 words (with slightly higher overall rates in the older population), which together make up about 4% of spoken words. If a sizable fraction of these result from the monitoring system falsely detecting an error, our simulated 1% false-alarm rate is not far off the mark. In the General Discussion we return to the question of the effectiveness of error detection, arguing that although we propose conflict-based monitoring as the default natural monitoring mechanism, error detection is in fact boosted by a number of ancillary mechanisms, each of which adds to the probability of successful detection.

We also examined how Kac’s (1962) criterion-setting algorithm affects the effectiveness of error monitoring through conflict when connection weights are weaker (as in aphasia). These effects are illustrated by semantic error detection as a function of the s weights. With p weights held constant at 0.04 (normal), we determined the correct and error distributions of conflict scores, and the resulting criteria, as the s weights dropped from 0.04 (normal) to 0.03, 0.02 and 0.015. For these respective weights, the model predicts a decrease in hit rates from 0.47 (normal) to 0.39, 0.35 and 0.34, accompanied by an increase in false alarm rates from 0.01 (normal) to 0.02, 0.07 and 0.11. In the section that follows, we investigate the predicted association between hit rates for error detection and production-derived connection weights in a sample of aphasic patients. False alarms, as mentioned above, have many different manifestations in natural speech, which makes them very difficult to quantify, especially in aphasic speech. Therefore, although we do not test specific quantitative predictions about false alarms in aphasia, we discuss relevant qualitative findings in our sample data.
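One way to summarize these predictions in a single sensitivity index is the signal-detection statistic d′ = z(hit rate) − z(false-alarm rate). This index is our addition for illustration, not a measure reported in the simulations; the sketch below simply applies it to the hit and false-alarm rates listed above, using the Python standard library’s `statistics.NormalDist`.

```python
from statistics import NormalDist


def d_prime(hit_rate: float, fa_rate: float) -> float:
    """Signal-detection sensitivity: z(hit rate) - z(false-alarm rate)."""
    z = NormalDist().inv_cdf
    return z(hit_rate) - z(fa_rate)


# Predicted (hit rate, false-alarm rate) for each s weight, with p fixed at 0.04.
predictions = {
    0.04: (0.47, 0.01),   # normal
    0.03: (0.39, 0.02),
    0.02: (0.35, 0.07),
    0.015: (0.34, 0.11),
}

for s, (hit_rate, fa_rate) in predictions.items():
    print(f"s = {s:<6} d' = {d_prime(hit_rate, fa_rate):.2f}")
```

Because hit rates fall while false-alarm rates rise, d′ decreases monotonically as the s weights weaken, from about 2.25 at normal strength to about 0.81 at s = 0.015.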

Patient data

The plausibility of the conflict-based theory was established by simulations showing a certain degree of detection sensitivity, as required under the first principle. The principles of layer specificity and integrity contingency, however, are stronger and lead to predictions that can be tested empirically. According to integrity contingency, the ability to detect errors must correlate with indices of the functionality of the production system (parameters s and p in our model) rather than with indices of comprehension. Layer specificity makes an even more specific prediction: the ability to detect semantically related errors must correlate with the s parameter (which determines conflict at the word layer), and the ability to detect phonologically related (mostly nonword) errors must correlate with the p parameter (which determines conflict at the phoneme layer). To test these predictions, we determined the percentage of detected errors in a group of aphasic patients who completed a picture naming task. We then assessed the correlations between the detection of different error types and measures of comprehension and production.

The first step in the patient study was to devise a reliable method for coding natural error detection in patients. For this step, data were obtained from 63 aphasic patients who completed the 175-item Philadelphia Naming Test (PNT; Roach, Schwartz, Martin, Grewal, & Brecher, 1996). On each trial, a single picture (a black-and-white line drawing) appears and remains on the screen until the patient responds, or for 30 seconds if there is no response. The task includes no specific instructions for self-correction, but patients do have time to detect and correct their errors. Error detection is therefore self-initiated and somewhat reflective of the natural detection process, rather than a task demand. In addition, the single-word responses required by this task eliminate contextual cues, making the task suitable for comparison with our model, which is a model of single-word (not sentence) production.

Patients’ responses (word by word, including disfluencies, pauses, tangents and incomplete responses) were transcribed twice: once on-line, by a trained expert at the Moss Research Institute during the testing session, and once off-line from the session’s recordings. The responses were then coded as correct or as one of a number of error categories. For the purpose of this study, the first author recoded all of the original trial transcriptions with regard to the nature of the errors made and the patients’ detection of those errors. A second coder, a graduate student trained on the coding scheme, independently recoded the transcriptions as well. The two sets of codes were then compared, yielding an initial agreement of 78%. Disagreements were resolved by consulting the coding criteria until full agreement was achieved.
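Initial agreement between two coders is the percentage of trials that received identical codes. A minimal sketch follows; it assumes that agreement was computed trial by trial over a single code per trial, which the text does not specify, and the function name is hypothetical.

```python
from typing import Sequence


def percent_agreement(coder_a: Sequence[str], coder_b: Sequence[str]) -> float:
    """Percentage of trials on which two coders assigned the same code."""
    if len(coder_a) != len(coder_b) or not coder_a:
        raise ValueError("both coders must code the same, non-empty set of trials")
    matches = sum(a == b for a, b in zip(coder_a, coder_b))
    return 100 * matches / len(coder_a)


first = ["correct", "semantic", "omission", "phonological"]
second = ["correct", "semantic", "omission", "semantic"]
print(percent_agreement(first, second))  # 3 of 4 trials match -> 75.0
```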

Coding error categories

For each picture, the response was coded as either correct, incorrect or omission. We assigned the “omission” code to trials in which no response was produced, as well as trials in which the patient gave a relevant description of the item or a multi-word response irrelevant to the description of the picture (e.g., “I can’t remember.”). A trial was not coded as an omission, though, when such responses were followed by a single-word response in the noun category. For example, if in response to the picture of a cat, the patient said, “I have one of those (pause) dog”, we accepted the response after the pause, coding it as a semantic error.
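The omission rule can be expressed as a short decision procedure. In this illustrative sketch, a trial’s utterance is assumed to be pre-segmented at pauses, and `is_noun` stands in for the coder’s judgment that a word belongs to the noun category. Both are assumptions, as is the choice to score the first qualifying single-word noun.

```python
from typing import Callable, Optional, Sequence


def scored_response(segments: Sequence[str],
                    is_noun: Callable[[str], bool]) -> Optional[str]:
    """Return the single-word noun to be scored, or None for an omission.

    segments: the utterance split at pauses (hypothetical representation).
    """
    for segment in segments:
        words = segment.split()
        if len(words) == 1 and is_noun(words[0]):
            return words[0]  # e.g. "dog" in "I have one of those (pause) dog"
    # No response, only descriptions or multi-word comments, or non-nouns
    # such as verbs: coded as an omission.
    return None


nouns = {"dog", "cat"}  # toy lexicon for illustration
print(scored_response(["I have one of those", "dog"], nouns.__contains__))  # dog
print(scored_response(["I can't remember."], nouns.__contains__))          # None
```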

If a response was coded as an error, its category was also specified. A detailed description of all error categories, with examples, is available upon request. The two important error categories are semantic and phonological errors. Semantic errors were defined as any noun related in meaning to the target word (e.g., “dog” for the target “cat”). In agreement with previous coding schemes using the PNT, verbs (e.g., “ride” for the target “bike”) did not count as semantic errors but were registered as omissions. Phonological errors were defined as any responses, word or nonword, that showed a clear phonological similarity to the target, as defined in Schwartz et al. (2006). Notably, most of the targets in the PNT do not have a rich phonological neighborhood (e.g., tractor, helicopter, pumpkin), so most phonological errors end up being nonwords. Dialectal variations in pronunciation and phonological distortions due to mild speech apraxia, as determined by the Apraxia Battery for Adults (Dabul, 2000), were not coded as errors.

Fragments that showed a clear phonological similarity to the target were also coded as phonological errors. For example, for the target “thermometer”, if the patient responded “thero-”, we counted that as a phonological error. However, one-phoneme fragments (e.g., “th-”) were counted as fragments, not as errors. Likewise, if the fragment had the correct sequence of phonemes but was simply incomplete (e.g., “thermo-”), it did not count as an error. In keeping with previous applications of PNT data, we coded fragments conservatively: where the two coders did not agree that a fragment showed clear similarity to the target, it was not coded as an error.
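The fragment rules can be sketched as follows. Fragments and targets are represented as lists of phoneme symbols, and `clearly_similar` is a hypothetical placeholder for the similarity criterion of Schwartz et al. (2006) together with the requirement that both coders agree. The phoneme transcriptions in the example are simplified for illustration.

```python
from typing import Callable, Sequence

Phonemes = Sequence[str]


def is_phonological_error(fragment: Phonemes, target: Phonemes,
                          clearly_similar: Callable[[Phonemes, Phonemes], bool]) -> bool:
    """Apply the conservative fragment-coding rules to one fragment."""
    if len(fragment) <= 1:
        return False  # one-phoneme fragment, e.g. "th-": a fragment, not an error
    if list(target[:len(fragment)]) == list(fragment):
        return False  # correct but incomplete sequence, e.g. "thermo-"
    # Otherwise an error only if clear similarity is agreed on; doubtful cases are not errors.
    return clearly_similar(fragment, target)


def shares_onset(fragment: Phonemes, target: Phonemes) -> bool:
    """Hypothetical stand-in for the coders' similarity judgment."""
    return fragment[0] == target[0]


# Simplified phoneme strings for "thermometer".
target = ["th", "er", "m", "o", "m", "e", "t", "er"]

print(is_phonological_error(["th", "er", "o"], target, shares_onset))       # "thero-": True
print(is_phonological_error(["th", "er", "m", "o"], target, shares_onset))  # "thermo-": False
print(is_phonological_error(["th"], target, shares_onset))                  # "th-": False
```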

Coding error detection

An error was coded as “detected” if the patient gave any indication of rejecting the response. This included a repair attempt, regardless of whether the repair was successful (e.g., “dog… cat” or “dog… cow” in response to the target “cat”), or simply a rejection of the response (e.g., “dog… no…”). Because the nature of repetitions is uncertain, we did not code repetition of the same response as detection (Fraundorf & Watson, 2008; Levelt, 1983; MacKay, 1976; Postma & Kolk, 1993).
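The detection criterion reduces to a simple rule. In this minimal sketch, the subsequent naming attempts on a trial and a flag for explicit rejection (such as “no”) are assumed to have been extracted from the transcript already; the function name and inputs are hypothetical.

```python
from typing import Sequence


def coded_as_detected(error: str, later_attempts: Sequence[str],
                      explicit_rejection: bool) -> bool:
    """True if the patient rejected the erroneous response or tried to repair it."""
    if explicit_rejection:  # e.g. "dog... no..."
        return True
    # Any different later attempt counts as a repair, successful ("dog... cat")
    # or not ("dog... cow"); repeating the same response does not count.
    return any(a.casefold() != error.casefold() for a in later_attempts)
```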

Other measures

In addition to error detection, a number of other measures were registered for each patient: two production measures and three comprehension measures. For production, the strength of the s and p weights for each patient was determined by fitting the interactive two-step model to the patient’s naming data. Briefly, this entails searching for the s and p values that maximize the model’s fit (i.e., minimize chi-square) to the patient’s naming response proportions in the correct category and in five error categories: semantic, phonologically related word (formal), mixed semantic and formal, unrelated word, and nonword errors (see Dell, Lawler, Harris, & Gordon, 2004 for details of the coding and fitting process). After the model is fitted, the deviation of its predicted proportions from each patient’s actual response proportions is calculated as the uncorrected root mean squared deviation (RMSD). For the current sample, the average RMSD was 0.021, a good fit and slightly better than the 0.024 found in Schwartz et al.’s (2006) study of picture naming in 94 patients.
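The fitting procedure can be sketched as a grid search. This is an illustration, not the procedure of Dell et al. (2004): `predict` is a hypothetical stand-in for a simulation of the two-step model that returns response proportions for given s and p, the grid is supplied by the caller, and expected counts are floored at a tiny value to avoid division by zero. RMSD is computed, uncorrected, over the six response categories.

```python
import itertools
import math
from typing import Callable, Sequence

# Order of the six response categories used for both counts and proportions.
CATEGORIES = ("correct", "semantic", "formal", "mixed", "unrelated", "nonword")

Predictor = Callable[[float, float], Sequence[float]]  # (s, p) -> proportions


def chi_square(observed_counts: Sequence[int], predicted_props: Sequence[float]) -> float:
    """Pearson chi-square of observed counts against predicted proportions."""
    n = sum(observed_counts)
    total = 0.0
    for obs, prop in zip(observed_counts, predicted_props):
        expected = max(n * prop, 1e-9)  # floor avoids division by zero
        total += (obs - expected) ** 2 / expected
    return total


def rmsd(observed_props: Sequence[float], predicted_props: Sequence[float]) -> float:
    """Uncorrected root mean squared deviation across categories."""
    squared = [(o - p) ** 2 for o, p in zip(observed_props, predicted_props)]
    return math.sqrt(sum(squared) / len(squared))


def fit_two_step(observed_counts: Sequence[int], predict: Predictor,
                 s_grid: Sequence[float],
                 p_grid: Sequence[float]) -> tuple[tuple[float, float], float]:
    """Return ((s, p), RMSD) for the grid point with the lowest chi-square."""
    best = min(itertools.product(s_grid, p_grid),
               key=lambda sp: chi_square(observed_counts, predict(*sp)))
    n = sum(observed_counts)
    observed_props = [count / n for count in observed_counts]
    return best, rmsd(observed_props, predict(*best))
```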

Comprehension was assessed at three levels. (1) Semantic comprehension was measured by the 52-item Pyramids and Palm Trees test (Howard & Patterson, 1992), in which, on each trial, a pictured item must be matched to the closer associate of two pictured choices (e.g., a picture of a pyramid must be matched to a picture of a palm tree or a picture of a pine tree). Abstract semantic knowledge, without access to the correct lexical item, is sufficient to complete this task successfully. (2) Lexical comprehension was measured by the 30-item Synonym Judgment test (Saffran, Schwartz, Linebarger, Martin, & Bochetto, 1988). On each trial, the subject views three written words that are also spoken aloud by the examiner and must decide which two are most similar in meaning (e.g., violin, fiddle, clarinet). This test requires semantic comprehension not only at the abstract level but also at the word level, because meaning must be accessed through word forms, without the aid of pictures. The test has two variants, noun and verb; because all PNT targets are nouns, we used only the noun variant in this study. Since lexical comprehension is a key component of the perceptual-loop monitor, we used a second test to corroborate the results obtained with the Synonym Judgment test: the Peabody Picture Vocabulary Test, Third Edition, Form A (Dunn & Dunn, 1997), in which the patient must match a heard word to the one of four pictures that best represents its meaning. (3) Finally, phonological comprehension was measured using the 40-item Phonological Discrimination test (N. Martin, 1996). In this task, the patient hears two items in immediate succession (20 lexical and 20 nonlexical trials) and must judge whether the items are the same or different. Non-identical pairs differ by a single onset or final phoneme. This task can be accomplished without access to meaning or even to lexical knowledge.

Refining the sample for a study of error detection

The goal of the analyses was to find which measures reliably predict the success or failure of error detection. To this end, we created a subsample of the 63 patients whose data were most likely to be coded accurately and to reveal error-detection behavior. Specifically, patients were excluded from the subsample if they met either of the following criteria:

Moderate to severe speech apraxia (as measured by the Apraxia Battery for Adults; Dabul, 2000): the reason for this exclusion is that in such patients a portion of the phonological errors may not be due to low p weights, but instead to the malfunction of the articulatory system (e.g., Romani, Olson, Semenza & Grana, 2002). In this case, detection of such errors is not expected to correlate with the strength of the patient’s p weight.

High rate of omissions: two scores were calculated for each patient. The raw score was the proportion of correct responses overall, and the normalized score was the proportion of correct responses after omission trials were excluded. If the two scores differed by more than 20%, the patient was excluded. One reason for excluding these high-omission patients is that when there are too many omissions, the model’s estimates of the s and p weights become unreliable (Dell et al., 2004). Another is that many silent omissions could be due to covert error detection, which speaks directly to the patient’s ability to detect errors, but we had no way of discriminating between omissions that did or did not reflect covert error detection.
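The two exclusion criteria can be sketched as a single filter. This is illustrative only: the apraxia severity labels are hypothetical stand-ins for scores on the Apraxia Battery for Adults, the 175-item default reflects the PNT, and the omission criterion follows the description above, excluding a patient when the normalized and raw proportions correct differ by more than 0.20.

```python
SEVERE_APRAXIA = {"moderate", "severe"}  # hypothetical severity labels


def omission_gap(n_correct: int, n_omissions: int, n_items: int = 175) -> float:
    """Normalized minus raw proportion correct (omission trials removed vs. kept)."""
    raw = n_correct / n_items
    normalized = n_correct / (n_items - n_omissions)
    return normalized - raw


def include_patient(n_correct: int, n_omissions: int, apraxia: str,
                    n_items: int = 175) -> bool:
    """Apply both exclusion criteria; True means the patient stays in the subsample."""
    if apraxia in SEVERE_APRAXIA:
        return False  # phonological errors may reflect articulation, not p weights
    if n_omissions >= n_items:
        return False  # nothing but omissions: weights cannot be estimated
    return omission_gap(n_correct, n_omissions, n_items) <= 0.20
```

For example, a patient with 100 correct responses and 10 omissions out of 175 items has a gap of about 0.035 and is retained, whereas 50 correct responses with 100 omissions give a gap of about 0.38 and lead to exclusion.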

After these criteria were enforced, 29 patients were selected. Their error detection behavior was assessed and related to the measures of comprehension and production ability.

Results

Correlations

Recall from simulation I that conflict at each layer was predictive of the error type originating mainly from that layer. We showed that the probability of the model making a semantic error was best predicted by the amount of conflict at the word layer while the probability of making a nonword error was best predicted by the amount of conflict in the phoneme layer. Moreover, simulation II showed that the reliability of the error signal varied as a function of the weights in the production system. If weights were strong, detection sensitivity was high. As weights decreased, so did the sensitivity with which errors were detected. Taken together, these two principles suggest that the strength of the s weights (which predicts conflict at the word layer) must be correlated with the detection of the semantic errors (which originate mainly from that layer). The same relationship should hold between the strength of the p weights and the detection of the phonological errors.