Abstract

The present study examined whether the compression of perceived visual space varies according to the type of environmental surface being viewed. To examine this issue, observers made exocentric distance judgments when viewing simulated 3D scenes. In 4 experiments, observers viewed ground and ceiling surfaces and performed either an L-shaped matching task (Experiments 1, 3, and 4) or a bisection task (Experiment 2). Overall, we found considerable compression of perceived exocentric distance on both ground and ceiling surfaces. However, the perceived exocentric distance was less compressed on a ground surface than on a ceiling surface. In addition, this ground surface advantage did not vary systematically as a function of the distance in the scene. These results suggest that the perceived visual space when viewing a ground surface is less compressed than the perceived visual space when viewing a ceiling surface and that the perceived layout of a surface varies as a function of the type of the surface.

Keywords: depth, space and scene perception, 3D surface and shape perception

Introduction

An important ability for human observers is to perceive the layout of three-dimensional space and use this information to guide behavior. Previous studies have examined how information from the physical environment is used to perceive 3D space (Blank, 1961; Foley, Ribeiro-Filho, & Da Silva, 2004; Koenderink, van Doorn, & Lappin, 2000; Luneberg, 1947). Significant distortions in perceived size, shape, and distance suggest that visual space is not Euclidean (e.g., Baird & Biersdorf, 1967; Gilinsky, 1951; Levin & Haber, 1993; Norman, Todd, Perotti, & Tittle, 1996; Ooi, Wu, & He, 2006; Wagner, 1985). For instance, Gilinsky (1951) had observers successively set equivalently appearing depth intervals on a ground plane and found that the depth extent of the interval increased with increased distance from the observer. A similar result was obtained by Blank (1961) using a bisection task. These results, considered together, suggest that visual space is not uniform but is compressed and is best described mathematically by a hyperbolic function.

Recent findings suggest that the compression of visual space may be influenced by factors such as the depth cues available to the observers (full cue vs. reduced cue) and whether eye, head, and body movements were allowed (Wagner, 1985). This suggests that the visual system may take advantage of (or be limited to) the information available in the environment and may construct a representation of visual space in accordance with the available information (Indow, 1991). Previously, we have found that the perception of distance varies according to the type of environmental surfaces present in the scene. Specifically, we found that observers organize the ordinal depth within a scene in accordance with a ground surface rather than other environmental surfaces (Bian, Braunstein, & Andersen, 2005, 2006). Given the importance of the ground surface in organizing the ordinal depth within a scene, we examined in the present study whether the compression of visual space is different for ground and ceiling surfaces.

The importance of the ground surface in perceiving the layout of 3D scenes was discussed approximately 1000 years ago in Alhazen’s (1989, translation) writings and more recently by Gibson (1950) in his “ground theory.” Recent studies have examined the unique role of the ground surface in the perceptual organization of the 3D space by comparing it directly with other environmental surfaces, especially the ceiling surface. For example, Epstein (1966) found that the “height in the picture” cue was less effective when a ceiling surface was presented as compared to when a ground surface was presented. McCarley and He (2000, 2001) found that visual search was faster on an implicit ground surface than on an implicit ceiling surface defined by binocular disparity. Their finding was extended by Morita and Kumada (2003) who showed superior visual search performance on a ground surface defined by pictorial cues. Champion and Warren (2010) also obtained an advantage of the ground surface as compared to the ceiling surface in 3D size estimation. Bian et al. (2005) found that when the ground surface and the ceiling surface provided conflicting information about the relative distance of objects in a scene, observers used the information on the ground surface to determine the layout of the scene. They referred to this result as the ground dominance effect. In a follow-up study, Bian et al. (2006) showed that the ground dominance effect was mainly due to the differences in the projections of ground and ceiling surfaces, with visual field location having a minor effect. Recent research has also found a ground dominance effect for older observers, although the magnitude of the effect was smaller than that found for younger observers (Bian & Andersen, 2008). 
Finally, using a change detection paradigm, Bian and Andersen (2010) found that changes to a ground surface or objects on a ground surface were detected faster than changes to a ceiling surface or objects attached to a ceiling surface and that this advantage was mainly due to superior encoding, rather than retrieval and comparison, of ground surface information.

The unique role of the ground surface in the perceptual organization of 3D scenes is generally attributed to the ground surface being universal whereas other environmental surfaces are present only in artificial environments, such as buildings (Gibson, 1950). The ground surface supports almost all objects and the locomotion of most land-dwelling animals either directly (Gibson, 1950) or indirectly through a series of “nested contact relations” (Meng & Sedgwick, 2001, 2002). Through evolution, our visual system may have been adapted to the perspective structure of the ground surface and, consequently, is able to process the information on the ground surface more efficiently as compared to other environmental surfaces (McCarley & He, 2000). One explanation for this advantage was proposed by He and Ooi (2000). According to their “quasi-2D” theory, the visual system may encode the location of objects on a common visual surface using a quasi-2D coordinate system instead of a 3D Cartesian coordinate system. The benefit of this approach is to reduce computational demand when encoding a representation of the scene. It is possible that the degree to which the visual system uses this quasi-2D coordinate system may be greater for a ground surface than for other environmental surfaces. As a result, the encoding of object locations and relative distance between objects on a ground surface is more veridical than on a ceiling surface.

These studies, considered together, suggest that the perceived visual space when viewing a ground surface may be different from the perceived visual space when viewing a ceiling surface. Specifically, the perceived distance on a ceiling surface may be more compressed than on a ground surface. One recent study by Thompson, Dilda, and Creem-Regehr (2007) showed that the perceived egocentric distance on a ceiling surface, measured using a blind-walking task, was as accurate as that on a ground surface. In the current study, we used a perceptual matching task to examine exocentric distance judgments on a ceiling as compared to a ground surface and to examine whether the compression of visual space when viewing a ceiling surface differed from the compression of visual space when viewing a ground surface.

The experiments were conducted using computer-generated 3D displays in which texture and motion parallax information was present. In Experiment 1, we used an L-shaped matching task similar to that used by Feria, Braunstein, and Andersen (2003) to examine whether exocentric distance judgments were more compressed on a ceiling surface than on a ground surface. In addition, we were interested in whether the difference between the two surfaces in the compression of perceived visual space varied as a function of distance in the scene. In Experiment 2, we used a bisection task and examined whether the results obtained in Experiment 1 were due to a difference in perceived length in the frontal-parallel plane between the ground surface and the ceiling surface. In Experiment 3, we examined whether linear perspective was necessary to produce the ground surface advantage in perceived exocentric distance. In Experiments 1–3, a single environmental surface was presented. In Experiment 4, we examined whether similar results would occur when both surfaces were presented in the scene.

Experiment 1

In Experiment 1, we used an L-shaped matching task similar to that used in Feria et al. (2003) to examine whether judged exocentric distance varied based on the presence of a ground or ceiling surface. Since previous studies using visual matching tasks have found a large compression of depth (e.g., Loomis, Da Silva, Fujita, & Fukusima, 1992), a more accurate response would mean less compression of perceived visual space. In addition, previous research has found that compression of a depth interval increased systematically as a function of distance in the scene (Levin & Haber, 1993; Loomis et al., 1992; Loomis & Philbeck, 1999; Norman et al., 1996; Ooi et al., 2006), suggesting a distortion of perceived visual space. In Experiment 1, we also manipulated the distance of the L-shape from the observers and examined whether the difference in judged depth extent on the two surfaces varied as a function of distance in the scene.

On each trial, three poles were attached to either a ground surface or a ceiling surface, forming an inverse “L” shape. The task of the observers was to adjust the horizontal arm of the “L” until it matched the arm of the “L” that extended in depth. If perceived depth is less compressed when viewing a ground surface than when viewing a ceiling surface, then the adjusted ratio of the L-shape should be more accurate when a ground surface is present than when a ceiling surface is present. Previous studies have found that greater depth was perceived when stimuli with motion parallax information were presented as compared to when stationary stimuli were presented (Gibson, Gibson, Smith, & Flock, 1959; Rogers & Graham, 1979; Smith & Smith, 1963). In the present study, we manipulated motion parallax information and examined whether it has a differential effect on the perceived depth when viewing ground and ceiling surfaces.

Methods

Observers

The observers were 12 undergraduate students (6 males and 6 females) from the University of California, Riverside. All observers were paid for their participation, were naive regarding the purpose of the experiment, and had normal or corrected-to-normal visual acuity.

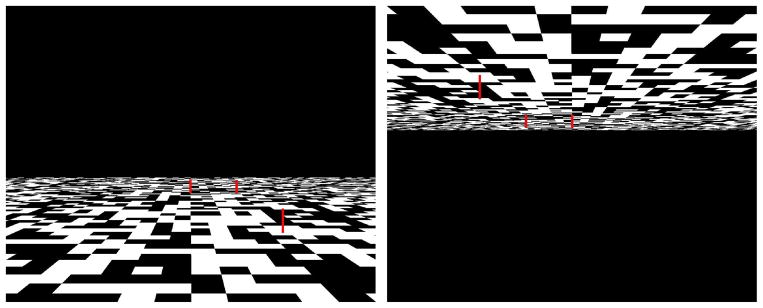

Stimuli

The stimuli were computer-generated 3D scenes composed of either a ground or ceiling surface with a 64 × 64 random black–white rectangle texture. The simulated dimensions of the surface were 19.2 m × 34.3 m and each rectangle measured 30 cm × 53.6 cm. The average luminance of the white rectangles was 60.8 cd/m². The simulated distances from the observer to the near and far ends of the plane were 571 cm and 4000 cm, respectively (the calculation of the scene dimensions was based on an eye height of 120 cm). Three red vertical poles were attached to the surface and formed an inverse L-shape (see Figure 1). The first pole was positioned close to the observers (“the front pole”), the second pole was positioned directly behind the first pole (“the back pole”), and the third pole was positioned either to the left or to the right side of the second pole (“the side pole”). The simulated depth interval between the front pole and the back pole was 12 m, subtending a visual angle of 3.39° and 2.18° when the front pole was 12 m and 16 m away from the observer, respectively. The location of the front and back poles was fixed, whereas the side pole could be adjusted horizontally by the observer. On each trial, the height of each pole varied randomly between 24 cm and 44 cm, and the width of each pole varied randomly between 3.5 cm and 8 cm, in order to prevent the use of size information as a depth cue. The initial position of the side pole varied randomly between 50 cm and 100 cm from the back pole. When motion parallax information was available, the whole scene oscillated horizontally back and forth at an average speed of 90 cm/s. The actual speed in each frame was determined by a sine-wave function. The duration of each cycle was 8 s (480 frames).
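The visual angles quoted above can be checked with a short calculation. The following is a minimal sketch, not the rendering code used in the study. It assumes a flat surface, the 120-cm eye height stated above, and that the approximately 19% magnification of the collimating lens (see Apparatus) scales visual angle linearly. The linear scaling, the constant names, and the function name are assumptions made for this illustration.

```python
import math

EYE_HEIGHT_M = 1.2          # simulated eye height stated in the text
LENS_MAGNIFICATION = 1.19   # assumption: ~19% magnification scales angles linearly


def depth_interval_angle_deg(front_m, interval_m, magnification=LENS_MAGNIFICATION):
    """Visual angle (deg) between the bases of the front and back poles."""
    # Angle of each pole base below (ground) or above (ceiling) the horizon.
    near = math.atan2(EYE_HEIGHT_M, front_m)
    far = math.atan2(EYE_HEIGHT_M, front_m + interval_m)
    return math.degrees(near - far) * magnification


for front in (12, 16):
    print(front, round(depth_interval_angle_deg(front, 12), 2))
# 12 3.39
# 16 2.18
```

Under these assumptions the sketch gives the two angles reported for the 12-m interval. Without the magnification factor the same geometry gives about 2.85° and 1.84°.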

Figure 1.

An example of the stimuli used in Experiment 1. (a) A ground surface. (b) A ceiling surface.

Design

Four independent variables were manipulated: (1) surface type (ground or ceiling), (2) motion parallax information (present or absent), (3) simulated distance of the front pole to the observers (“front pole distance,” 8 m, 12 m, 16 m, or 20 m), and (4) simulated distance between the front and back poles (“depth interval,” 6 m or 8 m). The variable of motion parallax information was blocked and the order was counterbalanced across observers. In each block, each of the 16 combinations of surface type, front pole distance, and depth interval was presented for 6 replications, for a total of 96 trials. The side pole was to the left or right of the back pole with equal probability. Four practice trials (2 trials for each surface) were inserted at the beginning of each block. The order of the trials for each observer in each block was randomized.
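The blocked factorial design described above can be sketched as a trial list. This is an illustrative sketch only: the function name, the dictionary keys, and the use of a seeded random generator are assumptions, and the four practice trials at the start of each block are omitted.

```python
import itertools
import random

SURFACES = ("ground", "ceiling")
FRONT_POLE_DISTANCES_M = (8, 12, 16, 20)
DEPTH_INTERVALS_M = (6, 8)
REPLICATIONS = 6


def build_block(motion_parallax, seed=None):
    """Return a shuffled list of trial dictionaries for one block."""
    rng = random.Random(seed)
    conditions = itertools.product(SURFACES, FRONT_POLE_DISTANCES_M, DEPTH_INTERVALS_M)
    trials = [
        {
            "surface": surface,
            "front_pole_m": front,
            "depth_interval_m": interval,
            "motion_parallax": motion_parallax,  # blocked: constant within a block
            "side": rng.choice(("left", "right")),  # equal probability per trial
        }
        for surface, front, interval in conditions
        for _ in range(REPLICATIONS)
    ]
    rng.shuffle(trials)  # randomize trial order within the block
    return trials


block = build_block(motion_parallax=True, seed=1)
print(len(block))  # 2 x 4 x 2 conditions x 6 replications = 96
```

Counterbalancing the order of the two motion parallax blocks across observers would be handled outside this function, for example by alternating the block order for successive observers.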

Apparatus

The displays were presented on a 21-inch (53 cm) flat screen CRT monitor with a pixel resolution of 1024 by 768, controlled by a Dell Dimension XPS workstation running the Windows XP Professional operating system. The dimensions of the display on the monitor were 40.0 cm (W) × 30.0 cm (H), subtending a visual angle of 31.3° × 23.7°. The center of the monitor was 120 cm above the floor. A black viewing hood was placed in front of the monitor to cover the edges of the screen. A 19-cm-diameter glass collimating lens, which magnified the images by approximately 19%, was located between the observer and the monitor. The purpose of the collimating lens was to remove accommodation as a flatness cue and, thus, increase the perceived depth of the 3D scenes. The distance between the eyes and the collimating lens was approximately 10 cm and the distance from the eyes to the monitor was 85 cm. A chin rest was mounted at a position appropriate to this viewing distance. A Logitech Attack 3 joystick was used to control the position of the side pole.

Procedure

The experiment was run in a dark room. The observers viewed the display monocularly through the collimating lens with their head position fixed by a chin rest and one of their eyes (the weaker eye) covered by an eye patch. An eye height of 120 cm was used. The observers were instructed to use a joystick to adjust the position of the side pole such that the horizontal separation between the side pole and the back pole matched the perceived distance between the front pole and the back pole. Once satisfied with their response, the observer pressed a button on the joystick to proceed to the next trial. The judged distance between the side pole and the back pole was recorded.

Results and discussion

The aspect ratio between the adjusted horizontal extent and the simulated depth (the depth interval between the front and back poles) was measured. An accurate judgment would result in an aspect ratio of 1. Formally, the judgment is defined as

$$r = \frac{D'}{D} \qquad (1)$$

where r is the aspect ratio, D′ is the horizontal separation between the side and back poles, and D is the simulated depth interval between the front and back poles.

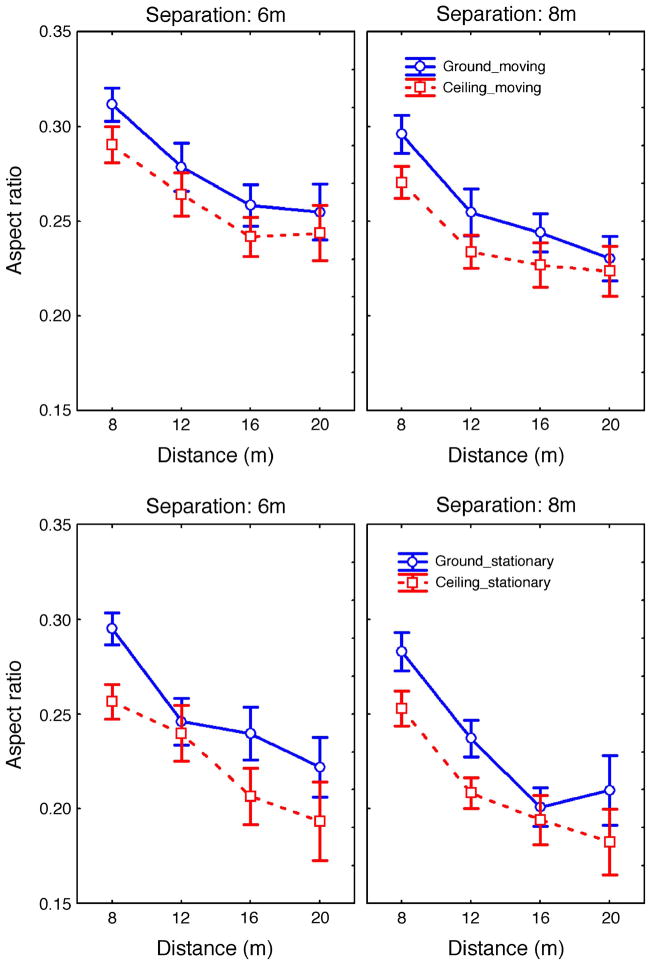

Overall, the aspect ratio for all observers varied from 0.20 to 0.33, suggesting a large compression of depth, a result consistent with previous research that used computer-generated scenes (Feria et al., 2003) and research conducted in real scenes (Beusmans, 1998, Figure 11). The aspect ratio for each observer in each condition was analyzed in a 2 (surface type) × 2 (motion parallax information) × 4 (front pole distance) × 2 (depth interval) analysis of variance (ANOVA). The main effect of surface type was significant (F(1, 11) = 18.83, p < 0.01). The average aspect ratio was 0.25 when the poles were located on a ground surface and 0.23 when the poles were located on a ceiling surface, suggesting that judged exocentric distance showed less distortion on a ground surface than on a ceiling surface. The main effect of motion parallax information was significant (F(1, 11) = 24.05, p < 0.01). According to this result, observers judged more depth with a moving scene (mean aspect ratio = 0.26) than with a stationary scene (mean aspect ratio = 0.23).
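For a within-subject factor with two levels, such as surface type, the repeated-measures F statistic with (1, n − 1) degrees of freedom equals the square of the paired t statistic computed on each observer's condition means. The sketch below illustrates that relation. The function name is hypothetical and the numbers passed to it are made-up placeholder values, not data from this study.

```python
from math import sqrt
from statistics import mean, stdev


def two_level_within_f(level_a, level_b):
    """F statistic and degrees of freedom for a two-level within-subject factor.

    Each list holds one mean per observer, averaged over all other factors.
    """
    diffs = [a - b for a, b in zip(level_a, level_b)]
    n = len(diffs)
    t = mean(diffs) / (stdev(diffs) / sqrt(n))  # paired t statistic
    return t * t, (1, n - 1)                    # F = t squared


# Placeholder values for four hypothetical observers (NOT study data).
ground = [0.25, 0.27, 0.22, 0.30]
ceiling = [0.23, 0.26, 0.21, 0.27]
f_value, df = two_level_within_f(ground, ceiling)
print(round(f_value, 2), df)
```

With 12 observers, as in Experiment 1, the same calculation yields the (1, 11) degrees of freedom reported for the main effects of surface type and motion parallax information.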

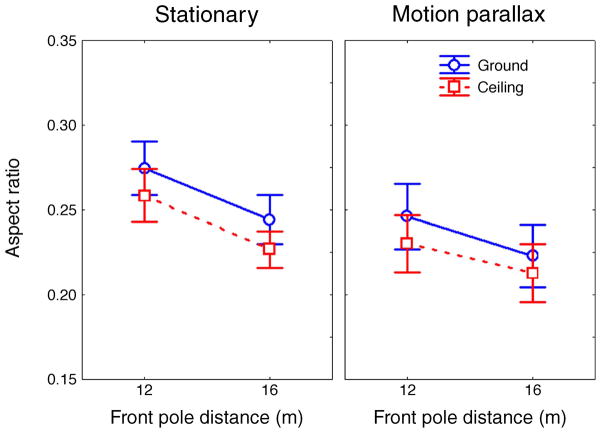

There were significant main effects of front pole distance (F(3, 33) = 28.08, p < 0.01) and depth interval (F(1, 11) = 66.92, p < 0.01), which were qualified by a significant 3-way interaction of surface type, front pole distance, and depth interval (F(3, 33) = 3.73, p < 0.05, see Figure 2). Follow-up analyses of this interaction showed that the interaction between surface type and front pole distance was significant when the depth interval was 6 m (F(3, 33) = 2.91, p < 0.05) but did not reach significance when the depth interval was 8 m (F(3, 33) = 2.68, p = 0.06). No other interactions reached significance (p > 0.05).

Figure 2.

Aspect ratio of judged depth and simulated depth as a function of surface type, motion parallax, front pole distance, and depth interval from Experiment 1. The top and bottom panels are the results for motion parallax and stationary scene conditions, respectively. Error bars represent ±1 standard error.

As the front pole distance increased from 8 m to 20 m, the judged aspect ratio decreased from 0.28 to 0.22. If the observers had responded to the projected size (retinal images) rather than the simulated size of the depth interval, then the aspect ratio would have been 0.15 for the 8-m condition and 0.06 for the 20-m condition. Because the judged ratios were well above these values, our results suggest that observers were responding to the simulated distance between the front pole and the back pole rather than to its projected size.
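The projected-size predictions can be reproduced from the scene geometry. The sketch below is an illustration under stated assumptions, not the authors' calculation: it assumes a flat surface, an eye height of 120 cm, and an observer who sets the horizontal extent so that it subtends the same visual angle as the depth interval. The function name is hypothetical.

```python
import math

EYE_HEIGHT_M = 1.2  # simulated eye height stated in the Methods


def retinal_match_aspect_ratio(front_m, interval_m):
    """Aspect ratio expected if the observer matched projected (angular) sizes."""
    back_m = front_m + interval_m
    # Angle subtended by the depth interval between the two pole bases.
    depth_angle = math.atan2(EYE_HEIGHT_M, front_m) - math.atan2(EYE_HEIGHT_M, back_m)
    # Horizontal extent at the back pole that subtends the same angle.
    line_of_sight_m = math.hypot(back_m, EYE_HEIGHT_M)
    matched_width_m = line_of_sight_m * math.tan(depth_angle)
    return matched_width_m / interval_m


for front in (8, 20):
    print(front, round(retinal_match_aspect_ratio(front, 6), 2))
# 8 0.15
# 20 0.06
```

Under these assumptions the 8-m depth interval gives the same two rounded values, so the predictions do not depend on which depth interval is used.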

Overall, the results are consistent with our prediction that judged depth on a ground surface was less compressed than on a ceiling surface. This ground surface advantage did not vary systematically as a function of distance in the scene. Our results are also consistent with previous studies showing a ground surface advantage in the perceptual organization of 3D scenes (e.g., McCarley & He, 2000).

Experiment 2

In Experiment 1, we found that the aspect ratio of an L-shape when observers adjusted the horizontal extent to match the perceived depth was smaller when viewing a ceiling surface than when viewing a ground surface, suggesting a difference in the compression of visual space for a ground as compared to a ceiling surface. These results, however, could be attributed to a difference in the perceived length in the frontal-parallel plane rather than a difference in the perceived depth when viewing the surfaces. In other words, it is possible that the perceived depth of ground and ceiling surfaces was the same but that the horizontal extent (the horizontal separation between the back pole and the side pole) was perceived to be smaller on the ground surface than on the ceiling surface.

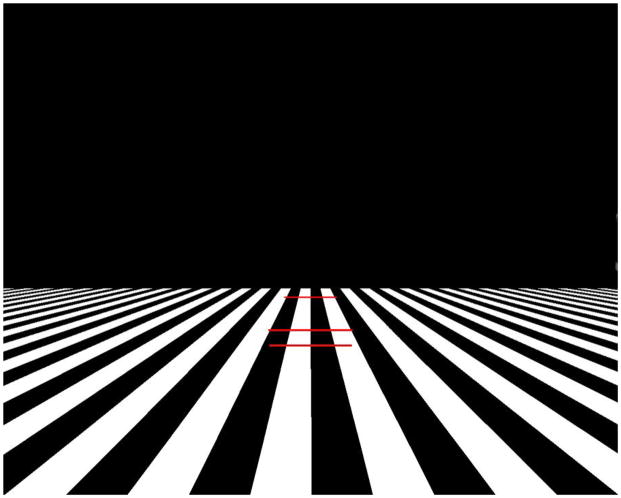

In Experiment 2, we examined this possibility with a bisection task. On each trial, three horizontal poles were positioned on either the ground or ceiling surface. The three poles were parallel to each other and separated in depth (see Figure 3). The observers adjusted the position of the middle pole to match the depth interval between the front and middle poles to the interval between the middle and back poles. If the results obtained in Experiment 1 were due to a difference in the perceived horizontal extent in the frontal-parallel plane, then we would expect similar bisection judgments when viewing ground and ceiling surfaces. On the other hand, if the results of Experiment 1 were due to a difference in perceived depth between the two surfaces, then a similar ground surface advantage should occur when performing a bisection task.

Figure 3.

An example of the stimuli with a ground surface used in Experiment 2.

Methods

Observers

The observers were 12 undergraduate students (6 males and 6 females) from the University of California, Riverside. All observers were paid for their participation, were naive regarding the purpose of the experiment, and had normal or corrected-to-normal visual acuity. None of the observers had participated in Experiment 1.

Stimuli

The stimuli were computer-generated 3D scenes composed of either a ground surface or a ceiling surface with a black and white striped texture. The simulated width of each stripe was 30 cm. A striped texture was used to prevent observers from matching the number of rectangles to perform the bisection task. The simulated distances from the observer to the near and far ends of the plane were 571 cm and 4000 cm, respectively (based on an eye height of 120 cm). Three red horizontal poles were attached to the surface (see Figure 3). The position of the front pole and the back pole was fixed and the position of the middle pole could be adjusted in depth. On each trial, the length of each pole varied randomly between 90 cm and 150 cm. The initial position of the middle pole varied randomly either closer to the front pole or closer to the back pole. When motion parallax information was available, the scene oscillated horizontally back and forth at an average speed of 90 cm/s. The actual speed for each frame was determined by a sine-wave function. The duration of each cycle was 8 s (480 frames).
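One way to realize the oscillation described above is sketched below. The text states only that the speed in each frame followed a sine-wave function, with an average speed of 90 cm/s and a cycle of 8 s (480 frames, which corresponds to 60 frames per second). The sketch assumes that the signed velocity is a single sinusoid over the cycle and that "average speed" means the mean absolute velocity. Both are assumptions, as are all names in the code.

```python
import math

CYCLE_S = 8.0
FRAMES_PER_CYCLE = 480
MEAN_SPEED_CM_S = 90.0

# For v(t) = V * sin(2*pi*t/T), the mean of |v| over a cycle is 2*V/pi.
PEAK_SPEED_CM_S = MEAN_SPEED_CM_S * math.pi / 2
# Integrating v(t) gives a lateral offset with amplitude V*T/(2*pi).
AMPLITUDE_CM = PEAK_SPEED_CM_S * CYCLE_S / (2 * math.pi)


def phase(frame):
    return 2 * math.pi * (frame % FRAMES_PER_CYCLE) / FRAMES_PER_CYCLE


def frame_velocity_cm_s(frame):
    """Signed horizontal velocity of the scene on a given frame."""
    return PEAK_SPEED_CM_S * math.sin(phase(frame))


def frame_offset_cm(frame):
    """Horizontal offset of the scene from the center of its travel."""
    return -AMPLITUDE_CM * math.cos(phase(frame))


print(FRAMES_PER_CYCLE / CYCLE_S)   # frame rate implied by the text
print(round(AMPLITUDE_CM, 1))       # half of the total lateral excursion
```

Under these assumptions the scene would travel 180 cm to either side of center, covering 720 cm per 8-s cycle, which is consistent with an average speed of 90 cm/s.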

Design

Four independent variables were manipulated: (1) surface type (ground or ceiling), (2) motion parallax information (present or absent), (3) front pole distance (12 m or 16 m), and (4) depth interval (6 m, 9 m, or 12 m). The variable of motion parallax information was blocked and the order was counterbalanced across observers. In each block, each of the 12 combinations of surface type, front pole distance, and depth interval was presented for 8 replications, for a total of 96 trials. Four practice trials (2 trials for each surface) were inserted at the beginning of each block. The order of trials for each observer in each block was randomized.

Apparatus and procedure

The apparatus and the procedure were the same as in Experiment 1 with the following exception. On each trial, the observers used a joystick to adjust the position of the middle pole until the distance between the front and middle poles matched the distance between the middle and back poles. Once satisfied with their responses, the observers pressed a button on the joystick to proceed to the next trial.

Results and discussion

The ratio of the judged distance from the middle pole to the back pole to the judged distance from the front pole to the middle pole was measured. An accurate bisection would result in a ratio of 1. A ratio larger than 1 would indicate a compression in perceived depth, because the physically larger far interval was judged to be equal to the near interval.
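The bisection measure can be written out as a small calculation. This is a minimal sketch with hypothetical function names. It computes the ratio from the simulated positions of the three poles and, conversely, the middle-pole position that corresponds to a given ratio.

```python
def bisection_ratio(front_m, back_m, middle_m):
    """Far interval (middle to back) divided by near interval (front to middle)."""
    return (back_m - middle_m) / (middle_m - front_m)


def middle_position_for_ratio(front_m, interval_m, ratio):
    """Simulated distance of the middle pole that yields a given ratio.

    near + far = interval and far = ratio * near, so near = interval / (1 + ratio).
    """
    return front_m + interval_m / (1 + ratio)


# An accurate bisection of a 6-m interval starting at 12 m puts the pole at 15 m.
print(bisection_ratio(12, 18, 15))           # 1.0
print(middle_position_for_ratio(12, 6, 1.0)) # 15.0
```

A ratio above 1 therefore corresponds to a middle pole set nearer to the front pole than the true midpoint.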

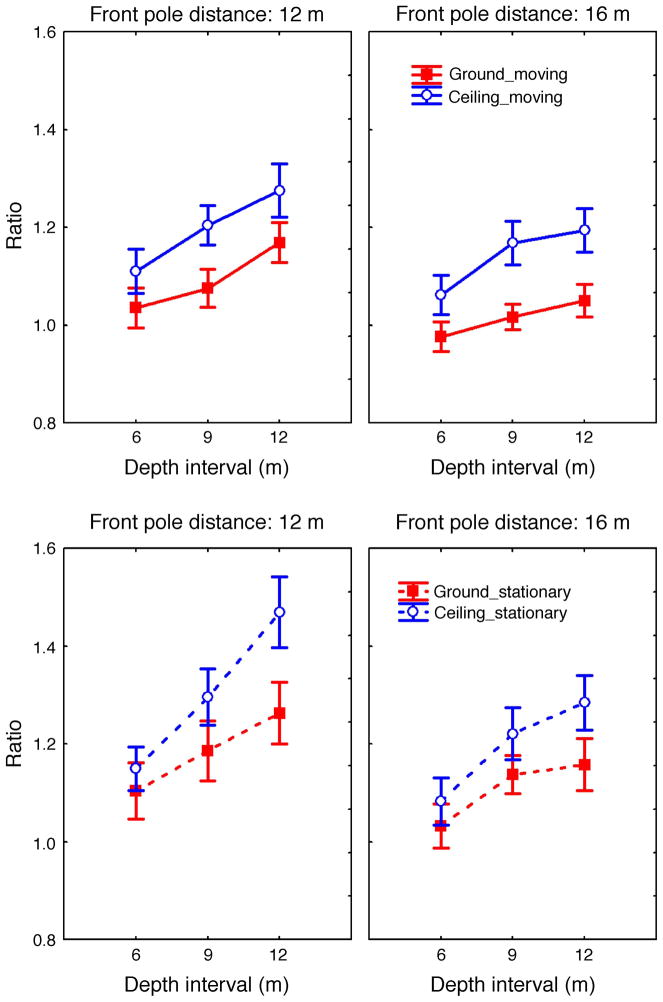

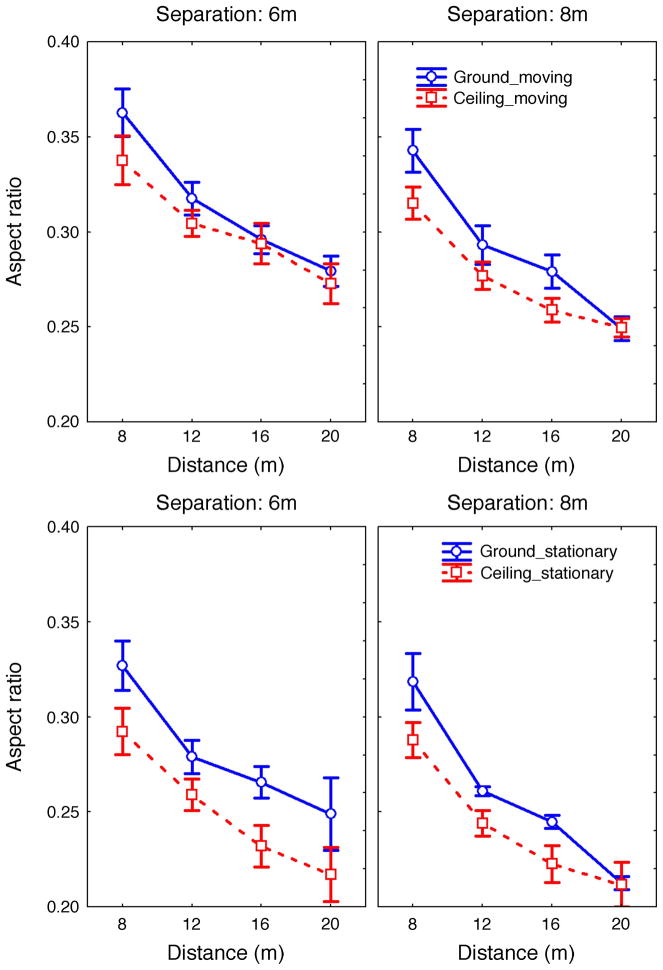

The ratio for each observer in each condition was analyzed in a 2 (surface type) × 2 (motion parallax information) × 2 (front pole distance) × 3 (depth interval) analysis of variance (ANOVA). There was a significant main effect of surface type (F(1, 11) = 26.19, p < 0.01). The average ratio was 1.10 when the poles were located on a ground surface and 1.21 when the poles were attached to a ceiling surface (see Figure 4). The main effect of motion parallax information was also significant (F(1, 11) = 12.74, p < 0.01), which is consistent with the results of Experiment 1. The average ratio was 1.20 when the scenes were stationary and 1.11 when the motion parallax information was available. Although the main effect of the front pole distance was significant (F(1, 11) = 51.01, p < 0.01), the direction of this effect was inconsistent with our prediction. The average ratio was 1.12 when the front pole distance was 16 m and 1.19 when the front pole distance was 12 m, suggesting less compression of depth as the front pole distance increased. This finding is inconsistent with previous research showing increased compression with increased viewing distance (although a result similar to ours was reported by Beusmans, 1998). There was a significant main effect of the depth interval (F(2, 22) = 100.92, p < 0.01). As the depth interval increased from 6 m to 12 m, the ratio increased from 1.07 to 1.23. Post-hoc comparisons (Tukey HSD test) indicated significant differences among all three levels.

Figure 4.

Ratio of far depth interval to near depth interval (bisection response) as a function of surface type, motion parallax information, front pole distance, and depth interval from Experiment 2. The top and bottom panels are the results for motion parallax and stationary scene conditions, respectively. Error bars represent ±1 standard error.

In addition, we found significant two-way interactions between surface type and depth interval (F(2, 22) = 10.44, p < 0.01) and between motion parallax information and depth interval (F(2, 22) = 15.34, p < 0.01), which were qualified by a three-way interaction of surface type, motion parallax information, and depth interval (F(2, 22) = 6.27, p < 0.01). There was also a significant two-way interaction between front pole distance and depth interval (F(2, 22) = 11.12, p < 0.01) and a significant three-way interaction of surface type, motion parallax information, and front pole distance (F(1, 11) = 7.09, p < 0.05). Figure 4 shows the average ratio as a function of surface type, motion parallax information, front pole distance, and depth interval. As shown in Figure 4, the average ratio increased with an increase in depth interval, and the amount of increase varied as a function of surface type, motion parallax information, and front pole distance. No other interactions were significant (p > 0.05).

Overall, the results indicated that exocentric distance judgments on a ground surface were less compressed than on a ceiling surface. These results are consistent with those of Experiment 1, suggesting that the ground surface advantage found in Experiment 1 was due to a difference in perceived depth rather than a difference in perceived horizontal extent in the frontal-parallel plane.

Experiment 3

In Experiments 1 and 2, we found a ground surface advantage in perceiving exocentric distance using two different tasks. In both experiments, the texture patterns on the two surfaces provided strong linear perspective information, which has been shown to be an effective depth cue (Andersen, Braunstein, & Saidpour, 1998; Gibson, 1950; Sedgwick, 1986; Wu, He, & Ooi, 2007). An important question is whether the ground surface advantage obtained in Experiments 1 and 2 is the result of the presence of a strong linear perspective cue. To examine this issue, we replaced the texture on the surfaces in Experiment 1 with an irregular texture pattern with the linear perspective cue removed. If the effects in Experiments 1 and 2 were due to the linear perspective cue being more effective on the ground surface than on the ceiling surface, then we would expect no effect of surface type in judging exocentric distance when the linear perspective information is removed.

Methods

Observers

The observers were 8 undergraduate students (3 males and 5 females) from the University of California, Riverside. All observers were paid for their participation, were naive with regard to the purpose of the experiment, and had normal or corrected-to-normal visual acuity. Two of the observers had participated in Experiment 2.

Stimuli

The displays were similar to those in Experiment 1 with the exception that the texture elements on the surfaces were elliptical black blobs on a white background (see Figure 5). The blobs varied between 25 cm and 230 cm in width and between 32 cm and 310 cm in depth.

Figure 5.

An example of the stimuli containing a ground surface used in Experiment 3.

Design

Four independent variables were manipulated: (1) surface type (ground or ceiling), (2) motion parallax information (present or absent), (3) front pole distance (12 m or 16 m), and (4) depth interval (6 m, 9 m, or 12 m). The variable of motion parallax information was blocked and the order was counterbalanced across observers. In each block, each of the 12 combinations of surface type, front pole distance, and depth interval was presented for 8 replications. The side pole was to the left or right of the back pole with equal probability. Four practice trials (2 trials for each surface) were inserted at the beginning of each block. The order of trials for each observer in each block was randomized.

Apparatus and procedure

The apparatus and procedure were the same as those used in Experiment 1.

Results and discussion

The aspect ratio of judged depth and simulated depth was measured with an accurate response resulting in an aspect ratio of 1.

The aspect ratio for each observer in each condition was analyzed in a 2 (surface type) × 2 (motion parallax information) × 2 (front pole distance) × 3 (depth interval) analysis of variance (ANOVA). The main effect of surface type was significant (F(1, 7) = 14.73, p < 0.01, see Figure 6). The average aspect ratio was 0.26 when the poles were located on a ground surface and 0.24 when the poles were located on a ceiling surface. The main effect of motion parallax information did not reach significance (F(1, 7) = 2.25, p = 0.18).

Figure 6.

Aspect ratio of judged depth and simulated depth as a function of surface type, motion information, and the front pole distance from Experiment 3. Error bars represent ±1 standard error.

There were also significant main effects of front pole distance (F(1, 7) = 167.08, p < 0.01) and depth interval (F(2, 14) = 87.53, p < 0.01), which were qualified by a significant interaction between these two variables (F(2, 14) = 4.08, p < 0.05). An examination of simple effects indicated that the difference between the two front pole distances was significant for all three depth interval conditions (F(1, 7) = 50.23, p < 0.01 for the 6-m condition, F(1, 7) = 27.56, p < 0.01 for the 9-m condition, and F(1, 7) = 39.82, p < 0.01 for the 12-m condition). The three-way interaction of surface type, motion parallax information, and front pole distance was significant (F(1, 7) = 5.86, p < 0.05, see Figure 6). No other interactions reached significance (p > 0.05).

Overall, the results replicated the findings of Experiments 1 and 2 and suggest that judged depth on a ground surface was less compressed than on a ceiling surface. The results of Experiments 1 and 3, which used different texture patterns, were quite similar. This suggests that the difference between the ground and ceiling surfaces in the degree of compression obtained in these experiments was not due to the type of texture used.

Experiment 4

In the previous experiments, we showed that visual space was less compressed when viewing a ground surface than when viewing a ceiling surface. A possible limitation of the previous experiments is that only one environmental surface was presented at a time. Under real-world viewing conditions, ground and ceiling surfaces are often both visible. An important question is whether the same pattern of results will be obtained when both surfaces are visible. Indeed, Thompson et al. (2007) presented observers with scenes containing both surfaces and found that egocentric distance judgments on a ceiling surface were as accurate as judgments on a ground surface. To examine this question, we replicated Experiment 1 with both ground and ceiling surfaces in the scene.

Methods

Observers

The observers were 9 undergraduate students (3 males and 6 females) from the University of California, Riverside. All observers were paid for their participation, were naive regarding the purpose of the experiment, and had normal or corrected-to-normal visual acuity. None of the observers had participated in any of the previous experiments.

Stimuli

The stimuli were similar to those of Experiment 1, except that both the ground surface and the ceiling surface were presented on each trial.

Design

The design was similar to that of Experiment 1, except that the “surface type” variable was replaced in Experiment 4 by the “surface containing the L configuration” variable.

Apparatus and procedure

The apparatus and procedure were similar to those used in Experiment 1. On each trial, the observer used a joystick to adjust the position of the side pole such that the horizontal separation between the side and back poles matched the perceived distance between the front and back poles.

Results and discussion

The aspect ratio between the judged distance and the simulated distance was measured. An accurate judgment would result in an aspect ratio of 1.
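As an illustration of this measure, the following minimal sketch computes the aspect ratio for a single trial. It assumes that the judged distance is the horizontal separation set by the observer and that the simulated distance is the depth interval between the front and back poles. The function name and the trial values are hypothetical and are not taken from the experiment.

```python
def aspect_ratio(judged_separation_m, simulated_depth_m):
    """Return judged distance divided by simulated distance.

    Assumption: judged distance is the horizontal separation the observer
    set, and simulated distance is the front-to-back depth interval.
    """
    if simulated_depth_m <= 0:
        raise ValueError("simulated depth must be positive")
    return judged_separation_m / simulated_depth_m


# Hypothetical trial: a 6 m depth interval matched with a 1.5 m separation.
print(aspect_ratio(1.5, 6.0))  # 0.25, i.e., judged depth is strongly compressed
# An accurate judgment gives a ratio of 1.
print(aspect_ratio(6.0, 6.0))  # 1.0
```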

The aspect ratio for each observer in each condition was analyzed in a 2 (surface containing the L configuration) × 2 (motion parallax information) × 4 (front pole distance) × 2 (depth interval) analysis of variance (ANOVA). The pattern of the results was similar to that obtained in Experiment 1. The main effect of surface containing the L configuration was significant (F(1, 8) = 6.24, p < 0.05). The average aspect ratio was 0.28 when the poles were located on a ground surface and 0.26 when the poles were located on a ceiling surface. In contrast, the aspect ratios in Experiment 1 were 0.25 when the poles were located on a ground surface and 0.23 when the poles were located on a ceiling surface. This suggests that the presence of both surfaces improved overall judged depth. The main effect of motion parallax information was significant (F(1, 8) = 24.90, p < 0.01) and indicated an increase in perceived depth when motion parallax was present. There was also a main effect of front pole distance (F(3, 24) = 18.83, p < 0.01) and a main effect of depth interval (F(1, 8) = 14.11, p < 0.01). According to these results, the aspect ratio decreased with increased front pole distance and with increased depth interval.
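To show where degrees of freedom such as F(1, 8) come from with 9 observers, the sketch below computes the F ratio for a single two-level within-subject factor. It relies on the standard identity that, for a two-level repeated-measures factor, F(1, n − 1) equals the square of the paired t statistic computed on each observer's mean in the two conditions (averaged over the other factors). The per-observer values are hypothetical and serve only to make the example runnable; this is not the analysis script used in the study.

```python
import math
import statistics


def two_level_within_f(cond_a, cond_b):
    """F ratio and degrees of freedom for a two-level within-subject factor.

    Each list holds one mean per observer, in the same observer order.
    For two levels, F(1, n - 1) is the squared paired t statistic.
    """
    if len(cond_a) != len(cond_b) or len(cond_a) < 2:
        raise ValueError("need paired data from at least two observers")
    diffs = [a - b for a, b in zip(cond_a, cond_b)]
    n = len(diffs)
    se = statistics.stdev(diffs) / math.sqrt(n)  # standard error of mean difference
    if se == 0:
        raise ValueError("differences have zero variance; F is undefined")
    t = statistics.mean(diffs) / se
    return t * t, (1, n - 1)


# Hypothetical per-observer mean aspect ratios (9 observers).
ground = [0.30, 0.27, 0.29, 0.26, 0.31, 0.25, 0.28, 0.27, 0.29]
ceiling = [0.27, 0.26, 0.26, 0.25, 0.28, 0.24, 0.27, 0.24, 0.27]
f_value, df = two_level_within_f(ground, ceiling)
print(f"F{df} = {f_value:.2f}")
```

Factors with more than two levels, such as front pole distance, require the full repeated-measures sums of squares rather than this shortcut.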

There was a significant interaction between surface containing the L configuration and motion parallax information (F(1, 8) = 8.28, p < 0.05, see Figure 7). An analysis of the simple effects showed that the difference between the ground surface and the ceiling surface was significant when the scenes were stationary (F(1, 8) = 10.49, p < 0.05) but not significant when the motion parallax information was presented (F(1, 8) = 2.52, p = 0.15). None of the other interactions reached significance.

Figure 7.

Aspect ratio of judged depth and simulated depth as a function of surface type, motion parallax, front pole distance, and depth interval from Experiment 4. The top and bottom panels are the results for motion parallax and stationary scene conditions, respectively. Error bars represent ±1 standard error.
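For readers who want to reproduce error bars of this kind, the sketch below computes a condition mean and ±1 standard error from per-observer values. It assumes the standard error is the sample standard deviation across observers divided by the square root of the number of observers; the source does not state which variant was used, and the data are hypothetical.

```python
import math
import statistics


def mean_and_sem(values):
    """Return (mean, standard error of the mean) for one condition."""
    n = len(values)
    if n < 2:
        raise ValueError("need at least two observations")
    # Sample standard deviation (n - 1 denominator) divided by sqrt(n).
    return statistics.mean(values), statistics.stdev(values) / math.sqrt(n)


# Hypothetical aspect ratios from 9 observers in one condition.
ratios = [0.30, 0.27, 0.29, 0.26, 0.31, 0.25, 0.28, 0.27, 0.29]
m, sem = mean_and_sem(ratios)
print(f"mean = {m:.3f}, error bar = [{m - sem:.3f}, {m + sem:.3f}]")
```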

Overall, the results were quite similar to the results obtained in Experiment 1, indicating that less compression of visual space occurred on a ground surface than on a ceiling surface. These results, thus, indicate that the difference in compression for ground and ceiling surfaces occurs regardless of whether a single surface or both surfaces are presented.

General discussion

In a series of four experiments, we examined whether perceived visual space is different for ground and ceiling surfaces. In Experiment 1, we used an L-shaped matching task and found that the judged depth on a ground surface was less compressed than on a ceiling surface. This effect did not systematically vary as a function of distance in the scene. These results, however, could be explained by a difference in perceived extent in the horizontal dimension rather than a difference in perceived depth of ground and ceiling surfaces. In Experiment 2, we used a bisection task to examine this possibility. We found that the results were consistent with those in Experiment 1, suggesting a difference between the ground surface and the ceiling surface in perceived exocentric distance rather than in perceived extent in the horizontal dimension. In Experiment 3, we examined whether this effect was dependent on linear perspective information. An L-shaped matching task was used with a random blob texture that did not contain a strong linear perspective component. Again, we found that the perceived depth was less compressed on a ground surface as compared to a ceiling surface, suggesting that this difference was not due to more effective linear perspective for ground surfaces. In Experiments 1 to 3, only one of two surfaces was presented on each trial. In Experiment 4, we examined whether the same pattern of results would be obtained when both surfaces were visible in the scene. The results were consistent with the previous experiments, suggesting that the ground surface advantage did not result from less consistent visual space when only one surface was present.

Our results, taken together, suggest that the visual space when viewing a ground surface is perceived as less compressed than when viewing a ceiling surface. This difference probably arises because the ground surface is encoded more efficiently by the visual system than the ceiling surface (McCarley & He, 2000, 2001). For instance, a 3D scene may be encoded in either a hierarchical structure or in a structure based on locally coded information. A hierarchical representation of 3D scenes could be organized with a background surface at the top level in the structure, followed by the relative distance of different objects on the background surface, and then by the distance of object parts relative to individual objects. A locally coded representation, on the other hand, encodes all the relative distances of different objects and the distances among object parts at a single level. The hierarchical representation could, thus, encode the spatial layout information more efficiently and more accurately than the locally encoded representation due to reduced demand for computation (see Bian & Andersen, 2010, for a further discussion). It is possible that the ground surface and the ceiling surface are encoded in different structures, or that they are both encoded hierarchically but to a different extent. He and Ooi (2000) also suggested that the visual system may encode objects on a common visual surface according to a quasi-2D coordinate system rather than a 3D coordinate system. An important goal of future research will be to examine this hypothesis in detail.

If visual space is less compressed when viewing a ground as compared to a ceiling surface, then egocentric distance judgments on a ground surface should be more accurate than on a ceiling surface. However, Thompson et al. (2007) found similar performance in judged egocentric distance for ground and ceiling surfaces. It is worth noting that there are several methodological differences between the present study and Thompson et al. (2007).

For instance, the current study used visual matching tasks, whereas Thompson et al. (2007) used a visually directed blind-walking task. Previous studies have found that visually directed tasks, such as blind walking, could produce more accurate egocentric distance judgments than indirect measures, such as verbal report (e.g., Elliott, 1986; Loomis et al., 1992; Ooi, Wu, & He, 2001; Thomson, 1983; Wu, Ooi, & He, 2004). Exocentric distance measured with blind walking also seems to be free of foreshortening (increased compression of perceived depth with increased viewing distance). That is, the errors made in a blind-walking task did not vary as a function of distance in the scene (Loomis et al., 1992; Philbeck, O’Leary, & Lew, 2004). This suggests that the spatial representation mediating visually directed responses may be more accurate than that mediating a verbal or visual matching response (Philbeck et al., 2004). Future research should examine whether there is a difference in exocentric distance judgments between a ground surface and a ceiling surface using visually directed tasks, such as a blind-walking task.

The discrepancy in results between the current study and Thompson et al. (2007) may also reflect a dissociation between perceived egocentric distance and exocentric distance (Loomis, Philbeck, & Zahorik, 2002). Previous research has found that perceived egocentric distance at near and intermediate distances was relatively accurate when measured with visually directed tasks such as blind walking (e.g., Loomis et al., 1992; Sinai, Ooi, & He, 1998; Thomson, 1983). Other studies, however, found large distortions in exocentric distance judgments even when measured with visually directed tasks (Beusmans, 1998; Levin & Haber, 1993; Loomis et al., 2002; Norman, Crabtree, Clayton, & Norman, 2005; Norman et al., 1996; Philbeck, 2000; Wagner, 1985). It was suggested that the perception of locations in a scene and the perception of depth magnitude are based on different and possibly independent visual functions (Loomis et al., 2002). Previous studies have suggested that the recovery of scene layout information occurs at an early level of visual processing (Champion & Warren, 2010; He & Nakayama, 1992). Accurate judgments of egocentric distance can be recovered within 150 ms and do not require focused attention (Philbeck, 2000). These results suggest that the recovery of egocentric distance information occurs at an early level of processing and is overall accurate within intermediate distances. Recovery of exocentric distance, on the other hand, may take place at a later stage of visual processing and is subject to errors due to the complexity of the task.

He, Wu, Ooi, Yarbrough, and Wu (2004) proposed that the visual system uses a sequential surface integration process (SSIP) to construct an accurate representation of the ground surface from near to intermediate distance. The SSIP emphasizes the importance of an attentional selection process in the integration of perceived depth on the ground surface. For instance, Wu, He, and Ooi (2008) showed that more depth was perceived when observers were required to focus attention on the near as compared to intermediate or far distances. Could the present results be due to the observers allocating more attention to the ground surface as compared to the ceiling surface?

There are two arguments that are inconsistent with this hypothesis. First, in Experiments 1 to 3, only one surface was presented on each trial and observers were instructed to focus on the surface to complete the task. In Experiment 4, when both the ground and ceiling surfaces were presented on each trial, the pattern of results was very similar to that of Experiments 1–3, suggesting that observers did not vary their attention based on the environmental surface present in the scene. The consistency in standard errors of the judged aspect ratios between the ground and ceiling surfaces (see Figures 2, 4, and 6) also suggests that observers did not differentially allocate attention to the two surfaces. Second, if this hypothesis were true, then inaccurate judgments of egocentric distance should occur when viewing a ceiling surface. This result did not occur in the study by Thompson et al. (2007). Thus, we do not believe that our results could be explained by a difference in the allocation of attentional resources to different locations in the display.

The current study used computer-generated stimuli that simulated scenes. The results indicated a significant compression of perceived depth on both the ground and ceiling surfaces. The average adjusted aspect ratio of the L-shape was 25% for the ground surface and 23% for the ceiling surface in Experiment 1. One possible reason for such a large compression is that the depth information in computer-generated scenes is not as rich as that present when viewing real scenes. For instance, Thompson et al. (2004) showed that observers made accurate egocentric distance judgments when viewing stimuli in real scenes but underestimated egocentric distance by more than 50% when viewing stimuli in virtual environments with high graphics quality. This reduction in perceived depth when viewing computer-generated scenes might be due to limitations in the information for depth such as the presence of microstructure in the texture gradients or the availability of shading or cast shadows. An important topic for future research will be to examine whether perceived visual space when viewing a ground surface is less compressed than when viewing a ceiling surface when observers are viewing real scenes under full cue conditions.

Ooi and He (2007) proposed that the intrinsic representation of the ground surface was an upward slanted surface, especially under non-optimal viewing conditions with reduced depth cues. They showed that the underestimation of egocentric distance could be used to accurately model subject performance by adding a slant error to Gilinsky’s (1951) equation. An interesting topic for future research will be to examine whether our results reflect a difference in the slant error between the representations of the ground and ceiling surfaces.
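As a point of reference, Gilinsky’s (1951) equation is commonly written as d = A·D / (A + D), where D is physical distance, d is perceived distance, and A is the limiting perceived distance. The minimal sketch below evaluates only this baseline form and shows that equal physical depth intervals shrink perceptually as they move farther away. The value of A is a hypothetical choice for illustration, and the slant-error term proposed by Ooi and He (2007) is not reproduced here.

```python
def gilinsky_perceived_distance(physical_m, limit_m):
    """Perceived distance d = A * D / (A + D) (Gilinsky, 1951).

    physical_m is D; limit_m is A, the asymptote of perceived distance.
    """
    if physical_m < 0 or limit_m <= 0:
        raise ValueError("distance must be non-negative and limit positive")
    return limit_m * physical_m / (limit_m + physical_m)


def perceived_interval(near_m, far_m, limit_m):
    """Perceived extent of the depth interval between two distances."""
    return (gilinsky_perceived_distance(far_m, limit_m)
            - gilinsky_perceived_distance(near_m, limit_m))


A = 30.0  # hypothetical limiting distance in metres, for illustration only
# The same 6 m physical interval at two viewing distances.
near_interval = perceived_interval(4.0, 10.0, A)
far_interval = perceived_interval(10.0, 16.0, A)
print(f"4-10 m: {near_interval:.2f} m, 10-16 m: {far_interval:.2f} m")
```

Under this form, the farther interval is always perceived as shorter than the nearer one, which corresponds to the foreshortening described earlier in the discussion.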

In summary, the results of the current study show that perceived visual space when viewing a ground surface is less compressed than when viewing a ceiling surface. These results are consistent with the results of previous studies showing superior encoding of information on a ground surface (Bian et al., 2005, 2006; Champion & Warren, 2010; McCarley & He, 2000, 2001; Morita & Kumada, 2003), suggesting a unique role of the ground surface in the perceptual organization of 3D scenes.

Acknowledgments

This research was supported by NIH AG031941 and EY18334.

Footnotes

Commercial relationships: none.

Contributor Information

Zheng Bian, Department of Psychology, University of California, Riverside, Riverside, CA, USA.

George J. Andersen, Department of Psychology, University of California, Riverside, Riverside, CA, USA.

References

- Alhazen I. Book of optics. In: Sabra AI, translator. The optics of Ibn-Haytham. Vol. 1. London: University of London, Warburg Institute; 1989. pp. 149–170. (Date of original work unknown, approx. 11th century)

- Andersen GJ, Braunstein ML, Saidpour A. The perception of depth and slant from texture in three-dimensional scenes. Perception. 1998;27:1087–1106. doi: 10.1068/p271087.

- Baird JC, Biersdorf WR. Quantitative functions for size and distance judgments. Perception & Psychophysics. 1967;2:161–166.

- Beusmans JMH. Optic flow and the metric of the visual ground plane. Vision Research. 1998;38:1153–1170. doi: 10.1016/s0042-6989(97)00285-x.

- Bian Z, Andersen GJ. Aging and the perceptual organization of 3-D scenes. Psychology & Aging. 2008;23:342–352. doi: 10.1037/0882-7974.23.2.342.

- Bian Z, Andersen GJ. The advantage of a ground surface in the representation of visual scenes. Journal of Vision. 2010;10(8):16, 1–19. doi: 10.1167/10.8.16. http://www.journalofvision.org/content/10/8/16.

- Bian Z, Braunstein ML, Andersen GJ. The ground dominance effect in the perception of 3-D layout. Perception & Psychophysics. 2005;67:815–828. doi: 10.3758/bf03193534.

- Bian Z, Braunstein ML, Andersen GJ. The ground dominance effect in the perception of relative distance in 3-D scenes is mainly due to characteristics of the ground surface. Perception & Psychophysics. 2006;68:1297–1309. doi: 10.3758/bf03193729.

- Blank AA. Curvature of binocular visual space: An experiment. Journal of the Optical Society of America. 1961;51:335–339. doi: 10.1364/josa.43.000717.

- Champion RA, Warren PA. Ground-plane influences on size estimation in early visual processing. Vision Research. 2010;50:1510–1518. doi: 10.1016/j.visres.2010.05.001.

- Elliott D. Continuous visual information may be important after all: A failure to replicate Thomson (1983). Journal of Experimental Psychology: Human Perception and Performance. 1986;12:388–391. doi: 10.1037//0096-1523.12.3.388.

- Epstein W. Perceived depth as a function of relative height under three background conditions. Journal of Experimental Psychology. 1966;72:335–338. doi: 10.1037/h0023630.

- Feria CS, Braunstein ML, Andersen GJ. Judging distance across texture discontinuities. Perception. 2003;32:1423–1440. doi: 10.1068/p5019.

- Foley JM, Ribeiro-Filho NP, Da Silva JA. Visual perception of extent and the geometry of visual space. Vision Research. 2004;44:147–156. doi: 10.1016/j.visres.2003.09.004.

- Gibson EJ, Gibson JJ, Smith OW, Flock H. Motion parallax as a determinant of perceived depth. Journal of Experimental Psychology. 1959;58:40–51. doi: 10.1037/h0043883.

- Gibson JJ. The perception of the visual world. Boston: Houghton-Mifflin; 1950.

- Gilinsky AS. Perceived size and distance in visual space. Psychological Review. 1951;58:460–482. doi: 10.1037/h0061505.

- He ZJ, Nakayama K. Surfaces versus features in visual search. Nature. 1992;359:231–233. doi: 10.1038/359231a0.

- He ZJ, Ooi TL. Perceiving binocular depth with reference to a common surface. Perception. 2000;29:1313–1334. doi: 10.1068/p3113.

- He ZJ, Wu B, Ooi TL, Yarbrough G, Wu J. Judging egocentric distance on the ground: Occlusion and surface integration. Perception. 2004;33:789–806. doi: 10.1068/p5256a.

- Indow T. A critical review of Luneburg’s model with regard to global structure of visual space. Psychological Review. 1991;98:430–453. doi: 10.1037/0033-295x.98.3.430.

- Koenderink JJ, van Doorn AJ, Lappin JS. Direct measurement of the curvature of visual space. Perception. 2000;29:69–79. doi: 10.1068/p2921.

- Levin CA, Haber RN. Visual angle as a determinant of perceived interobject distance. Perception & Psychophysics. 1993;54:250–259. doi: 10.3758/bf03211761.

- Loomis JM, Da Silva JA, Fujita N, Fukusima SS. Visual space perception and visually directed action. Journal of Experimental Psychology: Human Perception and Performance. 1992;18:906–921. doi: 10.1037//0096-1523.18.4.906.

- Loomis JM, Philbeck JW. Is the anisotropy of perceived 3-D shape invariant across scale? Perception & Psychophysics. 1999;61:397–402. doi: 10.3758/bf03211961.

- Loomis JM, Philbeck JW, Zahorik P. Dissociation between location and shape in visual space. Journal of Experimental Psychology: Human Perception and Performance. 2002;28:1202–1212.

- Luneberg RK. Mathematical analysis of binocular vision. Princeton, NJ: Princeton University Press; 1947.

- McCarley JS, He ZJ. Asymmetry in 3-D perceptual organization: Ground-like surface superior to ceiling-like surface. Perception & Psychophysics. 2000;62:540–549. doi: 10.3758/bf03212105.

- McCarley JS, He ZJ. Sequential priming of 3-D perceptual organization. Perception & Psychophysics. 2001;63:195–208. doi: 10.3758/bf03194462.

- Meng JC, Sedgwick HA. Distance perception mediated through nested contact relations among surfaces. Perception & Psychophysics. 2001;63:1–15. doi: 10.3758/bf03200497.

- Meng JC, Sedgwick HA. Distance perception across spatial discontinuities. Perception & Psychophysics. 2002;64:1–14. doi: 10.3758/bf03194553.

- Morita H, Kumada T. Effects of pictorially-defined surfaces on visual search. Vision Research. 2003;43:1869–1877. doi: 10.1016/s0042-6989(03)00300-6.

- Norman JF, Crabtree CE, Clayton AM, Norman HF. The perception of distances and spatial relationships in natural outdoor environments. Perception. 2005;34:1315–1324. doi: 10.1068/p5304.

- Norman JF, Todd JT, Perotti VJ, Tittle JS. The visual perception of three-dimensional length. Journal of Experimental Psychology: Human Perception and Performance. 1996;22:173–186. doi: 10.1037//0096-1523.22.1.173.

- Ooi TL, He ZJ. A distance judgment function based on space perception mechanisms: Revisiting Gilinsky’s (1951) equation. Psychological Review. 2007;114:441–454. doi: 10.1037/0033-295X.114.2.441.

- Ooi TL, Wu B, He ZJ. Distance determined by the angular declination below the horizon. Nature. 2001;414:197–200. doi: 10.1038/35102562.

- Ooi TL, Wu B, He ZJ. Perceptual space in the dark affected by the intrinsic bias of the visual system. Perception. 2006;35:605–624. doi: 10.1068/p5492.

- Philbeck JW. Visually directed walking to briefly glimpsed targets is not biased toward fixation location. Perception. 2000;29:259–272. doi: 10.1068/p3036.

- Philbeck JW, O’Leary S, Lew ALB. Large errors, but no depth compression, in walked indications of exocentric extent. Perception & Psychophysics. 2004;66:377–391. doi: 10.3758/bf03194886.

- Rogers B, Graham M. Motion parallax as an independent cue for depth perception. Perception. 1979;8:125–134. doi: 10.1068/p080125.

- Sedgwick HA. Space perception. In: Boff KR, Kaufman L, Thomas JP, editors. Handbook of perception and human performance. New York: Wiley; 1986. pp. 21-1–21-57.

- Sinai MJ, Ooi TL, He ZJ. Terrain influences the accurate judgment of distance. Nature. 1998;395:497–500. doi: 10.1038/26747.

- Smith OW, Smith PC. On motion parallax and perceived depth. Journal of Experimental Psychology. 1963;65:107–108. doi: 10.1037/h0049065.

- Thompson WB, Dilda V, Creem-Regehr SH. Absolute distance perception to locations off the ground plane. Perception. 2007;36:1559–1571. doi: 10.1068/p5667.

- Thompson WB, Willemsen P, Gooch AA, Creem-Regehr SH, Loomis JM, Beall AC. Does the quality of the computer graphics matter when judging distances in visually immersive environments? Presence. 2004;13:560–571.

- Thomson JA. Is continuous visual monitoring necessary in visually guided locomotion? Journal of Experimental Psychology: Human Perception and Performance. 1983;9:427–443. doi: 10.1037//0096-1523.9.3.427.

- Wagner M. The metric of visual space. Perception & Psychophysics. 1985;38:483–495. doi: 10.3758/bf03207058.

- Wu B, He ZJ, Ooi TL. Inaccurate representation of the ground surface beyond a texture boundary. Perception. 2007;36:703–721. doi: 10.1068/p5693.

- Wu B, Ooi TL, He ZJ. Perceiving distance accurately by a directional process of integrating ground information. Nature. 2004;428:73–77. doi: 10.1038/nature02350.

- Wu J, He ZJ, Ooi TL. Perceived relative distance on the ground affected by the selection of depth information. Perception & Psychophysics. 2008;70:707–713. doi: 10.3758/pp.70.4.707.