Abstract

Recent investigations indicate that retinal motion is not directly available for perception when moving around [Souman JL, et al. (2010) J Vis 10:14], possibly pointing to suppression of retinal speed sensitivity in motion areas. Here, we investigated the distribution of retinocentric and head-centric representations of self-rotation in human lower-tier visual motion areas. Functional MRI responses were measured to a set of visual self-motion stimuli with different levels of simulated gaze and simulated head rotation. A parametric generalized linear model analysis of the blood oxygen level-dependent responses revealed subregions of accessory V3 area, V6+ area, middle temporal area, and medial superior temporal area that were specifically modulated by the speed of the rotational flow relative to the eye and head. Pursuit signals, which link the two reference frames, were also identified in these areas. To our knowledge, these results are the first demonstration of multiple visual representations of self-motion in these areas. The existence of such adjacent representations points to early transformations of the reference frame for visual self-motion signals and a topography by visual reference frame in lower-order motion-sensitive areas. This suggests that visual decisions for action and perception may take into account retinal and head-centric motion signals according to task requirements.

Keywords: visual motion topography, V3A, MT+, parietal cortex, optic flow

When a child eyeballs the seeker while exposing as little as possible of its head from behind cover, it performs an exquisite piece of eye and head control. Such visually coordinated actions rely on fast and dynamic integration of self-motion signals from different reference frames (1) to control the rotations of the head, the eye, and other body parts.

Motion relative to the eye is directly given on the retina. In the monkey lower-tier visual areas, neurons respond vigorously to such retinal motion (2, 3). Motion relative to the head or other body parts must be derived from visual (4) and nonvisual (5) information on the eye's rotation (6). Many monkey cortical areas also contain neurons that take into account the eye's rotation when responding to visual motion (7–10). Remarkably, a number of recent psychophysical studies have concluded that retinal motion signals are not directly available for perception (11–13), in particular during self-motion (11), whereas head-centric motion signals are.

This raises the interesting question of how retinal and head-centric representations of visual motion are distributed across the lower-tier visual motion areas. If subjects cannot attend to retinal motion signals, it could indicate that retinal motion sensitivity gives way to head-centric motion signals in higher-tier areas, such as the posterior parietal cortex (PPC). Alternatively, signals in both reference frames may be present up to higher visual areas, but not available for certain visual tasks or under certain movement conditions.

We investigated if the visual system builds up visual representations of eye-in-space and head-in-space motion. The latter can be constructed by combining visual signals and eye movement signals (5). Previous functional MRI (fMRI) research demonstrated a representation of head-centric motion signals within the human medial superior temporal (MST) region (14). This finding extended physiological (15) and fMRI work (16), which shows involvement of MST in the perception of self-motion.

No clear topography has been found in MST, although some single-cell studies reported clustering according to preferred motion pattern (17–19). We wondered if there exists a functional topography governed by visual reference frame. If so, the representation of the head's motion in space might be accompanied by a parallel representation of the eye's motion in space. This way, MST provides appropriate visual representations to the PPC for the coordination of complex eye and head rotations. Possibly, visual representations of this kind are also present within other lower-tier visual motion areas. Physiological work thus far has shown that neurons within the accessory V3 area (V3A) and the V6 area are modulated by extraretinal signals (20, 21).

Previous fMRI research successfully identified motion sensitivity in these cortical regions (22, 23), but did not distinguish between retinocentric and head-centric motion representations.

Here, we exposed subjects to wide-field, 3D optic flow stimuli that simulate independently varied gaze and head motions while assessing blood oxygen level-dependent (BOLD) signals. The visual stimuli allowed us to dissociate BOLD modulations correlated with the speed of rotational flow relative to the eye, the speed of rotational flow relative to the head, and the eye-in-head pursuit speed. We found that each visual motion area—V3A, V6+, and middle temporal (MT+) area—represents pursuit speed as well as rotational flow speed relative to the eye and head. Our results show that multiple visual representations are constructed in different frames of reference before PPC, possibly serving different visuomotor tasks, involving complex eye and head motion.

Results

Visual Stimuli.

Our main goal was to isolate regions within MT, MST, V3A, and V6+ areas that are sensitive to rotational speed relative to the retina (Rs), relative to the head (head-centric speed; Hs), or pursuit speed (Ps). The head-centric flow was manipulated independently from the retinal flow by simulating different head rotations during forward translation of the eye along an undulating path (Fig. 1). The overall direction of the path (wide arrow; Fig. 1) was forward, perpendicular to the immersive wide-field screen (120 × 90°).

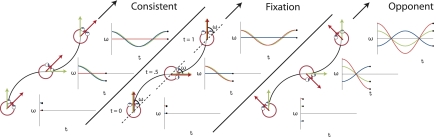

Fig. 1.

Visualization of the stimuli for the three different conditions together with plots of the gaze (green), eye-in-head (blue), and head-in-space (red) orientation at different instants in time. The flow field simulates self-motion through space in a forward direction (the global heading direction, black arrow), but along an oscillating path (black trajectory). Rotation of the eyes and head are defined relative to the global heading direction (ω). The gaze rotation is identical in the three conditions. At every time point, gaze is aligned with the tangent of the path (green arrow). As a result, the flow patterns on the retina remain the same. Combined with different eye-in-head positions, however, different simulated head rotations (red arrows) are defined. At the three instances depicted, the direction of motion along the path deviates maximally from the global heading direction. In the CONSISTENT condition, the amplitude and direction of simulated gaze rotation matches the amplitude and direction of the eye-in-head rotation. Thus, the simulated head rotation is zero. In the FIXATION condition, eye and head remain aligned with the momentary heading direction. Therefore, simulated gaze and head rotation are identical. In the OPPONENT condition, simulated gaze rotation is always opposite in direction to the eye-in-head rotation. Now, the eye rotation relative to the global heading direction remains the same, but the magnitude of the simulated head rotation is doubled. Note that the angles of rotation are exaggerated for visualization purposes.

We used a fixation condition (“FIXATION”) and two types of pursuit conditions (“CONSISTENT” and “OPPONENT”). Fig. 1 shows the three principal conditions and the accompanying gaze rotation (Fig. 1, green), eye-in-head rotation (Fig. 1, blue), and the rotational flow relative to the head (Fig. 1, red) at different instances in time. To keep the retinal flow constant, the simulated gaze rotation (change of the viewing direction relative to the scene) was always the same. This required that subjects fixated a moving or stationary fixation point that was aligned with the simulated heading direction. The rotational flow relative to the head is, by definition, the eye-in-head rotation minus the simulated gaze rotation. Thus, during FIXATION, the head-centric rotational flow equals the simulated gaze rotation. During pursuit, the eye-in-head rotations were in phase (CONSISTENT) or in counter-phase (OPPONENT) with the simulated gaze rotations. Thus, for CONSISTENT, the rotational flow relative to the head was zero (i.e., eye pursuit is the cause of all rotational flow), whereas, for OPPONENT, it was twice that for FIXATION.
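The relation between the three rotation components can be made concrete with a small sketch. It only encodes the definition given above (head-centric flow equals eye-in-head rotation minus simulated gaze rotation); the function name `rotation_components`, the gain table, and the 10°/s example speed are illustrative assumptions, not part of the stimulus software.

```python
def rotation_components(gaze_speed, condition):
    """Return (eye_in_head, head_centric_flow) in deg/s for one condition.

    Hypothetical helper: it encodes only the definition in the text,
    head-centric flow = eye-in-head rotation - simulated gaze rotation.
    """
    # Eye-in-head rotation relative to the simulated gaze rotation:
    # absent (FIXATION), in phase (CONSISTENT), or in counter-phase (OPPONENT).
    gain = {"FIXATION": 0.0, "CONSISTENT": 1.0, "OPPONENT": -1.0}[condition]
    eye_in_head = gain * gaze_speed
    head_flow = eye_in_head - gaze_speed
    return eye_in_head, head_flow


if __name__ == "__main__":
    # 10 deg/s is an example value; signs indicate direction, magnitudes are speeds.
    for cond in ("FIXATION", "CONSISTENT", "OPPONENT"):
        eye, head = rotation_components(10.0, cond)
        print(f"{cond:10s} pursuit speed = {abs(eye):4.1f}  head-centric speed = {abs(head):4.1f}")
```

For a 10°/s gaze rotation, the sketch gives head-centric speeds of 10, 0, and 20°/s for FIXATION, CONSISTENT, and OPPONENT, respectively, while the retinal (gaze) speed is the same in all three.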

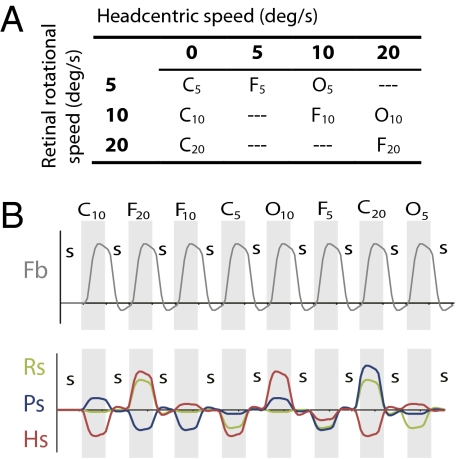

We tested the three principal conditions at different levels of retinal rotational speed (Fig. 2A). The combination of stimuli allowed us to assess retinal, pursuit, and head-centric contributions to the BOLD signal. We presented head-centered rotational flow about one of the three semicircular canal axes in different sessions to truly assess 3D rotation sensitivity.

Fig. 2.

Stimulus conditions in the main experiment. (A) Combining the three principal conditions (FIXATION, CONSISTENT, OPPONENT) with three levels of retinal rotational flow (subscripts) results in eight different test conditions with varying pursuit and head-centric speed levels. This set of conditions allowed us to independently assess contributions of Rs, Hs, and Ps origin to the BOLD signal. (B) Order of stimulus conditions (Top) and time courses for the four predictors (Fb, Rs, Ps, and Hs) used in the GLM analysis. Numbers identify for each rotation component the different physical speed levels. In each run (155 volumes), all test conditions were presented, interleaved with a control condition (static random dot pattern; “S”). In half the runs, test conditions were presented in opposite order. In each run, only one axis of rotation was tested. In the final analysis, the C20 condition was excluded (SI Materials and Methods). The time courses for the parametric predictors were made orthogonal to the Fb predictor by use of de-meaning.

Localizers.

First, we identified the main visual areas and isolated our regions of interest (ROIs) by a series of functional localizers. The specific positioning of the surface coil enabled us to investigate MT+ unilaterally (i.e., right hemisphere) and V3A and V6+ bilaterally. V3A and V6+ were identified by using retinotopic mapping (24, 25). To identify MT+ and distinguish between MT and MST, responses between ipsilateral and contralateral flow presentation were contrasted. MST was identified as the part of a flow-responsive region within MT+ that responded to ipsilateral and contralateral flow presentation (26, 27).

Main Experiment.

Subjects fixated a ring target at all times and paid attention to the flow surround. During each functional run, all eight conditions were presented once, interleaved with a static random dot pattern (Fig. 2B), for rotations about a single canal axis.

We applied to all ROIs a parametric generalized linear model (GLM) with four main regressors (Fig. 2B). Besides taking into account BOLD responses to flow (Fb), the model captures separate modulations of the BOLD response that result from the different levels of retinal (i.e., Rs), pursuit (i.e., Ps), and head-centric (i.e., Hs) rotational flow speed. Two conditions were met for a significant response: (i) a significant contrast between the Fb predictor and the static condition and (ii) a significant positive regression coefficient for a specified parametric predictor.
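The structure of this design matrix can be illustrated with a minimal sketch. It builds an unconvolved flow boxcar (Fb) and three parametric predictors that are de-meaned over the flow blocks, which is one way to make them orthogonal to Fb. The block table, the helper names, and the omission of hemodynamic convolution are assumptions made for illustration; the speed values are examples that follow the stimulus definitions, not the actual condition set of Fig. 2A.

```python
def expand(blocks, column, trs_per_block):
    """Repeat one column of the block table for every TR of each block."""
    return [float(b[column]) for b in blocks for _ in range(trs_per_block)]


def demean_within_flow(values, flow):
    """Subtract the mean over flow-on samples; keep zeros where flow is off."""
    on = [v for v, f in zip(values, flow) if f > 0]
    mean_on = sum(on) / len(on)
    return [v - mean_on if f > 0 else 0.0 for v, f in zip(values, flow)]


def dot(a, b):
    """Inner product of two equally long time courses."""
    return sum(x * y for x, y in zip(a, b))


# Hypothetical block table: (flow on?, Rs, Ps, Hs), speeds in deg/s.
BLOCKS = [
    (0, 0, 0, 0),     # static control
    (1, 5, 0, 5),     # FIXATION, 5 deg/s retinal rotation
    (0, 0, 0, 0),
    (1, 10, 10, 0),   # CONSISTENT, 10 deg/s
    (0, 0, 0, 0),
    (1, 10, 10, 20),  # OPPONENT, 10 deg/s
    (0, 0, 0, 0),
    (1, 20, 0, 20),   # FIXATION, 20 deg/s
]
TRS_PER_BLOCK = 9

fb = expand(BLOCKS, 0, TRS_PER_BLOCK)
rs, ps, hs = (
    demean_within_flow(expand(BLOCKS, c, TRS_PER_BLOCK), fb) for c in (1, 2, 3)
)

if __name__ == "__main__":
    # Each parametric predictor should be orthogonal to the flow predictor.
    for name, pred in (("Rs", rs), ("Ps", ps), ("Hs", hs)):
        print(name, "dot Fb =", dot(fb, pred))
```

With this construction, Fb absorbs the mean response to flow, and the coefficients of Rs, Ps, and Hs capture only the speed-dependent modulation around that mean.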

For each ROI in each subject, t-value thresholds were set for each predictor to a level that demarcated the peak sensitivity, with a surface area of at least 10 mm² on the inflated surface reconstruction.

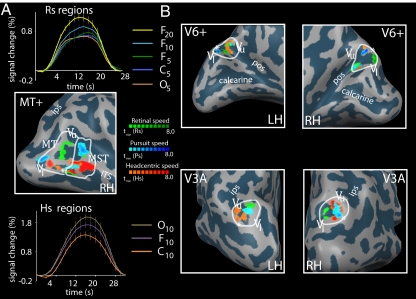

We found regions sensitive to Rs, Ps, and Hs within all ROIs in all subjects. Fig. 3 shows this finding for one subject (additional subjects are described in Fig. S1 and Table S1). Table 1 lists the accompanying Talairach coordinates (detailed subject data in Table S2). First and foremost, we found regions with strong Hs sensitivity within all ROIs in all subjects. Both MST and MT contain a Hs-sensitive region. Second, we found Ps-sensitive regions in MST, MT, and V6+ areas in all subjects. Within MST of all subjects, we distinguished two separate Ps regions. In 10 of 12 hemispheres, a Ps-sensitive region was found in V3A (five in left hemisphere, five in right hemisphere). Third, we found Rs-sensitive regions within MT and MST of all subjects, except subject 6. In eight of 12 hemispheres, an Rs-sensitive region was identified within V3A (three in left hemisphere, five in right hemisphere). In seven of 12 hemispheres, an Rs region was identified within V6+ (four in right hemisphere, three in left hemisphere).

Fig. 3.

Rs, Hs, and Ps sensitivities in MT, MST, V3A, and V6+ in one representative subject. ROIs are identified by white borders. For MT, V3A, and V6+, the vertical upper (Vu) and vertical lower (Vl) meridians of the polar angle map are depicted, as is the foveal part (asterisk) of the eccentricity map (ITS, inferior temporal sulcus; POS, parietooccipital sulcus; IPS, intraparietal sulcus). (A) Identified Rs-, Ps-, and Hs-sensitive regions within MT and MST [Middle: tmin(Rs) = 3.0, tmin(Ps) = 2.5, tmin(Hs) = 6.0]. The average BOLD response over all voxels within the Rs-sensitive regions (Top) shows a clear response increment for increasing retinal speed levels (subscripts) in FIXATION, but lacks an amplitude difference for OPPONENT versus CONSISTENT at the same pursuit and retinal speed level. The average BOLD response across all voxels within the Hs regions (Bottom) shows a clear amplitude difference between the OPPONENT (Hs, 20 °/s) and CONSISTENT (Hs, 0 °/s) condition at the same pursuit and retinal speed level. (B) Identified Rs-, Ps-, and Hs-sensitive regions within V3A [Bottom: right hemisphere, tmin(Rs, Ps, Hs) = (2.7, 3.3, 6.0); left hemisphere, tmin(Rs, Ps, Hs) = (2.8, 2.7, 4.0)] and V6+ [Top: right hemisphere, tmin(Rs, Ps, Hs) = (4.0, 5.5, 6.0); left hemisphere, tmin(Rs, Ps, Hs) = (4.2, 4.1, 3.0)].

Table 1.

Average Talairach coordinates of the center of the identified area

| Area | Rs x | Rs y | Rs z | Ps x | Ps y | Ps z | Hs x | Hs y | Hs z |
|---|---|---|---|---|---|---|---|---|---|
| RH | | | | | | | | | |
| MST | 42 (6) | −61 (7) | 7 (7) | 44 (6), 43 (5) | −63 (6), −57 (4) | 0 (6), 9 (5) | 42 (3) | −60 (5) | 5 (6) |
| MT | 36 (8) | −75 (8) | 5 (6) | 39 (3) | −73 (7) | 5 (6) | 39 (3) | −76 (3) | 2 (4) |
| V3A | 19 (2) | −83 (4) | 20 (4) | 19 (7) | −82 (2) | 20 (7) | 17 (7) | −83 (5) | 29 (5) |
| V6+ | 16 (2) | −77 (4) | 27 (4) | 15 (3) | −79 (4) | 31 (9) | 15 (4) | −79 (4) | 31 (4) |
| LH | | | | | | | | | |
| V3A | −18 (7) | −83 (3) | 26 (2) | −14 (5) | −86 (4) | 22 (5) | −16 (6) | −87 (7) | 20 (3) |
| V6+ | −13 (4) | −75 (5) | 28 (8) | −10 (3) | −83 (6) | 26 (5) | −13 (5) | −81 (3) | 27 (7) |

Values in parentheses are SDs. For Ps in MST, the first value corresponds to Ps_posterior, the second value to Ps_anterior.

Response Selectivity.

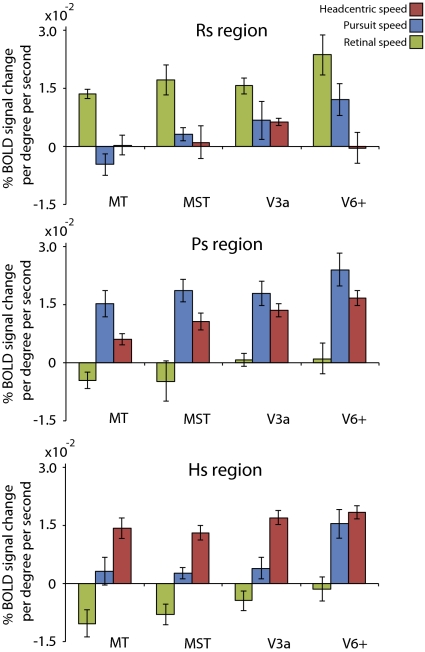

Is the activity in the identified Rs, Ps, and Hs regions specifically modulated by one type of rotation information? We performed a region GLM on the Rs, Ps, and Hs regions (Fig. 4) and extracted the averaged β-values for all three predictors. Note that these β-values do not reflect a level of activation, but instead quantify a region's sensitivity to Rs, Ps, and Hs (as expressed in percent signal change per degree per second). Regions that are predominantly modulated by Rs, Ps, or Hs will show the strongest modulation for that particular predictor, whereas speed modulation for the other predictors will be near zero. The flow predictor and primary parametric predictor (i.e., the one used to name the region) were always significant (P < 0.05, after correction for autocorrelation). Note that specificity was high for all Rs and Hs regions (P < 0.05, one-sided t test), except for the Hs region within V6+, which was also strongly pursuit-modulated. The Ps regions were not significantly comodulated by retinal rotational speed, but did show a strong cosensitivity with head-centric speed (P < 0.05, except for MT). We observed no systematic differences between the sensitivities of the anterior and posterior Ps region within MST. Data from the two regions were therefore pooled. The pursuit-related modulation within Ps_V6+ was stronger than the pursuit-related modulation in other Ps regions (one-sided paired t test, P < 0.05). Also, within the Ps_V3A and Hs_V6+ region, there was no significant difference between modulation by Ps and Hs (two-sided t test, P = 0.25 and P = 0.47, respectively). Thus, in contrast to MT+, there is an extensive overlap of the pursuit and head-centric representations in V6+, whereas in V3A, pursuit is mixed with head-centric sensitivity but a separate head-centric region exists.

Fig. 4.

Response specificity of the identified Rs-, Ps-, and Hs-sensitive regions. Results are from the region based GLM analysis (mean ± SE across subjects). Retinal speed (green) for Rs regions, pursuit speed (blue) for Ps regions, and head-centric speed (red) for Hs regions produced significantly larger modulations than the other two motion variables (P < 0.05, one-sided t test). The Rs sensitivities in all Ps and Hs regions were not significantly different from 0. Pursuit and head-centric sensitivities within Ps_V3A (P = 0.25) and within Hs_V6+ (P = 0.47) were not significantly different.

Hs regions were quite dominant in some visual areas. We therefore wondered if the BOLD response to retinal flow speed was perhaps suppressed in MT+ for some conditions. Possibly, pursuit signals are “gating” retinal flow responses (28). To test this, we investigated whether there was a consistent down-regulation of the response to the flow by pursuit signals if they matched the retinal flow (CONSISTENT), without a concomitant up-modulation of the response if they did not match (OPPONENT). However, a contrast between the BOLD response for FIXATION (5°/s and 10°/s) and CONSISTENT (5°/s and 10°/s) for all voxels of MT or MST did not reach significance (two-sided t test, MT, P = 0.46; MST, P = 0.95; Fig. S2). Hence, our data do not support this hypothesis.

Other Motion-Sensitive Regions.

A variety of (multisensory) areas are responsive to self-motion information, and have been identified on the basis of their responsiveness to visual self-motion stimuli (29), most notably the ventral intraparietal area (VIP) (19, 30, 31). Our use of a (rotated) occipital coil and high resolution limited the field of view (FOV). Despite the lower signal-to-noise ratio at the borders of our FOV, we could identify five of these areas in the right hemisphere in all subjects on the basis of a flow baseline (Fb) contrast. This supports the notion that self-motion stimuli can be used as a functional localizer for these regions (29). We applied our GLM to those ROIs in each subject. Interestingly, analysis of the data pooled across subjects suggests that the balance shifts from a pursuit-dominated response in cingulate sulcus visual area (CSv) and precuneus (pc), to a head-centric flow-dominated response in putative human area 2v (p2v) and putative VIP (SI Materials and Methods, Fig. S3, and Table S3). In none of these areas did we find a significant modulation by retinal speed across subjects.

Discussion

We investigated the distribution of two visual representations of self-motion across lower visual motion areas: (i) a retinal representation of self-motion speed that specifies the rotation speed of the eye in space, and (ii) a head-centric representation of self-motion speed that specifies rotation of the head in space.

We found partially overlapping subregions within MT, MST, V3A, and V6+ that were modulated by such motion signals. We also found a positive correlation with pursuit speed in V6+, MT, and MST areas, and less consistently in V3A. Pursuit sensitivity within MT and MST areas is in line with earlier physiological work, and is necessary to perform the transformation to head-centric speed. Apparently, these lower-tier visual motion areas already perform visual transformations to other reference frames on motion signals. This finding confirms and extends the involvement of V3A and V6+ area in distinguishing real from self-induced visual motion (32). Additionally, it supports the notion that these regions are involved in visuomotor tasks that have eye- and head-centric functional requirements. A parsimonious interpretation of our findings is that these task requirements are supported by distinct modules sensitive to Rs, Ps, and Hs signals in the identified ROIs, but clearly, we cannot exclude the possibility that those signals might also be mixed at the single-neuron level. We note that our experiments do not allow any inferences about the reference frame of the spatial receptive fields within the ROIs. It was proposed more than a decade ago (33) that visual signals of head-centric motion are collected by neural units having a spatial receptive field in a retinal reference frame. Evidence for such coding at the single-unit level exists for monkey dorsal MST (34).

Functional Relevance of Parallel Representations.

All these visual motion areas project strongly to regions in the parietal cortex. What functional tasks may be supported by the coincidence of retinal and head-centric motion coding in these lower-tier motion areas? It is likely that the specific functional goals of higher-order regions in the parietal cortex and inferotemporal cortex are served in different ways by these two types of self-motion information. Further along the dorsal stream, activity within the PPC reflects the convergence of visual information with information from other modalities (e.g., vestibular system) with a different spatial reference frame. PPC performs the necessary sensory/motor transformation for the planning of motor commands (35). Eye-, head-, and limb-position information drive PPC neurons in a gain-modulated manner, depending on the movement plan, constructing a flexible representation of space (36, 37). PPC has also been related to the guidance of visual attention (38). In this framework, gain modulations are thought to reflect the functional priority of an object in space.

Visual/Vestibular Integration.

Head-centric flow signals serve heading perception (39), a function contributed to by MST and VIP by using visual and vestibular information (19, 40). We speculate that the visual representation of the scene's motion relative to the head forms an important step toward visual and vestibular signal convergence because these signals deal with motion of the same body part relative to the environment. For individual MST neurons, full alignment of the preferred head motion through vestibular and visual inputs does not always occur (41). We note, however, that no distinction was made in that study between head-centric and retinocentric tuning to visual motion. Thus, the precise form of such integration still needs to be clarified.

Functional Contribution of V6+ and V3A.

Our findings extend the understanding of the functional role of V3A and V6+ area. Area V6+ lies within the posterior part of the POS and contains neurons with large receptive fields (42). V6+ area is known to be highly responsive to optic flow patterns (23). Interestingly, V6+ area has strong connections, first with VIP (43), an area with strong multisensory representations of peripersonal space (44), and second with V6A, a visuomotor area related to reaching and grasping (43, 45–47). Both areas contain cells that encode visual space in a head-centric reference frame (48, 49). It is likely that a head-centric motion representation collected through head-centric spatial receptive fields would more completely cover the needs for head-centered control of actions.

Area V3A is highly motion-sensitive and contains cells that distinguish self-motion from object motion (50, 51). It is involved in the processing of 3D visual information about objects in space (52). Apart from motion, it responds to both monocular (22) and binocular (53, 54) depth information and has strong projections to the lateral intraparietal area (LIP) and anterior intraparietal area within PPC, which process visual 3D object information and object-related hand actions (55). We show strong responses in V3A to 3D monocular self-motion stimuli. Our finding of a head-centric speed-of-rotation representation in V3A complements its contribution to motion-in-depth information, for example, for approaching objects (56). This way, V3A provides data about moving objects to PPC, such as the head-centric distance and the object's direction of motion during self-motion, that likely are relevant for saliency computations.

Prioritizing Object Motion.

Directing attention to object motion in the visual field becomes complex during self-motion. Humans remove global self-motion patterns to discount self-motion components from perception and thereby recover object motion (13). Removal of the component of visual rotation that accompanies voluntary eye and/or head turns likely depends on the retinal and head-centric representations we investigated. Such recovered object motion that stands out from the motion of its background is an important component of visual saliency (57) and is integrated with behavioral goals in LIP. LIP attributes priority to moving objects for directing eye and head motion (38). For bottom-up visual motion information, an object's saliency might depend on its motion relative to the head, or relative to the eyes. Behavioral priority might then select the appropriate visual input to PPC. Such a view implies that to some extent transformations of self-motion information must take place before PPC. Because LIP receives strong projections from V3A and MST, the rotational speed sensitivity in different reference frames that we find in areas V3A and MST would seem useful to direct attention in a cluttered dynamic environment to objects moving relative to the head or the eye.

Access to Retinal Flow?

Remarkably, visual representations of the head-centric speed of rotation were more robust than visual representations of retinal speed of rotation. Especially for MT, this seems surprising, as increased motion speed is associated with increased firing rate on a weighted-average basis in physiological work (58). One explanation is that retinal motion signals are encoded by labeled-line coding in some regions. If so, neurons tuned to different retinal speed levels are intermixed in cortex, leveling out any parametric speed effect at the voxel level. Some studies support this view for the MT area (59).

Another explanation could be that neurons within MT and MST can be gain-modulated by pursuit velocity in an asymmetric way: they show a reduced response when visual motion is opposite in direction to the pursuit, as during natural object pursuit (28). If such a response persists up to the population level, a BOLD modulation difference between CONSISTENT pursuit conditions and the FIXATION conditions should occur. This was not found.

Retinal and head-centric speed representations coexist in the MT+ area, which importantly contributes to visual flow processing, but the head-centric speed representation dominated the retinal speed representation in most subjects (compare the lower t thresholds for Rs in Table S1). We did not find evidence for widespread suppression of retinal flow responses in MT+ by eye pursuit, as might be suspected given the psychophysical evidence (11–13). If retinal flow is down-regulated, it occurs at higher-tier areas. Unfortunately, the few data we collected in the parietal cortex do not permit us to further explore this idea.

Conclusion

Our results show that visual motion areas V3A, V6+, and MT+ contain partially overlapping regions that represent the speed of visual rotations in a head-centric and a retinocentric frame of reference. The existence of such adjacent representations before PPC points to early transformations of the reference frame for self-motion signals and a topography by visual reference frame in lower-order motion areas. This suggests that higher-order visual decisions may take into account retinal and head-centric motion signals according to task requirements.

Materials and Methods

Subjects.

Six healthy subjects participated in the experiment, from all of whom written consent was obtained. All subjects were experienced with the visual stimuli and had previously participated in other fMRI studies. All subjects participated in multiple scanning sessions of approximately 90 min each.

Visual Stimuli.

All visual stimuli were generated on a Macintosh MacBook Pro notebook by using OpenGL rendering software. The scene consisted of a cloud of 2,000 red dots on a black background, moving within a box with dimensions that depended on the dimensions of the display screen. The stimuli simulated an undulating motion through the cloud of dots. For a detailed description of the stimulus, see ref. 14. The flow simulated a combination of forward motion and gaze rotation at a frequency of 1/6 Hz. The simulated forward motion was 1.5 m/s. In the fixation condition, both the fixation point and the frame borders remained stationary on the screen. In the consistent and opponent conditions, a pursuit was made consistent with or opponent to the gaze rotation. To maintain the same retinal stimulus as in the fixation condition, the borders of the flow pattern moved in correspondence with the fixation point. The sinusoidal rotation of the target was added to all flow points. Provided that pursuit was accurate, the flow on the retina was identical for the fixation and the pursuit conditions. Three different axes of rotation were simulated in different blocks. The axes were perpendicular to the semicircular canal planes.

We used a custom-built visual projection system to optimize the FOV (∼120 × 90°; SI Materials and Methods). Pursuit accuracy was verified in a separate control experiment (SI Materials and Methods and Table S4).

Scan Parameters.

The MR data acquisition was conducted on the 3T Siemens Trio scanner at the Donders Centre for Cognitive Neuroimaging (Nijmegen, The Netherlands). For each subject, we first obtained a high-resolution full-brain anatomical scan by using a 12-channel head coil (T1-weighted MPRAGE, 192 slices, 256 × 256 matrix, resolution of 1 × 1 × 1 mm). For the experimental scans, a custom-made eight-channel occipital surface coil was used (60). The coil was placed slightly dorsal to the occipital pole and was rotated 30° along the midbody axis. This way, we acquired an optimal signal-to-noise ratio within right hemisphere MT+ and V3A/V6+ bilaterally. High-resolution functional scans were obtained with an in-plane resolution of 1.12 × 1.12 mm and a slice thickness of 2 mm [T2*-weighted; single-shot echo-planar imaging; 24 slices; repetition time (TR), 2 s; echo time, 30 ms]. Slices were coaligned with the functional data by use of an in-plane high-resolution anatomical scan (T1-weighted MPRAGE, 80 slices, 256 × 256 matrix, resolution of 0.75 × 0.75 × 0.75 mm), acquired halfway through each scanning session.

Data Acquisition and Analysis.

Brainvoyager QX (version 2.2) was used for the analysis of all anatomical and functional images (Brain Innovation). The data of the experimental conditions were collected in all subjects in at least two separate sessions. Total number of runs ranged from 12 (subjects 4 and 6) to 39 (subject 2), consisting of at least four runs for each axis of simulated head rotation.

Subjects performed four runs for the retinotopic mapping (two for polar angle, two for eccentricity) and two for the MT/MST localizer. For every run, we discarded the first two volumes to account for saturation effects. Subsequently, the images were corrected for 3D head motion and slice acquisition timing. The resulting time courses were corrected for low-frequency drift by a regression fit line that connects each final two data points of each static condition. Finally, a Gaussian temporal filter was applied with a full width at half-maximum of two data points.
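As an illustration of the final preprocessing step, the following sketch applies a Gaussian temporal filter with a full width at half-maximum (FWHM) of two data points to a time course. The conversion σ = FWHM / (2√(2 ln 2)) is the standard relation for a Gaussian; the kernel radius, the edge handling by clamping, and the function names are assumptions and need not match the implementation in the analysis software.

```python
import math


def gaussian_kernel(fwhm, radius=3):
    """Normalized Gaussian kernel; fwhm and radius are given in data points."""
    sigma = fwhm / (2.0 * math.sqrt(2.0 * math.log(2.0)))
    weights = [math.exp(-0.5 * (k / sigma) ** 2) for k in range(-radius, radius + 1)]
    total = sum(weights)
    return [w / total for w in weights]


def smooth(signal, kernel):
    """Filter a time course, clamping indices at both ends (assumed edge rule)."""
    radius = len(kernel) // 2
    n = len(signal)
    out = []
    for i in range(n):
        acc = 0.0
        for k, w in enumerate(kernel):
            j = min(max(i + k - radius, 0), n - 1)
            acc += w * signal[j]
        out.append(acc)
    return out


if __name__ == "__main__":
    kernel = gaussian_kernel(fwhm=2.0)
    # A single-sample spike is spread over its neighbors by the filter.
    spike = [0.0] * 5 + [1.0] + [0.0] * 5
    print([round(v, 3) for v in smooth(spike, kernel)])
```

With an FWHM of two data points, σ is about 0.85 data points, so the filter mainly suppresses sample-to-sample noise and leaves the block-wise response largely intact.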

All experimental runs consisted of 155 volume acquisitions. For the experimental functional scans, a blocked design was used. Each run consisted of 17 blocks of nine TRs, in which all eight experimental conditions were interleaved with a rest condition (static random dot pattern; Fig. 2B). The total sequence was preceded by two dummy TRs, which were not analyzed. Half the runs presented the conditions in reversed order to balance order effects.
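The run structure can be checked with a short Python sketch. It assumes that each run starts and ends with a rest block, which is one layout consistent with 17 blocks (nine rest blocks plus eight condition blocks); the condition labels are placeholders, not the names used in the experiment.

```python
TR_S = 2.0           # repetition time in seconds (from the acquisition protocol)
N_DUMMY = 2          # dummy TRs preceding the block sequence
TRS_PER_BLOCK = 9
N_CONDITIONS = 8

def build_run(condition_order):
    """Return one label per volume: dummies, then rest/condition blocks."""
    labels = ["dummy"] * N_DUMMY
    for cond in condition_order:
        labels += ["rest"] * TRS_PER_BLOCK   # assumed: rest precedes each condition
        labels += [cond] * TRS_PER_BLOCK
    labels += ["rest"] * TRS_PER_BLOCK       # assumed: closing rest block
    return labels

order = [f"cond{i}" for i in range(1, N_CONDITIONS + 1)]  # placeholder names
forward = build_run(order)
reverse = build_run(order[::-1])             # reversed order for half the runs

n_volumes = len(forward)
run_duration_s = n_volumes * TR_S
```

Under these assumptions the count works out as 2 + 17 × 9 = 155 volumes, matching the stated run length, for a run duration of 310 s.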

Functional Localizers.

ROIs for analysis were identified based on functional localization data collected in separate sessions (SI Materials and Methods).

Experimental Sessions.

Any functional scans with severe motion artifacts (>1 mm) were discarded (four functional scans). The data were spatially normalized according to the atlas of Talairach and Tournoux (61) to obtain standardized coordinates for the ROI. No further spatial smoothing was applied to the functional data.

A multistudy GLM was performed, including all experimental runs (SI Materials and Methods). The GLM was performed on the five ROIs separately (V3A and V6+ bilaterally and MT+ in the right hemisphere) on the inflated version of each subject's anatomy. Not all experimental runs encompassed all ROIs. Therefore, for each ROI, all experimental sessions that did not encompass the ROI were excluded from the ROI GLM. Within each ROI, the GLM was applied as a conjunction contrast between the flow baseline predictor (i.e., Fb) and a single parametric predictor (i.e., Rs, Ps, or Hs). Subsequently, Rs-, Ps-, and Hs-sensitive regions were identified as voxel groups of approximately 30 mm², with a minimum of 10 mm² (P < 0.05, uncorrected for clustering). We specifically looked for the sensitivity peaks, which resulted in different t-level thresholds. Final representations of the regions as depicted in Fig. 2 and Fig. S1 were slightly smoothed (full width at half-maximum, two vertices).
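The logic of a conjunction between the flow baseline predictor and one parametric predictor can be sketched in Python on synthetic data. This is an illustration under several assumptions, not the analysis pipeline used here: the conjunction is taken as the minimum of the two t-statistics, the predictors are not convolved with a hemodynamic response function, the speed values are hypothetical, and the noise is white. Only the predictor names Fb and Rs and the block length come from the text.

```python
import numpy as np

def ols_t(y, X):
    """Ordinary least squares: betas and one t-statistic per column of X."""
    beta = np.linalg.lstsq(X, y, rcond=None)[0]
    resid = y - X @ beta
    dof = X.shape[0] - X.shape[1]
    sigma2 = resid @ resid / dof
    se = np.sqrt(sigma2 * np.diag(np.linalg.inv(X.T @ X)))
    return beta, beta / se

def conjunction(t_a, t_b):
    """Minimum-statistic conjunction (assumed form): both effects must hold."""
    return min(t_a, t_b)

# Hypothetical rotation speeds (deg/s) for the eight flow blocks.
speeds = np.array([0.0, 5.0, 10.0, 20.0, 0.0, 5.0, 10.0, 20.0])
trs_per_block = 9

fb, rs = [], []
for s in speeds:
    fb += [0.0] * trs_per_block + [1.0] * trs_per_block        # rest, then flow
    rs += [0.0] * trs_per_block + [s - speeds.mean()] * trs_per_block
fb += [0.0] * trs_per_block                                    # closing rest block
rs += [0.0] * trs_per_block
fb, rs = np.array(fb), np.array(rs)

# Design matrix: intercept, flow baseline (Fb), mean-centered speed (Rs).
X = np.column_stack([np.ones_like(fb), fb, rs])

# Synthetic voxel that responds to flow and scales with speed.
rng = np.random.default_rng(0)
y = 100.0 + 1.0 * fb + 0.05 * rs + rng.normal(0.0, 0.2, size=fb.size)

beta, t = ols_t(y, X)
t_conj = conjunction(t[1], t[2])
```

Mean-centering the speed values within the flow blocks keeps the parametric predictor orthogonal to Fb, so the parametric beta reflects modulation by speed over and above the general response to flow.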

Subsequently, β-values were extracted for each region by performing an ROI GLM (P < 0.05 for the primary predictor, corrected for autocorrelation); Fig. 4 is based on these values.

Acknowledgments

We thank N. Hermesdorf and S. Martens for construction of the wide-field stimulation equipment in the scanner and Prof. D. Norris and P. Gaalman for fMRI support. This work was supported by Netherlands Organization for Scientific Research NWO-ALW Grant 818.02.006 (to A.V.v.d.B.).

Footnotes

The authors declare no conflict of interest.

*This Direct Submission article had a prearranged editor.

This article contains supporting information online at www.pnas.org/lookup/suppl/doi:10.1073/pnas.1102984108/-/DCSupplemental.

References

- 1. Colby CL. Action-oriented spatial reference frames in cortex. Neuron. 1998;20:15–24. doi: 10.1016/s0896-6273(00)80429-8.

- 2. Bair W, Movshon JA. Adaptive temporal integration of motion in direction-selective neurons in macaque visual cortex. J Neurosci. 2004;24:7305–7323. doi: 10.1523/JNEUROSCI.0554-04.2004.

- 3. Britten KH. Cortical processing of visual motion. In: Albright T, Maslan R, editors. The Senses: A Comprehensive Reference. London: Elsevier; 2008.

- 4. Warren WH, Hannon DJ. Direction of self-motion is perceived from optical-flow. Nature. 1988;336:162–163.

- 5. van den Berg AV, Beintema JA. The mechanism of interaction between visual flow and eye velocity signals for heading perception. Neuron. 2000;26:747–752. doi: 10.1016/s0896-6273(00)81210-6.

- 6. Lappe M, Bremmer F, van den Berg AV. Perception of self-motion from visual flow. Trends Cogn Sci. 1999;3:329–336. doi: 10.1016/s1364-6613(99)01364-9.

- 7. Bremmer F, Ilg UJ, Thiele A, Distler C, Hoffmann KP. Eye position effects in monkey cortex. I. Visual and pursuit-related activity in extrastriate areas MT and MST. J Neurophysiol. 1997;77:944–961. doi: 10.1152/jn.1997.77.2.944.

- 8. Inaba N, Shinomoto S, Yamane S, Takemura A, Kawano K. MST neurons code for visual motion in space independent of pursuit eye movements. J Neurophysiol. 2007;97:3473–3483. doi: 10.1152/jn.01054.2006.

- 9. Galletti C, Battaglini PP, Fattori P. Functional properties of neurons in the anterior bank of the parieto-occipital sulcus of the macaque monkey. Eur J Neurosci. 1991;3:452–461. doi: 10.1111/j.1460-9568.1991.tb00832.x.

- 10. Ilg UJ, Schumann S, Thier P. Posterior parietal cortex neurons encode target motion in world-centered coordinates. Neuron. 2004;43:145–151. doi: 10.1016/j.neuron.2004.06.006.

- 11. Souman JL, Freeman TC, Eikmeier V, Ernst MO. Humans do not have direct access to retinal flow during walking. J Vis. 2010;10:14. doi: 10.1167/10.11.14.

- 12. Freeman TCA, Champion RA, Sumnall JH, Snowden RJ. Do we have direct access to retinal image motion during smooth pursuit eye movements? J Vis. 2009;9:33.1–33.11. doi: 10.1167/9.1.33.

- 13. Warren PA, Rushton SK. Optic flow processing for the assessment of object movement during ego movement. Curr Biol. 2009;19:1555–1560. doi: 10.1016/j.cub.2009.07.057.

- 14. Goossens J, Dukelow SP, Menon RS, Vilis T, van den Berg AV. Representation of head-centric flow in the human motion complex. J Neurosci. 2006;26:5616–5627. doi: 10.1523/JNEUROSCI.0730-06.2006.

- 15. Page WK, Duffy CJ. MST neuronal responses to heading direction during pursuit eye movements. J Neurophysiol. 1999;81:596–610. doi: 10.1152/jn.1999.81.2.596.

- 16. Morrone MC, et al. A cortical area that responds specifically to optic flow, revealed by fMRI. Nat Neurosci. 2000;3:1322–1328. doi: 10.1038/81860.

- 17. Chen A, Gu Y, Takahashi K, Angelaki DE, DeAngelis GC. Clustering of self-motion selectivity and visual response properties in macaque area MSTd. J Neurophysiol. 2008;100:2669–2683. doi: 10.1152/jn.90705.2008.

- 18. Geesaman BJ, Born RT, Andersen RA, Tootell RB. Maps of complex motion selectivity in the superior temporal cortex of the alert macaque monkey: A double-label 2-deoxyglucose study. Cereb Cortex. 1997;7:749–757. doi: 10.1093/cercor/7.8.749.

- 19. Britten KH. Mechanisms of self-motion perception. Annu Rev Neurosci. 2008;31:389–410. doi: 10.1146/annurev.neuro.29.051605.112953.

- 20. Galletti C, Battaglini PP, Fattori P. Eye position influence on the parieto-occipital area PO (V6) of the macaque monkey. Eur J Neurosci. 1995;7:2486–2501. doi: 10.1111/j.1460-9568.1995.tb01047.x.

- 21. Galletti C, Battaglini PP. Gaze-dependent visual neurons in area V3A of monkey prestriate cortex. J Neurosci. 1989;9:1112–1125. doi: 10.1523/JNEUROSCI.09-04-01112.1989.

- 22. Koyama S, et al. Separate processing of different global-motion structures in visual cortex is revealed by FMRI. Curr Biol. 2005;15:2027–2032. doi: 10.1016/j.cub.2005.10.069.

- 23. Pitzalis S, et al. Human v6: The medial motion area. Cereb Cortex. 2010;20:411–424. doi: 10.1093/cercor/bhp112.

- 24. Sereno MI, et al. Borders of multiple visual areas in humans revealed by functional magnetic resonance imaging. Science. 1995;268:889–893. doi: 10.1126/science.7754376.

- 25. Pitzalis S, et al. Wide-field retinotopy defines human cortical visual area v6. J Neurosci. 2006;26:7962–7973. doi: 10.1523/JNEUROSCI.0178-06.2006.

- 26. Huk AC, Dougherty RF, Heeger DJ. Retinotopy and functional subdivision of human areas MT and MST. J Neurosci. 2002;22:7195–7205. doi: 10.1523/JNEUROSCI.22-16-07195.2002.

- 27. Dukelow SP, et al. Distinguishing subregions of the human MT+ complex using visual fields and pursuit eye movements. J Neurophysiol. 2001;86:1991–2000. doi: 10.1152/jn.2001.86.4.1991.

- 28. Chukoskie L, Movshon JA. Modulation of visual signals in macaque MT and MST neurons during pursuit eye movement. J Neurophysiol. 2009;102:3225–3233. doi: 10.1152/jn.90692.2008.

- 29. Cardin V, Smith AT. Sensitivity of human visual and vestibular cortical regions to egomotion-compatible visual stimulation. Cereb Cortex. 2010;20:1964–1973. doi: 10.1093/cercor/bhp268.

- 30. Wall MB, Smith AT. The representation of egomotion in the human brain. Curr Biol. 2008;18:191–194. doi: 10.1016/j.cub.2007.12.053.

- 31. Bremmer F, Klam F, Duhamel JR, Ben Hamed S, Graf W. Visual-vestibular interactive responses in the macaque ventral intraparietal area (VIP). Eur J Neurosci. 2002;16:1569–1586. doi: 10.1046/j.1460-9568.2002.02206.x.

- 32. Galletti C, Fattori P. Neuronal mechanisms for detection of motion in the field of view. Neuropsychologia. 2003;41:1717–1727. doi: 10.1016/s0028-3932(03)00174-x.

- 33. Beintema JA, van den Berg AV. Heading detection using motion templates and eye velocity gain fields. Vision Res. 1998;38:2155–2179. doi: 10.1016/s0042-6989(97)00428-8.

- 34. Lee B, Pesaran B, Andersen RA. Area MSTd neurons encode visual stimuli in eye coordinates during fixation and pursuit. J Neurophysiol. 2011;105:60–68. doi: 10.1152/jn.00495.2009.

- 35. Snyder LH, Batista AP, Andersen RA. Coding of intention in the posterior parietal cortex. Nature. 1997;386:167–170. doi: 10.1038/386167a0.

- 36. Andersen RA, Buneo CA. Intentional maps in posterior parietal cortex. Annu Rev Neurosci. 2002;25:189–220. doi: 10.1146/annurev.neuro.25.112701.142922.

- 37. Cohen YE, Andersen RA. A common reference frame for movement plans in the posterior parietal cortex. Nat Rev Neurosci. 2002;3:553–562. doi: 10.1038/nrn873.

- 38. Bisley JW, Goldberg ME. Attention, intention, and priority in the parietal lobe. Annu Rev Neurosci. 2010;33:1–21. doi: 10.1146/annurev-neuro-060909-152823.

- 39. van den Berg AV. Robustness of perception of heading from optic flow. Vision Res. 1992;32:1285–1296. doi: 10.1016/0042-6989(92)90223-6.

- 40. Gu Y, DeAngelis GC, Angelaki DE. A functional link between area MSTd and heading perception based on vestibular signals. Nat Neurosci. 2007;10:1038–1047. doi: 10.1038/nn1935.

- 41. Takahashi K, et al. Multimodal coding of three-dimensional rotation and translation in area MSTd: Comparison of visual and vestibular selectivity. J Neurosci. 2007;27:9742–9756. doi: 10.1523/JNEUROSCI.0817-07.2007.

- 42. Galletti C, Fattori P, Kutz DF, Gamberini M. Brain location and visual topography of cortical area V6A in the macaque monkey. Eur J Neurosci. 1999;11:575–582. doi: 10.1046/j.1460-9568.1999.00467.x.

- 43. Galletti C, et al. The cortical connections of area V6: an occipito-parietal network processing visual information. Eur J Neurosci. 2001;13:1572–1588. doi: 10.1046/j.0953-816x.2001.01538.x.

- 44. Duhamel JR, Colby CL, Goldberg ME. Ventral intraparietal area of the macaque: Congruent visual and somatic response properties. J Neurophysiol. 1998;79:126–136. doi: 10.1152/jn.1998.79.1.126.

- 45. Galletti C, Fattori P, Kutz DF, Battaglini PP. Arm movement-related neurons in the visual area V6A of the macaque superior parietal lobule. Eur J Neurosci. 1997;9:410–413. doi: 10.1111/j.1460-9568.1997.tb01410.x.

- 46. Fattori P, Gamberini M, Kutz DF, Galletti C. ‘Arm-reaching’ neurons in the parietal area V6A of the macaque monkey. Eur J Neurosci. 2001;13:2309–2313. doi: 10.1046/j.0953-816x.2001.01618.x.

- 47. Fattori P, et al. Hand orientation during reach-to-grasp movements modulates neuronal activity in the medial posterior parietal area V6A. J Neurosci. 2009;29:1928–1936. doi: 10.1523/JNEUROSCI.4998-08.2009.

- 48. Galletti C, Battaglini PP, Fattori P. Parietal neurons encoding spatial locations in craniotopic coordinates. Exp Brain Res. 1993;96:221–229. doi: 10.1007/BF00227102.

- 49. Duhamel JR, Bremmer F, BenHamed S, Graf W. Spatial invariance of visual receptive fields in parietal cortex neurons. Nature. 1997;389:845–848. doi: 10.1038/39865.

- 50. Tootell RB, et al. Functional analysis of V3A and related areas in human visual cortex. J Neurosci. 1997;17:7060–7078. doi: 10.1523/JNEUROSCI.17-18-07060.1997.

- 51. Galletti C, Battaglini PP, Fattori P. ‘Real-motion’ cells in area V3A of macaque visual cortex. Exp Brain Res. 1990;82:67–76. doi: 10.1007/BF00230838.

- 52. Caplovitz GP, Tse PU. V3A processes contour curvature as a trackable feature for the perception of rotational motion. Cereb Cortex. 2007;17:1179–1189. doi: 10.1093/cercor/bhl029.

- 53. Tsao DY, et al. Stereopsis activates V3A and caudal intraparietal areas in macaques and humans. Neuron. 2003;39:555–568. doi: 10.1016/s0896-6273(03)00459-8.

- 54. Georgieva S, Peeters R, Kolster H, Todd JT, Orban GA. The processing of three-dimensional shape from disparity in the human brain. J Neurosci. 2009;29:727–742. doi: 10.1523/JNEUROSCI.4753-08.2009.

- 55. Nakamura H, et al. From three-dimensional space vision to prehensile hand movements: The lateral intraparietal area links the area V3A and the anterior intraparietal area in macaques. J Neurosci. 2001;21:8174–8187. doi: 10.1523/JNEUROSCI.21-20-08174.2001.

- 56. Berryhill ME, Olson IR. The representation of object distance: Evidence from neuroimaging and neuropsychology. Front Hum Neurosci. 2009;3:43. doi: 10.3389/neuro.09.043.2009.

- 57. Itti L, Koch C. Computational modelling of visual attention. Nat Rev Neurosci. 2001;2:194–203. doi: 10.1038/35058500.

- 58. Lisberger SG, Movshon JA. Visual motion analysis for pursuit eye movements in area MT of macaque monkeys. J Neurosci. 1999;19:2224–2246. doi: 10.1523/JNEUROSCI.19-06-02224.1999.

- 59. Lagae L, Raiguel S, Orban GA. Speed and direction selectivity of macaque middle temporal neurons. J Neurophysiol. 1993;69:19–39. doi: 10.1152/jn.1993.69.1.19.

- 60. Barth M, Norris DG. Very high-resolution three-dimensional functional MRI of the human visual cortex with elimination of large venous vessels. NMR Biomed. 2007;20:477–484. doi: 10.1002/nbm.1158.

- 61. Talairach J, Tournoux P. Co-planar Stereotaxic Atlas of the Human Brain. Stuttgart: Thieme; 1988.
