Abstract

Conditioned reinforcer effects may be due to the stimulus' discriminative rather than its strengthening properties. While this was demonstrated in a frequently-changing choice procedure, a single attempt to replicate in a relatively static choice environment failed. We contend that this was because the information provided by the stimuli was nonredundant in the frequently-changing preparation, and redundant in the steady-state arrangement. In the present experiments, 6 pigeons worked in a steady-state concurrent schedule procedure with nonredundant informative stimuli (red keylight illuminations). When a response-contingent red keylight signaled that the next food delivery was more likely on one of the two alternatives, postkeylight choice responding was reliably for that alternative. This effect was enhanced after a history of extended informative red keylight presentation (Experiment 2). These results lend support to recent characterizations of conditioned reinforcer effects as reflective of a discriminative, rather than a reinforcing, property of the stimulus.

Keywords: choice, conditional reinforcer, local analyses, preference pulse, key peck, pigeon

The reinforcing effectiveness of a primary reinforcer is usually attributed to its appetitive properties, which likely arise from that reinforcer's innate biological significance. In contrast, a conditional reinforcer is typically defined as a stimulus which has acquired appetitive properties and thus the ability to increase operant responding (Dinsmoor, 2004). These reinforcing properties are acquired via some relationship (e.g., temporal contiguity) with a primary reinforcer (Kelleher & Gollub, 1962). This characterization of conditional reinforcer effects as arising from the acquired appetitive properties of the stimulus has recently been questioned. Conditional reinforcers may be better described as discriminative stimuli (Davison & Baum, 2006; 2010), or signposts (Shahan, 2010), primarily guiding the behaving animal towards responses likely to lead to biologically relevant stimuli (primary reinforcers). According to this view, a conditional reinforcer's ability to increase responding results not from any acquired value, but instead from this ability to guide responding.

Davison and Baum (2006) rejected the conditioned-value account after an experiment in which they inserted response-contingent magazine-light illuminations (unaccompanied by food) into a frequently changing concurrent schedule of response-contingent foods (Davison & Baum, 2000). According to a pairing hypothesis or conditioned value account, magazine-light illuminations should be conditional reinforcers for these pigeon subjects with long histories of magazine light–food pairings. Davison and Baum (2006) judged the status of the magazine light as a conditional reinforcer by comparing the preference pulse (log response ratio as a function of time or responses since the event; Boutros, Elliffe, & Davison, 2010; Davison & Baum, 2002) after the magazine light with the preference pulse after a food reinforcer. A food reinforcer is typically followed first by a transient increase in relative responding to the alternative that produced that food reinforcer. Preference then settles at a level reflective of the overall reinforcer ratio (Davison & Baum, 2002; Landon, Davison, & Elliffe, 2003). The initial period of increased relative responding on the just-productive alternative may reflect that preceding reinforcer's response-strengthening properties. Davison and Baum (2006) thus reasoned that preference pulses could be used to evaluate the status of a stimulus as a (conditional) reinforcer.

Davison and Baum (2006) varied the correlation between the relative nonfood stimulus (magazine light) rate on an alternative with the relative food rate on that alternative. When the correlation was +1, the alternative that delivered the greater number of magazine lights also delivered the greater number of foods and each magazine light signaled that the just-productive alternative was likely to provide a greater proportion of the food deliveries in that component. When the correlation was -1, the alternative that delivered the greater number of magazine lights delivered the lesser number of food deliveries. When the correlation was 0, there were always equal numbers of magazine lights on each alternative, regardless of the component food ratio. Only when the relative magazine-light rate was positively correlated with the relative food rate was there a consistent period of preference for the just-productive alternative after a magazine light. This was also true when green keylights, with no history of food pairing, were used in place of magazine lights: Local preference after a stimulus was a function of the food ratio–stimulus ratio correlation, and not of any history of pairing the stimulus with food.

Davison and Baum (2006) even reported some preference for the not-just-productive alternative after a stimulus whose left∶right delivery ratio was negatively correlated with the left∶right food ratio. This was the case even when the stimulus was a magazine light with an extensive history of pairing with food. This finding in particular supports only a signaling interpretation of conditional reinforcer effects and not a strengthening account: Response-contingent presentation of a stimulus which signaled that the prior response is locally unlikely to produce food was followed by a relative decrease in the responses that led to this event. Such a relative decrease in responses to the productive alternative contradicts a pairing or conditioned value account. Davison and Baum (2010) later replicated these results using green keylight as both the unpaired and the (simultaneously) paired stimulus, demonstrating that whether the stimulus is a magazine light, or a green keylight, the food ratio–stimulus ratio correlation determines subsequent choice responding. Whether that stimulus has been presented in close temporal contiguity with a biologically relevant stimulus does not determine subsequent choice.

Boutros, Davison and Elliffe (2009) systematically replicated Davison and Baum's (2006) experiment with two major procedural differences. First, whereas Davison and Baum arranged a frequently-changing concurrent schedule, Boutros et al. (2009) arranged a steady-state procedure in which each left∶right food ratio remained unchanged for at least 50 sessions. Second, unlike Davison and Baum (2006; 2010) who presented red keylight and food simultaneously in their paired-stimulus conditions, Boutros et al. (2009) used forward-paired stimuli in which the 3-s red keylight preceded food delivery. These procedural differences proved to have large effects on the results. In Boutros et al.'s (2009) study, the food ratio–stimulus ratio correlation had no discernable effect on preference pulses, whereas the pairing history (paired vs. unpaired) of that stimulus with food strongly determined local preference. Local preference after any stimulus (paired or unpaired) was always towards the just-productive alternative, regardless of whether the food ratio–stimulus ratio correlation was −1, 0 or +1. Moreover, the preference pulse after a paired stimulus was markedly larger than the preference pulse after an unpaired stimulus.

Boutros et al. (2009) argued that they failed to obtain any effect of the food ratio–stimulus ratio correlation (cf. Davison & Baum, 2006; 2010) because the signaling properties of response-contingent stimuli correlated with food differ between frequently changing and steady-state environments. Sensitivity to the current reinforcer ratio is low early in a component in frequently changing environments, taking up to eight food deliveries to reach an asymptotic level between 0.4 and 0.7 (Davison & Baum, 2000; Landon & Davison, 2001). In a frequently changing environment, response-contingent stimuli whose left∶right delivery ratio is correlated with the left∶right food ratio provide additional information about the current (unknown) food ratio. In steady-state environments, on the other hand, the animal has extended experience with the current, unchanging, food ratio, making the stimuli redundant relevant cues (Trabasso & Bower, 1968). In Boutros et al.'s (2009) study, the response ratio approached strict matching to the reinforcer ratio (a group mean sensitivity to reinforcement of 0.94), in the absence of any response-contingent red keylights. This ceiling effect may have masked any discriminative control by the food ratio–stimulus ratio correlation. Response-contingent nonfood stimuli, such as those in Boutros et al.'s (2009) and Davison and Baum's (2006; 2010) experiments, may increase relative responding to the just-productive alternative (an apparent conditional reinforcer effect) only when the stimuli nonredundantly signal that such responding is likely to lead to food.

The present experiments investigated the importance of information nonredundancy in a steady-state arrangement. As in the earlier studies (Boutros et al., 2009; Davison & Baum, 2006; 2010), responses in a two-key concurrent schedule procedure sometimes produced food reinforcers, and sometimes produced keylight color changes (white to red for 3 s). Although the red keylights were never paired with food, they did predict food in a temporal sense: The next response-contingent event after a response-contingent red keylight was always a food delivery, just as the next response-contingent event after a food delivery was always a red keylight. In some conditions, the next food reinforcer was highly likely (p = .9) to be delivered for a response to the alternative that provided the prior red keylight. We termed these psame = .9 conditions. In other conditions, the subsequent food was highly likely to be on the other alternative. We termed these psame = .1 conditions, because the probability of a same-alternative food was low (p = .1). When psame was .1, the red keylights signaled that responses to the not-just-productive alternative were locally more likely to be reinforced. A discriminative view of conditional reinforcement predicts a relative decrease in responses to the just-productive alternative in these conditions. On the other hand, if these stimuli have any acquired appetitive properties (perhaps acquired as a result of their temporal relationship with food), and if these response-strengthening properties drive poststimulus choice responding, then increased responding to the just-productive alternative would be predicted. These psame = .1 conditions are in this sense akin to the negative correlation conditions of Davison and Baum's (2006; 2010) experiments.

Both when psame = .9 and when psame = .1, the stimulus was informative about the likely location of the next food delivery. We also arranged conditions in which the next food was as likely to be delivered for a response to the just-productive alternative as for a response to the not-just-productive alternative (psame = .5). In these conditions, the stimuli provided no information on the likely location of the next food delivery. Thus, as in Davison and Baum's (2006; 2010) experiments, but not Boutros et al.'s (2009) experiment, red keylights (in some conditions) provided nonredundant information about the likely location of the next food delivery. However, similar to Boutros et al.'s (2009) experiment, the global left∶right food ratio remained unchanged for extended periods (65 sessions in the present experiment). Will response-contingent stimuli that provide nonredundant information on the likely location of subsequent reinforcement be able to shift local preference in a steady-state preparation in a manner similar to that seen in Davison and Baum's frequently-changing arrangement? A positive finding would confirm that the discrepancies between Boutros et al.'s (2009) and Davison and Baum's (2006; 2010) experiments were not due to different rates of environmental variability per se, but were instead caused by differences in the signaling properties of the response-contingent nonfood stimuli that accompanied the changes in environmental variability.

We wish to stress that while post-red-keylight preference for the locally richer (as opposed to the just-productive) alternative would support a discriminative account of conditional reinforcer effects (Davison & Baum, 2006; 2010), such a finding would not constitute direct evidence against a response-strengthening account. In the present experiments the red keylights were never paired with food and the temporal relationship between red keylight and food remained constant throughout. Response-strengthening accounts of conditional reinforcement thus make no specific predictions about the results of these experiments. The present experiments instead clarify the role of information nonredundancy in discriminative accounts of conditional reinforcer effects.

EXPERIMENT 1

A single left∶right food ratio (1∶1 in five of the six conditions) was arranged throughout each 65-session condition. We varied the probability that the next food delivery would be on the same alternative as the last red keylight (psame) across conditions (Table 1). When the left∶right food ratio was 1∶1 and psame was either .9 or .1 (Conditions 1 and 2 respectively), red keylights provided nonredundant information about the likely location of the next food delivery. The probability of a left food was .5 before a red keylight, and was either .9 or .1 after it. When the global food ratio was 9∶1 and psame was .9 (Condition 3), left red keylights were not informative; they signaled no change in the local probability of a left food (.9 both before and after the red keylight). However, right red keylights signaled a change from the .9 global probability of a left food to a local .1 probability. In Conditions 4 and 5, psame was .5; meaning that the stimuli were always uninformative (as in Boutros et al.'s 2009 experiment). Condition 4 investigated any potential effects of unequal red keylight rates across the alternatives: there were many more (uninformative) red keylights on the left (left∶right red-keylight ratio = 9∶1). In Condition 5, red keylights remained uninformative about the likely location of the next food (psame = .5), and there were equal numbers of them on the left and right. Any effect of signaling the likely location of the subsequent food should be absent in these psame = .5 conditions.

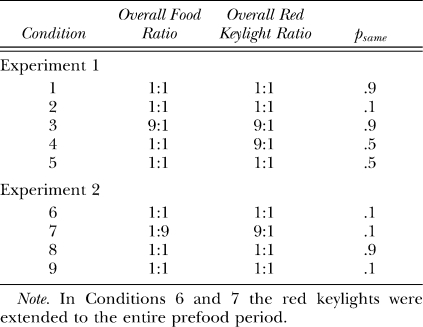

Table 1.

Sequence of conditions in Experiments 1 and 2 along with the overall food ratio, overall red ratio and probability of a food delivery on the same alternative as the last red keylight (psame).

Method

Subjects

Six homing pigeons, all experimentally naïve at the start of training and numbered 11 through 16, served as subjects. Pigeons were maintained at 85% ±15 g of their free-feeding body weights by postsession supplementary feedings of mixed grain when required. Water and grit were freely available in the home cages at all times. The home cages were situated in a room with about 80 other pigeons.

Apparatus

Each pigeon's home cage also served as its experimental chamber. Each cage measured 385 mm high, 370 mm wide and 385 mm deep. Three of the walls were constructed of metal sheets. The front wall, ceiling, and floor were metal bars. Two wooden perches were 60 mm above the floor. One of these was parallel to, and the other at a right angle to, the back wall. Three 20-mm diameter circular translucent response keys were on the right wall. These keys were 85 mm apart and 220 mm above the perches. The keys required a force exceeding approximately 0.1 N to register an effective response when illuminated. The food magazine (50-mm high, 50-mm wide, 40-mm deep) was also on the right wall, 100 mm below the center key. A food hopper, containing wheat, was situated behind the magazine and was raised and illuminated during food presentations. All experimental events were arranged and recorded on an IBM-PC compatible computer running MED-PC IV software in a room adjacent to the colony room.

Procedure

As the animals were experimentally naïve prior to this experiment, they were first magazine-trained. The duration that the food hopper was raised and the magazine illuminated was progressively decreased, and the interval between hopper presentations was progressively increased, until all birds were reliably eating as indicated by daily weighing.

Autoshaping (Brown & Jenkins, 1968) was then used to train the pigeons to peck lighted keys. One of the three keylights was illuminated. If no response was made after 4 s, the key was extinguished and the hopper raised and illuminated for 4 s. If an effective response was registered while the key was lit, the food was immediately delivered. After the food, there was a period of 5 s in which no food deliveries were arranged and the keylights were all darkened. After this period, a variable time (VT) schedule started. When this schedule timed out, a key was again chosen to be illuminated. Initially, a VT 5 s was used and this was increased across sessions to a VT 20 s. Autoshaping sessions lasted 60 min or until 120 food presentations had occurred, whichever happened first.

Training in the experimental conditions was started when all 6 pigeons were reliably pecking. A random-interval (RI, Clark & Hull, 1965) 5-s schedule of reinforcement was initially arranged and the RI schedule was progressively increased across sessions until the terminal RI 54-s schedule was reached. The switching key procedure was introduced at the same time as the RI 5-s schedule. The concurrent schedule contained elements of a two-key concurrent schedule and elements of a switching-key concurrent schedule: Food deliveries were arranged contingent on pecks to the left and right keys but explicit changeover responses to the center key were required to switch from one key to the other (a changeover ratio procedure, as used by Davison and Baum, 2006; 2010). At any one time, only the left or the right key was illuminated white along with the center switching key (illuminated red). In order to switch, the animal had to make four responses to the center key. The first response turned off the side key the animal had been pecking and the fourth response turned on the other key and turned off the switching key. The first peck to the newly illuminated side key turned the center key back on. Originally a two-response switching requirement was arranged but this was increased to four responses as part of attempts to correct somewhat abnormal behavior caused by a programming error.
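The changeover contingencies described above can be sketched as a small state machine. This is only an illustrative reading of the text, not the MED-PC program used in the experiment; the class name `ChangeoverKeys` and its attributes are hypothetical.

```python
class ChangeoverKeys:
    """Illustrative model of the four-response changeover requirement."""

    def __init__(self, start_side="L", ratio=4):
        self.ratio = ratio          # center-key pecks required to switch
        self.side = start_side      # side key currently in effect ("L" or "R")
        self.side_lit = True        # side key illuminated white
        self.center_lit = True      # center switching key illuminated red
        self.center_pecks = 0

    def peck(self, key):
        """Register a peck on "L", "R" or "C"; return True if the key was lit."""
        if key == "C":
            if not self.center_lit:
                return False
            self.center_pecks += 1
            if self.center_pecks == 1:
                # First changeover response turns off the current side key.
                self.side_lit = False
            if self.center_pecks == self.ratio:
                # Final changeover response lights the other side key
                # and turns off the switching key.
                self.side = "R" if self.side == "L" else "L"
                self.side_lit = True
                self.center_lit = False
                self.center_pecks = 0
            return True
        if key == self.side and self.side_lit:
            # A peck to the lit side key (re)lights the switching key.
            self.center_lit = True
            return True
        return False
```

In this sketch, a pigeon working on the left key that pecks the center key once can no longer respond effectively on the left, and only reaches the right key after three further center-key pecks.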

The programming error, which occurred during pretraining prior to the introduction of any red keylights, consisted of the left key being illuminated (though not effective) after a right food delivery and was not detected for 41 sessions. After 73 corrected sessions with a 1∶1 food ratio, we arranged a 9∶1 ratio for 33 sessions followed by a 1∶9 ratio for 17 sessions. At this point, local preference appeared similar to results previously obtained (with different subjects). We subsequently ran another 65-session condition where the left∶right food ratio was 1∶1 prior to Condition 1. No data from any of these conditions prior to introduction of the red keylights are presented.

The red keylights were then introduced. The overall event-delivery schedule was an exponential RI 27-s schedule. Food deliveries and red keylights (each 3-s long) strictly alternated and all events were dependently arranged (that is, each event, whether a food delivery or a red keylight, had to be collected before the event timer would recommence). Every 1 s, the MED-PC program sampled a probability generator and decided whether to arrange an event. Once an event (either a red keylight or food) was arranged, it was allocated to the next effective left or to the next effective right keypeck according to probabilities in that condition (Table 1). Responses occurring during response-contingent red keylights or food deliveries were recorded but had no scheduled consequences. During a food reinforcer, both keylights were off and during a red keylight illumination the nonilluminated keylight and food magazine were off. Each session lasted 60 food deliveries or 60 min, whichever came first. Each condition lasted for 65 daily sessions.
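The event-arranging logic described above can be approximated in a few lines. The sketch below is a simplification under stated assumptions, not the actual MED-PC code: it assumes the per-second arranging probability is 1/27 (to give a mean interval of 27 s), that every arranged event is collected immediately (so the pausing of the timer under dependent scheduling is ignored), that the 3-s event durations can be ignored, and that the first event is a red keylight. The function name and the `p_red_left` parameter (standing in for the red-keylight ratio in Table 1) are hypothetical.

```python
import random


def arrange_events(n_events, p_same, p_red_left=0.5, mean_interval_s=27, seed=0):
    """Return a list of (time_s, kind, side) tuples for strictly alternating events."""
    rng = random.Random(seed)
    p_per_second = 1.0 / mean_interval_s  # assumed sampling probability
    events = []
    t = 0
    kind = "red"            # assumption: the sequence starts with a red keylight
    last_red_side = None
    while len(events) < n_events:
        t += 1              # the probability generator is sampled once per second
        if rng.random() >= p_per_second:
            continue        # no event arranged in this second
        if kind == "red":
            side = "L" if rng.random() < p_red_left else "R"
            last_red_side = side
        else:
            # Food goes to the same side as the last red keylight with p_same.
            other = "R" if last_red_side == "L" else "L"
            side = last_red_side if rng.random() < p_same else other
        events.append((t, kind, side))
        kind = "food" if kind == "red" else "red"   # strict alternation
    return events
```

For example, `arrange_events(120, p_same=0.9)` would roughly mimic one session's worth of arranged events (60 red keylights and 60 food deliveries) in a psame = .9 condition with equal red-keylight rates on the two keys.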

Results and Discussion

Although 15 sessions has previously been identified as an adequate transition period in experiments with similarly long-running conditions (e.g., Boutros et al., 2009; Landon, Davison & Elliffe, 2002), the relevant measures of choice took longer to adjust in the present experiment (Rodewald, Hughes & Pitts, 2010). While the global left/right response ratio was unchanged from Session 15 to Session 65 in all conditions, the local response ratios after response-contingent events did change throughout some conditions. Across (overlapping) five-session blocks, we calculated the log ratio (left/right) of responses emitted in the first 10 s after each of the four response-contingent stimuli. We judged postevent preference to have stabilized when the log response ratio in one five-session block differed from the log response ratio in the preceding block by .25 log units or less. In Conditions 1 and 2 the postevent log response ratios reached stability within the first 10 sessions only to shift from this stable level to another (more extreme) level approximately 35 and 25 sessions into Conditions 1 and 2 respectively. Thus, we added to our stability criterion the requirement that at least 40 sessions had to have been conducted in that condition. We wished to equate the number of analyzed sessions across conditions, and so analyzed the last 20 sessions of each 65-session condition even when preference stabilized earlier (e.g., in Conditions 3–5).
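The stability criterion can be illustrated with a short sketch. Several details here are assumptions rather than statements from the procedure: the overlapping blocks are taken to advance one session at a time, response counts are pooled within a block before the ratio is taken, logarithms are base 10, and both counts in every block are nonzero. The function names are hypothetical.

```python
import math


def block_log_ratios(left, right, block=5):
    """Log10 (left/right) ratios over overlapping blocks of sessions.

    left, right: per-session response counts in the first 10 s after one
    type of event. Assumes pooled counts in every block are nonzero.
    """
    ratios = []
    for end in range(block, len(left) + 1):
        l = sum(left[end - block:end])
        r = sum(right[end - block:end])
        ratios.append(math.log10(l / r))
    return ratios


def first_stable_session(left, right, block=5, criterion=0.25, min_sessions=40):
    """Return the first session (1-based) at which the criterion is met, or None."""
    ratios = block_log_ratios(left, right, block)
    for i in range(1, len(ratios)):
        session = i + block  # last session included in block i
        if session >= min_sessions and abs(ratios[i] - ratios[i - 1]) <= criterion:
            return session
    return None
```

With perfectly constant counts across 65 sessions, this sketch reports stability at Session 40, the earliest session the added 40-session requirement allows.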

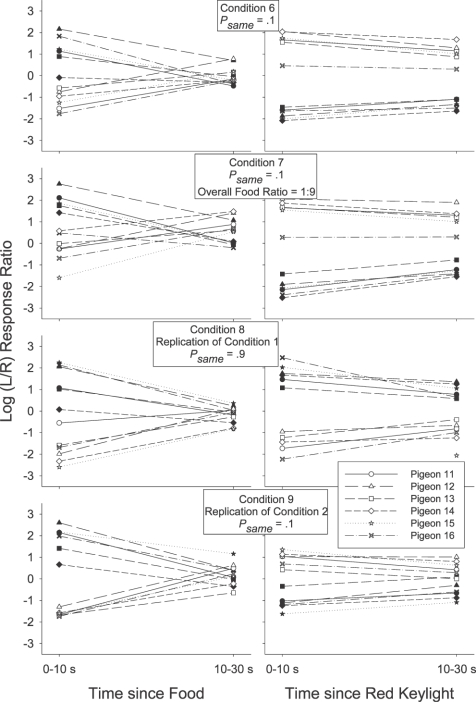

Before we present the preference pulse analyses, we wish to demonstrate that the group average preference pulse is broadly representative of local choice for all individual pigeons. Figure 1 presents local choice after a response-contingent event for each individual pigeon in each condition of Experiment 1. Responses in the postevent period were categorized as occurring 0 to 10 s after an event, or 10 to 30 s after that event. We chose to present individual subject choice in this way because preference pulse analyses require many responses to contribute to each data point and a number of individual subjects had minimal responses in some 2-s time bins in some conditions (because of the reduced number of sessions analyzed).

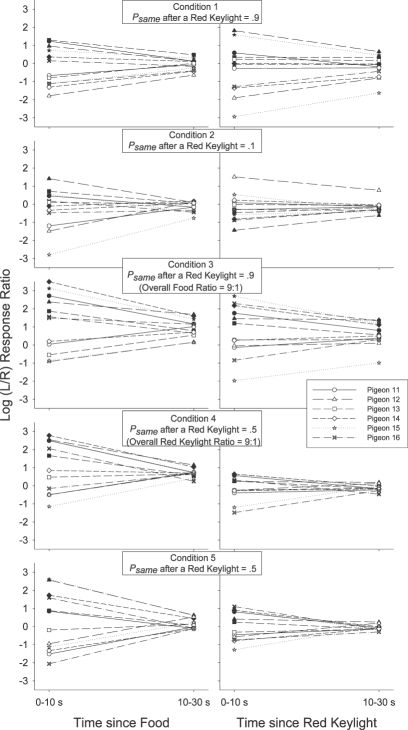

Fig 1.

Log (L/R) response ratio 0 to 10 s and 10 to 30 s after a response-contingent food delivery (left panels) or red keylight (right panels) for each individual subject in each condition of Experiment 1. Filled symbols depict preference after a left event (food or red keylight) and unfilled symbols depict preference after a right event.

Although Figure 1 indicates that the postevent response ratios varied, sometimes substantially, across subjects (e.g., compare Pigeon 15's response ratio after a left food delivery in Condition 3 with Pigeon 16's), the same general trends described choice responding for all pigeons in each condition. For all individual pigeons, responding was more extreme 0 to 10 s after an event (leftmost points on each plot) relative to 10 to 30 s after that event (rightmost points on each plot). Therefore, we will limit our presentation of the more detailed preference pulses to the group mean results. We will however continue to refer to the individual-subject data presented in Figure 1 to demonstrate that the effects visualized at a fine level of detail in the group preference pulses were also present in the data of all individual pigeons.

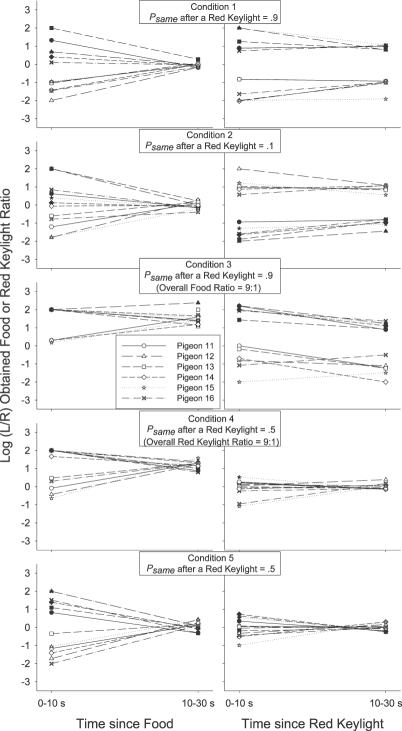

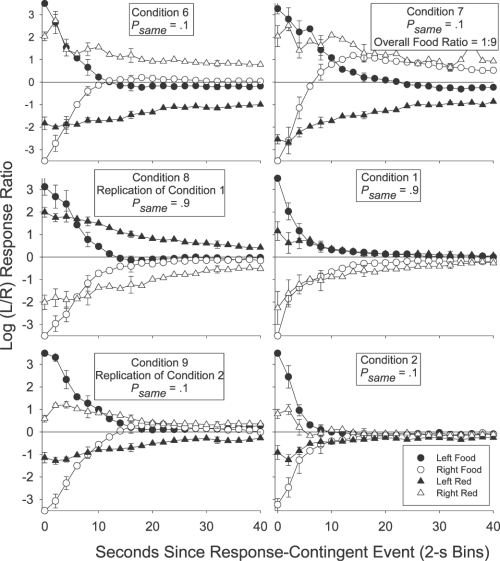

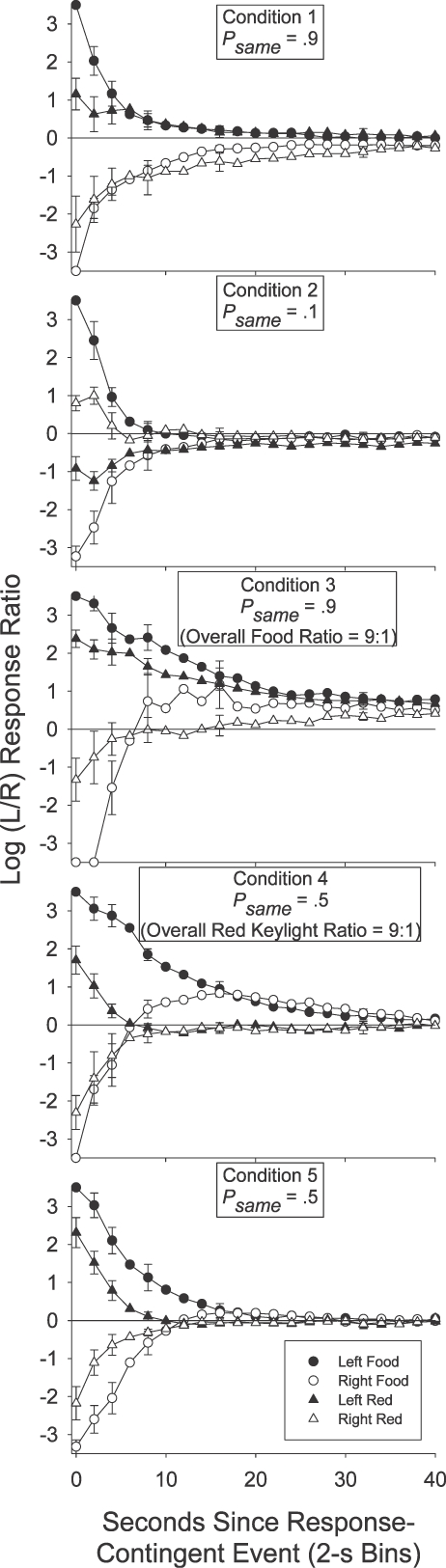

Figure 2 presents the group mean preference pulses in Conditions 1 to 5. The log (left/right) response ratio was calculated in each 2-s time bin after each response-contingent event for each individual subject. No individual subject log response ratio was calculated if there were fewer than 20 responses in total in a (2-s) time bin. A value of ±3.5 (more extreme than any nonexclusive log response ratio) was used if choice responding in a 2-s time bin was exclusive to one alternative. A data point (group mean) was plotted only if there were valid data for at least 3 of the 6 pigeons. For the sake of simplicity, and to avoid cluttering each plot, we limited error bars (±1 standard error) to representative data points starting at Bin 0, and then incrementing at log2 steps across time bins.
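The rules just described for computing a group preference pulse can be summarized in code. This is a minimal sketch, assuming base-10 logarithms and a simple list-of-counts data layout; the function and constant names are hypothetical and do not come from the original analysis software.

```python
import math

EXCLUSIVE_VALUE = 3.5   # plotted when all responses in a bin were to one key
MIN_RESPONSES = 20      # minimum total responses for a bin to be analyzed
MIN_SUBJECTS = 3        # minimum pigeons with valid data for a group mean


def bin_log_ratio(left, right):
    """Log (left/right) response ratio for one subject in one 2-s bin, or None."""
    if left + right < MIN_RESPONSES:
        return None                      # too few responses: no ratio calculated
    if right == 0:
        return EXCLUSIVE_VALUE           # exclusive left preference
    if left == 0:
        return -EXCLUSIVE_VALUE          # exclusive right preference
    return math.log10(left / right)      # assumption: base-10 logarithm


def group_pulse(counts_by_subject):
    """Group mean pulse from per-subject lists of (left, right) counts per bin."""
    n_bins = len(counts_by_subject[0])
    pulse = []
    for b in range(n_bins):
        values = [bin_log_ratio(*subject[b]) for subject in counts_by_subject]
        values = [v for v in values if v is not None]
        if len(values) >= MIN_SUBJECTS:
            pulse.append(sum(values) / len(values))
        else:
            pulse.append(None)           # not plotted
    return pulse
```

A bin with 30 left and 0 right responses would thus contribute +3.5 for that pigeon, while a bin with only 15 responses in total would contribute nothing to the group mean.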

Fig 2.

Group mean log (L/R) response ratio in successive 2-s time bins after each of the four response-contingent events in each condition of Experiment 1. Error bars (±1 standard error) are plotted at representative data points, first at time bin 0, and then in each time bin increasing in log2 units.

If a local increase in preference to the just-productive alternative is interpreted as a typical, definitional reinforcer effect (an increase in the response previously followed by reinforcement; Skinner, 1938), then Figures 1 and 2 indicate that the red keylights were (conditional) reinforcers in Conditions 1, 3, 4 and 5: In those conditions group mean (Figure 2) preference shortly after a red keylight (lefthand portion of each plot) was to the left (above the horizontal line indicating indifference) if the preceding red keylight had been on the left (filled triangles), and was to the right (below the indifference line) if the preceding red keylight had been on the right (unfilled triangles). The same trends were also present in the individual-subject data (Figure 1). In Conditions 1 and 3 red keylights signaled that the next food reinforcer was highly likely (psame = .9) to be contingent on a response to the just-productive alternative. In Conditions 4 and 5 (psame = .5), the next food was as likely to be on the not-just-productive as on the just-productive alternative: Red keylights only signaled that food was temporally closer. The increases in local preference to the just-productive alternative after a red keylight in Conditions 4 and 5 thus seem to suggest that simply signaling an increase in the local food rate can produce a transient increase in preference to the just-productive alternative.

However, signaling the likely location of the next food did have a demonstrable effect over and above simply signaling that food was forthcoming. First, for both the group mean (Figure 2), and for each individual pigeon (Figure 1), preference shortly (up to 10 s) after a red keylight was towards the not-just-productive alternative when the red keylights signaled a low probability (psame = .1) of a same-alternative food (Condition 2). In Condition 2 preference after a left red keylight (filled triangles in Figure 2) was below the indifference line, indicating more responding to the right, while preference after a right red keylight (unfilled triangles) was above the indifference line, indicating more responding to the left. Second, preference after a red keylight was towards the just-productive alternative for longer when the probability of a same-alternative food was .9 (Conditions 1 and 3) than when this probability was .5 (Conditions 4 and 5). This was apparent in the group preference pulses (Figure 2): When psame was .9 (Conditions 1 and 3), preference after left and right red keylights remained different from one another and from indifference (the horizontal line) for 30 s. When psame was .5 (Conditions 4 and 5), the preference pulses converged on one another and on indifference within 15 s. It was also evident in the simplified depictions of individual-subject postevent preference (Figure 1): A Wilcoxon matched-pairs signed ranks test indicated that preference 10 to 30 s after a left red keylight remained significantly different from preference 10 to 30 s after a right red keylight when psame was .9 (T6 = 0, p < .05 in both Conditions 1 and 3) but not when psame was .5 (T6 = 9, p > .05 in Condition 4; T6 = 7, p > .05 in Condition 5).
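To make the reported T values concrete, the sketch below computes the Wilcoxon T statistic for paired data and an exact two-tailed probability by enumerating all sign patterns. It assumes no zero differences are retained and no tied absolute differences, and whether the original tests were two-tailed exact tests is also an assumption. With six pigeons there are 2^6 = 64 sign patterns, so T = 0 corresponds to an exact two-tailed p of 2/64 ≈ .031, whereas T = 7 and T = 9 give p values well above .05. The function names are hypothetical.

```python
from itertools import product


def wilcoxon_T(x, y):
    """Return (T, n): the smaller signed-rank sum and the number of nonzero differences."""
    diffs = [a - b for a, b in zip(x, y) if a != b]
    # Rank the absolute differences (assumption: no ties among them).
    order = sorted(range(len(diffs)), key=lambda i: abs(diffs[i]))
    ranks = {idx: rank for rank, idx in enumerate(order, start=1)}
    w_plus = sum(ranks[i] for i, d in enumerate(diffs) if d > 0)
    w_minus = sum(ranks[i] for i, d in enumerate(diffs) if d < 0)
    return min(w_plus, w_minus), len(diffs)


def exact_two_sided_p(T, n):
    """Exact two-tailed p: proportion of sign patterns with a statistic <= T."""
    total = n * (n + 1) // 2
    count = 0
    for signs in product((0, 1), repeat=n):
        w_plus = sum(rank for rank, s in zip(range(1, n + 1), signs) if s)
        if min(w_plus, total - w_plus) <= T:
            count += 1
    return count / 2 ** n
```

For instance, `exact_two_sided_p(0, 6)` evaluates to 0.03125, which is why T = 0 is the only outcome that reaches the .05 level with six subjects under this test.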

Despite this continuing influence of the last red keylight's location up to 30 s after its delivery when psame was .9 (preference pulses; Figure 2), there was nonetheless some tendency for the behavior ratio to progressively move away from the local arranged left∶right food ratio (either 9∶1 or 1∶9 depending on the location of the prior red keylight): In all three Figure 2 panels depicting post-red-keylight preference when psame was .9, preference immediately after a red keylight was extreme and at times even exclusive for the just-productive alternative. Although preference continued to remain away from indifference for an extended period, it also moved towards indifference: Preference after a left red keylight (filled triangles) moved down towards the indifference line while preference after a right red keylight (unfilled triangles) moved up towards this indifference line. This shift to indifference seems somewhat at odds with a discriminative account of preference as it represents a shift in the response ratio away from the local arranged food ratios: The local probability of a left (versus a right) food delivery after a left red keylight was .9 throughout the period after a left red keylight, while this probability was .1 throughout the period after a right red keylight. Despite these very different local food ratios, preference still converged towards a single point of indifference.

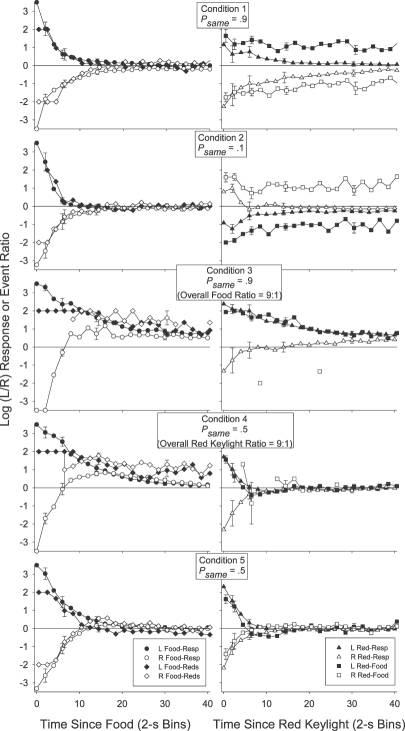

We investigated this by calculating the log obtained response-contingent event (food or red keylight) ratios in successive time bins after each response-contingent event. The individual-subject log obtained contingent event ratios across time were calculated in much the same way as were the log response ratios across time: As with the preference pulse analyses, the number of food reinforcers or red keylight illuminations received on each alternative in each 2-s time bin after a response-contingent red keylight or food was tallied. Then, a log (left/right) food or red keylight ratio was calculated in each time bin. We could then plot the log (left/right) food and red keylight ratios in each time bin as a function of the time since that last event. Unlike the preference pulse analyses, the minimum number of response-contingent events per time bin was 5 rather than 20 (as there were fewer reinforcers than responses per session), and when the event ratio in a particular time bin was exclusive, a value of ±2 was plotted rather than the ±3.5 used in the response analysis (±2 was more extreme than any of the calculated response-contingent event ratios, which were generally less extreme than the response ratios). The obtained food and red keylight ratios for each individual pigeon are presented in Figure 3 for 0 to 10 s and 10 to 30 s after a response-contingent event. The group mean obtained response-contingent event pulses are presented in Figure 4 in successive 2-s time bins following an event along with the preference pulses in the same period (reprinted from Figure 2). In both Figures 3 and 4, left panels present the log (left/right) response (Figure 4 only) or obtained red keylight ratios after a food delivery, and right panels present the log (left/right) response (Figure 4 only) or obtained food ratios after a red keylight.

Fig 3.

Log (L/R) response-contingent event ratio 0 to 10 s and 10 to 30 s after a response-contingent food delivery (left panels) or red keylight (right panels) for each individual subject in each condition of Experiment 1. Filled symbols depict event (food or red keylight) ratios after a left event and unfilled symbols depict event ratios after a right event.

Fig 4.

Group mean log (L/R) response and response-contingent event ratios in successive 2-s time bins after a response-contingent food delivery (left panels) or red keylight (right panels) in each condition of Experiment 1. Error bars (±1 standard error) are plotted at representative data points, first at time bin 0, and then increasing in log2 units. The contingent-event pulses are shifted to the right of the response pulses by .5 s on the x-axis in order to aid comparison. The preference (behavior) pulses in this figure are reprinted from Figure 2.

We were struck by the occasional apparent inconsistencies between the obtained response-contingent event ratio in a time bin and the experimenter-arranged response-contingent event ratio in that time bin. Immediately after a food (leftmost data points in the left panels of Figures 3 and 4), both the log response and obtained red-keylight ratios were strongly towards the just-productive alternative in all conditions for both the group mean (Figure 4) and all individual subjects (Figure 3). These relatively extreme obtained red-keylight ratios (greater than 10∶1) were often inconsistent with the arranged left∶right red-keylight ratios (1∶1 in Conditions 1, 2 and 5). As all events were dependently arranged, these extreme obtained local red-keylight ratios likely resulted from the extreme local preference for the just-productive alternative seen immediately after a food delivery in all conditions (Figures 1 and 2): Immediately after a food delivery, preference was often exclusive to the just-productive alternative (depicted by a data point at ±3.5 in Figures 1 and 2). Thus, no response-contingent red keylight could be delivered on the not-just-productive alternative in that time bin, even if arranged.

The extreme local behavior ratio immediately after a food delivery may initially have been caused by the changeover contingencies (Boutros et al., 2009; Krägeloh & Davison, 2003): Immediately after any response-contingent event, the just-productive alternative was available while access to the not-just-productive alternative required that the pigeon first complete the changeover requirement. The resulting extreme local response ratio, perhaps initially due only to the changeover contingencies, then drove the local obtained response-contingent event (red keylight) ratio to levels more extreme than those arranged: Collection of a response-contingent red keylight arranged on the not-just-productive alternative was deferred until the pigeon made a response to that alternative. This (delayed) response-contingent event may have then later signaled the availability of future response-contingent events in the time-bin in which that response occurred. In this way, the extreme response ratio drove the obtained red-keylight ratio to relatively extreme levels and this extreme obtained red-keylight ratio maintained the extreme behavior ratio (Herrnstein, 1970). Wilcoxon matched-pairs signed ranks tests were conducted to compare the individual subject log behavior ratio 0 to 10 s after a food delivery (leftmost data points in all Figure 1 plots) with the behavior ratio 10 to 30 s after that food delivery (rightmost data points in all Figure 1 plots). The differences were significant after a left food in Conditions 1, 3, 4 and 5 and after a right food in Conditions 1 and 5 (T6 = 0, p < .05 in all cases). The differences were not significant in the other four cases (T6 = 6, p > .05 after a left food in Condition 2 and after a right food in Conditions 2, 3 and 4). Thus, at least in 6 of these 10 cases, behavior changed as a function of time in the postfood period. 
The log obtained red-keylight ratios were significantly different 0–10 s after a food delivery (leftmost data points in Figure 3) from the ratios 10–30 s (rightmost data points in Figure 3) after that food in 8 of the 10 tests conducted on individual-subject log-obtained red-keylight ratios (significant differences after a left food in Conditions 1, 2, 4, and 5 and after a right food delivery in Conditions 1, 2, 3, and 5, T6 = 0, p < .05 in all cases; nonsignificant difference after a left food delivery in Condition 3, T6 = 5, p > .05, and after a right food delivery in Condition 4, T6 = 6, p > .05). This indicates that the obtained response-contingent event ratio also changed through time even though the arranged ratio did not. That both the log-response and log-obtained red-keylight ratios changed in similar ways, and both in a way contrary to that predicted by the arranged ratios in that period, suggests that these two local measures (one of behavior, Figures 1 and 2, and one of response-contingent events, Figures 3 and 4) fed back upon each other (Davison, 1998).
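With only 6 subjects, the Wilcoxon matched-pairs signed-ranks test has a very coarse set of possible outcomes, which is why every significant result above has T = 0. The following sketch computes T and an exact two-tailed p value by enumerating all sign assignments. The function names and the example data are hypothetical, and the exact-enumeration approach is an assumption about how such a test could be computed, not a description of the software the authors used.

```python
from itertools import product


def wilcoxon_T(x, y):
    """Return (T, ranks) for paired samples x and y.

    T is the smaller of the positive and negative rank sums.
    Zero differences are dropped; tied absolute differences share
    their average rank.
    """
    diffs = [a - b for a, b in zip(x, y) if a != b]
    order = sorted(range(len(diffs)), key=lambda i: abs(diffs[i]))
    ranks = [0.0] * len(diffs)
    i = 0
    while i < len(order):
        j = i
        while (j + 1 < len(order)
               and abs(diffs[order[j + 1]]) == abs(diffs[order[i]])):
            j += 1
        avg_rank = (i + j) / 2 + 1        # average of 1-based ranks i+1 .. j+1
        for k in range(i, j + 1):
            ranks[order[k]] = avg_rank
        i = j + 1
    pos = sum(r for r, d in zip(ranks, diffs) if d > 0)
    neg = sum(r for r, d in zip(ranks, diffs) if d < 0)
    return min(pos, neg), ranks


def exact_two_tailed_p(T, ranks):
    """Exact two-tailed p: share of sign assignments with statistic <= T."""
    n = len(ranks)
    total = sum(ranks)
    hits = 0
    for signs in product((0, 1), repeat=n):
        pos = sum(r for r, s in zip(ranks, signs) if s)
        if min(pos, total - pos) <= T:
            hits += 1
    return hits / 2 ** n


# Made-up log ratios for 6 pigeons (early vs. late period), for illustration only.
early = [2.1, 1.8, 2.5, 1.9, 2.2, 2.0]
late = [0.3, 0.2, 0.1, 0.4, 0.15, 0.25]
T, ranks = wilcoxon_T(early, late)
p = exact_two_tailed_p(T, ranks)
```

With 6 untied pairs there are 64 equally likely sign assignments, so T = 0 (all six birds changing in the same direction) gives p = 2/64, the only outcome below .05 two-tailed.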

Such continual feedback between the local response and food ratios was less apparent after response-contingent red keylights (right panels of Figures 3 and 4). Preference immediately after a red keylight (data points on the left of the righthand panels of Figures 1 and 2) tended to be relatively extreme (log response ratios greater than ± 2 for the individual subjects, except when psame was .1 in Condition 2). Throughout the post-red keylight period (data points on the right of the righthand panels of Figures 1 and 2), preference shifted towards indifference (a log response ratio of 0). Preference was significantly more extreme 0 to 10 s after a red keylight than 10 to 30 s after a red keylight in 9 of the 10 comparisons (Figure 1; T6 = 0, p < .05 after a left food in all conditions and after a right food in Conditions 2–5; T6 = 5, p > .05 after a right food in Condition 1). The local obtained food ratios (Figure 3) in that period however did not change: This local obtained food ratio was consistently towards the just-productive alternative throughout the post-red keylight period. Only 2 of the 10 Wilcoxon matched-pairs signed-ranks tests conducted on the individual-subject data (Figure 3) indicated a significantly different obtained food ratio 0 to 10 s after a red keylight compared to 10 to 30 s after that keylight (after a left food delivery in Conditions 2 and 3, T6 = 0, p < .05 in both cases). The log obtained food ratio did not change as a function of time since any food delivery in Conditions 1 (T6 = 6, p > .05 for left food deliveries; T6 = 9, p > .05 for right food deliveries), 4 (T6 = 4, p > .05 for left food deliveries; T6 = 9, p > .05 for right food deliveries), 5 (T6 = 10, p > .05 for left and right food deliveries) and did not change after a right food delivery in Condition 2 (T6 = 8, p > .05) and Condition 3 (T6 = 7, p > .05). 
Thus, while the local behavior ratio after a food delivery may have been similar to the local obtained red-keylight ratio throughout the postfood period (similarity of left panels of Figures 1 and 3; see above analysis), the local behavior ratio after a red keylight moved away from the local obtained food ratio throughout the post-red keylight period (dissimilarity of right panels of Figures 1 and 3). This apparently weak control by the local contingencies of reinforcement is surprising given a number of findings showing that behavior can be highly responsive to often-changing local contingencies (e.g., Davison & Baum, 2000; Davison & Hunter, 1979; Hunter & Davison, 1985; Krägeloh, Davison & Elliffe, 2005; Schofield & Davison, 1997).

The change from relatively extreme preference for the locally-rich alternative to relative indifference (Figure 2) resembles a typical memory-decay function (White, 2001; Wright, 2007). Thus, the low sensitivity to the local food ratio at relatively long times from the last red keylight (Figure 4) may have been due to a shortcoming of working memory. We did investigate whether proactive interference (Grant, 1975; Grant & Roberts, 1973) from earlier food deliveries or red keylights masked control by the most recent red keylight. There was no evidence of any control by the last food delivery, nor by any of the prior red keylights (analyses not presented), eliminating proactive interference as a possible explanation for the weak control by the most recent event. Thus, Experiment 2 attempted to identify the mechanism responsible for the apparently ever-decreasing control by the local food ratio (9∶1 or 1∶9) and ever-increasing control by the global food ratio (1∶1).

EXPERIMENT 2

Although the progressive decrease in sensitivity to the local food ratio may not have been due to proactive interference, some shortcoming of working memory may still have been responsible. Though response–reinforcer contingencies have been learned with delays of up to 60 s (Critchfield & Lattal, 1993; Lattal & Gleeson, 1990; Williams & Lattal, 1999), and even 24 hr (Ferster & Hammer, 1965), long intervening delays degrade discrimination of the response–reinforcer contingency. For example, response rate is generally an inverse function of response–reinforcer delay (Odum, Ward, Barnes, & Burke, 2006; Richards, 1981), and responding is more likely to be maintained on additional, nonfunctional manipulanda when there is a delay between reinforcement and responses on the functional manipulandum (Escobar & Bruner, 2007; Keely, Feola, & Lattal, 2007). The relation between a response-contingent red keylight and the location of the subsequent food delivery may similarly have been less easily learned at longer red keylight–food intervals. Further, learning the relation between a red keylight and the next food location at short temporal intervals does not necessarily imply generalization of this relation to longer intervals. White and Cooney (1996) separately manipulated the red∶green reinforcer ratio at two delays (0.1 s and 4 s) in a delayed matching-to-sample task. They found that varying the reinforcer ratio at the long delay had no effect on the response ratio at the short delay and vice versa (see also Sargisson & White, 2001), implying independent stimulus control across successive time intervals.

In Experiment 2, the red keylights were extended until the next food delivery, eliminating any requirement to hold that red keylight's position in working memory. In all other respects, Conditions 6 and 7 (Experiment 2) were the same as Conditions 1 through 5 (Experiment 1): Responses during the first 3 s of a red keylight continued to have no scheduled consequences, while subsequent responses could be followed by food. Only the key that delivered the response-contingent red keylight was illuminated red; the other key remained white (when illuminated). In both Conditions 6 and 7 psame was .1. The red keylights were therefore unlikely to acquire conditioned value via pairing—approximately 90% of food deliveries followed a peck to a white keylight, not a red one. Additionally, increased control by the red keylights in Conditions 6 and 7 would manifest as greater preference to the not-just-productive alternative, and thus could not result from any increases in hedonic value brought about via the (relatively infrequent) instances in which red keylights preceded food.

Extending the red keylight duration may increase sensitivity to the local obtained food ratio via at least two (not necessarily mutually-exclusive) mechanisms. First, the extended red keylights may reduce working memory load by eliminating the need to actively recall the left versus right location of the most recent red keylight. Second, extending the red keylights may allow for greater discrimination of the red keylight–food relation and thus greater concordance between the local response and obtained food ratios.

Conditions 8 and 9 were direct procedural replications of Conditions 1 and 2 respectively. If extending the stimuli had no effect aside from removing the need to remember the location of the last stimulus, the results of Conditions 8 and 9 should exactly replicate those from Conditions 1 and 2. Thus, low indices of stimulus control at long times since the last red keylight would again be expected. On the other hand, experience of the red keylight extending until the next food delivery in Conditions 6 and 7 may allow the pigeons to learn that the red keylights signal the location of the next food delivery even at relatively long times from initial presentation. Once learned, this relation may continue to control choice responding even when the red keylights no longer persist beyond 3 s and in this way cause more extreme preference in Conditions 8 and 9 than in Conditions 1 and 2.

Method

Subjects and Apparatus

The subjects and apparatus from Experiment 1 were again used.

Procedure

In Conditions 6 and 7 red keylights were extended beyond the 3 s of Experiment 1. In all other respects, the basic procedure in Conditions 6 and 7 was unchanged from the procedure of Experiment 1: Response-contingent events were delivered according to an overall RI 27-s schedule and food deliveries and red keylights strictly alternated. The left versus right location of each food delivery was determined according to probabilities unique to that condition (Table 1).

The first 3 s of a red keylight were the same in Conditions 6 and 7 as in Experiment 1: That is, no other keylight in the chamber was illuminated, and responses had no scheduled consequences. After 3 s, the switching key was turned on and responses to it and the red side key were again effective. Pecks to the red keylight could be followed by food (although this was scheduled to happen for only 10% of the total food deliveries). The switching key continued to operate in the same way as in Experiment 1. Whenever the side key that had provided the last red keylight came on, it was red. The other side key was lit white whenever it was on.

The sequence of conditions in Experiment 2 is listed in Table 1. The overall left∶right red ratio was 1∶1 in Condition 6 and 9∶1 in Condition 7. In both conditions, psame after a red keylight was .1; thus in Condition 6, the overall food ratio was 1∶1 while in Condition 7 this ratio was 1∶9. Conditions 6 and 7 were each conducted for 49 daily sessions. Conditions 8 and 9 were replications of Conditions 1 and 2 respectively: Red keylights again only lasted 3 s and each condition was run for 65 daily sessions. In both conditions, the global food and red keylight ratios were 1∶1. In Condition 8 psame was .9 and in Condition 9 psame was .1. Each session of each condition lasted 60 food deliveries or 60 min, whichever occurred first.

Results

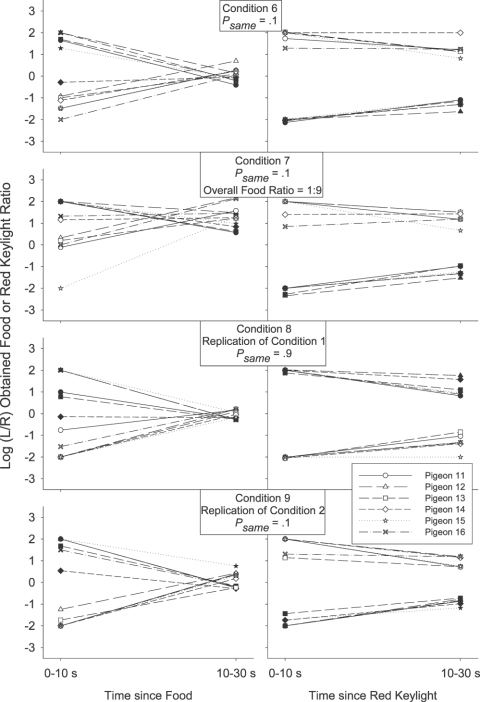

Although fewer sessions were conducted in Conditions 6 and 7, behavior stabilized more quickly (and remained stable) so data from the final 20 sessions could still be taken for analysis. Figure 5 presents the log (left/right) response ratio 0 to 10 s after a response-contingent event (leftmost data points) and 10 to 30 s after the same event (rightmost data points) for each individual pigeon in all conditions of Experiment 2. Figure 6 presents the group mean log (left/right) response ratio in successive 2-s time bins after each of the four response-contingent events in all conditions of Experiment 2. The preference pulses from Conditions 1 and 2 (Experiment 1) which Conditions 8 and 9 replicated are reprinted from Figure 2 to aid comparison. All Experiment 2 preference pulses were calculated in the same way as the preference pulses in Experiment 1.

Fig 5.

Log (L/R) response ratio 0 to 10 s and 10 to 30 s after a response-contingent food delivery (left panels) or red keylight (right panels) for each individual subject in each condition of Experiment 2. Filled symbols depict preference after a left event (food or red keylight) and unfilled symbols depict preference after a right event.

Fig 6.

Group mean log (L/R) response ratio in successive 2-s time bins after each of the four response-contingent events in each condition of Experiment 2. Preference pulses from Conditions 1 and 2 (Experiment 1) are reprinted to aid evaluation of the replication. Error bars (±1 standard error) are plotted at representative data points, first at time bin 0, and then in each time bin increasing in log2 units.

When the red keylights were extended (Conditions 6 and 7), preference after a red keylight (righthand panels of Figure 5 for individual subjects and triangles in Figure 6 for the detailed group mean) was strongly towards the not-just-productive alternative both immediately (data points on the lefthand side of the plots), and up to 30 s after (data points on the righthand side of the plot) that red keylight. Preference after a left-red keylight (filled symbols in the righthand panels of Figure 5; filled triangles in all panels of Figure 6) remained significantly different from preference after a right red keylight (unfilled symbols in the righthand panels of Figure 5; unfilled triangles in all panels of Figure 6) for 10 to 30 s in both conditions with extended red keylights (Figure 5; Wilcoxon matched-pairs signed ranks, T6 = 0, p < .05). Although, procedurally, Condition 8 was identical to Condition 1 (psame = .9) and Condition 9 was identical to Condition 2 (psame = .1), the results were not replicated. Preference in the latter conditions remained away from the horizontal line indicating indifference (and towards the locally richer food alternative) for longer compared to the earlier, pre-red keylight extension conditions (Figure 6).

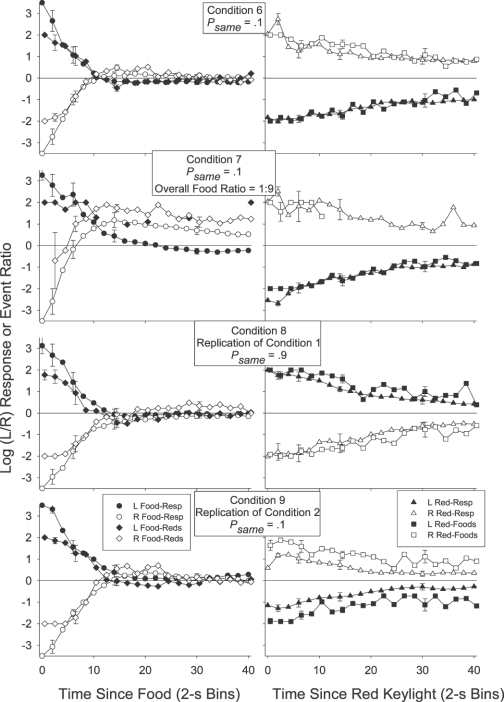

What of the local obtained food ratios? Figure 7 presents the obtained log (left/right) response-contingent event ratios for each individual subject 0 to 10 s and 10 to 30 s after a response-contingent event in all conditions of Experiment 2. Figure 8 presents the group mean obtained log (left/right) response and response-contingent event ratios in each 2-s time bin after a response-contingent event in all conditions of Experiment 2.

Fig 7.

Log (L/R) response-contingent event ratio 0 to 10 s and 10 to 30 s after a response-contingent food delivery (left panels) or red keylight (right panels) for each individual subject in each condition of Experiment 2. Filled symbols depict event (food or red keylight) ratios after a left event and unfilled symbols depict event ratios after a right event.

Fig 8.

Group mean log (L/R) response and response-contingent event ratios in successive 2-s time bins after a response-contingent food delivery (left panels) or red keylight (right panels) in each condition of Experiment 2. Error bars (±1 standard error) are plotted at representative data points, first at time bin 0, and then in each time bin increasing in log2 units. The contingent event pulses are shifted to the right of the response pulses by .5 s on the x-axis in order to aid comparison. The preference (behavior) pulses in this figure are reprinted from Figure 6.

The top two righthand plots in both Figures 7 and 8 show that local response and obtained food ratios after a red keylight were more similar to one another when the red keylights were extended (Conditions 6 and 7) than in Experiment 1 (Figures 3 and 4). The log response ratio 0 to 10 s after a red keylight (leftmost data points in the top right panel of Figure 5) was significantly different from the log response ratio 10 to 30 s after that red keylight (rightmost data points in the top right panel of Figure 5) in Condition 6 (Wilcoxon matched-pairs signed-ranks, T6 = 0, p < .05 for both left and right red keylights). The log obtained food ratio 0–10 s after a red keylight (leftmost data points in the top right panel of Figure 7) was also significantly different from the log obtained food ratio 10–30 s after a red keylight (rightmost data points in the top right panel of Figure 7) in this condition (Wilcoxon matched-pairs signed-ranks, T6 = 0, p < .05 for both left and right red keylights). In Condition 7, both the log behavior and log obtained food ratio 0 to 10 s after a red keylight (leftmost data points in the second righthand panel of Figure 5) differed significantly from the log behavior or obtained food ratio 10 to 30 s after that red keylight (rightmost data points in the second righthand panel of Figure 5) only for left red keylights (filled symbols, T6 = 0, p < .05); neither preference nor the obtained food ratio 0 to 10 s after a right red keylight (unfilled symbols) differed from preference or the obtained food ratio 10 to 30 s after a right red keylight (T6 = 6, p > .05 for the behavior ratio after a red keylight; T6 = 9, p > .05 for the obtained food ratio after a red keylight). Thus, extending the red keylights led to greater concordance between the local response ratios and local obtained food ratios: The local behavior ratio changed only when the local obtained food ratio changed.

This greater concordance of the local response and food ratios persisted when the stimuli were again shortened (Conditions 8 and 9; bottom two rows of panels in Figures 7 and 8). The local response ratios were more similar to the local obtained food ratios in Conditions 8 and 9 (Figures 7 and 8) than in Conditions 1, 2 and 3 (Figures 3 and 4). In both Conditions 8 and 9, the log response ratio 0 to 10 s after a left or a right red keylight (leftmost data points in the bottom two right panels of Figure 5) was significantly different from the log response ratio 10 to 30 s (rightmost data points in the bottom two righthand panels of Figure 5) after that red keylight (T6 = 0, p < .05 after left and right foods in both conditions). The log obtained food ratio 0 to 10 s after a red keylight (leftmost data points in the bottom two right panels of Figure 7) also differed significantly from the log obtained food ratio 10 to 30 s after a red keylight (rightmost data points in the bottom two right panels of Figure 7) in both of these conditions (T6 = 0, p < .05 after both left and right food deliveries). Thus, unlike Experiment 1, the log response and obtained food ratios both changed throughout the period following the red keylight.

GENERAL DISCUSSION

Both in Experiment 1 and in Experiment 2, choice responding after a response-contingent red keylight was reliably towards the alternative signaled by that red keylight as more likely to arrange the next food delivery. When psame was .9 and the next food was likely to be on the just-productive alternative, preference after a red keylight was towards that alternative, as predicted by both a discriminative stimulus or signpost account (Davison & Baum, 2006; 2010; Shahan, 2010), and by a response-strengthening account (Kelleher & Gollub, 1962; Williams, 1994). However, preference for the not-just-productive alternative when psame was .1 argues against this instrumental interpretation of the red keylight's effects. When the just-productive alternative was unlikely to provide the next food delivery, responding to that alternative decreased: The pigeons preferentially responded to the other, not-just-reinforced, locally richer alternative. This finding is contrary to a conditional reinforcement or strengthening account. The pigeons consistently preferred the locally richer alternative both before the red keylights were extended (Experiment 1) and after experience of the extended red keylights (Experiment 2). Thus, extending the red keylights did not change their function, but instead enhanced the red keylight effects: Preference was simply more extreme after the red keylights had been extended.

In fact, our major finding was invariant across a number of manipulations: Preference after a response-contingent nonfood stimulus was toward the alternative signaled by that nonfood stimulus as more likely to provide the next food delivery. This was the case when the stimuli were 3 s long (Conditions 1, 2, 3, 8, and 9) and when the stimuli were extended until the food delivery (Conditions 6 and 7), when psame was .9 (Conditions 1, 3 and 8) and when it was .1 (Conditions 2, 6, 7 and 9), when the global food ratio was 1∶1 (Conditions 1, 2, 6, 8 and 9) and when it was 9∶1 or 1∶9 (Conditions 3 and 7). Thus, preference for the alternative most likely to provide the next food delivery appeared to be fairly general, in that it did not vary with the overall food ratio, psame, or stimulus duration.

We, like Boutros et al. (2009), arranged a constant food ratio across each 65-session condition. However, unlike Boutros et al. (2009), we here found an effect of signaling the next food delivery's likely location. Davison and Baum (2006; 2010) also reported a consistent preference for the locally richer alternative after an informative response-contingent nonfood stimulus, but in a frequently changing environment. We suggest that information nonredundancy can account for all of these results: In a frequently changing procedure, sensitivity to the current reinforcer ratio is generally lower (.6; Davison & Baum, 2000) relative to more static environments (.85–.95; Baum, 1974; 1979), suggesting lower discrimination of the current food ratio. Thus, stimuli whose left∶right ratio is positively or negatively correlated with the current component's food ratio can signal that the alternative last responded to is either highly likely (in the case of positive correlations) or highly unlikely (in the case of negative correlations) to provide food in the current component. In this way, the stimuli in such frequently-changing procedures offer nonredundant information about the current food ratio. A similarly positively or negatively correlated stimulus in a constant environment where sensitivity to reinforcement is high and the current reinforcer ratio is highly discriminated (as in Boutros et al.'s, 2009 experiment) provides no similarly nonredundant information. In the present procedure, the red keylights were arranged to provide nonredundant information about the likely location of the next food delivery in the context of a steady-state procedure. The effect of these stimuli was similar to the effect of the stimuli in Davison and Baum's experiments: The pigeons consistently preferred the alternative more likely to provide the next food delivery. 
Thus, information nonredundancy appears to be crucial to a stimulus' discriminative control, and thus to choice responding after the stimulus. The rate of environmental variability has an effect only in that the degree to which behavior is controlled by the current contingencies of reinforcement tends to be correlated with rate of environmental variability.
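The sensitivity values cited above refer to the generalized matching law (Baum, 1974), in which the log response ratio is a linear function of the log reinforcer ratio with slope equal to sensitivity. The short sketch below illustrates what the difference between a sensitivity of about .6 and about .9 means for a 9∶1 food ratio. The function name, the zero bias, and the use of base-10 logarithms are assumptions made for illustration.

```python
import math


def predicted_log_response_ratio(reinforcer_ratio, sensitivity, log_bias=0.0):
    """Generalized matching: log(B1/B2) = a * log(R1/R2) + log c."""
    return sensitivity * math.log10(reinforcer_ratio) + log_bias


# Predicted response ratios (not logs) for an arranged 9:1 food ratio.
frequently_changing = 10 ** predicted_log_response_ratio(9, 0.6)  # roughly 3.7 to 1
steady_state = 10 ** predicted_log_response_ratio(9, 0.9)         # roughly 7.2 to 1
```

Under these assumptions, the same 9∶1 food ratio would support a much less extreme response ratio in the frequently changing procedure, which is the sense in which the current food ratio is less well discriminated there.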

When psame was .5 (Conditions 4 and 5) and the stimuli signaled that the two alternatives were equally likely to provide the next food delivery, preference immediately after a red keylight was reliably towards the just-productive alternative. This appears to suggest that, in addition to their discriminative properties, the red keylights had some direct strengthening effects, increasing the probability of the preceding response. While we cannot definitively eliminate such a response-strengthening function of the response-contingent red keylights, our analysis of the local obtained food ratios suggests another possible explanation for the preference for the just-productive alternative when psame was .5. Although the arranged probability of a same-alternative food delivery may have been .5 throughout the post-red keylight period, the obtained food ratio varied across time since the red keylight delivery, and in fact was strongly in favor of the just-productive alternative in the first few seconds after a response-contingent red keylight (Figures 3 and 4). The pigeons' behavior ratio (which favored the just-productive alternative) drove the obtained momentary response-contingent event ratio away from the arranged ratio: A food delivery could be arranged on either alternative with equal probability immediately after the red keylight. Only after a response was emitted could the food be collected, however. Thus, the extreme preference to the just-productive alternative immediately after a red keylight produced an extreme obtained local food ratio which continued to maintain the extreme response ratio.
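The way an extreme local response ratio can distort the obtained food ratio may be easier to see in a toy model. In the sketch below, each food is arranged on the just-productive ("same") or the other alternative, and the bird is assumed to respond only on the just-productive key for the first few seconds before visiting the other key. Everything here is a deliberately simplified assumption: food is taken to be available immediately after the red keylight, the stay duration is fixed, and the function and parameter names are hypothetical.

```python
def obtained_food_by_bin(arranged_sides, stay_s=4, bin_s=2, n_bins=5):
    """Tally obtained foods per time bin under a toy 'stay, then switch' rule.

    arranged_sides: sequence of "same" or "other", the alternative on which
    each food was arranged (relative to the just-productive key).
    A food on "same" is collected at time 0; a food on "other" cannot be
    collected until the bird first switches, at `stay_s` seconds.
    """
    counts = [{"same": 0, "other": 0} for _ in range(n_bins)]
    for side in arranged_sides:
        collect_time = 0 if side == "same" else stay_s
        b = min(collect_time // bin_s, n_bins - 1)
        counts[b][side] += 1
    return counts


# Arranged ratio is exactly 1:1, as when psame = .5.
arranged = ["same", "other"] * 50
counts = obtained_food_by_bin(arranged)
```

Although the arranged ratio is 1∶1, every food obtained in the first bin comes from the just-productive alternative, so the obtained local ratio there is exclusive, and foods arranged on the other alternative appear only in the bin where the first changeover occurs.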

We suggest that the above feedback cycle was initiated by changeover requirements which favored the just-productive response (Boutros et al., 2009; Davison & Baum, 2006; Krägeloh & Davison, 2003), along with historical contingencies of reinforcement. Just before the first condition where psame was .5 (Condition 4), psame was .9 (Condition 3). The preference for the just-productive alternative present in, and appropriate to, that earlier condition may have persisted into the next condition where, although somewhat less than optimal, it was not directly punished. Historical contingencies can have enduring effects which persist beyond removal of those contingencies (Freeman & Lattal, 1992; Johnson, Bickel, Higgins, & Morris, 1991; Krägeloh & Davison, 2003; Weiner, 1964, 1969) and even in the face of extant contingencies which directly penalize such continued adherence (Weiner, 1970). When psame was .9 (Condition 3), local choice after a red keylight strongly favored the just-productive alternative. When psame was changed to .5 (Condition 4), some small cost was imposed on continuing extreme preference for the just-productive alternative (e.g., food deliveries on the not-just-productive alternative may have been somewhat delayed). This small cost was insufficient to counteract the prior history of more often reinforcing responses to the just-productive alternative. Psame of .5 may be a weak contingency (Davison, 1998) which does not strongly drive behavior and readily reflects prior history, in this case, the immediately prior psame of .9. An established preference or pattern of behavior could be changed by current contingencies only if these new contingencies were sufficiently strong. This was the case when psame was .1 (Conditions 2 and 9) and followed a psame of .9 (Conditions 1 and 8) but not when psame = .5 (Condition 4) and followed a psame of .9 (Condition 3).

What can the results of the present experiments say about conditional reinforcement and the role of putative conditional reinforcers in signaling the likely location of future primary reinforcers? First, note that the red keylights were only paired with food in Conditions 6 and 7 of the nine experimental conditions and, even in those conditions, keylight–food pairings were rare (only 10% of food deliveries in those conditions followed a red keylight). Despite this, red keylights produced a transient increase in preference to the just-productive alternative whenever they did not signal that food was more likely on the other alternative. Any account which requires that a stimulus be temporally contiguous with primary reinforcement in order for this stimulus to engender apparent reinforcer effects cannot account for these results (see Williams, 1991, 1994 for an extended discussion of pairing accounts).

Might the red keylights have acquired conditioned value through some means other than temporal contiguity with food? The notion that stimuli which signal a reduction in time to primary reinforcement themselves acquire value as reinforcers has been formalized a number of times (e.g., Killeen, 1982; Mazur, 2001; Squires & Fantino, 1971). Although the red keylights always signaled a reduction in time to primary reinforcement, choice responding in the post-red-keylight period was not always indicative of the keylight having any acquired value: When the red keylights signaled that food was likely to come next for a response to the not-just-productive alternative (Conditions 2, 6, 7, and 9, in which psame was .1), there was a relative decrease in responses to the alternative that preceded that red keylight. Such a relative decrease in responses to the alternative that produced the stimulus is not consistent with any account of the stimulus acting as a reinforcer. Thus, red keylights in the present experiments may be best understood as discriminative stimuli which signaled the local obtained food ratio in the postkeylight period.

Our experiment was not a definitive test of any response-strengthening account of conditional reinforcement: The red keylights were only incidentally and infrequently paired with food, and the temporal relationship between red keylights and food was constant throughout. Additionally, we cannot eliminate the possibility that the red keylights acquired response-strengthening properties through some other means. Thus our results cannot be used to definitively reject any response-strengthening account of conditional reinforcement. However, the above-noted findings, and in particular the preference for the not-just-productive alternative when psame = .1, suggest not only that conditional reinforcers have discriminative properties, but also that, at least in choice situations, the influence of these discriminative properties appears to dwarf any direct response-strengthening properties of the reinforcers. For example, we cannot eliminate the possibility that the post-red-keylight preference for the just-productive alternative when psame = .5 reflected a response-strengthening property of the preceding response-contingent red keylight. However, even if this was the case, then the preference for the not-just-productive alternative when psame = .1 indicates that any response-strengthening properties are subservient to the signalling properties (when the stimuli are in fact nonredundantly informative).

Taken together, Davison and Baum's (2006; 2010) experiments, Boutros et al.'s (2009) experiment, and the present experiments all support the view that conditional reinforcers function as discriminative stimuli (Davison & Baum, 2006; 2010) or signposts (Shahan, 2010), guiding the behaving animal towards the source of subsequent reinforcement. Although biologically neutral stimuli may acquire hedonic properties via their relationship with intrinsically appetitive stimuli (these experiments are silent on this), these properties appear to have a smaller role in influencing choice responding after the stimuli. Rather, the discriminative or informational properties seem mostly responsible for the behavioral effects. If a stimulus uniquely and nonredundantly signals that the preceding behavior is highly likely to produce food, then it will appear to be a conditional reinforcer: An increase in preference for the alternative that produced it will follow. However, this is insufficient to claim definitively that the stimulus is indeed a reinforcer. Just as behavior can be driven by the stimulus towards the immediately preceding response, it can also be driven away from this response.

Acknowledgments

This experiment was conducted by the first author in partial fulfillment of the requirements for the degree of Doctor of Philosophy at the University of Auckland. We thank Mick Sibley for his care of the birds and members of the Auckland University Operant Laboratory who helped conduct the experiments.

REFERENCES

- Baum W.M. On two types of deviation from the matching law: Bias and undermatching. Journal of the Experimental Analysis of Behavior. 1974;22:231–242. doi: 10.1901/jeab.1974.22-231.

- Baum W.M. Matching, undermatching, and overmatching in studies of choice. Journal of the Experimental Analysis of Behavior. 1979;32:269–281. doi: 10.1901/jeab.1979.32-269.

- Boutros N, Davison M, Elliffe D. Conditional reinforcers and informative stimuli in a constant environment. Journal of the Experimental Analysis of Behavior. 2009;91:41–60. doi: 10.1901/jeab.2009.91-41.

- Boutros N, Elliffe D, Davison M. Time versus response indices affect conclusions about preference pulses. Behavioural Processes. 2010;84:450–454. doi: 10.1016/j.beproc.2009.11.007.

- Brown P.L, Jenkins H.M. Autoshaping of the pigeon's key-peck. Journal of the Experimental Analysis of Behavior. 1968;11:1–8. doi: 10.1901/jeab.1968.11-1.

- Clark F.C, Hull L.D. The generation of random interval schedules. Journal of the Experimental Analysis of Behavior. 1965;8:131–133. doi: 10.1901/jeab.1965.8-131.

- Critchfield T.S, Lattal K.A. Acquisition of a spatially defined operant with delayed reinforcement. Journal of the Experimental Analysis of Behavior. 1993;59:373–387. doi: 10.1901/jeab.1993.59-373.

- Davison M. Experimental design: Problems in understanding the dynamical behavior–environment system. The Behavior Analyst. 1998;21:219–240. doi: 10.1007/BF03391965.

- Davison M, Baum W.M. Choice in a variable environment: Every reinforcer counts. Journal of the Experimental Analysis of Behavior. 2000;74:1–24. doi: 10.1901/jeab.2000.74-1.

- Davison M, Baum W.M. Choice in a variable environment: Effects of blackout duration and extinction between components. Journal of the Experimental Analysis of Behavior. 2002;77:65–89. doi: 10.1901/jeab.2002.77-65.

- Davison M, Baum W.M. Do conditional reinforcers count? Journal of the Experimental Analysis of Behavior. 2006;86:269–283. doi: 10.1901/jeab.2006.56-05.

- Davison M, Baum W.M. Stimulus effects on local preference: Stimulus–response contingencies, stimulus–food pairing, and stimulus–food correlation. Journal of the Experimental Analysis of Behavior. 2010;93:45–59. doi: 10.1901/jeab.2010.93-45.

- Davison M.C, Hunter I.W. Concurrent schedules: Undermatching and control by previous experimental conditions. Journal of the Experimental Analysis of Behavior. 1979;32:233–244. doi: 10.1901/jeab.1979.32-233.

- Dinsmoor J.A. The etymology of basic concepts in the experimental analysis of behavior. Journal of the Experimental Analysis of Behavior. 2004;82:311–316. doi: 10.1901/jeab.2004.82-311.

- Escobar R, Bruner C.A. Response induction during the acquisition and maintenance of lever pressing with delayed reinforcement. Journal of the Experimental Analysis of Behavior. 2007;88:29–49. doi: 10.1901/jeab.2007.122-04.

- Ferster C.B, Hammer C. Variables determining the effects of delay in reinforcement. Journal of the Experimental Analysis of Behavior. 1965;8:243–254. doi: 10.1901/jeab.1965.8-243.

- Freeman T.J, Lattal K.A. Stimulus control of behavioral history. Journal of the Experimental Analysis of Behavior. 1992;57:5–15. doi: 10.1901/jeab.1992.57-5.

- Grant D.S. Proactive interference in pigeon short-term memory. Journal of Experimental Psychology: Animal Behavior Processes. 1975;104:207–220.

- Grant D.S, Roberts W.A. Memory trace interaction in pigeon short-term memory. Journal of Experimental Psychology. 1973;101:21–29.

- Herrnstein R.J. On the law of effect. Journal of the Experimental Analysis of Behavior. 1970;13:243–266. doi: 10.1901/jeab.1970.13-243.

- Hunter I, Davison M. Determination of a behavioral transfer function: White-noise analysis of session-to-session response ratio dynamics on concurrent VI VI schedules. Journal of the Experimental Analysis of Behavior. 1985;43:43–59. doi: 10.1901/jeab.1985.43-43.

- Johnson L.M, Bickel W.K, Higgins S.T, Morris E.K. The effects of schedule history and the opportunity for adjunctive responding on behavior during a fixed-interval schedule of reinforcement. Journal of the Experimental Analysis of Behavior. 1991;55:313–322. doi: 10.1901/jeab.1991.55-313.

- Keely J, Feola T, Lattal K.A. Contingency tracking during unsignaled delayed reinforcement. Journal of the Experimental Analysis of Behavior. 2007;88:229–247. doi: 10.1901/jeab.2007.06-05.

- Kelleher R.T, Gollub L.R. A review of positive conditioned reinforcement. Journal of the Experimental Analysis of Behavior. 1962;5:543–597. doi: 10.1901/jeab.1962.5-s543.

- Killeen P.R. Incentive theory II: Models for choice. Journal of the Experimental Analysis of Behavior. 1982;38:217–232. doi: 10.1901/jeab.1982.38-217.

- Krägeloh C.U, Davison M. Concurrent-schedule performance in transition: Changeover delays and signaled reinforcer ratios. Journal of the Experimental Analysis of Behavior. 2003;79:87–109. doi: 10.1901/jeab.2003.79-87.

- Krägeloh C.U, Davison M, Elliffe D.M. Local preference in concurrent schedules: The effects of reinforcer sequences. Journal of the Experimental Analysis of Behavior. 2005;84:37–64. doi: 10.1901/jeab.2005.114-04.

- Landon J, Davison M. Reinforcer-ratio variation and its effects on rate of adaptation. Journal of the Experimental Analysis of Behavior. 2001;75:207–234. doi: 10.1901/jeab.2001.75-207.

- Landon J, Davison M, Elliffe D. Concurrent schedules: Short- and long-term effects of reinforcers. Journal of the Experimental Analysis of Behavior. 2002;77:257–271. doi: 10.1901/jeab.2002.77-257.

- Landon J, Davison M, Elliffe D. Choice in a variable environment: Effects of unequal reinforcer distributions. Journal of the Experimental Analysis of Behavior. 2003;80:187–204. doi: 10.1901/jeab.2003.80-187.

- Lattal K.A, Gleeson S. Response acquisition with delayed reinforcement. Journal of Experimental Psychology: Animal Behavior Processes. 1990;16:27–39.

- Mazur J.E. Hyperbolic value addition and general models of animal choice. Psychological Review. 2001;108:96–112. doi: 10.1037/0033-295x.108.1.96.

- Odum A.L, Ward R.D, Barnes C.A, Burke K.A. The effects of delayed reinforcement on variability and repetition of response sequences. Journal of the Experimental Analysis of Behavior. 2006;86:159–179. doi: 10.1901/jeab.2006.58-05.

- Richards R.W. A comparison of signaled and unsignaled delay of reinforcement. Journal of the Experimental Analysis of Behavior. 1981;35:145–152. doi: 10.1901/jeab.1981.35-145.

- Rodewald A.M, Hughes C.E, Pitts R.C. Development and maintenance of choice in a dynamic environment. Journal of the Experimental Analysis of Behavior. 2010;94:175–195. doi: 10.1901/jeab.2010.94-175.

- Sargisson R.J, White K.G. Generalization of delayed matching to sample following training at different delays. Journal of the Experimental Analysis of Behavior. 2001;75:1–14. doi: 10.1901/jeab.2001.75-1.

- Schofield G, Davison M. Nonstable concurrent choice in pigeons. Journal of the Experimental Analysis of Behavior. 1997;68:219–232. doi: 10.1901/jeab.1997.68-219.

- Shahan T.A. Conditioned reinforcement and response strength. Journal of the Experimental Analysis of Behavior. 2010;93:269–289. doi: 10.1901/jeab.2010.93-269.

- Skinner B.F. The Behavior of Organisms: An Experimental Analysis. New York: Appleton-Century-Crofts; 1938.

- Squires N, Fantino E. A model for choice in simple concurrent and concurrent-chains schedules. Journal of the Experimental Analysis of Behavior. 1971;15:27–38. doi: 10.1901/jeab.1971.15-27.

- Trabasso T, Bower G.H. Attention in learning: Theory and research. New York: Wiley; 1968.

- Weiner H. Conditioning history and human fixed-interval performance. Journal of the Experimental Analysis of Behavior. 1964;7:383–385. doi: 10.1901/jeab.1964.7-383.

- Weiner H. Controlling human fixed-interval performance. Journal of the Experimental Analysis of Behavior. 1969;12:349–373. doi: 10.1901/jeab.1969.12-349.

- Weiner H. Human behavioral persistence. The Psychological Record. 1970;20:445–456.

- White K.G. Forgetting functions. Animal Learning & Behavior. 2001;29:193–207.

- White K.G, Cooney E.B. Consequences of remembering: Independence of performance at different retention intervals. Journal of Experimental Psychology: Animal Behavior Processes. 1996;22:51–59.

- Williams A.M, Lattal K.A. The role of the response–reinforcer relation in delay-of-reinforcement effects. Journal of the Experimental Analysis of Behavior. 1999;71:187–194. doi: 10.1901/jeab.1999.71-187.

- Williams B.A. Marking and bridging versus conditioned reinforcement. Animal Learning & Behavior. 1991;19:264–269.

- Williams B.A. Conditioned reinforcement: Neglected or outmoded explanatory construct? Psychonomic Bulletin & Review. 1994;1:457–475. doi: 10.3758/BF03210950.

- Wright A.A. An experimental analysis of memory processing. Journal of the Experimental Analysis of Behavior. 2007;88:405–433. doi: 10.1901/jeab.2007.88-405.