Abstract

It has long been understood that food deliveries may act as signals of future food location, and not only as strengtheners of prefood responding as the law of effect suggests. Recent research has taken this idea further—the main effect of food deliveries, or other “reinforcers”, may be signaling rather than strengthening. The present experiment investigated the ability of food deliveries to signal food contingencies across time after food. In Phase 1, the next food delivery was always equally likely to be arranged for a left- or a right-key response. Conditions were arranged such that the next food delivery was likely to occur either sooner on the left (or right) key, or sooner on the just-productive (or not-just-productive) key. In Phase 2, similar contingencies were arranged, but the last-food location was signaled by a red keylight. Preference, measured in 2-s bins across interfood intervals, was jointly controlled by the likely time and location of the next food delivery. In Phase 1, when any food delivery signaled a likely sooner next food delivery on a particular key, postfood preference was strongly toward that key, and moved toward the other key across the interreinforcer interval. In other conditions in which food delivery on the two keys signaled different subsequent contingencies, postfood preference was less extreme, and quickly moved toward indifference. In Phase 2, in all three conditions, initial preference was strongly toward the likely-sooner food key, and moved to the other key across the interfood interval. In both phases, at a more extended level of analysis, sequences of same-key food deliveries caused a small increase in preference for the just-productive key, suggesting the presence of a “reinforcement effect”, albeit one that was very small.

Keywords: choice, food reinforcement, signaling, preference pulse, pecking, pigeon

The law of effect was described by Thorndike (1911) and later by Skinner (1938) as generically asserting that reinforcers increase the probability of the response they follow. More recent research suggests that this prediction is true only when past and future contingencies are the same; in situations where future consequences differ from those in the past, it is the future contingency that controls choice following reinforcers, rather than the effect of reinforcers enhancing or maintaining responses emitted just prior to the reinforcer.

The ability of reinforcers to function as discriminative stimuli signaling future behavior–food contingencies has frequently been acknowledged in the experimental analysis of behavior. The period of decreased or absent responding following a food delivery on fixed-interval and fixed-ratio schedules is thought to occur because each reinforcer delivery signals the start of a period during which no responses will be reinforced (e.g., Schneider, 1969). A closely-related literature further suggests that the signaling properties of reinforcers may sometimes outweigh the strengthening effects. In the radial-arm maze (Olton & Samuelson, 1976), for example, rats quickly learned not to reenter an arm in which they had recently found food. That is, the response of entering a particular arm is not “reinforced” in the simple sense implied by the law of effect, because the discovery of food in one arm signals a change in the response–reinforcer contingency—food will not be found again in that arm in the immediate future. Similarly, in a conditional-discrimination task, the presence or absence of a reinforcer following the sample-stimulus presentation can signal the location of the alternative that will likely produce reinforcement in the comparison phase (Randall & Zentall, 1997). Under these conditions, the comparison choice was generally made to the key likely to produce a reinforcer, even when this key was the one that had not produced the reinforcer in the sample phase.

The location of the last-obtained reinforcer can also be a discriminative stimulus signaling future behavior–food contingencies. Krägeloh, Davison and Elliffe (2005) varied the conditional probability of obtaining food on one key, given that the previous food delivery was obtained on that key. At conditional probabilities of .7 and above, postfood preference was strongly toward the just-productive key. Preference pulses—the log response ratio plotted as a function of time or responses since a food delivery—lasted longer at higher conditional probabilities, and the level at which preference stabilized was more extreme. As the probability that the next food delivery would be on the just-productive key was decreased, postfood preference moved toward the not-just-productive key, although it was never as extreme as that for the just-productive key at high conditional probabilities. Thus, the direction of postfood preference appeared to be controlled by the likely location of the next food delivery, rather than by the location of the previous food.

Stimuli that are often called “conditional reinforcers” may also act as signals of future food ratios and thus produce effects which have been attributed to “reinforcement” when they signal similar future food contingencies. Davison and Baum (2006; 2010) noted that when ratios of additional nonfood stimuli were presented, and were highly correlated with food ratios, poststimulus preference was in the same direction as postfood preference, but when the stimulus-ratio to food-ratio correlation was negative, poststimulus preference pulses were toward the not-just-productive key. This was so whether or not the additional stimuli were paired with food.

In a steady-state environment, however, the correlation between stimulus ratio and food ratio has no effect on poststimulus choice. Under such conditions, Boutros, Davison and Elliffe (2009) found that postfood preference was affected only by stimulus–food pairing, but that the effect of the stimulus was discernible only at the most local level of analysis, as an increase in preference-pulse amplitude. The effect of the response-contingent stimuli on preference was also small in comparison with that of a food delivery. Boutros et al. (2009) concluded that the importance of response-contingent stimuli in a steady-state environment was small because the overall food ratio is already discernible from continued food ratios. In contrast, response-contingent stimuli in a highly variable environment in which the current food ratio is unknown, as used by Davison and Baum (2006; 2010), are important in that they do provide additional information about where the next food is more likely to occur. Thus, in any environment, the pattern of food delivery itself can provide information about the likely future contingencies of food delivery, and choice follows these future contingencies. This explanation was confirmed by Boutros, Davison and Elliffe (2011).

Krägeloh and Davison (2003) suggested that both time to food and location of next food may moderate the magnitude of preference, with relatively more delayed food being followed by smaller, shorter preference pulses (see also Davison & Baum, 2007). The studies above also show that the magnitude of preference is in some way related to the contingency; choice for the just-productive alternative generally occurs more frequently, or is more extreme, than choice for the not-just-productive alternative, even when the contingencies favor the not-just-productive alternative. Randall and Zentall (1997) noted that when responding was required on a center key between the presentation of the reinforcer and the comparison phase in a conditional discrimination, their pigeons tended to respond slightly more on the just-productive alternative, even when this was associated with a lower probability of reinforcement. Similarly, although Krägeloh, Davison and Elliffe (2005) found that the direction of preference changed with the probability of obtaining food on that alternative, preference under conditions where the next food was very likely to occur on the not-just-productive alternative was much less extreme. Such findings suggest an effect additional to a signaling effect, which could either be a “reinforcement” effect or possibly a simple continuation of responding on a key such as occurs in a normal visit to that key (e.g., Schneider & Davison, 2006).

Behavior also appears to be controlled by the probability of obtaining a reinforcer on a response alternative at any point in time. Catania and Reynolds (1968) observed an increase in response rate as time since a reinforcer increased, either if the variable-interval (VI) schedules were arranged arithmetically, or if the probability of a reinforcer being arranged increased with time since the last reinforcer. In contrast, when VI schedules were arranged according to a constant-probability schedule, the rate of responding remained relatively constant across time since a reinforcer. Elliffe and Alsop (1996) also reported clear changes in concurrent choice that were associated with differences in the way in which reinforcer availability changed with the passage of time. Similarly, Church and Lacourse (2001) observed that when rats were required to work on VI schedules with equal means and standard deviations, but with differing distributions of food in time after reinforcers, the pattern of postreinforcer responding differed depending on the distribution of foods in time. When intervals were arranged according to an exponential distribution, the mean first postfood response and the maximum rate of responding occurred earlier than when intervals were arranged according to a Wald distribution, in which the probability of food increases and then decreases in interreinforcer intervals. The effects of the different distributions across time since an event again show that the local time-based probability of obtaining food is an important determinant of behavior.

Do food deliveries signal local changes in food probabilities on conventional concurrent VI VI schedules? Any such effects require that there are local changes in food probability for responses after food deliveries, and such changes will occur in conventional concurrent VI VI schedules that are arranged with a changeover delay (Herrnstein, 1961) that continues to operate in the time following food delivery. Consider a 3-s changeover delay. If this operates after food delivery (i.e., according to the usual definition of a changeover delay), food is only available for responding after food delivery on the key that just produced food; the food ratio is locally infinite. If the animal changes over, it can receive no food for 3 s. Furthermore, such an effect may also occur when no changeover delay is arranged: If a food delivery on a key produces a locally strong preference for responding on (or an extended visit to) the same key after food, food deliveries scheduled and held on the not-just-productive key will not be collected and will become available later, again resulting in a locally extreme food ratio to the just-productive key. In this way, a dynamical enhancement of preference can occur: A local preference can produce a local change in the food ratio on a key, which will then presumably further enhance the local preference. Thus local preference pulses may be held up by their own bootstraps, as it were.

The present experiment investigated the ability of individual reinforcers to signal future time-based contingencies. Under some conditions, the signaling properties of the reinforcer were in direct opposition to the strengthening effects of the reinforcer. Phase 1 of the present experiment was designed to investigate whether food delivery can signal the likely availability of future food in time, using concurrent VI schedules on which the overall food ratio in sessions was always 1∶1, but food could occur on the average sooner or later after food delivery on the just-productive or not-just-productive key, or on the left or the right keys. In order to reduce or eliminate the effects of memory decrement over time since food, in Phase 2 a key-light stimulus that signaled the location of the just-productive key was arranged throughout the following interfood interval. No changeover delay was used.

METHOD

Subjects

Six naïve homing pigeons numbered 21 to 26 were maintained at 85% ± 15 g of their free-feeding body weight. Water and grit were available at all times. Pigeons were given postsession feeds of mixed grain when necessary to maintain their designated body weights.

Apparatus

The pigeons were housed individually in their home cages (375 mm high by 375 mm deep by 370 mm wide) which also served as experimental chambers. On one wall of the cage, 20 mm above the floor, were three plastic keys (20 mm in diameter) set 100 mm apart center to center. Each key could be illuminated yellow or red, and responses to illuminated keys exceeding 0.1 N were recorded. Beneath the center key, 60 mm from the perch, was a magazine aperture measuring 40 mm by 40 mm. During food delivery, key lights were extinguished, the aperture was illuminated, and the hopper containing wheat was raised for 2.5 s. The subjects could see and hear other pigeons in the room during the experiment; no person entered the room during this time.

Procedure

The pigeons were slowly deprived of food by limiting their intakes, and were taught to eat from the food magazine when it was presented. When pigeons were reliably eating during 2.5-s magazine presentations, they were autoshaped to peck the two response keys. One of the two keys was illuminated yellow or red for 4 s, after which food was presented independently of responding. If the pigeon pecked the illuminated key, food was presented immediately. Once the pigeons were reliably pecking the illuminated keys, they were trained over 2 weeks on a series of food-delivery schedules increasing from continuous reinforcement to VI 50 s presented singly on the left or right keys with yellow keylights. They were then placed on the final procedure described below.

Sessions were conducted in the pigeons' home cages in a time-shifted environment in which the room lights were lit at 12 midnight. Sessions for all 6 pigeons commenced at about 1.00 am. Room lights were extinguished at 4 pm.

Sessions were conducted once a day, commencing with the left and right keylights lit yellow, signaling the availability of a VI schedule on each key. Additionally, in the Phase 2 conditions (Conditions 8, 9 and 10), the key that had produced the last food was illuminated red during the following interfood interval. Sessions ran for 60 minutes or until 60 food deliveries had been collected, whichever occurred first. No changeover delay (COD; Herrnstein, 1961) was used.

Phase 1

Food was arranged according to a modified concurrent VI VI schedule, where the next-food location was determined at the prior food delivery (and at the start of each session) with a probability of .5. Thus, approximately equal numbers of food deliveries were available on both alternatives in each session. Although only one schedule at a time was ever in operation, both keys remained illuminated for the duration of each interfood interval.

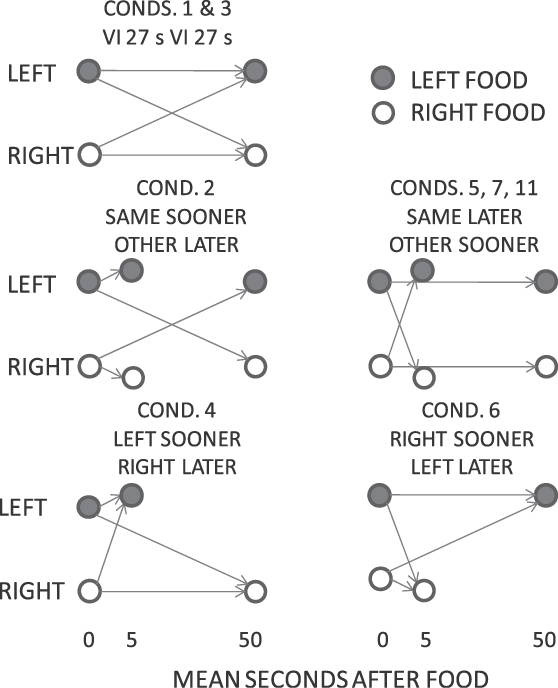

Figure 1 shows a diagram of the contingencies arranged in Phase 1. In Conditions 1 and 3, both schedules were VI 27 s, so the probability of food delivery at any time after food delivery on either key remained equal, and a food delivery did not signal any difference in expected time to the next food. In subsequent conditions, the two schedules were VI 5 s and VI 50 s, and across conditions we varied how the last food delivery, and in some conditions, its key location, signaled the key on which the VI 5-s and the VI 50-s schedules were available in the next interfood interval (Figure 1). Thus, in Condition 2, food was arranged on a VI 5-s schedule on the just-productive key, or on a VI 50-s schedule on the not-just-productive key. In Conditions 5, 7 and 11, the contingencies were the reverse of those in Condition 2, so that food was available on a VI 5-s schedule on the not-just-productive key, and on a VI 50-s schedule on the just-productive key. Thus, for Conditions 2, 5, 7 and 11, the location of the last reinforcer determined the location of the subsequent sooner schedule. In Condition 4, food was available on a VI 5-s schedule on the left key, and on a VI 50-s schedule on the right key, and these schedules were reversed in Condition 6; thus, under these conditions the location of the sooner schedule was independent of the last-reinforcer location. In all conditions, the mean time to the next food was approximately 27 s.
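The Phase 1 arrangement can be sketched as a short simulation. This is only an illustration under stated assumptions, not the program used in the experiment: the intervals are assumed to be exponentially distributed (the text does not say how VI intervals were generated), the starting key is arbitrary, and the function names and condition labels (`arrange_next_food`, `simulate`, `"same_sooner"`, and so on) are hypothetical.

```python
import random

# Schedule means (s) come from the text; everything else is an assumption.
SOONER, LATER, EQUAL = 5.0, 50.0, 27.0

def arrange_next_food(condition, last_key, rng):
    """Return (key, interval_s) for the next food delivery.

    condition: 'equal', 'same_sooner', 'same_later',
               'left_sooner' or 'left_later' (hypothetical labels)
    last_key:  'L' or 'R', the key that produced the previous food
    """
    key = rng.choice(["L", "R"])  # next-food location chosen with p = .5
    if condition == "equal":
        mean = EQUAL
    elif condition == "same_sooner":
        mean = SOONER if key == last_key else LATER
    elif condition == "same_later":
        mean = LATER if key == last_key else SOONER
    elif condition == "left_sooner":
        mean = SOONER if key == "L" else LATER
    elif condition == "left_later":
        mean = LATER if key == "L" else SOONER
    else:
        raise ValueError(f"unknown condition: {condition}")
    # Assumption: intervals are drawn from an exponential distribution.
    return key, rng.expovariate(1.0 / mean)

def simulate(condition, n_foods=20000, seed=1):
    """Return a list of (last_key, next_key, interval_s) tuples."""
    rng = random.Random(seed)
    last_key, results = "L", []  # arbitrary starting key (assumption)
    for _ in range(n_foods):
        key, interval = arrange_next_food(condition, last_key, rng)
        results.append((last_key, key, interval))
        last_key = key
    return results
```

Averaging the simulated intervals separately for same-key and other-key food deliveries should recover means near 5 s and 50 s, with roughly half of all deliveries on each key and an overall mean near (5 + 50) / 2 = 27.5 s.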

Fig 1.

Phase 1. A schematic diagram of the likely mean time to food on the left and right keys following a left- or right-key food delivery in Conditions 1 to 7, and 11. The mean intervals for left- and right-key food deliveries are shown by filled and open circles, respectively. Similar contingencies were arranged in Phase 2 (Conditions 8 to 10), but the key that produced the last food delivery was illuminated red (the other remained yellow) during the next interfood interval.

Phase 2

As in Phase 1, the overall food ratio was held approximately equal on the two keys, and the probability that the next food would be obtained sooner on the just-productive key, or sooner on the not-just-productive key, or sooner at a particular location (left or right key), was varied across conditions. However, the just-productive key was lit red for the duration of the following interreinforcer interval (IRI). In Condition 8, food was arranged on a VI 5-s schedule on the not-just-productive key, and a VI 50-s schedule on the just-productive key, as in Conditions 5, 7 and 11 in Phase 1. In Condition 9, food was arranged on a VI 5-s schedule on the right key, and on a VI 50-s schedule on the left key (as for Condition 6, Phase 1). In Condition 10, food was available on a VI 5-s schedule on the just-productive key, and on a VI 50-s schedule on the not-just-productive key (as in Condition 2 of Phase 1). Thus, in Conditions 8 and 10, the location of the likely-sooner food delivery depended on the location of the previous reinforcer, but in Condition 9, the location of the likely-sooner food delivery was always on the same key and was thus independent of the last-reinforcer location.

A PC-compatible computer running MED-PC® IV software in an adjacent room controlled and recorded all experimental events and the time at which they occurred. Each condition lasted for 85 daily sessions, and the data from the last 65 sessions were analyzed. Stability of data was assessed visually using graphs of log response ratios, updated daily. Changes in performance were complete within the first 20 sessions, and thus the data from the last 65 sessions used in the analysis may be regarded as being stable.

RESULTS

In the following data analyses, no group mean data were plotted if there were fewer than 120 responses or 60 food deliveries in a time bin summed across all pigeons over the 65 sessions. For individual data points, the respective numbers were 20 and 10. Additionally, some log-ratio data could not be plotted because no responses were emitted, or no foods obtained, on one key in a 2-s time bin.
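The inclusion rules above can be expressed as a small filter. The sketch below is illustrative only: it assumes the thresholds apply to the summed left-plus-right count in a bin and that logarithms are base 10, neither of which the text states explicitly, and the names `plottable_log_ratio`, `GROUP_MIN` and `INDIVIDUAL_MIN` are hypothetical.

```python
import math

def plottable_log_ratio(left, right, min_total):
    """Log10 (left/right) for one time bin, or None if the bin is excluded.

    A bin is excluded when the summed count is below `min_total` (the
    sample-size criterion) or when either count is zero (ratio undefined).
    Assumptions: the criterion applies to left + right, and logs are base 10.
    """
    if left + right < min_total:
        return None
    if left == 0 or right == 0:
        return None
    return math.log10(left / right)

# Thresholds from the text: group data need at least 120 responses or
# 60 foods per bin; individual data need at least 20 responses or 10 foods.
GROUP_MIN = {"responses": 120, "foods": 60}
INDIVIDUAL_MIN = {"responses": 20, "foods": 10}
```

For example, `plottable_log_ratio(1000, 100, GROUP_MIN["responses"])` gives a log ratio of 1.0, whereas a bin with 100 left and 10 right responses falls below the group criterion and is dropped.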

Phase 1

Figure 2 shows the mean obtained log left/right food ratios in successive 2-s bins in interfood intervals after left- and right-key food deliveries for Phase 1 (Conditions 1 to 7 and 11). As arranged, for all conditions except Conditions 1 and 3, the local distribution of food deliveries changed with increasing time since the last food. In the same–later conditions (Conditions 5, 7, and 11), the log food ratio immediately following a food delivery was strongly in the direction of the not-just-productive key. As time since the last food increased, the log food ratio moved in the direction of the just-productive key, becoming equal at around 10 s since the last food delivery, then moving strongly toward the just-productive key. In the same–sooner condition (Condition 2), the local food ratio in interfood intervals was in the opposite direction, beginning strongly in the direction of the just-productive key, and moving toward the not-just-productive key. In the left–sooner condition (Condition 4), the local food ratio was strongly toward the left key immediately following the delivery of a food, but moved toward the right key with time since food. The opposite was true for the left–later condition (Condition 6).

Fig 2.

Phase 1. Mean log (L/R) obtained food ratio as a function of time since a left or right food delivery, in 2-s bins. Some data fell off the graphs.

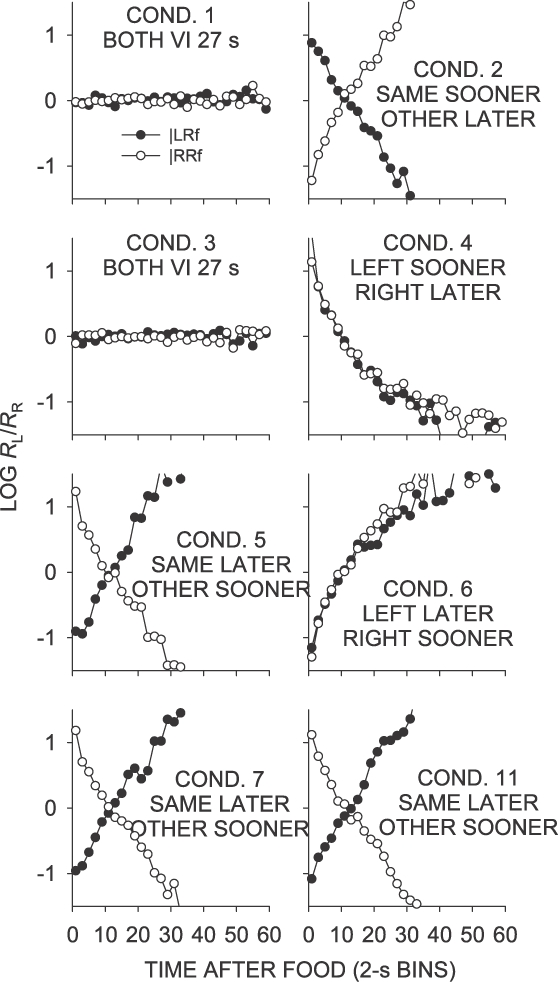

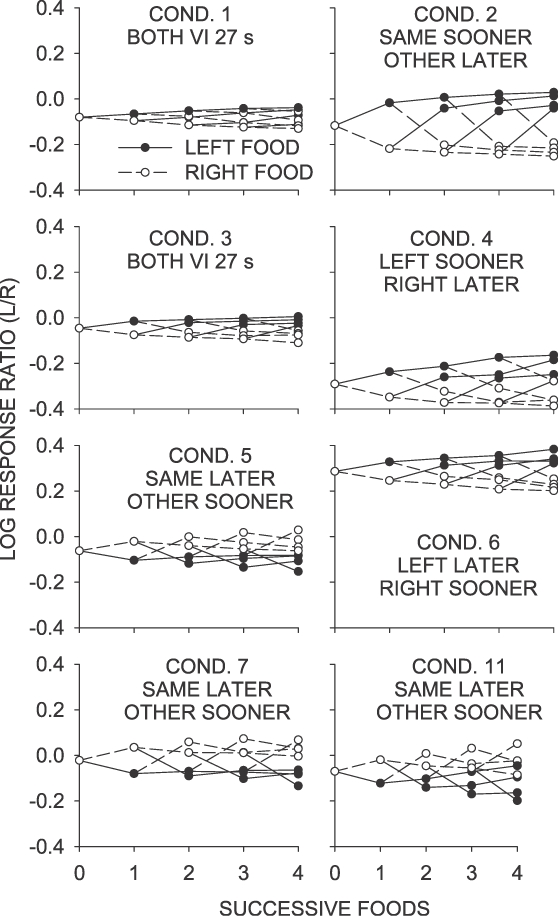

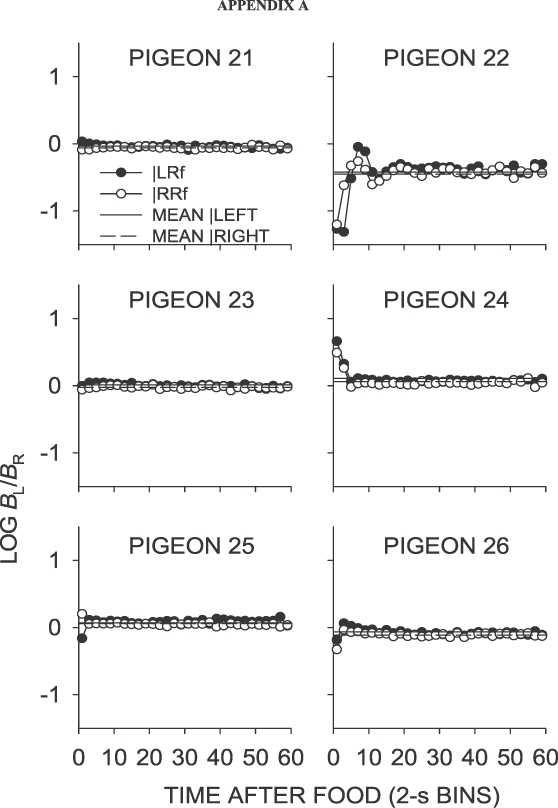

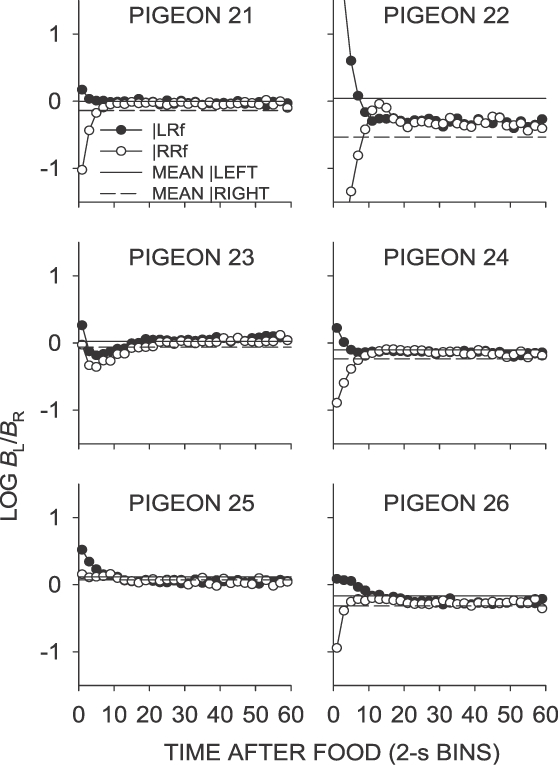

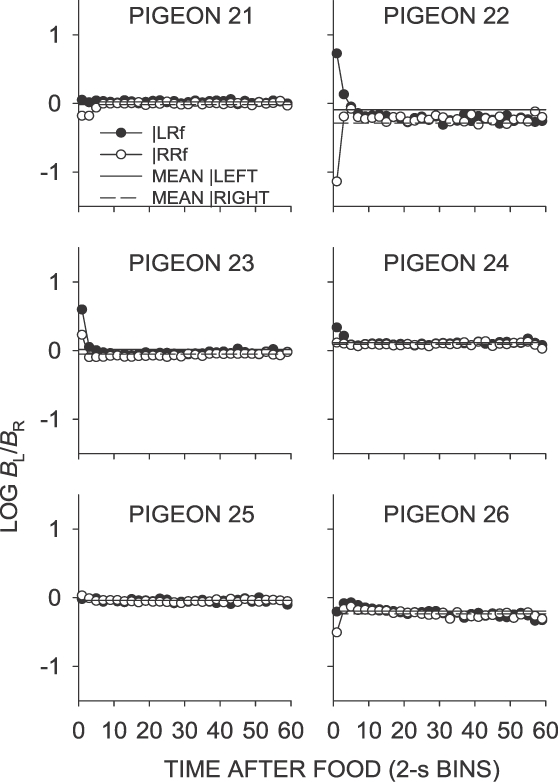

The effect of recent food deliveries on postfood choice was analyzed using preference pulses, in which the log left∶right response ratios were plotted as a function of time in 2-s bins since the last food, following both left- and right-key food deliveries. Figure 3 shows preference pulses from Phase 1 (Conditions 1 to 7 and 11) of the experiment collapsed across the 6 pigeons. Comparing this figure with Appendix Figures A1 to A8 (individual-subject data), it is apparent that the group data were generally representative of individual responding, with the possible exception of Conditions 5, 7 and 11. In these conditions, choice remained close to indifference for most birds, but the pattern of the mean data was affected by Pigeon 22, whose postfood choice was strongly controlled by the sooner-on-other-key contingency (Appendix Figures A5, A7 and A8). A similar pattern developed for Pigeon 25 in Condition 11. However, despite these quantitative differences, left-key choice was higher than right-key choice immediately after food for 5 pigeons in Conditions 5 and 7, and all 6 pigeons in Condition 11, showing a degree of control by the other–sooner contingency. Henceforth, discussion will focus on group data, noting individual-pigeon discrepancies. Particular features of interest are the magnitude and direction of the preference pulses immediately following the food delivery, and mean choice averaged across interfood intervals (the same as across whole sessions). The mean and range of first-bin and extended log response ratios for each condition are shown in Table 1.
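A preference pulse of the kind described here can be computed from a time-stamped event record. The following is a minimal sketch, not the authors' analysis code: the event format, the function name `preference_pulse`, the use of base-10 logarithms, and the assumption that each food timestamp marks the end of the hopper presentation are all illustrative choices.

```python
import math
from collections import defaultdict

def preference_pulse(events, bin_s=2.0):
    """Log10 (left/right) response ratio per time bin since the last food.

    events: time-ordered list of (time_s, kind, key), where kind is
            'food' or 'resp' and key is 'L' or 'R' (hypothetical format).
    Returns {last_food_key: {bin_index: log_ratio}}. Bins with no responses
    on one key are omitted because the ratio is undefined.
    """
    # (last_food_key, bin_index) -> response counts on each key
    counts = defaultdict(lambda: {"L": 0, "R": 0})
    last_food_time, last_food_key = None, None
    for time_s, kind, key in events:
        if kind == "food":
            last_food_time, last_food_key = time_s, key
        elif last_food_key is not None:  # skip responses before the first food
            bin_index = int((time_s - last_food_time) // bin_s)
            counts[(last_food_key, bin_index)][key] += 1
    pulses = {"L": {}, "R": {}}
    for (food_key, bin_index), c in counts.items():
        if c["L"] > 0 and c["R"] > 0:
            pulses[food_key][bin_index] = math.log10(c["L"] / c["R"])
    return pulses
```

In practice the counts would be pooled over sessions (and over pigeons for group data) before taking the log ratio, rather than computed from a single record as shown.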

Fig 3.

Phase 1. Mean log (Left/Right) response ratios as a function of time since left and right food deliveries. Also shown is the extended-level preference averaged across the last 65 sessions of the condition separately for each prior reinforcer. Condition 3 was a replication of Condition 1, and Conditions 7 and 11 replicated Condition 5.

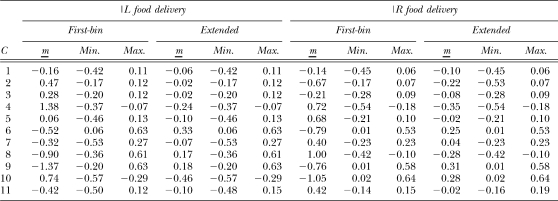

Table 1.

Mean (m), lower (Min.) and upper (Max.) first-bin, and extended, log response ratios following left and right food deliveries. C is condition number.

When food deliveries were arranged according to a VI 27-s schedule on both keys (Conditions 1 and 3; Figure 3), postfood preference in the first 2-s time bin was generally close to indifference following food deliveries from either alternative (Table 1). Preference stabilized around indifference after about 4 s. In Condition 3, immediately after food, Pigeon 22 showed strong choice toward the key that had just provided food, an apparent carryover from its performance in Condition 2.

Mean first-bin preference in the same–sooner condition (Condition 2; Figure 3) was larger than in the equal VI 27-s conditions (Conditions 1 and 3) following left- and right-key food deliveries (Table 1). Figure 3 shows that extended choice moved toward the sessional mean, slightly below indifference. As in other conditions, Pigeon 22 showed strong first-bin choice toward the key that arranged the sooner food.

Table 1 shows that first-bin preference in the left–sooner condition (Condition 4; Figure 3) was again more extreme than in the VI 27-s and same–sooner conditions (Conditions 1 to 3). In the left–sooner condition, first-bin preference was strongly toward the left key, irrespective of the last-food location. First-bin preference following left-key food was systematically more extreme than preference following a right-key food (Table 1). A binomial test of response ratios during the first 20 s following a food delivery averaged over the last 65 sessions of Condition 4 indicated that this difference was significant across individual subjects, z = 2.1, N = 6, p < .05. Preference moved toward and past indifference, and stabilized at a level reflecting extreme right-key choice. Such increased preference for the later-food key at longer times since the last food delivery resulted in mean choice across interfood intervals being toward the right (later) key for both the group mean and for each individual pigeon (Table 1).

In the left–later condition (Condition 6), the pattern of preference was generally opposite to that observed in the left–sooner condition (Condition 4), as might be expected given the opposing contingencies in effect. First-bin preference was strongly in the direction of the right key following both left-key and right-key food deliveries (Table 1), as indicated by the negative log ratios in Figure 3. A binomial test of log response ratios during the first 20 s after a food delivery averaged over the last 65 sessions indicated that the difference in preference following left-key and right-key food deliveries was significant across individual subjects, z = 2.1, N = 6, p < .05.

As found in the left–sooner condition (Condition 4), choice across interfood intervals moved toward the key on which the later schedule operated, reaching the sessional mean approximately 20 s after the last food delivery, and continuing to a level reflecting more extreme preference for the later-food (left) key. Unlike preference in the other Phase-1 conditions, preference in the left–sooner and left–later conditions did not stabilize at a level reflecting the sessional mean. As a result, mean preference in both the left–sooner and left–later conditions (Conditions 4 and 6) reflected overall preference for the later-food alternative.

In the same–later conditions (Conditions 5, 7 and 11), food deliveries occurred sooner on the not-just-productive key, and postfood preference in the first 2-s bin was generally toward the not-just-productive key following a food delivery from either alternative (Table 1). In Condition 7, mean first-bin preference was in the direction of the likely sooner-food location, whereas in Condition 5, only preference following right-key food deliveries was in the direction of the likely sooner-food location (Table 1). Although Condition 11 was conducted after several conditions in which the last-food location was signaled (Phase 2), the intervening conditions did not appear to affect postfood preference; the results from Condition 11 closely replicated those from Condition 7. As previously noted, local choice for all pigeons except Pigeon 22 (in all same–later conditions) and Pigeon 25 (in Condition 11) generally was close to indifference immediately following food delivery, and quickly stabilized at a level close to the sessional mean.

Although the contingencies were the direct opposite of those in the same–sooner condition (Condition 2), preference in the same–later conditions (Conditions 5, 7 and 11) was substantially less extreme. Both the magnitude and the duration of the postfood preference pulse were notably smaller in the same–later conditions, possibly reflecting a bias against postfood responding on the not-just-productive alternative.

Thus, the contingencies when the VI schedules were the same on both keys produced the least extreme postfood preference (Conditions 1 and 3). When the schedule was arranged to reinforce further the effect of the previous food delivery (same–sooner; Condition 2), postfood preference was more extreme. When the schedule favored a particular key (left sooner and left later; Conditions 4 and 6), there were strong postfood pulses in the direction of that key. The choice data from conditions in which the schedule favored the not-just-productive key (same later; Conditions 5, 7 and 11) were somewhat ambiguous, but suggested only weak control by the contingencies in most pigeons, with the exception of Pigeon 22, and Pigeon 25 in Condition 11, which showed more extreme preference for the not-just-productive key.

Effects of Successive Reinforcers

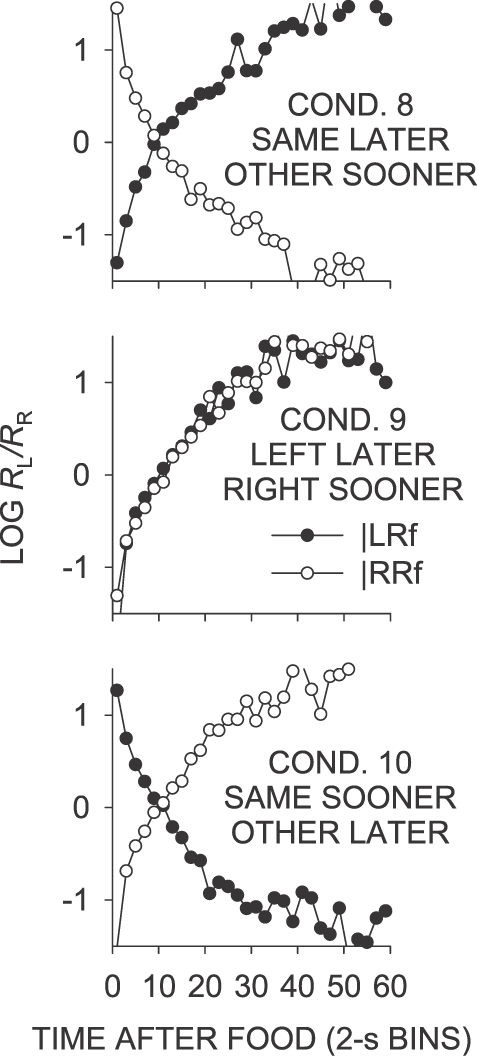

The log response ratios averaged over successive interfood intervals (“trees”) for selected sequences of up to four food deliveries obtained in a condition are plotted in Figure 4 as a function of food deliveries. In Conditions 1 and 3 (VI 27 s), the trees were similar in shape to those obtained elsewhere (e.g., Davison & Baum, 2000; 2002), with each successive food delivery having a small but reliable increasing effect on preference. This change in preference was greatest following discontinuations of sequences of same-key food deliveries. The tree diagrams were symmetrical, with left-key and right-key foods having a similar-sized effect on preference. However, the size of the preference changes across successive food deliveries was much attenuated compared with those reported by Davison and Baum (2000; 2002).

Fig 4.

Phase 1. Mean log response ratio within interreinforcer intervals as a function of successive food deliveries from left and right keys. Sequences analyzed overlapped; that is, the data points plotted for an x value of 1 were from any sequence ending in a left or a right reinforcer; for x = 2, the points were for any sequences ending in two left food deliveries, two right food deliveries, or left-right or right-left food deliveries.
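The overlapping-sequence ("tree") analysis can be sketched as follows. This is an illustrative reconstruction from the caption's description, not the authors' code: it handles a single session, assumes base-10 logarithms, and the input format and the name `tree_analysis` are hypothetical.

```python
import math
from collections import defaultdict

def tree_analysis(intervals, max_len=4):
    """Log10 (left/right) response ratio keyed by the preceding food sequence.

    intervals: time-ordered list of (food_key, n_left, n_right), where
               food_key ('L' or 'R') is the food that started the interfood
               interval and n_left / n_right are the responses emitted in it.
    Sequences overlap: each interval contributes to every sequence of
    length 1..max_len that ends with its starting food.
    """
    counts = defaultdict(lambda: [0, 0])  # sequence -> [left, right] responses
    history = ""
    for food_key, n_left, n_right in intervals:
        history = (history + food_key)[-max_len:]  # keep the last max_len foods
        for n in range(1, len(history) + 1):
            seq = history[-n:]
            counts[seq][0] += n_left
            counts[seq][1] += n_right
    return {
        seq: math.log10(left / right)
        for seq, (left, right) in counts.items()
        if left > 0 and right > 0  # ratio undefined otherwise
    }
```

With this layout, the key `"L"` pools every interval that followed a left food, while `"LL"` pools only those that followed two successive left foods, which matches the overlapping x = 1 and x = 2 points described in the caption.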

Successive-reinforcer analyses for the same–sooner condition (Condition 2) were similar to those in Conditions 1 and 3 in that they were symmetrical, with each food delivery being followed by a change in preference toward the just-productive key, and with the greatest effects occurring following discontinuations of same-key food deliveries. The change in preference following the first food delivery was, however, substantially greater, and the difference between post-left and post-right choice more apparent than in Conditions 1 and 3. In the left–sooner condition (Condition 4), tree analyses showed a similar magnitude of change in preference across successive food deliveries to that observed in the same–sooner condition (Condition 2), but with a smaller change occurring over the first three left-key food deliveries. Preference following continued right-key food deliveries was slightly less extreme than following left-key food deliveries. The opposite pattern was observed in the left–later condition (Condition 6), with the differential effects of the opposite contingencies (left food sooner in Condition 4, right food sooner in Condition 6) apparent in the overall level of the tree graphs. Preference in the left–sooner condition (Condition 4) was more toward the right key, while preference in the left–later condition (Condition 6) was more toward the left key; in both cases, preference was toward the key providing the longer average time to food. The overall level of preference for the later alternative in both conditions is a necessary consequence of the excellent control by the local food ratios for the duration of the IRI. With the VI 5-s and VI 50-s schedules in operation, responding quickly moved from the sooner key to the later key, and thus, overall, more time was spent responding on the later key.

The group-mean data show that each food delivery in the same–later conditions (Conditions 5, 7 and 11; Figure 4) produced a change in preference toward the not-just-productive key. The magnitude of change in preference produced across a sequence of four food deliveries in these conditions was similar in extent to that in Conditions 2, 4 and 6, but the pattern was quite different. While discontinuations again had a greater effect on preference than continuations, discontinuations changed choice toward the key that had not just provided food. Continued food deliveries on a key, however, changed preference slightly but progressively toward the key that had provided the last food.

Phase 2

In Phase 2, the last-food location was signaled by a red keylight, and henceforth Phase 1 conditions will be termed “unsignaled”, and Phase 2 conditions “signaled”, conditions. Log food ratios as a function of time since the last food delivery for Phase 2 (Conditions 8 to 10) are shown in Figure 5. Under these conditions, which were replications of the same–later (Conditions 5, 7 and 11), left–later (Condition 6), and same–sooner (Condition 2) conditions, but with the last-food location signaled by a red keylight, the local food-ratio changes were generally similar to those in the Phase 1 conditions, although more extreme immediately following the delivery of a food from either key in the signaled left–later condition (Condition 9), and slightly less extreme later in the IRI in the signaled same–later condition (Condition 8).

Fig 5.

Phase 2. Mean log (L/R) obtained food ratio as a function of time since a left or right food delivery, in 2-s bins. Some data fell off the graphs.

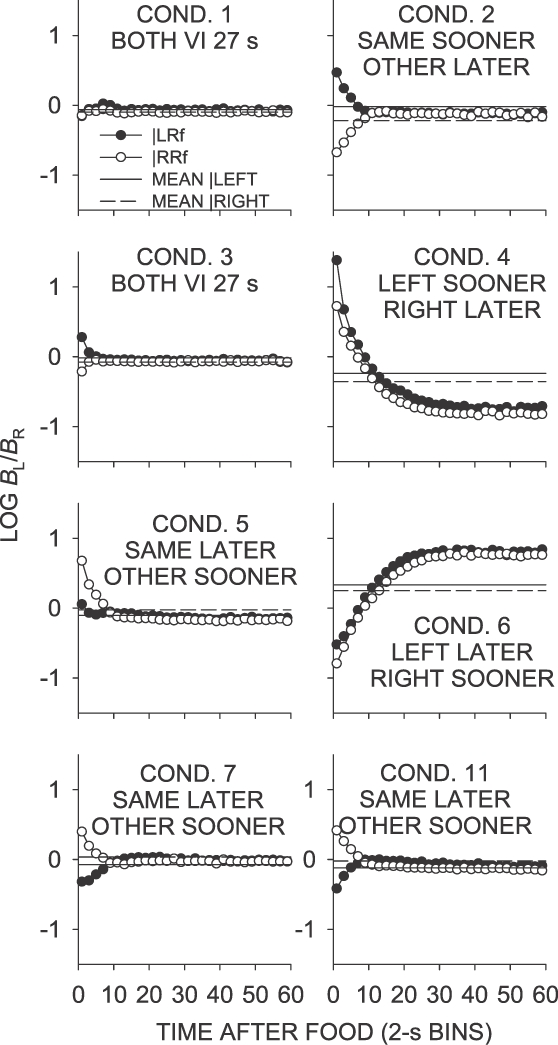

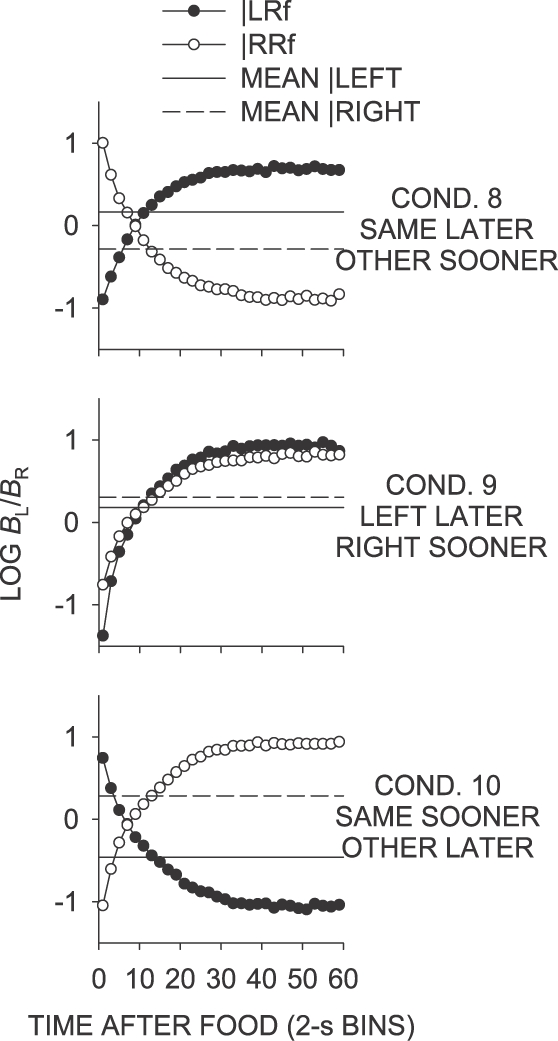

Figure 6 shows the log response ratio plotted as a function of time since a left and right food delivery for Conditions 8 to 10. Comparison of these data with the log food ratios in Figure 5 shows that the log food ratio was followed closely throughout the IRI in all conditions of Phase 2.
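For readers wishing to reproduce this kind of local analysis, the binning of responses by time since the last food delivery might be sketched as follows. The event format, the function names, and the treatment of empty cells are assumptions for illustration; the 2-s bin width is the only value taken from the text.

```python
import math
from collections import defaultdict

BIN_WIDTH_S = 2.0  # bin size used in the local analyses reported here


def tally_pecks(events, bin_width=BIN_WIDTH_S):
    """Count pecks per key, by last-food location and time bin since food.

    events: chronological list of (time_s, kind, key), with kind being
    "food" or "peck" and key being "L" or "R" (hypothetical format).
    """
    counts = defaultdict(lambda: {"L": 0, "R": 0})
    last_food_key = None
    last_food_time = None
    for t, kind, key in events:
        if kind == "food":
            last_food_key, last_food_time = key, t
        elif kind == "peck" and last_food_key is not None:
            bin_index = int((t - last_food_time) // bin_width)
            counts[(last_food_key, bin_index)][key] += 1
    return counts


def log_ratio(left, right):
    """Log10 (L/R); None when either count is zero (ratio not finite)."""
    if left == 0 or right == 0:
        return None
    return math.log10(left / right)


# Hypothetical fragment of a session.
events = [
    (0.0, "food", "L"), (0.5, "peck", "L"), (1.0, "peck", "L"),
    (1.5, "peck", "R"), (2.5, "peck", "R"),
    (4.0, "food", "R"), (4.2, "peck", "R"),
]
counts = tally_pecks(events)
first_bin_after_left = log_ratio(**{"left": counts[("L", 0)]["L"],
                                    "right": counts[("L", 0)]["R"]})
```

The same tally applied to food deliveries rather than pecks would give the obtained local food ratios against which the response ratios are compared.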

Fig 6.

Phase 2. Mean log (Left/Right) response ratios as a function of time since left and right food deliveries. Also shown is the extended-level preference averaged across the last 65 sessions of the condition separately for each prior reinforcer.

In the signaled same–later condition (Condition 8), individual data were well represented by the mean data (Figure 6; Appendix Figure B1), unlike those in the unsignaled same–later conditions (Conditions 5, 7 and 11). First-bin preference in the signaled same–later condition was strongly in the direction of the not-just-productive key for all birds. As in the unsignaled same–later conditions, preference moved from the not-just-productive key toward indifference over a period of 10 s, but eventually stabilized at a level strongly in the direction of the just-productive key, rather than at a level reflecting the sessional mean, which was close to indifference. The mean log response ratio across the interfood interval following a left food delivery was thus substantially more toward the later alternative than in the unsignaled same–later conditions (Table 1).

As in the unsignaled left–later condition (Condition 6; Figure 3), first-bin preference in the signaled left–later condition (Condition 9; Figure 6) was strongly toward the right key. The magnitude of this preference was slightly more extreme than in the unsignaled left–later condition (Table 1). Preference in the first 2-s bin was marginally more toward the right key following left-key food deliveries than following right-key food deliveries. The change in preference over time since food delivery in the signaled left–later condition was similar to that found in the unsignaled left–later condition, although the mean across interfood intervals was somewhat lower (Table 1). The level at which IRI preference stabilized was similar to that in the unsignaled left–later condition. As in the unsignaled left–sooner and left–later conditions (Conditions 4 and 6, respectively), preference across interreinforcer intervals in the signaled left–later condition stabilized at a level much more extreme than the sessional mean.

First-bin postfood preference in the signaled same–sooner condition (Condition 10; Figure 6) was directionally similar to that in the unsignaled same–sooner condition (Condition 2), but was much more extreme (Table 1). Interfood preference did not stabilize at the same point across the interreinforcer interval as in the unsignaled same–sooner condition, instead crossing the sessional mean to reflect strong preference for the not-just-productive key. Mean preference across interreinforcer intervals was thus more extreme for the signaled same–sooner condition than for the unsignaled same–sooner condition, as a result of the increased time spent responding on the later-food key, later in the interfood interval.

With the addition of a stimulus signaling the last-food location, postfood preference under conditions where the location of the sooner schedule depended on the last-food location (Conditions 8 and 10) was more extreme, and always in the direction of the likely sooner next-food location, regardless of whether the next food delivery was arranged sooner on the just-productive, or on the not-just-productive key. The addition of the signaling stimulus when the sooner schedule remained on one key (Condition 9) had only a minimal effect on the magnitude of choice immediately following the delivery of a food in comparison with the unsignaled left–later condition (Condition 6).

In addition, preference later in the interfood interval for the signaled conditions approximated the local food ratio, in accordance with the contingencies in effect. In comparison, preference later in the interfood interval in the unsignaled conditions (with the exception of the left–sooner and left–later conditions; Conditions 4 and 6) approximated the overall food ratio. The addition of the signaling stimuli in Phase 2 thus appears to have removed the requirement to remember the last-food location in order to discriminate the location of the next sooner schedule; under these signaled conditions, only time since food was necessary for tracking the next-food location, and thus the behavior was more similar to that observed in those conditions where last-food location signaled nothing about the next-food location.

Effects of Successive Reinforcers

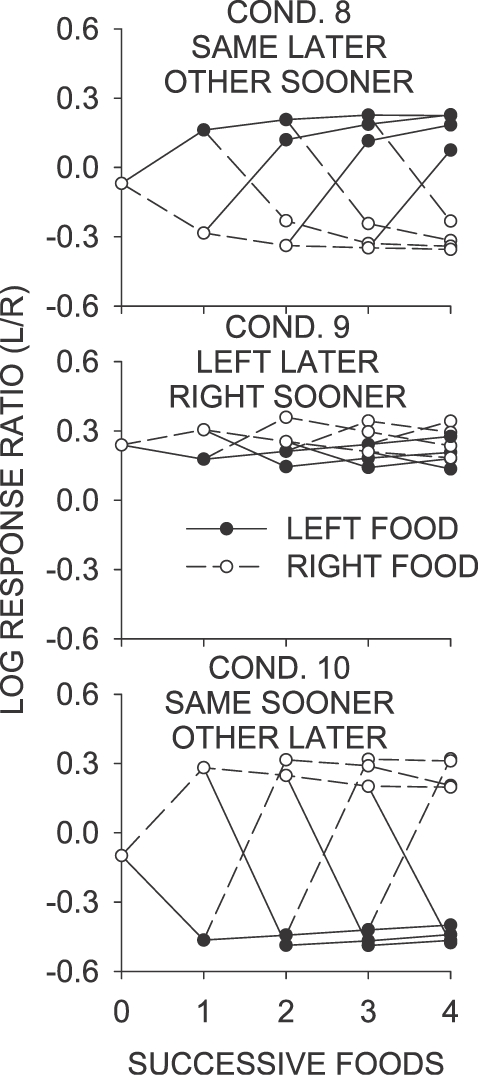

Figure 7 shows tree analyses for selected sequences of food deliveries obtained in a condition, for Conditions 8 to 10. When the signaled same–later contingencies were in operation (Condition 8), the change in preference that accompanied the first food delivery in a sequence was much greater than in any Phase 1 conditions. Discontinuations again had the greatest effect on preference, moving it to a level slightly less than that of a continuation of the same length on the other key. Unlike the unsignaled same–later conditions (Conditions 5, 7 and 11), food deliveries in the signaled same–later condition were followed by a change in preference toward the just-productive key, reflecting the increased preference for the just-productive (later-food) alternative later in the interfood interval.

Fig 7.

Phase 2. Mean log response ratio within interreinforcer intervals as a function of successive food deliveries from left and right keys. See legend to Figure 4.

In the signaled left–later condition (Condition 9), overall preference was toward the left key, a pattern similar to that observed in the unsignaled left–later condition (Condition 6), which likely reflects a tendency to spend more time responding on the left key. As in the unsignaled same–later conditions (Conditions 5, 7 and 11), but unlike the unsignaled left–later condition (Condition 6), continued left- or right-key food deliveries after the first moved choice toward the key on which the food had been obtained, although the amount of change was slight. Discontinuations had a larger effect, moving choice toward the key that did not provide the last food delivery; for example, a left food delivery followed by a right food delivery produced a strong left-key choice, whereas a right food delivery followed by a left food delivery resulted in a smaller left-key choice.

The pattern of choice changes in the signaled same–sooner condition (Condition 10) was the reverse of that in the unsignaled same–sooner condition (Condition 2): food on either key produced a strong choice for the other key over the next interfood interval. Again, this difference is attributable to the differences in the pattern of interfood choice, in particular the later stabilization of preference at a point beyond indifference in the signaled same–sooner condition, toward the not-just-productive (later-food) alternative. In contrast, local preference in the unsignaled same–sooner condition stabilized close to indifference, with extreme preference occurring only in the first bins for the just-productive alternative. Thus, in the unsignaled same–sooner condition, more time was spent responding on the just-productive (sooner) alternative, and the pattern of choice on a more extended level was thus reversed. However, continued foods on a key did produce a small but consistent change in choice toward that key, as observed in the unsignaled same–sooner condition.

In summary, the addition of a stimulus that signaled the location of the last food delivery had two main effects discernible at the most local level of analysis: heightened postfood preference for the next-sooner key, and extreme preference for the next-later key at increased time since the last food delivery. As would be expected, these effects were generally present only when the contingency was such that the location of the next-sooner schedule depended on the last-food location. These effects of the signaling stimuli were also observable in analyses of the effects of sequences of food deliveries, and are likely responsible for the majority of differences between the tree analyses for the signaled versus unsignaled conditions.

DISCUSSION

A number of replications were conducted in this experiment (see Figure 2): Condition 3 was the same as Condition 1 (both schedules VI 27 s), but did not produce an exact reproduction of the results, in that small postfood preference pulses lasting about 6 s occurred in Condition 3, but not in Condition 1. The difference was largely due to Pigeon 22, the only pigeon that showed strong differential choice in Condition 3. Condition 7 (same–later) was a replication of Condition 5, and was done to investigate whether the considerable control over postfood choice in Condition 6 (left–later) had any lasting effect on choice. Again, the result was not identical, but first-bin choice given left and right food deliveries in Condition 7 differed directionally in the same way as in Condition 5. The conditions were replicated again in Condition 11, and the near-identity of the results of Conditions 7 and 11 suggests that it is those of Condition 5, not Condition 7, that are anomalous. Condition 5 may have been affected by the prior Condition 4, which arranged a left–sooner contingency. Condition 11 was conducted after Phase 2 to check whether the Phase 2 conditions had any lasting effect on postfood choice, and, as it provided an almost perfect reproduction of the choice in Condition 7, it appears there was no lasting effect.

In Phase 1 of the present experiment, food deliveries signaled subsequent contingencies of reinforcement in varying ways, but the key on which the just-obtained food had occurred was not separately signaled. In Conditions 1, 3, 4 and 6, the last-food location was unrelated to the location of the next-sooner schedule, while in Conditions 2, 5, 7 and 11, left- and right-key food deliveries differentially signaled the locations of the next-sooner food. The contingencies signaled by food delivery were either nondifferential with respect to the sooner food (the same mean times for both keys; Conditions 1 and 3), or were differential with respect to the next-sooner food (different contingencies for each key; Conditions 2, 4, 5, 6, 7 and 11).

As a general conclusion, local choice was jointly controlled by the probability of obtaining food at a location at a given time, by the complexity of the contingency, and apparently by decaying control over time by the last-food location. When the food locations were nondifferential signals, and the subsequent contingencies were nondifferential (when the likelihood of obtaining a food on the left or right key was similar; Conditions 1 and 3), there were virtually no preference pulses (Figure 3). When only the key position signaled the next sooner-food location (Conditions 4, left sooner, and 6, right sooner), postfood preference pulses were strongly toward the key providing the likely sooner food as signaled by the time since the last food delivery, and control by obtained local food ratios lasted throughout the IRI (compare Figures 2 and 3). When left and right food deliveries signaled different postfood contingencies (Condition 2, same–sooner), preference pulses were smaller than in Conditions 4 and 6, and control by the local food ratio was observed only for about 10 s after either food delivery. When left and right food deliveries not only signaled different contingencies, but each signaled contingencies favoring the other key sooner (Conditions 5, 7, and 11), preference pulses were small, and were caused mainly by one individual pigeon in Conditions 5 and 7 (Appendix Figures A5 and A7). Thus it appears that postfood preference was controlled by the contingencies signaled by prior food location, but that the degree of control depended on the complexity of the stimulus–behavior–reinforcer contingency. Control was greatest when any food signaled sooner foods on a particular key, less when food signaled sooner food on the same key, less again when food signaled sooner food on the other key, and absent when food was not a differential signal for the next likely sooner food.

The present results are important in showing that a changeover delay (Herrnstein, 1961) is not necessary for preference pulses to develop. However, the arrangement of a changeover delay may be sufficient for preference pulses because such procedures do naturally affect the local probability of food delivery on two keys. If, as appears common, a food delivery on a key leaves the changeover delay satisfied, the obtained food ratio will be infinitely toward the just-reinforced key during the changeover-delay period, as no food can be obtained on the other key, even if arranged, during this time—although the overall food rate in the changeover-delay period will be lowered. Given the results reported here, such an immediate local food differential signaled by prior food would strongly affect local choice.

Reinforcement effects may be thought of as local or as extended. Local reinforcement effects would be shown by choice being enhanced toward the just-productive key over the next interfood interval. Extended effects can be thought of as a similar differential choice but across continuations of same-key food deliveries (Figure 4). First, local reinforcement effects are discussed. In Phase 1 conditions in which it was possible to see differential interfood choice (Figure 3, in particular the left–sooner and left–later Conditions 4 and 6), the location of the just-productive key biased choice toward that key over the next interreinforcer interval, suggesting a local reinforcement effect. But this was evident in only a transient way (the first 6 s) in Conditions 1 and 3, in which it might have been expected to have occurred more strongly because there could be no signaling effect of the last food delivery.

A method of calculating the size of reinforcement effects is to take the following measure:

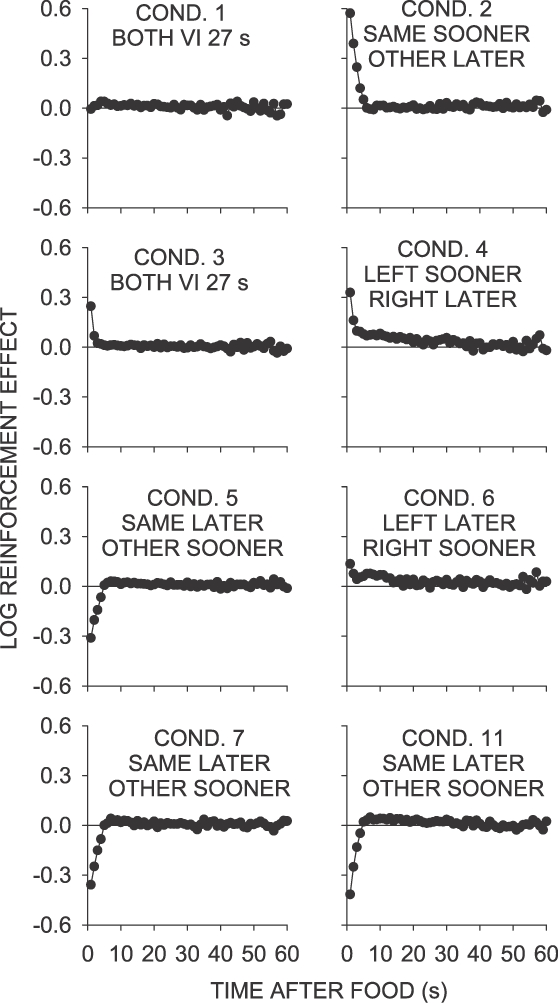

where B and R refer to responses and reinforcers respectively, and L and R to the left and right keys respectively. Notice the similarity of this measure to measures taken in conditional-discrimination analyses (e.g., Davison & Nevin, 1999), with RL and RR replacing S1 and S2. The measure, applied to responses in each 2-s time bin, provides an estimate of the local reinforcement effect independent of the signaling effect of the prior food delivery. Where the measure is zero, the pigeon emitted equal numbers of responses to the two keys regardless of where the last food was delivered; thus, no signaling or reinforcer effects were present. When the measure is positive, more responses were emitted on the just-productive key than on the not-just-productive key, indicating a reinforcement effect. When the measure is negative, more responses were emitted on the not-just-productive key, indicating a signaling (or location) effect. This analysis is shown in Figure 8. The measure showed that in Conditions 2, 3, 4, and 6, there was a small, transient enhancement in preference to the just-productive key (reinforcer effect), followed, in the same–sooner and VI 27-s conditions (Conditions 2 and 3) by a fall to zero (equal responding on both keys)—thus, the reinforcement effect did not last. In the left– and right–sooner conditions (Conditions 4 and 6), the local increase in preference to the just-reinforced key lasted longer than in Conditions 2 and 3, being maintained for 20 to 40 s after the last food delivery. However, these effects were smaller in magnitude than in Conditions 2 and 3.
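Because the equation itself appears only as an image in this text, the following sketch assumes a form for the measure rather than reproducing it. The assumed form is modeled on the log d measure of conditional-discrimination analyses mentioned above, with the last-food location taking the place of the sample stimulus: half the log of the ratio (B_L after R_L × B_R after R_R) / (B_R after R_L × B_L after R_R). Under that assumption the measure is zero when the response ratio does not depend on the last-food location, positive when responding favors the just-productive key, and negative when it favors the not-just-productive key, which matches the verbal description. The function and argument names are hypothetical.

```python
import math


def local_effect(bl_after_l, br_after_l, bl_after_r, br_after_r):
    """Assumed log-d-style measure for one time bin.

    bl_after_l: left-key responses in the bin following a left food
    br_after_l: right-key responses in the bin following a left food
    bl_after_r: left-key responses in the bin following a right food
    br_after_r: right-key responses in the bin following a right food

    Returns None when any count is zero, because the measure is then
    not finite. The formula is an assumption, not the paper's equation.
    """
    counts = (bl_after_l, br_after_l, bl_after_r, br_after_r)
    if min(counts) <= 0:
        return None
    stay = bl_after_l * br_after_r    # responses on the just-productive key
    switch = br_after_l * bl_after_r  # responses on the other key
    return 0.5 * math.log10(stay / switch)


no_effect = local_effect(10, 10, 10, 10)          # same ratio after L and R
stay_bias = local_effect(20, 10, 10, 20)          # favors just-productive key
switch_bias = local_effect(10, 20, 20, 10)        # favors the other key
```

Applying such a function to the response counts in each successive 2-s bin would give a curve of the kind plotted in Figure 8, to the extent that the assumed form matches the measure actually used.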

Fig 8.

Calculated effects of left and right food deliveries across 60 s following those deliveries for selected Phase 1 conditions. See text for further explanation.

The left– and right–sooner conditions (Conditions 4 and 6) were the only conditions in Phase 1 in which choice in interreinforcer intervals continued to favor the just-productive key well into the interreinforcer interval, as shown by the log response ratios following a left food delivery being consistently more to the left key than those following a right food delivery (Figure 3). Since this effect only occurred in these two conditions, it does not appear to be a “reinforcement” effect; rather, it must be due to some other cause specific to these conditions, perhaps related to the excellent control by the time since the last food delivery across the interfood interval.

Figure 8 shows that, in the same–later conditions (Conditions 5, 7 and 11), no effect of prior food (reinforcement effect) was present, due to the strong signaling properties of the reinforcers. Postfood choice was driven toward the not-just-reinforced key over the first 10 s of the interfood interval; after this 10-s period, very little signaling effect was evident. Thus, if there was a small transient reinforcement effect, it was easily overcome by strong signaling effects of foods when these favor changing between keys. Perhaps the pure “reinforcement” effect is simply a bias to continue emitting the same behavior that just paid off, easily overcome when the subsequent contingencies favor just one of the two keys (Conditions 4 and 6), and when they favor changing to the other key.

Was there evidence of extended reinforcer effects occurring across successive reinforcers? Figure 4 shows that successive same-key food deliveries (continuations) did progressively increase preference for a key in Conditions 3, 4 and 6, although the changes were generally small even after four successive reinforcers, and much smaller than found in procedures in which reinforcer ratios frequently changed across relatively short components (e.g., Davison & Baum, 2002). However, the same–later conditions (Conditions 5, 7 and 11) gave a very different pattern of choice across continuations: The pattern is alternation, as would be expected given the preference-pulse results. While a single left food moved choice toward the right key, successive reinforcers on the left key produced a pattern of progressively weaker right-key choice. This pattern was exactly reversed after right-key foods. The pattern of decreasing other-key choice across successive food deliveries thus may constitute a small, but consistent, reinforcement effect. The increase in preference toward the just-reinforced key across successive food deliveries in all Phase 1 conditions appears to be clear evidence of extended reinforcement effects across continued food deliveries on keys.

In summary, there was little evidence of a reinforcement effect within interreinforcer intervals, in which choice was controlled strongly by the signaling effects of food deliveries, but, rather, evidence of a reinforcement effect operating across successive food deliveries on a key. However, even this effect might also be a discriminative, rather than a reinforcement, effect, because continued foods at a key will begin to undermine the arranged contingencies (e.g., other–sooner) in some conditions. In the same–later conditions (Conditions 5, 7, and 11), extended continuations of food deliveries on a key might begin to signal more frequent (but more delayed) food deliveries on the same key. However, in the left–sooner condition (Condition 4), continued left-key food deliveries could signal a high frequency of short-delayed food deliveries, and continued right-key food deliveries could signal a high frequency of long-delayed food deliveries (Condition 6 being the inverse of this). Under these conditions, choice moved toward the alternative on which the continuations occurred; that is, choice moved toward the key that appeared to be providing more food deliveries. In the same–sooner condition (Condition 2), choice also moved toward the key that had recently provided more food deliveries, but in this case, the food availabilities were at the higher rate on both keys, and choice changes were more extreme than in the left–sooner or left–later conditions (Conditions 4 and 6). What is surprising is that continued lower-rate food deliveries changed overall choice about the same amount as continued higher-rate food deliveries in Conditions 4 and 6 (that is, the preference trees for these conditions were symmetric) when high-rate food continuations had a greater effect on choice in Condition 2.
However, in all conditions, continued food deliveries on a key constituted training for “stay at a key” independent of the time to the next food, choice coming more under control of food frequency than of the expected times to the next food on keys.

Clearly, locally, when food on either key signaled no differential reinforcer ratios over time since food (Conditions 1 and 3), there could be, and was, very little signaling effect of food. When the VI 5-s and VI 50-s schedules remained on a particular key for the duration of a condition (left–sooner or right–sooner; Conditions 4 and 6), changes in choice closely followed changes in the obtained log food ratio for the duration of the interfood interval, and thus there was a strong signaling effect that spanned the whole of the next interfood interval. When food deliveries on the left and right keys signaled subsequent reinforcer ratios that followed the location of the last food (same–sooner; Condition 2), the signaling effect was smaller and lasted only 8 to 10 s. Thereafter, log response ratios deviated from the obtained log food ratio to settle at a level reflecting the sessional mean food ratio. Finally, when food deliveries on the left and right keys signaled subsequent reinforcer ratios that were opposite from the last-food location (same–later; Conditions 5, 7, and 11), the signaling effect was transient, producing brief but consistent preference for the not-just-reinforced key, a clear, albeit transitory, signaling effect.

Thus, only when any food signaled that reinforcers would likely follow sooner on a key independent of the last-reinforcer location, did the signaling effect last the whole interreinforcer interval. Of course, only in the left–sooner and left–later conditions (Conditions 4 and 6; ignoring Conditions 1 and 3) was the last reinforcer location irrelevant to how reinforcer ratios changed in the next interreinforcer interval—all that was relevant was the time elapsed since the last food delivery.

Phase 2 of this experiment asked whether signaling the last food location would enhance control over choice across the next interreinforcer interval, presumably making conditions in which the last-food location was relevant, more like Conditions 4 and 6. The results from Phase 2 supported this interpretation. The addition of the signal for the last-food location did not change the way interreinforcer choice changed in the signaled left–later condition (Condition 9), which was similar to Condition 6—in Condition 9, the signal for the last-food location would be irrelevant. But in Conditions 8 (equivalent to Conditions 5, 7 and 11) and 10 (equivalent to Condition 2), choice was under precise control of the obtained food ratios across the interreinforcer interval. In particular, choice following a left or right food delivery in Condition 8 strongly favored initial preference to the right or left keys respectively, and preference changes in this condition were almost, but not quite, the inverse of those in Condition 10.

The reasons for the patterns of choice changes across successive food deliveries in Phase 2 (Figure 7) seem less obvious; for instance, the pattern in Condition 8 (same–later) was similar to that seen in Condition 2 (same–sooner; Figure 4). They can, however, be understood by looking at the mean interreinforcer choice shown in Figures 3 and 6. Mean interfood choice depends on the relative number of responses on the left and right keys across the whole interfood interval. In Condition 2, and more so in Condition 8, more responses were emitted on the left key following a left-key food delivery than on the left following a right-key food delivery simply because more of the interreinforcer interval was spent choosing left after a left food delivery. In Condition 2, this was because of the immediate postfood pulses; in Condition 8, it was because more time was spent responding on the left key after a left food delivery toward the end of the interreinforcer interval. As a result, the preference tree for Condition 8 appears to favor responding left after a left food delivery, even though the immediate postfood preference was to the right key. The reverse is true of Condition 10 (same–sooner), in which the preference trees suggest preference for the left key after a right food delivery. Condition 9 (left–later) gave a similar preference tree to Conditions 5, 7 and 11 (same–later) for a similar reason: For example, following either a left or right food delivery, preference transiently moved to the right key, but more responses were emitted to the left key over the interreinforcer interval. This difference provided the strong overall preference for the left key (the later reinforcer) in Conditions 6 and 9. However, the mean interreinforcer choice following a left food delivery in Condition 9 was more to the left, and following a right reinforcer was more to the right, giving the preference-tree structure shown in Figure 7, with this pattern reversed in Condition 6.
Hence, the differences in tree structure are explicable. However, it is worth highlighting that preference trees can clearly provide confusing information when choice changes across interreinforcer intervals. It would be possible to interpret the Condition 8 preference tree as suggesting that only in this same–later condition did successive food deliveries from a key enhance choice toward that key—as indeed it did, in one sense—but this conclusion would rest on the different average times to food, and would not reflect the precise control by the location of the last food delivery and the time since the last food delivery shown in local choice within the interreinforcer intervals. An extended-level example makes this problem clearer: If VI 5-s VI 300-s schedules had been arranged on the keys in Condition 9, preference within the interreinforcer intervals would have looked similar, but the interfood preference toward the right, later, key would have been considerably greater than in VI 5 s VI 50 s because of the greater amount of the interreinforcer interval spent responding on the right key. Clearly, overall preference in such situations will be controlled by the inverse of the overall reinforcer-frequency ratio, a result which appears incompatible with the generalized matching law (Baum, 1974). Thus, extended measures of choice, be they mean choice across interreinforcer intervals or choice across whole sessions, fail to represent choice accurately when there is local control of choice within interreinforcer intervals.
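The arithmetic behind this point can be illustrated with a deliberately simplified sketch. It assumes, purely for illustration, that responding occurs at a constant rate, entirely on the sooner key for the first few seconds after food and entirely on the later key thereafter; the switch time and the interval durations below are hypothetical values, not data from the experiment.

```python
import math


def extended_log_ratio(iri_durations, switch_time, peck_rate=1.0):
    """Log10 (sooner-key / later-key) responses pooled over intervals.

    Assumes all responding is on the sooner key until switch_time seconds
    after food, and on the later key for the rest of each interval.
    """
    sooner = sum(min(d, switch_time) for d in iri_durations) * peck_rate
    later = sum(max(d - switch_time, 0.0) for d in iri_durations) * peck_rate
    if sooner == 0 or later == 0:
        return None
    return math.log10(sooner / later)


# Same local pattern (switch at 6 s), different spread of interval lengths.
short_intervals = [4.0, 10.0, 30.0]    # hypothetical, shorter later schedule
long_intervals = [4.0, 10.0, 120.0]    # hypothetical, longer later schedule

short_pref = extended_log_ratio(short_intervals, switch_time=6.0)
long_pref = extended_log_ratio(long_intervals, switch_time=6.0)
```

Under these assumptions both extended measures are negative (toward the later key), and the measure becomes more negative as the later schedule lengthens, even though local choice within each interval is identical in the two cases.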

The present results showed remarkably good control by time, up to 60 s, since food in Conditions 4, 6, 8, 9 and 10—conditions in which either the postfood reinforcer ratios were simply located, or in which the location of the last food delivery was signaled throughout the interval. For example, in Condition 10, at 60 s after food, preference was strongly to the right key when the left was lit red, and strongly to the left key when the right key was red. But the present experiment was not designed to investigate timing, and ought not be used for this purpose. It should be mentioned, however, that the present results do raise questions about food contingencies and signals in timing research—an aspect of control that has been rather neglected in that area.

What do the present results tell us about preference pulses—periods of increased preference following food deliveries, usually but not invariably toward the key that just provided food? They likely arise when food deliveries themselves, or other stimuli, signal immediate local deviations of food ratios from the overall or sessional ratio arranged—and such local deviations will be directly produced by the arrangement of changeover delays or changeover ratios. Thus, Krägeloh and Davison (2003) found that postfood preference pulses were absent when no changeover delay was arranged. Further, Davison, Elliffe and Marr (2010) found no postfood preference pulses in conventional concurrent VI VI schedule performance when no changeover delay was arranged (Phase 1)—they presented no preference-pulse analyses simply because no pulses were present. Preference pulses thus do not measure the effects of the just-received food, but rather the effects of the obtained food ratio following food. Furthermore, this effect is dynamic, because enhanced preference to a key drives obtained food ratios in the direction of the local preference. Preference following food is controlled by time signaling local food ratios—and, inasmuch as local food ratios are consonant with the likely location of food at the end of an interfood interval, they may signal the next food location. It is this latter effect that may produce changing interfood preference across continuations of food deliveries on a key. It is apparent that food reinforcement does not produce changes in choice by increasing prior behavior—rather, the contingencies signaled by the food delivery itself, and by the time since the food delivery, drive the behavior. It remains the case that, if food signals more food at a location, preference for that location will increase as implied by the law of effect. 
The caveat is that food may not always signal more food at a key, but may signal more food at a different key—each food delivery signals the start of a time period with a changed food ratio, and this food-ratio change can be further amplified by a reversal of preference.

In summary, food delivery at a response alternative can control local choice when contingencies systematically change after such food deliveries, and we need to be aware of such signaling effects. It is not known to what extent such effects have occurred in data on choice that have been reported. Take, for example, concurrent VI 5-s VI 100-s performance. The degree of signaling that may occur will depend on how the schedules are arranged. With nonindependent exponential (also termed constant-probability, or random-interval) schedules, the expected time to the next food delivery does not change with time since food. Thus, any food delivery signals a 5-s average interval on one key, and a 100-s average interval on the other. The situation will be similar to Condition 4 (left sooner, right later) here. It would be expected that preference immediately after any food delivery would be to the VI 5-s key, but would not subsequently increase to the right key because expected times to food remain constant. But other ways of arranging concurrent VI VI schedules will give subtly different effects: For example, if the interreinforcer intervals are arranged using randomized lists of either exponentially or arithmetically determined intervals, expected times to food will not stay constant over time since food, and a reversal of preference may follow the initial preference pulse as in Conditions 4 and 6. Such a reversal appears to be a recipe for extended-level undermatching (Baum, 1974). However, it is beyond the scope of this paper to consider the theory of these differences, and arguably an empirical approach to determine whether food deliveries do act as signals in such situations is preferable.
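The contrast between constant-probability schedules and schedules drawn from a finite list of intervals can be made concrete with a small calculation. For a constant-probability (exponential) schedule, the expected remaining time to food equals the schedule mean at every elapsed time. For a list of intervals sampled without bias, the expected remaining time depends on which intervals can still be in progress. The sketch below computes the latter; the five-interval arithmetic list is a hypothetical example and not a schedule used in this experiment.

```python
def expected_remaining(intervals, elapsed):
    """Expected further wait for food, given that `elapsed` seconds have
    passed without food, when the interval in progress is equally likely
    to be any list member longer than `elapsed`.

    Returns None once `elapsed` reaches the longest interval.
    """
    remaining = [x - elapsed for x in intervals if x > elapsed]
    if not remaining:
        return None
    return sum(remaining) / len(remaining)


# Hypothetical arithmetic VI list with a mean of 5 s.
arithmetic_list = [1, 3, 5, 7, 9]

at_food = expected_remaining(arithmetic_list, 0)     # all intervals possible
mid_interval = expected_remaining(arithmetic_list, 4)  # only 5, 7, 9 remain
late_interval = expected_remaining(arithmetic_list, 8)  # only 9 remains
```

With this list the expected remaining time falls as time since food increases, so time since food carries information about the local food ratio when two such schedules run concurrently, which is the condition under which a reversal of preference within the interfood interval might be expected.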

It is apparent, however, that control by food delivery can occur in some concurrent schedules. For example, White and Davison (1973) investigated performance on concurrent fixed-interval (FI) FI schedules and reported that FI scallops developed on both keys under some conditions, on just one key under others, and on neither key under still others. The scalloped patterns clearly show control by changing food ratios across time since food on a key. Additionally, the results of Krägeloh et al. (2005) showed that prior food location can control subsequent interreinforcer choice when food signals particular conditional probabilities of subsequent food locations. It is clear that signaling effects of food can occur when conditions support them. The question is under what conditions the signaling effect becomes important and overrides any purely reinforcing (or response-continuation) effects.

Acknowledgments

We thank the members of the Auckland University Experimental Analysis of Behaviour Research Unit for their help in conducting this experiment, which will form part of Sarah Cowie's doctoral dissertation. We also thank Mick Sibley for looking after the pigeons.

Appendix A

Fig A1.

Phase 1, Condition 1. Log response ratio as a function of time since food, and mean extended-level preference, for Pigeons 21 to 26 according to the location of the last food delivery. The data are the last 65 sessions of this condition.

Fig A2.

Phase 1, Condition 2. Log response ratio as a function of time since food, and mean extended-level preference, for Pigeons 21 to 26 according to the location of the last food delivery. The data are the last 65 sessions of this condition. The initial point for Pigeon 22 fell off the graph.

Fig A3.

Phase 1, Condition 3. Log response ratio as a function of time since food, and mean extended-level preference, for Pigeons 21 to 26 according to the location of the last food delivery. The data are the last 65 sessions of this condition.

Fig A4.

Phase 1, Condition 4. Log response ratio as a function of time since food, and mean extended-level preference, for Pigeons 21 to 26 according to the location of the last food delivery. The data are the last 65 sessions of this condition. Some initial points fell off the graphs.

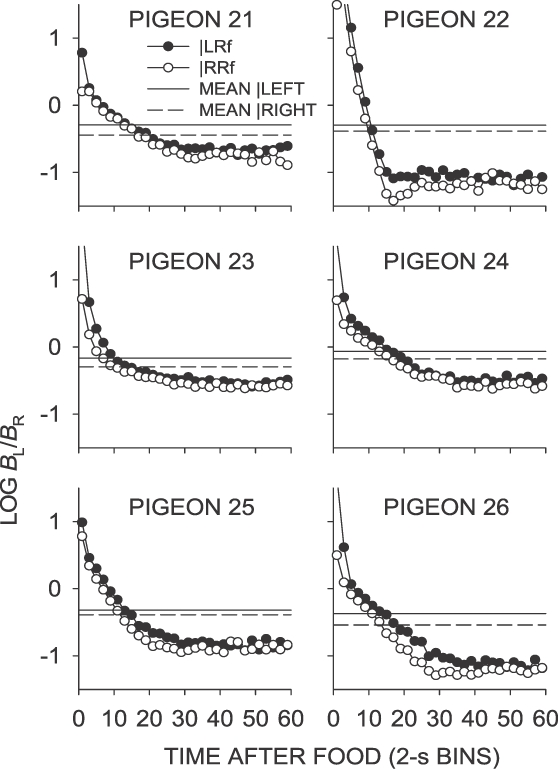

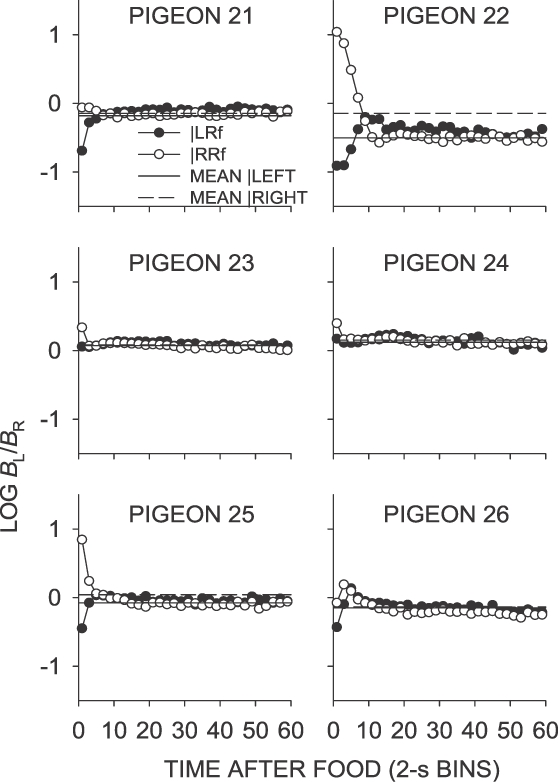

Fig A5.

Phase 1, Condition 5. Log response ratio as a function of time since food, and mean extended-level preference, for Pigeons 21 to 26 according to the location of the last food delivery. The data are the last 65 sessions of this condition.

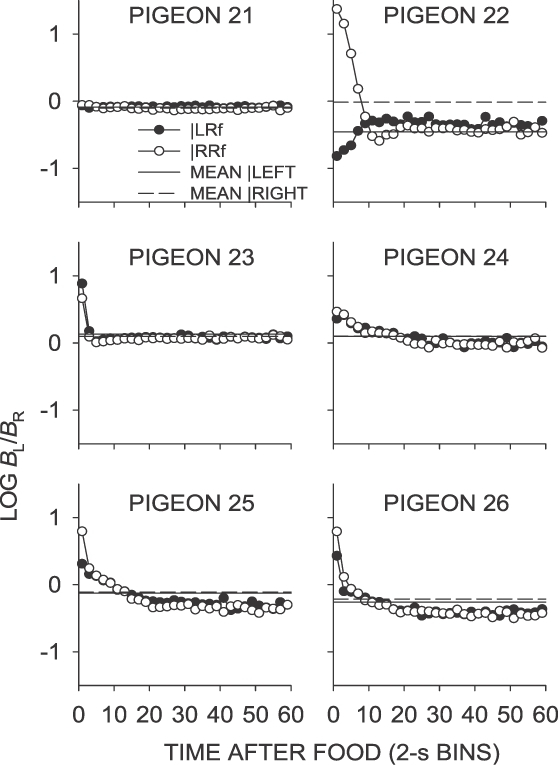

Fig A6.

Phase 1, Condition 6. Log response ratio as a function of time since food, and mean extended-level preference, for Pigeons 21 to 26 according to the location of the last food delivery. The data are the last 65 sessions of this condition.

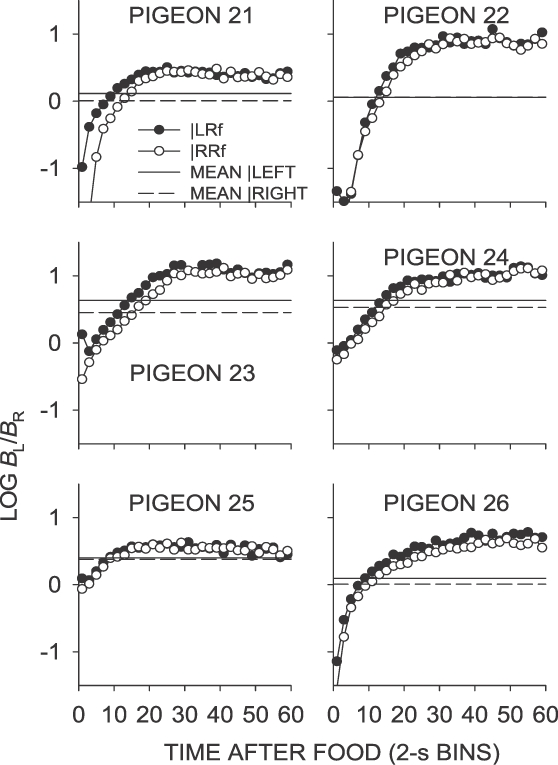

Fig A7.

Phase 1, Condition 7. Log response ratio as a function of time since food, and mean extended-level preference, for Pigeons 21 to 26 according to the location of the last food delivery. The data are the last 65 sessions of this condition.

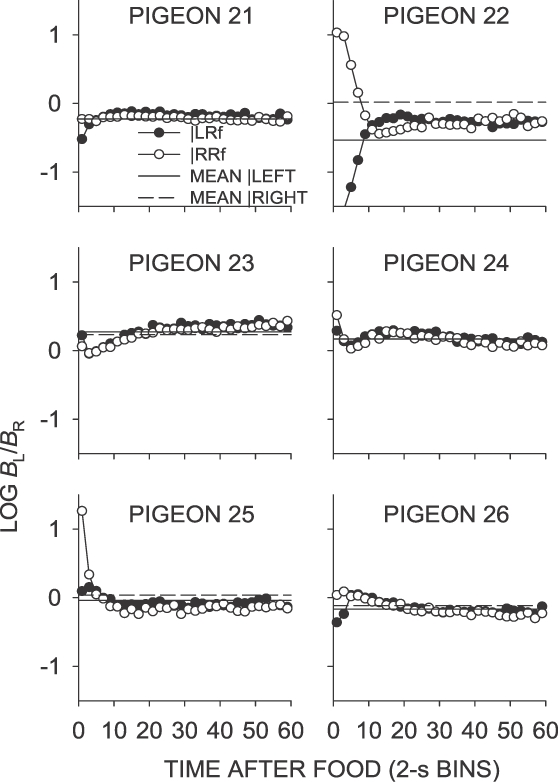

Fig A8.

Phase 1, Condition 11. Log response ratio as a function of time since food, and mean extended-level preference, for Pigeons 21 to 26 according to the location of the last food delivery. The data are the last 65 sessions of this condition.

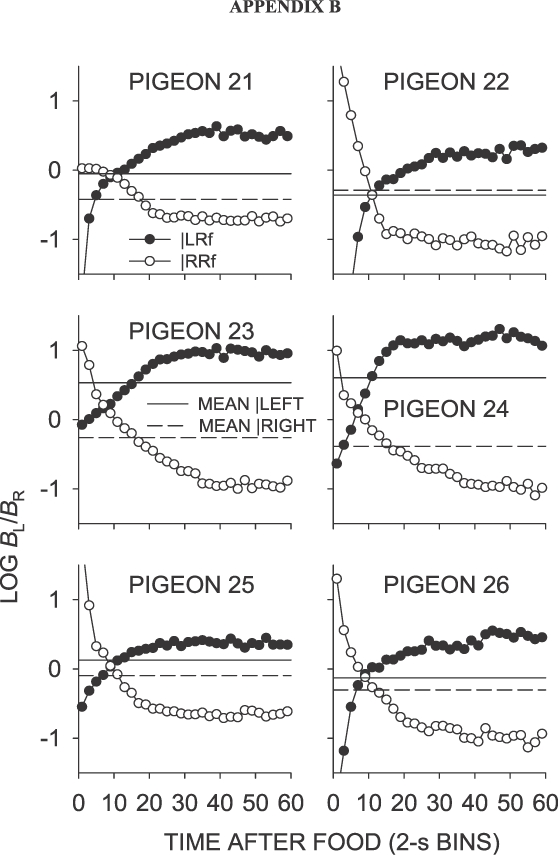

Appendix B

Fig B1.

Phase 2, Condition 8. Log response ratio as a function of time since food, and mean extended-level preference, for Pigeons 21 to 26 according to the location of the last food delivery. The data are the last 65 sessions of this condition. The initial point for Pigeon 22 fell off the graph.

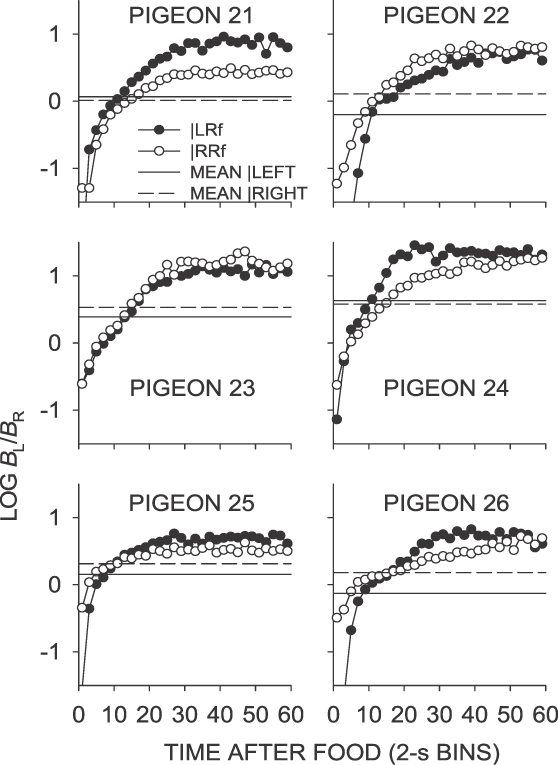

Fig B2.

Phase 2, Condition 9. Log response ratio as a function of time since food, and mean extended-level preference, for Pigeons 21 to 26 according to the location of the last food delivery. The data are the last 65 sessions of this condition.

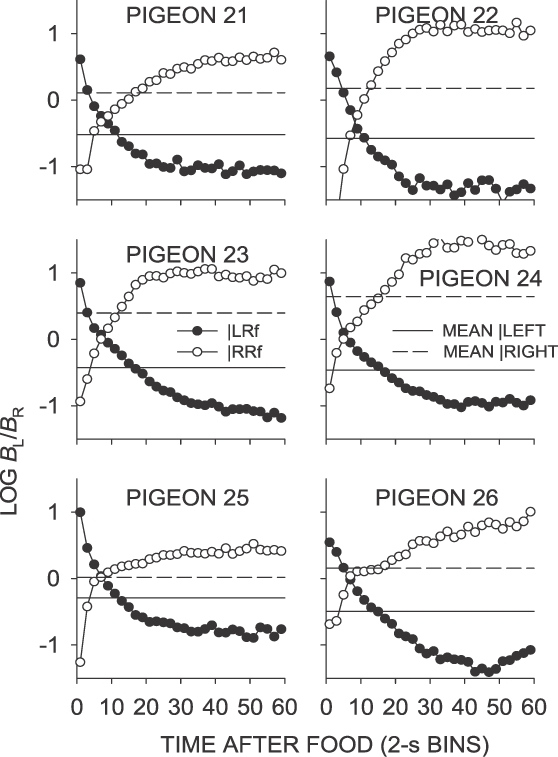

Fig B3.

Phase 2, Condition 10. Log response ratio as a function of time since food, and mean extended-level preference, for Pigeons 21 to 26 according to the location of the last food delivery. The data are the last 65 sessions of this condition.

REFERENCES

- Baum W.M. On two types of deviation from the matching law: bias and undermatching. Journal of the Experimental Analysis of Behavior. 1974;22:231–242. doi: 10.1901/jeab.1974.22-231.

- Boutros N, Davison M, Elliffe D. Conditional reinforcers and informative stimuli in a constant environment. Journal of the Experimental Analysis of Behavior. 2009;91:41–60. doi: 10.1901/jeab.2009.91-41.

- Boutros N, Davison M, Elliffe D. Contingent stimuli signal subsequent reinforcer ratios. Journal of the Experimental Analysis of Behavior. 2011;96:39–61. doi: 10.1901/jeab.2011.96-39.

- Catania A.C, Reynolds G.S. A quantitative analysis of the responding maintained by interval schedules of reinforcement. Journal of the Experimental Analysis of Behavior. 1968;11:327–383. doi: 10.1901/jeab.1968.11-s327.

- Church R.M, Lacourse D.M. Temporal memory of interfood interval distributions with the same mean and variance. Learning and Motivation. 2001;32:2–21.

- Davison M, Baum W.M. Choice in a variable environment: Every reinforcer counts. Journal of the Experimental Analysis of Behavior. 2000;74:1–24. doi: 10.1901/jeab.2000.74-1.

- Davison M, Baum W.M. Choice in a variable environment: Effects of blackout duration and extinction between components. Journal of the Experimental Analysis of Behavior. 2002;77:65–89. doi: 10.1901/jeab.2002.77-65.

- Davison M, Baum W.M. Do conditional reinforcers count? Journal of the Experimental Analysis of Behavior. 2006;86:269–283. doi: 10.1901/jeab.2006.56-05.

- Davison M, Baum W.M. Local effects of delayed food. Journal of the Experimental Analysis of Behavior. 2007;87:241–260. doi: 10.1901/jeab.2007.13-06.

- Davison M, Baum W.M. Stimulus effects on local preference: Stimulus–response contingencies, stimulus–food pairing, and stimulus–food correlation. Journal of the Experimental Analysis of Behavior. 2010;93:45–59. doi: 10.1901/jeab.2010.93-45.

- Davison M, Elliffe D, Marr M.J. The effects of a local negative feedback function between choice and relative reinforcer rate. Journal of the Experimental Analysis of Behavior. 2010;94:197–207. doi: 10.1901/jeab.2010.94-197.

- Davison M, Nevin J.A. Stimuli, reinforcers, and behavior: An integration. Journal of the Experimental Analysis of Behavior. 1999;71:439–482. doi: 10.1901/jeab.1999.71-439.

- Elliffe D, Alsop B. Concurrent choice: Effects of overall reinforcer rate and the temporal distribution of reinforcers. Journal of the Experimental Analysis of Behavior. 1996;65:445–463. doi: 10.1901/jeab.1996.65-445.

- Herrnstein R.J. Relative and absolute strength of response as a function of frequency of reinforcement. Journal of the Experimental Analysis of Behavior. 1961;4:267–272. doi: 10.1901/jeab.1961.4-267.

- Krägeloh C.U, Davison M. Concurrent-schedule performance in transition: changeover delays and signaled reinforcer ratios. Journal of the Experimental Analysis of Behavior. 2003;79:87–109. doi: 10.1901/jeab.2003.79-87.

- Krägeloh C.U, Davison M, Elliffe D.M. Local preference in concurrent schedules: the effects of reinforcer sequences. Journal of the Experimental Analysis of Behavior. 2005;84:37–64. doi: 10.1901/jeab.2005.114-04.

- Olton D.S, Samuelson R.J. Remembrance of places passed: Spatial memory in rats. Journal of Experimental Psychology: Animal Behavior Processes. 1976;2:97–116. doi: 10.1037/0097-7403.33.3.213.

- Randall C.K, Zentall T.R. Win-stay/lose-shift and win-shift/lose-stay learning by pigeons in the absence of overt response mediation. Behavioural Processes. 1997;41:227–236. doi: 10.1016/s0376-6357(97)00048-x.

- Schneider B.A. A two-state analysis of fixed-interval responding in the pigeon. Journal of the Experimental Analysis of Behavior. 1969;12:677–687. doi: 10.1901/jeab.1969.12-677.

- Schneider S.M, Davison M. Molecular order in concurrent response sequences. Behavioural Processes. 2006;73:187–198. doi: 10.1016/j.beproc.2006.05.008.

- Skinner B.F. The behavior of organisms. New York: Appleton-Century; 1938.

- Thorndike E.L. Animal intelligence. New York: Macmillan; 1911.

- White A.J, Davison M.C. Performance in concurrent fixed-interval schedules. Journal of the Experimental Analysis of Behavior. 1973;19:147–153. doi: 10.1901/jeab.1973.19-147.