Abstract

Background

Behavioral intervention effectiveness in randomized controlled trials requires fidelity to the protocol. Fidelity assessment tools tailored to the intervention may strengthen intervention research.

Objective

To describe the assessment of fidelity to the structured intervention protocol in an examination of a nurse-delivered telephone intervention designed to improve medication adherence.

Method

Fidelity assessment included random selection and review of approximately 10% of the audiorecorded intervention sessions, stratified by interventionist and intervention session. Audiotapes were reviewed along with field notes for percentage of agreement, addressing whether key components were covered during the sessions. Visual analog scales were used to provide summary scores (0 = low to 5 = high) of interaction characteristics of the interventionists and participants with respect to engagement, demeanor, listening skills, attentiveness, and openness.

Results

Four nurse interventionists delivered 871 structured intervention sessions to 113 participants. Three trained graduate student researchers assessed 131 intervention sessions. The mean percentage of agreement was 92.0% (±10.5), meeting the criterion of 90% congruence with the intervention protocol. The mean interventionist interaction summary score was 4.5±0.4 and the mean participant interaction summary score was 4.5±0.4.

Discussion

Overall, the interventionists successfully delivered the structured intervention content, with some variability in both the percentage of agreement and quality of interaction scores. Ongoing assessment helps ensure fidelity to the study protocol and supports reliable study results.

Keywords: HIV infection, intervention fidelity, medication adherence

Well-designed randomized controlled trials (RCTs) are considered the gold standard for the development of efficacious health care interventions; however, inadequate methodological approaches to RCT planning and implementation can lead to biased treatment effects and inaccurate reporting and interpretation of study findings (Altman et al., 2001; Moher, Schulz, & Altman, 2001). One aspect of RCTs that affects the design, implementation, analysis, and valid interpretation of study findings is intervention fidelity (Bellg et al., 2004; Dumas, Lynch, Laughlin, Smith, & Prinz, 2001; Santacroce, Maccarelli, & Grey, 2004), defined as “the methodological strategies used to monitor and ensure the reliability and validity of behavioral interventions” (Bellg et al., 2004, p. 443). A lack of fidelity monitoring masks whether study findings are due to an ineffective treatment or infidelity to an intervention protocol (Planas, 2008).

Different components of intervention fidelity, such as process fidelity (the consistency with which intervention content was delivered; Dumas et al., 2001; Erlen & Sereika, 2006; Moncher & Prinz, 1991) and content fidelity (whether intervention components were delivered as prescribed; Dumas et al., 2001; Moncher & Prinz, 1991), have been reported, as well as various strategies for fidelity assessment, such as treatment manuals, real-time observation, or videotapes or audiotapes of treatment sessions (Bellg et al., 2004; Markowitz, Spielman, Scarvalone, & Perry, 2000; Resnick et al., 2005; Waskow, 1984). The use of multiple strategies and components has made comparison across studies difficult; however, the development of intervention fidelity assessment tools informed by prior research but tailored to a particular intervention has become necessary due to varying theoretical underpinnings of interventions (Song, Happ, & Sandelowski, 2010) and modes of delivery. For example, the SPIRIT Intervention Fidelity Assessment Tool was developed to address four components of intervention fidelity: overall adherence to the intervention content elements; pacing of the intervention delivery; overall dyad responsiveness; and overall quality index of intervention delivery (Song et al., 2010). In their assessment, interrater reliability ranged from 0.80 to 0.87 for the four components.

Because methods for fidelity assessment may change according to the nature of an intervention, the purpose of this presentation is to report the methods and findings of an intervention fidelity assessment tailored for a study examining a nurse-delivered telephone intervention for improving adherence to antiretroviral therapy (ART) for patients with HIV/AIDS.

Method

This report addresses assessment of fidelity to the structured intervention in a longitudinal RCT, “Improving Adherence to Antiretroviral Therapy” (2R01 NR004749; n = 349). The effectiveness of two nurse-delivered telephone interventions was compared to usual care and control for improving adherence for individuals with HIV/AIDS. Human subjects approval was obtained from the university institutional review board prior to study initiation.

Participants were recruited between 2003 and 2007 from sites of usual care in Western Pennsylvania and Eastern Ohio. Following informed consent, participants were assigned randomly to one of three groups: usual care and control, structured (scripted) intervention, or individualized (unscripted) intervention. Those randomized to an intervention received 12 weekly telephone interventions based on social cognitive and self-efficacy theories (Bandura, 1986, 1997) and six maintenance interventions tapered over 12 weeks to clarify, review, and support the medication-taking regimen. Following maintenance, participants were randomized on a 1:1 ratio to receive either three booster interventions for positive reinforcement over 8 weeks or no booster. Only the structured intervention is examined here, as the assessment of the individualized intervention based on patient needs requires a different approach.

Four baccalaureate-prepared, registered nurse interventionists delivered the structured intervention. To ensure they were at the same baseline prior to study initiation, nurses without prior experience in the care or research of individuals with HIV/AIDS were selected to deliver the interventions. They received extensive training, including intervention delivery, ethical concerns of research, and HIV/AIDS treatment, prior to delivering telephone interventions and on an ongoing basis. Due to staff turnover, two of the four interventionists were hired and trained after study start; however, their training was identical to that of the interventionists trained prior to study start.

Intervention Fidelity

Measures

Intervention fidelity measures were study-specific, guided by the literature, and included two components: content fidelity and quality of interaction (QOI), or the establishment of a therapeutic relationship between the participant and the interventionist. Content fidelity was chosen based on published reports of intervention fidelity assessment. Quality of interaction was selected due to the telephone-delivered nature of the intervention and the expected development of an interaction between the participant and the interventionist. These components were included in the intervention fidelity assessment plans of prior studies evaluating interventions for medication adherence at the School of Nursing.

Content fidelity was assessed by examining percentage of agreement (POA), which was calculated using an intervention review form (checklist) that captured whether key theoretical concepts of the structured intervention content were delivered using present or absent categories. For example, an intervention generally included an introduction, questions about the participant’s medications and use of adherence measures (microelectronic monitoring system [MEMS] and diary), a review of their homework, scripted content, participant reinforcement, and scheduling of the next session. Using the checklist, the reviewer determined whether the interventionist covered key applicable topics according to the structured intervention. The POA score was calculated by taking the total number of topics covered by the interventionist and dividing it by the number of total topics, then multiplying by 100. Though there is no established consensus as to what comprises an acceptable POA, the goal was 90%.
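
The POA calculation described above can be sketched in a few lines of Python. This is a minimal illustration, not the study's review form: the function name, the rating labels, and the example checklist are hypothetical, and excluding not-applicable items from the denominator is an assumption based on the phrase "key applicable topics."

```python
def percentage_of_agreement(ratings):
    """Return the percentage of applicable checklist topics rated 'present'.

    ratings: iterable of strings, each 'present', 'absent', or 'na'.
    Assumption: 'na' items are dropped from the denominator.
    """
    applicable = [r for r in ratings if r != "na"]
    if not applicable:
        raise ValueError("no applicable topics to score")
    unknown = set(applicable) - {"present", "absent"}
    if unknown:
        raise ValueError(f"unexpected rating labels: {sorted(unknown)}")
    covered = sum(1 for r in applicable if r == "present")
    return 100.0 * covered / len(applicable)


# Hypothetical session: 9 of 10 applicable topics covered, 1 not applicable.
example_session = ["present"] * 9 + ["absent"] + ["na"]
example_poa = percentage_of_agreement(example_session)
meets_goal = example_poa >= 90.0  # the study's stated goal was 90%
```

Keeping the threshold comparison outside the scoring function lets the same function be reused if a different POA goal is chosen.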

Quality of interaction was measured through use of a participant and interventionist interaction visual analog scale, which provided a global rating of the interaction characteristics for both the interventionist and the participant with respect to engagement, demeanor, listening skills, attentiveness, accuracy, openness, responsiveness, and understanding or appropriateness (Table 1). Individual characteristics were measured on a 0–1 continuous scale of absent to present, and then were summed for total interventionist and participant summary scores ranging from 0–5.
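
As a rough sketch of the summary scoring described above, the following Python function sums five characteristic ratings, each on the 0–1 scale, into a 0–5 summary score. The function name and the example ratings are hypothetical; the characteristic names follow the interventionist rows of Table 1.

```python
def qoi_summary_score(ratings):
    """Sum five 0-1 characteristic ratings into a 0-5 summary score.

    ratings: dict mapping characteristic name -> value in [0, 1].
    """
    if len(ratings) != 5:
        raise ValueError("expected exactly five characteristic ratings")
    for name, value in ratings.items():
        if not 0.0 <= value <= 1.0:
            raise ValueError(f"{name} rating {value} is outside the 0-1 scale")
    return sum(ratings.values())


# Hypothetical interventionist ratings for one reviewed session.
interventionist = {
    "engagement": 0.9,
    "demeanor": 1.0,
    "listening_skills": 0.8,
    "attentiveness": 0.9,
    "accuracy": 0.9,
}
score = qoi_summary_score(interventionist)  # approximately 4.5
```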

Table 1.

Interventionist and Participant Interaction Concepts and Descriptions

| Rating | Concept | Description |
|---|---|---|
| Interventionist | Engagement | Evidence of effective engagement of the interventionist in the intervention, appears to be trying to engage the participant. |
| | Demeanor | Positive, pleasant attitude and demeanor toward the participant, nonjudgmental responses. |
| | Listening Skills | Active listening, reflecting, and content that reflects the participant’s comments. |
| | Attentiveness | Attentiveness to the participant, free from other distractions, ability to keep intervention on task, appropriateness and effectiveness of interventionists in response to participant’s comments. |
| | Accuracy | Accuracy of information provided, adhered to intervention protocol content. |
| Participant | Engagement | Appears engaged with the interventionist and the process. |
| | Openness | Shares details of their lives pertinent to the conversation, demonstrates a level of trust with the interventionist. |
| | Responsiveness | Responsive to the interventionist and the content being offered, willingness to participate in process, asks questions. |
| | Attentiveness | Demonstrates listening to and hearing interventionist, appears to be free from other distractions. |
| | Understanding and Appropriateness | Appears to understand and comprehend information being provided, responds appropriately to topics introduced, stays on task. |

Pilot

Measures for assessing content fidelity (POA) and QOI were piloted in a review of 47 structured intervention sessions for four participants. This review included assessment of all intervention sessions that had been completed at the time of review and conducted by three of the four nurse interventionists; no maintenance or booster sessions were included. Overall, the mean POA for three nurse interventionists was 94.6% (Table 2). Although one session for one interventionist (Nurse A) revealed a POA in the 80s, overall, the data indicated that the interventionists successfully delivered intervention content, with some variability in the QOI (Table 3) among the nurses, among the participants, and between the nurses and the participants. As a result of the pilot, two significant changes were implemented. First, the pilot review included all intervention sessions (1–12, or as many as had been completed at the time of review) for a single participant. To be more representative of the study, sampling was changed to the random selection and review of 10% of the audiorecorded sessions in the intervention, stratified by interventionist and intervention session. Second, an interventionist might not address every aspect of an intervention because a participant may provide the information unsolicited, or a given topic may not be applicable. Therefore, the review form was revised to include a column for not applicable (NA), and a standard operating procedure was developed to ensure a systematic review process.
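
The revised sampling approach, a random 10% of sessions stratified by interventionist and intervention session, might be sketched as follows. This is an illustration under stated assumptions rather than the study's procedure: the record fields, the fixed seed, and the per-stratum allocation rule (10% of each stratum, rounded, with at least one session drawn) are all hypothetical choices that the source does not specify.

```python
import random
from collections import defaultdict


def stratified_sample(sessions, fraction=0.10, seed=0):
    """Randomly select about `fraction` of sessions within each stratum.

    A stratum is one (nurse, session number) pair. Assumption: each
    non-empty stratum contributes at least one session.
    """
    rng = random.Random(seed)  # seeded so the selection is reproducible
    strata = defaultdict(list)
    for record in sessions:
        strata[(record["nurse"], record["session"])].append(record)

    selected = []
    for key in sorted(strata):
        group = strata[key]
        k = max(1, round(fraction * len(group)))
        selected.extend(rng.sample(group, k))
    return selected


# Hypothetical sampling frame: 4 nurses x 12 sessions x 20 recordings each.
frame = [
    {"id": f"{nurse}-{number}-{i}", "nurse": nurse, "session": number}
    for nurse in "ABCD"
    for number in range(1, 13)
    for i in range(20)
]
sample = stratified_sample(frame)
```

Sampling within each stratum, rather than from the pooled list, guarantees that every interventionist and every session number appears in the reviewed set.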

Table 2.

Pilot-Mean Percentage of Agreement by Nurse Interventionist

| Interventionist | Number of Intervention Sessions Reviewed | Number of Maintenance Sessions Reviewed | Number of Booster Sessions Reviewed | M % (SD) |
|---|---|---|---|---|
| Nurse A | 1 | -- | -- | 84.6 (1) |
| Nurse B | 21 | -- | -- | 93.0 (6.6) |
| Nurse C | 25 | -- | -- | 96.6 (5.8) |
| Nurse D* | -- | -- | -- | -- |
| Total (s = 98) | 47 | -- | -- | 94.6 (6.5) |

Note. *Nurse D was not yet hired at the time of the pilot review.

Table 3.

Pilot-Mean Nurse and Participant Quality of Interaction Scores by Nurse Interventionist

| Interventionist | Nurse Interaction Score M (SD) | Participant Interaction Score M (SD) |
|---|---|---|
| Nurse Aᵃ | 3.5 (−) | 3.9 (−) |
| Nurse B | 4.2 (0.3) | 3.9 (0.1) |
| Nurse C | 4.4 (0.1) | 4.3 (0.1) |
| Nurse Dᵇ | -- | -- |

Notes. ᵃ Standard deviation was not calculated since only one session was reviewed for this interventionist; ᵇ Nurse D was not yet hired at the time of the pilot review.

Sampling and procedure

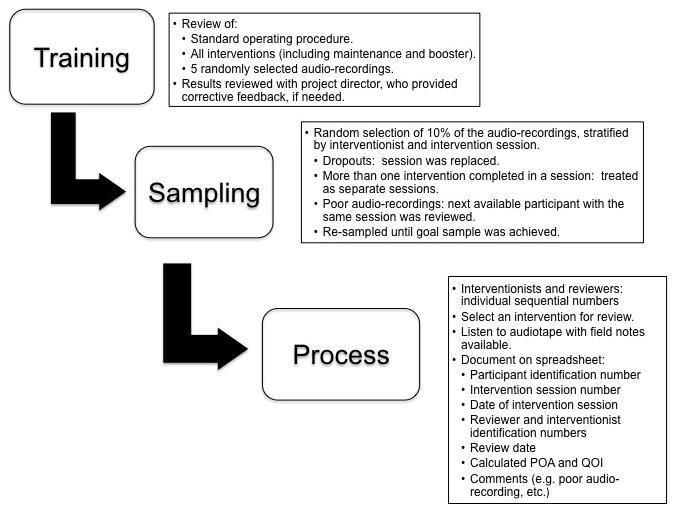

Participant consent was obtained prior to audiotaping each intervention session. Nurse interventionists used field notes to record their impressions of each intervention session, including events that affected the participant’s medication-taking regimen (e.g., vacation or the death of family or friends). Three graduate student researchers reviewed fidelity to the structured intervention. Their training and the review process are outlined in Figure 1.

Figure 1.

Intervention Fidelity Assessment: Sampling and Review Process

Data analysis

Descriptive statistics summarizing POA and QOI summary scores were computed using SPSS version 16.0 (SPSS Inc., Chicago, IL).

Results

Four nurse interventionists delivered 871 structured intervention, 385 maintenance, and 90 booster sessions to 113 participants. In total, 131 sessions (88 intervention, 30 maintenance, and 13 booster) were assessed for 64 participants. The reviewed sample was 67% male, 47% White, and had a mean age of 44.3 years, which was representative of both the parent study and of patients with HIV/AIDS in Western Pennsylvania and Eastern Ohio.

The results of the assessments are shown in Tables 4 and 5. On average, the participants in the reviewed sample completed 9.9 intervention sessions (15.5 when including maintenance and booster sessions). The overall mean POA was 92.0% (±10.5) with the intervention protocol (intervention 92.9% [10.3], maintenance 89.4% [10.7], booster 91.3% [11.4]). The mean POA scores by interventionist are summarized in Table 4. The overall mean interventionist QOI summary score was 4.5±0.4 and the mean participant QOI summary score was 4.5±0.4 (intervention 4.5 [0.5]/4.4 [0.4], maintenance 4.5 [0.3]/4.5 [0.4], booster 4.6 [0.3]/4.7 [0.2]). The mean nurse interventionist and participant QOI scores by nurse interventionist are reported in Table 5.

Table 4.

Mean Percentage of Agreement by Nurse Interventionist

| Interventionist | Number of Intervention Sessions Reviewed | Number of Maintenance Sessions Reviewed | Number of Booster Sessions Reviewed | M % (SD) |
|---|---|---|---|---|
| Nurse A | 24 | 11 | 4 | 89.0 (12.4) |
| Nurse B | 16 | 3 | 3 | 85.0 (12.4) |
| Nurse C | 16 | 6 | 3 | 97.1 (5.2) |
| Nurse D | 32 | 10 | 3 | 95.0 (7.1) |
| Total (n = 131) | 88 | 30 | 13 | 92.0 (10.5) |
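
As a quick consistency check on the reported figures, the overall mean POA can be approximated as a session-weighted average of the subgroup means, using either the per-interventionist rows of Table 4 or the per-session-type means given in the text. The sketch below uses only numbers reported in this article; the function and variable names are illustrative. Because the subgroup means are rounded to one decimal place, the result can be expected to match the reported 92.0% only to within rounding.

```python
def weighted_mean(rows):
    """Weighted mean of (count, mean) pairs."""
    total = sum(count for count, _ in rows)
    return sum(count * mean for count, mean in rows) / total


# (sessions reviewed, mean POA) per interventionist, from Table 4.
by_nurse = [
    (24 + 11 + 4, 89.0),  # Nurse A
    (16 + 3 + 3, 85.0),   # Nurse B
    (16 + 6 + 3, 97.1),   # Nurse C
    (32 + 10 + 3, 95.0),  # Nurse D
]

# (sessions reviewed, mean POA) per session type, from the Results text.
by_type = [(88, 92.9), (30, 89.4), (13, 91.3)]

overall_from_nurses = weighted_mean(by_nurse)
overall_from_types = weighted_mean(by_type)
```

Both weighted averages come out at approximately 91.9, in line with the reported overall mean of 92.0% once rounding of the inputs is taken into account.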

Table 5.

Mean Nurse and Participant Quality of Interaction Scores by Nurse Interventionist

| Interventionist | Nurse Interaction Score M (SD) | Participant Interaction Score M (SD) |
|---|---|---|
| Nurse A | 4.4 (0.7) | 4.3 (0.5) |
| Nurse B | 4.5 (0.2) | 4.5 (0.4) |
| Nurse C | 4.7 (0.1) | 4.7 (0.2) |
| Nurse D | 4.6 (0.2) | 4.4 (0.4) |

The original plan was to combine the results of the pilot study and the actual study. When the two groups were compared after reviewing 51 sessions, the POA for both groups was above 90%, and the variability in QOI scores was similar. However, when comparing the POA for the pilot data with the actual data, a significant difference was found between the two groups (t(96) = 2.125, p = .037). Therefore, intervention session reviews were continued to meet the preset criteria. Once the targeted number of sessions was reviewed, the same comparison was made and no significant difference was found (t(176) = 1.492, p = .138). The variability in QOI scores was similar.
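
The reported degrees of freedom (96 and 176) equal n1 + n2 − 2 for 47 pilot sessions compared with 51 and then 131 study sessions, which is consistent with an independent-samples t test that pools the two variances. Whether the authors used exactly this variant is an assumption. A minimal sketch of that test follows; the function name and the toy scores are hypothetical, since session-level POA data are not reported here.

```python
from math import sqrt
from statistics import mean, variance


def pooled_t_test(group_a, group_b):
    """Return (t, df) for an equal-variance independent-samples t test."""
    n1, n2 = len(group_a), len(group_b)
    df = n1 + n2 - 2
    # Pooled variance: sample variances weighted by their degrees of freedom.
    pooled_var = ((n1 - 1) * variance(group_a)
                  + (n2 - 1) * variance(group_b)) / df
    standard_error = sqrt(pooled_var * (1 / n1 + 1 / n2))
    t = (mean(group_a) - mean(group_b)) / standard_error
    return t, df


# Hypothetical POA scores for two small groups of reviewed sessions.
t_value, df_value = pooled_t_test([90, 95, 100], [80, 85, 90])

# Degrees of freedom implied by the session counts reported in the text.
df_interim = 47 + 51 - 2   # 96
df_final = 47 + 131 - 2    # 176
```

Obtaining a p value would additionally require the t distribution's cumulative distribution function, which is available in statistical packages but not in the Python standard library.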

Discussion

Strategies for assessment of content fidelity to an intervention protocol and the QOI between the nurse interventionist and the participant were addressed beginning with initial study design through the implementation and analysis of study findings. Overall, the interventionists successfully delivered structured intervention content with some variability in the POA and QOI scores. Potential outliers may explain the variability of the POA scores. In addition, while the mean POA scores for the intervention and booster sessions were above 90%, the mean POA score for the maintenance sessions was slightly below 90% (89.4% [±10.7]). This decrease may represent study fatigue, based on the multiple sessions within a short period of time, on the part of the participant, the interventionist, or both. Another explanation may be that the participant’s perception of the value of the content of the maintenance or booster sessions differed from that of the nurse interventionists because no new content was introduced.

Consistent with pilot review results, variability in QOI scores was found among the nurse interventionists, among the participants, and between the interventionists and the participants. Possible explanations for this variability include an unusual event (e.g., death in the family) in the participant’s or interventionist’s life that affected the nurse-participant interaction.

The results should be interpreted with several limitations in mind. First, the use of visual analog scales to measure participant-interventionist interaction is a quantitative way to measure a very subjective concept. While standard definitions for each concept were developed prior to review, differences in interpretation among the reviewers themselves as to what listening skills or openness entailed may exist. However, these measurements provided a strong basis for review and a starting point for assessment of fidelity to an intervention protocol for future studies. In addition, the establishment of rapport and high-quality interactions is critically important for such interventions. Such measures are needed, despite the inherent limitations.

Second, the intervention was delivered by telephone, so participants and interventionists were unable to use nonverbal cues, which can affect both intervention delivery and intervention fidelity. Third, intervention sessions with lower POA scores were not reviewed again due to the fast-paced enrollment of the study. Re-review of these sessions may have resulted in reconciliation to either a higher or lower score. In either case, an opportunity for additional feedback to the interventionists may have been missed.

Future directions for study of intervention fidelity may include assessment of interrater reliability, investigation of significant differences between nurse and participant interactions, correlations of intervention completeness with participant demographics, and investigation of individual intervention doses with regard to completeness and interaction. Most importantly, one size does not fit all when considering fidelity to an intervention protocol. Ultimately, the intervention will drive the strategy used to measure intervention fidelity. As is intended, the results of this study speak directly to the reliability and validity of the findings in the parent study.

Acknowledgments

Funding for this study was provided by the National Institute of Nursing Research (2R01 NR004749) to Judith A. Erlen, PhD, RN, FAAN (Principal Investigator). This paper was presented at the 22nd Annual Scientific Sessions of the Eastern Nursing Research Society in Providence, Rhode Island on March 26, 2010. We would like to acknowledge the support of Jacqueline Dunbar-Jacob, PhD, RN, FAAN, the research team members, staff, graduate student researchers, and student workers who implemented this project. We would like to extend thanks to Karen Kovach, BSN, RN for her contributions to the intervention fidelity assessment of this project. Finally, without the participants, this study would not have been possible.

References

- Altman DG, Schulz KF, Moher D, Egger M, Davidoff F, Elbourne D…, Lang T. The revised CONSORT statement for reporting randomized trials: Explanation and elaboration. Annals of Internal Medicine. 2001;134:663–694. doi: 10.7326/0003-4819-134-8-200104170-00012.

- Bandura A. Social foundations of thought and action: A social cognitive theory. Englewood Cliffs, NJ: Prentice-Hall; 1986.

- Bandura A. Self-efficacy: The exercise of control. New York: W. H. Freeman and Company; 1997.

- Bellg AJ, Borrelli B, Resnick B, Hecht J, Minicucci DS, Ory M…, Czajkowski S. Enhancing treatment fidelity in health behavior change studies: Best practices and recommendations from the NIH Behavior Change Consortium. Health Psychology. 2004;23(5):443–451. doi: 10.1037/0278-6133.23.5.443.

- Dumas JE, Lynch AM, Laughlin JE, Smith EP, Prinz RJ. Promoting intervention fidelity: Conceptual issues, methods, and preliminary results from the EARLY ALLIANCE prevention trial. American Journal of Preventive Medicine. 2001;20(1 Suppl):38–47. doi: 10.1016/s0749-3797(00)00272-5.

- Erlen JA, Sereika SM. Fidelity to a 12-week structured medication adherence intervention in patients with HIV. Nursing Research. 2006;55(2 Suppl):S17–S22. doi: 10.1097/00006199-200603001-00004.

- Markowitz JC, Spielman LA, Scarvalone PA, Perry SW. Psychotherapy adherence of therapist treating HIV-positive patients with depressive symptoms. The Journal of Psychotherapy Practice and Research. 2000;9:75–80.

- Moher D, Schulz KF, Altman DG. The CONSORT statement: Revised recommendations for improving the quality of reports of parallel-group randomized trials. Lancet. 2001;357:1191–1194.

- Moncher FJ, Prinz RJ. Treatment fidelity in outcome studies. Clinical Psychology Review. 1991;11:247–266.

- Planas LG. Intervention design, implementation, and evaluation. American Journal of Health-System Pharmacy. 2008;65:1854–1863. doi: 10.2146/ajhp070366.

- Resnick B, Inguito P, Orwig D, Yahiro JY, Hawkes W, Werner M…, Magaziner J. Treatment fidelity in behavior change research: A case example. Nursing Research. 2005;54:139–143. doi: 10.1097/00006199-200503000-00010.

- Santacroce SJ, Maccarelli LM, Grey M. Intervention fidelity. Nursing Research. 2004;53:63–66. doi: 10.1097/00006199-200401000-00010.

- Song MK, Happ MB, Sandelowski M. Development of a tool to assess fidelity to a psycho-educational intervention. Journal of Advanced Nursing. 2010;66(3):673–682. doi: 10.1111/j.1365-2648.2009.05216.x.

- Waskow IE. Specification of the technique variable in NIMH Treatment of Depression Collaborative Research Program. In: Williams JBW, Spitzer RL, editors. Psychotherapy research: Where are we and where should we go? New York, NY: Guilford Press; 1984.