Abstract

Music is a cross-cultural universal, a ubiquitous activity found in every known human culture. Individuals demonstrate manifestly different preferences in music, and yet relatively little is known about the underlying structure of those preferences. Here, we introduce a model of musical preferences based on listeners’ affective reactions to excerpts of music from a wide variety of musical genres. The findings from three independent studies converged to suggest that there exists a latent five-factor structure underlying music preferences that is genre-free and reflects primarily emotional/affective responses to music. We have interpreted and labeled these factors as: 1) a Mellow factor comprising smooth and relaxing styles; 2) an Urban factor defined largely by rhythmic and percussive music, such as is found in rap, funk, and acid jazz; 3) a Sophisticated factor that includes classical, operatic, world, and jazz; 4) an Intense factor defined by loud, forceful, and energetic music; and 5) a Campestral factor comprising a variety of different styles of direct and rootsy music such as is often found in country and singer-songwriter genres. The findings from a fourth study suggest that preferences for the MUSIC factors (named from the initials of the five labels) are affected by both the social and auditory characteristics of the music.

Keywords: MUSIC, PREFERENCES, INDIVIDUAL DIFFERENCES, FACTOR ANALYSIS

Music is everywhere we go. It is piped into retail shops, airports, and train stations. It accompanies movies, television programs, and ball games. Manufacturers use it to sell their products, while yoga, massage, and exercise studios use it to relax or invigorate their clients. In addition to all of these uses of music as a background, a form of sonic wallpaper imposed on us by others, many of us seek out music for our own listening – indeed, Americans spend more on music than they do on prescription drugs (Huron, 2001). Taken together, background and intentional music listening add up to more than 5 hours a day of exposure to music for the average American (Levitin, 2006; McCormick, 2009).

When it comes to self-selected music, individuals demonstrate manifestly different tastes. Remarkably, however, little is known about the underlying principles on which such individual musical preferences are based. A challenge to such an investigation is that music is used for many different purposes. One common use of music in contemporary society is pure enjoyment and aesthetic appreciation (Kohut & Levarie, 1950); another relates to music’s ability to inspire dance and physical movement (Dwyer, 1995; Large, 2000; Ronström, 1999). Many individuals also use music functionally, for mood regulation and enhancement (North & Hargreaves, 1996b; Rentfrow & Gosling, 2003; Roe, 1985). Adolescents report that they use music as a distraction from troubles, as a means of mood management, for reducing loneliness, and as a badge of identity for inter- and intragroup self-definition (Bleich, Zillmann, & Weaver, 1991; Rentfrow & Gosling, 2006, 2007; Rentfrow, McDonald, & Oldmeadow, 2009; Zillmann & Gan, 1997). As adolescents and young adults, we tend to listen to the music our friends listen to, and this helps define both our social identity and our adult musical tastes and preferences (Creed & Scully, 2000; North & Hargreaves, 1999; Tekman & Hortaçsu, 2002).

Music is also used to enhance concentration and cognitive function, to maintain alertness and vigilance (Emery, Hsiao, Hill, & Frid, 2003; Penn & Bootzin, 1990; Schellenberg, 2004), and to increase worker productivity (Newman, Hunt, & Rhodes, 1966); moreover, the way music is organized may enable it to enhance certain cognitive networks (Rickard, Toukhsati, & Field, 2005). Social and protest movements use music to motivate members, build group cohesion, and focus their goals and message (Eyerman & Jamison, 1998), and music therapists encourage patients to choose music to meet various therapeutic goals (Davis, Gfeller, & Thaut, 1999; Särkämö, et al., 2008). Historically, music has also been used for social bonding, comfort, motivating or coordinating physical labor, the preservation and transmission of oral knowledge, ritual and religion, and the expression of physical or cognitive fitness (for a review, see Levitin, 2008).

Despite the wide variety of functions music serves, a starting point for this article is the assumption that it should be possible to characterize a given individual’s musical preferences or tastes overall, across this wide variety of uses. Although music has received relatively little attention in mainstream social and personality psychology, recent investigations have begun to examine individual differences in music preferences (for a review, see Rentfrow & McDonald, 2009). Results from these investigations suggest that there exists a structure underlying music preferences, with fairly similar music-preference factors emerging across studies. Independent investigations (e.g., Colley, 2008; Delsing, ter Bogt, Engels, & Meeus, 2008; Rentfrow & Gosling, 2003) have also identified similar patterns of relations between the music-preference dimensions and various psychological constructs. The degree of convergence across those studies is encouraging because it suggests that the psychological basis for music preferences is firm. However, despite this consistency, it is not entirely clear what it is about music that attracts people. Is there something inherent in music that influences people’s preferences? Or are music preferences shaped by social factors?

The aim of the present research is to inform our understanding of the nature of music preferences. Specifically, we argue that research on individual differences in music preferences has been limited by conceptual and methodological constraints that have hindered our understanding of the psychological and social factors underlying preferences in music. This work aims to correct these shortcomings with the goal of advancing theory and research on this important topic.

Individual Differences in Music Preferences

Cattell and Anderson (1953) conducted one of the first investigations of individual differences in music preferences. Their aim was to develop a method for assessing dimensions of unconscious personality traits. Accordingly, Cattell and his colleagues developed a music preference test consisting of 120 classical and jazz music excerpts, for each of which respondents reported their degree of liking (Cattell & Anderson, 1953; Cattell & Saunders, 1954). These investigators attempted to interpret 12 factors, which they explained in terms of unconscious personality traits. For example, musical excerpts with fast tempos defined one factor, labeled surgency, and excerpts characterized by melancholy and slow tempos defined another factor, labeled sensitivity. Cattell’s music-preference measure never gained traction, but his results were among the first to suggest a latent structure to music preferences.

It was not until some 50 years later that research on individual differences in music preferences resurfaced. However, whereas Cattell and his colleagues assumed that music preferences reflected unconscious motives, urges, and desires (Cattell & Anderson, 1953; Cattell & Saunders, 1954), the contemporary view is that music preferences are manifestations of explicit psychological traits, possibly in interaction with specific situational experiences, needs, or constraints. More specifically, current research on music preferences draws from interactionist theories (e.g., Buss, 1987; Swann, Rentfrow, & Guinn, 2002) by hypothesizing that people seek musical environments that reinforce and reflect their personalities, attitudes, and emotions.

As a starting point for testing that hypothesis, researchers have begun to map the landscape of music-genre preferences with the aim of identifying its structure. For example, Rentfrow and Gosling (2003) examined individual differences in preferences for 14 broad music genres in three US samples. Results from all three studies converged to reveal four music-preference factors that were labeled reflective & complex (comprising classical, jazz, folk, and blues genres), intense & rebellious (rock, alternative, heavy metal), upbeat & conventional (country, pop, soundtracks, religious), and energetic & rhythmic (rap, soul, electronica). In a study of music preferences among Dutch adolescents, Delsing and colleagues (Delsing, et al., 2008) assessed self-reported preferences for 11 music genres. Their analyses also revealed four preference factors, labeled rock (comprising rock, heavy metal/hardrock, punk/hardcore/grunge, gothic), elite (classical, jazz, gospel), urban (hip-hop/rap, soul/r&b), and pop (trance/techno, top 40/charts). And Colley (2008) investigated self-reported preferences for 11 music genres in a small sample of British university students. Her results revealed four factors for women and five for men. Specifically, three factors, sophisticated (comprising classical, blues, jazz, opera), heavy (rock, heavy metal), and rebellious (rap, reggae), emerged for both men and women, but the mainstream (country, folk, chart pop) factor that emerged for women split into traditional (country, folk) and pop (chart pop) for men.

However, not all studies of music preference structure have obtained such similar findings. For example, George, Stickle, Rachid, and Wopnford (2007) studied individual differences in preferences for 30 music genres in a sample of Canadian adults. Their analyses revealed nine music-preference factors, labeled rebellious (grunge, heavy metal, punk, alternative, classic rock), classical (piano, choral, classical instrumental, opera/ballet, Disney/broadway), rhythmic & intense (hip-hop & rap, pop, rhythm & blues, reggae), easy listening (country, 20th century popular, soft rock, disco, folk/ethnic, swing), fringe (new age, electronic, ambient, techno), contemporary Christian (soft contemporary Christian, hard contemporary Christian), jazz & blues (blues, jazz), and traditional Christian (hymns & southern gospel, gospel). In a study involving German young adults, Schäfer and Sedlmeier (2009) assessed individual differences in self-reported preferences for 25 music genres. Results from their analyses uncovered six music-preference factors, labeled sophisticated (comprising classical, jazz, blues, swing), electronic (techno, trance, house, dance), rock (rock, punk, metal, alternative, gothic, ska), rap (rap, hip hop, reggae), pop (pop, soul, r&b, gospel), and beat, folk, & country (beat, folk, country, rock’n’roll). And in a study involving participants mainly from the Netherlands, Dunn (in press) examined individual differences in preferences for 14 music genres and reported six music-preference factors, labeled rhythm’n blues (comprising jazz, blues, soul), hard rock (rock, heavy metal, alternative), bass heavy (rap, dance), country (country, folk), soft rock (pop, soundtracks), and classical (classical, religious).

Even though the results are not identical, there does appear to be a considerable degree of convergence across these studies. Indeed, in every sample three factors emerged that were very similar: One factor was defined mainly by classical and jazz music; another factor was defined largely by rock and heavy metal music; and the third factor was defined by rap and hip-hop music. There was also a factor comprising mainly country music that emerged in all the samples in which singer-songwriter or story-telling music was included (i.e., six of seven samples). And in half the studies there was a factor composed mostly of new age and electronic styles of music. Thus, there appears to be at least four or perhaps five robust music-preference factors.

Limitations of Past Research on Individual Differences in Music Preferences

Although research on individual differences in music preferences has revealed some consistent findings, there are significant limitations that impede theoretical progress in the area. One limitation is that there is no consensus about which music genres to study. Indeed, few researchers appear to use systematic methods to select genres, or even explain how they decided which genres to study. Consequently, different researchers focus on different music genres, with some studying as few as 11 (Colley, 2008; Delsing, et al., 2008) and others as many as 30 genres (George, et al., 2007). Ultimately, these different foci yield inconsistent findings and make it difficult to compare results across studies.

Another significant limitation stems from the reliance on music genres as the unit for assessing preferences. This is a problem because genres are extremely broad and ill-defined categories, so measurements based solely on genres are necessarily crude and imprecise. Furthermore, not all pieces of music fit neatly into a single genre. Many artists and pieces of music are genre defying or cross multiple genres, so genre categories do not apply equally well to every piece of music. Assessing preferences from genres is also problematic because it assumes that participants are sufficiently knowledgeable about every music genre that they can provide fully informed reports of their preferences. This is potentially problematic for comparing preferences across age groups, because people from older generations, for instance, may be unfamiliar with the new styles of music enjoyed by young people. Genre-based measures also assume that participants share a similar understanding of the genres. This is an obstacle for research comparing preferences from people in different socioeconomic groups or cultures because certain musical styles may have different social connotations in different regions or countries. Finally, there is evidence that some music genres are associated with clearly defined social stereotypes (Rentfrow, et al., 2009; Rentfrow & Gosling, 2007), which makes it difficult to know whether assessments based on music genres reflect preferences for intrinsic properties of a particular style of music or for the social connotations that are attached to it.

These methodological limitations have thwarted theoretical progress in the social and personality psychology of music. Indeed, much of the research has identified groups of music genres that covary, but we do not know why those genres covary. Why do people who like jazz also like classical music? Why are preferences for rock, heavy metal, and punk music highly related to each other? Is there something about the loudness, structure, or intensity of the music? Do those styles of music share similar social and cultural associations? Moreover, we do not know what it is about people’s preferred music that appeals to them. Are there particular sounds or instruments that guide preferences? Do people prefer music with a particular emotional valence or level of energy? Are people drawn to music that has desirable social overtones? Such questions need to be addressed if we are to develop a complete understanding of the social and psychological factors that shape music preferences. But how should music preferences be conceptualized if we are to address these questions?

Re-conceptualizing Music Preferences

Music is multifaceted: it is composed of specific auditory properties, communicates emotions, and has strong social connotations. Research on various social, psychological, and physiological aspects of music, though not concerned with music preferences per se, suggests that preferences are tied to these musical facets. For example, there is evidence of individual differences in preferences for vocal as opposed to instrumental music, fast vs. slow music, and loud vs. soft music (Kopacz, 2005; McCown, Keiser, Mulhearn, & Williamson, 1997; McNamara & Ballard, 1999; Rentfrow & Gosling, 2006). Such preferences have been shown to relate to personality traits such as Extraversion, Neuroticism, Psychoticism, and sensation seeking. Research on music and emotion has revealed individual differences in preferences for pieces of music that evoke emotions like happiness, joy, sadness, and anger (Chamorro-Premuzic & Furnham, 2007; Rickard, 2004; Schellenberg, Peretz, & Vieillard, 2008; Zentner, Grandjean, & Scherer, 2008). And research on music and identity suggests that some people are drawn to musical styles with particular social connotations, such as toughness, rebellion, distinctiveness, and sophistication (Abrams, 2009; Schwartz & Fouts, 2003; Tekman & Hortaçsu, 2002).

These studies suggest that we should broaden our conceptualization of music preferences to include the intrinsic properties, or attributes, as well as external associations of music. Indeed, if there are individual differences in preferences for instrumental music, melancholic music, or music regarded as sophisticated, such information needs to be taken into account. How should preferences be assessed so that both external and intrinsic musical properties are captured?

There are good reasons to believe that self-reported preferences for music genres reflect, at least partially, preferences for external properties of music. Indeed, research has found that individuals, particularly young people, have strong stereotypes about fans of certain music genres. Specifically, Rentfrow and colleagues (Rentfrow et al., 2009; Rentfrow & Gosling, 2007) found that adolescents and young adults who were asked to evaluate the prototypical fan of a particular music genre displayed significant levels of inter-judge agreement for several genres (e.g., classical, rap, heavy metal, country), suggesting that participants held very similar beliefs about the social and psychological characteristics of such fans. Furthermore, research on the validity of the music stereotypes suggested that fans of certain genres reported possessing many of the stereotyped characteristics. Thus, it would seem that genres alone can activate stereotypes that are associated with a suite of traits, which could, in turn, influence individuals’ stated musical preferences.

There are a variety of ways in which intrinsic musical properties could be measured. One approach would involve manipulating audio clips of musical pieces to emphasize specific attributes or emotional tones. For instance, respondents could report their preferences for clips engineered to be fast, distorted, or loud. McCown et al. (1997) used this approach to investigate preferences for exaggerated bass in music by playing respondents two versions of the same song: one version with amplified bass and one with deliberately flat bass. Though such procedures certainly yield useful information, a song never possesses only one characteristic, but several. As Hevner (1935) pointed out, hearing isolated chords or modified music is not the same as listening to music as it was originally intended, which usually involves an accumulation of musical elements to be expressed and interpreted as a whole. A more ecologically valid way to assess music preferences would be to present audio recordings of real pieces of music.

Indeed, measuring affective reactions to excerpts of real music has a number of advantages. One advantage of using authentic music, as opposed to music manufactured for an experiment, is that it is much more likely to represent the music people encounter in their daily lives. Another important advantage is that each piece of music can be coded on a range of musical qualities. For example, each piece can be coded on music-specific attributes, like tempo, instrumentation, and loudness, as well as on psychological attributes, such as joy, anger, and sadness. Furthermore, using musical excerpts overcomes several of the problems associated with genre-based measures because excerpts are far more specific than genres, and respondents need not have any knowledge of genre categories in order to indicate their degree of liking for a musical excerpt. Thus, it seems that preferences for musical excerpts would provide a rich and ecologically valid representation of music preferences that captures both external and intrinsic musical properties.

Overview of the Present Research

The goal of the present research is to broaden our understanding of the factors that shape the music preferences of ordinary music listeners, as opposed to trained musicians. Past work on individual differences in music preferences focused on genres, but genres are limited in several ways that ultimately hinder theoretical progress in this area. This research was intended to rectify those problems by developing a more nuanced assessment of music preferences. Previous work suggests that audio excerpts of authentic music would aid the development of such an assessment. Thus, the objective of the present research was to investigate the structure of affective reactions to audio excerpts of music, with the aim of identifying a robust factor structure.

Using multiple pieces of music, methods, samples, and recruitment strategies, four studies were conducted to achieve that objective. In Study 1, we assessed preferences for audio excerpts of commercially released, but not well-known music in a sample of Internet users. To assess the stability of the results, a follow-up study was conducted using a subsample of participants. Study 2 also used Internet methods, but unlike Study 1, preferences were assessed for pieces of music that had never been released to the public, and to which we purchased the copyright. In Study 3 we examined music preferences among a sample of university students using a subset of the pieces of music from Study 2. In Study 4 the pieces of music from the previous studies were coded on several musical attributes and analyzed in order to examine the intrinsic properties and external associations that influence the structure of music preferences.

Study 1: Are There Interpretable Factors Underlying Musical Preferences?

The objective of Study 1 was to determine whether there is an interpretable structure underlying preferences for excerpts of recorded music. As noted previously, although past research on music-genre preferences has reported slightly different factor structures, there is some evidence for four to five music-preference factors. Therefore, in the present study, we expected to identify at least four factors. Although we had some ideas about how many factors to expect, we used exploratory factor-analytic techniques to examine the hierarchical structure of music preferences without any a priori bias or constraints.

We wanted to assess preferences among a representative sample of music listeners as opposed to a sample of university students, which is the population typically studied in music preference research. So we recruited participants over the Internet to participate in a study concerned with psychology and music. Additionally, to determine the stability of the results, we used a subsample of participants to examine generalizability of the music factors across methods and over time.

Methods

Participants and procedures

In the spring of 2007, advertisements were placed in several locations on the Internet (e.g., Craigslist.com) inviting people to participate in an Internet-based study of personality, attitudes, and preferences. In recruitment, we sought to obtain a wider, more heterogeneous cross-section of respondents than is typically found in such studies, which tend to employ university undergraduates. Approximately 1,600 individuals responded to the advertisement and provided their email addresses. They were then contacted and told that participation entailed completing several surveys on separate occasions, one of which included our music preference measure. Those who agreed to participate were directed to a Web page where they could begin the first survey. After completing each survey, they were informed that they would receive an e-mail message within a few days with a hyperlink that would direct them to the next survey. Participants who completed all surveys received a $25 gift certificate to Amazon.com.

A total of 706 participants completed the music preference measure. Of those who reported their sex, 452 (68%) were female and 216 (32%) were male. The median age of participants was 31. Of those who reported their level of education, 27 (4%) had not completed high school, 406 (62%) completed high school or vocational school, 177 (27%) had a college degree and/or some post-college education, and 48 (7%) had a post-college degree. This sample met our goals of obtaining a broad representation of age groups and educational background.
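The reported percentages appear to use as denominators only the participants who answered each demographic item, not all 706 who completed the music measure: the stated counts sum to 668 for sex and 658 for education. A minimal Python check of that arithmetic, using only the counts given above (the category keys are paraphrased labels, and the denominator choice is an inference from the numbers):

```python
# Recompute the Study 1 demographic percentages from the reported counts.
# Assumption: denominators are respondents who answered each item
# (668 for sex, 658 for education), not the full 706 participants.


def percent_breakdown(counts):
    """Return whole-number percentages of each category among responders."""
    total = sum(counts.values())
    return {category: round(100 * n / total) for category, n in counts.items()}


sex = {"female": 452, "male": 216}
education = {
    "did not complete high school": 27,
    "high school or vocational school": 406,
    "college degree and/or some post-college": 177,
    "post-college degree": 48,
}

if __name__ == "__main__":
    print(sum(sex.values()), percent_breakdown(sex))
    print(sum(education.values()), percent_breakdown(education))
```

Both sets of rounded percentages (68/32 and 4/62/27/7) match the values reported in parentheses.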

Music Preference Stimuli

Our objective was to assess individual differences in preferences for the many different styles of music that people are likely to encounter in their everyday lives, so it was crucial that we cast as wide a net as possible when selecting musical pieces. Because the musical space is vast, we developed a systematic, multi-step procedure for choosing pieces to ensure that we covered as much of that space as possible.

Music genre selection

Our first step was to identify broad musical styles that appeal to most people. To that end, a sample of 5,000 participants who responded to an Internet advertisement, plus a sample of 600 university students, completed an open-ended questionnaire in which they named their favorite music genres (e.g., “rock”) and subgenres (e.g., “classic rock,” “alternative rock”) and gave examples of music for each one. From these responses, we identified the 23 genres and subgenres that appeared most often on the lists. In some cases, experimenter judgment was required to create coherent categories (e.g., AC/DC was termed “heavy metal” by some respondents and “classic rock” by others). Because our aim was to cover as wide a range of musical styles as possible, we added to this list three subgenres that were mentioned only a small number of times in our pilot study: polka, marching band, and avant-garde classical. We were concerned that these styles may have been underrepresented because of a preselection effect (Internet users and college students are not necessarily representative of all music listeners). Examples of subgenres that appeared on a moderate number of lists but that we did not include are Swedish death metal, West Coast rap, bebop, psychedelic rock, and Baroque; we folded these into the categories of heavy metal, rap, jazz, classic rock, and classical, respectively.
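The tallying and folding described above can be sketched in Python. This is an illustration, not the authors' procedure: the function name `select_genres`, the toy responses, and the rule that each respondent counts at most once per category are assumptions; the `FOLD_MAP` entries are simply the five folding examples given in the text. Experimenter judgment about ambiguous labels (such as AC/DC) would still have to be encoded by hand in such a mapping.

```python
from collections import Counter

# The five folding examples from the text; a real mapping would be much larger.
FOLD_MAP = {
    "swedish death metal": "heavy metal",
    "west coast rap": "rap",
    "bebop": "jazz",
    "psychedelic rock": "classic rock",
    "baroque": "classical",
}
ADDITIONS = ["polka", "marching band", "avant-garde classical"]


def select_genres(responses, k, fold_map, additions):
    """Tally open-ended genre nominations and keep the k most frequent.

    responses: one list of free-text genre labels per respondent
    fold_map:  maps lowercase fine-grained labels to a parent category
    additions: rarely mentioned subgenres added by hand for coverage
    """
    counts = Counter()
    for labels in responses:
        # Normalize case and whitespace, then fold subgenres into parents.
        canonical = [fold_map.get(lab.strip().lower(), lab.strip().lower()) for lab in labels]
        # dict.fromkeys drops duplicates while keeping order, so each
        # respondent contributes at most one mention per category.
        counts.update(list(dict.fromkeys(canonical)))
    top = [genre for genre, _ in counts.most_common(k)]
    return top + [g for g in additions if g not in top], counts


if __name__ == "__main__":
    toy_responses = [["Rock", "Rap"], ["rock", "West Coast rap"], ["Jazz", "Bebop", "rock"]]  # hypothetical
    selected, counts = select_genres(toy_responses, 2, FOLD_MAP, ADDITIONS)
    print(selected)  # ['rock', 'rap', 'polka', 'marching band', 'avant-garde classical']
```

With the real nominations, `k` would be 23, and the three hand-added subgenres would bring the list to the 26 categories used in Study 1.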

Music stimuli selection

The next step involved obtaining musical exemplars for the 26 music subgenres. There is evidence that well-known pieces of music can serve as powerful cues to autobiographical memories (Janata, Tomic, & Rakowski, 2007) and that familiar music tends to be liked more than unfamiliar music (Dunn, in press; North & Hargreaves, 1995). Because we were interested only in affective reactions to the musical stimuli themselves, we needed to reduce the possibility that preference ratings would be contaminated by idiosyncratic personal histories. We therefore required that the exemplars be pieces of music that participants were unlikely to know.

Our aim in selecting exemplars was not necessarily to find pieces of music by obscure artists, but to find pieces that were of similar quality to hits and yet were unknown. To accomplish this, we consulted ten professionals (musicologists and recording industry veterans) to identify representative or prototypical pieces for each of the 26 subgenres. We instructed them to choose major-label music that had been commercially released but had achieved only low sales, so that it was unlikely to have been heard previously by our participants. This created a set of pieces that had been through all of the steps prior to commercialization that more popular music goes through: being discovered by a talent scout, being signed to a label, selecting the best piece with an artists and repertoire (A&R) executive, and recording in a professional studio with a professional production team. Most of these selections were by artists who were clearly not well known (e.g., Boney James, Meav, and Cat's Choir), and a few pieces were recorded by better-known artists (Kenny Rankin, Karla Bonoff, Dean Martin), but the pieces themselves were not hits, nor were they taken from albums that had been hits. This procedure generated several exemplars for each subgenre.

Next, we reduced the list of exemplars for each subgenre by collecting validation data from a pilot sample. Specifically, excerpts of the musical pieces were presented in random order to 500 listeners, recruited over the Internet, who were asked (a) to name the genre or subgenre that they felt best represented each piece, and (b) to indicate, on a scale from 1 to 9, how well they thought the piece represented the genre or subgenre they had chosen. Using the results from this pilot test, we chose the two pieces that were rated as most prototypical of each music category, which resulted in 52 excerpts altogether (2 for each of the 26 subgenres).
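The pilot-study selection rule (keep the two pieces rated most prototypical of each category) can be sketched in Python. The text does not say how ratings were aggregated, so the scoring rule used here is an assumption: the mean 1–9 rating among listeners whose named genre matched the subgenre the experts intended. The function name `pick_prototypes` and the toy data are likewise hypothetical.

```python
from collections import defaultdict
from statistics import mean


def pick_prototypes(pilot, intended, n_per_genre=2):
    """Keep the n pieces judged most prototypical of each intended subgenre.

    pilot:    iterable of (piece_id, named_genre, rating) tuples, rating on 1-9
    intended: dict mapping piece_id -> subgenre the experts nominated it for
    Assumed scoring rule: mean rating among listeners whose named genre
    matched the intended subgenre; all other responses are ignored.
    """
    matching = defaultdict(list)
    for piece, named, rating in pilot:
        if not 1 <= rating <= 9:
            raise ValueError(f"rating out of range: {rating}")
        if named == intended[piece]:
            matching[piece].append(rating)

    # Group scored pieces by the subgenre they were nominated for.
    candidates = defaultdict(list)
    for piece, ratings in matching.items():
        candidates[intended[piece]].append((mean(ratings), piece))

    # Highest mean rating first; keep the top n pieces per subgenre.
    return {
        genre: [piece for _, piece in sorted(scored, reverse=True)[:n_per_genre]]
        for genre, scored in candidates.items()
    }


if __name__ == "__main__":
    intended = {"a": "polka", "b": "polka", "c": "polka", "d": "rap", "e": "rap"}  # hypothetical
    pilot = [
        ("a", "polka", 9), ("a", "polka", 7),          # mean 8
        ("b", "polka", 6), ("b", "marching band", 9),  # mismatch ignored, mean 6
        ("c", "polka", 7),                             # mean 7
        ("d", "rap", 5), ("e", "rap", 8),
    ]
    print(pick_prototypes(pilot, intended))  # {'polka': ['a', 'c'], 'rap': ['e', 'd']}
```

Applied to 26 subgenres with `n_per_genre=2`, a rule of this kind yields the 52 excerpts described above; other aggregation rules (for example, weighting by how many listeners agreed on the genre) would be equally plausible.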

Thus, we measured music preferences by asking participants to indicate their degree of liking for each of the 52 musical excerpts using a nine-point rating scale, with endpoints at 1 (Not at all) and 9 (Very much). The stimuli were 15-second excerpts from 52 different pieces of music, digitized and played over a computer as MP3 files. The complete list of pieces presented appears in Table 1.
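Table 1 reports principal components extracted from the excerpt ratings and then varimax-rotated. The Python/NumPy sketch below illustrates that kind of pipeline under stated assumptions: it analyzes the excerpt-by-excerpt correlation matrix, keeps a fixed number of components, and applies varimax with Kaiser row normalization. The helper names and the simulated ratings are hypothetical, and the authors' software and settings are not described here.

```python
import numpy as np


def principal_components(ratings, n_components):
    """Unrotated principal-component loadings from a listeners-by-excerpts matrix."""
    corr = np.corrcoef(ratings, rowvar=False)         # excerpt-by-excerpt correlations
    eigvals, eigvecs = np.linalg.eigh(corr)           # eigenvalues in ascending order
    order = np.argsort(eigvals)[::-1][:n_components]  # indices of the largest ones
    # Loadings are eigenvectors scaled by the square roots of their eigenvalues.
    return eigvecs[:, order] * np.sqrt(eigvals[order])


def varimax(loadings, max_iter=100, tol=1e-6):
    """Orthogonal varimax rotation with Kaiser (row) normalization."""
    h = np.sqrt((loadings ** 2).sum(axis=1, keepdims=True))  # sqrt of communalities
    a = loadings / h
    p, k = a.shape
    rotation = np.eye(k)
    criterion = 0.0
    for _ in range(max_iter):
        rotated = a @ rotation
        # Gradient of the varimax criterion, up to a constant factor.
        target = rotated ** 3 - rotated @ np.diag((rotated ** 2).sum(axis=0)) / p
        u, s, vt = np.linalg.svd(a.T @ target)
        rotation = u @ vt                             # nearest orthogonal matrix
        previous, criterion = criterion, s.sum()
        if previous > 0 and criterion < previous * (1 + tol):
            break                                     # criterion stopped improving
    return (a @ rotation) * h                         # undo the row normalization


def simulate_ratings(n_listeners, pattern, noise=0.5, seed=0):
    """Hypothetical ratings generated from known, uncorrelated latent factors."""
    rng = np.random.default_rng(seed)
    factors = rng.standard_normal((n_listeners, pattern.shape[1]))
    errors = noise * rng.standard_normal((n_listeners, pattern.shape[0]))
    return factors @ pattern.T + errors


if __name__ == "__main__":
    # Six hypothetical excerpts driven by two latent preference factors.
    pattern = np.array([[.9, 0], [.9, 0], [.9, 0], [0, .6], [0, .6], [0, .6]])
    ratings = simulate_ratings(2000, pattern)
    print(np.round(varimax(principal_components(ratings, 2)), 2))
```

In Study 1 the input would instead be the 706 × 52 matrix of 1–9 liking ratings with `n_components=5`. The order and signs of rotated components are arbitrary, so each component is interpreted from the excerpts that load most heavily on it.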

Table 1.

Five Varimax-Rotated Principal Components Derived from Music Preference Ratings in Study 1

| Principal component |

|||||||

|---|---|---|---|---|---|---|---|

| Artist | Piece | Genre | I | II | III | IV | V |

| Philip Glass | Symphony No. 3 | Avant-garde classical | .83 | −.02 | .13 | .10 | −.10 |

| Louise Farrenc | Piano Quintet No. 1 in A Minor | Classical | .79 | −.03 | .13 | .17 | −.07 |

| The Americus Brass Band | Coronation March | Marching band | .79 | .04 | .08 | .17 | −.14 |

| William Boyce | Symphony No.1 in B Flat Major | Classical | .78 | −.03 | .05 | .15 | −.16 |

| Ruben Gonzalez | Easy Zancudo | Latin | .74 | .05 | .08 | .07 | .16 |

| Oscar Peterson | The Way You Look Tonight | Traditional jazz | .74 | .02 | .00 | .20 | .09 |

| Charles Lloyd | Jumping the Creek | Acid jazz | .71 | −.01 | .06 | .04 | .26 |

| Elliott Carter | Boston Concerto, Allegro Staccatissimo | Avant-garde classical | .69 | .04 | .02 | −.01 | .07 |

| Walter Legawiec & His Polka Kings | Bohemian Beer Party | Polka | .66 | .34 | .08 | −.02 | .10 |

| Herb Ellis and Joe Pass | Cherokee (Concept 2) | Traditional jazz | .65 | .03 | −.05 | .20 | .21 |

| The American Military Band | Crosley March | Marching band | .64 | .33 | .06 | .10 | −.01 |

| Mantovani | I Wish You Love | Adult contemporary | .64 | .07 | −.06 | .33 | −.14 |

| Hilton Ruiz | Mambo Numero Cinco | Latin | .64 | .07 | −.06 | .17 | .17 |

| Meav | You Brought Me Up | Celtic | .61 | .09 | .11 | .22 | .11 |

| Frankie Yankovic | My Favorite Polka | Polka | .59 | .41 | .09 | .00 | .10 |

| King Sunny Adeé | Ja Funmi | World beat | .55 | .20 | .11 | .07 | .37 |

| Dean Martin | Take Me in Your Arms | Adult contemporary | .55 | .28 | .08 | .21 | −.08 |

| Booney James | Backbone | Quiet storm | .55 | .07 | −.03 | .40 | .23 |

| Jah Wobble | Waxing Moon | World beat | .53 | .13 | .14 | −.06 | .32 |

| 1 | One Term President | Electronica | .52 | −.03 | .14 | .25 | .32 |

| Ornette Coleman | Rock the Clock | Acid jazz | .49 | .22 | .13 | −.21 | .35 |

| Eilen Ivers | Darlin Corey | Celtic | .45 | .40 | .21 | −.02 | .10 |

| The O’Kanes | Oh Darlin’ | Country rock | .14 | .80 | .11 | .10 | .07 |

| Carlene Carter | I Fell in Love | New country | −.11 | .79 | .08 | .18 | −.02 |

| Jim Lauderdale | Heavens Flame | New country | −.05 | .77 | .14 | .23 | .01 |

| Tracy Lawrence | Texas Tornado | Mainstream country | −.13 | .76 | .08 | .21 | −.01 |

| The Mavericks | If You Only Knew | Mainstream country | .07 | .73 | .12 | .22 | .00 |

| Uncle Tupelo | Slate | Country rock | .12 | .72 | .20 | .14 | .02 |

| Iris Dement | Let the Mystery Be | Bluegrass | .27 | .65 | .06 | .00 | .11 |

| Doc Watson | Interstate Rag | Bluegrass | .44 | .57 | .06 | −.06 | .11 |

| Bill Haley and His Comets | Razzle Dazzle | Rock-n-roll | .36 | .47 | .14 | .16 | .02 |

| Flamin’ Groovies | Gonna Rock Tonight | Rock-n-roll | .12 | .46 | .26 | .21 | −.03 |

| Social Distortion | Cold Feelings | Punk | .02 | .05 | .78 | −.04 | .08 |

| Poster Children | Roe v Wade | Alternative rock | .12 | .05 | .78 | −.03 | .07 |

| Iron Maiden | Where Eagles Dare | Heavy metal | −.02 | .15 | .71 | .05 | .03 |

| Owsley | Oh No the Radio | Power pop | .04 | .06 | .69 | .07 | .09 |

| Kingdom Come | Get it On | Classic rock | .00 | .16 | .66 | .06 | .02 |

| X | When our Love Passed out on the Couch | Punk | .26 | .10 | .66 | −.14 | .15 |

| Scorpio Rising | It’s Obvious | Alternative rock | .06 | .01 | .65 | .14 | .13 |

| Cat’s Choir | Dirty Angels | Heavy metal | .00 | .15 | .63 | .10 | .07 |

| BBM | City of Gold | Classic rock | .07 | .29 | .59 | .16 | .04 |

| Adrian Belew | Big Blue Sun | Power pop | .12 | .12 | .35 | .28 | .02 |

| Brigitte | Heute Nacht | Europop | .14 | .25 | .30 | .27 | .16 |

| Skylark | Wildflower | R&B/soul | .13 | .24 | .06 | .68 | −.01 |

| Karla Bonoff | Just Walk Away | Soft rock | .26 | .27 | .15 | .65 | −.02 |

| Ace of Base | Unspeakable | Europop | .13 | .21 | .18 | .63 | −.01 |

| Kenny Rankin | I Love You | Soft rock | .26 | .26 | .14 | .59 | −.02 |

| Earl Klugh | Laughter in the Rain | Quiet storm | .37 | .08 | −.19 | .58 | .19 |

| Billy Paul | Brown Baby | R&B/soul | .26 | .35 | .17 | .46 | .16 |

| D-Nice | My Name is D-Nice | Rap | .10 | .08 | .17 | .02 | .76 |

| Ludacris | Intro | Rap | .02 | −.06 | .25 | .03 | .72 |

| Age | Lichtspruch | Electronica | .30 | .07 | .11 | .10 | .45 |

Note. Each piece’s largest factor loading is in italics. Factor loadings ≥ |.40| are in bold typeface.

Results and Discussion

Factor structure

Multiple criteria were used to decide how many factors to retain: parallel analysis using Monte Carlo simulations, replicability across factor-extraction methods, and factor interpretability. Principal-components analysis (PCA) with varimax rotation yielded a substantial first factor that accounted for 27% of the variance, reflecting individual differences in general preferences for music. Parallel analysis of random data suggested that the first five eigenvalues were greater than chance, and the scree plot showed an “elbow” at roughly six factors. Successive PCAs with varimax rotation were then performed for one- through six-factor solutions. In the six-factor solution, the sixth factor was comparatively small and was defined by items with low loadings. Altogether, these analyses suggested retaining no more than five broad music-preference factors.
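Parallel analysis, one of the retention criteria above, keeps a component only while its observed eigenvalue exceeds the eigenvalue expected from random data of the same size. The following is a minimal Python/NumPy sketch, not the authors' code. It assumes a complete participants-by-excerpts rating matrix, simulates standard-normal noise, and uses the 95th percentile of the simulated eigenvalues as the chance threshold; the text does not say whether a percentile or the mean was used. The function `parallel_analysis` and its parameters are hypothetical.

```python
import numpy as np


def parallel_analysis(ratings, n_sims=100, percentile=95, seed=0):
    """Horn's parallel analysis on the item (excerpt) correlation matrix.

    ratings: array of shape (n_participants, n_excerpts) with no missing values.
    Returns the number of components that beat chance, the observed
    eigenvalues, and the chance thresholds (both sorted largest first).
    """
    rng = np.random.default_rng(seed)
    n_obs, n_items = ratings.shape
    observed = np.linalg.eigvalsh(np.corrcoef(ratings, rowvar=False))[::-1]

    # Eigenvalues of correlation matrices computed from pure noise.
    simulated = np.empty((n_sims, n_items))
    for i in range(n_sims):
        noise = rng.standard_normal((n_obs, n_items))
        simulated[i] = np.linalg.eigvalsh(np.corrcoef(noise, rowvar=False))[::-1]
    threshold = np.percentile(simulated, percentile, axis=0)

    # Retain components up to the first one that fails to beat chance.
    exceeds = observed > threshold
    n_retain = n_items if exceeds.all() else int(np.argmin(exceeds))
    return n_retain, observed, threshold


# Usage on synthetic data with two independent five-item clusters.
rng = np.random.default_rng(1)
latent = rng.standard_normal((500, 2))
pattern = np.zeros((2, 10))
pattern[0, :5] = 0.8
pattern[1, 5:] = 0.8
ratings = latent @ pattern + 0.6 * rng.standard_normal((500, 10))
n_retain, observed, threshold = parallel_analysis(ratings)
print(n_retain)
```

With two well-separated clusters of items, only the first two observed eigenvalues exceed the random-data thresholds, so two components are retained.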

To determine whether the factors were invariant across methods, we examined the convergence between orthogonally rotated factor scores from PCA, principal-axis (PA), and maximum-likelihood (ML) extraction procedures. Specifically, PCAs, PAs, and MLs were performed for one- through five-factor solutions, and the factor scores for each solution were then intercorrelated. The results revealed very high convergence across the three extraction methods, with correlations averaging above .99 between the PCA and PA factors, .99 between the PCA and ML factors, and above .99 between the PA and ML factors. These results indicate that essentially the same solutions would be obtained regardless of which factor-extraction method was used. Because PCAs yield exact and perfectly orthogonal factor scores, the solutions reported in this article are derived from PCAs.
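A cross-method comparison of this kind can be sketched for PCA and iterated principal-axis (PA) factoring. This is a simplified illustration under stated assumptions, not the procedure used in the study: maximum-likelihood extraction and varimax rotation are omitted for brevity, scores are computed with the regression method (Z R^-1 L), and each PA factor is matched to the principal component with which it correlates most strongly in absolute value, because the sign of an extracted factor is arbitrary. All function names are hypothetical.

```python
import numpy as np


def standardize(ratings):
    """z-score each excerpt's ratings (columns)."""
    return (ratings - ratings.mean(axis=0)) / ratings.std(axis=0, ddof=1)


def pca_loadings(corr, k):
    """Unrotated principal-component loadings for the k largest eigenvalues."""
    vals, vecs = np.linalg.eigh(corr)
    top = np.argsort(vals)[::-1][:k]
    return vecs[:, top] * np.sqrt(vals[top])


def principal_axis_loadings(corr, k, n_iter=100):
    """Iterated principal-axis factoring, starting from squared multiple correlations."""
    reduced = corr.copy()
    communalities = 1 - 1 / np.diag(np.linalg.inv(corr))
    for _ in range(n_iter):
        np.fill_diagonal(reduced, communalities)  # replace 1s with communality estimates
        vals, vecs = np.linalg.eigh(reduced)
        top = np.argsort(vals)[::-1][:k]
        loadings = vecs[:, top] * np.sqrt(np.clip(vals[top], 0, None))
        communalities = np.sum(loadings ** 2, axis=1)
    return loadings


def regression_scores(z, corr, loadings):
    """Regression-method factor scores, Z R^-1 L (exact for principal components)."""
    return z @ np.linalg.solve(corr, loadings)


def method_convergence(ratings, k):
    """For each principal-axis factor, its largest |r| with any principal component."""
    z = standardize(ratings)
    corr = np.corrcoef(z, rowvar=False)
    pca = regression_scores(z, corr, pca_loadings(corr, k))
    pa = regression_scores(z, corr, principal_axis_loadings(corr, k))
    cross = np.corrcoef(pca, pa, rowvar=False)[:k, k:]
    return np.abs(cross).max(axis=0)  # absolute value: factor signs are arbitrary


# Usage on synthetic data generated from a single strong factor.
rng = np.random.default_rng(2)
general = rng.standard_normal((400, 1))
ratings = general @ np.full((1, 12), 0.7) + 0.7 * rng.standard_normal((400, 12))
print(method_convergence(ratings, 1))
```

For a strong single factor, the PCA and PA scores weight the excerpts almost identically, so their correlation is very close to 1.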

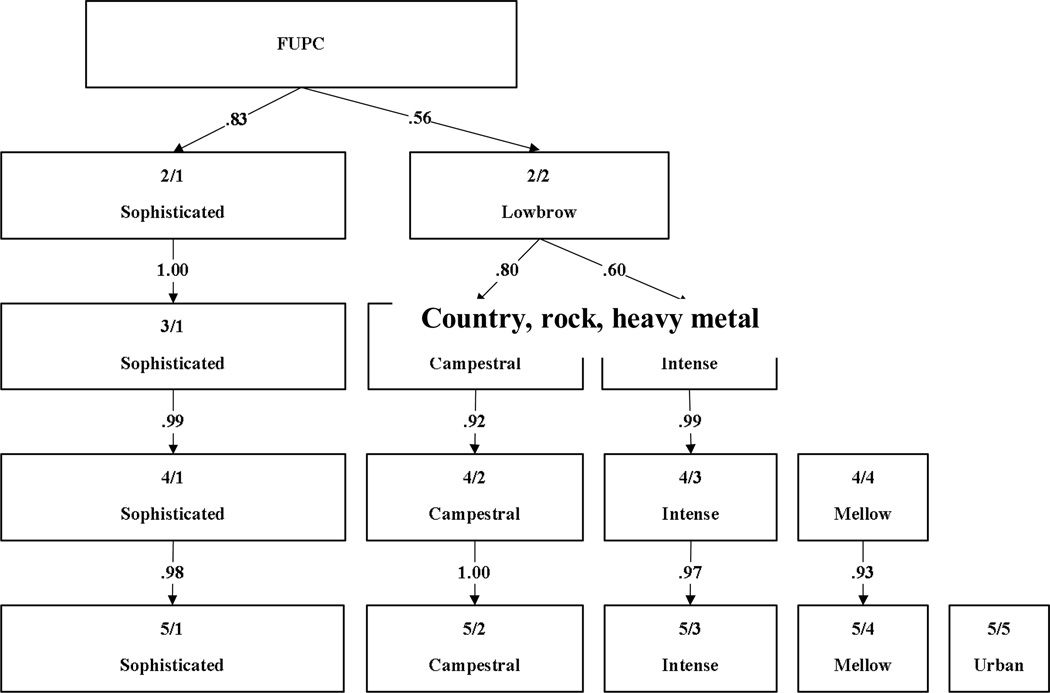

We next examined the hierarchical structure of the one- through five-factor solutions using the procedure proposed by Goldberg (2006). First, a single factor was specified in a PCA and then in four subsequent PCAs we specified two, three, four, and five orthogonally rotated factors. The factor scores were saved for each solution. Next, correlations between factor scores at adjacent levels were computed. The resulting hierarchical structure is displayed in Figure 1.
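Goldberg's (2006) procedure can be sketched in Python/NumPy as follows. This is an illustrative sketch, not the authors' implementation: it assumes a complete participants-by-excerpts rating matrix, uses the common SVD-based varimax algorithm, and computes exact component scores as Z R^-1 L, which for principal components are standardized and mutually uncorrelated. The function names are hypothetical. Each matrix returned by `bass_ackwards` holds the correlations between the factors at one level and those at the next, i.e., the kind of path coefficients drawn in Figure 1.

```python
import numpy as np


def varimax(loadings, max_iter=500, tol=1e-8):
    """Kaiser's varimax rotation using the common SVD-based iteration."""
    p, k = loadings.shape
    if k == 1:
        return loadings  # a single factor has nothing to rotate
    rotation = np.eye(k)
    criterion = 0.0
    for _ in range(max_iter):
        rotated = loadings @ rotation
        # Gradient of the varimax criterion with respect to the rotation.
        gradient = loadings.T @ (rotated ** 3 - rotated * np.sum(rotated ** 2, axis=0) / p)
        u, s, vt = np.linalg.svd(gradient)
        rotation = u @ vt  # nearest orthogonal matrix to the gradient
        if criterion > 0 and s.sum() < criterion * (1 + tol):
            break  # criterion stopped improving
        criterion = s.sum()
    return loadings @ rotation


def rotated_pca_scores(ratings, k):
    """Varimax-rotated principal-component loadings and exact component scores."""
    z = (ratings - ratings.mean(axis=0)) / ratings.std(axis=0, ddof=1)
    corr = np.corrcoef(z, rowvar=False)
    vals, vecs = np.linalg.eigh(corr)
    top = np.argsort(vals)[::-1][:k]
    loadings = varimax(vecs[:, top] * np.sqrt(vals[top]))
    scores = z @ np.linalg.solve(corr, loadings)  # standardized, mutually uncorrelated
    return loadings, scores


def bass_ackwards(ratings, max_k=5):
    """Correlate component scores at adjacent levels (1 vs 2, 2 vs 3, ...)."""
    scores = {k: rotated_pca_scores(ratings, k)[1] for k in range(1, max_k + 1)}
    paths = {}
    for k in range(1, max_k):
        both = np.corrcoef(scores[k], scores[k + 1], rowvar=False)
        paths[k] = both[:k, k:]  # rows: level-k factors; columns: level-(k+1) factors
    return paths


# Usage on synthetic data with three independent four-item clusters.
rng = np.random.default_rng(3)
latent = rng.standard_normal((600, 3))
pattern = np.zeros((3, 12))
for f in range(3):
    pattern[f, 4 * f:4 * f + 4] = 0.8
ratings = latent @ pattern + 0.6 * rng.standard_normal((600, 12))
for level, matrix in bass_ackwards(ratings, max_k=3).items():
    print(f"level {level} -> {level + 1}:\n{np.round(matrix, 2)}")
```

Reading a row of each printed matrix shows how one parent factor distributes across the factors at the next level, which is how a factor such as Lowbrow can be traced to the factors it splits into.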

Figure 1.

Varimax-rotated principal components derived from preference ratings for 52 commercially released musical clips in Study 1. The figure begins (top box) with the First Unrotated Principal Component (FUPC) and traces the derivation of the five factors obtained. Text within each box gives the label of the factor or, in some cases, the genres or subgenres that best describe the pieces loading most highly on that factor. The Arabic numerals within each box give the number of factors extracted at that level (numerator) and the factor's number within that level (denominator; e.g., 2/1 indicates the first factor in the two-factor solution). The Arabic numerals on the arrow paths give the Pearson product-moment correlation between a factor at one level and a factor at the next level. For example, when expanding from the two-factor to the three-factor solution (rows 2 and 3), Factor 2/2, “Lowbrow,” splits into two new factors, “Campestral” (which correlates .80 with the parent factor) and “Intense” (which correlates .60 with the parent factor). Similarly, the 1.00 correlation between 2/1 and 3/1 indicates that this factor did not change between the two- and three-factor solutions, although it changed slightly in each subsequent extraction. Box widths are proportional to factor sizes.

Several noteworthy findings can be seen in this figure. The factors in the two-factor solution resemble the well-documented “Highbrow” (or Sophisticated) and “Lowbrow” music-preference dimensions. The excerpts with high loadings on the “Sophisticated/aesthetic” factor were drawn mainly from classical, jazz, and world music, and this factor remained virtually unchanged through the three-, four-, and five-factor solutions. The excerpts with high loadings on the Lowbrow factor were predominantly country, heavy metal, and rap. In the three-factor solution, this factor split into subfactors that appear to differentiate music by its forcefulness or intensity. The “Intense/aggressive” factor comprised heavy metal, punk, and rock excerpts, and remained fully intact through the four- and five-factor solutions. The less intense factor comprised excerpts from the country, rock-n-roll (early rock, rockabilly), and pop genres; its country and rock-n-roll excerpts remained consistent through the four- and five-factor solutions, at which point we labeled the factor “Campestral/sincere.” In the four-factor solution, a “Mellow/relaxing” factor emerged that comprised predominantly pop, soft-rock, and soul/R&B excerpts. That factor remained in the five-factor solution, in which an “Urban/danceable” factor emerged that included mainly rap and electronica music.

Although the factors depicted in Figure 1 are clear and interpretable, some of them (e.g., Urban, Mellow) might be driven by demographic differences in gender and/or age. This is a particularly important issue for music-preference research because some music might appeal more or less to men than to women (e.g., punk and soul, respectively), or more or less to younger people than to older people (e.g., electronica and classic rock, respectively).

To test whether the music-preference structure was influenced by the demographics of the participants, we compared the factor structure based on the original preference ratings with the structure derived from residualized ratings, from which the effects of sex and age had been statistically removed. Specifically, we conducted a PCA with varimax rotation on the residualized ratings and specified a five-factor solution. The factor structure derived from the residualized ratings was virtually identical to the one derived from the original ratings, with factor congruence coefficients ranging from .99 (Urban) to over .999 (Sophisticated). Furthermore, the correlations between corresponding factor scores derived from the original and the residualized ratings revealed high convergence for all of the factors, ranging from .96 (Urban) to .99 (Mellow). These results indicate that even though there are significant sex and age differences in preferences for specific pieces of music, the factors underlying music preferences are invariant to sex and age effects. Table 1 provides the factor loadings for the five music-preference factors.
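The two steps above, removing sex and age from the ratings and comparing the resulting loadings with the originals, can be sketched as follows. This is a hedged illustration rather than the authors' procedure: it assumes that residualization means ordinary least-squares regression of each excerpt's ratings on an intercept, sex, and age (the text does not specify the model), and it computes Tucker's congruence coefficient on loading matrices whose factors have already been matched and sign-aligned. The ratings and loadings in the usage example are made up for illustration, and the function names are hypothetical.

```python
import numpy as np


def residualize(ratings, covariates):
    """Remove the linear effects of covariates (e.g., sex and age) from each excerpt's ratings."""
    design = np.column_stack([np.ones(len(ratings)), covariates])  # intercept + covariates
    coef, *_ = np.linalg.lstsq(design, ratings, rcond=None)
    return ratings - design @ coef


def tucker_congruence(a, b):
    """Tucker's congruence coefficient for each pair of matching columns of two loading matrices."""
    numerator = np.sum(a * b, axis=0)
    denominator = np.sqrt(np.sum(a ** 2, axis=0) * np.sum(b ** 2, axis=0))
    return numerator / denominator


# Hypothetical ratings that partly depend on sex (coded 0/1) and age.
rng = np.random.default_rng(4)
n = 300
sex = rng.integers(0, 2, n)
age = rng.uniform(18, 60, n)
ratings = rng.normal(5, 2, (n, 8)) + 0.5 * sex[:, None] - 0.03 * age[:, None]
residual = residualize(ratings, np.column_stack([sex, age]))

# Hypothetical two-factor loadings before and after residualizing.
original = np.array([[0.80, 0.05], [0.72, 0.10], [0.08, 0.77], [0.12, 0.70]])
revised = np.array([[0.78, 0.07], [0.75, 0.06], [0.10, 0.74], [0.09, 0.73]])
print(np.round(tucker_congruence(original, revised), 3))
```

Because the residuals are orthogonal to the intercept, sex, and age columns, they are uncorrelated with both covariates by construction; congruence values near 1 then indicate that removing those effects left the loading pattern essentially unchanged.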

Follow-up

Close inspection of the excerpts that loaded strongly on each factor indicated that most of those on the Sophisticated factor were recordings of instrumental jazz, classical, and world music, whereas the majority of the excerpts on the other factors included vocals. This confound obscures the meaning of the factors, particularly the Sophisticated factor, because it was not clear whether the factors reflect preferences for the musical characteristics shared by the excerpts on each factor or merely preferences for instrumental versus vocal music.

We addressed this issue by revising our music-preference measure to include a balance of instrumental and vocal excerpts for each of the music genres and subgenres. All 52 excerpts from the original measure were kept, and for 12 of the pieces with vocals, the revised measure included both an excerpt from a section with vocals and a second excerpt from a purely instrumental section of the same piece. The revised measure therefore comprised 64 musical excerpts (52 + 12), each approximately 15 seconds long. A total of 75 participants from the original sample volunteered to complete the revised music-preference survey without compensation.

If the five music-preference factors were not an artifact of confounding instrumental and vocal excerpts, the same five dimensions should emerge from the revised music-preference measure. Indeed, the same five factors were recovered in a PCA with varimax rotation, with a structure nearly identical to the one derived from the original excerpts. Correlations between the factor scores derived from the original and the revised excerpts revealed substantial convergence for all of the factors, ranging from .61 (Urban) to .82 (Sophisticated). These results indicate that the original factor structure was not an artifact of the unequal numbers of vocal excerpts across the factors. Furthermore, because the follow-up took place 5 months after the original study ended, these results also suggest that the music-preference factors are stable over time.

Summary

The findings from Study 1 and its follow-up provide substantial evidence for five music-preference factors. These five factors capture a broad range of musical styles and can be labeled MUSIC, for the Mellow, Urban, Sophisticated, Intense, and Campestral music-preference factors. Three of these factors (Sophisticated, Campestral, and Intense) are similar to factors reported previously (e.g., Delsing et al., 2008; Rentfrow & Gosling, 2003). On the other hand, previous studies have suggested that preferences for rap, soul, electronica, dance, and R&B music comprise one broad factor, whereas in the current study rap, electronica, and dance music form one factor (Urban) while soul and R&B music comprise another (Mellow). One likely explanation for this difference is that the present research examined a broader array of music genres and subgenres than did most previous research. Moreover, the results from the follow-up study five months later suggest that our music-preference dimensions are reasonably stable over time.

Taken together, the findings from this study are encouraging. However, a potential problem with the current work is that several of the excerpts in the music-preference measure were from pieces recorded by famous artists (e.g., Ludacris, Dean Martin, Oscar Peterson, Ace of Base, Social Distortion). This is potentially problematic because some of the excerpts were likely more familiar to some participants than to others, and several studies (e.g., Brickman & D’Amato, 1975; Dunn, in press) indicate that familiarity with a piece of music is positively related to liking it. Even if the particular pieces were unfamiliar, listeners may have had associations with, or memories of, these artists independent of the excerpts themselves. Therefore, it is necessary to confirm the music-preference structure using both artists and music that are unfamiliar to listeners.

Study 2: Are the Same Music-Preference Factors Recovered Using a Different Set of Excerpts from Pieces by Unknown Artists?

The results from Study 1 reveal an interpretable set of music-preference factors that resemble the factors reported in previous research. This is encouraging because it further supports the hypothesis that there is a robust and stable structure underlying music preferences. However, it is conceivable that the factors obtained in Study 1, although consistent with previous research, were a result of the specific pieces of music administered. If the five music-preference factors are robust, a similar set of factors should emerge from an entirely different selection of musical pieces. This is a stringent test, but a necessary one for evaluating the robustness of the MUSIC model.

Therefore, the aim of Study 2 was to investigate the generalizability of the music-preference factor structure across samples as well as musical stimuli. Specifically, an entirely new stimulus set was created that included only previously unreleased music by unknown, aspiring artists. Because none of the excerpts included in Study 1 were used in Study 2, recovering the same five music-preference factors would indicate that the structure is not merely an artifact of the particular pieces or artists used in Study 1, thereby providing strong support for the MUSIC model.

Methods

Participants and procedures

In the spring of 2008, advertisements were placed in several locations on the Internet (e.g., Craigslist.com) inviting people to participate in an Internet-based study. All those who volunteered and provided consent were directed to a website where they could complete a measure of music preferences. A total of 354 people chose to participate in the study. Of those who reported demographic information, 235 (66%) were female and 119 (34%) were male; 11 (3%) were African American, 52 (15%) were Asian, 266 (75%) were Caucasian, 15 (4%) were Hispanic, and 10 (3%) were of other ethnicities. The median age of the participants was 25. After completing the survey, participants received a $5 gift certificate to Amazon.com.

Music preference stimuli

The primary aim of Study 2 was to replicate the MUSIC model using a new set of unfamiliar musical pieces. To obtain such pieces, we purchased from Getty Images the copyright to several pieces of music that had never been released to the public. Getty Images is a commercial service that provides photographs, films, and music for the advertising and media industries. All of its materials are of professional grade in recording, production, and composition (indeed, they pass through many of the same filters and levels of evaluation that commercially released recordings do).

In the autumn of 2007, five expert judges searched the Getty database (http://www.Getty.com) for pieces of music to represent the same 26 genres and subgenres used in Study 1. The judges worked independently to identify exemplary pieces of music and then pooled their results to reach a consensus on the pieces that were the best prototypes for each category. We sought to obtain four pieces per category, but for a few categories (such as World Beat and Celtic) the judges could agree on only two or three pieces that fit the category well; hence, the resulting set comprised 94 excerpts rather than the intended 104 (26 × 4). A complete list of the pieces used is shown in Table 2.

Table 2.

Five Varimax-Rotated Principal Components Derived from Music Preference Ratings in Study 2

| Artist | Piece | Genre | I | II | III | IV | V |
|---|---|---|---|---|---|---|---|
| Ljova | Seltzer Do I Drink Too Much? | Avant-garde classical | .77 | .05 | .12 | .01 | .16 |
| Various Artists | La Trapera | Latin | .75 | .18 | .07 | .12 | .03 |
| Various Artists | Polka From Tving | Polka | .74 | .29 | −.01 | .11 | −.23 |
| The Evergreen Production Music Library | Braunschweig Polka | Polka | .73 | .31 | .05 | .16 | −.21 |
| Golden Bough | The Keel Laddie | Celtic | .71 | .31 | .05 | .13 | −.19 |
| Laurent Martin | Scriabin Etude Opus 65 No 3 | Avant-garde classical | .71 | .11 | .12 | −.03 | .12 |
| Moh Alileche | North Africa’s Destiny | World beat | .70 | .09 | .10 | .17 | .06 |
| Wei Li - Soprano Diva of the Orient | I Love You Snow of the North | World beat | .70 | .22 | −.01 | .14 | .11 |
| Erik Jekabson | Fantasy in G | Classical | .70 | −.02 | .16 | −.03 | .34 |
| Bruce Smith | Sonata A Major | Classical | .69 | .18 | −.03 | −.01 | .26 |
| Antonio Vivaldi | Concerto in C | Classical | .68 | .09 | .05 | −.05 | .27 |
| Claude Debussy | Debussy Livres II | Classical | .68 | .11 | .07 | .11 | .26 |
| Niklas Ahman | Turbulence | Avant-garde classical | .67 | .11 | .18 | .13 | .20 |
| Valentino Production Library | Jefferson’s March | Marching band | .67 | .41 | −.04 | .05 | −.11 |
| Valentino Production Library | Battle Cry of Freedom #2 | Marching band | .67 | .39 | −.02 | .15 | .02 |
| Various Artists | Quarryman’s Polka | Polka | .64 | .30 | .01 | .09 | −.24 |
| DNA | La Wally | Classical | .62 | .05 | .05 | −.03 | .01 |
| Valentino Production Library | Long May She Wave | Marching band | .62 | .42 | −.04 | .02 | −.12 |
| BadaBing Music Group | Hail to the Chief | Marching band | .61 | .44 | −.04 | .13 | −.13 |
| Ron Sunshine | Still Too Late | Traditional jazz | .59 | .04 | .01 | .20 | .33 |
| Anna Coogan & North 19 | The World is Waiting on You | Bluegrass | .59 | .44 | .02 | .05 | .06 |
| Twelve 20 Six | And What You Hear | Acid jazz | .59 | .14 | .28 | .26 | −.07 |
| BadaBing Music Group | Happy Hour | Adult contemporary | .58 | .19 | .02 | .25 | .15 |
| Daniel Nahmod | I Was Wrong | Traditional jazz | .55 | .21 | −.09 | .25 | .29 |
| Ezekiel Honig | Falling Down | Electronica | .54 | .12 | .11 | .30 | .07 |
| Ron Levy's Wild Kingdom | Memphis Mem’ries | R&B/soul | .54 | .08 | .02 | .34 | .29 |
| Jason Greenberg | Quest | World beat | .52 | .02 | .10 | .18 | .16 |
| Mamborama | Chocolate | Latin | .50 | .10 | .07 | .36 | .38 |
| Mamborama | Night of the Living Mambo | Latin | .47 | .05 | .05 | .34 | .24 |
| The Tossers | With the North Wind | Celtic | .44 | .33 | .13 | .05 | .11 |
| Linn Brown | Never Mind | Soft rock | .42 | .41 | .02 | −.02 | .35 |
| Lisa McCormick | Fernando Esta Feliz | Latin | .38 | .17 | .08 | .32 | .30 |
| Paul Serrato & Co. | Who Are You? | Traditional jazz | .38 | −.04 | .00 | .13 | .16 |
| Michelle Owens | Sweet Pleasure | Quiet storm | .38 | .09 | .11 | .30 | .38 |
| James E. Burns | I’m Already Over You | New country | .15 | .78 | .01 | .13 | .06 |
| Bob Delevante | Penny Black | New country | .20 | .75 | .03 | .16 | .11 |
| Babe Gurr | Newsreel Paranoia | Bluegrass | .23 | .73 | .08 | .18 | −.09 |
| Five Foot Nine | Lana Marie | Country rock | .19 | .72 | −.07 | .11 | .19 |
| Carey Sims | Praying For Time | Mainstream country | .04 | .72 | .07 | .15 | .29 |
| Jono Fosh | Lets Love | Adult contemporary | .28 | .71 | .08 | .20 | .11 |
| Babe Gurr | Hard to Get Over Me | Mainstream country | .02 | .69 | .01 | .07 | .03 |
| Laura Hawthorne | Famous Right Where I Am | Mainstream country | −.09 | .66 | .07 | .17 | .25 |
| Angela Motter | Mama I’m Afraid to Go There | Bluegrass | .25 | .63 | .12 | .07 | .12 |
| Anna Coogan & North 19 | All I Can Give to You | Bluegrass | .31 | .59 | .03 | .04 | .34 |
| Brad Hatfield | Breakup Breakdown | Country rock | .11 | .58 | .19 | .19 | .36 |
| Diana Jones | My Remembrance of You | Bluegrass | .44 | .58 | .00 | .05 | −.02 |
| Hillbilly Hellcats | That’s Not Rockabilly | Rock-n-roll | .39 | .55 | .15 | .18 | −.14 |
| Carey Sims | Christmas Eve | New country | .08 | .55 | .08 | .19 | .49 |
| Greazy Meal | Grieve | R&B/soul | .30 | .54 | .15 | .13 | .09 |
| Curtis | Carrots and Grapes | Rock-n-roll | .29 | .54 | .18 | .22 | .01 |
| Mark Erelli | Passing Through | Country rock | .33 | .52 | −.04 | −.04 | .23 |
| Doug Astrop | Once in a Lifetime | Adult contemporary | .27 | .49 | −.03 | .29 | .34 |
| Ali Handal | Sweet Scene | Soft rock | .37 | .47 | −.03 | .05 | .37 |
| Epic Hero | Angel | Alternative rock | .29 | .39 | .15 | .07 | .32 |
| Travis Abercrombie | Let Me In | Alternative rock | −.18 | .39 | .33 | .07 | .39 |
| Squint | Michigan | Punk | .09 | .03 | .83 | .06 | .03 |
| The Tomatoes | Johnny Fly | Classic rock | .03 | .04 | .80 | .05 | .14 |
| The Stand In | Frequency of a Heartbeat | Punk | .01 | −.01 | .80 | .12 | .04 |
| Five Finger Death Punch | Death Before Dishonor | Heavy metal | .07 | −.04 | .77 | .18 | .09 |
| Straight Outta Junior High | Over Now | Punk | −.02 | .10 | .76 | .01 | .02 |
| Five Finger Death Punch | Salvation | Heavy metal | .08 | −.07 | .76 | .11 | −.08 |
| Bankrupt | Face the Failure | Punk | .14 | .06 | .76 | .15 | −.15 |
| Cougars | Dick Dater | Classic rock | .07 | .08 | .76 | .14 | .03 |
| Dawn Over Zero | Out of Lies | Heavy metal | .18 | −.14 | .75 | .15 | −.13 |
| The Peasants | Girlfriend | Classic rock | −.12 | .09 | .73 | −.03 | .09 |
| Exit 303 | Falling Down 2 | Classic rock | .04 | .08 | .72 | .09 | .13 |
| Tiff Jimber | Prove It To Me | Classic rock | −.08 | .20 | .68 | .05 | .31 |
| Human Signals | Oh Thumb! | Classic rock | −.01 | .16 | .68 | .17 | .09 |
| Five Finger Death Punch | White Knuckles | Heavy metal | .18 | −.07 | .63 | .17 | −.19 |
| Human Signals | Jack Buddy | Classic rock | .24 | .15 | .59 | .07 | .10 |
| Phaedra | Feed Your Head | Power pop | .18 | .22 | .42 | .26 | .35 |
| Ciph | Brooklyn Swagger | Rap | .04 | .07 | .15 | .68 | −.11 |
| Sammy Smash | Get the Party Started | Rap | −.07 | .09 | .15 | .65 | −.19 |
| Mykill Miers | Immaculate | Rap | .11 | .01 | .02 | .64 | .18 |
| Robert LaRow | Sexy | Europop | −.03 | .11 | .10 | .63 | −.07 |
| DJ Come Of Age | Thankful | R&B/soul | .13 | .26 | −.02 | .60 | .14 |
| Preston Middleton | Latin 4 | Quiet storm | .33 | .17 | −.01 | .58 | .20 |
| Snake & Butch | Love is Good | Europop | .23 | .21 | .26 | .56 | .02 |
| The Cruxshadows | Go Away | Europop | .18 | .04 | .28 | .56 | .09 |
| AB+ | Recess | Electronica | .15 | .19 | −.02 | .55 | .34 |
| Magic Dingus Box | The Way It Goes | Electronica | .15 | .23 | .08 | .52 | .30 |
| Grafenberg All-Stars | Sesame Hood | Rap | .14 | .26 | .31 | .51 | −.28 |
| Tony Lewis | Skyhigh | R&B/soul | .04 | .25 | .27 | .51 | .14 |
| The Alpha Conspiracy | Close | Europop | .36 | .07 | .24 | .50 | .21 |
| Benjamin Chan | MATRIX | Electronica | .24 | .01 | .31 | .49 | .07 |
| Michael Davis | Big City | Traditional jazz | .41 | .12 | .16 | .43 | .29 |
| Gogo Lab | The Escape | Acid jazz | .26 | .06 | .11 | .31 | .14 |
| Walter Rodriguez | Safety | Electronica | .18 | .17 | .02 | .38 | .60 |
| Frank Josephs | Mountain Trek | Quiet storm | .21 | .39 | −.07 | .30 | .57 |
| Taryn Murphy | Love Along the Way | Soft rock | .13 | .28 | .15 | .09 | .55 |
| Bruce Smith | Children of Spring | Adult contemporary | .40 | .39 | −.07 | .14 | .50 |
| Human Signals | Birth | Soft rock | .28 | .41 | .03 | .20 | .47 |
| Lisa McCormick | Let’s Love | Adult contemporary | .37 | .34 | .08 | −.08 | .46 |
| Language Room | She Walks | Soft rock | .08 | .31 | .17 | −.09 | .42 |

Note. Each piece’s largest factor loading is in italics. Factor loadings ≥ |.40| are in bold typeface.

As in Study 1, preferences were assessed by asking participants to indicate their degree of liking for each of the 94 musical excerpts on a nine-point rating scale with endpoints at 1 (Not at all) and 9 (Very much).

Results and Discussion

As in Study 1, multiple criteria were used to decide how many factors to retain. A PCA with varimax rotation yielded a large first factor that accounted for 26% of the variance; parallel analysis of random data suggested that the first seven eigenvalues were greater than chance; and the scree plot suggested an “elbow” at roughly six factors. PCAs with varimax rotation were then performed for one-factor through six-factor solutions. One of the factors in the six-factor solution was comparatively small and included several excerpts with large secondary loadings. Based on those findings, we elected to retain the first five music-preference factors.

Examination of factor invariance across extraction methods again revealed very high convergence across the PCA, PA, and ML extraction methods, with correlations averaging above .999 between the PCA and PA factors, .99 between the PCA and ML factors, and over .999 between the PA and ML factors. Given that the factors were equivalent across extraction methods and that we presented the loadings from the PCAs in Study 1, we again report solutions derived from PCAs in Study 2.

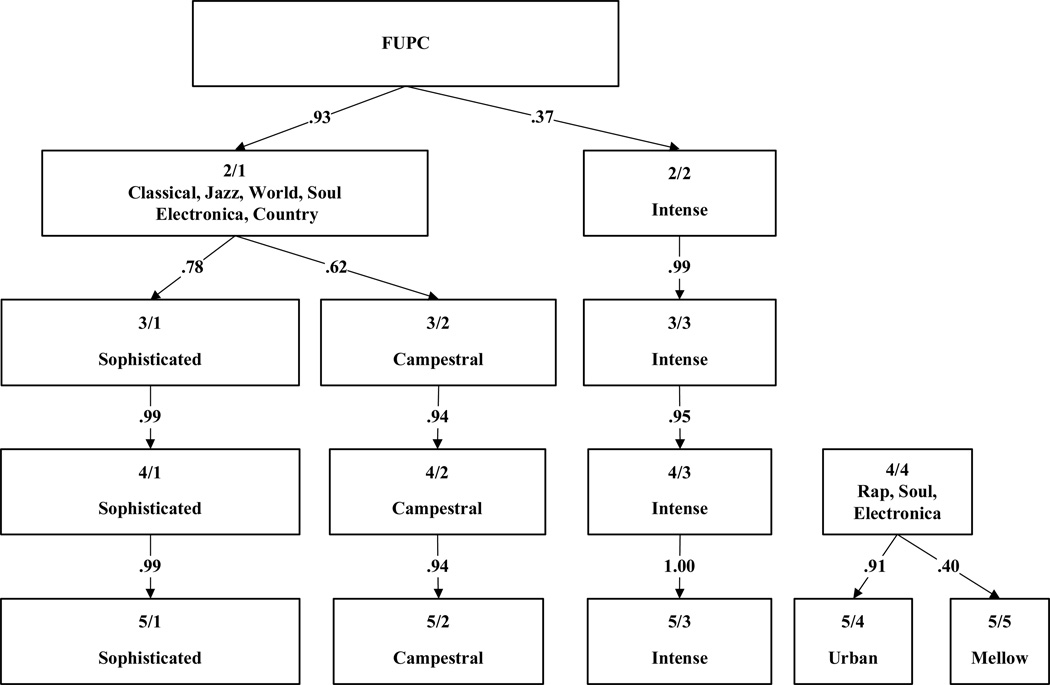

The final five-factor solutions were virtually identical in Studies 1 and 2, although inspection of the one- through five-factor solutions revealed a slightly different order of emergence in the two studies. As can be seen in Figure 2, the first factor in the two-factor solution was difficult to interpret because it comprised a wide array of musical styles, from classical and soul to electronica and country. In contrast, the second factor clearly resembled the Intense factor found in Study 1 and remained virtually unchanged through the three-, four-, and five-factor solutions. In the three-factor solution, a factor resembling the Sophisticated dimension emerged, comprising classical, jazz, and world music excerpts; this factor remained in the four- and five-factor solutions. A factor resembling Campestral also emerged in the three-factor solution and was composed mainly of country and rock-n-roll excerpts; it emerged fully in the four- and five-factor solutions. In the four-factor solution, a factor comprising primarily rap, electronica, and soul/R&B excerpts emerged. In the five-factor solution, this factor split into two factors closely resembling the Urban and Mellow dimensions: the Urban factor included mainly rap and electronica music, and the Mellow factor included predominantly pop, soft-rock, and soul/R&B excerpts. The music excerpts and their loadings on each of the five factors are presented in Table 2.

Figure 2.

Varimax-rotated principal components derived from preference ratings for 94 musical excerpts by unknown artists in Study 2.

The five music-preference factors that emerged in Study 2 replicate the factors identified in Study 1. This is a notable finding given that entirely different excerpts, from different pieces by different artists, were used in the two studies. However, Studies 1 and 2 share three characteristics that could limit the generalizability of the results. First, both studies were conducted over the Internet. Although there is evidence that results obtained from Internet-based surveys are similar to those based on paper-and-pencil surveys (Gosling, Vazire, Srivastava, & John, 2004), that evidence concerns text-based items, whereas the stimuli in the present research were musical excerpts. Moreover, the contexts in which participants completed the survey almost certainly varied, and these testing conditions could have affected participants’ ratings. Second, both studies relied on samples of self-selected participants. It is reasonable to suppose that people who responded to online advertisements about a study on the psychology of music might be more interested in music, or differ in other preferences, compared with people who chose not to participate or who did not visit the websites where the advertisements were posted. Third, the music-preference question used in both studies was potentially ambiguous. For each excerpt, participants were asked, “How much do you like this music?” The question was intended to assess participants’ liking for the style of music that the excerpt represented, but some participants may have reported their liking for the excerpt itself. Given these limitations, it is important to know whether the results from Studies 1 and 2 generalize to other samples and methods.

Study 3: How Robust Are the Music-Preference Factors?

Study 3 was designed to investigate the generalizability of the music-preference factors across samples and methods. A subset of the music excerpts used in Study 2 was administered to a sample of university students in person. Participants listened to the excerpts in a classroom setting. For each excerpt, half of the participants rated how much they liked that excerpt, and the other half rated how much they liked the genre that the excerpt represented.

Methods

Participants

In the fall of 2008, students enrolled in introductory psychology at the University of Texas at Austin were invited to participate in an in-class survey of music preferences. A total of 817 students chose to participate in the study. Of those who reported their sex, 488 (62%) were female and 306 (38%) were male; of those who reported their ethnicity, 40 (5%) were African American, 144 (18%) were Asian, 397 (51%) were Caucasian, 171 (22%) were Hispanic, and 28 (4%) were of other ethnicities. The median age of participants was 18.

Procedures

As part of the curriculum for two introductory psychology courses taught by the same pair of instructors, surveys, questionnaires, and exercises pertaining to the lecture topics were periodically administered to students. A survey about music preferences was administered as part of the lecture unit on personality and individual differences. Students were invited to participate in a study of music preferences, which involved listening to 25 music excerpts and reporting their degree of liking for each one (a complete list of the pieces is shown in Table 3). For each excerpt, participants in one class were asked to rate how much they liked the excerpt, whereas participants in the other class were asked to rate how much they liked the genre of the music. Each excerpt was played in full, once.

Table 3.

Five Varimax-Rotated Principal Components Derived from Music Preference Ratings in Study 3

| Artist | Piece | Genre | I | II | III | IV | V |
|---|---|---|---|---|---|---|---|
| Ljova | Seltzer, do I Drink Too Much? | Avant-garde classical | .82 | −.02 | −.01 | .10 | −.03 |
| Bruce Smith | Sonata A Major | Classical | .72 | −.03 | −.09 | .23 | .10 |
| Paul Serrato & Co. | Who are You? | Traditional jazz | .69 | .05 | .19 | −.12 | −.02 |
| Various Artists | La Trapera | Latin | .68 | .00 | .05 | −.03 | .04 |
| DNA | La Wally | Classical | .68 | .07 | −.11 | −.03 | .10 |
| Daniel Nahmod | I Was Wrong | Traditional jazz | .64 | −.06 | .15 | .18 | .00 |
| Lisa McCormick | Let’s Love | Adult contemporary | .54 | .10 | .04 | .33 | .01 |
| Five Finger Death Punch | Death Before Dishonor | Heavy metal | −.06 | .84 | .08 | −.01 | −.02 |
| Bankrupt | Face the Failure | Punk | .02 | .77 | .03 | .03 | .05 |
| Five Finger Death Punch | White Knuckles | Heavy metal | .03 | .76 | .10 | −.13 | −.01 |
| The Tomatoes | Johnny Fly | Classic rock | .12 | .73 | −.06 | .02 | .14 |
| Exit 303 | Falling Down 2 | Classic rock | −.05 | .72 | .00 | .23 | .06 |
| Mykill Miers | Immaculate | Rap | .05 | .07 | .78 | −.07 | −.05 |
| Sammy Smash | Get the Party Started | Rap | −.20 | .06 | .74 | .03 | .09 |
| DJ Come Of Age | Thankful | R&B/soul | −.09 | −.12 | .68 | .19 | .04 |
| Robert LaRow | Sexy | Europop | .15 | −.03 | .65 | −.04 | .17 |
| Walter Rodriguez | Safety | Electronica | .12 | .06 | .62 | .25 | −.26 |
| Magic Dingus Box | The Way It Goes | Electronica | .25 | .22 | .51 | .03 | −.10 |
| Language Room | She Walks | Soft rock | −.01 | .18 | .10 | .74 | −.01 |
| Ali Handal | Sweet Scene | Soft rock | .23 | −.01 | .03 | .73 | .06 |
| Bruce Smith | Children of Spring | Adult contemporary | .35 | −.09 | .11 | .65 | .06 |
| Hillbilly Hellcats | That’s Not Rockabilly | Rock-n-roll | .17 | .09 | −.02 | −.09 | .74 |
| Curtis | Carrots and Grapes | Rock-n-roll | .22 | .16 | −.02 | −.03 | .70 |
| James E. Burns | I’m Already Over You | New country | −.19 | −.06 | .07 | .44 | .67 |
| Carey Sims | Praying for Time | Mainstream country | −.27 | .00 | .06 | .50 | .57 |

Note. Each piece’s largest factor loading is in italics. Factor loadings ≥ |.40| are in bold typeface.

Music-preference measure

Because of time constraints and concerns about participant fatigue, a shortened music-preference measure was used in Study 3: a subset of 25 of the musical excerpts from Study 2 served as stimuli. Rather than selecting only the excerpts with the highest factor loadings in Study 2, we tried to select excerpts that captured the breadth of each factor. Participants indicated how much they liked each of the 25 excerpts on a five-point rating scale with endpoints at 1 (Extremely dislike) and 5 (Extremely like). The excerpts are listed in Table 3.

Results and Discussion

We first examined whether the music-preference factor structure was equivalent across the two test formats (i.e., ratings of excerpt preferences vs. ratings of genre preferences). PCAs with varimax rotation yielded first factors that accounted for 17% and 18% of the variance for excerpt preferences and genre preferences, respectively. For both groups, parallel analyses based on randomly generated data suggested that the first five eigenvalues exceeded chance levels, and the scree plots suggested “elbows” at roughly six factors. We therefore performed PCAs with varimax rotation for one- through six-factor solutions in both groups. Factor congruence between the two groups was high for the five-factor solution (mean factor congruence = .97), suggesting that the factor structures were equivalent across the two test formats. On the basis of these findings, we combined the ratings from both groups.
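The two checks mentioned above, parallel analysis and factor congruence, might be sketched in Python with NumPy as follows. This is a minimal illustration, not the authors' code, and it rests on several assumptions. `ratings` is taken to be a participants × items array. The chance eigenvalues come from standard normal data of the same shape, with the 95th percentile as the threshold; this is one common variant of Horn's procedure. The congruence function assumes the two loading matrices have already been matched column by column and aligned in sign. The function names are hypothetical.

```python
import numpy as np


def parallel_analysis(ratings, n_iter=100, percentile=95, seed=0):
    """Count leading PCA eigenvalues that exceed chance (Horn-style sketch).

    ratings: (n_participants, n_items) array. Random normal data of the same
    shape supply the chance eigenvalues; the percentile cutoff is an assumption.
    """
    rng = np.random.default_rng(seed)
    n_obs, n_items = ratings.shape
    # Observed eigenvalues of the item correlation matrix, largest first.
    observed = np.linalg.eigvalsh(np.corrcoef(ratings, rowvar=False))[::-1]
    chance = np.empty((n_iter, n_items))
    for i in range(n_iter):
        noise = rng.standard_normal((n_obs, n_items))
        chance[i] = np.linalg.eigvalsh(np.corrcoef(noise, rowvar=False))[::-1]
    threshold = np.percentile(chance, percentile, axis=0)
    exceeds = observed > threshold
    # Stop counting at the first eigenvalue that does not beat chance.
    return n_items if exceeds.all() else int(np.argmin(exceeds))


def tucker_congruence(loadings_a, loadings_b):
    """Tucker's congruence coefficient for each pair of matched columns."""
    numerator = (loadings_a * loadings_b).sum(axis=0)
    denominator = np.sqrt((loadings_a ** 2).sum(axis=0) * (loadings_b ** 2).sum(axis=0))
    return numerator / denominator
```

With loadings from the excerpt-rating and genre-rating groups, `tucker_congruence(loadings_excerpt, loadings_genre).mean()` would yield a single mean congruence value of the kind reported above.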

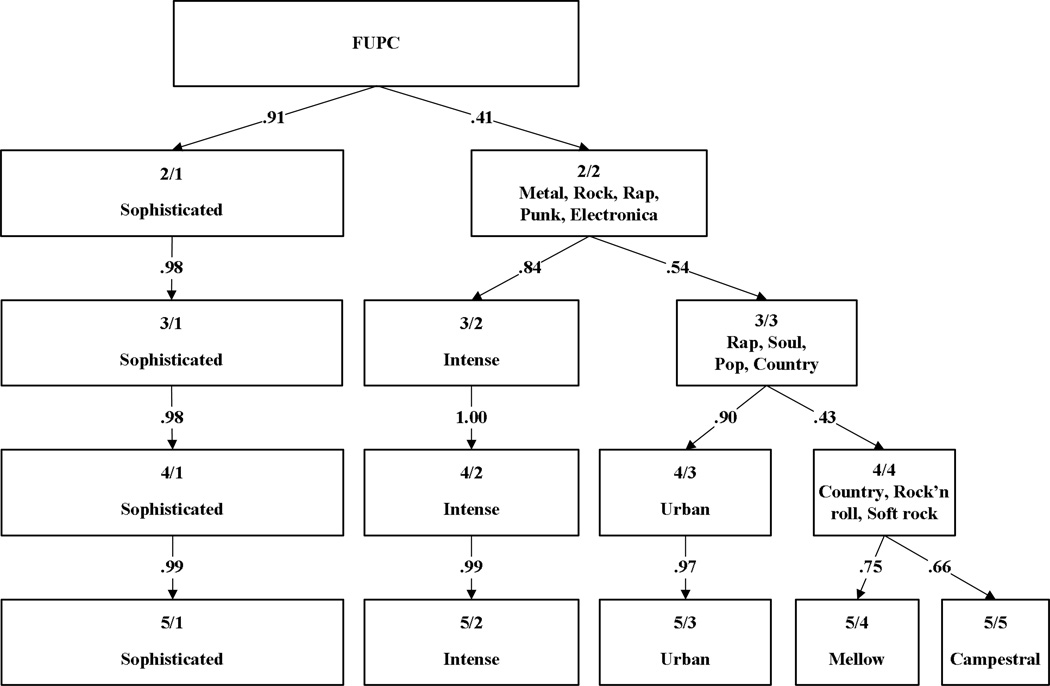

We next conducted a PCA with varimax rotation on the full sample, specifying a five-factor solution. As can be seen in Figure 3 and Table 3, the excerpts loading on each factor clearly resemble those observed in the previous studies. The first factor includes primarily classical, jazz, and world music excerpts and clearly resembles the Sophisticated preference dimension. The second factor replicates the Intense factor, as it is composed entirely of heavy metal, rock, and punk excerpts. The third factor reflects the Urban music-preference factor and includes mainly rap and electronica excerpts. The fourth factor is composed predominantly of soft rock and adult contemporary excerpts and resembles the Mellow dimension. The fifth factor comprises country and rock-n-roll excerpts, thus clearly corresponding to the Campestral factor.

Figure 3.

Varimax-rotated principal components derived from preference ratings for 25 musical excerpts in Study 3.
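A five-factor PCA with varimax rotation, like the one run on the full Study 3 sample, might be sketched as follows. This illustrative NumPy implementation of Kaiser's varimax criterion uses the common SVD-based iteration. It is not the software the authors used. `ratings` is assumed to be a complete participants × excerpts matrix, and the helper names are hypothetical.

```python
import numpy as np


def pca_loadings(ratings, n_components):
    """Unrotated principal-component loadings from the correlation matrix."""
    corr = np.corrcoef(ratings, rowvar=False)
    eigvals, eigvecs = np.linalg.eigh(corr)
    order = np.argsort(eigvals)[::-1][:n_components]
    # Scale unit eigenvectors by sqrt(eigenvalue) so entries are item-component correlations.
    return eigvecs[:, order] * np.sqrt(eigvals[order])


def varimax(loadings, max_iter=100, tol=1e-6):
    """Orthogonally rotate loadings to maximize the varimax criterion."""
    n_items, n_factors = loadings.shape
    rotation = np.eye(n_factors)
    criterion = 0.0
    for _ in range(max_iter):
        rotated = loadings @ rotation
        # Gradient of the varimax criterion with respect to the rotation.
        target = rotated ** 3 - rotated @ np.diag((rotated ** 2).sum(axis=0)) / n_items
        u, s, vt = np.linalg.svd(loadings.T @ target)
        rotation = u @ vt
        new_criterion = s.sum()
        if new_criterion < criterion * (1 + tol):
            break
        criterion = new_criterion
    return loadings @ rotation


def primary_component(rotated):
    """Index of each item's largest absolute loading (cf. the italics in Table 3)."""
    return np.abs(rotated).argmax(axis=1)
```

A call such as `primary_component(varimax(pca_loadings(ratings, 5)))` would assign each excerpt to the component on which it loads most strongly. Because the rotation is orthogonal, each excerpt's communality (the sum of its squared loadings) is unchanged by rotation.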

Taken together, the results from all three studies provide compelling evidence that the five MUSIC factors are quite robust: The same factors emerged in three independent studies that used different sampling strategies, methods, musical content, participants, and test formats. Based on these findings, it seems reasonable to conclude that the MUSIC dimensions reflect individual differences in preferences for broad styles of music that share common properties. But what are those properties? What do the styles of music that comprise each music-preference dimension have in common?

Study 4: How Should We Interpret the Music-Preference Factors?

The factor loadings reported in Tables 1, 2, and 3 might suggest that the factors can be characterized in terms of musical genres. For example, most of the excerpts with high loadings on the Sophisticated dimension fall within the classical, jazz, or world music genres, and most of those with high loadings on the Intense dimension fall within the rock, heavy metal, or punk genres. However, excerpts from some genres load on more than one music-preference dimension. For instance, jazz is represented on both the Sophisticated and the Urban factors, and electronica is represented on the Sophisticated, Urban, and Mellow factors. Thus, the preference factors seem to capture something more than preferences for genres.

Music varies on a range of features, from tempo, instrumentation, and density to psychological characteristics such as sadness, enthusiasm, and aggression. Although genres are defined in part by an emphasis on certain musical attributes, it is conceivable that individuals have preferences for particular attributes regardless of genre. For example, some people might prefer sad music to joyful music, just as others might prefer instrumental music to vocal music. It therefore seems reasonable to ask whether the five MUSIC factors reflect preferences for attributes in addition to genres. A complete understanding of music preferences requires that we go beyond genre and examine more specific features of music.

The objective of Study 4 was to examine those variables that contribute to the structure of musical preferences. Are the factors best understood as simply composites of music from similar genres? Or are the factors the result of preferences for particular musical attributes? To investigate those questions, we analyzed the independent and combined effects of genre preferences and music-related attributes on the MUSIC model.

Method

Distinguishing the effects of genre preferences from those of musical attributes required that we code the music pieces investigated in the previous studies for their attributes. To cover many aspects of music, we developed a multi-step procedure for generating descriptors of qualities specific to music (e.g., loud, fast) as well as psychological characteristics of music (e.g., sad, inspiring).

Music attributes

Creating the list of attributes involved two steps. First, we generated sets of music-specific and psychological attributes on which pieces could be judged, starting with the 25 music-descriptive adjectives reported by Rentfrow and Gosling (2003). Those attributes were derived from a multi-step procedure in which participants independently generated lists of terms that could be used to describe music (for details, see Rentfrow and Gosling, 2003). Some of the attributes in that set were highly related (e.g., depressing/sad, cheerful/happy) or had low reliabilities (e.g., rhythmic, clever), so we eliminated redundant attributes (|r| > .70) and unreliable attributes (coefficient alpha < .70).
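The screening rule just described can be expressed compactly. The sketch below is hypothetical in two respects. The text does not say which member of a redundant pair was dropped, so the sketch assumes the more reliable attribute is kept. It also assumes the reliability screen is applied before the redundancy screen. The example alphas and correlations are invented for illustration only.

```python
def screen_attributes(names, alphas, corr, min_alpha=0.70, max_abs_r=0.70):
    """Return attributes that pass both the reliability and redundancy screens.

    names: attribute labels; alphas: coefficient alpha per attribute;
    corr: square matrix (nested lists or array) of inter-attribute correlations.
    """
    # Reliability screen: drop attributes whose alpha falls below the cutoff.
    reliable = [i for i, a in enumerate(alphas) if a >= min_alpha]
    # Assumption: when two attributes are redundant, keep the more reliable one,
    # so candidates are visited from highest to lowest alpha.
    reliable.sort(key=lambda i: alphas[i], reverse=True)
    selected = []
    for i in reliable:
        if all(abs(corr[i][j]) <= max_abs_r for j in selected):
            selected.append(i)
    # Report survivors in their original order.
    return [names[i] for i in sorted(selected)]


# Invented values for illustration only.
names = ["sad", "depressing", "rhythmic", "loud"]
alphas = [0.85, 0.80, 0.60, 0.90]
corr = [
    [1.00, 0.82, 0.20, 0.10],
    [0.82, 1.00, 0.15, 0.05],
    [0.20, 0.15, 1.00, 0.30],
    [0.10, 0.05, 0.30, 1.00],
]
print(screen_attributes(names, alphas, corr))  # ['sad', 'loud']
```

Here "rhythmic" fails the reliability screen. "Depressing" is dropped as redundant with the more reliable "sad."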

To increase the range of music attributes, two expert judges supplemented the initial list with a new set of music-descriptive adjectives. Next, two different judges independently evaluated the extent to which each descriptor could be used to characterize various aspects of music. Specifically, the judges were instructed to eliminate attributes that could not easily be used to describe a piece of music and then to rank-order the remaining attributes by importance. This procedure yielded seven music-specific attributes (dense, distorted, electric, fast, instrumental, loud, and percussive) and seven psychologically oriented attributes (aggressive, complex, inspiring, intelligent, relaxing, romantic, and sad).

Forty judges with no formal music training independently rated the 146 musical excerpts used in Studies 1 and 2 (i.e., the 52 excerpts used in Study 1 and the 94 excerpts used in Study 2) on each of the 14 attributes. Specifically, 18 judges coded the excerpts used in Study 1 and 30 judges coded those used in Study 2. To reduce the impact of fatigue and order effects, each judge coded only a subset of the excerpts, and no judge rated all of them (the number of judges per excerpt ranged from 6 to 18, with a mean of 10). Judges were unaware of the purpose of the study and were simply instructed to listen to each excerpt in its entirety and then rate it on each of the music attributes, using a 9-point scale with endpoints at 1 (Extremely uncharacteristic) and 9 (Extremely characteristic). Because our analyses in Studies 1–3 were based on the music preferences of ordinary listeners, in this study we were interested in ordinary listeners’ impressions of music rather than those of trained musicians. Thus, judges were given no specific instructions about what information to use in making their judgments.
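Because each judge rated only a subset of the excerpts, the per-excerpt attribute scores used in later analyses are averages over however many judges heard each excerpt. The minimal NumPy sketch below makes two hypothetical assumptions. Ratings are stored as a judges × excerpts × attributes array, with `np.nan` marking excerpts a judge did not rate. A judge who heard an excerpt rated it on all 14 attributes.

```python
import numpy as np


def mean_attribute_ratings(ratings):
    """Average 1-9 attribute ratings over the judges who rated each excerpt.

    ratings: (n_judges, n_excerpts, n_attributes) array with np.nan where a
    judge did not hear an excerpt. Returns (means, judges_per_excerpt), where
    means has shape (n_excerpts, n_attributes).
    """
    # Count raters per excerpt from the first attribute, assuming a judge who
    # heard an excerpt rated it on every attribute.
    judges_per_excerpt = (~np.isnan(ratings[:, :, 0])).sum(axis=0)
    means = np.nanmean(ratings, axis=0)
    return means, judges_per_excerpt


# Three hypothetical judges, two excerpts, two attributes.
ratings = np.full((3, 2, 2), np.nan)
ratings[0, 0] = [1, 9]
ratings[0, 1] = [5, 5]
ratings[1, 0] = [3, 7]
ratings[2, 0] = [2, 8]
ratings[2, 1] = [7, 3]
means, counts = mean_attribute_ratings(ratings)
```

In this toy example, the first excerpt is averaged over three judges and the second over two.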

Results and Discussion

Reliability

We computed coefficient alphas to assess the reliability of the judges’ attribute ratings. Analyses across all the excerpts revealed high attribute agreement for the music-specific attributes (mean alpha = .93), with the highest agreement for Instrumental (mean alpha = .99) and the lowest agreement for Distorted (mean alpha = .81). Attribute agreement was also high for the psychologically oriented attributes (mean alpha = .83), with the highest agreement for Aggressive (mean alpha = .93) and the lowest agreement for Inspiring (mean alpha = .68). These results suggest that judges perceived similar qualities in the music and generally agreed about the rank ordering of the excerpts on each of the attributes.
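Interrater reliability of this kind treats judges as the "items" and excerpts as the "cases" in the usual coefficient-alpha formula, alpha = k/(k − 1) × (1 − sum of judge variances / variance of the summed ratings), where k is the number of judges. The sketch below assumes a complete excerpts × judges matrix for one attribute, such as a block of excerpts that the same judges all rated. The text does not describe how the incomplete judge-by-excerpt design was handled, so this is illustrative only.

```python
import numpy as np


def cronbach_alpha(scores):
    """Coefficient alpha for an (n_excerpts, n_judges) matrix of ratings.

    Each column is one judge's ratings of the same excerpts on one attribute.
    """
    scores = np.asarray(scores, dtype=float)
    n_judges = scores.shape[1]
    judge_variances = scores.var(axis=0, ddof=1)
    total_variance = scores.sum(axis=1).var(ddof=1)
    return n_judges / (n_judges - 1) * (1 - judge_variances.sum() / total_variance)


# Two hypothetical judges rating three excerpts on one attribute.
print(cronbach_alpha([[1, 1], [2, 3], [3, 2]]))  # approximately 0.667
```

Judges who order the excerpts identically push alpha toward 1. Disagreement about the rank ordering lowers it, which is why alpha serves as an index of attribute agreement here.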

Correlations between music-preference factors and musical attributes and genres

To learn more about the nature of the music-preference factors, we examined the musical attributes and genres of the excerpts used in Studies 1 and 2. Specifically, using musical excerpts as the unit of analysis, we correlated each excerpt’s loadings on the MUSIC factors with its mean ratings on the music-specific and emotion-oriented attributes and with its genre. These analyses shed light on the broad and specific qualities that characterize each of the MUSIC factors.
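With excerpts as the unit of analysis, each cell of Table 4 is a Pearson correlation, computed across excerpts, between one factor's loadings and one attribute (or genre) column. The vectorized NumPy sketch below rests on two assumptions. Loadings and attribute means are aligned row by row by excerpt. Genre membership is coded 0/1, in which case the correlation is a point-biserial r.

```python
import numpy as np


def loading_attribute_correlations(loadings, attributes):
    """Pearson r between every attribute column and every factor column.

    loadings: (n_excerpts, n_factors) factor loadings of each excerpt.
    attributes: (n_excerpts, n_attributes) mean judge ratings or 0/1 genre codes.
    Returns an (n_attributes, n_factors) matrix, laid out like Table 4.
    """
    loadings = np.asarray(loadings, dtype=float)
    attributes = np.asarray(attributes, dtype=float)
    # Standardize with population SDs so the scaled cross-product equals r.
    z_loadings = (loadings - loadings.mean(axis=0)) / loadings.std(axis=0)
    z_attributes = (attributes - attributes.mean(axis=0)) / attributes.std(axis=0)
    return z_attributes.T @ z_loadings / loadings.shape[0]
```

A single matrix product yields the full attributes × factors grid at once. Each entry matches what `np.corrcoef` would return for the corresponding pair of columns.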

As can be seen in Table 4, the MUSIC factors were related to several of the attributes and genres. The first column shows the results for the Mellow factor. Musically, the excerpts with high loadings on the Mellow factor were perceived as slow, quiet, and not distorted. Emotionally, they were perceived as romantic, relaxing, sad, and not aggressive, and as somewhat simple but intelligent. Mellow was also associated with the soft rock, R&B/soul, quiet storm, and adult contemporary genres. As can be seen in the second column, the excerpts on the Urban factor were perceived as percussive, electric, and not sad, and Urban was primarily related to the rap, electronica, Latin, acid jazz, and Euro pop styles of music. The third column reveals several associations between the Sophisticated factor and the attributes. Musically, the Sophisticated excerpts were perceived as instrumental and not electric, percussive, distorted, or loud; emotionally, they were perceived as intelligent, inspiring, complex, relaxing, romantic, and not aggressive. The genres most strongly related to Sophisticated were classical, marching band, avant-garde classical, polka, world beat, traditional jazz, and Celtic. As shown in the fourth column, Intense music was perceived as distorted, loud, electric, percussive, fast, and dense, and as aggressive rather than relaxing, romantic, intelligent, or inspiring. The classic rock, punk, heavy metal, and power pop genres had the strongest relations with Intense. Finally, as can be seen in the fifth column, Campestral music was perceived as not distorted, instrumental, loud, electric, or fast. In terms of the emotional attributes, the Campestral excerpts were perceived as somewhat romantic, relaxing, and sad, and as not aggressive, complex, or especially intelligent. The musical styles most strongly associated with the Campestral factor were, unsurprisingly, subgenres of country music.

Table 4.

Correlations Between Music-Preference Factors and Musical Attributes and Genres.

| | Mellow | Urban | Sophisticated | Intense | Campestral |
|---|---|---|---|---|---|
| *Music-specific attributes* | | | | | |
| Dense | −.02 | −.01 | −.08 | .22* | −.07 |
| Distorted | −.16* | .09 | −.42* | .67* | −.31* |
| Electric | −.05 | .32* | −.66* | .54* | −.25* |
| Fast | −.43* | .08 | −.07 | .41* | −.22* |
| Instrumental | .05 | .04 | .30* | .05 | −.31* |
| Loud | −.38* | −.03 | −.27* | .64* | −.26* |
| Percussive | −.11 | .17* | −.53* | .49* | −.11 |
| *Emotion-oriented attributes* | | | | | |
| Aggressive | −.47* | .08 | −.22* | .66* | −.48* |
| Complex | −.18* | .08 | .34* | .14 | −.41* |
| Inspiring | .09 | −.11 | .55* | −.32* | −.10 |
| Intelligent | .18* | −.08 | .58* | −.40* | −.15* |
| Relaxing | .56* | −.07 | .32* | −.54* | .15* |
| Romantic | .57* | −.10 | .23* | −.49* | .18* |
| Sad | .32* | −.24* | .01 | −.10 | .15* |
| *Genres* | | | | | |
| Soft rock | .33* | −.12 | −.06 | −.10 | .07 |
| R&B/soul | .31* | .06 | −.11 | −.10 | .04 |
| Quiet storm | .26* | .13 | −.02 | −.12 | −.03 |
| Adult contemporary | .18* | −.01 | .05 | −.15* | .08 |
| Rap | −.17* | .51* | −.25* | −.06 | −.20* |
| Electronica | .10 | .24* | −.05 | −.04 | −.14 |
| Latin | .01 | .20* | .14 | −.11 | −.09 |
| Acid jazz | −.13 | .19* | .05 | −.05 | −.10 |
| Euro pop | .02 | .19* | −.12 | .02 | −.09 |