ABSTRACT

BACKGROUND

Readmissions cause significant distress to patients and considerable financial costs. Identifying hospitalized patients at high risk for readmission is an important strategy in reducing readmissions. We aimed to evaluate how well physicians, case managers, and nurses can predict whether their older patients will be readmitted and to compare their predictions to a standardized risk tool (Probability of Repeat Admission, or Pra).

METHODS

Patients aged ≥65 discharged from the general medical service at the University of California, San Francisco Medical Center, a 550-bed tertiary care academic medical center, were eligible for enrollment over a 5-week period. At the time of discharge, the inpatient team members caring for each patient estimated the chance of unscheduled readmission within 30 days and predicted the reason for potential readmission. We also calculated the Pra for each patient. We identified readmissions through electronic medical record (EMR) review and phone calls with patients/caregivers. Discrimination was assessed with receiver operating characteristic (ROC) curves for each provider group and the Pra.

RESULTS

One hundred sixty-four patients were eligible for enrollment. Of these patients, five died during the 30-day period post-discharge. Of the remaining 159 patients, 52 (32.7%) were readmitted. Mean readmission predictions by the physicians were closest to the actual readmission rate, while case managers, nurses, and the Pra all overestimated readmissions. The ability to discriminate between readmissions and non-readmissions was poor for all provider groups and for the Pra (AUCs ranged from 0.50 for case managers to 0.59 for interns; the Pra AUC was 0.56). None of the provider groups accurately predicted the reason for readmission.

CONCLUSIONS

This study found that (1) overall readmission rates were higher than previously reported, possibly because we employed a more thorough follow-up methodology, and (2) neither providers nor a published algorithm was able to accurately predict which patients were at highest risk of readmission. Amid increasing pressure to reduce readmission rates, hospitals lack accurate predictive tools to guide their efforts.

KEY WORDS: readmission, unplanned, prediction

BACKGROUND

Against the background of rising concerns about both the cost and quality of American medical care, hospital readmissions have come under increasing scrutiny from both inside and outside government.1–3 Hospital readmissions may be a marker of poor-quality care, are dissatisfying for patients and families, and increase health care costs. Medicare estimates that $15 billion is spent on the 17.6% of patients who are readmitted within 30 days.4

Although it would be ideal to develop interventions that improve the hospital-to-home transition for all patients, given limited resources, some have argued for targeting intense efforts (such as comprehensive discharge planning, post-discharge phone calls or home visits, and early clinic visits) toward high-risk patients. However, such strategies require accurate methods to identify the patients at highest risk.

Anecdotal evidence suggests that inpatient providers (physicians, nurses, discharge planners) currently make informal predictions of readmission that affect discharge planning. Such predictions are not new: providers have tried to predict other outcomes, such as mortality and length of stay, in several settings (e.g., intensive care unit, emergency department), with varying success.5–10 However, the accuracy of informal predictions of hospital readmission is unknown. Several algorithms have also been developed in recent years to predict hospital readmissions, but their use has been limited because they require information not typically gathered during clinical care, because their models are complex and difficult to use, or because they are not accurate. A few studies have compared providers with algorithm-based tools for predicting readmission and mortality in other settings,9 but it remains unknown how well providers' predictions of readmission for general medicine patients compare with published algorithms, or how the predictions of different disciplines compare with one another.

Identifying the highest-risk patients is only the first step of a multistep process toward the ultimate goal of preventing readmissions. Providers would next need to anticipate the likely reason for readmission and then target an effective intervention to prevent it. However, no published studies have evaluated the ability of providers to predict the reason for a patient's readmission.

The goal of this study was to evaluate how well physicians, case managers, and nurses can predict whether their patients aged ≥65 will be readmitted, and to compare their predictions to a standardized risk tool, the Probability of Repeat Admission (Pra). As a secondary aim, we also evaluated how well providers could predict the reason for readmission. We hypothesized that providers could predict readmission better than the risk tool because (1) the ability of the Pra to predict readmission was only modest in the original study, with an area under the curve (AUC) of 0.61, and (2) providers may incorporate important factors that are not easily translated into variables and are therefore omitted from prediction tools, such as socioeconomic factors, health literacy, or even unmeasured clinical variables.

METHODS

Sites and Subjects

Patients aged ≥65 discharged from the general medical service at the University of California, San Francisco Medical Center, a 550-bed tertiary care academic medical center, were eligible for enrollment. Patients transferred to another inpatient service or to another inpatient acute care hospital were excluded. We used the electronic medical record to screen all general medicine admissions daily to ensure complete capture of all eligible patients. Patients were enrolled during a 5-week period beginning March 17, 2008. This enrollment period was chosen in part to maximize the number of provider participants. Provider subjects included 24 attending physicians, 42 housestaff physicians, six case managers, and over 30 nurses.

Design

At the time of discharge, we contacted (in person, by phone, or by e-mail) the inpatient team members caring for each patient and asked them to estimate the chance of unscheduled readmission to any acute care hospital within 30 days. Each member of the team (the attending, resident, and intern physicians, the case manager, and the discharging nurse) predicted the chance of readmission from 0% to 100%, based on an independent overall evaluation of the patient. We asked providers to predict readmission on a continuous percentage scale to allow construction of receiver operating characteristic (ROC) curves. Team members also predicted the reason for potential readmission as an open-ended response. We attempted to obtain responses on the day of discharge but accepted responses up to 48 h post-discharge.

We then calculated the Probability of Repeat Admission (Pra) for each patient. The Pra is a commonly cited instrument that uses eight risk factors (older age, male sex, poor self-rated general health, availability of an informal caregiver, a history of coronary artery disease, diabetes, hospital admission within the last year, and more than six doctor visits during the previous year) to predict the probability of two or more hospital readmissions during a 4-year period.11 In the original study in which this instrument was developed, the area under the ROC curve was 0.61. More recently, it was shown to predict 30-day readmission with similar accuracy.12 The eight risk factors for the Pra calculation were obtained via chart abstraction. Because availability of an informal caregiver and the patient's self-rated health status are not customarily documented in the chart, we asked housestaff to record them when completing the admission history and physical, and we added these questions to their documentation template. Additional information, such as length of stay, disposition, and primary language, was obtained by reviewing the EMR.
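To make the structure of a Pra-style score concrete, the sketch below combines the eight risk factors listed above in a logistic formula, p = 1 / (1 + exp(-z)), where z is an intercept plus a weighted sum of the factors. This is a minimal illustration under stated assumptions, not the published instrument: the logistic form is assumed as typical for such instruments, and the numeric encodings of age and self-rated health and all weights (`HYPOTHETICAL_INTERCEPT`, `HYPOTHETICAL_WEIGHTS`) are invented placeholders, not the coefficients reported by Boult et al.11 The class and function names are likewise hypothetical.

```python
import math
from dataclasses import dataclass


@dataclass
class PraInputs:
    """The eight Pra risk factors, in a HYPOTHETICAL numeric encoding."""
    age_band: int                   # hypothetical: 0 = 65-69, 1 = 70-74, 2 = 75-79, 3 = 80+
    male: bool
    poor_health_level: int          # hypothetical: 0 = excellent/very good, 1 = good/fair, 2 = poor
    informal_caregiver: bool
    coronary_artery_disease: bool
    diabetes: bool
    admitted_past_year: bool
    over_six_visits_past_year: bool


# PLACEHOLDER values chosen only so the sketch runs.
# They are NOT the published Pra coefficients (Boult et al., 1993).
HYPOTHETICAL_INTERCEPT = -2.5
HYPOTHETICAL_WEIGHTS = {
    "age_band": 0.2,
    "male": 0.3,
    "poor_health_level": 0.4,
    "informal_caregiver": 0.3,
    "coronary_artery_disease": 0.4,
    "diabetes": 0.3,
    "admitted_past_year": 0.5,
    "over_six_visits_past_year": 0.3,
}


def pra_style_probability(x: PraInputs) -> float:
    """Logistic score: p = 1 / (1 + exp(-z)), z = intercept + sum(weight * factor)."""
    z = HYPOTHETICAL_INTERCEPT
    for name, weight in HYPOTHETICAL_WEIGHTS.items():
        z += weight * float(getattr(x, name))  # booleans contribute 0.0 or 1.0
    return 1.0 / (1.0 + math.exp(-z))


# Usage: a patient with no risk factors versus one with all of them.
low = PraInputs(0, False, 0, False, False, False, False, False)
high = PraInputs(3, True, 2, True, True, True, True, True)
print(round(pra_style_probability(low), 3), round(pra_style_probability(high), 3))
```

Because every placeholder weight is positive, adding a risk factor can only raise the predicted probability; with real coefficients the direction and size of each factor's contribution would come from the original derivation study.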

Outcome

Three methods were used to obtain a thorough capture of unscheduled readmissions within 30 days. First, readmissions to our hospital were identified through our institution's EMR. Second, patients or their caregivers were contacted by phone to determine whether patients had been admitted to an outside hospital during this 30-day period. Third, the electronic medical records at the local county hospital were reviewed to determine whether any of our cohort patients had been readmitted there. This last method helped ensure capture of our marginally housed patients and others who may not be reachable by phone. Elective admissions, such as scheduled chemotherapy or scheduled procedures, were excluded.

We excluded patients who died during the 30-day follow-up period for several reasons: the predictors of death versus readmission are different, providers were asked specifically to predict readmission and not death, and obviously patients who die can no longer be readmitted. Deaths were identified by review of electronic medical records and during the follow-up phone calls. Readmission diagnoses and number of days since discharge were determined for patients readmitted to our hospital by reviewing the EMR.
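The outcome rules above (pooling three ascertainment sources, ignoring elective admissions, and excluding patients who died within 30 days) can be sketched as follows. The record layout, the `merge_sources` and `classify_patient` helpers, and the choice to count a readmission on days 0 through 30 after discharge are all hypothetical assumptions for illustration; the study does not describe its data handling at this level.

```python
from dataclasses import dataclass
from datetime import date
from typing import Iterable, Optional


@dataclass(frozen=True)
class Admission:
    """One post-discharge admission found by any ascertainment source (hypothetical layout)."""
    patient_id: str
    admit_date: date
    elective: bool  # e.g., scheduled chemotherapy or a scheduled procedure


def merge_sources(*sources: Iterable[Admission]) -> dict:
    """Pool admissions from all sources by patient; identical records collapse in the set."""
    by_patient: dict = {}
    for source in sources:
        for adm in source:
            by_patient.setdefault(adm.patient_id, set()).add(adm)
    return by_patient


def classify_patient(discharge: date, admissions: Iterable[Admission],
                     death_date: Optional[date] = None, window_days: int = 30) -> str:
    """Apply the outcome rules: deaths in the window are excluded, elective stays ignored."""
    if death_date is not None and 0 <= (death_date - discharge).days <= window_days:
        return "excluded_death"
    for adm in admissions:
        days = (adm.admit_date - discharge).days
        if not adm.elective and 0 <= days <= window_days:  # assumed window: days 0-30
            return "readmitted"
    return "not_readmitted"


# Usage with three toy sources standing in for our EMR, phone calls, and county EMR.
discharge = date(2008, 3, 20)
own_emr = [Admission("A", date(2008, 4, 1), elective=False)]
phone_calls = [Admission("B", date(2008, 4, 30), elective=False)]
county_emr = [Admission("C", date(2008, 3, 25), elective=True)]
merged = merge_sources(own_emr, phone_calls, county_emr)
for pid in ("A", "B", "C"):
    print(pid, classify_patient(discharge, merged.get(pid, ())))
```

Checking death first mirrors the rule that patients who died during follow-up were excluded regardless of whether they had been readmitted beforehand.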

Data Analysis

Calibration, or the accuracy of the predicted overall readmission rate, was assessed for each provider group using a one-sample t test. Discrimination, or the providers' ability to differentiate patients who would be readmitted from those who would not, was determined by creating receiver operating characteristic (ROC) curves and calculating the area under the curve (AUC).13 The ROC curve for each group of providers shows the sensitivity and specificity that could be achieved at each potential prediction threshold, while the AUC summarizes those sensitivities and specificities in a single value. An AUC above 0.7 would indicate acceptable discrimination,14 and an AUC not significantly higher than 0.5 would indicate predictions no better than random guessing. Logistic regression was used to calculate the AUC and its 95% confidence interval, in order to determine whether any provider group's predictions of readmission were better than chance (i.e., whether the AUC was statistically different from 0.50) or different from those of other groups. Sensitivity, specificity, positive predictive value, and negative predictive value were calculated for each provider group and for the Pra using a predicted-risk threshold of 50%. Sensitivities and specificities at other thresholds can be read directly from the ROC curves. Correlation of readmission predictions between pairs of provider groups was calculated using intraclass correlation coefficients. Two internal medicine physicians reviewed the electronic medical record to determine whether the reason for readmission was the same as, related to, or different from that of the initial admission. A readmission was deemed "related" if it was associated with, or a complication of, the original diagnosis or the treatment rendered during the initial admission.
The reviewers also determined the accuracy of providers' predictions of the reason for readmission, again categorized as the same as, related to, or different from the actual reason for readmission.
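The AUC described above has a simple interpretation: it is the probability that a randomly chosen readmitted patient received a higher predicted risk than a randomly chosen patient who was not readmitted, with ties counted as one half. The sketch below computes this nonparametric (Mann-Whitney) estimate directly. It is an assumed stand-in for, not a reproduction of, the logistic-regression approach used in the study: for a single predictor with a positive fitted coefficient the two give the same point estimate, but this sketch produces no confidence interval. The function name `empirical_auc` is hypothetical.

```python
from itertools import product
from typing import Sequence


def empirical_auc(predictions: Sequence[float], readmitted: Sequence[bool]) -> float:
    """Nonparametric AUC: P(prediction for a readmitted patient > prediction for a
    non-readmitted patient), counting ties as 1/2."""
    pos = [p for p, y in zip(predictions, readmitted) if y]
    neg = [p for p, y in zip(predictions, readmitted) if not y]
    if not pos or not neg:
        raise ValueError("need at least one readmitted and one non-readmitted patient")
    score = 0.0
    for a, b in product(pos, neg):  # every readmitted/non-readmitted pair
        if a > b:
            score += 1.0
        elif a == b:
            score += 0.5
    return score / (len(pos) * len(neg))


# Usage: 0.5 means no better than chance; 1.0 means perfect separation.
print(empirical_auc([10, 40, 35, 80], [False, False, True, True]))
```

In the toy example, three of the four readmitted/non-readmitted pairs are ordered correctly, so the AUC is 0.75; an AUC near 0.5, as observed for every group in this study, means the predictions order such pairs little better than a coin flip.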

RESULTS

One hundred sixty-four consecutive patients were eligible for enrollment; all eligible patients were included in our cohort. Of these 164 patients, five died during the 30-day period post-discharge. Of the remaining 159 patients, 52 (32.7%) were readmitted (41 to our own medical center and 11 to outside hospitals). An additional seven patients (4.4%) presented to the emergency department but were not readmitted. Baseline patient characteristics are presented in Table 1. Patient ages ranged from 65 to 96 years, and approximately a quarter of patients were aged 85 or older. About one-third of patients had a primary language other than English. Most patients had a length of stay shorter than 6 days and were discharged home. The cohort used medical services frequently: most patients had more than six doctor visits (76%) and a hospitalization (62%) within the last year.
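The cohort flow reported above reduces to simple arithmetic; the sketch below recomputes the analysis denominator and the two percentages from the reported counts (the variable names are ours).

```python
# Counts as reported in the Results.
eligible = 164
died_within_30_days = 5
readmitted_own_center = 41
readmitted_outside = 11
ed_visit_only = 7

analyzed = eligible - died_within_30_days                 # 159 patients
readmitted = readmitted_own_center + readmitted_outside   # 52 patients
readmission_rate = readmitted / analyzed
ed_only_rate = ed_visit_only / analyzed

print(f"{readmitted}/{analyzed} = {readmission_rate:.1%} readmitted")
print(f"{ed_visit_only}/{analyzed} = {ed_only_rate:.1%} ED visit without readmission")
```

Note that both percentages use the 159 surviving patients, not the 164 eligible patients, as the denominator.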

Table 1.

Baseline Characteristics, Including Pra Variables

| Characteristic | Number of patients (%) |
|---|---|
| Age* | |
| 65–69 | 26 (16) |
| 70–74 | 28 (18) |
| 75–80 | 29 (18) |
| 81–84 | 33 (21) |
| ≥85 | 43 (27) |
| Female* | 90 (57) |
| Primary language | |
| English | 102 (64) |
| Not English | 57 (36) |
| LOS | |
| ≤2 days | 59 (37) |
| 3–5 days | 65 (41) |
| ≥6 days | 35 (22) |
| Day of discharge | |
| Monday–Friday | 126 (79) |
| Saturday–Sunday | 33 (21) |
| Disposition | |
| Home | 107 (67) |
| SNF | 40 (25) |
| Assisted living or board and care | 8 (5) |
| Hospice (home or inpatient) | 4 (3) |
| Coronary artery disease* | 34 (21) |
| Diabetes mellitus* | 53 (33) |
| Hospitalization in the past year* | 99 (62) |
| >6 doctor visits in the past year* | 120 (76) |
| Self-rated health status*,† | |
| Excellent or very good | 17 (11) |
| Good or fair | 92 (58) |
| Poor | 29 (18) |
| Informal caregiver available* | 41 (25) |

*Pra variables

†Missing for 21 (13%) of patients

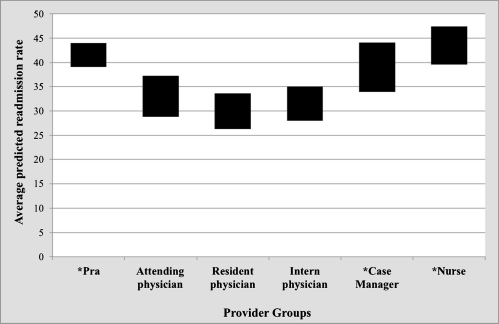

Provider response rate for predictions was 96.1%. Readmission predictions for individual patients ranged from 0–100% for all provider groups. Mean readmission predictions for the physician providers (attendings 33.0%, residents 30.0%, interns 31.5%) were closest to the actual readmission rate (32.7%), while case managers (39.0%), nurses (43.5%), and the Pra (41.5%) all overestimated readmissions (p values <0.05 for the comparison of case managers, nurses, and Pra with the actual readmission rate) (Fig. 1).
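The calibration comparison above (each group's mean predicted risk versus the observed 32.7% rate) corresponds to a one-sample t test. The sketch below is a minimal version using only the Python standard library. To avoid third-party dependencies it assumes a normal approximation to the t distribution with n - 1 degrees of freedom for the two-sided p value; that is reasonable for samples of roughly 150 patients but not for small samples. The function name `calibration_test` is hypothetical.

```python
import math
from statistics import NormalDist, mean, stdev
from typing import Sequence, Tuple


def calibration_test(predictions_pct: Sequence[float],
                     observed_rate_pct: float) -> Tuple[float, float, float]:
    """One-sample t statistic comparing mean predicted risk with the observed rate.

    Returns (mean prediction, t statistic, approximate two-sided p value).
    The p value uses a normal approximation to the t distribution (n - 1 df),
    an assumption that is only reasonable for large samples.
    """
    n = len(predictions_pct)
    m = mean(predictions_pct)
    se = stdev(predictions_pct) / math.sqrt(n)  # standard error of the mean
    t = (m - observed_rate_pct) / se
    p = 2.0 * (1.0 - NormalDist().cdf(abs(t)))
    return m, t, p


# Usage with toy predictions (percent) against the observed 32.7% rate.
print(calibration_test([30, 40, 50, 60, 45, 35], 32.7))
```

A positive t statistic indicates that a group's mean prediction exceeds the observed rate, which is the direction of miscalibration reported for the case managers, nurses, and the Pra.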

Figure 1.

Calibration of provider group predictions of readmission compared to the actual readmission rate. *Significant difference from the true readmission rate of 32.7%. Bars indicate ±2 SD.

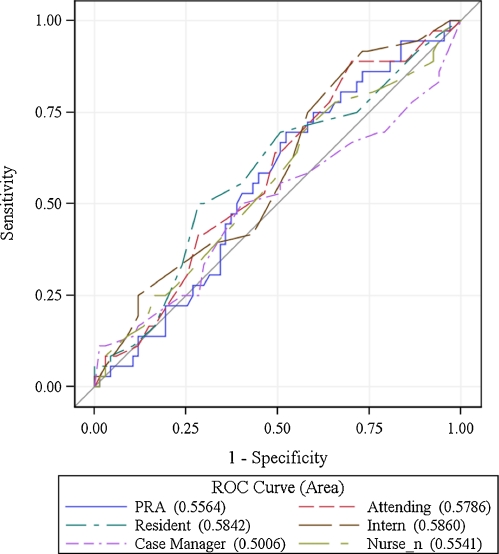

The ability to discriminate between readmissions and non-readmissions was poor for all provider groups and the Pra, as demonstrated by the ROC curves (Fig. 2), whose AUCs ranged from 0.50 for case managers to 0.59 for interns (0.56 for the Pra). Discriminatory ability was best for the intern physician group, followed closely by attending and resident physicians and the Pra. However, none of the AUC values were statistically different from 0.50 (i.e., chance); likewise, there were no statistically significant differences between provider groups (p > 0.05 for all comparisons, data not shown). Sensitivity, specificity, positive predictive value, and negative predictive value for each provider group and the Pra are shown in Table 2. Sensitivity was poor for all groups, ranging from 14.9% to 30.6%; specificity ranged from 71.9% to 91.4%. Predictions were positively but only weakly correlated across all groups, with correlation coefficients ranging from 0.055 to 0.441. Correlation was highest among the physician groups (r = 0.326–0.441) and between intern physicians and case managers (r = 0.369).
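The Methods do not state which form of intraclass correlation coefficient was used. As one common choice, the sketch below computes the one-way random-effects ICC(1,1) for paired predictions from two provider groups on the same patients, ICC = (MSB - MSW) / (MSB + (k - 1) * MSW) with k = 2 ratings per patient, where MSB and MSW are the between-patient and within-patient mean squares. The choice of this form and the helper name `icc_oneway` are assumptions; a two-way form such as ICC(2,1) may give somewhat different values.

```python
from statistics import mean
from typing import Sequence, Tuple


def icc_oneway(pairs: Sequence[Tuple[float, float]]) -> float:
    """One-way random-effects ICC(1,1) for k = 2 ratings per patient.

    ICC = (MSB - MSW) / (MSB + (k - 1) * MSW), where MSB is the between-patient
    mean square and MSW the within-patient mean square.
    """
    k = 2
    n = len(pairs)
    grand_mean = mean(v for pair in pairs for v in pair)
    patient_means = [mean(pair) for pair in pairs]
    ss_between = k * sum((pm - grand_mean) ** 2 for pm in patient_means)
    ss_within = sum((v - pm) ** 2 for pair, pm in zip(pairs, patient_means) for v in pair)
    ms_between = ss_between / (n - 1)
    ms_within = ss_within / (n * (k - 1))
    return (ms_between - ms_within) / (ms_between + (k - 1) * ms_within)


# Usage: each tuple is (intern prediction %, case manager prediction %) for one patient.
print(round(icc_oneway([(20, 30), (50, 40), (10, 15), (70, 60)]), 3))
```

Values near 1 mean two groups assign nearly the same risk to each patient; the weak coefficients reported above (at most 0.441) indicate that the groups often disagreed about which patients were at higher risk.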

Figure 2.

ROC curves for readmission predictions.

Table 2.

Prediction of Readmission by Provider Group

| Measure | Attending physician | Resident physician | Intern physician | Case manager | Nurse | Pra |
|---|---|---|---|---|---|---|
| AUC (95% CI) | 0.58 (0.46–0.69) | 0.58 (0.47–0.70) | 0.59 (0.47–0.70) | 0.50 (0.38–0.63) | 0.55 (0.44–0.67) | 0.56 (0.44–0.67) |
| Sensitivity*, % (95% CI) | 23 (13–37) | 15 (6–28) | 22 (12–36) | 27 (15–43) | 29 (17–44) | 31 (18–45) |
| Specificity*, % (95% CI) | 84 (75–90) | 88 (80–93) | 91 (84–96) | 73 (63–82) | 74 (64–82) | 72 (61–81) |
| Positive predictive value*, % (95% CI) | 41 (24–61) | 35 (15–59) | 55 (32–77) | 33 (19–51) | 36 (22–52) | 38 (23–54) |
| Negative predictive value*, % (95% CI) | 69 (60–77) | 70 (61–77) | 71 (62–78) | 67 (57–76) | 68 (58–76) | 65 (55–75) |

*Sensitivity, specificity, positive predictive value, and negative predictive value were calculated using a threshold of 50% for provider prediction.
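The 50% threshold in the table footnote turns each continuous prediction into a yes/no call, and the four measures then follow from a 2 × 2 table. The sketch below assumes predictions are given on the 0–100 scale and that a prediction exactly at 50% counts as a predicted readmission; the footnote does not specify how predictions at the threshold were handled, and the function name `threshold_metrics` is hypothetical.

```python
from typing import Dict, Optional, Sequence


def threshold_metrics(predictions_pct: Sequence[float], readmitted: Sequence[bool],
                      threshold: float = 50.0) -> Dict[str, Optional[float]]:
    """Sensitivity, specificity, PPV, and NPV after dichotomizing at `threshold`.

    Assumption: a prediction equal to the threshold counts as a predicted readmission.
    A measure is None when its denominator is zero.
    """
    tp = fp = tn = fn = 0
    for p, y in zip(predictions_pct, readmitted):
        flagged = p >= threshold
        if flagged and y:
            tp += 1
        elif flagged and not y:
            fp += 1
        elif not flagged and y:
            fn += 1
        else:
            tn += 1

    def ratio(num: int, den: int) -> Optional[float]:
        return num / den if den else None

    return {
        "sensitivity": ratio(tp, tp + fn),   # readmitted patients who were flagged
        "specificity": ratio(tn, tn + fp),   # non-readmitted patients not flagged
        "ppv": ratio(tp, tp + fp),           # flagged patients who were readmitted
        "npv": ratio(tn, tn + fn),           # unflagged patients not readmitted
    }


print(threshold_metrics([80, 20, 20, 70, 10, 30], [True, True, True, False, False, False]))
```

Because most providers rarely assigned risks of 50% or more, few patients were flagged; that pattern produces the low sensitivity and relatively high specificity seen in Table 2.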

Of the patients readmitted to our own medical center, the majority (90.2%) were readmitted to the general medicine service, with the remainder readmitted to the cardiology, neurology, and urology services. Over half of these patients (61.0%) were readmitted within 10 days, with a mean of 10.2 days between discharge and readmission. Most (70.7%) were readmitted with the same or a related diagnosis. The most common readmission diagnoses included pneumonia, gastrointestinal disorders (bowel obstruction, gastroenteritis, and bleeding), cellulitis, and Clostridium difficile colitis. The accuracy of providers' predictions of the reason for readmission is presented in Table 3. None of the provider groups predicted the reason for readmission accurately (i.e., no group predicted the same or a related diagnosis more than about 51% of the time). Nurses and intern physicians had the highest accuracy (defined as predicting the same or a related diagnosis), and case managers the lowest. All groups underestimated how often patients would be readmitted for adverse effects of therapy, whereas most groups (all but the case managers) overestimated how often patients would be readmitted for the same condition.

Table 3.

Readmission Diagnosis Accuracy

| Provider group | Same, n (%) | Related, n (%) | Different, n (%) |
|---|---|---|---|
| Attending physician | 13 (32.50) | 7 (17.50) | 20 (50.00) |
| Resident physician | 11 (31.43) | 3 (8.57) | 21 (60.00) |
| Intern physician | 12 (30.77) | 8 (20.51) | 19 (48.72) |
| Case manager | 7 (20.59) | 6 (17.65) | 21 (61.76) |
| Nurse | 12 (30.77) | 8 (20.51) | 19 (48.72) |
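The accuracy figures quoted in the text (the share of predictions naming the same or a related diagnosis) can be recomputed from the counts in Table 3. The sketch below does so; the counts are copied from the table, and the dictionary and function names are ours.

```python
# Counts copied from Table 3: (same, related, different) diagnosis.
TABLE3 = {
    "Attending physician": (13, 7, 20),
    "Resident physician": (11, 3, 21),
    "Intern physician": (12, 8, 19),
    "Case manager": (7, 6, 21),
    "Nurse": (12, 8, 19),
}


def accuracy(same: int, related: int, different: int) -> float:
    """Share of predictions naming the same or a related diagnosis."""
    return (same + related) / (same + related + different)


for group, counts in TABLE3.items():
    print(f"{group}: {accuracy(*counts):.1%}")
# Attending physician: 50.0%
# Resident physician: 40.0%
# Intern physician: 51.3%
# Case manager: 38.2%
# Nurse: 51.3%
```

The maximum, 20 of 39 predictions (51.3%) for both interns and nurses, is the basis for the statement that no group exceeded about 51%.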

DISCUSSION

In a cohort of elderly patients hospitalized on the general medical service of an academic medical center, nearly one-third were readmitted within 30 days. Neither their inpatient providers nor a readmission risk tool could accurately predict which patients would be readmitted (i.e., the AUC was less than 0.60 in all cases and not statistically different from chance).

The 32.7% readmission rate seen in our population is significantly higher than the 19.6% readmission rate observed in a recent study of almost 12 million Medicare beneficiaries.15 Reasons for the higher readmission rate in our cohort compared with the large Medicare cohort likely stem from differences in these two populations, specifically that our population consisted of a smaller sample of tertiary care patients in an urban setting. Our study may have selected for patients at particularly high risk for readmission, for both clinical and social causes. Regardless, whether the risk for unplanned readmission is one in five or one in three, it is clear that this area is fertile ground for quality improvement and cost reduction initiatives.

When considering the value of a predictive tool, one needs to consider how accurate it is in the aggregate (calibration) and how well it identifies which individual patients will have the outcome of interest (discrimination). Regarding calibration, the physicians' aggregate predictions were reasonably accurate, whereas the nurses, case managers, and the predictive tool all overestimated the risk of readmission in our population. However, neither the provider groups nor the risk tool was able to discriminate which individual patients were at high risk for readmission. The inability of providers to predict readmissions contradicts our initial hypothesis that providers' experience and their interpretation of innumerable factors would make them ideal candidates for this task. In the only comparable published study, providers were similarly unable to predict rehospitalization for heart failure patients.16 Presumably each provider group has unique expertise that would influence its predictions. For example, the bedside nurse is best positioned to know a patient's functional limitations and level of dependence, which have been shown to be risk factors for readmission.17,18 Our case managers were often familiar with patients from prior admissions and therefore could incorporate longitudinal information into their predictions. Both the nurse and the case manager are likely better informed than physicians regarding psychosocial factors that may influence readmission, such as whether a patient lives alone, another factor that has been associated with readmission.19,20 Physicians, on the other hand, should be best equipped to predict clinical reasons for readmission, such as complications of disease or treatment. However, in spite of these different skill sets, all provider groups performed essentially equally poorly in predicting which patients would be readmitted.
It is possible that predictions might have been better if providers had collaborated, thereby pooling their collective knowledge of the patient.

We suggest several reasons for the poor performance of both the providers and the Pra. First, general medicine patients are an inherently heterogeneous group, with a multitude of variables contributing to both their admission and potential readmission. The interplay of these variables, both clinical and social, may simply be too complex to allow accurate predictions of readmission. Comparing severity of illness among patients with many different diseases is also likely more difficult than comparing patients who share a single disease. Second, providers may overlook important elements that play a role in readmission. For example, providers rarely gave complications of treatment as a reason for potential readmission, and thereby failed to anticipate readmissions for Clostridium difficile colitis or for bleeding after starting warfarin for a pulmonary embolus. It is now known that adverse drug events (ADEs) are very common after discharge and likely play a role in readmissions,21,22 but the risk factors for post-discharge ADEs (such as patients' understanding of their medication regimens)23 have not been part of algorithms published to date and are unlikely to be part of clinicians' informal assessments of risk. Lastly, it is plausible that readmission risk correlates less strongly with patients' clinical characteristics and social circumstances than with the processes of care during hospitalization and with post-discharge care.24 Clinicians are likely poor judges of the quality of their own discharge processes.

As hospitals, payers, and health policymakers increasingly focus on reducing readmissions, our study offers insight into developing effective interventions. While many studies focus only on “high-risk” patients,25–28 we show that readmission is very common and unpredictable among all medicine patients. Better prediction tools would allow interventions to be directed only at the highest-risk patients, but the most recent such tools have had only modest predictive value, reiterating the complex nature of general medicine readmissions.29 An alternative approach is to apply interventions to all patients, including the roughly 67–80% who will not be readmitted. In the meantime, increased public reporting of readmission rates and impending payment reductions for the lowest-performing hospitals create further pressure to reduce readmission rates, without effective prediction tools to guide these efforts.

Our study has several limitations. First, our cohort was limited to one institution over a 5-week period; however, this design allowed us to ensure a uniform methodology for obtaining predictions. Second, details of the readmission, such as diagnoses, were available only for patients readmitted to our medical center, although we optimized capture of which patients were readmitted through exhaustive measures (review of the EMR at our institution and at the local county hospital, as well as phone calls to patients and caregivers). Third, the Pra was originally developed to predict readmission within 4 years of the index admission, whereas here we used it for 30-day readmission. While this difference may limit its accuracy, the Pra was the best fit among the published risk tools, and it has since been validated as a tool to predict short-term readmission.12 The Pra could not be calculated for 13% of patients because of missing self-rated health status. Finally, we did not solicit input from patients, because doing so would have required patient consent and would therefore have led to incomplete capture of otherwise eligible patients, especially the non-English-speaking population.

Readmission is common and surprisingly unpredictable. Proposed changes in public reporting and reimbursement for readmissions will make hospitals more accountable for their readmission rates and in turn for improving their discharge process. Risk stratification is one tool that may be helpful in addressing this problem; however, neither an algorithm nor providers have been successful in predicting readmissions, leaving hospitals to struggle without guidance to aim their efforts.

Acknowledgements

None.

Conflict of interest None disclosed.

References

1. A New Era of Responsibility: Renewing America's Promise. http://www.whitehouse.gov/omb/assets/fy2010_new_era/A_New_Era_of_Responsibility2.pdf. Accessed February 2, 2011.

2. U.S. Department of Health & Human Services. http://www.hospitalcompare.hhs.gov. Accessed February 2, 2011.

3. University HealthSystem Consortium. https://www.uhc.edu/. Accessed February 2, 2011.

4. Medicare Payment Advisory Commission. A path to bundled payment around a rehospitalization. June 2005.

5. Copeland-Fields L, Griffin T, Jenkins T, Buckley M, Wise LC. Comparison of outcome predictions made by physicians, by nurses, and by using the Mortality Prediction Model. Am J Crit Care. 2001;10(5):313–319.

6. Ebell MH, Bergus GR, Warbasse L, Bloomer R. The inability of physicians to predict the outcome of in-hospital resuscitation. J Gen Intern Med. 1996;11(1):16–22. doi: 10.1007/BF02603480.

7. Eriksen BO, Kristiansen IS, Pape JF. Prediction of five-year survival for patients admitted to a department of internal medicine. J Intern Med. 2001;250(5):435–440. doi: 10.1046/j.1365-2796.2001.00904.x.

8. Gusmao Vicente F, Polito Lomar F, Melot C, Vincent JL. Can the experienced ICU physician predict ICU length of stay and outcome better than less experienced colleagues? Intensive Care Med. 2004;30(4):655–659. doi: 10.1007/s00134-003-2139-7.

9. Pierpont GL, Parenti CM. Physician risk assessment and APACHE scores in cardiac care units. Clin Cardiol. 1999;22(5):366–368. doi: 10.1002/clc.4960220514.

10. Poses RM, Smith WR, McClish DK, et al. Physicians' survival predictions for patients with acute congestive heart failure. Arch Intern Med. 1997;157(9):1001–1007. doi: 10.1001/archinte.157.9.1001.

11. Boult C, Dowd B, McCaffrey D, Boult L, Hernandez R, Krulewitch H. Screening elders for risk of hospital admission. J Am Geriatr Soc. 1993;41(8):811–817. doi: 10.1111/j.1532-5415.1993.tb06175.x.

12. Novotny NL, Anderson MA. Prediction of early readmission in medical inpatients using the Probability of Repeated Admission instrument. Nurs Res. 2008;57(6):406–415. doi: 10.1097/NNR.0b013e31818c3e06.

13. Centor RM, Schwartz JS. An evaluation of methods for estimating the area under the receiver operating characteristic (ROC) curve. Med Decis Making. 1985;5(2):149–156. doi: 10.1177/0272989X8500500204.

14. Hosmer DW, Lemeshow S. Applied Logistic Regression. 2nd ed. New York: John Wiley & Sons; 2000.

15. Jencks SF, Williams MV, Coleman EA. Rehospitalizations among patients in the Medicare fee-for-service program. N Engl J Med. 2009;360(14):1418–1428. doi: 10.1056/NEJMsa0803563.

16. Yamokoski LM, Hasselblad V, Moser DK, et al. Prediction of rehospitalization and death in severe heart failure by physicians and nurses of the ESCAPE trial. J Card Fail. 2007;13(1):8–13. doi: 10.1016/j.cardfail.2006.10.002.

17. Dobrzanska L, Newell R. Readmissions: a primary care examination of reasons for readmission of older people and possible readmission risk factors. J Clin Nurs. 2006;15(5):599–606. doi: 10.1111/j.1365-2702.2006.01333.x.

18. Laniece I, Couturier P, Drame M, et al. Incidence and main factors associated with early unplanned hospital readmission among French medical inpatients aged 75 and over admitted through emergency units. Age Ageing. 2008;37(4):416–422. doi: 10.1093/ageing/afn093.

19. Grant RW, Charlebois ED, Wachter RM. Risk factors for early hospital readmission in patients with AIDS and pneumonia. J Gen Intern Med. 1999;14(9):531–536. doi: 10.1046/j.1525-1497.1999.08157.x.

20. Murphy BM, Elliott PC, Grande MR, et al. Living alone predicts 30-day hospital readmission after coronary artery bypass graft surgery. Eur J Cardiovasc Prev Rehabil. 2008;15(2):210–215. doi: 10.1097/HJR.0b013e3282f2dc4e.

21. Forster AJ, Murff HJ, Peterson JF, Gandhi TK, Bates DW. The incidence and severity of adverse events affecting patients after discharge from the hospital. Ann Intern Med. 2003;138(3):161–167. doi: 10.7326/0003-4819-138-3-200302040-00007.

22. Forster AJ, Murff HJ, Peterson JF, Gandhi TK, Bates DW. Adverse drug events occurring following hospital discharge. J Gen Intern Med. 2005;20(4):317–323. doi: 10.1111/j.1525-1497.2005.30390.x.

23. Pippins JR, Gandhi TK, Hamann C, et al. Classifying and predicting errors of inpatient medication reconciliation. J Gen Intern Med. 2008;23(9):1414–1422. doi: 10.1007/s11606-008-0687-9.

24. Polanczyk CA, Newton C, Dec GW, Salvo TG. Quality of care and hospital readmission in congestive heart failure: an explicit review process. J Card Fail. 2001;7(4):289–298. doi: 10.1054/jcaf.2001.28931.

25. Coleman EA, Parry C, Chalmers S, Min SJ. The care transitions intervention: results of a randomized controlled trial. Arch Intern Med. 2006;166(17):1822–1828. doi: 10.1001/archinte.166.17.1822.

26. Koehler BE, Richter KM, Youngblood L, et al. Reduction of 30-day postdischarge hospital readmission or emergency department (ED) visit rates in high-risk elderly medical patients through delivery of a targeted care bundle. J Hosp Med. 2009;4(4):211–218. doi: 10.1002/jhm.427.

27. Woodend AK, Sherrard H, Fraser M, Stuewe L, Cheung T, Struthers C. Telehome monitoring in patients with cardiac disease who are at high risk of readmission. Heart Lung. 2008;37(1):36–45. doi: 10.1016/j.hrtlng.2007.04.004.

28. Tibaldi V, Isaia G, Scarafiotti C, et al. Hospital at home for elderly patients with acute decompensation of chronic heart failure: a prospective randomized controlled trial. Arch Intern Med. 2009;169(17):1569–1575. doi: 10.1001/archinternmed.2009.267.

29. Hasan O, Meltzer DO, Shaykevich SA, et al. Hospital readmission in general medicine patients: a prediction model. J Gen Intern Med. 2010;25(3):211–219.