Abstract

In the current study we examined the recognition of facial expressions embedded in emotionally expressive bodies in case LG, an individual with a rare form of developmental visual agnosia who suffers from severe prosopagnosia. Neuropsychological testing demonstrated that LG‘s agnosia is characterized by profoundly impaired visual integration. Unlike individuals with typical developmental prosopagnosia who display specific difficulties with face identity (but typically not expression) recognition, LG was also impaired at recognizing isolated facial expressions. By contrast, he successfully recognized the expressions portrayed by faceless emotional bodies handling affective paraphernalia. When presented with contextualized faces in emotional bodies his ability to detect the emotion expressed by a face did not improve even if it was embedded in an emotionally-congruent body context. Furthermore, in contrast to controls, LG displayed an abnormal pattern of contextual influence from emotionally-incongruent bodies. The results are interpreted in the context of a general integration deficit in developmental visual agnosia, suggesting that impaired integration may extend from the level of the face to the level of the full person.

Keywords: developmental visual agnosia, developmental prosopagnosia, emotion recognition, facial expressions, body language, perceptual integration

1. Introduction

Developmental visual agnosia (DVA) is characterized by lifelong difficulties with visual recognition in the absence of evident brain lesions (Gilaie-Dotan et al., 2009). Individuals with DVA may present with impaired object recognition in addition to deficits in face identity and expression processing (Ariel and Sadeh, 1996). This differentiates DVA from pure developmental prosopagnosia (DP) in which the visual deficit is largely circumscribed to face identity recognition (Dobel et al., 2007; Duchaine et al., 2009a; Duchaine et al., 2009b; Duchaine and Nakayama, 2006b; Duchaine et al., 2003a; Garrido et al., 2009; Humphreys et al., 2007). An additional important distinction is that DP is more common, with an approximate prevalence of 2% in the general population (Kennerknecht et al., 2006). By contrast, developmental visual agnosia with deficits in visual integration and object recognition is far rarer and only seldom described in the literature (Ariel and Sadeh, 1996; Duchaine et al., 2003b).

In the current study we investigated the visual recognition of emotional expressions in LG, a young man with DVA. We tested LG’s ability to recognize emotions expressed by isolated faces and by faceless emotional body contexts with affective paraphernalia. Most importantly, we were interested in exploring how LG would integrate information from facial expressions with the emotional body context in which the face appears. Successful integration of facial expressions with contextual information may be crucial for interpreting emotions in everyday social interactions in which multiple, and potentially conflicting, channels of emotional information need to be computed (Meeren et al., 2005).

With a few notable exceptions (de Gelder et al., 2006) most previous research on facial expression recognition in healthy individuals has relied primarily on isolated and bodiless faces. The methodological choice of using isolated faces has been guided by the notion that basic facial expressions are universal (Ekman, 1993) and categorically discrete signals of emotion (Etcoff and Magee, 1992; Young et al., 1997). Consequently, these strong signals were assumed to be directly mapped to specific emotional categories while overriding and dominating surrounding contextual information (Buck, 1994; Ekman, 1992; Ekman and O'Sullivan, 1988; Nakamura et al., 1990). More recent accounts acknowledge the potential importance of contextual information (Adolphs, 2006; Brosch et al., 2010), yet current theoretical models do not describe when and how context might influence facial expression recognition (Calder and Young, 2005).

Previous studies addressing the facial expression processing of individuals with developmental as well as acquired visual agnosia and/or prosopagnosia also focused mostly on the recognition of expressions in isolated faces (Ariel and Sadeh, 1996). Specifically, it is unclear if and how the recognition of facial expressions is influenced by contextual emotional body language in individuals with DVA or DP. Indeed, the few DP studies in which the body as well as face expression were manipulated focused on comparing facial and body expression processing rather than exploring their possible mutual influence (Duchaine et al., 2006; Van den Stock et al., 2007). While the approach of studying the recognition of isolated facial expressions has proved fruitful, it may have ecological limitations. Real-life facial expressions are typically embedded in a rich and informative context which may impede or enhance the recognition of emotions from the face (Zaki and Ochsner, 2009).

Recent work in healthy and neurological populations has indeed shown that emotional body context affects face-based emotion recognition (Aviezer et al., 2008a; Aviezer et al., 2008b; Meeren et al., 2005; Van den Stock et al., 2007). In fact, under certain conditions, the context can dramatically shift the emotional category recognized from basic facial expressions (Aviezer et al., 2009; Aviezer et al., 2008a; Aviezer et al., 2008b). For example, Aviezer and colleagues “planted” prototypical pictures of disgust faces on bodies of models conveying different emotions (such as anger and sadness). Their results showed that placing a face in an incongruent emotional body context induces striking changes in the recognition of emotional categories from the facial expressions. These recent findings indicate that a full understanding of facial expression processing in both healthy and clinical populations may benefit from taking into account the context in which the face appears. Along this line of research we describe case LG, a rare case of developmental visual agnosia with severe prosopagnosia, focusing on his unique visual integration deficits and follow with an examination of his emotional face-body integration.

2. Case History - LG

LG is a 21-year-old male who was first diagnosed with developmental visual agnosia and prosopagnosia at the age of 8 (Ariel and Sadeh, 1996). He has no psychiatric or neurological disease, an MRI scan found no discernible structural brain abnormality (Gilaie-Dotan et al., 2009), and his low-level vision (acuity, contrast sensitivity, color vision) is basically intact. We next present a brief synopsis of his current condition, focusing on his performance in tasks which require visual integration. Additional neuropsychological and neuroimaging information can be found elsewhere (Ariel and Sadeh, 1996; Gilaie-Dotan et al., 2009).

2.1 Global-local processing

LG’s performance in the Navon test of hierarchical letters (Navon, 1977) showed the normal pattern of considerable global interference in the local task and much attenuated local interference in the global task. LG’s normal global interference resembles that of some individuals with DP (Duchaine et al., 2007a; Duchaine et al., 2007b), but not of others (Behrmann and Avidan, 2005; Bentin et al., 2007; DeGutis et al., 2007).

2.2 Low-level perceptual integration

LG’s low acuity on the standard ETDRS chart was due to crowding (~0.3 log units, which is larger than normal), as measured with crowded and uncrowded displays of tumbling E patterns (Bonneh et al., 2004). A conspicuous difficulty with dot grouping suggested problems of visual integration that were further investigated. Two tests suggested abnormal early integration mechanisms. In a contour-in-noise card test (Kovács et al., 1999), his performance was at the level of 5–6 year olds (threshold spacing ratio of ~1); in a lateral masking experiment (Polat and Sagi, 1993) he showed no collinear facilitation, which also indicates impairment in local integration mechanisms. In contrast, he performed normally on the standard stereo-vision test (Randot, Stereo Optical Co., Inc).

2.3 High-level perceptual integration

In realistic natural viewing conditions, LG has serious recognition difficulties. Informally, the way he describes his problems is that

“Looking at objects further than about 4 m, I can see the parts but I cannot see them integrated as coherent objects, which I could recognize; however, closer objects I can identify if they are not obstructed; sometimes I can see coherent integrated objects without being able to figure out what these objects are.”

Hence, LG is impaired in everyday perception, which inevitably requires the integration of overlapping and non-contiguous visual information. LG’s integration was also formally examined with the Hooper Visual Organization Test (HVOT), in which he scored 12.5/30 points, indicating “very high probability of impairment” by the cutoff norms. It is noteworthy that LG’s performance stands in contrast to that of individuals with the more common DP, who may present with perfect performance on the HVOT (Bentin et al., 2007).

LG was also tested with the Overlapping Figure Test (Birmingham Object Recognition Battery [BORB-6]; Riddoch and Humphreys, 1993). His performance on this task was in the deficient range (but note that the control data for the BORB are not age matched to LG). He performed better with simple geometrical shapes and had a conspicuous difficulty with letters and more complex line drawings. This difficulty was reflected both by errors (e.g., 11 errors out of 36 superimposed triples of letters [108 letters altogether]) and particularly by extremely long RTs, even for the correctly identified trials. The ratio between the RTs of overlapping stimuli compared with RTs of isolated stimuli was three times the ratio of the normal mean. Notably, individuals with pure DP may have no difficulty with this form of visual segmentation and integration (Duchaine, 2000).

2.4 Face Processing

Like other individuals with visual agnosia (Aviezer et al., 2007; Riddoch and Humphreys, 1987), LG is extremely impaired in face processing. In the Benton Facial Recognition Test (Benton et al., 1983) he was able to match only 33 out of the 54 faces, a score that places him in the severely impaired group. Similarly, his score on the Cambridge Face Memory Test (Duchaine and Nakayama, 2006a) was 34/75, which is 6 points less than the average norm of individuals with DP and significantly below the normal mean performance (58/75). Furthermore, he only recognized 5/53 famous faces (compared with a control average of 40/53). LG was unable to identify his parents, his sister or himself in photographs in which the contour and the hairline had been eliminated.

Previous testing found LG to be impaired in facial expression recognition (Ariel and Sadeh, 1996). However, as previously noted, LG was a young boy at the time and the facial expressions used were not standardized. Thus, it was unknown if his performance in facial expressions recognition improved over time as a function of learning and experience. The aim of the current study was twofold: First, we wanted to establish LG‘s current recognition of facial expressions and emotional body context. Second, we aimed to further examine the nature of LG‘s agnosia. Although it seems clear that his visual integration ability is deficient, a more definitive diagnosis of developmental integrative agnosia warrants further testing. Exploring LG‘s integration of facial expressions with congruent and incongruent emotional body context would provide us with additional evidence concerning the nature of his agnosia.

3. The Current Study

Because LG is an extremely rare case of DVA with profound prosopagnosia, it is of special interest to explore his visual integration deficits and understand how they may impact his perception of social stimuli such as expressive faces and bodies. At the face level, impaired integration may hinder the holistic processing and configural aspects of identity perception (de Gelder and Rouw, 2000, 2001; Maurer et al., 2002) as well as expression perception (Calder and Young, 2005). However, an integrative deficit may extend to broader levels in which information from the face and complex body context cannot be properly combined. To this end, we examined LG‘s ability to recognize emotional faces and emotional bodies in isolation, and, most importantly, we examined his ability to integrate these two sources of emotional information as a function of the emotional congruency between the face and body.

In participants with normal vision, a given facial expression is not uniformly influenced by all incongruent emotional contexts (Aviezer et al., 2008b). Rather, the magnitude of contextual influence (a measure of face-body integration) is strongly correlated with the degree of similarity between the expression of the target face (i.e., the face being presented) and the facial expression that is typically associated with the emotional context (Aviezer et al., 2008b). For example, disgust faces are perceptually similar to anger faces yet perceptually dissimilar to fearful faces (Susskind et al., 2007). Consequently, disgust faces are strongly influenced by angry bodies but weakly influenced by fearful bodies, a pattern we coined the “similarity effect” (Aviezer et al., 2009). The current investigation sought to characterize whether LG would show a normal similarity effect or whether his performance would display an abnormal pattern of face-context integration.

4. Methods

4.1 Participants

A group of 7 males (Mean age 23.4, range 20–25) served as controls for LG. Participants in the control group were free from neurological or psychiatric conditions and had normal or corrected to normal vision.

4.2 Stimuli

4.2.1 Facial expressions

Portraits of 10 individuals (5 female) each posing the basic facial expressions of disgust, anger and sadness were selected (Ekman and Friesen, 1976). The faces appeared on emotionally neutral upper torso images (see Figure 1a and 1b). We selected faces of anger, disgust and sadness because previous work from our lab has characterized how each of these expressions is influenced by the different kinds of face-context combination and for the sake of the case study, we wished to utilize well characterized stimuli.

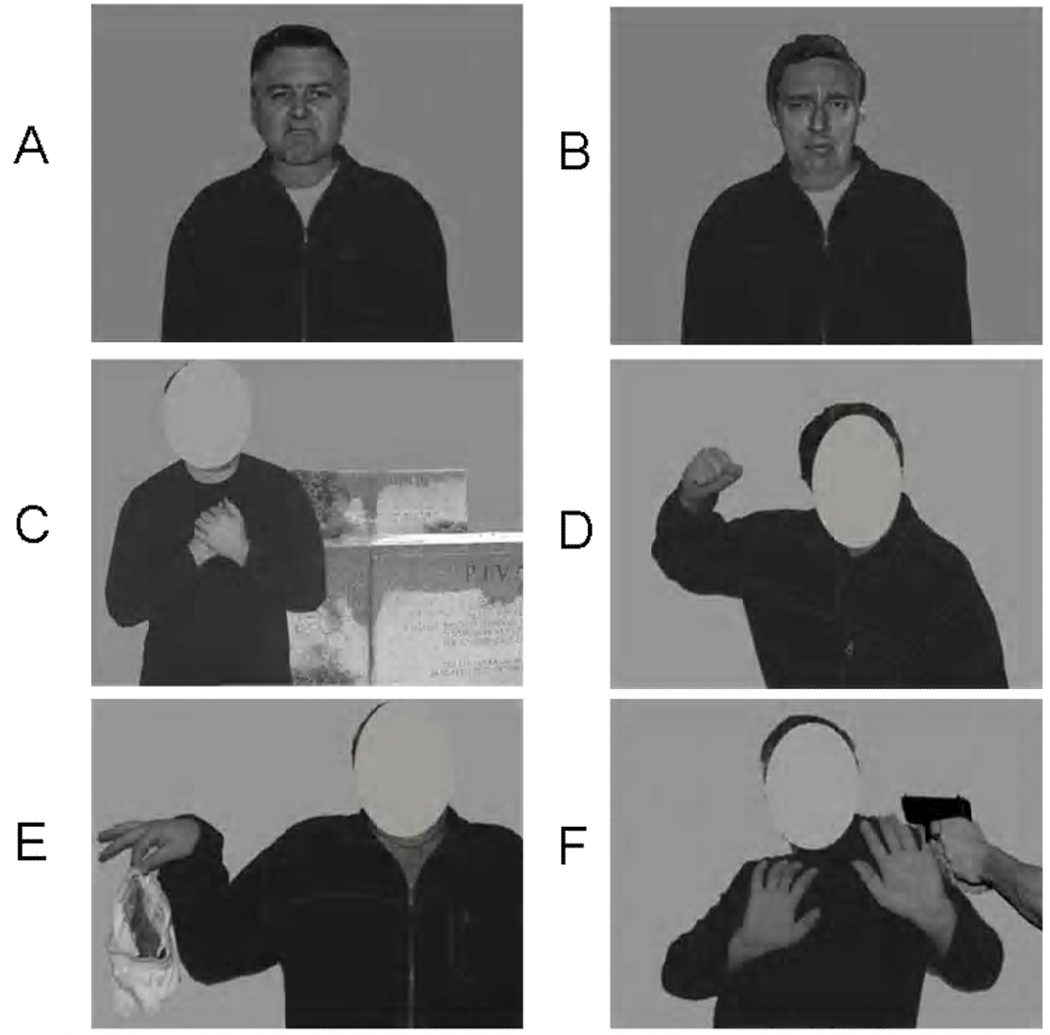

Figure 1.

Examples of baseline emotional stimuli used in the study: (A) disgust and (B) sadness faces in neutral context, (C) sadness context, (D) anger context, (E) disgust context and (F) fear context.

4.2.2 Faceless emotional bodies

Emotional body contexts included images of two models (1 male and 1 female) positioned in scenes conveying prototypical emotions via body language and additional paraphernalia. These images have been previously shown to be highly and equally recognizable indicators of their respective emotion categories (Aviezer et al., 2008b). The displayed emotions were disgust, sadness, fear and anger (see examples in Figure 1c–f). Importantly, the faces were cut out from these images so that they were not available for deducing the emotion of the scene.

4.2.3 Face-body combinations

Faces from each emotional category were combined with bodies to create proportional and seamless face-body units. The combinations were tailored to produce three different levels of similarity (and hence confusability) between the face actually presented and the facial expression that would typically be associated with the emotional context: high similarity, low similarity, and identity. In the high similarity condition, disgust faces appeared in an anger context, sadness faces appeared in a fearful context and anger faces appeared in a disgust context (Susskind et al., 2007). In the low similarity condition, disgust faces appeared in a fearful context, sadness faces appeared in an anger context and anger faces appeared in a sadness context. In the congruent identity condition, facial expressions of disgust, anger and sadness appeared in their respective emotional context (i.e., a disgust face on a disgust body, etc.).

We used a 2×3 mixed design with Group (LG, controls) as a between participant factor and Context similarity (identity, high similarity and low similarity) as a within participant factor. Overall, the face context combinations included 3 facial emotion categories × 10 exemplars from each category × 3 context similarity conditions resulting in a total of 90 trials. Figure 2 presents examples of the three levels of contextual similarity for the disgust faces. Note that our aim was to create strong contexts that would successfully influence the perception of the face. Thus, while these stimuli are useful for testing if LG can integrate the overall (extra-facial) contextual information with the face they do not reflect pure emotional body language (de Gelder, 2006).
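The trial structure described above can be sketched in a few lines of Python. This is only an illustrative reconstruction of the design, not the authors' experiment code; the names `CONTEXT_FOR`, `FACE_EMOTIONS`, `N_EXEMPLARS` and `build_trials` are hypothetical, and the face-to-body pairings are transcribed from the description of the three similarity conditions.

```python
from itertools import product

# Body context paired with each face emotion at each similarity level,
# transcribed from the text (names are illustrative, not from the study).
CONTEXT_FOR = {
    "identity": {"disgust": "disgust", "anger": "anger", "sadness": "sadness"},
    "high similarity": {"disgust": "anger", "sadness": "fear", "anger": "disgust"},
    "low similarity": {"disgust": "fear", "sadness": "anger", "anger": "sadness"},
}
FACE_EMOTIONS = ["disgust", "anger", "sadness"]
N_EXEMPLARS = 10  # portraits per facial emotion category


def build_trials():
    """Return one (face, exemplar, similarity, body) tuple per trial."""
    trials = []
    for face, exemplar, similarity in product(
        FACE_EMOTIONS, range(N_EXEMPLARS), CONTEXT_FOR
    ):
        body = CONTEXT_FOR[similarity][face]
        trials.append((face, exemplar, similarity, body))
    return trials


trials = build_trials()
print(len(trials))  # 3 emotions x 10 exemplars x 3 similarity levels
```

Enumerating the full cross of face emotion, exemplar and similarity level reproduces the 90 face-context combinations stated in the text.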

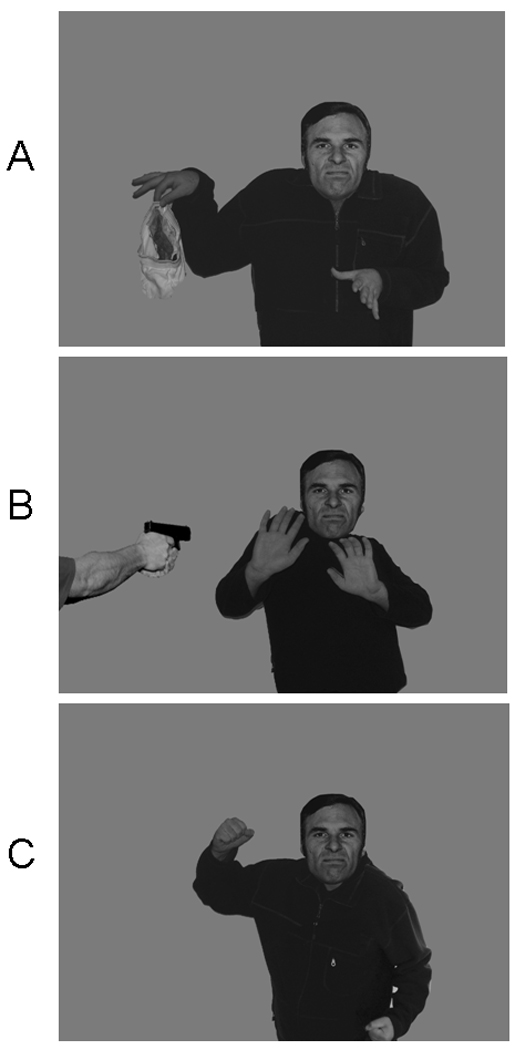

Figure 2.

Examples of stimuli from the three levels of Perceptual Similarity between the disgust face and the face typically associated with the context. Identical disgust faces appeared in (A) disgust context (identity), (B) fear context (low similarity), and (C) anger context (high similarity).

4.3 Procedure

In a first experimental session face-context composites were randomly presented on a computer monitor one at a time with no time limits. The instructions were to press a button indicating the category that “best described the facial expression” from a list of six basic emotion labels (sadness, anger, fear, disgust, happiness, and surprise) listed under the image. All 6 basic emotions were allowable at categorization for LG and controls in order to examine any atypical response errors. In a second session, facial expressions on neutral bodies and faceless emotional bodies from the first session were presented to compare LG‘s ability to identify emotions from faces and bodies separately. This session appeared second to ensure that performance with contextualized expressions was not influenced by memory of isolated facial expressions. The two sessions were separated by a ten minute break. The experiments were approved by the ethics committee of the Hebrew University.

4.4 Single Case Statistical Analysis

Crawford and Howell (1998) suggest an adjusted t-test for single case studies in which the control group is of modest size. The appropriateness of this adjusted t-test has recently been extended to analysis of variance, allowing one to assess individual cases in multi-factorial experiments (Corballis, 2009b). We followed Corballis’s protocol, in which differences between the control group and single case are examined with an ANOVA in which the between subject Group factor (LG, N=1; vs Controls, N=7) is tested for a main effect and interaction (Corballis, 2009b). Although some concerns with the Corballis protocol have been raised (Crawford et al., 2009), we found using it justifiable as 1) we did not use it to examine dissociations in performance across different cognitive domains, and 2) we were content with conservative interpretations concerning the relations of the single case to the population from which the control group was drawn (Corballis, 2009a).
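For readers unfamiliar with the adjusted t-test mentioned above, the following is a minimal sketch of its commonly cited form, in which the case score is compared with the control mean and the control standard deviation is inflated by √((n+1)/n), with n − 1 degrees of freedom. It illustrates the single-case t-test only, not the ANOVA protocol actually used in this study; the function name `crawford_howell_t` and the scores are hypothetical.

```python
import math
import statistics


def crawford_howell_t(case_score, control_scores):
    """Return (t, df) comparing one case with a small control sample."""
    n = len(control_scores)
    mean = statistics.mean(control_scores)
    sd = statistics.stdev(control_scores)  # sample SD (n - 1 denominator)
    # The control SD is scaled by sqrt((n + 1) / n) to account for the
    # uncertainty of estimating the population from a small sample.
    t = (case_score - mean) / (sd * math.sqrt((n + 1) / n))
    return t, n - 1


# Hypothetical scores, for illustration only (not data from the study).
controls = [80.0, 90.0, 70.0, 85.0, 75.0, 95.0, 65.0]
t, df = crawford_howell_t(30.0, controls)
print(f"t({df}) = {t:.2f}")
```

A p-value would then be read from a t distribution with `df` degrees of freedom; that step is omitted here to keep the sketch dependency-free.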

5. Results

5.1 Recognition of Facial Expressions in Neutral Context

5.1.1 Accuracy

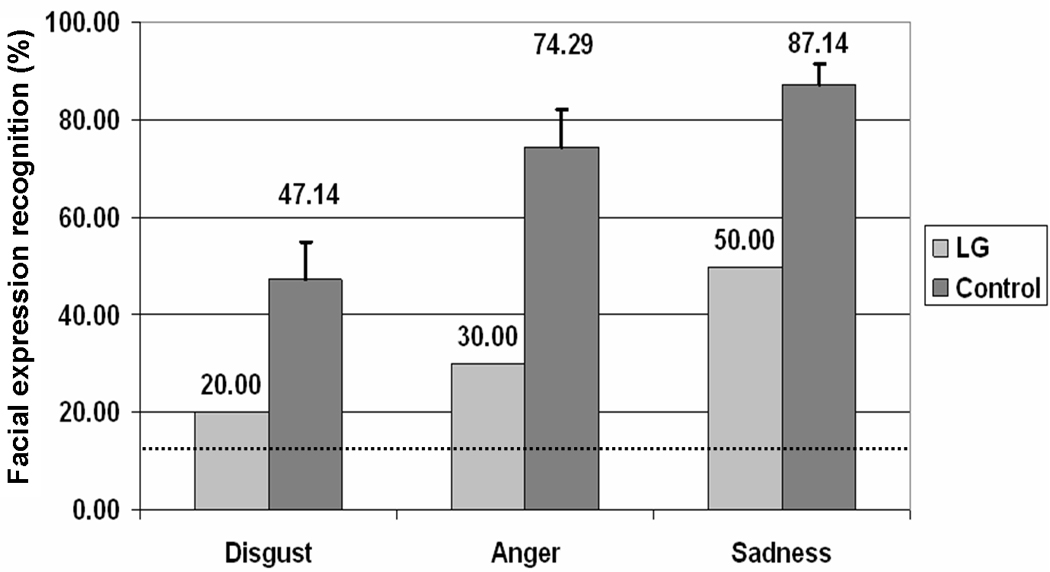

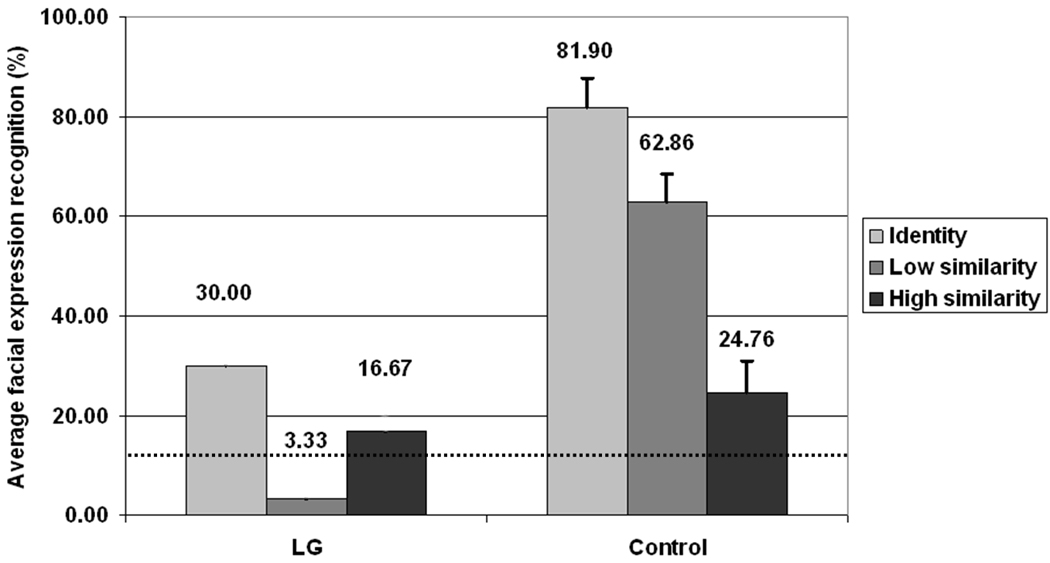

Accurate responses were defined as those in which the faces were assigned to their respective intended emotion categories1 (Ekman and Friesen, 1976). Recognition of the three different isolated facial expressions (anger, disgust, and sadness) was compared between LG and the controls in a 2 (Group: LG vs. Control) × 3 (Expression: disgust, anger and sadness) mixed ANOVA (Figure 3). LG performed worse than controls (Mean 33.3% vs. 69.4%) at recognizing the facial expressions, F (1,6) = 5.58; MSe =615.8, p < 0.056, and, as indicated by the absence of Group × Expression interaction F (2, 12) < 1.0, this deficiency was similar across the different types of expressions. A significant effect of Expression category, F(2, 12) = 5.9; MSe = 180.1, p < 0.05, indicated that some facial expressions were less recognizable than others, a finding which is in accordance with previous work using similar face sets2.

Figure 3.

Recognition of isolated facial expressions in neutral context by LG and controls. Error bars represent standard error. The dashed line indicates chance level.

5.1.2 Reaction time

A face expression × group repeated ANOVA for the RTs did not yield any significant main effects or interaction, all p > .1. The mean RTs for each expression by group are summarized in Table 1.

Table 1.

Reaction times (mean and standard deviation) for recognizing emotional faces, emotional bodies, and face-body composites as a function of the group.

| Stimulus | Condition | Control Mean | Control SD | LG Mean |
|---|---|---|---|---|
| Faces | Disgust | 3656 | 956 | 4310 |
| | Anger | 3522.1 | 1203.1 | 5419.1 |
| | Sadness | 2812.7 | 788.8 | 5385.2 |
| Bodies | Disgust | 1645.8 | 406.6 | 3439 |
| | Fear | 2371 | 906.4 | 4382.5 |
| | Sadness | 1630.4 | 346.6 | 11615.5 |
| | Anger | 2088.3 | 856 | 6052.2 |
| Faces + Bodies | Identity | 3740.7 | 1046 | 9690.1 |
| | Low similarity | 5447.2 | 1540 | 8813.3 |
| | High similarity | 5196.5 | 1050 | 9608.8 |

5.2 Recognition of Faceless Emotional Bodies

5.2.1 Accuracy

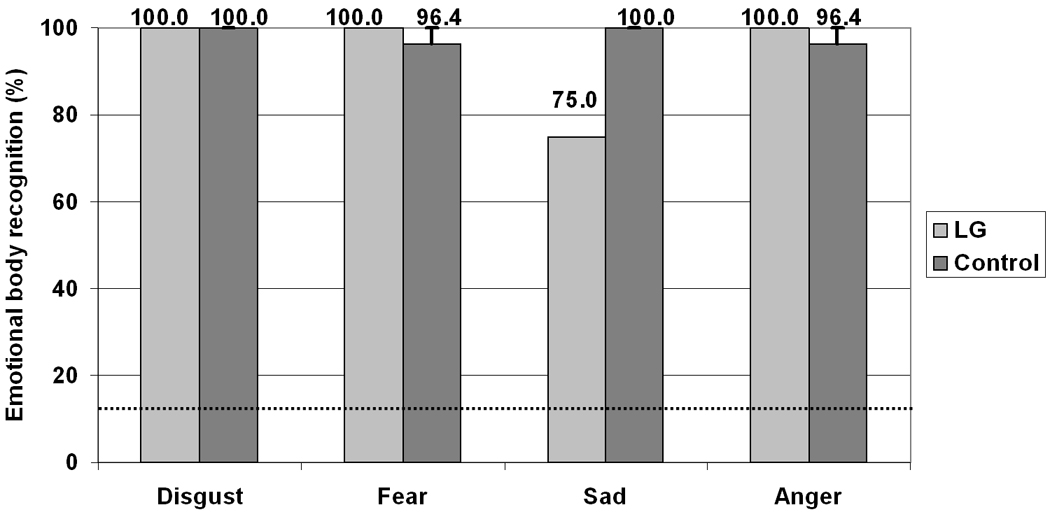

Recognition of the four different body-emotion categories (anger, sadness, disgust, and fear) was compared between the groups in a 2 (Group: LG vs. controls) × 4 (Emotion: anger, fear, sadness, disgust) mixed-model ANOVA. Accurate responses were defined as those which corresponded with the intended posed emotion category, shown previously to yield very high agreement between viewers (Aviezer et al., 2008a).

As can be seen in Figure 4, the overall recognition of body context by LG (93.7%) was high and largely comparable to the control group recognition (98.2%; F(1,6) = 1.8; MSe = 37.2, p > .22). There was no significant effect of Emotion category, F(3,18) = 2.4; MSe = 47.1, p = .099; however, a significant interaction was found, suggesting that LG was poorer than controls at recognizing the sadness context, F(3,18) = 3.5; MSe = 47.1, p < .04, but not the other contexts, all Fs < 1.

Figure 4.

Recognition of faceless emotional scenes and body language. Error bars represent standard error. The dashed line indicates chance level.

5.2.2 Reaction time

LG was slower than the controls at categorizing all the body expressions F(1,6) = 76.6, p < .0001, and a significant interaction showed this difference was most notable for the sad body expressions F(1, 6) = 35.4, p < .001 (see Table 1).

5.3 Interim discussion

While LG‘s recognition of isolated facial expressions is clearly abnormal, his recognition of the emotional bodies was mostly accurate, albeit considerably slower than controls. However, the successful recognition of body-expressed emotions does not necessarily indicate that LG shows a dissociation between face and body expression recognition. Specifically, the bodies we used were highly recognizable and certainly less ambiguous than the facial expressions. Furthermore, the reaction times suggest that LG‘s processing of bodies is not comparable to controls. Indeed, individuals with DP who have less severe visual agnosia have shown abnormal processing of bodies when presented with more subtle body expressions (Righart and de Gelder, 2007). However, our main interest was not the body recognition per se, but rather the face-context integration. Therefore, the fact that LG ultimately recognized the body images is important because it allows us to examine his processing of poorly recognized faces combined with highly recognizable bodies.

5.4 Recognition of Facial Expressions in Context

Two dependent measures were used in order to assess the recognition of contextualized facial expressions. Recognition Accuracy was defined as the degree to which the face is recognized as an exemplar of the emotion it was originally intended to convey, and Contextual Influence was defined as the degree to which the face is recognized as an exemplar of the emotion the context was intended to convey. Note that these measures do not necessarily overlap, as accuracy can decline without a rise in contextual influence. In other words, a participant may categorize a face to an emotion which does not correspond with the isolated face or the body context.
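The distinction between the two measures can be made concrete with a short sketch. It assumes each trial is recorded as a (face emotion, body emotion, response) triple; the function name `score_trials` and the example responses are hypothetical and serve only to show that accuracy and contextual influence are scored independently.

```python
def score_trials(trials):
    """Score a list of (face_emotion, body_emotion, response) tuples.

    Returns (accuracy_pct, contextual_influence_pct).
    """
    n = len(trials)
    # Accuracy: the response matches the emotion posed by the face.
    accurate = sum(resp == face for face, body, resp in trials)
    # Contextual influence: the response matches the emotion of the body.
    context = sum(resp == body for face, body, resp in trials)
    return 100.0 * accurate / n, 100.0 * context / n


# Hypothetical responses to disgust faces on anger bodies (illustration only).
example = [
    ("disgust", "anger", "anger"),
    ("disgust", "anger", "disgust"),
    ("disgust", "anger", "happiness"),
    ("disgust", "anger", "anger"),
]
accuracy, influence = score_trials(example)
print(accuracy, influence)
```

In this made-up example one response matches neither the face nor the body, which is why the two percentages need not sum to 100. In the identity condition the face and body emotions coincide, so the two measures are equal by construction.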

5.4.1 Recognition Accuracy

In order to examine LG‘s pattern of face-context integration we compared his overall recognition accuracy with that of the control group. Similar patterns emerged for the different facial expressions (see Table 2), hence, the analysis was collapsed across all 3 facial expressions (anger, disgust, and sadness) for all three levels of context-face similarity (congruent, low similarity, high similarity). The mixed ANOVA revealed a significant effect of context-face similarity, F(2, 12) =6.3, MSe = 177, p < .015, and a significant effect of the group F(1, 6) =11.6, MSe = 357.3, p < .02. A marginally significant interaction revealed that the context influenced the recognition of the emotions differently for LG and the controls F(2, 12) =3.8, MSe = 177, p < .052. As seen in Figure 5, the controls were most accurate in the congruent context, less accurate in the low similarity context, and least accurate in the high similarity context, a pattern replicated time and again in several studies (Aviezer et al., 2008a, 2009). LG, however, did not display this characteristic linear tendency. Rather, he was more accurate in the high similarity context than in the low similarity context. Such a pattern was not observed in any of the control participants (see Table 3). Hence, LG‘s performance was more accurate in the condition in which healthy participants are typically the least accurate.

Table 2.

Accuracy of recognizing the different facial expressions as a function of the context similarity and the group.

| Face | Context | Control Mean | Control SE | LG Mean |
|---|---|---|---|---|
| Disgust Face | Identity | 87.1 | 6.1 | 30.0 |
| | Low similarity | 61.4 | 8.6 | 0.0 |
| | High similarity | 15.7 | 7.8 | 0.0 |
| Anger Face | Identity | 82.9 | 6.8 | 20.0 |
| | Low similarity | 51.4 | 7.7 | 0.0 |
| | High similarity | 30.0 | 7.6 | 30.0 |
| Sad Face | Identity | 75.7 | 12.5 | 40.0 |
| | Low similarity | 75.7 | 11.9 | 10.0 |
| | High similarity | 28.6 | 7.0 | 20.0 |

Figure 5.

Facial expression recognition as a function of context similarity for LG and controls. Error bars represent standard error. The dashed line indicates chance level.

Table 3.

Individual control data, average control data and LG’s data for recognition of facial expressions as a function of the context similarity.

| Participant | Identity | Low similarity | High similarity |
|---|---|---|---|
| C1 | 73.3 | 73.3 | 16.7 |
| C2 | 60.0 | 70.0 | 26.7 |
| C3 | 70.0 | 36.7 | 20.0 |
| C4 | 100.0 | 43.3 | 16.7 |
| C5 | 96.7 | 80.0 | 56.7 |
| C6 | 93.3 | 73.3 | 16.7 |
| C7 | 80.0 | 63.3 | 20.0 |
| AVG Control | 81.9 | 62.9 | 24.8 |
| LG | 30.0 | 3.3 | 16.7 |
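As a quick arithmetic check on Table 3, the sketch below recomputes the control averages from the individual rows and counts how many controls were more accurate in the high similarity than in the low similarity condition, the reversal described for LG. The variable names are illustrative. Because the tabulated values are already rounded to one decimal, recomputed means can differ from the printed averages in the last digit.

```python
# Accuracy (%) per participant: (identity, low similarity, high similarity),
# copied from Table 3.
controls = {
    "C1": (73.3, 73.3, 16.7),
    "C2": (60.0, 70.0, 26.7),
    "C3": (70.0, 36.7, 20.0),
    "C4": (100.0, 43.3, 16.7),
    "C5": (96.7, 80.0, 56.7),
    "C6": (93.3, 73.3, 16.7),
    "C7": (80.0, 63.3, 20.0),
}
lg = (30.0, 3.3, 16.7)

# Column-wise means across the seven controls.
control_means = [sum(col) / len(col) for col in zip(*controls.values())]

# Controls whose accuracy was higher with high than with low similarity.
reversed_controls = [
    name for name, (_, low, high) in controls.items() if high > low
]
lg_reversed = lg[2] > lg[1]

print([round(m, 1) for m in control_means])
print(reversed_controls, lg_reversed)
```

With these values, no control shows the reversal while LG does, which matches the statement in the text that the pattern was not observed in any control participant.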

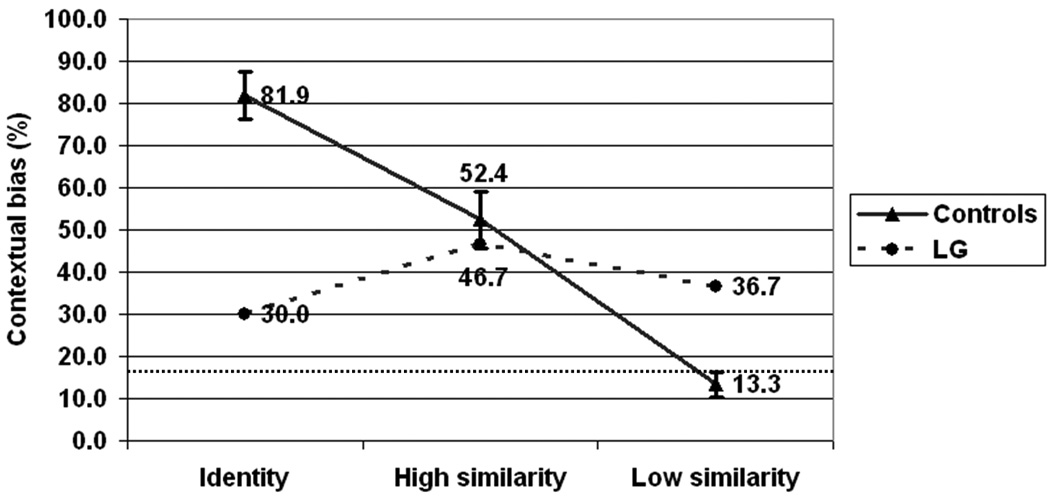

5.4.2 Contextual Influence

We next examined LG‘s tendency to categorize the faces as conveying the emotion of the context. For this analysis, the mean percentage of responses corresponding with the context emotion in each condition (congruent, high similarity, low similarity) was compared between groups (LG and controls), collapsed across all 3 facial expressions (anger, disgust, and sadness). As seen in Figure 6, the control group displayed a highly characteristic similarity effect. This mirrored the effect of accuracy on facial expression recognition, showing the strongest contextual influence in the identity condition, less contextual influence in the high similarity condition, and the least amount of contextual influence in the low similarity condition. By contrast, LG showed similar levels of contextual influence in all conditions.

Figure 6.

Contextual bias, the percent of face categorizations which were in accordance with the body context, as a function of context similarity for LG and controls. Error bars represent standard error. The bottom dashed line indicates chance level.

The statistical analyses concur with this pattern: Repeated-measures Group × Context similarity ANOVA showed a significant effect of the context condition, F(2, 12) = 6.6, MSe = 139.6, p < .01, and no effect of the group F(1,6) = 1.0. Most importantly, a significant interaction between the group and context condition F(2, 12) = 9.03, MSe = 139.6, p < .004, indicated that LG‘s pattern of contextual influence was different than that of the controls.

Follow-up t-tests were used to examine if LG’s similarity effect pattern was indeed atypical. We used the Revised Standardized Difference Test (RSDT), which allows intra-individual comparisons in different conditions by using normative data from a small N control group (Crawford and Garthwaite, 2005). The results showed that the difference between LG’s scores in the congruent vs. high similarity conditions was indeed abnormal and highly unlikely to occur in controls (one tailed), t(6) = 2.298, p < 0.03. Similarly, the difference between LG’s scores in the low similarity vs. high similarity conditions was highly unlikely to occur in controls (one tailed), t(6) = 2.3, p < 0.03. These analyses indicate that LG’s pattern of contextual influence was abnormal in the sense that he did not show a typical similarity effect. While controls showed a gradual decline in contextual influence as a function of the similarity between the expression in the face and the expression of the face that would have fit the context, LG did not show such a reliable pattern.

Finally, an unexpected finding in LG’s performance was his frequent tendency to categorize contextualized negative faces as happy, an error that rarely occurred in the control group. Overall, LG categorized 16/90 of the contextualized facial expressions as happy, as opposed to an average of 0.7/90 in the control group. This pattern has been documented previously in patients with bilateral amygdala damage (Adolphs and Tranel, 2003; Sato et al., 2002) and appears to apply to at least some individuals with DVA as well.

5.4.3 Reaction times

LG was slower than the controls at categorizing all the contextualized facial expressions F(1,6) = 17.1, p < .006. The remaining effects were not significant, p > .19.

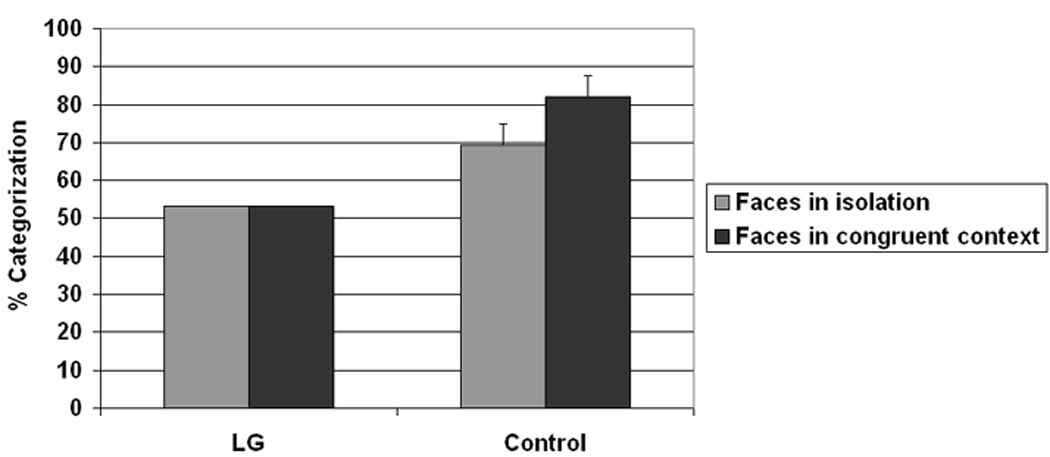

5.5 Assessing Face-Body integration with LG’s idiosyncratic recognition profile

One potential concern about the assessment of LG‘s face-context integration was that his recognition of the facial expressions was very low, hence an exact assessment of integration or its lack, may be difficult. One way to address this caveat is by examining the errors in emotion categorization. LG‘s poor recognition may result from diffuse random errors or from consistent and specific mis-categorizations. The latter case is more revealing because it suggests a unique and idiosyncratic pattern of emotion recognition that can be tested post-hoc for face-body integration deficits. In other words, LG may not show the same pattern of face-body integration as controls because the facial expressions may erroneously convey very different emotions to him than to controls. Nevertheless, these erroneous categorizations might still be integrated with the body context.

An examination of LG’s categorization of face-expressions in the Ekman-60 test from the FEEST (Young et al., 2002) indicated that LG’s recognition was below the norm impairment cutoff for all emotions. More importantly, his poor recognition did not reflect random noise, but rather systematic biases. LG displayed prominent response peaks (even if wrong) for each face. Disgust faces were most frequently categorized as anger (50%), anger faces were most frequently categorized as sad (60%), and sad faces were most frequently (and correctly) categorized as sad (50%).

Given these prominent peaks in categorizations, we reassessed LG’s face-context integration, taking into consideration his idiosyncratic recognition of the facial expressions. For example, if LG most frequently recognized anger faces as sad, we considered anger faces on sad bodies to be a “congruent” combination for him. We then assessed LG’s face-context integration by comparing the most frequent categorization of the faces in each category with and without a “congruent” context. As seen in Figure 7, LG did not show evidence of integration, as he showed exactly the same rate of categorization (53.3%) regardless of whether the faces appeared with or without “congruent” context. By comparison, control participants showed a marginally significant accuracy benefit when the faces were embedded in congruent context, t(6) = 2.4, p < .052, despite the fact that their accuracy was high to begin with (Figure 7).

Figure 7.

Average dominant response categorization to facial expressions in congruent context or no context. Note that the dominant response need not be correct, only most frequent. Congruent body context is defined as the body conveying the same emotion as the most frequent response to the face, even if erroneous.
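
The dominant-response logic described above can be illustrated with a short Python sketch. The response lists are hypothetical toy data chosen only to match the reported peak rates (50%, 60%, 50%); the function name `dominant_response`, the assumption of 10 faces per category, and the distribution of the non-dominant responses are illustrative assumptions, not part of the original analysis.

```python
from collections import Counter


def dominant_response(responses):
    """Return the most frequent label and its proportion of all responses."""
    counts = Counter(responses)
    label, n = counts.most_common(1)[0]
    return label, n / len(responses)


# Hypothetical responses to 10 faces per category (toy data, not LG's trials).
toy_responses = {
    "disgust": ["anger"] * 5 + ["disgust"] * 3 + ["sad"] * 2,
    "anger": ["sad"] * 6 + ["anger"] * 3 + ["fear"] * 1,
    "sad": ["sad"] * 5 + ["fear"] * 3 + ["anger"] * 2,
}

# The idiosyncratically "congruent" body for each face category is the body
# expressing the dominant (most frequent) response, even if that response is wrong.
congruent_body = {
    face: dominant_response(responses)[0]
    for face, responses in toy_responses.items()
}

for face, body in congruent_body.items():
    rate = dominant_response(toy_responses[face])[1]
    print(f"{face} face -> 'congruent' body: {body} (dominant rate {rate:.0%})")
```

Under this definition, integration would show up as a higher dominant-response rate when a face appears on its “congruent” body than when it appears without context.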

Finally, we performed a complementary item analysis and examined LG’s face-body integration, focusing on facial expressions that were accurately recognized. To this end, we selected specific sad facial expression exemplars that were correctly recognized when appearing in neutral context. We focused on the sad expressions because LG’s overall recognition for this face expression category (50%) was well above chance level (16.6%). We then examined the influence of the different emotional body context conditions on the recognition of these correctly recognized sad exemplars. In the congruent identity condition (sad body), recognition accuracy was 40% (i.e., 40% of the sad expressions that were correctly recognized when presented in neutral context). In the low similarity condition, in which the body bias is weak, accuracy dropped to 20%. However, in the high similarity condition, in which the body bias is very strong and recognition is typically lowest, LG’s recognition returned to 40%.

Using the Revised Standardized Difference Test (Crawford and Garthwaite, 2005), we compared LG and controls on the difference between accuracy scores for low similarity vs. high similarity bodies, for sad faces only. A trend indicated that LG’s pattern differed from that of the controls: LG’s accuracy in the low similarity condition was lower than that of controls (Z = −1.8), while his accuracy in the high similarity condition was slightly higher than that of controls (Z = +.08), t(6) = 1.56, p < .08, one-tailed (although caution is warranted when running analyses on so few data points). The overall pattern replicates our main findings with the full data set and with LG’s idiosyncratic analysis, and demonstrates that LG does not properly integrate faces and bodies even when the faces are recognized at a high and consistent rate.
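
For readers less familiar with single-case statistics, the sketch below shows how one case can be expressed as a Z score relative to a small control sample and tested with the simpler modified t-test of Crawford and Howell (1998). This is not the Revised Standardized Difference Test used above, which additionally takes into account the correlation between the two tasks in the control sample. The control scores, the case score, and the function name are hypothetical and serve only to illustrate the arithmetic with seven controls (df = 6).

```python
import math
from statistics import mean, stdev


def single_case_t(case_score, control_scores):
    """Compare one case with a small control sample.

    Returns (z, t, df): z is the case's standardized distance from the
    control mean; t rescales z by sqrt((n + 1) / n) so that the control
    sample is treated as a sample rather than a population (Crawford and
    Howell's modified t-test); df is n - 1.
    """
    n = len(control_scores)
    m = mean(control_scores)
    s = stdev(control_scores)  # sample SD (n - 1 denominator)
    z = (case_score - m) / s
    t = z / math.sqrt((n + 1) / n)
    return z, t, n - 1


# Hypothetical accuracy scores (percent correct) for 7 controls and one case.
controls = [60, 70, 80, 70, 60, 80, 70]
case = 50

z, t, df = single_case_t(case, controls)
print(f"Z = {z:.2f}, t({df}) = {t:.2f}")
```

The resulting t value would be evaluated against a t distribution with n − 1 degrees of freedom; with so few controls, t is noticeably smaller in magnitude than Z.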

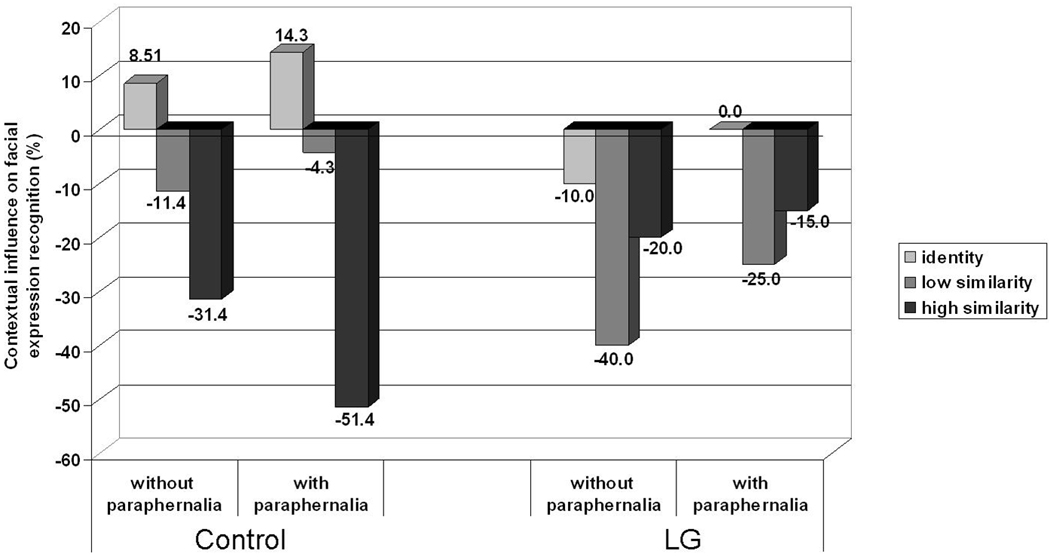

5.6 Assessing differences between bodies with and without affective paraphernalia

As some of our contextual stimuli had additional affective paraphernalia while others did not, we examined whether this factor was critical in explaining our results. To this end, we split all our stimuli into those with and without paraphernalia and examined the mean categorization of Identity, High similarity, and Low similarity composites, for LG and controls. In the stimuli without paraphernalia: Identity composites included anger faces on anger bodies, High similarity composites included disgust faces on anger, and Low similarity composites included sad faces on anger. In the stimuli with paraphernalia: Identity composites included disgust faces on disgust and sad faces on sad, High similarity composites included sad faces on fear and anger faces on disgust, and Low similarity composites included disgust faces on fear and anger faces on sad.

We used a dependent measure expressing the net impact of context on expression recognition, defined as (Accuracy of contextualized faces) − (Accuracy of isolated faces). Using this measure, positive scores reflect a boost in face recognition due to the context, while negative scores reflect a reduction in face recognition due to the context. As seen in Figure 8, controls showed the characteristic pattern of the similarity effect (Identity > High similarity > Low similarity) irrespective of paraphernalia conditions. By contrast, LG showed abnormal similarity effects and no boost from congruent bodies, irrespective of paraphernalia conditions. A repeated-measures ANOVA confirmed that the paraphernalia factor was not significant, nor did it interact with Similarity or Group, all Fs < 1.

Figure 8.

Influence of contextual bodies on the recognition of facial expressions broken down by the presence of paraphernalia, face-context similarity level, and group. Positive scores reflect an increase in recognition relative to the performance with no affective context, while negative scores reflect a decrease in recognition relative to the performance with no affective context.
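
The net-impact measure defined above is a simple difference score, sketched below in Python. The accuracy values are hypothetical percentages invented for illustration and do not correspond to the data in Figure 8; the function and variable names are likewise assumptions.

```python
def context_impact(acc_contextualized, acc_isolated):
    """Net impact of context in percentage points.

    Positive values mean the body context boosted face recognition;
    negative values mean the body context reduced it.
    """
    return acc_contextualized - acc_isolated


# Hypothetical accuracies (percent correct) for one facial expression category.
isolated_accuracy = 70
contextualized_accuracy = {
    "identity": 85,
    "low_similarity": 60,
    "high_similarity": 35,
}

impact = {
    condition: context_impact(acc, isolated_accuracy)
    for condition, acc in contextualized_accuracy.items()
}

for condition, score in impact.items():
    direction = "boost" if score > 0 else "reduction" if score < 0 else "no change"
    print(f"{condition}: {score:+d} points ({direction})")
```

Because the isolated-face accuracy is subtracted within each facial expression category, differences in baseline recognition between categories do not enter the score.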

To summarize the results, LG presented with relatively good recognition of highly recognizable emotional context bodies alongside impaired recognition of isolated facial expressions. When presented with contextualized facial expressions, he failed to display the typical contextual influence and similarity effect shown by controls and failed to take advantage of the highly recognizable bodies, suggesting abnormal integration of facial expressions and contextual bodies.

6. General Discussion

In the present paper we described the facial expression recognition patterns of LG, a young adult with DVA and severe DP. The main objective of the study was to examine LG’s integration of facial expressions and their context. Although LG was capable of extracting the emotional meaning from highly recognizable emotional body context, he was largely unable to accurately identify facial expressions, and he displayed an abnormal pattern of contextual influence from the body to the face.

6.1 Impaired face-context integration

LG’s recognition of facial expressions was not normally influenced by the perceptual context, as evidenced by his failure to show the typical “similarity effect”. To reiterate, the hallmark of this effect is that the magnitude of contextual influence is strongly correlated with the degree of similarity between the expression of the target face and the facial expression that would fit with the emotional context. In contrast, LG’s recognition of facial expressions was more accurate when the face was embedded in a “high similarity” context than when embedded in a “low similarity” context, a pattern never observed in any of the control participants.

Importantly, LG fails to integrate even when his idiosyncratic perceptual misrecognitions are taken into account and even when focusing on the specific facial expressions that were relatively well recognized. His performance was largely unchanged even when faces appeared in congruent bodies because the body context does not help him disambiguate the faces. Thus, even when the context contains information that can improve his performance, LG tends to rely on facial information which he cannot process well.

Interestingly, recent imaging work with individuals with DP has indicated that they show less segregated activation for faces and bodies (Van den Stock et al., 2008). Specifically, compared to controls, individuals with DP showed increased activation for bodies in the inferior occipital gyrus (IOG) and increased activation for neutral faces in the extrastriate body area (EBA). To the degree that less neural segregation implies increased integration, it appears that individuals with DP and those with DVA may display very different patterns of face-body integration. This may be particularly true for LG, who has documented difficulties with visual integration that are not characteristic of individuals with DP (Van den Stock et al., 2008).

6.2 Characterizing LG’s Developmental Visual Agnosia

One of the classical clinical distinctions in the agnosia literature is the differentiation between apperceptive and associative agnosia (Lissauer, 1890; Shallice and Jackson, 1988). These terms describe a breakdown in different stages of the perceptual hierarchy: apperception as a deficit in the initial stages of sensory processing in which the perceptual representation is constructed, and association as a deficit in mapping the final structural representation onto stored knowledge. While some findings support the possibility of pure associative agnosia (Anaki et al., 2007), others posit that a more basic, low-level deficit will always be found at the core (Delvenne et al., 2004; Farah, 1990).

In contrast to the clear-cut distinction of Lissauer (1890), more recent studies have established intermediate stages between the more associative-appearing agnosia and the more apperceptive low-level agnosia. Specifically, Riddoch and Humphreys (1987) presented case HJA and coined the term integrative agnosia to describe conditions in which the individual has deficient integration of local features of a visual stimulus into a coherent perceptual whole. HJA was capable of reproducing complex images by copy with exceptional quality. However, he was unable to integrate all the details into a coherent whole. Importantly, in addition to his integrative visual agnosia, HJA also suffered from profound prosopagnosia, suggesting that integrative abilities are necessary for normal face as well as object perception. A similar pattern of integrative visual deficits was reported by Aviezer et al. (2007) with case SE. Similar to HJA, SE could reproduce copies of complex images, yet when presented with an easily recognizable schematic face, he would describe it as a “random bunch of lines”.

Both HJA and SE were born with normal vision and acquired their agnosia after brain damage following bilateral stroke. By contrast, LG, the individual at the focus of the current report, has no obvious structural brain damage (Gilaie-Dotan et al., 2009), and his deficit has been apparent since he was a toddler (Ariel and Sadeh, 1996).

The etiology of LG’s agnosia is very different from that of SE and HJA. Yet, from the perspective of clinical phenomenology, there are several indications that the agnosia of all these individuals includes integrative impairments. This would explain LG’s deficient recognition of fragmented images (HVOT), and his phenomenological experience of seeing parts that do not integrate into coherent objects. The results of the present investigation indicate abnormal integration of facial and body expression and add supporting evidence to the notion that LG’s agnosia is integrative in nature.

6.3 A possible mechanism for impaired face-context integration

LG’s deficits in the integration of emotional contexts and facial expressions may stem from deficient face scanning patterns. A scanning deficiency might prevent processing of specific diagnostic emotional features of the face, which, in turn, may alter the recognition of emotions from the face (Smith et al., 2005). In line with this thought, we recently showed that the fixation patterns to facial expressions did indeed change as a function of the context in which they were embedded (Aviezer et al., 2008b). For example, disgust expressions perceived in an anger context were scanned like anger expressions in an anger context. Conversely, anger expressions perceived in a disgust context were scanned similarly to disgust expressions in a disgust context. These findings suggest that healthy participants may use the context as a guide to the informative diagnostic regions in the face. Yet LG, despite his intact recognition of the context, may not be able to utilize that information to direct his fixations to the appropriate regions in the face. Consequently, he fails to recognize the facial expressions even when the context is well recognized. Tentative support for this assumption is provided by the fact that, under certain conditions, priming LG with emotionally diagnostic face components improves his ability to identify the full facial expression (Aviezer et al., submitted).

6.4 Summary

We described LG, an individual with DVA who has severe difficulties with visual perception and integration as well as severe prosopagnosia. LG has impaired recognition of facial expressions although he succeeded in recognizing highly prototypical images of faceless emotional body context. When presented with face-body combinations LG failed to integrate the facial expressions with the body context. He did not show characteristic influences of incongruent body context on the recognition of emotion from the face and he did not benefit from context which was congruent with the (actual or misperceived) emotion of the face. Hence, abnormal integration in DVA may extend from the face level to the full person level.

Acknowledgments

This work was funded by an NIMH grant R01 MH 64458-06 to Bentin, and an ISF grant 1035/07 to Hassin. We thank LG and his family for their cooperation and help.

Footnotes

We use the term “accuracy” in the limited sense of conforming to the consensus. This terminology is used for reasons of convenience. The question of whether consensual categorizations of facial expressions are indeed accurate remains to be determined empirically.

Note, however, that the different base rates for each face expression are inconsequential for the main purpose of our study because our critical comparisons are all within a given facial expression category (i.e., comparing the recognition of Face expression Y in contexts A, B, and C).

References

- Adolphs R. Perception and emotion. Current Directions in Psychological Science. 2006;15(5):222–226.

- Adolphs R, Tranel D. Amygdala damage impairs emotion recognition from scenes only when they contain facial expressions. Neuropsychologia. 2003;41(10):1281–1289. doi: 10.1016/s0028-3932(03)00064-2.

- Anaki D, Kaufman Y, Freedman M, Moscovitch M. Associative (prosop) agnosia without (apparent) perceptual deficits: a case-study. Neuropsychologia. 2007;45(8):1658–1671. doi: 10.1016/j.neuropsychologia.2007.01.003.

- Ariel R, Sadeh M. Congenital visual agnosia and prosopagnosia in a child: a case report. Cortex. 1996;32(2):221–240. doi: 10.1016/s0010-9452(96)80048-7.

- Aviezer H, Bentin S, Hassin RR, Meschino WS, Kennedy J, Grewal S, Esmail S, Cohen S, Moscovitch M. Not on the face alone: perception of contextualized face expressions in Huntington's disease. Brain. 2009;132(6):1633–1644. doi: 10.1093/brain/awp067.

- Aviezer H, Hassin R, Bentin S, Trope Y. Putting facial expressions into context. In: Ambady N, Skowronski J, editors. First Impressions. New York: Guilford Press; 2008a.

- Aviezer H, Hassin RR, Ryan J, Grady C, Susskind J, Anderson A, Moscovitch M, Bentin S. Angry, disgusted, or afraid? Studies on the malleability of emotion perception. Psychological Science. 2008b;19(7):724–732. doi: 10.1111/j.1467-9280.2008.02148.x.

- Aviezer H, Landau AN, Robertson LC, Peterson MA, Soroker N, Sacher Y, Bonneh Y, Bentin S. Implicit integration in a case of integrative visual agnosia. Neuropsychologia. 2007;45(9):2066–2077. doi: 10.1016/j.neuropsychologia.2007.01.024.

- Behrmann M, Avidan G. Congenital prosopagnosia: face-blind from birth. Trends in Cognitive Sciences. 2005;9(4):180–187. doi: 10.1016/j.tics.2005.02.011.

- Bentin S, DeGutis JM, D'Esposito M, Robertson LC. Too many trees to see the forest: Performance, event-related potential, and functional magnetic resonance imaging manifestations of integrative congenital prosopagnosia. Journal of Cognitive Neuroscience. 2007;19(1):132–146. doi: 10.1162/jocn.2007.19.1.132.

- Benton A, Sivan A, Hamsher KS, Varney N, Spreen O. Benton Facial Recognition Test. New York: Oxford University Press; 1983.

- Bonneh YS, Sagi D, Polat U. Local and non-local deficits in amblyopia: acuity and spatial interactions. Vision research. 2004;44(27):3099–3110. doi: 10.1016/j.visres.2004.07.031.

- Brosch T, Pourtois G, Sander D. The perception and categorisation of emotional stimuli: A review. Cognition & Emotion. 2010;24(3):377–400.

- Buck R. Social and emotional functions in facial expression and communication: the readout hypothesis. Biological Psychology. 1994;38(2–3):95–115. doi: 10.1016/0301-0511(94)90032-9.

- Calder AJ, Young AW. Understanding the recognition of facial identity and facial expression. Nature Reviews Neuroscience. 2005;6(8):641–651. doi: 10.1038/nrn1724.

- Corballis MC. Comparing a single case with a control sample: Correction and further comment. Neuropsychologia. 2009a;47(13):2696–2697. doi: 10.1016/j.neuropsychologia.2009.04.012.

- Corballis MC. Comparing a single case with a control sample: Refinements and extensions. Neuropsychologia. 2009b;47(13):2687–2689. doi: 10.1016/j.neuropsychologia.2009.04.007.

- Crawford JR, Garthwaite PH. Testing for suspected impairments and dissociations in single-case studies in neuropsychology: Evaluation of alternatives using Monte Carlo simulations and revised tests for dissociations. Neuropsychology. 2005;19(3):318–331. doi: 10.1037/0894-4105.19.3.318.

- Crawford JR, Garthwaite PH, Howell DC. On comparing a single case with a control sample: An alternative perspective. Neuropsychologia. 2009;47(13):2690–2695. doi: 10.1016/j.neuropsychologia.2009.04.011.

- de Gelder B. Towards the neurobiology of emotional body language. Nature Reviews Neuroscience. 2006;7(3):242–249. doi: 10.1038/nrn1872.

- de Gelder B, Meeren HKM, Righart R, Stock J, van de Riet WAC, Tamietto M. Beyond the face: exploring rapid influences of context on face processing. Progress in brain research. 2006;155:37–48. doi: 10.1016/S0079-6123(06)55003-4.

- de Gelder B, Rouw R. Configural face processes in acquired and developmental prosopagnosia: evidence for two separate face systems? NeuroReport. 2000;11(14):3145. doi: 10.1097/00001756-200009280-00021.

- de Gelder B, Rouw R. Beyond localisation: a dynamical dual route account of face recognition. Acta Psychologica. 2001;107(1–3):183–207. doi: 10.1016/s0001-6918(01)00024-5.

- DeGutis JM, Bentin S, Robertson LC, D'Esposito M. Functional plasticity in ventral temporal cortex following cognitive rehabilitation of a congenital prosopagnosic. Journal of Cognitive Neuroscience. 2007;19(11):1790–1802. doi: 10.1162/jocn.2007.19.11.1790.

- Delvenne JF, Seron X, Coyette F, Rossion B. Evidence for perceptual deficits in associative visual (prosop) agnosia: A single-case study. Neuropsychologia. 2004;42(5):597–612. doi: 10.1016/j.neuropsychologia.2003.10.008.

- Dobel C, Bölte J, Aicher M, Schweinberger SR. Prosopagnosia without apparent cause: Overview and diagnosis of six cases. Cortex. 2007;43(6):718–733. doi: 10.1016/s0010-9452(08)70501-x.

- Duchaine B, Germine L, Nakayama K. Family resemblance: Ten family members with prosopagnosia and within-class object agnosia. Cognitive neuropsychology. 2007a;24(4):419–430. doi: 10.1080/02643290701380491.

- Duchaine B, Jenkins R, Germine L, Calder AJ. Normal gaze discrimination and adaptation in seven prosopagnosics. Neuropsychologia. 2009a;47(10):2029–2036. doi: 10.1016/j.neuropsychologia.2009.03.011.

- Duchaine B, Murray H, Turner M, White S, Garrido L. Normal social cognition in developmental prosopagnosia. Cognitive neuropsychology. 2009b;26(7):620–634. doi: 10.1080/02643291003616145.

- Duchaine B, Nakayama K. The Cambridge Face Memory Test: Results for neurologically intact individuals and an investigation of its validity using inverted face stimuli and prosopagnosic participants. Neuropsychologia. 2006a;44(4):576–585. doi: 10.1016/j.neuropsychologia.2005.07.001.

- Duchaine B, Yovel G, Nakayama K. No global processing deficit in the Navon task in 14 developmental prosopagnosics. Social cognitive and affective neuroscience. 2007b doi: 10.1093/scan/nsm003.

- Duchaine BC. Developmental prosopagnosia with normal configural processing. NeuroReport. 2000;11(1):79. doi: 10.1097/00001756-200001170-00016.

- Duchaine BC, Nakayama K. Developmental prosopagnosia: a window to content-specific face processing. Current Opinion in Neurobiology. 2006b;16(2):166–173. doi: 10.1016/j.conb.2006.03.003.

- Duchaine BC, Parker H, Nakayama K. Normal recognition of emotion in a prosopagnosic. Perception. 2003a;32(7):827–838. doi: 10.1068/p5067.

- Duchaine BC, Wendt TN, New J, Kulomäki T. Dissociations of visual recognition in a developmental agnosic: Evidence for separate developmental processes. Neurocase. 2003b;9(5):380–389. doi: 10.1076/neur.9.5.380.16556.

- Duchaine BC, Yovel G, Butterworth EJ, Nakayama K. Prosopagnosia as an impairment to face-specific mechanisms: Elimination of the alternative hypotheses in a developmental case. Cognitive neuropsychology. 2006;23(5):714–747. doi: 10.1080/02643290500441296.

- Ekman P. Facial expressions of emotion: New findings, new questions. Psychological Science. 1992;3(1):34–38.

- Ekman P. Facial expression and emotion. American Psychologist. 1993;48(4):384–392. doi: 10.1037//0003-066x.48.4.384.

- Ekman P, Friesen WV. Pictures of facial affect. Palo Alto, CA: Consulting Psychologists Press; 1976.

- Ekman P, O'Sullivan M. The role of context in interpreting facial expression: Comment on Russell and Fehr (1987) Journal of Experimental Psychology: General. 1988;117(1):86–88. doi: 10.1037//0096-3445.117.1.86.

- Etcoff NL, Magee JJ. Categorical perception of facial expressions. Cognition. 1992;44(3):227–240. doi: 10.1016/0010-0277(92)90002-y.

- Farah MJ. Visual agnosia: Disorders of object recognition and what they tell us about normal vision. MIT: MIT Press; 1990.

- Garrido L, Furl N, Draganski B, Weiskopf N, Stevens J, Tan GCY, Driver J, Dolan RJ, Duchaine B. Voxel-based morphometry reveals reduced grey matter volume in the temporal cortex of developmental prosopagnosics. Brain. 2009;132(12):3443. doi: 10.1093/brain/awp271.

- Gilaie-Dotan S, Perry A, Bonneh Y, Malach R, Bentin S. Seeing with Profoundly Deactivated Mid-level Visual Areas: Non-hierarchical Functioning in the Human Visual Cortex. Cerebral Cortex. 2009;19(7):1687–1703. doi: 10.1093/cercor/bhn205.

- Humphreys K, Avidan G, Behrmann M. A detailed investigation of facial expression processing in congenital prosopagnosia as compared to acquired prosopagnosia. Experimental Brain Research. 2007;176(2):356–373. doi: 10.1007/s00221-006-0621-5.

- Kennerknecht I, Grueter T, Welling B, Wentzek S, Horst J, Edwards S, Grueter M. First report of prevalence of non syndromic hereditary prosopagnosia (HPA) American Journal of Medical Genetics Part A. 2006;140(15):1617–1622. doi: 10.1002/ajmg.a.31343.

- Kovács I, Kozma P, Fehér Á, Benedek G. Late maturation of visual spatial integration in humans. Proceedings of the National Academy of Sciences of the United States of America. 1999;96(21):12204. doi: 10.1073/pnas.96.21.12204.

- Lissauer H. Ein Fall von Seelenblindheit nebst einem Beitrage zur Theorie derselben. European Archives of Psychiatry and Clinical Neuroscience. 1890;21(2):222–270.

- Maurer D, Grand RL, Mondloch CJ. The many faces of configural processing. Trends in Cognitive Sciences. 2002;6(6):255–260. doi: 10.1016/s1364-6613(02)01903-4.

- Meeren HKM, van Heijnsbergen CCRJ, de Gelder B. Rapid perceptual integration of facial expression and emotional body language. Proceedings of the National Academy of Sciences of the United States of America. 2005;102(45):16518–16523. doi: 10.1073/pnas.0507650102.

- Nakamura M, Buck R, Kenny DA. Relative contributions of expressive behavior and contextual information to the judgment of the emotional state of another. Journal of Personality and Social Psychology. 1990;59(5):1032–1039. doi: 10.1037//0022-3514.59.5.1032.

- Navon D. Forest before trees: The precedence of global features in visual perception. Cognitive psychology. 1977;9(3):353–383.

- Polat U, Sagi D. Lateral interactions between spatial channels: suppression and facilitation revealed by lateral masking experiments. Vision research. 1993;33(7):993–999. doi: 10.1016/0042-6989(93)90081-7.

- Riddoch M, Humphreys GW. A case of integrative visual agnosia. Brain. 1987;110(6):1431. doi: 10.1093/brain/110.6.1431.

- Riddoch MJ, Humphreys GW. BORB: Birmingham object recognition battery. Lawrence Erlbaum; 1993.

- Righart R, de Gelder B. Impaired face and body perception in developmental prosopagnosia. Proceedings of the National Academy of Sciences. 2007;104(43):17234. doi: 10.1073/pnas.0707753104.

- Sato W, Kubota Y, Okada T, Murai T, Yoshikawa S, Sengoku A. Seeing happy emotion in fearful and angry faces: Qualitative analysis of facial expression recognition in a bilateral amygdala-damaged patient. Cortex. 2002;38(5):727–742. doi: 10.1016/s0010-9452(08)70040-6.

- Shallice T, Jackson M. Lissauer on agnosia. Cognitive neuropsychology. 1988;5(2):153–156.

- Smith ML, Cottrell GW, Gosselin F, Schyns PG. Transmitting and Decoding Facial Expressions. Psychological Science. 2005;16(3):184–189. doi: 10.1111/j.0956-7976.2005.00801.x.

- Susskind JM, Littlewort G, Bartlett MS, Movellan J, Anderson AK. Human and computer recognition of facial expressions of emotion. Neuropsychologia. 2007;45(1):152–162. doi: 10.1016/j.neuropsychologia.2006.05.001.

- Van den Stock J, Righart R, de Gelder B. Body Expressions Influence Recognition of Emotions in the Face and Voice. Emotion. 2007;7(3):487–494. doi: 10.1037/1528-3542.7.3.487.

- Van den Stock J, van de Riet WAC, Righart R, de Gelder B. Neural correlates of perceiving emotional faces and bodies in developmental prosopagnosia: an event-related fMRI-study. PLoS One. 2008;3(9):3195. doi: 10.1371/journal.pone.0003195.

- Young A, Perrett D, Calder A, Sprengelmeyer R, Ekman P. Facial expressions of emotion: Stimuli and tests (FEEST) 2002

- Young AW, Rowland D, Calder AJ, Etcoff NL, Seth A, Perrett DI. Facial expression megamix: Tests of dimensional and category accounts of emotion recognition. Cognition. 1997;63(3):271–313. doi: 10.1016/s0010-0277(97)00003-6.

- Zaki J, Ochsner K. The need for a cognitive neuroscience of naturalistic social cognition. Annals of the New York Academy of Sciences. 2009;1167:16–30. doi: 10.1111/j.1749-6632.2009.04601.x.