Abstract

Objectives: The purpose of this study is to assess the usefulness of five full-text drug databases as evaluated by medical librarians, pharmacy faculty, and pharmacy students at an academic health center. Study findings and recommendations are offered as guidance to librarians responsible for purchasing decisions.

Methods: Four pharmacy students, four pharmacy faculty members, and four medical librarians answered ten drug information questions using the databases AHFS Drug Information (STAT!Ref); DRUGDEX (Micromedex); eFacts (Drug Facts and Comparisons); Lexi-Drugs Online (Lexi-Comp); and the PDR Electronic Library (Micromedex). Participants noted whether each database contained answers to the questions and evaluated each database on ease of navigation, screen readability, overall satisfaction, and product recommendation.

Results: While each study group found that DRUGDEX provided the most direct answers to the ten questions, faculty members gave Lexi-Drugs the highest overall rating. Students favored eFacts. The faculty and students found the PDR least useful. Librarians ranked DRUGDEX the highest and AHFS the lowest. The comments of pharmacy faculty and students show that these groups preferred concise, easy-to-use sources; librarians focused on the comprehensiveness, layout, and supporting references of the databases.

Conclusion: This study demonstrates the importance of consulting with primary clientele before purchasing databases. Although there are many online drug databases to consider, present findings offer strong support for eFacts, Lexi-Drugs, and DRUGDEX.

INTRODUCTION

Drug literature is vast and complex. Keeping up with the literature is a universal problem in the pharmaceutical and other sciences.

Drug literature is growing rapidly in size. It is also increasingly complex, i.e., interdisciplinary and interprofessional in nature.

Literature on clinical experience with drugs is sizable and is growing. Its effective use by the practitioner offers many difficulties.

Competent evaluation of masses of drug information is particularly necessary. [1]

Although these words could have been written today, Hubert Humphrey actually wrote them in 1963 when a Senate committee on government operations requested a National Library of Medicine factual survey on “The Nature and Magnitude of Drug Literature.” As the amount of information available to pharmacists has increased, the pharmacist's role has expanded. Over the past forty years pharmacists have become more than mere dispensers of pills; they are often the first providers of information about prescribed medications or over-the-counter remedies. Furthermore, patients and the general public often consider a pharmacist the most accessible and trusted health care professional [2]. Growth in medication use during the 1990s has further escalated the demand for pharmacists [3]. Pharmacists are now located in supermarkets, ubiquitous chain pharmacies, and specialized care settings, such as transplantation, cancer, and critical care hospital units. In addition to counseling patients, pharmacists advise other health professionals about the interactions and side effects of drugs in order to reduce medication errors. As a consequence, a pharmacist must know how to efficiently and quickly find accurate and complete drug information without searching through stacks of print resources or spending hours using the computer. Moreover, librarians must know what resources to select for their pharmacist clientele.

In 1983, there were three bibliographic databases published exclusively for pharmacy: International Pharmaceutical Abstracts, Pharmaceutical News Index, and Ringdoc [4]. MEDLINE, Excerpta Medica, and other scientific databases also covered pharmacy topics. With the advent of CD-ROM, full-text databases were developed. Most of these databases were electronic versions of print sources. By 2001, Malone had identified eight full-text general drug information sources: AHFS (American Society of Health-System Pharmacists) Drug Information; Clinical Pharmacology; Clinical Reference Library; DRUGDEX Information System; Drug Facts & Comparisons; Mosby's GenRx; the Physicians' Desk Reference (PDR); and USPDI (United States Pharmacopeial Convention). This figure did not include databases designed for nurses or consumers [5].

When deciding which full-text databases to purchase, health sciences librarians face many choices. In addition to considering the cost and search features, it is important for the librarian to consider how often the content is updated, accuracy of the content, access options for remote users, whether the users require drug photographs or imprint information, whether related patient education sources should be purchased, and whether sources should be loaded on an intranet or accessed via the Internet.

This study assesses the usefulness of five full-text drug databases as evaluated by medical librarians, pharmacy faculty, and pharmacy students. The database preferences and comments from members of each study group are compared and contrasted. Recommendations are provided to assist librarians who are responsible for purchasing electronic resources.

LITERATURE REVIEW

A literature search revealed several previous evaluations and comparative studies involving online drug information sources [6–9]. The majority of authors or participants in these studies were practicing pharmacists. In the often-cited 1997 article by Barillot and colleagues, nine bibliographic drug information databases were included in a study designed to determine which databases offered the most comprehensive, relevant answers to a set of drug interaction questions [10]. In this case, the authors and database searchers were all pharmacists. We did not find other drug information studies that included pharmacy students or pharmacy faculty as study participants. While librarians have published database evaluations [11, 12], we did not find drug information studies using librarians as test subjects.

METHODS

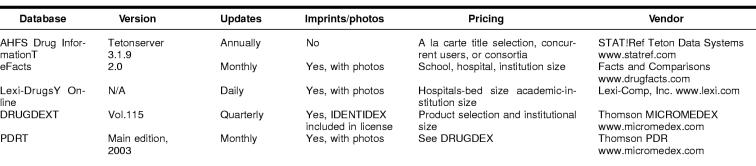

This study focuses on the evaluation of five full-text drug information databases: AHFS Drug Information (provided by STAT!Ref); DRUGDEX (from Thomson MICROMEDEX); eFacts (from Drug Facts and Comparisons); Lexi-Drugs Online (from Lexi-Comp); and the PDR Electronic Library (from Thomson). These databases were chosen because Ohio State University subscribed to them or could easily arrange for their trial. Table 1 offers a summary of product information for the selected databases.

Table 1. Database purchase information

Evaluators, who were from Ohio State University (OSU), included: first-year pharmacy students, medical librarians who were not directly involved with the College of Pharmacy, and College of Pharmacy faculty members. First-year students were chosen because they had not yet taken a required drug information course and were assumed to be novice-level searchers. Four student participants were randomly chosen from twelve volunteers. Four pharmacy faculty and four medical librarians volunteered to participate in the study. Informal email surveys indicated that the search experience of the four pharmacy faculty ranged from five to over fifteen years. This equaled the search experience of the four librarians, which also ranged from five to over fifteen years.

We designed the study so there were four people in each subset because this was manageable for the authors and because current research indicates that four to five participants will expose the vast majority of usability problems [13, 14]. Given the varied levels of experience and an even gender split among our participants, we believe they are representative of their user groups. As an incentive to participate, all subjects were given gift certificates to the OSU Medical Center Bookstore.
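The claim that four to five participants expose most usability problems is usually justified with a simple cumulative-detection model: if each participant independently notices a given problem with probability p, the expected share of problems seen by at least one of n participants is 1 - (1 - p)^n. The sketch below illustrates that arithmetic only. The model is not stated in this study, and the detection probability `ASSUMED_P` is a hypothetical value chosen for illustration, not a figure reported here.

```python
def proportion_found(p: float, n: int) -> float:
    """Expected share of problems noticed by at least one of n participants.

    Assumes each participant independently detects any given problem
    with the same probability p (an illustrative simplification).
    """
    if not 0.0 <= p <= 1.0:
        raise ValueError("p must be between 0 and 1")
    if n < 0:
        raise ValueError("n must be non-negative")
    return 1.0 - (1.0 - p) ** n


# Hypothetical per-participant detection probability (assumption, not study data).
ASSUMED_P = 0.3

# Expected coverage for group sizes of one to six participants.
coverage = {n: round(proportion_found(ASSUMED_P, n), 3) for n in range(1, 7)}

if __name__ == "__main__":
    for n, share in coverage.items():
        print(f"{n} participant(s): {share:.1%} of problems expected to surface")
```

Under this assumed value, four participants would be expected to surface roughly three quarters of the problems and five participants a little over four fifths; a lower or higher p shifts those figures accordingly.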

Each group of participants was given identical half-hour training concerning the basic search features of all five databases. We did not offer a conventional, extensive library instruction session. Rather, our goal was to ensure that all participants understood our instructions and were provided the same information about each source. During the training sessions we did not encourage individual questions from the participants.

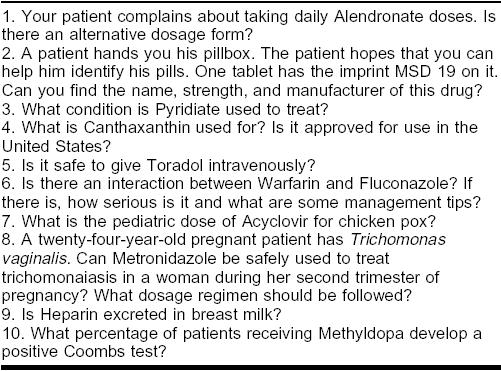

For this study, we reviewed over 100 documented inquiries received by the OSU Medical Center Drug Information Center during the 1990s. The director of the center provided questions that he uses in the drug information course taught in the College of Pharmacy. We eliminated any questions requiring extensive knowledge of pharmacy, such as those pertaining to pharmacokinetics or questions pertaining to drugs recently discontinued by the US Food and Drug Administration (FDA). We also did not consider questions about herbal remedies. From the large list, we selected twenty questions that concerned dosage, identification, availability, indications, adverse effects or drug interactions, and drug use in pregnancy and lactation. Next, the study authors and two library assistants attempted to answer the twenty questions. We eliminated questions with answers that varied considerably among the testers or that required more than twenty minutes to complete. Figure 1 shows the set of ten questions that were finally selected for use in the study.

Figure 1. Questions for study participants

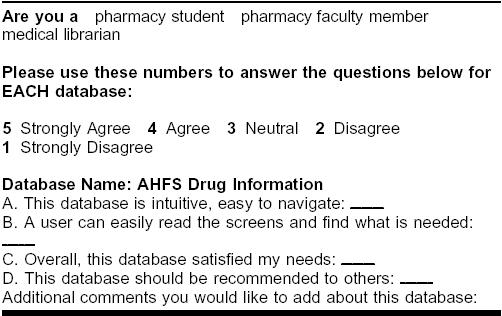

Participants were instructed to answer the ten selected questions using each of the five drug information databases. For purposes of this study, participants were not required to record their answers to the ten questions. Subjects were informed that actual searches would not be graded. Participants first used log sheets to record their search results and document the amount of time spent on each question. For each database, participants noted whether it provided a direct answer, provided sufficient information to form an answer, or provided no answer, or whether they were unsure that an answer was provided. Based on their search experiences, participants completed the Database Evaluation Form (Figure 2). Subjects rated each database on ease of navigation, screen readability, overall satisfaction, and product recommendation.

Figure 2. Database evaluation form

RESULTS

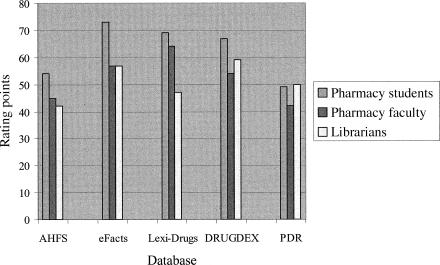

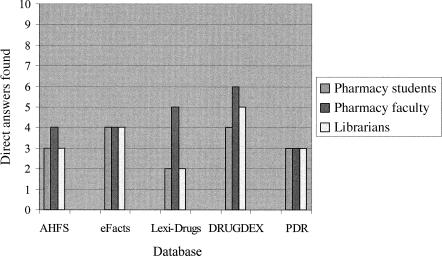

The results of the study demonstrate that although each database has its merits, user preferences do exist. Somewhat surprisingly, database familiarity or an ability to locate direct answers to the questions did not always translate to preference. In addition to variations in user preferences, the results also show the differences in the approach and evaluative process used by each study group. Figure 3 provides a summary of database evaluation rankings by study group. These rankings are based on participants' Database Evaluation Form answers. Figure 4 indicates the ability of each database to provide direct answers to the ten drug information questions as reported by each study group. This information is compiled from log sheet data provided by participants. We emphasize this particular finding because we believe our participants were looking for the most direct answer to the questions.

Figure 3. Database ratings by study group

Figure 4. Database search results by study group

Pharmacy faculty members

The Database Evaluation Form results indicate that pharmacy faculty members preferred Lexi-Drugs. Lexi-Drugs was the only database that received more than sixty points, out of a maximum of eighty, from the faculty group. Pharmacy faculty rated eFacts and DRUGDEX similarly with fifty-seven and fifty-four points respectively. In open-ended comments, faculty members focused on the speed and ease in finding answers when using Lexi-Drugs and eFacts. Although some faculty members found DRUGDEX difficult to use, most faculty members did observe that DRUGDEX has a wealth of information. The PDR and AHFS received the lowest average rankings from the pharmacy faculty, with forty-five and forty-two points respectively.

A particularly striking observation is the lack of uniformity among the pharmacy faculty members' evaluations concerning three of the five databases. Simply reviewing the average evaluative rankings (Figure 3) of AHFS, eFacts, and the PDR does not expose the pharmacy faculty members' opinions of these databases. When looking at individual rankings of AHFS, one participant assigned this database four points on a scale of one to twenty, while another rated it sixteen points. One faculty member assigned eFacts eight points while another rated it twenty. Similarly, a review of the PDR rankings found one faculty member assigned it four points and two others assigned it fourteen points. Although the data are inconclusive, we speculate that these wide deviations are due to the differences in ease and experience with online database searching or each participant's unique approaches to searching based on areas of pharmacy specialization.
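The point that group figures can hide individual disagreement can be checked with the numbers reported above. The sketch below assumes that each group figure is the sum of four individual ratings, each on the one-to-twenty scale (which matches the stated maximum of eighty), and that the quoted individual ratings for AHFS and eFacts are the lowest and highest in the group. Both are interpretations of the text, and the variable names are illustrative.

```python
PARTICIPANTS = 4
MAX_PER_PARTICIPANT = 20
GROUP_MAX = PARTICIPANTS * MAX_PER_PARTICIPANT  # 80, the maximum cited in the text

# Faculty group totals reported in the text.
group_total = {"AHFS": 42, "eFacts": 57, "PDR": 45}

# Individual faculty ratings quoted in the text (only some are given).
known_ratings = {
    "AHFS": [4, 16],
    "eFacts": [8, 20],
    "PDR": [4, 14, 14],
}

# Mean rating per participant, assuming the group total is a simple sum.
mean_rating = {db: total / PARTICIPANTS for db, total in group_total.items()}

# Spread between the lowest and highest quoted ratings.
quoted_spread = {db: max(r) - min(r) for db, r in known_ratings.items()}

# Points left over for the participants whose ratings are not quoted.
unquoted_points = {db: group_total[db] - sum(known_ratings[db]) for db in group_total}

if __name__ == "__main__":
    for db in group_total:
        print(
            f"{db}: mean {mean_rating[db]:.2f}/20, "
            f"quoted spread {quoted_spread[db]} points, "
            f"{unquoted_points[db]} points from unquoted participants"
        )
```

If the summing assumption holds, three PDR ratings are quoted, so the fourth faculty rating would be thirteen points. For all three databases the quoted spread of ten to twelve points is large relative to a mean of roughly ten to fourteen, which is why the group figure alone says little about individual opinion.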

Although pharmacy faculty members had an overall neutral evaluative reaction to DRUGDEX, they had the greatest success finding direct answers to the questions using this database. The faculty members' log sheets indicated that they found a high number of direct answers using Lexi-Drugs, the top-rated database on their evaluation forms. Their success in using DRUGDEX, if not based on the content alone, could be based on their familiarity with DRUGDEX because it has been available at OSU for several years. As was predicted by the authors, faculty members found the fewest direct answers to the questions when searching the PDR and gave it a low rating. Pharmacy faculty noted that the electronic version of the PDR has the same content limitations as the print version because information in this reference is limited to FDA product labeling. Manufacturers must pay to have their medications included in the PDR.

Faculty members required an average of 142 minutes to complete all ten drug information questions. The group spent the most time looking for answers in AHFS. Like the other two study groups, the pharmacy faculty spent the least amount of time when searching Lexi-Drugs, averaging twenty-three minutes in that database. This result has a direct association with the high average evaluative ranking that faculty members gave Lexi-Drugs. This finding is particularly interesting because Lexi-Drugs was previously unfamiliar to all study participants.

Pharmacy students

Although the outcome of the students' database evaluation was similar to that of the faculty members, the students, as a group, were much more generous with their ratings than the faculty members. On the Database Evaluation Form, students assigned seventy-three points to eFacts, the highest database rating given by any study group. Students' comments indicated that they found eFacts user-friendly and easy to navigate. Students rated Lexi-Drugs and DRUGDEX almost equally, with sixty-nine and sixty-seven points respectively. The students appreciated Lexi-Drugs' ease of use and concise results. One student summed up DRUGDEX by saying, “information, information, information.” Like faculty members, students assigned their lowest ratings to AHFS, fifty-four points, and the PDR, forty-nine points. The authors hypothesize that the students' higher database ratings may be due to their unbiased opinions of the databases at this point in their pharmacy training as well as to their overall comfort with electronic resources.

Like the faculty group, the student group found the most direct answers to questions using DRUGDEX and eFacts. They found the fewest direct answers using Lexi-Drugs. Interestingly, although the student and faculty groups successfully answered the most questions using DRUGDEX, neither group gave DRUGDEX the highest evaluative rating. Students commented that although they found it a great source of detailed information, they had difficulty following its outline format. One student recommended DRUGDEX as the source for in-depth information rather than a quick answer to questions.

Students spent much less time searching databases for answers than the faculty members or librarians. Faculty and librarians spent, on average, 142 and 144 minutes to complete the study, while students spent, on average, 107 minutes. Although the data from this study are inconclusive, one previous study found that students enjoy searching the Web because they can find information quickly [15]. One could speculate that the students hurried to complete this study in order to finish other assignments. Likewise, students as a group may have been more impatient than the other groups. For example, the students spent, on average, only eighteen minutes searching Lexi-Drugs and, consequently, found the fewest direct answers in this database. As with the faculty members, the students spent the most time on the questions when searching AHFS. Although students found valuable information in AHFS, they commented that it needs more organization and features such as bold-faced headings to help locate necessary information.
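To put the completion times on a common footing, the reported group averages can be converted to minutes per database and minutes per individual search. The sketch below assumes that each reported total covers all ten questions in all five databases, that is, fifty searches per participant. That reading follows from the study design but is not stated explicitly, and the function names are illustrative.

```python
DATABASES = 5
QUESTIONS = 10

# Average total minutes to complete the study, as reported for each group.
total_minutes = {"faculty": 142, "students": 107, "librarians": 144}


def minutes_per_database(total: float) -> float:
    """Average minutes spent in each database, assuming the total spans all five."""
    return total / DATABASES


def minutes_per_search(total: float) -> float:
    """Average minutes per question-in-a-database search (fifty searches assumed)."""
    return total / (DATABASES * QUESTIONS)


per_database = {g: minutes_per_database(t) for g, t in total_minutes.items()}
per_search = {g: minutes_per_search(t) for g, t in total_minutes.items()}

# Student time expressed as a fraction of faculty time.
student_to_faculty = total_minutes["students"] / total_minutes["faculty"]

if __name__ == "__main__":
    for group in total_minutes:
        print(
            f"{group}: {per_database[group]:.1f} min per database, "
            f"{per_search[group]:.2f} min per search"
        )
    print(f"students used {student_to_faculty:.0%} of the faculty time")
```

On this reading, students averaged a little over two minutes per search against nearly three for faculty and librarians, and the eighteen minutes students spent in Lexi-Drugs falls below their own per-database average of about twenty-one minutes.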

Librarians

The librarians were the most critical of all groups when rating and commenting on the databases. The authors speculate that this criticism may reflect the librarians' extensive training on how to evaluate Websites and databases. The evaluative data and comments from the librarians contrasted with those of the faculty and students. DRUGDEX, while receiving an average response from the other two groups, received the highest rating from the librarian group with fifty-nine points. eFacts followed closely with fifty-seven points. The librarians agreed that while the layout of text in DRUGDEX is difficult to read and navigate, the content is difficult to top, especially its thorough references. While the librarians' comments concerning DRUGDEX were similar, they could not agree on eFacts. One librarian preferred the interface and layout while another found it difficult to use and read. Librarians also had varying opinions regarding the quality of the content and references provided in eFacts.

Interestingly, the librarians rated the PDR higher than Lexi-Drugs or AHFS. Although the PDR received a fifty-point rating, comments varied from one extreme to the other. One librarian found the PDR layout to be the easiest to read and search. Like the faculty members, most librarians commented on the limitations of the PDR because of its FDA and manufacturer-focused content. Librarians found Lexi-Drugs' content abbreviated and the search interface simplistic. In agreement with the other groups, the librarians found it difficult to sift through the voluminous information contained in AHFS.

Unlike the other two study groups, the librarian group's evaluative ratings corresponded almost exactly with their log sheets. Librarians found the greatest number of direct answers to the questions when using DRUGDEX. They were also successful when searching eFacts. Librarians found Lexi-Drugs provided the fewest direct answers.

Librarians spent, on average, 144 minutes to complete the study. The average time taken by librarians was very similar to that of the faculty members. Librarians spent the most time searching for answers in eFacts and the least amount of time searching for answers in Lexi-Drugs.

CONCLUSION

Most libraries are facing tight budgets and simply cannot afford subscriptions to every pharmaceutical database. Many factors can and do influence local database purchasing decisions. Librarians must balance the needs and preferences of their primary user groups while weighing the content, features, and price of the products. The diversity of clientele at a large academic institution will likely demand a combination of products, including specialized sources covering topics such as foreign drugs, drug use during pregnancy, and treatment of geriatric or pediatric populations. Smaller institutions may be able to choose one or two products that will satisfy the majority of their users' needs.

The results of this study show that the “librarians know best” model may not work when selecting sources of drug information. Librarians were bothered by such factors as too many frames, poor layout, a simplistic search interface, and lack of references. Participants in the student and faculty groups did not mention references or citations during their evaluations. The student and faculty participants focused on ease of use and whether answers were found quickly. Librarians may need to focus on these more practical aspects of the databases.

Before purchasing databases, librarians should arrange a reasonable trial period for students and faculty to experiment with the databases and comment on their experiences. Database evaluation forms could be distributed through email communications or made available on library Websites. Although we offered only a small financial incentive for participating in this usage study, we did not have trouble recruiting participants. We believe obtaining this level of selection support justified this minimal monetary expenditure.

Possible limitations of this study include the relatively low number of participants in each of our groups and the fact that not all available full-text pharmacy databases were studied. Two other widely used drug databases that warrant consideration are Clinical Pharmacology from Gold Standard Multimedia and Mosby's Drug Consult. Because databases change their interfaces and content frequently, future replication of this type of study with more participants and additional databases would be valuable.

Based on the results of this study, we cannot simply recommend one product over others. We can suggest that librarians pay particular attention to the databases that received high marks from our study participants. eFacts, Lexi-Drugs, and DRUGDEX were well-received. eFacts and Lexi-Drugs appear to offer a more concise, to-the-point source of drug information. DRUGDEX provides comprehensive coverage of drug information that may be critical in diverse settings. The producers of all three of these products offer the option of purchasing these databases individually or purchasing packages that contain more specialized sources of drug information on topics such as foreign drugs, drug use during pregnancy, and serving geriatric or pediatric populations. Based on participants' evaluations in this study, the authors believe AHFS and the PDR should be considered optional purchases.

Additional research on the distinctive opinions of librarians, students, and faculty in evaluating databases would also be useful. It would be interesting to determine whether these results would be duplicated when comparing products in areas other than pharmacy. If so, librarians may wish to significantly revise the processes they use in making purchasing decisions.

Acknowledgments

The authors thank James A. Visconti, director of the Ohio State University Medical Center Drug Information Center, and Mary Beth Shirk, critical care pharmacist, Ohio State University Hospitals, for supplying the questions used in this study; library assistants Brian Miller and Anna Estep for testing the questions; and Jeffry Hartel for his thoughtful review of the manuscript.

Contributor Information

Natalie Kupferberg, Email: kupferberg.1@osu.edu.

Lynda Jones Hartel, Email: hartel.642@osu.edu.

REFERENCES

1. National Library of Medicine. Drug literature: a factual survey on “the nature and magnitude of drug literature.” Report prepared for the study of Interagency Coordination in Drug Research and Regulation by the Subcommittee on Reorganization and International Organizations of the Senate Committee on Government Operations, 88th Cong., 1st sess., 1963. Committee Print.

2. Brooks DJ. Rating the ethics of medical professionals. Gallup Poll Tuesday Briefing 2002 Dec 17;26–7.

3. Cooksey JA, Knapp KK, Walton SM, and Cultice JM. Challenges to the pharmacist profession from escalating pharmaceutical demand. Health Aff (Millwood). 2002 Sep–Oct. 21(5):182–8.

4. Kruse KW. Online searching of the pharmaceutical literature. Am J Hosp Pharm. 1983 Feb. 40(2):240–53.

5. Malone PM, Mosdell KW, Kier KL, and Stanovich JE. Drug information: a guide for pharmacists. 2nd ed. New York, NY: McGraw Hill, 2001.

6. Belgado BS, Hatton RC, and Doering PL. Evaluation of electronic drug information resources for answering questions received by decentralized pharmacists. Am J Health Syst Pharm. 1997 Nov 15. 54(22):2592–6.

7. Duffull SB, Begg EJ. Comparative assessment of databases used in a drug information service. Aust J Hosp Pharm. 1992 Oct. 22(5):364–8.

8. Al Hefzi A, Catania PN, Mergener MA, and Lum BL. Evaluation of three manual drug information retrieval systems for investigational antineoplastic drugs. Drug Intell Clin Pharm. 1987 Feb. 21(2):196–200.

9. Tourville JF, McLeod DC. Comparison of the clinical utility of four drug information services. Am J Hosp Pharm. 1975 Nov. 32(11):1153–8.

10. Barillot MJ, Sarrut B, and Doureau CG. Evaluation of drug interaction document citation in nine on-line bibliographic databases. Ann Pharmacother. 1997 Jan. 31(1):45–9.

11. Fishman DL, Stone VL, and DiPaula BA. Where should the pharmacy researcher look first? Comparing International Pharmaceutical Abstracts and MEDLINE. Bull Med Libr Assoc. 1996 Jul. 84(3):402–8.

12. Stone VL, Fishman DL, and Frese DB. Searching online and Web-based resources for information on natural products used as drugs. Bull Med Libr Assoc. 1998 Oct. 86(4):523–7.

13. Travis TA, Norlin E. Testing the competition: usability of commercial information sites compared with academic library Web sites. Coll Res Libr. 2002 Sep. 63(5):433–48.

14. Virzi RA. Refining the test phase of usability evaluation: how many subjects is enough? Hum Factors. 1992 Aug. 34(4):457–68.

15. Fidel R, Davies RK, Douglass MH, Holder JK, Hopkins CJ, Kushner EJ, Miyagishma BK, and Toney CD. A visit to the information mall: Web searching behavior of high school students. J Am Soc Inf Sci. 1999 Jan. 50(1):24–37.