Abstract

Objective

To determine radiologists’ reactions to uncertainty when interpreting mammography and the extent to which radiologist uncertainty explains variability in interpretive performance.

Methods

The authors used a mailed survey to assess demographic and clinical characteristics of radiologists and reactions to uncertainty associated with practice. Responses were linked to radiologists’ actual interpretive performance data obtained from 3 regionally located mammography registries.

Results

A total of 181 radiologists were eligible to participate, and 139 consented for a response rate of 76.8%. Female gender, more years interpreting, and higher volume were associated with lower uncertainty scores. Positive predictive value, recall rates, and specificity were more affected by reactions to uncertainty than sensitivity or negative predictive value; however, none of these relationships was statistically significant.

Conclusion

Certain practice factors, such as gender and years of interpretive experience, are associated with uncertainty scores. Radiologists’ reactions to uncertainty do not appear to affect interpretive performance.

Keywords: medical decision making, physician uncertainty, medical malpractice, mammography interpretation

Detecting breast cancer on mammography is an area in which significant variability exists among radiologists,1-4 even in controlled conditions such as when interpreting a test set of mammograms, in which efforts to perform accurately would likely be heightened.1,2 What might be causing this variability in interpretive performance? Visual abnormalities could be missed or the same abnormality perceived differently by radiologists.1 Ambiguity associated with the image, such as subtle calcifications, architectural distortion, or breast density, as well as patient age, have also influenced interpretation.4 Variability in interpretive performance (e.g., sensitivity, specificity, positive predictive value, negative predictive value, and recall rate) is an important clinical problem. Low recall rates could result in missed cancer or delay in diagnosis, whereas high recall rates can lead to unnecessary work up with associated costs and potential for patient morbidity.

Decision making in mammography is highly complex due to the interaction between visual perceptions and clinical judgment, both of which can be influenced by such factors as the experience of the interpreter or concerns about malpractice. The malpractice issue is especially relevant in mammography since failure or delay in breast cancer diagnosis is the most frequent medical malpractice allegation in the United States.5

Although a vast array of studies on medical decision making have appeared over the past 2 decades,6,7 biases and factors that affect clinical decisions have gained recent attention.8-11 Physicians employ different approaches in gathering and using information when making clinical decisions.8 Although some approaches reduce partiality, others can introduce bias into the decision-making process. Such factors can include an individual’s training or past history of untoward experiences.8 Physicians’ affective or attitudinal reactions to uncertainty in clinical decision making have been shown to bias medical decisions,9-11 leading to excessive resource use.12 By affective reactions to uncertainty, we mean the stress physicians experience due to various amounts of uncertainty inherent in their practice, such as their level of concern about possible bad outcomes or their reluctance to disclose mistakes to other physicians.

We conducted an observational study with radiologists in 3 distinct regions of the United States to assess their reactions to the uncertainty associated with their practice and to identify what factors in the practice environment may be associated with reactions to uncertainty. In addition, we explored the extent to which radiologists’ reactions to uncertainty explain variability in interpretive performance. We were specifically testing the hypothesis that higher reactions to uncertainty would be associated with higher recall rates, which would result in lower specificity but higher sensitivity.

METHODS

Study Population

Three members of the federally funded Breast Cancer Surveillance Consortium13 participated in this study. Group Health Cooperative is a nonprofit health maintenance organization (HMO) in the Pacific Northwest,14 the New Hampshire Mammography Network15 enrolls 90% of women undergoing mammography in New Hampshire, and the Colorado Mammography Program captures approximately 50% of the women in the 6-county metropolitan area of Denver, Colorado.16 These registries represent the diverse spectrum of health delivery systems providing mammography in the United States. The data they provided for this study are protected so that we can evaluate the public health impact of screening mammography.17

Eligible radiologists included those who interpreted mammograms captured by 1 of the 3 mammography registries between 1 January 1996 and 31 December 2001. Radiologists were excluded if they did not interpret mammography, interpreted fewer than 480 mammograms between 1996 and 2001, or planned to move or retire during the study period. After institutional review board approval was obtained for the study at each site, radiologists were recruited by mail and telephone followup. Radiologists were informed that their participation would involve completing a survey about their demographic, clinical, and other mammography-related experience and personal medico-legal experiences, which would then be linked to their interpretive performance of mammography as obtained from their respective mammography registries.

Instrument Development and Data Collection

The radiologist survey assessed demographic and clinical characteristics of radiologists, including gender, years interpreting mammography, interpretive volume, reimbursement mechanism, medico-legal experience, and reactions to uncertainty involved in patient care. Criterion-related, content, and construct validity of these survey items were addressed using 2 approaches. First, a national advisory committee of academic, scientific, and clinical colleagues in the fields of mammography, epidemiology, economics, medical decision making, and medical malpractice was convened. Members of this committee reviewed the literature on existing measures to assess various characteristics of radiologists and the setting in which they practiced to identify those most relevant for use in the proposed study. Second, a series of draft surveys were sequentially pilot tested among community radiologists from neighboring regions to the study sites. All pilot tests involved cognitive interviews either during completion or shortly following completion of each draft being tested until all variables were assessed as accurately collected. The final 6-page survey took approximately 10 min to complete, and survey mailings were administered directly from the 3 mammography registries to preserve confidentiality and optimize response rates.

The measure of reactions to uncertainty in clinical decision making was adapted from an instrument developed by Gerrity and others.18,19 This instrument characterizes physicians’ reactions to the uncertainty associated with clinical care in 4 domains. The evolution of this instrument involved the development of 61 items that were completed by 700 physicians using a 6-category response scale (strongly disagree, moderately disagree, slightly disagree, slightly agree, moderately agree, and strongly agree).18 Exploratory factor analysis initially identified 2 dimensions (stress from uncertainty and reluctance to disclose uncertainty). A factor rotation was used to select best items, and Cronbach’s α was used to assess internal consistency, which was further refined into 4 dimensions19 (stress from uncertainty, concern about bad outcomes, reluctance to disclose mistakes to patients, and reluctance to disclose mistakes to physicians). The 3 scales included in this study19 contained a total of 10 items, 5 of which reflected stress from uncertainty (α > 0.80), 3 reflecting concern about bad outcomes (α > 0.80), and 2 reflecting reluctance to disclose mistakes to physicians (α > 0.80).

The individual items were revised to make them more relevant for the practice of mammography interpretation, as indicated in Table 1. Cronbach’s α assessed the internal consistency of the revision. For stress due to uncertainty, it was 0.89; for concern about bad outcomes, it was 0.69; and for reluctance to disclose mistakes to physicians, it was 0.81. The α coefficient for the reaction to uncertainty measure with all 10 scale items combined was 0.86, indicating a high level of internal consistency of this measure.
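For readers less familiar with the statistic, Cronbach’s α for a scale of k items is k/(k − 1) × (1 − sum of the item variances / variance of the summed score). The following is a minimal sketch of that calculation in Python. The function name `cronbach_alpha` and the sample responses are illustrative assumptions, not study data, and the sketch is not the software used in this study.

```python
from statistics import variance


def cronbach_alpha(responses):
    """Cronbach's alpha for a list of respondents, each a list of k item scores.

    Uses sample variances (n - 1 denominator) for the items and the total.
    """
    k = len(responses[0])
    if k < 2 or len(responses) < 2:
        raise ValueError("need at least 2 items and 2 respondents")
    if any(len(row) != k for row in responses):
        raise ValueError("every respondent must answer all k items")

    # Variance of each item across respondents (columns of the response matrix).
    item_variances = [variance(column) for column in zip(*responses)]
    # Variance of each respondent's summed scale score.
    total_variance = variance([sum(row) for row in responses])

    return (k / (k - 1)) * (1 - sum(item_variances) / total_variance)


# Hypothetical responses on the 1-6 agreement scale for a 2-item subscale.
example = [[4, 5], [2, 3], [6, 5]]
alpha = cronbach_alpha(example)
```

Values closer to 1 indicate that the items move together across respondents, which is the sense in which the coefficients reported above are read as internal consistency.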

Table 1.

Radiologists’ Reactions to Uncertainty<sup>a</sup> by Individual Survey Items and Domains

| Reaction to Uncertainty Domain and Individual Survey Items | Mean Score<sup>b</sup> | Standard Error |
|---|---|---|
| Stress due to uncertainty | 19.5 | 0.51 |
| Uncertainty in mammography makes me uneasy | 4.2 | 0.12 |
| I am quite comfortable with the uncertainty in mammography practice<sup>c</sup> | 4.0 | 0.12 |
| I find the uncertainty involved in mammography disconcerting | 3.9 | 0.12 |
| The uncertainty in mammography often troubles me | 3.6 | 0.13 |
| I usually feel anxious when I am not sure of a mammographic interpretation | 3.8 | 0.11 |
| Concern about bad outcomes | 10.3 | 0.30 |
| When I am uncertain of a mammographic interpretation, I imagine all sorts of bad scenarios: patient dies, patient sues, etc. | 3.1 | 0.13 |
| I fear being held accountable for the limits of my knowledge | 3.2 | 0.13 |
| I worry about malpractice when I am not sure of a mammographic interpretation | 4.0 | 0.12 |
| Reluctance to disclose mistakes to physicians | 3.8 | 0.18 |
| I never tell other physicians about mammographic interpretation errors I have made | 1.8 | 0.09 |
| I almost never tell other physicians about cancer diagnoses I have missed | 2.0 | 0.11 |

a. Items adapted to mammography practice.

b. Based on response scale of 1 = strongly disagree, 2 = moderately disagree, 3 = slightly disagree, 4 = slightly agree, 5 = moderately agree, and 6 = strongly agree.

c. Item is reverse scored.

Data Linkages

Survey data were independently double entered at each site and sent encrypted and deidentified (except for a study ID) to the main analytic center at Group Health Cooperative. Survey data were then merged with actual interpretation and outcome data for screening mammograms interpreted by study radiologists between 1 January 1996 and 31 December 2001. Core registry variables included date and type of mammogram (screening v. diagnostic); BI-RADS interpretation and recommendation categories20; patient demographic, clinical, and risk characteristics; and breast cancer outcome. Encrypted study identifiers were used for the patient, the radiologist, and the mammogram facility. Standardized variable definitions allowed for merging of data from different screening programs while ensuring both uniformity and confidentiality of all data.17 Linkages between radiologists’ uncertainty scores from the mailed survey and performance data were made. A composite uncertainty score with the 3 domains combined could range between 10 and 60, with 10 being low reactions to uncertainty and 60 being high reactions to uncertainty. Low reactions to uncertainty versus high reactions indicate that participants experience low (v. high) affective responses to stress related to uncertainty, concern about bad outcomes, and reluctance to disclose mistakes.
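The scoring described above can be sketched as follows. This is an illustration only: the item keys are hypothetical shorthand for the 10 items in Table 1, and reverse scoring is assumed to be 7 minus the response on the 1 to 6 scale, which is the usual convention but is not spelled out in the text.

```python
# Hypothetical item keys; the full wording of each item appears in Table 1.
DOMAINS = {
    "stress": ["uneasy", "comfortable", "disconcerting", "troubles", "anxious"],
    "bad_outcomes": ["bad_scenarios", "accountable", "malpractice"],
    "disclosure": ["errors_untold", "missed_untold"],
}
# Table 1 marks only the "quite comfortable" item as reverse scored.
REVERSE_SCORED = {"comfortable"}


def score_survey(answers):
    """Return the 3 domain scores and the combined score for one radiologist.

    `answers` maps each item key to a response from 1 (strongly disagree)
    to 6 (strongly agree).
    """
    scores = {}
    for domain, items in DOMAINS.items():
        total = 0
        for item in items:
            value = answers[item]
            if not 1 <= value <= 6:
                raise ValueError(f"response for {item!r} must be 1-6")
            # Assumed reverse scoring: 1 <-> 6, 2 <-> 5, 3 <-> 4.
            total += 7 - value if item in REVERSE_SCORED else value
        scores[domain] = total
    # Combined score across the 10 items; possible range 10 to 60.
    scores["combined"] = sum(scores.values())
    return scores


# A respondent with the highest possible reaction to uncertainty.
all_items = [item for items in DOMAINS.values() for item in items]
highest = {item: 6 for item in all_items}
highest["comfortable"] = 1
highest_scores = score_survey(highest)
```

Under these assumptions the domain ranges come out as 5 to 30, 3 to 18, and 2 to 12, matching the possible ranges stated for the 3 domains and the 10 to 60 range of the composite.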

Analytic Definitions

A screening mammogram was defined as a bilateral routine examination as designated by the radiologist. Exams were excluded if they were done on women with breast implants, were unilateral, or were done on women who self-reported previous breast cancer at the time of that exam. To avoid inadvertently including diagnostic mammography as screening encounters, we also excluded screening exams preceded by any radiologic exam within 270 days (9 months) prior to the screening mammogram, within which it would be unlikely that the examination would really be a screening exam.

Mammograms were considered positive if assigned a BI-RADS code of 0 (needs additional assessment), 4 (suspicious abnormality), 5 (highly suggestive of cancer), or 3 (probably benign) with a recommendation for immediate workup (≤6 months). Because mammograms are interpreted at the level of the breast, the highest BI-RADS code was used to classify each mammogram. We classified mammograms as negative if assigned a BI-RADS code of 1 (negative), 2 (benign finding), or a 3 (probably benign) with a recommendation for normal or short-interval followup. Our followup period for cancer outcomes associated with each screening examination was for 1 year (365 days) or until the next screening exam, if the screening exam occurred in the period between 270 and 364 days. Breast cancer outcomes (benign and malignant) were identified through one or more of the following sources: pathology data banks and/or regional cancer registries. Only invasive breast cancer and ductal carcinoma in situ cases were included. Cases known to be lobular carcinoma in situ were excluded because mammography identifies it only on rare occasions. When women had more than 1 breast pathology report associated with a cancer, the highest category was used.
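The positive and negative definitions above amount to a small decision rule, sketched here in Python. The function and parameter names are hypothetical, and the sketch assumes the per-mammogram BI-RADS code has already been chosen; it does not implement the selection of the highest code across breasts or the cancer follow-up window.

```python
POSITIVE_CODES = {0, 4, 5}  # needs additional assessment, suspicious, highly suggestive
NEGATIVE_CODES = {1, 2}     # negative, benign finding


def is_positive(birads, immediate_workup=False):
    """Classify one screening mammogram as positive (True) or negative (False).

    `immediate_workup` only matters for BI-RADS 3 (probably benign): the exam
    is positive when immediate workup is recommended and negative when normal
    or short-interval followup is recommended.
    """
    if birads in POSITIVE_CODES:
        return True
    if birads in NEGATIVE_CODES:
        return False
    if birads == 3:
        return bool(immediate_workup)
    # Codes outside 0-5 are not covered by the definitions in the text.
    raise ValueError(f"unsupported BI-RADS code: {birads}")
```

Treating BI-RADS 3 as the only category that depends on the recommendation keeps the rule aligned with the text, in which categories 0, 4, and 5 are always positive and 1 and 2 are always negative.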

Analytic Calculations

Examinations were false positive when the assessment was positive and a breast cancer diagnosis did not occur within the followup period (365 days following the index screening examination or until the next examination, whichever occurred first). Examinations were true positive when the assessment was positive and a cancer diagnosis followed within the 365-day time period. A false-negative examination was a negative assessment with a diagnosis of cancer within the followup period. A true negative examination was a negative assessment with no subsequent cancer diagnosis within the followup period. Sensitivity was calculated as true positive/(true positive + false negative). Specificity was calculated as true negative/(true negative + false positive). Positive predictive value was calculated as true positive/(true positive + false positive) and negative predictive value as true negative/(true negative + false negative). Recall rate was calculated as positive/(positive + negative).
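The five performance measures defined above can be computed from the four cell counts as in the sketch below. The class and function names are illustrative, and the counts in the example are hypothetical round numbers chosen for readability, not study results.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Counts:
    """Cell counts for screening examinations after follow-up."""
    tp: int  # positive assessment, cancer diagnosed within follow-up
    fp: int  # positive assessment, no cancer diagnosed
    tn: int  # negative assessment, no cancer diagnosed
    fn: int  # negative assessment, cancer diagnosed within follow-up


def performance_measures(c):
    """Return the measures exactly as defined in the text."""
    positive = c.tp + c.fp
    negative = c.tn + c.fn
    return {
        "sensitivity": c.tp / (c.tp + c.fn),
        "specificity": c.tn / (c.tn + c.fp),
        "ppv": c.tp / (c.tp + c.fp),
        "npv": c.tn / (c.tn + c.fn),
        "recall_rate": positive / (positive + negative),
    }


# Hypothetical counts for illustration only.
example = performance_measures(Counts(tp=80, fp=900, tn=9000, fn=20))
```

Note that sensitivity and specificity use cancer status as the denominator, whereas the predictive values use the assessment result as the denominator; recall rate uses all examinations.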

Statistical Analysis

Each radiologist characteristic of interest (gender, years interpreting mammography, interpretive volume, reimbursement mechanism, medico-legal experience) was initially examined univariately with respect to the combined uncertainty scale as well as the individual subscales (stress due to uncertainty, concern about bad outcomes, and reluctance to disclose mistakes to physicians). The mean and 95% confidence intervals (CIs) were computed for each scale by radiologist characteristic.

Univariate analyses also examined whether the radiologist interpretive performance measures were related to physicians’ overall reaction to uncertainty. In this analysis, the uncertainty score was divided by 5 and entered into a logistic regression as a continuous explanatory variable so that each odds ratio represents the change in odds associated with a 5-point increase in the uncertainty score. We used a 5-point division to allow for exploration of fine differences we expected to find. Means and 95% CIs were computed for each performance measure and radiologist characteristic by radiologists’ reactions to uncertainty.
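The effect of dividing the score by 5 can be illustrated with a short sketch. If β is the log-odds coefficient per 1-point increase, then the coefficient on score/5 is 5β, and the odds ratio for a 5-point increase is exp(5β). The function name and the numeric inputs below are hypothetical, and the Wald-type interval shown is an assumption made for illustration; it is not a description of how the intervals in this study were obtained.

```python
import math


def odds_ratio(beta, se, increment=1.0, z=1.96):
    """Odds ratio and Wald 95% CI for an `increment`-unit rise in a predictor.

    `beta` and `se` are the log-odds coefficient and its standard error
    per 1 unit of the predictor.
    """
    point = math.exp(increment * beta)
    lower = math.exp(increment * (beta - z * se))
    upper = math.exp(increment * (beta + z * se))
    return point, lower, upper


# Hypothetical per-point coefficient and standard error.
beta_per_point, se_per_point = 0.01, 0.005

# Scaling the result to a 5-point increase ...
per_five = odds_ratio(beta_per_point, se_per_point, increment=5)
# ... is equivalent to fitting the model on score / 5, where both the
# coefficient and its standard error are multiplied by 5.
rescaled = odds_ratio(5 * beta_per_point, 5 * se_per_point)
```

Rescaling the predictor changes only the unit in which the odds ratio is expressed; it does not change the fit of the model or the P value.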

The multivariable analysis examined the association between the combined uncertainty scale and each performance measurement while adjusting for potential confounders found in the univariate analyses. Each screening mammogram was associated with an assessment and breast cancer outcome; therefore, the analysis was performed at the mammogram level but accounted for the correlation within each radiologist. We further restricted this analysis to mammograms of women with dense breasts (classified as either heterogeneously dense or extremely dense) since focusing on the interpretation of these more complicated films may allow for more pronounced reactions to uncertainty to occur. Logistic regression models were fit using generalized estimating equations, which assumed an independent working correlation matrix. A 2-sided P value <0.05 was considered to be statistically significant.

RESULTS

One hundred eighty-one radiologists were eligible for participation. Of these, 139 consented and completed all or a portion of the survey for a response rate of 76.8%. One hundred twenty-four participating radiologists (89.2%) interpreted the minimal number of mammograms outlined in our inclusion criteria. Table 2 outlines the demographic and practice characteristics of study participants by mean uncertainty score overall and for each of the 3 domains (with 95% CIs). As indicated, the majority were male, most were experienced mammographers, and there was a fairly even distribution across reported annual interpretive volume categories of 500 to 5000, with very few interpreting more or less than these categories. Most radiologists were reimbursed through shared partnership profits (income that is dispersed through professional associations based on contractual agreements with institutions or facilities in need of specific services) or through annual set salary, with few being reimbursed per screening mammogram. Approximately half (52.5%; Table 2) reported a prior medical malpractice lawsuit. Only 14% had a previous history of a mammography-related lawsuit.

Table 2.

Physician Characteristics by Level of Uncertainty

| Physician Characteristic | N | % | Stress due to Uncertainty (n = 120)<sup>a</sup>: Mean | Stress due to Uncertainty: 95% CI | Concern about Bad Outcomes (n = 121)<sup>b</sup>: Mean | Concern about Bad Outcomes: 95% CI | Reluctance to Disclose Mistakes to Physicians (n = 121)<sup>c</sup>: Mean | Reluctance to Disclose Mistakes to Physicians: 95% CI | Combined Uncertainty Score (n = 120)<sup>d</sup>: Mean | Combined Uncertainty Score: 95% CI |
|---|---|---|---|---|---|---|---|---|---|---|
| Overall | | | 19.5 | 18.5 to 20.5 | 10.3 | 9.7 to 10.9 | 3.8 | 3.5 to 4.2 | 33.5 | 31.9 to 35.0 |
| Gender | | | | | | | | | | |
| Male | 96 | 77.4 | 20.0 | 18.9 to 21.2 | 10.6 | 9.9 to 11.3 | 3.9 | 3.5 to 4.3 | 34.5 | 32.7 to 36.3 |
| Female | 28 | 22.6 | 17.5 | 15.6 to 19.3 | 9.1 | 7.8 to 10.5 | 3.6 | 2.9 to 4.2 | 29.7 | 26.8 to 32.6 |
| Years of interpretation | | | | | | | | | | |
| ≤9 | 28 | 22.8 | 20.8 | 18.7 to 22.8 | 11.0 | 9.7 to 12.2 | 4.0 | 3.1 to 4.9 | 35.4 | 32.1 to 38.6 |
| 10–19 | 57 | 46.3 | 19.3 | 17.9 to 20.7 | 10.6 | 9.7 to 11.4 | 3.8 | 3.3 to 4.3 | 33.6 | 31.5 to 35.8 |
| ≥20 | 38 | 30.9 | 18.9 | 16.9 to 21.0 | 9.4 | 8.3 to 10.6 | 3.7 | 3.0 to 4.4 | 32.0 | 28.9 to 35.2 |
| Interpretive volume | | | | | | | | | | |
| <500 | 3 | 2.4 | 19.7 | 2.8 to 36.6 | 10.7 | 0.3 to 21.0 | 5.7 | −4.4 to 15.7 | 36.0 | 1.2 to 70.8 |
| 500–1000 | 28 | 22.8 | 20.3 | 18.2 to 22.3 | 11.1 | 9.8 to 12.3 | 4.2 | 3.3 to 5.1 | 35.5 | 32.2 to 38.8 |
| 1001–2000 | 46 | 37.4 | 19.7 | 17.9 to 21.5 | 10.1 | 9.1 to 11.2 | 3.5 | 3.0 to 4.0 | 33.3 | 30.5 to 36.0 |
| 2001–5000 | 41 | 33.3 | 19.2 | 17.5 to 20.9 | 10.2 | 9.1 to 11.2 | 3.9 | 3.3 to 4.5 | 32.9 | 30.4 to 35.4 |
| >5000 | 5 | 4.1 | 16.2 | 8.5 to 23.9 | 8.8 | 4.7 to 12.9 | 3.4 | 1.3 to 5.5 | 28.4 | 17.9 to 38.9 |
| Method of reimbursement | | | | | | | | | | |
| Annual set salary | 42 | 34.1 | 20.3 | 18.8 to 21.7 | 10.7 | 9.6 to 11.8 | 3.5 | 3.0 to 4.1 | 34.3 | 31.8 to 36.7 |
| Per mammogram | 5 | 4.1 | 16.4 | 10.4 to 22.4 | 9.2 | 6.0 to 12.4 | 3.0 | 1.2 to 4.8 | 28.6 | 21.9 to 35.3 |
| Shared partnership profits | 67 | 54.5 | 19.7 | 18.3 to 21.1 | 10.5 | 9.7 to 11.3 | 4.1 | 3.6 to 4.6 | 34.3 | 32.2 to 36.4 |
| Some combination | 9 | 7.3 | 15.3 | 8.6 to 21.9 | 7.1 | 4.5 to 9.7 | 3.3 | 1.5 to 5.0 | 25.6 | 16.1 to 35.2 |
| Practice-related law suit | | | | | | | | | | |
| Any | 64 | 52.5 | 19.9 | 18.5 to 21.3 | 10.4 | 9.7 to 11.2 | 3.9 | 3.4 to 4.4 | 34.2 | 32.1 to 36.4 |
| None | 58 | 47.5 | 19.0 | 17.5 to 20.4 | 10.1 | 9.2 to 11.1 | 3.7 | 3.2 to 4.3 | 32.6 | 30.3 to 35.0 |

Note: Includes 124 radiologists with ≥480 screening mammograms between 1996 and 2001. CI = confidence interval.

a. Possible range = 5–30.

b. Possible range = 3–18.

c. Possible range = 2–12.

d. Possible range = 10–60.

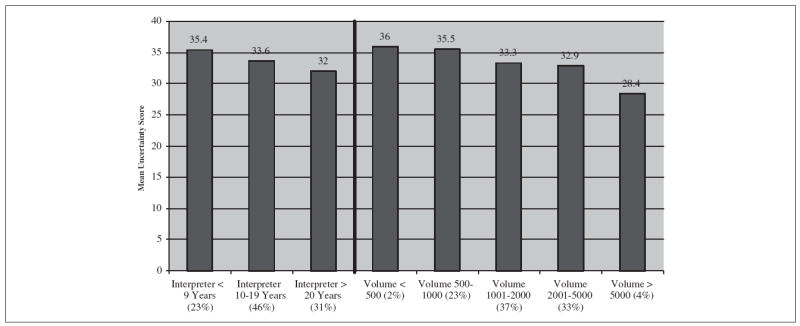

Figure 1 highlights the relationship between overall reactions to uncertainty score and interpretive experience and interpretive volume. As indicated, radiologists who are more experienced interpreters and those who interpret large volumes tended to have lower reactions to uncertainty than did radiologists who are new to practice or who interpret smaller volumes of mammograms. A trend analysis suggested lower uncertainty scores with more years of interpretation, although this trend was not statistically significant (P = 0.13).

Figure 1.

Uncertainty score by interpretive experience and volume (n = 120).

The trend analysis for the relationship between uncertainty and interpretive volume was also not significant (P = 0.49).

The mean combined uncertainty score was 33.5 (95% CI, 31.9 to 35.0) (Table 2). It was lower among female radiologists (P = 0.01) as compared to male radiologists. More years interpreting mammography and higher interpretive volume were associated with lower uncertainty scores (P = 0.03). Method of reimbursement was also associated with uncertainty scores. Shared partnership profits and annual set salaries were associated with higher levels of uncertainty than were salaries based on per-screening mammography interpretations or some combination of these (P = 0.02), although small cell sizes in some categories may be influencing the stability of this finding. Radiologists with any prior medico-legal experience had slightly higher uncertainty scores, although this was not significant (P = 0.31).

Female radiologists were more likely to have an annual set salary and less likely to have shared partnership profits than were male radiologists (P = 0.004), and they were somewhat less likely to have been involved in lawsuits, though not statistically so (37.0% v. 56.8%; P = 0.08). Physicians well established in practice (v. new to practice) were less likely to have annual set salaries and more likely to have shared partnership profits (32.9% v. 58.3%; P = 0.03). They were also more likely to have 1 or more medico-legal experiences (62.1% v. 18.5%; P < 0.001).

Performance data linked to survey responses included a mean of 4493 mammograms per radiologist (range = 484–13,727) in the study time period. Breast cancer was diagnosed in 2840 women. Of the mammograms included in the analysis, 2302 (80.7% of examinations followed by a cancer diagnosis) were true positive examinations, 499,667 (90.1% of examinations with no cancer diagnosis) were true negative examinations, 54,636 (9.9% of examinations with no cancer diagnosis) were false positive examinations, and 538 (19.3% of examinations followed by a cancer diagnosis) were false negatives.

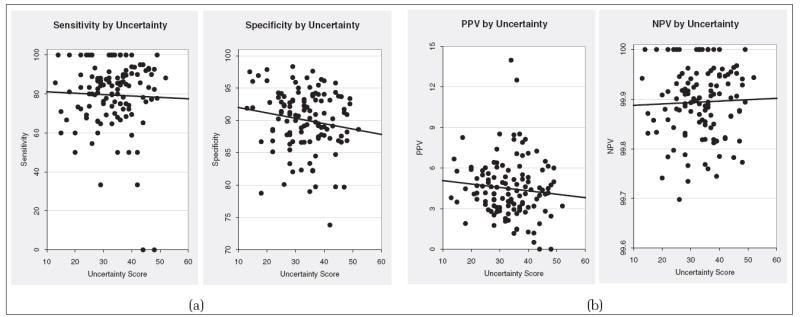

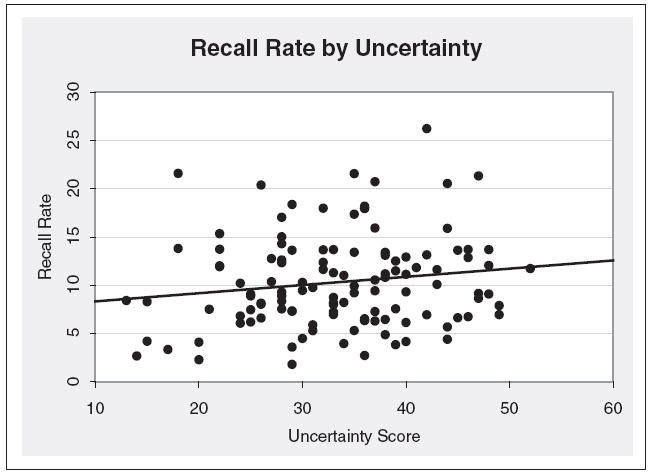

Figure 2a illustrates the relationship between overall uncertainty scores and the standard performance indices of sensitivity and specificity. Figure 2b illustrates the relationship between overall uncertainty scores and the standard performance indices of positive (PPV) and negative predictive values (NPV). As indicated in these 2 figures, specificity and PPV were more affected by uncertainty than sensitivity and NPV in this unadjusted analysis. However, no statistically significant relationships were noted between uncertainty and any performance measure, and the variation explained was low (R² values ranged from 0.001 to 0.02). Figure 3 illustrates the relationship between uncertainty and recall rate, which suggests that higher scores in uncertainty are associated with increases in recall rates, though not statistically so.

Figure 2.

(a) Relationship between uncertainty score and standard performance indices: sensitivity and specificity. (b) Relationship between uncertainty score and standard performance indices: positive predictive value (PPV) and negative predictive value (NPV).

Figure 3.

Relationship between radiologist recall rate in clinical practice and uncertainty score from survey.

Table 3 illustrates the results of the multivariable analysis with and without adjustments for mammography registry, radiologist age, gender, and interpretive volume. As indicated in the table, adjustment for these factors, which have been associated with variability in interpretive performance, did not reveal any significant effect due to uncertainty. When we restricted this analysis to mammograms of women with dense breasts (classified as either heterogeneously dense or extremely dense), the results did not differ significantly (data not shown) from the findings presented in Table 3.

Table 3.

Multivariable Analysis of Interpretive Performance Shown as Adjusted Odds Ratios (ORs) and 95% Confidence Intervals (CIs)

| | Odds of a Positive Mammogram Given a Cancer Diagnosis during Follow-up: OR | Odds of a Positive Mammogram Given a Cancer Diagnosis during Follow-up: 95% CI | Odds of a Negative Mammogram Given no Cancer Diagnosis during Follow-up: OR | Odds of a Negative Mammogram Given no Cancer Diagnosis during Follow-up: 95% CI | Odds of Cancer Diagnosis Given a Positive Mammogram: OR | Odds of Cancer Diagnosis Given a Positive Mammogram: 95% CI | Odds of Recall: OR | Odds of Recall: 95% CI |
|---|---|---|---|---|---|---|---|---|
| Uncertainty score | | | | | | | | |
| Every 5-point increase | 1.08 | 0.99 to 1.17 | 0.97 | 0.91 to 1.03 | 0.98 | 0.94 to 1.02 | 1.03 | 0.97 to 1.10 |
| Uncertainty score<sup>a</sup> | | | | | | | | |
| Every 5-point increase | 1.06 | 1.01 to 1.13 | 0.97 | 0.92 to 1.01 | 0.98 | 0.95 to 1.02 | 1.03 | 0.99 to 1.08 |

a. Model adjusted for mammography registry, radiologist age, gender, and interpretive volume.

DISCUSSION

Radiologists experience a range of reactions to the uncertainty inherent in the practice of mammographic interpretation. Male radiologists report more intense reactions to uncertainty than do female radiologists, and reactions to uncertainty appear to lessen with more years of experience and with higher interpretive volume. Surprisingly, these 3 factors (gender, interpretive volume, and years in practice) were more closely associated with reactions to uncertainty than radiologists’ medico-legal experiences. We hypothesized that medico-legal experiences would generate a much higher response to uncertainty than they did. This may be due to the fact that Colorado, one of the 3 study sites, is a capped litigation state and likely has fewer malpractice lawsuits (a few hundred per decade for mammography) than occur in states where malpractice litigation is not capped. Thus, participating physicians from Colorado may not be as reactive to uncertainty as physicians practicing elsewhere. Although method of reimbursement also was associated with reactions to uncertainty, we believe this finding was affected by small cell sizes in at least 1 of the response categories.

In our univariate analysis, higher uncertainty scores were associated with minor increases in recall rates and decreases in specificity and PPV, none of which were statistically significant. Sensitivity and NPV were not associated with any reactions to uncertainty. We initially hypothesized that physicians who are highly reactive to uncertainty would be more likely to order additional and perhaps unnecessary imaging as part of the screening workup. However, our findings differed from what we expected in that there were no statistical differences in any performance measures based on levels of uncertainty. When we adjusted for gender, age, interpretive volume, and registry in our multivariable analysis, and when we restricted our analyses to interpretations of mammograms in women with dense breasts, we still found no statistical differences that could be attributed to radiologists’ reactions to uncertainty. Perhaps there is too little variation in perceptions of uncertainty and reactions to it among the radiologists we studied for it to be identified or perhaps there is too little variation in recall rates in our study, given how large our sample size was.

The Physicians’ Reactions to Uncertainty Scale was adapted to measure radiologists’ affective reactions or attitudes toward uncertainty. Thus, our study is subject to the same limitations as other studies attempting to demonstrate an association between complex attitudes and behaviors.21 The strength of the association between radiologists’ reactions to uncertainty and their interpretive behavior may be influenced by several factors, including the intensity of their reactions to uncertainty, the salience of their reactions when interpreting mammograms, and situational factors that may override the influence of their behavioral reactions to uncertainty.

To our knowledge, no previous study has been published on radiologists’ reactions to uncertainty and how these reactions might influence practice. Allison and others12 studied the relationship between affective reactions to uncertainty and resource use of general internists in a Medicare HMO and found that high reactions to uncertainty scores were associated with more test ordering and, subsequently, higher patient charges, even after adjustment for patient and physician factors. This finding differs from our study of radiologists’ use of supplemental imaging. This may be due to the inherent differences between the discipline of internal medicine and radiology. Although internists generally use both a physical examination and a clinical history when assessing a patient’s status, this is not always the case for radiologists. Internists are also more likely to have longitudinal face-to-face relationships with their patients than are radiologists, which may make their reactions to uncertainty less stressful. Perhaps certain reactions to uncertainty are influenced by self-selection into that discipline.

The differences between our study and Allison’s may also be due to the practice environment of an HMO versus a population of radiologists who predominantly practice in community settings. Unfortunately, we could not make direct comparisons between assessments of uncertainty in our study and Allison’s because the uncertainty measures were similar but not identical. This occurred because we used Gerrity and others’ refined measure of uncertainty19 and Allison and others used a previous version.

Radiologists’ reactions to uncertainty in our study were higher than those of general internists participating in a study by Gerrity and others.19 This was true for 2 of the 3 uncertainty domains. In Gerrity’s study of 260 internists, in which the same instrument we used was administered, they found a mean stress score of 18.8 and a mean score for concern about bad outcomes of 9.5 compared to our stress score of 19.5 and 10.3 for concern about bad outcomes. It appears that radiologists experience more intense reactions to uncertainty than internists do in these 2 domains. Radiologists interpreting screening mammography tend to have little if any contact with patients, and they often interpret screening mammograms alone. Their reactions to uncertainty, which were evidenced by higher stress scores and concerns about bad outcomes, are not then likely related to patient interaction but rather may be related to other factors, such as practice isolation, ambiguity associated with complex breast architecture, or perhaps the tediousness associated with interpreting screening mammograms.

Interestingly, we found that radiologists in our study, compared to internists in Gerrity and others’ study, had lower scores for reluctance to disclose mistakes to other physicians. A very recent study by Gallagher and others22 indicates that physicians worry regularly about the possibility of medical errors and that their worst fears are related to malpractice litigation, loss of trust, loss of colleagues’ respect, and diminished self-confidence. We speculate that interacting more frequently with other physicians around patient care issues, which likely occurs more often in internal medicine than in mammographic interpretation, may generate higher reactions in the domain of disclosing mistakes among internists than among radiologists.

We conducted this study because we initially thought that we could identify specific biases that affect radiologists’ decision thresholds, which might be amenable to change. Identifying these might then lead to a better understanding of how to modify bias in decision making with an eye toward enhancing interpretive performance. Our study was not specifically designed to assess how radiologists set their thresholds for decision making. This may be the next logical step in evaluating sources of variability in radiologist practice. Reducing unnecessary recall in the United States, while maintaining sensitivity, could result in both significant cost savings and a reduction in the anxiety women experience when receiving an abnormal mammographic interpretation.23-25

However, we did not find that radiologists’ reactions to uncertainty were associated with differences in their interpretive practices. Perhaps the uncertainty that arises in this discipline of medicine expresses itself elsewhere. For example, we know that manpower in mammography is a problem,26 with some radiologists stopping mammography interpretation altogether. Perhaps leaving the practice of mammography interpretation is where this uncertainty expresses itself. Unfortunately, our study was not designed to determine whether this is the case. Alternatively, perhaps our study showed no effect because radiologists as a profession have already adapted their recall rates to the US practice environment, leaving little room for variation attributable to what may actually be small differences in uncertainty.

Our study, although rigorously designed, is not without limitations. Our study population included a representative sample of radiologists practicing in 3 distinct regions of the United States. Although our high response rate allows us to generalize to other radiologists in these regions, it does not allow us to generalize to radiologists across the entire country. A strength of our study is that we included a breadth of practice settings, including community-based, academic, and open- as well as closed-system HMOs. Although this allows us to capture mammography interpretation practice as it is commonly performed in the United States, we were not able to conduct our analysis by specific practice setting, such as among radiologists whose practice is confined to mammography interpretation, because this was not common in our study population.

In conclusion, we found that radiologists interpreting screening mammography experience a range of reactions to uncertainty in their clinical practice. These reactions are higher than those reported in other medical disciplines, such as internal medicine, and certain characteristics of the radiologist, such as gender and years of interpretive experience, are associated with these reactions. Despite the high level of reactions radiologists experience to the uncertainty inherent in their practice, their interpretive performance appears to be unaffected.

Acknowledgments

This work was supported by the Agency for Healthcare Research and Quality (HS-10591) and the National Cancer Institute (U01 CA63731, U01 CA86082, and U01 CA63736-08).

References

- 1. Elmore J, Wells C, Lee C, Howard D, Feinstein A. Variability in radiologists’ interpretations of mammograms. N Engl J Med. 1994;331(22):1493–9. doi: 10.1056/NEJM199412013312206.

- 2. Beam CA, Layde PM, Sullivan DC. Variability in the interpretation of screening mammograms by US radiologists. Arch Intern Med. 1996;156:209–13.

- 3. Brown M, Houn F, Sickles E, Kessler L. Screening mammography in community practice: positive predictive value of abnormal findings and yield of follow-up procedures. AJR Am J Roentgenol. 1995;165:1373–7. doi: 10.2214/ajr.165.6.7484568.

- 4. Beam CA, Conant EF, Sickles EA. Factors affecting radiologist inconsistency in screening mammography. Acad Radiol. 2002;9:531–40. doi: 10.1016/s1076-6332(03)80330-6.

- 5. Physicians Insurers Association of America Data Sharing Committee. Cumulative Reports, January 1st, 1985-June 30, 1996. Rockville (MD): Physicians Insurers Association of America; 1996.

- 6. Beck JR. Medical decision-making: 20 years of advancing the field. Med Decis Making. 2001;21(1):73–5. doi: 10.1177/0272989X0102100111.

- 7. Fryback DG. Reflections on beginnings and future of medical decision-making. Med Decis Making. 2001;21(1):71–3. doi: 10.1177/0272989X0102100110.

- 8. Bornstein BH, Emler AC. Rationality in medical decision making: a review of the literature on doctors’ decision-making biases. J Eval Clin Pract. 2001;7(2):97–107. doi: 10.1046/j.1365-2753.2001.00284.x.

- 9. Landon BE, Reschovsky J, Reed M, Blumenthal D. Personal, organizational and market level influences on physicians’ practice patterns: results of a national survey of primary care physicians. Med Care. 2001;39(8):889–905. doi: 10.1097/00005650-200108000-00014.

- 10. Pearson S, Goldman L, Orav E. Triage decisions for emergency department patients with chest pain: do physicians’ risk attitudes make a difference? J Gen Intern Med. 1995;10:557–67. doi: 10.1007/BF02640365.

- 11. Gifford D, Vickery B, Millman B. How physicians’ uncertainty influences clinical decision making: a study of the evaluation and management of early Parkinson’s disease. J Gen Intern Med. 1995;10(Suppl):4–66.

- 12. Allison JJ, Kiefe CI, Cook EF, Gerrity MS, Orav EJ, Centor R. The association of physician attitudes about uncertainty and risk taking with resource use in a Medicare HMO. Med Decis Making. 1998;18(3):320–9. doi: 10.1177/0272989X9801800310.

- 13. Ballard-Barbash R, Taplin SH, Yankaskas BC, et al. Breast Cancer Surveillance Consortium: a national mammography screening and outcomes database. Am J Roentgen. 1997;169:1001–8. doi: 10.2214/ajr.169.4.9308451.

- 14. Taplin SH, Mandelson MT, Anderman C, et al. Mammography diffusion and trends in late-stage breast cancer: evaluating outcomes in a population. Cancer Epidemiol Biomarkers Prev. 1997;6:625–31.

- 15. Carney PA, Poplack SP, Wells WA, Littenberg B. Development and design of a population-based mammography registry: the New Hampshire Mammography Network. Am J Roentgen. 1996;167:367–72. doi: 10.2214/ajr.167.2.8686606.

- 16. Jacobellis J, Cutter GR. Mammography screening and differences in stage of disease by race/ethnicity. Am J Public Health. 2002;92:1144–50. doi: 10.2105/ajph.92.7.1144.

- 17. Carney PA, Geller BM, Moffett H, et al. Current medico-legal and confidentiality issues in large multi-center research programs. Am J Epidemiol. 2000;152:371–8. doi: 10.1093/aje/152.4.371.

- 18. Gerrity MS, DeVellis RF, Earp J. Physicians’ reactions to uncertainty in patient care. Med Care. 1990;28(8):724–36. doi: 10.1097/00005650-199008000-00005.

- 19. Gerrity MS, White KP, DeVellis RF, Dittus RS. Physicians’ reactions to uncertainty: refining the constructs and scales. Motivation and Emotion. 1995;19(3):175–91.

- 20. D’Orsi CJ, Bassett LW, Feig SA, et al. Breast Imaging Reporting and Data System. 3. Reston (VA): American College of Radiology; 1998.

- 21. Taylor SE, Peplau LA, Sears DO, Peplau AL. Social Psychology. 11. Englewood Cliffs (NJ): Prentice Hall; 2002.

- 22. Gallagher TH, Waterman AD, Ebers AG, Fraser VJ, Levinson W. Patients’ and physicians’ attitudes regarding disclosure of medical errors. JAMA. 2003;289(8):1001–7. doi: 10.1001/jama.289.8.1001.

- 23. Lerman C, Rimer BK, Jepson C, Brody D, Boyce A. Psychological side effects of breast cancer screening. Health Psychol. 1991;10(4):259–326. doi: 10.1037//0278-6133.10.4.259.

- 24. Lerman C, Trock B, Rimer BK, Boyce A, Jepson C, Engstrom PF. Psychological and behavioral implications of abnormal mammograms. Ann Intern Med. 1991;114(8):657–61. doi: 10.7326/0003-4819-114-8-657.

- 25. Gram IT, Lund E, Slenker SE. Quality of life following a false positive mammogram. Br J Cancer. 1990;62:1018–22. doi: 10.1038/bjc.1990.430.

- 26. D’Orsi C, Tu SP, Nakano C, et al. Realities of Delivering Mammography in the Community: Challenges with Staffing and Scheduling. doi: 10.1148/radiol.2352040132. Unpublished manuscript.