A psychologist’s task is to discover facts about the mind by measuring responses at the level of a person (e.g., reaction times, perceptions, eye or muscle movements, or bodily changes). A neuroscientist’s task is to make similar discoveries by measuring responses from neurons in a brain (e.g., electrical, magnetic, blood flow, or chemical measures related to neurons firing). Both psychologists and neuroscientists use ideas (in the form of concepts, categories, and constructs) to transform their measurements into something meaningful. The relation between any set of numbers (reflecting a property of the person, or the activation in a set of neurons, a circuit, or a network) and a psychological construct is a psychometric issue that is formalized as a “measurement model.” The relation is also a philosophical act. Scientists (both neuroscientists and psychologists) who make such inferences, but don’t explicitly declare their measurement models, are still doing philosophy, but they are doing it in stealth, enacting certain assumptions that are left unsaid.

In “Mind the Gap,” Kievit and colleagues (this issue) take the admirable step of trying to unmask the measurement models that lurk within two well-defined traditions for linking the actions of neurons to the actions of people. They translate identity theory and supervenience theory into popular measurement models that exist in psychometric theory using the logic and language of structural equation modeling. By showing that both philosophical approaches can be represented as models that relate measurements to psychological constructs, Kievit et al. lay bare the fact that all measurement questions are also philosophical questions about how variation in numbers hints at or points to reality. They make the powerful point that translating philosophical assumptions into psychometric terms allows both identity theory and supervenience theory to be treated like hypotheses that can be empirically evaluated and compared in a more or less concrete way. The empirical example offered by Kievit et al. (linking intelligence to brain volume) is somewhat simplistic on both the neuroscience and psychological ends of the equation, and the nitty-gritty details of applying an explicit measurement approach to more complex data remain open, but this article represents a big step forward in negotiating the chasm between measures taken at the level of the brain and those taken at the level of the person.

The overall approach is to be applauded, but a closer look at the details of how Kievit et al. operationalized identity and supervenience theory is in order. In science, as in philosophy, the devil is in the details. In the pages that follow, I highlight a few lurking demons that haunt the Kievit et al. approach. I don’t point out every idea that I take issue with in the article, just as I don’t congratulate every point of agreement. Instead, I focus on a few key issues in formalizing identity and supervenience theory, with an eye to asking whether they are really all that different in measurement terms, as well as whether standard psychometric models can be used to operationalize each of them equally well. Like Kievit et al., I conclude that a supervenience theory might win the day, but I try to get more specific about a version of supervenience that would successfully bridge the gap between the brain and the mind.

What Is the Correct Measurement Model for Identity Theory?

The first issue to consider is whether Kievit et al. provided the correct measurement formalization for identity theory. Identity theories define the mental in terms of the physical (i.e., they ontologically reduce mental states to states of the nervous system). Right off the bat, this contradicts Kievit et al.’s claim that neural and psychological measures are on equal footing. As a consequence, I operationalize identity theory slightly differently than do Kievit et al. Also, there are two versions of mind:brain identity: type identity and token identity. When discussing identity theory, Kievit et al. explicated the type version, but the token version is important to consider because it blurs the distinction between identity and supervenience.

The type version of identity theory assumes that psychological kinds are physical kinds (e.g., Armstrong, 1968; Place, 1956; Smart, 1959). It assumes that the mind is populated by abstract categories for different mental faculties (like emotion, memory, perception, intelligence, etc.) and correspondence between mind and brain resides at the level of the abstract category. Type identity theories of emotion (e.g., “basic emotion” models and some appraisal models), for example, assume that certain emotion categories (e.g., fear) can be reduced to the activation of one and only one brain region (e.g., Calder, 2003; Ekman, 1999), one specific neural circuit (e.g., Izard, in press; Panksepp, 1998), or one physiological state (e.g., Ekman & Cordano, in press; Levenson, in press). Sometimes types are conceived of as natural kinds (e.g., Panksepp, 2000) or sets (e.g., as in families of emotion; Ekman, 1992). In the extreme, type identity theories do away with mental concepts altogether because they can be merely redefined in terms of their physical causes (e.g., Feyerabend, 1963).1

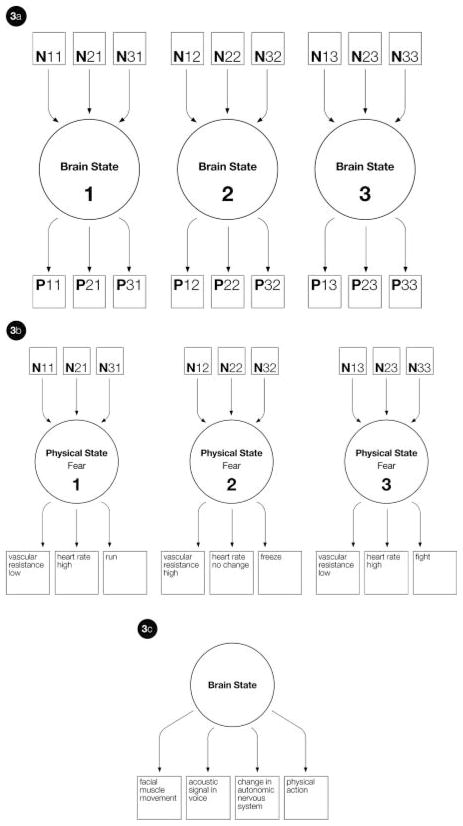

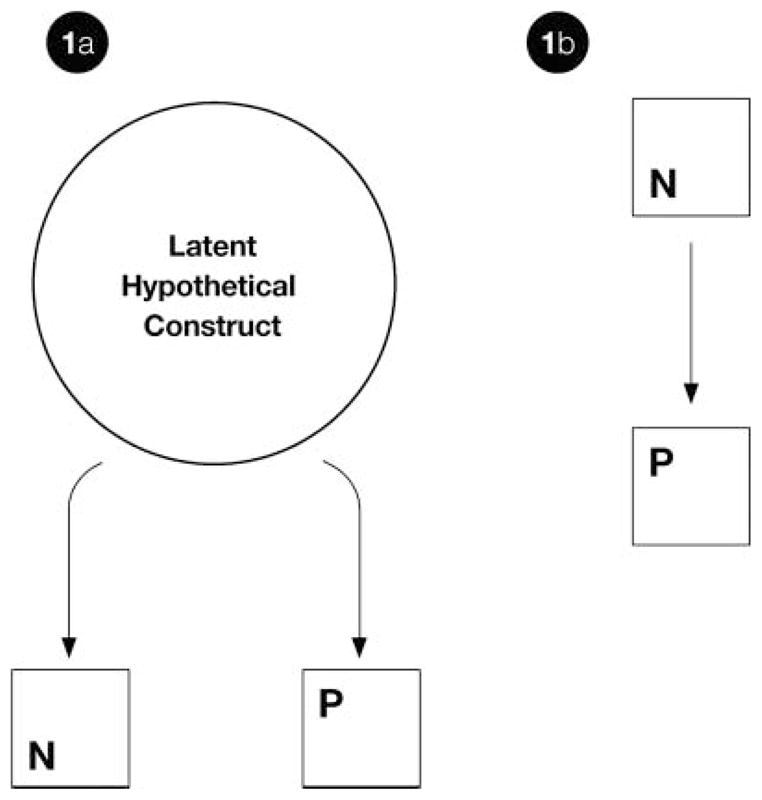

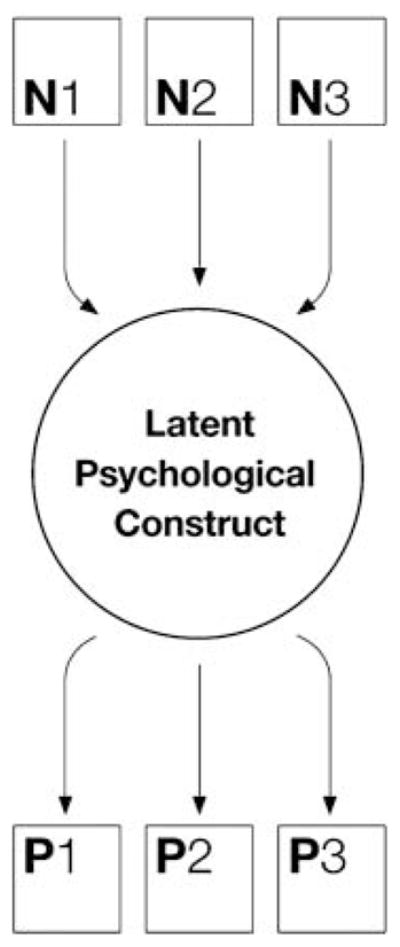

Kievit et al. formalize type identity theory using a measurement model called an effect indicator model (also called a reflective model; Bollen & Lennox, 1991), as in Figure 1a. In this kind of a model, the circle represents a hypothetical but not directly observable (i.e., latent) construct that is estimated by observed, measurable variables represented by the squares (Kievit et al. label the squares as “N” and “P” for neural and psychological measures, respectively). In an effect indicator model, the measured variables correlate with each other perfectly (barring measurement error) because they have a common cause (the hypothetical construct). Statistically, their observed correlation is taken as evidence that the hypothetical construct exists (because it cannot be measured directly by its nature or given the limits of existing measurement tools); mathematically, this shared correlation estimates the value of the latent hypothetical construct. The observed measures are said to be indicators or reflections of that hypothetical construct. In Kievit et al.’s view, this hypothetical construct is akin to a psychological faculty, like intelligence (their example), or presumably emotion, memory, perception, and the like.
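The common-cause logic of an effect indicator model can be illustrated with a small simulation. The sketch below is not Kievit et al.’s analysis; the loading, the noise level, the sample size, and all function names are assumptions chosen purely for illustration. It generates a latent construct, derives one neural indicator (N) and one psychological indicator (P) from it, and shows that the two indicators correlate perfectly only when measurement error is removed.

```python
import random

def pearson(x, y):
    """Pearson correlation computed from scratch (standard library only)."""
    n = len(x)
    mx, my = sum(x) / n, sum(y) / n
    cov = sum((a - mx) * (b - my) for a, b in zip(x, y))
    var_x = sum((a - mx) ** 2 for a in x)
    var_y = sum((b - my) ** 2 for b in y)
    return cov / (var_x * var_y) ** 0.5

def simulate_effect_indicators(n_obs=5000, loading=0.8, noise_sd=0.6, seed=0):
    """Simulate a latent construct and two indicators (N and P) that reflect it.

    All parameter values are hypothetical, not estimates from any data set.
    """
    rng = random.Random(seed)
    latent = [rng.gauss(0, 1) for _ in range(n_obs)]
    # Each indicator = loading * latent value + independent measurement error.
    neural = [loading * v + rng.gauss(0, noise_sd) for v in latent]
    psych = [loading * v + rng.gauss(0, noise_sd) for v in latent]
    return latent, neural, psych

# With measurement error, the indicators correlate only because of the shared cause.
_, neural, psych = simulate_effect_indicators()
r_noisy = pearson(neural, psych)

# Without measurement error, the correlation is perfect.
_, neural_clean, psych_clean = simulate_effect_indicators(noise_sd=0.0)
r_clean = pearson(neural_clean, psych_clean)
```

Under these assumed values the noisy correlation should fall near loading² / (loading² + noise_sd²) = 0.64, which is the sense in which the shared correlation among indicators carries the information about the latent construct.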

Figure 1.

Simple measurement models for type identity theory. Note. (a) Kievit et al.’s measurement model for identity theory. Circles refer to latent constructs. Squares refer to measured variables. N and P refer to neural and psychological measurements, respectively. (b) A revised measurement model for type identity theory.

It is unclear, however, whether an effect indicator model is the correct measurement model for type identity theory, largely because a hypothetical construct might not be needed. If mental states are nothing more than physical states (in this case, states of the nervous system), so that one (the mental) can be ontologically reduced to the other (the physical), then measures of the person and of the brain both result from the same underlying cause—the state of the nervous system at the time of measurement. Because that state is captured by the neural measures, the hypothetical construct is superfluous (at least in principle, assuming you have adequate measures). Neural measurements of that state can be said to directly bring the psychological measurements into existence (i.e., to cause them), so all you need is a zero-order correlation between the two to make your point, as in Figure 1b. For example, in emotion, if psychological measures are caused by activation in some brain region, circuit, or measure of some brain state, then measures of facial muscle movement, acoustical changes in vocalizations, physical actions, and changes in autonomic measures are directly reducible to neural firing in type identity theory.

The original conception of a “hypothetical construct” also makes us more confident that such a construct is not needed in type identity theory. In 1948, as psychology was starting to struggle its way free from behaviorism, MacCorquodale and Meehl (1948) clarified the idea of a hypothetical latent construct as a process or event that is allegedly real, whose existence is inferred based on a set of observed empirical relations between measurements, but whose existence cannot be reduced to those relations. According to MacCorquodale and Meehl, a hypothetical latent construct is not merely abstracted from a set of observable measures; it relates those measures to one another by adding something (“a fictitious substance or process or idea”; p. 46). Such a latent construct has what Reichenbach (1938) called “surplus meaning”; it is a hypothesis that something exists, even if that something cannot be measured directly. The processes or events are not necessarily unobservable in principle; they could be unobservable at the moment due to temporary ignorance or a lack of sophistication in measurement or mathematical modeling. In fact, MacCorquodale and Meehl explicitly assume that a hypothetical construct includes inner (by which they mean neural) events that will someday be discovered (e.g., 1948, pp. 105–106). Once the inner (neural) events are specified, a theoretical construct with surplus meaning is no longer necessary to translate type identity theory into a measurement model. The “underlying attribute,” as Kievit et al. call it, is the state of the nervous system.2

In describing identity theory as an effect indicator model with a hypothetical latent construct as in Figure 1a, Kievit et al. assume that psychological and neural measurements are on “equal footing” because both imperfectly reflect the true state of the underlying attribute. But if we really assume that mental states can be ontologically reduced to brain states, as type identity theory does, then the two sorts of measurements are not on equal footing. Any measure of the person is dependent on the conditions of the brain, and so any measure of the brain will have causal ascendancy. (In a certain sense, this has to be correct: unless you are a dualist, psychological measurements, in the end, have to be causally reduced to the brain. This does not mean that type identity theory is correct, however. The fact that brain states cause mental states does not mean that one should be ontologically reduced to (i.e., merely defined as nothing but) the other; I return to this issue later in the commentary.)

Figure 1b is a very simple measurement model and would need to be expanded to include multiple measures. Kievit et al. drew an expanded version of an effect indicator model that involves a hypothetical latent construct (Figure 2a), again where the latent construct is a hypothetical mental faculty (their example is intelligence). Using a similar logic to that previously laid out, however, I would draw the measurement model for type identity theory as in Figure 2b. Multiple measurements of a brain state combine to produce an estimate of that state, which in turn causes the psychological measurements. MacCorquodale and Meehl (1948) called this an “intervening variable” or an “abstractive concept.” The abstractive concept is estimated as a straightforward empirical summary of the measured variables that constitute it. This kind of construct appears in structural equation models that are called “causal indicator” or “formative” models (Bollen & Lennox, 1991). Here, neural measures are not expected to correlate with one another because they add together in a linear fashion and this aggregate realizes or constitutes the latent construct in question. Adequate measurement of the construct is dependent on measuring the correct variables. Each measure is expected to contribute unique variance to the construct, so that any small variation (again, not due to measurement error) that occurs in the brain-based measures will produce a real change in the latent construct itself (because the latent construct supervenes on the neural measures that constitute it). We might be tempted to add in a latent construct for the psychological state, as in Figure 2c, but eliminative identity theory (e.g., Feyerabend, 1963) would have us believe that the psychological can be ontologically reduced to the physical, and thus the psychological construct is superfluous.
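The defining property of a causal indicator (formative) construct, that it is a linear aggregate in which each measure makes its own contribution, can be sketched in a few lines. The weights, the number of neural measures, and the function name below are hypothetical and serve only to illustrate the idea; they are not taken from Kievit et al. or from any fitted model.

```python
import random

def formative_composite(measures, weights):
    """Abstractive latent construct: a weighted linear sum of its causal indicators."""
    return sum(w * m for w, m in zip(weights, measures))

rng = random.Random(1)
weights = [0.5, 0.3, 0.2]  # hypothetical weights, not estimated from data

# Three neural measures drawn independently: in a formative model
# the indicators are not required to correlate with one another.
observations = [[rng.gauss(0, 1) for _ in weights] for _ in range(2000)]
brain_state = [formative_composite(obs, weights) for obs in observations]

# Change only the first neural measure by one unit and recompute the construct.
shifted_state = [
    formative_composite([obs[0] + 1.0] + obs[1:], weights) for obs in observations
]

# The construct moves by exactly that measure's weight in every observation.
delta = [s - b for s, b in zip(shifted_state, brain_state)]
```

Because the construct is nothing more than the sum of its weighted parts, any real change in a single neural measure changes the construct itself, which is why omitting a relevant measure changes what is being estimated.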

Figure 2.

Elaborated models for type identity theory with multiple measurements. Note. (a) Kievit et al.’s model. (b) A revised measurement model. (c) An extended model including both neural and psychological latent constructs that reflect abstract, universal types (although strictly speaking, the psychological construct is redundant because in identity theory, the mental is ontologically reduced to the physical).

The philosophical model represented in Figure 2b is elegant and intuitive, and it frames a hypothesis that psychology has been wrestling with for over a century. At this point, however, it is possible to marshal a lot of empirical evidence to show that it is not correct. There is no one single brain region, network, or broadly distributed brain state for intelligence, or for memory, or even for any type of emotion. Take, for example, the category “fear.” There are well-articulated brain circuits for the behavioral adaptation of freezing, for potentiated startle, and for behavioral avoidance (Davis, 1992; Fanselow & Poulos, 2005; Fendt & Fanselow, 1999; Kopchia, Altman, & Commissaris, 1992; LeDoux, 2007; Vazdarjanova & McGaugh, 1998). These three circuits are distinct from one another, but none of them counts as the brain circuit for the category “fear.” This means that the measurement model in Figure 2b is empirically false when the latent construct (i.e., the brain state) is assumed to reflect a mental faculty or “type.” Faculty psychology is dead and should be given a respectful burial.

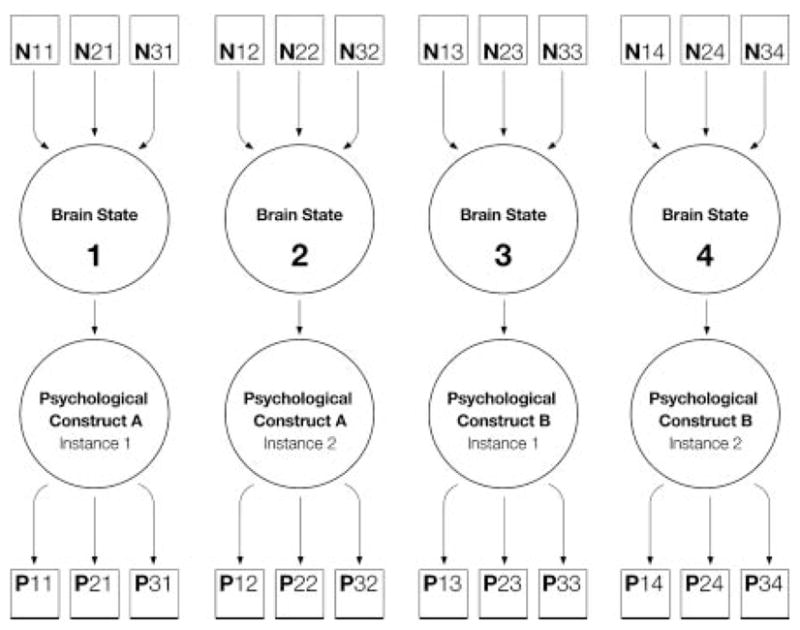

But what if the measurement model in Figure 2b were generalized to depict individual instances of a psychological category rather than the category as an entity, as in Figure 3a? That is, what if the latent construct referred to a mental state, rather than a type of mental faculty? In Figure 3a, each set of neural measurements constitutes an abstractive latent construct corresponding to a different brain state, which then produces a set of psychological measurements for a particular mental instance. These various brain states could all be variations within the same abstract psychological category. This is the token version of identity theory.

Figure 3.

Measurement models for token identity theory. Note. (a) A general depiction. (b) Examples of three different instances of the category “fear.” (c) A depiction of James’s (1890) token identity theory of emotion; the brain state produces a set of outcomes that are then perceived and experienced as emotion (note that Lange’s 1887 version of this model is a type identity model).

The token version of identity theory gives no ontological power to abstract psychological categories. Instead, it assumes that individual instances of a category (“tokens”) are equivalent to individual states of the nervous system (e.g., Davidson, 1980; Fodor, 1974; Taylor, 1967). Continuing with the emotion example, this is illustrated in Figure 3b. Token identity theories of emotion (e.g., James, 1890) assume that an individual instance of, say, fear, occurring in an individual person in a particular context is identical to a distinctive physical state, so that this instance of fear will be realized as its own specific state. According to James, the perception of this state is the emotion (by which he meant the experience of emotion). Other specific instances of fear in that person, or in another person, would correspond to different distinctive physical states (depicted in Figure 3c). In token identity theory, correspondence between mind and brain is thought to reside at the level of the mental instance, and abstract categories are assumed to be folk categories with limited use for scientific induction (e.g., James’s psychologist’s fallacy; James, 1890). As in type identity theory, the correlation between the neuronal and psychological measures is thought to reflect the fact that one state (the mental state) is literally identical to the other state (the neuronal state), but in token identity theory, each brain/mental state is an instance of a more abstract psychological category. There is no assumption that the variety of instances belonging to the same abstract psychological category share a physical substrate that makes them a member of that, and only that, category (James made this very point about emotion categories like anger and fear). This is why abstract psychological categories are not suitable to support scientific induction about mechanisms (although they might do a perfectly fine job at describing a phenomenon; cf. Barrett, 2009a).

Is the Measurement Model for Token Identity Theory at Odds With the Model for Supervenience Theory?

Although Kievit et al. present identity and supervenience theory as two philosophical traditions with distinct measurement models, the difference between them is less striking when using token identity theory in the comparison. There are many subtle flavors of supervenience theory (e.g., Chalmers, 1996; McLaughlin, 1997; Seager, 1991; Searle, 1992), but the central idea of this philosophical position as a perspective on mind:brain correspondence is that a given kind of mental category can be realized by multiple states of the nervous system (Putnam, 1975), as represented in Figure 4. If two mental states differ in their content, then they must also be physically different; this seems obvious. But the reverse is not true: two mental states can belong to the same type of category (a mental state at two instances within the same person, or a mental state across different people), and yet their corresponding brain states can be different. (Using the concept of Neural Darwinism [Edelman, 1987], it is possible that even the same token mental state can be realized by multiple brain states within a person, but that is beyond the scope of this commentary.) So in supervenience theory, there can be no psychological difference without a brain difference, but psychological sameness does not imply neural sameness. For example, several recent publications have offered supervenient models of emotion (Barrett, 2000, 2006b; Coan, 2010; Russell, 2003). By comparing Figure 3a (representing token identity theory) and Figure 4 (representing supervenience theory), it is clear that the two are not really inconsistent with one another. The main difference is that in token identity theory (Figure 3a), a latent psychological construct is philosophically redundant with the latent physical construct. In supervenience theory (Figure 4), the psychological construct is not superfluous. It exists and, as we will discuss shortly, it is real in a particular way, but one cannot use backward inference to infer the exact cause of something (the brain state) from its product (the mental state).
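The asymmetry at the heart of supervenience (no psychological difference without a brain difference, but psychological sameness without neural sameness) amounts to a many-to-one mapping from brain states to mental categories. The sketch below makes that mapping explicit; the state labels, the category names, and both function names are hypothetical placeholders rather than empirical claims.

```python
def supervenes(pairs):
    """True if no brain state is ever paired with two different mental categories."""
    seen = {}
    for brain_state, mental_category in pairs:
        # Record the first category seen for this brain state; any later mismatch
        # is a psychological difference without a brain difference.
        if seen.setdefault(brain_state, mental_category) != mental_category:
            return False
    return True

def multiply_realized(pairs):
    """Mental categories that are realized by more than one distinct brain state."""
    realizers = {}
    for brain_state, mental_category in pairs:
        realizers.setdefault(mental_category, set()).add(brain_state)
    return {cat for cat, states in realizers.items() if len(states) > 1}

# Hypothetical observations: (brain state label, mental category label).
observations = [
    ("state_A", "fear"),
    ("state_B", "fear"),   # same category, different brain state
    ("state_C", "anger"),
    ("state_A", "fear"),
]

holds = supervenes(observations)           # many-to-one is allowed
realized = multiply_realized(observations)

# A brain state paired with two categories would violate supervenience.
violation = supervenes(observations + [("state_C", "fear")])
```

In this toy data set supervenience holds and “fear” is multiply realized, so knowing the category does not identify which brain state produced it.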

Figure 4.

A general measurement model for supervenience theory.

Supervenience is consistent with the idea that neurons can be functionally selective for a psychological event in a given instance, even if they are not functionally specific to that psychological event. For example, we might observe consistent activation of the amygdala in the perception of fear (Lindquist, Wager, Kober, Bliss-Moreau, & Barrett, in press) and in animals who learn to anticipate a shock when presented with a tone (LeDoux, 1996), but this does not mean that we can infer the existence of fear when we observe an increase in amygdala activity (for a recent discussion, see Suvak & Barrett, 2011).
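The gap between functional selectivity and functional specificity can be made concrete with Bayes’ rule. The numbers below are invented for illustration only and are not estimates from the literature; the function name is likewise an assumption. Even if the amygdala activates in 90% of fear instances, the probability of fear given amygdala activation remains low when fear is relatively rare and the amygdala also activates in many other states.

```python
def posterior_probability(p_signal_given_state, prior_state, p_signal_given_other):
    """Bayes' rule: P(state | signal) from P(signal | state), the prior on the
    state, and the probability of the signal when the state is absent."""
    evidence = (p_signal_given_state * prior_state
                + p_signal_given_other * (1.0 - prior_state))
    return p_signal_given_state * prior_state / evidence

# Hypothetical values: amygdala activation is very likely during fear (0.9),
# fear is present in 10% of sampled moments, and the amygdala also activates
# in half of all non-fear moments.
p_fear_given_amygdala = posterior_probability(
    p_signal_given_state=0.9,
    prior_state=0.1,
    p_signal_given_other=0.5,
)
```

Under these assumed values the posterior is 0.09 / 0.54, or about 0.17, which is why consistent activation during fear does not license inferring fear from activation.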

Although there are many varieties of supervenience theory, here we are concerned with two: constitutive and causal (Searle, 1992; similar to Chalmers’s distinction between logical and natural supervenience or Seager’s distinction between constitutive and correlational supervenience). The difference between constitutive and causal supervenience lies in the nature of the latent psychological state: is it elemental or emergent?

Constitutive supervenience is typically used to show that higher level properties (of the mind) can be directly derived from the properties or features of the lower level causes (in the brain). Facts about the mind merely redescribe facts about the brain, so that the latent psychological construct in Figure 4 is abstractive—it is a psychological label that names a state that merely intervenes between the brain state and the behavioral measures. So from the perspective of constitutive supervenience theory, Figure 4 depicts the assumption that neural measurements constitute the brain state that can then be redescribed as a psychological state that is measured by a set of person-level variables.

Causal supervenience is the more typical version of supervenience theory and is used to show how higher level properties of the mind depend on lower level properties of the brain without being reduced to them (i.e., to show how higher level constructs can be causally reduced to these lower level constructs without being ontologically reduced to or merely defined in terms of them). In causal supervenience, the latent psychological construct in Figure 4 would be hypothetical in nature, that is, a psychological state that is more than the sum of its parts (this is easier to see in Figure 7, which is an elaboration of Figure 4). Some kind of law is required to get from the physical (neural measurements) to the mental (psychological measurements), and here the concept of emergence is usually invoked. Emergence typically arises from a complex system where the collective behavior of a large assembly of more simple elements produces system-level properties. These novel properties are irreducible (they are distinct in existence from the more basic elements that give rise to them) and unpredictable (not due to temporary ignorance but because the starting values, context, and the interactions between more basic elements produce probabilistic outcomes). Emergent properties are also conceptually novel, in the sense that the assembly of more basic elements can be described effectively only by introducing a new concept that is ontologically new with respect to the more basic elements (i.e., the concept does not exist at the lower level of the elements). Sometimes, but not always, the emergent phenomenon must have causal powers that the lower level elements do not (this issue is typically discussed as the question of downward causation; Campbell, 1974; Kim, 1999). Many psychological phenomena have been described as emergent (McClelland, 2010), including emotion (e.g., Barrett, 2006b; Clore & Ortony, 2008; Coan, 2010).

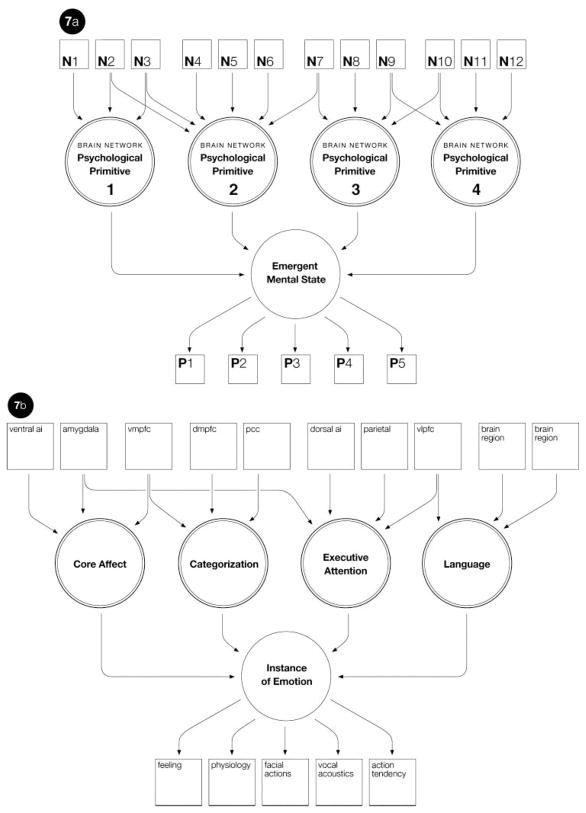

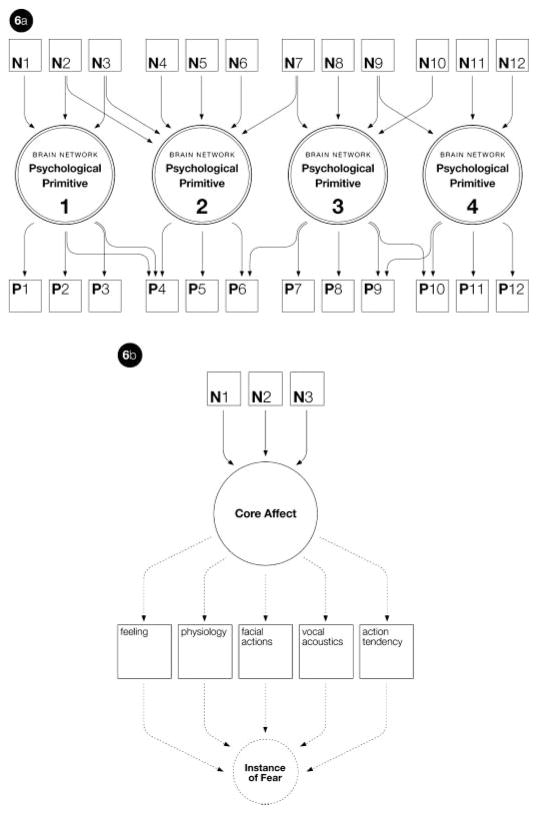

Figure 7.

Measurement models for emergent psychological construction. Note. (a) A general measurement model for an emergent approach to psychological construction. (b) A partial example of Barrett’s (2006b) psychological construction model of emotion (for a partial justification of the brain regions listed, see Lindquist et al., in press); ai = anterior insula; vmpfc = ventromedial prefrontal cortex; dmpfc = dorsomedial prefrontal cortex; pcc = posterior cingulate cortex; vlpfc = ventrolateral prefrontal cortex.

It is not clear whether Kievit et al. had constitutive or emergent psychological constructs in mind when they formalized supervenience theory as neural measures instantiating a latent psychological construct within a causal indicator model (their depiction is represented here in Figure 5). This lack of clarity occurs because it is not possible to empirically distinguish between the two versions of supervenience theory using the mathematics of a causal indicator model (in either Figure 4 or Figure 5). In principle, a latent psychological construct can be determined by the constellation of neural measurements either directly in an additive sense (as in an abstractive construct) or via some transformation in the emergent sense (as in a hypothetical construct). In practice, however, the latent constructs in a causal indicator measurement model must be, necessarily, only abstractive; this is because, mathematically, the construct is realized by a linear combination of its elements. The math does not yet exist to estimate an emergent phenomenon using structural equation modeling. An emergent property is a system-level property that is dependent on the organization of the system’s elements or parts, and there is nothing in a causal indicator measurement model that allows us to model the configurations that produce emergence (not even interactions between the constructs). So a causal indicator model can be used to depict emergence in a heuristic sense (e.g., Barrett, 2000, 2006a; Coan, 2010), but not in an actual mathematical sense.

Figure 5.

Kievit et al.’s measurement model for supervenience theory.

If the math does not exist to model emergent phenomena within traditional psychometric approaches, then this might present a limitation for using those approaches to formalize philosophy as measurement models. This would be a real scientific setback, because it means we don’t have the statistical tools we need to test our theoretical ideas. I suspect that in the end, new mathematical formulations will be necessary to properly map the brain to the mind. For the present, however, it makes sense to forge ahead with the mathematical tools that are available to most psychologists. Perhaps we can better formalize the philosophy of mind:brain correspondence by using a different set of psychological constructs that allow for a more tractable and testable model and that can be adapted later to better reflect the theoretical idea of emergence.

What Are the Best Categories for Use in Bridging the Gap Between Mind and Brain?

To try to understand how the brain creates the mind (and therefore how measurements of the brain are linked to measurements of the person), we have already established that a good first step is to discard abstract psychological categories of mental faculties for scientific use (at least in the fields of neuroscience and psychology). The earliest psychological scientists, like Wundt and James, advocated this move on logical grounds, and as noted previously in the discussion on type identity theory, a century’s worth of research now supports it. Such a move is consistent with supervenience theory, where the mental supervenes on the physical, so that multiple physical configurations can produce instances of the same psychological category (Figure 4). Emotion and cognition do not exist as natural kinds of psychological causes, nor can it be said that their interaction causes behavior (Duncan & Barrett, 2007). For psychology, such categories do not allow us to accumulate knowledge about how their instances are caused. Neither do they allow the most precise scientific predictions.

Yet a science of mind:brain correspondence that focuses on tokens (instances of mental states) also does not allow for much scientific induction or prediction. Psychologists know this, because we have been down this road before. This was one of the lessons of behaviorism. In the formative years of scientific psychology (in the late 1800s), when psychologists first realized that constructs for mental faculties like emotion, cognition, perception, intelligence, attention, and so on, could not do the work of psychological science, they moved toward a science of instances, which led them to functionalism. To have a science of instances, they tried to discover something about the specific contexts in which each instance occurred, its specific causes, and its specific effects or the outputs that derived from that instance. This is a very inefficient way of doing science, because the fine-grained descriptions would be very complicated and unrealistic to generate in any comprehensive way (Dennett, 1991).

To this inefficiency on the psychological end of the equation add also the ambiguity about the level of measurement that is needed to adequately measure the brain. Do we measure the activation of single neurons, of columns or groups of cells, of anatomical regions, networks of regions, or (like Kievit et al.) very molar measurements like brain volume? The prospect of increasing and unrelenting complexity becomes quickly overwhelming, and there appears to be no principled level of measurement that can be proclaimed (the measure usually depends on the goals and proclivities of the scientist; Barrett, 2009a; Dennett, 1991).

Historically, a science of instances also turned out to be a perilous path for psychology, because to make a science of instances more tractable, the mind was essentially defined out of existence. Behaviorism was only a stone’s throw from functionalism. Mental states were ontologically reduced to physical instances that could then be more easily measured and catalogued. As a result, a science of instances produced a false kind of psychology, because both a functionalist and a behaviorist approach to behavior failed to capture much about the mind that is scientifically useful. The mind cannot be simply reduced to easily measurable causes and effects. Its contents also have to be described. Many philosophers have made this point (but for a particularly good discussion, see Searle, 1992). For this reason, a psychological level of description is needed. To properly describe the brain’s function, we must translate it into human terms. Neurons need to be understood not only as collections of cells but also for their functions within a person’s life. A neural process becomes a mental process when it plays a role in the organism (Lewes, 1875). As Kievit et al. correctly point out, the identification of mental constructs, even if they are neurologically grounded, depends on the psychological part of the model.

So, we find ourselves in the interesting position of needing supervenience to derive the mental from the physical but also of suspecting any version of supervenience that involves psychological constructs for mental faculties of the sort that populate folk psychology. We require a translation from brain to mind that at once preserves some aspects of token identity theory and that also addresses the need for a level of description at the psychological level that does not resort to faculty psychology.

One proposed solution is a model where (1) activations in various neuronal groups combine to realize the activation of a distributed brain network that itself is redescribed in the most basic psychological terms (i.e., it is psychologically primitive), such that (2) the interplay amongst these basic ingredients realizes emergent mental states (Barrett, 2009a). This perspective, called psychological construction, hypothesizes that all mental events can be reduced to a common set of basic psychological ingredients that combine to make instances of many different psychological faculties. So, unlike a faculty psychology approach, which assumes there are “cognitive” processes that produce cognitions (e.g., a memory system that produces memories), “emotional” processes that produce emotions (e.g., a fear system that produces fear), and “perceptual” processes (e.g., a visual system that produces vision), psychological construction hypothesizes that psychological primitives are the basic elements of the mind that combine to make instances of cognition, emotion, and perception (and so on). Psychological construction models, at least in a nascent state, stretch back to the beginning of psychology (e.g., for a review see Gendron & Barrett, 2009), but because they are largely unintuitive, they are relatively rare as fully developed theories in psychology (or for mind:brain correspondence).

There are two varieties of psychological construction, one elemental and the other emergent, corresponding to the two varieties of supervenience theory. Elemental psychological construction models ontologically reduce mental categories to more basic psychological operations, as depicted in Figure 6a. Here, measures of the person reflect psychological primitives, and it is only in perception, after the fact and as a separate mental state, that humans categorize the measurable psychological event as an instance of “fear,” “memory,” “perception,” and so on. For example, Figure 6a can be adapted to represent Russell’s (2003) model of emotion as in Figure 6b, where neurons activate to produce a state of core affect (Posner, Russell, & Peterson, 2005) that can be measured by a person’s subjective feeling, autonomic physiology, facial muscle movements, and vocal acoustics. In this model, the psychological primitive “core affect” is represented as the common cause for a variety of behavioral measures, meaning that those measures should correlate strongly with one another (and in the measurement of affect, they do tend to correlate; for a review, see Barrett, 2006a).

Figure 6. Measurement models for elemental psychological construction. Note. (a) A general measurement model for an elemental approach to psychological construction. (b) An example of Russell’s (2003) psychological construction model of emotion.
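The common-cause structure of the elemental model can be made concrete with a small simulation. The sketch below is illustrative only: the loadings, the sample size, and the variable names are assumptions chosen for the example, not values taken from Russell (2003) or from any dataset. It generates one latent "core affect" score per simulated person, derives four noisy measures from it, and checks that the measures correlate with one another, which is the pattern a common-cause (reflective) measurement model predicts.

```python
import random


def pearson(x, y):
    """Pearson correlation between two equal-length lists of numbers."""
    n = len(x)
    mean_x, mean_y = sum(x) / n, sum(y) / n
    cov = sum((a - mean_x) * (b - mean_y) for a, b in zip(x, y))
    var_x = sum((a - mean_x) ** 2 for a in x)
    var_y = sum((b - mean_y) ** 2 for b in y)
    return cov / (var_x * var_y) ** 0.5


def simulate_reflective(n_people=2000, loadings=(0.8, 0.7, 0.7, 0.6), seed=0):
    """Simulate measures that share one latent common cause.

    The loadings are hypothetical; each measure is standardized so that
    measure = loading * latent + independent noise.
    """
    rng = random.Random(seed)
    names = ("subjective_feeling", "autonomic_physiology",
             "facial_muscles", "vocal_acoustics")
    data = {name: [] for name in names}
    for _ in range(n_people):
        core_affect = rng.gauss(0, 1)  # latent state, never observed directly
        for name, loading in zip(names, loadings):
            noise_sd = (1 - loading ** 2) ** 0.5
            data[name].append(loading * core_affect + rng.gauss(0, noise_sd))
    return data


measures = simulate_reflective()
names = list(measures)
# Correlation for every pair of observed measures.
correlations = {
    (a, b): pearson(measures[a], measures[b])
    for i, a in enumerate(names)
    for b in names[i + 1:]
}
for pair, r in correlations.items():
    print(pair, round(r, 2))
```

Under these assumptions, the expected correlation between any two measures is the product of their loadings, so every pair is positively correlated even though no measure influences another directly.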

Emergent psychological construction is similar to its elemental cousin, except that instances of mental categories are thought to emerge from the interplay of the more basic operations that cause them, so that the resulting mental states cannot be merely defined in terms of those more basic parts (i.e., mental instances can be causally reduced to those operations but not ontologically reduced to them), as in Figure 7a. By analogy, we can think of these basic operations as combining, like ingredients in a recipe, to produce the instances of (or token) mental states that people give commonsense names to and that correspond to instances of folk psychology categories like “emotion” (or “anger,” “sadness,” etc.), “cognition” (or “belief,” “memory,” etc.), “perception,” “intelligence,” and the like (Barrett, 2009a). Emergence cannot be easily modeled in standard psychometric models, and so it is necessary to add a hypothetical latent construct to represent the emergent mental state as the instance of a folk psychological category that is created.
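The contrast with the common-cause model can be illustrated with a second small simulation. This is a loose sketch, not the model in Figure 7: the two ingredient names, the weights, and the use of a product term to stand in for "interplay" are all assumptions made for the example. The ingredients are generated independently, and the emergent state is computed from them, so the direction of dependence runs from ingredients to state rather than from a latent state to its measures.

```python
import random


def pearson(x, y):
    """Pearson correlation between two equal-length lists of numbers."""
    n = len(x)
    mean_x, mean_y = sum(x) / n, sum(y) / n
    cov = sum((a - mean_x) * (b - mean_y) for a, b in zip(x, y))
    var_x = sum((a - mean_x) ** 2 for a in x)
    var_y = sum((b - mean_y) ** 2 for b in y)
    return cov / (var_x * var_y) ** 0.5


rng = random.Random(1)
n_instances = 2000

# Two hypothetical ingredients, generated independently of each other.
core_affect = [rng.gauss(0, 1) for _ in range(n_instances)]
categorization = [rng.gauss(0, 1) for _ in range(n_instances)]

# Hypothetical emergent state: additive contributions plus an interplay
# (product) term plus noise. The weights are illustrative assumptions.
emergent_state = [
    0.5 * a + 0.5 * c + 0.5 * a * c + rng.gauss(0, 0.3)
    for a, c in zip(core_affect, categorization)
]

# Ingredients need not correlate with one another ...
ingredient_r = pearson(core_affect, categorization)
# ... yet each one is related to the state they jointly produce.
state_affect_r = pearson(emergent_state, core_affect)

# What an additive-only account leaves unexplained tracks the interplay term.
additive_only = [0.5 * a + 0.5 * c for a, c in zip(core_affect, categorization)]
residual = [s - p for s, p in zip(emergent_state, additive_only)]
interplay = [a * c for a, c in zip(core_affect, categorization)]
residual_r = pearson(residual, interplay)

print(round(ingredient_r, 2), round(state_affect_r, 2), round(residual_r, 2))
```

In this toy setup, the ingredients are close to uncorrelated, so a model that expects strongly intercorrelated indicators would fit poorly, and the part of the state that a simple sum of ingredients misses is carried by their interaction.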

Inspired by the scope of the earliest psychological models, our lab introduced the first psychological construction approach to mind:brain correspondence that we know of and published several papers articulating the key assumptions and hypotheses of the model (Barrett, 2006b, 2009b; Barrett & Bar, 2009; Barrett & Bliss-Moreau, 2009; Barrett & Lindquist, 2008; Barrett, Lindquist, Bliss-Moreau, et al., 2007; Barrett, Lindquist, & Gendron, 2007; Barrett, Mesquita, Ochsner, & Gross, 2007; Barrett, Ochsner, & Gross, 2007; Duncan & Barrett, 2007; Gendron & Barrett, 2009; Lindquist & Barrett, 2008a, 2008b). Our working hypothesis is that every human brain contains a number of distributed networks that correspond to the basic ingredients of emotions and other mental states (like thoughts, memories, beliefs, and perceptions).3 In my lab’s model of emotion, for example (depicted, in part, in Figure 7b), categorization is treated as a psychological primitive: it is part of the emergence of an instance of emotion, not something that comes after the fact and stands apart as a separate mental event. Our model views folk psychology categories as having meaning, not as explanatory mechanisms in psychology, but as ontologically subjective categories that have functional distinctions for human perceivers in making mental state inferences that allow communicating about and predicting human action (for a discussion see Barrett, 2009a).4

In both its elemental and emergent varieties, a psychological construction approach to mind:brain correspondence is an example of what Dennett (1991) referred to as the design level of the mind. By adopting the ontology of the design level, it is possible to both support scientific induction and predict “sketchily and riskily,” as Dennett (1991, p. 199) put it. Dennett (1996) recommended that a good starting point for the ontology is the intentional stance of a human perceiver. In fact, psychology did begin with the intentional stance, and it led us astray for almost a century by having us mistakenly treat folk faculty psychology concepts as scientifically causal. If psychological states are constructed, emergent phenomena, then they will not reveal their more primitive elements, any more than a loaf of bread reveals all the ingredients that constitute it.

My lab takes the view that this ontology is a work in progress and that it can be inductively discovered by more systematically investigating how the same brain regions and networks show up across a variety of different psychological task domains (for a recent discussion, see Suvak & Barrett, 2011). In this way, it is possible to ask whether a brain region or network is performing a more basic process that is required across task domains (for a recent discussion on the related idea of “neural re-use,” see Anderson, 2010). Another important source of relevant evidence for psychological primitives comes from an inductive analysis of a meta-analytic database for neuroimaging studies of emotion (Kober et al., 2008). Using cluster analysis and multidimensional scaling, we identified six functional groupings that were consistently coactivated across neuroimaging studies of emotional experience and emotion perception. These functional groupings appear to be task-related combinations of distributed networks that exist within the intrinsic connectivity of the human brain.5 Intrinsic connectivity reveals many topographically distinct networks that appear to have distinct mechanistic functions (Corbetta & Shulman, 2002; Corbetta, Patel, & Shulman, 2008; Dosenbach et al., 2007; Seeley et al., 2007; Smith et al., 2009; Sridharan, Levitin, & Menon, 2008), some of which appear similar to the psychological ingredients we proposed in our original psychological construction models (e.g., see Barrett, 2006b, 2009a).
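The general idea of grouping regions by how consistently they are reported together across studies can be sketched in a few lines. This is not the procedure used by Kober et al. (2008): the sketch swaps in a much simpler technique (Jaccard overlap between regions, followed by single-linkage grouping at a fixed threshold), and the region labels, the study list, and the threshold are all hypothetical.

```python
from itertools import combinations

# Hypothetical toy input: the set of regions reported active in each study.
studies = [
    {"region_A", "region_B", "region_C"},
    {"region_A", "region_B"},
    {"region_A", "region_B", "region_C"},
    {"region_D", "region_E"},
    {"region_D", "region_E"},
    {"region_A", "region_D", "region_E"},
    {"region_A", "region_C"},
]


def jaccard(region_a, region_b, studies):
    """Share of studies reporting both regions among those reporting either."""
    both = sum(1 for s in studies if region_a in s and region_b in s)
    either = sum(1 for s in studies if region_a in s or region_b in s)
    return both / either if either else 0.0


def coactivation_groups(studies, threshold=0.5):
    """Single-linkage grouping: link any two regions whose overlap meets the threshold."""
    regions = sorted(set().union(*studies))
    parent = {r: r for r in regions}  # union-find forest

    def find(r):
        while parent[r] != r:
            parent[r] = parent[parent[r]]  # path halving
            r = parent[r]
        return r

    for a, b in combinations(regions, 2):
        if jaccard(a, b, studies) >= threshold:
            parent[find(a)] = find(b)

    groups = {}
    for r in regions:
        groups.setdefault(find(r), []).append(r)
    return sorted(groups.values())


groups = coactivation_groups(studies)
print(groups)
```

With this toy input, regions A, B, and C fall into one group and regions D and E into another; a single study that reports region A alongside D and E is not enough to merge the two groups at the chosen threshold.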

One advantage of a psychological construction approach is that it captures insights from both token identity and causal supervenience theories. Psychological construction honors the idea that different levels of neural measurement can be described with different psychological functions. In fact, emergence very likely occurs at other levels of the model (e.g., as neurons configure into networks or perhaps if networks combine to make psychological primitives). This is a feature, not a bug, in psychological science. Furthermore, psychological construction acknowledges that a set of neurons can be described as having one psychological function when they participate in one brain network but another function when they participate in a second network. This is not a failure of cognitive neuroscience to localize function but rather an inherent property of brain function and organization. In addition, psychological construction avoids what Dennett (1996) called the “intolerable extremes of simple realism and simple relativism” (p. 37). It also gives the science of psychology a distinct ontological value and reason for existing in the age of neuroscience (cf. Barrett, 2009a). And whereas faculty psychology categories might be completely ontologically subjective (and made real by the collective intentionality in a group of perceivers, like members of a culture; cf. Barrett, 2009a), psychological primitives might be more objective, in the sense that they correspond to brain networks that are “out there” to be detected (although perhaps not anatomically). That being said, it cannot be said that these networks are the “truest” level of brain organization in a perceiver-independent kind of way. They are not entirely independent of the goals and needs of human perceivers because they are the psychological categories that we find most useful (see Dennett, 1996, p. 39; e.g., Wilson, Gaffan, Browning, & Baxter, 2010). In this way, it is possible to discuss causal reduction without being a “neophrenologist” and entering into a kind of ontological reduction that is not tenable. Thoughts and feelings do not exist separately from the neurons that create them in the moment. But they cannot necessarily be reduced to the firing of those neurons for our purposes, either for ontological (emergent) or practical (design) reasons.

Conclusions

It has been said that although physics, chemistry, and biology might be the hard sciences, psychology is the hardest science of all, because in psychology we must make inferences about the mental from measures of the physical. Anything that helps scientists to be explicit about their philosophical assumptions in making these inferences, and forces them to be clearer about the manner and viability of testing their own hypotheses, is a valuable tool. In this regard, Kievit et al.’s article was an inspiration to take the ideas of psychological construction and attempt to formalize them as measurement models. This attempt will hopefully increase the likelihood that experiments will be conducted that can properly test those models. For example, the majority of studies that have been published on emotion thus far do not involve anything other than the most typical instances of emotion and so cannot be used to properly test the basic tenets of psychological construction. Published research often treats emotions as elemental entities rather than as end states to be created and deconstructed. Studies relevant to psychological construction are just now starting to be run and published (Lindquist & Barrett, 2008b; Wilson-Mendenhall, Barrett, Simmons, & Barsalou, in press), and we hope that clear measurement models will encourage more research and future mathematical developments (for an interesting example of using intrinsic networks to explain a psychological task, see Spreng, Stevens, Chamberlain, Gilmore, & Schacter, 2010).

Kievit et al.’s article is also valuable because it reminds us that there is no “value-free” way to describe the relation between measures of the brain and measures of the mind. This observation is not specific to understanding mind:brain correspondence, of course. It is true whenever scientists take physical measurements and make psychological inferences from them. This is because psychological constructs are not real in the natural sense; they are real in a subjective sense, and therefore they are subject to human goals and concerns. It is perfectly possible to have a mathematically sophisticated science of the subjectively real (just look at economics).

Still, challenges remain for Kievit et al.’s approach. Foremost is, practically speaking, the scalability of their approach. Their versions of identity and supervenience theory were tested using very molar measures (e.g., brain volume), but it is an open question how well this approach will work with other measures of the nervous system. Another challenge is the mathematics of standard psychometric theory. There is a lot we can do to test our philosophical notions with what we have, but the idea that might turn out to have the most scientific traction (emergence) seems to require a different set of statistical models to properly test it.

Perhaps more important than the practical obstacles is an emotional one that some might experience when reading Kievit et al.’s article. When we, as scientists, do not formalize our measurement models to lay bare our underlying philosophy of science, we are free to say (or write) one thing and mean another. And sometimes we do just that, not because of any ill intent but more because we are unaware of the philosophical implications of how we are interpreting our data. When measurement models are excavated for their philosophical foundations, however, people have fewer opportunities for inconsistency. Being forced to be explicit in our assumptions, perhaps in their starkest form, allows us to realize that we might actually mean something other than what we intend. We give up our wiggle room. This can sometimes cause hard feelings, just as when a therapist acts as a mirror to reveal a self-deception. One defense, in science, is to invoke the notorious “straw man” argument. But discomfort is not always a sign that someone intends harm; sometimes it is simply an indication that our own deeply held beliefs require a closer look, a little more deliberation, and even a change in point of view. What is intuitive is always more comfortable than what is not. But in science, such comfort rarely indicates that an idea or hypothesis is true.

Acknowledgments

Preparation of this article was supported by the National Institutes of Health Director’s Pioneer Award (DP1OD003312), a grant from the National Institute on Aging (AG030311), and a contract with the U.S. Army Research Institute for the Behavioral and Social Sciences (contract W91WAW-08-C-0018). The views, opinions, and/or findings contained in this article are solely those of the author(s) and should not be construed as an official Department of the Army or DOD position, policy, or decision. Thanks to the members of my Interdisciplinary Affective Science Laboratory for stimulating discussions on the link between philosophy of mind:brain correspondence and psychological construction.

Footnotes

1. In the emotion example, some older emotion models (e.g., Dewey, 1895) and some newer ones (e.g., LeDoux, 1996) ontologically reduce emotions to physical states and set aside as ontologically separate the experience of emotion as the conscious feeling of this physical state (in these models, emotion experience would be called a “nomological dangler,” a mental phenomenon that does not function as a cause for any observable behavior; but see Baumeister & Masicampo, 2010).

2. Frankly, from the standpoint of identity theory, it is not clear what the “surplus meaning” would actually refer to. What is the nature of the nonobserved events or processes that are causing both the neural and the psychological measurements?

3. Taking inspiration from connectionist and network approaches to the brain (e.g., Fuster, 2006; Mesulam, 1998; O’Reilly & Munakata, 2000; Poldrack, Halchenko, & Hanson, 2009; Raichle & Snyder, 2007; Seeley et al., 2007; Smith et al., 2009), we hypothesized that basic psychological ingredients correspond to distributed functional networks of brain regions. Like ingredients in a recipe, the weighting and contribution of each network is predicted to vary across instances of each psychological category, or even across instances within the same category. One possibility is that these brain networks have intrinsic connectivity (i.e., show correlated activity during mental activity that is not triggered by an external stimulus). Another possibility is that these networks have dynamic functional connectivity (i.e., producing neural assemblies that routinely emerge in response to an external stimulus). The central idea, however, is that psychology’s job is to identify and understand these basic psychological functions, whereas neuroscientific investigations can reveal the underlying brain basis of these psychological ingredients.

4. Complex psychological categories like emotion, cognition, perception, intelligence, and so on, are not real in an objective sort of way; they derive their reality from the intentionality that is shared by a group of people (i.e., they are folk categories that are ontologically subjective; for a discussion, see Barrett, 2009a). Therefore, they might retain their scientific use as descriptions of mental states that require explanation, or in sociology and other social sciences that occupy different positions in the ontological hierarchy of sciences, but they do not themselves correspond to mental mechanisms.

5. Intrinsic connectivity networks are identified by examining correlations in low-frequency signals in fMRI data recorded when there is no external stimulus or task (hence the misnomer “resting state” or “default” activity; Beckmann, DeLuca, Devlin, & Smith, 2005; Biswal, Yetkin, Haughton, & Hyde, 1995; Buckner & Vincent, 2007; Greicius, Krasnow, Reiss, & Menon, 2003; Fox et al., 2005). The temporal dynamics of these low-frequency signals reveal networks of regions that increase and decrease in their activity together in a correlated fashion.

References

- Anderson ML. Neural reuse: A fundamental organizational principle of the brain. Behavioral and Brain Sciences. 2010;33:245–313. doi: 10.1017/S0140525X10000853.

- Armstrong DM. A materialist theory of the mind. London: Routledge; 1968.

- Barrett LF. Modeling emotion as an emergent phenomenon: A causal indicator analysis. Paper presented at the annual meeting of the Society for Personality and Social Psychology; Nashville, TN. 2000, Feb. Retrieved from http://www.affective-science.org/publications.shtml

- Barrett LF. Emotions as natural kinds? Perspectives on Psychological Science. 2006a;1:28–58. doi: 10.1111/j.1745-6916.2006.00003.x.

- Barrett LF. Solving the emotion paradox: Categorization and the experience of emotion. Personality and Social Psychology Review. 2006b;10:20–46. doi: 10.1207/s15327957pspr1001_2.

- Barrett LF. The future of psychology: Connecting mind to brain. Perspectives on Psychological Science. 2009a;4:326–339. doi: 10.1111/j.1745-6924.2009.01134.x.

- Barrett LF. Variety is the spice of life: A psychological construction approach to understanding variability in emotion. Cognition & Emotion. 2009b;23:1284–1306. doi: 10.1080/02699930902985894.

- Barrett LF, Bar M. See it with feeling: Affective predictions in the human brain. Philosophical Transactions of the Royal Society of London Series B, Biological Sciences. 2009;364:1325–1334. doi: 10.1098/rstb.2008.0312.

- Barrett LF, Bliss-Moreau E. Affect as a psychological primitive. Advances in Experimental Social Psychology. 2009;41:167–218. doi: 10.1016/S0065-2601(08)00404-8.

- Barrett LF, Lindquist K. The embodiment of emotion. In: Semin G, Smith E, editors. Embodied grounding: Social, cognitive, affective, and neuroscience approaches. New York, NY: Cambridge University Press; 2008. pp. 237–262.

- Barrett LF, Lindquist K, Bliss-Moreau E, Duncan S, Gendron M, Mize J, et al. Of mice and men: Natural kinds of emotion in the mammalian brain? Perspectives on Psychological Science. 2007;2:297–312. doi: 10.1111/j.1745-6916.2007.00046.x.

- Barrett LF, Lindquist K, Gendron M. Language as a context for emotion perception. Trends in Cognitive Sciences. 2007;11:327–332. doi: 10.1016/j.tics.2007.06.003.

- Barrett LF, Mesquita B, Ochsner KN, Gross JJ. The experience of emotion. Annual Review of Psychology. 2007;58:373–403. doi: 10.1146/annurev.psych.58.110405.085709.

- Barrett LF, Ochsner KN, Gross JJ. On the automaticity of emotion. In: Bargh J, editor. Social psychology and the unconscious: The automaticity of higher mental processes. New York, NY: Psychology Press; 2007. pp. 173–218.

- Baumeister RF, Masicampo EJ. Conscious thought is for facilitating social and cultural interactions: Mental simulations serve the animal–culture interface. Psychological Review. 2010;117:945–971. doi: 10.1037/a0019393.

- Beckmann CF, DeLuca M, Devlin JT, Smith SM. Investigations into resting-state connectivity using independent component analysis. Philosophical Transactions of the Royal Society of London Series B, Biological Sciences. 2005;360:1001–1013. doi: 10.1098/rstb.2005.1634.

- Biswal B, Yetkin F, Haughton V, Hyde J. Functional connectivity in the motor cortex of resting human brain using echo-planar MRI. Magnetic Resonance in Medicine. 1995;34:537–541. doi: 10.1002/mrm.1910340409.

- Bollen KA, Lennox R. Conventional wisdom on measurement: A structural equation perspective. Psychological Bulletin. 1991;110:305–314.

- Buckner RL, Vincent JL. Unrest at rest: Default activity and spontaneous network correlations. NeuroImage. 2007;37:1091–1096. doi: 10.1016/j.neuroimage.2007.01.010.

- Calder AJ. Disgust discussed. Annals of Neurology. 2003;53:427–428. doi: 10.1002/ana.10565.

- Campbell DT. “Downward causation” in hierarchically organized biological systems. In: Ayala FJ, Dobzhansky T, editors. Studies in the philosophy of biology. Berkeley, CA: University of California Press; 1974. pp. 179–186.

- Chalmers DJ. The conscious mind. New York, NY: Oxford University Press; 1996.

- Clore GL, Ortony A. Appraisal theories: How cognition shapes affect into emotion. In: Lewis M, Haviland-Jones JM, Barrett LF, editors. Handbook of emotions. 3rd ed. New York, NY: Guilford; 2008. pp. 628–642.

- Coan JA. Emergent ghosts of the emotion machine. Emotion Review. 2010;2:274–285.

- Corbetta M, Shulman GL. Control of goal-directed and stimulus-driven attention in the brain. Nature Reviews Neuroscience. 2002;3:215–229. doi: 10.1038/nrn755.

- Corbetta M, Patel G, Shulman GL. The reorienting system of the human brain: From environment to theory of mind. Neuron. 2008;58:306–324. doi: 10.1016/j.neuron.2008.04.017.

- Davidson D. The material mind. In: Davidson D, editor. Essays on action and events. New York, NY: Oxford University Press; 1980. pp. 245–260.

- Davis M. The role of the amygdala in fear and anxiety. Annual Review of Neuroscience. 1992;15:353–375. doi: 10.1146/annurev.ne.15.030192.002033.

- Dennett DC. Real patterns. The Journal of Philosophy. 1991;88:27–51.

- Dennett DC. The intentional stance. Cambridge, MA: MIT Press; 1996.

- Dewey J. The theory of emotion. II. The significance of emotions. Psychological Review. 1895;2:13–32.

- Dosenbach NU, Fair DA, Miezin FM, Cohen AL, Wenger KK, Dosenbach RAT, et al. Distinct brain networks for adaptive and stable task control in humans. Proceedings of the National Academy of Sciences. 2007;104:11073–11078. doi: 10.1073/pnas.0704320104.

- Duncan S, Barrett LF. Affect as a form of cognition: A neurobiological analysis. Cognition and Emotion. 2007;21:1184–1211. doi: 10.1080/02699930701437931.

- Edelman G. Neural Darwinism. The theory of neuronal group selection. New York, NY: Basic; 1987.

- Ekman P. An argument for basic emotions. Cognition and Emotion. 1992;6:169–200.

- Ekman P. Basic emotions. In: Dalgleish T, Power MJ, editors. Handbook of cognition and emotion. New York, NY: Wiley; 1999. pp. 46–60.

- Ekman P, Cordaro D. What is meant by calling emotion basic. Emotion Review. In press.

- Fanselow MS, Poulos AM. The neuroscience of mammalian associative learning. Annual Review of Psychology. 2005;56:207–234. doi: 10.1146/annurev.psych.56.091103.070213.

- Fendt M, Fanselow MS. The neuroanatomical and neurochemical basis of conditioned fear. Neuroscience and Biobehavioral Reviews. 1999;23:743–760. doi: 10.1016/s0149-7634(99)00016-0.

- Feyerabend PK. Comment: Mental events and the brain. Journal of Philosophy. 1963;60:295–296.

- Fodor JA. Special sciences. Synthese. 1974;28:97–115.

- Fox MD, Snyder AZ, Vincent JL, Corbetta M, Van Essen DC, Raichle ME. The human brain is intrinsically organized into dynamic, anticorrelated functional networks. Proceedings of the National Academy of Sciences. 2005;102:9673–9678. doi: 10.1073/pnas.0504136102.

- Fuster JM. The cognit: A network model of cortical representation. International Journal of Psychophysiology. 2006;60:125–132. doi: 10.1016/j.ijpsycho.2005.12.015.

- Gendron M, Barrett LF. Reconstructing the past: A century of ideas about emotion in psychology. Emotion Review. 2009;1:1–24. doi: 10.1177/1754073909338877.

- Greicius MD, Krasnow B, Reiss AL, Menon V. Functional connectivity in the resting brain: A network analysis of the default mode hypothesis. Proceedings of the National Academy of Sciences. 2003;100:253–258. doi: 10.1073/pnas.0135058100.

- Izard C. Forms and functions of emotions: Matters of emotion-cognition interactions. Emotion Review. In press.

- James W. The principles of psychology. New York, NY: Holt; 1890.

- Kim J. Making sense of emergence. Philosophical Studies. 1999;95:3–36.

- Kober H, Barrett LF, Joseph J, Bliss-Moreau E, Lindquist KA, Wager TD. Functional networks and cortical-subcortical interactions in emotion: A meta-analysis of neuroimaging studies. NeuroImage. 2008;42:998–1031. doi: 10.1016/j.neuroimage.2008.03.059.

- Kopchia KL, Altman HJ, Commissaris RL. Effects of lesions of the central nucleus of the amygdala on anxiety-like behaviors in the rat. Pharmacology, Biochemistry, and Behavior. 1992;43:453–461. doi: 10.1016/0091-3057(92)90176-g.

- LeDoux JE. The emotional brain: The mysterious underpinnings of emotional life. New York, NY: Simon & Schuster; 1996.

- LeDoux J. The amygdala. Current Biology. 2007;17:R868–R874. doi: 10.1016/j.cub.2007.08.005.

- Levenson R. Basic emotion questions. Emotion Review. In press.

- Lewes GH. Problems in life and mind. Vol. 2. London, UK: Kegan Paul, Trench, Trübner, & Co; 1875.

- Lindquist K, Barrett LF. Constructing emotion: The experience of fear as a conceptual act. Psychological Science. 2008a;19:898–903. doi: 10.1111/j.1467-9280.2008.02174.x.

- Lindquist K, Barrett LF. Emotional complexity. In: Lewis M, Haviland-Jones JM, Barrett LF, editors. The handbook of emotion. 3rd ed. New York, NY: Guilford; 2008b. pp. 513–530.

- Lindquist KA, Wager TD, Kober H, Bliss-Moreau E, Barrett LF. The brain basis of emotion: A meta-analytic review. Behavioral and Brain Sciences. In press. doi: 10.1017/S0140525X11000446.

- MacCorquodale K, Meehl PE. On a distinction between hypothetical constructs and intervening variables. Psychological Review. 1948;55:95–107. doi: 10.1037/h0056029.

- McClelland JL. Emergence in cognitive science. Topics in Cognitive Science. 2010;2:751–770. doi: 10.1111/j.1756-8765.2010.01116.x.

- McLaughlin B. Emergence and supervenience. Intellectica. 1997;25:25–43.

- Mesulam MM. From sensation to cognition. Brain. 1998;121(Pt 6):1013–1052.

- O’Reilly RC, Munakata Y. Computational explorations in cognitive neuroscience. Cambridge, MA: MIT Press; 2000.

- Panksepp J. Affective neuroscience: The foundations of human and animal emotions. New York, NY: Oxford University Press; 1998.

- Panksepp J. Emotions as natural kinds within the mammalian brain. In: Lewis M, Haviland-Jones JM, editors. Handbook of emotions. 2nd ed. New York, NY: Guilford; 2000. pp. 137–156.

- Place UT. Is consciousness a brain process? British Journal of Psychology. 1956;47:44–50. doi: 10.1111/j.2044-8295.1956.tb00560.x.

- Poldrack RA, Halchenko YO, Hanson SJ. Decoding the large-scale structure of brain function by classifying mental states across individuals. Psychological Science. 2009;20:1364–1372. doi: 10.1111/j.1467-9280.2009.02460.x.

- Posner J, Russell JA, Peterson BS. The circumplex model of affect: An integrative approach to affective neuroscience, cognitive development, and psychopathology. Development and Psychopathology. 2005;17:715–734. doi: 10.1017/S0954579405050340.

- Putnam H. Mind, language, and reality. Cambridge, UK: Cambridge University Press; 1975.

- Raichle ME, Snyder AZ. A default mode of brain function: A brief history of an evolving idea. NeuroImage. 2007;37:1083–1090. doi: 10.1016/j.neuroimage.2007.02.041.

- Reichenbach H. Experience and prediction. Chicago, IL: University of Chicago Press; 1938.

- Russell JA. Core affect and the psychological construction of emotion. Psychological Review. 2003;110:145–172. doi: 10.1037/0033-295x.110.1.145.

- Seager WE. Metaphysics and consciousness. London, UK: Routledge; 1991.

- Searle JR. The rediscovery of the mind. Cambridge, MA: MIT Press; 1992.

- Seeley WW, Menon V, Schatzberg AF, Keller J, Glover GH, Kenna H, et al. Dissociable intrinsic connectivity networks for salience processing and executive control. Journal of Neuroscience. 2007;27:2349. doi: 10.1523/JNEUROSCI.5587-06.2007.

- Smart JJC. Sensations and brain processes. Philosophical Review. 1959;68:141–156.

- Smith SM, Fox PT, Miller KL, Glahn DC, Fox PM, Mackay CE, et al. Correspondence of the brain’s functional architecture during activation and rest. Proceedings of the National Academy of Sciences of the United States of America. 2009;106:13040–13045. doi: 10.1073/pnas.0905267106.

- Spreng NR, Stevens WD, Chamberlain JP, Gilmore AW, Schacter DL. Default network activity, coupled with the frontoparietal control network, supports goal-directed cognition. NeuroImage. 2010;53:303–317. doi: 10.1016/j.neuroimage.2010.06.016.

- Sridharan D, Levitin DJ, Menon V. A critical role for the right fronto-insular cortex in switching between central-executive and default-mode networks. Proceedings of the National Academy of Sciences of the United States of America. 2008;105:12569–12574. doi: 10.1073/pnas.0800005105.

- Suvak MK, Barrett LF. The brain basis of PTSD: A psychological construction analysis. Journal of Traumatic Stress. 2011;24:3–24. doi: 10.1002/jts.20618.

- Taylor C. Mind-body identity, a side issue? Philosophical Review. 1967;76:201–213.

- Vazdarjanova A, McGaugh JL. Basolateral amygdala is not critical for cognitive memory of contextual fear conditioning. Proceedings of the National Academy of Sciences. 1998;95:15003–15007. doi: 10.1073/pnas.95.25.15003.

- Wilson CRE, Gaffan D, Browning PGF, Baxter MG. Functional localization within the prefrontal cortex: Missing the forest for the trees? Trends in Neurosciences. 2010;33:533–540. doi: 10.1016/j.tins.2010.08.001.

- Wilson-Mendenhall CD, Barrett LF, Simmons WK, Barsalou LW. Grounding emotion in situated conceptualization. Neuropsychologia. In press. doi: 10.1016/j.neuropsychologia.2010.12.032.