Abstract

Objective. To develop a formative assessment strategy for use in an online pharmacy orientation course that fosters student engagement with the course content and facilitates a manageable grading workload for the instructor.

Design. A formative assessment strategy involving student-generated, multiple-choice questions was developed for use in a high-enrollment, online course.

Assessment. Three primary outcomes were assessed: success of the assessment in effectively engaging students with the content, interrater reliability of the grading rubric, and instructor perception of grading workload. The project also evaluated whether this metacognitive strategy transferred to other aspects of the students' academic lives. The instructor perception was that the grading workload was manageable.

Conclusion. Using student-generated multiple-choice questions is an effective approach to assessment in an online course introducing students to and informing them about the profession of pharmacy.

Keywords: pharmacy education, online learning, assessment, multiple-choice questions, formative assessment, instructor workload, student perceptions

INTRODUCTION

Orientation to Pharmacy is an informational course taught entirely online to educate students about the role of pharmacists in consumer healthcare and as part of the healthcare team. Students enroll in this course for 2 primary reasons: interest in pursuing a career in pharmacy and thus a desire to learn more about the profession, or a scheduling need for 2 credits and/or an online course.

Developing formative and summative assessments appropriate for the learning goals and content has been difficult because this course is both introductory and informational. Several strategies for student assessment have been used over the 7-year history of this online course, including having students post responses to a discussion board for each topic, choose and critique a pharmacy-related article, take an instructor-created, multiple-choice examination, and journal on content-related topics. The writing strategies resulted in an unmanageable grading workload for instructors of this growing, high-enrollment course, which averages 120 students for each fall and spring offering. Instructor-generated, multiple-choice examinations resulted in too much memorization of detailed facts, which were often not necessarily of interest to the students or even central to understanding of the profession. The instructional team sought to develop formative and summative assessments that engaged students in the content and were both appropriate for the learning objectives and manageable for instructors to evaluate.

The idea that students require learning activities that foster active engagement with course material is not a new concept. It is a central tenet among educational scholars ranging from John Dewey to more contemporary voices such as David Jonassen. While this educational approach is generally accepted, implementing the strategies can be difficult. Multiple-choice examination questions are commonly used to assess learning.1 This assessment format offers many advantages, but the primary reasons many educators use this strategy are the ease and objectivity of grading, which are especially important for a high-enrollment course.1,2 Multiple-choice examination questions can be written in a way that assesses more than simple learning outcomes,1 but it is difficult to write high-quality, well-constructed multiple-choice questions that measure complex learning outcomes, especially in the large numbers often required for a course.3 Frequent updating of the questions requires much instructor time and effort and often results in inconsistent quality.3,4 Multiple-choice assessment approaches are often criticized for not facilitating active learning2,4 because they provide students with a list of choices rather than requiring them to actively identify the correct choice and explain or justify why it is best, as they would be required to do in real life.

Educational activities that engage learners in exploring content independently require additional instructor time and effort, which is not always feasible. Educational contexts that involve a large, diverse student enrollment and distance education present significant challenges. A context such as this requires not only engaging activities to capture and maintain student motivation, which is a primary determinant of student retention,5 but also a substantial amount of instructor guidance and feedback.5-11

Within formal educational structures, students have many opportunities to answer questions but few opportunities to pose them.12 In the sciences, inquiry is an especially important skill that instructors try to help their students develop, yet students are rarely engaged in this activity due to the instructor workload challenges.13 The argument that students must apply, practice, and struggle with the material in a way that is purposeful, situated, and collaborative is supported in many theorists’ works, but these theoretical frameworks require complex learning experiences (coupled with necessary learner scaffolding) that engage both cognitive and affective domains to facilitate meaningful learning and knowledge transfer.

Assessing learning in high-enrollment courses continues to be a significant challenge for the educational community because of the difficulty of achieving a reasonable balance between providing experiences that foster student exploration of content and maintaining a manageable instructor workload. Using student-generated questions as a formative assessment is one potential approach to achieving this balance.3,13-20

DESIGN

This study evaluated the use of student-generated, multiple-choice questions as a formative assessment strategy in a high-enrollment, online course. Three primary outcomes were assessed: success of formative assessment to facilitate meaningful student engagement with the content, interrater reliability of the grading rubric, and instructor perception of grading workload. The project explored the transfer of this metacognitive strategy to other aspects of the students’ lives; specifically, did this formative assessment impact student ability to self-assess learning progress and think critically? This learning objective was addressed through a student survey in which students were asked to comment on any influence the experience of creating multiple-choice questions may have had on their learning strategies for other courses.

Course Structure

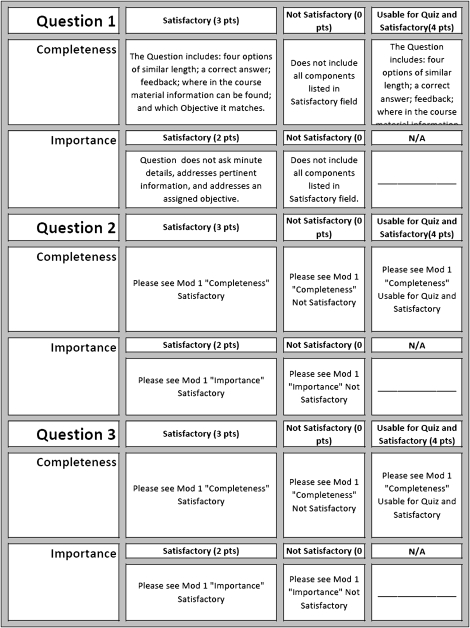

In this online course, content is divided into 3 sections. The first focuses on the practice philosophy of pharmacy and basic information about the structure of the health care system with respect to prescription drug pricing and insurance. The second section addresses the diverse range of practice settings and career opportunities for pharmacists. The third section is about pharmacy professional education and postgraduate education and training options.

For each section, students were asked to create multiple-choice questions that measure the desired learning objectives for that section. After questions were graded by the instructional team using the grading rubric (Figure 1), well-crafted, student-generated questions were chosen by the course director and used as the summative assessment for each section. Only questions that received a total score of 6 on the grading rubric (4 points for completeness and 2 points for importance) were eligible for inclusion in the section quiz. Students’ final course grades were determined by their scores on the 3 student-generated, multiple-choice question assignments and on the 3 section quizzes composed of student-generated questions selected by the course director. Students who wrote questions appropriate for use in the quiz received 1 extra-credit point for each well-crafted question. Summative quizzes for sections 1 and 3 were composed of 15 student-generated questions selected by the course director. The quiz for section 2, which is larger, included 20 questions.

For ease of scoring, the grading rubric was an all-or-nothing point allocation, in which no partial credit was awarded. To qualify for points for the assignment, the multiple-choice quiz questions had to be turned in on time and written to specifications, including all required components. These specifications were explained to students at the beginning of the course in the online orientation, the syllabus, and the assignment directions. The grading rubric was available to students at the start of the course. Students also had an opportunity to earn extra credit by interviewing a pharmacist and reporting on what they learned from the interview. This opportunity allowed for additional personal exploration as well as extra points if needed to compensate for incomplete section assignments.
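The all-or-nothing scoring and quiz-eligibility rule described above can be illustrated with a minimal Python sketch. The rubric itself (Figure 1) is not reproduced in the text, so the names `QuestionReview`, `score_question`, and `quiz_eligible` are hypothetical, and the sketch assumes that each criterion is scored independently and that a late submission earns no points.

```python
from dataclasses import dataclass

# Point values stated in the text: 4 for completeness, 2 for importance.
COMPLETENESS_POINTS = 4
IMPORTANCE_POINTS = 2
FULL_SCORE = COMPLETENESS_POINTS + IMPORTANCE_POINTS  # 6


@dataclass
class QuestionReview:
    """Hypothetical record of a rater's yes/no judgments for one question."""
    on_time: bool
    complete: bool   # all required components present
    important: bool  # tied to a meaningful learning objective


def score_question(review: QuestionReview) -> int:
    """All-or-nothing scoring: each criterion earns full points or none."""
    if not review.on_time:
        return 0  # assumption: late work earns no points
    points = 0
    if review.complete:
        points += COMPLETENESS_POINTS
    if review.important:
        points += IMPORTANCE_POINTS
    return points


def quiz_eligible(review: QuestionReview) -> bool:
    """Only questions with the full score of 6 may be used on a section quiz."""
    return score_question(review) == FULL_SCORE


if __name__ == "__main__":
    example = QuestionReview(on_time=True, complete=True, important=False)
    print(score_question(example), quiz_eligible(example))
```

Because no partial credit exists within a criterion, a rater only has to make yes/no decisions, which is one plausible reason the rubric was quick to apply.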

Figure 1.

Assignment grading rubric for an online introduction to pharmacy course.

EVALUATION AND ASSESSMENT

This study included a “triangulation of data sources and analytical perspectives to increase the accuracy and credibility of finding.”21 Multiple sources of quantitative and qualitative data were collected to provide a greater understanding of the data generated by the questions.22-24

Data Analysis

Descriptive statistics of student-ranked survey responses were collated and reported. Repeated measures ANOVA, with score as the dependent variable and rater as the independent variable, was used to test interrater reliability of the grading rubric. Ten randomly selected student assignments were evaluated by each rater using the grading rubric, and agreement across raters was assessed.

A survey was developed using guidelines described by Gaddis25 and Dillman, Tortora, and Bowker.26 Following the initial survey development, a health-care professional student completed the survey using the “think aloud” approach, wherein the student took the survey in the presence of an investigator, describing what she thought each item in the survey was asking, what she was thinking as she responded, and any difficulty she was having completing the survey. Based on these comments, the survey instrument was revised, pilot tested with 5 students not involved in the study, and modified accordingly. The survey instrument contained 7 ranked questions and 6 open-ended questions. The consent form and survey instrument were delivered at the end of the semester by means of a hypertext link within an e-mail invitation generated using SurveyMonkey. Students were assured that responses were anonymous. One reminder notification to complete the survey was sent out 1 week following the original invitation.

Content analysis of both the open-ended survey responses and focus-group session was conducted using the Classic Analysis Strategy, a constant comparison-like approach27 to reveal what participants said within their groups.28 Student comments were first reviewed for emerging themes by the 2 investigators; after several passes over the comments, themes were combined when appropriate and compared for internal consistency. This study was deemed exempt from review by the University of Minnesota Institutional Review Board.

Eighty-nine of the 136 students in the class responded to the survey, for a 65% response rate. Demographic characteristics of respondents are reported in Table 1. Respondents are primarily 18 to 20 years of age (70.8%), female (60.7%), and in their freshman year (40.4%). Fifty-two (58.4%) students reported their majors to be in the sciences (eg, biology, biochemistry, chemistry, and neuroscience). The majority (73%) of all enrolled students received an A for the course, and most received an A on the 3 multiple-choice question-writing assignments (assignment 1, 86%; assignment 2, 92%; assignment 3, 85%).

Table 1.

Demographics of Students in an Online Orientation to Pharmacy Course, N = 89

| Variable | No. (%) |
| --- | --- |
| Age, y | |
| Less than 18 | 0 |
| 18-20 | 63 (70.8) |
| 21-25 | 18 (20.2) |
| 26-30 | 6 (6.7) |
| 31-40 | 1 (1.1) |
| 41 and older | 1 (1.1) |
| Gender | |
| Male | 34 (38.2) |
| Female | 54 (60.7) |
| Other | 1 (1.1) |
| Year in school | |
| Freshman | 36 (40.4) |
| Sophomore | 26 (29.2) |
| Junior | 12 (13.5) |
| Senior | 10 (11.2) |
| Graduate/professional | 1 (1.1) |
| PSEO | 2 (2.2) |
| Other | 2 (2.2) |
| Ethnicity | |
| African-American | 8 (9.0) |
| American Indian or Alaskan Native | 2 (2.2) |
| Asian or Pacific Islander | 15 (16.9) |
| Chicano/Latino/Hispanic | 3 (3.4) |
| Caucasian | 56 (62.9) |
| Other | 6 (6.7) |
| English as first language | |
| Yes | 66 (74.2) |

PSEO = Post Secondary Enrollment Options

A one-way repeated measures ANOVA with rater as the independent variable and score as the dependent variable yielded a nonsignificant result (P = 0.17), indicating no significant scoring differences across the raters. The 3 raters played different roles in the course: rater 1 was a pharmacist and course director, rater 2 was a nonpharmacist not formally involved in the course, and rater 3 was a nonpharmacist and course coordinator. The results of the 3 independent raters grading 10 randomly selected student assignments are provided in Table 2; the total points possible from each rater on each assignment was 15.

Table 3 describes student responses to the 7 ranked statements relating to the activity of writing multiple-choice questions. Regarding writing their own multiple-choice questions, students agreed or strongly agreed that it was a meaningful learning activity (89.9%), allowed for personalized learning (88.8%), helped the students learn more about pharmacy (87.6%), helped engage them in the learning materials (94.4%), helped their performance on quizzes (83.1%), and was a reasonable course expectation (91.0%). Students disagreed or strongly disagreed that the activity created a barrier to learning (73.0%).

Table 2.

Results of Three Independent Raters Grading 10 Randomly Selected Student Assignments From an Online Introduction to Pharmacy Course

| Student | Rater 1 | Rater 2 | Rater 3 |
| --- | --- | --- | --- |
| 1 | 6 | 6 | 6 |
| 2 | 15 | 15 | 15 |
| 3 | 15 | 15 | 15 |
| 4 | 15 | 15 | 15 |
| 5 | 15 | 15 | 15 |
| 6 | 15 | 15 | 15 |
| 7 | 6 | 0 | 4 |
| 8 | 15 | 10 | 15 |
| 9 | 12 | 12 | 12 |
| 10 | 15 | 15 | 15 |

Total points possible per assignment was 15.
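As an illustration of the interrater analysis, the following Python sketch computes a one-way repeated measures ANOVA F statistic from the Table 2 scores, treating students as subjects and raters as the repeated factor. It is a sketch under stated assumptions, not the authors' analysis: the function names are hypothetical, no sphericity correction is applied, and the closed-form P value used here holds only when the numerator has 2 degrees of freedom (3 raters). Because the article does not state which software or correction was used, the uncorrected value from this sketch need not equal the reported P = 0.17.

```python
from statistics import mean

# Table 2 scores: one row per student, columns are raters 1, 2, and 3.
scores = [
    (6, 6, 6), (15, 15, 15), (15, 15, 15), (15, 15, 15), (15, 15, 15),
    (15, 15, 15), (6, 0, 4), (15, 10, 15), (12, 12, 12), (15, 15, 15),
]


def repeated_measures_anova(rows):
    """Return (F, df_rater, df_error) for a one-way repeated measures design."""
    n = len(rows)        # number of subjects (students)
    k = len(rows[0])     # number of repeated conditions (raters)
    grand = mean(x for row in rows for x in row)
    rater_means = [mean(row[j] for row in rows) for j in range(k)]
    subject_means = [mean(row) for row in rows]

    ss_total = sum((x - grand) ** 2 for row in rows for x in row)
    ss_rater = n * sum((m - grand) ** 2 for m in rater_means)
    ss_subject = k * sum((m - grand) ** 2 for m in subject_means)
    ss_error = ss_total - ss_rater - ss_subject  # rater-by-subject residual

    df_rater = k - 1
    df_error = (n - 1) * (k - 1)
    f_stat = (ss_rater / df_rater) / (ss_error / df_error)
    return f_stat, df_rater, df_error


def p_value_two_numerator_df(f_stat, df_error):
    """Upper-tail P for an F(2, df_error) statistic (valid only for 2 numerator df)."""
    return (1 + 2 * f_stat / df_error) ** (-df_error / 2)


if __name__ == "__main__":
    f_stat, df_rater, df_error = repeated_measures_anova(scores)
    p_uncorrected = p_value_two_numerator_df(f_stat, df_error)
    print(f"F({df_rater}, {df_error}) = {f_stat:.3f}, uncorrected P = {p_uncorrected:.3f}")
```

Either way, the conclusion is the same as in the text: the rater effect is not statistically significant at the conventional 0.05 level.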

Table 3.

Student Perceptions on Writing Multiple-Choice Questions

| Statement | Strongly Disagree | Disagree | Agree | Strongly Agree |
| --- | --- | --- | --- | --- |
| Writing my own multiple-choice questions is a meaningful learning activity | 4 (4.5) | 5 (5.6) | 46 (51.7) | 34 (38.2) |
| Writing my own multiple-choice questions allows me to personalize my learning | 4 (4.5) | 6 (6.7) | 47 (52.8) | 32 (36.0) |
| Writing my own multiple-choice questions helps me learn more about pharmacy | 3 (3.4) | 8 (9.0) | 48 (53.9) | 30 (33.7) |
| Writing my own multiple-choice questions helps me engage in the learning materials | 1 (1.1) | 4 (4.5) | 45 (50.6) | 39 (43.8) |
| Writing my own multiple-choice questions creates a barrier to my learning | 19 (21.3) | 46 (51.7) | 18 (20.2) | 6 (6.7) |
| Writing my own multiple-choice questions helps my performance on the quizzes | 2 (2.2) | 13 (14.6) | 47 (52.8) | 27 (30.3) |
| Being asked to write my own multiple-choice questions is a reasonable expectation | 2 (2.2) | 6 (6.7) | 46 (51.7) | 35 (39.3) |
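The combined agreement percentages quoted in the text can be recomputed from the Table 3 counts. The short Python sketch below is illustrative only; the shortened statement labels and the function names are hypothetical, and the counts are copied from Table 3 in the order strongly disagree, disagree, agree, strongly agree.

```python
# Counts from Table 3: (strongly disagree, disagree, agree, strongly agree).
responses = {
    "meaningful learning activity": (4, 5, 46, 34),
    "personalize my learning": (4, 6, 47, 32),
    "learn more about pharmacy": (3, 8, 48, 30),
    "engage in the learning materials": (1, 4, 45, 39),
    "barrier to my learning": (19, 46, 18, 6),
    "performance on the quizzes": (2, 13, 47, 27),
    "reasonable expectation": (2, 6, 46, 35),
}


def percent(part, total):
    """Percentage of respondents, rounded to one decimal place."""
    return round(100 * part / total, 1)


def summarize(counts):
    """Collapse the 4-point scale into combined disagree and agree percentages."""
    strongly_disagree, disagree, agree, strongly_agree = counts
    total = sum(counts)
    return {
        "n": total,
        "disagree_pct": percent(strongly_disagree + disagree, total),
        "agree_pct": percent(agree + strongly_agree, total),
    }


if __name__ == "__main__":
    for label, counts in responses.items():
        print(label, summarize(counts))
```

Each row sums to 89, the number of survey respondents, so the percentages in the text and in Table 3 share the same denominator.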

All student responses to the open-ended questions were first evaluated for emerging themes by 2 independent reviewers. After several passes over the responses, 5 emerging themes were identified and used for coding.

Theme 1: Student-generated questions resulted in greater time and depth of content engagement. Students stated that having to create multiple-choice questions required them to more thoroughly study and understand the course materials. Interestingly, students stated that without this assignment, they would have only superficially reviewed the course materials. Based on their statements, students were surprised that learning objectives were the organizing format for the course material and that these objectives determined examination items. Students reported that this knowledge helped them study for examinations and that the formative assessment improved their test-taking skills.

Theme 2: Student-generated questions fostered personal exploration of content. Students reported that the formative assessment facilitated a more personal engagement with the course material. They reported that they were able to focus on areas of the course that interested them and that taking on the role of “instructor” changed the context of the assignment for them. Students stated that they have continued to use this strategy in other courses as a self-assessment or metacognitive tool, suggesting that perhaps these students had not been reflecting on their own learning in this way prior to taking the course.

Theme 3: Creating multiple-choice questions was more difficult than anticipated. Creating multiple-choice questions, formulating a correct answer and 3 distractors, and justifying why each option was correct or incorrect proved to be a challenging activity for students. As demonstrated in Theme 2, this activity enhanced student engagement with the course materials and also led to an appreciation for the time and effort it takes instructors to create an examination. Students seemed to realize that it is easy to criticize examinations but difficult to construct a better alternative.

Theme 4: Student-generated questions led to better understanding of the pharmacy profession/dispelling of pharmacy myths. Students seemed to gain a better understanding and appreciation of the profession of pharmacy as a result of this class, which is its primary learning objective. Student responses suggest that having to create their own multiple-choice questions facilitated engagement and exploration of the areas of pharmacy that either interested the students most and/or were most difficult for them to understand.

Theme 5: Student-generated questions negatively impacted learning for some students. Although most students found this formative assignment to be beneficial, 14 (15.7%) respondents made negative comments regarding the student-generated question assignment. About half of these students said the assignment negatively impacted their learning, explaining that instead of engaging more with the content, they focused on finding details around which they could construct questions. One student was clearly angry about having points deducted for not following the question-writing guidelines.

Student Suggestions for Improving the Assignment. The instructional team solicited feedback from students regarding how to improve the question-generating assignment. The 14 students who made negative comments about the assignment suggested it be removed from the course or that partial credit and/or resubmission of the assignment with revisions be allowed. There were also several positive comments expressing appreciation for the activity and suggesting that the instructors continue to use this strategy. Other suggestions were to require more questions for each section of the course, incorporate peer review into the activity, and give students an opportunity to submit a practice question prior to the due date for the first section assignment. The purpose of this practice question would be to generate feedback concerning completeness and it would not be graded.

Focus Group. The 3 independent raters participated in a focus group session to discuss the rater perspective of this student-generated multiple-choice activity and to provide feedback on the effectiveness of the grading rubric and workload. The raters all agreed that the activity was an effective strategy for engaging students in the content and that it was in line with the course objectives. They also agreed that the rubric was easy to use, streamlined the grading, and provided objective feedback to students. All raters stated that the grading workload was manageable, estimating that each spent approximately 3 to 4 hours grading each of the 3 assignments. With each rater grading approximately 45 assignments for each of the 3 sections, the total estimated grading time per rater was 9 to 12 hours.
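The workload estimate can be checked with simple arithmetic. The Python sketch below assumes that the 136 enrolled students were split evenly among the 3 raters, which the text implies but does not state; the derived minutes per assignment is an illustration, not a figure reported by the raters.

```python
# Figures taken from the text; the even split across raters is an assumption.
enrolled_students = 136
raters = 3
assignments_per_student = 3
hours_per_assignment_round = (3, 4)  # low and high estimates per rater

# Assignments each rater grades per section if the load is shared equally.
per_rater_load = enrolled_students / raters

# Total hours per rater across all 3 assignment rounds (low, high).
total_hours = tuple(h * assignments_per_student for h in hours_per_assignment_round)

# Implied grading time per submitted assignment, in minutes (low, high).
minutes_per_submission = tuple(
    round(h * 60 / per_rater_load, 1) for h in hours_per_assignment_round
)

if __name__ == "__main__":
    print(f"Assignments per rater per section: about {per_rater_load:.0f}")
    print(f"Total hours per rater: {total_hours[0]} to {total_hours[1]}")
    print(f"Minutes per assignment: {minutes_per_submission[0]} to {minutes_per_submission[1]}")
```

Under these assumptions each submission takes only a few minutes to grade, which is consistent with the raters' view that the rubric streamlined grading.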

The primary problem with the rubric was that inaccurate multiple-choice quiz questions could potentially receive full credit, provided the directions were followed. Even though these questions would not be chosen by the course director for the summative quiz, there was a potential for the students who submitted them to think they understood a concept when, in fact, they did not. The raters noted that this issue should be addressed even though it rarely occurs and the problems with the questions are minor. One suggestion emerging from the focus group was to post a summary statement to the whole class at the end of grading, clarifying any misconceptions that were noted in the submitted assignments. This approach would provide students an additional source of feedback from the course team.

DISCUSSION

Implementing student-generated, multiple-choice questions is an appropriate and effective strategy for an informational introductory online course designed to inform students about the profession of pharmacy. The raters found that use of this formative assessment fosters a deeper engagement with the course content for most students. The grading rubric proved easy to use and resulted in similar scores, even though the raters were diverse with respect to pharmacy knowledge and involvement with the course. The raters stated that the grading workload was manageable, and based on student feedback, the course appeared to achieve its educational goals of informing future pharmacists about opportunities within the profession and educating the general student population about the role of the pharmacist in meeting their health care needs. This formative assessment seemed to have a positive impact on some students’ ability to assess their own learning progress and think critically.

This study's findings are consistent with those of other reports in the literature about student-generated questions as a formative assessment tool. Overall, the literature supports the conclusion that implementing student-generated questions is conducive to students having more meaningful and personal engagement with the course content.3,13-20 Practice with inquiry builds metacognitive skills and independence with respect to learning as well as a sense of confidence with successfully mastering the learning goals of the course.3,13,14,16-20,29 However, inquiry alone is not optimal. Having to provide the correct answer and explain why it is the best choice extends learning in a way that cannot be reproduced by simply posing questions or answering instructor questions.4,30 Student responses in this study suggest that having to provide an explanation for the correct answer and why other options are incorrect resulted in a greater depth of learning.

In other studies assessing student perceptions regarding student-generated questions as a learning strategy, most students reported that the activity enhanced their learning14,16,17 and that posing questions is a challenging activity.2,17 A body of research indicates that student perceptions of efficacy are not necessarily tied to increased effort or learning outcome, so tying analysis to student perceptions has its limitations.31 Nonetheless, many students in this study and in other similar studies reported that this strategy positively impacted their learning, and the mechanism through which this improved learning could occur is supported in cognitive science.

Instructors who intend to incorporate this strategy into a course should remember that students need guidance with this strategy. Thorough instructions, specific examples, instructor feedback, and prompts have all been shown to be effective and necessary components in the successful use of this strategy.14,19 In this study, the instructional team required students to link each student-generated, multiple-choice question to a learning objective to avoid the potential for students to focus on irrelevant detail.32

Because it is important for students to practice formulating questions, Web-based tools have been developed to facilitate peer evaluation and grading.14,16,17,20 Peer assessment was one of the suggestions for improvement received from this study's student survey. The authors are exploring ways for students to share questions for peer review while still maintaining the integrity of the assignment. Given the overall risk of potential cheating (eg, students providing multiple-choice question assignments to a roommate taking the class a semester later), students are now required to link multiple-choice questions to assigned learning objectives rather than being allowed to choose which learning objectives to focus on. This requirement does not completely remove the risk of cheating, since a roommate could be assigned the same learning objectives, but it adds another layer of challenge.

Instructors can use student-generated questions to assess student understanding, make changes to content, and/or clarify or emphasize concepts to the class.20 The raters stated that they did not use this learning activity to make changes in the course content, but could see the potential for that to occur. This kind of instructional feedback is probably less relevant here because of the informational nature of the course content, but the utility of this activity for assisting instructors with course development and revisions is apparent. If this strategy were to be implemented in the professional pharmacy curriculum, misconceptions could be detected and addressed quickly through this formative assessment tool.

Student perceptions of this learning activity were not universally positive. A subset of students felt that the strategy negatively impacted their learning. Students’ statements indicated anger about the lack of partial credit, the inability to resubmit with revisions, the inability to submit an assignment late for partial credit, and the perception that this strategy did not fairly measure comprehension of the course material. Students are provided with explicit instructions, specific examples, and the grading rubric prior to submitting the first assignment. Instructors have found that the students who contacted the course team after receiving a low grade had submitted the assignment at the last possible moment and, based on a review of the course tracking, also had spent a small amount of time on the course Web site. While the instructional team does not want to dismiss the negative comments, they noted a potential connection between lack of planning and effort and dissatisfaction with the activity. Comments about the activity resulting in a decreased depth of engagement are of greater concern. A few students stated that the activity led them to focus on only a few specific items needed to complete the assignment, resulting in a more superficial understanding than if they had been asked to complete instructor-generated activities. Although these comments are in contrast to those of the majority, they are nonetheless a matter of concern. Because the objective is for all students to engage fully and to develop a meaningful understanding of the material in the course, the instructional team is exploring and attempting to address this issue further.

This study has some limitations. While the implementation of student-generated, multiple-choice questions has been largely successful in this undergraduate informational course, there are problems with this approach. One issue identified by the raters was the occurrence of inaccurately phrased and/or low-quality questions. There is debate in the educational literature about requiring high-quality questions from students, since many instructors have difficulty consistently creating high-quality examination questions.2,3 The course instructors, however, argue that the process of generating questions, providing explanations for both correct and incorrect options, and tying the question to a course learning objective was a sufficient and effective strategy to help students achieve the course learning goals. Only the questions that earned a score of 6 were eligible for inclusion in the section quiz, and in order to receive a score of 6, the question had to meet the completeness and importance criteria and also be accurate.

Lack of personalized feedback provided to students was another limitation of this study. The raters felt that the standardized rubric was effective in providing objective feedback, but subjective feedback, which the educational literature lists as essential for successfully expanding learning, was rarely given. Providing subjective, personalized feedback in this high-enrollment course would be unmanageable from an instructional team perspective and is not necessary for the course type and audience. However, if this strategy were to be implemented in a doctor of pharmacy course, it would be necessary to provide subjective feedback through Web-based tools, peer review, or both.

Sixty-five percent of students responded to the survey, which is a high response rate for an online survey. Even though about a third of students did not respond, the authors are confident that the survey data, which were mostly positive but included 14 negative responses, are an accurate representation of the range of student experiences.

CONCLUSION

Student-generated, multiple-choice questions are an appropriate and effective formative assessment in an online informational course in which the primary learning goal is to inform students about the profession of pharmacy. The educational literature supports the use of this learning activity to foster personal engagement with course content, metacognitive skills, and independent ownership of the learning experience. The pharmacy education community may benefit from experimenting with this strategy and potentially investing in one of the many Web-based tools that facilitate feedback and peer review of student-generated questions.

ACKNOWLEDGEMENTS

The authors recognize the expert editing assistance of Amy LimBybliw, MA, in preparing this manuscript.

REFERENCES

1. Gronlund NE, Linn RL. Measurement and Evaluation in Teaching. 6th ed. New York: MacMillan; 1990. Constructing objective test items: multiple-choice forms; pp. 166–191.
2. Fellenz MR. Using assessment to support higher level learning: the multiple choice item development assignment. Assess Eval High Educ. 2004;29(6):703–719.
3. Nicol D. E-assessment by design: using multiple-choice tests to good effect. J Furth High Educ. 2007;31(1):53–64.
4. Arthur N. Using student-generated assessment items to enhance teamwork, feedback and the learning process. Synergy. 2006;24:21–23.
5. Pittenger AL, Doering AH. Influence of motivational design on completion rates in online self-study pharmacy-content courses. Dist Educ. 2010;31(3):275–293.
6. Merrill MD. First principles of instruction. Educ Technol Res Dev. 2002;50(3):43–59.
7. van Gelder T. Teaching critical thinking: some lessons from cognitive science. Coll Teach. 2005;53(1):41–46.
8. Rea RV, Hodder DP. Improving a field school curriculum using modularized lessons and authentic case-based learning. J Nat Resour Life Sci Educ. 2007;36:11–18.
9. Bransford JD, Brown AL, Cocking RR, editors. How People Learn: Brain, Mind, Experience, and School (expanded edition). Washington: National Academy Press; 2000.
10. van Merriënboer JJG. Alternate models of instructional design: holistic design approaches and complex learning. In: Reiser RA, Dempsey JV, editors. Trends and Issues in Instructional Design and Technology. Upper Saddle River, New Jersey: Pearson Education, Inc; 2007. pp. 72–81.
11. Halpern DF. Teaching critical thinking for transfer across domains: dispositions, skills, structure training, and metacognitive monitoring. Am Psychol. 1998;53(4):449–455. doi: 10.1037//0003-066x.53.4.449.
12. Dori YJ, Herscovitz O. Question-posing capability as an alternative evaluation method: analysis of an environmental case study. J Res Sci Teach. 1999;36(4):411–430.
13. Marbach-Ad G, Sokolove PG. Can undergraduate biology students learn to ask higher level questions? J Res Sci Teach. 2000;37(8):854–870.
14. Yu FY. Scaffolding student-generated questions: design and development of a customizable online learning system. Comp Hum Behav. 2009;25(5):1129–1138.
15. Balajthy E. Using student-constructed questions to encourage active reading. J Read. 1984;27(5):408–411.
16. Barak M, Rafaeli S. On-line question-posing and peer-assessment as means for web-based knowledge sharing in learning. Int J Hum Comput Stud. 2004;61(1):84–103.
17. Yu FY, Liu YH, Chan TW. A web-based learning system for question-posing and peer assessment. Innov Educ Teach Int. 2005;42(4):337–348.
18. Taylor LK, Alber SR, Walker DW. The comparative effects of a modified self-questioning strategy and story mapping on the reading comprehension of elementary students with learning disabilities. J Behav Educ. 2002;11(2):69–87.
19. Rash AM. An alternative method of assessment: using student-created problems. Primus. 1997;7(1):89–95.
20. Denny P, Hamer J, Luxton-Reilly A, Purchase H. PeerWise: students sharing their multiple choice questions. Proceedings of the Fourth International Workshop on Computing Education Research. 2008:51–58.
21. Patton MQ. Qualitative Research and Evaluation Methods. 3rd ed. Thousand Oaks, CA: Sage Publications; 2002.
22. Creswell JW. Research Design: Qualitative, Quantitative, and Mixed Methods Approaches. 3rd ed. Thousand Oaks, CA: Sage Publications; 2009.

- 23.Creswell JW. Mapping the developing landscape of mixed methods research. In: Tashakkori A, Teddlie CB, editors. Handbook of Mixed Methods in Social & Behavior Research. Thousand Oaks, CA: Sage Publications; 2010. pp. 45–68. [Google Scholar]

- 24.Johnson RB, Onwuegbuzie AJ. Mixed methods research: a research paradigm whose time has come. Educ Res. 2004;33(7):14–26. [Google Scholar]

- 25.Gaddis SE. How to design online surveys. Train Dev. 1998;52(6):67–71. [Google Scholar]

- 26.Dillman DA, Tortora RD, Bowker D. Principles for Constructing Web Surveys. Pullman, Washington: SESRC Technical Report; 1998. pp. 98–50. http://www.sesrc.wsu.edu/dillman/papers/1998/principlesforconstructingwebsurveys.pdf. Accessed May 24, 2011. [Google Scholar]

- 27.Krueger RA, Casey MA. Focus Groups: A Practical Guide for Applied Research. 4th ed. Thousand Oaks, CA: Sage Publishing, Inc; 2009. [Google Scholar]

- 28.Paulus TM. Challenge or connect? dialogue in online learning environments. J Comput High Educ. 2006;18(1):3–29. [Google Scholar]

- 29.Shodell M. The question-driven classroom: student questions as course curriculum in biology. Am Biol Teach. 1995;57(5):278–281. [Google Scholar]

- 30.Draper S. Having students design research questions. 2008 http://www.psy.gla.ac.uk/∼steve/ilig/studdesign.html. Accessed May 1, 2011. [Google Scholar]

- 31.Clark RE. Antagonism between and enjoyment in ATI studies. Educ Psychol. 1982;17(2):92–101. [Google Scholar]

- 32.Lehman JR, Lehman KM. The relative effects of experimenter and subject generated questions on learning from museum case exhibits. J Res Sci Teach. 1984;21(9):932–935. [Google Scholar]