Abstract

Purpose: This work describes a spatially variant mixture model constrained by a Markov random field to model high angular resolution diffusion imaging (HARDI) data. Mixture models suit HARDI well because the attenuation by diffusion is inherently a mixture. The goal is to create a general model that can be used in different applications. This study focuses on image denoising and segmentation (primarily the former).

Methods: HARDI signal attenuation data are used to train a Gaussian mixture model in which the mean vectors and covariance matrices are assumed to be independent of spatial locations, whereas the mixture weights are allowed to vary at different lattice positions. Spatial smoothness of the data is ensured by imposing a Markov random field prior on the mixture weights. The model is trained in an unsupervised fashion using the expectation maximization algorithm. The number of mixture components is determined using the minimum message length criterion from information theory. Once the model has been trained, it can be fitted to a noisy diffusion MRI volume by maximizing the posterior probability of the underlying noiseless data in a Bayesian framework, recovering a denoised version of the image. Moreover, the fitted probability maps of the mixture components can be used as features for posterior image segmentation.

Results: The model-based denoising algorithm proposed here was compared on real data with three other approaches that are commonly used in the literature: Gaussian filtering, anisotropic diffusion, and Rician-adapted nonlocal means. The comparison shows that, at low signal-to-noise ratio, when these methods falter, our algorithm considerably outperforms them. When tractography is performed on the model-fitted data rather than on the noisy measurements, the quality of the output improves substantially. Finally, ventricle and caudate nucleus segmentation experiments also show the potential usefulness of the mixture probability maps for classification tasks.

Conclusions: The presented spatially variant mixture model for diffusion MRI provides excellent denoising results at low signal-to-noise ratios. This makes it possible to restore data acquired with a fast (i.e., noisy) pulse sequence to acceptable noise levels. This is the case in diffusion MRI, where a large number of diffusion-weighted volumes have to be acquired under clinical time constraints.

Keywords: HARDI, mixture model, Markov random field, denoising, expectation maximization

INTRODUCTION

Background: diffusion MRI

Diffusion-weighted magnetic resonance imaging (DW-MRI) is the only technique that can image axonal fiber tracts in the brain in a noninvasive manner. DW-MRI produces images of biological tissue weighted by the local characteristics of water diffusion. By comparing a baseline T2-MRI scan with the MRI signal acquired when a diffusion-sensitizing gradient is added to the magnetic field in a certain direction, the water diffusivity in that direction can be estimated. By repeating the procedure with different gradient directions, it is possible to reconstruct a diffusion profile at each spatial location. Because water mainly diffuses along rather than across axonal fibers, the diffusivity information can be used to trace the neural tracts in the brain white matter.

The continuous diffusivity profile, defined on the unit sphere, can be reconstructed from discrete measurements in different ways. The simplest approach is diffusion tensor imaging (DTI),1, 2 in which a zero-mean Gaussian probability distribution function (PDF) is fitted to the data measurements. At least seven samples are required for each voxel: six for the unique elements of the covariance matrix and one for the baseline MRI signal. DTI has shown great utility in imaging the fiber bundles in the brain because the architecture of the tissue can be inferred from the eigenstructure of the tensor: the major eigenvector gives the mean fiber direction at each location, whereas the anisotropy is a marker of fiber density. However, DTI has the limitation that it can only resolve one fiber direction in a voxel. When fibers cross or bifurcate within the voxel, which happens very often because voxels are from 100 to 1000 times wider than fibers, the monomodal Gaussian distribution fails to capture the diffusion profile.3, 4

In order to resolve more complex fiber geometries, it is necessary to sample a higher number of directions. This approach is known as high angular resolution diffusion imaging (HARDI).4, 5, 6 Mathematical entities more complex than a Gaussian PDF can be fit to the measurements, overcoming the limitation that DTI uses a monomodal distribution. Among the most popular are spherical harmonics,7, 8 fourth-order tensors,9, 10 and mixture models.3, 11

From the HARDI data, the PDF of the average spin displacement of water or a variant of this function can be computed. Several approaches have been proposed in the literature: the PDF of the fiber orientation distribution,12 the diffusion orientation transform,13 the persistent angular structure of the PDF,14 and the orientation distribution function (ODF), which is the most popular. The ODF is the radial projection of the water diffusivity PDF, and is defined on a spherical shell. Much of the ongoing research in HARDI is focused on the computation of the ODF, because its maxima correspond to the most likely orientation of the underlying fibers, whereas the maxima of the raw attenuation data do not. In a first approach, Tuch15 used a numerical implementation of the Funk–Radon transform to approximate the ODF from the HARDI measurements. More recent work from different groups16, 17, 18 has converged to computing the Funk–Radon transform analytically from the spherical harmonic expansion of the HARDI data.

If the attenuation by diffusion of a scan (which depends on the pulse sequence and its parameter settings) is small, the directional information of the data can be enhanced by spherical deconvolution.12 The ODF is assumed to be the convolution of a sharp fiber ODF (fODF) and a blurring kernel. Ideally, the fODF is zero for all directions except for those exactly corresponding to the orientation of fibers. The kernel can be estimated from highly anisotropic voxels, which are assumed to contain only one fiber population, and then inverted to compute the fODF from the ODF. It has been shown that using the fODF rather than the smoother ODF in fiber tracking can improve the results.19

Background: denoising of diffusion MRI

Denoising strategies are very attractive in HARDI because acquiring a high number of gradient images under time constraints limits the signal to noise ratio (SNR). Denoising methods from the natural or medical image processing literature can be extended to HARDI by denoising each channel (gradient or baseline volumes) separately or by considering the HARDI data a vector-valued function. However, these extensions suffer from the same limitations as the methods they are based on.

Specifically, traditional image denoising algorithms based on (local or nonlocal) spatial smoothing20, 21, 22, 23 improve the SNR but also cause a loss of fine structures and increase partial volume error at the interfaces between different types of tissue. Techniques based on wavelets24 preserve edges well but suffer from pseudo-Gibbs artifacts. This problem is solved by approaches based on variational calculus25, 26 and partial differential equations, but such methods still have the disadvantage that they cause loss of texture, leading to the well-known “staircase” effect. In general, all these methods perform satisfactorily when the noise level is moderate, but their limitations arise when the SNR decreases. Therefore, denoising of DW-MRI data remains an open, relevant problem, and algorithms that overcome the drawbacks of existing methods are necessary.

Contribution

In this study, we propose an approach to the DW-MRI denoising problem based on unsupervised learning. We take advantage of the relatively low variability in DW-MRI to learn the typical distribution of the data, which is fairly constant across subjects (except for cases with pathology). We then use this information to tailor a denoising method to DW-MRI and outperform the algorithms summarized in Sec. 1B.

We propose using a spatially variant Gaussian mixture model (GMM) to learn a relatively small dictionary of components that can accurately represent the diffusion data. GMMs, which have been successfully applied in many different problems in the literature, are very suitable to represent the signal attenuation of HARDI data, which is a weighted sum of contributions from different fiber populations. The number of components, whose predefinition is a limitation of GMMs in general, is automatically estimated using the minimum message length, a concept from information theory.

The mean and covariance of each component, which are learned from training data, are constant across space. This allows us to use a large number of different voxels to train the components. If the means and covariances were spatially variant, it would be impossible to estimate them for each single voxel unless strong assumptions were made (as in DTI, for example). Each mixture component will typically correspond to isotropic profiles or fibers in a certain orientation, with some flexibility provided by their covariances. The mixing weights are allowed to vary across space such that different components are represented in different regions. Then, a smoothness prior is placed on top of these spatially variant weights to exploit the fact that neighboring voxels tend to be similar. This constraint is very flexible because it does not force neighboring voxels to have similar values, only similar statistical distributions, which decreases the spatial blurring (which can lead to spurious fiber crossings).

The trained model can be fitted to a (noisy) test case by maximizing the posterior probability of the underlying noiseless data in a Bayesian framework. The output is a denoised version of the image. A by-product is the set of mixture weights for each voxel, which can be used in image segmentation.

To summarize, the main contributions of this study are: (1) unsupervised learning of diffusion MRI attenuation data with a spatially variant mixture model for DW-MRI-tailored denoising; (2) exploitation of spatial regularities by modeling the diffusion data as a field, rather than modeling the DW-MRI data at individual locations (as is typically done in the DW-MRI literature); and (3) application of a spatially variant GMM to denoising.

It is important to note that this article is an extension of our own preliminary work35 with the following differences: (1) we model the signal attenuation instead of the anisotropic diffusion coefficient, which is more principled; (2) the training stage has been improved; and (3) new experiments have been added, including fiber tracking, segmentation, and extended denoising experiments.

Further related literature

As explained above, mixture models in general suit DW-MRI very well because the attenuation by diffusion in a fiber crossing or bifurcation can be modeled as the sum of two or more mixture components. Therefore, they have been extensively used in the DW-MRI literature to model the diffusion signal for a given voxel. For example, Tuch et al.4 propose extending DTI to a mixture of ellipsoidal profiles. Assaf et al.31 use a mixture of hindered and restricted models of water diffusion. Behrens et al.11, 32 propose a partial volume model that mixes one isotropic (the background) and several anisotropic components (the fibers). A continuous mixture of diffusion tensors is proposed by Jian et al.33 The ODF is modeled as the convolution of the fODF and a smoother response function in a number of other studies.12, 17, 19, 34 However, these estimation methods do not exploit the relations between neighboring voxels; they model the data for a single spatial location. Another key difference with our proposed method is that the distribution of the HARDI data is assumed to follow a specific model (mixture of tensors, convolution of fODF and a constant kernel), whereas in our approach, the distributions are learned from training data using a generic model (a GMM).

Spatially variant GMMs have been used in natural image processing by Nikou et al.36 (extended by Sfikas et al.37) and Rivera et al.38 (the latter with some simplifications). These methods use the weights of the mixture components to segment the images. Peng et al.39 also applied a similar method to segmentation of conventional (i.e., nondiffusion-weighted) MRI data into three different types of tissue. As opposed to our proposed method, the data in these three studies are scalar fields, the number of mixture components is fixed rather than learned, and no denoising is performed. Martin-Fernandez et al.40 proposed a Gaussian Markov random field for simple denoising of DTI data, but their system cannot model multimodal distributions (and therefore HARDI). Finally, King et al.41 also described a system similar to ours in a general way, though without any mathematical formulation.

Outline

The rest of the paper is organized as follows. Section 2 describes the dataset utilized in the study. The image model, as well as the mechanisms to train it and to apply it to image denoising and segmentation, is described in Sec. 3. The proposed method is evaluated in Sec. 4. Finally, Sec. 5 includes the discussion.

MATERIALS

HARDI data from 100 different healthy subjects were acquired at the Center for Magnetic Resonance at the University of Queensland using a 4 Tesla Bruker Medspec scanner. A single-shot echo planar technique with a twice-refocused spin echo sequence was utilized. Ninety-four diffusion-sensitized gradient directions and 11 baseline images with no diffusion-sensitization were obtained for every subject. Imaging parameters were as follows: b-value = 1159 s/mm², TE/TR = 92.3/8259 ms, voxel size = 1.8 mm × 1.8 mm × 2.0 mm, and image size 128 × 128 × 55 voxels. The acquisition time was approximately 15 min. The SNR, using the mean square of the foreground as signal power and the square of the first peak in the histogram as noise power (estimator for Rice distribution), was on average 24 dB for the baselines and 15 dB for the diffusion-attenuated images. The 11 baseline images were merged down to a single estimate of the reference using a method tailored for Rician data,42 increasing the SNR by approximately 10 dB up to 34 dB. The reference image was used to segment the brain using the BET algorithm.43 The resulting mask was then applied to all the diffusion images to avoid including nonbrain matter in the model.

In order to evaluate denoising algorithms, it is necessary to have a ground truth that can be corrupted, subsequently restored, and finally compared with the original. Hardware or software phantoms can be used as ground truth, but they are not a realistic approximation of the brain white matter. Ideally, one could use very long acquisition sequences (e.g., 24 or 48 h) to obtain very high SNR data that could be used as ground truth. However, these acquisition protocols are usually limited to ex-vivo scenarios due to motion artifacts. In this study, the original 94D data are too noisy to be directly used as ground truth [see Fig. 1a]. Instead, we propose to trade some angular resolution for redundancy that can be used to obtain a cleaner (though angularly coarser) pseudoground truth as follows.

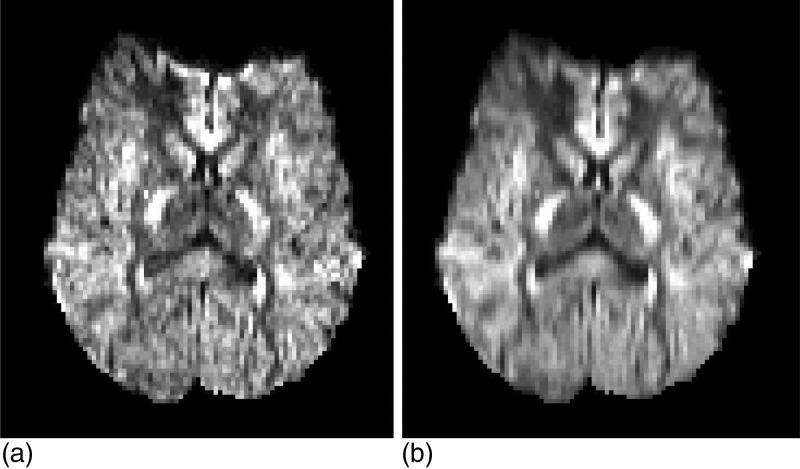

Figure 1.

Axial slice (i.e., parallel to the ground) of the gradient image corresponding to direction (x,y,z)=(0,1,0) before (a) and after (b) angular downsampling. The downsampled images are the cleanest data that could be generated and were thus used as ground truth (i.e., SNR assumed to be ∞). Note that there is no spatial smoothing in (b).

First, the 94D DW-MRI data at each spatial location are downsampled to a new set of directions using a Laplace–Beltrami regularized spherical harmonic expansion of order six44 (regularization coefficient λ=0.006). The fewer directions, the better the fit will be. On the other hand, the number of directions must remain high enough to be considered HARDI. We found 30 directions to be a good compromise. The 30 directions were computed with an electrostatic approach.45 Henceforth, the 94D data are discarded, and the downsampled, smoothed (in angular domain) 30D data are assumed to be the noiseless (SNR = ∞) ground truth [see Fig. 1b]. A secondary advantage of the angular downsampling is that it lightens the computational load of the algorithms (denoising a volume with the proposed approach takes 25 min, see Discussion).

To evaluate the usefulness of the mixture weights in image segmentation, the second author made (under supervision of an expert radiologist) annotations of two brain structures (left and right ventricles and caudate nuclei) on the baseline images, which have higher SNR than the directional data because they are not attenuated by diffusion. Moreover, 25 of the test images were reannotated by the same author to estimate the intrauser variability in the annotations. These annotations can be used to train a supervised pixel classifier and evaluate its performance.

Finally, the 100 DW-MRI scans were randomly divided into two groups of 50 for cross validation. The first group was used to estimate the parameters of the model and to train the classifier. The images in the second group were artificially corrupted with noise and subsequently denoised to assess the performance of the proposed method.

METHODS

In this section, we first describe the image model in Sec. 3A. Then, the steps to estimate the parameters of the model from the data are detailed in Sec. 3B. Finally, the process to denoise diffusion MRI data is described in Sec. 3C.

Image model

A HARDI volume consists of a set of MRI images corresponding to a baseline and a set of different gradient directions. Since the diffusion is estimated by comparing the gradient images with the baseline, having a reliable estimate of the latter is very important. For this reason, it is usual that multiple (four or five) instances of the baseline volume are acquired and merged down into a reference with little noise. If this reference is denoted by S0, the signal intensity Sk at a voxel location r when a gradient is applied in the direction (θk,ϕk) is given by the Stejskal–Tanner equation46

$$S_k(r) = S_0(r)\,e^{-b_k\,\mathrm{ADC}_k(r)} = S_0(r)\,x_k(r),$$

where θ∈[0,π] is the elevation, ϕ∈[0,2π] is the azimuth, ADCk(r)≥0 is the apparent diffusion coefficient, xk(r)∈[0,1] is the attenuation by diffusion, and bk is Le Bihan’s factor, which groups several physical parameters from the image acquisition. ADC={ADCk}, x={xk}, and S={Sk} are antipodally symmetric because diffusion MRI cannot distinguish the two directions of each orientation, and hence, S(θ,ϕ)=S(π-θ,ϕ±π).
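As a brief illustration of the Stejskal–Tanner relation, the following minimal sketch converts between signal intensities, attenuation, and ADC for a single gradient direction. The function names, the floor value, and the illustrative ADC are assumptions made for this example and are not part of the original study; only the b-value matches the acquisition described in Sec. 2.

```python
import math

def attenuation_from_adc(adc, b):
    """Attenuation x_k = exp(-b_k * ADC_k) for one gradient direction."""
    return math.exp(-b * adc)

def adc_from_signals(s_k, s_0, b, floor=1e-6):
    """Invert the relation: ADC_k = -ln(S_k / S_0) / b_k.

    The ratio is clamped to [floor, 1] so that noise cannot produce a
    negative ADC or the logarithm of a nonpositive number (the floor is a
    hypothetical safeguard chosen for this sketch).
    """
    x = min(max(s_k / s_0, floor), 1.0)
    return -math.log(x) / b

b = 1159.0     # s/mm^2, as in the acquisition protocol
adc = 0.7e-3   # mm^2/s, illustrative value only
s_0 = 1000.0   # arbitrary baseline intensity
s_k = s_0 * attenuation_from_adc(adc, b)
print(s_k / s_0, adc_from_signals(s_k, s_0, b))
```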

The attenuation by diffusion in a voxel can be modeled with a multicompartmental model4, 32

$$x_k(r) = w_0\,e^{-b_k \chi_0} + \sum_{f=1}^{N_F} w_f\,e^{-b_k\,\iota_k^t \mathbf{D}_f\,\iota_k}, \tag{1}$$

where NF is the number of fiber populations that coexist in the voxel, wf≥0 is the weight of each population, χ0 is the isotropic diffusivity, $\mathbf{D}_f$ is the diffusion tensor corresponding to each fiber population, and ιk is the unit vector in direction (θk,ϕk). The attenuation xk (and thus the signal Sk=S0xk) is hence a mixture of an isotropic component (the first term in Eq. 1) plus a number of fiber components (the second half of the equation).
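To make the multicompartmental model concrete, the sketch below simulates the attenuation of a voxel containing an isotropic compartment and two crossing fiber populations. It assumes a common form of the model (an isotropic exponential plus a weighted sum of tensor exponentials); the axially symmetric tensors, the diffusivity values, and all function names are illustrative assumptions rather than quantities taken from the study.

```python
import numpy as np

def tensor_from_direction(direction, d_par, d_perp):
    """Axially symmetric diffusion tensor aligned with `direction`."""
    v = np.asarray(direction, dtype=float)
    v = v / np.linalg.norm(v)
    return d_perp * np.eye(3) + (d_par - d_perp) * np.outer(v, v)

def multicompartment_attenuation(b, gradients, w_iso, d_iso, weights, tensors):
    """x_k = w_iso * exp(-b * d_iso) + sum_f w_f * exp(-b * g_k^T D_f g_k).

    gradients: (K, 3) unit vectors; weights: (F,); tensors: (F, 3, 3).
    """
    quad = np.einsum("ki,fij,kj->fk", gradients, tensors, gradients)  # (F, K)
    return w_iso * np.exp(-b * d_iso) + weights @ np.exp(-b * quad)

# Two fibers crossing at 90 degrees plus a small isotropic fraction.
b = 1159.0
gradients = np.array([[1.0, 0.0, 0.0], [0.0, 1.0, 0.0], [0.0, 0.0, 1.0]])
tensors = np.stack([
    tensor_from_direction([1, 0, 0], 1.7e-3, 0.3e-3),
    tensor_from_direction([0, 1, 0], 1.7e-3, 0.3e-3),
])
x = multicompartment_attenuation(b, gradients, 0.2, 1.0e-3,
                                 np.array([0.4, 0.4]), tensors)
print(x)  # attenuation is strongest (smallest x) along the two fiber axes
```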

Rather than estimating NF for each voxel and then fitting the weights and diffusion tensors,4 we propose modeling the attenuation by diffusion x(r) as a generic mixture learned from training data.

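We assume that the baseline S0(r) is a perfect estimate and only the gradient images Sk(r) are affected by noise (see Fig. 2). This is reasonable because, if we estimate $\hat{x}_k = S_k / \hat{S}_0$ with $\hat{S}_0 = \frac{1}{11}\sum_{i=1}^{11} S_{0,i}$ (where S0,i are the signal intensities corresponding to the 11 acquired baseline images), the sensitivities of the estimate with respect to Sk and S0,i are

$$\frac{\partial \hat{x}_k}{\partial S_k} = \frac{1}{\hat{S}_0}, \qquad \frac{\partial \hat{x}_k}{\partial S_{0,i}} = -\frac{S_k}{11\,\hat{S}_0^{2}} = -\frac{\hat{x}_k}{11\,\hat{S}_0},$$

since $\partial \hat{S}_0 / \partial S_{0,i} = 1/11$.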

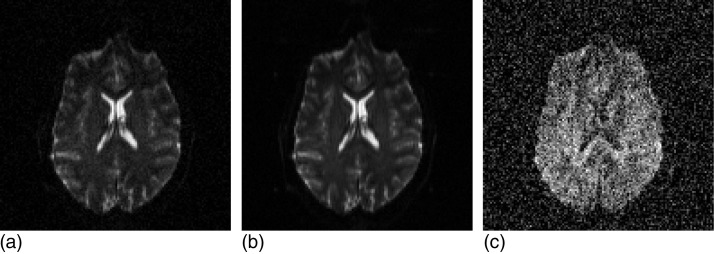

Figure 2.

Axial slice of a baseline volume (a), the corresponding average baseline (b), and one of the corresponding gradient images (c). We assume in this study that the noise of (b) is negligible compared with the noise of (c). The SNR of the original images was degraded 5 dB to better illustrate the difference between (b) and (c). Note that the intensity scales of (a–b) and (c) are different: the noise level is actually the same in (a) and (c).

Because the typical value of the attenuation $x_k$ is 1/3 (9 dB attenuation, see Sec. 2), the sensitivity with respect to Sk is typically ~30 times larger than with respect to S0,i, and at least 11 times larger (the ratio of the two sensitivities is $11/x_k$, and $x_k \le 1$). In other words, the noise in S0,i has to be at least 11 times larger than in Sk to have the same impact on the estimated attenuation.

Now, under the assumption that Sk is the only source of noise, the data for each voxel are uniquely characterized by the vector of attenuations x(r)=[x1(r),x2(r),…,xD(r)]t, where D is the number of probed directions. This assumption also simplifies the framework described in Sec. 3B.

Next, we assume that the underlying “true” (i.e., not corrupted by noise) attenuation x(r) is a realization of a Gaussian mixture model (GMM)

$$p(x(r)) = \sum_{c=1}^{C} \pi_c(r)\,G(x(r) \mid \mu_c, \Sigma_c), \tag{2}$$

where G(x(r)|μc,Σc) is a Gaussian distribution with mean μc and covariance Σc, and each component plays a similar role as a fiber population in Eq. 1. While the means {μc} and covariance matrices {Σc} are independent of lattice locations, the weights of the mixture π(r)=[π1(r)...πC(r)]t are allowed to be spatially variant, and they are constrained to lie in the probability simplex $\sum_{c=1}^{C}\pi_c(r)=1$, with πc(r)≥0. Most of the weights will be close to zero for a given voxel, which can be interpreted as a way of selecting NF(r).

Now, spatial coherence of the data is ensured by placing a Markov random field (MRF) on top of the mixture weights. Instead of laying a distribution on top of the data directly, laying it on top of another distribution (i.e., the GMM, which already has certain flexibility in the covariance matrices) gives an additional degree of freedom to the model36

$$p(\pi) \propto \prod_{c=1}^{C}\prod_{d=1}^{3} \sigma_{c,d}^{-N} \exp\left(-\frac{\sum_{r}\left[\pi_c(r) - \tfrac{1}{2}\left(\pi_c(r+u_d) + \pi_c(r-u_d)\right)\right]^{2}}{2\sigma_{c,d}^{2}}\right), \tag{3}$$

where N is the total number of voxels in the volume, $\sigma_{c,d}^{2}$ is the variance of the mixture component c in the spatial directions d={1,2,3} (corresponding to the x,y,z axes), and ud represents the unit vector along d. The variances are allowed to be direction dependent because the voxels are in general nonisotropic, and larger pixel spacings require larger variances in the model. The interpretation of the MRF prior is as follows: when one mixture component at one voxel is predicted as the average of the values of its neighbors in direction d, the error follows a zero-mean Gaussian distribution with variance $\sigma_{c,d}^{2}$.
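The interpretation of the MRF prior given above can be sketched in a few lines: each weight map is predicted from the average of its two neighbors along one axis, and the prediction error is scored with a zero-mean Gaussian. The sketch also includes the maximum likelihood estimate of the variances from the residuals. The restriction to interior voxels at the volume borders and all function names are assumptions of this example; the source does not specify its boundary handling.

```python
import numpy as np

def mrf_residuals(weight_map, axis):
    """Error of predicting each interior voxel from its two neighbors along `axis`."""
    w = np.moveaxis(weight_map, axis, 0)
    prediction = 0.5 * (w[:-2] + w[2:])
    return w[1:-1] - prediction

def mrf_log_prior(weights, variances):
    """Log of the Gaussian MRF prior (including normalization constants).

    weights: (C, X, Y, Z) mixture-weight maps; variances: (C, 3).
    """
    total = 0.0
    for c in range(weights.shape[0]):
        for d in range(3):
            res = mrf_residuals(weights[c], d)
            var = variances[c, d]
            total += -0.5 * res.size * np.log(2.0 * np.pi * var)
            total += -0.5 * np.sum(res ** 2) / var
    return total

def update_variances(weights):
    """Maximum likelihood variance per component and direction (mean squared residual)."""
    return np.array([[np.mean(mrf_residuals(w_c, d) ** 2) for d in range(3)]
                     for w_c in weights])
```

A weight map that varies linearly along an axis is perfectly predicted by its neighbors, so it incurs no penalty; only departures from local linearity are penalized.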

Finally, the observed image data are a noise-corrupted version of x(r). The probability distribution of the noise in MRI is Rician

| (4) |

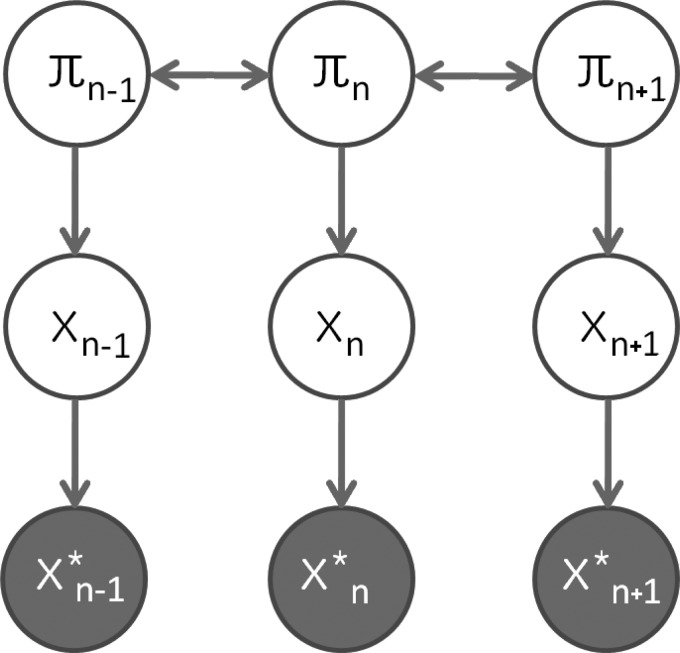

where x*(r) represents the noise-corrupted observations, I0 is the modified Bessel function of the first kind with order zero, and is the noise power for gradient image in direction (θk,ϕk), typically constant across k, i.e., . The complete graphical model of the data is depicted in Fig. 3.

Figure 3.

Graphical model for HARDI (in 1D, for the sake of simplicity): π represents the mixture weights, x represents the attenuation data, and x* represents the noise-corrupted observations.
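The Rician noise model used for the observations can be illustrated with the following sketch, which corrupts a noiseless attenuation with Rician noise (the magnitude of a complex Gaussian perturbation) and evaluates the corresponding log-likelihood. The function names and numerical values are assumptions for illustration. Note that `numpy.i0` overflows for large arguments (very small σ); an exponentially scaled Bessel function such as `scipy.special.i0e` would be preferable in that regime.

```python
import numpy as np

def add_rician_noise(x, sigma, rng):
    """Magnitude of the noiseless signal plus complex Gaussian noise."""
    real = x + rng.normal(0.0, sigma, size=x.shape)
    imag = rng.normal(0.0, sigma, size=x.shape)
    return np.hypot(real, imag)

def rician_log_likelihood(x_obs, x, sigma):
    """Elementwise log p(x_obs | x) under the Rician model."""
    s2 = sigma ** 2
    arg = x_obs * x / s2
    # log I0 via numpy.i0; adequate for the moderate arguments used here.
    return np.log(x_obs / s2) - (x_obs ** 2 + x ** 2) / (2.0 * s2) + np.log(np.i0(arg))

rng = np.random.default_rng(0)
clean = np.full(200_000, 0.5)          # noiseless attenuation
noisy = add_rician_noise(clean, 0.1, rng)
# The second moment of a Rician variable is x^2 + 2*sigma^2.
print(np.mean(noisy ** 2), 0.5 ** 2 + 2 * 0.1 ** 2)
```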

Model training

Given some training data, the parameters of the model are estimated by maximizing the target function Q, which depends on {μc}, {Σc}, and {πc(r)}

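$$
\begin{aligned}
Q ={}& \sum_{r}\sum_{c=1}^{C} z_c(r)\left[\log \pi_c(r) + \log G(x(r)\mid\mu_c,\Sigma_c)\right] \\
&- \sum_{c=1}^{C}\sum_{d=1}^{3}\left\{ N\log\sigma_{c,d} + \frac{1}{2\sigma_{c,d}^{2}}\sum_{r}\left[\pi_c(r) - \tfrac{1}{2}\left(\pi_c(r+u_d)+\pi_c(r-u_d)\right)\right]^{2}\right\},
\end{aligned}
\tag{5}
$$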

where zc(r) is the posterior probability that the voxel at r belongs to component c

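$$z_c(r) = \frac{\pi_c(r)\,G(x(r)\mid\mu_c,\Sigma_c)}{\sum_{j=1}^{C}\pi_j(r)\,G(x(r)\mid\mu_j,\Sigma_j)}. \tag{6}$$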

The first term (first row) in Eq. 5 corresponds to the conditional likelihood of the GMM given the weights.47 The second term accounts for the joint likelihood of the weights themselves, given by the MRF. The approach that was utilized to maximize Q is an expectation maximization (EM) algorithm inspired by the work by Nikou et al.,36 with two major differences: the maximization step of the EM algorithm has been substantially improved and the number of components is not fixed but determined by the MML criterion.48
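The posterior probabilities zc(r) can be computed as in any GMM, with the only difference that the mixture weights change from voxel to voxel. The sketch below is a minimal NumPy version that works in the log domain for numerical stability; the array layout (voxels flattened to rows) and the function names are assumptions of this example.

```python
import numpy as np

def log_gaussian(x, mean, cov):
    """Log density of a multivariate Gaussian at each row of x (N, D)."""
    d = x.shape[1]
    diff = x - mean
    _, logdet = np.linalg.slogdet(cov)
    maha = np.einsum("nd,nd->n", diff, np.linalg.solve(cov, diff.T).T)
    return -0.5 * (d * np.log(2.0 * np.pi) + logdet + maha)

def responsibilities(x, weights, means, covs):
    """Posterior z_c(r) with spatially variant weights.

    x: (N, D) attenuation vectors; weights: (N, C) per-voxel mixture weights;
    means: (C, D); covs: (C, D, D).
    """
    log_p = np.stack([log_gaussian(x, means[c], covs[c])
                      for c in range(means.shape[0])], axis=1)
    log_p += np.log(np.maximum(weights, 1e-300))   # guard against log(0)
    log_p -= log_p.max(axis=1, keepdims=True)      # stabilize the exponentials
    z = np.exp(log_p)
    return z / z.sum(axis=1, keepdims=True)
```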

Initialization: choice of C with minimum message length criterion (MML)

To initialize the algorithm, the problem can first be solved without the MRF term from Eq. 3 in the target function Q from Eq. 5. This amounts to the well-known problem of estimating the parameters of a GMM, which can be solved with the EM algorithm. In turn, the EM can be initialized with the K-means algorithm, which is further initialized with random samples from the training data. The EM algorithm is prone to getting stuck in local maxima, and it is therefore beneficial to train the GMM with different initializations for K-means and then keep the instance with the highest final likelihood.

It is well known that one of the main limitations of mixture models is that the number of components C must be defined in advance. A possible way of tackling this problem is to select the value of C that minimizes the minimum message length (MML), a criterion from information theory. MML combines two terms: the inverse of the likelihood of the training data and a term that penalizes the complexity of the model. Higher values of C decrease the first term but also increase the second, so a compromise must be reached

| (7) |

where the weights πc are location-independent at this point (the MRF prior is not considered yet).

Training the GMM for a wide range of values of C to find which one provides the minimal MML is computationally impractical. The following greedy algorithm was used instead: the parameters are estimated just once for a single, high value of C, and then components are merged as long as the MML decreases.49 At each step, all possible pairwise merges are considered. The merge that leads to the model with the lowest MML is selected and proposed for acceptance. If this value of the MML represents an improvement with respect to the MML of the current model, the merge is accepted and the process is repeated. If it does not, the algorithm terminates. The procedure is fast because, when a merge is considered, the terms corresponding to the C-2 components not being merged do not need to be reevaluated.
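The greedy merging loop can be sketched as follows. Two aspects of this sketch are assumptions rather than details from the study: pairs of components are merged by moment matching (preserving the mean and covariance of the pair), and the cost is supplied as a hypothetical callable `message_length` standing in for the MML criterion. For clarity, the sketch reevaluates the full cost for every candidate instead of reusing the terms of the C-2 untouched components.

```python
import itertools
import numpy as np

def merge_pair(w1, m1, c1, w2, m2, c2):
    """Moment-matching merge of two weighted Gaussian components."""
    w = w1 + w2
    m = (w1 * m1 + w2 * m2) / w
    d1, d2 = m1 - m, m2 - m
    c = (w1 * (c1 + np.outer(d1, d1)) + w2 * (c2 + np.outer(d2, d2))) / w
    return w, m, c

def greedy_merge(components, message_length):
    """Merge components pairwise while the cost keeps decreasing.

    components: list of (weight, mean, cov) tuples.
    message_length: hypothetical callable mapping such a list to a float cost.
    """
    best_cost = message_length(components)
    while len(components) > 1:
        candidates = []
        for i, j in itertools.combinations(range(len(components)), 2):
            merged = [c for k, c in enumerate(components) if k not in (i, j)]
            merged.append(merge_pair(*components[i], *components[j]))
            candidates.append((message_length(merged), merged))
        cost, proposal = min(candidates, key=lambda item: item[0])
        if cost >= best_cost:   # no improvement: stop
            break
        components, best_cost = proposal, cost
    return components
```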

Optimization with EM algorithm

Once the number of components C is defined, the cost function from Eq. 5 can be maximized. This can be achieved through an EM algorithm. In the E step, the posterior probabilities zc(r) are calculated using Eq. 6. In the M step, the estimates of the parameters and the mixture weights at each pixel πc(r) must be updated to maximize Q. The update equations for the mixture model parameters are well-known50

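$$\mu_c = \frac{\sum_{r} z_c(r)\,x(r)}{\sum_{r} z_c(r)}, \qquad \Sigma_c = \frac{\sum_{r} z_c(r)\,(x(r)-\mu_c)(x(r)-\mu_c)^{t}}{\sum_{r} z_c(r)}. \tag{8}$$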
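The standard GMM updates for the means and covariances can be written compactly with NumPy. The sketch below assumes that the voxels have been flattened to the rows of `x` and that `z` holds the posterior probabilities from the E step; the function name and array layout are choices made for this example.

```python
import numpy as np

def m_step(x, z):
    """Update means and covariances from posteriors.

    x: (N, D) attenuation vectors; z: (N, C) posterior probabilities.
    Returns means (C, D) and covariances (C, D, D).
    """
    n_c = z.sum(axis=0)                          # effective voxel count per component
    means = (z.T @ x) / n_c[:, None]
    n_components, dim = means.shape
    covs = np.empty((n_components, dim, dim))
    for c in range(n_components):
        diff = x - means[c]
        covs[c] = (z[:, c, None] * diff).T @ diff / n_c[c]
    return means, covs
```

In practice, a small multiple of the identity is often added to each covariance to keep it well conditioned; whether the original implementation does so is not stated.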

Updating the mixture weights in order to be able to update their variances is more complicated. Simultaneous optimization with respect to all the weights is very difficult and impractical. An alternative is to utilize coordinate ascent, in which voxels are visited in a random order and their weights are optimized under the assumption that the weights at all other locations are fixed. The algorithm typically converges after five or six passes over the volume.

In order to optimize the weights for a given spatial location r, Nikou et al.36 compute the derivatives of the target function Q with respect to {πc(r)}, set them to zero, and solve the resulting second degree equations. Because the weights are optimized in an unconstrained fashion, they must be projected back onto the probability simplex after each step. The problem with this approach is that the solution to the equations can yield a point arbitrarily far from the simplex, and the projected point is not guaranteed to increase Q (in practice, this often happens). Instead, we solve the problem exactly with the help of a Lagrange multiplier. If the terms in the target function that are independent from {πc(r)} are disregarded, some algebraic manipulation of Eq. 5 yields the following optimization problem:

| (9) |

where

The corresponding Lagrangian is

where the constraint πc(r)>0 has been left implicit (it must be observed so that log πc(r) ∈ ℝ) and λ is the multiplier corresponding to the explicit constraint. Now, setting the derivatives with respect to the weights equal to zero gives

| (10) |

where the solution corresponding to the minus sign is discarded because it would yield πj(r)≤0, since wj>0. Finally, the value of the multiplier can be calculated by solving . If f(r,λ) is expanded,

There is no simple expression for the roots of this function. However, it is easy to check that df∕dλ<0 ∀λ. Since f is continuous and differentiable everywhere, this means that there is only one zero, which can be easily found with numerical methods; a bisection strategy was used in this study. Once the value of the multiplier λ has been determined, substitution in Eq. 10 provides the optimal mixture weights.
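The structure of this solution (a quadratic equation per weight, the positive root, and a bisection search on the multiplier) can be illustrated on a simplified stand-in problem. The sketch assumes an objective of the form Σc zc log πc − Σc ac (πc − mc)² subject to Σc πc = 1, where the hypothetical coefficients `a` and `m` lump together the MRF terms; the exact coefficients of Eq. 9 are not reproduced here.

```python
import math

def weights_for_multiplier(lam, z, a, m):
    """Positive root of 2*a*pi^2 + (lam - 2*a*m)*pi - z = 0 for each component."""
    out = []
    for zc, ac, mc in zip(z, a, m):
        b = lam - 2.0 * ac * mc
        root = math.sqrt(b * b + 8.0 * ac * zc)
        # Two algebraically equivalent forms; pick the one without cancellation.
        out.append(2.0 * zc / (b + root) if b > 0 else (root - b) / (4.0 * ac))
    return out

def solve_weights(z, a, m, tol=1e-12):
    """Bisection on the multiplier so that the weights sum to one."""
    def f(lam):
        return sum(weights_for_multiplier(lam, z, a, m)) - 1.0
    lo, hi = -1.0, 1.0
    while f(lo) < 0.0:      # f is strictly decreasing: move left until f > 0
        lo *= 2.0
    while f(hi) > 0.0:      # move right until f < 0
        hi *= 2.0
    for _ in range(200):
        mid = 0.5 * (lo + hi)
        if f(mid) > 0.0:
            lo = mid
        else:
            hi = mid
        if hi - lo < tol:
            break
    return weights_for_multiplier(0.5 * (lo + hi), z, a, m)

pi = solve_weights(z=[0.7, 0.2, 0.1], a=[5.0, 5.0, 5.0], m=[0.5, 0.3, 0.2])
print(pi, sum(pi))
```

Because every weight is a strictly decreasing function of the multiplier, the bracket can always be found by doubling, and the resulting weights are positive and sum to one without any projection step.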

Finally, the EM iteration ends with calculating the new class variances from the updated mixture weights

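$$\sigma_{c,d}^{2} = \frac{1}{N}\sum_{r}\left[\pi_c(r) - \tfrac{1}{2}\left(\pi_c(r+u_d) + \pi_c(r-u_d)\right)\right]^{2}. \tag{11}$$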

The EM algorithm is summarized in Table I.

TABLE I. EM algorithm to estimate the model parameters.

| Step | Operation |
| --- | --- |
| 1 | Initialize the mixture model components {μc,Σc} with the results from Sec. 3B1. |
| 2 | Initialize the mixture weights for every voxel with the weights of the MRF-free GMM from the same section. |
| 3 | While the function Q from Equation 5 keeps increasing: |
| 3a | Calculate the posterior probabilities zc(r) with Equation 6. |
| 3b | Update the mixture model components with Equation 8. |
| 3c | Visit all voxels in random order, updating their mixture weights πc(r) with the solution of the problem from Equation 9. |
| 3d | Repeat step 3c until convergence (typically 5–6 times). |
| 3e | Update the class variances with Equation 11. |

Application to image denoising

The model can be used for image denoising by fitting it to an image. In a Bayesian framework, the goal is to maximize the posterior probability of the underlying noise-free image given the noisy version. As in the EM algorithm from the training stage (Sec. 3B2), direct optimization with respect to all variables is computationally very difficult. Once more, coordinate ascent can be used. In this context, the algorithm is known as iterative conditional modes (ICM).51 In ICM, voxels are visited in random order, and their values are optimized by maximizing the conditional probability $p(x(r) \mid x^*(r), \hat{x}(S\setminus r))$, where x*(r) is the observed (noisy) attenuation at the given voxel and $\hat{x}(S\setminus r)$ is the current restored value at all other locations. The algorithm typically converges (i.e., negligible change in x) after a few passes over the whole volume.

The joint conditional distribution of the mixture weights π(r) and the “real” attenuation x(r) at each voxel is given by Bayes’s rule [Eq. 13]. Maximizing the joint probability p(x(r),π(r)) is much faster than maximizing the marginal p(x(r)) and yields good results (see Sec. 5). Under the assumptions in this study (namely the graphical model in Fig. 3), the following independence relationships hold:

p(x*(r)|x(r),π(r),π(S\r),x(S\r))=p(x*(r)|x(r)),

p(x(r)|π(r),π(S\r),x(S\r))=p(x(r)|π(r)),

p(π(r)|π(S\r),x(S\r))=p(π(r)|π(ℵr)).

where ℵr represents the neighborhood of r. Substitution in Eq. 13 yields the final expression for the posterior likelihood of the “real,” underlying data

| (12) |

| (13) |

| (14) |

| (15) |

| (16) |

The first term in Eq. 12 corresponds to the Rician noise model from Eq. 4. The second term in F is just the GMM from Eq. 2. The third term is a product of univariate Gaussians corresponding to the different mixture components and directions in the MRF. Each Gaussian term is the result of multiplying the conditional probabilities given by the neighbors in each of the three spatial directions [Eq. 14].

Maximizing the posterior F [Eq. 12] is equivalent to maximizing its logarithm, which has a simpler expression. The derivatives of logF with respect to the mixture weights and attenuation values can be computed analytically [see Eqs. 15, 16, where [·]k denotes the kth element of a vector, and I1 is the modified Bessel function of the first kind with order one]. This makes it possible to optimize logF efficiently using gradient ascent, i.e., taking small steps along the direction of the gradient at each point. After each step, it is necessary to project the mixture weights onto their constraint (i.e., the probability simplex). Unlike in Sec. 3B2, this now works well because the user-defined step is fixed and small. A simple and fast algorithm for projecting onto the probability simplex52 was utilized. It is also necessary to project x(r) onto its own constraint (x(r)∈[0,1]) after each step. This is accomplished by setting to zero any component below zero and setting to one any component over one.
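The two projection steps can be sketched as follows. The simplex projection shown here is the widely used sort-based Euclidean projection; it is an assumption that this matches the algorithm of Ref. 52, which is not described in the text. The function names are likewise illustrative.

```python
def project_to_simplex(v):
    """Euclidean projection of v onto {p : p_c >= 0, sum_c p_c = 1}."""
    u = sorted(v, reverse=True)
    cumulative = 0.0
    theta = 0.0
    for j, u_j in enumerate(u, start=1):
        cumulative += u_j
        t = (cumulative - 1.0) / j
        if u_j - t > 0.0:       # condition holds for j = 1..rho; keep the last one
            theta = t
    return [max(x - theta, 0.0) for x in v]

def clip_attenuation(x):
    """Project each attenuation value onto the interval [0, 1]."""
    return [min(max(x_k, 0.0), 1.0) for x_k in x]

# One projected gradient-ascent step with a hypothetical gradient and step size.
weights = [0.6, 0.3, 0.1]
gradient = [1.0, -2.0, 0.5]
step = 0.05
weights = project_to_simplex([w + step * g for w, g in zip(weights, gradient)])
print(weights, sum(weights))
```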

EXPERIMENTS AND RESULTS

Training the model

The proposed model was trained with the 50 images from the training dataset. Rather than using all the available voxels, a random patch of size 25 × 25 × 20 voxels was extracted from each of them to lighten the computational load of the algorithm (in total N≈500,000 voxels). The patches were constrained to have at least two thirds of their voxels in the mask provided by the BET algorithm. A preliminary experiment evaluating the MML in Eq. 7 at C = 5n, n = 1,…,10, revealed that the minimum was achieved around C = 25–30.

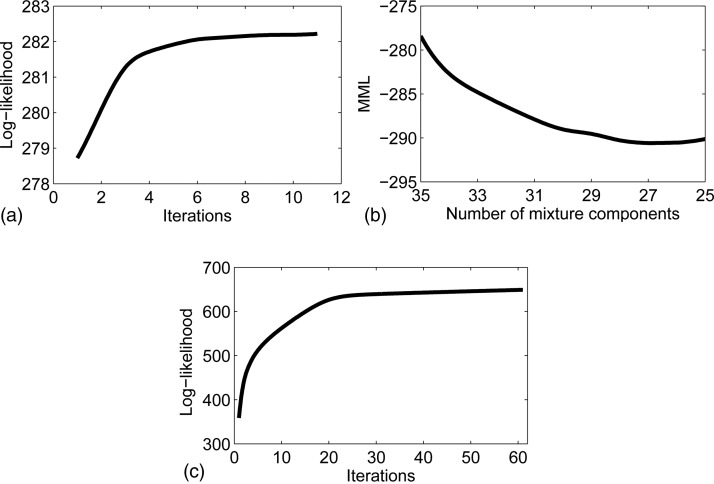

Because the algorithm that selects C can only decrease from an initial value Cini by merging components, the initial GMM without the MRF term was first trained with Cini=35 components. The training was repeated five times using EM with different seeds for the K-means algorithm, whose output initializes the EM. The model with the highest log-likelihood logQ was kept. The evolution of the metric logQ with the number of iterations for the best seed is displayed in Fig. 4a.

Figure 4.

Evolution of metrics in training: (a) GMM (best of 5), (b) MML, and (c) GMM-MRF.

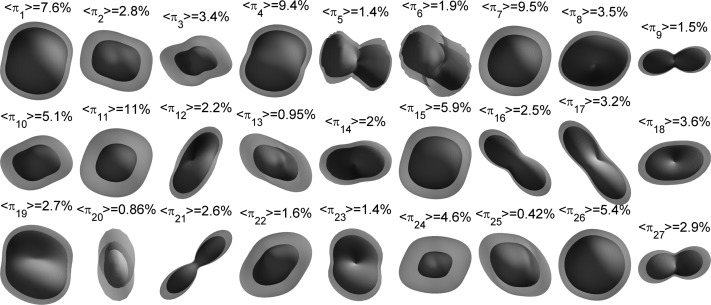

Once the GMM was trained with Cini=35, components were iteratively merged until the MML stopped decreasing, which happened at C = 27, as shown in Fig. 4b. The resulting GMM was used as initialization to train the GMM-MRF model [Fig. 4c]. The averages over all training voxels of the mixture weights (henceforth 〈πc〉), which are useful for initializing the denoising algorithm (Sec. 4B), were saved along with the model. The model components, along with their weights and first mode of variation, are displayed in Fig. 5. The first mode depicts in most cases the scale of the diffusion. The second mode, displayed in Fig. 6, shows more variability in the orientations. From Figs. 5 and 6, we see that components such as {1,4,7,11,26} represent the isotropic part of the data, whereas the rest of the components display anisotropy in different directions.

Figure 5.

Mixture components after training. For each component, the first mode of variation (μ, where {μ,λ1,e1} are the mean, first eigenvalue, and first eigenvector of the covariance matrix) and the average weights over the training data 〈πc〉 are shown. The components were fitted to a (Laplace–Beltrami regularized) spherical harmonic expansion of order six and min-max normalized for display. The components are color-coded as follows: red indicates left/right direction, green is anterior/posterior, and blue is inferior/superior. Note that the components represent attenuation, so a toroidal shape implies a single fiber population along the axis.

Figure 6.

Mixture components after training: second mode of variation (see caption of Fig. 5).

Denoising

Rician noise was added to the 50 images in the test dataset, and the proposed method (as well as three other algorithms) was then utilized to restore them. Since the system was trained on the 50 other subjects in the training dataset, no bias was introduced in the evaluation. The RMS error in the attenuation data was used as the evaluation metric

where xdenoised(r) represents the denoised attenuation data and x(r) is the ground truth.
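The evaluation protocol can be illustrated with a short Python sketch. Two assumptions are made here and should be read as such: the noise is applied directly to the attenuation values (the magnitude of a complex value with independent Gaussian noise in each channel), and the RMS error is averaged over all voxels and gradient directions, a normalization chosen for this illustration. The function names are hypothetical.

```python
import math
import random


def add_rician_noise(x, sigma, rng):
    """Corrupt an attenuation vector x with Rician noise of parameter sigma.

    Each value becomes the magnitude of (a + n1) + i*n2, with n1 and n2
    drawn independently from N(0, sigma^2). rng is a random.Random instance.
    """
    return [math.hypot(a + rng.gauss(0.0, sigma), rng.gauss(0.0, sigma)) for a in x]


def rms_error(x_denoised, x_true):
    """RMS error between two volumes given as lists of per-voxel vectors.

    The average runs over every voxel and every gradient direction
    (an assumed normalization).
    """
    total = 0.0
    count = 0
    for xd, xt in zip(x_denoised, x_true):
        for d, t in zip(xd, xt):
            total += (d - t) ** 2
            count += 1
    return math.sqrt(total / count)
```

A restored volume identical to the ground truth gives an error of zero, and the error grows with the average squared deviation per measurement.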

In the denoising scheme proposed in this study, the restored data xdenoised(r) was initialized with the noisy version x*(r), whereas the mixture weights π(r) were initialized with the average weights from the training data (Sec. 4A): πc(r)=〈πc〉 ∀r. The noise parameters {σk} are assumed to be known and constant across the gradient directions: σk=σ,∀k. This is a fair assumption in MRI because the data contain a large number of background voxels, so σk can be accurately estimated from them, for example, by studying their statistical moments53 or histogram.54
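As an illustration of how σ might be obtained from background voxels, the sketch below uses the second moment of the background magnitudes. It relies on the standard fact that, where the true signal is zero, Rician noise reduces to a Rayleigh distribution with E[M²] = 2σ². This simple estimator is an assumption made for illustration; the methods of Refs. 53 and 54 may differ, and the function names are hypothetical.

```python
import math
import random


def estimate_sigma_from_background(background):
    """Second-moment estimate of sigma from background magnitudes.

    Assumes the background carries no signal, so that E[M^2] = 2 * sigma^2.
    """
    mean_square = sum(m * m for m in background) / len(background)
    return math.sqrt(mean_square / 2.0)


def simulate_background(n, sigma, rng):
    """Draw n signal-free magnitude samples (for testing the estimator only)."""
    return [math.hypot(rng.gauss(0.0, sigma), rng.gauss(0.0, sigma)) for _ in range(n)]
```

With a large number of background voxels the estimate is tight, which is consistent with the assumption that σ is known.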

The proposed denoising algorithm was compared at different noise levels with the following strategies: a Gaussian smoothing filter, the classical anisotropic diffusion (AD) filter,55 and a Rician-adapted, nonlocal means (NLM) filter.21 Rician bias correction was added to the first two in order to take advantage of the fact that the noise parameter σ is known; otherwise, the experiment would be biased against these two algorithms (the NLM filter already makes use of this information).
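The text does not specify which Rician bias correction was applied to the Gaussian and AD outputs. A conventional choice, assumed here purely for illustration, is the second-moment correction based on E[M²] = A² + 2σ², where A is the noiseless magnitude. The function name is hypothetical.

```python
import math


def rician_bias_correct(magnitude, sigma):
    """Second-moment Rician bias correction (assumed variant).

    Uses E[M^2] = A^2 + 2*sigma^2 and clamps negative values to zero
    before taking the square root.
    """
    corrected_square = magnitude * magnitude - 2.0 * sigma * sigma
    return math.sqrt(max(corrected_square, 0.0))
```

The correction leaves noise-free data unchanged and maps magnitudes that are indistinguishable from the noise floor to zero.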

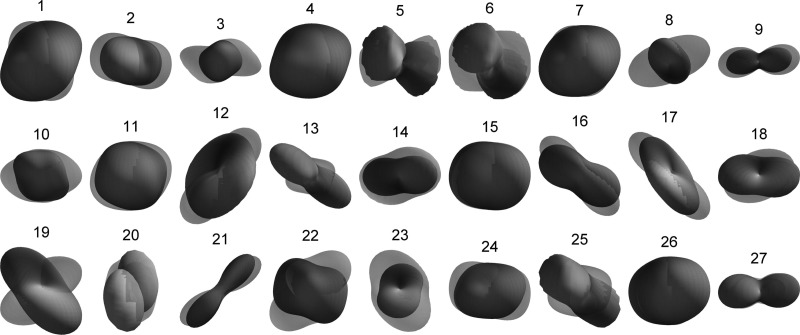

Figure 7 shows the performance of the different algorithms at different noise levels. The width of the Gaussian kernel and the conductance parameter of the AD filter were optimized to minimize the error at each noise level using the training data. Gaussian blurring is clearly worse than the other methods at every SNR. Among the other approaches, none is significantly better than the rest at low noise levels. However, the performance of our method degrades more slowly with noise power than that of the others. The improvement with respect to AD and NLM is statistically significant (p<0.01) approximately when SNR<11 dB. The proposed method would, for example, allow for a very fast, noisy acquisition at SNR ≈8 dB that could be denoised to provide the same quality as an acquisition at SNR ≈22 dB restored with a Gaussian filter. At higher SNR, it would be more efficient to use AD or NLM due to their lower computational cost, unless image segmentation or analysis is to be carried out after the denoising. In that case, the features (probability maps) produced by our method can be worth the longer execution time (see Sec. 4C).

Figure 7.

Average RMS error from uncorrupted image to restored version at different noise levels. The 95% confidence interval, calculated at image level (rather than pixel level), is also displayed.

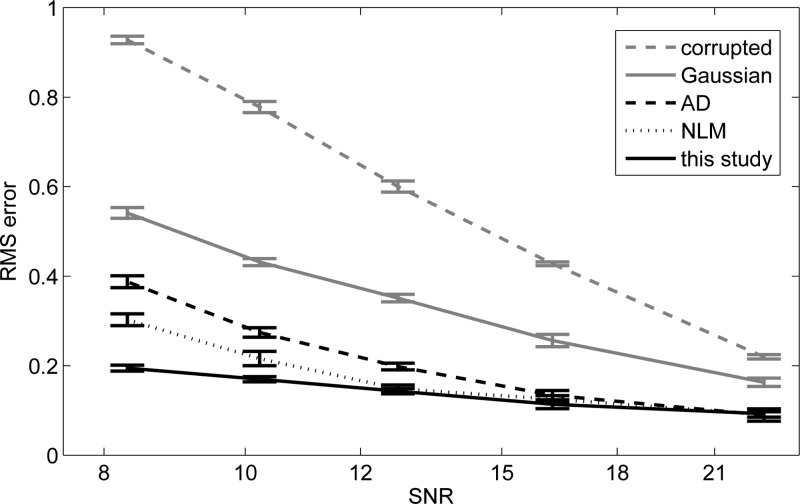

In Fig. 8, a slice of a diffusion-weighted image of a sample brain is displayed for the uncorrupted, corrupted (SNR = 10 dB), and denoised versions. The fODF field is also plotted for a region of interest (ROI) focused on the crossing between the corona radiata and the radiation of the corpus callosum. The ODF was estimated at 642 directions using a method by Descoteaux et al.16 with a spherical harmonic expansion of order six. The fODF was computed from the ODF in a nonparametric fashion using Tikhonov regularization.56 The Gaussian filter requires a very wide kernel to eliminate the noise, which leads to oversmoothing. The anisotropic diffusion filter preserves some edges slightly better (e.g., the callosal bundle on the right of the fODF field looks thinner) but also introduces too much blurring (e.g., in the central region of the ROI, where the fiber crossing is smudged). The NLM filter respects the edges to some extent but does not eliminate as much noise as the other filters. Finally, the proposed method takes advantage of the learned diffusion distribution to remove most of the noise with less blurring and therefore fewer spurious crossings (these are especially noticeable in the output of the Gaussian filter).

Figure 8.

Color-coded coronal slice of a diffusion weighted scan (left column), corresponding fractional anisotropy (middle) and zoom-in of fODF field around the crossing between the corona radiata and the radiation of the corpus callosum (right). The corrupted version has SNR = 10 dB. The white square on the uncorrupted, color-coded slice marks the ROI for which the fODF field is displayed. AD stands for anisotropic diffusion and NLM for nonlocal means.

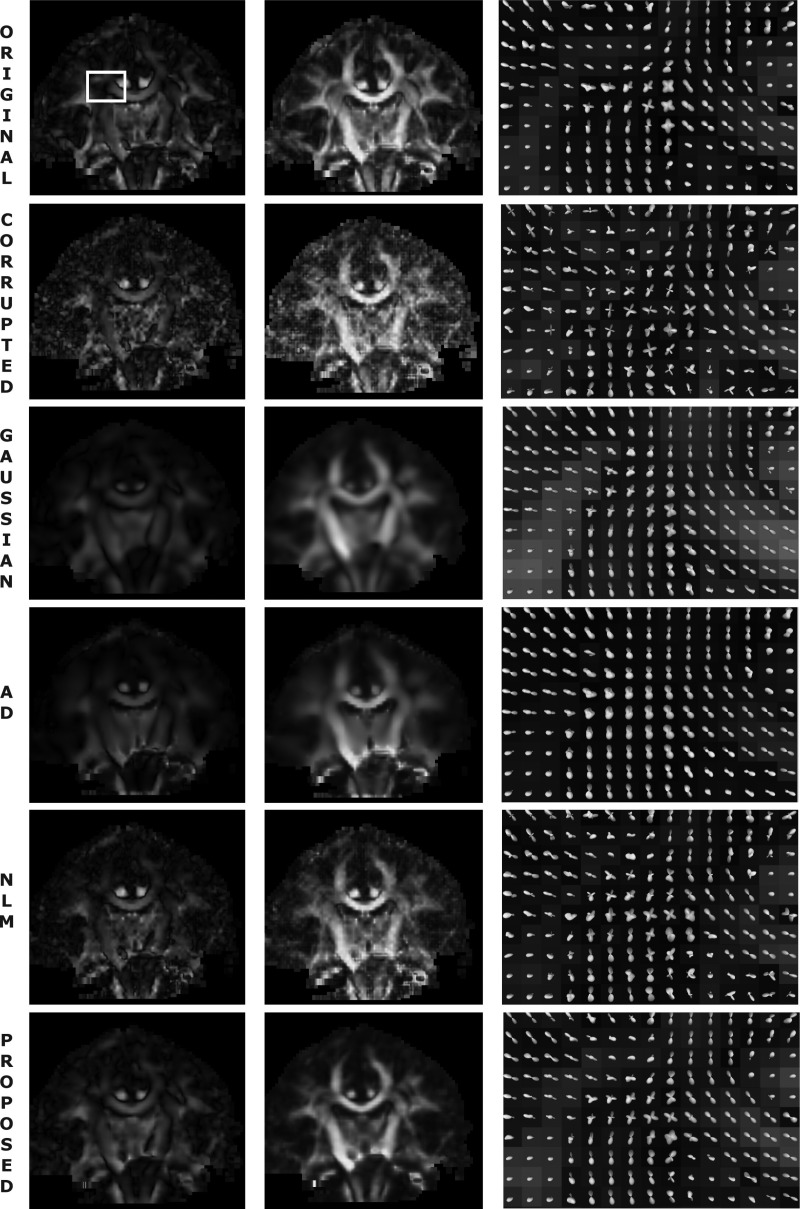

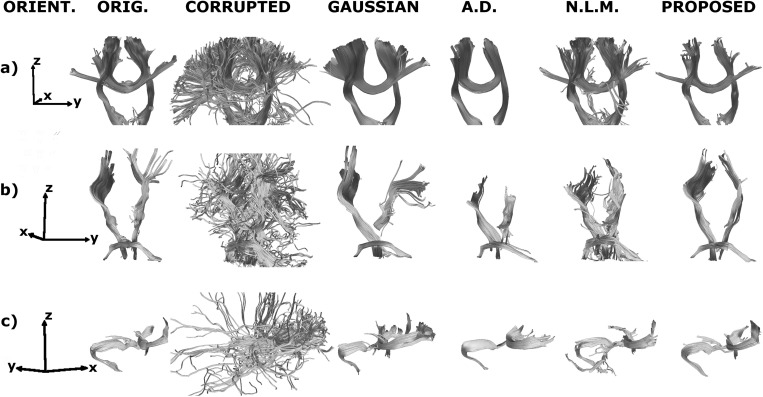

The ultimate goal of DW-MRI is fiber tracking. Figure 9 shows 3D reconstructions of the tractography output for a test subject given by a classic streamline tractography method very similar to that of Descoteaux et al.19 From a number of points (1000) in a seed region, a step (0.5 mm) is taken in the direction of the global maximum of the fODF. Then, subsequent steps follow the orientation of the local maximum of the fODF that is closest to the direction of the previous step. The tracking ends if a point of low fractional anisotropy (<0.1) is reached or if the angle between two consecutive steps is above a threshold (60°).
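The tracking rule described above can be sketched as follows. This is a simplified illustration, not the implementation used in the study: `peaks_at` and `fa_at` are hypothetical callbacks standing in for the fODF maxima and the fractional anisotropy at a point, fODF maxima are treated as axes (so a direction and its opposite are equivalent), tracking proceeds in one direction only, and `max_steps` is a safeguard added for this sketch.

```python
import math


def _unit(v):
    """Return v scaled to unit length."""
    norm = math.sqrt(sum(c * c for c in v))
    return tuple(c / norm for c in v)


def _dot(a, b):
    return sum(p * q for p, q in zip(a, b))


def track(seed, peaks_at, fa_at, step=0.5, fa_min=0.1,
          max_angle_deg=60.0, max_steps=1000):
    """Follow fODF maxima from seed and return the list of visited points.

    peaks_at(p): fODF maxima at p as direction vectors, strongest first.
    fa_at(p): fractional anisotropy at p.
    """
    cos_limit = math.cos(math.radians(max_angle_deg))
    point = tuple(float(c) for c in seed)
    path = [point]
    previous = None
    for _ in range(max_steps):
        if fa_at(point) < fa_min:
            break  # low anisotropy: stop tracking
        peaks = [_unit(d) for d in peaks_at(point)]
        if not peaks:
            break
        if previous is None:
            direction = peaks[0]  # first step: global maximum
        else:
            # choose the maximum best aligned with the previous step
            direction = max(peaks, key=lambda d: abs(_dot(d, previous)))
            if _dot(direction, previous) < 0:
                direction = tuple(-c for c in direction)
            if _dot(direction, previous) < cos_limit:
                break  # turn sharper than the angle threshold
        point = tuple(p + step * d for p, d in zip(point, direction))
        path.append(point)
        previous = direction
    return path
```

In a uniform field the streamline advances in a straight line until the anisotropy drops below the threshold; at an abrupt 90° change of the only available maximum, it stops because of the angle criterion.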

Figure 9.

3D reconstruction of the fiber tracking output for the uncorrupted, corrupted (SNR = 10 dB) and denoised volumes of a sample test brain. The relative orientation of the reconstruction is displayed in the leftmost column: x represents posterior to anterior, y is right to left, and z is inferior to superior. The yellow spheres mark the seed regions. (a) Corticospinal tract and corpus callosum. (b) Corticospinal tract and middle cerebellar peduncle. (c) Corpus callosum, capsule fibers, and superior frontal gyrus fibers.

In Fig. 9a, one seed was placed on the corticospinal tract and another in the body of the corpus callosum to observe the behavior at the intersection of the corpus callosum and its radiation with the corona radiata. In Fig. 9b, the seeds are in the corticospinal tract and in the middle cerebellar peduncle. The corticospinal tract crosses the peduncle on its way to the spinal cord. In Fig. 9c, there are three seeds: one to track the superior frontal gyrus fibers, one to track the capsule fibers, and one in the genu of the corpus callosum. The genu of the corpus callosum and the capsule fibers converge in the anterior/posterior direction, whereas the superior frontal gyrus fibers bend toward the superior part of the brain. In all three cases, the corrupted version produces many false positives that lead to spurious tracts away from the bundles of interest. The Gaussian filter can partially recover the tracts, but the smoothing makes the bundles look thicker than they really are, especially at crossings. The anisotropic diffusion filter does a better job restoring the main tracts, but falters at the fiber intersections. The nonlocal means filter recovers the bundles to some extent but still displays some false positives. When the noisy data are fitted to our proposed model, the algorithm is able to track the bundles across the intersections as in the uncorrupted volumes.

Segmentation

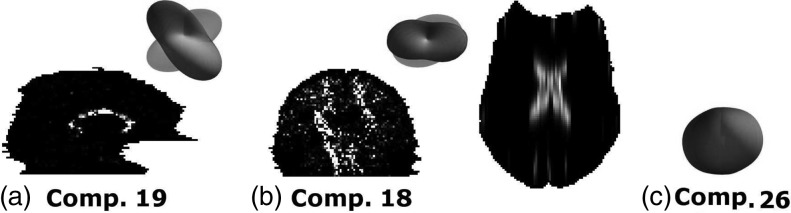

Nikou et al.36 and Rivera et al.38 use a spatially variant mixture model for image segmentation: when the model is fitted to the data, the most likely mixture weights for each voxel π(r) are provided by the optimization algorithm, and these probability maps can be used as features in a segmentation algorithm. In our case, some of the probability maps correlate almost directly with anatomy (see the example in Fig. 10). It is therefore interesting to test whether these probabilities can be used as features for classification purposes. The following experiment was set up: a support vector machine (SVM) was trained to classify the voxels as ventricle, caudate nucleus, or rest of the brain using the probability images; if the results are good, the mixing weights are good features.

Figure 10.

Probability maps for different mixture components (for which the second mode of variation, often more informative than the first, is displayed). (a) Sample sagittal slice that shows high probability for a left/right component around the corpus callosum. (b) Coronal slice for a probability volume that displays high probability for a vertical component around the corticospinal tract. (c) Axial slice of the probability volume of an isotropic component that shows high probability around the ventricles.

The SVM was trained on the 50 training images and evaluated on the 50 test images. In the training stage, the model was first fitted to the training images. Then, all the positive voxels (i.e., annotated as ventricle or caudate) and an equal number of randomly selected negative voxels (i.e., marked as background) were used to train the SVM. In the evaluation stage, the voxels in the test images were classified as ventricle, caudate, or background. This provides a mask for the ventricle and a mask for the caudate. These masks were morphologically closed with a spherical kernel (radius 3 mm), and then holes and islands were removed.
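The balanced selection of training voxels can be sketched as below. The label encoding (0 for background, nonzero for ventricle or caudate) and the function name are assumptions made for this illustration; the SVM training itself is not shown.

```python
import random


def balanced_training_indices(labels, rng, background=0):
    """Keep every positive voxel and an equal number of random negatives.

    labels: one integer label per voxel (assumed encoding: 0 = background).
    rng: a random.Random instance, so that the draw is reproducible.
    Returns the sorted indices of the selected voxels.
    """
    positives = [i for i, lab in enumerate(labels) if lab != background]
    negatives = [i for i, lab in enumerate(labels) if lab == background]
    n_negatives = min(len(positives), len(negatives))
    chosen = rng.sample(negatives, n_negatives)
    return sorted(positives + chosen)
```

Balancing the classes in this way keeps the much larger background class from dominating the training set.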

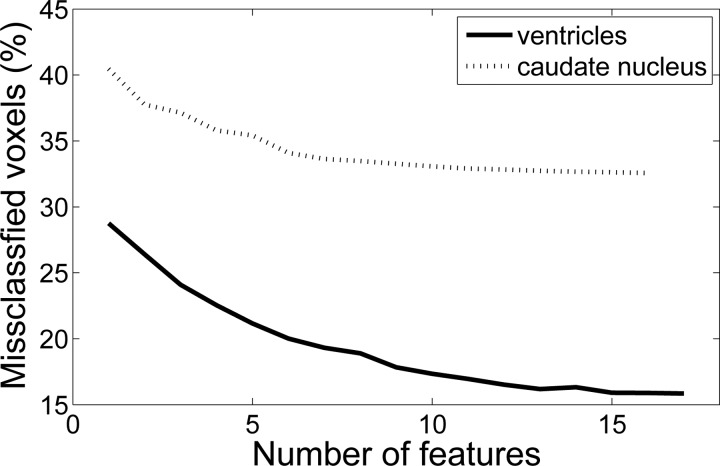

Rather than using the C = 27 weights as features for each voxel, a feature selection algorithm was used (“plus 3-take away 1”57). Feature subsets were evaluated by cross-validation (ten folds with five images each) on the training data. The evolution of the error rate with the number of features is shown in Fig. 11. By visual inspection, the cut-off was set at 13 features for the ventricles and 8 for the caudate nucleus. Once the classifier was trained, its performance was evaluated with the 50 test images. The similarity indices (SI) of the gold standard and the automated segmentations are displayed in Table II. The SI is defined as

where La,b are the compared labels and n(·) is the number of voxels.
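Assuming the SI takes the usual Dice-type overlap form, SI = 2 n(La ∩ Lb) / [n(La) + n(Lb)], which is consistent with the symbols defined above, it can be computed as in the following sketch. The representation of each label as a set of voxel coordinates and the function name are choices made for this illustration.

```python
def similarity_index(label_a, label_b):
    """Dice-type overlap between two labels given as collections of voxels.

    Each label is a collection of hashable voxel coordinates, e.g. (i, j, k).
    Returns 1.0 when both labels are empty (a convention assumed here).
    """
    a, b = set(label_a), set(label_b)
    if not a and not b:
        return 1.0
    return 2.0 * len(a & b) / (len(a) + len(b))
```

Identical labels give an SI of one, disjoint labels give zero, and two labels of equal size that share half of their voxels give 0.5.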

Figure 11.

Feature selection: error rate in cross-validation vs. number of selected features: (a) ventricles and (b) caudate nucleus.

TABLE II.

Segmentation results and intra-user variability.

| Structure | SI auto | SI intrauser |
|---|---|---|
| Ventricles | 0.75 ± 0.05 | 0.85 ± 0.05 |
| Caudate nucleus | 0.61 ± 0.06 | 0.75 ± 0.06 |

These SI values are not far from the intrauser variability (also in Table II), which was determined using the 25 images that were reannotated. This variability defines the precision of the ground truth, and it is an intrinsic limit of the performance that an automated system can achieve. In absolute terms, the SI values are relatively low because of the low resolution of DW-MRI and the fact that the caudate is difficult to delineate in T2-weighted MRI, which is the modality of the baseline. For comparison, a recent study using a multiatlas approach58 reported SI values between 0.66 and 0.83 for the caudate.

DISCUSSION

A spatially variant mixture model for diffusion MRI has been presented in this article. The signal attenuation, which is inherently a mixture due to the coexistence of fiber populations, is modeled as a Gaussian mixture in which the mean vectors and covariance matrices are assumed to be independent of spatial locations, whereas the mixture weights are allowed to vary at different lattice locations. Spatial smoothness of the data is ensured by imposing a Markov random field prior on the mixture weights. The model is trained on clean pseudoground truth data using the expectation maximization algorithm. The number of mixture components is determined using the minimum message length criterion from information theory. The system has the advantage of not having any parameters to tune.

The main application of the model is image denoising: given the noisy data, the denoised image can be computed as the most likely explanation in a Bayesian framework. This poses an optimization problem that can be solved within a reasonable time with gradient ascent. The proposed approach was compared with some of the most popular denoising algorithms in the domain. Even though the presented methodology does not clearly outperform these approaches at typical SNRs, it is substantially superior at low SNR thanks to its prior knowledge of the data. This makes it possible to acquire the images using a fast (i.e., noisy) sequence and denoise them to acceptable noise levels. The denoised data were shown to be able to recover the tractography results from the uncorrupted version.

A possible improvement of the methodology would be to denoise the image by maximizing p(x(r)) rather than p(x(r),π(r)) (Sec. 3C). The problem is that the marginalization integral p(x(r)) = ∫ p(x(r),π(r)) dπ(r), taken over ℝ^C, cannot be calculated analytically, so it would require statistical sampling, which is computationally taxing. Moreover, optimizing the joint probability has the advantage that it also provides the optimal mixture weights for each voxel, which can be used as features for subsequent image analysis.

Another possible improvement would be to use a robust metric to penalize the differences between neighboring mixture weights. The proposed MRF uses a quadratic penalty corresponding to a Gaussian distribution. This might not be the most suitable approach when there are discontinuities (e.g., edges) in the data. A possibility would be to use a Laplace distribution or a generalization thereof, which penalizes the absolute difference rather than the squared difference.37 This could help reduce the (admittedly minimal) blurring introduced by the method, which can potentially introduce spurious crossings. However, this would greatly complicate the analysis and the optimization process.

One potential limitation of the model is the lack of a proper ground truth, which is required in the training stage. This is a recurrent problem in DW-MRI. Phantoms consisting of separate fibers embedded in a homogeneous background are commonly used in the literature, but this is not a realistic approximation of the brain white matter. Developing more sophisticated phantoms remains an open problem (see Refs. 59, 60 for some recent efforts). Long MRI acquisition sequences (24–48 h) can be used to obtain very high SNR data, but they typically require ex-vivo data from deceased subjects to avoid motion artifacts. In this study, the lack of ground truth is circumvented by downsampling the images in the directional domain to obtain relatively clean data.

The proposed algorithm makes two important assumptions about noise: first, that the noise in the baseline T2 data is negligible compared to the noise in the gradient images, and second, that the noise power is known exactly. Both assumptions are reasonable, however. Due to multiple acquisitions and the lack of attenuation by diffusion, the baseline images indeed have much higher SNR than the gradient images. The noise parameter σ can be accurately estimated as the mode of the background of the image, thanks to the large number of background voxels that are typically present in a 3D scan.

Regarding the ability to generalize to other datasets, retraining should not be necessary as long as the new data are acquired with the same set of gradient directions as the dataset presented here and at the same spatial resolution. If the resolution were different, the class variances could be modified to account for the difference in voxel size. If the gradient directions were different, the data could be resampled to the set of directions used here. Correcting for a different Le Bihan’s constant is immediate. Evaluating the performance of the algorithm on a different dataset and exploring the impact of these adjustments when necessary remain as future work.

Another interesting aspect is the application of the algorithm to cases displaying pathology. In that case, the learned statistical distributions might not hold anymore. If a certain pathology is expected to be present in the test set, it should be possible to ensure that subjects with that particular disease are present in the training dataset. However, if there is no knowledge on potential conditions present in the test data, a very comprehensive training dataset might be necessary to obtain good results. Evaluating the method in these situations also remains as future work.

Finally, it is important to discuss the execution speed of the algorithm. All the described algorithms were implemented in Java and run on an Intel Core i7 desktop. The training stage takes 3 h, which is not a problem because it must be executed only once. Denoising an image takes approximately 25 min. This is longer than the execution time of the other approaches discussed here (Gaussian filtering, NLM, and AD). However, given that the code was not optimized for speed and that Java code runs on a virtual machine rather than as native code, it should be possible to reduce the execution time to approximately 10 min, which is completely acceptable given the dimensionality of the data (i.e., 128 × 128 × 128 voxels × 94 directions/voxel).

ACKNOWLEDGMENTS

This work was funded by the National Science Foundation (Grant No. 0844566) and the National Institutes of Health through the NIH Roadmap for Medical Research, Grant No. U54 RR021813 entitled Center for Computational Biology (CCB). The authors would like to thank Greig de Zubicaray, Kathie McMahon, and Margaret Wright from the Center for Magnetic Resonance at the University of Queensland for acquiring the data, and Professor Lieven Vandenberghe from UCLA for his insight on the optimization algorithm. The first author would also like to thank the U.S. Department of State’s Fulbright program for the funding.

References

- Basser P., et al., “Estimation of the effective self-diffusion tensor from the NMR spin echo,” J. Magn. Reson., Ser. B 103, 247–254 (1994). 10.1006/jmrb.1994.1037

- Pierpaoli C., Jezzard P., Basser P., Barnett A., and Di Chiro G., “Diffusion tensor MR imaging of the human brain,” Radiology 201, 637–648 (1996).

- Alexander A., Hasan K., Lazar M., Tsuruda J., and Parker D., “Analysis of partial volume effects in diffusion-tensor MRI,” Magn. Reson. Med. 45, 770–780 (2001). 10.1002/mrm.v45:5

- Tuch D., Reese T., Wiegell M., Makris N., Belliveau J., and Wedeen V., “High angular resolution diffusion imaging reveals intravoxel white matter fiber heterogeneity,” Magn. Reson. Med. 48, 577–582 (2002). 10.1002/mrm.v48:4

- Frank L., “Anisotropy in high angular resolution diffusion-weighted MRI,” Magn. Reson. Med. 45, 935–939 (2001). 10.1002/mrm.v45:6

- Ozarslan E. and Mareci T., “Generalized diffusion tensor imaging and analytical relationships between diffusion tensor imaging and high angular resolution diffusion imaging,” Magn. Reson. Med. 50, 955–965 (2003). 10.1002/mrm.v50:5

- Frank L., “Characterization of anisotropy in high angular resolution diffusion-weighted MRI,” Magn. Reson. Med. 47, 1083–1099 (2002). 10.1002/mrm.v47:6

- Alexander D., Barker G., and Arridge S., “Detection and modeling of non-Gaussian apparent diffusion coefficient profiles in human brain data,” Magn. Reson. Med. 48, 331–340 (2002). 10.1002/mrm.v48:2

- Barmpoutis A., Jian B., Vemuri B., and Shepherd T., “Symmetric Positive 4th Order Tensors & Their Estimation from Diffusion Weighted MRI,” in MICCAI 2007, Lect. Notes Comput. Sci. 4584, 308–319 (2007). 10.1007/978-3-540-73273-0_26

- Ghosh A., Descoteaux M., and Deriche R., “Riemannian Framework for Estimating Symmetric Positive Definite 4th Order Diffusion Tensors,” in MICCAI 2008, Lecture Notes in Computer Science (Springer, New York, 2008), Vol. 5241, pp. 858–865.

- Behrens T., Woolrich M., Jenkinson M., Johansen-Berg H., Nunes R., Clare S., Matthews P., Brady J., and Smith S., “Characterization and propagation of uncertainty in diffusion-weighted MR imaging,” Magn. Reson. Med. 50, 1077–1088 (2003). 10.1002/mrm.v50:5

- Tournier J., Calamante F., Gadian D., and Connelly A., “Direct estimation of the fiber orientation density function from diffusion-weighted MRI data using spherical deconvolution,” Neuroimage 23, 1176–1185 (2004). 10.1016/j.neuroimage.2004.07.037

- Özarslan E., Shepherd T., Vemuri B., Blackband S., and Mareci T., “Resolution of complex tissue microarchitecture using the diffusion orientation transform (DOT),” Neuroimage 31, 1086–1103 (2006). 10.1016/j.neuroimage.2006.01.024

- Jansons K. and Alexander D., “Persistent angular structure: New insights from diffusion magnetic resonance imaging data,” Inverse Probl. 19, 1031–1046 (2003). 10.1088/0266-5611/19/5/303

- Tuch D., “Q-ball imaging,” Magn. Reson. Med. 52, 1358–1372 (2004). 10.1002/mrm.v52:6

- Descoteaux M., Angelino E., Fitzgibbons S., and Deriche R., “Regularized, fast, and robust analytical Q-ball imaging,” Magn. Reson. Med. 58, 497–510 (2007). 10.1002/mrm.v58:3

- Anderson A., “Measurement of fiber orientation distributions using high angular resolution diffusion imaging,” Magn. Reson. Med. 54, 1194–1206 (2005). 10.1002/mrm.v54:5

- Hess C., Mukherjee P., Han E., Xu D., and Vigneron D., “Q-ball reconstruction of multimodal fiber orientations using the spherical harmonic basis,” Magn. Reson. Med. 56, 104–117 (2006). 10.1002/mrm.v56:1

- Descoteaux M., Deriche R., Knosche T., and Anwander A., “Deterministic and probabilistic tractography based on complex fibre orientation distributions,” IEEE Trans. Med. Imaging 28, 269–286 (2009). 10.1109/TMI.2008.2004424

- Pennec X., Fillard P., and Ayache N., “A Riemannian framework for tensor computing,” Int. J. Comput. Vis. 66, 41–66 (2006). 10.1007/s11263-005-3222-z

- Descoteaux M., Wiest-Daessle N., Prima S., Barillot C., and Deriche R., “Impact of rician adapted non-local means filtering on HARDI,” in MICCAI 2008, Lecture Notes in Computer Science (Springer, New York, 2008), Vol. 5242, pp. 122–129.

- He L. and Greenshields I., “A Nonlocal maximum likelihood estimation method for rician noise reduction in MR images,” IEEE Trans. Med. Imaging 28, 165–172 (2009). 10.1109/TMI.2008.927338

- Tristán-Vega A. and Aja-Fernández S., “DWI filtering using joint information for DTI and HARDI,” Med. Image Anal. 14, 205–218 (2010). 10.1016/j.media.2009.11.001

- Wirestam R., Bibic A., Latt J., Brockstedt S., and Stahlberg F., “Denoising of complex MRI data by wavelet-domain filtering: Application to high-b-value diffusion-weighted imaging,” Magn. Reson. Med. 56, 1114–1120 (2006). 10.1002/mrm.v56:5

- Kim Y., Thompson P., Toga A., Vese L., and Zhan L., “HARDI denoising: Variational regularization of the spherical apparent diffusion coefficient sADC,” in IPMI 2009, Lect. Notes Comput. Sci. 5636, 515–527 (2009). 10.1007/978-3-642-02498-6_43

- McGraw T., Vemuri B., Ozarslan E., Chen Y., and Mareci T., “Variational denoising of diffusion weighted MRI,” Inverse Probl. Imaging 3, 625–648 (2009). 10.3934/ipi.2009.3.625

- Rudin L., Osher S., and Fatemi E., “Nonlinear total variation based noise removal algorithms,” Physica D 60, 259–268 (1992). 10.1016/0167-2789(92)90242-F

- Tschumperle D. and Deriche R., “Diffusion PDEs on vector-valued images,” IEEE Signal Process. Mag. 19, 16–25 (2002). 10.1109/MSP.2002.1028349

- Chen B. and Hsu E., “Noise removal in magnetic resonance diffusion tensor imaging,” Magn. Reson. Med. 54, 393–407 (2005). 10.1002/mrm.v54:2

- McGraw T., Vemuri B., Chen Y., Rao M., and Mareci T., “DT-MRI denoising and neuronal fiber tracking,” Med. Image Anal. 8, 95–111 (2004). 10.1016/j.media.2003.12.001

- Assaf Y., Freidlin R., Rohde G., and Basser P., “New modeling and experimental framework to characterize hindered and restricted water diffusion in brain white matter,” Magn. Reson. Med. 52, 965–978 (2004). 10.1002/mrm.v52:5

- Behrens T., Berg H., Jbabdi S., Rushworth M., and Woolrich M., “Probabilistic diffusion tractography with multiple fibre orientations: what can we gain?,” Neuroimage 34, 144–155 (2007). 10.1016/j.neuroimage.2006.09.018

- Jian B., Vemuri B., Özarslan E., Carney P., and Mareci T., “A novel tensor distribution model for the diffusion-weighted MR signal,” NeuroImage 37, 164–176 (2007). 10.1016/j.neuroimage.2007.03.074

- Alexander D., “Maximum entropy spherical deconvolution for diffusion MRI,” Inf. Process. Med. Imaging, 76–87 (2005). 10.1007/11505730_7

- Iglesias J., Thompson P., and Tu Z., “A spatially variant mixture model for diffusion weighted mri: application to image denoising,” in MICCAI Workshop on Probabilistic Models for Medical Image Analysis, 2009, pp. 103–114.

- Nikou C., Galatsanos N., and Likas A., “A class-adaptive spatially variant mixture model for image segmentation,” IEEE Trans. Image Process. 16, 1121–1130 (2007). 10.1109/TIP.2007.891771

- Sfikas G., Nikou C., Galatsanos N., and Heinrich C., “Spatially varying mixtures incorporating line processes for image segmentation,” J. Math. Imaging Vision 36, 91–110 (2010). 10.1007/s10851-009-0174-x

- Rivera M., Ocegueda O., and Marroquin J., “Entropy-controlled quadratic Markov measure field models for efficient image segmentation,” IEEE Trans. Image Process. 16, 3047–3057 (2007). 10.1109/TIP.2007.909384

- Peng Z., Wee W., and Lee J., “Automatic segmentation of MR brain images using spatial-varying Gaussian mixture and Markov random field approach,” in CVPR Workshop, CVPRW, 2006, pp. 80–87. 10.1109/CVPRW.2006.38

- Martin-Fernandez M., Westin C., and Alberola-Lopez C., “3D Bayesian Regularization of Diffusion Tensor MRI Using Multivariate Gaussian Markov Random Fields,” in MICCAI 2004, Lect. Notes Comput. Sci. 3216, 351–359 (2004). 10.1007/978-3-540-30135-6_43

- King M., Gadian D., and Clark C., “A random effects modelling approach to the crossing-fibre problem in tractography,” Neuroimage 44, 753–768 (2009). 10.1016/j.neuroimage.2008.09.058

- Aja-Fernandez S., Alberola-Lopez C., and Westin C., “Signal LMMSE estimation from multiple samples in MRI and DT-MRI,” in MICCAI 2007, Lect. Notes Comput. Sci. 4792, 368–375 (2007). 10.1007/978-3-540-75759-7_45

- Smith S., “Fast robust automated brain extraction,” Hum. Brain Mapp. 17, 143–155 (2002). 10.1002/hbm.v17:3

- Descoteaux M., Angelino E., Fitzgibbons S., and Deriche R., “Apparent diffusion coefficients from high angular resolution diffusion imaging: Estimation and applications,” Magn. Reson. Med. 56, 395–410 (2006). 10.1002/mrm.v56:2

- Jones D., Horsfield M., and Simmons A., “Optimal Strategies for Measuring Diffusion in Anisotropic Systems by Magnetic Resonance Imaging,” Magn. Reson. Med. 42, 515–525 (1999).

- Stejskal E. and Tanner J., “Spin diffusion measurements: Spin echoes in the presence of a time-dependent field gradient,” J. Chem. Phys. 42, 288–292 (1965). 10.1063/1.1695690

- Sanjay-Gopal S. and Hebert T., “Bayesian pixel classification using spatially variant finite mixtures and the generalized EM algorithm,” IEEE Trans. Image Process. 7, 1014–1028 (1998). 10.1109/83.701161

- Oliver J., Baxter R., and Wallace C., “Unsupervised learning using mml,” in Proceedings of ICML 96 (Morgan Kaufmann Publishers, 1996), pp. 364–372.

- Figueiredo M. and Jain A., “Unsupervised Learning of Finite Mixture Models,” IEEE Trans. Pattern Anal. Mach. Intell. 24, 381–396 (2002). 10.1109/34.990138

- Dinov I., “Expectation maximization and mixture modeling tutorial,” Statistics Online Computational Resource (2008), http://repositories.cdlib.org/socr/EM_MM.

- Besag J., “On the statistical analysis of dirty pictures,” J. R. Stat. Soc. 48, 259–302 (1986).

- Zymnis A., Kim S., Skaf J., Parente M., and Boyd S., “Hyperspectral image unmixing via alternating projected subgradients,” in Proceedings of ACSSC 2007, pp. 1164–1168. 10.1109/ACSSC.2007.4487406

- Nowak R., “Wavelet-based Rician noise removal for magnetic resonance imaging,” IEEE Trans. Image Process. 8, 1408–1419 (1999). 10.1109/83.791966

- Sijbers J., Poot D., Dekker A., and Pintjens W., “Automatic estimation of the noise variance from the histogram of a magnetic resonance image,” Phys. Med. Biol. 52, 1335–1348 (2007). 10.1088/0031-9155/52/5/009

- Perona P. and Malik J., “Scale-space and edge detection using anisotropic diffusion,” IEEE Trans. Pattern Anal. Mach. Intell. 12, 629–639 (1990). 10.1109/34.56205

- Iglesias J. E., Thompson P. M., Liu C. Y., and Tu Z., “Fast Approximate Stochastic Tractography,” Neuroinformatics (accepted for publication).

- Stearns S., “On selecting features for pattern classifiers,” in Proceedings of the 3rd International Joint Conference on Pattern Recognition (Coronado, CA, 1976), pp. 71–75.

- Artaechevarria X., Munoz-Barrutia A., and Ortiz-de Solorzano C., “Combination strategies in multi-atlas image segmentation: Application to brain MR Data,” IEEE Trans. Med. Imaging 28, 1266–1277 (2009). 10.1109/TMI.2009.2014372

- Balls G. and Frank L., “A simulation environment for diffusion weighted MR experiments in complex media,” Magn. Reson. Med. 62, 771–778 (2009). 10.1002/mrm.22033

- Close T., Tournier J., Calamante F., Johnston L., Mareels I., and Connelly A., “A software tool to generate simulated white matter structures for the assessment of fibre-tracking algorithms,” Neuroimage 47, 1288–1300 (2009). 10.1016/j.neuroimage.2009.03.077