Abstract

Introduction: While preparing a graduate survey in medical education in 2008, we realized that no existing instrument was suitable for evaluating whether the learning outcomes outlined in the Medical Licensure Act (ÄAppO) are met. We therefore developed the Freiburg Questionnaire to Assess Competencies in Medicine (Freiburger Fragebogen zur Erfassung von Kompetenzen in der Medizin, FKM)¹, which has since been revised and extended several times.

Currently, the FKM comprises 45 items assigned to nine domains that largely correspond to the CanMEDS roles: medical expertise, communication, teamwork, health and prevention, management, professionalism, learning, scholarship, and personal competencies.

Methods: To test the reliability and validity of the questionnaire, we have repeatedly surveyed medical students and residents since May 2008. In this article, we report the results of a cross-sectional study with 698 medical students from the preclinical and clinical years, as well as the results of a survey of 514 residents who were up to two years into their residency.

Results and conclusions: The results show that the scales of the FKM are reliable (Cronbach’s α between .68 and .97). Significant differences in means between selected groups of students support the measure’s construct validity. Furthermore, there is evidence that the FKM might be used as a screening tool, e.g., in graduate surveys, to identify weaknesses in the medical curriculum.

Keywords: competency based education, medical competencies, graduate survey, assessment of competencies, outcome based education

Abstract

Introduction: While preparing a graduate survey in human medicine, it became apparent that there was no instrument to assess whether the teaching and learning objectives listed in the ÄAppO are achieved. For this reason, the Freiburg Questionnaire to Assess Competencies in Medicine (FKM)¹ was developed by a working group at the Faculty of Medicine of the University of Freiburg. Since then, the FKM has been revised and extended several times. It currently comprises 45 items assigned to nine competence domains, which essentially correspond to the CanMEDS roles.

Methods: To examine the reliability and validity of the questionnaire, students and residents have been surveyed repeatedly since May 2008. This article reports the results of a cross-sectional study in which 698 students from the first and second stages of medical school completed the FKM. It also describes the results of 514 residents with up to two years of professional experience who completed the FKM as part of a graduate survey.

Results and conclusions: The results to date show that the individual competence scales of the FKM are sufficiently reliable (Cronbach's α=.68 to .97). Differences in means between selected groups of students support the assumption that the instrument has construct validity. Furthermore, there is evidence that the FKM can be used as a screening instrument, at least from the second stage of medical school onwards and in graduate studies, to identify weaknesses in medical education.

Introduction

Since the 1980s, it has been increasingly accepted at all levels of the educational system that the quality of teaching and learning processes must ultimately be evaluated by analyzing results and outcomes. The acquisition of competencies for solving professional as well as everyday problems is considered the most important outcome in this regard [1], [2].

This paradigm shift can also be observed in medicine, although primarily in English-speaking countries [3]. The CanMEDS project of the Royal College of Physicians and Surgeons of Canada [4] is probably the best-known example. This framework conceptualizes the professional activities of physicians as seven roles, each associated with a defined set of competencies and obligations. Other frameworks with similar competency profiles have been put forward by the Accreditation Council for Graduate Medical Education (ACGME, http://www.acgme.org/outcome) and by Brown University [5]. Although no comparable framework exists for Germany, Öchsner and Forster [6] pointed out that the latest (2002) version of the Medical Licensure Act (Approbationsordnung für Ärzte, ÄAppO), which regulates a number of important aspects of German medical education, implicitly defines similar roles that, taken together, might be used to describe the competencies of medical graduates. Based on these considerations, we developed a questionnaire to assess these competencies, the “Freiburg Questionnaire to Assess Competencies in Medicine” (Freiburger Fragebogen zur Erfassung von Kompetenzen in der Medizin, FKM).

Competencies

Numerous attempts have been made to define “competence”. A widely acknowledged definition by Weinert [7], [8] describes competencies as

“…individually available or learnable cognitive abilities and skills to solve certain problems, associated with the motivational, volitional, and social willingness and abilities to use this problem solving capability in diverse situations successfully and responsibly”

(translation by the authors). Thus, competencies are understood as complex, context-specific dispositions that are acquired through learning and are needed to deal successfully with tasks and situations.

With regard to medical competencies, there are just as many definitions. Perhaps the most substantial one has been put forward by Epstein and Hundert [9], who propose that “professional competence is the habitual and judicious use of communication, knowledge, technical skills, clinical reasoning, emotions, values, and reflection in daily practice for the benefit of the individual and community being served.” This definition emphasizes that, in medical practice, competencies manifest themselves in various ways, mostly without conscious reflection (although they can be made conscious, at least partly).

Construction of the Freiburg Questionnaire to Assess Competencies in Medicine (FKM)

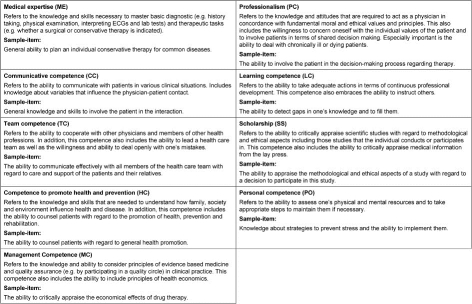

Because the Medical Licensure Act (ÄAppO) defines the educational objectives, knowledge, abilities, and skills that a medical graduate must master, it served as the conceptual basis for developing the questionnaire. We also incorporated the work of Öchsner and Forster [6], who used the content of sections 1 and 28 of the ÄAppO to develop a competency profile for medical graduates. As a first step, our working group at Freiburg Medical School added “scholarship” as a further domain of competence. Items were then developed for every domain. In interviews with final-year students, we checked whether these items were comprehensible; the students’ suggestions were integrated into the first version of the questionnaire, which consisted of 36 items in seven domains of competence. In a pilot study with 48 final-year students and 42 residents, the reliabilities of the scales corresponding to the seven domains were satisfactory (Cronbach’s α ranging from .68 to .88). Nevertheless, more items were added to increase the reliability of some of the scales. To complete the content of the questionnaire, we also added two further domains of competence, “communicative competence” and “personal competence”. Thus, in its current (2nd) version, the FKM consists of 45 items. The domains of competence are listed in Table 1 (Tab. 1) with corresponding sample items.

Table 1. The nine competence domains of the FKM and corresponding sample items (scale abbreviations).

Structure of the FKM

Each item of the FKM is rated twice: first, with regard to the respondent’s current level of competence (or, as a retrospective rating, the respondent’s level of competence at the time of graduation), and second, with regard to the level of competence that respondents think their job requires. While the item content is identical across target groups (e.g., students from different academic years, residents), the rating instructions differ between groups.

For students the instruction reads:

“To what extent do you have the following competencies at your disposal?”

“To what extent will your future job require the following competencies?”

All items are rated on a five-point Likert scale. For both the current level of competence and the level of competence demanded by the job, the endpoints of the scale are labeled 1 “very little” and 5 “very much”².

For residents, another target group of the FKM, the instructions are as follows:

“To what extent did you have the following medical competencies at your disposal at the time of your graduation?”

“To what extent are the following abilities/competencies required by your current job?”

Again, five-point Likert scales were used for this version of the questionnaire (1 “not at all”, 5 “very much”).

Studies

To determine the reliability and validity of the FKM, we have conducted several studies with different target groups (e.g., students from different academic years, residents) since May 2008. In this article, we report the results of two of these studies.

Methods

In both studies, item analyses were computed for each scale, using Cronbach’s α to determine internal consistency. With regard to validity, we were specifically interested in the construct validity of the FKM. In this approach, a construct is embedded in a network of conceptual and empirical hypotheses, which are then tested empirically [10]. Following this approach, we analyzed whether medical students from different academic years differed in their ratings of their own level of competencies.
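As an illustration of the internal-consistency index used in both studies, the sketch below computes Cronbach’s α from a respondents × items matrix with the usual formula α = k/(k − 1) · (1 − Σσ²ᵢ / σ²ₓ), where k is the number of items, σ²ᵢ the variance of item i, and σ²ₓ the variance of the summed scale score. It is a minimal Python sketch assuming complete data (no missing responses); the function name `cronbach_alpha` is our own and is not part of SPSS or of the FKM materials.

```python
from statistics import variance
from typing import List, Sequence


def cronbach_alpha(responses: Sequence[Sequence[float]]) -> float:
    """Cronbach's alpha for one scale (hypothetical helper, not SPSS code).

    `responses` holds one row per respondent and one column per item,
    e.g. Likert ratings from 1 to 5. Missing values are not handled.
    """
    k = len(responses[0])  # number of items in the scale
    if k < 2:
        raise ValueError("alpha requires at least two items")
    # Sample variance of each item across respondents
    item_vars: List[float] = [variance([row[i] for row in responses]) for i in range(k)]
    # Sample variance of the summed scale score across respondents
    total_var = variance([sum(row) for row in responses])
    return k / (k - 1) * (1 - sum(item_vars) / total_var)


# Three respondents answering a two-item scale
print(round(cronbach_alpha([[1, 2], [2, 1], [3, 3]]), 3))  # 0.667
```

Because item and total variances enter as a ratio, using sample or population variances gives the same α, as long as one convention is used throughout.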

Differences between groups of students were tested by means of t-tests and ANOVAs, and post hoc comparisons were performed by means of Scheffé tests. For the ANOVAs, η² was used as the effect size measure; it represents the proportion of the total variance explained by the factor of interest.
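To make the effect size concrete, the following sketch computes the F statistic and η² = SS_between / SS_total for a one-way ANOVA, e.g. a scale score grouped by academic year. It is a minimal illustration written for this text, not the SPSS procedure the authors used; it returns no p-value because Python's standard library has no F distribution, and the function name is hypothetical.

```python
from statistics import mean
from typing import Sequence, Tuple


def one_way_anova(groups: Sequence[Sequence[float]]) -> Tuple[float, float]:
    """Return (F, eta_squared) for a one-way ANOVA (illustrative sketch)."""
    all_values = [x for g in groups for x in g]
    grand_mean = mean(all_values)
    # Between-group sum of squares: spread of group means around the grand mean
    ss_between = sum(len(g) * (mean(g) - grand_mean) ** 2 for g in groups)
    # Within-group sum of squares: spread of scores around their own group mean
    ss_within = sum((x - mean(g)) ** 2 for g in groups for x in g)
    ss_total = ss_between + ss_within
    df_between = len(groups) - 1
    df_within = len(all_values) - len(groups)
    f_stat = (ss_between / df_between) / (ss_within / df_within)
    # Proportion of total variance explained by the grouping factor
    eta_squared = ss_between / ss_total
    return f_stat, eta_squared


f, eta2 = one_way_anova([[1, 2, 3], [4, 5, 6]])
print(f, round(eta2, 3))  # 13.5 0.771
```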

All statistical analyses were performed with SPSS, version 17.0.

Study 1: Students

Sample: During the summer term 2008, students from the first to the fourth academic year at Freiburg Medical School were asked to fill out the FKM (version 1) as part of the regular end-of-term evaluation.

A total of 698 students participated. The response rate was 75% among first-year and 59% among second-year students; in the third year (the first clinical year) it was 43%, and in the fourth year 33%. About 60% of the participants were women; 13% had completed vocational training in health care (e.g., as nurses or paramedics) prior to their medical studies, and 3% had completed vocational training outside the health care system.

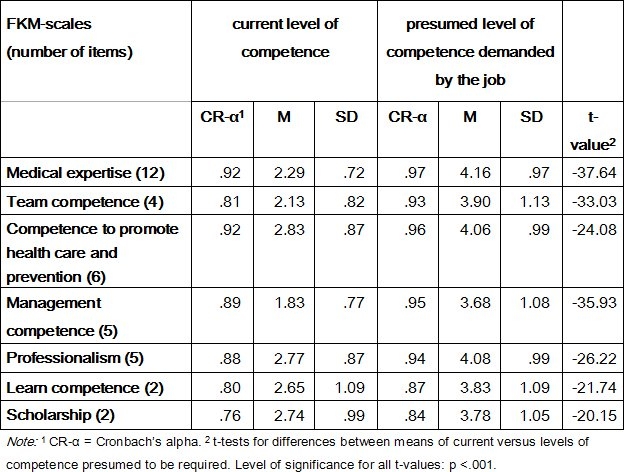

Results: Based on the data of the total sample, we calculated coefficients of internal consistency. Values of Cronbach’s α ranged from .76 to .92 for the FKM-scales addressing the current level of competencies (see Table 2 (Tab. 2)) and from .84 to .97 for the scales measuring the presumed level of competencies demanded by the job. All scales addressing the current level of competencies were strongly intercorrelated, with correlation coefficients between r=.49 and r=.76 (median=.63). The intercorrelations of the FKM-scales addressing the presumed level of competence demanded by the job were also significant, ranging from r=.64 to r=.91 (median=.79). Further inspection revealed that, for both types of scales, the intercorrelations tend to decrease with increasing academic year: for years 1 to 4, the medians of the intercorrelations are .61, .59, .41, and .52 for the scales addressing the current level of competencies and .80, .85, .73, and .70 for the scales addressing the demanded level of competencies. In line with this trend, item-level factor analyses show that more factors are needed to explain the item intercorrelations in the groups of more senior students. For students in academic years 1 through 4, the number of eigenvalues greater than 1 is 6, 6, 9, and 9 for the items addressing the current level of competencies and 3, 3, 5, and 7 for the items addressing the demanded level of competencies.
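Counting eigenvalues greater than 1 is the Kaiser criterion. Assuming, as is common, that it is applied to the eigenvalues of the item correlation matrix, the dependency-free sketch below (our own illustration, not the authors' SPSS factor analysis) computes those eigenvalues with the classical cyclic Jacobi rotation method and counts the ones above 1. The function names and tolerance settings are assumptions chosen for the example.

```python
import math
from typing import List, Sequence


def jacobi_eigenvalues(matrix: Sequence[Sequence[float]],
                       tol: float = 1e-12, max_sweeps: int = 100) -> List[float]:
    """Eigenvalues of a symmetric matrix via cyclic Jacobi rotations."""
    a = [list(row) for row in matrix]  # work on a copy
    n = len(a)
    for _ in range(max_sweeps):
        off = sum(a[i][j] ** 2 for i in range(n) for j in range(n) if i != j)
        if off < tol:  # off-diagonal mass is negligible: diagonal holds eigenvalues
            break
        for p in range(n - 1):
            for q in range(p + 1, n):
                if abs(a[p][q]) < 1e-15:
                    continue
                # Rotation chosen so that the new a[p][q] becomes zero
                theta = (a[q][q] - a[p][p]) / (2 * a[p][q])
                t = math.copysign(1.0, theta) / (abs(theta) + math.sqrt(theta * theta + 1))
                c = 1 / math.sqrt(t * t + 1)
                s = t * c
                for k in range(n):  # update columns p and q (A <- A P)
                    akp, akq = a[k][p], a[k][q]
                    a[k][p] = c * akp - s * akq
                    a[k][q] = s * akp + c * akq
                for k in range(n):  # update rows p and q (A <- P^T A)
                    apk, aqk = a[p][k], a[q][k]
                    a[p][k] = c * apk - s * aqk
                    a[q][k] = s * apk + c * aqk
    return [a[i][i] for i in range(n)]


def kaiser_factor_count(corr: Sequence[Sequence[float]]) -> int:
    """Number of eigenvalues greater than 1 (Kaiser criterion)."""
    return sum(1 for ev in jacobi_eigenvalues(corr) if ev > 1)


# Three items that all correlate .8: eigenvalues 2.6, 0.2, 0.2
corr = [[1.0, 0.8, 0.8], [0.8, 1.0, 0.8], [0.8, 0.8, 1.0]]
print(kaiser_factor_count(corr))  # 1
```

The more strongly items intercorrelate, the more variance piles onto the first eigenvalue and the fewer eigenvalues exceed 1. This is why more differentiated ratings among senior students show up as a larger factor count.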

Table 2. Characteristics of the FKM-scales (version 1) referring to the ratings of the current level of competence and the presumed level of competence demanded by the job (546≤n≤589 students in academic year 1 to 4).

Further analyses (see Table 2 (Tab. 2)) reveal significant differences in all competence domains between the ratings of the current level of competencies and the presumed level of competencies demanded by the job: the means of the scales addressing the presumed level of demanded competencies are significantly higher than the means of the scales addressing the current level of competencies.

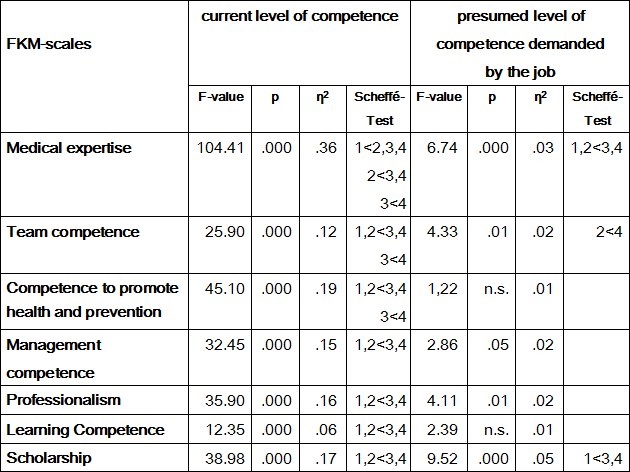

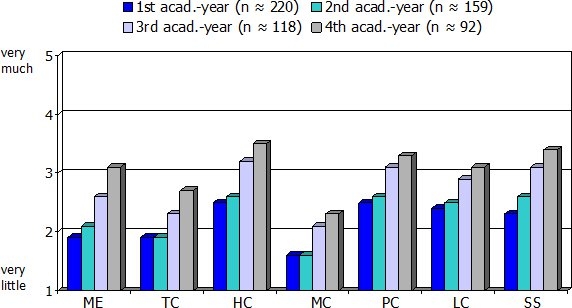

In addition, there are significant differences in the mean competence ratings between students from different academic years (see Table 3 (Tab. 3)). Figure 1 (Fig. 1) shows the means of the FKM-scales for the ratings of the current level of competencies by academic year. For medical expertise, the Scheffé tests revealed significant differences between all four groups of students. For the other competence domains, the Scheffé tests revealed significant differences between the preclinical years (years 1 and 2) and the clinical years (years 3 and 4): students in the preclinical years rate their current level of competencies significantly lower than students in the clinical years.
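The logic of these post hoc comparisons can be sketched as pairwise Scheffé tests: for groups i and j, the statistic (x̄ᵢ − x̄ⱼ)² / (MS_within · (1/nᵢ + 1/nⱼ)) is compared against (k − 1) times the critical F value with k − 1 and N − k degrees of freedom. Because Python's standard library offers no F quantile function, the critical value is passed in by the caller (looked up in a table or computed elsewhere). This is a minimal sketch, not the SPSS implementation; the function name and the example data are hypothetical.

```python
from itertools import combinations
from statistics import mean
from typing import Dict, Sequence, Tuple


def scheffe_pairwise(groups: Sequence[Sequence[float]],
                     f_crit: float) -> Dict[Tuple[int, int], bool]:
    """Pairwise Scheffé tests; True marks a significant difference.

    `f_crit` is the critical F value for (k - 1, N - k) degrees of freedom
    at the chosen alpha level, supplied by the caller.
    """
    k = len(groups)
    n_total = sum(len(g) for g in groups)
    means = [mean(g) for g in groups]
    # Pooled within-group mean square (error term of the one-way ANOVA)
    ss_within = sum((x - m) ** 2 for g, m in zip(groups, means) for x in g)
    ms_within = ss_within / (n_total - k)
    results = {}
    for i, j in combinations(range(k), 2):
        diff = means[i] - means[j]
        f_pair = diff ** 2 / (ms_within * (1 / len(groups[i]) + 1 / len(groups[j])))
        results[(i, j)] = f_pair > (k - 1) * f_crit
    return results


# Three groups of three scores; F(.05; 2, 6) is approximately 5.14
print(scheffe_pairwise([[1, 2, 3], [2, 3, 4], [7, 8, 9]], f_crit=5.14))
# {(0, 1): False, (0, 2): True, (1, 2): True}
```

Scheffé's criterion is conservative because it protects all possible contrasts, not only pairwise ones. Small mean differences between adjacent years may therefore fail to reach significance even when the overall ANOVA is significant.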

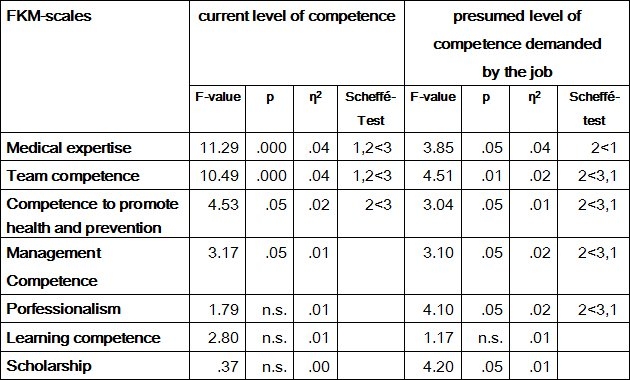

Table 3. Analyses of variance for FKM-scales by the factor “academic year” (1st to 4th). (Sample: 552≤n≤589 students in academic year 1 to 4).

Figure 1. Means of FKM-scales referring to the ratings of the current level of competencies as a function of academic year. (For scale abbreviations, see Table 1.)

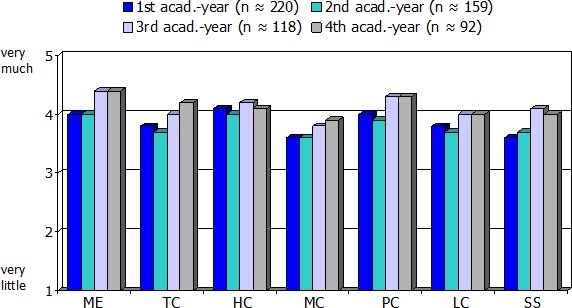

With regard to the presumed level of competencies demanded by the job, the differences are less pronounced (see Table 3 (Tab. 3) and Figure 2 (Fig. 2)). Students in the preclinical years rate the demanded level of medical expertise significantly lower than students in their clinical years. Further differences exist for team competence: ratings from students in their second year are significantly lower than those from students in their fourth year. In addition, there are significant differences with regard to scholarship: students in the clinical years presume a higher demand in this domain than their peers in the first preclinical year.

Figure 2. Means of FKM-scales referring to the ratings of the presumed level of competencies demanded by the job as a function of academic year. (For scale abbreviations, see Table 1.)

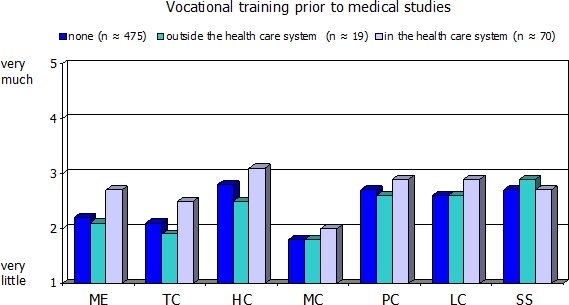

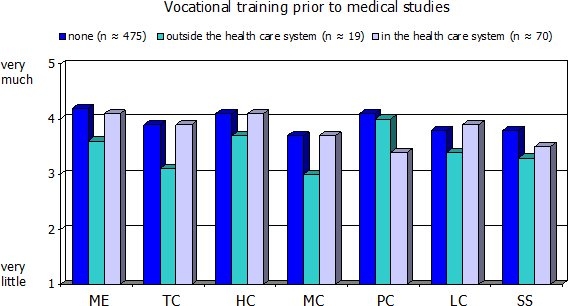

Finally (see Table 4 (Tab. 4), Figures 3 (Fig. 3) and 4 (Fig. 4)), students who had completed vocational training in the health care system prior to their medical studies rated their current medical expertise, team competence, and competence to promote health and prevention significantly higher than students with no such training or with a professional background outside health care. Moreover, in four of the seven domains, students with prior vocational training in health care and students with no such training rated the presumed level of competencies demanded by the job significantly higher than students with professional training outside the health care system. However, the respective η² coefficients clearly show that academic year is of much greater relevance than prior professional experience.

Table 4. Analysis of variance for FKM-scales by the factor “professional training” (1=none, 2=outside the health care system, 3=in the health care system). (Sample: 553≤n≤580 students in academic year 1 to 4).

Figure 3. Means of FKM-scales referring to the ratings of the current level of competencies as a function of prior vocational training. (For scale abbreviations, see Table 1.)

Figure 4. Means of FKM-scales referring to the ratings of the presumed level of competencies demanded by the job as a function of prior vocational training. (For scale abbreviations, see Table 1.)

Discussion: The reliability of the FKM-scales is satisfactory to very good. Since current conceptual frameworks of medical competencies and physicians’ roles (such as CanMEDS) presume that these competencies and roles share common elements, we expected low to medium intercorrelations between the FKM-scales. However, the actual intercorrelations, both among the scales addressing the current level of competencies and among those addressing the presumed level of competencies demanded by the job, are in part much higher than expected. One possible explanation is a response set: owing to a lack of experience [11], [12], [13], respondents might perceive the differences between the domains of competence as rather small, which might lead them to give concordant ratings across the scales. This might hold especially true for the presumed level of competencies demanded by the job. The observation that the intercorrelations of the scales for both current and demanded competencies decline with the length of medical studies may be interpreted as supporting this explanation.

The differences in the ratings of the current level of competencies correspond to the anticipated course of study progress. This result may be taken as supporting the validity of the measure if one is willing to assume that medical education actually leads to such an increase in competence. However, a response bias is also plausible: merely having spent considerable time in medical school may lead respondents to assume that they have acquired more competencies. Further results supporting the construct validity of the FKM concern the self-assessments of participants who completed vocational training in the health care system prior to their medical studies. These students rate their current level of competencies significantly higher precisely in those domains (medical expertise, team competence, competence in promoting health and prevention) in which a higher level can be expected on the basis of prior professional experience.

Study 2: Residents

Sample: In cooperation with the International Centre for Higher Education Research (INCHER), a graduate survey was conducted at many German universities during the winter term 2009/2010 to learn more about graduates’ vocational situation up to two years after graduation and about how they evaluate their studies in retrospect. All medical schools in the federal state of Baden-Württemberg participated in this survey. Of the 1228 graduates of the academic year 2008 who were contacted, 514 (42%) completed the extensive questionnaire (61% women, 38% men, 1% provided no information on their sex). On average, the participants were 29.5 years old (SD=3.6) and had studied medicine for 13.0 terms (SD=2.2). More than 90% of the participants had already been employed for up to two years.

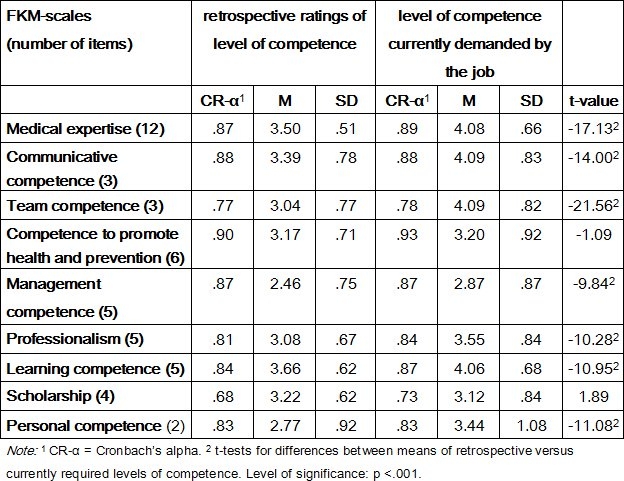

Results: The reliability of the FKM-scales (version 2) requesting a retrospective rating of the level of competencies at the time of graduation is satisfactory (Cronbach’s α between .68 and .90, see Table 5 (Tab. 5)). The respective values for the FKM-scales referring to the level of competencies currently demanded by the job vary between .73 and .93. The intercorrelations of the scales referring to the retrospective ratings of competencies at the time of graduation are all significant, with coefficients between r=.34 and r=.60 (median=.45). The scales referring to the level of competencies currently demanded by the job also correlate significantly, with coefficients between r=.20 and r=.67 (median=.43).
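The summary statistics reported for the scale intercorrelations (range and median of all pairwise coefficients) can be reproduced from scale scores with a few lines of code. The sketch below assumes one list of scale scores per scale, aligned by respondent and without missing values; the helper names and example scores are hypothetical, and significance testing of the coefficients is omitted.

```python
import math
from itertools import combinations
from statistics import median
from typing import Dict, List, Tuple


def pearson_r(x: List[float], y: List[float]) -> float:
    """Pearson product-moment correlation of two equally long lists."""
    mx, my = sum(x) / len(x), sum(y) / len(y)
    sxy = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sxx = sum((a - mx) ** 2 for a in x)
    syy = sum((b - my) ** 2 for b in y)
    return sxy / math.sqrt(sxx * syy)


def intercorrelation_summary(scales: Dict[str, List[float]]) -> Tuple[float, float, float]:
    """Return (min, max, median) of all pairwise scale intercorrelations."""
    rs = [pearson_r(scales[a], scales[b]) for a, b in combinations(scales, 2)]
    return min(rs), max(rs), median(rs)


# Hypothetical scale scores of five respondents on three scales
scores = {
    "expertise": [2.0, 3.0, 3.5, 4.0, 4.5],
    "communication": [2.5, 3.0, 3.0, 4.5, 4.0],
    "teamwork": [3.0, 2.5, 4.0, 3.5, 4.5],
}
low, high, med = intercorrelation_summary(scores)
print(f"r from {low:.2f} to {high:.2f}, median {med:.2f}")
```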

Table 5. Characteristics of the FKM-scales (version 2) referring to the retrospective ratings of the level of competencies at time of graduation and the current level of competencies demanded by the job. Sample: 422≤n≤471 graduates.

As t-tests for dependent samples reveal (see Table 5 (Tab. 5)), the retrospective ratings of the level of competencies at the time of graduation differ from the ratings of the level of competencies currently demanded by the job in seven of the nine domains; in each of these seven domains, the demanded level is rated significantly higher than the level at the time of graduation. The largest differences, in terms of “standardized response means” (SRM, i.e., the difference of the means divided by the standard deviation of the difference scores), are found in the domains of medical expertise (SRM=-.84), communicative competence (SRM=-.68), and team competence (SRM=-1.04). In the domains of competence to promote health and prevention and of scholarship, no significant differences were found between the retrospective ratings and the ratings of the currently demanded level of competencies.
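The standardized response mean is simple to compute from paired ratings, and the dependent-samples t statistic follows from it as t = SRM · √n with n − 1 degrees of freedom. The sketch below is a minimal illustration assuming complete pairs; the function name and the toy ratings are hypothetical, and p-values (which require the t distribution) are left out.

```python
import math
from statistics import mean, stdev
from typing import List, Tuple


def srm_and_paired_t(at_graduation: List[float],
                     demanded: List[float]) -> Tuple[float, float, int]:
    """Return (SRM, t, df) for paired ratings of the same respondents.

    Differences are taken as graduation minus demanded, so a negative SRM
    means the demanded level is rated higher, as in the values reported above.
    """
    diffs = [g - d for g, d in zip(at_graduation, demanded)]
    n = len(diffs)
    srm = mean(diffs) / stdev(diffs)  # mean difference / SD of the differences
    t = srm * math.sqrt(n)            # equals mean / (SD / sqrt(n))
    return srm, t, n - 1


srm, t, df = srm_and_paired_t([2, 3, 3, 4], [4, 4, 5, 5])
print(round(srm, 2), round(t, 2), df)  # -2.6 -5.2 3
```

Unlike t, the SRM does not grow with sample size. This makes it the more suitable index for comparing the magnitude of discrepancies across domains with slightly different n.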

Discussion: Again, the reliability of the FKM-scales turns out to be satisfactory to very good. The intercorrelations of the scales referring to the retrospective ratings of competencies, and of those referring to the level of competencies currently demanded by the job, show that the scales are not independent of each other. Nevertheless, the size of these correlations by no means implies that the scales measure the same construct. Comparing the retrospective ratings of competencies with the ratings of the currently demanded level, one finds that, aside from medical expertise, the largest differences between the two types of ratings occur in the domains of communicative and team competence. This is in line with other graduate studies that also revealed striking shortcomings in the domain of psychosocial competencies [14]. Thus, some of the discrepancies appear precisely in those domains that are not yet comprehensively implemented in German medical curricula [15].

Summary and conclusions

In this article, we analyzed the reliability and (construct) validity of the Freiburg Questionnaire to Assess Competencies in Medicine (FKM) based on a survey of medical students (first to fourth academic year) and a survey of residents. Our results show that the scales of the FKM are sufficiently reliable. In the cross-sectional analysis of medical students from the first to the fourth academic year, we found relatively high intercorrelations between these scales, with the highest correlations among the scales referring to the assumed level of competence demanded by the job. However, further analyses revealed that these intercorrelations decline as the number of academic years increases. We therefore assume that, with students’ cumulative experience (e.g., through clinical rotations and placements), their ratings of competencies become increasingly differentiated. This hypothesis is supported by the moderate intercorrelations found in the study with residents, who had graduated in the academic year 2008 and had already been employed for about 1.5 to 2 years. Against this background, we recommend using the FKM only from the clinical years onwards.

Initial evidence supporting the construct validity of the FKM comes from the analyses of the competence ratings of students who had completed vocational training in the health care system (e.g., as nurses or paramedics) prior to their studies. As expected, these students rate their medical expertise, team competence, and competence to promote health and prevention higher than their fellow students do. In addition, the results from the study with junior residents reveal that, in retrospect, they perceive deficiencies in medical education not only in the domain of medical expertise but precisely in those domains that are still barely integrated into medical curricula: communicative competence and team competence.

Finally, we would like to stress that the self-rating instrument presented here measures medical competence at a relatively general level. Measuring medical competence in a more differentiated way calls for more specific operationalizations, which would also contribute to a better differentiation of the domains of competence presented here. In any case, further studies on the validity of the FKM are needed. It is especially important to address criterion-related validity, i.e., to determine whether self-ratings of competencies correspond to objective indicators or external ratings of competencies. Insofar as systematic differences exist between the ratings of different domains of competence, we think that the FKM can help detect shortcomings in medical education. This can be achieved either by using the FKM-scales or by analyzing single items, as in the analyses of the INCHER graduate survey [16].

Note

¹ A copy of the questionnaire can be requested from the corresponding author.

² In the graduate survey conducted by INCHER, the Likert scales are labeled 1 “very much” and 5 “not at all”. Following the convention in the psychological literature that higher numbers represent a higher level of the respective variable, we reversed the scoring of these scales for the analyses presented here.
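The reversal described in this note maps each rating x on a scale from 1 to 5 onto 6 − x (more generally, low + high − x). A minimal sketch, with a hypothetical function name:

```python
def reverse_score(rating: int, low: int = 1, high: int = 5) -> int:
    """Reverse a Likert rating so that higher numbers mean 'more'."""
    if not low <= rating <= high:
        raise ValueError(f"rating {rating} outside {low}..{high}")
    return low + high - rating


# INCHER coding 1 = "very much" becomes 5; 5 = "not at all" becomes 1
print([reverse_score(x) for x in [1, 2, 3, 4, 5]])  # [5, 4, 3, 2, 1]
```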

Acknowledgements

We would like to thank the organisations that contributed to this research project: the International Centre for Higher Education Research Kassel (INCHER; http://www.incher.uni-kassel.de) and the Kompetenznetz “Lehre in der Medizin in Baden-Württemberg” (http://www.medizin-bw.de/Kompetenznetz_Lehre.html).

Competing interests

The authors declare that they have no competing interests.

References

- 1. Klieme E, Avenarius H, Blum W, Döbrich P, Gruber H, Prenzel M, Reiss K, Riquarts K, Rost J, Tenorth H-E, Vollmer HJ. Zur Entwicklung nationaler Bildungsstandards – Eine Expertise. Berlin: Bundesministerium für Bildung und Forschung (BMBF); 2003.

- 2. Schaeper H, Briedis K. Kompetenzen von Hochschulabsolventinnen und Hochschulabsolventen, berufliche Anforderungen und Folgerungen für die Hochschulreform. Hannover: HIS; 2004.

- 3. Harden RM, Crosby JR, Davis MH. Outcome based education: Part 1 – An introduction to outcome-based education. AMEE Education Guide No. 14. Med Teach. 1999;21(1):7–14. doi: 10.1080/01421599979969. Available from: http://dx.doi.org/10.1080/01421599979969.

- 4. Frank JR. The CanMEDS 2005 Physician Competency Framework. Ottawa: Royal College of Physicians and Surgeons of Canada; 2005.

- 5. Smith SR, Dollase R. Outcome based education: Part 2 – Planning, implementing and evaluating a competency-based curriculum. AMEE Education Guide No. 14. Med Teach. 1999;21(1):15–22. doi: 10.1080/01421599979978. Available from: http://dx.doi.org/10.1080/01421599979978.

- 6. Öchsner W, Forster J. Approbierte Ärzte – kompetente Ärzte?: Die neue Approbationsordnung für Ärzte als Grundlage für kompetenzbasierte Curricula. GMS Z Med Ausbild. 2005;22(1):Doc04. Available from: http://www.egms.de/static/de/journals/zma/2005-22/zma000004.shtml.

- 7. Weinert FE. Vergleichende Leistungsmessung in Schulen – eine umstrittene Selbstverständlichkeit. In: Weinert FE, editor. Leistungsmessung in Schulen. Weinheim: Beltz; 2002. pp. 17–31.

- 8. Weinert FE. Concept of Competence: A conceptual clarification. In: Rychen DS, Salganik LH, editors. Defining and selecting key competencies. Göttingen: Hogrefe; 2001. pp. 45–65.

- 9. Epstein RM, Hundert EM. Defining and assessing professional competence. JAMA. 2002;287(2):226–235. doi: 10.1001/jama.287.2.226. Available from: http://dx.doi.org/10.1001/jama.287.2.226.

- 10. Messick S. Validity of psychological assessment: Validation of inferences from persons’ responses and performances as scientific inquiry into score meaning. Am Psychol. 1995;50(9):741–749. doi: 10.1037/0003-066X.50.9.741. Available from: http://dx.doi.org/10.1037/0003-066X.50.9.741.

- 11. Colthart I, Bagnall G, Evans A, Allbutt H, Haig A, Illing J, McKinstry B. The effectiveness of self-assessment on the identification of learner needs, learner activity, and impact on clinical practice: BEME Guide no. 10. Med Teach. 2008;30(2):124–145. doi: 10.1080/01421590701881699. Available from: http://dx.doi.org/10.1080/01421590701881699.

- 12. Langendyk V. Not knowing that they do not know: self-assessment accuracy of third-year medical students. Med Educ. 2006;40:173–179. doi: 10.1111/j.1365-2929.2005.02372.x. Available from: http://dx.doi.org/10.1111/j.1365-2929.2005.02372.x.

- 13. Berdie RF. Self claimed and tested knowledge. Educ Psychol Meas. 1971;31:629–635. doi: 10.1177/001316447103100304. Available from: http://dx.doi.org/10.1177/001316447103100304.

- 14. Jungbauer J, Kamenik C, Alfermann D, Brähler E. Wie bewerten angehende Ärzte rückblickend ihr Medizinstudium? Ergebnisse einer Absolventenbefragung. Gesundheitswesen. 2004;66(1):51–56. doi: 10.1055/s-2004-812705. Available from: http://dx.doi.org/10.1055/s-2004-812705.

- 15. Kiesling C, Dieterich A, Fabry G, Hölzer H, Langewitz W, Mühlinghaus I, Pruskil S, Scheffer S, Schubert S. Basler Consensus Statement “Kommunikative und soziale Kompetenzen im Medizinstudium”: Ein Positionspapier des GMA-Ausschusses Kommunikative und soziale Kompetenzen. GMS Z Med Ausbild. 2008;25(2):Doc83. Available from: http://www.egms.de/static/de/journals/zma/2008-25/zma000567.shtml.

- 16. Janson K. Die Sicht der Nachwuchsmediziner auf das Medizinstudium – Ergebnisse einer Absolventenbefragung der Abschlussjahrgänge 2007 und 2008. Kassel: Universität Kassel; 2010.