Abstract

We present a novel technique for three-dimensional (3D) image processing of complex fields. It consists in inverting the coherent image formation by filtering the complex spectrum with a realistic 3D coherent transfer function (CTF) of a high-NA digital holographic microscope. By combining scattering theory and signal processing, the method is demonstrated to yield the reconstruction of a scattering object field. Experimental reconstructions in phase and amplitude are presented under non-design imaging conditions. The suggested technique is best suited for an implementation in high-resolution diffraction tomography based on sample or illumination rotation.

OCIS codes: (090.1995) Digital holography, (110.0180) Microscopy, (100.1830) Deconvolution, (100.6890) Three-dimensional image processing, (180.6900) Three-dimensional microscopy, (100.5070) Phase retrieval

1. Introduction

High-resolution three-dimensional (3D) reconstruction of weakly scattering objects is of great interest for biomedical research. Diffraction tomography has been demonstrated to yield 3D refractive index (RI) distributions of biological samples [1]. For the use of such techniques in the fields of virology and cancerology, a spatial resolution in the sub-200 nm range is required. Consequently, experimental setups must shift to shorter wavelengths, higher numerical apertures (NA) and steeper illumination and/or sample rotation angles. However, the scaling of resolution to high-NA systems introduces strong diffraction and aberration sensitivity [2]. The use of microscope objectives (MO) under non-design conditions, in particular for sample rotation, introduces additional experimental aberrations that may further degrade resolution [3, 4]. Unfortunately, the theory of diffraction tomography cannot correct for these conditions since it is based on direct filtering by an ideal Ewald sphere [5].

We present a novel technique that reconstructs the object scattered field using a high-NA MO under non-design imaging conditions. In contrast to classical reconstruction methods such as filtered back projection [6], our approach is based on inverse filtering by a realistic coherent transfer function (CTF), namely 3D complex deconvolution. We expect this technique to lead to aberration correction and improved resolution [7, 8]. By combining the theory of coherent image formation [9] and diffraction [5], the deduced theory enables reconstruction of the object scattered field by inverse 3D CTF filtering. Experimental evidence of this theory is presented under sample rotation. It confirms that the realistic 3D CTF can be directly reconstructed from a hologram acquisition of a complex point source [10, 11]. For this purpose, the digital refocusing feature of digital holographic microscopy (DHM) is exploited [12]. As simulations have shown, digital refocusing bears the capacity of optical sectioning [13]. By regularizing the complex filter function, phase degradation by noise amplification is suppressed, as anticipated from intensity deconvolution [14]. The effectiveness and importance of the proposed method are demonstrated with experimental applications.

Independent of the non-design imaging condition, our method serves to reconstruct the scattered complex object function from a single hologram acquisition. One reconstruction does not feature tomography but rather optical sectioning of one rotation angle measurement. However, the reconstruction can be applied to various complex field acquisitions, which can be ultimately used for super-resolved diffraction tomography.

2. Description of method

The proposed method consists of three major parts. First, for inverse filtering, the three-dimensional deconvolution of complex fields is formalized by complex noise filtering. Second, based on single hologram reconstruction, an experimental filter function is defined. Third, in a rigorous approach, the filtered field is used to retrieve the scattered object function.

2.1. Regularized 3D deconvolution of complex fields

For a coherently illuminated imaging system, the 3D image formation of the complex field U is expressed as the convolution of the complex object function o and the complex amplitude point spread function (APSF) h [9]:

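$$U(\vec{r}_2) = \iiint_{-\infty}^{\infty} o(\vec{r}_1)\, h(\vec{r}_2 - \vec{r}_1)\, \mathrm{d}x_1\, \mathrm{d}y_1\, \mathrm{d}z_1 \tag{1}$$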

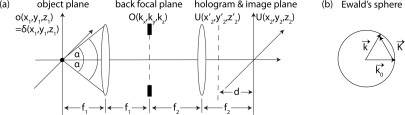

where r⃗ = (x,y,z) denotes the location vector in the object space r⃗1 and the image space r⃗2 as shown in Fig. 1(a). Equation (1) can be recast into reciprocal space by a 3D Fourier transformation ℱ defined as:

| (2) |

The reciprocal space based on the free-space (index of refraction n = 1) norm of wavenumber k with wavelength λ, relates to the spatial frequency ν and wave vector k⃗ = (kx, ky, kz) by

| (3) |

According to the convolution theorem, applying Eq. (2) to Eq. (1) results in:

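$$\underbrace{\mathcal{F}\{U(\vec{r}_2)\}}_{G(\vec{k})} = \underbrace{\mathcal{F}\{o(\vec{r}_1)\}}_{O(\vec{k})} \cdot \underbrace{\mathcal{F}\{h(\vec{r}_2)\}}_{c(\vec{k})} \tag{4}$$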

Conventionally, the 3D Fourier transforms of U, o, and h are called G, the complex image spectrum, O, the complex object spectrum, and c, the coherent transfer function (CTF), as summarized in Eq. (4). The latter is band-limited through h, with the maximal lateral wave vector,

$$k_{xy,c} = k \sin\alpha \tag{5}$$

and the maximal longitudinal wave vector

$$k_{z,c} = k\,(1 - \cos\alpha) \tag{6}$$

scaled by k from Eq. (3). The angle α indicates the half-angle of the maximum cone of light that can enter into the MO (cf. Fig. 1) and is given by its NA = ni sinα (ni is the immersion’s index of refraction). Through Eq. (4), the complex image formation can be easily inverted:

$$o(\vec{r}_1) = \mathcal{F}^{-1}\left\{ \frac{G(\vec{k})}{c(\vec{k})} \right\} \tag{7}$$

The 3D inverse filtering can be directly performed by dividing the two complex fields G and c. As known from intensity deconvolution [15], the inverse filtering method in the complex domain suffers from noise amplification for small values of the denominator c(k⃗) in the ratio G(k⃗)/c(k⃗), particularly at high spatial frequencies.

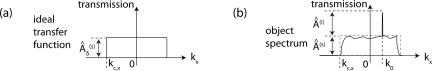

Fig. 1.

Optical transfer of a point source in real and reciprocal space. In scheme (a), a practical Abbe imaging system with holographic reconstruction. In scheme (b), full Ewald’s sphere under Born approximation in the reciprocal object plane according to Eq. (11).

As stated by Eq. (4), the recorded spectrum G(k⃗) is physically band limited by the CTF, thus it can be low-pass filtered with the maximal frequency kxy,c of Eq. (5) in order to suppress noise [8]. However, small amplitude transmission values within the band pass of the 3D CTF may still amplify noise. The noise amplification results in peak transmission values in the deconvolved spectrum, which add very high modulations in phase. Thus, phase information could be degraded through amplitude noise. To reduce noise degradation effectively we propose a threshold in the 3D CTF of Eq. (7), such as:

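$$\tilde{c}(\vec{k}) = \begin{cases} c(\vec{k}) & \text{if } |c(\vec{k})| > \tau \\ \exp\left[ i \arg c(\vec{k}) \right] & \text{if } |c(\vec{k})| \le \tau \end{cases} \tag{8}$$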

Where the modulus of c is smaller than τ, the CTF’s amplitude is set to unity, so that its noise amplification is eliminated while its phase value still acts in the deconvolution. By controlling τ, truncated inverse complex filtering (τ ≪ 1) or pure phase filtering (τ = 1) can be achieved. Therefore, the deconvolution result depends on the parameter τ. Compared to standard regularized 3D intensity deconvolution [14], the threshold acts as a regularization parameter in the amplitude domain while the complex-valued domain is unaffected. The influence of this parameter is discussed in section 3.
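The thresholding described above can be illustrated with a short NumPy sketch. It is a minimal illustration under stated assumptions, not the implementation used for the results: the function names `regularize_ctf` and `deconvolve` are hypothetical, the CTF is assumed to be normalized so that max|c| = 1, and both spectra are assumed to share NumPy's unshifted FFT layout.

```python
import numpy as np

def regularize_ctf(c, tau):
    """Thresholded CTF: keep c where |c| > tau, otherwise use unit
    amplitude with the phase of c (hypothetical helper name)."""
    c = np.asarray(c, dtype=complex)
    phase_only = np.exp(1j * np.angle(c))  # |phase_only| = 1 everywhere
    return np.where(np.abs(c) > tau, c, phase_only)

def deconvolve(G, c, tau):
    """Divide the image spectrum G by the thresholded CTF and return
    the inverse 3D FFT (assumes G and c use the same FFT layout)."""
    return np.fft.ifftn(G / regularize_ctf(c, tau))
```

With a normalized CTF, `tau=1.0` reduces the filter to a pure phase filter, whereas a small `tau` approaches plain inverse filtering inside the band pass.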

Note that thresholding based regularization is comparable to other schemes, like gradient based total variation regularization [16]. However, the presented method does not assume sparsity, as known from compressive sensing approaches. Instead, it is based on the inversion of the coherent image formation of Eq. (1).

2.2. 3D field reconstruction of 2D hologram

Typically, the 3D image of a specimen is acquired from a series of 2D images by refocusing the MO at different planes [14]. In the proposed technique, however, the complex fields are provided by digital holographic microscopy (DHM) in transmission configuration [17] as shown in Fig. 2(a).

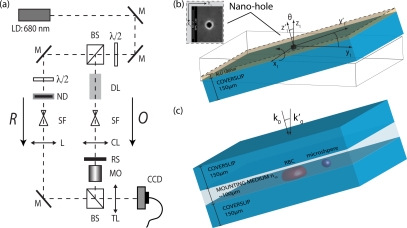

Fig. 2.

Experimental configuration. (a) Optical setup: LD, laser diode; BS, beam splitter; M, mirror; DL, delay line; SF, spatial filter; ND, neutral density filter; L, lens; TL, tube lens; CL, condenser lens; MO, microscope objective; RS, rotatable specimen. (b) RS with complex point source in MO design and non-design conditions. Insert: SEM image of nano-metric aperture (⌀≈75nm) in aluminum film at 150000× magnification. (c) RS with objects (see section 3) in experimental conditions with incident light along optical axis.

Thus, the amplitude A(r⃗2) as well as the phase Φ(r⃗2) of the hologram Ψ can be reconstructed by convolution [12]:

| (9) |

where is a spatial coordinate in the hologram plane, and d is the hologram reconstruction distance as shown in Fig. 1(a). Using digital refocusing, a pseudo 3D field can be retrieved by varying the reconstruction distance dz2 = d + M²z1, scaled by the MO’s longitudinal magnification M² = (f2/f1)². Note that, in contrast to MO refocusing, the physical meaning of digital refocusing is related to the camera’s distance from the system’s image plane.
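Digital refocusing into a pseudo 3D stack can be sketched as follows. Note that this sketch swaps in the angular spectrum method rather than the convolution formulation of Eq. (9), so it only illustrates the principle of numerically propagating a single complex field to several planes. The function names, the sampling values in the usage note, and the reading of the longitudinal magnification as the square of the lateral magnification M are assumptions made for illustration.

```python
import numpy as np

def refocus(field, dz, wavelength, pixel):
    """Propagate a 2D complex field by dz using the angular spectrum
    method (a stand-in for the convolution approach of the text)."""
    ny, nx = field.shape
    fx = np.fft.fftfreq(nx, d=pixel)  # spatial frequencies in cycles/m
    fy = np.fft.fftfreq(ny, d=pixel)
    FX, FY = np.meshgrid(fx, fy)
    arg = 1.0 / wavelength**2 - FX**2 - FY**2
    propagating = arg > 0  # evanescent components are discarded
    kz = 2 * np.pi * np.sqrt(np.where(propagating, arg, 0.0))
    H = np.where(propagating, np.exp(1j * kz * dz), 0.0)
    return np.fft.ifft2(np.fft.fft2(field) * H)

def reconstruction_distance(d, M, z1):
    """d_z2 = d + M^2 * z1, with M the lateral magnification (assumed)."""
    return d + M**2 * z1

def pseudo_3d_stack(field, distances, wavelength, pixel):
    """Stack of refocused fields, one slice per propagation distance."""
    return np.stack([refocus(field, dz, wavelength, pixel) for dz in distances])
```

For example, `pseudo_3d_stack(U0, np.linspace(-5e-6, 5e-6, 21), 680e-9, 0.5e-6)` would return a 21-slice complex stack from one reconstructed field `U0`; the step size and pixel pitch here are illustrative only.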

2.3. Experimental pseudo 3D APSF

The coherent imaging system can be experimentally characterized by a complex point source, shown in Fig. 2(b). It consists of an isolated nano-metric aperture (⌀ ≈ 75 nm) in a thin opaque coating (thickness = 100 nm) on a conventional coverslip [11]. The aperture was fabricated in the Center of MicroNano-Technology (CMI) clean room facilities by focused ion beam (FIB) milling in the evaporated aluminum film. For a single point object o(r⃗1) = δ(r⃗1), the image field Uδ(r⃗2) is the APSF h(r⃗2). This approximation holds for aperture diameters ⌀ ≪ dmin (dmin: limit of resolution), and the imaged amplitude and phase have been shown to be characteristic [10, 18].

The coverslip is mounted on a custom diffraction tomography microscope [19] based on sample rotation and transmission DHM as shown in Fig. 2(a). The setup operates at λ = 680 nm and is equipped with a dry, long-working-distance MO of nominal NA = 0.7 and magnification M = 100×. The sample rotation by θ, as depicted in Fig. 2, introduces non-design MO imaging conditions [4], discussed subsequently in section 3.1. The optical path difference between corresponding rays in design and non-design systems can be caused by the use of a coverslip with a thickness or refractive index differing from that of the design system, the use of a non-design immersion oil ni, or the defocus of the object in a mismatched mounting medium nm [3]. Consequently, a system’s defocus must be avoided through sample preparation, as discussed in section 3. Since a dry MO is used with matched coverslips, we expect the tilt to introduce the main experimental aberration, apart from MO intrinsic aberrations.

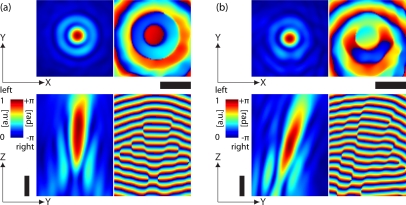

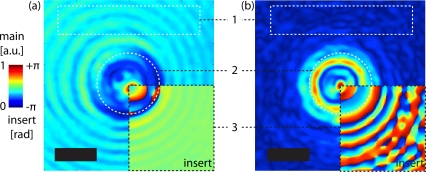

In order to demonstrate the importance of the proposed technique to diffraction tomography by sample rotation, experimental holograms are recorded for tilt positions as well. The digitally reconstructed pseudo 3D APSF are shown for two positions in Figs. 3(a)–3(b), without tilt and for θ = 15°.

Fig. 3.

Measurement of complex point source in MO design and MO non-design imaging conditions (cf. Fig. 2). The experimental APSF sections in (a) yield design MO imaging conditions (θ = 0°), whereas sections in (b) yield non-design conditions (θ = 15°). The left side images show |h| central sections and the right images arg[h], respectively. Colorbar, Scalebars: 2μm.

In MO design conditions, the complex field in Fig. 3(a) indicates a typical point spread function pattern [10]. In amplitude, the diffraction pattern is similar to the Airy diffraction pattern, derived from the Bessel function of the first kind J1. The phase part oscillates at J1’s roots with spacing λ/NA from –π to π [7]. Nonetheless, the axial sections in Fig. 3(a) are prone to a spherical-like aberration due to intrinsic aberrations [2]. The lateral sections show good axial symmetry, which indicates the absence of strong coma-like or astigmatism-like aberrations.

In the case of θ = 15°, the field in Fig. 3(b) features asymmetries of the diffraction pattern in amplitude and phase. The aberration can be observed especially well as a lateral phase distortion. Likewise, the asymmetric aberration is also expressed in the axial direction of the APSF. The coma-like aberration [2] is introduced by the tilted coverslip system. As with the non-design conditions mentioned above [3], the tilt results in optical path differences in the experimental system, which act as an additional aberration function [20].

The dependence of the APSF’s amplitude Aδ and phase Φδ on the sample rotation (cf. Fig. 2) is defined by

| (10) |

with the illumination wavevector in the laboratory reference frame, meaning relative to the optical axis. In the demonstrated case of sample rotation, relative to the optical axis does not change. However, the illumination relative to the sample does change and the incident field vector can be expressed as in the sample frame of reference, according to Fig. 2(b).

The complex point source technique allows registering the scattered h background illumination free. As a result, the SNR is advantageous and the required h can be directly used for Eq. (2). The amplitude and phase of the recorded field Aδ,k⃗0 (r⃗2) and Φδ,k⃗0 (r⃗2) then correspond to the scattered components and .

Ultimately, it is the design of the complex point source (cf. Fig. 2) that guarantees a high accuracy of the APSF with respect to realistic imaging. The complex point source is directly located on a conventional coverslip, also used for object imaging. Thus, the APSF acquisition does not require any modifications of the imaging system, is stable, and, most importantly, is representative of object imaging under the same conditions. Even for defocused objects, the experimental APSF can be estimated by flipping the complex point source and immersing it with an approximated defocus.

2.4. Experimental 3D CTF

If the incident illumination field on the scatterer is in direction k⃗0 and the scattered field is measured in direction of k⃗, the first-order Born approximation states that the 3D CTF is given by the cap of an Ewald sphere defined for

$$\vec{K} = \vec{k} - \vec{k}_0 \tag{11}$$

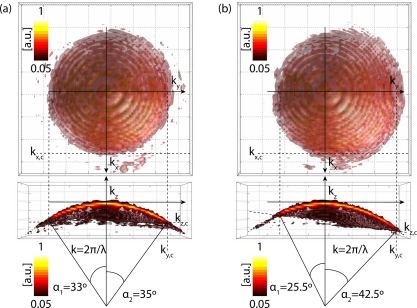

as schematically shown in Fig. 1(b) for DHM [21]. NA limits the sphere, so that only part of the diffracted light can be transmitted. The experimental DHM’s 3D CTF can be directly calculated by applying Eq. (2) to Eq. (10) and is depicted in Fig. 4.
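The calculation of a 3D CTF from a reconstructed APSF stack can be sketched in a few lines. This is only an illustration: the function name `ctf_from_apsf`, the axis order `h[z, y, x]`, the assumption that the point source sits at the centre of the stack, and the use of NumPy's FFT sign convention in place of Eq. (2) are all assumptions.

```python
import numpy as np

def ctf_from_apsf(h):
    """3D CTF as the 3D FFT of a complex APSF stack h[z, y, x].

    The stack is assumed to be centred on the point source; ifftshift
    moves that centre to index (0, 0, 0) so that no linear phase ramp
    is added to the spectrum. The result is normalised to max |c| = 1
    and kept in NumPy's unshifted FFT layout.
    """
    c = np.fft.fftn(np.fft.ifftshift(h))
    return c / np.abs(c).max()
```

For display in the manner of Fig. 4, `np.fft.fftshift(c)` would bring the zero frequency to the centre of the array.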

Fig. 4.

Experimental 3D CTF in different imaging conditions. The experimental CTF in (a) is obtained for MO design imaging conditions (θ = 0°), whereas the CTF depicted in (b) is obtained for non-design conditions (θ = 15°), according to Fig. 2. The upper row shows the top view of the CTF and the bottom row shows the side view through the CTF for kx = 0, respectively. Colorbars.

From this reconstruction, the NA can be directly measured through the subtended half-angle α according to the cut-off frequencies of Eqs. (5) and (6). The experimental CTF includes experimental conditions, i.e. aberrations, as well. By comparing Fig. 4(a) and Fig. 4(b), the impact of illumination becomes apparent. Due to the sample rotation, the MO can accept higher frequencies from one side of the aperture, while frequencies from the opposite side are cut off. As a result, the CTF is displaced along the Ewald sphere [22]. Note that this displacement is a combination of translation and rotation if the rotational center is not coincident with the sample’s geometrical center.
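As a worked illustration of how the NA relates to the cut-off frequencies, the sketch below assumes the lateral and longitudinal cut-offs take the forms k sin α and k (1 − cos α) with k = 2π/λ. These forms, the function names, and the default immersion index of 1 for a dry MO are assumptions made for this example.

```python
import numpy as np

def cutoff_wavevectors(na, wavelength, n_i=1.0):
    """Lateral and longitudinal cut-offs, assuming k_xy = k sin(alpha)
    and k_z = k (1 - cos(alpha)) with k = 2 pi / wavelength."""
    k = 2 * np.pi / wavelength
    alpha = np.arcsin(na / n_i)  # half-angle from NA = n_i sin(alpha)
    return k * np.sin(alpha), k * (1 - np.cos(alpha))

def na_from_cutoffs(k_xy, k_z, n_i=1.0):
    """Recover the NA from measured cut-offs using the identity
    tan(alpha / 2) = (1 - cos(alpha)) / sin(alpha)."""
    alpha = 2 * np.arctan2(k_z, k_xy)
    return n_i * np.sin(alpha)

# Nominal values of the setup described in the text: NA = 0.7, 680 nm.
k_xy, k_z = cutoff_wavevectors(0.7, 680e-9)
print(k_xy, k_z, na_from_cutoffs(k_xy, k_z))
```

Because the ratio of the two cut-offs depends only on α, the NA estimate in this sketch does not require the wavelength.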

Thus, the 3D CTF can be written as a function of K⃗:

| (12) |

where a hat indicates the Fourier component in amplitude  and phase Φ̂ as summarized in Fig. 5(a). Similarly to the 3D CTF reconstruction, the three-dimensional complex spectrum G(k⃗ – k⃗0) is calculated from experimental configurations shown in Fig. 2(c).

Fig. 5.

Scheme of reciprocal space. In image (a), a 1D coherent transfer function as given by the complex point source [see Eq. (12)]. In image (b), image’s spectrum with background illumination k⃗0 ≠ 0.

2.5. Scattered field retrieval

In the case of transmission microscopy, the APSF in Eq. (1) is not directly convolved with the complex object function o. According to diffraction theory [5], the total field o can be expressed as the sum of the incident field o(i) in direction of k⃗0 and the scattered field o(s),

$$o(\vec{r}_1) = o^{(i)}(\vec{r}_1) + o^{(s)}(\vec{r}_1) \tag{13}$$

where

| (14) |

with the scattered field amplitude and phase . On substituting from Eq. (13) into Eq. (1) and using Eq. (7) we see that the complex deconvolution satisfies

| (15) |

The subtracted convolution term in the numerator of Eq. (15) can be identified as , the reference field of an empty field of view [23]. Suppose that the field incident on the scatterer is a monochromatic plane wave of constant amplitude A(i), propagating in the direction specified by k⃗0. The time-independent part of the incident field is then given by the expression

| (16) |

| (17) |

On the other hand, according to Eqs. (13), (14), and (16) the image spectrum may be expressed as

| (18) |

as shown in Fig. 5(b).

Hence, substituting Eqs. (18) and (10) into Eq. (17), yields:

| (19) |

Finally, the means of Â(s) can be normalized to , to equalize their spectral dynamic ranges. Preferably, in order to avoid any degradation of the image spectrum by direct subtraction, can alternatively be calculated by:

| (20) |

In summary, the scattered field o(s) can be obtained for any illumination by Eq. (15) if an experimental reference field is provided. Alternatively, under the assumption of plane wave illumination, it can be calculated by Eqs. (19) or (20). The latter is used for processing in section 3.
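The reference-field route to the scattered field can be sketched as follows. The sketch reads Eq. (15) as the inverse-filtered difference between the image spectrum and the spectrum of an empty-field reference; this reading, the function name `scattered_field`, and the thresholding of the CTF inside the function are assumptions for illustration. It does not implement Eqs. (19) or (20).

```python
import numpy as np

def scattered_field(U, U_ref, c, tau=0.0):
    """Estimate o_s = IFFT{ (FFT{U} - FFT{U_ref}) / c_reg }.

    U     : complex 3D image field with the object present
    U_ref : complex 3D reference field of an empty field of view
    c     : 3D CTF in NumPy's unshifted FFT layout
    tau   : amplitude threshold below which only the phase of c is used
    """
    c = np.asarray(c, dtype=complex)
    c_reg = np.where(np.abs(c) > tau, c, np.exp(1j * np.angle(c)))
    spectrum = np.fft.fftn(U) - np.fft.fftn(U_ref)
    return np.fft.ifftn(spectrum / c_reg)
```

In a noise-free simulation with a CTF that is nonzero everywhere, this recovers the scattered component exactly; with measured data the choice of `tau` governs the trade-off discussed in section 2.1.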

We compare this result to the Fourier diffraction theorem [5] of scattering potential F(r⃗1):

| (21) |

It states that the scattered field , recorded at plane z±, is filtered by an ideal Ewald half sphere (nm: refractive index of mounting medium), and propagated by the latest term as known by the filtered back propagation algorithm of conventional diffraction tomography [6]. In our case, dividing by the 3D CTF, the spectrum is inversely filtered by an ’experimental’ Ewald sphere. Moreover, the field propagation is intrinsically included through the z-dependent pre-factor in the reconstruction of Eqs. (9) and (10). Therefore, the product of the right side in Eq. (21) may be approximated as

| (22) |

The main difference consists in the filter function. A multiplicative filter in Eq. (21) cannot correct for realistic imaging conditions, such as aberrations or MO diffraction. It merely acts as a low pass filter in the shape of an Ewald sphere. By contrast, Eq. (22) implies the object function , which is compensated for realistic imaging conditions. In order to achieve that correction, fields must be divided [8]. A priori, the experimental CTF is more apt for this division since it intrinsically corrects for MO diffraction, apodization, aberrations and non-design imaging conditions, as discussed in section 3.1.

The function F̂(K⃗ ) is the 3D Fourier transform of the scattering potential F(r⃗1) derived from the inhomogeneous Helmholtz equation of the medium n(r⃗1), where:

| (23) |

and n(r⃗1) is the complex refractive index. The real part of Eq. (23) is associated with refraction while its imaginary part is related to absorption.

If one were to measure the scattered field in the far zone for all possible directions of incidence and all possible directions of scattering one could determine all the Fourier components F̂(K⃗ ) of the scattering potential within the full Ewald limiting sphere of 2k = 4π/λ. One could synthesize all Fourier components to obtain the approximation

| (24) |

called the low-pass filtered approximation of the scattering potential [5], which gives rise to diffraction tomography. In contrast to this full approach, the approximation of the partial low-pass filtered scattering potential F in only one direction of k⃗0 gives rise to optical sectioning, as discussed in section 3.3.

3. Applications

In this section, general imaging aspects of the proposed method and their impact on the phase signal are evaluated. Moreover, the extraction of a scattered object field is practically demonstrated to result in optical sectioning.

3.1. Coherent imaging inversion

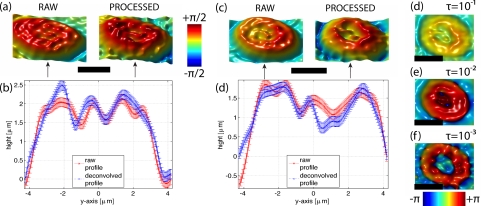

First, the general impact of complex deconvolution on the coherent imaging inversion under non-design conditions is discussed. For this purpose, experimental images of non-absorbing mono-dispersed polystyrene microspheres (nsph = 1.59, ⌀ ≈ 5.8 μm) in water (nm,H2O = 1.33) are recorded at a tilt angle of θ = 15°, shown in Fig. 6(a) for raw data and in Fig. 6(b) after deconvolution.

Fig. 6.

Complex fields of polystyrene microspheres in water at a tilt angle of θ = 15°, according to Fig. 2. The main images show the raw amplitude (a) and the deconvolved amplitude (b) with background ROI-1 and object ROI-2 with circle ⌀ ≈ 5.8μm. The inserts in ROI-3 show the phase parts, respectively. Colorbar, Scalebar: 4μm.

If complex deconvolution is successful, a number of improvements in the complex field should be noted. They are indicated in Fig. 6 by regions of interest (ROI):

Background extinction: The transparent sample images are recorded with the incident light o(i) in direction of k⃗0. According to Eq. (20), a DC value is added to the APSF to compensate for o(i), which is clearly seen in the reduced background haze in the amplitude (cf. Fig. 6 ROI-1). Similarly, the removed background results in the full 2π dynamic range of phase oscillation (cf. Fig. 6 ROI-3). Finally, the object ROI-2 in Fig. 6 displays improved contrast since the objects’ edges are sharpened by the complex deconvolution.

Diffraction pattern suppression: A second motivation consists in correcting the diffraction pattern of the MO’s APSF. This correction is in particular required for high-NA imaging systems since the APSF diffraction pattern may result in incorrect tomographic reconstruction in the near resolution limit range. The diffraction pattern can be observed to be well suppressed by comparing ROI-1 in Fig. 6. As a result, the diffraction pattern of the refractive index mismatched sphere [24, 25] becomes apparent in ROI-2 of Fig. 6.

Complex aberration correction: Aberrations are intrinsic to MOs, especially for high-NA objectives [2]. Additionally, experimental MO non-design conditions may introduce symmetric aberrations [3]. Those conditions include a non-design refractive index ni, a mismatch of ni and nm, defocus of the object in nm, and a non-design coverslip thickness or refractive index. Also, axially asymmetric aberrations are introduced by the sample rotation [4, 20]. This aberration can be recognized as the asymmetric deformation of the diffraction pattern in the raw images (cf. Fig. 6 ROI-1 and ROI-3). As a consequence, the raw object in Fig. 6 ROI-2 is deformed, too. However, after deconvolution, the asymmetric diffraction pattern in ROI-1 of Fig. 6 is removed. In the same manner, the phase oscillation becomes equally spaced after deconvolution, as shown in Fig. 6 ROI-3. Eventually, the object can be reconstructed in a deformation-free manner in Fig. 6 ROI-2.

Note that even with an accurate APSF and an effective deconvolution algorithm, deconvolved images can suffer from a variety of defects and artifacts described in detail in reference [15].

3.2. The impact of phase deconvolution

Most artifacts are due to noise amplification, as mentioned in section 2.1. The suggested 3D deconvolution of complex fields can be tuned between complex and phase deconvolution according to Eq. (8). Thus, noise amplification can be excluded for τ = 1 while the phase part still leads to image correction according to the previous section. As seen from Eq. (19), the phase deconvolution effectively acts as a subtraction of the diffraction pattern in phase. Strictly speaking, the recorded phase is not only the phase difference between object and reference beam, but also includes the MO’s diffraction due to frequency cutoff. The coherent system is seen to exhibit rather pronounced coherent imaging edges, known as ‘ringing’ [26]. For biological samples [27], the diffraction influence in phase may be of great importance for the interpretation of the phase signal.

Consequently, we investigate the complex deconvolution’s influence on the phase signal of biological samples. The samples are human red blood cells (RBC) that have been fixed with 95% ethanol and are suspended in a physiological solution (nm,sol = 1.334 at λ = 682nm). This preparation method allows fixing the RBC directly on the coverslip surface to avoid defocus aberrations, and a space-invariant APSF may be assumed within the field of view [28]. The experimental APSF used for processing was acquired under identical object imaging conditions, i.e. the same tilt positions with identical coverslips as shown in Fig. 2. The experimental APSF can therefore be accurately accessed. A comparison between the raw phase images and the phase deconvolved images is shown for two RBCs in Figs. 7(a) and 7(c).

Fig. 7.

Human RBCs in phase. Images (a) and (c) show the phase images of two RBCs at θ = 0. Unprocessed images are labeled ’RAW’ and the label ’PROCESSED’ indicates the deconvolved phase for τ = 1. The profiles in (b) and (d) compare the according height differences of images above, at central sections (indicated by flashes). The error bars indicate the level of phase noise (≈ 0.1rad). Images (d)–(f) show the top view on RBC (c), processed with different τ (expressed in units of ). Colorbars, Scalebars: 4μm.

The influence of the phase deconvolution can be seen directly by comparing these topographic images. Based on their shapes, RBCs are classified in different stages [27]. The raw image in Fig. 7(c) resembles a trophozoite stage while its phase deconvolved image reveals a ring structure. Similarly, the processed RBC in Fig. 7(a) reveals a trophozoite stage. A more detailed comparison is given by the central height profiles in Figs. 7(b) and 7(d), calculated by assuming a constant refractive index of nRBC = 1.39. They show that the deconvolved phase profile basically follows the raw trend. However, as with coherent imaging ringing, the profiles are less prone to oscillations after phase deconvolution, in particular on the RBC’s edges. Thus the impact of the complex deconvolution is to de-blur the phase signal.
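The conversion from phase to height under a constant refractive index can be illustrated with the usual transmission relation h = φ λ / (2π (nRBC − nm)). The text does not state the exact formula used for the profiles, so this relation and the function name `phase_to_height` are assumptions; the numerical values are those quoted in this section.

```python
import numpy as np

def phase_to_height(phase, wavelength, n_obj, n_medium):
    """Height from phase delay, assuming a homogeneous object of index
    n_obj in a medium of index n_medium, measured in transmission."""
    return phase * wavelength / (2 * np.pi * (n_obj - n_medium))

# Values quoted in the text: 682 nm, n_RBC = 1.39, n_m,sol = 1.334.
height_per_rad = phase_to_height(1.0, 682e-9, 1.39, 1.334)
print(height_per_rad)  # metres of height per radian of phase
```

Under these assumptions one radian corresponds to roughly 1.94 μm of height, so the quoted phase noise of about 0.1 rad translates to a height uncertainty of roughly 0.19 μm.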

However, the recovered phase signal is highly sensitive to noise, as demonstrated in Figs. 7(d)–7(f). A decreasing value of τ implies a decreased SNR through amplitude noise amplification. As a consequence, random phasors can be introduced that degrade the phase signal. Figures 7(e) and 7(f) show that signal degradation most prominently affects the RBC’s central and border regions. For a pure retrieval of phase that is unaffected by noise amplification, τ should not be smaller than −1 dB.

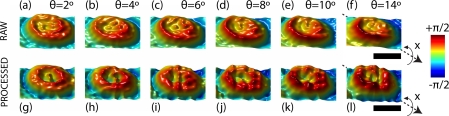

In Fig. 8, measurements under non-design imaging conditions are presented. The RBC’s raw phase measurements become strongly deformed with increasing coverslip tilt [cf. Figs. 8(a)–8(f)]. This observation is in accordance with section 2.3, where strong coma-like aberrations are observed. Consequently, APSFs acquired under the same non-design conditions, i.e. the same tilt, can be used to effectively correct for coma aberration within the depth of field. RBC measurements processed in this way successfully recover the ring stage, even at steeper tilting angles, as demonstrated in Figs. 8(g)–8(l). Note that the phase signal is increased for steeper θ since the projection surface along the y-axis decreases, as can be observed in Figs. 8(g)–8(l).

Fig. 8.

Inclined human RBCs in phase at various tilt angles θ. Images (a)–(f) are unprocessed and images (g)–(l) are pure phase deconvolved (τ = 1). According to Fig. 2, the axis of rotation ’x’ is indicated. Colorbar, Scalebars: 4μm.

3.3. Scattered object field retrieval and optical sectioning

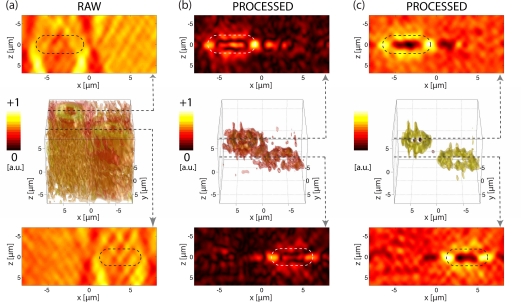

From the intensity deconvolution point of view, pseudo 3D microscopy can be achieved by reduction of out-of-focus haze [14], which means that the spread of objects in the z-direction is reduced. Recently, this potential has also been demonstrated for digitally refocused 3D fields [13] and compressive holography [16]. Optical sectioning effects are therefore intrinsic to 3D complex deconvolution if τ ≪ 1 and amplitude information is not discarded. From the inverse filtering point of view, the z-confinement arises from the filtering by the 3D CTF as shown in Fig. 4. To demonstrate that scattered object field retrieval features this effect, complex deconvolution was performed on the RBC sample of section 3.2 with τ determined by the CTF’s noise level. For optimal amplitude contrast, the value of τ was calculated by a histogram-based method, Otsu’s rule, well known in intensity deconvolution [29]. The resulting τ ≈ −3 dB lies beneath the pure phase deconvolution criterion of −1 dB, but it allows effective diffraction pattern suppression. Therefore, high amplitude contrast compromises the phase signal. The results are shown in Fig. 9.
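A histogram-based threshold of the kind referred to above can be sketched with a plain NumPy version of Otsu's rule. How the rule was applied to the CTF is not detailed in the text, so applying it to the normalized modulus |c|, the function name `otsu_threshold`, and the bin count are assumptions for illustration.

```python
import numpy as np

def otsu_threshold(values, bins=256):
    """Otsu's rule: pick the histogram bin centre that maximises the
    between-class variance of the two classes it separates."""
    hist, edges = np.histogram(np.ravel(values), bins=bins)
    centers = 0.5 * (edges[:-1] + edges[1:])
    p = hist / hist.sum()            # bin probabilities
    w0 = np.cumsum(p)                # weight of the lower class
    w1 = 1.0 - w0                    # weight of the upper class
    m = np.cumsum(p * centers)       # cumulative first moment
    m_total = m[-1]
    valid = (w0 > 1e-12) & (w1 > 1e-12)
    sigma_b = np.zeros_like(w0)
    sigma_b[valid] = (m_total * w0[valid] - m[valid]) ** 2 / (w0[valid] * w1[valid])
    return centers[np.argmax(sigma_b)]
```

A call such as `tau = otsu_threshold(np.abs(c))` on a normalized CTF `c` would then give a candidate threshold separating noise-dominated from signal-dominated transmission values.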

Fig. 9.

3D rendered images of two human RBCs at θ = 0. Image (a) shows |U| in 3D space in the middle row. Bottom and top rows show the sections through central RBC positions (indicated by flashes). Accordingly, the field |o(s)| is represented in (b), and |n| in (c) (uncalibrated levels), respectively. The RBCs’ positions are compared to an oval area of 6μm × 2.5μm, based on measurements from section 3.2. Colorbars.

The raw 3D field |U| in Fig. 9(a) shows the object spread along the axial direction according to Eq. (9). As expected, the reconstruction features no axial confinement and the RBCs cannot be recognized.

On the other hand, the scattered object field |o(s)| after truncated inverse filtering according to Eq. (20) is depicted in Fig. 9(b). It shows that the background field and out-of-focus haze are successfully removed. The RBCs’ edges can be identified as strong scattering objects and the scattered field can be recognized to match in size and position the anticipated RBC values. Although the image quality is affected by artifacts in axial elongation [30], its axial dimensions match well. However, the refractive index mismatched cell membrane (nlipid > 1.4) and mounting medium (nm,sol = 1.334 at λ = 682nm) result in strong scattering well visible in the xz sections.

Finally, the fields related to refraction, |n|, can be reconstructed in Fig. 9(c) according to Eq. (23). In particular, the refraction due to the strong scattered field around the RBCs allows a good three-dimensional localization of the RBCs’ edges. Moreover, the higher refractive index due to the hemoglobin content is well visible in the xz sections. Note that these data are reconstructed from only one hologram for a single incident angle k⃗0 and therefore missing angles in Eq. (24) affect the reconstruction, as seen by the lateral artifacts. However, if the 3D inverse filtering technique is combined with a multi-angle acquisition, it holds the potential of quantitative 3D refractive index reconstruction.

4. Concluding remarks

In this paper, we have described theory, experimental aspects as well as applications of a novel method of 3D imaging using realistic 3D CTF inverse filtering of complex fields.

Our theory connects three-dimensional coherent image formation and diffraction theory and results in a model for object scattering reconstruction by inverse filtering. This approach is experimentally complemented by the ability to characterize the DHM setup with a background-free APSF thanks to the use of a complex point source. The physical importance of the realistic 3D CTF is demonstrated with experimental data. The technique features effective correction of background illumination, diffraction pattern, aberrations and non-ideal experimental imaging conditions. Moreover, the regularization of the three-dimensional deconvolution of complex fields is shown to yield reconstruction in the complex domain. Depending on the threshold, phase de-blurring or optical sectioning is demonstrated with RBC measurements. Most essentially, the capability of scattered field extraction is experimentally presented.

In conclusion, the demonstrated technique bears the potential to reconstruct object scattering functions under realistic high-NA imaging conditions, which play a key role in high-resolution diffraction tomography.

Acknowledgments

This work was funded by the Swiss National Science Foundation (SNSF), grant 205 320 – 130 543. The experimental data used in section 3 and intended for tomographic application were obtained in the framework of the Project Real 3D, funded by the European Community’s Seventh Framework Program FP7/2007-2013 under grant agreement no. 216105. The authors also acknowledge the Center of MicroNano-Technology (CMI) for the cooperation on its research facilities.

References and links

- 1. Sung Y., Choi W., Fang-Yen C., Badizadegan K., Dasari R. R., Feld M. S., “Optical diffraction tomography for high resolution live cell imaging,” Opt. Express 17(1), 266–277 (2009). 10.1364/OE.17.000266

- 2. Sheppard C. J. R., Gu M., “Imaging by a high aperture optical-system,” J. Mod. Opt. 40, 1631–1651 (1993). 10.1080/09500349314551641

- 3. Frisken Gibson S., Lanni F., “Experimental test of an analytical model of aberration in an oil-immersion objective lens used in three-dimensional light microscopy,” J. Opt. Soc. Am. A 8(10), 1601–1613 (1991). 10.1364/JOSAA.8.001601

- 4. Arimoto R., Murray J. M., “A common aberration with water-immersion objective lenses,” J. Microsc. 216, 49–51 (2004). 10.1111/j.0022-2720.2004.01383.x

- 5. Born M., Wolf E., Principles of Optics, 7th ed. (Cambridge University Press, 1999).

- 6. Devaney A., “A filtered backpropagation algorithm for diffraction tomography,” Ultrason. Imaging 4(4), 336–350 (1982). 10.1016/0161-7346(82)90017-7

- 7. Cotte Y., Toy M. F., Shaffer E., Pavillon N., Depeursinge C., “Sub-Rayleigh resolution by phase imaging,” Opt. Lett. 35, 2176–2178 (2010). 10.1364/OL.35.002176

- 8. Cotte Y., Toy M. F., Pavillon N., Depeursinge C., “Microscopy image resolution improvement by deconvolution of complex fields,” Opt. Express 18(19), 19462–19478 (2010). 10.1364/OE.18.019462

- 9. Gu M., Advanced Optical Imaging Theory (Springer-Verlag, 2000).

- 10. Marian A., Charrière F., Colomb T., Montfort F., Kühn J., Marquet P., Depeursinge C., “On the complex three-dimensional amplitude point spread function of lenses and microscope objectives: theoretical aspects, simulations and measurements by digital holography,” J. Microsc. 225, 156–169 (2007). 10.1111/j.1365-2818.2007.01727.x

- 11. Cotte Y., Depeursinge C., “Measurement of the complex amplitude point spread function by a diffracting circular aperture,” in Focus on Microscopy, Advanced linear and non-linear imaging, pp. TU–AF2–PAR–D (2009).

- 12. Montfort F., Charrière F., Colomb T., Cuche E., Marquet P., Depeursinge C., “Purely numerical compensation for microscope objective phase curvature in digital holographic microscopy: influence of digital phase mask position,” J. Opt. Soc. Am. A 23(11), 2944–2953 (2006). 10.1364/JOSAA.23.002944

- 13. Latychevskaia T., Gehri F., Fink H.-W., “Depth-resolved holographic reconstructions by three-dimensional deconvolution,” Opt. Express 18(21), 22527–22544 (2010). 10.1364/OE.18.022527

- 14. Sarder P., Nehorai A., “Deconvolution methods for 3-D fluorescence microscopy images,” IEEE Signal Process. Mag. 23, 32–45 (2006). 10.1109/MSP.2006.1628876

- 15. McNally J. G., Karpova T., Cooper J., Conchello J. A., “Three-dimensional imaging by deconvolution microscopy,” Methods 19, 373–385 (1999). 10.1006/meth.1999.0873

- 16. Brady D. J., Choi K., Marks D. L., Horisaki R., Lim S., “Compressive holography,” Opt. Express 17(15), 13040–13049 (2009). 10.1364/OE.17.013040

- 17. Cuche E., Marquet P., Depeursinge C., “Simultaneous amplitude-contrast and quantitative phase-contrast microscopy by numerical reconstruction of Fresnel off-axis holograms,” Appl. Opt. 38, 6994–7001 (1999). 10.1364/AO.38.006994

- 18. Heng X., Cui X. Q., Knapp D. W., Wu J. G., Yaqoob Z., McDowell E. J., Psaltis D., Yang C. H., “Characterization of light collection through a subwavelength aperture from a point source,” Opt. Express 14, 10410–10425 (2006). 10.1364/OE.14.010410

- 19. Bergoend I., Arfire C., Pavillon N., Depeursinge C., “Diffraction tomography for biological cells imaging using digital holographic microscopy,” in Laser Applications in Life Sciences, SPIE vol. 7376 (2010).

- 20. Braat J., “Analytical expressions for the wave-front aberration coefficients of a tilted plane-parallel plate,” Appl. Opt. 36(32), 8459–8467 (1997). 10.1364/AO.36.008459

- 21. Kou S. S., Sheppard C. J., “Imaging in digital holographic microscopy,” Opt. Express 15(21), 13640–13648 (2007). 10.1364/OE.15.013640

- 22. Kou S. S., Sheppard C. J. R., “Image formation in holographic tomography: high-aperture imaging conditions,” Appl. Opt. 48(34), H168–H175 (2009). 10.1364/AO.48.00H168

- 23. Colomb T., Kühn J., Charrière F., Depeursinge C., Marquet P., Aspert N., “Total aberrations compensation in digital holographic microscopy with a reference conjugated hologram,” Opt. Express 14(10), 4300–4306 (2006). 10.1364/OE.14.004300

- 24. Lock J. A., “Ray scattering by an arbitrarily oriented spheroid. II. transmission and cross-polarization effects,” Appl. Opt. 35(3), 515–531 (1996). 10.1364/AO.35.000515

- 25. Chowdhury D. Q., Barber P. W., Hill S. C., “Energy-density distribution inside large nonabsorbing spheres by using Mie theory and geometrical optics,” Appl. Opt. 31(18), 3518–3523 (1992). 10.1364/AO.31.003518

- 26. Goodman J. W., Introduction to Fourier Optics (McGraw-Hill, 1968).

- 27. Park Y., Diez-Silva M., Popescu G., Lykotrafitis G., Choi W., Feld M. S., Suresh S., “Refractive index maps and membrane dynamics of human red blood cells parasitized by Plasmodium falciparum,” Proc. Natl. Acad. Sci. U.S.A. 105(37), 13730–13735 (2008). 10.1073/pnas.0806100105

- 28. Kam Z., Hanser B., Gustafsson M. G. L., Agard D. A., Sedat J. W., “Computational adaptive optics for live three-dimensional biological imaging,” Proc. Natl. Acad. Sci. U.S.A. 98(7), 3790–3795 (2001). 10.1073/pnas.071275698

- 29. Otsu N., “A threshold selection method from gray-level histograms,” IEEE Trans. Syst. Man Cybern. 9(1), 62–66 (1979). 10.1109/TSMC.1979.4310076

- 30. McNally J. G., Preza C., Conchello J.-A., Thomas L. J., “Artifacts in computational optical-sectioning microscopy,” J. Opt. Soc. Am. A 11(3), 1056–1067 (1994). 10.1364/JOSAA.11.001056