Abstract

Neural spike trains, which are sequences of very brief jumps in voltage across the cell membrane, were one of the motivating applications for the development of point process methodology. Early work required the assumption of stationarity, but contemporary experiments often use time-varying stimuli and produce time-varying neural responses. More recently, many statistical methods have been developed for nonstationary neural point process data. There has also been much interest in identifying synchrony, meaning events across two or more neurons that are nearly simultaneous at the time scale of the recordings. A natural statistical approach is to discretize time, using short time bins, and to introduce loglinear models for dependency among neurons, but previous use of loglinear modeling technology has assumed stationarity. We introduce a succinct yet powerful class of time-varying loglinear models by (a) allowing individual-neuron effects (main effects) to involve time-varying intensities; (b) also allowing the individual-neuron effects to involve autocovariation effects (history effects) due to past spiking; (c) assuming excess synchrony effects (interaction effects) do not depend on history; and (d) assuming all effects vary smoothly across time. Using data from primary visual cortex of an anesthetized monkey, we give two examples in which the rate of synchronous spiking cannot be explained by stimulus-related changes in individual-neuron effects. In one example, the excess synchrony disappears when slow-wave “up” states are taken into account as history effects, while in the second example it does not. Standard point process theory explicitly rules out synchronous events. To justify our use of continuous-time methodology, we introduce a framework that incorporates synchronous events and provides continuous-time loglinear point process approximations to discrete-time loglinear models.

Key words and phrases: Discrete-time approximation, loglinear model, marked process, nonstationary point process, simultaneous events, spike train, synchrony detection

1 Introduction

One of the most important techniques in learning about the functioning of the brain has involved examining neuronal activity in laboratory animals under varying experimental conditions. Neural information is represented and communicated through series of action potentials, or spike trains, and the central scientific issue in many studies concerns the physiological significance that should be attached to a particular neuron firing/spiking pattern in a particular part of the brain. In addition, a major, relatively new effort in neurophysiology involves the use of multielectrode recording, in which responses from dozens of neurons are recorded simultaneously. Much current research focuses on the information that may be contained in the interactions among neurons. Of particular interest are spiking events that occur across neurons in close temporal proximity, within or near the typical one-millisecond accuracy of the recording devices. In this paper we provide a point process framework for analyzing such nearly synchronous events.

The use of point processes to describe and analyze spike train data has been one of the major contributions of statistics to neuroscience. On the one hand, the observation that individual point processes may be considered, approximately, to be binary time series allows methods associated with generalized linear models to be applied [cf. Brillinger (1988), (1992)]. On the other hand, basic point process methodology coming from the continuous-time representation is important both conceptually and in deriving data-analytic techniques [e.g., the time-rescaling theorem may be used for goodness-of-fit and efficient spike train simulation; see Brown, et al. (2001)]. The ability to go back and forth between continuous time, where neuroscience and statistical theory reside, and discrete time, where measurements are made and data are analyzed, is central to statistical analysis of spike trains. From the discrete-time perspective, when multiple spike trains are considered simultaneously it becomes natural to introduce loglinear models [cf. Martignon, et al. (2000)]; a widely read and hotly debated report by Schneidman, et al. (2006), for example, examined the extent to which pairwise dependence among neurons can capture stimulus-related information. A fundamental limitation of much of the work in this direction, however, is its reliance on stationarity. The main purpose of the framework described below is to handle the nonstationarity inherent in stimulus-response experiments by introducing appropriate loglinear models while also allowing passage to a continuous-time limit. The methods laid out here are in the spirit of Ventura, Cai, and Kass (2005), who proposed a bootstrap test of time-varying synchrony, but our methods are different in detail and our framework is much more general.

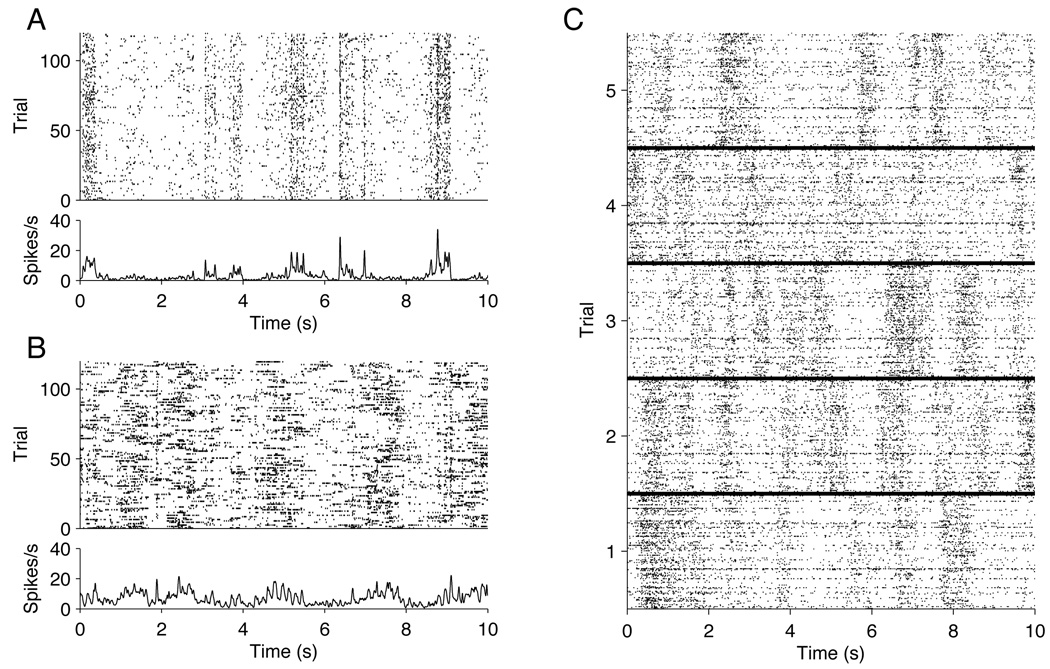

Statistical modeling of point process data focuses on intensity functions, which represent the rate at which the events occur, and often involve covariates [cf. Brown, et al. (2003); Kass, Ventura and Brown (2005); Paninski, et al. (2009); and references therein]. A basic distinction is that of conditional versus marginal intensities: the conditional intensity determines the event rate for a given realization of the process, while the marginal intensity is the expectation of the conditional intensity across realizations. In neurophysiological experiments stimuli are often presented repeatedly across many trials, resulting in many replications of the multiple sequences of spike trains. This is the situation we concern ourselves with here, and it is illustrated in Figure 1, part A, where the responses of a single neuron for 120 trials are displayed: each line of the raster plot shows a single spike train, which is the neural response on a single trial. The experiment that generated these data is described in Section 1.1. The bottom panel in part A of Figure 1 displays a smoothed peristimulus time histogram (PSTH), which summarizes the trial-averaged response by pooling across trials. As we explain in greater detail in Section 1.2, scientific questions and statistical analyses may concern either within-trial responses (conditional intensities) or trial-averaged responses (marginal intensities).

Figure 1.

Neural spike train raster plots for repeated presentations of a drifting sine wave grating stimulus. (A): Single cell responses to 120 repeats of a 10 second movie. At the top is a raster corresponding to the spike times, and below is a peri-stimulus time histogram (PSTH) for the same data. Portions of the stimulus eliciting firing are apparent. (B): The same plots as in (A), for a different cell. (C): Population responses to the same stimulus, for 5 repeats. Each block, corresponding to a single trial, is the population raster for ν = 128 units. On each trial there are several dark bands, which constitute bursts of network activity sometimes called “up states.” Up state epochs vary across trials, indicating they are not locked to the stimulus.

A point process evolves in continuous time but, as we have noted, it is convenient for many statistical purposes to consider a discretized version. Decomposing time into bins of width δ, we may define a binary time series to be 1 for every time bin in which an event occurs, and 0 for every bin in which an event does not occur. It is not hard to show that, under the usual regularity condition that events occur discretely (i.e. no two events occur at the same time), the likelihood function of the binary time series approximates the likelihood of the point process as δ → 0. For a pair of point processes, the discretized process is a time series of 2×2 polytomous variables indicating, in each time bin, whether an event of the first process occurred, an event of the second process occurred, or both, or neither. This suggests analyzing nearly synchronous events based on a loglinear model with cell probabilities that vary across time. Intuitive as such procedures may be, their point process justification is subtle: the standard regularity condition forbids two processes having synchronous events, so it is not obvious how we might obtain convergence to a point process (as δ → 0) for discrete-process likelihoods that incorporate synchrony.
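As a concrete illustration of this discretization, the following Python sketch turns one neuron's spike times into the binary series described above. It is not taken from the paper: the helper name `bin_spike_train` is hypothetical, spike times are assumed to be in seconds, T is assumed to be a multiple of δ, and two spikes falling in one bin are collapsed to a single 1 (by the refractory-period argument this should not occur once δ is small).

```python
def bin_spike_train(spike_times, T, delta):
    """Binary series X(t) for bins [k*delta, (k+1)*delta), k = 0, ..., T/delta - 1.

    Hypothetical helper (illustrative sketch, not the authors' code): assumes
    spike times in seconds on [0, T) and T an integer multiple of delta.
    """
    n_bins = round(T / delta)
    if abs(n_bins * delta - T) > 1e-9 * max(1.0, T):
        raise ValueError("T must be a multiple of delta")
    x = [0] * n_bins
    for s in spike_times:
        if not 0.0 <= s < T:
            raise ValueError(f"spike time {s} lies outside [0, T)")
        k = min(int(s // delta), n_bins - 1)  # guard against floating-point round-up
        x[k] = 1  # two spikes in one bin would be collapsed to a single 1
    return x


# 20 ms of data in 5 ms bins; the two spikes near 7 ms share bin 1.
print(bin_spike_train([0.0012, 0.0071, 0.0073, 0.0161], T=0.02, delta=0.005))  # [1, 1, 0, 1]
```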

One way out of this impasse is to introduce a marked point process framework in which each event/mark could be of three distinct types: first process, second process, or both. The standard marked point process requires modification, however, because it fails to accommodate independence as a special case. Under independence, the discretized events for each process occur with probability of order O(δ), while the synchronous events occur with probability of order O(δ²) as δ → 0. We refer to this as a sparsity condition, and the generalization to multiple processes involves a hierarchical sparsity condition. Once we introduce a family of marked point processes indexed by δ, we can guarantee hierarchical sparsity. Not only does this allow, as it must, the special case of independence models, but it also makes the conditional intensity for neuron i depend only on the history for neuron i, asymptotically (as δ → 0). This in turn avoids confounding the dependence described by the loglinear model and greatly reduces the dimensionality of the problem. We require two very natural regularity conditions based on well-known neurophysiology: the existence of a refractory period, during which the neuron cannot spike again, and smoothness of the conditional intensity across time. It would be possible, and sometimes advantageous, instead to model dependence through the individual-neuron conditional intensity functions. The loglinear modeling approach used here avoids this step.

1.1 A motivating example

In a series of experiments performed by one of us (Kelly, together with Dr. Matthew Smith), visual images were displayed at resolution 1024 × 768 pixels on a computer monitor while the neural responses in primary visual cortex of an anesthetized monkey were recorded. Each of 98 distinct images consisted of a sinusoidal grating that drifted in a particular direction for 300 milliseconds, and each was repeated 120 times. Each repetition of the complete sequence of stimuli lasted approximately 30 seconds. This kind of stimulus has been known to drive cells in primary visual cortex since the Nobel prize-winning work of Hubel and Wiesel in the 1960s. With improved technology and advanced analytical strategies, much more precise descriptions of neural response are now possible. A small portion of the data from 5 repetitions of many stimuli is shown in part C of Figure 1.

The details of the experiment and recording technique are reported in Kelly, et al. (2007). A total of 125 neural “units” were obtained, which included about 60 well-isolated individual neurons; the remainder were of undetermined origin (some mix of 1 or more neurons). The goal was to discover the interactions among these units in response to the stimuli. Each neuron will have its own consistent pattern of responses to stimuli, as illustrated in parts A and B of Figure 1. Synchronous spiking across neurons is relatively rare. However, in each of the 5 blocks within part C of Figure 1 (each block corresponding to a single trial) several dark bands of activity across most neurons may be seen during the trial. These bands correspond to what are often called network “up” states, and are often seen under anesthesia. For discussion and references see Kelly, et al. (2009). It would be of interest to separate the effects of such network activity from other synchronous activity, especially stimulus-related synchronous activity. The framework in this paper provides a foundation for statistical methods that can solve such problems.

1.2 Overview of approach

We begin with some notation. Suppose we observe the activity of an ensemble of ν neurons labeled 1 to ν over a time interval [0, T), where T > 0 is a constant. Let $N^i_T$ denote the total number of spikes produced by neuron i on [0, T), where i = 1, …, ν. The resulting (stochastic) sequence of spike times is written as $0 \le s^i_1 < s^i_2 < \cdots < s^i_{N^i_T} < T$. For the moment we focus on the case ν = 3, but other values of ν are of interest, and with contemporary recording technology ν ≈ 100 is not uncommon, as in the experiment in Section 1.1. Let δ > 0 be a constant such that T is a multiple of δ (for simplicity). We divide the time interval into bins of width δ and write 𝒯 = {0, δ, 2δ, …, T − δ} for the set of left endpoints of the bins. Define $X^i(t) = 1$ if neuron i has a spike in the time bin [t, t + δ) and 0 otherwise. Because each neuron has a refractory period, there can be at most 1 spike from the same neuron in [t, t + δ) if δ is sufficiently small. Then, writing

$$P^{1,2,3}_{a,b,c}(t) = P\{X^1(t) = a,\ X^2(t) = b,\ X^3(t) = c\}, \qquad a, b, c \in \{0, 1\},\ t \in \mathcal{T},$$

the data would involve spike counts across trials [e.g., the number of trials on which $(X^1(t), X^2(t), X^3(t)) = (1, 1, 1)$]. The obvious statistical tool for analyzing spiking dependence is loglinear modeling and associated methodology.
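To make the contingency-table view concrete, here is a minimal sketch of how the observed-frequency estimates of the joint probabilities $P\{X^1(t) = a, X^2(t) = b, X^3(t) = c\}$ could be tabulated across trials. The nested-list layout (trial, then neuron, then bin) and the function name `cell_frequencies` are assumptions made for illustration. Each bin yields one table with $2^\nu$ cells.

```python
from collections import Counter
from itertools import product


def cell_frequencies(trials):
    """Observed-frequency estimates of the joint cell probabilities in every bin.

    Hypothetical helper: trials[r][i][k] is the 0/1 indicator for neuron i + 1 on
    trial r in bin k. Returns a list over bins; each entry maps every pattern
    (a_1, ..., a_nu) in {0, 1}^nu to the fraction of trials showing that pattern.
    """
    n_trials = len(trials)
    nu = len(trials[0])
    n_bins = len(trials[0][0])
    out = []
    for k in range(n_bins):
        # Count the trials showing each joint spiking pattern in bin k.
        counts = Counter(tuple(trial[i][k] for i in range(nu)) for trial in trials)
        out.append({cell: counts[cell] / n_trials
                    for cell in product((0, 1), repeat=nu)})
    return out
```

For example, the entry for the pattern (1, 1, 1) in bin k is the fraction of trials on which all three neurons spiked in that bin.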

Three complications make the problem challenging, at least in principle. First, there is nonstationarity: the probabilities vary across time. The data thus form a sequence, indexed by t ∈ 𝒯, of contingency tables, each with $2^\nu$ cells. Second, absent from the above notation is a possible dependence on spiking history. Such dependence is the rule rather than the exception. Let $\bar{\mathcal H}^i_t$ denote the set of values of $X^i(s)$, where s < t, and s, t are multiples of δ. Thus $\bar{\mathcal H}^i_t$ is the history of the ith binned spike train up to time t, and we write $\bar{\mathcal H}_t = (\bar{\mathcal H}^1_t, \bar{\mathcal H}^2_t, \bar{\mathcal H}^3_t)$ for the joint history. We may wish to consider conditional probabilities such as

$$P\{X^1(t) = a,\ X^2(t) = b,\ X^3(t) = c \mid \bar{\mathcal H}_t\}$$

for a, b, c ∈ {0, 1}. Third, there is the possibility of precisely timed lagged dependence (or time-delayed synchrony): e.g., we may want to consider the probability

$$P\{X^1(s) = a,\ X^2(t) = b,\ X^3(u) = c\}, \tag{1}$$

where s, t, u may be distinct. Similarly, we might consider the conditional probability

$$P\{X^1(s) = a,\ X^2(t) = b,\ X^3(u) = c \mid \bar{\mathcal H}^1_s, \bar{\mathcal H}^2_t, \bar{\mathcal H}^3_u\}.$$

In principle we would want to consider all possible combinations of lags. Even for ν = 3 neurons, but especially as we contemplate ν ≫ 3, strong restrictions must be imposed in order to have any hope of estimating all these probabilities from relatively sparse data in a small number of repeated trials. To reduce model dimensionality we suggest four seemingly reasonable tactics: (i) considering models with only low-order interactions, (ii) assuming the probabilities vary smoothly across time t, (iii) restricting history effects to those that modify a neuron’s spiking behavior based on its own past spiking, and then (iv) applying analogues of standard loglinear model methodology. Combining these, we obtain tractable models for multiple binary time series to which standard methodology, such as maximum likelihood and smoothing, may be applied. In modeling synchronous spiking events as loglinear time series, however, it would be highly desirable to have a continuous-time representation, where binning becomes an acknowledged approximation. We therefore also provide a theoretical point process foundation for the discrete multivariate methods proposed here.

It is important to distinguish the probabilities $P^{1,2,3}_{a,b,c}(t) = P\{X^1(t) = a, X^2(t) = b, X^3(t) = c\}$ and $P\{X^1(t) = a, X^2(t) = b, X^3(t) = c \mid \bar{\mathcal H}_t\}$. The former are trial-averaged or marginal probabilities, while the latter are within-trial or conditional probabilities. Both might be of interest, but they quantify different things. As an extreme example, suppose (as is sometimes observed) that each of two neurons has highly rhythmic spiking at an approximately constant phase relationship with an oscillatory potential produced by some large network of cells. Marginally these neurons will show strongly dependent spiking. On the other hand, after taking account of the oscillatory rhythm by conditioning on each neuron’s spiking history and/or a suitable within-trial time-varying covariate, that dependence may vanish. Such a finding would be informative, as it would clearly indicate the nature of the dependence between the neurons. In Section 3 we give a less dramatic but similar example taken from the data described in Section 1.1.

We treat marginal and conditional analyses separately. Our use of two distinct frameworks is a consequence of the way time resolution will affect continuous-time approximations. We might begin by imagining the situation in which event times could be determined with infinite precision. In this case it is natural to assume, as is common in the point process literature, that no two processes have simultaneous events. As we indicate, this conception may be applied to marginal analysis. However, the event times are necessarily recorded to fixed accuracy, which becomes the minimal value of δ, and δ may be sufficiently large that simultaneous events become a practical possibility. Many recording devices, for example, store neural spike event times with an accuracy of 1 millisecond. Furthermore, the time scale of physiological synchrony (the proximity of spike events thought to be physiologically meaningful) is often considered to be on the order of δ = 5 milliseconds [cf. Grün, Diesmann and Aertsen (2002a), (2002b) and Grün (2009)]. For within-trial analyses of synchrony, the theoretical conception of simultaneous (or synchronous) spikes across multiple spike trains therefore becomes important and leads us to the formalism detailed below. The framework we consider here provides one way of capturing the notion that events within δ milliseconds of each other are essentially synchronous.

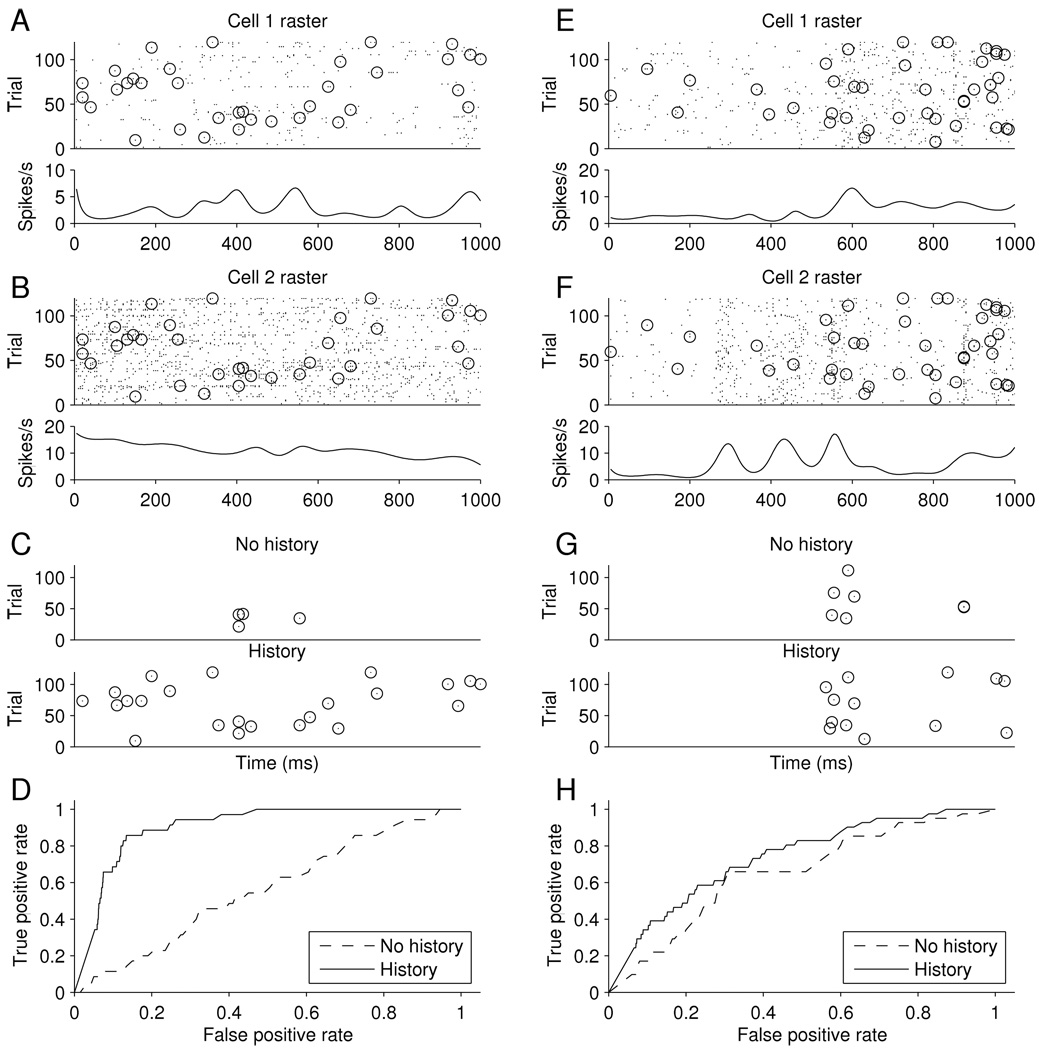

The rest of this article is organized as follows. Section 2 presents the methodology in three subsections: Sections 2.1 and 2.2 introduce marginal and conditional methods in the simplest case, while Section 2.3 discusses the use of loglinear models and associated methodology for analyzing spiking dependence. In Section 3 we illustrate the methodology by returning to the example of Section 1.1. The main purpose of our approach is to allow covariates to take account of such things as the irregular network rhythm displayed in Figure 1, so that synchrony can be understood as either related to the network effects or unrelated. Figure 2 displays synchronous spiking events for two different pairs of neurons, together with accompanying fits from continuous-time loglinear models. For both pairs the independence model fails to account for synchronous spiking. However, for one pair the apparent excess synchrony disappears when history effects are included in the loglinear model, while for the other pair it does not, leading to the conclusion that in the second case the excess synchrony must have some other source. Theory is presented in Sections 4–6. We add some discussion in Section 7. All proofs in this article are deferred to the Appendix.

Figure 2.

Synchronous spike analysis for two pairs of neurons. Results for one pair are shown on the left, in parts (A)–(D), and for the other pair on the right, in parts (E)–(H). Part (A): Response of a cell to repetitions of a 1 second drifting grating stimulus. The raster plot is shown above and the smoothed PSTH below. Part (B): Response from a second cell, as in (A). In both (A) and (B), spikes that are synchronous between the pair are circled. Part (C): Correct joint spike predictions from the model, shown as circles (as in parts (A) and (B)), when the false positive rate is set at 10%. In the top plot the joint spikes are from the history-independent model, as in (13), while in the bottom plot they are from the model in (16), including the network covariate in the history term. Part (D): ROC curves for the models in part (C). Parts (E), (F), (G), and (H) are similar to Parts (A), (B), (C), and (D) but for the second pair of neurons.

2 Methodology

In this section we present our continuous-time loglinear modeling methodology. We begin with the simplest case of ν = 2 neurons, presenting the main ideas in Sections 2.1 and 2.2 for the marginal and conditional cases, respectively, in terms of the probabilities $P^{1,2}_{a,b}(t) = P\{X^1(t) = a, X^2(t) = b\}$, $P\{X^1(t) = a, X^2(t) = b \mid \bar{\mathcal H}_t\}$, etc., for all a, b ∈ {0, 1}. We show how to pass to the continuous-time limit, thereby introducing point process technology and making sense of continuous-time smoothing, which is an essential feature of our approach. In Section 2.3 we reformulate using loglinear models, and then give continuous-time loglinear models for ν = 3. Our analyses in Section 3 are confined to ν = 2 and ν = 3 because of the paucity of higher-order synchronous spikes in our data. Our explicit models for ν = 3 should make clear how higher-order models are created. We give general recursive formulas in Sections 5 and 6.

2.1 Marginal methods for ν = 2

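The null hypothesis

$$H_0:\ P^{1,2}_{1,1}(t) = P^1_1(t)\,P^2_1(t) \quad \text{for all } t \in \mathcal{T}, \tag{2}$$

where $P^i_1(t) = P\{X^i(t) = 1\}$, is a statement that both neurons spike in the interval [t, t + δ), on average, at the rate determined by independence. Defining ζ(t) by

$$P^{1,2}_{1,1}(t) = P^1_1(t)\,P^2_1(t)\,\zeta(t), \tag{3}$$

we may rewrite (2) as

$$H_0:\ \zeta(t) = 1 \quad \text{for all } t \in \mathcal{T}. \tag{4}$$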

As in Ventura, et al. (2005), to assess H0 the general strategy we follow is to (i) smooth the observed-frequency estimates of ζ(t) across time t, and then (ii) form a suitable test statistic and compute a p-value using a bootstrap procedure. We may deal with time-lagged hypotheses similarly; e.g., for a lag h > 0 (a multiple of δ), we write

$$P\{X^1(t) = 1,\ X^2(t+h) = 1\} = P^1_1(t)\,P^2_1(t+h)\,\zeta_h(t), \tag{5}$$

then smooth the observed-frequency estimates of $\zeta_h(t)$ as a function of t, form an analogous test statistic and find a p-value.
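Step (i) starts from raw per-bin ratios of observed frequencies, which are then smoothed across t. The sketch below computes those raw ratios for ζ(t) and for the lagged version with lag h given as a whole number of bins. The function name and data layout are hypothetical, and the smoothing itself is not shown.

```python
import math


def zeta_hat(x1, x2, lag_bins=0):
    """Raw observed-frequency estimates of zeta(t) in (3), or of its lagged
    version in (5) with h = lag_bins * delta (illustrative sketch).

    x1[r][k], x2[r][k]: binary indicators for neurons 1 and 2 on trial r, bin k.
    Returns one value per bin k with k + lag_bins in range; the value is nan where
    either marginal frequency is zero, since the ratio is then undefined.
    """
    n_trials, n_bins = len(x1), len(x1[0])
    out = []
    for k in range(n_bins - lag_bins):
        p1 = sum(x1[r][k] for r in range(n_trials)) / n_trials
        p2 = sum(x2[r][k + lag_bins] for r in range(n_trials)) / n_trials
        p12 = sum(x1[r][k] * x2[r][k + lag_bins] for r in range(n_trials)) / n_trials
        out.append(p12 / (p1 * p2) if p1 > 0 and p2 > 0 else math.nan)
    return out
```

With realistic firing rates most bins contain few spikes, so these raw ratios are extremely noisy (and often undefined), which is why smoothing across t is an essential part of the procedure.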

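To formalize this approach we consider the counting processes $N^i_t$, i = 1, 2, where $N^i_t$ is the number of spikes of neuron i in [0, t), corresponding to the point processes $s^i_1 < s^i_2 < \cdots$ (as in Section 1.2 with ν = 2). Under regularity conditions, the following limits exist:

$$\lambda^i(t) = \lim_{\delta \to 0} \delta^{-1} P\{N^i_{t+\delta} - N^i_t = 1\}, \quad i = 1, 2, \qquad \lambda^{1,2}(t) = \lim_{\delta \to 0} \delta^{-2} P\{N^1_{t+\delta} - N^1_t = 1,\ N^2_{t+\delta} - N^2_t = 1\}. \tag{6}$$

Consequently, for small δ, we have

$$P^i_1(t) \approx \lambda^i(t)\,\delta, \quad i = 1, 2, \qquad P^{1,2}_{1,1}(t) \approx \lambda^{1,2}(t)\,\delta^2.$$

The smoothing of the observed-frequency estimates of $P^{1,2}_{1,1}(t)/\delta^2$ may be understood as producing an estimate $\hat\lambda^{1,2}(t)$ of $\lambda^{1,2}(t)$. The null hypothesis in (2) becomes, in the limit as δ → 0,

$$H_0:\ \lambda^{1,2}(t) = \lambda^1(t)\,\lambda^2(t) \quad \text{for all } t \in [0, T),$$

or equivalently,

$$H_0:\ \xi(t) = 1 \quad \text{for all } t \in [0, T), \qquad \text{where } \xi(t) = \frac{\lambda^{1,2}(t)}{\lambda^1(t)\,\lambda^2(t)} = \lim_{\delta \to 0} \zeta(t). \tag{7}$$

The lag-h case is treated similarly. Under mild conditions, Theorems 1 and 2 of Section 5 show that the above heuristic arguments hold for a continuous-time regular marked point process. This in turn gives a rigorous asymptotic justification (as δ → 0) for estimation and testing procedures such as those in steps (i) and (ii) mentioned above, following (4), and illustrated in Section 3.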

2.2 Conditional methods for ν = 2

To deal with history effects, Equation (3) is replaced with

$$P\{X^1(t) = 1,\ X^2(t) = 1 \mid \bar{\mathcal H}_t\} = P\{X^1(t) = 1 \mid \bar{\mathcal H}^1_t\}\, P\{X^2(t) = 1 \mid \bar{\mathcal H}^2_t\}\, \zeta(t), \tag{8}$$

where $\bar{\mathcal H}^i_t$, i = 1, 2, are, as in Section 1.2, the binned spiking histories of neurons 1 and 2, respectively, on the interval [0, t), and $\bar{\mathcal H}_t = (\bar{\mathcal H}^1_t, \bar{\mathcal H}^2_t)$. Analogous to (4), the null hypothesis is

$$H_0:\ \zeta(t) = 1 \quad \text{for all } t \in \mathcal{T}.$$

We note that there are two substantial simplifications in (8). First, the individual-neuron factors are $P\{X^i(t) = 1 \mid \bar{\mathcal H}^i_t\}$ rather than $P\{X^i(t) = 1 \mid \bar{\mathcal H}_t\}$, which says that only neuron i’s own history is relevant in modifying its spiking probability (not the other neuron’s history). Second, ζ(t) does not depend on the spiking history $\bar{\mathcal H}_t$. This is important for what it claims about the physiology, for the way it simplifies statistical analysis, and for the constraint it places on the point process framework. Physiologically, it decomposes excess spiking into history-related effects and stimulus-related effects, which allows the kind of interpretation alluded to in Section 1 and presented in our data analysis in Section 3. Statistically, it improves power because tests of H0 effectively pool information across trials, thereby increasing the effective sample size.

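Consider the counting processes $N^i_t$, i = 1, 2, as in Section 2.1. Under regularity conditions, the following limits exist for t ∈ [0, T):

$$\begin{aligned}
\lambda^i(t \mid \mathcal H^i_t) &= \lim_{\delta \to 0} \delta^{-1} P\{N^i_{t+\delta} - N^i_t = 1 \mid \bar{\mathcal H}^i_t\}, \quad i = 1, 2,\\
\lambda^{1,2}(t \mid \mathcal H_t) &= \lim_{\delta \to 0} \delta^{-2} P\{N^1_{t+\delta} - N^1_t = 1,\ N^2_{t+\delta} - N^2_t = 1 \mid \bar{\mathcal H}_t\},\\
\xi(t) &= \lim_{\delta \to 0} \zeta(t),
\end{aligned} \tag{9}$$

where $\mathcal H_t = \lim_{\delta \to 0} \bar{\mathcal H}_t$ and $\mathcal H^i_t = \lim_{\delta \to 0} \bar{\mathcal H}^i_t$, i = 1, 2. For sufficiently small δ, we have

$$P\{X^i(t) = 1 \mid \bar{\mathcal H}^i_t\} \approx \lambda^i(t \mid \mathcal H^i_t)\,\delta, \quad i = 1, 2, \qquad P\{X^1(t) = 1,\ X^2(t) = 1 \mid \bar{\mathcal H}_t\} \approx \lambda^{1,2}(t \mid \mathcal H_t)\,\delta^2, \tag{10}$$

for all t ∈ 𝒯. Again following Ventura, et al. (2005), we may smooth the observed-frequency estimates of $P\{X^1(t) = 1, X^2(t) = 1 \mid \bar{\mathcal H}_t\}/\delta^2$ to produce an estimate of $\lambda^{1,2}(t \mid \mathcal H_t)$, and smooth the observed-frequency estimates of $P\{X^i(t) = 1 \mid \bar{\mathcal H}^i_t\}/\delta$ to produce estimates of $\lambda^i(t \mid \mathcal H^i_t)$, i = 1, 2. Letting δ → 0 in (8), we obtain

$$\lambda^{1,2}(t \mid \mathcal H_t) = \lambda^1(t \mid \mathcal H^1_t)\,\lambda^2(t \mid \mathcal H^2_t)\,\xi(t). \tag{11}$$

Consequently, for sufficiently small δ, a conditional test of H0: ζ(t) = 1 for all t becomes a test of the null hypothesis $\lambda^{1,2}(t \mid \mathcal H_t) = \lambda^1(t \mid \mathcal H^1_t)\,\lambda^2(t \mid \mathcal H^2_t)$ for all t or, equivalently, of H0: ξ(t) = 1 for all t, the same null-hypothetical statement as in (7).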

In attempting to make Equation (9) rigorous, a difficulty arises: for a regular marked point process, the function ξ need not be independent of the spiking history. This would create a fundamental mismatch between the discrete data-analytic method and its continuous-time limit. The key to avoiding this problem is to enforce the sparsity condition (10). Specifically, the probabilities $P\{X^i(t) = 1 \mid \bar{\mathcal H}^i_t\}$ are of order O(δ), while the probabilities $P\{X^1(t) = 1, X^2(t) = 1 \mid \bar{\mathcal H}_t\}$ are of order O(δ²). This also allows independence models within the marked point process framework. Section 6 proposes a class of marked point process models indexed by δ and provides results that validate the heuristics above.
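The orders O(δ) and O(δ²) can be checked numerically in the simplest independent case. The sketch below assumes, purely for illustration, that the two neurons are independent homogeneous Poisson processes with constant rates (real neurons have refractory periods and time-varying intensities); the helper name is hypothetical. As δ shrinks, P(X¹ = 1)/δ approaches the rate, while the joint probability divided by δ² approaches the product of the rates.

```python
import math


def bin_probabilities(lam1, lam2, delta):
    """P(X1 = 1), P(X2 = 1) and P(X1 = 1, X2 = 1) for one bin of width delta when
    the two neurons are modeled, for illustration only, as independent Poisson
    processes with constant rates lam1, lam2 (spikes/s)."""
    p1 = -math.expm1(-lam1 * delta)  # 1 - exp(-lam1*delta), computed accurately
    p2 = -math.expm1(-lam2 * delta)
    return p1, p2, p1 * p2


for delta in (1e-2, 1e-3, 1e-4):
    p1, p2, p12 = bin_probabilities(20.0, 30.0, delta)
    # p1/delta tends to lam1 = 20, while p12/delta**2 tends to lam1*lam2 = 600
    print(f"delta={delta:g}  p1/delta={p1 / delta:.3f}  p12/delta^2={p12 / delta**2:.1f}")
```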

2.3 Loglinear models

We now reformulate in terms of loglinear models the procedures sketched in Sections 2.1 and 2.2 for ν = 2 neurons, and then indicate the way generalizations proceed when ν ≥ 3.

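In the marginal case of Section 2.1, it is convenient to define, for a, b ∈ {0, 1}, the probability that every neuron indexed by a 1 spikes in [t, t + δ),

$$\pi_{a,b}(t) = P\{X^1(t) \ge a,\ X^2(t) \ge b\},$$

so that $\pi_{0,0}(t) = 1$, $\pi_{1,0}(t) = P^1_1(t)$, $\pi_{0,1}(t) = P^2_1(t)$ and $\pi_{1,1}(t) = P^{1,2}_{1,1}(t)$. Equation (3) implies that

$$\log \pi_{a,b}(t) = a \log P^1_1(t) + b \log P^2_1(t) + ab \log \zeta(t) \tag{12}$$

for all a, b ∈ {0, 1} and t ∈ 𝒯, and in the continuous-time limit, using (6), we write

$$\log \lambda^{1,2}(t) = \log \lambda^1(t) + \log \lambda^2(t) + \log \xi(t) \tag{13}$$

for t ∈ [0, T). The null hypothesis may then be written as

$$H_0:\ \log \xi(t) = 0 \quad \text{for all } t \in [0, T). \tag{14}$$

In the conditional case of Section 2.2, we similarly define

$$\pi_{a,b}(t \mid \bar{\mathcal H}_t) = P\{X^1(t) \ge a,\ X^2(t) \ge b \mid \bar{\mathcal H}_t\},$$

and we may rewrite (8) as the loglinear model

$$\log \pi_{a,b}(t \mid \bar{\mathcal H}_t) = a \log P\{X^1(t) = 1 \mid \bar{\mathcal H}^1_t\} + b \log P\{X^2(t) = 1 \mid \bar{\mathcal H}^2_t\} + ab \log \zeta(t) \tag{15}$$

for all a, b ∈ {0, 1} and t ∈ 𝒯, and in the continuous-time limit we rewrite (11) in the form

$$\log \lambda^{1,2}(t \mid \mathcal H_t) = \log \lambda^1(t \mid \mathcal H^1_t) + \log \lambda^2(t \mid \mathcal H^2_t) + \log \xi(t) \tag{16}$$

for t ∈ [0, T). The null hypothesis may again be written as in (14).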

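Rewriting the model in the loglinear forms (12), (13), (15), and (16) allows us to generalize to ν ≥ 3 neurons. For example, with the obvious extensions of the previous definitions, such as $\pi_{a,b,c}(t) = P\{X^1(t) \ge a, X^2(t) \ge b, X^3(t) \ge c\}$, for ν = 3 neurons the two-way interaction model in the marginal case becomes

$$\log \pi_{a,b,c}(t) = a \log P^1_1(t) + b \log P^2_1(t) + c \log P^3_1(t) + ab \log \zeta_{12}(t) + ac \log \zeta_{13}(t) + bc \log \zeta_{23}(t) \tag{17}$$

for all a, b, c ∈ {0, 1} and t ∈ 𝒯, and, in continuous time, with $\lambda_{a,b,c}(t) = \lim_{\delta \to 0} \delta^{-(a+b+c)} \pi_{a,b,c}(t)$ (so that, e.g., $\lambda_{1,0,0}(t) = \lambda^1(t)$ and $\lambda_{1,1,0}(t) = \lambda^{1,2}(t)$),

$$\log \lambda_{a,b,c}(t) = a \log \lambda^1(t) + b \log \lambda^2(t) + c \log \lambda^3(t) + ab \log \xi_{12}(t) + ac \log \xi_{13}(t) + bc \log \xi_{23}(t)$$

for all a, b, c ∈ {0, 1} and t ∈ [0, T). The general form of (17) is given by Equation (28) in Section 5. In the conditional case, the two-way interaction model becomes

$$\log \pi_{a,b,c}(t \mid \bar{\mathcal H}_t) = a \log P\{X^1(t) = 1 \mid \bar{\mathcal H}^1_t\} + b \log P\{X^2(t) = 1 \mid \bar{\mathcal H}^2_t\} + c \log P\{X^3(t) = 1 \mid \bar{\mathcal H}^3_t\} + ab \log \zeta_{12}(t) + ac \log \zeta_{13}(t) + bc \log \zeta_{23}(t) \tag{18}$$

for all a, b, c ∈ {0, 1} and t ∈ 𝒯, and in continuous time,

$$\log \lambda_{a,b,c}(t \mid \mathcal H_t) = a \log \lambda^1(t \mid \mathcal H^1_t) + b \log \lambda^2(t \mid \mathcal H^2_t) + c \log \lambda^3(t \mid \mathcal H^3_t) + ab \log \xi_{12}(t) + ac \log \xi_{13}(t) + bc \log \xi_{23}(t)$$

for all a, b, c ∈ {0, 1} and t ∈ [0, T). In either the marginal or conditional case, the null hypothesis of independence may be written as

$$H_0:\ \log \xi_{12}(t) = \log \xi_{13}(t) = \log \xi_{23}(t) = 0 \quad \text{for all } t \in [0, T). \tag{19}$$

On the other hand, we could include the additional term $abc \log \xi_{123}(t)$ and use the null hypothesis of no three-way interaction

$$H_0:\ \log \xi_{123}(t) = 0 \quad \text{for all } t \in [0, T). \tag{20}$$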

These loglinear models offer a simple and powerful way to study dependence among neurons when spiking history is taken into account. They have an important dimensionality-reduction property: the higher-order terms are asymptotically independent of history. In practice this provides a huge advantage: synchronous spikes are relatively rare, and in assessing excess synchronous spiking with these models the data may be pooled over different histories, leading to a much larger effective sample size. The general conditional model in Equation (34) retains this structure. An additional feature of these loglinear models is that time-varying covariates may be included without introducing new complications. In Section 3 we use a covariate to characterize the network up states, which are visible in part C of Figure 1, simply by including it in calculating each of the individual-neuron conditional intensities in (16).

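Sometimes, as in the data we analyze here, the synchronous events are too sparse to allow estimation of time-varying excess synchrony, and we must assume it to be constant, ζ(t) = ζ for all t. Thus, for ν = 2, the models of (12) or (15) take simplified forms in which ζ(t) is replaced by the constant ζ, and we would use different test statistics to test the null hypothesis H0: ζ = 1. To distinguish the marginal and conditional cases we replace ζ(t) by $\zeta_H$ in (15) and then also write H0: $\zeta_H = 1$. Moving to continuous time, which is simpler computationally, we write ξ(t) = ξ (or $\xi_H$ in the conditional case), estimate ξ and $\xi_H$, and test H0: ξ = 1 and H0: $\xi_H = 1$. Specifically, we apply the loglinear models (12), (13), (15), and (16) in two steps. First, we smooth the respective PSTHs to produce smoothed curves $\hat\lambda^i(t)$, as in parts A and B of Figure 1. Second, ignoring estimation uncertainty and taking $\lambda^i(t) = \hat\lambda^i(t)$, we estimate the constant ξ. Using the point process representation of joint spiking (justified by the results in Sections 5 and 6), under which a joint spike occurs in [t, t + δ) with probability approximately λ(t)δ², so that joint spikes occur at rate approximately δλ(t), we may then write

$$\log L(\xi) = \sum_{i=1}^{N} \log \lambda(t_i) - \delta \int_0^T \lambda(t)\,dt + \text{const},$$

where the sum is over the joint spike times $t_i$ and λ(t) is replaced by the right-hand side of (13), in the marginal case, or (16), in the conditional case. It is easy to maximize the likelihood L(ξ) analytically: setting the left-hand side to ℓ(ξ), in the marginal case we have

$$\ell(\xi) = N \log \xi - \xi\,\delta \int_0^T \lambda^1(t)\,\lambda^2(t)\,dt + \text{const},$$

where N is the number of joint (synchronous) spikes (the number of terms in the sum), while in the conditional case we have the analogous formula

$$\ell(\xi_H) = N \log \xi_H - \xi_H\,\delta \int_0^T \lambda^1(t \mid \mathcal H^1_t)\,\lambda^2(t \mid \mathcal H^2_t)\,dt + \text{const},$$

and setting the derivatives $\ell'(\xi)$ and $\ell'(\xi_H)$ to 0 and solving gives

$$\hat\xi = \frac{N}{\delta \int_0^T \lambda^1(t)\,\lambda^2(t)\,dt} \tag{21}$$

and

$$\hat\xi_H = \frac{N}{\delta \int_0^T \lambda^1(t \mid \mathcal H^1_t)\,\lambda^2(t \mid \mathcal H^2_t)\,dt}, \tag{22}$$

which, in both cases, is the ratio of the number of observed joint spikes to the number expected under independence (with repeated trials, N counts joint spikes on all trials and the integrals are summed over trials).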
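In binned form, the ratio in (21) and (22) only needs the fitted intensities on a grid. The sketch below is an illustration under stated assumptions, not the authors' implementation: intensities are supplied per trial and per bin in spikes per second, the integral is replaced by a Riemann sum, and the function name `xi_hat` is hypothetical. Each bin contributes $\lambda^1\lambda^2\delta^2$ expected joint spikes under independence, because $P\{X^i(t) = 1\} \approx \lambda^i(t)\,\delta$.

```python
def xi_hat(n_joint, lam1, lam2, delta):
    """Observed-to-expected ratio of joint spikes, a binned version of (21)/(22).

    n_joint: N, the number of joint spikes pooled over all trials.
    lam1[r][k], lam2[r][k]: intensities (spikes/s) for trial r and bin k. For the
        marginal estimate, repeat the smoothed PSTH on every trial; for the
        conditional estimate, use the trial-specific history-dependent fits.
    delta: bin width in seconds.
    """
    # Under independence a joint spike in bin k has probability ~ lam1*lam2*delta**2;
    # summing over bins and trials approximates delta * integral(lam1*lam2 dt).
    expected = sum(l1 * l2 * delta ** 2
                   for row1, row2 in zip(lam1, lam2)
                   for l1, l2 in zip(row1, row2))
    return n_joint / expected
```

For example, two trials of 1 s at constant rates of 20 and 30 spikes/s with δ = 5 ms give 6 expected joint spikes, so 9 observed joint spikes yield an estimate of 1.5.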

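We apply (21) and (22) in Section 3. To test H0: ξ = 1 and H0: $\xi_H = 1$ we use a bootstrap procedure in which we generate spike trains under the relevant null-hypothetical model. This is carried out in discrete time and requires all 4 cell probabilities at every time t ∈ 𝒯. These are easily obtained by subtraction using $\hat P^i_1(t) = \hat\lambda^i(t)\,\delta$, i = 1, 2, and $\hat\zeta = \hat\xi$ or, in the conditional case, $\hat P\{X^i(t) = 1 \mid \bar{\mathcal H}^i_t\} = \hat\lambda^i(t \mid \mathcal H^i_t)\,\delta$, i = 1, 2, and $\hat\zeta_H = \hat\xi_H$; for example, $\hat P^{1,2}_{1,1}(t) = \hat P^1_1(t)\,\hat P^2_1(t)\,\hat\zeta$ and $\hat P^{1,2}_{1,0}(t) = \hat P^1_1(t) - \hat P^{1,2}_{1,1}(t)$. As we said above, $\lambda^i(t) = \hat\lambda^i(t)$ is obtained from the preliminary step of smoothing the PSTH. Similarly, the conditional intensities $\hat\lambda^i(t \mid \mathcal H^i_t)$ are obtained from smooth history-dependent intensity models such as those discussed in Kass, et al. (2005). In the analyses reported here we have used fixed-knot splines to describe variation across time t.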
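The subtraction step and a parametric bootstrap for the marginal case might be sketched as follows. Several choices here are assumptions of the sketch rather than details from the paper: the per-bin spike probabilities are held fixed (estimation uncertainty ignored, as in the text), bins are simulated independently (appropriate for the marginal model only; conditional pseudo-data would require simulating history-dependent intensities bin by bin, which is not shown), bootstrap replicates with no joint spikes are dropped, and all function names are hypothetical.

```python
import math
import random


def cell_probs(p1, p2, zeta):
    """Cell probabilities (p00, p01, p10, p11) for one bin, obtained by subtraction
    from the marginal spike probabilities p1, p2 and the synchrony factor zeta."""
    p11 = p1 * p2 * zeta
    p10 = p1 - p11  # neuron 1 spikes, neuron 2 does not
    p01 = p2 - p11  # neuron 2 spikes, neuron 1 does not
    p00 = 1.0 - p10 - p01 - p11
    if min(p00, p01, p10, p11) < 0.0:
        raise ValueError("p1, p2 and zeta imply a negative cell probability")
    return p00, p01, p10, p11


def simulate_bin(p1, p2, zeta, rng):
    """Draw (X1(t), X2(t)) for one bin from the four cell probabilities."""
    _, p01, p10, p11 = cell_probs(p1, p2, zeta)
    u = rng.random()
    if u < p11:
        return 1, 1
    if u < p11 + p10:
        return 1, 0
    if u < p11 + p10 + p01:
        return 0, 1
    return 0, 0


def bootstrap_z(log_xi_obs, p1_bins, p2_bins, n_trials, n_boot=1000, seed=0):
    """z-ratio log(xi_hat) / SE, where SE is the standard deviation of log(xi_hat)
    over pseudo-data sets generated under H0 (zeta = 1). The per-bin probabilities
    p1_bins, p2_bins (e.g. smoothed PSTH values times delta) are held fixed."""
    rng = random.Random(seed)
    expected = n_trials * sum(p1 * p2 for p1, p2 in zip(p1_bins, p2_bins))
    stats = []
    for _ in range(n_boot):
        n_joint = 0
        for _ in range(n_trials):
            for p1, p2 in zip(p1_bins, p2_bins):
                a, b = simulate_bin(p1, p2, 1.0, rng)
                n_joint += a * b
        if n_joint > 0:  # log(0) is undefined; this sketch drops such replicates
            stats.append(math.log(n_joint / expected))
    mean = sum(stats) / len(stats)
    se = math.sqrt(sum((s - mean) ** 2 for s in stats) / (len(stats) - 1))
    return log_xi_obs / se
```

A call such as `bootstrap_z(math.log(xi), p1_bins, p2_bins, n_trials=120)` would then give a z-ratio of the kind reported in Section 3, under the assumptions listed above.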

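In the case of 3 or more neurons, the analogous estimates and cell probabilities must, in general, be obtained by a version of iterative proportional fitting. For ν = 3, to test the null hypothesis (20) we follow the steps leading to (21) and (22). Under the assumption of constant $\zeta_{123}$ (and hence constant $\xi_{123}$), we obtain

$$\hat\xi_{123} = \frac{N_{123}}{\delta^2 \int_0^T \lambda^1(t)\,\lambda^2(t)\,\lambda^3(t)\,\hat\xi_{12}(t)\,\hat\xi_{13}(t)\,\hat\xi_{23}(t)\,dt} \tag{23}$$

and

$$\hat\xi_{H,123} = \frac{N_{123}}{\delta^2 \int_0^T \lambda^1(t \mid \mathcal H^1_t)\,\lambda^2(t \mid \mathcal H^2_t)\,\lambda^3(t \mid \mathcal H^3_t)\,\hat\xi_{H,12}(t)\,\hat\xi_{H,13}(t)\,\hat\xi_{H,23}(t)\,dt}, \tag{24}$$

where $N_{123}$ is the number of triply synchronous spikes. In Section 3 we fit (17) and report a bootstrap test of the hypothesis (20) using the test statistic $\hat\xi_{123}$ in (23).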
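The same observed-to-expected logic extends to any set of m neurons: each bin contributes the product of the m intensities, times any already-fitted lower-order synchrony factors, times δ^m expected joint events. The sketch below assumes, for simplicity, that the fitted pairwise factors are constant in time and are passed as a single product `fitted_factor`; the function name and data layout are hypothetical.

```python
import math


def xi_hat_subset(n_joint, intensities, delta, fitted_factor=1.0):
    """Observed-to-expected ratio of joint spikes for a set of m neurons (sketch).

    n_joint: number of bins, pooled over trials, in which all m neurons spiked
        (N_123 for m = 3).
    intensities: list of m nested lists; intensities[i][r][k] is the fitted
        intensity (spikes/s) of neuron i on trial r in bin k.
    fitted_factor: product of the already-estimated lower-order synchrony factors,
        e.g. xi12 * xi13 * xi23, assumed constant over time in this sketch.
    """
    m = len(intensities)
    n_trials, n_bins = len(intensities[0]), len(intensities[0][0])
    # Each bin contributes prod_i lambda_i * fitted_factor * delta**m expected joint
    # events, a Riemann-sum version of delta**(m - 1) times the time integral.
    total = sum(math.prod(lam[r][k] for lam in intensities)
                for r in range(n_trials) for k in range(n_bins))
    expected = total * fitted_factor * delta ** m
    return n_joint / expected


# Three neurons at 20, 30 and 40 spikes/s for 0.5 s (100 bins of 5 ms), one trial,
# no fitted pairwise excess: 0.3 triple events are expected, so 3 observed gives about 10.
lams = [[[20.0] * 100], [[30.0] * 100], [[40.0] * 100]]
print(xi_hat_subset(3, lams, delta=0.005))
```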

3 Data Analysis

We applied the methods of Section 2.3 to a subset of the data described in Section 1.1 and present the results here. We plan to report a more comprehensive analysis elsewhere.

We took δ = 5 milliseconds (ms), which is a commonly used window width in studies of synchronous spiking. Raster plots of spike trains across repeated trials from a pair of neurons are shown in parts A and B of Figure 2, with synchronous events indicated by circles. Below each raster plot is a smoothed PSTH; that is, the two plots show smoothed estimates $\hat\lambda^1(t)$ and $\hat\lambda^2(t)$ of $\lambda^1(t)$ and $\lambda^2(t)$ in (6), the units being spikes per second. Smoothing was performed by fitting a generalized additive model using cubic splines with knots spaced 100 ms apart. Specifically, we applied Poisson regression to the count data resulting from pooling the binary spike indicators across trials: for each time bin the count was the number of trials on which a spike occurred. To test H0 under the model in (13) we applied (21) to find $\log\hat\xi$. We then computed a parametric bootstrap standard error of $\log\hat\xi$ by generating pseudo-data from model (13) assuming H0: log ξ = 0. We generated 1000 such pseudo-data sets, giving 1000 values of $\log\hat\xi$, and used the standard deviation of those values as a standard error, obtaining an observed z-ratio test statistic of 3.03 (p = .0012, one-sided, according to asymptotic normality).
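The text fits the PSTH by Poisson regression on cubic splines with knots every 100 ms. To keep the illustration free of third-party dependencies, the sketch below swaps in a simpler technique, a Gaussian-kernel (Nadaraya-Watson) smoother of the trial-pooled rate, so it is not the authors' procedure, and its bandwidth is an arbitrary tuning choice. It also computes the z-ratio and a one-sided normal p-value; a z-ratio of 3.03 gives p of about .0012, consistent with the value reported above. Function names are hypothetical.

```python
import math


def smoothed_psth(trials, delta, bandwidth):
    """Kernel-smoothed PSTH in spikes/s from binary indicators trials[r][k].

    Stand-in for the spline-based Poisson regression described in the text:
    a Gaussian-kernel (Nadaraya-Watson) average of the pooled per-bin rate.
    """
    n_trials, n_bins = len(trials), len(trials[0])
    # Pool across trials: number of trials with a spike in bin k, in spikes/s.
    rate = [sum(tr[k] for tr in trials) / (n_trials * delta) for k in range(n_bins)]
    h = bandwidth / delta  # kernel bandwidth measured in bins
    smooth = []
    for k in range(n_bins):
        w = [math.exp(-0.5 * ((k - j) / h) ** 2) for j in range(n_bins)]
        smooth.append(sum(wj * rj for wj, rj in zip(w, rate)) / sum(w))
    return smooth


def z_ratio_and_p(log_xi_hat, bootstrap_se):
    """z = log(xi_hat) / SE and its one-sided (upper-tail) normal p-value."""
    z = log_xi_hat / bootstrap_se
    return z, 0.5 * math.erfc(z / math.sqrt(2.0))


print(z_ratio_and_p(3.03, 1.0))
```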

The highly significant z-ratio shows that there is excess synchronous spiking beyond what would be expected from the varying firing rates of the two neurons under independence. However, it does not address the source of the excess synchronous spiking. The excess synchronous spiking could depend on the stimulus or, alternatively, it might be due to the slow waves of population activity evident in part C of Figure 1, whose timing varies from trial to trial and therefore is not locked to the stimulus. To examine the latter possibility we applied a within-trial loglinear model as in (16), except that we incorporated into the history effect not only the history of each neuron but also a covariate representing the population effect. Specifically, for neuron i (i = 1, 2) we used the same generalized additive model as before, but with two additional variables. The first was a variable that, for each time bin, was equal to the number of neuron i spikes that had occurred in the previous 100 ms. The second was a variable that, for each time bin, was equal to the number of spikes that occurred in the previous 100 ms across the whole population of neurons, other than neurons 1 and 2. We thereby obtained fitted estimates of $\lambda^i(t \mid \mathcal H^i_t)$, i = 1, 2. Note that the fits for the independence model, defined by applying (7) to (11), now vary from trial to trial due to the history effects. Applying (22) we found $\log\hat\xi_H$, and then again computed a bootstrap standard error of $\log\hat\xi_H$ from 1000 pseudo-data sets, giving a z-ratio of .39, which is clearly not significant.
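The two history covariates described above are simple window counts over the binned data. The sketch below computes them for one trial; the data layout, the 0-based neuron indices, and the function name are assumptions, and the subsequent Poisson regression in which these covariates enter is not reproduced.

```python
def history_covariates(trial, i, exclude, delta, window=0.1):
    """Per-bin history covariates for neuron i on one trial (sketch).

    trial[j][k] is the 0/1 indicator for neuron j (0-based) in bin k.
    Returns (own, pop): own[k] counts neuron i's spikes in the `window` seconds
    before bin k; pop[k] counts spikes in the same window across all neurons not
    listed in `exclude` (e.g. the two neurons of the pair being analyzed).
    """
    w = round(window / delta)  # window length in bins (100 ms / 5 ms = 20)
    n_bins = len(trial[0])
    others = [j for j in range(len(trial)) if j not in exclude]
    pop_per_bin = [sum(trial[j][k] for j in others) for k in range(n_bins)]
    own, pop = [], []
    for k in range(n_bins):
        start = max(0, k - w)  # bins start..k-1 strictly precede bin k
        own.append(sum(trial[i][start:k]))
        pop.append(sum(pop_per_bin[start:k]))
    return own, pop
```

Because the windows end strictly before bin k, the covariates use only past spiking, in keeping with the history terms in (16).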

Raster plots for a different pair of neurons are shown in parts (E) and (F) of Figure 2, and the same procedures were applied to this pair. Here, the z-ratio for testing H0 under the marginal model was 3.77 (p < .0001), while that for testing H0 under the conditional model remained highly significant at 3.57 (p = .0002). In other words, using the loglinear model methodology we have discovered two pairs of V1 neurons with quite different behavior. For the first pair, synchrony can be explained entirely by network effects, while for the second pair it cannot; this suggests that, for the second pair, some of the excess synchrony may instead be stimulus-related.

We also compared the marginal and conditional models (13) and (16) using ROC curves. Specifically, for the binary joint spiking data we used each model to predict a joint spike whenever the intensity exceeded a given constant: for the marginal model whenever log λ1,2(t) > c_marginal, and for the conditional model whenever log λ1,2(t|ℋt) > c_conditional. The choice of the constants c_marginal and c_conditional reflects a trade-off between false positive and true positive rates (analogous to type I error and power), and as the constants vary, the plot of true versus false positive rates traces out the ROC curve. To determine the true and false positive rates we performed ten-fold cross-validation, repeatedly fitting on 90% of the trials and predicting on the remaining 10%. The two resulting ROC curves are shown in part (D) of Figure 2, labeled “no history” and “history,” respectively. To be clear, in the two cases we included the terms corresponding, respectively, to ξ and ξH, and in the history case we included both the auto-history and the network-history variables specified above. The ROC curve for the conditional model strongly dominates that for the marginal model, indicating far better predictive power. In part (C) of Figure 2 we display the true positive joint spike predictions when the false positive rate was held at 10%. These correctly predicted joint spikes may be compared with the complete set displayed in parts (A) and (B) of the figure. The top display in part (C), labeled “no history,” shows that only a few joint spikes were correctly predicted by the marginal model, while the large majority were correctly predicted by the conditional model. Furthermore, the correctly predicted joint spikes are spread fairly evenly across time. In contrast, the ROC curves for the second pair of neurons, shown in part (G) of Figure 2, are close to each other: inclusion of the history effects (which were statistically significant) did not greatly improve predictive power. In addition, for this pair the correctly predicted synchronous spikes are clustered in time, with the main cluster occurring near a peak in the individual-neuron firing-rate functions shown in the two smoothed PSTHs in parts (E) and (F).
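The threshold sweep behind an ROC curve, and the true positive rate at a fixed 10% false positive rate, can be sketched as follows. This is an illustrative assumption about the bookkeeping, not the authors' code: `scores` would be predicted log intensities for held-out bins (pooled over the ten cross-validation folds), `outcomes` the observed joint-spike indicators, and the helper names are hypothetical. Folds are formed over trials, matching the 90%/10% split described above.

```python
import numpy as np


def roc_points(scores, outcomes):
    """False/true positive rates when predicting a joint spike whenever score > c.

    Thresholds c sweep from the largest score (nothing predicted) down to
    -inf (everything predicted), so the curve runs from (0, 0) to (1, 1).
    """
    scores = np.asarray(scores, dtype=float)
    y = np.asarray(outcomes, dtype=bool)
    thresholds = np.concatenate([np.unique(scores)[::-1], [-np.inf]])
    n_pos, n_neg = y.sum(), (~y).sum()
    fpr = np.array([np.sum((scores > c) & ~y) / n_neg for c in thresholds])
    tpr = np.array([np.sum((scores > c) & y) / n_pos for c in thresholds])
    return fpr, tpr


def tpr_at_fpr(fpr, tpr, max_fpr=0.10):
    """Largest true positive rate attainable with false positive rate <= max_fpr."""
    return tpr[fpr <= max_fpr].max()


def trial_folds(n_trials, n_folds=10, seed=0):
    """Random partition of trial indices: fit on n_folds - 1 folds, predict the rest."""
    rng = np.random.default_rng(seed)
    return np.array_split(rng.permutation(n_trials), n_folds)


# Usage with toy scores (predicted log intensities) and observed joint spikes.
fpr, tpr = roc_points([0.9, 0.8, 0.7, 0.6, 0.5, 0.4], [1, 1, 0, 1, 0, 0])
print(tpr_at_fpr(fpr, tpr))
```

One curve per model (with and without history terms) would be computed from the same held-out bins so the two curves are directly comparable.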

Taking all of the results together, our analysis suggests that the first pair of neurons produced excess synchronous spikes solely in conjunction with network effects, which are unrelated to the stimulus, while for the second pair of neurons some of the excess synchronous spikes occurred separately from the network activity and were, instead, often time-locked and, therefore, stimulus-related.

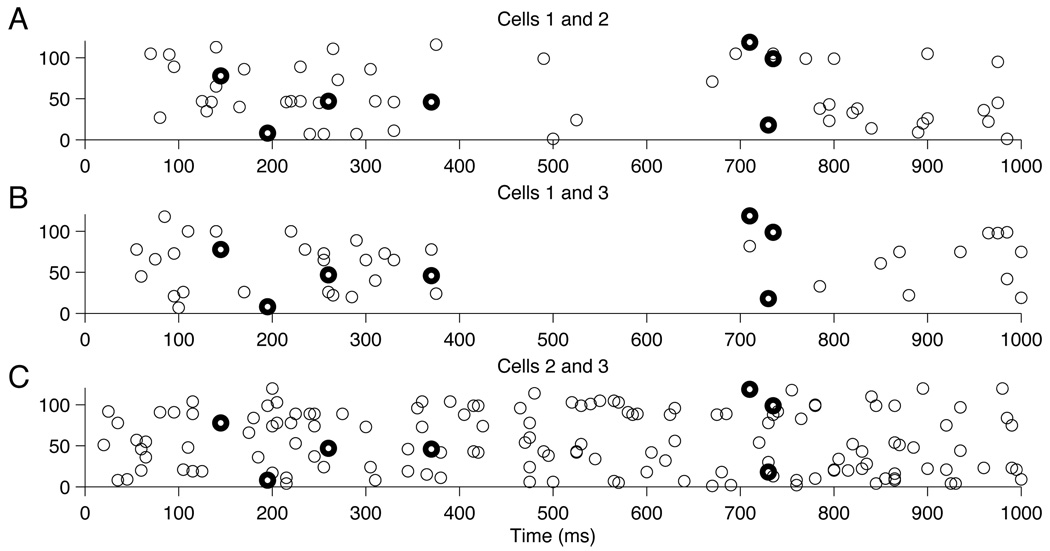

We also tried to assess whether 2-way interactions were sufficient to explain observed 3-way events by fitting the no-3-way interaction model given by (17), and then testing the null hypothesis in (20). We did this for a particular set of 3 neurons, whose joint spikes are displayed in Figure 3. The method is analogous to that carried out above for pairs of neurons, in the sense that the test statistic was ξ̂123 given by (23) and a parametric bootstrap procedure, based on the fit of (17), was used to compute an approximate p-value. Fitting of (17) required an iterative proportional fitting procedure, which we will describe in detail elsewhere. We obtained p = .16, indicating no significant 3-way interaction. In other words, for these three neurons, 2-way excess joint spiking appears able to explain the occurrence of the 3-way joint spikes. However, as may be seen in Figure 3, there are very few 3-way spikes in the data. We mention this issue again in our discussion.
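Since the iterative proportional fitting procedure for the time-varying model (17) is deferred to other work, the following is only a sketch of classical IPF for the no-3-way-interaction loglinear model on a single 2 × 2 × 2 table of joint spiking counts, ignoring time variation and history effects. The table layout, counts, and function name are hypothetical. Each cycle rescales the fitted table to match one observed two-way margin at a time; the observed-to-fitted ratio of the (1, 1, 1) cell is a crude analogue of a 3-way excess, not necessarily the statistic in (23).

```python
import numpy as np


def ipf_no_three_way(counts, tol=1e-10, max_iter=1000):
    """Fit the no-3-way-interaction loglinear model to a 3-way table by IPF.

    counts[a1, a2, a3] = number of bins with X1 = a1, X2 = a2, X3 = a3.
    All two-way margins must be positive.
    """
    counts = np.asarray(counts, dtype=float)
    fit = np.ones_like(counts)
    for _ in range(max_iter):
        previous = fit.copy()
        # Rescale in turn to match the (1,2), (1,3) and (2,3) two-way margins.
        fit *= (counts.sum(axis=2) / fit.sum(axis=2))[:, :, None]
        fit *= (counts.sum(axis=1) / fit.sum(axis=1))[:, None, :]
        fit *= (counts.sum(axis=0) / fit.sum(axis=0))[None, :, :]
        if np.max(np.abs(fit - previous)) < tol:
            break
    return fit


# Usage with hypothetical counts of bins by joint spiking pattern.
counts = np.array([[[400.0, 30.0], [25.0, 6.0]], [[20.0, 5.0], [4.0, 3.0]]])
fit = ipf_no_three_way(counts)
excess_111 = counts[1, 1, 1] / fit[1, 1, 1]  # observed / fitted 3-way joint spikes
print(round(excess_111, 3))
```

Starting from a constant table and multiplying only by two-way factors keeps every iterate inside the no-3-way family, which is why IPF fits this model without ever estimating a 3-way term.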

Figure 3.

Plots of synchronous spiking events for 3 neurons. Each of the three plots displays all joint spikes (as circles) for a particular pair of neurons. The dark circles in each plot indicate the 3-way joint spikes.

4 A marked point process framework

In this section, a class of marked point processes for modeling neural spike trains is briefly surveyed. These models take into account the possibility of two or more neurons firing in synchrony (i.e., at the same time). Consider an ensemble of ν neurons labeled 1 to ν. For T > 0, let NT denote the total number of spikes produced by this ensemble on the time interval [0, T) and let 0 ≤ s1 < ⋯ < sNT < T denote the specific spike times. For each j = 1, …, NT, we write (sj, (i1, …, ik)) to denote the event that a spike was fired synchronously at time sj by neurons i1, …, ik (and only by these neurons). Writing κj = (i1, …, ik) for the mark attached to sj, we observe that

$$\mathcal{H}_T = \{(s_1, \kappa_1), \ldots, (s_{N_T}, \kappa_{N_T})\} \tag{25}$$

forms a marked point process on the interval [0, T) with 𝒦 as the mark space satisfying

$$\mathcal{K} = \{(i_1, \ldots, i_k) : 1 \le i_1 < \cdots < i_k \le \nu,\ 1 \le k \le \nu\}.$$
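In discrete time, the marked representation amounts to recording, for every bin with at least one spike, the set of neurons that fired together. The sketch below enumerates the mark space (the 2^ν − 1 nonempty subsets of neurons) and converts one trial of binned indicators into (time, mark) events. The function names and the ν × M array layout are assumptions for illustration; treating spikes in the same bin as synchronous mirrors the discretized view used in the data analysis.

```python
import itertools

import numpy as np


def mark_space(nu):
    """All marks (i1, ..., ik) with 1 <= i1 < ... < ik <= nu: 2**nu - 1 subsets."""
    labels = range(1, nu + 1)
    return [c for k in range(1, nu + 1) for c in itertools.combinations(labels, k)]


def marks_from_bins(X, delta):
    """Turn one trial of binned indicators X (nu x M) into marked events (t_m, mark)."""
    X = np.asarray(X)
    events = []
    for m in range(X.shape[1]):
        mark = tuple(int(i) + 1 for i in np.flatnonzero(X[:, m]))  # 1-based labels
        if mark:  # skip bins in which no neuron fired
            events.append((m * delta, mark))
    return events


# Usage: three neurons, four 5 ms bins.
events = marks_from_bins([[1, 0, 1, 0], [0, 0, 1, 1], [1, 0, 0, 0]], delta=0.005)
print(events)
```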

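We follow Daley and Vere-Jones (2002), page 249, and define the conditional intensity function of ℋT as

$$
\lambda(t, \kappa \mid \mathcal{H}_t) =
\begin{cases}
h_1(t)\, f_1(\kappa \mid t), & 0 \le t \le s_1, \\
h_j\big(t \mid (s_1, \kappa_1), \ldots, (s_{j-1}, \kappa_{j-1})\big)\, f_j\big(\kappa \mid t; (s_1, \kappa_1), \ldots, (s_{j-1}, \kappa_{j-1})\big), & s_{j-1} < t \le s_j,\ j = 2, 3, \ldots,
\end{cases}
$$

where h1(·) is the hazard function for the location of the first spike s1, h2(·|(s1, κ1)) is the hazard function for the location of the second spike s2 conditional on (s1, κ1), and so on, while f1(·|t) is the conditional probability mass function of κ1 given s1 = t, and so on. It is also convenient to write λ(t, κ|∅) = λ(t, κ|ℋt) for all t < s1. The following proposition and its proof can be found in Daley and Vere-Jones (2002), page 251.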

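Proposition 1. Let ℋT be as in (25). Then the density of ℋT is given by

$$p(\mathcal{H}_T) = \left[\prod_{j=1}^{N_T} \lambda(s_j, \kappa_j \mid \mathcal{H}_{s_j})\right] \exp\left\{-\int_0^T \sum_{\kappa \in \mathcal{K}} \lambda(t, \kappa \mid \mathcal{H}_t)\, dt\right\}.$$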
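For a finite mark space, the standard marked point process density (Daley and Vere-Jones, 2002) is the product of λ(sj, κj | ℋsj) over the observed events times exp{−∫₀ᵀ Σκ λ(t, κ | ℋt) dt}. A minimal Python sketch of its logarithm follows. The function name and calling convention are hypothetical, and the compensator integral is approximated by a midpoint rule on a uniform grid, so accuracy depends on `n_grid`.

```python
import numpy as np


def marked_pp_loglik(events, intensity, marks, T, n_grid=10_000):
    """Log-density of a marked point process with a finite mark space.

    events: list of (s, kappa) pairs sorted by time s.
    intensity(t, kappa, history): conditional intensity, where history is the
    list of events strictly before t.
    """
    times = [s for s, _ in events]
    # Sum of log intensities at the observed events, each given its own past.
    log_term = sum(np.log(intensity(s, kappa, events[:j]))
                   for j, (s, kappa) in enumerate(events))
    # Midpoint-rule approximation of the integral of the summed intensities.
    dt = T / n_grid
    compensator = 0.0
    for u in (np.arange(n_grid) + 0.5) * dt:
        history = events[: np.searchsorted(times, u)]
        compensator += sum(intensity(u, k, history) for k in marks) * dt
    return log_term - compensator


# Usage: constant (history-free) intensities for the marks of two neurons.
rates = {(1,): 5.0, (2,): 4.0, (1, 2): 0.5}
events = [(0.1, (1,)), (0.3, (1, 2)), (0.7, (2,))]
ll = marked_pp_loglik(events, lambda t, k, h: rates[k], list(rates), T=1.0)
print(ll)
```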

5 Theoretical results: marginal methods

In this section we (i) provide a justification of the limiting statements in (6) and (ii) generalize to higher-order models. We also note that lagged dependence can be accommodated within our framework, treating the case ν = 2.

5.1 Regular marked point process and loglinear modeling

In this subsection, we prove that the heuristic arguments of Section 2 for marginal methods hold under mild conditions. Consider ν ≥ 1 neurons labeled 1 to ν. For T > 0, let NT denote the total number of spikes produced by these ν neurons on the time interval [0, T) and let 0 ≤ s1 < ⋯ < sNT < T denote the specific spike times. For each j = 1, …, NT, we write (sj, (ij)) to represent the event that a spike was fired at time sj by neuron ij where ij ∈ {1, …, ν}. We observe from Section 4 that

$$\mathcal{H}_T = \{(s_1, (i_1)), \ldots, (s_{N_T}, (i_{N_T}))\}$$

forms a marked point process on the interval [0, T) with mark space 𝒦 = {(1), …, (ν)}. Following the notation of Section 4, let λ(t, (i)|ℋt) denote the conditional intensity function of the point process ℋT. We assume that the following two conditions hold.

Condition (I) There exists a strictly positive refractory period for each neuron in that there exists a constant θ > 0 such that λ(t, (i)|ℋt) = 0 if there exists some (s,(i)) ∈ ℋt such that t − s ≤ θ, i ∈ {1, …, ν}.

Condition (II) For each k ∈ {0, …, 2⌈T/θ⌉−1} and i, i1, …, ik ∈ {1, …, ν}, the conditional intensity function λ(t, (i)|{(s1, (i1)), …, (sk, (ik))}) is a continuously differentiable function in (s1, …, sk, t) over the simplex 0 ≤ s1 ≤ ⋯ ≤ sk ≤ t ≤ T.

If δ < θ, then Condition (I) implies that there is at most 1 spike from each neuron in a bin of width δ. Conditions (I) and (II) also imply that the marked point process is regular, in that (exactly) synchronous spikes occur with probability 0. Theorem 1 below gives the limiting relationship between the bin probabilities of the induced discrete-time process and the conditional intensities of the underlying continuous-time marked point process.
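The first claim above can be checked numerically: two points in the same half-open bin of width δ differ by less than δ, so if a neuron's spikes are separated by more than θ > δ, no bin can hold two of them. The sketch below bins spike times into counts per bin; the function name and the example spike times are hypothetical, and the refractory train is simulated as θ plus exponential gaps purely for illustration.

```python
import numpy as np


def bin_spikes(spike_times, T, delta):
    """Spike counts per bin [m*delta, (m+1)*delta), m = 0, ..., T/delta - 1."""
    n_bins = int(round(T / delta))
    idx = np.floor(np.asarray(spike_times, dtype=float) / delta).astype(int)
    return np.bincount(idx, minlength=n_bins)[:n_bins]


# Usage: a spike train with refractory period theta = 3 ms.
spikes = [0.0005, 0.0041, 0.0078, 0.0205]
fine = bin_spikes(spikes, T=0.03, delta=0.002)    # delta < theta: at most 1 per bin
coarse = bin_spikes(spikes, T=0.03, delta=0.005)  # delta > theta: bins can hold 2
print(fine.max(), coarse.max())
```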

Theorem 1. Suppose that Conditions (I) and (II) hold, 1 ≤ i1 < ⋯ < ik ≤ ν and 1 ≤ k ≤ ν. Then

where tm = mδ → t as δ → 0 and λ(t, (i2)|{(t, (i1))} ∪ ℋt) = limt*→t− λ(t, (i2)|{(t*, (i1))} ∪ ℋt), etc. Here the expectation is taken with respect to ℋt.

Theorem 1 validates the heuristics stated in (6) where ν = 2,

Next we construct the discrete-time loglinear model induced by the above marked point process. First define recursively for tm = mδ,

| (26) |

We further define

| (27) |

where tm → t as δ → 0, whenever the expression on the right hand side of (27) is well-defined. The following is an immediate corollary of Theorem 1.

Corollary 1. Let ξ{i1}(t) and ξ{i1,…,ik}(t) be as in (27). Then with the notation and assumptions of Theorem 1, we have

whenever the right hand sides are well-defined.

It is convenient to define . For a1, …, aν ∈ {0, 1} and not all 0, define where i ∈ {i1, …, ik} if and only if ai = 1. Then using the notation of (26), the corresponding loglinear model induced by the above marked point process is

| (28) |

for all a1, …, aν ∈ {0, 1}. Under Conditions (I) and (II), Corollary 1 shows that ξΞ(t) = limδ→0 ζΞ(tm) is continuously differentiable. This gives an asymptotic justification for smoothing the estimates of ζΞ, Ξ ⊆ {1, …, ν}.
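The loglinear model (28) expresses the log probability of each binary pattern (a1, …, aν) as a sum of terms over subsets of the active neurons. Because (26) is not reproduced here, the sketch below assumes one standard "corner-point" parametrization, log P(a) = Σ over Ξ ⊆ {i : ai = 1} of θΞ, and recovers each θΞ from the cell probabilities by Möbius inversion. The names and probabilities are hypothetical. For ν = 2 the interaction term is the log odds ratio; when bins are small, so that P(all silent) ≈ 1 and joint spikes are rare, it approximately matches log[P(X1 = 1, X2 = 1)/(P(X1 = 1)P(X2 = 1))].

```python
import itertools

import numpy as np


def corner_loglinear(p):
    """Interaction terms theta[Xi] with log p(a) = sum of theta over subsets of active(a).

    p: dict mapping tuples a in {0,1}^nu to strictly positive probabilities.
    Keys of the result are sorted tuples of 0-based neuron indices.
    """
    nu = len(next(iter(p)))
    theta = {}
    for a in itertools.product((0, 1), repeat=nu):
        xi = tuple(i for i in range(nu) if a[i] == 1)
        total = 0.0
        # Moebius inversion over all subsets b of xi.
        for r in range(len(xi) + 1):
            for b in itertools.combinations(xi, r):
                cell = tuple(1 if i in b else 0 for i in range(nu))
                total += (-1) ** (len(xi) - r) * np.log(p[cell])
        theta[xi] = total
    return theta


def reconstruct_log_p(theta, a):
    """Sum theta over all subsets of the neurons active in pattern a."""
    active = [i for i, ai in enumerate(a) if ai == 1]
    return sum(theta[s] for r in range(len(active) + 1)
               for s in itertools.combinations(active, r))


# Usage: bin probabilities for two neurons (hypothetical values).
p2 = {(0, 0): 0.8, (1, 0): 0.1, (0, 1): 0.07, (1, 1): 0.03}
th = corner_loglinear(p2)
print(th[(0, 1)])  # log odds ratio log(p11 * p00 / (p10 * p01))
```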

5.2 Case of ν = 2 neurons with lag h

This subsection considers the lag h case for two neurons labeled 1 and 2. Let h, m be integers such that 0 ≤ m ≤ m + h ≤ Tδ−1 − 1. As in (1), we write

where tm = mδ and tm + h = (m + h)δ. Analogous to Theorem 1, we have the following results for the lag case.

Theorem 2. Suppose Conditions (I) and (II) hold. Then

where tm+h → t + τ and tm → t as δ → 0 for some constant τ > 0. Here the expectation is taken with respect to ℋt+τ (and hence also ℋt).

Corollary 2. Let ζ(tm, tm+h) be defined as in (5). Then with the notation and assumptions of Theorem 2, we have

| (29) |

whenever the right hand side is well-defined.

We observe from Conditions (I) and (II) that the right hand side of (29) is continuously differentiable in t. Again this provides an asymptotic justification for smoothing the estimate of ζ(t, t + τ), with respect to t, when δ is small.
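A per-bin estimate of the lagged excess-synchrony ratio, with optional smoothing across time, can be sketched as follows. Because (5) is not reproduced here, the code assumes the ratio form ζ(tm, tm+h) = P(X1(tm) = 1, X2(tm+h) = 1) / [P(X1(tm) = 1) P(X2(tm+h) = 1)], estimated by trial averages. The boxcar moving average is a crude, hypothetical stand-in for the spline smoothing used in the data analysis (it zero-pads at the edges), and bins with zero estimated rates produce division warnings.

```python
import numpy as np


def lagged_zeta(x1, x2, h, smooth_bins=1):
    """Ratio estimate of zeta(t_m, t_{m+h}) for m = 0, ..., n_bins - h - 1.

    x1, x2: (n_trials, n_bins) 0/1 arrays; h: lag in bins (h = 0 gives synchrony).
    smooth_bins > 1 applies a boxcar moving average across time to the joint
    and marginal probabilities before taking the ratio.
    """
    n_bins = x1.shape[1]
    a = x1[:, : n_bins - h]  # neuron 1 in bin m
    b = x2[:, h:]            # neuron 2 in bin m + h
    kernel = np.ones(smooth_bins) / smooth_bins

    def smooth(v):
        return np.convolve(v, kernel, mode="same")

    joint = smooth((a * b).mean(axis=0))
    p1 = smooth(a.mean(axis=0))
    p2 = smooth(b.mean(axis=0))
    return joint / (p1 * p2)


# Usage: two trials, three bins, lag of one bin.
x1 = np.array([[1, 0, 1], [0, 1, 1]])
x2 = np.array([[0, 1, 0], [1, 1, 1]])
print(lagged_zeta(x1, x2, h=1))
```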

6 Theoretical results: conditional methods

This section is analogous to Section 5, but treats the conditional case. We (i) provide a justification of the limiting statements in (9) and (ii) generalize to higher-order models. We also note again that lagged dependence can be accommodated within our framework, treating the case ν = 2.

6.1 Synchrony and loglinear modeling

This subsection considers ν ≥ 1 neurons labeled 1 to ν. We model the spike trains generated by these neurons on [0, T) by a marked point process ℋT with mark space

$$\mathcal{K} = \{(i_1, \ldots, i_k) : 1 \le i_1 < \cdots < i_k \le \nu,\ 1 \le k \le \nu\}.$$

Here, for example, the mark (i1) denotes the event that neuron i1 (and only this neuron) spikes, (i1, i2) denotes the event that neuron i1 and neuron i2 (and only these two neurons) spike in synchrony (i.e. at the same time), and the mark (1, …, ν) denotes the event that all ν neurons spike in synchrony.

Let Nt denote the total number of spikes produced by these neurons on [0, t), 0 < t ≤ T, and let 0 ≤ s1 < ⋯ < sNT < T denote the specific spike times. For each j = 1, …, NT, let κj ∈ 𝒦 be the mark associated with sj. Then ℋT can be expressed as

$$\mathcal{H}_T = \{(s_1, \kappa_1), \ldots, (s_{N_T}, \kappa_{N_T})\}. \tag{30}$$

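Given ℋt, we write

$$\mathcal{H}^i_t = \{s : (s, \kappa) \in \mathcal{H}_t \text{ and } i \text{ is a component of } \kappa\},$$

which denotes the spiking history of neuron i on [0, t). The conditional intensity function λ(t, κ|ℋt), t ∈ [0, T) and κ ∈ 𝒦, of the marked point process ℋT is defined to be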

| (31) |

where δ > 0 is a constant, the γ{i1,…,ik}(t)’s are functions depending only on t, and the λi’s are conditional intensity functions depending only on the spiking history of neuron i. We take γ{i}(t) to be identically equal to 1.

From (31), we note that the above marked point process model is not a single marked point process but rather a family of marked point processes indexed by δ. In the sequel, we let δ → 0. We further assume that the following two conditions hold.

Condition (III) There exists a strictly positive refractory period for each neuron in that there is a constant θ > 0 such that for i = 1, …, ν and t ∈ [0, T),

$$\lambda_i(t \mid \{s_1, \ldots, s_k\}) = 0 \quad \text{whenever } t - s_k \le \theta.$$

Condition (IV) For each k ∈ {0, …, ⌈T/θ⌉ − 1} and i ∈ {1, …, ν}, λi(t|{s1, …, sk}) is a continuously differentiable function in (s1, …, sk, t) over the simplex 0 ≤ s1 ≤ ⋯ ≤ sk ≤ t ≤ T.

Following Section 2.2, we divide the time interval [0, T) into bins of width δ. For simplicity, we assume that T is a multiple of δ. Let tm = mδ and Xi(tm), m = 0, …, Tδ−1 − 1, be as in Section 1. If Xi(tl) = 1 for all l ∈ {l1, …, lk}, and Xi(tl) = 0 otherwise, for some subset 0 ≤ l1 < ⋯ < lk ≤ m − 1, we write

| (32) |

It should be observed that although the above definitions of differ from those given in Section 1.2, they are equivalent. We note that the conditional intensity functions λ(t, (i1, …, ik)|ℋt) in (31) depend on the bin width δ. This is necessary in order to preserve the natural hierarchical sparsity conditions given by

as δ → 0 for all 1 ≤ i1 < ⋯ < ik ≤ ν, 1 ≤ k ≤ ν.

Theorem 3. Consider the marked point process ℋT as in (30) with conditional intensity function satisfying (31). Then under Conditions (III) and (IV), we have

and in general,

for sufficiently small δ where ℋ̄tm and are defined by (32).

The following is an immediate corollary of Theorem 3. It gives an asymptotic justification for Equation (8) in Section 2.2.

Corollary 3. With the notation and assumptions of Theorem 3, we have for ν = 2,

for sufficiently small δ > 0 uniformly over , m = 0, …, Tδ−1 − 1 where ζ(tm) = 1 + γ{1,2}(tm).

We now use Theorem 3 to construct a loglinear model (for the above spike train data) whose higher order coefficients are asymptotically independent of past spiking history. First define recursively,

| (33) |

It follows from Theorem 3 and (33) that for sufficiently small δ,

whenever 1 ≤ i1 < ⋯ < ik ≤ ν and k ≥ 2, assuming that terms on the right hand side are well-defined. The practical importance of these results lies in the fact that the coefficients ζ{i1,…,ik}(tm|ℋ̄tm) with k ≥ 2 are asymptotically (as δ → 0) independent of ℋ̄tm, the spiking history of the neurons. It is convenient to define . For a1, …, aν ∈ {0, 1} and not all 0, define where i ∈ {i1, …, ik} if and only if ai = 1. Then the induced loglinear model is

| (34) |

for all a1, …, aν ∈ {0, 1} where the second term on the right hand side of (34) is asymptotically (as δ → 0) independent of the spiking history ℋ̄tm.

6.2 ν = 2 neurons with time-delayed synchrony

This subsection considers ν = 2 neurons, labeled 1 and 2. Let τ > 0 be a constant denoting the spike lag. We model the spike trains generated by the two neurons on [0, T) by a marked point process ℋT as in (25) with mark space 𝒦 = {(1), (2), (1, 2)}. The marks (1) and (2) are interpreted, as before, as isolated (i.e., non-synchronous) spikes. Now, however, (1, 2) denotes neuron 1 spiking first and neuron 2 spiking after a delay of τ time units; this mark models precise time-delayed synchronous spiking at lag τ between the two neurons.

Let NT denote the number of times the three marks occur on [0, T) and s1 < ⋯ < sNT be the specific spike times. For each j = 1, …, NT, let κj ∈ 𝒦 be the mark associated with sj. Then ℋt can be decomposed into where

| (35) |

To be definite, (s, κ) = (s, (1, 2)) means neuron 1 spikes at time s and neuron 2 spikes at time s + τ. The conditional intensity function λ(t, κ|ℋt), t ∈ [0, T) and κ ∈ 𝒦, of the marked point process ℋT is defined to be
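The lagged mark convention can be made concrete by expanding marked events into per-neuron spike times: (s, (1)) and (s, (2)) contribute a single spike, while (s, (1, 2)) contributes a spike of neuron 1 at s and a spike of neuron 2 at s + τ. The function name and the example events below are hypothetical.

```python
def expand_lagged_events(events, tau):
    """Map marked events to per-neuron spike times for marks (1,), (2,), (1, 2).

    The mark (1, 2) at time s means neuron 1 fires at s and neuron 2 at s + tau.
    """
    spikes = {1: [], 2: []}
    for s, mark in events:
        if mark == (1,):
            spikes[1].append(s)
        elif mark == (2,):
            spikes[2].append(s)
        elif mark == (1, 2):
            spikes[1].append(s)        # neuron 1 fires first
            spikes[2].append(s + tau)  # neuron 2 follows after the lag tau
        else:
            raise ValueError(f"unknown mark {mark!r}")
    return {i: sorted(ts) for i, ts in spikes.items()}


# Usage with a 10 ms lag.
print(expand_lagged_events([(0.1, (1,)), (0.2, (1, 2)), (0.25, (2,))], tau=0.01))
```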

| (36) |

where δ > 0 is a constant, γ(t, t + τ) is a continuously differentiable function in t on the interval 0 ≤ t ≤ T − τ, and λ1 and λ2 are conditional intensity functions depending only on, respectively, the spiking history of neuron 1 up to time t and the spiking history of neuron 2 up to time t + τ.

As in Section 1, we divide the time interval [0, T) into bins of width δ > 0. Let be as in (32) where, for simplicity, we assume that δ is chosen such that m, h are integers satisfying 0 ≤ m ≤ m + h ≤ Tδ−1 − 1 and tm + h = tm + τ. Recall that by definition,

Theorem 4. Consider the marked point process ℋT as in (35) with conditional intensity function satisfying (36). Let m, h be integers satisfying 0 ≤ m ≤ m + h ≤ Tδ−1 − 1 and tm + h = tm + τ. Then under Conditions (III) and (IV), we have

for sufficiently small δ.

The practical significance of Theorem 4 is that γ(tm, tm + h) does not depend on the spiking history of the 2 neurons. This implies that a statistic based on γ can be constructed to test the null hypothesis H0 that there is no time-delayed spiking synchrony at lag τ between the 2 neurons.

7 Discussion

We have described an approach to assessing spike train synchrony using loglinear models for multiple binary time series. We tried to motivate the application of loglinear modeling technology in Section 1, emphasizing two features of individual neural response: stimulus-induced nonstationarity that remains time-locked across trials, and within-trial effects that are history-dependent, with timing that varies across trials. These were incorporated into the models by including for individual neurons both time-varying marginal effects, which stay the same across trials, and history-dependent terms; interaction terms were treated separately. In Section 3 we presented results for two pairs of neurons. For both pairs there was evidence of excess synchronous spiking beyond that explained by stimulus-induced changes in individual-neuron firing rates. In one pair, network activity, represented as history dependence, was sufficient to account for excess synchronous spiking, but the other pair displayed excess synchronous spiking that remained highly statistically significant even after network effects were incorporated, indicating stimulus-related synchrony. Our theoretical results provided a continuous-time point process foundation for the methods, justifying both our use of smoothing and our derivation of the excess-synchrony estimators ζ̂ and ζ̂H.

Assessment of synchrony via continuous-time loglinear models is closely related to the unitary-event analysis of Grün, et al. (2002a), (2002b). Unitary-event analysis assumes that each neuron follows a locally stationary Poisson process, an assumption that has been shown to be somewhat conservative, in the sense of providing inflated p-values, in the presence of non-Poisson history dependence. Its main purpose is to identify stimulus-locked excess synchrony. Because the loglinear models can be viewed as generalizations of locally stationary Poisson models, they could extend unitary-event analysis to cases in which it seems desirable to account more explicitly for stimulus and history effects. This is a topic for future research.

We also provided an example of testing for 3-way interaction. The results we gave in Section 3 for a particular triple of neurons indicated no evidence of excess 3-way joint spiking above that explained by 2-way joint spiking. A systematic finding along these lines, examining large numbers of neurons, would be consistent with findings of Schneidman, et al. (2006). However, as may be seen from Figure 3, 3-way joint spikes are very sparse. A careful study of the power to detect 3-way joint spiking in contexts like the one considered here could be quite helpful. We plan to carry out such a study and report it elsewhere.

We have restricted history effects to individual neurons by assuming, first, that each neuron’s history excludes past spiking of the other neurons under consideration and, second, that the interaction effects are independent of history. This greatly simplifies the modeling and avoids confounding the interaction effects with cross-neuron effects. While it would be possible, in principle (by modifying the hierarchical sparsity condition), to allow history-dependence within interaction effects, we see no practical benefit of doing so. With the two additional, highly plausible assumptions used here we get both tractable discrete-time methods and a sense in which the methods may be understood in continuous time. It is somewhat inelegant to have a sequence of marked processes (indexed by δ), but this appears to be the best that can be achieved by starting with very natural discrete-time loglinear models. An alternative would be to use more standard point process models with short time-scale cross-neuron effects. Presumably similar results could be obtained, but the relationship between these different approaches is also a subject for future research. A quite different technology involves permutation-style assessment via “dithering” or “jittering” of individual spike times [cf. Geman, et al. (2010), Grün (2009)]. Synchrony is one of the deep topics in computational neuroscience and its statistical identification is subtle for many reasons, including inaccuracies in reconstruction of spike timing from the complicated mixture of neural signals picked up by the recording electrodes [e.g., Harris, et al. (2000), Ventura (2009)]. It is likely that multiple approaches will be needed to grapple with varying neurophysiological circumstances.

Appendix

Proof of Theorem 1. For simplicity, we consider only the case ν = 2. The proof for other values of ν is similar though more tedious. We observe from Proposition 1 that

| (37) |

Condition (I) implies that the summations ΣkΣi1, … , ik∈{1,2} in (37) contain a finite number of summands. Hence letting δ → 0, the right hand side of (37) equals

| (38) |

Using Condition (II) and Taylor expansion, we have

uniformly over tm ≤ sk+1 ≤ tm+1, 0 ≤ s1 ≤ ⋯ ≤ sk ≤ tm. Consequently (38) equals

since tm+1 − tm = δ and limδ→0 tm = t. Using a similar argument, we have

and as δ → 0.

Proof of Theorem 2. For simplicity, define

for all a, b, c, d ∈ {0, 1}. We observe from Proposition 1 that

as δ → 0. Theorem 2 follows since as δ → 0.

Proof of Theorem 3. For simplicity, we only consider the case ν = 2. Let where 0 ≤ l1 < ⋯ < lk ≤ m − 1. We observe from (31) that , and

for sufficiently small δ where F denotes the distribution function of sl1, …, slk. Here sl1 ∈ [tl1, tl1+1), sl1,1 ∈ [tl1,1, tl1,1+1), etc. This proves the first statement of Theorem 3. Next we observe that

for sufficiently small δ. This proves Theorem 3.

Proof of Theorem 4. We observe that ℋtm → ℋ̄tm is a many-to-one mapping and from (36) that

| (39) |

for sufficiently small δ. We further observe that

| (40) |

Thus it follows from (39) and (40) that

for sufficiently small δ. This proves Theorem 4.

Footnotes

AMS 2000 subject classifications. Primary 62J12; secondary 62M99, 62P10.

References

1. Brillinger DR. Some statistical methods for random process data from seismology and neurophysiology. Ann. Statist. 1988;16:1–54.

2. Brillinger DR. Nerve cell spike train data analysis: a progression of technique. J. Amer. Statist. Assoc. 1992;87:260–271.

3. Brown EN, Barbieri R, Eden UT, Frank LM. Computational Neuroscience: A Comprehensive Approach. London: CRC Press; 2003. Likelihood methods for neural spike train data analysis; pp. 253–286.

4. Brown EN, Barbieri R, Ventura V, Kass RE, Frank LM. A note on the time-rescaling theorem and its implications for neural data analysis. Neural Comput. 2001;14:325–346. doi: 10.1162/08997660252741149.

5. Daley DJ, Vere-Jones D. An Introduction to the Theory of Point Processes, Vol. 1: Elementary Theory and Methods. 2nd edition. Springer: New York; 2002.

6. Geman S, Amarasingham A, Harrison M, Hatsopoulos N. The statistical analysis of temporal resolution in the nervous system. 2010. Submitted.

7. Grün S. Data-driven significance estimation for precise spike correlation. J. Neurophysiol. 2009;101:1126–1140. doi: 10.1152/jn.00093.2008.

8. Grün S, Diesmann M, Aertsen A. Unitary events in multiple single-neuron spiking activity: I. detection and significance. Neural Comput. 2002a;14:43–80. doi: 10.1162/089976602753284455.

9. Grün S, Diesmann M, Aertsen A. Unitary events in multiple single-neuron spiking activity: II. nonstationary data. Neural Comput. 2002b;14:81–119. doi: 10.1162/089976602753284464.

10. Harris KD, Henze DA, Csicsvari J, Hirase H, Buzsaki G. Accuracy of tetrode spike separation as determined by simultaneous intracellular and extracellular measurements. J. Neurophysiol. 2000;84:401–414. doi: 10.1152/jn.2000.84.1.401.

11. Kass RE, Ventura V, Brown EN. Statistical issues in the analysis of neuronal data. J. Neurophysiol. 2005;94:8–25. doi: 10.1152/jn.00648.2004.

12. Kelly RC, Smith MA, Kass RE, Lee TS. Local field potentials indicate network state and account for neuronal response variability. 2009. doi: 10.1007/s10827-009-0208-9. Submitted.

13. Kelly RC, Smith MA, Samonds JM, Kohn A, Bonds AB, Movshon JA, Lee TS. Comparison of recordings from microelectrode arrays and single electrodes in the visual cortex. J. Neurosci. 2007;27:261–264. doi: 10.1523/JNEUROSCI.4906-06.2007.

14. Martignon L, Deco G, Laskey K, Diamond M, Freiwald W, Vaadia E. Neural coding: higher-order temporal patterns in the neurostatistics of cell assemblies. Neural Comput. 2000;12:2621–2653. doi: 10.1162/089976600300014872.

15. Paninski L, Brown EN, Iyengar S, Kass RE. Statistical models of spike trains. In: Laing C, Lord GJ, editors. Stochastic Methods in Neuroscience. Oxford: Clarendon Press; 2009. pp. 278–303.

16. Schneidman E, Berry MJ, Segev R, Bialek W. Weak pairwise correlations imply strongly correlated network states in a neural population. Nature. 2006;440:1007–1012. doi: 10.1038/nature04701.

17. Ventura V. Traditional waveform based spike sorting yields biased rate code estimation. Proc. Nat. Acad. Sci. 2009;106:6921–6926. doi: 10.1073/pnas.0901771106.

18. Ventura V, Cai C, Kass RE. Statistical assessment of time-varying dependency between two neurons. J. Neurophysiol. 2005;94:2940–2947. doi: 10.1152/jn.00645.2004.