Abstract

Spectral ripple discrimination thresholds were measured in 15 cochlear-implant users with broadband (350–5600 Hz) and octave-band noise stimuli. The results were compared with spatial tuning curve (STC) bandwidths previously obtained from the same subjects. Spatial tuning curve bandwidths did not correlate significantly with broadband spectral ripple discrimination thresholds but did correlate significantly with ripple discrimination thresholds when the rippled noise was confined to an octave-wide passband, centered on the STC’s probe electrode frequency allocation. Ripple discrimination thresholds were also measured for octave-band stimuli in four contiguous octaves, with center frequencies from 500 Hz to 4000 Hz. Substantial variations in thresholds with center frequency were found in individuals, but no general trends of increasing or decreasing resolution from apex to base were observed in the pooled data. Neither ripple nor STC measures correlated consistently with speech measures in noise and quiet in the sample of subjects in this study. Overall, the results suggest that spectral ripple discrimination measures provide a reasonable measure of spectral resolution that correlates well with more direct, but more time-consuming, measures of spectral resolution, but that such measures do not always provide a clear and robust predictor of performance in speech perception tasks.

INTRODUCTION

Poor spectral resolution is thought to be one of the factors that limit the ability of cochlear-implant (CI) users to understand speech, particularly in noisy conditions. Increasing the spectral resolution of the CI processor itself, by increasing the number of electrodes and decreasing the bandwidths of the analysis filters, yields some benefits, but only up to a certain limit. For instance, speech perception for normal-hearing listeners in noise continues to improve as the number of bands in a noise-excited envelope vocoder (e.g., Shannon et al., 1995) increases from 4 to 20, whereas speech perception for CI users tends to improve with increasing number of electrodes up to about 8 and then remains roughly constant, and well below the performance levels obtained by normal-hearing listeners (e.g., Friesen et al., 2001). The limit to the number of usable channels in cochlear implants is thought to be determined by the extent to which electrodes stimulate non-overlapping populations of functional auditory neurons. The limit of this “spatial resolution” may be due to a number of factors, such as the spread of current produced by each electrode and uneven neural survival patterns along the sensory epithelia, which are likely to vary between individual subjects (e.g., Hinojosa and Marion, 1983; Kawano et al., 1998; Pfingst and Xu, 2003).

A number of measures of spatial resolution have been proposed for cochlear implants. Perhaps most direct, but also time-consuming, are the spatial tuning curves (STCs), where the current level needed to mask a brief low-level signal is measured as a function of the spatial separation between the masker and the signal electrode (e.g., Nelson et al., 2008). Another measure that has recently gained popularity involves spectral ripple discrimination, where a spectrally rippled stimulus is discriminated from another spectrally rippled stimulus, with the spectral (or spatial) positions of the peaks and valleys reversed (e.g., Henry and Turner, 2003; Henry et al., 2005; Won et al., 2007). The reasoning behind the test, which was originally developed to test normal acoustic hearing (e.g., Supin et al., 1994, 1997), is that the maximum ripple rate at which the original and phase-reversed stimuli are discriminable provides information regarding the limits of spectral resolution.

Discriminating between different spectrally rippled broadband stimuli may be particularly relevant to speech tasks because the ripples are typically distributed over a wide spectral region and because the task of discriminating the positions of spectral peaks has some commonalities with identifying spectral features such as vowel formant frequencies in speech. Henry et al. (2005) found a strong correlation between spectral ripple resolution and both consonant and vowel recognition when pooling the results from normal-hearing, impaired-hearing, and CI subjects. However, the correlations were weaker when only the results from CI users were analyzed. Won et al. (2007) used a similar paradigm in CI users to compare spectral ripple discrimination with closed-set spondee identification in steady-state noise and two-talker babble. They found significant correlations between ripple resolution and speech reception threshold (SRT), consistent with the idea that spectral resolution is particularly important for understanding speech in noise (e.g., Dorman et al., 1998). Berenstein et al. (2008), in a study examining virtual channel electrode configurations, demonstrated a small but significant correlation between spectral ripple discrimination and word recognition in quiet and in fluctuating noise. However, while spectral resolution could be shown to improve with a tripolar electrode configuration, speech recognition performance did not show commensurate gains, suggesting that spectral ripple discrimination may be more sensitive to changes in spectral resolution than are measures of speech perception (see also Drennan et al., 2010).

Despite these mostly promising results, some fundamental questions have been raised recently regarding the extent to which spectral ripple discrimination thresholds actually reflect spectral or spatial resolution (e.g., McKay et al., 2009). First, because the spectral ripple discrimination tasks are generally presented via loudspeaker to the speech processor of the CI, it is not always clear whether the performance limits are determined by the analysis filters of the speech processor (which can be as wide as 0.5 octaves), or “internal” factors, such as the spread of current within the cochlea. Second, when a spectral peak or dip occurs at the edge of the stimulus spectrum, it is possible that CI users detect the change in level at the edge without necessarily resolving the peaks and valleys within the stimulus spectrum; similarly, in some cases a spectral phase reversal may lead to a perceptible shift in the spectral center of gravity (weighted average electrode position) of the entire stimulus. Third, ripple discrimination relies not only on spectral resolution, but also on intensity resolution, as listeners must perceive the changes in current levels produced by the changes in the rippled spectrum.

The primary aim of this study was to test the relationship between spectral ripple discrimination thresholds and the more direct measure of spectral resolution, the STC. The hypothesis was that if spectral ripple discrimination does reflect underlying spectral (or spatial) resolution, then thresholds should be strongly correlated with STC bandwidths. In Experiment 1, spectral ripple discrimination thresholds in broadband noise, similar to those of Won et al. (2007), were measured and compared with STC bandwidths (BWSTC), which were reported in an earlier study (Nelson et al., 2008). To test whether any discrepancies between the two measures were related to the fact that one (BWSTC) was limited to a narrow spectral region, whereas the other (spectral ripple discrimination) was broadband, Experiment 2 compared BWSTC with spectral ripple discrimination thresholds in an octave-wide band of noise, with a center frequency (CF) corresponding to the CF of the electrode for which the STC was measured in each subject. Experiment 3 measured spectral ripple discrimination for fixed octave bands between 350 and 5600 Hz to test for any systematic changes in thresholds as a function of spectral region. In Experiment 4, spectral ripple discrimination thresholds and BWSTC were compared to various measures of speech recognition in the same subjects, to test whether either was a good predictor of speech intelligibility in quiet or noise.

The design of this study allows for a comparison of measures obtained under direct stimulation with sound-field measures via the speech processor. To our knowledge, such comparisons have not been widely reported in the literature. Passing an acoustic signal through a speech processor will impose specific stimulation parameters on the processed signal (e.g., processing scheme, input dynamic range, stimulation rate) that may vary with the different implant devices. It is possible that these differences might act as confounding variables when trying to correlate measures of spectral resolution obtained via direct stimulation and speech perception measured through the speech processor. In the current study, we chose to use sound field acoustic presentation rather than direct stimulation for the spectral ripple discrimination experiment, in part to be able to compare more directly to other recent studies of spectral ripple resolution in CI listeners (e.g., Henry et al., 2005; Won et al., 2007). Inasmuch as spectral ripple resolution might relate to spectral shape recognition in speech perception (e.g., vowel formants), it seems appropriate to process the signals in the same way that individual CI users are accustomed to listen to speech.

EXPERIMENT 1: BROADBAND RIPPLE DISCRIMINATION

Subjects

Fifteen CI users (5 Clarion I, 5 Clarion II, and 5 Nucleus-22), all having had forward-masked STCs measured on at least one electrode from the middle of the array (Nelson et al., 2008), participated in all experiments. Table 1 shows individual subject characteristics.

Table 1.

Summary of CI subject characteristics. The subject identifiers C, D, and N denote Clarion I, Clarion II, and Nucleus users, respectively.

| Subject Code | M/F | Age (yrs) | CI use (yrs) | Etiology | Duration of deafness (yrs) | Device | Strategy |
|---|---|---|---|---|---|---|---|
| C03 | F | 58.8 | 9.7 | Familial Progressive SNHL | 27 | Clarion I | CIS |
| C05 | M | 52.5 | 10.2 | Unknown | < 1 | Clarion I | CIS |
| C16 | F | 54.2 | 6.7 | Progressive SNHL | 13 | Clarion I | MPS |
| C18 | M | 74.0 | 7.2 | Otosclerosis | 33 | Clarion I | MPS |
| C23 | F | 48.1 | 6.4 | Progressive SNHL; Mondini’s | 27 | Clarion I | CIS |
| D02 | F | 58.2 | 6.4 | Unknown | 1 | Clarion II | HiRes-P |
| D05 | F | 78.2 | 6.6 | Unknown | 3 | Clarion II | HiRes-S |
| D08 | F | 55.9 | 5.0 | Otosclerosis | 13 | Clarion II | HiRes-S |
| D10 | F | 53.8 | 5.2 | Unknown | 8 | Clarion II | HiRes-S |
| D19 | F | 48.2 | 3.5 | Unknown | 7 | Clarion II | HiRes-S |
| N13 | M | 69.9 | 17.5 | Hereditary; Progressive SNHL | 4 | Nucleus 22 | SPEAK |
| N14 | M | 63.5 | 13.9 | Progressive SNHL | 1 | Nucleus 22 | SPEAK |
| N28 | M | 68.8 | 11.8 | Meningitis | < 1 | Nucleus 22 | SPEAK |
| N32 | M | 40.1 | 10.3 | Maternal Rubella | < 1 | Nucleus 22 | SPEAK |
| N34 | F | 62.0 | 8.4 | Mumps; Progressive SNHL | 9 | Nucleus 22 | SPEAK |

Stimuli

Spectrally rippled noise was generated using MATLAB software (The MathWorks, Natick, MA). Gaussian broadband (350–5600 Hz) noise was spectrally modulated, with sinusoidal variations in level (dB) on a log-frequency axis (as in Litvak et al., 2007), using the equation:

$$X(f) = 10^{\frac{D}{2}\,\sin\left(2\pi f_s \log_2(f/L) + \theta\right)/20}$$

where $X(f)$ is the amplitude at frequency $f$ (in Hz), $D$ is the spectral depth or peak-to-valley ratio (in dB), $L$ is the low cutoff frequency of the noise pass band (350 Hz in this case), $f_s$ is the spectral modulation frequency (in ripples per octave), and $\theta$ is the starting phase of the ripple function. Logarithmic frequency and intensity units were used as these are generally considered to be more perceptually relevant than linear units. Sinusoidal modulation was used, as this lends itself more readily to linear systems analysis and has been used in a number of studies of spectral modulation perception in normal (acoustic) hearing (e.g., Saoji and Eddins, 2007; Eddins and Bero, 2007). The peak-to-valley ratio of the stimuli was held constant at 30 dB. The stimulus duration was 400 ms, including 20-ms raised-cosine onset and offset ramps.
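The ripple definition can be illustrated with a short Python sketch. It assumes only what the definitions above state: the level varies sinusoidally in dB on a log2 frequency axis with peak-to-valley depth D, and amplitude is 10 raised to (level/20). The function names are illustrative and are not taken from the original MATLAB code; the noise carrier and temporal ramps are omitted.

```python
import math


def ripple_level_db(f_hz, depth_db=30.0, low_cutoff_hz=350.0,
                    ripples_per_octave=1.0, phase_rad=0.0):
    """Level (dB re the mean level) of the spectral ripple at frequency f_hz."""
    octaves_above_cutoff = math.log2(f_hz / low_cutoff_hz)
    return (depth_db / 2.0) * math.sin(
        2.0 * math.pi * ripples_per_octave * octaves_above_cutoff + phase_rad)


def ripple_amplitude(f_hz, **kwargs):
    """Linear amplitude weight corresponding to the dB ripple."""
    return 10.0 ** (ripple_level_db(f_hz, **kwargs) / 20.0)


# Sample the 4-octave passband (350-5600 Hz) in quarter-octave steps.
freqs = [350.0 * 2.0 ** (k / 4.0) for k in range(17)]
standard = [ripple_level_db(f) for f in freqs]
# A 180-degree phase shift swaps the positions of peaks and valleys.
phase_reversed = [ripple_level_db(f, phase_rad=math.pi) for f in freqs]

for f, a, b in zip(freqs, standard, phase_reversed):
    print(f"{f:7.1f} Hz  standard {a:+6.1f} dB  reversed {b:+6.1f} dB")
```

In an actual stimulus these weights would be applied to the amplitude spectrum of a Gaussian noise before converting back to the time domain; that step is left out here to keep the sketch dependency-free.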

The stimuli were presented via a single loudspeaker (Infinity RS1000) positioned at approximately head height and about 1 m from the subject in a double-walled, sound attenuating chamber. The average sound level of the noise was set to 60 dBA when measured at the location corresponding to the subject’s head. In order to reduce any possible cues related to loudness, the noise level was roved across intervals within each trial by ± 3 dB. The starting phase of the spectral modulation was selected at random with uniform distribution for each trial to reduce the potential for any consistent local intensity cues that fixed-phase stimuli might create.

Procedure

Subjects wore their everyday speech processors at typical use settings for all experiments. A three-interval, three-alternative forced-choice (3I-3AFC) procedure was used. All three intervals in each trial contained rippled noise. In two of the intervals the spectral ripple had the same starting phase, and in the other interval the phase was reversed (180° phase shift). The interval containing the phase reversal was selected at random on each trial with equal a priori probability, and the listener’s task was to identify the interval that sounded different. Each test run started at a ripple rate of 0.25 ripples per octave (rpo), corresponding to a single ripple across the 4-octave passband. The ripple rate was varied adaptively using a 1-up, 2-down rule, with rpo initially increasing or decreasing by a factor of 1.41. After the first two reversals the step size changed to a factor of 1.19, and after two more reversals, to 1.09. The run was terminated after ten reversals, and the geometric mean ripple rate at the last six reversal points was used to determine the threshold for ripple discrimination. If the adaptive procedure called for a ripple rate lower than 0.25 rpo, the program set the ripple rate to 0.25 rpo and the adaptive procedure continued. However, this “floor” was never reached during the measurement phases of the adaptive runs and no estimates of threshold included turnpoints of 0.25 rpo. Each subject completed six runs, with the exception of one subject who completed only four runs (due to time and scheduling constraints). In order to minimize potential learning and inattention effects, the first threshold estimate was excluded for each subject, as were individual measurements for runs that were more than 3 standard deviations removed from the mean of the remaining measurements. In general, thresholds from the last five runs were used to compute an arithmetic mean threshold for each subject. (An alternate approach, computing the geometric mean, produced the same pattern of results.)
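The adaptive track and the per-subject averaging described above can be sketched as follows. This is a minimal simulation under stated assumptions, not the authors' test software: two consecutive correct responses are assumed to raise the ripple rate (harder) and one error to lower it, and the simulated listener (`make_listener`, with its logistic shape and `slope`) is entirely hypothetical.

```python
import math
import random
import statistics

STEP_FACTORS = (1.41, 1.19, 1.09)  # before reversal 2, before reversal 4, afterwards
FLOOR_RPO = 0.25                   # lowest ripple rate the track may visit


def run_staircase(respond, start_rpo=0.25, n_reversals=10, n_average=6):
    """Return the geometric-mean ripple rate over the last `n_average` reversals."""
    rate, correct_in_a_row, last_direction, reversals = start_rpo, 0, 0, []
    while len(reversals) < n_reversals:
        if respond(rate):
            correct_in_a_row += 1
            if correct_in_a_row < 2:
                continue               # wait for a second correct response
            direction = 1              # assumed: two correct -> higher rate
        else:
            direction = -1             # assumed: one error -> lower rate
        correct_in_a_row = 0
        if last_direction and direction != last_direction:
            reversals.append(rate)
        last_direction = direction
        step = STEP_FACTORS[min(len(reversals) // 2, 2)]
        rate = max(rate * step if direction > 0 else rate / step, FLOOR_RPO)
    tail = reversals[-n_average:]
    return math.exp(sum(math.log(r) for r in tail) / len(tail))


def make_listener(true_threshold_rpo, rng, slope=4.0):
    """Hypothetical listener: near-perfect below threshold, chance (1/3) above."""
    def respond(rate):
        p_detect = 1.0 / (1.0 + (rate / true_threshold_rpo) ** slope)
        return rng.random() < p_detect + (1.0 - p_detect) / 3.0
    return respond


def subject_threshold(run_thresholds):
    """Drop the first run and any run > 3 SD from the mean of the others."""
    kept = run_thresholds[1:]
    retained = []
    for i, value in enumerate(kept):
        others = kept[:i] + kept[i + 1:]
        if len(others) >= 2:
            spread = statistics.stdev(others)
            if abs(value - statistics.mean(others)) > 3 * spread:
                continue
        retained.append(value)
    return statistics.mean(retained)


rng = random.Random(1)
runs = [run_staircase(make_listener(2.0, rng)) for _ in range(6)]
estimate = subject_threshold(runs)
print([round(r, 2) for r in runs], round(estimate, 2))
```

Averaging the reversal points on a log scale (geometric mean) matches the multiplicative step sizes of the track; the across-run average is arithmetic, as in the text.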

Comparison with spatial tuning curve bandwidths

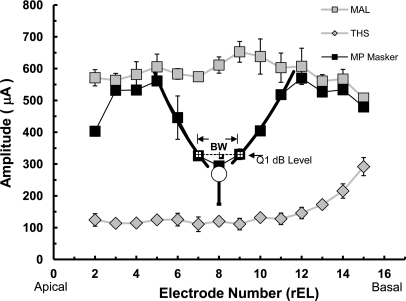

As described in Nelson et al. (2008), a forward-masking paradigm was used to obtain spatial tuning curves. The procedure measured the masker level needed to produce a constant amount of forward masking on a specific probe electrode, for several individual masker electrodes surrounding the probe electrode. The signals were biphasic current pulses delivered in a direct-stimulation mode via a specialized CI interface (Nucleus devices) or dedicated research processor (Advanced Bionics devices). The stimulation mode was bipolar (BP) for Nucleus users and monopolar (MP) for Advanced Bionics users. For several subjects, the stimulation mode was adjusted (e.g., from BP to BP + 1) to allow for sufficient levels of masking for the STC procedure, and thus was slightly different from that used in a subject’s speech processor program. The STCs were measured on a single electrode in the middle of the array for each of the 15 subjects. Bandwidth was defined as the width in mm of the STC, at a masker level that was 1 dB above its level at the STC tip. Figure 1 shows an example of a single spatial tuning curve (black squares) for one electrode in the middle of the array for one subject. Further details can be found in Nelson et al. (2008) and in Table 2 in Appendix A. The STC data were collected, on average, 3.5 yr prior to spectral ripple data. The stimulation mode of the subjects’ implants remained the same over that period.
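To make the bandwidth definition concrete, the sketch below estimates the width of a tuning curve at 1 dB above its tip. It assumes masker levels in µA, electrode positions in mm, and linear interpolation (in dB) between adjacent electrodes; the fitting procedure actually used by Nelson et al. (2008) may differ, and the function name and example values are hypothetical.

```python
import math


def stc_bandwidth_mm(positions_mm, masker_levels_ua, criterion_db=1.0):
    """Width of a tuning curve at `criterion_db` above its tip, or None."""
    levels_db = [20.0 * math.log10(a) for a in masker_levels_ua]
    tip = min(range(len(levels_db)), key=levels_db.__getitem__)
    target = levels_db[tip] + criterion_db

    def crossing(step):
        # Walk away from the tip until the curve first reaches the target level.
        i = tip
        while 0 <= i + step < len(levels_db):
            j = i + step
            if levels_db[j] >= target:
                frac = (target - levels_db[i]) / (levels_db[j] - levels_db[i])
                return positions_mm[i] + frac * (positions_mm[j] - positions_mm[i])
            i = j
        return None  # the curve never reaches the criterion on this side

    low_side, high_side = crossing(-1), crossing(+1)
    if low_side is None or high_side is None:
        return None
    return abs(high_side - low_side)


# Hypothetical curve: 1-mm electrode spacing, tip at 40 dB re 1 uA.
example_positions = [0.0, 1.0, 2.0, 3.0, 4.0]
example_levels = [10 ** (db / 20) for db in (46, 42, 40, 42, 46)]
example_bw = stc_bandwidth_mm(example_positions, example_levels)
print(f"bandwidth at tip + 1 dB: {example_bw:.2f} mm")
```

Returning `None` when one flank never reaches the criterion avoids reporting a one-sided width as if it were a full bandwidth.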

Figure 1.

Spatial tuning curve for subject D08, measured in a previous study (Nelson et al., 2008). Depicted by the black squares in this figure is the current level needed to just mask a low-level probe presented to electrode 8, as a function of masker electrode number. Stimulus amplitude (µA) is shown on the ordinate, with research electrode number (rEL) displayed on the abscissa. (The rEL numbering system normalizes the different numbering systems used by different implant devices; number 1 is assigned to the most apical electrode, with consecutive numbering proceeding to the basal end of the array.) Error bars indicate standard deviations. Gray squares represent current levels at maximum acceptable loudness (MAL) for each electrode; gray diamonds indicate current levels at threshold (THS) for each electrode. The open circle at the tip of the curve indicates the probe electrode, with probe level shown by the symbol’s vertical position and sensation level indicated by the height of the vertical line beneath it. Tuning curve bandwidth (BW) is defined as the width of the STC at 1 dB above the tip of the STC (Q1 dB Level).

Table 2.

Individual STC data (from Nelson et al., 2008).

| Subject Code | STC BW (mm) | Analysis Channel CF (Hz) | Analysis Channel BW (oct) | Broadband Ripple Discrim threshold (rpo) |
|---|---|---|---|---|
| C03 | 1.67 | 1159 | 0.48 | 1.29 |
| C05 | 6.7 | 1159 | 0.48 | 3.07 |
| C16 | 0.83 | 1388 | 0.57 | 2.28 |
| C18 | 3.51 | 1388 | 0.57 | 0.49 |
| C23 | 9.09 | 1159 | 0.48 | 0.41 |
| D02 | 0.95 | 1278 | 0.25 | 2.43 |
| D05 | 4.85 | 1278 | 0.25 | 0.68 |
| D08 | 2.22 | 1394 | 0.27 | 1.43 |
| D10 | 2.61 | 1160 | 0.27 | 4.27 |
| D19 | 2.01 | 1160 | 0.28 | 1.86 |
| N13 | 0.61 | 1447 | 0.20 | 2.64 |
| N14 | 1.54 | 1741 | 0.20 | 0.85 |
| N28 | 3.83 | 2177 | 0.20 | 0.74 |
| N32 | 3.82 | 2177 | 0.20 | 0.95 |
| N34 | 1.55 | 1672 | 0.20 | 1.80 |

Results and discussion

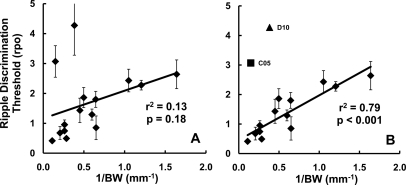

Spectral ripple discrimination thresholds (the highest ripple rate at which phase-reversed spectrally rippled stimuli could be discriminated) ranged from 0.41 to 4.27 rpo, with a mean of 1.68 rpo. This wide range across subjects is similar to that observed for the STC measures of bandwidth in the same subjects (Nelson et al., 2008). In addition, spectral ripple discrimination thresholds in these subjects are quite similar to the range of data reported by Won et al. (2007), despite the differences in spectral envelopes (full-wave rectified) used in that study. The left panel of Fig. 2 shows the broadband ripple discrimination thresholds as a function of the reciprocal of the BWSTC from Nelson et al. (2008) for each subject. The solid line is the least-squares fit to the data. Error bars represent one standard deviation. When all 15 subjects were included, simple regression analysis failed to reveal a significant correlation between BWSTC and spectral ripple discrimination thresholds (r² = 0.13, p = 0.18). However, the plot indicates two obvious outliers, subjects C05 and D10, whose results did not fall within the 95% confidence intervals of the linear regression fits. When these two subjects were removed from the regression (Fig. 2, right panel), a strong and significant correlation was observed (r² = 0.79, p < 0.001). For these remaining 13 subjects, broader spatial tuning (smaller values on the x axis) corresponded with poorer spectral ripple discrimination (fewer rpo at threshold, or smaller values on the y axis).
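As a rough check on the regression just described, the following sketch recomputes r² from the STC bandwidths and broadband thresholds listed in Table 2. It assumes the transformed bandwidth is the simple reciprocal 1/BW (in mm⁻¹) and an ordinary least-squares fit; the helper name `r_squared` is illustrative and not part of the original analysis.

```python
# (STC bandwidth in mm, broadband ripple threshold in rpo), copied from Table 2.
TABLE_2 = {
    "C03": (1.67, 1.29), "C05": (6.7, 3.07), "C16": (0.83, 2.28),
    "C18": (3.51, 0.49), "C23": (9.09, 0.41), "D02": (0.95, 2.43),
    "D05": (4.85, 0.68), "D08": (2.22, 1.43), "D10": (2.61, 4.27),
    "D19": (2.01, 1.86), "N13": (0.61, 2.64), "N14": (1.54, 0.85),
    "N28": (3.83, 0.74), "N32": (3.82, 0.95), "N34": (1.55, 1.80),
}


def r_squared(x, y):
    """Squared Pearson correlation, equal to r^2 of a simple linear regression."""
    n = len(x)
    mean_x, mean_y = sum(x) / n, sum(y) / n
    sxy = sum((a - mean_x) * (b - mean_y) for a, b in zip(x, y))
    sxx = sum((a - mean_x) ** 2 for a in x)
    syy = sum((b - mean_y) ** 2 for b in y)
    return sxy * sxy / (sxx * syy)


def fit_r2(excluded=()):
    rows = [v for k, v in TABLE_2.items() if k not in excluded]
    inverse_bw = [1.0 / bw for bw, _ in rows]
    thresholds = [thr for _, thr in rows]
    return r_squared(inverse_bw, thresholds)


r2_all = fit_r2()
r2_without_outliers = fit_r2(excluded=("C05", "D10"))
print(f"all 15 subjects: r^2 = {r2_all:.2f}")
print(f"without C05 and D10: r^2 = {r2_without_outliers:.2f}")
```

With the rounded table values, the two results should fall close to the reported r² of 0.13 and 0.79, which supports reading the transformation as a plain reciprocal.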

Figure 2.

Ripple discrimination thresholds as a function of transformed STC bandwidth (from Nelson et al., 2008). (a) Broadband ripple discrimination thresholds, in ripples per octave (rpo) with a linear regression fit to all 15 data points; (b) the regression line for the least squares fit to data from 13 subjects, excluding subjects C05 and D10.

Despite the encouraging trend observed in most subjects, the overall data set does not provide compelling evidence for concluding that BWSTC and ripple discrimination threshold are measures of the same underlying mechanism. One possible explanation for any discrepancies between spatial tuning and spectral ripple discrimination is that the STC represents a local, focused measure of spectral resolution at a place in the cochlea corresponding to the location near the single probe electrode in the middle of the implant array, whereas the standard broadband rippled noise paradigm yields a more global measure because the stimuli encompass a frequency range that spans almost all the electrodes in the array. It may be that discrimination thresholds in the spectral ripple task were mediated by the spectral resolution associated with electrodes remote from the electrode tested in the STC task. This explanation is tested in Experiment 2.

Another explanation may be that good performance in the spectral ripple discrimination task does not depend solely on spectral resolution abilities. The two outliers may have been using other cues to perform the task. As mentioned in the introduction, it has been suggested that spectral edge effects may play a role. The stimuli used in Experiment 1 had sharp spectral edges, limited only by the temporal onset and offset ramps applied to the stimuli. Because of this, the level of the spectral components at the edge could vary dramatically, depending on the starting phase of the stimuli. Also, having sharp spectral edges leaves open the possibility that the change in ripple phase is detected via a shift in the centroid of the spectral envelope. To examine this possibility, we re-tested a subset of our original subjects, including the two outliers and three additional subjects representing a wide range of performance, with shallow spectral slopes on either end of the spectrum to reduce potential spectral edge effects. The re-test occurred between 15 and 20 months after the original test.

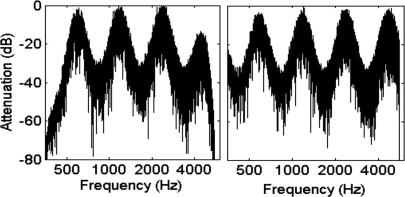

Experiment 1b: Effects of spectral edges on ripple discrimination

Spectrally rippled noise was generated in the same way as for Experiment 1, with either steep spectral edges (“non-windowed” stimuli) or with Hanning (raised-cosine) ramps applied to the spectral edges (“windowed” stimuli). The passband of the windowed stimuli was two octaves, with one-octave spectral ramps on either side, giving a total bandwidth of four octaves, as for the non-windowed stimuli. Figure 3 displays sample plots of spectrally windowed and non-windowed rippled noise in the left and right panels, respectively. All other stimulus parameters and the test procedure remained the same as in Experiment 1. Five of the original group of CI subjects participated. Each listener completed six runs of the adaptive procedure for windowed stimuli, and six runs for non-windowed stimuli run in blocks.

Figure 3.

Plots of windowed (left) and non-windowed (right) stimulus spectra. Stimuli are broadband (350–5600 Hz) noise with sinusoidal spectral ripples; spectral modulation frequency is 1 ripple per octave. The windowed stimulus includes Hanning (raised-cosine) ramps applied to the spectral edges. The non-windowed stimulus has steep spectral edges.

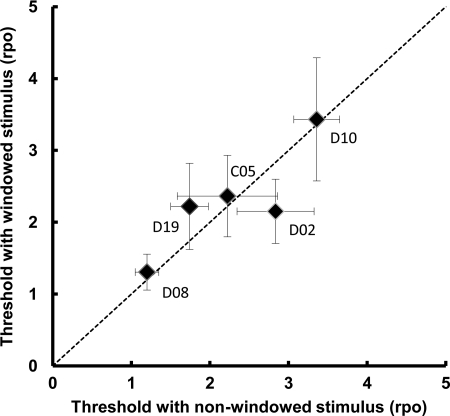

The results are displayed in Fig. 4. Ripple discrimination thresholds for the windowed stimuli are plotted as a function of thresholds for non-windowed stimuli. The correspondence between thresholds for the two stimulus types is good. Using paired comparisons, no significant difference in threshold was found between the spectrally windowed and non-windowed stimuli [t(4) = 0.59; p = 0.59], suggesting similar performance for broadband ripple discrimination regardless of whether the noise passband has steep or shallow slopes. This in turn suggests that spectral edge effects are unlikely to have dominated performance in the main experiment, at least for this subset of listeners.

Figure 4.

Ripple discrimination thresholds for shallow-sloped (windowed) stimuli, with half-octave Hanning ramps on each side, as a function of thresholds for steep-sloped (non-windowed) stimuli. The diagonal line represents perfect correspondence between the two types of stimuli (slope = 1).

One unexpected result of this comparison was that thresholds for broadband non-windowed stimuli for the two CI subjects who were outliers in the original data set were somewhat lower (poorer) on retest, compared to their original data. However, even when taken individually (with no post hoc correction factor), the difference was significant (p = 0.05) for one subject, C05, but not for the other (p = 0.06). The three other CI subjects from Experiment 1b who were retested on broadband “non-windowed” ripple discrimination, plus one additional subject drawn from the larger group, showed no significant test-retest differences. Therefore, for this group of six subjects there was no significant test-retest difference in the ripple discrimination threshold [t(5) = 1.44; p = 0.21]. Won et al. (2007) reported ripple discrimination test-retest data for 20 of their subjects (two sets of six runs completed on different days) and found no significant group differences; they concluded that the ripple reversal test appears to be a stable, repeatable measure. Our test-retest measures support their conclusions and extend them by showing that in general the thresholds can remain stable over the period of a year or more. We have no similar test-retest data for the STC measurements, and it may be that changes over the time course of these experiments (∼4 yr) added to the variability in comparing the measures of STC bandwidth and ripple discrimination.

EXPERIMENT 2: COMPARISON OF OCTAVE-BAND SPECTRAL RIPPLE DISCRIMINATION AND SPATIAL TUNING CURVES OBTAINED FROM THE SAME REGION OF THE COCHLEA

Rationale

The aim of this experiment was to explore the use of the ripple discrimination paradigm using octave-band rippled noise stimuli, matched in frequency for each subject to the probe electrodes used in obtaining their original STC measures, to test whether the correspondence between the two measures would become stronger if both measures were focused on the same cochlear location.

Methods

The subjects and procedure were the same as those used in Experiment 1. Spectrally rippled noise was again generated with log-spaced ripples along the frequency axis, using sinusoidal variations in level (dB) in the same way as in Experiment 1. The only difference was that the noise was band-limited to two octaves, with raised-cosine highpass and lowpass slopes applied to the amplitude spectrum of the lower and upper half-octaves, respectively, so that the unattenuated passband of the noise was one octave wide. The center frequency of the octave passband was selected for each subject individually to correspond to the center frequency of the filter from the speech processor that was assigned to the electrode for which the STC had been measured. In this way the STC and the ripple-discrimination task were as closely matched as possible in terms of assessing spatial resolution in the same region of the cochlea. As in Experiment 1, the stimuli were presented in the sound field at 60 dBA, roved by ± 3 dB on each interval.
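The band-limiting described above can be sketched as a spectral weighting function. The sketch assumes the raised-cosine slopes are defined on a log2 frequency axis and applied to linear amplitude, with the passband centered geometrically on the analysis-channel CF; the function name and defaults are illustrative.

```python
import math


def octave_band_weight(f_hz, center_hz, passband_oct=1.0, ramp_oct=0.5):
    """Amplitude weight: flat passband with raised-cosine ramps on either side."""
    offset = abs(math.log2(f_hz / center_hz))   # distance from CF in octaves
    half_pass = passband_oct / 2.0
    if offset <= half_pass:
        return 1.0                              # unattenuated passband
    if offset >= half_pass + ramp_oct:
        return 0.0                              # outside the two-octave band
    x = (offset - half_pass) / ramp_oct         # 0 at passband edge, 1 at band edge
    return 0.5 * (1.0 + math.cos(math.pi * x))


# Example with a CF of 1159 Hz, one of the analysis-channel CFs in Table 2.
cf = 1159.0
for octaves in (-1.0, -0.75, -0.5, 0.0, 0.5, 0.75, 1.0):
    f = cf * 2.0 ** octaves
    print(f"{f:7.1f} Hz  weight {octave_band_weight(f, cf):.3f}")
```

Setting `passband_oct=2.0` and `ramp_oct=1.0` gives the four-octave windowed stimulus of Experiment 1b under the same assumptions.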

Results and discussion

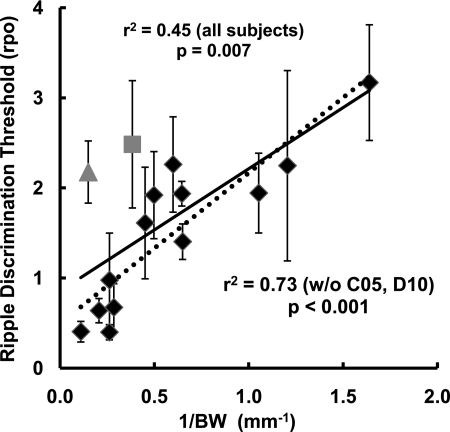

Octave-band ripple discrimination thresholds ranged from 0.4 to 3.17 rpo, with an average of 1.59 rpo. Figure 5 shows octave-band ripple discrimination thresholds plotted against the transformed BWSTC for the same subjects. Comparing this plot to the ones in Fig. 2, it can be seen that the data from the two subjects previously identified as outliers are now closer to the trend line fitted to data from the other 13 subjects. In fact, this experiment revealed a significant relationship between BWSTC and spectral ripple threshold when including the data from all subjects (r² = 0.45, p = 0.007; solid line), although the regression excluding subjects C05 and D10 continued to be stronger (r² = 0.73, p < 0.001; dotted line). Despite the differences evident in two subjects, octave-band and broadband ripple discrimination thresholds were strongly correlated across subjects overall (r² = 0.65, p < 0.001).

Figure 5.

Octave-band ripple discrimination (from Experiment 2) as a function of transformed STC bandwidth. Data from all subjects are included in the solid regression line; subjects C05 and D10 are identified with different symbol shapes. Data from 13 subjects, excluding C05 and D10, are included in the dotted regression line.

One possible interpretation of the better correspondence between STCs and narrow-band ripple measures when compared with Experiment 1 is that ripple discrimination using a broadband rippled noise stimulus might not reflect local differences in spectral resolution along the electrode array for a given listener. With a broadband stimulus, a listener might be responding to acoustic information within a limited region of better spectral/spatial resolution. If ripple discrimination varies with the region of the electrode array being stimulated by the noise carrier, this might be demonstrated by using different narrow passbands of spectrally rippled noise.

EXPERIMENT 3: FIXED OCTAVE-BAND RIPPLE DISCRIMINATION

Rationale

The aim of this experiment was to measure spectral ripple discrimination thresholds using four contiguous fixed octave-band noise stimuli to determine whether spectral ripple discrimination thresholds could vary across different regions of the electrode array. For each subject, broadband ripple discrimination thresholds were compared to fixed octave-band thresholds, to determine more accurately the relationship between broadband and narrowband spectral ripple discrimination measures.

Methods

The subjects and procedures were the same as in Experiments 1 and 2. The stimulus was one of four bands with one-octave passbands (350–700 Hz, 700–1400 Hz, 1400–2800 Hz, or 2800–5600 Hz), each with half-octave raised-cosine ramps applied to either side of the amplitude spectrum. The order in which the conditions were tested was randomized between subjects and between each repetition. For each subject, all four conditions were tested before any was repeated. Thresholds reflect the average of at least five repetitions.

Results

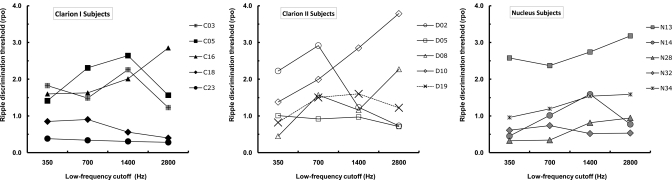

Figure 6 displays ripple discrimination thresholds for the individual listeners. The results are divided across three panels, according to implant device used by the subject. Ripple discrimination thresholds varied across listeners as well as within listeners as a function of frequency band. A repeated-measures analysis of variance (ANOVA) showed no main effect for frequency band [Greenhouse-Geisser corrected F(1.8, 24.8) = 1.68, p = 0.21], suggesting that there was no orderly pattern in ripple discrimination thresholds as a function of frequency band, when pooled across all subjects. Nevertheless, there were substantial variations in threshold across frequency in individual subjects. One-way ANOVAs performed on each individual subject’s data showed a main effect of frequency band for 11 of the 15 subjects. If such effects were produced only by random variability in threshold measurements, a significant effect would be expected to occur by chance in only about one of the 15 subjects. Thus it seems that these variations were “real” if not consistent between subjects. Interestingly, both subjects who were outliers in Experiment 1 (C05 and D10) showed substantial variation in threshold across frequency band, as would be expected if narrowband and broadband measures of frequency resolution did not correspond well.
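The chance argument above can be made explicit with a short calculation. It assumes a per-subject significance criterion of 0.05 (not stated in the text) and independent tests across subjects.

```python
from math import comb


def binomial_tail(n, k, p):
    """P(X >= k) for X ~ Binomial(n, p)."""
    return sum(comb(n, i) * p ** i * (1 - p) ** (n - i) for i in range(k, n + 1))


alpha = 0.05        # assumed per-subject significance criterion
n_subjects = 15

# Expected number of subjects reaching significance by chance alone.
expected_by_chance = n_subjects * alpha

# Probability that 11 or more of the 15 tests are significant by chance.
p_at_least_11 = binomial_tail(n_subjects, 11, alpha)

print(f"expected by chance: {expected_by_chance:.2f} subjects")
print(f"P(at least 11 of 15 by chance) = {p_at_least_11:.1e}")
```

Under these assumptions fewer than one subject would be expected to show an effect by chance, so 11 of 15 is far outside what random variability alone would produce.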

Figure 6.

Ripple discrimination thresholds as a function of low-frequency cutoff of octave-band rippled noise stimuli, for 15 individual subjects, separated by device type. (a) Performance of Clarion I subjects; (b) Clarion II subjects; and (c) Nucleus 22 subjects.

Thresholds in the octave bands were compared with thresholds in the broadband conditions tested in Experiment 1. To facilitate the comparisons, summary measures of the octave-band conditions were derived in three ways for each subject: (1) unweighted average of thresholds across all four octave-band conditions; (2) best threshold (i.e., greatest rpo value); and (3) worst threshold (lowest rpo value). Performance in the broadband condition (Experiment 1) was compared with performance in each of the three summary measures using paired-sample t tests. The broadband thresholds were not significantly different from the average fixed-octave-band thresholds (t = −2.00, p = 0.07) or the best octave-band thresholds (t = −1.9, p = 0.09), but were significantly different from the worst octave-band thresholds (t = −3.71, p = 0.003). This result suggests that the CI users may be utilizing information from across the array when performing broadband ripple discrimination, but our method lacks the statistical power to determine whether the integration of information is “optimal” in any sense.
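The three summary measures and the paired comparison can be sketched as below. The threshold values are invented for illustration and are not data from the study; the helper names and the direction of subtraction (octave-band summary minus broadband) are likewise assumptions.

```python
import math
import statistics

def summarize_octave_thresholds(thresholds_rpo):
    """Return (average, best, worst) across one subject's octave-band thresholds.
    Higher ripples-per-octave (rpo) values mean better discrimination."""
    return (statistics.mean(thresholds_rpo), max(thresholds_rpo), min(thresholds_rpo))

def paired_t(x, y):
    """Paired-sample t statistic for x - y, with n - 1 degrees of freedom."""
    diffs = [a - b for a, b in zip(x, y)]
    n = len(diffs)
    t = statistics.mean(diffs) / (statistics.stdev(diffs) / math.sqrt(n))
    return t, n - 1

# Hypothetical thresholds for three subjects (NOT values from the study).
octave = [[1.0, 2.0, 0.5, 1.5], [0.8, 0.6, 1.2, 1.0], [2.5, 1.5, 2.0, 3.0]]
broadband = [1.4, 0.9, 2.0]

worst = [summarize_octave_thresholds(s)[2] for s in octave]
t_stat, df = paired_t(worst, broadband)
print(f"t({df}) = {t_stat:.2f}")
```

The same `paired_t` call applies to the average and best summaries by selecting index 0 or 1 instead of 2.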

Discussion

Ripple discrimination of octave-band noise stimuli in different frequency regions revealed significant variability within and between subjects. Ripple discrimination appears to vary across the electrode array, presumably due to many factors such as neural survival and electrode placement. Supin et al. (1997) measured ripple discrimination in normal-hearing subjects as a function of the center frequency (CF) of octave-band rippled noise stimuli. They found that for frequencies below 1000 Hz, ripple discrimination threshold increased with increasing CF, but above 1000 Hz, thresholds remained roughly constant with increasing CF, in line with estimates of frequency selectivity using simultaneously presented notched noise (Glasberg and Moore, 1990). Although there was substantial variation across the electrode array for many of our individual CI subjects, on average there was no systematic trend in performance with increasing center frequency of the octave-band carrier. The lack of a systematic effect in the pooled data is in line with expectations based on the fact that the CI processor filters have roughly constant bandwidth on a log-frequency scale, implying that the filters’ spectral resolution should be independent of spectral region. Other factors, such as individual electrode placement, current spread, and neural survival patterns, may vary substantially with cochlear location in individual subjects, but such variations are unlikely to result in a systematic trend within a subject group (e.g., Hinojosa and Marion, 1983; Kawano et al., 1998).

COMPARISONS WITH MEASURES OF SPEECH PERCEPTION

Rationale

One benefit of the spectral ripple discrimination test is that it has been shown in some previous studies to correlate with measures of speech perception in noise (Won et al., 2007). The present experiment sought to replicate and extend these earlier findings by correlating both spectral ripple discrimination thresholds and STC bandwidths with measures of speech perception in quiet and in noise.

Methods

The subjects tested for STCs and spectral ripple discrimination were assessed on multiple measures of speech recognition. The tests included sentence and vowel materials in quiet and in noise. Sentence recognition testing was performed using IEEE sentences (IEEE, 1969), spoken by one male and one female talker. Each sentence contained five keywords. Subjects orally repeated each sentence after presentation, and one of the experimenters, sitting in the booth with the subject, recorded the number of correct keywords. The vowel test involved Hillenbrand vowels (Hillenbrand et al., 1995) spoken by six male talkers; this closed-set test is composed of 11 vowels in an h/V/d context. Subjects identified each vowel token by selecting the appropriate word from a list on a computer screen. Speech-shaped background noise was generated to match the long-term average spectrum of the speech materials. The speech and noise were mixed to produce the desired speech-to-noise ratio (SNR), which was verified acoustically with a sound level meter.

Speech recognition testing was performed in a sound-treated booth. All speech materials were presented at 65 dBA. Subjects used their own speech processors for speech recognition testing, with the processors set at each individual’s typical use settings; programs optimized for noise reduction were not used. The speech stimuli were presented through a single speaker placed one meter in front of the subject. For sentences, one 10-sentence list spoken by a male talker and one list spoken by a female talker were presented in each condition (in quiet and at SNRs of +20, +15, +10, and +5 dB), giving a total of 100 keywords for each condition.

Vowel stimuli were presented in the sound field in the same manner, with subjects using their own speech processors at typical use settings. The 11 vowels were presented in 66-item blocks (six presentations of each vowel per block), after a 33-item practice run preceding each SNR condition. Feedback was provided during the practice runs, but not during the test trials. The average of three blocks was used to calculate percent correct scores for each condition, with the requirement that all three scores fall within a 10% performance range. Thus, each score was based on 18 presentations of each vowel token.

Speech recognition performance in quiet and in noise for each subject was compared to BWSTC and to broadband spectral ripple discrimination thresholds, to investigate whether these measures of nonspeech spectral resolution were predictive of speech recognition ability. Octave-band ripple discrimination was not included as an independent variable in the analyses, since speech is a broadband signal.

Results

Speech performance in quiet is reported as rationalized arcsine-transformed (rau) scores (Studebaker, 1985) for recognition of key words in sentence materials and for vowel identification. Since no significant differences were found for sentence recognition performance for male vs. female talkers across subjects [repeated measures ANOVA, F(1,69) = 0.68, p = 0.41], the average score for male∕female talkers for each condition was used. For this group of subjects, percent correct for key words on sentence materials ranged from 5% to 99%. Percent correct on vowel recognition in quiet ranged from 33% to 96%, with a median of 87%.
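For reference, the rationalized arcsine transform can be sketched as below. The equation is not reproduced in this paper, so the constants follow the commonly cited form of the Studebaker (1985) transform and should be checked against that source. The item count of 100 matches the number of keywords per sentence condition.

```python
import math

def rau(num_correct, num_items):
    """Rationalized arcsine units for num_correct out of num_items
    (commonly cited form of the Studebaker, 1985, transform)."""
    theta = (math.asin(math.sqrt(num_correct / (num_items + 1)))
             + math.asin(math.sqrt((num_correct + 1) / (num_items + 1))))
    return (146.0 / math.pi) * theta - 23.0

# 100 keywords per sentence condition, as described in the Methods.
for correct in (5, 50, 99):
    print(correct, round(rau(correct, 100), 1))
```

The transform leaves mid-range scores close to their percent-correct values and stretches scores near 0% and 100%, which is why it is preferred for regression on proportion data.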

Performance in noise for both sentence and vowel materials was quantified as SNR50%, determined as follows: performance-intensity functions (percent correct as a function of SNR) were plotted for each subject, and logistic functions were fitted to the curves, using a least-squares criterion. The fitting method was the same as that used by Qin and Oxenham (2003), with the exception that the maximum level of performance was a free parameter, and the minimum level of performance was set to ∼9.1% in the vowel task to reflect chance performance in the closed-set task. The 50%-correct point was calculated from each of the fitted curves to produce the so-called speech reception threshold.
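One way to express the fitted function and the derivation of SNR50% is sketched below. The four-parameter logistic form and the names `logistic_pi` and `snr_at_50` are assumptions for illustration; the exact parameterization used by Qin and Oxenham (2003) is not given here, and the least-squares fitting step is omitted.

```python
import math

VOWEL_CHANCE = 100.0 / 11.0  # ~9.1% for the 11-alternative closed-set task

def logistic_pi(snr_db, floor, ceiling, midpoint_db, slope):
    """Percent correct predicted at snr_db by a logistic performance-intensity function."""
    return floor + (ceiling - floor) / (1.0 + math.exp(-slope * (snr_db - midpoint_db)))

def snr_at_50(floor, ceiling, midpoint_db, slope, target=50.0):
    """SNR (dB) at which the fitted function crosses `target` percent correct.
    Returns None when the function never reaches the target."""
    if ceiling <= target or floor >= target:
        return None
    ratio = (ceiling - floor) / (target - floor) - 1.0
    return midpoint_db - math.log(ratio) / slope

# Hypothetical fitted parameters (NOT values from the study).
print(snr_at_50(VOWEL_CHANCE, 90.0, 5.0, 0.4))
print(snr_at_50(0.0, 45.0, 5.0, 0.4))  # asymptote below 50%: no threshold
```

Returning `None` when the fitted maximum is below 50% mirrors the case in which a subject's asymptotic performance never reaches the criterion, so no SNR50% can be defined.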

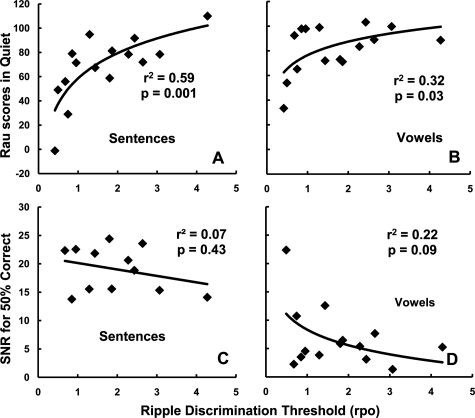

Broadband ripple discrimination and speech recognition

Since multiple comparisons were being made (four conditions: vowels and sentences, in quiet and in noise), a Bonferroni-corrected value of α = 0.0125 was used to determine statistical significance. Broadband ripple discrimination thresholds showed a moderate, statistically significant correlation with rau scores for word recognition within sentences in quiet (r2 = 0.47, p = 0.005), using linear regression. Visual inspection of the data suggested a compressive relationship between percent word recognition and ripple discrimination, even after arcsine transformation. Therefore the relationship was modeled using a logarithmic function, as shown in Fig. 7a, which resulted in a somewhat stronger relationship (r2 = 0.59, p = 0.001). In contrast, the correlations between ripple discrimination thresholds and arcsine-transformed recognition scores for vowels in quiet failed to reach significance using either linear regression (r2 = 0.19, p = 0.10) or a logarithmic function (r2 = 0.32, p = 0.03), as shown in Fig. 7b. All 15 subjects are included in the regressions.
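The significance criterion and the two regression models can be illustrated with the following sketch. The p values are those reported in the text; the helper names are hypothetical, and the logarithmic model is assumed to be a straight-line fit against the natural logarithm of the ripple threshold.

```python
import math

def bonferroni_alpha(family_alpha, n_comparisons):
    """Per-comparison criterion under a Bonferroni correction."""
    return family_alpha / n_comparisons

def r_squared(x, y):
    """Coefficient of determination for a least-squares line y = a + b * x."""
    n = len(x)
    mx, my = sum(x) / n, sum(y) / n
    sxx = sum((xi - mx) ** 2 for xi in x)
    syy = sum((yi - my) ** 2 for yi in y)
    sxy = sum((xi - mx) * (yi - my) for xi, yi in zip(x, y))
    return (sxy * sxy) / (sxx * syy)

def r_squared_log(x, y):
    """Same, but for the logarithmic model y = a + b * ln(x)."""
    return r_squared([math.log(xi) for xi in x], y)

alpha = bonferroni_alpha(0.05, 4)  # four conditions

# p values reported in the text for the quiet conditions.
reported_p = {
    "sentences, linear": 0.005,
    "sentences, log": 0.001,
    "vowels, linear": 0.10,
    "vowels, log": 0.03,
}
significant = {name: p < alpha for name, p in reported_p.items()}
print(alpha, significant)
```

With the corrected criterion, only the two sentence-in-quiet fits pass; the logarithmic vowel fit would pass an uncorrected 0.05 criterion but not the corrected one.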

Figure 7.

(a) Sentence recognition in quiet (rau scores) as a function of broadband ripple discrimination threshold. (b) Vowel recognition in quiet (rau scores) as a function of broadband ripple discrimination threshold. (c) SNR for 50% correct sentence recognition, interpolated∕extrapolated from performance-intensity (P-I) functions, as a function of broadband ripple discrimination threshold. Data from 12 subjects are included. (d) SNR for 50% correct vowel recognition, which was interpolated∕extrapolated from P-I functions for 14 subjects, as a function of broadband ripple discrimination threshold. Regression lines are all logarithmic fits, with the exception of (c), which shows a linear fit.

The relationships between ripple discrimination and sentence and vowel recognition in noise are shown in Figs. 7c, 7d, respectively. Plotted are the SNRs corresponding to 50% correct performance (calculated from the best-fitting performance-intensity functions, as described above) as a function of ripple discrimination threshold. Three subjects (C18, C23, N28) were excluded from sentence analysis and one (C23) from vowel analysis because their asymptotic performance fell below 50%. The SNR required for 50% word recognition in sentences for the remaining 12 subjects did not show a significant relationship with spectral ripple discrimination (r2 = 0.07, p = 0.43); the corresponding SNR for vowel recognition in noise for 14 subjects also failed to exhibit a significant correlation with ripple discrimination (r2 = 0.22, p = 0.09).

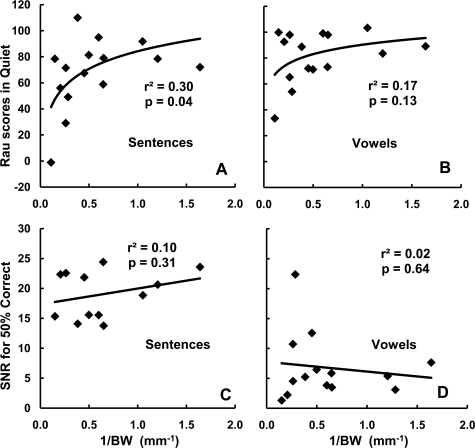

STC bandwidth and speech recognition

The upper two panels of Fig. 8 show sentence and vowel recognition in quiet (rau scores) as a function of 1∕BWSTC. A Bonferroni-corrected value of α = 0.0125 was again used to determine statistical significance. The correlations between transformed BWSTC and both the log-transformed sentence and vowel (rau) scores in quiet failed to reach statistical significance [r2 = 0.30, p = 0.04 and r2 = 0.17, p = 0.13, respectively; see Figs. 8a, 8b].

Figure 8.

(a) Sentence recognition in quiet (rau scores) as a function of 1∕BW. (b) Vowel recognition in quiet (rau scores) as a function of 1∕BW. Regression lines are log fits. (c) SNR for 50% correct sentence recognition, interpolated∕extrapolated from P-I functions, as a function of 1∕BW. The regression line is a linear fit. Data from 12 subjects are included. (d) SNR for 50% correct vowel recognition, which was interpolated∕extrapolated from P-I functions for 14 subjects, as a function of 1∕BW. The regression line is a linear fit.

The relationships between BWSTC and sentence and vowel recognition in noise are shown in the lower two panels of Fig. 8, which plot the SNRs corresponding to 50% correct performance (derived from the fitted performance-intensity functions) as a function of 1∕BWSTC. Again, three subjects (C18, C23, N28) were excluded from sentence analysis and one (C23) from vowel analysis because asymptotic performance fell below 50%. The BWSTC did not show a significant relationship with sentence recognition in noise for the remaining 12 subjects (r2 = 0.10, p = 0.31), nor with vowel recognition in noise for 14 subjects (r2 = 0.02, p = 0.64).

Discussion

Neither measure of spectral resolution—the spectrally local BWSTC measured using direct stimulation or the spectrally global spectral ripple discrimination threshold measured using the subjects’ own speech processor—produced robust correlations with measures of speech perception either in quiet or in noise. The one exception was the measure of sentence recognition in quiet, which correlated significantly with spectral ripple discrimination thresholds but missed significance when correlated with BWSTC. The lack of strong correlations is particularly surprising for the vowel stimuli, as it is generally thought that vowel recognition relies to a large degree on the discrimination of the formant frequencies. On the other hand, earlier studies in normal-hearing listeners using noise-excited envelope-vocoded speech had shown that reasonable vowel recognition was possible with as few as four broadly tuned channels of spectral information (Shannon et al., 1995).

In general, our correlations were not as strong as those reported by Won et al. (2007), who found robust correlations between spectral ripple discrimination thresholds and closed-set word recognition in various types of background, including noise, two-talker babble, and quiet. One potential reason is statistical power: their sample included 29 CI users, whereas our maximum sample size was 15; and Won et al. (2007) included no correction for multiple comparisons, whereas we adopted a more conservative approach. A more detailed comparison of the r2 values shows that the values found by Won et al. (2007) ranged from 0.25 to about 0.38 (absolute r values between 0.5 and 0.62). Although our values were lower than this range for the speech-in-noise conditions, the ranges from the two studies do overlap, suggesting that the differences may have been due at least in part to sample size and statistical approach, rather than other differences, such as the speech material used. Thus, a conservative conclusion may be that there exists a relationship between measures of spectral resolution and speech perception, but that the variance accounted for is relatively small.

GENERAL DISCUSSION

Spectral ripple discrimination as a measure of spectral resolution

The results of this study suggest that performance on spectral ripple discrimination tasks through the speech processor correlates with psychophysical forward-masked spatial tuning curves obtained through direct stimulation. Specifically, discrimination of spectral ripples in an octave-band carrier correlated significantly with BWSTC, when the band’s center frequency was selected individually for each subject to stimulate a region of the array centered on the probe electrode used in the STC experiment. In general, the same conclusion held when comparing the results from broadband spectral ripple discrimination and BWSTC, although the correlation was not significant when all subjects, including the two “outliers,” were analyzed. Results from rectangular and more gently sloped broadband spectra were very similar, suggesting that thresholds for ripple discrimination in the broadband condition were not influenced by artifacts at the spectral edges of the stimuli.

Discrimination of fixed octave-band rippled noise showed substantial variations across the four spectral regions in individual subjects, suggesting that spectral resolution may vary substantially on an individual basis across different cochlear locations. From the four fixed octave-band ripple discrimination measures for each subject, both the average threshold and the best threshold were congruent with broadband ripple discrimination thresholds. Overall, the results support the idea that spectral ripple discrimination and spatial tuning curves reflect the same underlying mechanisms relating to spatial selectivity or spectral resolution.

How do spectral ripple discrimination thresholds compare with the spectral resolution of the analysis filters within the subjects’ speech processors? Given that both the ripples and filter center frequencies in the speech processor are spaced (approximately) evenly along a logarithmic frequency scale, one might expect the limits of ripple discrimination to follow the Nyquist-Shannon sampling theorem (e.g., Oppenheim et al., 1999). This theorem, translated into the spectral domain, states that perfect reconstruction of the ripple spectrum is only possible if the filters are spaced no further apart than half the period of the highest spectral ripple rate. In other words, if the ripple rate is 1 ripple per octave, then the filters would need to be spaced less than 0.5 octaves apart for perfect reconstruction to be possible. In our subject population, the average filter spacing ranges from roughly 0.25 to 0.5 octave, depending on the device and the number of activated electrodes in the individual’s map, and excluding the apical- and basal-most electrodes, whose filters were often broader. In general, our Advanced Bionics C-I users, with maximum eight-electrode arrays, had average filter bandwidths of about a half octave (range 0.49–0.57) across the array; users of the C-II device, with 16 electrodes maximum, had average filter bandwidths of about a quarter octave (range 0.25–0.27). Nucleus users, with 22 electrodes maximum, had average filter bandwidths of just over a quarter octave (range 0.26–0.29). Based on the sampling theorem, perfect reconstruction would therefore only be possible for spectral ripples up to somewhere between 1 and 2 ripples per octave (i.e., for ripple periods of at least twice the bandwidth and spacing of the filters). However, it is important to remember that the task of ripple discrimination does not require perfect reconstruction of the spectrum; instead, any detectable difference between the original and phase-reversed spectra would in principle be sufficient to perform the task.
Therefore, the Nyquist-Shannon limit does not strictly apply here, and discrimination may remain possible in principle at considerably higher ripple rates, depending on factors such as the relationship between the phase of the stimulus and the filter center frequency, and the slopes of the filters. Figure 9 in Appendix B shows examples of simulated electrode outputs in response to sample stimuli from the experiment.
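The sampling-theorem arithmetic above can be written out as a short sketch. It assumes idealized filters spaced evenly on a log-frequency axis, and `max_ripple_rate_rpo` is a hypothetical helper name.

```python
def max_ripple_rate_rpo(filter_spacing_octaves):
    """Upper bound on the ripple rate (ripples per octave) for which the
    sampling theorem permits perfect reconstruction, given evenly spaced
    filters on a log-frequency axis: at least two filters per ripple period."""
    return 1.0 / (2.0 * filter_spacing_octaves)

for label, spacing in [("half-octave spacing", 0.5), ("quarter-octave spacing", 0.25)]:
    print(f"{label}: {max_ripple_rate_rpo(spacing):.1f} rpo")
```

Half-octave spacing gives a bound of 1 rpo and quarter-octave spacing gives 2 rpo. As noted above, discrimination thresholds can exceed these bounds because the task does not require reconstruction of the full spectrum.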

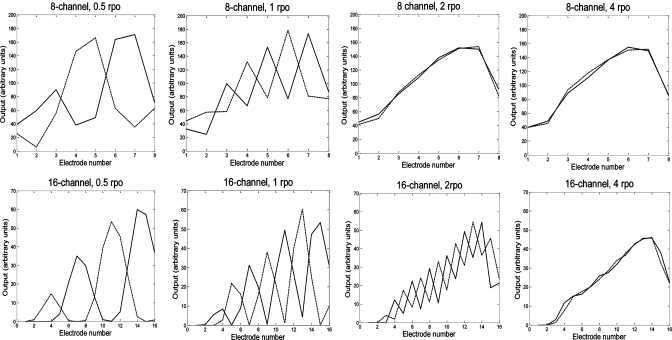

Figure 9.

Output patterns based on characteristics of one C-I subject and one C-II subject. Electrode number is shown on the abscissa, with current output (in arbitrary units) displayed on the ordinate. The top row of panels shows four stimulation patterns for an 8-channel CIS processor, and the bottom row simulates a 16-channel processor. From left to right, the plots illustrate the output for rippled noise stimuli of 0.5, 1, 2, and 4 rpo.

In the octave-band conditions (Experiments 2 and 3), band-limiting the stimulus presumably resulted in the activation of fewer electrodes than in the broadband condition (Experiment 1). As a rule, depending on individual subjects’ filter bandwidths (0.25 to 0.5 octaves), a one-octave stimulus would activate two to four electrodes. A decrease in the number of channels of information has been shown to adversely affect spectral-ripple discrimination with a broadband stimulus (e.g., Henry and Turner, 2003), with ripple discrimination thresholds reaching an asymptote at around 8 channels. However, in that study, as the number of channels was reduced, the (speech processor analysis) filters were proportionally broadened to encompass the full, original frequency range. As noted previously, spectral contrast should disappear once the filter bandwidths exceed the ripple spacing, so that a spectral peak and valley would both fall within the same filter. Certainly this would be the case in those studies manipulating the number of channels, in which a broadband rippled noise is “compressed” onto a small number of electrodes. In our study, the analysis filters were unmodified, so that for a given ripple rate the proportion of spectral peaks per electrode in the octave-band conditions essentially remained the same as in the broadband condition. This important difference may explain why we did not observe a large difference between thresholds in the octave-band and broadband conditions.

Overall, the comparison of BWSTC and spectral ripple discrimination thresholds suggests that ripple discrimination provides a reasonable measure of spectral resolution that is generally consistent with more direct but more time-consuming measures of spectral resolution. Our results also show that spectral edge effects are unlikely to have affected thresholds, at least for broadband spectrally rippled stimuli, and they suggest that the other potential problems with the ripple task, such as detection of shifts in the spectral centroid of the stimulus, may not adversely affect the technique’s ability to estimate spectral resolution. Indeed, it can be argued that all the potential “artifacts” mentioned in Sec. I require some degree of spectral resolution to become detectable. Nevertheless, it should also be acknowledged that a correlational study, by its nature, cannot be used to conclusively establish a causal link between two measures; it is, for instance, possible that the shared variance between the two measures is dictated by some other causal factor not necessarily related to frequency resolution. Even so, our results are certainly consistent with the idea that both BWSTC and spectral ripple discrimination thresholds reflect the underlying spectral resolution available to the CI user.

Relationships between spatial tuning curves, spectral ripple discrimination, and speech perception

Broadband spectral ripple discrimination performance correlated significantly with sentence recognition in quiet but did not correlate significantly with any speech in noise measures, in apparent contrast to the recent study of Won et al. (2007), which showed correlations between measures of spondee identification in noise and spectral ripple discrimination. However, as mentioned earlier, it is possible that the difference is primarily one of sample size and statistical approach used, as trends toward significance were also apparent in our data. Nevertheless, given the relatively small percentage of variance accounted for (typically less than 30%), it is clear that measures of frequency resolution alone do not provide a strong predictor of speech performance in individual subjects. One possible reason is that performance in the broadband ripple discrimination task can, in principle, be based on information from a local spectral region, in which resolution is particularly good, whereas good speech perception often relies on information from a wide spectral region, with loss of information in any region leading to potentially worse speech intelligibility.

Interestingly, correlating STC bandwidth and speech measures did not always produce the same conclusions as correlating spectral ripple discrimination thresholds and speech measures, even though the two measures of spectral resolution were correlated with each other. In an attempt to address this apparent discrepancy, the relationships between broadband spectral ripple discrimination thresholds, STCs, and speech recognition in quiet and in noise were re-examined, excluding data from subjects who might be considered outliers, or whose data were not included in one or other measure for other reasons. Specifically, regression analyses were repeated excluding one subject (C23) who differed from the rest of the subject pool in that she had a substantially broader STC and essentially no open-set speech understanding, as well as the two subjects (C05, D10) who were flagged as outliers in the ripple discrimination experiment. Using data from the remaining 12 subjects, correlation coefficients were derived by comparing transformed STC bandwidth, broadband spectral ripple discrimination thresholds, rau scores for sentence and vowel recognition, and SNRs corresponding to 50% correct for sentence and vowel recognition. Regression analysis, using a best-fit (in a least-squares sense) logarithmic model, showed only a relatively weak correlation remaining (non-significant using a Bonferroni-corrected α = 0.0125) between ripple discrimination and sentence recognition in quiet (r2 = 0.35, p = 0.042), closely matching the corresponding relationship between transformed STC bandwidth and sentence recognition in quiet (r2 = 0.36, p = 0.041). Using this same subset of subjects, the correlations between transformed STC bandwidth and both broadband and octave band ripple discrimination remained strong (r2 = 0.79, p < 0.001 for the former, and r2 = 0.83, p < 0.001 for the latter). 
Thus, the discrepancy in the relationships between the two non-speech measures and speech recognition appears to reflect the influence of a few individual subjects within a small sample, rather than a genuine difference between the two measures.

Finally, the finding that spectral ripple discrimination can vary across different spatial∕spectral regions within a given subject may have potential clinical significance. Although measures of frequency resolution do not appear to account for the majority of variance in speech perception scores, they may nevertheless provide useful information. For instance, it may be beneficial to eliminate electrodes from a CI user’s MAP in regions identified as having poor spatial selectivity, in favor of regions identified as having better resolution. Further investigation of the significance of local variations in spectral resolution is warranted to test this conjecture.

CONCLUSIONS

Spectral ripple discrimination thresholds for an octave-wide band of noise were significantly correlated with the bandwidths of the spatial tuning curve centered on the same electrode. Some differences were found between broadband and octave-band measures of spectral ripple discrimination, suggesting that differences in resolution across different regions of the cochlea may account in part for any discrepancies between spectrally global and spectrally local measures.

Overall the results suggest that spectral ripple discrimination provides a reasonable measure of spectral resolution in CI users—one that yields estimates that are consistent with the more direct but more time-consuming method of STC analysis. The results also suggest that CI subjects do not use spectral edge cues to perform the broadband ripple discrimination task.

Some significant correlations were found between the measures of spectral resolution and sentence recognition in quiet, but the correlations of both STC bandwidth and spectral ripple discrimination thresholds with measures of speech perception in noise were not statistically significant. These results are consistent with other reports in the literature (e.g., Hughes and Stille, 2009; Cohen et al., 2003; Zwolan et al., 1997) that measures of spectral resolution, whether global or local, do not provide a robust predictor of speech recognition abilities.

ACKNOWLEDGMENTS

This work was supported by a grant from the National Institutes of Health (R01 DC 006699) and by the Lions International Hearing Foundation. We thank Christophe Micheyl for help with the statistical analyses, Leo Litvak of Advanced Bionics for providing the MATLAB code for the spectral ripple computer simulations, and our research subjects for their participation.

APPENDIX A: DETAILED RESULTS FROM STC MEASUREMENTS

Additional details from the STC measurements and from individual subjects’ clinical MAPs are provided in Table II, including the assigned analysis channel CF and BW for the probe electrode, as well as broadband spectral ripple discrimination thresholds. The analysis channel bandwidths for adjacent electrodes on either side of the probe electrode differed by 5% or less. All Clarion I and Clarion II subjects were tested in MP mode and Nucleus subjects were tested in some form of BP mode for the STC experiment.

APPENDIX B: COMPUTER SIMULATIONS OF RIPPLED NOISE PROCESSING

Calculated stimulation patterns across electrodes are shown in Fig. 9 for broadband rippled noise passed through a computer simulation of Advanced Bionics HiRes CIS processing. Parameters (including number of electrodes, threshold and comfort levels for each electrode, and input dynamic range, all obtained from clinical MAPs) were entered to simulate specific CI subject characteristics.

References

- Berenstein, C. K., Mens, L. H., Mulder, J. J. S., and Vanpoucke, F. J. (2008). “Current steering and current focusing in cochlear implants: Comparison of monopolar, tripolar, and virtual channel electrode configurations,” Ear Hear. 29, 250–260. 10.1097/AUD.0b013e3181645336

- Cohen, L. T., Richardson, L. M., Saunders, E., and Cowan, R. S. C. (2003). “Spatial spread of neural excitation in cochlear implant recipients: Comparison of improved ECAP method and psychophysical forward masking,” Hear. Res. 179, 72–87. 10.1016/S0378-5955(03)00096-0

- Dorman, M. F., Loizou, P. C., Fitzke, J., and Tu, Z. (1998). “The recognition of sentences in noise by normal-hearing listeners using simulations of cochlear-implant signal processors with 6-20 channels,” J. Acoust. Soc. Am. 104, 3583–3585. 10.1121/1.423940

- Drennan, W. R., Won, J. H., Nie, K., Jameyson, E., and Rubinstein, J. T. (2010). “Sensitivity of psychophysical measures to signal processor modifications in cochlear implant users,” Hear. Res. 262, 1–8. 10.1016/j.heares.2010.02.003

- Eddins, D. A., and Bero, E. M. (2007). “Spectral modulation detection as a function of modulation frequency, carrier bandwidth, and carrier frequency region,” J. Acoust. Soc. Am. 121, 363–372. 10.1121/1.2382347

- Friesen, L., Shannon, R. V., Baskent, D., and Wang, X. (2001). “Speech recognition in noise as a function of the number of spectral channels: Comparison of acoustic hearing and cochlear implants,” J. Acoust. Soc. Am. 110, 1150–1163. 10.1121/1.1381538

- Glasberg, B. R., and Moore, B. C. (1990). “Derivation of auditory filter shapes from notched-noise data,” Hear. Res. 47, 103–138. 10.1016/0378-5955(90)90170-T

- Henry, B. A., and Turner, C. W. (2003). “The resolution of complex spectral patterns by cochlear implant and normal-hearing listeners,” J. Acoust. Soc. Am. 113, 2861–2873. 10.1121/1.1561900

- Henry, B. A., Turner, C. W., and Behrens, A. (2005). “Spectral peak resolution and speech recognition in quiet: Normal hearing, hearing impaired, and cochlear implant listeners,” J. Acoust. Soc. Am. 118, 1111–1121. 10.1121/1.1944567

- Hillenbrand, J., Getty, L. A., Clark, M. J., and Wheeler, K. (1995). “Acoustic characteristics of American English vowels,” J. Acoust. Soc. Am. 97, 3099–3111. 10.1121/1.411872

- Hinojosa, R., and Marion, M. (1983). “Histopathology of profound sensorineural deafness,” Ann. N.Y. Acad. Sci. 405, 459–484. 10.1111/j.1749-6632.1983.tb31662.x

- Hughes, M. L., and Stille, L. J. (2009). “Psychophysical and physiological measures of electrical-field interaction in cochlear implants,” J. Acoust. Soc. Am. 125, 247–260. 10.1121/1.3035842

- IEEE (1969). “IEEE recommended practice for speech quality measurements,” Trans. Audio. Electroacoust. 17, 225–246. 10.1109/TAU.1969.1162058

- Kawano, A., Seldon, H. L., Clark, G. M., Ramsden, R. T., and Raine, C. H. (1998). “Intracochlear factors contributing to psychophysical percepts following cochlear implantation,” Acta Otolaryngol. 118, 313–326. 10.1080/00016489850183386

- Litvak, L. M., Spahr, A. J., Saoji, A. A., and Fridman, G. Y. (2007). “Relationship between perception of spectral ripple and speech recognition in cochlear implant and vocoder listeners,” J. Acoust. Soc. Am. 122, 982–991. 10.1121/1.2749413

- McKay, C., Azadpour, M., and Akhoun, I. (2009). “In search of frequency resolution,” Conference on Implantable Auditory Prostheses, July 2009. Lake Tahoe, CA.

- Nelson, D. A., Donaldson, G. S., and Kreft, H. (2008). “Forward-masked spatial tuning curves in cochlear implant users,” J. Acoust. Soc. Am. 123, 1522–1543. 10.1121/1.2836786

- Oppenheim, A. V., Schafer, R. W., and Buck, J. R. (1999). Discrete-time Signal Processing, 2nd ed. (Prentice-Hall, Upper Saddle River, NJ), pp. 86–87.

- Pfingst, B. E., and Xu, L. (2003). “Across-site variation in detection thresholds and maximum comfortable loudness levels for cochlear implants,” J. Assoc. Res. Otolaryngol. 5, 11–24.

- Qin, M. K., and Oxenham, A. J. (2003). “Effects of simulated cochlear-implant processing on speech reception in fluctuating maskers,” J. Acoust. Soc. Am. 114, 446–454. 10.1121/1.1579009

- Saoji, A. A., and Eddins, D. A. (2007). “Spectral modulation masking patterns reveal tuning to spectral envelope frequency,” J. Acoust. Soc. Am. 122, 1004–1013. 10.1121/1.2751267

- Shannon, R. V., Zeng, F.-G., Kamath, V., Wygonski, J., and Ekelid, M. (1995). “Speech recognition with primarily temporal cues,” Science 270, 303–304. 10.1126/science.270.5234.303

- Studebaker, G. (1985). “A ‘rationalized’ arcsine transform,” J. Speech Hear. Res. 28, 455–462.

- Supin, A. Y., Popov, V. V., Milekhina, O. N., and Tarakanov, M. B. (1994). “Frequency resolving power measured by rippled noise,” Hear. Res. 78, 31–40. 10.1016/0378-5955(94)90041-8

- Supin, A. Y., Popov, V. V., Milekhina, O. N., and Tarakanov, M. B. (1997). “Frequency-temporal resolution of hearing measured by rippled noise,” Hear. Res. 108, 17–27. 10.1016/S0378-5955(97)00035-X

- Won, J. H., Drennan, W. R., and Rubinstein, J. T. (2007). “Spectral-ripple resolution correlates with speech reception in noise in cochlear implant users,” J. Assoc. Res. Otolaryngol. 8, 384–392. 10.1007/s10162-007-0085-8

- Won, J. H., Drennan, W. R., Jung, K. H., Jameyson, E. M., Nie, K., and Rubinstein, J. T. (2010). “The effect of the number of channels on spectral-ripple, Schroeder-Phase discrimination, and modulation detection in cochlear implant and normal-hearing listeners,” Poster presented at 33rd Annual Mid-Winter ARO Conference, Feb., Anaheim, CA.

- Zwolan, T. A., Collins, L. M., and Wakefield, G. H. (1997). “Electrode discrimination and speech recognition in postlingually deafened adult cochlear implant subjects,” J. Acoust. Soc. Am. 102, 3673–3685. 10.1121/1.420401