Abstract

There is increasing evidence to suggest that primary sensory cortices can become active in the absence of external stimulation in their respective modalities. This occurs, for example, when stimuli processed via one sensory modality imply features characteristic of a different modality; for instance, visual stimuli that imply touch have been observed to activate the primary somatosensory cortex (SI). In the present study, we addressed the question of whether such cross-modal activations are content specific. To this end, we investigated neural activity in the primary somatosensory cortex of subjects who observed human hands engaged in the haptic exploration of different everyday objects. Using multivariate pattern analysis of functional magnetic resonance imaging data, we were able to predict, based exclusively on the activity pattern in SI, which of several objects a subject saw being explored. Along with previous studies that found similar evidence for other modalities, our results suggest that primary sensory cortices represent information relevant for their modality even when this information enters the brain via a different sensory system.

Keywords: cross-modal processing, functional neuroimaging, multivariate pattern analysis, sensory systems

Introduction

An increasing number of studies indicate that primary sensory cortices can be activated in the absence of modality-specific external stimulation. For example, the primary visual (V1), auditory (A1), and somatosensory (SI) cortices can be activated during visual (Le Bihan et al. 1993; Kosslyn et al. 1995), auditory (Yoo et al. 2001; Kraemer et al. 2005), and tactile (Yoo et al. 2003) mental imagery, respectively. Furthermore, visual stimuli that imply sound, touch, or smell can activate primary auditory (Calvert et al. 1997), somatosensory (Blakemore et al. 2005; Ebisch et al. 2008; Schaefer et al. 2009; Pihko et al. 2010), and olfactory (González et al. 2006) cortices. Of note, in the visual and auditory systems, these activity patterns appear to be content specific: for instance, V1 activity reflects the content of visual mental images subjects maintain (Kosslyn et al. 1995; Slotnick et al. 2005; Thirion et al. 2006; Harrison and Tong 2009; Serences et al. 2009), and the activity pattern in primary auditory cortex and early auditory association cortices differentiates among visual stimuli that imply different kinds of sound (Meyer et al. 2010). In the present study, we addressed the analogous question for the somatosensory modality. We created several short video clips, each of which portrayed human hands exploring an everyday object, such as a light bulb or a tennis ball. While our subjects watched these clips, we investigated the patterns of ongoing neural activity in primary somatosensory cortex using multivariate pattern analysis (MVPA) of functional magnetic resonance imaging (fMRI) data. If the neural representations induced in SI during the observation of the stimuli reflected specifically the tactile and proprioceptive information depicted in each of the clips, then MVPA of the SI activity fingerprint should allow us to predict which of several clips a subject had seen.

Materials and Methods

Participants

Five female and 4 male right-handed subjects were initially enrolled in the study. One subject informed us midway through the scan that she had trouble seeing the stimuli due to myopia (the subject had initially stated she could see the stimuli clearly); her data therefore were excluded from all analyses. The experiment was undertaken with the understanding and written consent of each subject.

Stimuli

The stimuli consisted of 5 short video clips, each of which displayed 2 human hands engaged in the haptic exploration of a common object: a set of keys, a tennis ball, a skein of soft yarn, the leaves of a plant, and a light bulb (see Supplementary Video Clips 1–5). All clips were 5 s long. The subjects were told simply to “watch the video clips attentively and move as little as possible”; in other words, they were not asked to make an effort to imagine the tactile and proprioceptive content of the video clips.

Stimulus Presentation

Timing and presentation of the video clips were controlled with MATLAB (The Mathworks), using the freely available Psychophysics Toolbox Version 3 software (Brainard 1997). The clips were projected onto a rear projection screen at the end of the scanner bore which subjects viewed through a mirror mounted on the head coil. One clip was presented every 16 s. A fixation cross was displayed during the 11-s interval between the end of one clip and the beginning of the next. During each functional run, the 5 video clips were presented 4 times each in random order, for a total of 20 clips per run. Each subject completed 10 runs, seeing 40 repetitions of each video clip across the entire experiment.
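The timing described above can be checked with a short sketch. It is written in Python rather than the MATLAB/Psychophysics Toolbox code used in the study, and the function name, the fixed random seed, and the unconstrained shuffle are illustrative assumptions; only the durations and repetition counts come from the text.

```python
import random

CLIPS = ["keys", "tennis ball", "yarn", "plant", "light bulb"]
CLIP_S = 5        # clip duration in seconds (from the text)
FIXATION_S = 11   # fixation interval in seconds (from the text)
REPS_PER_RUN = 4  # presentations of each clip per run (from the text)
N_RUNS = 10       # runs per subject (from the text)


def make_run_schedule(rng):
    """Return a list of (onset_s, clip) pairs for one run, in random order."""
    trials = CLIPS * REPS_PER_RUN
    rng.shuffle(trials)  # assumption: plain shuffle with no ordering constraints
    soa = CLIP_S + FIXATION_S  # 16 s between successive clip onsets
    return [(i * soa, clip) for i, clip in enumerate(trials)]


rng = random.Random(0)  # fixed seed is an assumption, for reproducibility only
schedule = [make_run_schedule(rng) for _ in range(N_RUNS)]

# Count how often each clip appears across the whole experiment.
reps_per_clip = {
    c: sum(clip == c for run in schedule for _, clip in run) for c in CLIPS
}
```

Each run holds 20 trials with onsets spaced 16 s apart, and every clip is shown 40 times across the 10 runs.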

Image Acquisition

Images were acquired with a 3-Tesla Siemens MAGNETOM Trio System. Echo-planar volumes were acquired continuously with the following parameters: repetition time (TR) = 2,000 ms, echo time (TE) = 25 ms, flip angle = 90°, 64 × 64 matrix, in-plane resolution 3.0 × 3.0 mm, 41 transverse slices, each 3.0 mm thick, covering the whole brain. To precisely define the somatosensory mask (see below), we also acquired a structural T1-weighted magnetization-prepared rapid gradient echo (MP-RAGE) image in each subject (TR = 2,530 ms, TE = 3.09 ms, flip angle = 10°, 256 × 256 matrix, 208 coronal slices, 1 mm isotropic resolution).

Univariate Analysis of fMRI Data

Although the main focus of the present study lay in the multivariate analysis of the activity patterns in primary somatosensory cortex and the stimulus paradigm was optimized accordingly, we analyzed the data using a conventional, univariate approach as well. This analysis was performed with FSL (FMRIB Software Library, Smith et al. 2004). Data preprocessing for the GLM analysis involved the following steps: motion correction (Jenkinson et al. 2002), brain extraction (Smith 2002), slice timing correction, spatial smoothing with a 5-mm full-width at half-maximum Gaussian kernel, high-pass temporal filtering using Gaussian-weighted least-squares straight line fitting with σ = 50.0 s (where σ denotes the standard deviation of the Gaussian distribution) and prewhitening (Woolrich et al. 2001). Each of the 5 stimulus types was modeled with a separate regressor derived from a convolution of the task design and a gamma function to represent the hemodynamic response function. Motion correction parameters were included in the design as additional regressors. The 10 runs for each participant were combined into a second-level random-effects analysis, and a third-level intersubject analysis was performed using a mixed-effects design.

Registration of the functional data to the high-resolution anatomical image of each subject and to the standard Montreal Neurological Institute (MNI) brain was performed using the FSL FLIRT tool (Jenkinson and Smith 2001). Functional images were aligned to the high-resolution anatomical image using a 7 degree-of-freedom linear transformation. Anatomical images were registered to the MNI-152 brain using a 12 degree-of-freedom affine transformation. All images were thresholded with FSL's cluster thresholding algorithm, using a minimum Z score of 2.3 (P < 0.01) and a cluster size probability of P < 0.05. As opposed to conventional cluster thresholding, which uses an absolute number of voxels as cluster size criterion, FSL's cluster thresholding algorithm uses Gaussian random field theory to take an image's spatial smoothness into account when determining an appropriate cluster size threshold. In our case, for instance, the algorithm considers the spatial smoothness of the image thresholded at Z = 2.3 and determines, accordingly, which clusters are big enough to occur by chance less than 5% of the time.

Regions of Interest

The primary somatosensory cortex is composed of Brodmann's areas (BA) 3, 1, and 2, which, in anteroposterior order, are located on the postcentral gyrus. We opted for an anatomical, rather than a functional, definition of our region of interest for 2 reasons. First, a functional localizer (defined as the areas active while the subjects themselves would perform actions similar to those depicted in the video clips) would likely label somatosensory association areas as well (such as the secondary somatosensory cortex SII and BA 5 and 7). Since our aim was to predict visual stimuli from SI activity alone, we would have had to revert to anatomical criteria at any rate to exclude such secondary activations. Second, previous MVPA studies (e.g., Harrison and Tong 2009; Serences et al. 2009) have demonstrated that information about perceptual stimuli can be contained in areas that do not show “net activations.” Thus, defining our mask by a functional localizer would have incurred the risk of missing relevant SI voxels.

For these 2 reasons, we defined individual anatomical masks of the postcentral gyrus in all subjects (Supplementary Figure 1). In the anteroposterior dimension, the mask was limited by the floors of the central and postcentral sulci, respectively. We are aware that BA 3a often extends more anteriorly than the floor of the central sulcus, onto the posterior wall of the precentral gyrus; however, as there is no anatomical landmark to identify the veridical border between BA 3 and 4, the central sulcus appeared to be the most reasonable approximation. The mask was confined to the hemispheric convexity and did not include any areas on the medial wall of the hemisphere. To define the lateroinferior mask border, we chose the following criterion: we only labeled the postcentral gyrus on horizontal slices on which the corpus callosum was not visible. Following this procedure resulted in masks that were cut off well above the Sylvian fissure (Supplementary Figure 1, right panel), so that the probability that the masks included parts of SII was low (Eickhoff et al. 2006).

To illustrate classifier performance outside primary somatosensory cortex, we established control masks in primary visual cortex, secondary somatosensory cortex (subregion OP1), anterior cingulate cortex, middle frontal gyrus, and anterior middle temporal gyrus. With the exception of the secondary somatosensory cortex, these were the same control masks we had used in a previous study concerned with MVPA in the early auditory cortices (Meyer et al. 2010). The masks were obtained by choosing the corresponding probabilistic masks from the Harvard–Oxford (anterior cingulate cortex, middle frontal gyrus and anterior middle temporal gyrus) and Jülich (primary visual cortex and secondary somatosensory cortex) cortical atlases included with FSL and then warping them from the standard space into each individual's functional space. We selected a probability threshold for each mask so that the average number of voxels it occupied in the subjects' functional spaces did not differ significantly from the average number of voxels occupied by the SI target mask.
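The size matching of the control masks can be illustrated with a minimal sketch. It works on a single hypothetical subject and simply picks the candidate threshold whose mask size is closest to a target voxel count; the study matched the average size across subjects and did not state how candidate thresholds were searched, so the function names, the candidate grid, and the toy probabilities are all assumptions.

```python
def mask_size(prob_percent, threshold):
    """Number of voxels whose atlas probability (in percent) reaches the threshold."""
    return sum(p >= threshold for p in prob_percent)


def pick_threshold(prob_percent, target_voxels, candidates):
    """Return the candidate threshold whose mask size is closest to target_voxels."""
    return min(
        candidates,
        key=lambda thr: abs(mask_size(prob_percent, thr) - target_voxels),
    )


# Hypothetical probabilistic mask: 100 voxels with probabilities 0..99 percent.
toy_probs = list(range(100))

# Hypothetical target: an SI mask of 30 voxels; thresholds tried in 10% steps.
chosen = pick_threshold(toy_probs, target_voxels=30, candidates=range(10, 100, 10))
```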

Multivariate Pattern Analysis

MVPA uses pattern classification algorithms to identify distributed neural representations associated with specific perceptual stimuli or classes of stimuli. As opposed to traditional (univariate) fMRI analysis techniques, in which each voxel is analyzed separately, MVPA can find information in the spatial pattern of activation across multiple voxels. For example, whereas the average activation in a region of interest may not differ significantly between 2 stimulus conditions, information may be contained nonetheless within the spatial profile of activity in that region. MVPA is most often used for decoding, that is, it aims at identifying a specific perceptual representation based on the pattern of neural activation in a region of interest. For a more detailed description of the method and its applications, we refer the reader to some of the reviews that have been published on the topic: Haynes and Rees 2006; Norman et al. 2006; Kriegeskorte and Bandettini 2007.

MVPA was performed using the PyMVPA software package (Hanke et al. 2009), in combination with LibSVM's implementation of the linear support vector machine (http://www.csie.ntu.edu.tw/~cjlin/libsvm/). Data from the 10 functional scans of each subject were concatenated and motion corrected to the middle volume of the entire time series and were then linearly detrended and converted to Z scores by run. For each subject, the anatomically defined mask was warped from the structural into the functional space, where all subsequent statistical analyses were performed.
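The run-wise detrending and Z scoring can be sketched for a single voxel as follows. This is a plain-Python illustration, not the PyMVPA routines used in the study; the function name, the use of the population standard deviation, and the toy time series are assumptions.

```python
from statistics import mean, pstdev


def detrend_and_zscore(run_ts):
    """Remove a least-squares line from one voxel's run, then z-score it."""
    n = len(run_ts)
    t = list(range(n))
    t_mean, y_mean = mean(t), mean(run_ts)
    # Ordinary least-squares slope of signal against volume index.
    slope = sum((ti - t_mean) * (yi - y_mean) for ti, yi in zip(t, run_ts)) / sum(
        (ti - t_mean) ** 2 for ti in t
    )
    resid = [yi - (y_mean + slope * (ti - t_mean)) for ti, yi in zip(t, run_ts)]
    sd = pstdev(resid)  # assumption: population SD; residuals already have zero mean
    return [r / sd for r in resid]


# Hypothetical raw signal of one voxel over a very short run.
toy_run = [100.0, 101.5, 101.0, 103.5, 103.0, 105.5]
z = detrend_and_zscore(toy_run)
```

Applying the function separately to each run, as the text describes, prevents slow drifts or baseline shifts between runs from masquerading as stimulus-related pattern differences.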

Given that we presented one video clip every 16 s and that the TR was 2 s, 8 whole-brain volumes were acquired between the onset of a video clip and the onset of the next. We focused our analysis on the 2 volumes acquired between 6 and 10 s post stimulus onset for 2 reasons. First, these 2 images could be assumed to capture the peak of the hemodynamic response function. Second, in our previous study (Meyer et al. 2010), we had successfully discriminated (silent) sound-implying video clips from neural activity recorded in the early auditory cortices between 7 and 9 s post stimulus onset. The mentioned study had used a sparse-sampling paradigm (so that the sound-implying video clips could be presented during scanner silence) with a single-image acquisition per video clip, the timing of which had been based on prior piloting. As there was no need for sparse sampling in the present study, we could afford to extend the time window of the previous study. In the light of these 2 arguments, the somatosensory activity patterns acquired between 6 and 10 s after stimulus onset were the most likely to contain information about the video clips, with information content presumably decreasing both before and after that time window. As is evident from Supplementary Figure 2, post hoc analyses confirmed this hypothesis.
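The arithmetic behind the choice of volumes can be made explicit with a small sketch. It assumes that volume k (counted from 0 at clip onset) is acquired during the interval from k × TR to (k + 1) × TR and that clip onsets coincide with volume boundaries; neither detail is stated in the text, and the function name is illustrative.

```python
TR_S = 2.0              # repetition time in seconds (from the text)
SOA_S = 16.0            # onset-to-onset interval in seconds (from the text)
WINDOW_S = (6.0, 10.0)  # analysis window after clip onset (from the text)


def volumes_in_window(tr_s, window_s):
    """0-based indices, relative to clip onset, of volumes lying fully inside the window.

    Assumes volume k is acquired during [k * TR, (k + 1) * TR).
    """
    start, stop = window_s
    n_vols = int(stop // tr_s)
    return [k for k in range(n_vols) if k * tr_s >= start and (k + 1) * tr_s <= stop]


vols_per_trial = int(SOA_S / TR_S)           # volumes between successive clip onsets
selected = volumes_in_window(TR_S, WINDOW_S)  # volumes entering the analysis
```

Under these assumptions, each trial spans 8 volumes, of which the fourth and fifth (indices 3 and 4) fall within the 6 to 10 s window.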

We further hypothesized that prediction performance would improve if we averaged the 2 mentioned image volumes to train and test the classifier. It is known that consecutively acquired fMRI volumes are not independent measures, so that even if we assessed classifier performance on the 2 time points separately, the tests would not be independent. Averaging the 2 time points, on the other hand, amounts to a temporal smoothing of the data and can be expected to increase the signal-to-noise ratio of the patterns that are used to train and test the classifier. For this reason, averaging of consecutively acquired images is a commonly used technique in MVPA (e.g. Kamitani and Tong 2005; Clithero et al. 2009; Rissman et al. 2010). Thus, all the results presented below were obtained by training and testing the classifier on the activity patterns averaged from the 2 volumes acquired between 6 and 10 s post stimulus onset. As expected, training and testing the algorithm on either of the 2 individual volumes alone produced slightly lower prediction performance, but the results were comparable in terms of statistical significance (the time point between 8 and 10 s actually producing more statistically significant discriminations than the 2 time points combined).
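Averaging the 2 volumes into one pattern per trial can be sketched as below. The data layout (one list of voxel values per volume), the volume indices 3 and 4, and the toy numbers are assumptions made for illustration.

```python
def average_patterns(trial_volumes, indices):
    """Average the voxel patterns of the chosen volumes within one trial."""
    chosen = [trial_volumes[i] for i in indices]
    return [sum(vals) / len(chosen) for vals in zip(*chosen)]


# Hypothetical trial: 8 volumes (TR = 2 s, 16 s per trial) x 3 voxels.
trial = (
    [[0.0, 0.0, 0.0]] * 3
    + [[1.0, -0.5, 0.2], [0.6, -0.1, 0.4]]  # the 2 volumes inside the analysis window
    + [[0.0, 0.0, 0.0]] * 3
)
pattern = average_patterns(trial, [3, 4])  # one averaged pattern per trial
```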

We performed all possible 2-way discriminations between pairs of individual stimuli (n = 10) as well as a 5-way discrimination between all 5 stimuli. Training and testing of the classifier algorithm for each discrimination task was performed according to a cross-validation approach: for each cross-validation step, the classifier was trained on 9 functional runs and tested on the tenth. This procedure was repeated 10 times, using each run as test run once. In each cross-validation step, performance was calculated as the number of correct guesses of the classifier divided by the number of test trials. Overall performance for each discrimination task was obtained by averaging the results from the 10 cross-validation steps.
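The leave-one-run-out scheme can be sketched as follows. To keep the example self-contained, a nearest-class-mean rule stands in for the linear support vector machine that was actually used; the function names, the sample layout, and the toy data are likewise assumptions.

```python
from itertools import combinations

CLIPS = ["keys", "tennis ball", "yarn", "plant", "light bulb"]
N_RUNS = 10


def leave_one_run_out(n_runs):
    """Yield (train_runs, test_run) for every cross-validation step."""
    for test_run in range(n_runs):
        yield [r for r in range(n_runs) if r != test_run], test_run


def cross_validated_accuracy(samples, classify):
    """samples: list of (run, label, pattern); classify(train, pattern) -> label."""
    fold_scores = []
    for train_runs, test_run in leave_one_run_out(N_RUNS):
        train = [s for s in samples if s[0] in train_runs]
        test = [s for s in samples if s[0] == test_run]
        correct = sum(classify(train, pattern) == label for _, label, pattern in test)
        fold_scores.append(correct / len(test))  # correct guesses / test trials
    return sum(fold_scores) / len(fold_scores)   # average over the 10 steps


def nearest_mean(train, pattern):
    """Stand-in classifier (NOT the linear SVM used in the study)."""
    def dist_to_class(label):
        rows = [p for _, lab, p in train if lab == label]
        centre = [sum(v) / len(rows) for v in zip(*rows)]
        return sum((a - b) ** 2 for a, b in zip(pattern, centre))
    return min(sorted({lab for _, lab, _ in train}), key=dist_to_class)


pairs = list(combinations(CLIPS, 2))  # all 2-way discriminations

# Hypothetical, perfectly separable toy data: 4 trials per clip and run.
toy = [
    (run, lab, [1.0, 0.0] if lab == "keys" else [0.0, 1.0])
    for run in range(N_RUNS)
    for lab in ("keys", "yarn")
    for _ in range(4)
]
toy_accuracy = cross_validated_accuracy(toy, nearest_mean)
```

Splitting by run, rather than by trial, keeps all trials of the test run out of training, so temporally adjacent volumes never straddle the training and test sets.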

In order to determine which voxels contributed most to the classifier's successful discrimination of the stimuli, we established sensitivity maps based on the 5-way discrimination task among all stimuli. When performing multiclass discriminations, LibSVM uses the so-called “one-against-one method”: it carries out all pairwise discriminations among the stimuli and then bases its final “guess” on the outcome of these individual discriminations. For each of the pairwise discriminations, the so-called linear support vector machine (SVM) weights can be extracted, which provide an index for how much individual voxels contributed to the prediction performance of the classifier. In order to determine sensitivity maps for the 5-way discrimination, we added the SVM weights of all pairwise discriminations (averaged across all 10 cross-validation folds) for each voxel. In each subject, we thresholded the resulting map at the 95th percentile (i.e., we only included the 5% most discriminative voxels) and then warped it into the standard space in order to display a map of the resulting overlap.
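Both steps, the one-against-one vote and the thresholded sensitivity map, can be sketched in a few lines. The sketch does not call LibSVM or PyMVPA; the function names and toy values are hypothetical, ties in the vote are broken arbitrarily, the rank-based percentile cutoff is a simplification, and summing absolute weights is an assumption, since the text states only that the weights were added.

```python
from collections import Counter


def one_against_one_vote(pairwise_winners):
    """Return the label that wins the most pairwise discriminations.

    Ties go to the label encountered first (an arbitrary choice in this sketch).
    """
    return Counter(pairwise_winners.values()).most_common(1)[0][0]


def sensitivity_mask(pair_weights, percentile=95):
    """Sum |weight| over all pairwise classifiers and keep the top voxels.

    Using absolute values is an assumption; the cutoff is a simple rank-based
    percentile, not an interpolated one.
    """
    n_vox = len(next(iter(pair_weights.values())))
    total = [sum(abs(w[v]) for w in pair_weights.values()) for v in range(n_vox)]
    ranked = sorted(total)
    cutoff = ranked[min(n_vox - 1, int(n_vox * percentile / 100))]
    return [t >= cutoff for t in total]


# Hypothetical outcome of the 3 pairwise classifiers in a 3-class toy problem.
winners = {("A", "B"): "A", ("A", "C"): "A", ("B", "C"): "C"}
guess = one_against_one_vote(winners)

# Hypothetical fold-averaged weights: 20 voxels, voxel 7 carries most of the signal.
weights = {pair: [0.1] * 20 for pair in winners}
weights[("A", "B")][7] = -2.0
weights[("A", "C")][7] = 1.5
mask = sensitivity_mask(weights)
```

With 20 voxels, the 95th-percentile criterion retains a single voxel, here the one with the largest summed weight.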

Statistical Analyses

All P values indicated along with the prediction performance of the multivariate pattern classifier are the results of 2-tailed t-tests across all 8 subjects. An underlying assumption of parametric testing is that the values for which significance is assessed are normally distributed and are free to vary widely about the mean. This assumption is violated when testing percentages or ratios that are bound to a certain interval (e.g., to the interval between 0 and 1 in the case of an MVPA classifier). Nonetheless, we assessed the significance of our data using t-tests, for the following 2 reasons. First, we conducted a Lilliefors test on the results of all discriminations (2-way and 5-way) to assess whether the values were compatible with the assumption of an underlying normal distribution; this assumption could not be rejected in any case (at P < 0.05). Second, we carried out an arcsine-root transformation of all results and applied t-tests to the transformed values; this is an accepted procedure to perform t-testing on percentage and proportion values. The resulting P values were very similar to those we obtained from the t-tests on the untransformed results: in particular, Figure 4b, which illustrates the levels of significance for all 2-way discriminations, would look identical except for a single discrimination which would no longer be significant at P < 0.001 but at P < 0.01 instead (P = 0.0015). Presumably, the similarity in t-test results among the transformed and untransformed values was observed because the classifier performance values generally were not located near the limits of the possible range (that is, they were not located close to 0 or 1).
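The comparison between raw and arcsine-root-transformed values can be sketched as follows. The per-subject accuracies are hypothetical and are not the study's data; the sketch computes only t statistics and compares them with the 2-tailed 5% critical value for 7 degrees of freedom, since the Python standard library offers no t distribution for exact P values.

```python
import math
from statistics import mean, stdev

T_CRIT_DF7 = 2.365  # 2-tailed 5% critical value of Student's t, 7 degrees of freedom


def arcsine_root(p):
    """Arcsine-root transform for a proportion p in [0, 1]."""
    return math.asin(math.sqrt(p))


def one_sample_t(values, mu):
    """t statistic for the null hypothesis mean(values) == mu."""
    return (mean(values) - mu) / (stdev(values) / math.sqrt(len(values)))


# Hypothetical per-subject accuracies (n = 8); NOT the study's data.
acc = [0.57, 0.60, 0.62, 0.64, 0.66, 0.68, 0.70, 0.71]
chance = 0.5

t_raw = one_sample_t(acc, chance)
# The chance level has to be transformed as well before testing against it.
t_arc = one_sample_t([arcsine_root(a) for a in acc], arcsine_root(chance))
```

For proportions in this mid-range the transform is nearly linear, so the two t statistics stay close together, in line with the explanation given above for the similarity of the P values.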

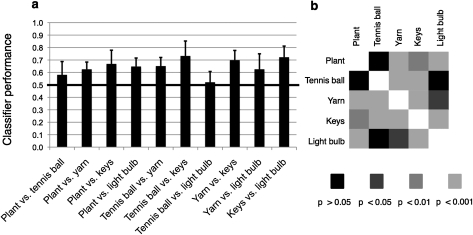

Figure 4.

Classifier performance on 2-way discrimination tasks. (a) Each bar represents classifier performance on a 2-way discrimination task, averaged across all 8 subjects. Error bars represent the standard deviation. (b) Levels of significance of all 2-way discrimination tasks (2-tailed t-tests, n = 8).

Results

Univariate Analysis of fMRI Data

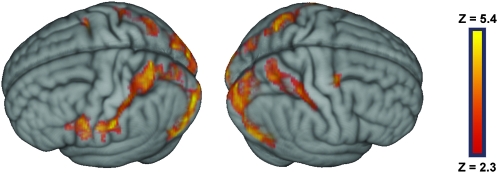

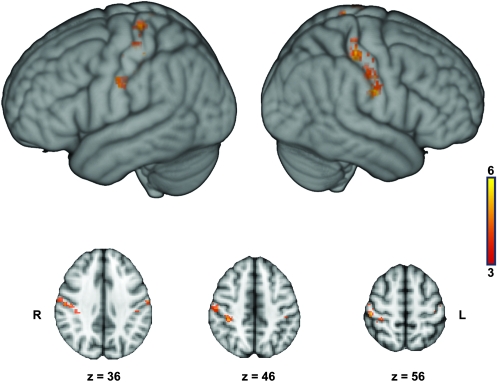

At the group level, watching touch-implying video clips, as compared with fixation, led to significant activity in the medial and lateral aspects of the occipital lobe bilaterally, the superior parietal lobule bilaterally, the postcentral sulcus and posterior portion of the postcentral gyrus bilaterally, the ventro-anterior portion of the inferior parietal lobule and adjacent postcentral gyrus on the left, the ventral precentral gyrus and the adjacent, posterior portion of the inferior frontal gyrus on the left, and at the junction of the precentral sulcus and the superior frontal sulcus on the right (Fig. 1 and Supplementary Table 1). Contrasting separately each of the video clips against fixation led to activity patterns very similar to the overall contrast just described (Supplementary Figure 3).

Figure 1.

Group statistical maps illustrating the brain areas that were more activated during the observation of touch-implying video clips than during fixation. Activated areas have a minimum Z score of 2.3 (P < 0.01) and passed a cluster size probability of P < 0.05.

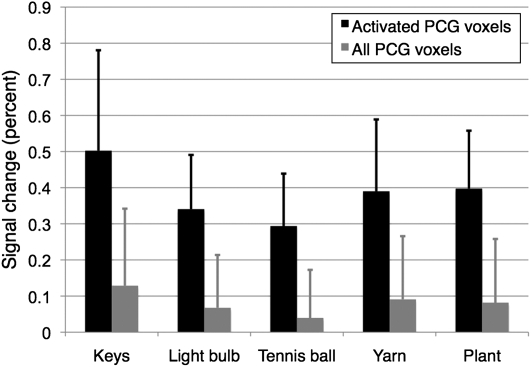

To further analyze the individual contributions of the 5 video clips to the overall activation pattern, we calculated the percent signal change each of the clips induced in the postcentral gyrus (gray bars in Fig. 2). The ROI for this group-level analysis was obtained by warping the anatomically defined postcentral gyrus masks of the individual subject into the standard space and including all those voxels in the group-level mask that were present in at least 3 subjects. While these data show that all 5 clips led to activation within the postcentral gyrus, it is clear from Figure 1 and Supplementary Figure 3 that the area activated by the video clips comprised only a relatively small portion of the postcentral gyrus. In order to obtain a more meaningful comparison of the activity levels induced by the different clips, we also assessed the percent signal change induced by each clip in an ROI limited to the voxels that had been significantly activated in the overall contrast depicted in Figure 1. While the signal changes in this subregion of the postcentral gyrus, not surprisingly, were more pronounced (black bars in Fig. 2), the relationships between the individual activation amplitudes remained similar: while the signal changes elicited by the plant, tennis ball, light bulb, and yarn stimuli were comparable, that of the key stimulus was somewhat higher than the others.

Figure 2.

Percent signal change induced by the individual clips within the postcentral gyrus. The gray bars represent the signal changes induced within the entire mask (which was obtained by warping all individual anatomical masks into the standard space and then including those voxels that were present in at least 3 subjects). The black bars represent the signal changes induced in a subregion of the postcentral gyrus mask limited to the voxels that were significantly activated in the overall contrast of touch observation versus fixation, as shown in Figure 1. Error bars represent the standard deviation. PCG: postcentral gyrus.

Multivariate Pattern Analysis

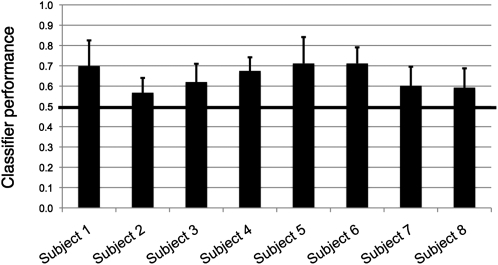

We first performed all 10 possible 2-way discriminations among the 5 video clips for each subject. Overall prediction performance, averaged across all discriminations and all subjects, was 0.647; this result was significantly different from the chance level of 0.5 (P = 1.9 × 10⁻⁴; 2-tailed t-test, n = 8). When assessed separately for each hemisphere, prediction performance was 0.633 (P = 2.4 × 10⁻⁴) in the right-hemispheric mask and 0.586 (P = 1.6 × 10⁻³) in the left-hemispheric mask. These 2 values were significantly different from one another (P = 0.016, 2-tailed, paired t-test, n = 8). Performance in individual subjects, averaged across all 10 discrimination tasks, ranged from 0.568 to 0.711 (Fig. 3).

Figure 3.

Classifier performance in individual subjects. Each bar represents classifier performance in an individual subject, averaged across all 10 two-way discrimination tasks. Chance performance is 0.5, as indicated by the black line. Error bars represent the standard deviation.

Averaged across all subjects, the classifier performed significantly above chance (P < 0.05) for 8 of the 10 2-way discriminations (Fig. 4). Given that the proportion of significant t-tests was much higher than the significance criterion applied (0.8 vs. 0.05), no correction for multiple comparisons was carried out. The only stimulus pairs that did not yield significant discrimination performance were tennis ball versus plant (performance 0.581; P = 0.069) and tennis ball versus light bulb (performance 0.521; P = 0.526). Thus, while the discrimination between the tennis ball and the plant clips was close to significant, prediction performance for the discrimination between the tennis ball and the light bulb was practically at chance level. Performance on the other 8 two-way discriminations ranged from 0.625 to 0.732. By contrast, when we only used the mean activity value within the postcentral gyrus mask of each subject to train and test the classifier, none of the discriminations yielded a prediction performance significantly different from chance (Supplementary Figure 4).

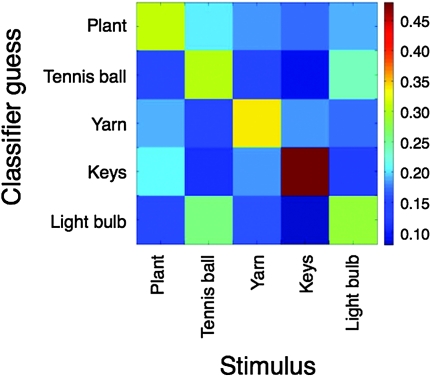

Performance on the 5-way discrimination among all stimuli, averaged across all subjects, was 0.342; this result was significantly different from the chance level of 0.2 (P = 1.4 × 10⁻³). Two interesting observations could be made based on the confusion matrix resulting from the 5-way discrimination (Fig. 5): first, for each video clip, the classifier produced the correct guess more often than any other guess (as is evident from the lightly colored rectangles along the diagonal of the graph). Second, while the classifier produced the correct guess considerably more often than any other guess for the plant, yarn, and key stimuli, the poor discrimination between the tennis ball and the light bulb clips is apparent once more.

Figure 5.

Confusion matrix illustrating classifier performance on the 5-way discrimination task. The x-axis represents the pattern presented to the classifier and the y-axis represents the classifier's guess. The classifier produced the correct guess more often than any other guess for all 5 stimuli. It is also obvious that the tennis ball and the light bulb trials were often confused.

Figure 6 displays a group-level sensitivity map derived from the 5-way discrimination (see Materials and Methods). In accordance with the superior classifier performance in the right-hemispheric mask, more of the highly discriminative voxels were found in the right hemisphere than in the left. The map also suggests that classifier performance did not result from one circumscribed area of the primary somatosensory cortex but relied on distributed representations. While the most discriminative voxels were clustered in the posterior part of the postcentral gyrus and in the postcentral sulcus in the right hemisphere, their location appears somewhat more arbitrary on the left. In both hemispheres, a cluster of discriminative voxels can be found close to the inferior border of the mask, in the ventral-most part of the primary somatosensory cortex, near the border of the secondary somatosensory cortex SII (see Eickhoff et al. 2006).

Figure 6.

Group-level sensitivity map, representing the overlap of the 5% most discriminative voxels in individual subjects, after warping the individual subject maps into the standard space (see Materials and Methods). The color code denotes the number of subjects in whom a certain voxel surpassed the threshold criterion. It is obvious that a majority of the most discriminative voxels was located in the right hemisphere, in accordance with the asymmetry in classifier performance. It is also obvious, especially on the axial cuts, that most of these voxels are clustered on the posterior wall of the postcentral gyrus and in the postcentral sulcus, corresponding mainly to BA 2. On the axial cuts, the right hemisphere is displayed on the left side of the image and vice versa.

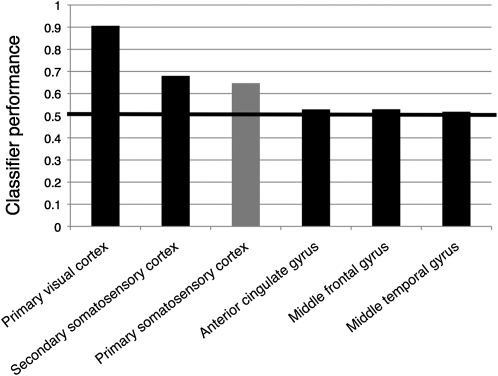

Finally, we assessed classifier performance in a number of regions outside the SI target mask (see Materials and Methods). As can be gleaned from Figure 7, the classifier performed extremely well in the primary visual cortex: prediction performance, averaged across all subjects and all 2-way discriminations, exceeded 0.9. Performance in secondary somatosensory cortex was also slightly better than in SI. By contrast, the classifier performed close to chance level in the other control regions.

Figure 7.

Classifier performance outside the primary somatosensory cortex. The bars represent classifier performance averaged across all subjects and all 2-way discriminations for the target mask in primary somatosensory cortex (gray bar) and for control masks in primary visual cortex, secondary somatosensory cortex, anterior cingulate cortex, middle frontal gyrus, and anterior middle temporal gyrus (black bars). The control masks were adjusted in size to the somatosensory target mask (see Materials and Methods).

Discussion

Brain Areas Activated during the Observation of Touch

The results of the univariate subtraction contrast between the observation of touch-implying video clips and fixation are in general agreement with those of earlier studies investigating either the observation of touch applied to human body parts or the observation of actions that involved touching objects (Keysers et al. 2004; Blakemore et al. 2005; Ebisch et al. 2008; Schaefer et al. 2009; Gazzola and Keysers 2009; for a review, see Keysers et al. 2010). Specifically, these studies noted activations in the lateral occipital cortices (presumably corresponding to area hMT+), the superior parietal lobule, the anterior part of the inferior parietal lobule, the dorsal premotor cortex, and the inferior frontal gyrus. Furthermore, most, but not all (see Keysers et al. 2004), prior studies found that observing touch activates the primary somatosensory cortex, in particular, BA 2 (Ebisch et al. 2008; Schaefer et al. 2009 [see their allocentric condition]; Gazzola and Keysers 2009). In keeping with this, the superior parietal activity cluster we observed in the present investigation encompassed the postcentral sulcus and posterior aspects of the postcentral gyrus, corresponding predominantly to BA 2.

Interestingly, part of the activity cluster we found in the left inferior parietal lobule also extended into the postcentral gyrus. Based on the probabilistic maps established by Eickhoff et al. (2006), at least part of this activity likely falls into primary somatosensory cortex. Previous touch observation studies also reported activity in this general region, but they attributed it to SII (Keysers et al. 2004; Ebisch et al. 2008), possibly because it was located somewhat more posteriorly than the activation focus we found in the current study. It may seem surprising that the observation of touch-implying hand actions would activate this ventral portion of the postcentral gyrus, which falls outside the region classically thought to represent the hand and forearm areas. It is interesting to note, however, that in subjects who engage in tactile object recognition themselves (as opposed to watching video clips depicting such action), there is also one continuous cluster of activity that reaches from the hand area of SI all the way to the SII area (Reed et al. 2004).

Tactile and Proprioceptive Representations

It is interesting to note that there are 2 broad categories of studies that show activation of somatosensory cortices in response to visual stimuli: those addressing the observation of touch (Keysers et al. 2004; Blakemore et al. 2005; Ebisch et al. 2008; Schaefer et al. 2009; Pihko et al. 2010) and those addressing the observation of action (Avikainen et al. 2002; Oouchida et al. 2004; Raos et al. 2007; Evangeliou et al. 2009; Gazzola and Keysers 2009; Pierno et al. 2009). Clearly, the 2 groups are not entirely distinct as many of the latter studies involved the observation of transitive actions that implied touch as well (e.g., grasping certain objects). However, there are exceptions: activation of the primary somatosensory cortex was also observed when subjects observed simple flexion and extension movements of the experimenter's wrist (Oouchida et al. 2004) and even when they viewed still images of a hand executing a pointing movement (Pierno et al. 2009). BA 2, in particular, is activated by visual stimuli both when they depict touch (Ebisch et al. 2008; Schaefer et al. 2009; Gazzola and Keysers 2009) and when they do not (Oouchida et al. 2004; Pierno et al. 2009). These findings presumably can be explained by the fact that BA 2 processes not only tactile (along with BA 3b and 1) but also proprioceptive (along with BA 3a) signals. In a recent review on somatosensation in social perception, Keysers et al. (2010) mention that “Neurons in BA2 are especially responsive when objects are actively explored or manipulated with the hands so that tactile and proprioceptive information is combined.” The authors go on to conclude that BA 2 could be activated during the observation of actions because “BA 2 might be particularly involved in vicariously representing the haptic combination of tactile and proprioceptive signals that would arise if the participant manipulated the object in the observed way” (Keysers et al. 2010).

Based on this evidence, we believe that both the tactile and proprioceptive information contained in the video clips contributed to the successful classification of the somatosensory activity patterns. In fact, it is interesting to note that the only 2 stimuli the classifier failed to discriminate completely were the light bulb and the tennis ball. This finding suggests that object shape (which is similar between the 2 objects) might be more important for classification than surface texture (which is distinct). As proprioception plays an essential role in shape discrimination, one might speculate, by extension, that the classifier relies more on proprioceptive than on tactile representations.

Content Specificity of the Activity Patterns in Primary Somatosensory Cortex

The results of the univariate subtraction contrast discussed earlier indicate that the mere observation of video clips that contain tactile and proprioceptive information activates part of the primary somatosensory cortex and thus confirm earlier findings. However, the main goal of the current study was to determine whether activity patterns in SI were correlated specifically with the content of the video clips our subjects watched, just as they have been found to be correlated with actual touch stimuli subjects perceive (Beauchamp et al. 2009). A recent single-unit study in monkeys (Lemus et al. 2010) suggested that such content specificity for cross-modal stimulus processing might not exist in primary somatosensory cortex. Specifically, in that study, single-unit recordings were obtained from SI while monkeys performed an audio-tactile frequency discrimination task. The monkeys were presented with a tactile flutter stimulus, followed by an acoustic flutter stimulus (or vice versa), and had to indicate, each time, whether the first or the second stimulus was of higher frequency. During the presentation of the tactile flutter stimulus, many SI neurons fired at a higher rate when a flutter stimulus of higher frequency was presented. While some primary somatosensory neurons also responded to the presentation of the acoustic flutter stimulus, their firing rate was not correlated with stimulus frequency. The authors concluded that cross-modal encoding of sensory stimuli was restricted to areas outside the primary sensory cortices.

By showing that touch-implying visual stimuli can be successfully predicted from the neural activity pattern in SI, the current data point in a different direction. We can see several possible reasons for this discrepancy. First and foremost, the use of MVPA permitted us to analyze the behavior of the neuronal population in the primary somatosensory cortex as a whole, whereas Lemus and colleagues were only able to assess the firing rates of a restricted sample of primary somatosensory neurons and only evaluated those firing rates individually or as a population average. Small variations in the firing rates of individual neurons thus may not have reached significance and may have canceled each other out at the population level. An MVPA of the single-unit firing rates recorded by Lemus and colleagues might have been more successful at decoding the identity of the acoustic flutter stimuli. A second difference between their paradigm and ours is the nature of the stimuli. While Lemus and colleagues employed abstract acoustic and tactile flutter stimuli that became associated with each other while the monkeys were trained for the audio-tactile frequency discrimination task, our video clips had been specifically designed to trigger strong tactile associations in the subjects. We assume that a single-unit analysis of primary somatosensory neurons in our subjects (possibly involving a multivariate analysis technique) would have been successful at predicting the video clips as well. Conceivably, such an experiment could also be performed in monkeys: ideally, the video clips would depict monkey hands exploring toys and food items the animals often touch themselves. Finally, the cross-modal association in the monkey study was audio-tactile, while our video clips triggered visuo-tactile associations. Although we do not consider this difference of prime importance, we cannot rule out that it contributes to the discrepancy.

The hemispheric asymmetry in classifier performance is intriguing. This finding would be in line with the general idea that the right-hemispheric parietal cortices are more important for stereognosis than the left, as suggested by neuropsychological observations in lesion patients. Note that it is unlikely that the interhemispheric difference in performance was due to the nature of the video clips: since the right hand was closer to the camera and more visible in all clips, one would assume that, if anything, the representations evoked in the left-hemispheric somatosensory mask would be more detailed.

The superior classifier performance in the right postcentral gyrus is reflected in the group-level sensitivity map as well: the majority of the most discriminative voxels were located in the posterior part of the right postcentral gyrus and in the right postcentral sulcus (Fig. 6). The finding that this area contains the most detailed information about the video clips is, again, supportive of the suggestion made by Keysers and colleagues (see preceding section), according to which BA 2 would be of significance in representing the haptic and proprioceptive content of actions the subject observes. It is somewhat surprising that there are also bilateral clusters of discriminative voxels in the very ventral portion of SI, near the border of the secondary somatosensory cortex SII (Eickhoff et al. 2006). The meaning of these ventral activations is not clear to us, as they do not fall into the classical hand/forearm area of SI. However, the sensitivity maps are in line with the fact that we also observed activity in this area in several of the univariate contrasts of the individual video clips versus rest (Supplementary Figure 3). Furthermore, as mentioned earlier, a continuous activation cluster reaching from the SI hand region all the way to the SII area was also observed in subjects who used their hands for tactile object recognition (Reed et al. 2004).

Was Prediction Performance Due to Differences in the Overall Activity Level within the Mask?

As is evident from Figure 2, the different video clips did not activate the primary somatosensory cortex to the same extent. Does this indicate that the prediction performance of the classifier was due to differences in the overall level of activity within SI, rather than different spatial activation patterns? Several points should be kept in mind when answering this question.

First, as mentioned in the Results section, none of the 2-way discriminations among individual stimuli were successful when training and testing the classifier solely on the mean level of activity within the mask, rather than on the spatial profile. (It could be objected, however, that the postcentral gyrus masks were quite large, and signal differences within the principal areas of activation may therefore have been diluted.)

Second, while it is true that the key stimulus activated the postcentral gyrus more strongly than the other stimuli, the differences among the remaining clips were less pronounced. Consider, for instance, the light bulb, yarn, and plant stimuli: although the signal levels induced by these clips were similar, they were reliably discriminated by MVPA. Furthermore, if classification accuracy were determined mainly by differences in overall signal level, it would be difficult to explain why the 2 discriminations that did not yield significant prediction performance both involved the tennis ball clip, which induced an overall signal level quite a bit lower than all the other clips. We speculate, instead, that the tennis ball clip was not well discriminated from other stimuli because, due to the low overall signal it induced, it did not create a reliable “neural signature” in the primary somatosensory cortex (i.e., the signal-to-noise ratio of the somatosensory activity pattern was low). The light bulb, yarn, and plant clips, on the other hand, produced a higher overall signal level; the activity profiles they induced, although of similar amplitude, were therefore more distinct in their spatial pattern and discriminated more successfully by the classifier. Along the same lines, it could be argued that the key stimulus was so reliably distinguished from all other clips (Fig. 5) not because of differences in overall signal level but because the pattern it evoked was most distinct (i.e., had the highest signal-to-noise ratio). In keeping with this explanation, it has recently been shown in visual cortex that signals of higher amplitude lead to more robust MVPA classification (Smith et al. 2010).

Third, the observation that classifier performance may be partly due to differences in overall signal level does not challenge our conclusion that neural activity in primary somatosensory cortex correlates with the content of touch-implying visual stimuli. It is indeed remarkable that the key stimulus, although quite similar to the other clips in that it depicted the haptic exploration of an everyday object, would lead to more pronounced cross-modal activations. We speculate this finding may be due to the somewhat more complex finger movements depicted in this clip. Again, this would point to the significance of proprioceptive information for classifier performance.

In conclusion, while we cannot exclude that part of the classifier's prediction performance was due to overall differences in signal level induced by the video clips, this is unlikely to account for our results entirely and does not question our main conclusion.
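The logic of the mean-activity control and of the amplitude argument discussed in this section can be illustrated with a small simulation. The following is a minimal sketch on synthetic data, not the analysis pipeline used in this study: the nearest-centroid classifier, the voxel count, the noise level, and all function names are assumptions chosen for illustration. Two simulated conditions have identical mean activity across the mask but opposite spatial patterns, so a classifier given only the mean should perform near chance, whereas a classifier given the full pattern should succeed. Scaling the pattern amplitude relative to the noise then shows how a weaker signal yields a less reliable "neural signature."

```python
import random

def simulate_trials(pattern, amplitude, n_trials, rng, noise_sd=1.0):
    """Return n_trials noisy copies of amplitude * pattern (one list per trial)."""
    return [[amplitude * p + rng.gauss(0.0, noise_sd) for p in pattern]
            for _ in range(n_trials)]

def centroid(trials):
    """Voxel-wise average across trials."""
    n = len(trials)
    return [sum(col) / n for col in zip(*trials)]

def sq_dist(a, b):
    """Squared Euclidean distance between two feature vectors."""
    return sum((x - y) ** 2 for x, y in zip(a, b))

def nearest_centroid_accuracy(train_a, train_b, test_a, test_b):
    """Fit class centroids on the training trials; return test accuracy."""
    ca, cb = centroid(train_a), centroid(train_b)
    correct = sum(sq_dist(t, ca) < sq_dist(t, cb) for t in test_a)
    correct += sum(sq_dist(t, cb) < sq_dist(t, ca) for t in test_b)
    return correct / (len(test_a) + len(test_b))

def to_mean_feature(trials):
    """Collapse each trial to a single feature: its mean over all voxels."""
    return [[sum(t) / len(t)] for t in trials]

def compare(amplitude, n_voxels=20, n_trials=40, seed=0):
    """Return (pattern_accuracy, mean_only_accuracy) for one simulated mask."""
    rng = random.Random(seed)
    half = n_voxels // 2
    # Hypothetical conditions: same mean over the mask, opposite spatial layout.
    pattern_a = [1.0] * half + [-1.0] * half
    pattern_b = [-1.0] * half + [1.0] * half
    # Order: train A, train B, test A, test B.
    sets = [simulate_trials(p, amplitude, n_trials, rng)
            for p in (pattern_a, pattern_b, pattern_a, pattern_b)]
    pattern_acc = nearest_centroid_accuracy(*sets)
    mean_acc = nearest_centroid_accuracy(*(to_mean_feature(s) for s in sets))
    return pattern_acc, mean_acc

for amp in (0.1, 0.5, 1.0):
    p_acc, m_acc = compare(amp)
    print(f"amplitude={amp}: pattern accuracy={p_acc:.2f}, mean-only accuracy={m_acc:.2f}")
```

In this toy setting, the mean-only classifier has no usable information by construction, which is the idealized version of the control reported in the Results. Real data would of course combine amplitude and pattern differences, which is why the two cannot be fully separated in the discussion above.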

Classifier Performance Outside the Primary Somatosensory Cortex

Given the visual nature of our stimuli, it came as no surprise that the classifier performed well in the probabilistic mask of primary visual cortex; nonetheless, the level of accuracy (greater than 0.9 on average) is impressive. This result can serve as a validation of our methods. By contrast, the poor prediction performance in a number of brain regions outside the somatosensory cortices suggests that information about the video clips was not distributed indiscriminately across the brain but was present specifically in those areas that are concerned with the somatosensory content of the clips.

Of particular interest is the finding that classifier performance in secondary somatosensory cortex was higher than in SI. This is not unexpected, as activation of this area is found more consistently in action and touch observation studies than activation of SI (Keysers et al. 2004; Blakemore et al. 2005; Ebisch et al. 2008; Schaefer et al. 2009; Gazzola and Keysers 2009; for a review, see Keysers et al. 2010). As mentioned in the Materials and Methods, our probabilistic SII mask was centered in subregion OP1; this decision was partly informed by the fact that 2 of the studies just cited reported activity specifically in this area (Keysers et al. 2004; Schaefer et al. 2009). A possible explanation for the higher prediction performance in SII, as compared with SI, is that the somatosensory information contained in the video clips is projected from the visual cortices to SI via SII (and possibly other intermediaries before SII). In other words, while tactile and proprioceptive signals usually pass through the hierarchy of the somatosensory system in a bottom-up direction, the sequence of events would be reversed when relevant information is not received by the brain via the “traditional” (i.e., somatosensory) channel but via the visual system. A question that naturally arises from this speculation is how similar the patterns established by top-down projections during the observation of the video clips are to the patterns that would be induced by bottom-up projections if the subjects explored the depicted objects themselves. Our finding that the top-down induced patterns are content specific renders a similarity plausible, but it certainly is no proof. Future experiments could address this question by attempting to classify video clips such as ours after training a classifier on the somatosensory activity patterns recorded while subjects explore similar objects themselves. Presumably, the subjects would have to be instructed to mimic as closely as possible the hand actions depicted in the videos when exploring the objects, as this would increase the likelihood that the induced activity patterns match each other.
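The cross-condition design proposed above (train on patterns from active exploration, test on patterns from observation) can be sketched as follows. This is a purely hypothetical simulation on synthetic data: the `similarity` parameter, which scales how strongly the simulated observation patterns share the exploration templates, is an assumption introduced for illustration and not an estimate of anything measured in this study. The nearest-centroid classifier and all names are likewise illustrative choices.

```python
import random

def noisy(template, scale, rng, noise_sd=1.0):
    """One simulated trial: scale * template plus Gaussian noise per voxel."""
    return [scale * v + rng.gauss(0.0, noise_sd) for v in template]

def centroid(trials):
    """Voxel-wise average across trials."""
    n = len(trials)
    return [sum(col) / n for col in zip(*trials)]

def sq_dist(a, b):
    """Squared Euclidean distance between two patterns."""
    return sum((x - y) ** 2 for x, y in zip(a, b))

def cross_decode(similarity, n_objects=5, n_voxels=30, n_trials=30, seed=1):
    """Train on simulated 'exploration' trials, test on 'observation' trials.

    similarity = 1.0 means observation evokes the same template as exploration;
    similarity = 0.0 means observation trials carry no object information.
    """
    rng = random.Random(seed)
    # Hypothetical object-specific templates, one per explored object.
    templates = [[rng.gauss(0.0, 1.0) for _ in range(n_voxels)]
                 for _ in range(n_objects)]
    # Training set: exploration trials only.
    centroids = [centroid([noisy(t, 1.0, rng) for _ in range(n_trials)])
                 for t in templates]
    # Test set: observation trials only, never seen during training.
    correct = 0
    for label, template in enumerate(templates):
        for _ in range(n_trials):
            trial = noisy(template, similarity, rng)
            predicted = min(range(n_objects),
                            key=lambda k: sq_dist(trial, centroids[k]))
            correct += predicted == label
    return correct / (n_objects * n_trials)

for s in (0.0, 0.5, 1.0):
    print(f"similarity={s}: cross-condition accuracy={cross_decode(s):.2f}")
```

Above-chance transfer in such a design would indicate that the two sets of patterns share structure; chance-level transfer would not by itself rule out content specificity within either condition, since each could still be decodable on its own.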

Conclusions

The present study shows that the perception of purely visual stimuli that imply tactile and proprioceptive information activates the primary somatosensory cortex in a content-specific fashion. This is in keeping with earlier evidence suggesting content-specific representations in primary visual and early auditory cortices in the absence of modality-specific sensory stimulation. Together, these findings suggest that even the earliest stages of the sensory processing chains are not concerned with intramodal sensory stimulation alone; rather, they appear to be activated whenever the brain processes content relevant to the respective modality, independently of the sensory channel that triggered the representation of that content.

Supplementary Material

Supplementary Video Clips 1–5, Figures 1–4, and Table 1 can be found at: http://www.cercor.oxfordjournals.org/

Funding

Mathers Foundation (to A.D. and H.D.); the National Institutes of Health (to A.D. and H.D.; grant number 5P50NS019632-27).

Acknowledgments

Author contributions: K.M. conceived and designed the study with input from all coauthors, traced the anatomical masks, conducted fMRI, participated in data analysis, and wrote the manuscript; J.T.K. advised on study design, conducted fMRI, performed the data analysis, and wrote part of the Materials and Methods section; R.E. prepared the video clips, wrote the stimulus presentation script, and conducted fMRI; H.D. participated in data analysis and provided conceptual advice; A.D. supervised the project and advised K.M. on the preparation of the manuscript. All authors discussed the results and their implications at all stages. Conflict of Interest: None declared.

References

- Avikainen S, Forss N, Hari R. Modulated activation of the human SI and SII cortices during observation of hand actions. Neuroimage. 2002;15:640–646. doi: 10.1006/nimg.2001.1029.

- Beauchamp MS, LaConte S, Yasar N. Distributed representation of single touches in somatosensory and visual cortex. Human Brain Mapp. 2009;30:3163–3171. doi: 10.1002/hbm.20735.

- Blakemore S-J, Bristow D, Bird G, Frith C, Ward J. Somatosensory activations during the observation of touch and a case of vision-touch synaesthesia. Brain. 2005;128:1571–1583. doi: 10.1093/brain/awh500.

- Brainard DH. The psychophysics toolbox. Spatial Vis. 1997;10:433–436.

- Calvert GA, Bullmore ET, Brammer MJ, Campbell R, Williams SCR, McGuire PK, Woodruff PWR, Iversen SD, David AS. Activation of auditory cortex during silent lipreading. Science. 1997;276:593–596. doi: 10.1126/science.276.5312.593.

- Clithero JA, McKell Carter R, Huettel SA. Local pattern classification differentiates processes of economic valuation. Neuroimage. 2009;45:1329–1338. doi: 10.1016/j.neuroimage.2008.12.074.

- Ebisch SJH, Perrucci MG, Ferretti A, Del Gratta C, Romani GL, Gallese V. The sense of touch: embodied stimulation in a visuotactile mirroring mechanism for observed animate or inanimate touch. J Cogn Neurosci. 2008;20:1611–1623. doi: 10.1162/jocn.2008.20111.

- Eickhoff SB, Amunts K, Mohlberg H, Zilles K. The human parietal operculum. II. Stereotaxic maps and correlation with functional imaging results. Cereb Cortex. 2006;16:268–279. doi: 10.1093/cercor/bhi106.

- Evangeliou MN, Raos V, Galletti C, Savaki HE. Functional imaging of the parietal cortex during action execution and observation. Cereb Cortex. 2009;19:624–639. doi: 10.1093/cercor/bhn116.

- Gazzola V, Keysers C. The observation and execution of actions share motor and somatosensory voxels in all tested subjects: single-subject analyses of unsmoothed fMRI data. Cereb Cortex. 2009;19:1239–1255. doi: 10.1093/cercor/bhn181.

- González J, Barros-Loscertales A, Pulvermüller F, Meseguer V, Sanjuán A, Belloch V, Avila C. Reading cinnamon activates olfactory brain regions. Neuroimage. 2006;32:906–912. doi: 10.1016/j.neuroimage.2006.03.037.

- Hanke M, Halchenko YO, Sederberg PB, Hanson SJ, Haxby JV, Pollmann S. PyMVPA: a Python toolbox for multivariate pattern analysis of fMRI data. Neuroinformatics. 2009;7:37–53. doi: 10.1007/s12021-008-9041-y.

- Harrison SA, Tong F. Decoding reveals the contents of visual working memory in early visual areas. Nature. 2009;458:632–635. doi: 10.1038/nature07832.

- Haynes J-D, Rees G. Decoding mental states from brain activity in humans. Nat Rev Neurosci. 2006;7:523–534. doi: 10.1038/nrn1931.

- Jenkinson M, Smith S. A global optimisation method for robust affine registration of brain images. Med Image Anal. 2001;5:143–156. doi: 10.1016/s1361-8415(01)00036-6.

- Jenkinson M, Bannister P, Brady M, Smith S. Improved optimisation for the robust and accurate linear registration and motion correction of brain images. Neuroimage. 2002;17:825–841. doi: 10.1016/s1053-8119(02)91132-8.

- Kamitani Y, Tong F. Decoding the visual and subjective contents of the human brain. Nat Neurosci. 2005;8:679–685. doi: 10.1038/nn1444.

- Keysers C, Wicker B, Gazzola V, Anton J-L, Fogassi L, Gallese V. A touching sight: SII/PV activation during the observation and experience of touch. Neuron. 2004;42:335–346. doi: 10.1016/s0896-6273(04)00156-4.

- Keysers C, Kaas JH, Gazzola V. Somatosensation in social perception. Nat Rev Neurosci. 2010;11:417–428. doi: 10.1038/nrn2833.

- Kosslyn SM, Thompson WL, Kim IJ, Alpert NM. Topographical representations of mental images in primary visual cortex. Nature. 1995;378:496–498. doi: 10.1038/378496a0.

- Kraemer DJM, Macrae CN, Green AE, Kelley WM. Sound of silence activates auditory cortex. Nature. 2005;434:158. doi: 10.1038/434158a.

- Kriegeskorte N, Bandettini P. Analyzing for information, not activation, to exploit high-resolution fMRI. Neuroimage. 2007;38:649–662. doi: 10.1016/j.neuroimage.2007.02.022.

- Le Bihan D, Turner R, Zeffiro TA, Cuénod CA, Jezzard P, Bonnerot P. Activation of human primary visual cortex during visual recall: a magnetic resonance imaging study. Proc Natl Acad Sci U S A. 1993;90:11802–11805. doi: 10.1073/pnas.90.24.11802.

- Lemus L, Hernández A, Luna R, Zainos A, Romo R. Do sensory cortices process more than one sensory modality during perceptual judgments? Neuron. 2010;67:335–348. doi: 10.1016/j.neuron.2010.06.015.

- Meyer K, Kaplan JT, Essex R, Webber C, Damasio H, Damasio A. Predicting visual stimuli on the basis of activity in auditory cortices. Nat Neurosci. 2010;13:667–668. doi: 10.1038/nn.2533.

- Norman KA, Polyn SM, Detre GJ, Haxby JV. Beyond mind-reading: multi-voxel pattern analysis of fMRI data. Trends Cogn Sci. 2006;10:424–430. doi: 10.1016/j.tics.2006.07.005.

- Oouchida Y, Okada T, Nakashima T, Matsumura M, Sadato N, Naito E. Your hand movements in my somatosensory cortex: a visuo-kinesthetic function in human area 2. Neuroreport. 2004;15:2019–2023. doi: 10.1097/00001756-200409150-00005.

- Pierno AC, Tubaldi F, Turella L, Grossi P, Barachino L, Gallo P, Castiello U. Neurofunctional modulation of brain regions by the observation of pointing and grasping actions. Cereb Cortex. 2009;19:367–374. doi: 10.1093/cercor/bhn089.

- Pihko E, Nangini C, Jousmäki V, Hari R. Observing touch activates human primary somatosensory cortex. Eur J Neurosci. 2010;31:1836–1843. doi: 10.1111/j.1460-9568.2010.07192.x.

- Raos V, Evangeliou MN, Savaki HE. Mental simulation of action in the service of action perception. J Neurosci. 2007;27:12675–12683. doi: 10.1523/JNEUROSCI.2988-07.2007.

- Reed CL, Shoham S, Halgren E. Neural substrates of tactile object recognition: an fMRI study. Human Brain Mapp. 2004;21:236–246. doi: 10.1002/hbm.10162.

- Rissman J, Greely HT, Wagner AD. Detecting individual memories through the neural decoding of memory states and past experience. Proc Natl Acad Sci U S A. 2010;107:9849–9854. doi: 10.1073/pnas.1001028107.

- Schaefer M, Xu B, Flor H, Cohen LG. Effects of different viewing perspectives on somatosensory activations during observation of touch. Human Brain Mapp. 2009;30:2722–2730. doi: 10.1002/hbm.20701.

- Serences JT, Ester EF, Vogel EK, Awh E. Stimulus-specific delay activity in human primary visual cortex. Psychol Sci. 2009;20:207–214. doi: 10.1111/j.1467-9280.2009.02276.x.

- Slotnick SD, Thompson WL, Kosslyn SM. Visual mental imagery induces retinotopically organized activation of early visual areas. Cereb Cortex. 2005;15:1570–1583. doi: 10.1093/cercor/bhi035.

- Smith SM. Fast robust automated brain extraction. Human Brain Mapp. 2002;17:143–155. doi: 10.1002/hbm.10062.

- Smith SM, Jenkinson M, Woolrich MW, Beckmann CF, Behrens TEJ, Johansen-Berg H, Bannister PR, De Luca M, Drobnjak I, Flitney DE, et al. Advances in functional and structural MR image analysis and implementation as FSL. Neuroimage. 2004;23(Suppl 1):208–219. doi: 10.1016/j.neuroimage.2004.07.051.

- Smith AT, Kosillo P, Williams AL. The confounding effect of response amplitude on MVPA performance measures. Neuroimage. Forthcoming 2010. doi: 10.1016/j.neuroimage.2010.05.079; available online 4 June 2010.

- Thirion B, Duchesnay E, Hubbard E, Dubois J, Poline J-B, Lebihan D, Dehaene S. Inverse retinotopy: inferring the visual content of images from brain activation patterns. Neuroimage. 2006;33:1104–1116. doi: 10.1016/j.neuroimage.2006.06.062.

- Woolrich MW, Ripley BD, Brady M, Smith SM. Temporal autocorrelation in univariate linear modeling of FMRI data. Neuroimage. 2001;14:1370–1386. doi: 10.1006/nimg.2001.0931.

- Yoo S-S, Lee CU, Choi BG. Human brain mapping of auditory imagery: event-related functional MRI study. Neuroreport. 2001;12:3045–3049. doi: 10.1097/00001756-200110080-00013.

- Yoo S-S, Freeman DK, McCarthy JJ, Jolesz FA. Neural substrates of tactile imagery: a functional MRI study. Neuroreport. 2003;14:581–585. doi: 10.1097/00001756-200303240-00011.
