Abstract

Purpose

Even though pay-for-performance programs are being rapidly implemented, little is known about how patient complexity affects practice-level performance assessment in rural settings. We sought to determine the association between patient complexity and practice-level performance in the rural United States.

Basic procedures

Using baseline data from a trial aimed at improving diabetes care, we determined factors associated with a practice’s proportion of patients having controlled diabetes (hemoglobin A1c ≤7%): patient socioeconomic factors, clinical factors, difficulty with self-testing of blood glucose, and difficulty with keeping appointments. We used linear regression to adjust the practice-level proportion of patients with controlled A1c for these factors. We compared practice rankings using observed and expected performance and classified practices into hypothetical pay-for-performance categories.

Main Findings

Rural primary care practices (n = 135) in 11 southeastern states provided information for 1641 patients with diabetes. For practices in the best quartile of observed control, 76.1% of patients had controlled diabetes vs 19.3% of patients in the worst quartile. After controlling for other variables, the proportion of patients with controlled diabetes was 10 percentage points lower in practices whose patients had the greatest difficulty with either self-testing or appointment keeping (p < .05 for both). Practice rankings based on observed and expected proportions of A1c control showed only moderate agreement in pay-for-performance categories (κ = 0.47; 95% confidence interval, 0.32–0.56; p < .001).

Principal Conclusions

Basing public reporting and resource allocation on quality assessment that does not account for patient characteristics may further harm this vulnerable group of patients and physicians.

Keywords: diabetes, quality of care, primary care

The quality of care delivered to patients with chronic disease is now increasingly discussed, measured, and managed. For example, performance measures such as the proportion of diabetes patients with uncontrolled hemoglobin A1c (A1c), which reflects glycemic control, are included in public reporting and pay-for-performance programs designed to improve overall care.1 There is some evidence such programs may lead to population-based improvements in diabetes control.2 Similarly, at the individual physician level, performance feedback on quality measures can spur local quality improvement initiatives to improve diabetes care.

However, performance measures based on achievement of risk factor control may not provide a balanced assessment of quality from the perspective of the practicing physician.3–5 Several reports have demonstrated that accounting for patient factors such as age, comorbidity, severity of illness, income, and education dramatically changes practice rankings, creating important shifts in which practices are considered high or low performers.3,5,6 In particular, recent studies in the Veterans Affairs (VA) health care system found that factors only remotely linked to quality of care, such as marital status, were strongly associated with diabetes control.3–5 To date, the quality measurement field has not adequately grappled with patient complexity, even as widespread implementation of performance improvement programs proceeds at a rapid pace.

Patient complexity, as proposed in the vector model of complexity, goes beyond a count of comorbid conditions to describe the multiple influences on a patient’s health.7 Safford et al note that of 2 patients who are clinically similar with regard to disease processes, the one who lacks socioeconomic resources, cultural networks, environmental support, and healthy behaviors would be considered more complex. Current performance assessment attempts case-mix adjustment based on certain patient-level factors but falls short in capturing how complexity affects disease management.

The effect of patient complexity on diabetes performance assessment, and the limitations of that assessment, may be particularly important in rural areas. Because several factors make accessing high-quality medical care more difficult, rural residents have been designated as a vulnerable population in the National Healthcare Disparities Report.8 Poverty is common in rural areas, creating barriers to diabetes self-care behaviors such as self-testing of blood glucose, which requires expensive supplies. In addition, the long travel distances faced by rural residents may be a formidable barrier to receiving timely care.9 How these factors, which go beyond standard case-mix adjustment, influence quality assessment of rural practices remains unknown.

Therefore, we sought to better understand how patient complexity influences the performance profiles of physicians managing patients with diabetes in rural settings. More specifically, we examined the association of sociodemographic, clinical, and behavioral patient characteristics with diabetes control at the practice level. Because poverty and distance are such important barriers in rural settings, we investigated how difficulties with self-testing and appointment keeping influenced practice performance and ranking.

METHODS

Study Design and Setting

We report on baseline data from the Rural Diabetes Online Care study, a group-randomized trial of an Internet-based educational intervention aimed at improving diabetes care delivered by rural primary care providers. The results of the trial are not presented here. The protocol was approved by the University of Alabama at Birmingham’s institutional review board (ClinicalTrials.gov identifier: NCT00403091).

Participating physicians were family, general, and internal medicine physicians located in rural areas of 11 southeastern states in the United States (Alabama, Arkansas, Florida, Georgia, Kentucky, Mississippi, Missouri, North Carolina, South Carolina, Tennessee, West Virginia). Rural areas were identified by the standard definition of the US Office of Management and Budget as those not included in metropolitan statistical areas. Practices were recruited by mailed and faxed materials, presentations at professional meetings, physician-to-physician telephone conversations, and office visits. Details of the recruitment process have been published previously.10 Interested primary care physicians were invited to enroll in the study by visiting the study Web site. After providing informed consent online, physicians were randomized to either the intervention or the control arm of the study. Only 1 physician per practice was allowed to submit patient records for review.

At baseline, before the trial started, participating physicians provided the study team with 10 to 15 patient records of the most recently seen consecutive patients with diabetes who met eligibility criteria: at least 2 office visits; at least 1 year of observation data in the medical record; and no metastatic malignancy, dementia, or hospice care. Records were blinded and sent to the study center, or abstractors were permitted to abstract the records on site.

Data abstraction was performed by blinded, trained abstractors using a structured medical record review instrument built with the publicly available MedQuest software. Quality control conducted on 5% of records showed agreement greater than 90%. Baseline patient data from participating practices were collected from September 2006 through July 2008.

Measures

The most recent A1c level recorded in the patient chart was used to assess diabetes control. To organize the patient factors abstracted from the medical records that could influence A1c, we used the vector model of patient complexity.7 Factors available to characterize the biological axis were age, obesity (either a diagnosis in the chart or body mass index [BMI] ≥30 kg/m2), the use of insulin (a proxy for diabetes severity), and diabetes complications (nephropathy, retinopathy, or neuropathy). Factors available to characterize the socioeconomic axis included insurance status, specifically Medicaid insurance. The cultural axis was represented by race/ethnicity as it was recorded in the patient’s chart. Because multiple barriers to medical care in the rural setting overlap, we did not distinguish between the environmental and behavioral axes and instead represented both domains with 2 related variables. First, we defined difficulty with attending appointments as any documentation in the record that the patient had missed appointments. Second, we defined difficulty with self-testing as any comment in the medical record indicating the physician’s perception that the patient was not following recommendations to self-test. We note that this variable also reflects the socioeconomic axis because testing supplies are costly, and poverty is a common barrier in rural America.
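The mapping from abstracted chart fields to the complexity-axis indicators described above can be sketched in Python. This is a minimal illustration under stated assumptions: the `PatientRecord` fields and the race encoding are hypothetical stand-ins (the actual MedQuest abstraction fields are not described here), and a missing A1c is returned as `None` so that such records can be excluded from analyses requiring complete data.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class PatientRecord:
    # Hypothetical abstraction fields, not the study's actual instrument.
    age: int
    a1c: Optional[float]  # most recent A1c, %
    bmi: Optional[float]  # kg/m2
    obesity_diagnosis: bool
    on_insulin: bool
    has_nephropathy: bool
    has_retinopathy: bool
    has_neuropathy: bool
    medicaid: bool
    race: str  # hypothetical encoding of race/ethnicity as recorded in the chart
    missed_appointment_noted: bool
    self_testing_problem_noted: bool


def derive_indicators(p: PatientRecord) -> dict:
    """Map one abstracted record onto the vector-model indicators."""
    return {
        # Outcome: controlled diabetes = most recent A1c <= 7%; None if missing.
        "controlled": None if p.a1c is None else p.a1c <= 7.0,
        # Biological axis
        "age_over_65": p.age > 65,
        "obese": p.obesity_diagnosis or (p.bmi is not None and p.bmi >= 30.0),
        "insulin": p.on_insulin,
        "complication": p.has_nephropathy or p.has_retinopathy or p.has_neuropathy,
        # Socioeconomic axis
        "medicaid": p.medicaid,
        # Cultural axis
        "african_american": p.race == "African American",
        # Combined environmental/behavioral axes (any chart documentation)
        "appointment_difficulty": p.missed_appointment_noted,
        "self_testing_difficulty": p.self_testing_problem_noted,
    }
```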

After examining all variables at the patient level, we constructed practice-level performance measures. The main outcome for this study was the proportion of patients with controlled diabetes (hemoglobin A1c ≤7%) at the practice level. We chose this metric based on the American Diabetes Association standards published during the design phase of the study.11 In addition, we assessed for each practice the proportion of patients who: were older than 65 years, were obese, were on insulin, had diabetes complications (neuropathy, nephropathy, retinopathy), were on Medicaid, and were African American.
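A sketch of the step from patient-level indicators to practice-level proportions follows. The row layout (a `practice_id` key plus one boolean per indicator) and the indicator names are hypothetical, and records with missing values are assumed to have been removed beforehand.

```python
from collections import defaultdict
from typing import Dict, Iterable, List, Mapping

# Hypothetical indicator names; each patient row carries one boolean per name.
INDICATORS = ("controlled", "age_over_65", "obese", "insulin",
              "complication", "medicaid", "african_american")


def practice_proportions(rows: Iterable[Mapping]) -> Dict[str, Dict[str, float]]:
    """Aggregate patient-level indicators into per-practice proportions."""
    by_practice: Dict[str, List[Mapping]] = defaultdict(list)
    for row in rows:
        by_practice[row["practice_id"]].append(row)

    summary: Dict[str, Dict[str, float]] = {}
    for practice_id, patients in by_practice.items():
        n = len(patients)
        # Share of this practice's patients for whom each indicator is true.
        summary[practice_id] = {
            name: sum(bool(p[name]) for p in patients) / n for name in INDICATORS
        }
        summary[practice_id]["n_records"] = n
    return summary
```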

On review of the practice-level measures, we found that the variables reflecting difficulty with self-testing and difficulty keeping appointments had a nonlinear relationship with the proportion of patients within the practices having controlled diabetes. Thus, to better account for self-testing and appointment keeping at the practice level, we created new dichotomous indicators for these variables. More specifically, we ranked all practices from highest to lowest by the proportion of patient records indicating difficulty with self-testing and, separately, by the proportion indicating difficulty keeping appointments. Practices in the highest one-third on each variable were characterized as having practice-level difficulty with self-testing or with keeping appointments, respectively. All others were characterized as having no difficulty.
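The top-one-third dichotomization can be sketched as follows. Two details are assumptions not stated in the text: the one-third cutoff is rounded up when the number of practices is not divisible by 3, and ties at the cutoff are broken by input order (Python's `sorted` is stable).

```python
import math
from typing import Dict


def top_third_flags(difficulty: Dict[str, float]) -> Dict[str, bool]:
    """Flag practices in the highest one-third by proportion of patients with a difficulty.

    Assumed (not from the study): the cutoff is rounded up, and ties at the
    cutoff are broken by input order.
    """
    ranked = sorted(difficulty, key=lambda pid: difficulty[pid], reverse=True)
    cutoff = math.ceil(len(ranked) / 3)
    top = set(ranked[:cutoff])
    return {pid: pid in top for pid in difficulty}
```

Applied once to the self-testing proportions and once to the appointment-keeping proportions, this yields the 2 dichotomous practice-level indicators.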

Statistical Approach

For all main comparisons, the unit of analysis was the physician’s practice. For the bivariate comparisons, we classified physicians’ practices according to quartile of diabetes control. Practices in the first quartile had the most patients with hemoglobin A1c of 7% or less, and therefore the best glycemic control, and practices in the fourth quartile had the fewest controlled patients. We used nonparametric trend tests to compare practice characteristics across quartiles of control.
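The quartile classification and a trend test across quartiles can be sketched as below. The text does not name the specific nonparametric trend test; we assume Cuzick's test, which is what Stata's `nptrend` command implements, and for brevity omit its correction for ties, so p values will be slightly conservative when tied values are present. Function names are hypothetical.

```python
import math
from typing import List, Sequence, Tuple


def quartile_of_control(control: Sequence[float]) -> List[int]:
    """Quartile 1 = highest proportion of patients with A1c <= 7% (best control)."""
    n = len(control)
    order = sorted(range(n), key=lambda i: control[i], reverse=True)
    quartiles = [0] * n
    for position, idx in enumerate(order):
        quartiles[idx] = position * 4 // n + 1
    return quartiles


def midranks(values: Sequence[float]) -> List[float]:
    """1-based ranks, with tied values sharing the average of their ranks."""
    order = sorted(range(len(values)), key=lambda i: values[i])
    ranks = [0.0] * len(values)
    start = 0
    while start < len(order):
        end = start
        while end + 1 < len(order) and values[order[end + 1]] == values[order[start]]:
            end += 1
        for k in range(start, end + 1):
            ranks[order[k]] = (start + end) / 2 + 1
        start = end + 1
    return ranks


def cuzick_trend(values: Sequence[float], groups: Sequence[int]) -> Tuple[float, float]:
    """Cuzick's test for trend across ordered groups; returns (z, two-sided p).

    values: a practice characteristic (e.g., proportion using insulin)
    groups: ordered group scores (e.g., quartile 1..4)
    No tie correction is applied.
    """
    n = len(values)
    ranks = midranks(values)
    t = sum_ln = sum_l2n = 0.0
    for score in sorted(set(groups)):
        group_ranks = [r for r, g in zip(ranks, groups) if g == score]
        t += score * sum(group_ranks)
        sum_ln += score * len(group_ranks)
        sum_l2n += score ** 2 * len(group_ranks)
    expected = (n + 1) / 2 * sum_ln
    variance = (n + 1) / 12 * (n * sum_l2n - sum_ln ** 2)
    z = (t - expected) / math.sqrt(variance)
    return z, math.erfc(abs(z) / math.sqrt(2))
```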

The proportion of patients with controlled diabetes at the practice level was the dependent variable. After determining that the distribution of the dependent variable approached normality, we developed a linear regression model. Residuals from the model satisfied tests of independence, normality, and equal variance. For modeling, only practices that had submitted at least 5 patient records with complete data available on all covariates were included in the analysis, resulting in 89 practices being available for the ranking comparisons.
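A minimal ordinary least squares sketch of the practice-level model, using NumPy, follows. The function name, the column layout of `X`, and the `min_records` argument are hypothetical; the sketch applies the at-least-5-complete-records rule before fitting, and the fitted values it returns are the practice-level expected proportions that a reranking step would use.

```python
import numpy as np


def fit_practice_model(y, X, n_complete, min_records=5):
    """OLS of practice-level proportion controlled on practice-level covariates.

    y: proportion of patients with A1c <= 7%, one value per practice
    X: 2-D array, one row per practice, one column per covariate
    n_complete: number of records with complete covariate data per practice
    """
    y = np.asarray(y, dtype=float)
    X = np.asarray(X, dtype=float)
    keep = np.asarray(n_complete) >= min_records      # inclusion rule
    y, X = y[keep], X[keep]
    design = np.column_stack([np.ones(len(y)), X])    # intercept + covariates
    beta, *_ = np.linalg.lstsq(design, y, rcond=None)
    fitted = design @ beta                            # expected proportion controlled
    resid = y - fitted
    n, k = design.shape
    r2 = 1.0 - (resid @ resid) / np.sum((y - y.mean()) ** 2)
    adj_r2 = 1.0 - (1.0 - r2) * (n - 1) / (n - k)
    return beta, fitted, adj_r2, keep
```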

We conducted several sensitivity analyses to determine if our findings were dependent upon the modeling strategy. More specifically, we developed models with mean A1c at the practice level as the dependent variable. In addition, we examined the effect of relaxing the criterion for diabetes control to A1c of 8% or less. Finally, we constructed a model at the patient level, with continuous A1c as the dependent variable, the same covariates as in the practice-level model, and accounting for the clustering of patients within physicians using generalized linear latent mixed models. We elected not to use these analyses as the primary analysis since performance is assessed at the physician level.

Next, we compared physician practices by ranking, similar to the approach used in quality reporting.12 The physician practice with the highest proportion of patients with A1c of 7% or less was ranked first, and the practice with the lowest proportion was ranked last (observed rankings). We then calculated the expected proportion of patients with A1c of 7% or less within each practice, accounting for all variables in the full model described above, and reranked the practices (expected rankings). Finally, to illustrate the potential implications of implementing a financial incentive program without adjusting for patient complexity, we classified practices into 3 hypothetical pay-for-performance categories: best 2 deciles (eligible for a bonus), middle 6 deciles (no financial consequence), and worst 2 deciles (possible penalty).13 We examined change in practice rank from the observed to expected rankings both graphically and with the κ statistic for the 3 pay-for-performance categories. A κ value of 0 to 0.19 is considered poor agreement; 0.20 to 0.39, fair; 0.40 to 0.59, moderate; 0.60 to 0.79, substantial; and 0.80 to 1, almost perfect.14 All analyses were performed using Stata version 10 (StataCorp, College Station, Texas).
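The ranking, categorization, and agreement steps can be sketched as follows. Several details are assumptions rather than the study's documented method: rank 1 is the highest proportion and ties are broken by input order; the best 2 deciles are ranks 1 through ceil(0.2n) and the worst 2 deciles are ranks above ceil(0.8n) (with 89 practices this happens to give 18 bonus and 17 penalty practices, the counts reported in the Results); and agreement is measured with unweighted Cohen's kappa, since the text does not say whether a weighted κ was used.

```python
from typing import List, Sequence


def rank_desc(values: Sequence[float]) -> List[int]:
    """Rank 1 = highest proportion controlled; ties broken by input order."""
    order = sorted(range(len(values)), key=lambda i: values[i], reverse=True)
    ranks = [0] * len(values)
    for rank, idx in enumerate(order, start=1):
        ranks[idx] = rank
    return ranks


def p4p_category(rank: int, n: int) -> str:
    """Assumed cutoffs: best 2 deciles -> bonus, worst 2 deciles -> penalty."""
    bonus_cut = (2 * n + 9) // 10    # ceil(0.2 * n) in integer arithmetic
    penalty_cut = (8 * n + 9) // 10  # ranks above ceil(0.8 * n) are penalized
    if rank <= bonus_cut:
        return "bonus"
    if rank > penalty_cut:
        return "penalty"
    return "neutral"


def cohens_kappa(a: Sequence[str], b: Sequence[str]) -> float:
    """Unweighted Cohen's kappa for two categorical ratings of the same practices."""
    n = len(a)
    categories = set(a) | set(b)
    observed = sum(x == y for x, y in zip(a, b)) / n
    chance = sum((list(a).count(c) / n) * (list(b).count(c) / n) for c in categories)
    return (observed - chance) / (1 - chance)  # undefined if chance agreement is 1


def agreement(observed_prop: Sequence[float], expected_prop: Sequence[float]) -> float:
    """Kappa between pay-for-performance categories from observed vs expected ranks."""
    n = len(observed_prop)
    obs_cat = [p4p_category(r, n) for r in rank_desc(observed_prop)]
    exp_cat = [p4p_category(r, n) for r in rank_desc(expected_prop)]
    return cohens_kappa(obs_cat, exp_cat)
```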

RESULTS

Overall, we analyzed data from 135 study physicians’ practices and 1641 of their patients receiving primary care for diabetes. The mean number of records per practice was 12.2 (SD 2.9). The average practice had 48.3% of patients with a hemoglobin A1c of 7% or less (Table 1). However, rates of diabetes control varied dramatically from the worst (19.3%) to the best quartile (76.1%) of practice control. Likewise, there was important variability in patient characteristics across quartiles of practice control. For example, practices in the best quartile of control had more patients aged at least 65 years (p = .03). Practices in the worst quartile of control had higher proportions of patients on insulin and more African Americans (p < .01 and p = .02, respectively). Difficulties with self-testing and keeping appointments were also significantly related to quartile of diabetes control. In both instances, practices in the worst quartile of control had a higher proportion of patients experiencing difficulty with these activities (p = .02 and p < .01, respectively).

Table 1.

Characteristics of 135 Rural Primary Care Practices in the United States, Overall and by Quartile of A1c Control (Proportion of Diabetes Patients With A1c ≤7%), 2006–2008

| Practice-level Characteristics | All Practices, Mean (Range) | Quartile 1 (Best) | Quartile 2 | Quartile 3 | Quartile 4 (Worst) | Pᵃ |
|---|---|---|---|---|---|---|
| Mean practice-level A1c, % | 7.5 (5.9–13.9) | 6.6 | 7.1 | 7.7 | 8.6 | |
| Patients with A1c controlled ≤7%, % | 48.3 (0–93) | 76.1 | 55.7 | 40.4 | 19.3 | |
| Patients aged >65 y, % | 38.9 (0–100) | 45.0 | 39.1 | 35.9 | 35.0 | .03 |
| Patients using insulin, % | 27.2 (0–100) | 21.0 | 23.3 | 29.9 | 35.4 | <.01 |
| Patients with obesity,ᵇ % | 38.8 (0–100) | 41.7 | 37.7 | 33.9 | 40.9 | .45 |
| Patients with diabetes complications,ᶜ % | 22.4 (0–67) | 21.9 | 22.8 | 23.2 | 21.7 | .48 |
| Patients with Medicaid insurance, % | 10.8 (0–73) | 9.7 | 10.7 | 11.7 | 11.3 | .75 |
| African American patients, % | 26.8 (0–100) | 14.9 | 31.1 | 22.4 | 36.8 | .02 |
| Patients having difficulty with self-testing,ᵈ % | 31.3 (0–100) | 25.8 | 23.7 | 25.0 | 50.0 | .02 |
| Patients having difficulty with keeping appointments,ᵈ % | 32.8 (0–100) | 14.7 | 34.2 | 32.1 | 50.0 | <.01 |

ᵃ P values for differences among quartiles are based on a nonparametric test for linear trend.

ᵇ Obesity = body mass index ≥30 kg/m² or clinical diagnosis.

ᶜ Diabetes complications: retinopathy, neuropathy, or nephropathy.

ᵈ The highest one-third of practices reporting the most patients with difficulties self-testing or appointment keeping, compared with the other practices.

The results of the linear regression analysis are shown in Table 2. In the model, having more patients aged at least 65 years was associated with better practice-level control, while having more patients using insulin was a marker for worse practice-level control. After controlling for other variables, practices with the greatest proportion of patients having difficulty with either self-testing or appointment keeping had predicted levels of control that were 10 percentage points lower.

Table 2.

Linear Regression Results for Practice-Level Diabetes Control (Proportion of Patients with A1c ≤7%) Among 135 Rural Primary Care Practices, 2006–2008

| Practice-level Characteristics | Model β Coefficient (95% CI) |
|---|---|
| Patients aged >65 y, % | 0.27 (0.09 to 0.47) |
| Patients using insulin, % | −0.38 (−0.57 to −0.20) |
| Patients with obesity,ᵃ % | 0.08 (−0.08 to 0.23) |
| Patients with diabetes complications,ᵇ % | 0.03 (−0.21 to 0.26) |
| Patients with Medicaid insurance, % | 0.07 (−0.15 to 0.29) |
| African American patients, % | −0.08 (−0.20 to 0.04) |
| Patients having difficulty with self-testing,ᶜ % | −0.10 (−0.18 to −0.02) |
| Patients having difficulty with keeping appointments,ᶜ % | −0.10 (−0.18 to −0.02) |
| Adjusted R² | 0.25 |

ᵃ Obesity = body mass index ≥30 kg/m² or clinical diagnosis.

ᵇ Diabetes complications: retinopathy, neuropathy, or nephropathy.

ᶜ The highest one-third of practices reporting the most patients with difficulties self-testing or appointment keeping, compared with the other practices.

In the sensitivity analyses, the main results were confirmed when using A1c of 8% or less as the cutoff, when using continuous A1c at the practice level as the dependent variable, and when using continuous A1c at the patient level as the dependent variable (results not shown).

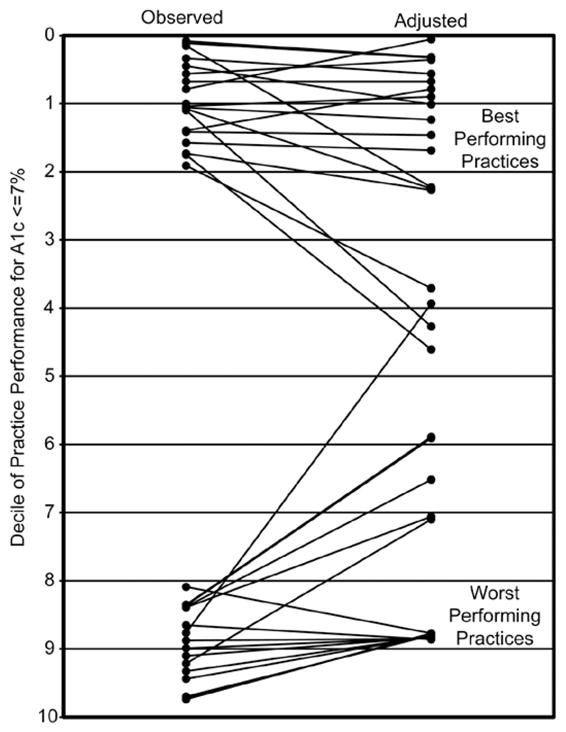

The ranking analyses demonstrated significant changes when moving from observed proportions of diabetes control to expected proportions from the fully adjusted model. With full adjustment, the average practice changed 13 (95% confidence interval [CI], 01.9–15.6) rank positions. Agreement between observed and expected ranks for the 3 pay-for-performance categories was only moderate (κ = 0.47; 95% CI, 0.32–0.56; p < .001). The Figure displays the changes in ranks of practices that were initially ranked in the best and worst 2 deciles (18 and 17 practices, respectively, out of 89 practices ranked). Overall, 6 of the 18 practices (33%) in the best 2 deciles that were eligible for a bonus based on observed ranks would no longer be eligible after adjustment. Furthermore, 6 of the 17 practices (35%) in the worst 2 deciles that would face a penalty without adjustment would move into the financially neutral category with adjustment.
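The rank-shift summaries above (average rank change and movement out of the bonus and penalty categories) can be computed as sketched below. The assumptions are ours: the average change is taken as the mean absolute difference between observed and expected ranks, ties are broken by input order, the best 2 deciles are ranks 1 through ceil(0.2n), and the worst 2 deciles are ranks above ceil(0.8n).

```python
from typing import List, Sequence, Tuple


def ranks_desc(values: Sequence[float]) -> List[int]:
    """Rank 1 = highest proportion controlled; ties broken by input order."""
    order = sorted(range(len(values)), key=lambda i: values[i], reverse=True)
    ranks = [0] * len(values)
    for rank, idx in enumerate(order, start=1):
        ranks[idx] = rank
    return ranks


def summarize_reranking(observed: Sequence[float],
                        expected: Sequence[float]) -> Tuple[float, int, int]:
    """Return (mean absolute rank change, bonus practices losing eligibility,
    penalty practices moving into the neutral category)."""
    n = len(observed)
    obs_rank, exp_rank = ranks_desc(observed), ranks_desc(expected)
    bonus_cut = (2 * n + 9) // 10    # assumed: best 2 deciles = ranks 1..ceil(0.2n)
    penalty_cut = (8 * n + 9) // 10  # assumed: worst 2 deciles = ranks > ceil(0.8n)
    mean_shift = sum(abs(o - e) for o, e in zip(obs_rank, exp_rank)) / n
    lost_bonus = sum(o <= bonus_cut < e for o, e in zip(obs_rank, exp_rank))
    to_neutral = sum(o > penalty_cut and bonus_cut < e <= penalty_cut
                     for o, e in zip(obs_rank, exp_rank))
    return mean_shift, lost_bonus, to_neutral
```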

Figure.

Practice Rank of the Highest and Lowest Deciles of Performance based on Proportion of Patients With A1c ≤7%

Adjusted rankings were based on the predicted proportion of patients at the practice level with A1c ≤7% (Table 2).

DISCUSSION

In this study of rural primary care practices, we found that physician-level performance was significantly related to patients’ age and insulin use, as well as to difficulties with self-testing and keeping appointments. Difficulties with self-testing and keeping appointments have not been included in other studies of practice-level performance on quality of care, yet they are likely prominent barriers to achieving control for rural patients with diabetes, and they were strong predictors of practice-level glycemic control. We acknowledge that current quality assessment frequently includes performance measures other than A1c and may measure the quality of care delivered with composite measures. In our analyses, accounting for patient factors markedly changed ranked performance, suggesting the need for caution in making quality comparisons. If pay-for-performance programs or other reimbursement plans are based on observed A1c values, the risk of unanticipated consequences is real. What can a practice with a wide geographic catchment area of low-income patients do to boost its performance ratings? Doctors practicing in these critical at-risk areas are at significant risk of disproportionately low rankings based on factors over which they have only partial control.

We do not mean to imply that rural (or any) physicians should not play a strong role in assisting patients with self-care and visit attendance. However, these private offices were not part of larger health systems that could provide special outreach programs, such as telemedicine, home visits, or financial assistance. Although our study was not designed to examine the barriers rural patients with diabetes face or to compare rural with urban practices, it is likely that low income, transportation difficulties, and lack of social support systems had more influence than the quality of care provided by the physician. Indeed, difficulties with self-testing and keeping appointments are multifactorial problems. It is important to acknowledge the significant role physicians play in recognizing and assisting patients who face barriers, but it is equally important to recognize how far removed their influence may be from the considerably more distal outcome of glycemic control.

Although there is conflicting evidence regarding positive health outcomes associated with self-testing for patients with diabetes who are not on insulin, lack of self-testing may reflect suboptimal patient activation.15 Self-testing of blood glucose requires time and therefore carries an economic cost that lower-income patients may not be able to afford,16 yet a recent study demonstrated that traditionally disadvantaged patients spent more extra time on self-care activities.17 If patients are vigilant about ambulatory glucose monitoring and note worsening glycemic control, medication intensification may begin sooner, with better long-term health outcomes. Similarly, faithful appointment attendance may prompt health care providers to attend to problems sooner, before complications arise. Poor appointment adherence has been associated with worse glycemic control in a similar population from rural Virginia.18

Our study underscores an important limitation of current diabetes quality measurement. Simply reporting the proportion of patients controlled in a physician’s practice does little to point the way toward improvement. The proportion in control does not permit assessment of how much of the result can be attributed to patient characteristics vs direct quality of care delivered by the practice. “Smarter” performance measures less influenced by patient factors are needed, especially for vulnerable or complex patients. For example, a measure that provides information on specific clinical actions, such as how many patients with uncontrolled A1c had their medications adjusted, would be much more helpful for physicians wishing to improve their practices.19–21

Our study also demonstrates the difficulty of physician-level performance assessment in rural private practices, which predominantly used handwritten records. The labor-intensive and costly approach required in this study is unlikely to be feasible on an ongoing, sustained basis. Electronic health records open the door to more refined performance assessment; more reliable data; and ongoing, automated reporting. Continued efforts to provide electronic health records to remote practices are badly needed.22,23

Our findings are consistent with other studies reported in the scientific literature. Other studies have found patient clinical and sociodemographic factors to be associated with glycemic control.3–5 However, we are not aware of other studies that directly examine the association of patients’ difficulties with self-testing and keeping appointments with practice-level performance on diabetes control. Several other studies have linked insulin use with worse diabetes control at the population level, and our study again demonstrated the association of older age with better control.3–5,24 These findings confirm that this population of patients was similar to others in these respects.

Our study has several limitations. A convenience sample of physicians agreed to participate in the study, and they may not be representative of rural primary care practices in the Southeast. We relied on the physician or his or her office staff to select consecutive patients with diabetes. Consecutive sampling of patient charts guards against “cherry-picking” patients with well-controlled diabetes but may not be as good as random sampling for physician performance feedback.25

The number of patient charts submitted per practice in our study was small, similar to the minimum number requested by the American Board of Internal Medicine for self-evaluation of practice performance to maintain certification.26 Although Hofer et al proposed that reliable assessment of physician performance on quality measures would require a panel of 100 patients with diabetes,27 the median panel sizes in studies involving large health maintenance organizations (HMOs) and the VA were fewer than 30 patients with diabetes.28 However, highly reliable estimates are not needed for feedback purposes.29 The main purpose of the intervention portion of our study was to provide feedback to physicians so that quality improvement efforts could be focused in their offices; as a result, the baseline analyses in the current study were constrained by small clusters of patients within physicians. As Greenfield et al pointed out, adequately powering comparisons of physicians or practices requires increasing the number of physicians or practices.30 Likewise, our sample of practices was limited to those recruited in the trial.

We relied on medical records to ascertain patient difficulties with self-testing and appointment keeping. We acknowledge that physicians likely recognize only a subset of patients struggling with these barriers and may document them only in extreme cases, so our estimates may understate the true effect of these barriers. Finally, future studies are needed to examine structural and process features of the practices associated with variations in glycemic control rates.

In conclusion, few studies have focused on the quality of care of physicians caring for patients with diabetes living in rural areas. In this setting, we found important predictors of physicians’ practice rankings on glycemic control that were beyond immediate and direct physician control. Clearly, rural patients confront barriers in self-testing and appointment keeping, and fair and informative quality assessment should account for these factors. Basing public reporting and resource allocation on quality assessment that does not account for patient characteristics may further harm this vulnerable group of patients and physicians.

Acknowledgments

We thank Katie Crenshaw for her editorial assistance.

Funding and Support: Dr Allison was supported by the National Institute of Diabetes and Digestive and Kidney Diseases (NIDDK, 5R18DK065001). Dr Salanitro was supported by a Veterans Affairs National Quality Scholars fellowship.

Role of the Sponsor: The NIDDK had no role in the design and conduct of the study; in the collection, management, analysis, and interpretation of the data; or in the preparation, review, or approval of the manuscript.

Footnotes

Previous Presentation: Presented in part at the 31st Society of General Internal Medicine National Meeting, Pittsburgh, Pennsylvania, April 9–12, 2008 (Salanitro A, Safford MM, Houston TK, et al. Is patient complexity associated with physician performance on diabetes measures? J Gen Intern Med. 2008;23[suppl 2]:335).

References

- 1. Centers for Medicare & Medicaid Services. The Medicare care management performance demonstration fact sheet. www.cms.hhs.gov/Demo-ProjectsEvalRpts/downloads/MMA649_Summary.pdf. Accessed February 15, 2009.

- 2. Jencks SF, Huff ED, Cuerdon T. Change in the quality of care delivered to Medicare beneficiaries, 1998–1999 to 2000–2001. JAMA. 2003;289:305–312. doi: 10.1001/jama.289.3.305.

- 3. Safford MM, Brimacombe M, Zhang Q, et al. Patient complexity in quality comparisons for glycemic control: an observational study. Implement Sci. 2009;4:2. doi: 10.1186/1748-5908-4-2.

- 4. Maney M, Tseng CL, Safford MM, Miller DR, Pogach LM. Impact of self-reported patient characteristics upon assessment of glycemic control in the Veterans Health Administration. Diabetes Care. 2007;30:245–251. doi: 10.2337/dc06-0771.

- 5. Zhang Q, Safford M, Ottenweller J, et al. Performance status of health care facilities changes with risk adjustment of HbA1c. Diabetes Care. 2000;23:919–927. doi: 10.2337/diacare.23.7.919.

- 6. Mehta RH, Liang L, Karve AM, et al. Association of patient case-mix adjustment, hospital process performance rankings, and eligibility for financial incentives. JAMA. 2008;300:1897–1903. doi: 10.1001/jama.300.16.1897.

- 7. Safford MM, Allison JJ, Kiefe CI. Patient complexity: more than comorbidity. The vector model of complexity. J Gen Intern Med. 2007;22:382–390. doi: 10.1007/s11606-007-0307-0.

- 8. Department of Health and Human Services. National Healthcare Disparities Report. www.qualitytools.ahrq.gov/disparitiesreport/download_report.aspx. Accessed February 15, 2009.

- 9. Strauss K, MacLean C, Troy A, Littenberg B. Driving distance as a barrier to glycemic control in diabetes. J Gen Intern Med. 2006;21:378–380. doi: 10.1111/j.1525-1497.2006.00386.x.

- 10. Foster PP, Williams JH, Estrada CA, et al. Recruitment of rural physicians in a diabetes internet intervention study: overcoming challenges and barriers. J Natl Med Assoc. 2010;102:101–107. doi: 10.1016/s0027-9684(15)30497-1.

- 11. Standards of medical care in diabetes—2006. Diabetes Care. 2006;29:S4–42.

- 12. CMS/Premier Hospital Quality Incentive Demonstration. www.premierinc.com/quality-safety/tools-services/p4p/hqi/results/decile-thresholdsyear2.pdf. Accessed February 15, 2009.

- 13. Premier Inc. Centers for Medicare & Medicaid Services (CMS)/Premier Hospital Quality Incentive Demonstration (HQID) Project: Findings from Year Two. www.premierinc.com/quality-safety/tools-services/p4p/hqi/results/decile-thresholds-year2.pdf. Accessed February 15, 2009.

- 14. Landis JR, Koch GG. The measurement of observer agreement for categorical data. Biometrics. 1977;33:159–174.

- 15. O’Kane MJ, Bunting B, Copeland M, Coates VE. Efficacy of self monitoring of blood glucose in patients with newly diagnosed type 2 diabetes (ESMON study): randomised controlled trial. BMJ. 2008;336:1174–1177. doi: 10.1136/bmj.39534.571644.BE.

- 16. Russell LB, Safford MM. The importance of recognizing patients’ time as a cost of self-management. Am J Manag Care. 2008;14:395–396.

- 17. Ettner SL, Cadwell BL, Russell LB, et al. Investing time in health: do socioeconomically disadvantaged patients spend more or less extra time on diabetes self-care? Health Econ. 2009;18:645–663. doi: 10.1002/hec.1394.

- 18. Schectman JM, Schorling JB, Voss JD. Appointment adherence and disparities in outcomes among patients with diabetes. J Gen Intern Med. 2008;23:1685–1687. doi: 10.1007/s11606-008-0747-1.

- 19. Rodondi N, Peng T, Karter AJ, et al. Therapy modifications in response to poorly controlled hypertension, dyslipidemia, and diabetes mellitus. Ann Intern Med. 2006;144:475–484. doi: 10.7326/0003-4819-144-7-200604040-00006.

- 20. Selby JV, Uratsu CS, Fireman B, et al. Treatment intensification and risk factor control: toward more clinically relevant quality measures. Med Care. 2009;47:395–402. doi: 10.1097/mlr.0b013e31818d775c.

- 21. Schmittdiel JA, Uratsu CS, Karter AJ, et al. Why don’t diabetes patients achieve recommended risk factor targets? Poor adherence versus lack of treatment intensification. J Gen Intern Med. 2008;23:588–594. doi: 10.1007/s11606-008-0554-8.

- 22. Bahensky JA, Jaana M, Ward MM. Health care information technology in rural America: electronic medical record adoption status in meeting the national agenda. J Rural Health. 2008;24:101–105. doi: 10.1111/j.1748-0361.2008.00145.x.

- 23. Menachemi N, Perkins RM, van Durme DJ, Brooks RG. Examining the adoption of electronic health records and personal digital assistants by family physicians in Florida. Inform Prim Care. 2006;14:1–9. doi: 10.14236/jhi.v14i1.609.

- 24. Kerr EA, Gerzoff RB, Krein SL, et al. Diabetes care quality in the Veterans Affairs Health Care System and commercial managed care: the TRIAD study. Ann Intern Med. 2004;141:272–281. doi: 10.7326/0003-4819-141-4-200408170-00007.

- 25. Pogach L, Xie M, Shentue Y, et al. Diabetes healthcare quality report cards: how accurate are the grades? Am J Manag Care. 2005;11:797–804.

- 26. American Board of Internal Medicine. Maintain & Renew Your Certification. www.abim.org/moc/choose/module/diabetes.aspx. Accessed March 4, 2010.

- 27. Hofer TP, Hayward RA, Greenfield S, Wagner EH, Kaplan SH, Manning WG. The unreliability of individual physician “report cards” for assessing the costs and quality of care of a chronic disease. JAMA. 1999;281:2098–2105. doi: 10.1001/jama.281.22.2098.

- 28. Krein SL, Hofer TP, Kerr EA, Hayward RA. Whom should we profile? Examining diabetes care practice variation among primary care providers, provider groups, and health care facilities. Health Serv Res. 2002;37:1159–1180. doi: 10.1111/1475-6773.01102.

- 29. Scholle SH, Roski J, Adams JL, et al. Benchmarking physician performance: reliability of individual and composite measures. Am J Manag Care. 2008;14:833–838.

- 30. Greenfield S, Kaplan SH, Kahn R, Ninomiya J, Griffith JL. Profiling care provided by different groups of physicians: effects of patient case-mix (bias) and physician-level clustering on quality assessment results. Ann Intern Med. 2002;136:111–121. doi: 10.7326/0003-4819-136-2-200201150-00008.