Abstract

This review surveys the recent literature on visuo-haptic convergence in the perception of object form, with particular reference to the lateral occipital complex (LOC) and the intraparietal sulcus (IPS), and discusses how visual imagery or multisensory representations might underlie this convergence. Drawing on a recent distinction between object-based and spatially-based visual imagery, we propose a putative model in which LOtv, a subregion of LOC, contains a modality-independent representation of geometric shape that can be accessed either bottom-up from direct sensory inputs or top-down from frontoparietal regions. We suggest that such access is modulated by object familiarity: spatial imagery may be more important for unfamiliar objects and involve IPS foci that facilitate somatosensory inputs to the LOC; by contrast, object imagery may be more critical for familiar objects and be reflected in prefrontal drive to the LOC.

Keywords: Cross-modal, visual, haptic, imagery

INTRODUCTION

Recent research has dispelled the established orthodoxy that the brain is organized around parallel processing of discrete sensory inputs and has provided strong evidence for a ‘metamodal’ brain with a multisensory task-based organization (Pascual-Leone and Hamilton, 2001). For example, it is now well known that many cortical regions previously considered to be specialized for processing various aspects of visual input are also activated during analogous tactile or haptic tasks (reviewed in Sathian and Lacey, 2007). Here, we outline the current state of knowledge about such visuo-haptic processing in the domain of object form, and the nature of the underlying representation, in order to introduce a putative conceptual model.

Loci of multisensory shape processing

The lateral occipital complex

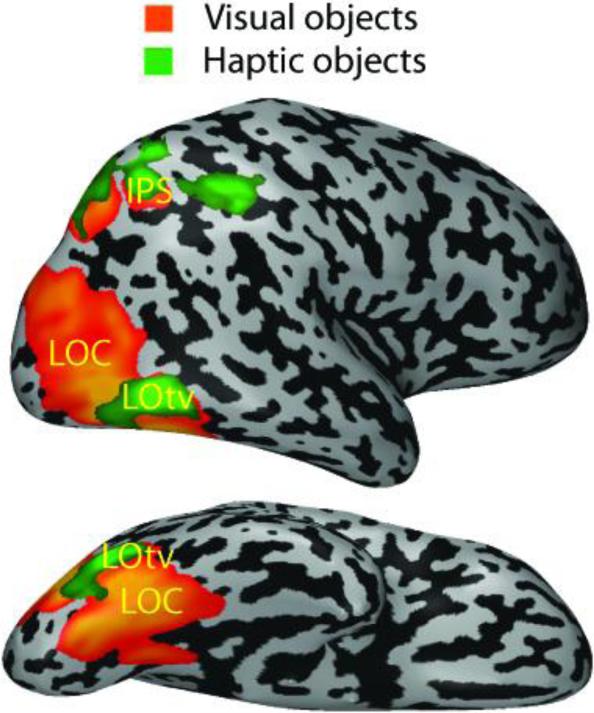

Chief among the cortical areas implicated in visuo-haptic shape processing is the lateral occipital complex (LOC), an object-selective area in the ventral visual pathway (Malach et al., 1995). As Figure 1 shows, part of the LOC, designated LOtv, responds selectively to objects in both vision and touch (Amedi et al., 2001, 2002). The LOC is shape-selective during both haptic 3D perception (Amedi et al., 2001; Stilla and Sathian, 2008; Zhang et al., 2004) and tactile 2D perception (Stoesz et al., 2003; Prather et al., 2004). LOtv can be characterized as a processor of geometric shape, since it is unresponsive during conventional auditory object recognition triggered by object-specific sounds (Amedi et al., 2002). However, LOtv does respond to soundscapes created by a visual-to-auditory sensory substitution device (SSD), which converts visual shape information into an auditory stream via a specific algorithm: auditory time and stereo panning convey the visual horizontal axis, tone frequency conveys the visual vertical axis, and tone loudness conveys pixel brightness (Amedi et al., 2007). Using this SSD, both sighted and blind humans (including one congenitally blind individual) learned to recognize objects by extracting shape information from the resulting soundscapes. LOtv responded to soundscapes constructed according to the trained algorithm, but not when specific soundscapes were arbitrarily associated with specific objects (Amedi et al., 2007). This strengthens the notion that LOtv is driven by geometric shape information, irrespective of the sensory modality used to acquire it.

Case studies suggest that the LOC is necessary for both haptic and visual shape perception. Feinberg et al. (1986) reported a patient with a lesion of the left occipito-temporal cortex, likely including the LOC, who exhibited both tactile and visual agnosia (inability to recognize objects), although somatosensory cortex and basic somatosensation were spared. Another patient, with bilateral LOC lesions, was unable to learn new objects by either vision or touch (James et al., 2006).
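To make the SSD mapping described above concrete, the sketch below converts a small grayscale image into a list of tone events: column position sets onset time and stereo pan, row position sets pitch, and brightness sets loudness. It is a minimal illustration, not the device used by Amedi et al. (2007); the sweep duration, the 500 to 5000 Hz frequency range, the exponential pitch spacing, and the names `Tone` and `image_to_soundscape` are all assumptions made for this example.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Tone:
    onset_s: float    # horizontal position -> time within the left-to-right sweep
    pan: float        # horizontal position -> stereo panning (-1 full left, +1 full right)
    freq_hz: float    # vertical position -> pitch (higher rows sound higher)
    amplitude: float  # pixel brightness (0..1) -> loudness


def image_to_soundscape(image, sweep_s=1.0, f_min=500.0, f_max=5000.0):
    """Map a grayscale image (list of rows, values 0..1, row 0 at the top)
    to tone events. sweep_s, f_min and f_max are assumed, illustrative values."""
    n_rows, n_cols = len(image), len(image[0])
    tones = []
    for c in range(n_cols):
        onset = sweep_s * c / n_cols
        pan = 0.0 if n_cols == 1 else -1.0 + 2.0 * c / (n_cols - 1)
        for r in range(n_rows):
            brightness = image[r][c]
            if brightness <= 0.0:
                continue  # dark pixels are silent
            # Assumed exponential spacing: top row -> f_max, bottom row -> f_min.
            height = 0.0 if n_rows == 1 else (n_rows - 1 - r) / (n_rows - 1)
            freq = f_min * (f_max / f_min) ** height
            tones.append(Tone(onset, pan, freq, brightness))
    return tones


# A 2x2 "diagonal" image: bright bottom-left and top-right pixels.
for tone in image_to_soundscape([[0.0, 1.0], [1.0, 0.0]]):
    print(tone)
```

The diagonal in this example becomes a low tone on the left followed by a high tone on the right, a rising sweep. Because the mapping is systematic, the listener can in principle recover geometric shape rather than merely associate an arbitrary sound with an object, which is the contrast the trained and arbitrary soundscape conditions were designed to test.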

Figure 1.

Object-related regions in the visual and haptic modalities shown on an inflated right hemisphere (top: lateral view; bottom: ventral view). Visual object selectivity is relative to scrambled visual images; haptic object selectivity is relative to haptic textures. Visuo-haptic object selectivity is found in the lateral occipital complex (LOC), within the lateral occipito-temporal sulcus (delineating LOtv), and in several foci in the intraparietal sulcus (IPS). (Modified from Amedi et al., 2001.)

Parietal cortical foci

Multisensory shape selectivity also occurs in parietal cortical regions, in particular the cortex of the intraparietal sulcus (IPS) (Figure 1). Studies of common visuo-haptic processing implicate the IPS in perception of both object shape and object location; the main differences are LOC activation during shape discrimination and frontal eye field activation during location discrimination (Stilla and Sathian, 2008; Gibson et al., 2008). The specific foci in these studies lie in an anterior region that we refer to as aIPS (Grefkes et al., 2002; Stilla and Sathian, 2008; Zhang et al., 2004), probably corresponding to the areas termed AIP (anterior intraparietal area [Grefkes and Fink, 2005; Shikata et al., 2008]) or MIP (medial intraparietal area [Grefkes et al., 2004]), depending on the study, and in a posteroventral region (Saito et al., 2003; Stilla and Sathian, 2008) spanning zones identified with the areas CIP (caudal intraparietal area, Shikata et al., 2008), IPS1 and V7, the latter two together probably corresponding to macaque area LIP (lateral intraparietal area) (Swisher et al., 2007). It should be noted that areas AIP, MIP, CIP and V7 were first described in macaque monkeys, and their homologies in humans remain somewhat uncertain. Whereas the IPS is classic multisensory cortex, visuo-haptic shape selectivity has also been reported in the postcentral sulcus (PCS) (Stilla and Sathian, 2008), which corresponds to the site of Brodmann's area 2 of primary somatosensory cortex (S1) (Grefkes et al., 2001). This region has traditionally been assumed to be exclusively somatosensory, but multisensory shape selectivity here is consistent with earlier neurophysiological reports of visual responsiveness in parts of S1 (Iwamura, 1998; Zhou and Fuster, 1997).

Evidence for a shared visuo-haptic representation of shape

The convergence of visual and haptic shape-selective activity in LOtv suggests the possibility of a shared representation of shape. We designate such a representation as 'multisensory' in that it can be encoded and retrieved by multiple sensory systems (Sathian, 2004), and we distinguish it from a visual representation, which is encoded by a single modality even though it might be triggered by other modalities during retrieval. Consistent with a shared representation, behavioral studies have shown that visuo-haptic cross-modal priming is as effective as within-modal priming for both unfamiliar (Easton et al., 1997a,b) and familiar (Easton et al., 1997a; Reales and Ballesteros, 1999) objects. Similarly, LOC activity was greater when participants viewed novel objects that had previously been explored haptically (i.e., 'primed' objects) than when they viewed non-primed objects (James et al., 2002).

An important question is whether this multisensory convergence on a shared representation reflects multisensory integration at the neuronal level or an interdigitation of unisensory neurons receiving either visual or haptic inputs. To examine this question for visuo-haptic integration of object-selective information, Tal and Amedi (2008) used an fMRI adaptation (fMR-A) paradigm. fMR-A exploits the repetition suppression effect, i.e., the attenuation of the blood-oxygen-level-dependent (BOLD) signal when the same stimulus is repeated (see Grill-Spector et al., 2006, and Krekelberg et al., 2006, for reviews), and so provides a tool for probing the functional properties of cortical neurons at a scale finer than the several-millimeter resolution of conventional fMRI. The technique has been used successfully to explore many aspects of unisensory processing, in particular visual shape processing (see Kourtzi et al., 2003; Kourtzi and Kanwisher, 2000, 2001). As expected, LOtv and a focus in the aIPS showed robust cross-modal adaptation from vision to touch. Interestingly, the precentral sulcus bilaterally (corresponding to ventral premotor cortex) and the right anterior insula also showed clear fMR-A. Multisensory convergence at these sites had previously been reported inconsistently: many studies did not find activity in these regions (e.g., Amedi et al., 2001, 2002; James et al., 2002; Pietrini et al., 2004; Saito et al., 2003; Stilla and Sathian, 2008; Zhang et al., 2004), while others showed cross-modal activity in the right (Hadjikhani and Roland, 1998) and left (Banati et al., 2000) insula-claustrum and in the precentral sulcus (Amedi et al., 2007). The fMR-A findings suggest that the precentral sulcus and anterior insula, in addition to LOtv and the aIPS, show multisensory responses at the neuronal level. By contrast, other areas, for example the PCS, the posteroventral IPS, and the CIP, did not show fMR-A, implying that multisensory convergence in these zones does not occur at the neuronal level.
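The logic of cross-modal fMR-A can be summarized as an adaptation index: if the same neurons respond to an object in both vision and touch, a haptic probe that repeats a visually presented adapter object should evoke a smaller response than a haptic probe of a different object. The sketch below computes such an index per subject and a one-sample t statistic across subjects. It is a simplified illustration, not the analysis of Tal and Amedi (2008); the helper names and all response values are hypothetical, and a real study would estimate responses with a general linear model of the BOLD time course.

```python
import math
from statistics import mean, stdev


def adaptation_index(resp_different, resp_same):
    """Fractional drop in response when the probe repeats the adapter object.
    Positive values indicate repetition suppression (adaptation)."""
    if resp_different == 0:
        raise ValueError("response on different-object trials must be non-zero")
    return (resp_different - resp_same) / resp_different


def one_sample_t(values):
    """t statistic for the null hypothesis that the mean of `values` is zero."""
    if len(values) < 2:
        raise ValueError("need at least two subjects")
    return mean(values) / (stdev(values) / math.sqrt(len(values)))


# Hypothetical per-subject responses (percent signal change) on cross-modal
# trials (visual adapter -> haptic probe): (different object, same object).
hypothetical = {
    "LOtv": [(1.0, 0.7), (0.9, 0.6), (1.2, 0.9), (1.1, 0.8)],
    "PCS": [(1.0, 1.0), (0.8, 0.82), (1.1, 1.08), (0.9, 0.9)],
}

summary = {}
for roi, pairs in hypothetical.items():
    indices = [adaptation_index(d, s) for d, s in pairs]
    summary[roi] = (mean(indices), one_sample_t(indices))
    print(f"{roi}: mean index {summary[roi][0]:.3f}, t = {summary[roi][1]:.2f}")
```

In this invented example the "LOtv" responses show consistent suppression, the pattern expected of neuronal-level convergence, whereas the "PCS" indices hover around zero, the pattern the text describes for zones where convergence is not at the neuronal level.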

Role of mental imagery

An obvious explanation for haptically evoked activation of visual cortex is mediation by visual imagery (Sathian et al., 1997) rather than direct activation by somatosensory inputs. Consistent with the visual imagery hypothesis, the LOC is active during visual imagery. For example, the left LOC is active during retrieval of geometric and material object properties from memory (Newman et al., 2005), and during auditorily cued mental imagery of familiar object shape, derived from predominantly haptic experience in blind participants and predominantly visual experience in sighted participants (De Volder et al., 2001). Also, individual differences in ratings of visual imagery vividness were strongly correlated with individual differences in the magnitude of haptic shape-selective activation in the right LOC (Zhang et al., 2004). On the other hand, LOC activity during visual imagery has been found to be substantially lower than during haptic shape perception, suggesting a relatively minor role for visual imagery (Amedi et al., 2001; see also Reed et al., 2004). Furthermore, studies of both early- and late-blind individuals show shape-related LOC activation via touch (Amedi et al., 2003; Burton et al., 2002; Pietrini et al., 2004; Stilla et al., 2008a; reviewed by Pascual-Leone et al., 2005; Sathian, 2005; Sathian and Lacey, 2007) and via audition using SSDs (Amedi et al., 2007; Arno et al., 2001; Renier et al., 2004, 2005). These findings have led some to conclude that visual imagery does not account for cross-modal activation of visual cortex. While this conclusion clearly holds for the early blind, it does not necessarily negate a role for visual imagery in the sighted, given the abundant evidence for cross-modal plasticity resulting from visual deprivation (Pascual-Leone et al., 2005; Sathian, 2005; Sathian and Lacey, 2007).

Visual imagery is often treated as a unitary ability. However, recent work has shown that it can be divided into 'object imagery' (pictorial images dealing with the literal appearance of objects in terms of shape, color, brightness, etc.) and 'spatial imagery' (more schematic images dealing with the spatial relations of objects and their component parts, and with spatial transformations) (Kozhevnikov et al., 2002, 2005; Blajenkova et al., 2006). This distinction is relevant because both vision and touch encode spatial properties of objects, such as size, shape, and the relative positions of object features, and such properties may well be encoded in a modality-independent spatial format (Lacey and Campbell, 2006). This view is supported by recent work showing that spatial imagery scores, but not object imagery scores, were correlated with accuracy on cross-modal, but not within-modal, object identification for a set of closely similar and previously unfamiliar objects (Lacey et al., 2007b). We therefore suggest distinguishing object imagery from spatial imagery rather than treating visual imagery as undifferentiated. Importantly, the object-spatial dimension of imagery can be viewed as orthogonal to the modality involved: both visual and haptic imagery can potentially be subdivided into object imagery, dealing with the appearance or feel of objects, and spatial imagery, dealing with spatial relationships between objects or between parts of objects.
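The individual-differences argument above rests on a dissociation: imagery scores correlate with one performance measure but not another. A Pearson correlation illustrates the idea. The scores below are invented purely for illustration and are not data from Lacey et al. (2007b); `pearson_r` is a hypothetical helper, and with five simulated participants no inferential claim is intended.

```python
import math


def pearson_r(x, y):
    """Pearson product-moment correlation between two equal-length sequences."""
    n = len(x)
    if n != len(y) or n < 2:
        raise ValueError("need two sequences of equal length >= 2")
    mx, my = sum(x) / n, sum(y) / n
    sxy = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sxx = sum((a - mx) ** 2 for a in x)
    syy = sum((b - my) ** 2 for b in y)
    return sxy / math.sqrt(sxx * syy)


# Hypothetical spatial imagery scores and identification accuracies.
spatial_scores = [2, 4, 6, 8, 10]
cross_modal_acc = [0.55, 0.62, 0.70, 0.74, 0.83]
within_modal_acc = [0.81, 0.78, 0.82, 0.78, 0.81]

r_cross = pearson_r(spatial_scores, cross_modal_acc)
r_within = pearson_r(spatial_scores, within_modal_acc)
print(f"cross-modal r = {r_cross:.2f}, within-modal r = {r_within:.2f}")
```

With these made-up numbers the cross-modal correlation is strongly positive while the within-modal correlation is essentially zero, the shape of result that would implicate a spatial, modality-independent format specifically in cross-modal recognition.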

While the object-spatial dimension of haptically derived representations remains relatively unexplored, similarities in the processing of visually and haptically derived representations support the idea that vision and touch engage a common spatial representational system. The time taken to scan both visual images (Kosslyn, 1973; Kosslyn et al., 1978) and haptically derived representations (Röder and Rösler, 1998) increases with the spatial distance to be inspected, suggesting that spatial metric information is preserved in both visually and haptically derived images, and that similar, if not identical, imagery processes operate in both modalities (Röder and Rösler, 1998). Likewise, when participants judge whether two objects are the same or mirror images, the time taken increases nearly linearly with the angular disparity between the objects, for both visual (Shepard and Metzler, 1971) and haptic stimuli (Marmor and Zaback, 1976; Carpenter and Eisenberg, 1978; Hollins, 1986; Dellantonio and Spagnolo, 1990). A similar relationship was found for tactile stimuli as a function of the angular disparity between the stimulus and a canonical orientation, with associated activity in the left aIPS (Prather et al., 2004) at a site also active during mental rotation of visual stimuli (Alivisatos and Petrides, 1997). Similar processing appears to characterize sighted, early-blind, and late-blind individuals (Carpenter and Eisenberg, 1978; Röder and Rösler, 1998).
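The near-linear relationship described above is usually summarized by fitting a straight line to response time as a function of angular disparity (or, for image scanning, distance): the slope estimates the time cost per degree, and its reciprocal is an apparent rotation rate. The sketch below fits such a line by ordinary least squares. The response times are hypothetical values chosen to be exactly linear for clarity, and `fit_line` is an illustrative helper rather than a procedure taken from the cited studies.

```python
def fit_line(x, y):
    """Ordinary least-squares fit of y = intercept + slope * x."""
    n = len(x)
    if n != len(y) or n < 2:
        raise ValueError("need two sequences of equal length >= 2")
    mx, my = sum(x) / n, sum(y) / n
    sxx = sum((a - mx) ** 2 for a in x)
    sxy = sum((a - mx) * (b - my) for a, b in zip(x, y))
    slope = sxy / sxx
    return my - slope * mx, slope


# Hypothetical mean response times (s) for same/mirror judgments.
angles_deg = [0, 60, 120, 180]
rt_s = [1.0, 1.6, 2.2, 2.8]

intercept, slope = fit_line(angles_deg, rt_s)
rotation_rate = 1.0 / slope  # degrees rotated per second
print(f"intercept {intercept:.2f} s, slope {slope * 1000:.1f} ms/deg, "
      f"rate {rotation_rate:.0f} deg/s")
```

The intercept absorbs processes that do not depend on disparity, such as encoding and response selection, so comparisons across modalities or groups typically focus on the slope.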

A putative conceptual model for visuo-haptic shape representation

The literature just reviewed provides a wealth of information about brain regions showing multisensory responses in object processing and about potential accounts of the underlying representations. What is lacking is a model that makes sense of the roles played by these different processes and cortical regions. To this end, we recently investigated the role of visual object imagery and object familiarity in haptic shape perception, using inter-task correlations of activation magnitudes and Granger causality analyses of effective connectivity (Stilla et al., 2008b). In the imagery task, participants listened to pairs of words and decided whether the objects designated by those words had the same or different shapes. Thus, unlike in earlier studies, participants had to process their images throughout the scan, and this could be verified by monitoring their performance. In a separate session, participants performed a haptic shape discrimination task using two sets of objects, one familiar and one unfamiliar. Both inter-task correlations and connectivity were modulated by object familiarity. Although the LOC was activated bilaterally during both visual object imagery and haptic shape perception, there was an inter-task correlation only for familiar shape. Connectivity analysis showed that visual object imagery and haptic perception of familiar objects engaged similar networks, with top-down connections from prefrontal and parietal regions into the LOC. By contrast, a very different network emerged during haptic perception of unfamiliar shape, with bottom-up inputs from somatosensory cortex (PCS) and the IPS to the LOC (Stilla et al., 2008b), consistent with earlier analyses of effective connectivity (Peltier et al., 2007; Deshpande et al., 2008).
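Granger causality, the basis of the connectivity analyses mentioned above, asks whether the past of one time series improves prediction of another beyond what that series' own past already provides. The sketch below is a deliberately minimal bivariate, linear version applied to simulated data, and it assumes NumPy. It is not the pipeline of Stilla et al. (2008b) or Deshpande et al. (2008); `granger_log_ratio` and the simulation coefficients are hypothetical, and this toy ignores the hemodynamic blurring and slow sampling of real fMRI signals.

```python
import numpy as np


def granger_log_ratio(x, y, lag=1):
    """Log ratio of residual variances when predicting y from its own past
    (restricted model) versus its own past plus x's past (full model).
    Values well above 0 suggest that x 'Granger-causes' y."""
    x = np.asarray(x, dtype=float)
    y = np.asarray(y, dtype=float)
    n = len(y)
    target = y[lag:]
    own = np.column_stack([y[lag - k:n - k] for k in range(1, lag + 1)])
    cross = np.column_stack([x[lag - k:n - k] for k in range(1, lag + 1)])
    ones = np.ones((n - lag, 1))

    def residual_variance(design):
        beta, *_ = np.linalg.lstsq(design, target, rcond=None)
        return np.var(target - design @ beta)

    restricted = np.hstack([ones, own])
    full = np.hstack([ones, own, cross])
    return float(np.log(residual_variance(restricted) / residual_variance(full)))


# Simulated "source" x driving "target" y with a one-sample delay.
rng = np.random.default_rng(0)
n = 500
x = rng.standard_normal(n)
y = np.zeros(n)
for t in range(1, n):
    y[t] = 0.5 * y[t - 1] + 0.8 * x[t - 1] + 0.1 * rng.standard_normal()

gc_x_to_y = granger_log_ratio(x, y)
gc_y_to_x = granger_log_ratio(y, x)
print(f"x -> y: {gc_x_to_y:.3f}, y -> x: {gc_y_to_x:.3f}")
```

Because influence runs only from x to y in the simulation, the x-to-y value is large while the y-to-x value stays near zero. The same directional asymmetry, computed between regional time series, is what allows connectivity to be described as bottom-up (e.g., PCS to LOC) or top-down (e.g., prefrontal to LOC).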

Along with the literature reviewed above, these findings allow us to propose a preliminary conceptual model for the representation of object form in vision and touch that reconciles the visual imagery and multisensory approaches. In this model, LOtv contains a representation of object form that can be flexibly addressed either bottom-up or top-down, depending on object familiarity but independent of the modality of sensory input. Haptic perception of unfamiliar shape relies more on a bottom-up pathway from the PCS (part of S1) to LOtv, supported by spatial imagery processes. Since the global shape of an unfamiliar object can only be computed by exploring it in its entirety, the model predicts heavy somatosensory drive of LOtv, with associated involvement of the IPS in processing the relative spatial locations of object parts in order to compute global shape. Haptic perception of familiar shape depends more on object imagery, involving top-down paths from prefrontal and parietal areas into LOtv. For familiar objects, spatial imagery remains available (perhaps in support of view-independent recognition), but object imagery also comes on-line (perhaps as a representational shorthand sufficient for much cross-modal processing of familiar objects), served by top-down pathways from prefrontal areas into LOtv. Here, the model predicts reduced somatosensory drive of LOtv because global shape can be inferred more easily for familiar objects; we suggest that swift haptic object identification triggers an associated visual object image.

The main parameters of this model are object familiarity, object versus spatial imagery, and bottom-up versus top-down processing. For ease of description, we have treated these factors as dichotomies, but we do not imply that objects are exclusively familiar or unfamiliar, or that individuals are exclusively object or spatial imagers: clearly, these are continua along which objects and individuals may vary. By the same token, we do not suggest that a multisensory representation is necessarily characterized only by bottom-up pathways. Instead, we suggest that these factors can carry different weights in different circumstances. For example, bottom-up pathways may be most important for haptic exploration of unfamiliar objects, but they are surely not absent from haptic perception of familiar objects, since at least some bottom-up processing is required to trigger access to a visual image. These factors may also interact depending on task demands (object complexity, discrimination within or between categories) or subject history (imagery ability and preference, visual experience, training, etc.), and the model lends itself to an individual differences approach (see Lacey et al., 2007a; Motes et al., 2008).

There are two ways in which the model could profitably be developed further. First, the temporal resolution of the connectivity analyses is too low to give a detailed picture of the temporal aspects of processing. A recent electrophysiological study shows that, during tactile discrimination of simple geometric shapes applied to the fingerpad, activation in S1 propagates very early into LOtv, at around 150 ms (Lucan et al., 2008), and further studies of this nature are required. Second, although activation of the LOC is typically bilateral, there may be some lateralization. For example, although the LOC was bilaterally activated during visual and haptic perception of unfamiliar shape, activation magnitudes were significantly correlated across subjects in the right LOC only (Stilla and Sathian, 2008). Moreover, for visual presentation of familiar objects, the left LOC was more active during a naming task and the right LOC more active during a matching task (Large et al., 2007). The main parameters of the model lend themselves to further investigation of such effects in cross-modal contexts.

REFERENCES

- Alivisatos B, Petrides M. Functional activation of the human brain during mental rotation. Neuropsychologia. 1997;35:111–118. doi: 10.1016/s0028-3932(96)00083-8.

- Amedi A, Jacobson G, Hendler T, Malach R, Zohary E. Convergence of visual and tactile shape processing in the human lateral occipital complex. Cereb Cortex. 2002;12:1202–1212. doi: 10.1093/cercor/12.11.1202.

- Amedi A, Malach R, Hendler T, Peled S, Zohary E. Visuo-haptic object-related activation in the ventral visual pathway. Nature Neurosci. 2001;4:324–330. doi: 10.1038/85201.

- Amedi A, Raz N, Pianka P, Malach R, Zohary E. Early 'visual' cortex activation correlates with superior verbal memory performance in the blind. Nature Neurosci. 2003;6:758–766. doi: 10.1038/nn1072.

- Amedi A, Stern WM, Camprodon JA, Bermpohl F, Merabet L, Rotman S, Hemond C, Meijer P, Pascual-Leone A. Shape conveyed by visual-to-auditory sensory substitution activates the lateral occipital complex. Nature Neurosci. 2007;10:687–689. doi: 10.1038/nn1912.

- Arno P, De Volder AG, Vanlierde A, Wanet-Defalque M-C, Streel E, Robert A, Sanabria-Bohórquez S, Veraart C. Occipital activation by pattern recognition in the early blind using auditory substitution for vision. Neuroimage. 2001;13:632–645. doi: 10.1006/nimg.2000.0731.

- Banati RB, Goerres GW, Tjoa C, Aggleton JP, Grasby P. The functional anatomy of visual-tactile integration in man: a study using positron emission tomography. Neuropsychologia. 2000;38:115–124. doi: 10.1016/s0028-3932(99)00074-3.

- Blajenkova O, Kozhevnikov M, Motes MA. Object-spatial imagery: a new self-report imagery questionnaire. Appl Cognit Psychol. 2006;20:239–263.

- Burton H, Snyder AZ, Conturo TE, Akbudak E, Ollinger JM, Raichle ME. Adaptive changes in early and late blind: a fMRI study of Braille reading. J Neurophysiol. 2002;87:589–607. doi: 10.1152/jn.00285.2001.

- Carpenter PA, Eisenberg P. Mental rotation and the frame of reference in blind and sighted individuals. Percept Psychophys. 1978;23:117–124. doi: 10.3758/bf03208291.

- Dellantonio A, Spagnolo F. Mental rotation of tactual stimuli. Acta Psychol. 1990;73:245–257. doi: 10.1016/0001-6918(90)90025-b.

- Deshpande G, Hu X, Stilla R, Sathian K. Effective connectivity during haptic perception: A study using Granger causality analysis of functional magnetic resonance imaging data. NeuroImage. 2008;40:1807–1814. doi: 10.1016/j.neuroimage.2008.01.044.

- De Volder AG, Toyama H, Kimura Y, Kiyosawa M, Nakano H, Vanlierde A, Wanet-Defalque M-C, Mishima M, Oda K, Ishiwata K, Senda M. Auditory triggered mental imagery of shape involves visual association areas in early blind humans. NeuroImage. 2001;14:129–139. doi: 10.1006/nimg.2001.0782.

- Easton RD, Greene AJ, Srinivas K. Transfer between vision and haptics: memory for 2-D patterns and 3-D objects. Psychon Bull Rev. 1997a;4:403–410.

- Easton RD, Srinivas K, Greene AJ. Do vision and haptics share common representations? Implicit and explicit memory within and between modalities. J Exp Psychol Learn. 1997b;23:153–163. doi: 10.1037//0278-7393.23.1.153.

- Feinberg TE, Rothi LJ, Heilman KM. Multimodal agnosia after unilateral left hemisphere lesion. Neurology. 1986;36:864–867. doi: 10.1212/wnl.36.6.864.

- Gibson G, Stilla R, Sathian K. Segregated visuo-haptic processing of texture and location. Abstract, Human Brain Mapping. 2008. doi: 10.1002/hbm.20456.

- Grefkes C, Fink G. The functional organization of the intraparietal sulcus in humans and monkeys. J Anat. 2005;207:3–17. doi: 10.1111/j.1469-7580.2005.00426.x.

- Grefkes C, Geyer S, Schormann T, Roland P, Zilles K. Human somatosensory area 2: observer-independent cytoarchitectonic mapping, interindividual variability, and population map. NeuroImage. 2001;14:617–631. doi: 10.1006/nimg.2001.0858.

- Grefkes C, Ritzl A, Zilles K, Fink GR. Human medial intraparietal cortex subserves visuomotor coordinate transformation. NeuroImage. 2004;23:1494–1506. doi: 10.1016/j.neuroimage.2004.08.031.

- Grefkes C, Weiss PH, Zilles K, Fink GR. Crossmodal processing of object features in human anterior intraparietal cortex: an fMRI study implies equivalencies between humans and monkeys. Neuron. 2002;35:173–184. doi: 10.1016/s0896-6273(02)00741-9.

- Grill-Spector K, Henson R, Martin A. Repetition and the brain: neural models of stimulus-specific effects. Trends Cogn Sci. 2006;10:14–23. doi: 10.1016/j.tics.2005.11.006.

- Hadjikhani N, Roland PE. Cross-modal transfer of information between the tactile and the visual representations in the human brain: a positron emission tomographic study. J Neurosci. 1998;18:1072–1084. doi: 10.1523/JNEUROSCI.18-03-01072.1998.

- Hollins M. Haptic mental rotation: more consistent in blind subjects? J Visual Impair Blin. 1986;80:950–952.

- Iwamura Y. Hierarchical somatosensory processing. Curr Opin Neurobiol. 1998;8:522–528. doi: 10.1016/s0959-4388(98)80041-x.

- James TW, Humphrey GK, Gati JS, Servos P, Menon RS, Goodale MA. Haptic study of three-dimensional objects activates extrastriate visual areas. Neuropsychologia. 2002;40:1706–1714. doi: 10.1016/s0028-3932(02)00017-9.

- James TW, James KH, Humphrey GK, Goodale MA. Do visual and tactile object representations share the same neural substrate? In: Heller MA, Ballesteros S, editors. Touch and Blindness: Psychology and Neuroscience. Lawrence Erlbaum Associates; Mahwah, NJ: 2006. pp. 139–155.

- Kosslyn SM. Scanning visual images: some structural implications. Percept Psychophys. 1973;14:90–94.

- Kosslyn SM, Ball TM, Reiser BJ. Visual images preserve metric spatial information: evidence from studies of image scanning. J Exp Psychol Human. 1978;4:47–60. doi: 10.1037//0096-1523.4.1.47.

- Kourtzi Z, Kanwisher N. Cortical regions involved in perceiving object shape. J Neurosci. 2000;20:3310–3318. doi: 10.1523/JNEUROSCI.20-09-03310.2000.

- Kourtzi Z, Kanwisher N. Representation of perceived object shape by the human lateral occipital complex. Science. 2001;293:1506–1509. doi: 10.1126/science.1061133.

- Kourtzi Z, Tolias AS, Altmann CF, Augath M, Logothetis NK. Integration of local features into global shapes: Monkey and human fMRI studies. Neuron. 2003;37:333–346. doi: 10.1016/s0896-6273(02)01174-1.

- Kozhevnikov M, Hegarty M, Mayer RE. Revising the visualiser-verbaliser dimension: evidence for two types of visualisers. Cognition Instruct. 2002;20:47–77.

- Kozhevnikov M, Kosslyn SM, Shephard J. Spatial versus object visualisers: a new characterisation of cognitive style. Mem Cognition. 2005;33:710–726. doi: 10.3758/bf03195337.

- Krekelberg B, Boynton GM, van Wezel RJA. Adaptation: from single cells to BOLD signals. Trends Neurosci. 2006;29:250–256. doi: 10.1016/j.tins.2006.02.008.

- Lacey S, Campbell C. Mental representation in visual/haptic crossmodal memory: evidence from interference effects. Q J Exp Psychol. 2006;59:361–376. doi: 10.1080/17470210500173232.

- Lacey S, Campbell C, Sathian K. Vision and touch: Multiple or multisensory representations of objects? Perception. 2007a;36:1513–1521. doi: 10.1068/p5850.

- Lacey S, Peters A, Sathian K. Cross-modal object representation is viewpoint-independent. PLoS ONE. 2007b;2:e890. doi: 10.1371/journal.pone.0000890.

- Large M-E, Aldcroft A, Vilis T. Task-related laterality effects in the lateral occipital complex. Brain Res. 2007;1128:130–138. doi: 10.1016/j.brainres.2006.10.023.

- Lucan JN, Foxe JJ, Gomez-Ramirez M, Sathian K, Molholm S. The spatio-temporal dynamics of somatosensory shape discrimination. Abstract, International Multisensory Research Forum. 2008.

- Malach R, Reppas JB, Benson RR, Kwong KK, Jiang H, Kennedy WA, Ledden PJ, Brady TJ, Rosen BR, Tootell RBH. Object-related activity revealed by functional magnetic resonance imaging in human occipital cortex. P Natl Acad Sci USA. 1995;92:8135–8139. doi: 10.1073/pnas.92.18.8135.

- Marmor GS, Zaback LA. Mental rotation by the blind: does mental rotation depend on visual imagery? J Exp Psychol Human. 1976;2:515–521. doi: 10.1037//0096-1523.2.4.515.

- Motes MA, Malach R, Kozhevnikov M. Object-processing neural efficiency differentiates object from spatial visualizers. NeuroReport. 2008;19:1727–1731. doi: 10.1097/WNR.0b013e328317f3e2.

- Newman SD, Klatzky RL, Lederman SJ, Just MA. Imagining material versus geometric properties of objects: an fMRI study. Cognit Brain Res. 2005;23:235–246. doi: 10.1016/j.cogbrainres.2004.10.020.

- Pascual-Leone A, Amedi A, Fregni F, Merabet LB. The plastic human brain. Annu Rev Neurosci. 2005;28:377–401. doi: 10.1146/annurev.neuro.27.070203.144216.

- Pascual-Leone A, Hamilton RH. The metamodal organization of the brain. Prog Brain Res. 2001;134:427–445. doi: 10.1016/s0079-6123(01)34028-1.

- Peltier S, Stilla R, Mariola E, LaConte S, Hu X, Sathian K. Activity and effective connectivity of parietal and occipital cortical regions during haptic shape perception. Neuropsychologia. 2007;45:476–483. doi: 10.1016/j.neuropsychologia.2006.03.003.

- Pietrini P, Furey ML, Ricciardi E, Gobbini MI, Wu W-HC, Cohen L, Guazzelli M, Haxby JV. Beyond sensory images: Object-based representation in the human ventral pathway. P Natl Acad Sci USA. 2004;101:5658–5663. doi: 10.1073/pnas.0400707101.

- Prather SC, Votaw JR, Sathian K. Task-specific recruitment of dorsal and ventral visual areas during tactile perception. Neuropsychologia. 2004;42:1079–1087. doi: 10.1016/j.neuropsychologia.2003.12.013.

- Reales JM, Ballesteros S. Implicit and explicit memory for visual and haptic objects: cross-modal priming depends on structural descriptions. J Exp Psychol Learn. 1999;25:644–663.

- Reed CL, Shoham S, Halgren E. Neural substrates of tactile object recognition: an fMRI study. Hum Brain Mapp. 2004;21:236–246. doi: 10.1002/hbm.10162.

- Renier L, Collignon O, Tranduy D, Poirier C, Vanlierde A, Veraart C, De Volder A. Visual cortex activation in early blind and sighted subjects using an auditory visual substitution device to perceive depth. Neuroimage. 2004;22:S1.

- Renier L, Collignon O, Poirier C, Tranduy D, Vanlierde A, Bol A, Veraart C, De Volder AG. Cross modal activation of visual cortex during depth perception using auditory substitution of vision. Neuroimage. 2005;26:573–580. doi: 10.1016/j.neuroimage.2005.01.047.

- Röder B, Rösler F. Visual input does not facilitate the scanning of spatial images. J Mental Imagery. 1998;22:165–181.

- Saito DN, Okada T, Morita Y, Yonekura Y, Sadato N. Tactile-visual cross-modal shape matching: a functional MRI study. Cognit Brain Res. 2003;17:14–25. doi: 10.1016/s0926-6410(03)00076-4.

- Sathian K. Modality, quo vadis?: Comment. Behav Brain Sci. 2004;27:413–414.

- Sathian K. Visual cortical activity during tactile perception in the sighted and the visually deprived. Dev Psychobiol. 2005;46:279–286. doi: 10.1002/dev.20056.

- Sathian K, Lacey S. Journeying beyond classical somatosensory cortex. Can J Exp Psychol. 2007;61:254–264. doi: 10.1037/cjep2007026.

- Sathian K, Zangaladze A, Hoffman JM, Grafton ST. Feeling with the mind's eye. NeuroReport. 1997;8:3877–3881. doi: 10.1097/00001756-199712220-00008.

- Shepard RN, Metzler J. Mental rotation of three-dimensional objects. Science. 1971;171:701–703. doi: 10.1126/science.171.3972.701.

- Shikata E, McNamara A, Sprenger A, Hamzei F, Glauche V, Büchel C, Binkofski F. Localization of human intraparietal areas AIP, CIP, and LIP using surface orientation and saccadic eye movement tasks. Hum Brain Mapp. 2008;29:411–421. doi: 10.1002/hbm.20396.

- Stilla R, Hanna R, Hu X, Mariola E, Deshpande G, Sathian K. Neural processing underlying tactile microspatial discrimination in the blind: a functional magnetic resonance imaging study. J Vis. 2008a;8:1–19. doi: 10.1167/8.10.13.

- Stilla R, Lacey S, Deshpande G, Hu X, Sathian K. Effective connectivity during haptic shape perception is modulated by object familiarity. Abstract, Society for Neuroscience. 2008b. doi: 10.1016/j.neuroimage.2009.08.052.

- Stilla R, Sathian K. Selective visuo-haptic processing of shape and texture. Hum Brain Mapp. 2008;29:1123–1138. doi: 10.1002/hbm.20456.

- Stoesz M, Zhang M, Weisser VD, Prather SC, Mao H, Sathian K. Neural networks active during tactile form perception: common and differential activity during macrospatial and microspatial tasks. Int J Psychophysiol. 2003;50:41–49. doi: 10.1016/s0167-8760(03)00123-5.

- Swisher JD, Halko MA, Merabet LB, McMains SA, Somers DC. Visual topography of human intraparietal sulcus. J Neurosci. 2007;27:5326–5337. doi: 10.1523/JNEUROSCI.0991-07.2007.

- Tal N, Amedi A. Using fMR-adaptation to study visual-tactile integration of objects in humans. Abstract, Israel Society for Neuroscience. 2008.

- Zhang M, Weisser VD, Stilla R, Prather SC, Sathian K. Multisensory cortical processing of object shape and its relation to mental imagery. Cogn Affect Behav Ne. 2004;4:251–259. doi: 10.3758/cabn.4.2.251.

- Zhou Y-D, Fuster JM. Neuronal activity of somatosensory cortex in a cross-modal (visuo-haptic) memory task. Exp Brain Res. 1997;116:551–555. doi: 10.1007/pl00005783.