Abstract

A major goal in perceptual neuroscience is to understand how signals from different sensory modalities are combined to produce stable and coherent representations. We previously investigated interactions between audition and touch, motivated by the fact that both modalities are sensitive to environmental oscillations. In our earlier study, we characterized the effect of auditory distractors on tactile frequency and intensity perception. Here, we describe the converse experiments examining the effect of tactile distractors on auditory processing. Because the two studies employ the same psychophysical paradigm, we combined their results for a comprehensive view of how auditory and tactile signals interact and how these interactions depend on the perceptual task. Together, our results show that temporal frequency representations are perceptually linked regardless of the attended modality. In contrast, audio-tactile loudness interactions depend on the attended modality: Tactile distractors influence judgments of auditory intensity, but judgments of tactile intensity are impervious to auditory distraction. Lastly, we show that audio-tactile loudness interactions depend critically on stimulus timing, while pitch interactions do not. These results reveal that auditory and tactile inputs are combined differently depending on the perceptual task. That distinct rules govern the integration of auditory and tactile signals in pitch and loudness perception implies that the two are mediated by separate neural mechanisms. These findings underscore the complexity and specificity of multisensory interactions.

Keywords: psychophysics, vibration, tone, multisensory

Introduction

We are constantly bombarded by myriad sensory signals and are tasked with sorting these for useful information about our environment. Signals conveyed by our sensory systems interact in time and space, affecting not only when and where we perceive meaningful events, but even the identity and content of these occurrences. Familiar examples of such perceptual interactions include the ventriloquism illusion (in which viewing an object biases the perceived location of a separate sound source; Thomas, 1941) and the McGurk effect (in which seeing lip movements biases the perception of simultaneously heard speech sounds; McGurk and MacDonald, 1976). A major goal in perceptual neuroscience is to understand how signals from different sensory modalities are combined to produce stable and coherent perceptual experiences.

Multisensory interactions are complex and varied, and the establishment of multisensory neural mechanisms can depend on many factors. Because we rarely perceive the world through a single modality, we develop representations that are linked across our senses; the strengths of these links reflect the history of our multisensory experiences. For instance, objects we palpate must be in close proximity to our bodies. As a result, we typically can hear sounds generated during our haptic interactions with the objects. Such correlated sensory experiences, accrued over a lifetime of co-stimulation, may pattern the neural mechanisms underlying audio-tactile interactions in simple event detection (Gescheider and Niblette, 1967; Ro et al., 2009; Tajadura-Jimenez et al., 2009; Wilson et al., 2009; Occelli et al., 2010; Wilson et al., 2010b) and event counting (Hotting and Roder, 2004; Bresciani et al., 2005; Bresciani and Ernst, 2007).

Shared neural representations can also be highly specific. Because we can simultaneously experience environmental oscillations through audition and touch (transduced by receptors in the basilar membrane and in the skin, respectively), we previously reasoned that the two sensory systems might interact in the spectral analysis of vibrations. We tested this and found that auditory tones and noise stimuli indeed systematically influence tactile frequency perception (Yau et al., 2009b), although tones do not affect tactile intensity judgments. These linked audio-tactile frequency representations may underlie our capacity to perceive textures (Lederman, 1979; Jousmaki and Hari, 1998; Guest et al., 2002; Yau et al., 2009a), to appreciate music (Musacchia and Schroeder, 2009; Soto-Faraco and Deco, 2009), and even to comprehend speech (Gick and Derrick, 2009).

In the current study we tested the hypothesis that audio-tactile perceptual interactions are reciprocal in nature by examining how tactile distractors affect auditory tone analysis. We measured participants’ ability to discriminate the frequency or intensity of auditory pure tone stimuli in the presence or absence of simultaneous tactile vibrations. We further characterized the sensitivity of these effects to the relative timing between the auditory tones and tactile distractors. The results reported here, combined with our previous findings (Yau et al., 2009b), provide a comprehensive view of audio-tactile interactions in pitch and loudness perception.

Materials and Methods

Participants

All testing procedures were performed in compliance with the policies and procedures of the Institutional Review Board for Human Use of the University of Chicago. All subjects were paid for their participation. Subjects reported normal tactile and auditory sensitivity and no history of neurological disease. Not all subjects participated in every experiment. Thirteen subjects (five males and eight females; mean age = 20 ± 1.5 years) participated in the auditory frequency discrimination experiment with the 200-Hz standard. Eleven subjects (four males and seven females; mean age = 20.5 ± 2.6 years) participated in the auditory frequency discrimination experiment with the 400-Hz standard. Twenty-four subjects (12 males and 12 females; mean age = 19.9 ± 2.0 years) participated in the auditory intensity discrimination experiment. Ten subjects (two males and eight females; mean age = 19.8 ± 0.4 years) participated in the frequency discrimination experiment with the timing (synchrony) manipulation. Ten subjects (five males and five females; mean age = 20.7 ± 2.5 years) participated in the intensity discrimination experiment with the timing manipulation.

Stimuli

Auditory stimuli

Auditory stimuli were generated digitally and converted to analog signals using a digital-to-analog card (PCI-6229, National Instruments, Austin, TX, USA; sampling rate = 20 kHz). Stimuli were delivered binaurally via noise-isolating in-ear earphones (ER6i, Etymotic Research, Elk Grove Village, IL, USA), which allowed participants to also wear noise-attenuating earmuffs (847NST, Bilsom, Winchester, VA, USA).

Tactile stimuli

The tactile distractors consisted of sinusoids that were equated in perceived intensity across frequencies using a two-alternative forced-choice (2AFC) tracking procedure (Yau et al., 2009b). In a pilot experiment, subjects matched the intensities of stimuli whose amplitudes were initially set by the experimenter; the average matched intensities were then used for all subjects in the main experiments. All distractors (100, 200, 300, 400, and 600 Hz) were suprathreshold and were presented to each participant's left index finger at subjectively matched amplitudes of 6.8, 3.6, 3.2, 1.8, and 2.1 μm, respectively. That the amplitude of the 400-Hz stimulus was lower than that of the 300-Hz stimulus is somewhat surprising given that thresholds of Pacinian afferents (the mechanoreceptor population sensitive to vibration) are higher at 400 Hz than at 300 Hz (Muniak et al., 2007). However, the slope of the rate-intensity function is steeper at 400 Hz than at 300 Hz (Muniak et al., 2007), so the overall Pacinian response may be greater at 400 Hz than at 300 Hz at suprathreshold intensities. Tactile stimuli delivered to the finger at these amplitudes are unlikely to be detected via bone conduction (Bekesy, 1939; Dirks et al., 1976). Distractors were delivered along the axis perpendicular to the skin surface by a steel-tipped plastic stylus mounted on a Mini-shaker motor (Type 4810, Brüel & Kjær, Skodsborgvej, Nærum, Denmark). The probe had a flat, circular contact surface (8 mm in diameter), and its tip was indented 1 mm into the skin to ensure contact throughout the stimulus presentation. The motor was equipped with an accelerometer (Type 8702B50M1, Kistler Instrument Corporation, Amherst, NY, USA) with a dynamic range of ±50 g. The accelerometer output was amplified and conditioned using a piezotron coupler (Type 5134A, Kistler Instrument Corporation, Amherst, NY, USA), then digitized (PCI-6229, National Instruments, Austin, TX, USA; sampling rate = 20 kHz) and read into a computer.

We took great care to ensure that the tactile distractors were inaudible, so that any observed audio-tactile interactions truly reflected multimodal interactions. Subjects wore noise-isolating in-ear earphones and noise-attenuating earmuffs. In addition, the tactile stimulator was housed in a custom-built noise attenuation chamber to further attenuate sounds emanating from the stimulator (for a complete description, see Yau et al., 2009b).

Auditory frequency discrimination

Auditory frequency discrimination with a 200-Hz standard

Participants sat facing the tactile stimulator with their left arm and hand resting comfortably in a half-cast and hand-mold. The restraints were mounted on a height-adjustable vertical stage, which allowed the stimulator to be reliably repositioned for each participant. Once the participant was situated, the stimulator was gently lowered onto the distal pad of the participant's index finger and the experiment began. Participants were tested using a 2AFC design (Figure 1A). On each trial, a pair of auditory tones, equated in perceived intensity, was presented through the earphones, and the participant judged which of the two tones was higher in frequency. The tones were each presented for 1 s and were separated by a 1-s inter-stimulus interval. One interval always contained a 200-Hz tone (standard stimulus); the frequency of the tone presented during the other interval (comparison stimulus) ranged from 195 to 205 Hz. The frequency of the comparison stimulus and the interval in which it was presented were randomized across trials. On most trials, a tactile distractor was presented at the same time as the auditory comparison stimulus. Participants were instructed to ignore the tactile distractors. On the remaining trials, no distractor was presented, allowing us to establish a baseline against which to compare performance in the presence of the tactile distractors. The frequency of the tactile distractor was 100, 200, 300, 400, or 600 Hz. Twenty behavioral observations were obtained for every combination of auditory comparison stimulus and tactile distractor over 10 experimental runs distributed across two to three sessions. Participants were allowed time to rest between trial blocks. No feedback was provided.

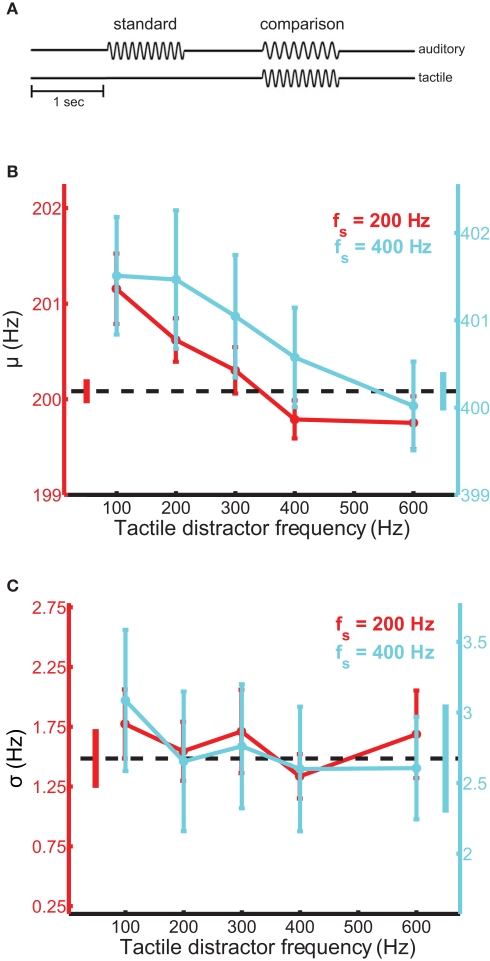

Figure 1.

Auditory frequency discrimination in the presence of tactile distractors. (A) Experimental design. Tactile distractors were delivered in the interval containing the comparison stimulus, which was randomized across trials. (B) Mean bias estimates (PSE) averaged across participants in the frequency discrimination experiments with the 200-Hz (red trace) and 400-Hz (cyan trace) standards, as a function of distractor frequency. The black dashed line and untethered bars correspond to baseline and SEM, respectively. Error bars indicate SEM. Bias estimates were significantly modulated by distractor frequency. (C) Mean sensitivity estimates averaged across participants in the frequency discrimination experiments. Conventions as in (B). Sensitivity did not depend on distractor frequency.

Equating auditory stimulus intensity

Two aspects of stimulus design ensured that participants did not rely on intensity information to perform the frequency discrimination task. First, in pilot experiments, we equated the perceived intensity of auditory stimuli at different frequencies using a 2AFC tracking procedure (the intensities of the tactile stimuli were determined using a similar procedure). On each trial, participants were presented sequentially with two 1-s stimuli separated by a 1-s inter-stimulus interval. One stimulus (the standard) was always a 200-Hz, 60.4 dB SPL (suprathreshold) tone; the other (the comparison) was a tone at one of the comparison frequencies (195, 197, 198, 199, 201, 202, 203, and 205 Hz) tested in the frequency discrimination experiments. Participants reported which stimulus was more intense. If the participant judged the standard as more intense, the amplitude of the comparison stimulus was increased on the following trial; conversely, if the participant judged the comparison as more intense, the comparison amplitude was reduced on the following trial. The session ended once the direction of change in comparison amplitude had reversed three times. The geometric mean of the comparison amplitudes on the last 10 trials of the session was then computed. Three such measurements were recorded and averaged; the resulting mean was the amplitude at each comparison frequency that was perceived to be as intense as a 200-Hz, 60.4 dB SPL tone. Second, to further ensure that participants judged frequency rather than intensity in the frequency discrimination experiments, the stimulus amplitudes were randomly jittered on a trial-by-trial basis (the maximum jitter on any given trial was 25% of the subjectively matched amplitude).
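The intensity-matching procedure described above is a 1-up/1-down adaptive staircase. The following is a minimal Python sketch of that logic, not the software used in the study: the multiplicative step rule and its size (`step_factor`), the starting amplitude, and the simulated observer are hypothetical because they are not reported here, and the ±25% amplitude jitter is assumed to be drawn from a uniform distribution.

```python
import math
import random


def run_matching_staircase(comparison_judged_louder, start_amp,
                           step_factor=1.1, n_reversals=3, n_average=10,
                           max_trials=500):
    """1-up/1-down intensity-matching staircase (illustrative sketch).

    comparison_judged_louder(amp) -> bool gives the observer's response on a
    trial whose comparison has amplitude `amp`. The multiplicative step rule
    and step_factor are assumptions, not values from the study.
    Returns the geometric mean of the comparison amplitudes on the last
    `n_average` trials (or on all trials, if fewer were run).
    """
    amp = start_amp
    presented = []
    previous_direction = 0  # +1 = amplitude went up, -1 = amplitude went down
    reversals = 0
    for _ in range(max_trials):
        presented.append(amp)
        # Comparison judged louder -> lower it; standard judged louder -> raise it.
        direction = -1 if comparison_judged_louder(amp) else +1
        if previous_direction and direction != previous_direction:
            reversals += 1
            if reversals >= n_reversals:
                break  # session ends after the third reversal
        previous_direction = direction
        amp = amp * step_factor if direction > 0 else amp / step_factor
    tail = presented[-n_average:]
    return math.exp(sum(math.log(a) for a in tail) / len(tail))


def jitter_amplitude(matched_amp, max_fraction=0.25, rng=random):
    """Scale an amplitude by a random factor in [1 - max_fraction, 1 + max_fraction].

    The uniform distribution is an assumption; the text gives only the maximum.
    """
    return matched_amp * (1.0 + rng.uniform(-max_fraction, max_fraction))


# Hypothetical deterministic observer: the comparison sounds louder above amplitude 1.0.
matched = run_matching_staircase(lambda amp: amp > 1.0, start_amp=0.95)
jittered = jitter_amplitude(matched)
```

Averaging the geometric means of three such sessions would give the matched amplitude for one comparison frequency.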

Auditory frequency discrimination with a 400-Hz standard

The procedure was identical to that used in the auditory frequency discrimination experiment with the 200-Hz standard, except that the frequency of the standard tone was 400 Hz and the frequencies of the comparison stimuli were 390, 394, 396, 398, 402, 404, 406, and 410 Hz. The amplitudes of the comparison tones were determined by equating the perceived intensity of the comparison stimuli to that of the 400-Hz, 41.72 dB SPL standard.

Auditory intensity discrimination

In this experiment, we wished to determine whether tactile distractors influence auditory intensity perception. In a 2AFC design, participants judged which of two sequentially presented 200-Hz auditory tones was more intense (Figure 2A). The standard amplitude was 67.2 dB SPL and the comparison amplitudes ranged from 64.8 to 69.5 dB SPL; these levels were chosen because they are clearly audible but not uncomfortably loud, and because they overlapped those used in our previous study (Yau et al., 2009b). On most trials, a tactile distractor (200 or 600 Hz) was presented at the same time as the comparison stimulus. Each distractor was presented at four amplitude levels, and at each level the 200- and 600-Hz distractors were equated in perceived intensity. Amplitudes ranged from 0.9 to 3.6 μm for the 200-Hz distractor and from 0.5 to 2.1 μm for the 600-Hz distractor.
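Because the auditory stimuli were synthesized digitally, a level difference in dB corresponds to a scale factor of 10^(ΔL/20) on the waveform amplitude. The sketch below shows this conversion under the assumption of a linear playback chain calibrated at the standard level (the calibration procedure is not described here); the function names are hypothetical. By this conversion, the 64.8 to 69.5 dB SPL comparison range around the 67.2-dB standard corresponds to amplitude factors of roughly 0.76 to 1.30.

```python
import math

P_REF_PA = 20e-6  # standard reference pressure for dB SPL (20 micropascals)


def spl_to_pascals(level_db):
    """RMS sound pressure (Pa) corresponding to a level in dB SPL."""
    return P_REF_PA * 10 ** (level_db / 20)


def amplitude_scale(level_db, reference_db):
    """Factor that scales a waveform calibrated at reference_db to level_db."""
    return 10 ** ((level_db - reference_db) / 20)


standard_db = 67.2
for comparison_db in (64.8, 69.5):
    print(comparison_db, round(amplitude_scale(comparison_db, standard_db), 3))
```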

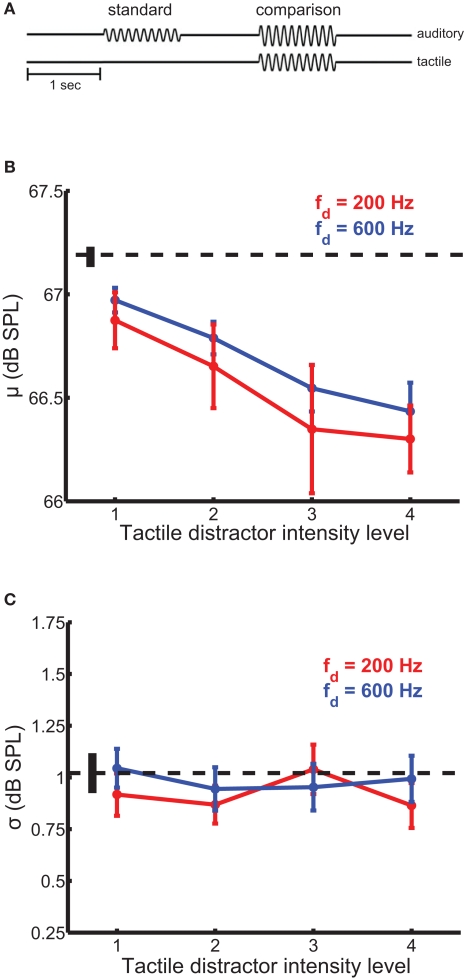

Figure 2.

Auditory intensity discrimination in the presence of tactile distractors. Conventions as in Figure 1. (A) Experimental design. (B) Mean bias estimates (PSE) averaged across participants in the intensity discrimination experiments, as a function of distractor intensity. Bias estimates scaled with distractor intensity. The 200-Hz (red trace) and 600-Hz (blue trace) distractors biased intensity judgments of 200-Hz auditory tones comparably. (C) Mean sensitivity estimates averaged across participants in the intensity discrimination experiments. Tactile distractors did not significantly affect sensitivity estimates.

Discrimination experiments with timing manipulation

In these experiments, we wished to determine the extent to which synchronous presentation of tactile and auditory stimuli was necessary for the former to affect the perception of the latter. The sensitivity of multisensory processes to the relative timing of their component signals can reveal the underlying mechanisms of cross-modal signal integration (Bensmaia et al., 2006; Wilson et al., 2009; Yau et al., 2009b). Temporal coincidence is a primary cue used by the nervous system to determine whether sensory signals should be combined (Burr et al., 2009). Sensory events that occur simultaneously or in close temporal proximity likely emanate from a common source, while stimuli separated by longer intervals likely represent distinct events (Stein and Meredith, 1993). Multisensory interplay may result from co-stimulation in two modalities in the absence of any particular relation between the stimuli, relying on a non-specific mechanism such as rapid alerting or arousal (Driver and Noesselt, 2008). Multisensory interactions mediated by the convergence of sensory signals onto common neuronal populations may be specific to stimulus parameters like temporal frequency (Yau et al., 2009b) and (with respect to time) may only depend on the degree of temporal overlap between the signals (Stein and Meredith, 1993). In timing experiments requiring subjects to discriminate auditory frequency, three frequencies of tactile distractors were tested (100, 200, and 600 Hz). Timing experiments requiring intensity judgments employed two distractor frequencies (200 and 600 Hz). In all timing experiments, distractor amplitudes were identical to those used in the main frequency discrimination experiments. 
The timing experiments (Figures 3A,B) were similar to the main frequency and intensity discrimination experiments except that, on a subset of trials, the tactile distractors began 250 ms before the onset of the auditory stimuli and ended 250 ms after their offset (total distractor duration: 1500 ms). Critically, the tones and distractors still overlapped for the full 1000-ms duration of the tone.
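The two timing conditions can be made concrete by constructing the sample-level gating for one stimulus interval at the 20-kHz sampling rate given in the Methods. The sketch below is illustrative only: it assumes unit-amplitude sinusoids with no onset/offset ramps (neither the drive calibration nor any ramps are specified here), and the helper names are hypothetical. It confirms that the asynchronous distractor lasts 1500 ms while its overlap with the tone remains 1000 ms.

```python
import numpy as np

FS = 20_000  # D/A sampling rate reported in the Methods (Hz)


def interval_waveforms(tone_hz, distractor_hz, lead_ms=0, tone_ms=1000, fs=FS):
    """Unit-amplitude tone and distractor drive signals for one stimulus interval.

    lead_ms = 0   -> synchronous condition (both last tone_ms).
    lead_ms = 250 -> distractor starts 250 ms before and ends 250 ms after the tone.
    Sample counts are computed with integers to avoid rounding at the edges.
    """
    n_lead = fs * lead_ms // 1000
    n_tone = fs * tone_ms // 1000
    n_total = n_tone + 2 * n_lead
    t = np.arange(n_total) / fs
    tone_on = np.zeros(n_total, dtype=bool)
    tone_on[n_lead:n_lead + n_tone] = True
    dist_on = np.ones(n_total, dtype=bool)  # distractor spans the whole interval
    tone = np.where(tone_on, np.sin(2 * np.pi * tone_hz * (t - n_lead / fs)), 0.0)
    distractor = np.where(dist_on, np.sin(2 * np.pi * distractor_hz * t), 0.0)
    return tone, distractor, tone_on, dist_on


def overlap_ms(tone_on, dist_on, fs=FS):
    """Duration (ms) during which the tone and the distractor are both on."""
    return 1000 * np.count_nonzero(tone_on & dist_on) / fs


for lead in (0, 250):
    _, _, tone_on, dist_on = interval_waveforms(200, 100, lead_ms=lead)
    print(lead, overlap_ms(tone_on, dist_on), 1000 * dist_on.size / FS)
# 0 1000.0 1000.0
# 250 1000.0 1500.0
```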

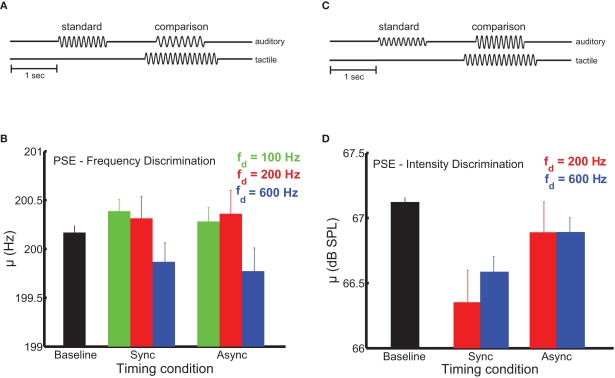

Figure 3.

Dependence of pitch and loudness bias interactions on stimulus timing. (A,B) Experimental design. The onset of distractors was 250 ms before and their offset 250 ms after the onset and offset of the auditory stimuli, respectively. (C) Mean bias estimates (PSE) on the frequency discrimination task (200-Hz standard) as a function of stimulus timing. The strength of the bias effect depended on distractor frequency (the green, red, and blue bars indicate the 100-, 200-, and 600-Hz distractors, respectively). The black bar shows the PSE in the baseline condition. Error bars indicate SEM. The frequency bias effects were comparable across the synchronous and asynchronous timing conditions. (D) Mean bias estimates on the intensity discrimination task as a function of stimulus timing. Conventions as in (C). Intensity bias effects depended on synchronous presentation of auditory stimuli and tactile distractors.

Data analysis

Psychometric functions

To quantify participants’ ability to discriminate auditory frequency we fit the following psychometric function to the data obtained from each participant:

$$p(f_c > f_s) = \frac{1}{1 + \exp\left[-\left(f_c - \mu\right)/\sigma\right]} \qquad (1)$$

where $p(f_c > f_s)$ is the proportion of trials on which a comparison tone of frequency $f_c$ was judged higher in frequency than the standard ($f_s$ = 200 or 400 Hz), and μ and σ are free parameters corresponding to estimates of the participant's bias and sensitivity, respectively. The bias is the point of subjective equality (PSE), and the sensitivity parameter is the change in frequency, relative to the PSE, that the participant detected on 73% of trials. The resulting sigmoid ranges from 0 to 1. Participants’ ability to discriminate auditory intensity was quantified using an analogous psychometric function. These psychometric functions accurately captured discrimination performance in all conditions tested (mean correlation = 0.97, SEM = 0.002).
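Assuming Eq. 1 has the logistic form p = 1/(1 + exp(−(fc − μ)/σ)), which is consistent with σ marking the 73% point (1/(1 + e⁻¹) ≈ 0.73), the function can be fit as a logistic regression with slope 1/σ. The sketch below assumes a binomial maximum-likelihood fit by Newton-Raphson iteration (the fitting criterion is not stated in the text), uses 20 observations per comparison as in the Methods, and applies it to a hypothetical noise-free observer; the function names are illustrative.

```python
import numpy as np


def logistic(x, mu, sigma):
    """Logistic psychometric function: P(comparison judged higher than standard)."""
    return 1.0 / (1.0 + np.exp(-(np.asarray(x, dtype=float) - mu) / sigma))


def fit_psychometric(x, prop_higher, n_trials, n_iter=100, tol=1e-10):
    """Maximum-likelihood fit of (mu, sigma) by Newton-Raphson logistic regression.

    With x centered on x_ref, the linear predictor is b0 + b1 * (x - x_ref),
    so sigma = 1 / b1 and mu = x_ref - b0 / b1. Assumes the responses are not
    perfectly separated (otherwise the maximum-likelihood slope is infinite).
    """
    x = np.asarray(x, dtype=float)
    y = np.asarray(prop_higher, dtype=float)
    n = np.broadcast_to(np.asarray(n_trials, dtype=float), x.shape)
    x_ref = x.mean()  # centering improves numerical conditioning
    X = np.column_stack([np.ones_like(x), x - x_ref])
    beta = np.zeros(2)
    for _ in range(n_iter):
        p = 1.0 / (1.0 + np.exp(-X @ beta))
        grad = X.T @ (n * (y - p))                         # score of binomial log-likelihood
        hess = X.T @ (X * (n * p * (1.0 - p))[:, None])   # Fisher information
        step = np.linalg.solve(hess, grad)
        beta += step
        if np.max(np.abs(step)) < tol:
            break
    b0, b1 = beta
    return x_ref - b0 / b1, 1.0 / b1


comparisons = np.array([195, 197, 198, 199, 201, 202, 203, 205], dtype=float)
# Hypothetical noise-free observer with PSE = 200.5 Hz and sigma = 1.5 Hz.
observed = logistic(comparisons, mu=200.5, sigma=1.5)
mu_hat, sigma_hat = fit_psychometric(comparisons, observed, n_trials=20)
```

With noise-free proportions the fit recovers the generating parameters; with real data, each participant's proportions at the eight comparison frequencies would take the place of `observed`.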

Statistical tests

For the frequency discrimination experiments, we first determined whether the presentation of tactile distractors significantly affected the average estimates of bias and sensitivity. We tested the effect of the distractors using a repeated-measures ANOVA (ANOVARM), with distractor condition, including the baseline condition, as the within-subjects factor. If this test was significant (p < 0.05), we then conducted an ANOVARM to test whether the effect of the distractors was significantly modulated by distractor frequency, with distractor frequency (excluding the baseline condition) as the within-subjects factor. If this ANOVARM revealed a significant main effect of distractor frequency, we performed a two-tailed paired t-test comparing the bias (or sensitivity) estimates derived from the 100- and 600-Hz distractor conditions. For the intensity discrimination experiment, we first conducted a one-way ANOVARM with distractor condition, including the baseline condition, as the within-subjects factor. If this test was significant (p < 0.05), we conducted a two-way ANOVARM to test for effects of distractor frequency, distractor intensity, and their interaction. If this ANOVARM revealed a significant main effect of distractor intensity, we performed a two-tailed paired t-test comparing the bias (or sensitivity) estimates derived from the lowest and highest intensity distractor conditions. For the timing manipulation experiments, we first conducted a one-way ANOVARM with distractor condition, including the baseline condition, as the within-subjects factor. If this test was significant (p < 0.05), we tested for specific main effects and interactions using a two-way ANOVARM.
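The gated analysis above rests on two standard statistics. The sketch below computes the F statistic of a textbook one-way repeated-measures ANOVA and a paired t statistic with NumPy; p-values would then be obtained from the F and t distributions (for example, with SciPy). It is illustrative rather than a reproduction of the analysis: the error degrees of freedom it yields, (k − 1)(n − 1), need not match those reported in the Results, which may reflect a different model specification, and the example data are hypothetical.

```python
import numpy as np


def rm_anova_oneway(data):
    """One-way repeated-measures ANOVA.

    data: array of shape (n_subjects, k_conditions), e.g. bias estimates with
    the baseline condition in one column. Returns (F, df_effect, df_error).
    """
    data = np.asarray(data, dtype=float)
    n, k = data.shape
    grand = data.mean()
    ss_cond = n * np.sum((data.mean(axis=0) - grand) ** 2)
    ss_subj = k * np.sum((data.mean(axis=1) - grand) ** 2)
    ss_total = np.sum((data - grand) ** 2)
    ss_error = ss_total - ss_cond - ss_subj  # condition x subject residual
    df_cond, df_error = k - 1, (k - 1) * (n - 1)
    F = (ss_cond / df_cond) / (ss_error / df_error)
    return F, df_cond, df_error


def paired_t(a, b):
    """Paired t statistic and degrees of freedom for two within-subject conditions."""
    d = np.asarray(a, dtype=float) - np.asarray(b, dtype=float)
    n = d.size
    t = d.mean() / (d.std(ddof=1) / np.sqrt(n))
    return t, n - 1


# Hypothetical bias estimates (arbitrary units): 3 subjects x 3 conditions.
pse = np.array([[2.0, 4.0, 6.0],
                [3.0, 4.0, 8.0],
                [1.0, 4.0, 4.0]])
F, df1, df2 = rm_anova_oneway(pse)      # F = 12.0 with (2, 4) degrees of freedom
t, df = paired_t(pse[:, 0], pse[:, 2])  # t = -4 * sqrt(3), about -6.93, with 2 df
```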

Results

Effect of tactile distractors on auditory frequency discrimination

Tactile distractors systematically influenced performance on the auditory frequency discrimination task (Figure 1). In experiments using the 200-Hz standard tone, distractors biased the perceived frequency of simultaneously heard tones [ANOVARM, F(5,71) = 6.8, p < 10⁻⁴; see Figure S1 in Supplementary Material for group psychometric functions for all experiments]. The magnitude of the bias effect depended on distractor frequency [F(4,59) = 7.9, p < 10⁻³] and was larger when the frequency of the distractor was lower than that of the auditory stimuli (Figure 1B, red trace). The average PSE (bias) estimated from the discrimination performance with the 100-Hz distractor was significantly higher than the average PSE obtained with the 600-Hz distractor [t(12) = 3.0, p = 0.01]. We confirmed the audio-tactile pitch interaction by assessing the influence of the same tactile distractors on participants’ ability to discriminate tones in a different frequency range, centered on 400 Hz (cyan trace). Tactile distractors also biased the perceived frequency of these tones [F(5,59) = 3.9, p < 0.01] and the strength of the bias again depended on distractor frequency [F(4,49) = 5.6, p < 0.01]. The average PSE estimated with the 100-Hz distractor again differed significantly from the average value estimated with the 600-Hz distractor [t(10) = 2.7, p = 0.02]. In both frequency discrimination experiments, the perceived frequency of the auditory tones tended to be pulled toward the frequency of the tactile distractors. This bias effect appears to be frequency-specific, as the set of distractors that substantially biased auditory discrimination performance using the 400-Hz standard tone was expanded and shifted in frequency compared to the most effective distractors found using the 200-Hz standard. Critically, in both experiments, we observed greater bias effects when the frequency of the distractor was lower than that of the tones.
This pattern was evident in individual participants’ data (Figure S2 in Supplementary Material) and across the pooled sample. Notably, this pattern mirrors the effects of auditory distractors on tactile frequency discrimination (Yau et al., 2009b). Tactile distractors did not significantly affect estimates of sensitivity in the frequency discrimination experiments (Figure 1C) [F(5,71) = 2.0, p = 0.09 and F(5,59) = 1.1, p = 0.40 for the experiments using the 200- and 400-Hz standards, respectively].

Effect of tactile distractors on auditory intensity discrimination

Tactile distractors influenced performance on the auditory intensity discrimination task (Figure 2). The simultaneous presentation of tactile distractors increased perceived tone loudness (Figure 2B). The magnitude of this bias scaled with distractor intensity [F(3,185) = 12.7, p < 10⁻⁴] but did not depend on distractor frequency [F(1,185) = 1.1, p = 0.30]. The average PSE estimated with the least intense distractor was significantly higher than the average PSE estimated with the most intense distractor [i.e., the more intense distractor increased perceived loudness to a greater extent; t(23) = 7.4, p < 10⁻⁶ and t(23) = 4.8, p < 10⁻⁴ for the 200- and 600-Hz distractors, respectively]. The intensity × frequency interaction on bias estimates was not significant [F(3,182) = 0.17, p = 0.91]. Tactile distractors generally did not affect estimates of perceptual sensitivity in the intensity discrimination experiments (Figure 2C). Although the main effect of distractor frequency on sensitivity estimates reached significance [F(1,185) = 5.3, p = 0.03], neither the main effect of distractor intensity nor the intensity × frequency interaction was significant (p > 0.05).

Effect of distractor timing on pitch and loudness interactions

In the auditory frequency and intensity discrimination experiments, we compared the effects of distractors presented synchronously with the auditory tones to those of distractors that overlapped the tones but began earlier and terminated later. Audio-tactile pitch and loudness interactions differed in their sensitivity to this timing manipulation (Figure 3). As in the main frequency and intensity discrimination experiments, the influence of tactile distractors was limited to estimates of bias (see Figure S3 in Supplementary Material for average sensitivity estimates in the timing manipulation experiments). In the frequency discrimination task (Figure 3C), tactile distractors significantly biased auditory judgments in a frequency-dependent manner [F(2,54) = 5.9, p < 0.01]. Critically, this effect did not depend on synchronous timing between the auditory tones and tactile distractors [F(1,54) = 2.1, p = 0.18], and there was no significant frequency × timing interaction [F(2,52) = 0.05, p = 0.95]. We previously found the auditory influence on tactile frequency perception to be similarly tolerant to stimulus onset (and offset) asynchrony (Yau et al., 2009b). In contrast, audio-tactile loudness interactions were sensitive to stimulus timing (Figure 3D). Although tactile distractors biased auditory intensity judgments when the stimuli coincided, this effect was abolished when the onset and offset timing was disrupted [F(1,37) = 26.7, p < 10⁻³]. As in the main intensity discrimination experiment, the bias effect of tactile distractors did not differ between distractor frequencies [F(1,37) = 0.62, p = 0.45], and the frequency × timing interaction was not significant [F(1,34) = 1.5, p = 0.25].

Discussion

In a series of psychophysical experiments, we assessed the influence of tactile distractors on participants’ ability to discriminate the frequency and intensity of auditory tones. We also determined the dependence of these perceptual interactions on the relative timing between the auditory and tactile signals. Tactile distractors systematically biased auditory frequency perception (Figure 1): Distractors at frequencies lower than that of the auditory tones induced larger bias effects than distractors at higher frequencies. Tactile distractors also biased auditory perception of intensity (Figure 2). The magnitude of this effect scaled with distractor intensity, but did not vary with distractor frequency. We also found that audio-tactile interactions in the frequency and intensity domains differ in their sensitivity to stimulus timing: Breaking the correspondence between onset and offset times of the tones and distractors disrupted loudness interactions but had no effect on pitch interactions (Figure 3).

In all of our frequency discrimination experiments, the influence of distractors was greatest when they were lower in frequency than the test stimuli, regardless of the modality participants attended or ignored. This response pattern is reminiscent of the finding that auditory stimuli more effectively mask (auditory) stimuli at higher frequencies than they do stimuli at lower frequencies (Moore, 2003). Accordingly, auditory filters estimated from masking studies using the notched-noise method are asymmetric at center-frequencies ranging from 100 to 800 Hz (Moore et al., 1990), a result previously ascribed to the biomechanics of the basilar membrane. That this pattern also describes the interplay between audition and touch raises another possibility: The asymmetry potentially reflects the tuning properties of auditory cortical neurons, some of which have been shown to receive both auditory and tactile inputs (Fu et al., 2003). Quite possibly, these cortical ensembles, whose involvement in acoustic frequency analysis is unchallenged, also underlie tactile frequency perception. The finding that perceptual interactions in temporal frequency are insensitive to timing disruptions is consistent with sensory-level convergence. This view is further supported by the fact that auditory and tactile stimuli exhibit frequency-dependent interactions even when one of the inputs alone fails to evoke an explicit pitch percept (Yau et al., 2009b). Indeed, our results support the hypothesis that spectral analysis of auditory and tactile inputs is mediated by a common mechanism: Inseparability of auditory and tactile frequency representations, regardless of the attended modality, implies a supramodal operator for spectral analysis. Supramodal operators may also mediate perception of object shape (Amedi et al., 2001, 2002; Lacey et al., 2009), motion (Blake et al., 2004; Ricciardi et al., 2007), and microgeometric features (Zangaladze et al., 1999; Merabet et al., 2004). 
These studies support a metamodal view of brain organization, in which cortical areas perform particular operations regardless of input modality (Pascual-Leone and Hamilton, 2001).

Even within a shared neural representation of frequency, auditory and tactile inputs may not be conveyed with the same precision. This may explain why tactile distractors did not affect estimates of auditory sensitivity, even though auditory distractors robustly changed tactile sensitivity estimates (Yau et al., 2009b). Changes in the sensitivity parameter (i.e., the slope of the psychometric function) arise when a distractor affects the individual data points of a psychometric function unequally. In the current study, the range of auditory comparison frequencies was very narrow (spanning 10 and 20 Hz for the functions centered on 200 and 400 Hz, respectively). As a result, each tactile distractor affected all of the data points in an individual psychometric function equally, shifting the entire function and thus producing a bias effect with no concomitant change in slope. In contrast, the range of tactile comparison frequencies we tested in our previous report (Yau et al., 2009b) was substantially broader (spanning 200 and 400 Hz for the functions centered on 200 and 400 Hz, respectively). As a result, auditory distractors affected the data points of individual psychometric functions to different degrees, changing the slopes of the functions while also shifting the PSEs, and thus affecting both sensitivity and bias estimates. Thus, whether audio-tactile pitch interactions affect sensitivity (slope) estimates may depend on the precision of the frequency representations. While auditory and tactile frequency representations may be integrated centrally, their precision appears to differ, which may reflect differences at more peripheral stages of auditory and somatosensory processing.
An obvious difference is the fact that the auditory system can exploit a place code at the receptor level (with frequency information conveyed by a receptor's position along the basilar membrane) whereas the somatosensory system cannot.
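
The link between unequal distractor effects and slope changes can be made concrete with a toy observer. The sketch below assumes a cumulative-Gaussian psychometric function, a hypothetical observer noise level (`SIGMA`), and two hypothetical bias functions (`uniform_bias`, `graded_bias`); these are illustrative choices, not the fitting procedure or parameter values used in this study. It uses only the Python standard library (`statistics.NormalDist`, Python 3.8+) and recovers the PSE and slope by a least-squares fit to probit-transformed proportions.

```python
from statistics import NormalDist

_STD_NORMAL = NormalDist()  # mean 0, sd 1


def p_judged_higher(f_comp, f_std, sigma, bias_fn):
    """Probability that a comparison at f_comp (Hz) is judged higher than the
    standard f_std, for a cumulative-Gaussian observer whose percept of the
    comparison is displaced by bias_fn(f_comp) Hz (a hypothetical distractor effect)."""
    perceived = f_comp + bias_fn(f_comp)
    return _STD_NORMAL.cdf((perceived - f_std) / sigma)


def fit_probit(freqs, probs):
    """Fit P = Phi((f - PSE) / sigma) by least squares on probit-transformed
    proportions; returns (PSE, sigma). A larger sigma means a shallower slope."""
    z = [_STD_NORMAL.inv_cdf(p) for p in probs]
    n = len(freqs)
    mean_f = sum(freqs) / n
    mean_z = sum(z) / n
    slope = sum((f - mean_f) * (zi - mean_z) for f, zi in zip(freqs, z)) / sum(
        (f - mean_f) ** 2 for f in freqs
    )
    intercept = mean_z - slope * mean_f
    # z = f / sigma - PSE / sigma, so slope = 1/sigma and intercept = -PSE/sigma.
    return -intercept / slope, 1.0 / slope


F_STD = 400.0                        # standard frequency (Hz)
SIGMA = 50.0                         # hypothetical observer noise (Hz)
COMPARISONS = list(range(300, 501, 25))


def uniform_bias(f):
    # Same displacement for every comparison: the function only shifts.
    return -20.0


def graded_bias(f):
    # Hypothetical: displacement grows with comparison frequency,
    # so the data points are affected unequally.
    return -0.2 * (f - 300.0)


for name, bias in [("uniform", uniform_bias), ("graded", graded_bias)]:
    probs = [p_judged_higher(f, F_STD, SIGMA, bias) for f in COMPARISONS]
    pse, sigma = fit_probit(COMPARISONS, probs)
    print(f"{name}: PSE = {pse:.1f} Hz, sigma = {sigma:.1f} Hz")
```

Running it prints a PSE of 420.0 Hz with sigma 50.0 Hz for the uniform bias and a PSE of 425.0 Hz with sigma 62.5 Hz for the graded bias: both distractors shift the PSE, but only the effect that differs across comparison frequencies also flattens the slope.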

Unlike those in the frequency domain, audio-tactile loudness interactions do not appear to be reciprocal. Although we previously found that tactile intensity judgments were unaffected by auditory tones (Yau et al., 2009b), in the current study tactile distractors caused auditory tones to be perceived as more intense. A number of studies have reported similar tactile enhancement of auditory loudness (Schurmann et al., 2004; Gillmeister and Eimer, 2007; Yarrow et al., 2008; Wilson et al., 2010a). The fact that audio-tactile loudness interactions do not depend on stimulus frequency hints at a non-specific mechanism such as rapid alerting or arousal. This interpretation is further supported by the sensitivity of the enhancement effect to stimulus timing. Interestingly, auditory loudness can be similarly biased by co-occurring visual stimulation (Marks et al., 2003; Odgaard et al., 2004), so intensity representations in the auditory system may be generally susceptible to non-auditory influences. Recent neurophysiological studies of the role of neuronal oscillations in information processing provide a possible mechanistic explanation for both tactile and visual enhancement of auditory processing (Lakatos et al., 2007; Kayser et al., 2008). This evidence suggests that non-auditory sensory input can reset the phase of ongoing neuronal oscillations in auditory cortex. Such phase modulation amplifies auditory inputs that arrive in cortex during high-excitability phases (Lakatos et al., 2007; Kayser et al., 2008). This mechanism may account for the timing dependence of the loudness interactions we observed, as well as for previous neuroimaging results showing supra-additive integration of tactile and auditory stimulation in auditory cortex with similar timing dependencies (Kayser et al., 2005).
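
The phase-reset account can be illustrated with a toy model in which a tactile event resets an ongoing oscillation in auditory cortex and an auditory input is scaled by the excitability at the moment it arrives. The oscillation frequency (`OSC_HZ`), modulation depth (`DEPTH`), and cosine excitability profile are hypothetical choices, not estimates from Lakatos et al. (2007) or Kayser et al. (2008); the sketch only shows how such a mechanism makes enhancement depend on stimulus onset asynchrony (SOA).

```python
import math


def auditory_gain(soa_ms: float, osc_hz: float, depth: float) -> float:
    """Toy multiplicative gain applied to an auditory input arriving soa_ms
    milliseconds after a tactile event.

    Assumes (hypothetically) that the tactile event resets an ongoing
    oscillation so that its high-excitability peak (phase 0) coincides with
    tactile onset; excitability then follows a cosine at osc_hz with
    modulation depth `depth` (between 0 and 1).
    """
    phase = 2.0 * math.pi * osc_hz * soa_ms / 1000.0
    return 1.0 + depth * math.cos(phase)


# Hypothetical parameters, for illustration only.
OSC_HZ = 10.0
DEPTH = 0.3

for soa in (0.0, 25.0, 50.0, 100.0):
    print(f"SOA {soa:5.1f} ms -> gain {auditory_gain(soa, OSC_HZ, DEPTH):.2f}")
```

Because the gain depends only on the relative timing of the two inputs and not on their frequencies, the toy model is timing-sensitive but frequency-independent, the same combination of properties described above for the loudness interactions.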

The magnitude of the intensity bias did not vary with distractor frequency in our experiment, although loudness interactions have been shown to be frequency-dependent under certain conditions. For example, frequency-specific integration patterns are evident when subjects judge the combined loudness of concurrent auditory and tactile stimulation (Wilson et al., 2010a). Similarly, interactions between audition and touch can be frequency-dependent in detection paradigms (Ro et al., 2009; Tajadura-Jimenez et al., 2009; Wilson et al., 2009, 2010b). Although it is not immediately clear why we failed to observe frequency-specific loudness interactions, a critical difference between these studies and ours is that their subjects deployed attention across audition and touch when making perceptual judgments, whereas our participants directed attention to a single modality and ignored the other. This difference potentially highlights the role of attention in giving rise to certain types of multisensory interactions (Senkowski et al., 2008; Talsma et al., 2010). The separate neural mechanisms supporting audio-tactile frequency and intensity processing may be linked by attention signals. For instance, modulation of neuronal oscillations has recently been proposed as a mechanism for stimulus selection and binding through attention (Lakatos et al., 2009; Schroeder et al., 2010). When attention is deployed simultaneously across touch and audition (rather than to one modality, as in our experiments), oscillatory activity in the neural substrates governing audio-tactile frequency and intensity processing may become synchronized and functionally linked, which could give rise to the frequency-specific interactions others have reported.

Multisensory studies often emphasize the specificity of sensory interactions (Stein and Meredith, 1993; Soto-Faraco and Deco, 2009; Sperdin et al., 2010). Factors such as the relative timing, location, and strength of sensory inputs can determine the degree of cross-modal interplay. Our work indicates that audio-tactile pitch interactions are highly specific to the relative frequencies of the sensory inputs, do not depend on specific stimulus onset timing, and are relatively insensitive to changes in stimulus intensity (see Yau et al., 2009b). In contrast, loudness interactions (which are unidirectional) do not appear to depend on stimulus frequency, are sensitive to stimulus timing, and can be modulated by changes in stimulus intensity. Importantly, we have not investigated the spatial sensitivity of these perceptual interactions. Although some studies have argued that audio-tactile interactions are insensitive to spatial register (Murray et al., 2005; Zampini et al., 2007), others suggest that interactions can depend on the body part stimulated and its distance from the auditory stimulus (Tajadura-Jimenez et al., 2009). In our experiments, we always presented tactile stimuli to each participant's left index finger and auditory stimuli binaurally through headphones. The effects we observed could be strengthened or weakened with other spatial arrangements, although it is unclear how the frequency-dependent effects would be affected. Notably, Gillmeister and Eimer (2007) tested the spatial sensitivity of audio-tactile loudness interactions and found that spatial register did not affect intensity ratings.

A large body of neuroimaging and neurophysiological work has identified candidate regions for audio-tactile convergence (Musacchia and Schroeder, 2009; Soto-Faraco and Deco, 2009). We previously speculated that shared auditory and tactile frequency representations might reside in the caudomedial belt area (area CM) of auditory association cortex. We based our speculation on the response characteristics of area CM neurons (Recanzone, 2000a; Kajikawa et al., 2005) and their anatomical connections (Cappe and Barone, 2005; Hackett et al., 2007a; Smiley et al., 2007). However, neurons in area CM may be better suited for spatial localization (Rauschecker and Tian, 2000; Recanzone, 2000b), and frequency tuning in area CM may be too poor to support spectral analysis (Lakatos et al., 2005; Kayser et al., 2009). The caudolateral belt area (area CL) may be a better candidate, given its more refined tonotopic organization (Foxe, 2009). Additionally, audio-tactile interactions are thought to occur throughout primary and association auditory cortices (Foxe et al., 2000, 2002; Schroeder et al., 2001; Kayser et al., 2005; Murray et al., 2005; Caetano and Jousmaki, 2006; Schurmann et al., 2006; Hackett et al., 2007b; Lakatos et al., 2007), secondary somatosensory cortex (Lutkenhoner et al., 2002; Iguchi et al., 2007; Beauchamp and Ro, 2008), posterior parietal cortex (Gobbele et al., 2003), and the thalamus (Ro et al., 2007; Cappe et al., 2009a,b), providing many potential neural substrates for auditory and tactile crosstalk. How and when neural activity in these regions contributes to specific audio-tactile perceptual processes remains to be tested (see Sperdin et al., 2009, 2010 for a discussion of these issues in detection paradigms).

The current study complements our previous effort to characterize the effect of auditory distractors on tactile perception (Yau et al., 2009b). Because the current study employs the same psychophysical paradigm as its predecessor, we combined results from the two studies to establish a comprehensive view of audio-tactile interactions spanning different tasks (discrimination of frequency or intensity) and attentional states (directed to audition or touch). Together, our results show that audition and touch each influence the perception of frequency in the other, suggesting shared processing for spectral analysis. In contrast, audio-tactile interactions along the intensive continuum depend on the attended modality: Tactile distractors influence judgments of auditory intensity, but judgments of tactile intensity are impervious to auditory distraction. The distinction between pitch and loudness interactions is further supported by our finding that audio-tactile perceptual interactions in the intensity domain depend critically on stimulus timing, while those in the frequency domain do not. These results reveal separate integration mechanisms for audio-tactile interactions in frequency and intensity perception: The same sensory signals are combined differently depending on the perceptual task and the deployment of attention.

Conflict of Interest Statement

The authors declare that the research was conducted in the absence of any commercial or financial relationships that could be construed as a potential conflict of interest.

Supplementary Material

Psychometric functions fit to data acquired in the frequency, intensity, and timing experiments. The data show the proportion of trials in which the frequency (intensity) of a given comparison stimulus is judged to be higher (louder) than that of a standard tone. Trace color indicates distractor condition. Error bars indicate SEM. (A) Frequency discrimination experiment with the 200-Hz standard. (B) Frequency discrimination experiment with the 400-Hz standard. (C) Intensity discrimination experiments. (D) Timing experiment requiring frequency judgments. (E) Timing experiment requiring intensity judgments.

Psychometric functions fit to individual subjects in the frequency discrimination experiments. The data show the proportion of trials in which the frequency of a given comparison stimulus is judged to be higher than that of the standard tone. Trace color indicates distractor condition. Distractors at frequencies lower than that of the test stimuli induced larger bias effects in experiments using (A) the 200-Hz standard and (B) the 400-Hz standard.

Sensitivity estimates for the frequency and intensity discrimination tasks with timing manipulations. Error bars indicate SEM. (A) Timing experiments requiring frequency judgments. There were no significant main or interaction effects on sensitivity (p > 0.05). (B) Timing experiments requiring intensity judgments. There were no significant main or interaction effects on sensitivity (p > 0.05).

Acknowledgments

We would like to thank Frank Dammann for his invaluable technical contribution, Jim Craig for stimulating discussion and comments on an earlier version of the manuscript, and Sami Getahun, Emily Lines, and Mark Zielinski for assistance in data collection.

References

- Amedi A., Jacobson G., Hendler T., Malach R., Zohary E. (2002). Convergence of visual and tactile shape processing in the human lateral occipital complex. Cereb. Cortex 12, 1202–1212. doi: 10.1093/cercor/12.11.1202

- Amedi A., Malach R., Hendler T., Peled S., Zohary E. (2001). Visuo-haptic object-related activation in the ventral visual pathway. Nat. Neurosci. 4, 324–330. doi: 10.1038/85201

- Beauchamp M. S., Ro T. (2008). Neural substrates of sound-touch synesthesia after a thalamic lesion. J. Neurosci. 28, 13696–13702. doi: 10.1523/JNEUROSCI.3872-08.2008

- Bekesy G. V. (1939). Uber die Vibrationsempfindung. Akust. Z. 4, 316–334.

- Bensmaia S. J., Killebrew J. H., Craig J. C. (2006). The influence of visual motion on tactile motion perception. J. Neurophysiol. 96, 1625–1637. doi: 10.1152/jn.00192.2006

- Blake R., Sobel K. V., James T. W. (2004). Neural synergy between kinetic vision and touch. Psychol. Sci. 15, 397–402. doi: 10.1111/j.0956-7976.2004.00691.x

- Bresciani J. P., Ernst M. O. (2007). Signal reliability modulates auditory-tactile integration for event counting. Neuroreport 18, 1157–1161. doi: 10.1097/WNR.0b013e3281ace0ca

- Bresciani J. P., Ernst M. O., Drewing K., Bouyer G., Maury V., Kheddar A. (2005). Feeling what you hear: auditory signals can modulate tactile tap perception. Exp. Brain Res. 162, 172–180. doi: 10.1007/s00221-004-2128-2

- Burr D., Silva O., Cicchini G. M., Banks M. S., Morrone M. C. (2009). Temporal mechanisms of multimodal binding. Proc. R. Soc. Lond., B, Biol. Sci. 276, 1761–1769. doi: 10.1098/rspb.2008.1899

- Caetano G., Jousmaki V. (2006). Evidence of vibrotactile input to human auditory cortex. Neuroimage 29, 15–28. doi: 10.1016/j.neuroimage.2005.07.023

- Cappe C., Barone P. (2005). Heteromodal connections supporting multisensory integration at low levels of cortical processing in the monkey. Eur. J. Neurosci. 22, 2886–2902. doi: 10.1111/j.1460-9568.2005.04462.x

- Cappe C., Morel A., Barone P., Rouiller E. M. (2009a). The thalamocortical projection systems in primate: an anatomical support for multisensory and sensorimotor interplay. Cereb. Cortex 19, 2025–2037. doi: 10.1093/cercor/bhn228

- Cappe C., Rouiller E. M., Barone P. (2009b). Multisensory anatomical pathways. Hear. Res. 258, 28–36. doi: 10.1016/j.heares.2009.04.017

- Dirks D. D., Kamm C., Gilman S. (1976). Bone conduction thresholds for normal listeners in force and acceleration units. J. Speech Hear. Res. 19, 181–186.

- Driver J., Noesselt T. (2008). Multisensory interplay reveals crossmodal influences on ‘sensory-specific’ brain regions, neural responses, and judgments. Neuron 57, 11–23. doi: 10.1016/j.neuron.2007.12.013

- Foxe J. J. (2009). Multisensory integration: frequency tuning of audio-tactile integration. Curr. Biol. 19, R373–R375. doi: 10.1016/j.cub.2009.03.029

- Foxe J. J., Morocz I. A., Murray M. M., Higgins B. A., Javitt D. C., Schroeder C. E. (2000). Multisensory auditory-somatosensory interactions in early cortical processing revealed by high-density electrical mapping. Brain Res. Cogn. Brain Res. 10, 77–83. doi: 10.1016/S0926-6410(00)00024-0

- Foxe J. J., Wylie G. R., Martinez A., Schroeder C. E., Javitt D. C., Guilfoyle D., Ritter W., Murray M. M. (2002). Auditory-somatosensory multisensory processing in auditory association cortex: an fMRI study. J. Neurophysiol. 88, 540–543.

- Fu K. M., Johnston T. A., Shah A. S., Arnold L., Smiley J., Hackett T. A., Garraghty P. E., Schroeder C. E. (2003). Auditory cortical neurons respond to somatosensory stimulation. J. Neurosci. 23, 7510–7515.

- Gescheider G. A., Niblette R. K. (1967). Cross-modality masking for touch and hearing. J. Exp. Psychol. 74, 313–320. doi: 10.1037/h0024700

- Gick B., Derrick D. (2009). Aero-tactile integration in speech perception. Nature 462, 502–504. doi: 10.1038/nature08572

- Gillmeister H., Eimer M. (2007). Tactile enhancement of auditory detection and perceived loudness. Brain Res. 1160, 58–68. doi: 10.1016/j.brainres.2007.03.041

- Gobbele R., Schurmann M., Forss N., Juottonen K., Buchner H., Hari R. (2003). Activation of the human posterior parietal and temporoparietal cortices during audiotactile interaction. Neuroimage 20, 503–511. doi: 10.1016/S1053-8119(03)00312-4

- Guest S., Catmur C., Lloyd D., Spence C. (2002). Audiotactile interactions in roughness perception. Exp. Brain Res. 146, 161–171. doi: 10.1007/s00221-002-1164-z

- Hackett T. A., De La Mothe L. A., Ulbert I., Karmos G., Smiley J., Schroeder C. E. (2007a). Multisensory convergence in auditory cortex, II. Thalamocortical connections of the caudal superior temporal plane. J. Comp. Neurol. 502, 924–952. doi: 10.1002/cne.21326

- Hackett T. A., Smiley J. F., Ulbert I., Karmos G., Lakatos P., de la Mothe L. A., Schroeder C. E. (2007b). Sources of somatosensory input to the caudal belt areas of auditory cortex. Perception 36, 1419–1430. doi: 10.1068/p5841

- Hotting K., Roder B. (2004). Hearing cheats touch, but less in congenitally blind than in sighted individuals. Psychol. Sci. 15, 60–64. doi: 10.1111/j.0963-7214.2004.01501010.x

- Iguchi Y., Hoshi Y., Nemoto M., Taira M., Hashimoto I. (2007). Co-activation of the secondary somatosensory and auditory cortices facilitates frequency discrimination of vibrotactile stimuli. Neuroscience 148, 461–472. doi: 10.1016/j.neuroscience.2007.06.004

- Jousmaki V., Hari R. (1998). Parchment-skin illusion: sound-biased touch. Curr. Biol. 8, R190. doi: 10.1016/S0960-9822(98)70120-4

- Kajikawa Y., De La Mothe L. A., Blumell S., Hackett T. A. (2005). A comparison of neuron response properties in area A1 and CM of the marmoset monkey auditory cortex: tones and broadband noise. J. Neurophysiol. 93, 22–34. doi: 10.1152/jn.00248.2004

- Kayser C., Petkov C. I., Augath M., Logothetis N. K. (2005). Integration of touch and sound in auditory cortex. Neuron 48, 373–384. doi: 10.1016/j.neuron.2005.09.018

- Kayser C., Petkov C. I., Logothetis N. K. (2008). Visual modulation of neurons in auditory cortex. Cereb. Cortex 18, 1560–1574. doi: 10.1093/cercor/bhm187

- Kayser C., Petkov C. I., Logothetis N. K. (2009). Multisensory interactions in primate auditory cortex: fMRI and electrophysiology. Hear. Res. 258, 80–88. doi: 10.1016/j.heares.2009.02.011

- Lacey S., Tal N., Amedi A., Sathian K. (2009). A putative model of multisensory object representation. Brain Topogr. 21, 269–274. doi: 10.1007/s10548-009-0087-4

- Lakatos P., Chen C. M., O'Connell M. N., Mills A., Schroeder C. E. (2007). Neuronal oscillations and multisensory interaction in primary auditory cortex. Neuron 53, 279–292. doi: 10.1016/j.neuron.2006.12.011

- Lakatos P., O'Connell M. N., Barczak A., Mills A., Javitt D. C., Schroeder C. E. (2009). The leading sense: supramodal control of neurophysiological context by attention. Neuron 64, 419–430. doi: 10.1016/j.neuron.2009.10.014

- Lakatos P., Pincze Z., Fu K. M., Javitt D. C., Karmos G., Schroeder C. E. (2005). Timing of pure tone and noise-evoked responses in macaque auditory cortex. Neuroreport 16, 933–937. doi: 10.1097/00001756-200506210-00011

- Lederman S. J. (1979). Auditory texture perception. Perception 8, 93–103. doi: 10.1068/p080093

- Lutkenhoner B., Lammertmann C., Simoes C., Hari R. (2002). Magnetoencephalographic correlates of audiotactile interaction. Neuroimage 15, 509–522. doi: 10.1006/nimg.2001.0991

- Marks L. E., Ben Artzi E., Lakatos S. (2003). Cross-modal interactions in auditory and visual discrimination. Int. J. Psychophysiol. 50, 125–145. doi: 10.1016/S0167-8760(03)00129-6

- McGurk H., MacDonald J. (1976). Hearing lips and seeing voices. Nature 264, 746–748. doi: 10.1038/264746a0

- Merabet L., Thut G., Murray B., Andrews J., Hsiao S., Pascual-Leone A. (2004). Feeling by sight or seeing by touch? Neuron 42, 173–179. doi: 10.1016/S0896-6273(04)00147-3

- Moore B. C. J. (2003). An Introduction to the Psychology of Hearing. London: Academic.

- Moore B. C. J., Peters R. W., Glasberg B. R. (1990). Auditory filter shapes at low center frequencies. J. Acoust. Soc. Am. 88, 132–140. doi: 10.1121/1.399960

- Muniak M. A., Ray S., Hsiao S. S., Dammann J. F., Bensmaia S. J. (2007). The neural coding of stimulus intensity: linking the population response of mechanoreceptive afferents with psychophysical behavior. J. Neurosci. 27, 11687–11699. doi: 10.1523/JNEUROSCI.1486-07.2007

- Murray M. M., Molholm S., Michel C. M., Heslenfeld D. J., Ritter W., Javitt D. C., Schroeder C. E., Foxe J. J. (2005). Grabbing your ear: rapid auditory-somatosensory multisensory interactions in low-level sensory cortices are not constrained by stimulus alignment. Cereb. Cortex 15, 963–974. doi: 10.1093/cercor/bhh197

- Musacchia G., Schroeder C. E. (2009). Neuronal mechanisms, response dynamics and perceptual functions of multisensory interactions in auditory cortex. Hear. Res. 258, 72–79. doi: 10.1016/j.heares.2009.06.018

- Occelli V., O'Brien J. H., Spence C., Zampini M. (2010). Assessing the audiotactile Colavita effect in near and rear space. Exp. Brain Res. 203, 517–532. doi: 10.1007/s00221-010-2255-x

- Odgaard E. C., Arieh Y., Marks L. E. (2004). Brighter noise: sensory enhancement of perceived loudness by concurrent visual stimulation. Cogn. Affect. Behav. Neurosci. 4, 127–132. doi: 10.3758/CABN.4.2.127

- Pascual-Leone A., Hamilton R. (2001). The metamodal organization of the brain. Prog. Brain Res. 134, 427–445. doi: 10.1016/S0079-6123(01)34028-1

- Rauschecker J. P., Tian B. (2000). Mechanisms and streams for processing of “what” and “where” in auditory cortex. Proc. Natl. Acad. Sci. U.S.A. 97, 11800–11806. doi: 10.1073/pnas.97.22.11800

- Recanzone G. H. (2000a). Response profiles of auditory cortical neurons to tones and noise in behaving macaque monkeys. Hear. Res. 150, 104–118. doi: 10.1016/S0378-5955(00)00194-5

- Recanzone G. H. (2000b). Spatial processing in the auditory cortex of the macaque monkey. Proc. Natl. Acad. Sci. U.S.A. 97, 11829–11835. doi: 10.1073/pnas.97.22.11829

- Ricciardi E., Vanello N., Sani L., Gentili C., Scilingo E. P., Landini L., Guazzelli M., Bicchi A., Haxby J. V., Pietrini P. (2007). The effect of visual experience on the development of functional architecture in hMT+. Cereb. Cortex 17, 2933–2939. doi: 10.1093/cercor/bhm018

- Ro T., Farne A., Johnson R. M., Wedeen V., Chu Z., Wang Z. J., Hunter J. V., Beauchamp M. S. (2007). Feeling sounds after a thalamic lesion. Ann. Neurol. 62, 433–441. doi: 10.1002/ana.21219

- Ro T., Hsu J., Yasar N. E., Elmore L. C., Beauchamp M. S. (2009). Sound enhances touch perception. Exp. Brain Res. 195, 135–143. doi: 10.1007/s00221-009-1759-8

- Schroeder C. E., Lindsley R. W., Specht C., Marcovici A., Smiley J. F., Javitt D. C. (2001). Somatosensory input to auditory association cortex in the macaque monkey. J. Neurophysiol. 85, 1322–1327.

- Schroeder C. E., Wilson D. A., Radman T., Scharfman H., Lakatos P. (2010). Dynamics of active sensing and perceptual selection. Curr. Opin. Neurobiol. 20, 172–176. doi: 10.1016/j.conb.2010.02.010

- Schurmann M., Caetano G., Hlushchuk Y., Jousmaki V., Hari R. (2006). Touch activates human auditory cortex. Neuroimage 30, 1325–1331. doi: 10.1016/j.neuroimage.2005.11.020

- Schurmann M., Caetano G., Jousmaki V., Hari R. (2004). Hands help hearing: facilitatory audiotactile interaction at low sound-intensity levels. J. Acoust. Soc. Am. 115, 830–832. doi: 10.1121/1.1639909

- Senkowski D., Schneider T. R., Foxe J. J., Engel A. K. (2008). Crossmodal binding through neural coherence: implications for multisensory processing. Trends Neurosci. 31, 401–409. doi: 10.1016/j.tins.2008.05.002

- Smiley J. F., Hackett T. A., Ulbert I., Karmas G., Lakatos P., Javitt D. C., Schroeder C. E. (2007). Multisensory convergence in auditory cortex, I. Cortical connections of the caudal superior temporal plane in macaque monkeys. J. Comp. Neurol. 502, 894–923. doi: 10.1002/cne.21325

- Soto-Faraco S., Deco G. (2009). Multisensory contributions to the perception of vibrotactile events. Behav. Brain Res. 196, 145–154. doi: 10.1016/j.bbr.2008.09.018

- Sperdin H. F., Cappe C., Foxe J. J., Murray M. M. (2009). Early, low-level auditory-somatosensory multisensory interactions impact reaction time speed. Front. Integr. Neurosci. 3, 1–10. doi: 10.3389/neuro.07.002.2009

- Sperdin H. F., Cappe C., Murray M. M. (2010). The behavioral relevance of multisensory neural response interactions. Front. Neurosci. 4, 9–18.

- Stein B., Meredith M. (1993). The Merging of the Senses. Cambridge, MA: MIT Press.

- Tajadura-Jimenez A., Kitagawa N., Valjamae A., Zampini M., Murray M. M., Spence C. (2009). Auditory-somatosensory multisensory interactions are spatially modulated by stimulated body surface and acoustic spectra. Neuropsychologia 47, 195–203. doi: 10.1016/j.neuropsychologia.2008.07.025

- Talsma D., Senkowski D., Soto-Faraco S., Woldorff M. G. (2010). The multifaceted interplay between attention and multisensory integration. Trends Cogn. Sci. 14, 400–410. doi: 10.1016/j.tics.2010.06.008

- Thomas G. (1941). Experimental study of the influence of vision on sound localization. J. Exp. Psychol. 28, 163–175. doi: 10.1037/h0055183

- Wilson E. C., Braida L. D., Reed C. M. (2010a). Perceptual interactions in the loudness of combined auditory and vibrotactile stimuli. J. Acoust. Soc. Am. 127, 3038–3043. doi: 10.1121/1.3377116

- Wilson E. C., Reed C. M., Braida L. D. (2010b). Integration of auditory and vibrotactile stimuli: effects of frequency. J. Acoust. Soc. Am. 127, 3044–3059. doi: 10.1121/1.3365318

- Wilson E. C., Reed C. M., Braida L. D. (2009). Integration of auditory and vibrotactile stimuli: effects of phase and stimulus-onset asynchrony. J. Acoust. Soc. Am. 126, 1960–1974. doi: 10.1121/1.3204305

- Yarrow K., Haggard P., Rothwell J. C. (2008). Vibrotactile–auditory interactions are post-perceptual. Perception 37, 1114–1130. doi: 10.1068/p5824

- Yau J. M., Hollins M., Bensmaia S. J. (2009a). Textural timbre: the perception of surface microtexture depends in part on multimodal spectral cues. Commun. Integr. Biol. 2, 344–346. doi: 10.4161/cib.2.4.8551

- Yau J. M., Olenczak J. B., Dammann J. F., Bensmaia S. J. (2009b). Temporal frequency channels are linked across audition and touch. Curr. Biol. 19, 561–566. doi: 10.1016/j.cub.2009.02.013

- Zampini M., Torresan D., Spence C., Murray M. M. (2007). Auditory-somatosensory multisensory interactions in front and rear space. Neuropsychologia 45, 1869–1877. doi: 10.1016/j.neuropsychologia.2006.12.004

- Zangaladze A., Epstein C. M., Grafton S. T., Sathian K. (1999). Involvement of visual cortex in tactile discrimination of orientation. Nature 401, 587–590. doi: 10.1038/44139
