Abstract

An accurate calculation of the first passage time probability density (FPTPD) is essential for computing the likelihood of solutions of the stochastic leaky integrate-and-fire model. The previously proposed numerical calculation of the FPTPD based on the integral equation method discretizes the probability current of the voltage crossing the threshold. While the method is accurate for high noise levels, we show that it results in large numerical errors for small noise. The problem is solved by analytically computing, in each time bin, the mean probability current. Efficiency is further improved by identifying and ignoring time bins with negligible mean probability current.

1 Introduction

The leaky integrate-and-fire (LIF) neuron is one of the most successful models for single neuron dynamics, combining conceptual simplicity with numerical efficacy while being biophysically realistic. For instance, a stochastic LIF can be used to reproduce the observed output of many biological neurons for a given input. A powerful method for finding the parameters of the appropriate model is to compute the likelihood of neuronal firing. A key component for obtaining the global likelihood is the calculation of the first passage time probability density (FPTPD), the probability of observing the first spike at a certain time. Recently, Paninski, Pillow, and Simoncelli (2004) proposed computing the FPTPD with a combination of two methods in which evaluation of a gaussian integral first gives a rough estimate, which is then refined by solving the Fokker-Planck equation. The Fokker-Planck method needs a partial differential equation solver, which discretizes both time and the membrane voltage. It suffers from numerical diffusion due to the finite discretization of the parameter space (Odman, 1997). It also confines the membrane voltage within a limited space, which can introduce another source of error. Based on earlier solutions of Volterra integral equations by Plesser and Tanaka (1997), more recently, Paninski, Haith, and Szirtes (2008) suggested a novel method that is applicable to the case of variable membrane conductances. Only time needs to be discretized and the membrane voltage is computed exactly as an Ornstein-Uhlenbeck process, with O(n2) complexity where n is the number of time bins (Paninski et al., 2008).

We refer to this procedure as the Plesser-Tanaka-Paninski method, or, for simplicity, as the gaussian method. While it is accurate for large noise levels, we found the proposed numerical approach to suffer from large numerical errors when the stochastic noise level is small. Low noise is not only a biophysically important regime but also crucial for parameter search applications which typically start with a high noise level but need to be run in the low-noise regime to obtain accurate parameter values. In this note, we present two improvements to the numerical calculation of the FPTPD: one improves the accuracy, potentially by orders of magnitude, and the other improves the algorithm’s efficiency.

2 The Problem

The stochastic integrate-and-fire neuron model is described as an Ornstein-Uhlenbeck process (Burkitt, 2006),

| (2.1) |

where C is the capacitance (in pF), Vm(t) is the neuron membrane voltage (mV), g(t) is the membrane conductance (nS), V0 is the equilibrium membrane voltage (mV), I(t) is the input current (pA), ε(t) is gaussian white noise ( ), τ is the neuron integration time (ms) which is a time unit on the order of the membrane time constant (see below), and σ0 is the standard deviation of the noisy input current (pA) averaged over τ. Different authors use different values for τ. Brunel (2000) equates τ with the membrane time constant, while Burkitt (2006) used twice the membrane time constant. In this note, we use the former (Brunel’s) convention.

It is convenient to write equation 2.1 in the form (Paninski et al., 2004, 2008),

| (2.2) |

where V(t) = Vm(t) − V0 is the voltage relative to the equilibrium membrane voltage, g(t) = g0(t)/C is the inverse of the time-dependent membrane time constant (1/ms), I (t) = I0(t)/C is the input (mV/ms) normalized by capacitance, and is the intensity of the noise ( ). We will use the form of equation 2.2 throughout this note.1

The first passage time probability density p(t) is then determined by a Volterra integral equation of the second kind,

| (2.3) |

where Vreset is the reset voltage and φ[Vth, t|x, s] is the singularity-removed probability current through Vth at time t conditioned on having the value x at time s.2 It can be expressed as

| (2.4) |

where Σ2(t|s) and μ(t|x, s) are, respectively, variance and mean at time t of the gaussian stochastic process V(t|x, s), the value of the voltage at time t if the process started with value x at time s. They are expressed as

| (2.5) |

Both Σ and μ have units of mV. The term G(Vth, t|x, s) is the conditional probability that the voltage V starting at value x at time s will evolve to Vth at time t, where the calculation is based on the dynamics of V in the absence of a threshold. It can be expressed as

| (2.6) |

Note that φ(Vth, t|x, s) and G(Vth, t|x, s) are functions of the membrane voltage which are evaluated at the threshold voltage V = Vth (the expressions on the left-hand side of equations 2.4 and 2.6 are to be read as φ (V, t|x, s)|V=Vth and G(V, t|x, s)|V=Vth) but that the dynamics do not explicitly depend on the threshold.

To numerically solve equation 2.3, the integration on the right-hand side is discretized in time. With the assumption that the integrand is constant inside each discretized time interval, the integration becomes a summation (Linz, 1985). This is a good approximation if the noise level (σ) is high. If, however, the noise level σ is low, the gaussian G(Vth, t|x, s) inside the probability current φ becomes a sharp, narrow function, and a small change in time can change the value of this gaussian function dramatically, as is illustrated in Figure 1.

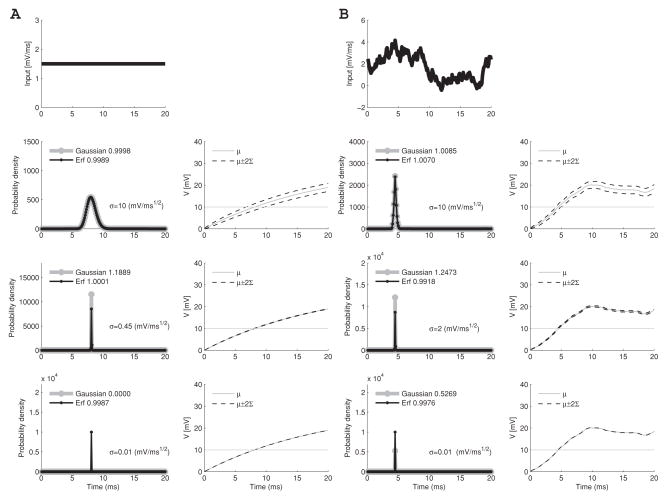

Figure 1.

Illustrative example of the numerical computation of the first passage time probability density function. Time is discretized into t0, t1, t2 … (dotted vertical lines). For a high noise level, the probability current φ varies slowly across multiple bins (bold line) while for low noise, φ varies fast (thin full line). To calculate the FPTPD (see equation 2.3), the gaussian method evaluates the function φ at times t0, t1, t2 … (the intersection of the dotted lines with the φ curve). This is a good approximation for the broad probability current, since it changes slowly within each time bin. In the low-noise case (sharp peak), however, this yields a poor estimate. In the example shown, the value of φ is estimated by the values at t1 and t2 (filled circles), which in this case under-estimates the FPTPD. In contrast, the erf method proposed in this study uses the fact that, independent of the discretization, the mean probability current (φ̂) in a bin can be computed analytically. Note that the gaussian method can be improved only with finer discretization; higher-order approximations for the integral will not substantially improve accuracy.

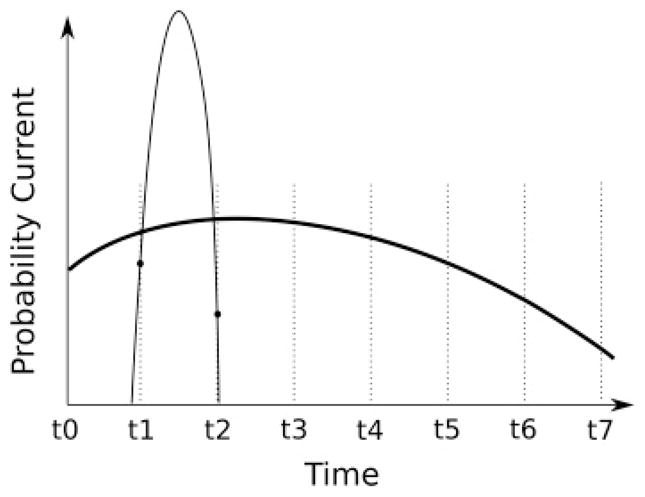

Two examples of the FPTPD with strong input currents are presented in Figure 2. In Figure 2A, input is constant; in Figure 2B, it is biologically more realistic and consists of Poisson-distributed events convolved with exponential functions. We used neuronal current data that were published for the 2009 spike prediction competition (Gerstner & Naud, 2009). We used data from Challenge A, B (time from 28.85 s to 29.05 s). We assumed a neuronal capacitance of C = 170pF (Amzica & Neckelmann, 1999) and threshold Vth = 10 mV. In row 2, noise is high ( ), it is intermediate in row 3 ( in Figure 2A and in Figure 2B), and low in row 4 ( ).3

Figure 2.

Model behavior at different noise levels for a time interval of length 20 ms, bin width 0.1 ms, and membrane time constant, 1/g(t) = 20 ms. (A) The neuron receives constant input. (B) The neuron receives exponentially decaying impulses from a realistic spike train (see text). The input is shown in both cases in the top row. The lower three rows show, for high (row 2), intermediate (row 3), and low (row 4) noise levels, the first passage time probability density on the left and the mean voltage μ(t) (gray full lines) with its standard deviation Σ(t) (black dashed lines) on the right. The threshold voltage (Vth = 10 mV) is shown by a horizontal line. The likelihood functions (in the left-most panels) are shown in gray lines for the gaussian method and black for the erf method. The integrated value of the FPTPD is shown in each legend; the correct value is unity in all examples. The gaussian method can either overestimate or underestimate the probability density when σ is small.

In all cases, the first spike occurs at about t = 8ms, with a broader spread for higher than for lower noise levels. The gray lines show the FPTPD computed with the gaussian method by Paninski et al. (2008) (we used the Matlab code provided by this reference), and the black lines were computed using the improved method we discuss below. The value of the FPTPD integrated over the whole interval is given in the legend in each panel; the correct value is unity in all cases. When σ is large, the gaussian method yields the correct answer (see Figure 2, row 2). However, at intermediate (third row) and small (fourth row) noise levels, the FPTPD can be either overestimated or underestimated.

3 Improvement in Accuracy

A solution to the problem is to calculate the mean probability current through Vth over the discretized time interval Δt rather than using the values estimated at the ends of the interval. In equation 2.4, we assume that only G(Vth, t|x, s) is variable within the time bin [t0, t0 + Δt], and is a constant term. In the function G(Vth, t|x, s), we consider μ(t|x, s) to vary linearly within the interval [μ(t0|x, s),μ(t0 + Δt|x, s)], and we also introduce the notational shortcuts μo:= μ(t0|x, s) and μ1:= μ(t0 + Δt|x, s). These approximations allow us to integrate the probability current through the interval [t0, t0 + Δt] analytically, making use of its gaussian shape. The average probability current φ̂(Vth, t0 |x, s) through threshold over the time interval of length Δt then is

| (3.1) |

where the variance Σ2(t0|s) term is a constant (we take it at the fixed time t = t0). Defining and , we can rewrite the average probability current φ̂(Vth, t0|x, s) in terms of the error function

| (3.2) |

This equation is the central result of this note. Using a discretized version of the mean probability current through the threshold based on equation 3.2 (erf method), the computation of the FPTPD becomes accurate at all noise levels (see Figure 2, black lines).4 Except for the probability current calculation, everything else to calculate the FPTPD is the same as in Paninski et al. (2008), including the use of this modified probability current directly in the matrix equation.

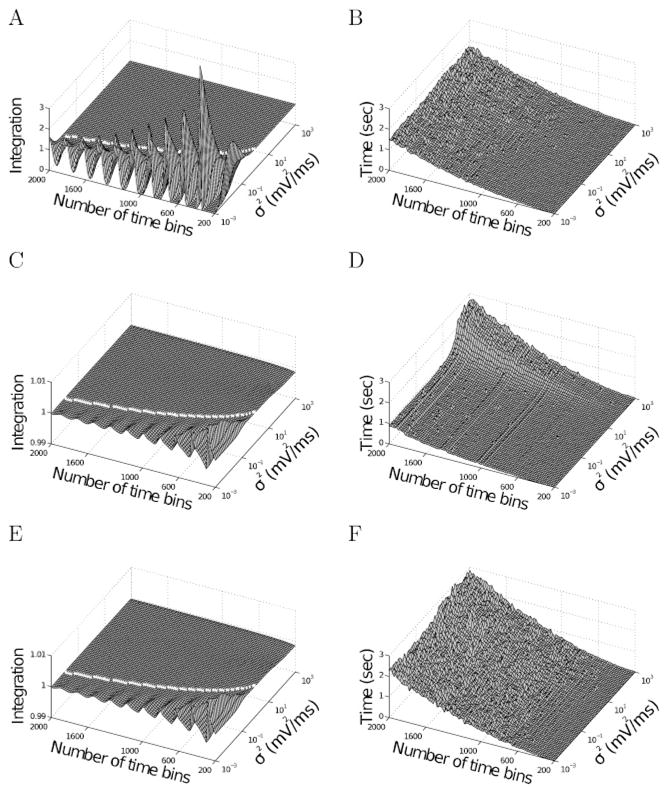

Next, we study how numerical errors and computation time depend on the number of bins and the noise level. We use constant input as in Figure 2 (left panels) and vary the number of time bins and the noise level σ2 (units of mV2/ms). Figures 3A and 3B show the results for the gaussian method. Figure 3A shows that the numerical error increases dramatically when σ2 is decreased. Figure 3B shows that computation time increases quadratically with the number of time bins and that it is independent of σ2. Increasing the number of bins does help to reduce the error, although at the cost of longer computation times, as shown in Figure 3B. With small σ2, it becomes very difficult to solve the problem due to necessarily limited system resources and computation time.

Figure 3.

Dependence of error and computation time on noise level (σ2) and number of bins. We solved the model for a time of 20ms (as in Figure 2). (A) The integrated FPTPD using the gaussian method and (C, E) using the erf method with and without speed optimization, respectively, as a function of noise level and number of bins. Deviations from unity represent numerical error (note the different axes). The white line shows the approximate limit where the instabilities start; it is computed in equation 3.4. (B) Shows the computation (wall-clock) time using one processor (AMD Phenom II X4 955 using Matlab 7.0) for the gaussian method. (D, F) Shows the erf method without and with speed optimization, respectively. For large noise levels, the computation time increases since the speed optimization algorithm from section 4 loses efficacy because the probability is spread over several bins.

Figures 3C and 3D show the analogous results for the erf method. For identical bin numbers, simulation time is approximately doubled relative to the gaussian method since the evaluation of two error functions takes about twice as long as that of one exponential function. The numerical error, however, is orders of magnitude smaller than that of the gaussian method; note the difference in scale between Figures 3A and 3C. Therefore, a much smaller number of bins can be used to achieve comparable precision.

The approximation error in Figures 3A shows large oscillations whose origin becomes clear from Figure 1. As long as the discretization is fine enough compared to the width of the probability current function (thick line), the gaussian method of computing the integral as the sum over the values at t1, t2, … is a good approximation. If, however, the width of the function becomes comparable to the discretization (thin line), the approximation becomes not only poor but very sensitive to the exact locations of the bins. In the example shown, the integral is severely underestimated; if the discretization were made slightly finer, the locations where the function φ is evaluated fall on the high parts of the peak, and the integral would be severely overestimated. As the number of time bins varies, the limits of the integration interval move systematically and the sum alternatively overestimates and underestimates the integral, leading to the oscillations seen in Figure 3A. In our method, we compute analytically the value of the integral itself. Very small oscillations (orders of magnitudes smaller) can be seen also in this case (see Figures 3C and 3E) because the moving intervals lead to small differences in this case too.

We can obtain quantitative insight into the limits of the original gaussian approximation by computing the Taylor expansion of the right-hand side of equation 3.2 with respect to E,

| (3.3) |

showing that for small |E|, the right-hand side of equation 3.2 converges to the expression in equation 2.4, which is the gaussian solution. Note that only a second-order term appears in equation 3.3 since the zeroth-order term is zero and the first-order term is part of φ. When μ0 approaches μ1 (i.e., E approaches 0), equation 3.3 shows that φ̂ approaches φ. In this case, however, the numerator and denominator in equation 3.2 both go to zero and cannot be separately computed numerically once they approach numerical precision. In this case, the gaussian method needs to be used. For double-precision computations, the theoretical limit is ≈ 10−15, but to obtain results with sufficient numerical precision, we switch to the gaussian method whenever . Note also that this switch between equations 3.2 and 2.4 can be done dynamically; since is calculated for every time step, the appropriate choice can be made at each time step.

We observe that E depends on five parameters: E(N, σ2, t, s, x). We are interested in characterizing the solution in terms of the first two (N and σ2), as in Figure 3. In a first-order approximation, we consider the initial conditions s = 0 and x = Vreset. To estimate the boundary of the region where the gaussian method is stable, we take the maximum of the function E with respect to t:

| (3.4) |

The white line in Figures 3A, 3C, and 3E shows where this function takes the value unity. The stable region (where E < 1) is up and to the left (larger number of time bins and larger σ2), and the instabilities appear to the bottom and right.

4 Improvement in Efficiency

For small noise, there is only a limited number of bins whose first passage time probability is nonzero; only those need to be taken into account, and we do not have to spend resources on other bins, which have very small likelihoods. The latter are those for which the membrane voltage is either far above or far below the threshold at both the beginning and the end of the bin. Formally, this means the probability flux is close to zero if

| (4.1) |

or

| (4.2) |

where M is the number of standard deviations beyond which the error function becomes indistinguishable from (positive or negative) unity for the number of bits in the floating number representation chosen. In our case (double precision accuracy), M = 5.9.

Instead of computing the probability current φ̂ using the methods from section 3, we perform a simple (computationally inexpensive) test of the conditions in equations 4.1 and 4.2. If one of them is met, we explicitly set the probability current value to zero; it will then not contribute to the FPTPD. As shown in Figure 3, application of this optimization technique speeds up the calculation for all but the largest noise levels (see Figure 3F) without affecting the numerical error (see Figure 3E). We note that this method is also useful if the noise is guaranteed to be small enough that the firing probability is essentially limited to one time bin and if only the firing time of the neuron needs to be determined.

5 Discussion

In this note, we compute the first passage time probability density for leaky integrate-and-fire neurons by solving Volterra integral equations. As mentioned, one important application for such maximum likelihood methods is optimal parameter search. Typically, parameter searches for neuron models start with a large noise level, so that the initial parameter set starts with a nonzero likelihood. The optimization algorithm (e.g., Nelder & Mead, 1965) then gradually tunes down the noise to physiological levels. A good parameter set should capture most of the variance and leave only a small portion of the variance as intrinsic noise. The accurate calculation of the likelihood for small noise is thus important for finding an acceptable parameter set. At low noise levels, the previously proposed (Plesser & Tanaka, 1997; Paninski et al., 2008) Plesser-Tanaka-Paninski method (called for simplicity the gaussian method in this report) suffers from large numerical errors for all but the finest discretizations (see Figure 3A) at which point, of course, the computation may demand excessive levels of resources. Our method solves this problem with high accuracy and high computational efficiency.

Paninski et al. (2008) showed that the gradient of the likelihood can be calculated easily due to the fact that a matrix equation was used to calculate the FPTPD. We improved the numerical solution by calculating the mean probability current φ̂ (see equation 3.2) instead of φ (see equation 2.4). Conceptually the computation of the likelihood gradient is the same for our improved method; in particular, the same chain rule is used to calculate the gradient. The difference lies in the calculation of the gradient of φ̂ where, instead of the gradient of the gaussian function, the gradient of the erf function is calculated. Note also that the latter is in the convenient form of an exponential function.

It is possible to use a more accurate high-order block-by-block for the integral calculation (Linz, 1985) to achieve better accuracy with higher efficiency, an approach we did not further pursue in this short note.5 We point out that the problems of the gaussian method in the case of low noise are not solved by using higher-order integration methods: the problems are due to undersampling of the probability flux within the integration bin, which, as a matter of principle, can within the gaussian method be solved only by higher sampling but not by using higher-order approximations.

Are the noise levels considered here of biological relevance? Answering this question is complicated by the fact that there is no universal agreement as to what part of the observed variability in the neuronal activity is noise and what part is signal. If only the mean firing rate is considered of importance (the rate coding hypothesis), then variations of neuronal firing shorter than tens of milliseconds or so are considered noise. If it is assumed that the precise timing of neural spikes is important (the temporal coding hypothesis), then such variations are part of the signal. We will provide noise estimates for both cases below.

Three types of noise are encountered in essentially any neuronal system. The first is Johnson noise, which is due to the discrete nature of electric charge carriers. The second is channel noise, which is caused by spontaneous opening and closing of transmembrane ion channels. Manwani and Koch (1999) show that channel noise is at least an order of magnitude larger than Johnson noise. We also consider a third type of noise, which we call vesicle noise and is caused by spontaneous vesicle fusion in synaptic terminals. Since in general each vesicle fusion will open (many) more than one channel, it can be expected that it dominates channel noise.6 There is a fourth type of noise, which is due to the variation in the exact timing of the input.7 This synaptic noise was shown to dominate Johnson noise and channel noise (Manwani & Koch, 1999) and since each postsynaptic event leads to the release of at least one synaptic vesicle (otherwise, in a failed synaptic event, there is no postsynaptic effect), it is likely that synaptic noise also dominates vesicle noise.

We estimate the synaptic noise by assuming it results from Poisson-distributed synaptic inputs from other neurons. Let the number of presynaptic neurons with average firing rate λ be E0. Within a time interval of length τ, the integration time of the neuron, the incoming number of spikes is a Poisson random variable with mean and variance N = E0λτ. Each input spike causes the influx of a charge Q. The sum of all these charges leads to an average current of whose mean is E0λQ and whose variance is . The standard deviation of the membrane voltage caused by input variability is σp,

| (5.1) |

where C is the capacitance of the neuron and . Since the AMPA receptor open time is considerably shorter than the membrane time constant, we assume that charge is injected instantaneously. P can then be estimated by the maximal EPSP value.8

One well-characterized system in which these parameters have been measured is the hippocampus. For CA1 neurons which receive input from CA3, we use P = 0.13 mV (Sayer, Redman, & Andersen, 1989), τ = 15.3 ms (Brown, Fricke, & Perkel, 1981), E0 = 30,000 (Megas, Emri, Freund, & Gulys, 2001), λ = 1 Hz (Frerking, Schulte, Wiebe, & Stubli, 2005), resulting in σp = 0.182 mV/ms. For these values, the noise level in Figure 2B, row 3 corresponds to σp = 0.511 mV/ms (see note 1 for the units). We showed that the gaussian method fails to give reasonable results for noise at this level, which is considerably larger than the estimated synaptic noise.

For temporal coding, the variation in input spike times is part of the signal; thus, there is no synaptic noise as defined above, and the largest noise component is vesicle noise. Using a mean rate of spontaneous release of 0.282 Hz (Fiacco & McCarthy, 2004) and considering that the (spontaneous) miniature EPSPs are at most as large as evoked EPSPs, an upper bound σp = 0.096 mV/ms is obtained. This upper bound is more than five times smaller than the noise level in Figure 2, which was discussed in the previous paragraph. It is clearly beyond the limit where the gaussian method can be applied.

It is highly likely that at least some nervous systems require levels of precision that go way beyond our rather crude estimates, One, possibly extreme, example is the circuits that control the electric fields in weakly electric fish, which have been shown to operate at submicrosecond precision (Moortgat, Keller, Bullock, & Sejnowski, 1998). Use of the original gaussian method, without the improvement introduced in this note, would be very problematic for these systems.

Finally, we point out that this paradigm is by no means limited to equation 2.2. It can just as easily be applied to the generalized leaky integrate-and-fire neuron (Mihalas & Niebur, 2009), which reproduces a large number of observed neuronal behaviors. In fact, applicability goes way beyond neural models. The algorithm can be used to compute first passage times through a constant threshold for all stochastic processes with linear dynamics, provided the noise is gaussian and does not depend explicitly on V, that is, Ornstein-Uhlenbeck processes. Efficient solution of this vast class of both theoretically and practically important problems should prove to be of significant value to a large computational community.

The source code (Matlab) is available on request from the authors.

Acknowledgments

We thank Dan Millman and Alexander Russell for discussions, and we acknowledge support through NIH grants R01NS040596, R01EY016281, and 5R01EY012124 and ONR grant N000141010278.

Footnotes

The physiologically measurable noise has units of mV/ms.

Paninski et al. (2008) showed that the probability current has a singularity due to the fact that the gaussian function (G(.) in equation 2.6) is a delta function when t = s. The singularity can be removed by adding a regularizing term (Buonocore, Nobile, & Ricciardi, 1987). The resulting regularized probability is the singularity-removed probability current.

See section 5 on how this relates to physiological values. Note that we assume a fixed threshold Vth = 10mV for the sake of concreteness, but all results can be formulated relative to the threshold and are then valid in general.

A limitation is, however, inherent in the process of taking the difference of two error functions, which can produce large errors when the difference is close to numerical precision, in our simulation, 10−15 (double precision). An estimate of the difference (erf(ξ + E) − erf(ξ)) can be obtained from the asymptotic series of the erf function (Abramowitz & Stegun, 1964, formula 7.1.23). For large |ξ | and |ξ + E|, taking only the leading terms, we find that this difference is . In many cases, it is, however, not necessary to evaluate this difference because bins with small mean probability current have a negligible effect and can be set to zero (see section 4, equations 4.1 and 4.2). It can be seen in Figure 3 that the error is indeed negligible.

Plesser (1999) applied this technique to compute the FPTPD of the Ornstein- Uhlenbeck process.

In this argument, we simplify the situation by not distinguishing among the different types of ion channels. While vesicle noise is due to opening of ligand-gated synaptic channels, the estimate for channel noise in Manwani and Koch (1999) is for voltage-gated Na+ and K+ channels.

In practice, even if one considers spike timing at quite short timescales important, one will usually not be able to account for all spike input to a neuron (except in special cases, like in a very quiet slice preparation). Thus, most spike time measurements are contaminated by noise due to background input.

We have assumed purely excitatory input for simplicity. Adding inhibitory input of the same statistics increases σp by a factor of , which does not change the basic conclusions.

Contributor Information

`Yi Dong, Email: doyen@pha.jhu.edu.

Stefan Mihalas, Email: mihalas@jhu.edu.

Ernst Niebur, Email: niebur@jhu.edu.

References

- Abramowitz M, Stegun I. Handbook of mathematical functions with formulas, graphs, and mathematical tables. New York: Dover; 1964. [Google Scholar]

- Amzica F, Neckelmann D. Membrane capacitance of cortical neurons and glia during sleep oscillations and spike-wave seizures. Journal of Neurophysiology. 1999;82(5):2731–2746. doi: 10.1152/jn.1999.82.5.2731. [DOI] [PubMed] [Google Scholar]

- Brown TH, Fricke RA, Perkel DH. Passive electrical constants in three classes of hippocampal neurons. J Neurophysiol. 1981;46(4):812–827. doi: 10.1152/jn.1981.46.4.812. [DOI] [PubMed] [Google Scholar]

- Brunel N. Dynamics of sparsely connected networks of excitatory and inhibitory spiking neurons. J Comput Neurosci. 2000;8(3):183–208. doi: 10.1023/a:1008925309027. [DOI] [PubMed] [Google Scholar]

- Buonocore A, Nobile AG, Ricciardi LM. A new integral equation for the evaluation of first-passage-time probability densities. Advances in Applied Probability. 1987;19(4):784–800. [Google Scholar]

- Burkitt AN. A review of the integrate-and-fire neuron model: II. Inhomogeneous synaptic input and network properties. Biol Cybern. 2006;95(2):97–112. doi: 10.1007/s00422-006-0082-8. [DOI] [PubMed] [Google Scholar]

- Fiacco TA, McCarthy KD. Intracellular astrocyte calcium waves in situ increase the frequency of spontaneous AMP Areceptor currents in CA1 pyramidal neurons. J Neurosci. 2004;24(3):722–732. doi: 10.1523/JNEUROSCI.2859-03.2004. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Frerking M, Schulte J, Wiebe SP, Stubli U. Spike timing in CA3 pyramidal cells during behavior: Implications for synaptic transmission. J Neurophysiol. 2005;94(2):1528–1540. doi: 10.1152/jn.00108.2005. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Gerstner W, Naud R. Neuroscience: How good are neuron models? Science. 2009;326(5951):379–380. doi: 10.1126/science.1181936. [DOI] [PubMed] [Google Scholar]

- Linz P. Analytical and numerical methods for Volterra equations. Philadelphia: Society for Industrial and Applied Mathematics; 1985. [Google Scholar]

- Manwani A, Koch C. Detecting and estimating signals in noisy cable structure, I: Neuronal noise sources. Neural Comput. 1999;11(8):1797–1829. doi: 10.1162/089976699300015972. [DOI] [PubMed] [Google Scholar]

- Megas M, Emri Z, Freund TF, Gulys AI. Total number and distribution of inhibitory and excitatory synapses on hippocampal CA1 pyramidal cells. Neuroscience. 2001;102(3):527–540. doi: 10.1016/s0306-4522(00)00496-6. [DOI] [PubMed] [Google Scholar]

- Mihalas S, Niebur E. Ageneralized linear integrate-and-fire neural model produces diverse spiking behavior. Neural Computation. 2009;21(3):704–718. doi: 10.1162/neco.2008.12-07-680. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Moortgat K, Keller C, Bullock T, Sejnowski T. Submicrosecond pacemaker precision is behaviorally modulated: The gymnotiform electromotor pathway. Proceedings of the National Academy of Sciences of the United States of America. 1998;95(8):4684–4689. doi: 10.1073/pnas.95.8.4684. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Nelder JA, Mead R. A simplex method for function minimization. Computer Journal. 1965;7:308–313. [Google Scholar]

- Odman MT. A quantitative analysis of numerical diffusion introduced by advection algorithms in air quality models. Atmospheric Environment. 1997;31(13):1933–1940. [Google Scholar]

- Paninski L, Haith A, Szirtes G. Integral equation methods for computing likelihoods and their derivatives in the stochastic integrate-and-fire model. J Comput Neurosci. 2008;24(1):69–79. doi: 10.1007/s10827-007-0042-x. [DOI] [PubMed] [Google Scholar]

- Paninski L, Pillow JW, Simoncelli EP. Maximum likelihood estimation of a stochastic integrate-and-fire neural encoding model. Neural Comput. 2004;16(12):2533–2561. doi: 10.1162/0899766042321797. [DOI] [PubMed] [Google Scholar]

- Plesser HE. Unpublished doctoral dissertation. Georg-August-University; Göttingen, Germany: 1999. Aspects of signal processing in noisy neurons. [Google Scholar]

- Plesser H, Tanaka S. Stochastic resonance in a model neuron with reset. Physics Letters A. 1997;225:228–234. [Google Scholar]

- Sayer RJ, Redman SJ, Andersen P. Amplitude fluctuations in small EPSPS recorded from CA1 pyramidal cells in the guinea pig hippocampal slice. J Neurosci. 1989;9(3):840–850. doi: 10.1523/JNEUROSCI.09-03-00840.1989. [DOI] [PMC free article] [PubMed] [Google Scholar]