Abstract

When observers search for a target object, they incidentally learn the identities and locations of “background” objects in the same display. This learning can facilitate search performance, eliciting faster reaction times for repeated displays (Hout & Goldinger, 2010). Despite these findings, visual search has been successfully modeled using architectures that maintain no history of attentional deployments; they are amnesic (e.g., Guided Search Theory; Wolfe, 2007). In the current study, we asked two questions: 1) under what conditions does such incidental learning occur? And 2) what does viewing behavior reveal about the efficiency of attentional deployments over time? In two experiments, we tracked eye movements during repeated visual search, and we tested incidental memory for repeated non-target objects. Across conditions, the consistency of search sets and spatial layouts was manipulated to assess their respective contributions to learning. Using viewing behavior, we contrasted three potential accounts for faster searching with experience. The results indicate that learning does not result in faster object identification or greater search efficiency. Instead, familiar search arrays appear to allow faster resolution of search decisions, whether targets are present or absent.

Imagine searching for some target object in a cluttered room: Search may at first be difficult, but will likely become easier as you continue searching in this room for different objects. Although this seems intuitive, the results of analogous experiments are quite surprising. In numerous studies, Wolfe and colleagues have shown that, even when visual search arrays are repeated many times, people do not increase the efficiency of visual search (see Oliva, Wolfe, & Arsenio, 2004; Wolfe, Klempen, & Dahlen, 2000; Wolfe, Oliva, Butcher, & Arsenio, 2002). More formally, these studies have shown that, even after hundreds of trials, there is a constant slope that relates search RTs to array set sizes. That is, search time is always a function of array size, never approaching parallel processing.

Although search efficiency does not improve with repeated visual search displays, people do show clear learning effects. If the search arrays are configured in a manner that helps cue target location, people learn to guide attention to targets more quickly: the contextual cuing effect (Chun & Jiang, 1998; Jiang & Chun, 2001; Jiang & Song, 2005; Jiang & Leung, 2005). But even when search arrays contain no cues to target locations, people learn from experience. Consider an experimental procedure wherein search targets and distractors are all discrete objects (as in a cluttered room), and the same set of distractors is used across many trials: Sometimes a target object replaces a distractor; sometimes there are only distractors. As people repeatedly search through the distractor array, they become faster both at finding targets and at determining target absence (Hout & Goldinger, 2010). Moreover, people show robust incidental learning of the distractor objects, as indicated by surprise recognition tests (Castelhano & Henderson, 2005; Hollingworth & Henderson, 2002; Hout & Goldinger, 2010; Williams, Henderson, & Zacks, 2005; Williams, 2009). Thus, although search remains inefficient with repeated displays, people acquire beneficial knowledge about the “background” objects, even if those objects are shown in random configurations on every trial (Hout & Goldinger, 2010).

In the present investigation, we sought to understand how people become faster at visual search through repeated, non-predictive displays. We assessed whether search becomes more efficient with experience and verified that it does not (Wolfe et al., 2000). Nevertheless, people became faster searchers with experience, and they acquired incidental knowledge of non-target objects. Our new question concerned the mechanism, at the level of oculomotor behavior, by which learning elicits faster search. Do people become faster at identifying, and rejecting, familiar distractor objects? Do they become better at moving their eyes through spatial locations? Also, if visual search is improved by learning across trials, what conditions support or preclude such learning?

Attentional guidance in visual search

Imagine that you are asked to locate a target letter among a set of distractor letters. It would be quite easy if, for instance, the target was an X presented among a set of Os, or presented in green amid a set of red distractors. In such cases, attentional deployment in visual search is guided by attributes that allow you to process information across the visual field in parallel, directing your attention (and subsequently your eyes; Deubel & Schneider, 1996; Hoffman & Subramaniam, 1995) immediately to the target. Among these pre-attentive attributes are color, orientation, size, and motion (Treisman, 1985; Wolfe, 2010); they can be used to guide attention in a stimulus-driven manner (e.g., pop-out of a color singleton), or in a top-down, user-guided manner (e.g., searching for a blue triangle among blue squares and red triangles). In the latter case, a conjunction search, attention may be swiftly focused on a subset of items (i.e., the blue items, or the triangles), after which you may serially scan the subset until you locate the target. Accordingly, Feature Integration Theory (FIT; Treisman & Gelade, 1980) explains search behavior using a two-stage architecture: A pre-attentive primary stage scans the whole array for guiding features, and a secondary, serial-attentive stage performs finer-grained analysis for conjunction search. Like FIT, the influential Guided Search (GS) model (Wolfe, Cave, & Franzel, 1989; Wolfe & Gancarz, 1996) proposes a pre-attentive first stage and an attentive second stage. In the pre-attentive stage, bottom-up and top-down information sources are used to create a “guidance map” that, in turn, guides attentional deployments in the second stage. Bottom-up guidance directs attention toward objects that differ from their neighbors, and top-down guidance directs attention toward objects that share features with the target.
Implicit in an early version of the model (GS2; Wolfe, 1994) was the assumption that people accumulate information about the contents of a search array over the course of a trial. Once an item is rejected, it is “tagged,” inhibiting the return of attention (Klein, 1988; Klein & MacInnes, 1999). GS2 was therefore memory-driven, as knowledge of previous attentional deployments was assumed to guide future attentional deployments (see also Eriksen & Lappin, 1965; Falmagne & Theios, 1969).

Several studies, however, suggest that visual search processes are in fact “amnesic” (Horowitz & Wolfe, 2005). The randomized search paradigm (Horowitz & Wolfe, 1998) was developed to prevent participants from using memory during search. In this design, a traditional, static-search condition (wherein search objects remain stationary throughout a trial) is compared to a dynamic-search condition, wherein items are randomly repositioned throughout a trial (typically, the target is a rotated T, presented among distracting rotated Ls). If search processes rely on memory, this dynamic condition should be a hindrance. If search is indeed amnesic, performance should be equivalent across conditions. Results supported the amnesic account, with statistically indistinguishable search slopes across conditions (see Horowitz & Wolfe, 2003, for replications). In light of such evidence, the most recent GS model (GS4; Wolfe, 2007) maintains no history of previous attentional deployments; it is amnesic. A core claim of Guided Search Theory, therefore, is that the deployment of attention is not determined by the history of previous deployments. Rather, it is guided by the salience of the stimuli. Memory, however, is not completely irrelevant to search performance in GS4 (Horowitz & Wolfe, 2005); we return to this point later. Importantly, although GS4 models search performance without keeping track of search history, the possibility remains that, under different circumstances (or by using different analytic techniques), we may find evidence for memory-driven attentional guidance. Indeed, several studies have suggested that focal spatial memory may guide viewing behavior in the short term by biasing attention away from previously examined locations (Beck, Peterson, & Vomela, 2006; Beck et al., 2006; Dodd, Castel, & Pratt, 2003; Gilchrist & Harvey, 2000; Klein, 1988; Klein & MacInnes, 1999; Müller & von Mühlenen, 2000; Takeda & Yagi, 2000; Tipper, Driver, & Weaver, 1991).

To assess claims of amnesic search, Peterson et al. (2001) examined eye-movement data, comparing them to Monte Carlo simulations of a memoryless search model. Human participants located a rotated T among rotated Ls; pseudo-subjects located a target by randomly selecting items to “examine”. For both real and pseudo-subjects, revisitation probability was calculated as a function of lag (i.e., the number of fixations between the original fixation and the revisitation). A truly memoryless search model is equivalent to sampling with replacement; it therefore predicts a small possibility that search will continue indefinitely. Its hazard function (i.e., the probability that the target will be found on the next fixation, given that it has not already been found) is therefore flat, because the potential search set does not shrink as items are examined. In contrast, memory-driven search, which resembles sampling without replacement, predicts an increasing hazard function: As more items are examined, the likelihood increases that the next item will be the target. The observed results differed significantly from those predicted by the memoryless search model. Peterson et al. concluded that visual search has retrospective memory for at least 12 items, because participants rarely reexamined items in a set size of 12 (see also Dickinson & Zelinsky, 2005; Kristjánsson, 2000). Subsequent research (McCarley et al., 2003) suggested a more modest capacity of 3–4 items (see also Horowitz & Wolfe, 2001).
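The contrast between flat and increasing hazard functions can be made concrete with a small Monte Carlo simulation. This is an illustrative sketch, not Peterson et al.'s (2001) simulation code: it assumes an idealized memoryless searcher that samples items uniformly with replacement and an idealized perfect-memory searcher that samples without replacement, with one item inspected per fixation. The helpers `simulate_search` and `hazard` are hypothetical names.

```python
import random

def simulate_search(set_size, memory, rng, max_fixations=10_000):
    """Return the fixation (1-based) on which a randomly placed target is found.

    memory=False: memoryless search, i.e., items are sampled with replacement.
    memory=True: perfect memory, i.e., items are sampled without replacement.
    """
    target = rng.randrange(set_size)
    order = list(range(set_size))
    rng.shuffle(order)  # inspection order for the perfect-memory searcher
    for fixation in range(1, max_fixations + 1):
        item = order[fixation - 1] if memory else rng.randrange(set_size)
        if item == target:
            return fixation
    return max_fixations  # memoryless search can, in principle, continue indefinitely

def hazard(found_at, max_k):
    """Empirical hazard: P(target found on fixation k | not found before fixation k)."""
    rates = []
    for k in range(1, max_k + 1):
        at_risk = sum(1 for f in found_at if f >= k)
        hits = sum(1 for f in found_at if f == k)
        rates.append(hits / at_risk if at_risk else float("nan"))
    return rates

rng = random.Random(1)
SET_SIZE, RUNS = 12, 20_000
memoryless = [simulate_search(SET_SIZE, False, rng) for _ in range(RUNS)]
with_memory = [simulate_search(SET_SIZE, True, rng) for _ in range(RUNS)]
h_memoryless = hazard(memoryless, 6)
h_memory = hazard(with_memory, 6)
print([round(h, 3) for h in h_memoryless])  # roughly flat, near 1/12
print([round(h, 3) for h in h_memory])      # rising: about 1/12, 1/11, 1/10, ...
```

For a set size of 12, the memoryless hazard should hover near 1/12 on every fixation, whereas the perfect-memory hazard should track 1/12, 1/11, 1/10, and so on, because each rejected item shrinks the remaining search set.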

What do these conflicting results suggest about attentional guidance in visual search? The conclusions are threefold: 1) Visual attention is efficiently directed both by bottom-up features and by user-defined, top-down guidance. 2) Search performance can be modeled with an architecture that disregards previous attentional deployments. And 3) despite the second conclusion, there is reason to believe that memory may bias attention away from previously visited locations.

The role of implicit memory in visual search

Although the role of memory in attentional guidance is a controversial topic, it is clear that implicit memory for display consistencies can facilitate performance. For example, research on perceptual priming has consistently shown that repeated targets are found more quickly than novel targets (Kristjánsson & Driver, 2008; Kristjánsson, Wang, & Nakayama, 2002). Moreover, Geyer, Müller, and Krummenacher (2006) found that, although such priming is strongest when both target and distractor features are repeated, it also occurs when only distractor features are repeated. They suggested that distractor-based priming arises because repetition allows faster perceptual grouping of homogeneous distractors. With repetition, the salience of even a novel target is increased because it “stands out” against a background of perceptually grouped non-targets (see also Hollingworth, 2009). Similarly, Yang, Chen, and Zelinsky (2009) found preferential fixation of novel distractors (relative to old ones), suggesting that when search objects are repeated, attention is directed toward the novel items.

As noted earlier, the contextual cuing paradigm (Chun & Jiang, 1998, 1999; Jiang & Chun, 2001; Jiang & Song, 2005; Jiang & Leung, 2005) also suggests that implicit memory can facilitate visual search. The typical finding is that, when predictive displays (i.e., those in which a target is placed in a consistent location within a particular configuration of distractors) are repeated, target localization is speeded, even when observers are not aware of the repetition. Chun and Jiang (2003) found that contextual cuing was implicit in nature. In one experiment, following search, participants were shown the repeated displays and performed at chance when asked to indicate the approximate location of the target. In another experiment, participants were told that the displays were repeated and predictive. Such explicit instructions failed to enhance either contextual cuing or explicit memory for target locations. Thus, when global configurations are repeated, people implicitly learn associations between spatial layouts and target placement, and they use such knowledge to guide search (but see also Kunar, Flusberg, & Wolfe, 2006; Kunar, Flusberg, Horowitz, & Wolfe, 2007; Smyth & Shanks, 2008).¹

The present investigation

In the current study, we examined the dynamics of attentional deployment during incidental learning of repeated search arrays. We tested whether search performance would benefit from incidental learning of spatial information and object identities, and we monitored both eye movements and search performance across trials. We employed an unpredictable search task for complex visual stimuli: The locations and identities of targets were randomized on every trial, and each potential target was seen only once. Thus, participants were given a difficult, “serial” search task that required careful scanning. Of critical importance, different aspects of the displays were repeated across trials in different conditions. Specifically, we repeated the spatial configurations, the identities of the distractor objects, both, or neither. We had several guiding questions: 1) When target identities and locations are unpredictable, can incidental learning of context (spatial layouts, object identities) facilitate search? 2) If search improves as a function of learning, does attentional deployment become more efficient? And 3) how are changes in search performance reflected in viewing behavior?

In order to obtain a wide range of viewing behavior, we varied visual working memory (WM) load by having participants hold one, two, or three potential targets in mind during search. Higher loads required finer-grained distinctions (Menneer et al., 2007, 2008) but remained within the capacity of visual WM (Luck & Vogel, 1997). Across experiments, we systematically decoupled the consistency of distractor objects and spatial configurations. In Experiment 1, spatial configurations were held constant within blocks; in Experiment 2, spatial configurations were randomized on each trial. Each experiment had two between-subjects conditions. In the first condition, object identities were fixed within blocks of trials. In the second condition, objects were repeated throughout the experiment but were randomly grouped within and across blocks. Specifically, in the consistent conditions, one set of objects was used repeatedly throughout one block of trials, a new set of objects was used repeatedly in the next block, and so on. In the inconsistent conditions, we spread the use of all objects across all blocks, in randomly generated sets, with the restriction that every object be repeated as often as in the consistent conditions. In this manner, learning of the distractor objects was equally possible, but the objects were inconsistently grouped for half the participants. In both experiments, we varied the set size across blocks so that we could examine search efficiency.

Following all search trials, a surprise two-alternative forced-choice (2AFC) token-discrimination test was given to assess incidental retention of target and distractor identities. Time series analyses were performed on search RTs, number of fixations per trial, and average fixation durations. We also regressed recognition memory performance on the total number of fixations and total gaze duration (per image) to determine which measure(s) of viewing behavior contributed to the retention of visual details for search items. Based on a recent study (Hout & Goldinger, 2010), we expected search RTs to become faster with experience with the displays. We therefore contrasted three hypotheses concerning the specific conditions under which facilitation would occur, and how it would be reflected in the eye movements (note that the hypotheses are not mutually exclusive).

We know from prior work that memory for complex visual tokens is acquired during search (Castelhano & Henderson, 2005; Williams, 2009), and that search for familiar items is speeded, relative to unfamiliar ones (Mruczek & Sheinberg, 2005). Additionally, according to Duncan and Humphreys’ (1989, 1992) attentional engagement theory of visual search, the difficulty of search depends on how easily a target gains access to visual short-term memory (VSTM). Accordingly, our rapid identification and dismissal (RID) hypothesis posits that, as the identities of search items are learned over time, RTs may be speeded by increased fluency in the identification and dismissal of distractors. This is an intuitive hypothesis: When a person sees the same distractor object repeatedly, it should become easier to recognize and reject. The RID hypothesis makes several specific predictions. First, we should find faster RTs across trials in any condition wherein participants learn the search items, as measured by our surprise memory test. Second, this search facilitation should derive from shorter object fixations over time. By analogy, fixation durations in reading are typically shorter for high-frequency words than for low-frequency words (Schilling, Rayner, & Chumbley, 1998; Reichle, Pollatsek, & Rayner, 2006).

Alternatively, the spatial mapping hypothesis suggests that repeated spatial contexts enhance memory for item locations (see, e.g., McCarley et al., 2003). By this hypothesis, incidental learning of repeated layouts may allow participants to avoid unnecessary refixations. As such, the spatial mapping hypothesis predicts significant search facilitation in Experiment 1, wherein spatial configurations were held constant within blocks. However, if spatial mapping is solely responsible for search facilitation, we should find no such effect in Experiment 2, wherein configurations were randomized on each trial. With respect to eye movements, we should find that search facilitation derives from people making fewer fixations over time. (Note that such a spatial mapping mechanism could work in tandem with RID.)

Finally, following the work of Kunar et al. (2007), the search confidence hypothesis suggests a higher-level mechanism. Whereas the foregoing hypotheses suggest specific enhancements in viewing behaviors, search confidence suggests that learning a repeated display may decrease the information required for the searcher to reach a decision. By this view, familiar contexts encourage less conservative response thresholds, enabling faster decisions. Both object and spatial learning would contribute to this effect: Knowledge of a fixed spatial configuration may provide increased certainty that all relevant locations have been inspected, and memory for specific objects may reduce perceptual uncertainty about items under inspection, making targets more easily distinguished from distractors. The search confidence hypothesis therefore predicts that search facilitation will occur under coherent learning conditions. When object identities are consistent within blocks (Experiments 1a, 2a), facilitation should be robust. In Experiments 1b and 2b, despite object repetition, the lack of trial-to-trial set coherence should prevent people from searching faster over time. We should find that spatial knowledge aids, but is not critical to, facilitation (see Hout & Goldinger, 2010). With respect to eye movements, the search confidence hypothesis predicts fewer fixations as a function of experience, indicating an earlier termination of search, rather than an increased ability to process individual items.

Experiment 1

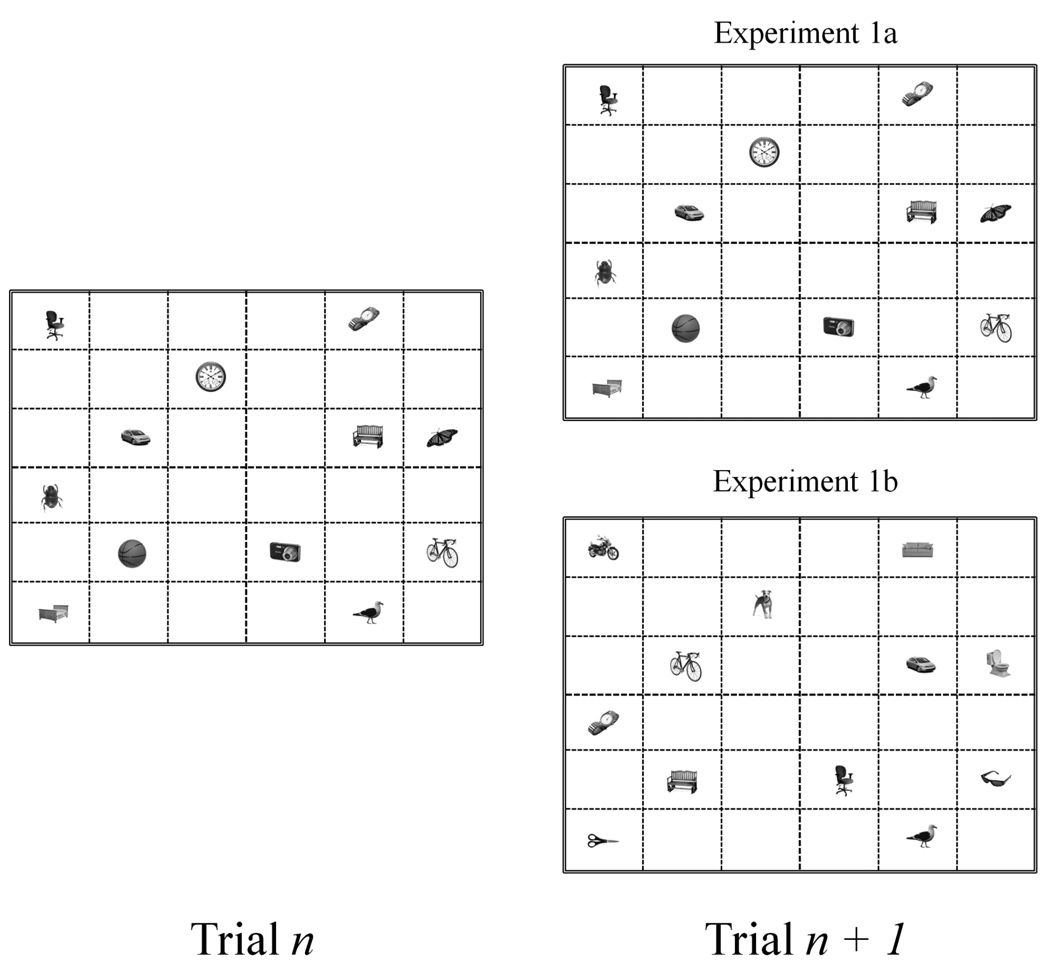

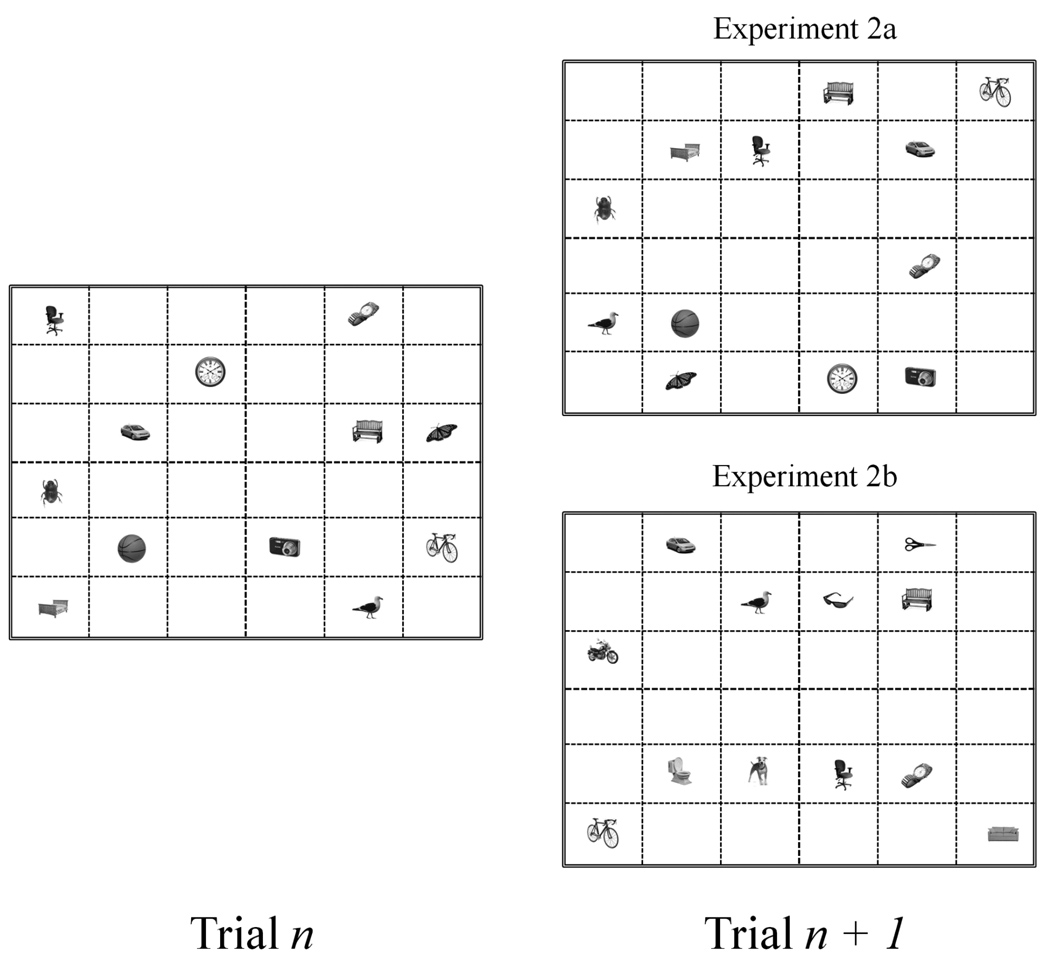

In Experiment 1, people searched for new targets (indicating their presence or absence) among repeated distractors in fixed spatial configurations. When present, the target replaced a single distractor object, and no target appeared more than once in any condition. As such, the displays conveyed no useful information about likely target location (i.e., no contextual cuing). Spatial layouts were randomly generated at the start of each block, and then held constant throughout the entire block. In Experiment 1a, distractor identities were fixed: The same non-target items appeared in consistent locations throughout each block of trials. (New distractors were used in subsequent blocks.) In Experiment 1b, distractor identities were quasi-randomized: They were not coherently grouped within sets, and could appear in any search block, in any of the fixed locations. Figure 1 shows these two forms of object consistency. Experiment 1 was thus designed to determine whether, when spatial configurations are held constant, visual search facilitation arises from familiarity with coherent search sets or from knowledge of individual search objects. Following all search trials, we administered a surprise 2AFC recognition memory test. Participants were presented with the previously seen distractor and target images, each with a semantically matching foil (e.g., if the “old” image was a shoe, the matched foil would be a new, visually distinct shoe). Randomization of search items in Experiment 1b was constrained such that the number of presentations for every item was equivalent across both experiments, making recognition memory performance directly comparable.

Figure 1.

Examples of both forms of object consistency employed in Experiment 1 (for a set size of 12 items). The left panel depicts a starting configuration. The top-right panel shows a subsequent trial with consistent object identities (Experiment 1a); the bottom-right panel shows randomized object identities (Experiment 1b). Spatial configurations were constant in both experiments. Gridlines were imaginary; they are added to the figure for clarity.

Method

Participants

One hundred and eight students from Arizona State University participated in Experiment 1 as partial fulfillment of a course requirement (eighteen students participated in each of six between-subjects conditions). All participants had normal or corrected-to-normal vision.

Design

Two levels of object consistency (consistent, Experiment 1a; inconsistent, Experiment 1b), and three levels of WM load (low, medium, high) were manipulated between-subjects. In every condition, three search blocks were presented, one for each level of set size (12, 16, or 20 images). Target presence during search (present, absent) was a within-subjects variable, in equal proportions.

Stimuli

Search objects were photographs of real-world objects, selected to avoid any obvious categorical relationships among the images. Most categories were represented by a single pair of images (one randomly selected for use in visual search, the other its matched foil for 2AFC). Images were resized, maintaining original proportions, to a maximum of 2.5° in visual angle (horizontal or vertical), from a viewing distance of 55 cm. Images were no smaller than 2.0° in visual angle along either dimension. Stimuli contained no background, and were converted to grayscale. A single object or entity was present in each image (e.g., an ice cream cone, a pair of shoes). Elimination of color, coupled with the relatively small size of individual images, minimized parafoveal identification of images.
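The stimulus sizes above follow from the standard visual-angle relation s = 2d·tan(θ/2), where s is physical size, d is viewing distance, and θ is visual angle. The sketch below applies it to the 55-cm viewing distance. The helper names are hypothetical, and the pixel conversion relies on an assumed pixels-per-centimeter value, because the visible width of the monitor is not reported here.

```python
import math

VIEWING_DISTANCE_CM = 55.0  # from the Stimuli section

def angle_to_cm(angle_deg, distance_cm=VIEWING_DISTANCE_CM):
    """Physical extent (cm) that subtends angle_deg at distance_cm: s = 2 d tan(theta / 2)."""
    return 2 * distance_cm * math.tan(math.radians(angle_deg) / 2)

def cm_to_angle(size_cm, distance_cm=VIEWING_DISTANCE_CM):
    """Inverse relation: visual angle (deg) subtended by an object of size_cm."""
    return math.degrees(2 * math.atan(size_cm / (2 * distance_cm)))

def angle_to_px(angle_deg, px_per_cm, distance_cm=VIEWING_DISTANCE_CM):
    """Convert visual angle to pixels given a (hypothetical) display pixel density."""
    return angle_to_cm(angle_deg, distance_cm) * px_per_cm

max_cm = angle_to_cm(2.5)  # largest image dimension
min_cm = angle_to_cm(2.0)  # smallest image dimension
print(f"{max_cm:.2f} cm, {min_cm:.2f} cm")  # 2.40 cm, 1.92 cm

# HYPOTHETICAL: assumes 1280 pixels span a 40-cm visible width (not reported in the text).
print(round(angle_to_px(2.5, px_per_cm=1280 / 40.0)))
```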

Apparatus

Data were collected using a Dell OptiPlex 755 PC (2.66 GHz, 3.25 GB RAM). Our display was a 21-inch NEC FE21111 CRT monitor, with resolution set to 1280×1024 and a refresh rate of 60 Hz. E-Prime v1.2 software (Schneider, Eschman, & Zuccolotto, 2002) was used to control stimulus presentation and collect responses. Eye movements were recorded by a desktop-mounted EyeLink 1000 eye-tracker (SR Research Ltd., Mississauga, Ontario, Canada). Temporal resolution was 1000 Hz, and spatial resolution was 0.01°. An eye movement was classified as a saccade when its amplitude exceeded 0.5° and its velocity reached 30°/s (or its acceleration reached 8000°/s²).
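The saccade criterion above can be illustrated with a simplified velocity/acceleration classifier. This is a sketch, not SR Research's online parser (which filters the signal before thresholding); it assumes unfiltered gaze samples in degrees at 1000 Hz, and `detect_saccades` is a hypothetical helper. Candidate samples exceed either the velocity (30°/s) or the acceleration (8000°/s²) threshold, and a run of such samples counts as a saccade only if its amplitude exceeds 0.5°.

```python
import math

SAMPLE_RATE_HZ = 1000
VEL_THRESHOLD = 30.0    # deg/s
ACC_THRESHOLD = 8000.0  # deg/s^2
MIN_AMPLITUDE = 0.5     # deg

def detect_saccades(xs, ys, fs=SAMPLE_RATE_HZ):
    """Return (start, end) sample indices of detected saccades.

    xs, ys: gaze position in degrees of visual angle, one sample every 1/fs s.
    """
    n = len(xs)
    vel = [0.0] * n
    for i in range(1, n):  # sample-to-sample velocity (deg/s)
        vel[i] = math.hypot(xs[i] - xs[i - 1], ys[i] - ys[i - 1]) * fs
    acc = [0.0] * n
    for i in range(1, n):  # sample-to-sample change in velocity (deg/s^2)
        acc[i] = abs(vel[i] - vel[i - 1]) * fs
    moving = [vel[i] >= VEL_THRESHOLD or acc[i] >= ACC_THRESHOLD for i in range(n)]

    saccades, i = [], 0
    while i < n:
        if not moving[i]:
            i += 1
            continue
        start = i
        while i < n and moving[i]:
            i += 1
        end = i - 1
        before = max(start - 1, 0)  # last sample before the candidate run
        amplitude = math.hypot(xs[end] - xs[before], ys[end] - ys[before])
        if amplitude > MIN_AMPLITUDE:
            saccades.append((start, end))
    return saccades

# Synthetic trace: fixation, a 5-deg rightward step at 250 deg/s, fixation.
xs = [0.0] * 100 + [(k + 1) / 4 for k in range(20)] + [5.0] * 100
ys = [0.0] * len(xs)
print(detect_saccades(xs, ys))  # [(100, 120)]
small = [0.0] * 50 + [0.3] * 50  # fast but only 0.3 deg: below the amplitude criterion
print(detect_saccades(small, [0.0] * 100))  # []
```

The amplitude criterion does most of the noise rejection here: with raw 1000-Hz differences, a 0.01° jitter already implies a velocity of 10°/s and can cross the acceleration threshold, but such events are discarded because they do not displace gaze by more than 0.5°.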

Procedure

Visual search

Participants completed three blocks of visual search, one for each set size (12, 16, 20 images). The six possible orders of set-size blocks were evenly counterbalanced across participants. Each block consisted of equal numbers of target-present and target-absent trials, randomly interleaved, for a total of 96 search trials across the three blocks. The number of trials per block was twice the number of items in the display, so that each item could be replaced once per block while maintaining a 50% probability of target presence. On target-present trials, the target replaced a single distractor, occupying its spatial location. In Experiment 1a, the same distractor set was repeated for an entire block (24, 32, or 40 trials), and a new distractor set was introduced for each block. In Experiment 1b, distractors were randomized and could appear in any search block, in any of the fixed locations (there were no restrictions regarding how many items could be repeated from trial n to trial n + 1). A one-minute break was given between blocks to allow participants to rest their eyes. Spatial layouts were randomly generated for each participant and held constant throughout a block of trials. Target images were randomized and used only once. Participants were not informed of any regularity regarding spatial configuration or repeated distractors.
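The trial structure above implies simple bookkeeping: a block with set size n has 2n trials, and each distractor appears on all n target-absent trials and on n − 1 of the n target-present trials, for 2n − 1 presentations (23, 31, and 39 for set sizes 12, 16, and 20). The sketch below builds an Experiment 1a-style schedule and then regroups it for an Experiment 1b-style condition. The text does not say how the quasi-random sets were generated; here we swap in checkerboard swap randomization, which preserves every trial's size and every item's total presentation count while breaking up coherent sets. `consistent_schedule` and `swap_randomize` are hypothetical names.

```python
import random
from collections import Counter

def consistent_schedule(set_sizes=(12, 16, 20), seed=0):
    """Experiment 1a-style schedule: one fixed set of distractors per block.

    For set size n a block has 2n trials: n target-absent trials that show all n
    distractors, and n target-present trials on which the target replaces a
    different distractor each time. Returns (block_index, frozenset_of_ids) pairs.
    """
    rng = random.Random(seed)
    trials, next_id = [], 0
    for block_index, n in enumerate(set_sizes):
        items = frozenset(range(next_id, next_id + n))
        next_id += n
        block = [items] * n                    # target-absent trials
        block += [items - {r} for r in items]  # target-present trials
        rng.shuffle(block)                     # interleave trial types randomly
        trials += [(block_index, t) for t in block]
    return trials

def swap_randomize(trials, n_swaps=20_000, seed=0):
    """Experiment 1b-style regrouping via checkerboard swaps (a swapped-in technique).

    Each swap moves item i from trial A to trial B and item j from B to A, so every
    trial keeps its size and every item keeps its total number of presentations.
    """
    rng = random.Random(seed)
    sets = [set(t) for _, t in trials]
    for _ in range(n_swaps):
        a, b = rng.sample(range(len(sets)), 2)
        only_a = sorted(sets[a] - sets[b])
        only_b = sorted(sets[b] - sets[a])
        if not only_a or not only_b:
            continue  # no legal swap between these two trials
        i, j = rng.choice(only_a), rng.choice(only_b)
        sets[a].remove(i)
        sets[a].add(j)
        sets[b].remove(j)
        sets[b].add(i)
    return [(block_index, frozenset(s)) for (block_index, _), s in zip(trials, sets)]

exp_1a = consistent_schedule()
exp_1b = swap_randomize(exp_1a)
counts_1a = Counter(i for _, t in exp_1a for i in t)
counts_1b = Counter(i for _, t in exp_1b for i in t)
print(len(exp_1a), len(counts_1a))      # 96 48
print(sorted(set(counts_1a.values())))  # [23, 31, 39]
print(counts_1a == counts_1b)           # True
```

Swap randomization was chosen because it satisfies the matching constraint by construction, so no rejection step is needed.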

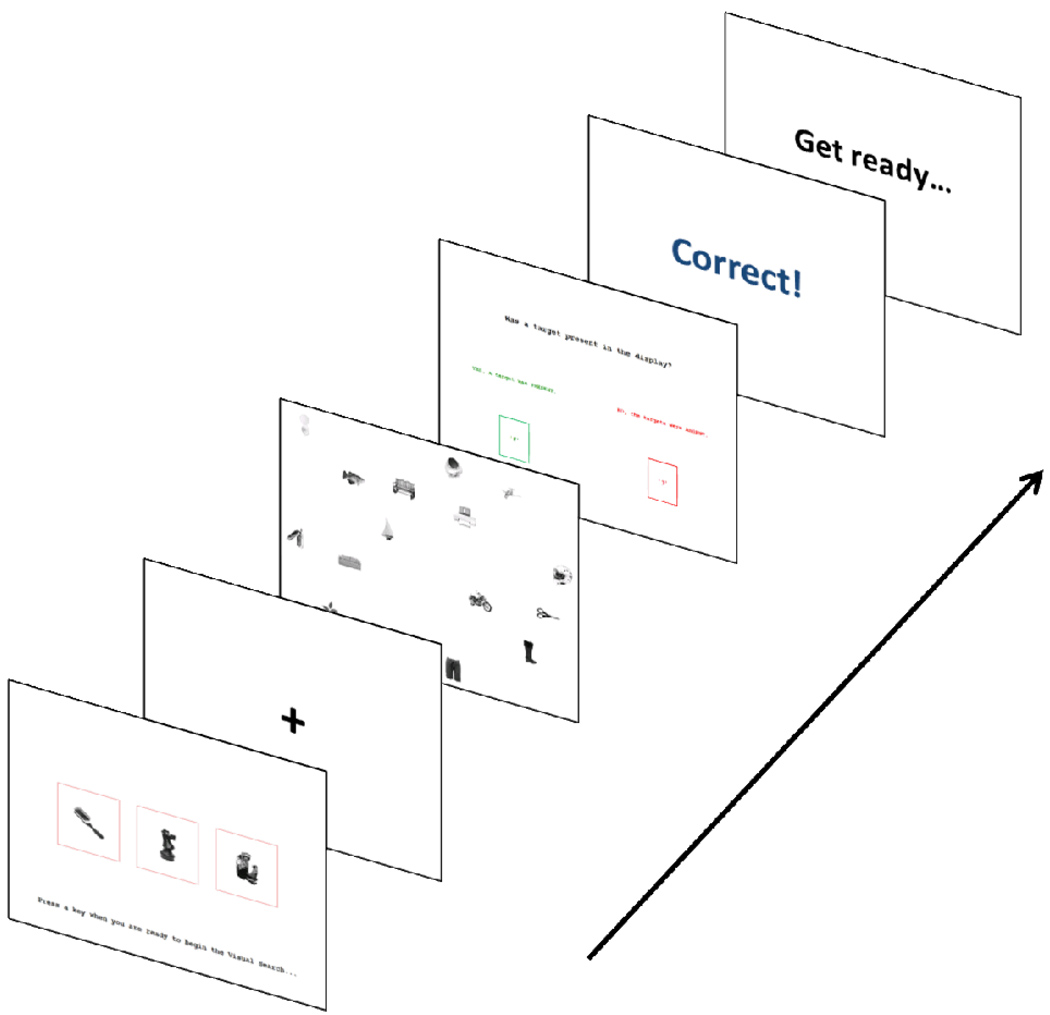

At the beginning of each trial, participants were shown one, two, or three different potential target images (low-, medium-, and high-load conditions, respectively) to be held in WM for the duration of the trial. Real-time gaze monitoring ensured that participants looked at each target for at least one second. Once a target had been viewed for long enough, the border around the image turned green, signifying that the criterion was met. When every target had a green border, the participant could begin search or continue viewing the targets until ready to begin. A spacebar press cleared the targets from the screen, replacing them with a central fixation cross. Once the cross was fixated for one second (to ensure central fixation at the start of each trial), it was replaced by the search array presented on a blank white background. Participants searched until they located a single target or determined that all targets were absent. People rested their fingers on the spacebar during search; once a decision had been reached, they used the spacebar to terminate the display and clear the images from view. Thereafter, they were prompted to indicate their decision (present, absent) using the keyboard. Brief accuracy feedback was given, followed by a one-second delay screen before the next trial began. Figure 2 shows the progression of events in a search trial.

Figure 2.

Timeline showing the progression of events for a single visual search trial. Participants were shown the target image(s) and progressed to the next screen upon key press. Next, a fixation cross was displayed and automatically cleared from the screen following 1-second fixation. The visual search array was then presented, and terminated upon spacebar press. Finally, target presence was queried, followed by 1-second accuracy feedback, and a 1-second delay prior to the start of the next trial.

Instructions emphasized accuracy over speed. Eight practice trials were given: The first six consisted of single-target search, allowing us to examine any pre-existing between-group differences by assessing participants’ search performance on precisely the same task. In order to familiarize the higher-load participants with multiple-target search, the final two practice trials involved two and three targets for the medium- and high-load groups, respectively. (The low-load group continued to search for a single target.)

Search array organization

A search array algorithm was used to create spatial configurations with pseudo-random organization. An equal number of objects appeared in each quadrant (three, four, or five, according to set size). Locations were generated to ensure a minimum of 1.5° of visual angle between adjacent images, and between any image and the edges of the screen. No images appeared in the center four locations (see Figure 1), to ensure that the participant’s gaze would never immediately fall on a target at the start of a trial.
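One way to satisfy these layout constraints is to sample cells from an imaginary grid, as in Figure 1. The sketch below assumes a 6 × 6 grid (3 × 3 cells per quadrant) with a 4° cell pitch, chosen so that images no larger than 2.5° are separated by at least 1.5°, and assumes the grid fits on the display with a 1.5° margin. The grid size, the pitch, and the helper `make_layout` are hypothetical, and the original algorithm may also have jittered positions within cells.

```python
import math
import random
from itertools import combinations

GRID = 6              # HYPOTHETICAL: 6 x 6 imaginary grid (3 x 3 cells per quadrant)
CELL_PITCH_DEG = 4.0  # HYPOTHETICAL: 2.5 deg maximum image size + 1.5 deg minimum gap
CENTER_CELLS = {(2, 2), (2, 3), (3, 2), (3, 3)}  # the four central locations are never used

def make_layout(set_size, seed=None):
    """Return (x, y) image centres in degrees relative to the screen centre.

    Places set_size / 4 images in each quadrant, never in the four central cells.
    """
    if set_size % 4:
        raise ValueError("set size must be divisible by 4 (equal images per quadrant)")
    rng = random.Random(seed)
    half = GRID // 2
    positions = []
    for q_row in (0, 1):
        for q_col in (0, 1):
            cells = [(r, c)
                     for r in range(q_row * half, (q_row + 1) * half)
                     for c in range(q_col * half, (q_col + 1) * half)
                     if (r, c) not in CENTER_CELLS]
            for r, c in rng.sample(cells, set_size // 4):
                positions.append(((c - (GRID - 1) / 2) * CELL_PITCH_DEG,
                                  (r - (GRID - 1) / 2) * CELL_PITCH_DEG))
    return positions

layout = make_layout(20, seed=1)
closest = min(math.dist(p, q) for p, q in combinations(layout, 2))
print(len(layout), closest)  # 20 4.0
```

With these assumed dimensions, every image center lies at least 6° from the screen center along the horizontal or vertical axis, so the initial central fixation never lands on a search item.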

Recognition

Following the search blocks, participants were given a surprise 2AFC recognition memory test for all objects encountered during search. Participants saw two objects at a time on a white background: one previously seen image and its matched foil, with the old image appearing equally often on each side of the screen. (At the start of the experiment, image selection was randomized such that one item from each pair was selected for use in visual search, and its partner for use as a recognition foil.) Participants indicated which image they had seen (using the keyboard), and instructions again emphasized accuracy. Brief feedback was provided. All 48 distractor-foil pairs were tested. Each of the targets that appeared in the search displays (i.e., on target-present trials) was also tested, for a total of 96 recognition trials. All pairs were pooled and their order of presentation randomized to minimize any effect of time elapsed since learning. Although distractor sets were quasi-random across trials in Experiment 1b, the number of presentations across items was constrained to match that of Experiment 1a, making the recognition results directly comparable.
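The recognition test list can be assembled from the image pairs as follows. This is a hypothetical sketch (`build_2afc_trials` and the image labels are illustrative): each studied image is paired with its matched foil, the old image appears on the left for exactly half of the trials, and distractor and target pairs are pooled and shuffled.

```python
import random

def build_2afc_trials(old_items, foils, seed=0):
    """Pair each studied image with its foil; counterbalance the side of the old image.

    old_items[k] and foils[k] are the two members of one category pair.
    """
    rng = random.Random(seed)
    n = len(old_items)
    sides = ["left"] * (n // 2) + ["right"] * (n - n // 2)
    rng.shuffle(sides)  # which pairs show the old image on the left
    trials = []
    for old, foil, side in zip(old_items, foils, sides):
        left, right = (old, foil) if side == "left" else (foil, old)
        trials.append({"left": left, "right": right, "correct": side})
    rng.shuffle(trials)  # pool distractor and target pairs in random order
    return trials

# Hypothetical labels: 48 distractors and 48 targets from target-present trials.
old = [f"distractor_{k:02d}" for k in range(48)] + [f"target_{k:02d}" for k in range(48)]
foils = [name + "_foil" for name in old]
trials = build_2afc_trials(old, foils)
print(len(trials), sum(t["correct"] == "left" for t in trials))  # 96 48
```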

Eye-tracking

Monocular sampling was used at a collection rate of 1000 Hz. Participants used a chin-rest during all search trials, and were initially calibrated to ensure accurate tracking. Periodic drift correction and recalibrations ensured accurate recording of gaze position. Interest areas (IAs) were defined as the smallest rectangular area that encompassed a given image, plus .25° of visual angle on each side (to account for error or calibration drift).
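Interest-area scoring of this kind can be sketched as follows, assuming fixation coordinates and image extents are already expressed in degrees of visual angle relative to the screen center (y increasing downward). The helpers `make_ia` and `gaze_summary` are hypothetical, not EyeLink software functions; they compute the per-image fixation counts and total gaze durations used in the recognition analyses. Because images are at least 1.5° apart, the 0.25° padding cannot make two interest areas overlap.

```python
from dataclasses import dataclass

PAD_DEG = 0.25  # padding added on each side (from the Eye-tracking section)

@dataclass(frozen=True)
class InterestArea:
    label: str
    left: float
    top: float
    right: float
    bottom: float

    def contains(self, x, y):
        return self.left <= x <= self.right and self.top <= y <= self.bottom

def make_ia(label, cx, cy, width, height, pad=PAD_DEG):
    """Smallest rectangle around an image centred at (cx, cy), padded on every side."""
    return InterestArea(label, cx - width / 2 - pad, cy - height / 2 - pad,
                        cx + width / 2 + pad, cy + height / 2 + pad)

def gaze_summary(fixations, ias):
    """Per-IA fixation counts and total gaze duration from (x, y, duration_ms) fixations."""
    counts = {ia.label: 0 for ia in ias}
    dwell = {ia.label: 0.0 for ia in ias}
    for x, y, duration in fixations:
        for ia in ias:
            if ia.contains(x, y):
                counts[ia.label] += 1
                dwell[ia.label] += duration
                break  # IAs do not overlap, so at most one can match
    return counts, dwell

# Hypothetical display: two images and four fixations (degrees, screen centre at 0, 0).
ias = [make_ia("shoe", -6.0, -2.0, 2.5, 2.0), make_ia("cone", 6.0, 2.0, 2.0, 2.5)]
# The second fixation lands just outside the shoe image but inside its padding.
fixations = [(-6.1, -2.2, 250), (-4.6, -2.0, 180), (6.0, 3.3, 300), (0.0, 0.0, 400)]
counts, dwell = gaze_summary(fixations, ias)
print(counts)  # {'shoe': 2, 'cone': 1}
print(dwell)   # {'shoe': 430.0, 'cone': 300.0}
```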

Results

As noted earlier, we examined practice trials to identify any a priori differences across load groups when all participants searched for a single target. No differences were found in search performance (accuracy, RTs) or viewing behavior (average number of fixations, average fixation duration). Similarly, we found no differences across permutation orders of the counterbalanced design. Overall error rates for visual search were very low (5%). For the sake of brevity, we do not discuss search accuracy, but results are shown in Table A1.²

Table A1.

Visual search error rates, as a function of Set Size, WM Load, Trial Type, and Epoch, from Experiment 1.

| Set Size | WM Load Group | Target-Absent, Epoch 1 | Target-Absent, Epoch 2 | Target-Absent, Epoch 3 | Target-Absent, Epoch 4 | Target-Present, Epoch 1 | Target-Present, Epoch 2 | Target-Present, Epoch 3 | Target-Present, Epoch 4 |
|---|---|---|---|---|---|---|---|---|---|
| Twelve | Low | 0% | 2% | 0% | 0% | 4% | 5% | 5% | 2% |
| Twelve | Medium | 3% | 2% | 1% | 0% | 7% | 5% | 5% | 5% |
| Twelve | High | 2% | 1% | 2% | 3% | 16% | 8% | 7% | 8% |
| Sixteen | Low | 1% | 1% | 4% | 1% | 8% | 4% | 6% | 6% |
| Sixteen | Medium | 3% | 1% | 3% | 1% | 10% | 9% | 12% | 8% |
| Sixteen | High | 4% | 2% | 2% | 0% | 12% | 12% | 8% | 10% |
| Twenty | Low | 1% | 1% | 1% | 2% | 9% | 10% | 4% | 4% |
| Twenty | Medium | 3% | 3% | 2% | 1% | 12% | 17% | 8% | 11% |
| Twenty | High | 3% | 2% | 1% | 2% | 14% | 14% | 12% | 13% |

Note. Data are presented collapsed across Experiments 1a and 1b, as they did not differ significantly.

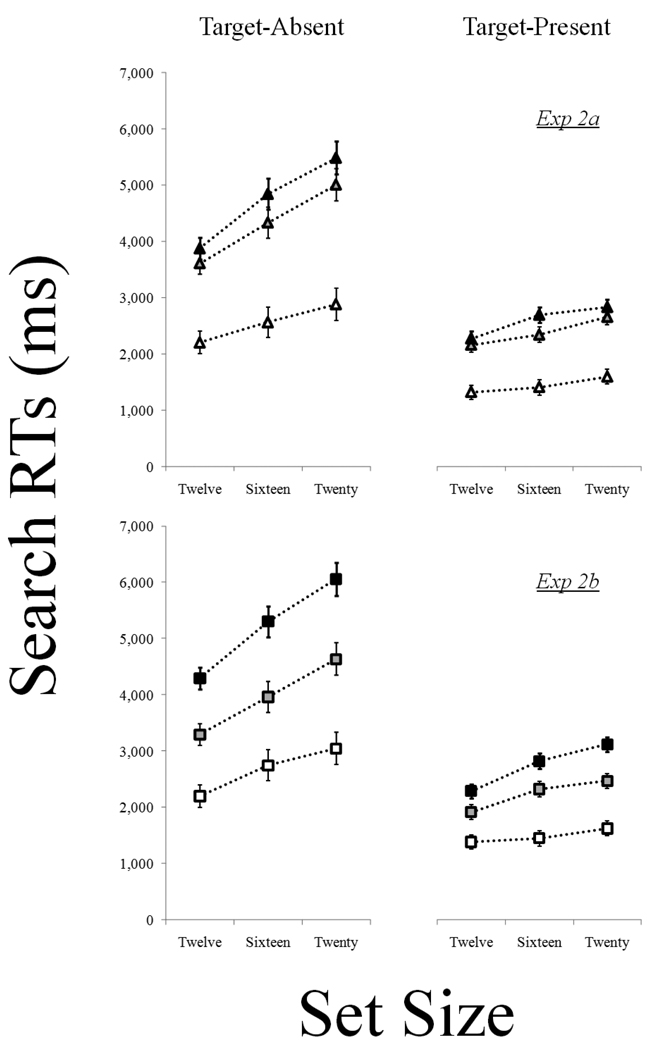

Visual search RTs

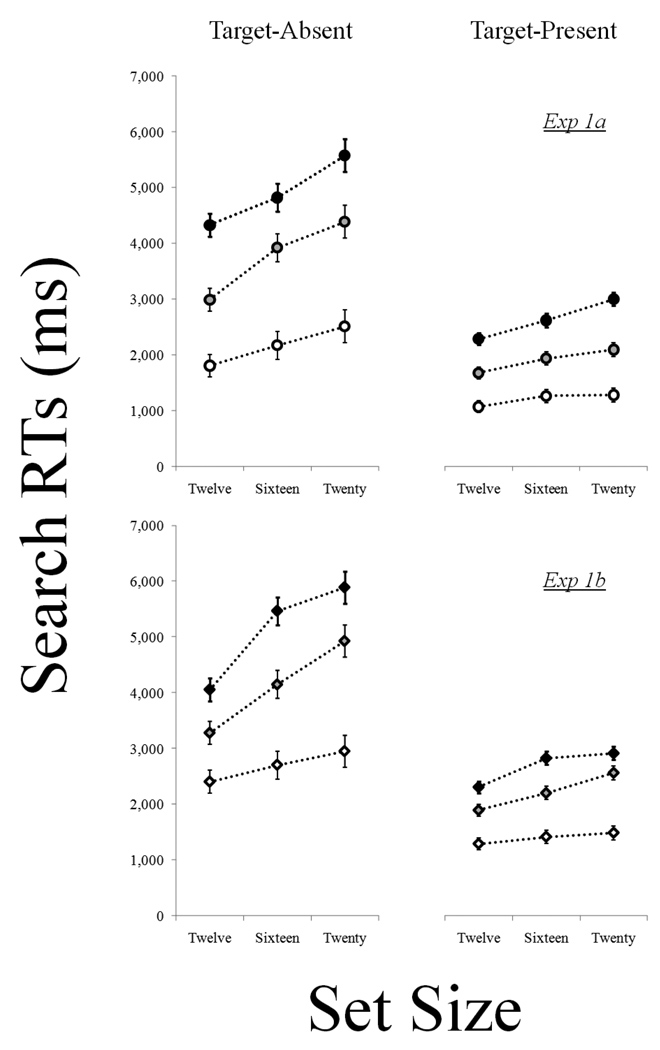

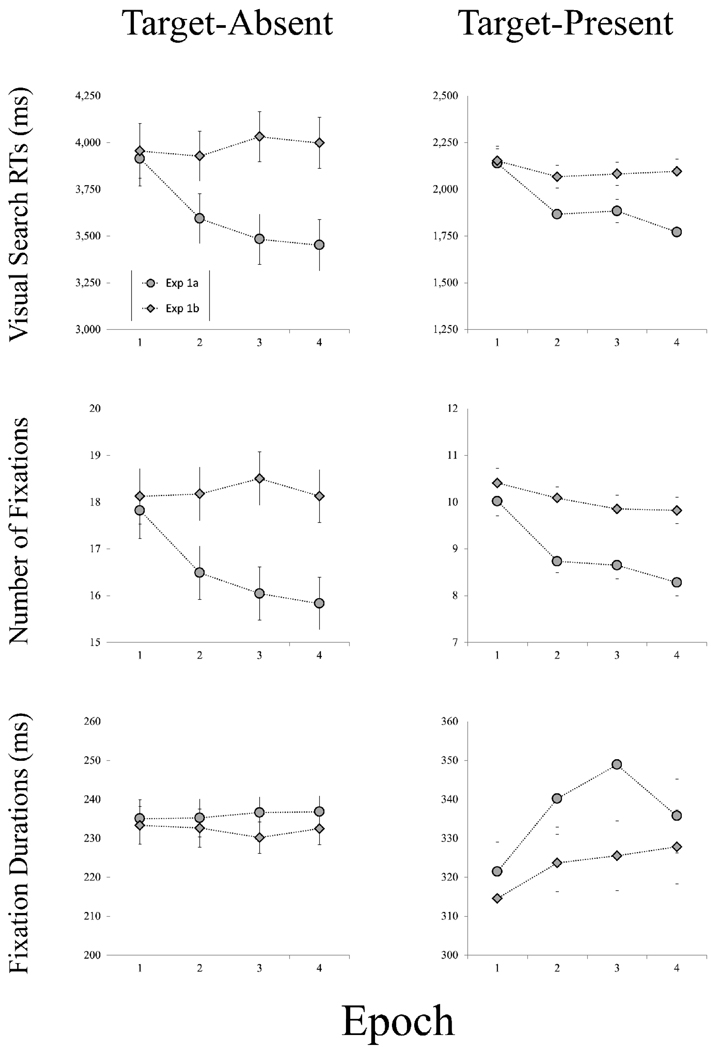

Search RTs were divided into epochs, each comprising 25% of the block’s trials of a given type. Because the number of trials per block varied with set size, each epoch contained 3, 4, or 5 trials of each type (target-present, target-absent) for set sizes 12, 16, and 20, respectively. Only RTs from accurate searches were included in the analysis. Following Endo and Takeda (2004; see also Hout & Goldinger, 2010), we examined RTs as a function of experience with the search display. A main effect of Epoch would indicate that RTs reliably changed (in this case, decreased) as the trials progressed. Although Experiment 1 had many conditions, the key results are easily summarized. Figure 3 shows mean search RTs, plotted as a function of Experiment, WM Load, Set Size, and Trial Type. As shown, search times were slower in Experiment 1b than in Experiment 1a. Participants under higher WM load searched more slowly than those under lower WM load. Search was slower with larger set sizes, and people were slower on target-absent trials than on target-present trials. Critically, reliable learning (i.e., an effect of Epoch) was observed in Experiment 1a, but not in Experiment 1b. Despite the facilitation in search RTs in Experiment 1a, there was no change in search efficiency across epochs. Figure 4 shows search RTs across epochs, plotted separately for target-present and target-absent trials, for each experiment. The second and third rows show fixation counts and average fixation durations, plotted in similar fashion; these findings are discussed next.
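The epoch split can be sketched as follows, under the reading that epochs were formed separately for each trial type (which yields the 3, 4, or 5 trials per epoch reported above). `epoch_means` is a hypothetical helper, and the block below uses synthetic RTs for illustration only.

```python
from statistics import mean

def epoch_means(trials, n_epochs=4):
    """Mean correct-trial RT per epoch, computed separately for each trial type.

    trials: chronologically ordered (trial_type, rt_ms, correct) tuples for one block.
    Each epoch holds 25% of the block's trials of a given type.
    """
    result = {}
    for trial_type in sorted({t for t, _, _ in trials}):
        of_type = [(rt, ok) for t, rt, ok in trials if t == trial_type]
        if len(of_type) % n_epochs:
            raise ValueError("trials of each type must split evenly into epochs")
        size = len(of_type) // n_epochs
        means = []
        for e in range(n_epochs):
            chunk = of_type[e * size:(e + 1) * size]
            correct = [rt for rt, ok in chunk if ok]  # error trials are excluded
            means.append(mean(correct) if correct else float("nan"))
        result[trial_type] = means
    return result

# Synthetic set-size-12 block (12 trials of each type); values are illustrative only.
block = []
for k in range(12):
    block.append(("present", 2200 - 20 * k, True))
    block.append(("absent", 3800 - 40 * k, k != 5))  # trial k = 5 is an error
print(epoch_means(block))
```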

Figure 3.

Mean visual search RTs (± one SEM) in Experiment 1 as a function of Experiment, WM Load, Set Size, and Trial Type. Results are plotted for each WM-load group, collapsed across epochs. White, gray, and black symbols represent low-, medium-, and high-load groups, respectively.

Figure 4.

Mean visual search RTs, number of fixations, and fixation durations (± one SEM) in Experiment 1 as a function of Experiment and Epoch.

RTs were entered into a 5-way, repeated-measures ANOVA, with Experiment (1a, 1b), WM Load (low, medium, high), Set Size (twelve, sixteen, twenty), Trial Type (target-present, target-absent), and Epoch (1–4) as factors. We found an effect of Experiment, F(1, 102) = 4.88, ηp² = .05, p = .03, with slower search in Experiment 1b (3039 ms) than in Experiment 1a (2763 ms). There was an effect of Load, F(2, 102) = 83.97, ηp² = .62, p < .001, with the slowest search in the high-load group (3840 ms), followed by the medium-load (3000 ms) and low-load (1862 ms) groups. There was also an effect of Set Size, F(2, 101) = 133.16, ηp² = .73, p < .001, with slower search among larger set sizes (2448, 2958, and 3297 ms for 12, 16, and 20 items, respectively). We found an effect of Trial Type, F(1, 102) = 659.67, ηp² = .87, p < .001, with participants responding more slowly to target absence (3794 ms) than to target presence (2007 ms). Of key interest, there was a main effect of Epoch, F(3, 100) = 12.33, ηp² = .27, p < .001, indicating that search RTs decreased significantly within blocks of trials (3040, 2864, 2870, and 2829 ms for epochs 1–4, respectively).
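The reported effect sizes can be cross-checked from the F statistics alone. The sketch below uses the standard identity ηp² = F·df_effect / (F·df_effect + df_error), which follows from the definitions of F and of partial eta squared; it is a general property of F tests rather than a procedure described by the authors, and the function name is ours.

```python
def partial_eta_squared(f_value, df_effect, df_error):
    """Partial eta squared recovered from an F statistic and its degrees of freedom.

    Since F = (SS_effect / df_effect) / (SS_error / df_error) and
    eta_p^2 = SS_effect / (SS_effect + SS_error), it follows that
    eta_p^2 = F * df_effect / (F * df_effect + df_error).
    """
    numerator = f_value * df_effect
    return numerator / (numerator + df_error)


# Main effects from the Experiment 1 RT analysis: (label, F, df_effect, df_error, reported eta_p^2)
reported = [
    ("Experiment", 4.88, 1, 102, .05),
    ("Load", 83.97, 2, 102, .62),
    ("Set Size", 133.16, 2, 101, .73),
    ("Trial Type", 659.67, 1, 102, .87),
    ("Epoch", 12.33, 3, 100, .27),
]

for label, f, df1, df2, eta in reported:
    print(f"{label}: recomputed {partial_eta_squared(f, df1, df2):.2f}, reported {eta:.2f}")
```

The same check applies to any F statistic in this section; because the result depends on df_effect, it is also a quick way to confirm that the numerator degrees of freedom were transcribed correctly.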

Importantly, we found an Experiment × Epoch interaction, F(3, 100) = 8.67, ηp² = .21, p < .001, indicating a steeper learning slope (i.e., the slope of the best-fitting line relating RT to Epoch) in Experiment 1a (−129 ms/epoch) than in Experiment 1b (+3 ms/epoch). The Set Size × Epoch interaction was not significant (F < 2), indicating no change in search efficiency over time. Because the effects of Epoch were of particular interest, we also tested for simple effects separately for each experiment and trial type. We found significant simple effects of Epoch on both trial types in Experiment 1a (target-absent: F(3, 49) = 10.44, ηp² = .39, p < .001; target-present: F(3, 49) = 10.88, ηp² = .40, p < .001), indicating that RTs decreased on both target-absent and target-present trials. In contrast, we found no effects of Epoch on either trial type in Experiment 1b (target-absent: F(3, 49) = 1.81, p = .16; target-present: F(3, 49) = 0.55, p = .65).
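The learning slope defined above can be sketched as an ordinary least-squares slope of the epoch means on epoch number (1–4). Applied to the pooled Experiment 1 RT means (3040, 2864, 2870, and 2829 ms), it gives −62.7 ms/epoch, close to the average of the two per-experiment slopes, (−129 + 3)/2 = −63 ms/epoch. Fitting the line to epoch means rather than to trial-level data is an assumption of this sketch.

```python
def learning_slope(epoch_means):
    """OLS slope of a measure (RT, fixation count, ...) regressed on epoch number 1..n."""
    n = len(epoch_means)
    if n < 2:
        raise ValueError("need at least two epochs")
    xs = list(range(1, n + 1))
    mean_x = sum(xs) / n
    mean_y = sum(epoch_means) / n
    # Slope = covariance(x, y) / variance(x), both as sums of deviation products
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, epoch_means))
    sxx = sum((x - mean_x) ** 2 for x in xs)
    return sxy / sxx


# Pooled Experiment 1 RT means (ms) for epochs 1-4
print(round(learning_slope([3040, 2864, 2870, 2829]), 1))  # -62.7
```

Because an OLS slope is linear in the data, averaging two groups' epoch means and then fitting gives the same slope as averaging the two groups' fitted slopes, which is why the pooled value lands near the mean of the per-experiment values.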

Eye movements

We performed time-series analyses on two eye-movement measures: number of fixations, and average fixation durations. Our questions were: 1) over time, do people demonstrate learning by making fewer fixations? And 2) do fixations become shorter as people acquire experience with the distractor items?

Number of fixations

Average fixation counts per trial were entered into a 5-way, repeated-measures ANOVA (see Visual Search RTs, above). The results mirrored those of the search RTs. Participants in Experiment 1b made more fixations than those in Experiment 1a. More fixations were also committed by higher load groups, among larger set sizes, and when the target was absent. Of particular interest, reliable effects of Epoch were found in Experiment 1a, but not Experiment 1b (see Figure 4).

Each of the main effects was significant: Experiment, F(1, 102) = 7.63, ηp² = .07, p = .01; Load, F(2, 102) = 88.17, ηp² = .63, p < .001; Set Size, F(2, 101) = 148.70, ηp² = .75, p < .001; Trial Type, F(1, 102) = 677.05, ηp² = .87, p < .001; Epoch, F(3, 100) = 11.50, ηp² = .26, p < .001. More fixations occurred in Experiment 1b (14.14), relative to Experiment 1a (12.73), and among higher load groups relative to lower load (9.08, 13.92, and 17.31 for low-, medium-, and high-load, respectively). Larger set sizes elicited more fixations (11.35, 13.67, and 15.28 for 12, 16, and 20 items, respectively), as did target-absent trials (17.39) relative to target-present (9.48). Of key interest, fixation counts decreased significantly within blocks of trials (14.09, 13.37, 13.26, and 13.02 for epochs 1–4, respectively).

Importantly, we found an Experiment × Epoch interaction, F(3, 100) = 6.78, ηp² = .17, p < .001, indicating a steeper learning slope in Experiment 1a (−.59 fixations/epoch) than in Experiment 1b (−.08 fixations/epoch). The Set Size × Epoch interaction was not significant (F < 2). We again found significant simple effects of Epoch on both trial types in Experiment 1a (target-absent: F(3, 49) = 9.12, ηp² = .36, p < .001; target-present: F(3, 49) = 13.39, ηp² = .45, p < .001), indicating that fixations decreased on both target-absent and target-present trials. We found no effects of Epoch in Experiment 1b (target-absent: F(3, 49) = 1.45, p = .24; target-present: F(3, 49) = 1.64, p = .19).

Average fixation durations

The average fixation durations were entered into a 5-way, repeated-measures ANOVA (identical to that for number of fixations). The main effect of Experiment was not significant, F(1, 102) = 1.68, p = .20. Each of the remaining four main effects was reliable: Load, F(2, 102) = 26.88, ηp² = .35, p < .001; Set Size, F(2, 101) = 25.73, ηp² = .34, p < .001; Trial Type, F(1, 102) = 715.11, ηp² = .88, p < .001; Epoch, F(3, 100) = 3.92, ηp² = .11, p = .01. When search RTs were longer (among higher load groups, larger set sizes, and target-absent trials), average fixation durations were shorter. Fixation durations were shortest among high-load participants (260 ms), followed by medium-load (270 ms) and low-load (316 ms) participants. Durations were shorter when search was conducted among more items (291, 283, and 272 ms for twelve, sixteen, and twenty items, respectively), and on target-absent trials (234 ms) relative to target-present trials (330 ms). Of key interest, and contrary to the RID hypothesis (which predicted decreasing fixation durations over epochs), average fixation durations changed significantly within blocks, increasing slightly (276, 283, 285, and 283 ms for epochs 1–4, respectively).

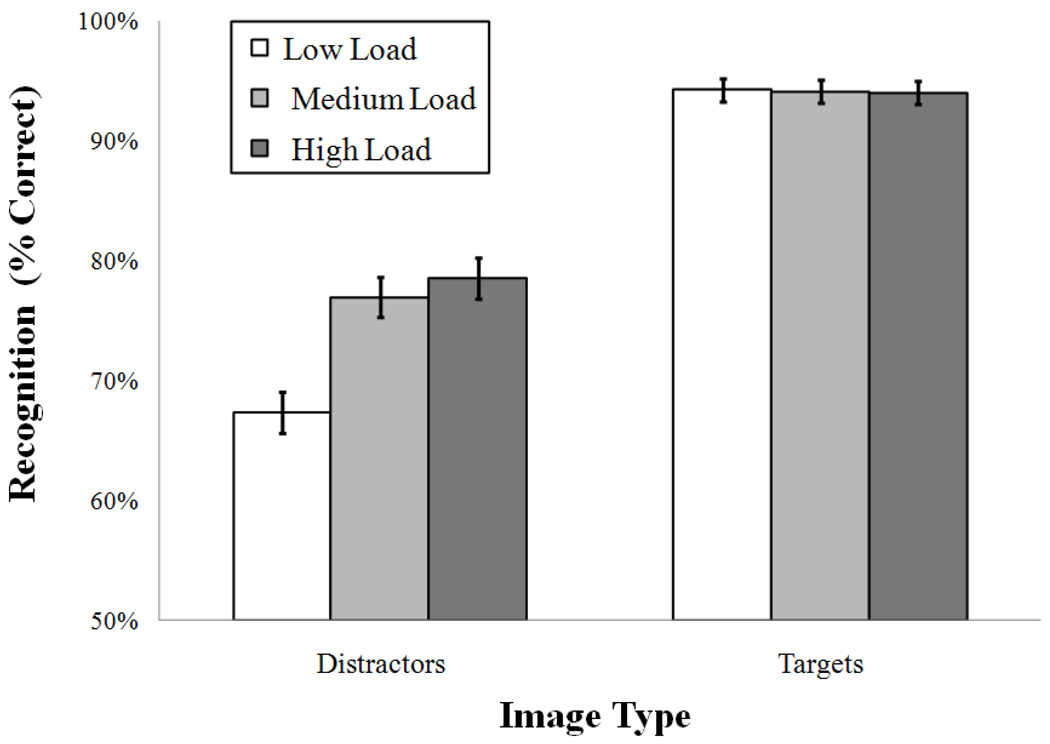

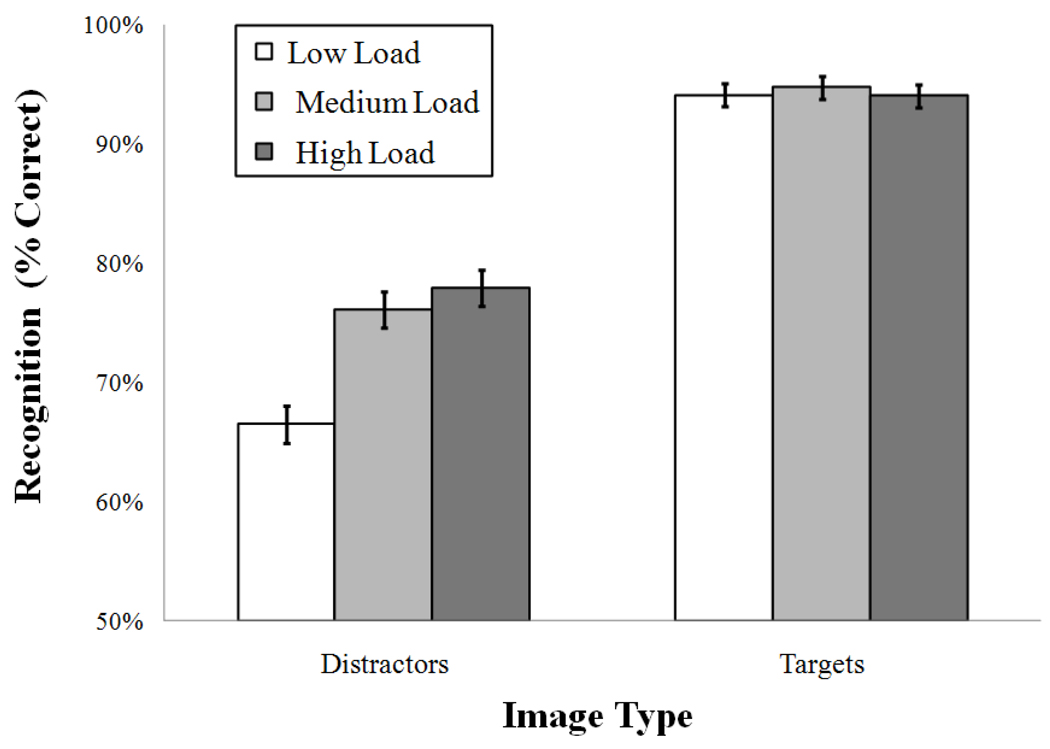

Recognition

Recognition accuracy was entered into a 3-way ANOVA with Experiment, WM Load, and Image Type (target, distractor) as factors. Figure 5 shows recognition accuracy as a function of Load and Image Type. As shown, higher load groups remembered more items than lower load groups, and targets were remembered better than distractors. In all cases, search items were remembered at levels exceeding chance (50%). The main effect of Experiment was not significant, F(1, 102) = 2.82, p = .10. We found a main effect of Load, F(2, 102) = 7.36, ηp² = .13, p < .001, with high- and medium-load participants performing best (both 86%), relative to low-load participants (81%). There was a main effect of Image Type, F(1, 102) = 444.36, ηp² = .81, p < .001, with better performance on targets (94%) than on distractors (74%). We also found a Load × Image Type interaction, F(2, 102) = 14.38, ηp² = .22, p < .001. Planned comparisons revealed that recognition of target images (94% for each load group) did not differ across load groups (F < 1). Memory for distractors, however, varied as a function of Load, F(2, 102) = 12.97, ηp² = .20, p < .001 (67%, 77%, and 79% for low-, medium-, and high-load, respectively).

Figure 5.

Recognition performance (± one SEM) for targets and distractors, shown as a function of WM-load, collapsed across Experiments 1a and 1b.

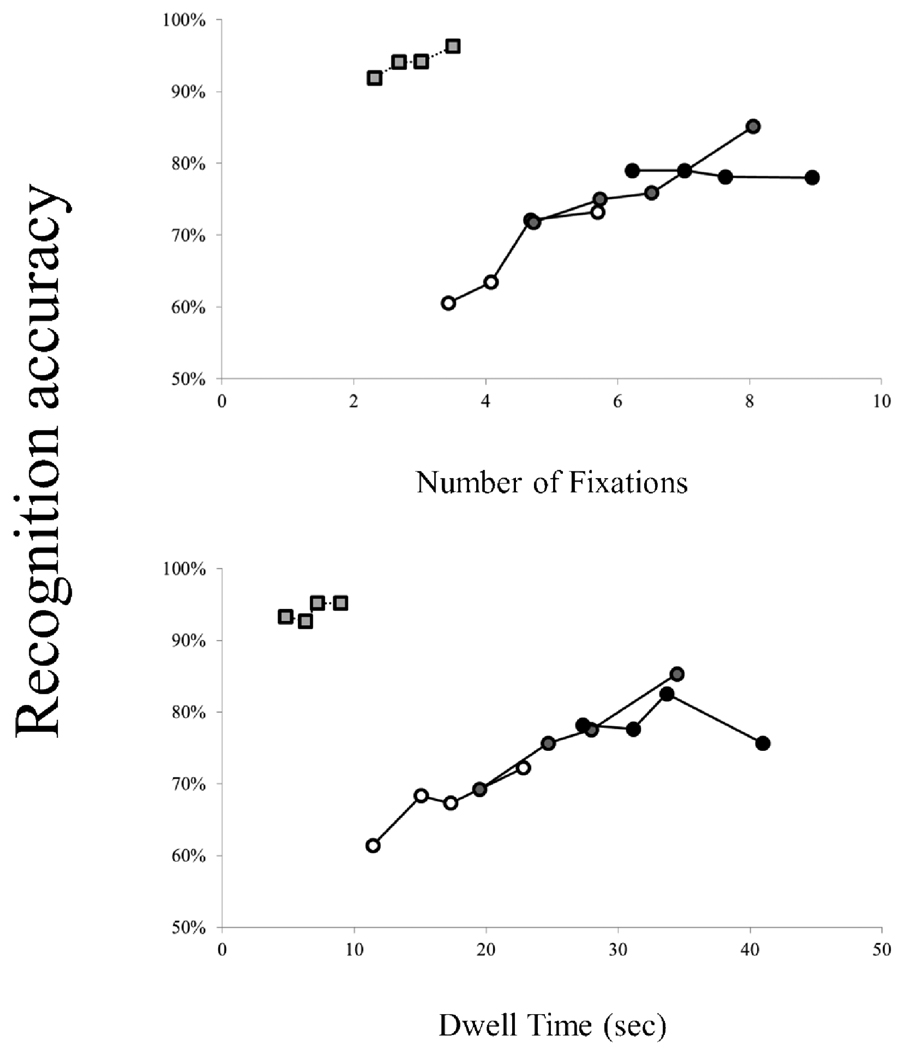

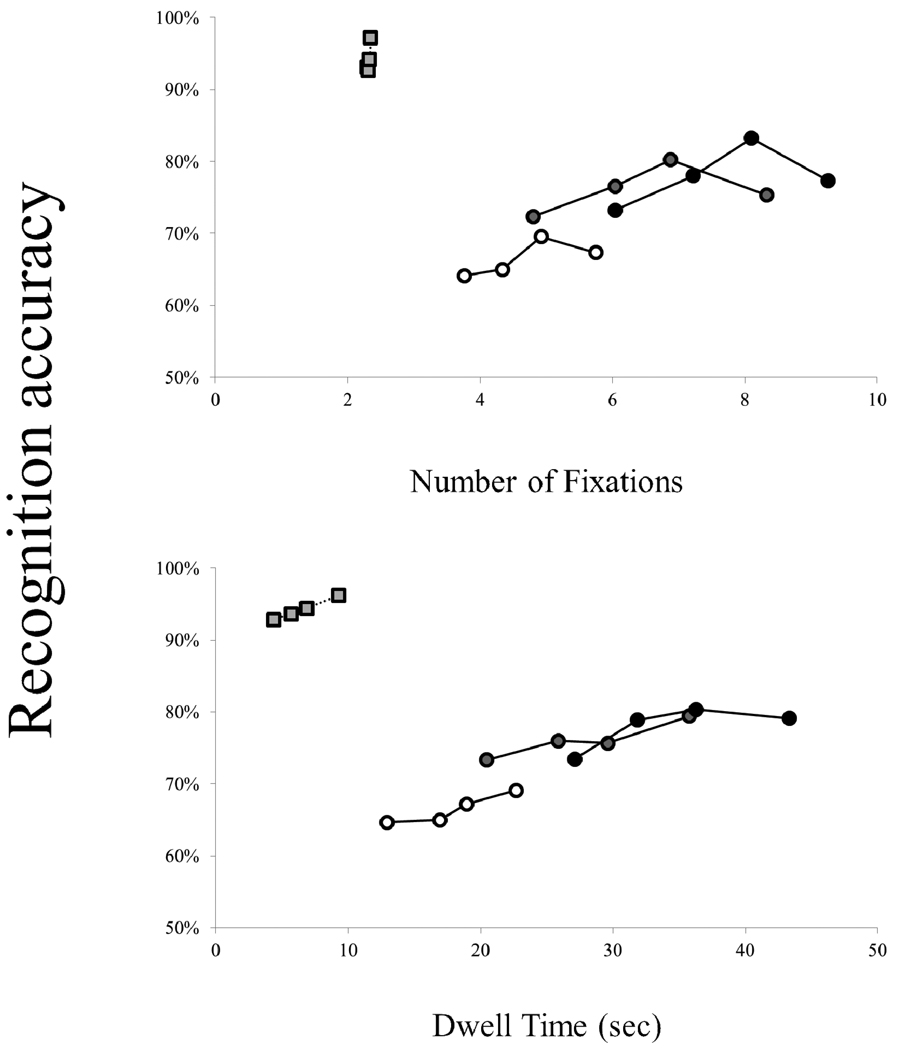

Regression analyses

We examined the relationship of eye movements to recognition memory performance by regressing the probability of correctly remembering an item on the total number of fixations and the total dwell time (the sum of all fixation durations) that the item received. Because each participant contributed multiple data points, we included a “participant” factor as the first predictor in the regression model (Hollingworth & Henderson, 2002). In addition to the eye-movement measures, we included predictors for the between-subjects factors of Experiment and WM Load. Following Williams et al. (2005), we simplified presentation by dividing the data into quartile bins and plotting them against the mean recognition accuracy for each quartile. Because we are primarily interested in the relationship between viewing behavior and visual memory, Figure 6 presents recognition memory performance for distractors and targets as a function of total number of fixations (top panel) and total dwell time (bottom panel).
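The quartile binning used for presentation can be sketched as follows: items are sorted by an exposure measure (total fixations or total dwell time), split into four equal-count bins, and summarized by mean exposure and proportion correctly recognized. This is an illustrative implementation, not the authors' code; in Figure 6 it would be applied separately to each load group, and the tie-handling rule (ties keep their sorted order) is an assumption.

```python
from statistics import mean


def quartile_bins(exposure, remembered, n_bins=4):
    """Split items into equal-count bins by exposure and summarize each bin.

    exposure: per-item viewing measure (e.g., total dwell time or fixation count).
    remembered: per-item recognition outcome, 1 (correct) or 0 (incorrect).
    Returns one (mean exposure, proportion correct) pair per bin, lowest bin first.
    """
    if len(exposure) != len(remembered):
        raise ValueError("inputs must have the same length")
    if len(exposure) < n_bins:
        raise ValueError("need at least one item per bin")
    # Sort items by exposure; ties keep their original relative order (assumption)
    pairs = sorted(zip(exposure, remembered), key=lambda p: p[0])
    n = len(pairs)
    bins = [[] for _ in range(n_bins)]
    for rank, pair in enumerate(pairs):
        bins[rank * n_bins // n].append(pair)  # rank-based, equal-count assignment
    return [(mean(e for e, _ in b), mean(r for _, r in b)) for b in bins]
```

Rank-based binning guarantees roughly equal numbers of items per bin even when the exposure distribution is skewed, which is typical of dwell times.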

Figure 6.

Probability of correct recognition of distractors and targets, as a function of total number of fixations, and total dwell time, from Experiment 1. Results were divided into quartile bins for each load group, and plotted against the mean accuracy per quartile. Memory for distractors is indicated by white, gray, and black circles for low-, medium-, and high-load groups, respectively. Squares indicate memory for targets, collapsed across WM Load.

In order to determine which measurement best predicted visual memory, we entered the predictors using a stepwise procedure, following the participant factor (as in Williams, 2009). This allowed us to determine which predictor(s) accounted for significant variance in the model when each factor was considered. For the distractors, a regression model with a single factor, dwell time, accounted for the greatest change in explained variance, Fchange(1, 105) = 31.04, R² = .27, p < .001, with no other significant predictors. Similarly, for the targets, the best model contained a single predictor, dwell time, Fchange(1, 106) = 9.95, R² = .09, p < .01, with no other significant predictors.
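A single step of the hierarchical procedure described above (entering an eye-movement predictor after the participant factor and testing the change in R²) can be sketched with NumPy least squares. This assumes an ordinary least-squares model of the 0/1 recognition outcome with dummy-coded participants; the authors' software and stepwise entry criteria are not specified here, and the simulated data below are purely hypothetical.

```python
import numpy as np


def r_squared(X, y):
    """R^2 of an OLS fit of y on the columns of X plus an intercept."""
    X1 = np.column_stack([np.ones(len(y)), X])
    beta, *_ = np.linalg.lstsq(X1, y, rcond=None)
    residuals = y - X1 @ beta
    ss_res = float(residuals @ residuals)
    ss_tot = float(((y - y.mean()) ** 2).sum())
    return 1.0 - ss_res / ss_tot


def f_change(X_reduced, X_full, y):
    """Change in R^2 and the F-change test for adding predictors to a nested model.

    Assumes X_full holds every column of X_reduced plus the new predictor(s),
    and that both design matrices (2-D arrays) have full column rank.
    """
    n = len(y)
    r2_reduced = r_squared(X_reduced, y)
    r2_full = r_squared(X_full, y)
    df1 = X_full.shape[1] - X_reduced.shape[1]  # number of predictors added
    df2 = n - X_full.shape[1] - 1               # residual df of the full model
    f_value = ((r2_full - r2_reduced) / df1) / ((1.0 - r2_full) / df2)
    return r2_full - r2_reduced, f_value, df1, df2


# Hypothetical data: 5 participants x 20 items, recognition coded 0/1
rng = np.random.default_rng(0)
participant = np.repeat(np.arange(5), 20)
dummies = (participant[:, None] == np.arange(1, 5)).astype(float)  # participant 0 = reference
dwell = rng.gamma(shape=2.0, scale=500.0, size=participant.size)   # simulated dwell times (ms)
p_correct = np.clip(0.4 + dwell / 5000.0, 0, 1)
remembered = (rng.random(participant.size) < p_correct).astype(float)

delta_r2, f_value, df1, df2 = f_change(dummies, np.column_stack([dummies, dwell]), remembered)
print(f"delta R^2 = {delta_r2:.3f}, F({df1}, {df2}) = {f_value:.2f}")
```

Entering the participant dummies first means the F-change for dwell time reflects only within-participant variation in viewing, not overall differences in how well each participant remembered items.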

Block effects

We examined practice effects across blocks to ascertain whether performance changed over the course of the experiment (see Table A2). We found no effect of Block on search accuracy or RTs (Fs < 1). Regarding eye movements, there was no effect of Block on the number of fixations (F < 2). However, we did find an effect of Block on average fixation durations, F(2, 106) = 17.09, ηp² = .24, p < .001, indicating longer durations across blocks.

Table A2.

Search performance (accuracy, RTs) and viewing behavior (number of fixations, average fixation duration), as a function of Experiment and Block Number.

| Dependent Measure | Block 1 | Block 2 | Block 3 |
|---|---|---|---|
| Experiment 1 | | | |
| Search accuracy (% error) | 5% | 5% | 5% |
| Search RTs (ms) | 2970 | 2929 | 2895 |
| Average number of fixations | 13.73 | 13.41 | 13.18 |
| Average fixation duration (ms)* | 272 | 284 | 290 |
| Experiment 2 | | | |
| Search accuracy (% error) | 6% | 5% | 5% |
| Search RTs (ms)* | 3196 | 3018 | 2974 |
| Average number of fixations* | 14.77 | 13.84 | 13.56 |
| Average fixation duration (ms)* | 266 | 284 | 280 |

\* p < .05.

Discussion

The results of Experiment 1 carry three implications: 1) Memory for search items is incidentally acquired over time, and contributes to RT facilitation when the search set is coherently grouped. 2) Even when search is faster, it does not become any more efficient (Oliva et al., 2004; Wolfe et al., 2000). And 3) RTs are speeded by a decrease in the number of fixations required to complete search, without a concomitant change in individual item processing.

When distractor sets were coherently grouped, RTs decreased across trials, consistent with our previous work (Hout & Goldinger, 2010). Conversely, when search sets were randomized across blocks, RTs remained flat. Despite finding significant facilitation (in Experiment 1a), we found no evidence for increased search efficiency (as measured by the slope of RT by set size) over time. Participants thus responded more quickly, without increasing the efficiency of attentional deployment. Moreover, search facilitation was best explained by participants making fewer fixations per trial as the search sets were learned. This result is consistent with Peterson and Kramer (2001), who found that contextual cueing resulted in participants committing fewer fixations. On the other hand, average fixation durations did not decrease across epochs (if anything, they increased slightly), suggesting that participants did not become faster at processing individual items, despite having learned their identities.

Given these results, how did our three hypotheses fare? First, because fixation durations did not decrease across epochs, there is little support for the RID hypothesis, which predicted more fluent identification and dismissal of distractors. The spatial mapping hypothesis was supported by Experiment 1a, as facilitation occurred by way of participants committing fewer fixations. However, this hypothesis also predicted facilitation in Experiment 1b (because spatial configurations were held constant), which did not occur. Moreover, we conducted a subsequent analysis, examining refixation probability as a function of “lag” (see Peterson et al., 2001) and epoch. For each refixation, we recorded the number of intervening fixations since the initial visit, and examined whether the distribution of refixations over lags changed as a function of epoch, as the displays were learned. If the spatial mapping hypothesis is correct, then as search RTs decreased, refixations should have shifted toward longer and longer lags. That is, participants might get better at avoiding recently fixated items, committing refixations only when the original visit occurred far in the past. However, we found no evidence that refixation probability distributions changed over time, suggesting that participants did not become any better at avoiding recently fixated locations, which seems to argue against spatial mapping.
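The lag measure can be sketched as below. Each trial is represented (hypothetically) as the sequence of item IDs fixated, and the lag of a refixation is the number of intervening fixations since that item's initial visit; computing the distribution separately for each epoch's trials would allow the comparison described above. Whether consecutive fixations on the same item were merged into a single gaze beforehand is not stated, so this sketch counts them as lag-0 refixations.

```python
from collections import Counter


def refixation_lags(fixated_items):
    """Lags for every refixation in one trial.

    fixated_items: item IDs in fixation order (one entry per fixation).
    Lag = number of intervening fixations between the item's initial visit and
    the refixation. Counting from the initial (rather than the most recent)
    visit follows the wording of the text; the two coincide for second visits.
    """
    first_visit = {}
    lags = []
    for position, item in enumerate(fixated_items):
        if item in first_visit:
            lags.append(position - first_visit[item] - 1)
        else:
            first_visit[item] = position
    return lags


def lag_distribution(trials):
    """Proportion of all refixations occurring at each lag, pooled over trials (e.g., one epoch)."""
    counts = Counter(lag for trial in trials for lag in refixation_lags(trial))
    total = sum(counts.values())
    return {lag: count / total for lag, count in sorted(counts.items())}


# Hypothetical epoch with two trials
example_epoch = [["a", "b", "c", "a"], ["d", "e", "d", "f", "e"]]
print(lag_distribution(example_epoch))
```

Under spatial mapping, the probability mass of these distributions would be expected to move toward longer lags in later epochs; a stable distribution across epochs is the null pattern the text reports.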

The search confidence hypothesis fared best with the results of Experiment 1. As it predicts, we found search facilitation under coherent set conditions, and no facilitation when search sets were randomized. Also of key importance, search facilitation occurred to equivalent degrees in both target-present and target-absent trials. While this finding is consistent with all three hypotheses, it suggests that search facilitation did not entirely derive from perceptual or attentional factors. Performance was improved only when distractors were coherently grouped, but without any evidence that people processed them more quickly on an individual basis. Because fixations decreased within blocks (in Experiment 1a), without a concurrent shift in the refixation probability distribution, it appears that search was terminated more quickly as displays grew more familiar. Although the RID hypothesis appears disconfirmed by Experiment 1, we cannot rule out the spatial mapping hypothesis. Experiment 2 was designed to further adjudicate between the spatial mapping and search confidence hypotheses.

Additionally, the results of Experiment 1 replicated and extended several previous findings. Not surprisingly, search was slower in larger sets (e.g., Palmer, 1995), and when targets were absent (e.g., Chun & Wolfe, 1996). Increasing WM load elicited slower, more difficult search, but increased incidental retention of distractor objects (Hout & Goldinger, 2010). Our surprise recognition test showed that search objects were retained at above-chance levels, including distractors that were learned only incidentally during search, and that recognition was much higher for targets than for distractors (Williams, 2009). Although participants had many opportunities to view the distractors (and saw targets only once), it seems that intentionally encoding the targets prior to search created robust visual memories for their appearance.

Early work by Loftus (1972) indicated that the number of fixations on an object was a good predictor of its later recognition, a finding that has often been replicated (Hollingworth, 2005; Hollingworth & Henderson, 2002; Melcher, 2006; Tatler, Gilchrist, & Land, 2005). Although we found a trend toward better memory with more fixations, our stepwise regression analyses indicated that memory was better predicted by a model with a single predictor of total dwell time. Our findings complement those of Williams (2009), who found that distractor memory appears to be based on cumulative viewing experience. With respect to WM load, one might argue that memory was better among higher load groups simply because they viewed the items longer and more often. Indeed, our WM load manipulation caused participants to make more fixations (consistent with Peterson, Beck, & Wong, 2008) and thus to spend more time on the items. In a previous experiment (Hout & Goldinger, 2010), however, we used a single-object search stream with constant presentation times, equating stimulus exposure across WM load conditions. Higher WM loads still resulted in better memory for search items, suggesting that loading WM enhances incidental retention of visual details, perhaps by increasing the scrutiny with which items are examined.

Experiment 2

In Experiment 2, we removed the ability to guide search through the learning of consistent spatial configurations by randomizing the layout on each trial. In Experiment 2a, object identities remained constant within each block of trials: Participants could use experience with the distractors to improve search, but could not use any predictable spatial information. In Experiment 2b, object identities were randomized within and across blocks, as in Experiment 1b (see Figure 7). The spatial mapping hypothesis predicts that search RTs will not decrease without spatial consistency, whereas search confidence predicts that facilitation will still occur (in Experiment 2a) through the learning of coherent distractor sets. No such learning should occur in Experiment 2b. As before, the RID hypothesis predicts that equivalent facilitation should occur in both conditions.
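To make the Experiment 2 manipulation concrete, the sketch below generates one trial's array under each identity condition: object identities either stay fixed for the block (Experiment 2a) or are redrawn on each trial (Experiment 2b), while grid positions are redrawn on every trial in both. The stimulus pool, the 6 × 8 grid, and the function name are hypothetical, and how targets were inserted into the array is not modeled.

```python
import random


def make_display(object_pool, block_set, set_size, grid_cells, consistent_identities, rng):
    """Map object identities to randomly chosen grid cells for one trial (hypothetical helper).

    consistent_identities=True mimics Experiment 2a (same objects on every trial
    of a block); False mimics Experiment 2b (objects redrawn on every trial).
    In both cases the spatial layout is redrawn on every trial.
    """
    if consistent_identities:
        if len(block_set) != set_size:
            raise ValueError("block set must match the set size")
        objects = list(block_set)
    else:
        objects = rng.sample(object_pool, set_size)
    cells = rng.sample(grid_cells, set_size)  # new random layout each trial
    return dict(zip(objects, cells))


rng = random.Random(1)
pool = [f"object_{i}" for i in range(200)]                     # hypothetical stimulus pool
grid = [(row, col) for row in range(6) for col in range(8)]    # hypothetical imaginary grid
block_set = rng.sample(pool, 12)

trial_a = make_display(pool, block_set, 12, grid, True, rng)   # Experiment 2a-style trial
trial_b = make_display(pool, block_set, 12, grid, True, rng)   # same identities, new layout
trial_c = make_display(pool, block_set, 12, grid, False, rng)  # Experiment 2b-style trial
```

Separating the identity draw from the location draw mirrors the logic of the design: only the identity rule differs between 2a and 2b, so any difference in learning can be attributed to object consistency.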

Figure 7.

Examples of both forms of object consistency employed in Experiment 2 (for a set size of 12 items). The left panel depicts a starting configuration. The top-right panel shows a subsequent trial with consistent object identities (Experiment 2a); the bottom-right panel shows randomized object identities (Experiment 2b). Spatial configurations were randomized on every trial, in both experiments. Gridlines were imaginary; they are added to the figure for clarity.

Method

One hundred and eight new students from Arizona State University participated in Experiment 2 as partial fulfillment of a course requirement. The method was identical to that of Experiment 1, except that the spatial layout of the search array was randomized on each trial.

Results

As in Experiment 1, we examined practice trials to gauge any a priori group differences under identical task conditions. We found no differences in search performance or viewing behaviors. Similarly, we found no differences across permutation orders of the counterbalanced design. Overall error rates for visual search were very low (6%; see Table A3). 3

Table A3.

Visual search error rates, as a function of Set Size, WM Load, Trial Type, and Epoch, from Experiment 2.

| Set Size | WM Load Group | Target-Absent, Epoch 1 | Target-Absent, Epoch 2 | Target-Absent, Epoch 3 | Target-Absent, Epoch 4 | Target-Present, Epoch 1 | Target-Present, Epoch 2 | Target-Present, Epoch 3 | Target-Present, Epoch 4 |
|---|---|---|---|---|---|---|---|---|---|
| Twelve | Low | 0% | 2% | 0% | 0% | 4% | 5% | 5% | 2% |
| Twelve | Medium | 3% | 2% | 1% | 0% | 7% | 5% | 5% | 5% |
| Twelve | High | 2% | 1% | 2% | 3% | 16% | 8% | 7% | 8% |
| Sixteen | Low | 1% | 1% | 4% | 1% | 8% | 4% | 6% | 6% |
| Sixteen | Medium | 3% | 1% | 3% | 1% | 10% | 9% | 12% | 8% |
| Sixteen | High | 4% | 2% | 2% | 0% | 12% | 12% | 8% | 10% |
| Twenty | Low | 1% | 1% | 1% | 2% | 9% | 10% | 4% | 4% |
| Twenty | Medium | 3% | 3% | 2% | 1% | 12% | 17% | 8% | 11% |
| Twenty | High | 3% | 2% | 1% | 2% | 14% | 14% | 12% | 13% |

Note. Data are presented collapsed across Experiments 2a and 2b, as they did not differ significantly.

Visual search RTs

Visual search RTs were entered into a 5-way ANOVA, with Experiment, Load, Set Size, Trial Type and Epoch as factors, as in Experiment 1. Figure 8 presents mean search times, plotted as a function of Experiment, Load, Set Size, and Trial Type. The key results are easily summarized: Search was slower as WM load increased, and when set sizes increased. RTs were slower on target-absent trials, relative to target-present. Of particular interest, we found reliable effects of Epoch in Experiment 2a, but not in Experiment 2b (see Figure 9). As before, we did not find any change in search efficiency over time.

Figure 8.

Mean visual search RTs (± one SEM) in Experiment 2, as a function of Experiment, Load, Set Size, and Trial Type. White, gray, and black symbols represent low-, medium-, and high-load groups, respectively.

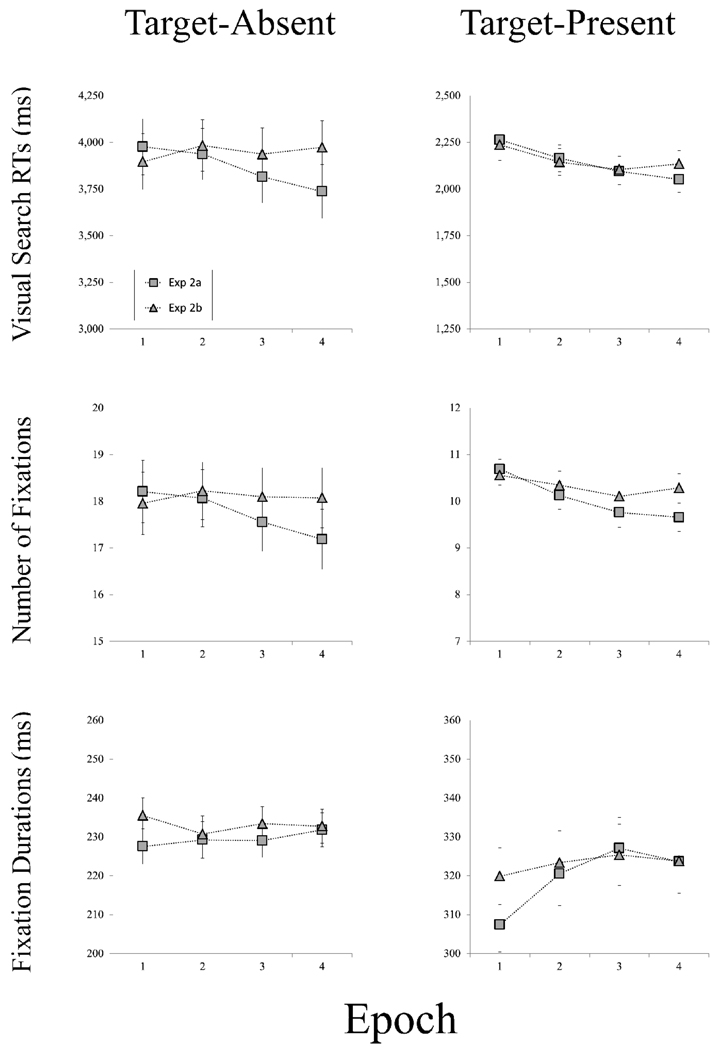

Figure 9.

Mean visual search RTs, number of fixations, and fixation durations (± one SEM) in Experiment 2, as a function of Experiment and Epoch.

The main effect of Experiment was not significant, F(1, 102) < 1, p = .73. There was an effect of Load, F(2, 102) = 62.56, ηp² = .55, p < .001, with slower search among higher load groups (2035, 3225, and 3824 ms for low-, medium-, and high-load groups, respectively). We found an effect of Set Size, F(2, 101) = 154.99, ηp² = .75, p < .001, with slower search among larger set sizes (2569, 3065, and 3451 ms for 12, 16, and 20 items, respectively). There was an effect of Trial Type, F(1, 102) = 605.39, ηp² = .86, p < .001, with slower responding to target absence (3907 ms) than to target presence (2149 ms). Finally, there was a main effect of Epoch, F(3, 100) = 4.02, ηp² = .11, p < .01, indicating that search RTs decreased significantly within blocks of trials (3093, 3057, 2988, and 2974 ms for epochs 1–4, respectively).

We found a marginally significant Experiment × Epoch interaction, F(3, 100) = 2.38, ηp² = .07, p = .07, with a steeper learning slope in Experiment 2a (−77 ms/epoch) than in Experiment 2b (−8 ms/epoch). The Set Size × Epoch interaction was not significant (F < 2), indicating no change in search efficiency over time. Simple effects testing revealed significant effects of Epoch on both trial types in Experiment 2a (target-absent: F(3, 49) = 4.07, ηp² = .20, p = .01; target-present: F(3, 49) = 3.66, ηp² = .18, p = .02) but no effects of Epoch on either trial type in Experiment 2b (target-absent: F(3, 49) = 1.21, p = .32; target-present: F(3, 49) = 1.45, p = .24).

Eye movements

The second and third panels of Figure 9 show mean fixation counts and durations, as a function of Trial Type and Experiment. We entered fixation counts and average fixation durations into 5-way, repeated-measures ANOVAs, as in Experiment 1. The fixation counts again mirrored the search RTs: Higher load participants committed more fixations, people made more fixations to larger set sizes, and more fixations were recorded during target-absent search, relative to target-present. Reliable effects of Epoch were found in Experiment 2a, but not Experiment 2b.

Number of fixations

The main effect of Experiment was not significant, F(1, 102) < 1, p = .61. Each of the other main effects was significant: Load, F(2, 102) = 68.90, ηp² = .57, p < .001; Set Size, F(2, 101) = 147.36, ηp² = .74, p < .001; Trial Type, F(1, 102) = 549.62, ηp² = .84, p < .001; Epoch, F(3, 100) = 4.51, ηp² = .12, p < .01. More fixations occurred among higher load groups relative to lower load (9.65, 14.59, and 17.93 for low-, medium-, and high-load, respectively) and larger set sizes (11.94, 14.24, and 15.99 for 12, 16, and 20 items, respectively). There were more fixations on target-absent trials (17.92) relative to target-present (10.19), and the number of fixations decreased significantly within blocks of trials (14.35, 14.19, 13.88, and 13.80 for epochs 1–4, respectively).

Again, we found a marginal Experiment × Epoch interaction, F(3, 100) = 2.5, ηp² = .07, p = .06, suggesting a steeper learning slope in Experiment 2a (−.35 fixations/epoch) relative to Experiment 2b (−.04 fixations/epoch). We again found significant simple effects of Epoch on both trial types in Experiment 2a (target-absent: F(3, 49) = 3.23, ηp² = .17, p = .03; target-present: F(3, 49) = 4.88, ηp² = .23, p = .01) but no significant simple effects of Epoch on either trial type in Experiment 2b (target-absent: F(3, 49) = 0.41, p = .75; target-present: F(3, 49) = 0.81, p = .49). The Set Size × Epoch interaction was not significant (F < 2).

Fixation durations

Average fixation durations were entered into a 5-way, repeated-measures ANOVA. There was no main effect of Experiment, F(1, 102) < 1, p = .62. There was an effect of Load, F(2, 102) = 24.42, ηp² = .32, p < .001. There were also effects of Set Size, F(2, 101) = 7.68, ηp² = .13, p < .001, and Trial Type, F(1, 102) = 755.18, ηp² = .88, p < .001. The effect of Epoch was marginal, F(3, 100) = 2.55, ηp² = .07, p = .06. Consistent with Experiment 1 (and again contrary to the RID hypothesis), fixation durations did not decrease across epochs (273, 276, 279, and 278 ms for epochs 1–4, respectively). As in Experiment 1, when search RTs were prolonged (among higher load groups, larger set sizes, and target-absent trials), average fixation durations decreased. They were shortest among high-load participants (252 ms), followed by medium-load (266 ms) and low-load (311 ms) participants, and were shorter when search was conducted among more items (288, 272, and 269 ms for 12, 16, and 20 items, respectively). Durations were shorter on target-absent trials (231 ms) than on target-present trials (321 ms).

Recognition

Recognition accuracy (see Figure 10) was entered into a 3-way ANOVA, as in Experiment 1. Higher load groups again remembered more items than lower load groups, and targets were remembered better than distractors. All items were remembered at levels exceeding chance performance. The main effect of Experiment was not significant, F(1, 102) < 1, p = .55. We found main effects of Load, F(2, 102) = 8.96, ηp² = .15, p < .001, and Image Type, F(1, 102) = 603.59, ηp² = .86, p < .001. Performance was better among higher load groups (80%, 85%, and 86% for low-, medium-, and high-load groups, respectively), and targets (94%) were remembered better than distractors (74%). We also found a Load × Image Type interaction, F(2, 102) = 17.13, ηp² = .25, p < .001. Planned comparisons revealed that recognition of target images (94% for low- and high-load groups; 95% for medium-load) did not differ across load groups (F < 1). Memory for distractors, however, varied as a function of Load, F(2, 102) = 15.75, ηp² = .24, p < .001 (67%, 76%, and 78% for low-, medium-, and high-load, respectively).

Figure 10.

Mean recognition accuracy (± one SEM) for targets and distractors in Experiment 2. Results are plotted as a function of WM load, collapsed across Experiments 2a and 2b.

Regression analyses

We regressed the probability of correct recognition per item on its total fixation count and total dwell time, as in Experiment 1. Figure 11 shows recognition memory performance for distractors and targets, as a function of total number of fixations (top panel), and total dwell time (bottom panel). For the distractors, a stepwise regression model indicated that a single factor, number of fixations, accounted for the greatest change in variance, Fchange(1, 105) = 31.13, R² = .23, p < .001, with no other significant predictors. For the targets, the best model contained a single predictor, dwell time, Fchange(1, 106) = 8.03, R² = .07, p < .01, with no other significant predictors.

Figure 11.

Probability of correct recognition of distractors and targets, as a function of total number of fixations, and total dwell time, from Experiment 2. Results were divided into quartile bins for each load group, and plotted against the mean accuracy per quartile. Memory for distractors is indicated by white, gray, and black circles for low-, medium-, and high-load groups, respectively. Squares indicate memory for targets, collapsed across WM Load.

Block effects

We examined practice effects across blocks to determine whether performance changed over the course of the experiment (see Table A2). We found no Block effect on search accuracy (F < 2), but we did find an effect on RTs, F(2, 105) = 4.13, ηp² = .07, p = .02, with shorter RTs across blocks. Regarding eye movements, there were effects of Block on the number of fixations, F(2, 106) = 6.39, ηp² = .11, p < .01, and on average fixation durations, F(2, 106) = 14.38, ηp² = .21, p < .001, indicating fewer and longer fixations across blocks.

Discussion

Despite the lack of spatial consistency in either Experiment 2a or 2b, we again found search facilitation with coherent sets (Experiment 2a), but not when distractors were randomly grouped across trials (Experiment 2b). Although the Experiment × Epoch interaction on RTs was only marginally significant, the learning slope in Experiment 2a (−77 ms/epoch) was more than nine times the slope observed in Experiment 2b (−8 ms/epoch). Moreover, simple effects testing revealed significant facilitation in both target-present and target-absent trials in Experiment 2a, but no facilitation in Experiment 2b. As before, search efficiency remained constant across epochs, and facilitation occurred through a decrease in fixations, without a change in average fixation durations. These results again argue against the RID hypothesis, and also suggest that enhanced short-term memory for recent fixations (i.e., spatial mapping) cannot be solely responsible for the faster RTs across epochs found in Experiment 1a. Instead, the results support the search confidence hypothesis, which predicted facilitation in coherent set conditions, irrespective of spatial consistency.

To examine how the loss of spatial information affected search RTs, we conducted a combined analysis of Experiments 1a and 2a, which were identical save for the consistency of spatial layouts. There were main effects of Experiment and Epoch (both Fs > 4, ps < .05), indicating faster RTs in Experiment 1a (2763 ms) than in Experiment 2a (3005 ms), and a significant decrease in RTs over time. Critically, we found an Experiment × Epoch interaction (F > 3, p < .05), indicating a steeper learning slope in Experiment 1a (−129 ms/epoch) than in Experiment 2a (−77 ms/epoch). This interaction indicates that when object identities are consistently tied to fixed locations, RT facilitation is greater than when objects are held constant but spatial consistency is removed. These findings further support the search confidence hypothesis, which predicts search facilitation under conditions of object coherence, with the greatest facilitation when object and spatial consistency are combined.

The present findings complement those of Chun and Jiang (1999), who found a contextual cueing effect in the absence of spatial consistency. Their participants searched for novel shapes: Targets were symmetrical around the vertical axis, and distractors were symmetrical around other orientations. Spatial configurations were randomized on each trial, but a given target shape was sometimes paired with the same distractor shapes; on such consistent-mapping trials, RTs were shorter than on inconsistent-mapping trials, wherein target-distractor pairings were randomized. Critically, our experiments differed from the contextual cueing paradigm in that neither the identities nor the locations of targets were predicted by background context. Whereas Chun and Jiang’s (1999) findings suggest that background context can prime target identity, our results suggest that learned, coherent backgrounds can facilitate search for novel targets by enhancing search decision confidence. Because our participants encountered each target only once, this benefit in RTs must be explained not by perceptual priming, but rather by a shift in response thresholds.

More generally, the findings from Experiment 1 were replicated in Experiment 2. Loaded search was slower, as was search among larger set sizes. Memory for the targets was uniformly high, and WM load increased the retention of distractor identities. These findings corroborate those of Experiment 1, suggesting that degrading spatial information neither changed basic search performance nor disrupted retention of encountered objects. However, the regression analyses yielded slightly different results. In Experiment 1, both target and distractor memory were best predicted by the total dwell time on the items. In Experiment 2, distractor memory was best predicted by the number of fixations received (although memory also tended to improve with dwell time). This latter finding is consistent with several previous studies (Hollingworth, 2005; Hollingworth & Henderson, 2002; Loftus, 1972; Melcher, 2006; Tatler, Gilchrist, & Land, 2005). Clearly, both fixation frequency and fixation duration contribute to object memory; future work should aim to determine which is the more powerful predictor.

General Discussion

This investigation contrasted three hypotheses regarding how repeated displays may facilitate search RTs: rapid identification and dismissal of familiar non-target objects, enhanced memory for their spatial locations, and a reduction in decisional evidence required for target detection. Our findings can be summarized in four points: 1) When search objects were coherently grouped across trials, RTs decreased within blocks; when distractor sets were unpredictable across trials, there was no facilitation. 2) Spatial consistency contributed to search facilitation, but repetition of spatial configurations was neither necessary nor sufficient to decrease RTs. 3) Even when search was facilitated by incidental learning, the efficiency of attentional deployments did not increase. And 4) facilitation arose because participants made fewer fixations over time, without a concomitant drop in the time required to identify and dismiss distractors. Although our three hypotheses were not mutually exclusive, our results best support the search confidence hypothesis, and argue against the RID and spatial mapping hypotheses.
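The fourth point can be made concrete with a bookkeeping identity. As a rough approximation that ignores saccade time and response execution (an assumption for illustration only), the time spent fixating in a trial is the number of fixations N times the mean fixation duration d. The hypothetical helper `decompose_change` splits an early-to-late change in N × d into a part due to fixation count and a part due to fixation duration; the input values are invented to mirror the qualitative pattern described, not taken from our data.

```python
def decompose_change(n_early, d_early, n_late, d_late):
    """Split the change in total fixation time (N * d) between two epochs.

    Uses the exact identity
        N2*d2 - N1*d1 = (N2 - N1)*d1 + N2*(d2 - d1),
    giving a part due to the number of fixations and a part due to their duration.
    """
    count_part = (n_late - n_early) * d_early
    duration_part = n_late * (d_late - d_early)
    return count_part, duration_part

# Hypothetical values: fewer fixations late in a block, unchanged mean duration.
count_part, duration_part = decompose_change(n_early=12, d_early=230, n_late=10, d_late=230)
print(count_part, duration_part)  # -460 0
```

Under the RID hypothesis the duration term would be negative; the pattern summarized above corresponds to a negative count term with a duration term near zero.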

Additionally, we replicated several well-known findings from the visual search literature, finding that target-present search was uniformly faster than exhaustive, target-absent search, but participants were more likely to miss than to false-alarm (Chun & Wolfe, 1996). Increasing the number of distractors (i.e., set size) led to slower RTs and decreased accuracy (Palmer, 1995; Treisman & Gormican, 1988; Ward & McClelland, 1989; Wolfe et al., 1989). Memory for search items was acquired incidentally, and, when viewing behavior on the items increased, so did memory (Williams, 2009). Finally, when participants searched for multiple targets, rather than a single target, search was slower and less accurate (Menneer et al., 2005, 2008), and it led to greater incidental learning for distractors (Hout & Goldinger, 2010).

Our RID hypothesis suggests that incidental learning of repeated search objects may increase the fluency of their identification and dismissal. As they are learned, attention may be engaged and disengaged more quickly, allowing the observer to move swiftly across items. Examination of average fixation durations suggested that this proposal is incorrect. Although we are hesitant to interpret a null effect, we found no decrease in fixation durations across trials in any of our conditions. Thus, despite its intuitive appeal, it seems unlikely that RID is responsible for facilitation of search RTs.⁴

Built upon a body of research indicating that retrospective memory biases fixations away from previously visited locations, the spatial mapping hypothesis suggests that configural repetition may enhance visual search. It predicts that, when spatial layouts are held constant (Experiment 1), people should more effectively avoid refixating previous locations; faster search would thus be driven by fewer fixations per trial. Because this hypothesis attributes facilitation to spatial learning, it predicts no decrease in RTs in Experiment 2. Although prior research indicates that short-term spatial memory was likely used in Experiment 2 to bias attention away from previously fixated locations, such memory could only operate within a single trial. (See Peterson, Beck, & Vomela, 2007, for evidence that prospective memory, such as strategic scan-path planning, may also guide visual search.) No such learning could persist across trials. Thus, spatial mapping cannot explain all of our findings. Spatial consistency clearly contributed to search facilitation, as facilitation was greater in Experiment 1a than in Experiment 2a. However, facilitation still occurred in Experiment 2a, in the absence of spatial consistency, and failed to occur in Experiment 1b, wherein configurations were held constant. Neither finding is consistent with the spatial mapping hypothesis. Finally, a tacit assumption of spatial mapping is that, when facilitation occurs, fixations should be biased away from previously visited locations at increasingly long lags. We found no evidence that fixation probability distributions changed over time, once more arguing against this hypothesis.
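A lag analysis of this kind rests on tabulating, for each refixation, how many fixations have elapsed since the item was last visited. The function `return_lag_distribution` (a hypothetical name) is a minimal sketch of that bookkeeping for a single trial, assuming the scan path has already been reduced to a sequence of fixated item IDs. It reports raw return frequencies only; a full analysis would compare them with chance expectations and across epochs, which this sketch does not attempt.

```python
from collections import Counter

def return_lag_distribution(fixated_items):
    """Probability distribution of return lags within one trial.

    For each fixation on an item that was already fixated earlier in the
    sequence, the lag is the number of fixations since that item was last
    fixated (lag 1 = the item was also the immediately preceding fixation).
    """
    last_seen = {}
    lags = Counter()
    for t, item in enumerate(fixated_items):
        if item in last_seen:
            lags[t - last_seen[item]] += 1
        last_seen[item] = t
    total = sum(lags.values())
    if total == 0:
        return {}  # no refixations in this trial
    return {lag: count / total for lag, count in sorted(lags.items())}

# Hypothetical scan path over item IDs for one search trial.
scanpath = ["A", "B", "C", "A", "D", "B", "E", "C"]
print(return_lag_distribution(scanpath))  # lags 3, 4, and 5, each with probability 1/3
```

Under spatial mapping, one would expect the mass at longer lags to shrink across epochs as learned locations are avoided for longer.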

Our search confidence hypothesis was derived largely from work by Kunar and colleagues (2006; 2008b). It suggests that search facilitation does not occur by enhancement of specific viewing behaviors, but by speeding decision processes in the presence of familiar context. In nature, organisms and objects rarely appear as they do in an experiment: isolated, scattered arbitrarily across a blank background. Instead, they appear in predictable locations, relative to the rest of a scene. Imagine, for instance, that you are asked to locate a person in a photograph of a college campus. You are unlikely to find them crawling up the wall of a building or hovering in space. It is more likely that they will be standing on the sidewalk, sitting on a bench, etc. Biederman (1972) showed that the meaningfulness of scene context affects perceptual recognition. He had participants indicate what object occupied a cued position during briefly presented, real-world scenes. Accuracy was greater for coherent scenes, relative to a jumbled condition (with scenes cut into sixths and rearranged, destroying natural spatial relations). The visual system is sensitive to such regularities. For example, search paths to objects that appear in natural locations (e.g., a beverage located on a bar) are shorter, relative to objects that appear in arbitrary locations (e.g., a microscope located on a bar; Henderson, Weeks, & Hollingworth, 1999). Models that incorporate such top-down contextual information successfully predict regions of interest, as judged against the fixation data of human observers (Oliva, Torralba, Castelhano, & Henderson, 2003).

In line with such findings, Kunar et al. (2007) suggested that search times may improve with experience, without an improvement in attentional guidance. As an observer learns a display, less information may be necessary to reach a search decision. It might take just as long to search through a repeated scene as through a novel one, but familiar context may allow earlier, more confident decisions. Thus, to separate the cost of visual search from perceptual or response factors, it is important to distinguish the two quantities that jointly determine RT: the slope and the intercept. The slope of the line relating RT to set size indicates the efficiency of search, whereas nonsearch factors contribute to the intercept. Using the contextual cueing paradigm, Kunar et al. (2007) investigated search slopes in repeated visual contexts. If contextual cueing guides attention, then search slopes should be shallower (i.e., more efficient) in repeated conditions. However, they found no differences in the search slopes: RT differences were explained by the intercept, suggesting no changes in attentional guidance (see also Kunar, Flusberg, & Wolfe, 2008a). By the same logic, no contextual cueing should occur when the target “pops out” (Treisman, 1985; Treisman & Gelade, 1980; Treisman & Sato, 1990), as serial attentional guidance is unnecessary in such situations. Nevertheless, Kunar et al. (2007) found significant contextual cueing in basic feature search, wherein targets could be immediately identified by color.
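The slope/intercept logic can be illustrated with an ordinary least-squares fit of RT on set size for each condition. In the hypothetical data below, repeated displays are 80 ms faster at every set size, so the fit attributes the entire benefit to the intercept and none to the slope, the pattern Kunar et al. (2007) reported. The set sizes, RTs, and the helper name `fit_line` are invented for illustration.

```python
def fit_line(x, y):
    """Least-squares fit of y = intercept + slope * x; returns (intercept, slope)."""
    n = len(x)
    mx, my = sum(x) / n, sum(y) / n
    slope = (sum((a - mx) * (b - my) for a, b in zip(x, y))
             / sum((a - mx) ** 2 for a in x))
    return my - slope * mx, slope

set_sizes = [8, 12, 16]
rt_novel = [900, 1060, 1220]     # hypothetical mean RTs (ms)
rt_repeated = [820, 980, 1140]   # hypothetical: same slope, lower intercept

for label, rts in (("novel", rt_novel), ("repeated", rt_repeated)):
    b0, b1 = fit_line(set_sizes, rts)
    print(f"{label:9s} intercept = {b0:.0f} ms, slope = {b1:.1f} ms/item")
# novel: intercept 580 ms, slope 40 ms/item; repeated: intercept 500 ms, slope 40 ms/item
```

A genuine improvement in attentional guidance would instead appear as a shallower slope for repeated displays.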

Critically, in their final experiment, Kunar et al. (2007) added interference to the response selection process. Using a search task in which distractor items elicited either congruent or incongruent responses (Starreveld, Theeuwes, & Mortier, 2004), they looked for contextual cueing effects in the presence or absence of response selection interference. When target and distractor identities are incongruent, the resulting slowing of RTs is generally attributed to interference with response selection (Cohen & Magen, 1999; Cohen & Shoup, 1997; Eriksen & Eriksen, 1974). Participants searched for a red letter among green distractors, indicating whether the target was an A or an R. In congruent trials, the target identity matched the distractors (e.g., a red A among green As); in incongruent trials, targets and distractors were different (e.g., a red R among green As). The results showed a contextual cueing effect only in congruent trials. When the target and distractor identities matched, RTs were faster in predictive (relative to random) displays. This pattern suggested that contextual cueing speeded response selection in a familiar context, reducing the threshold to respond. Taken together, the findings from Kunar et al. (2007) indicate a response selection component in the facilitation of search RTs in a repeated visual display.

The present results are consistent with the view of Kunar et al. (2007) and with our search confidence hypothesis. We found search facilitation under coherent set conditions, but not when familiar objects were encountered in random sets. Facilitation was most robust in Experiment 1a, wherein items and locations were linked, providing the highest degree of contextual consistency. Critically, despite decreasing RTs, we found no change in search efficiency over time, and fixations never became shorter. Items received fewer fixations across trials, without changes in individual item processing, indicating quicker termination of the search process (without any decrease in accuracy). Our findings therefore suggest that, when a display is repeated, familiarity with background information leads the observer to terminate search with less perceptual evidence. We hypothesize that, by becoming more familiar with the distractor objects, people become better at differentiating those objects from the targets held in visual working memory. Rather than allowing shorter fixations, as intuition might suggest, this familiarity allows people to stop searching confidently instead of refixating prior locations.
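The search confidence account can be illustrated with a toy sequential-sampling model, a swapped-in formalism that was not fit to our data. Each fixation adds a noisy increment of evidence for "target absent," and search stops when the running total reaches a threshold. Fixation duration is held fixed, so a lower threshold (standing in for a familiar context) shortens RTs only by reducing the number of fixations. The function name, drift, noise, thresholds, and the 250 ms fixation duration are all hypothetical.

```python
import random

def simulate_absent_trial(threshold, fix_duration_ms=250, drift=1.0, noise=1.0, rng=None):
    """Accumulate noisy evidence for 'target absent', one fixation at a time.

    Search stops once accumulated evidence reaches `threshold`.
    Returns (number_of_fixations, rt_ms). Fixation duration is constant,
    so RT changes only through the number of fixations.
    """
    rng = rng or random.Random()
    evidence, n_fix = 0.0, 0
    while evidence < threshold:
        evidence += rng.gauss(drift, noise)  # evidence gained on this fixation
        n_fix += 1
    return n_fix, n_fix * fix_duration_ms

rng = random.Random(1)  # seeded for reproducibility
for label, thr in (("novel context (higher threshold)", 12.0),
                   ("familiar context (lower threshold)", 8.0)):
    fixes = [simulate_absent_trial(thr, rng=rng)[0] for _ in range(2000)]
    mean_fix = sum(fixes) / len(fixes)
    print(f"{label}: {mean_fix:.1f} fixations, {mean_fix * 250:.0f} ms")
```

In this sketch, the lower threshold yields fewer fixations of the same duration, the qualitative signature described above; it says nothing about how familiarity would set the threshold.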

Our results are also consistent with those of Wolfe et al. (2000), who showed that search efficiency was constant, even after 350 presentations of an unchanging, conjunctive display (see also Wolfe et al., 2002). They argued that the perceptual effects of attention vanish once attention is redeployed to a new location. That is, the attentional processes used to conduct search in a familiar scene are no different from those used to locate a target in a novel scene. With these findings in mind, the rationale behind the (perhaps counterintuitive) Guided Search model (Wolfe, 2007) is easy to appreciate: because attentional guidance does not improve, even given extensive experience with a consistent display, it is reasonable to adopt an amnesic model, rather than one that remembers prior attentional deployments. Our findings fit well with this view: We propose that repeated context facilitates search not because attentional guidance becomes more efficient, but because search decisions are reached more quickly.

Taken together, it appears that, when people repeatedly search a novel display, information about object identities is incidentally acquired over time. When object information is coherent across repeated searches, observers become faster to locate a target, or to determine its absence. Although the present results suggest some role for a spatial memory component (Peterson et al., 2001), it cannot explain the full pattern of results. Instead, memory for spatial configurations seems to complement object memory by increasing an observer’s overall sense of familiarity with a display. Eye movements appear to remain relatively chaotic, with memory acting only to avoid very recent fixations. Facilitated search also appears no more efficient than early, unfacilitated search in the identification and dismissal of well-learned distractors. Rather, given a repeated context, familiarity with background information facilitates search by decreasing response thresholds.