Abstract

Introduction

Global cognitive and psychomotor assessment in simulation based curricula is complex. We describe assessment of novices' cognitive skills in a trauma curriculum using a simulation aligned facilitated discovery method.

Methods

Third-year medical students in a surgery clerkship completed two student-written simulation scenarios (SWSS) as an assessment method in a trauma curriculum employing high fidelity human patient simulators (manikins). SWSS consisted of written physiologic parameters, intervention responses, a performance evaluation form, and a critical interventions checklist.

Results

Seventy-one students participated. SWSS scores were compared to multiple choice test (MCQ), checklist-graded solo performance in a trauma scenario (STS), and clerkship summative evaluation grades. The SWSS appeared to be slightly better than STS in discriminating between Honors and non-Honors students, although the mean scores of Honors and non-Honors students on SWSS, STS, or MCQ were not significantly different. SWSS exhibited good equivalent form reliability (r=0.88), and higher interrater reliability versus STS (r=0.93 vs r=0.79).

Conclusion

SWSS is a promising assessment method for simulation based curricula.

Introduction

Care of the injured patient is an essential knowledge area for graduating medical students.1 Medical student education in trauma resuscitation has several inherent challenges. Students must learn and apply both cognitive and psychomotor skills; perceived patient risk limits novice participation in direct patient care interventions; and finally, the majority of trauma education must take place within the time constraints of the surgical clerkship. Simulation based training with high-fidelity human patient simulators (manikins) has become a popular tool for teaching and assessing skills in trauma resuscitation.2–5 Manikin use in this context has several advantages: integration of cognitive and psychomotor skills performance in rapid, reproducible scenarios; education in a supportive setting that eliminates patient risk; and consistent content for formative and summative assessment. Surgical residents who practiced with manikins demonstrated improved trauma assessment test scores compared with residents who trained with traditional moulage patients.6 Simulation based training in the undergraduate surgical curriculum, when compared to case-based lecture, has resulted in improvement on objective structured clinical examinations.7

Despite enthusiasm for the use of manikins, optimal methods for assessing educational outcomes have not been established, and few studies validate performance evaluation methods for simulation based training. Global rating scales and checklists are widely utilized, but continue to have variable internal reliability and correlation with other educational outcome standards.8

The authors instituted a simulation based trauma resuscitation curriculum designed to allow the student a learner-centered, self-paced method of assimilating content. We sought to design and evaluate a summative cognitive assessment method administered over a prolonged interval, allowing for reflection and incorporating principles of inductive learning.9 This method, the student-written simulation scenarios (SWSS), was designed to be less subject to student performance anxiety, to incur less rater variability, and to avoid bias against students with less mature psychomotor development. We hypothesized that student assessment based upon SWSS creation might produce scores that are more reliable than summative assessment of observed performance in a simulation scenario (STS). Further, we sought to evaluate the association between multiple summative assessment methods: simulation resuscitation scenario performance (STS), multiple choice question test (MCQ), and SWSS, with the “gold standard” of global clinical performance assessment.

Methods

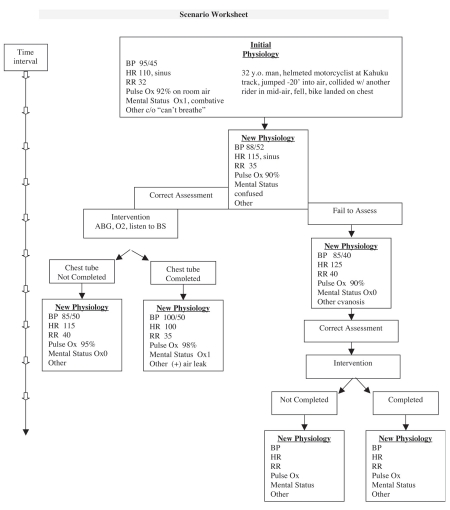

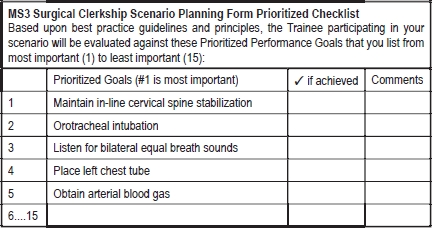

All third-year medical students at the University of Hawaii, John A. Burns School of Medicine (JABSOM) enrolled in a 7-week surgery clerkship from January 2007 to June 2008 consented to participate in this study, which was approved by the University of Hawaii's Committee on Human Studies. Students were required to complete the trauma curriculum as one element of their surgical clerkship. This curriculum included (a) assigned reading of the American College of Surgeons Committee on Trauma TEAM booklet;10 (b) a 90-minute lecture by a trauma surgeon on TEAM core content; (c) clinical experience in trauma patient management; and (d) a 3-hour, hands-on demonstration and practice session with simulator based trauma resuscitation. This session was conducted in small groups (2–4 students) on manikins (SimMan, Laerdal Medical Corporation, Wappingers Falls, NY) and included assessment and treatment of simulated trauma patients. During the weeks following the simulation session, the students received individualized mentored instruction in developing simulated case scenarios, and were asked to author two 10-minute simulation cases (SWSS) incorporating the key principles of trauma resuscitation taught in lecture and simulation lab. Students were instructed to select two different traumatic shock states to describe in the SWSS exercise. Tension pneumothorax, hemorrhagic shock, and pericardial tamponade were suggested as possible SWSS options. The SWSS consisted of a flow sheet of physiologic parameters and responses to interventions (Figure 1), and a 15-point checklist designed to evaluate a learner's performance in the simulation scenario (Figure 2).

Figure 1.

Sample Flow Sheet for the Student-written Simulation Scenario (SWSS)

Figure 2.

Checklist to be Completed as Part of the Student-written Simulation Scenario (SWSS).

Students received one overall grade at the end of the clerkship (Honors, Credit, or Incomplete/No Credit) based primarily upon the subjective evaluation by the residents and attending surgeons, of their knowledge and clinical performance, the standard method for this clerkship. Specific trauma knowledge was assessed by three additional methods: (1) the standard multiple choice, 20-question TEAM written examination (MCQ), (2) solo performance in a simulated trauma scenario (STS), and (3) grading of the SWSS. Students were advised that their performance in these three trauma assessment areas would be graded and considered (weight of 5%) in their end-clerkship grade. Evaluations of the students in the simulated trauma scenario and the SWSS were done independently by three trauma practitioners (the trauma simulation faculty) who did not contribute to the students' end-clerkship clinical evaluation.

Solo performance in a simulated trauma scenario (STS) was assessed during the penultimate week of the clerkship. Students were required to act as the primary trauma surgeon in a 10-minute resuscitation scenario. Students were videotaped, and three independent assessment scores (range 0–21) of the STS were generated by the trauma simulation faculty using a binary checklist based upon the “ABCDE” tasks of trauma assessment (Figure 2). Likewise, grading of the SWSS was done independently by three reviewers. The faculty reviewers were all clinicians trained in Advanced Trauma Life Support, active in medical student education and evaluation, with over 65 years of cumulative clinical experience in trauma resuscitation. SWSS scores (range 0–15) were based upon key areas covered in the 15-point SWSS checklist, as well as content validity of the flow sheet, i.e., the accuracy and completeness of the scenario in illustrating the key physiology and fundamental steps of trauma resuscitation. At the end of the assessment, students completed an attitudinal survey about their perception of the value of the components of the trauma curriculum.

Data were analyzed by a biostatistician. Interobserver reliability of test scores for SWSS and STS was determined by Intraclass Correlation Coefficient. Equivalent forms reliability was determined using Pearson's correlation. The mean difference between the two SWSS scores was also evaluated using a two-tailed paired t-test. Mean scores in the three trauma assessment areas (SWSS, STS, MCQ) were compared for students earning final clerkship grades of Honors versus non-Honors students using two-tailed two-sample t-tests. Logistic regression was used to measure any association between the assessment scores and the likelihood of a student having an Honors or a non-Honors grade. Significance was determined at P <.05.
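The reliability measures named above can be illustrated with a minimal sketch. It is not the study's analysis code: the scores below are invented for illustration, the function names are hypothetical, and the one-way random-effects form of the intraclass correlation is an assumption, since the text does not state which ICC model was used.

```python
from math import sqrt


def pearson_r(x, y):
    """Pearson correlation between two equal-length lists of scores."""
    n = len(x)
    mx, my = sum(x) / n, sum(y) / n
    sxy = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sxx = sum((a - mx) ** 2 for a in x)
    syy = sum((b - my) ** 2 for b in y)
    return sxy / sqrt(sxx * syy)


def paired_t(x, y):
    """Paired t statistic and degrees of freedom for the differences x - y."""
    d = [a - b for a, b in zip(x, y)]
    n = len(d)
    mean_d = sum(d) / n
    sd_d = sqrt(sum((v - mean_d) ** 2 for v in d) / (n - 1))
    return mean_d / (sd_d / sqrt(n)), n - 1


def icc_oneway(ratings):
    """One-way random-effects ICC(1,1); rows are students, columns are raters.

    Assumption: the paper does not specify the ICC model, so this form is
    used only as an example.
    """
    n, k = len(ratings), len(ratings[0])
    grand = sum(sum(row) for row in ratings) / (n * k)
    row_means = [sum(row) / k for row in ratings]
    ms_between = k * sum((m - grand) ** 2 for m in row_means) / (n - 1)
    ms_within = sum(
        (v - m) ** 2 for row, m in zip(ratings, row_means) for v in row
    ) / (n * (k - 1))
    return (ms_between - ms_within) / (ms_between + (k - 1) * ms_within)


# Hypothetical data: six students, two SWSS each, scored 0-15.
swss_first = [12, 14, 9, 15, 11, 13]
swss_second = [13, 14, 10, 15, 10, 13]

# Hypothetical data: three raters scoring one SWSS per student.
rater_scores = [
    [12, 12, 13],
    [14, 14, 14],
    [9, 10, 9],
    [15, 15, 15],
    [11, 11, 10],
    [13, 13, 13],
]

if __name__ == "__main__":
    print("equivalent forms r:", round(pearson_r(swss_first, swss_second), 3))
    print("paired t, df:", paired_t(swss_first, swss_second))
    print("interrater ICC(1,1):", round(icc_oneway(rater_scores), 3))
```

In this sketch, equivalent forms reliability is the correlation between a student's two scenario scores, while interrater reliability compares the variance between students with the variance among raters scoring the same student.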

Results

Seventy-three students were enrolled in the study. Two of them did not complete the clerkship for reasons unrelated to the trauma curriculum; thus 71 students completed all phases of the curriculum and testing. Due to poor video recording, interobserver reliability could not be determined for the solo simulation performance session (STS) of two of these students. Nineteen students earned Honors in the clerkship, 48 received Credit, and 4 were Incomplete/No Credit. In no instance did a student's scores in the trauma curriculum alter the grade determined by their overall clinical performance on the surgery rotation.

Equivalent forms reliability for the two SWSS was high (r = 0.88) and the 95% confidence interval for the mean difference between the two mean SWSS scores was (−0.49, 0.25) indicating that on average there is no significant difference between the scores of the two SWSS for each student. The interobserver reliability for SWSS was also very high (r = 0.98), showing close agreement between graders of the SWSS. The interobserver reliability was higher for the SWSS than for performance on the simulated trauma scenario (STS, r = 0.92), despite the use of a simple, binary checklist to score the STS.
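For readers less familiar with paired comparisons, a confidence interval of this kind conventionally takes the form below. This is the standard textbook expression and is given as an assumption, since the exact computation is not stated here. The symbols are introduced for illustration only: $d_i$ is the difference between the two SWSS scores of student $i$, $\bar{d}$ is the mean of those differences, $s_d$ is their standard deviation, and $n$ is the number of students.

```latex
d_i = \mathrm{SWSS}_{1,i} - \mathrm{SWSS}_{2,i}, \qquad
\bar{d} \;\pm\; t_{0.975,\,n-1}\,\frac{s_d}{\sqrt{n}}
```

An interval of this form that contains zero corresponds to a two-tailed paired t-test that is not significant at the .05 level.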

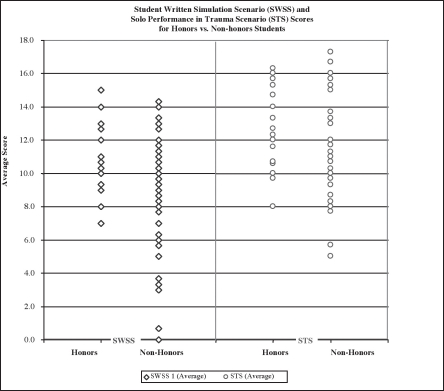

When compared to the “gold standard” of clinical assessment by surgeons (chief residents and attendings), the SWSS appeared better than STS in discriminating between Honors and non-Honors students (Figure 3). In this small sample of students, the difference between the mean SWSS scores of the Honors and non-Honors students was not statistically significant; however, stepwise logistic regression analysis yielded a lower P-value for the SWSS score (P=0.06) than for the STS score (P=0.1). Neither association reached the prespecified significance level of P <.05, so this result suggests, but does not establish, a stronger association between SWSS performance and an Honors grade than between STS performance and an Honors grade.

Figure 3.

Individual Scores for Honors vs. Non-honors Students Exhibit Less Overlap for the Student-written Simulation Scenarios (SWSS) than for Solo Performance in a Simulated Trauma Scenario (STS).

Although the SWSS was designed primarily as an assessment method, students reported that they valued it as a learning method as well. Thirty-eight percent of students preferred the SWSS to the TEAM lecture as an educational method.

Discussion

The rapidly expanding use of simulation in surgical education has outpaced the development of supportive curricula. With any educational method it is imperative that the assessment method be reliable, valid, and relevant to the realm of actual clinical performance. Kneebone states, “Ideal simulation-based learning environments should provide a supportive, motivational, and learner-centered milieu which is conducive to learning.”11 Optimally, assessment methods for simulation based training should reflect this learner-centered philosophy, and should creatively employ the construct of simulation technology. Our traditional methods of performance assessment, a multiple-choice written examination and evaluation of individual global performance in a brief, stressful simulated trauma scenario, fell short of this ideal.

Evaluation methods in simulation based training have typically focused on task completion rates, critical time to task performance on a simulator or standardized patient, and measures of teamwork.12 Evaluation of integrated higher order performance, such as sequential hierarchical decision making or psychomotor performance, is even more complex and difficult to standardize. Evaluation methods may be inherently flawed, particularly when addressing a group of novices, who have yet to achieve the automaticity characteristic of conscious competence which may allow relaxed, rapid, simultaneous performance of cognitive and psychomotor tasks. Utilizing simulator based examination techniques may significantly disadvantage students whose self-confidence and psychomotor talents lag behind their cognitive achievements. Furthermore, such methods of evaluation require demonstrated validity and reliability before being used for high stakes assessment. An examinee centered approach to establishing assessment standards in simulation based training has recently been validated and advocated for performance based assessment using simulation methodologies.13

JABSOM has been a pioneer in the use of problem-based learning (PBL) for medical education.14 Other institutions have reported their use of student-written medical cases as a learning and assessment tool. At Indiana University School of Medicine, students participate in a senior elective in PBL case writing. PBL case writing is reported to be an effective method to teach and evaluate students' application of basic science knowledge, communication, and problem solving skills.15,16 The concept of PBL case writing may be logically expanded to simulator scenario case writing, but to our knowledge no one has reported the use of simulation scenario writing in this fashion. We developed SWSS to better fulfill our ideal of the optimal instrument for cognitive summative assessment in a simulation based curriculum. We demonstrated the reliability of this instrument, as well as its validity relative to clinical performance evaluation. The successful use of the SWSS in our JABSOM students may be attributable in part to the extensive experience that our students have in the PBL method, which is the primary educational format for the first two years at our medical school. The clinical entity of trauma resuscitation, with its well-defined algorithmic approach and key clinical tasks, also lends itself easily to use of the SWSS. We found that grading of the SWSS was made easier by requiring the students to construct a 15-point binary checklist of the key clinical tasks in their scenario.

We expect that the SWSS will be easily assimilated by students and faculty at other institutions who are familiar with interactive, case-based learning. We propose further investigation of this assessment tool in other simulation based curricula, particularly curricula for crisis management with defined clinical algorithms.

Disclosure Statement

The authors have no financial relationships with companies relevant to the content of this paper.

References

- 1. American College of Surgeons. Successfully Navigating the First Year of Surgical Residency: Essentials for Medical Students and PGY-1 Residents. Chicago, IL: American College of Surgeons; 2005.

- 2. Murray D, et al. An acute care skills evaluation for graduating medical students: a pilot study using clinical simulation. Med Educ. 2002;36(9):833–841. doi: 10.1046/j.1365-2923.2002.01290.x.

- 3. Holcomb JB, et al. Evaluation of trauma team performance using an advanced human patient simulator for resuscitation training. J Trauma. 2002;52(6):1078–1085; discussion 1085–1086. doi: 10.1097/00005373-200206000-00009.

- 4. Gilbart MK, et al. A computer-based trauma simulator for teaching trauma management skills. Am J Surg. 2000;179(3):223–228. doi: 10.1016/s0002-9610(00)00302-0.

- 5. Marshall RL, et al. Use of a human patient simulator in the development of resident trauma management skills. J Trauma. 2001;51(1):17–21. doi: 10.1097/00005373-200107000-00003.

- 6. Lee SK, et al. Trauma assessment training with a patient simulator: a prospective, randomized study. J Trauma. 2003;55(4):651–657. doi: 10.1097/01.TA.0000035092.83759.29.

- 7. Nackman GB, Bermann M, Hammond J. Effective use of human simulators in surgical education. J Surg Res. 2003;115(2):214–218. doi: 10.1016/s0022-4804(03)00359-7.

- 8. Kim J, et al. A comparison of global rating scale and checklist scores in the validation of an evaluation tool to assess performance in the resuscitation of critically ill patients during simulated emergencies. Sim Healthcare. 2009;4(1):6–16. doi: 10.1097/SIH.0b013e3181880472.

- 9. Prince MJ, Felder RM. Inductive teaching and learning methods: definitions, comparisons and research bases. Journal of Engineering Education. 2006;95(2):123–138.

- 10. American College of Surgeons Committee on Trauma. TEAM Trauma Evaluation and Management. Early care of the injured patient: A program for medical students and multidisciplinary team members. 2nd Edition. Chicago, IL: American College of Surgeons; 2005.

- 11. Kneebone R. Evaluating clinical simulations for learning procedural skills: a theory-based approach. Acad Med. 2005;80(6):549–553. doi: 10.1097/00001888-200506000-00006.

- 12. Rosen MA, et al. Measuring team performance in simulation based training: adopting best practices for healthcare. Sim Healthcare. 2008;3(1):33–41. doi: 10.1097/SIH.0b013e3181626276.

- 13. Boulet JR, et al. Setting performance standards for mannequin-based acute-care scenarios. Sim Healthcare. 2008;3(2):72–81. doi: 10.1097/SIH.0b013e31816e39e2.

- 14. Kramer KJ, et al. Hamilton to Honolulu: Problem based learning local style. Hawaii Medical Journal. 2002 Aug;61:175–176.

- 15. Agbor-Baiyee W. Problem-based learning case writing in medical science. O.o.E.R.a.I: U.S. Department of Education; 2002. pp. 1–7. Educational Resources Information Center (ERIC).

- 16. Bankston PW, Porter G. A five step approach to clinical case writing in the structural sciences. Pathology Education. 2001;25(2):42–44.