Abstract

Purpose

To investigate the association between physician participants’ levels of engagement in a Web-based educational intervention and their patients’ baseline diabetes measures.

Method

The authors conducted a randomized trial of online CME activities designed to improve diabetes care provided by family, general, and internal medicine physicians in rural areas of 11 southeastern states between September 2006 and July 2008. Using incidence rate ratios derived from negative binomial models, they explored the relationship between physicians’ engagement with the study Web site and the baseline proportion of their patients with controlled diabetes (hemoglobin A1c ≤7%).

Results

One hundred thirty-three participants (intervention = 64; control = 69) provided information for 1,637 patients with diabetes. In the intervention group, physicians in practices in the worst quartiles of A1c control were least engaged with the study Web site in nearly all dimensions. Total number of pages viewed decreased as quartile of A1c control worsened (137, 73, 68, 57; P = .007); similarly, for a given 10% increase in proportion of patients with controlled A1c, participants viewed 1.13 times more pages (95% CI: 1.02–1.26, P = .02). In the control group, engagement was neither correlated with A1c control nor different across quartiles of A1c control.

Conclusions

Engagement in Web-based interventions is measurable and has important implications for research and education. Because physicians of patients with the greatest need for improvement in A1c control may not use online educational resources as intensely as others, other strategies may be necessary to engage these physicians in professional development activities.

Internet use is now ubiquitous among physicians in practice. With over 96% of physicians in the United States reporting the Internet as a primary tool for addressing clinical questions,1,2 it comes as no surprise that providers of continuing medical education (CME) increasingly turn to the Internet to deliver and disseminate educational material. In 2008, accredited CME providers offered 22,006 Internet enduring materials for a total of 47,304 hours of instruction to over 3.7 million physician participants.3 Web-based offerings made up 28.5% of the total volume of CME activities and included 40.9% of total physician participation that year.3 In another report, physicians completed approximately 13% of their required CME credit online, a 63% increase since 2003.4

Online education offers a great opportunity to overcome some of the barriers to physician participation in traditional educational formats. Issues of flexibility, convenience, and interactivity are more easily addressed in an online learning environment, where content can be accessed at the learner’s discretion, particularly when it is immediately relevant to a clinical question. In addition, although studies of their effectiveness trail their proliferation,5 existing evidence suggests that Web-based interventions, including CME activities, have the potential to improve physician performance and patient health outcomes.6–9

It is likely that the impact of Web-based interventions and CME on physician performance and patient health outcomes is related to the level of participation and engagement of the individual learners with the online material. However, accurate definition and measurement of engagement is difficult, particularly when using basic metrics available in most Web tracking software.10 Further, although many have sought to outline the process of information seeking among physicians,11 no research has determined the exact relationship between baseline performance and subsequent level of engagement. Using a multidimensional approach to assessing participation,10,12 we examined differences in engagement with the Rural Diabetes Online Care (RDOC) Web site among physicians of patients with diabetes. Specifically, we investigated whether lower-performing physicians whose patients’ blood glucose levels were poorly controlled at baseline would be more likely to seek information and, thus, have higher engagement levels than their higher-performing counterparts.

Method

Study design and setting

We report on baseline data from the 2006–2009 RDOC study, a group-randomized trial testing the effectiveness of an Internet-based intervention aimed at improving diabetes care by rural primary care providers (ClinicalTrials.gov identifier: NCT00403091). This report does not address the primary results of the main study.

Participating physicians were family, general, and internal medicine physicians located in rural areas of 11 southeastern states in the United States (Alabama, Arkansas, Florida, Georgia, Kentucky, Mississippi, Missouri, North Carolina, South Carolina, Tennessee, and West Virginia). Rural areas were identified by the standard definition of the U.S. Office of Management and Budget as those not included in metropolitan statistical areas. Physicians were recruited between February 2006 and June 2008 with mailed and faxed materials, presentations at professional meetings, physician-to-physician telephone conversations, and office visits. Those interested were invited to enroll in the study by visiting the study Web site; enrollment occurred between September 2006 and July 2008. After providing informed consent online, physicians were then randomized to either the intervention or control arm of the study. All participating physicians were offered a maximum of 12 AMA PRA Category 1 CME credits at no charge.

Participating physicians provided the study team 10 to 15 records of consecutively seen patients with diabetes who met the following eligibility criteria: They were the physicians’ own patients, had at least two office visits and at least one year of observation data in the medical record, and had no dialysis, dementia, organ transplantation, HIV/AIDS, terminal illness, or malignancy (except skin and prostate cancer). Records were blinded and then either sent to the study center or abstracted on-site in the office by study abstractors. Each physician received a $200 incentive for participating in the study, and the physicians’ staff received $50 for copying charts.

Experienced abstractors, who were trained specifically for the purposes of this study, abstracted the blinded data using a structured medical record review instrument and the publicly available MedQuest software. All abstractors had years of experience conducting chart reviews for multicenter studies. Throughout the duration of the study, all abstractors conducted quality control by dual abstraction and independent data entry on 5% of records (n = 90); dual abstraction revealed agreement of at least 90%. Baseline patient data from participating practices were collected from September 2006 through July 2008. The Web sites were launched on September 27, 2006.

Intervention description

The intervention and control Web sites were developed after a formative evaluation process,13 which included a barrier analysis,14 expert advisory panel review, and usability testing. The intervention site had six components: (1) practice timesavers, (2) challenging cases, (3) practical goals and guidelines, (4) patient resources, (5) an area to track and view CME credit, and (6) individualized performance feedback reports. The central components of the intervention (practice timesavers, cases, and performance feedback) were displayed prominently on the Web site.

The practice timesavers contained information about how to improve practice efficiency: time-saving tools, such as flow sheets, to track patients, standing orders, and tips for accurate billing; strategies for empowering office staff to promote efficient counseling; and strategies, such as quality improvement tools, for improving patient care without spending more time. For the last part of this component, we were permitted to adapt parts of the diabetes modules developed by the American Board of Internal Medicine15 and the American Board of Family Medicine.16

The challenging cases section contained four clinical vignettes, deployed across 12 months. The cases highlighted the essentials of diabetes control: hemoglobin A1c, blood pressure, and total cholesterol (case 1), intensification of therapy to achieve blood pressure control for hypertensive patients with diabetes (case 2), strategies to control glucose when oral therapy fails (case 3), and lipid control (case 4). All cases were interactive; physicians received real-time feedback on their answers to each question as well as a real-time comparison with other participants’ answers. The cases were concise and contained highlighted key points. We used this approach because performance on clinical vignettes is associated with actual clinical performance17 and has been effective in prior clinical trials.7,18

The practical goals and guidelines section summarized current recommendations by the American Diabetes Association19 and the Joint National Committee on Prevention, Detection, Evaluation, and Treatment of High Blood Pressure.20 These recommendations focused on glucose, blood pressure, and lipid control, and we did not modify the guidelines. We provided practical tips to improve control through a patient resources section that offered selected information from national organizations on methods for patients to track clinical measures (glucose, blood pressure, lipids), self-management, medication resources, dietary information, and diabetes prevention strategies.

The individualized performance feedback reports were added 12 to 18 months after the Web site was launched, for the physicians who had provided patient information at baseline. We used the Achievable Benchmarks of Care method21,22 to provide feedback on the baseline data as a graphical comparison of each participating physician’s performance on each indicator with a benchmark (representing the average performance of the top 10% of physicians). The indicators were monitoring (A1c, blood pressure, cholesterol), counseling (diet or exercise), and acting to improve control (when A1c, blood pressure, or cholesterol was uncontrolled).
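The benchmark step can be made concrete with a short sketch. The text above summarizes the benchmark as the average performance of the top 10% of physicians; the Python below instead follows the way the Achievable Benchmarks of Care method is often described, ranking physicians by an adjusted performance fraction (met + 1) / (eligible + 2) and pooling the best-ranked physicians until they cover at least 10% of eligible patients. That ranking and pooling rule, the `PhysicianIndicator` record, and the `achievable_benchmark` function are assumptions for illustration, not the study’s code.

```python
from dataclasses import dataclass


@dataclass
class PhysicianIndicator:
    """One physician's results on one quality indicator (hypothetical record)."""
    physician_id: str
    met: int        # eligible patients for whom the indicator was met
    eligible: int   # patients eligible for the indicator


def adjusted_performance(row: PhysicianIndicator) -> float:
    # Adds one success and two patients so that a physician with very few
    # eligible patients cannot rank first on a lucky 1-of-1 result.
    return (row.met + 1) / (row.eligible + 2)


def achievable_benchmark(rows: list, top_share: float = 0.10) -> float:
    """Pooled performance of the best-ranked physicians covering >= top_share of patients."""
    total_eligible = sum(r.eligible for r in rows)
    ranked = sorted(rows, key=adjusted_performance, reverse=True)
    met = eligible = 0
    for row in ranked:
        met += row.met
        eligible += row.eligible
        if eligible >= top_share * total_eligible:
            break
    return met / eligible


# Hypothetical data for four physicians on one indicator.
rows = [
    PhysicianIndicator("A", met=1, eligible=1),
    PhysicianIndicator("B", met=9, eligible=10),
    PhysicianIndicator("C", met=5, eligible=15),
    PhysicianIndicator("D", met=3, eligible=12),
]
print(achievable_benchmark(rows))  # 0.9
```

Physician A’s raw 100% rate ranks below B’s 90% because a single patient carries little information, so in this made-up example the benchmark is B’s pooled 90%.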

Physicians in the intervention group also received e-mail reminders every one to three weeks about new updates to the Web site.

Control description

The control Web site had four components: (1) online resources, (2) a list of educational conferences in the area, (3) an area to track and view their CME credit, and (4) a link to an external medical blog. The online resources were links to five practice guidelines and four sites for patient education materials from national organizations. The educational conferences list and the medical blog were updated regularly. Unlike their intervention counterparts, physicians in the control group did not receive performance feedback reports or e-mail reminders about the Web site or its contents.

Patient-level measures

The most recent hemoglobin A1c level recorded in the patient chart was used to assess glucose control at baseline. The main independent variable for this study was the proportion of patients with controlled diabetes (hemoglobin A1c ≤7%) for each physician’s practice. We chose this variable because it is a recommended performance measurement.19

Study engagement: Web participation

Participating physicians in both arms of the study were required to log in to access the study Web site. Between September 2006 and January 2009, we prospectively tracked and measured each physician’s engagement with the Web site by authenticating his or her identity as he or she logged onto the Web site. Server log files were tagged with date and time and linked to each visit.

We built on the work of Houston et al10 and Danaher et al12 to measure study engagement across four domains: volume, frequency, variety of components, and duration of visit. We defined volume as the total number of page views and frequency as the total number of visits. Because the variety of components was an important feature of the study’s design, we counted the number of components accessed, the number of cases completed (intervention group only), and the number of CME credits requested. Duration of access was measured as the aggregate duration of visits, where the duration of each visit was the sum of its Web page durations (the time in minutes between the start and the logged end time).23,24 Exposure was measured as the total number of weeks participating, defined as the number of weeks from first to last log-in; users who accessed the site only once were counted as participating for one week.
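As a rough illustration of how the four domains and the exposure measure could be derived from authenticated session logs, the sketch below summarizes one physician’s visits. The `Visit` record, its fields, and rounding the first-to-last log-in span up to whole weeks are assumptions; the study’s log format is not described, and cases completed and CME credits would need separate records.

```python
import math
from dataclasses import dataclass, field
from datetime import datetime


@dataclass
class Visit:
    """One authenticated visit (hypothetical log record)."""
    start: datetime
    end: datetime                               # logout or browser-close time stamp
    pages: list = field(default_factory=list)   # component name of each page viewed


def engagement_metrics(visits: list) -> dict:
    """Summarize one physician's visits into volume, frequency, variety, duration, exposure."""
    if not visits:
        return {"pages": 0, "visits": 0, "components": 0, "minutes": 0.0, "weeks": 0}
    first_login = min(v.start for v in visits)
    last_login = max(v.start for v in visits)
    span_weeks = (last_login - first_login).total_seconds() / (7 * 24 * 3600)
    return {
        "pages": sum(len(v.pages) for v in visits),                              # volume
        "visits": len(visits),                                                   # frequency
        "components": len({p for v in visits for p in v.pages}),                 # variety
        "minutes": sum((v.end - v.start).total_seconds() / 60 for v in visits),  # duration
        "weeks": max(1, math.ceil(span_weeks)),  # exposure; a single visit counts as 1 week
    }


visits = [
    Visit(datetime(2007, 1, 1, 9, 0), datetime(2007, 1, 1, 9, 20), ["cases", "cases", "timesavers"]),
    Visit(datetime(2007, 1, 15, 8, 0), datetime(2007, 1, 15, 8, 10), ["feedback", "cases"]),
]
print(engagement_metrics(visits))
# {'pages': 5, 'visits': 2, 'components': 3, 'minutes': 30.0, 'weeks': 2}
```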

The logged duration of a visit may not reflect the time actually spent, because physicians may have left the Web site open without using it for the entire period; the date and time stamp is updated only when the browser is closed or the user logs off. To address this issue, we censored the data for each visit in two ways. First, we examined the distribution of time the intervention group spent working on the cases and found that 86% to 95% of the physicians spent less than 15 minutes on any given case; we therefore censored the time spent on any given case at 15 minutes. Second, we examined the distribution of time all participants spent on any given visit and found that 92% spent less than 30 minutes; we therefore censored the time spent on any given visit at 30 minutes. These censoring methods are consistent with a standard set by organizations that track Internet use.24,25
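A minimal sketch of the duration censoring, assuming that “censored at 30 minutes” means each logged value is truncated at the cap rather than dropped. The constant names and helper functions are hypothetical, and the durations in the example are made up.

```python
VISIT_CAP_MINUTES = 30.0  # per-visit cap described in the text
CASE_CAP_MINUTES = 15.0   # per-case cap described in the text


def share_below(minutes: list, threshold: float) -> float:
    """Fraction of logged durations shorter than `threshold` (used to choose a cap)."""
    return sum(m < threshold for m in minutes) / len(minutes)


def censor(minutes: list, cap: float) -> list:
    """Truncate each logged duration at `cap` minutes (assumed reading of 'censored')."""
    return [min(m, cap) for m in minutes]


visit_minutes = [12.0, 5.0, 45.0, 240.0]  # hypothetical; 240 = browser left open
print(share_below(visit_minutes, VISIT_CAP_MINUTES))   # 0.5
print(sum(censor(visit_minutes, VISIT_CAP_MINUTES)))   # 77.0
```

Truncating rather than discarding long visits keeps every visit in the frequency count while limiting how much an idle open browser can inflate aggregate duration.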

We did not calculate other measures of participation because we have found that some are highly interrelated10 and that they require additional computation not usually offered by commercial Web-tracking software.

Statistical approach

For all main comparisons, the unit of analysis was the participating physician. We used three methods to explore the associations between the dependent variables (domains of study engagement) and the main independent variable (proportion of patients with controlled A1c at the physician level at baseline). Each dependent variable was modeled as a count, such as the number of Web pages accessed. First, for the bivariate comparisons, we classified practices according to quartile of diabetes control: physicians in the first quartile had the highest proportion of patients with hemoglobin A1c ≤7%, and physicians in the fourth quartile had the lowest. We used nonparametric trend tests to compare practice characteristics across quartiles of control. Second, we used Spearman rho to assess continuous associations between the independent variable and each dependent variable.
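The first two bivariate steps can be sketched in plain Python. `control_quartile` assigns a practice to Q1 (best) through Q4 (worst) from three cut points, such as those returned by `statistics.quantiles(shares, n=4)`, and `spearman_rho` computes Spearman rho as the Pearson correlation of average ranks, which handles ties. The function names, the example data, and the boundary rule (a value equal to a cut point goes to the better quartile) are assumptions; the nonparametric trend test itself is not reproduced here.

```python
import statistics


def control_quartile(share: float, cuts) -> int:
    """Return 1 (best control) .. 4 (worst) from three ascending cut points."""
    lower, middle, upper = cuts
    if share >= upper:
        return 1
    if share >= middle:
        return 2
    if share >= lower:
        return 3
    return 4


def average_ranks(values: list) -> list:
    """1-based ranks; tied values share the mean of the ranks they occupy."""
    order = sorted(range(len(values)), key=lambda i: values[i])
    ranks = [0.0] * len(values)
    i = 0
    while i < len(order):
        j = i
        while j + 1 < len(order) and values[order[j + 1]] == values[order[i]]:
            j += 1
        for k in range(i, j + 1):
            ranks[order[k]] = (i + j) / 2 + 1
        i = j + 1
    return ranks


def spearman_rho(x: list, y: list) -> float:
    """Spearman rho as the Pearson correlation of average ranks."""
    rx, ry = average_ranks(x), average_ranks(y)
    mx, my = statistics.fmean(rx), statistics.fmean(ry)
    cov = sum((a - mx) * (b - my) for a, b in zip(rx, ry))
    sx = sum((a - mx) ** 2 for a in rx) ** 0.5
    sy = sum((b - my) ** 2 for b in ry) ** 0.5
    return cov / (sx * sy)


shares = [0.75, 0.56, 0.41, 0.23, 0.62, 0.35, 0.50, 0.18]  # hypothetical practices
cuts = statistics.quantiles(shares, n=4)
print([control_quartile(s, cuts) for s in shares])  # [1, 2, 3, 4, 1, 3, 2, 4]
```

Applied to the four intervention-group quartile summaries in Table 1 (75%, 56%, 41%, 23% controlled against 137.0, 73.3, 68.7, 57.5 pages), `spearman_rho` gives a perfect correlation because both series are monotone; the reported rho of 0.33 comes from the 64 physician-level values, not from these summaries.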

Third, we used negative binomial regression26,27 to explore the relationship between the dependent variables and the independent variables while accounting for variable time of exposure to the intervention. Negative binomial models with exposure offsets are appropriate for count outcomes with overdispersion (variance greater than the mean) and for participants with differing exposure to the intervention. The incidence rate ratio (IRR) obtained from negative binomial regression is interpreted as a direct multiplier of the count outcome. We modeled a 10% change in the proportion of patients with A1c control at the physician level and accounted for three levels of exposure to the intervention (<1 week, ≥1 week to <12 weeks, and ≥12 weeks). Therefore, for each one-unit increase in the independent variable (a 10% increase in practice performance), the expected count of the outcome is multiplied by the IRR, holding all other covariates in the model constant.
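One conventional way to write such a model is shown below, using the common NB2 variance form; the notation is ours, and the text does not state whether exposure entered as an offset, as the three exposure categories, or both. Here $Y_i$ is an engagement count for physician $i$, $x_i$ is the proportion of patients with A1c ≤7% in units of 10 percentage points, $t_i$ is exposure time, $\mathbf{z}_i$ holds indicators for the exposure categories, and $\alpha$ is the overdispersion parameter ($\alpha \to 0$ recovers the Poisson model).

$$
\begin{aligned}
\log \mu_i &= \log t_i + \beta_0 + \beta_1 x_i + \boldsymbol{\gamma}^{\top}\mathbf{z}_i, \qquad \operatorname{Var}(Y_i) = \mu_i + \alpha\,\mu_i^{2},\\
\mathrm{IRR} &= \frac{\mathbb{E}[Y_i \mid x_i + 1]}{\mathbb{E}[Y_i \mid x_i]} = e^{\beta_1}, \qquad \mathrm{IRR}_{k\ \text{units}} = e^{k\beta_1} = \mathrm{IRR}^{k}.
\end{aligned}
$$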

We conducted sensitivity analysis by repeating the analyses using the A1c value as a continuous independent variable and obtained pairwise correlations between measures of study engagement using Spearman rho correlation coefficients. All analyses were done using SPSS 16.0 (Chicago, Illinois) and STATA SE 10.0 (College Station, Texas).

The protocol was approved by the University of Alabama at Birmingham and the University of Alabama at Tuscaloosa institutional review boards.

Results

We analyzed data from 133 physicians and 1,637 of their patients, 64 physicians from the intervention group (947 patients) and 69 physicians from the control group (690 patients), displayed in Table 1.

Table 1.

Engagement With a Study Web Site Offering Diabetes Care Resources of 133 Rural Primary Care Practices in the United States, Overall and by Quartile of A1c Control (Proportion of Patients With Diabetes With Hemoglobin A1c ≤7%), 2006–2008

| Variables by group | Overall mean | Overall SD | Q1 (best) | Q2 | Q3 | Q4 (worst) | P* | Spearman rho | P† |
|---|---|---|---|---|---|---|---|---|---|
| Intervention (n = 64) | | | | | | | | | |
| Practice-level A1c ≤7% | 49.3% | 19.3% | 75% | 56% | 41% | 23% | | | |
| Mean practice-level A1c, % | 7.5 | 0.8 | 6.7 | 7.2 | 7.7 | 8.6 | | | |
| Study engagement: volume | | | | | | | | | |
| Total number of pages viewed | 83.6 | 68.8 | 137.0 | 73.3 | 68.7 | 57.5 | .007 | 0.33 | .007 |
| Frequency | | | | | | | | | |
| Total number of visits | 6.6 | 4.6 | 10.6 | 5.4 | 5.6 | 4.8 | .007 | 0.32 | .009 |
| Variety of components | | | | | | | | | |
| No. of components viewed | 4.8 | 2.3 | 6.2 | 4.8 | 4.0 | 4.2 | .007 | 0.36 | .004 |
| No. of cases completed | 1.1 | 1.1 | 1.9 | 1.1 | 0.9 | 0.8 | .01 | 0.32 | .01 |
| No. of CME credits obtained | 3.8 | 3.2 | 4.2 | 3.3 | 4.2 | 3.6 | .81 | 0.02 | NS |
| Duration of access | | | | | | | | | |
| Aggregate duration of visits, minutes | 48.4 | 43.2 | 80.1 | 40.6 | 41.4 | 32.9 | .003 | 0.36 | .04 |
| Number of weeks participating | 57.9 | 27.5 | 69.7 | 56.9 | 58.1 | 46.5 | .06 | 0.26 | .04 |
| Control (n = 69) | | | | | | | | | |
| Practice-level A1c ≤7% | 48.7% | 23.6% | 77% | 56% | 39% | 19% | | | |
| Mean practice-level A1c, % | 7.4 | 1.2 | 6.6 | 7.0 | 7.7 | 8.6 | | | |
| Study engagement: volume | | | | | | | | | |
| Total number of pages viewed | 12.9 | 17.4 | 9.4 | 17.8 | 9.3 | 13.3 | NS | −0.08 | NS |
| Frequency | | | | | | | | | |
| Total number of visits | 1.9 | 1.4 | 1.5 | 2.2 | 1.6 | 2.2 | NS | −0.05 | NS |
| Variety of components | | | | | | | | | |
| No. of components viewed | 2.5 | 1.7 | 1.9 | 2.9 | 2.7 | 2.6 | NS | −0.12 | NS |
| No. of cases completed | N/A | N/A | N/A | N/A | N/A | N/A | N/A | N/A | N/A |
| No. of CME credits obtained | 3.3 | 3.3 | 4.3 | 3.0 | 2.5 | 3.2 | NS | 0.17 | NS |
| Duration of access | | | | | | | | | |
| Aggregate duration of visits, minutes | 12.8 | 18.5 | 9.2 | 19.9 | 8.0 | 11.7 | NS | 0.0 | NS |
| Number of weeks participating | 7.3 | 14.8 | 4.9 | 10.6 | 8.5 | 5.6 | NS | −0.02 | NS |

*P values for differences among quartiles based on a nonparametric test for linear trend.

†Spearman rho P values for the participation measure and % A1c ≤7%.

NS indicates not significant; N/A, not applicable (cases were not available to the control group).

The proportion of patients with hemoglobin A1c ≤7% for the average practice was 49.3% in the intervention group and 48.7% for the control group. The mean practice-level A1c level was not different between the intervention (7.5, SD 0.8) and control (7.4, SD 1.2) practices (P = .6). As expected, the rates of diabetes control varied dramatically from the worst (19%–23%) to the best quartile (75%–77%) of practice control across all practices (Table 1). The mean number of weeks participating was higher for the intervention group (57.9, SD 27.5) compared with the control group (7.3, SD 14.8; P < .001).

In the intervention group, the study engagement measures varied significantly across quartiles of A1c control, with the exception of number of CME credits obtained (and, marginally, number of weeks participating, P = .06) (Table 1). Importantly, physicians in practices in the worst quartiles of A1c control participated less, regardless of the domain measuring study engagement (volume, frequency, variety of components, duration of visit). The decrease in participation was largely graded across quartiles. For example, the total number of pages viewed decreased as the quartile of A1c control worsened (137.0, 73.3, 68.7, 57.5; P = .007). Similarly, with the exception of number of CME credits obtained, study engagement increased as A1c control improved (correlations 0.26–0.36; all P < .05; Table 2).

Table 2.

Spearman Correlation Coefficients for Participating Physicians’ Practice-Level Glucose Control of Diabetes Patients and Engagement With a Study Web Site Offering Diabetes Care Resources, 2006–2008

| | Practice-level A1c ≤7% | Mean practice-level A1c | Total no. of pages viewed | Total no. of visits | No. of components viewed | No. of cases completed | No. of CME credits obtained | Aggregate duration of visits | No. of weeks participating |
|---|---|---|---|---|---|---|---|---|---|
| Intervention (n = 64) | | | | | | | | | |
| Practice-level A1c ≤7% | 1.0 | | | | | | | | |
| Mean practice-level A1c | −0.86 | 1.0 | | | | | | | |
| Total no. of pages viewed | 0.33 | −0.33 | 1.0 | | | | | | |
| Total no. of visits | 0.32 | −0.30 | 0.80 | 1.0 | | | | | |
| No. of components viewed | 0.36 | −0.37 | 0.86 | 0.71 | 1.0 | | | | |
| No. of cases completed | 0.32 | −0.36 | 0.84 | 0.59 | 0.71 | 1.0 | | | |
| No. of CME credits obtained | 0.02* | −0.02* | 0.82 | 0.53 | 0.45 | 0.61 | 1.0 | | |
| Aggregate duration of visits | 0.36 | −0.33 | 0.89 | 0.79 | 0.79 | 0.74 | 0.59 | 1.0 | |
| Number of weeks participating | 0.26 | −0.13* | 0.38 | 0.48 | 0.38 | 0.24 | 0.19* | 0.41 | 1.0 |
| Control (n = 69) | | | | | | | | | |
| Practice-level A1c ≤7% | 1.0 | | | | | | | | |
| Mean practice-level A1c | −0.81 | 1.0 | | | | | | | |
| Total no. of pages viewed | −0.08* | 0.06* | 1.0 | | | | | | |
| Total no. of visits | −0.05* | 0.13* | 0.67 | 1.0 | | | | | |
| No. of components viewed | −0.12* | 0.12* | 0.89 | 0.59 | 1.0 | | | | |
| No. of cases completed† | N/A | N/A | N/A | N/A | N/A | N/A | | | |
| No. of CME credits obtained | 0.17* | −0.13* | 0.72 | 0.24* | N/A | N/A | 1.0 | | |
| Aggregate duration of visits | 0.0* | 0.01* | 0.89 | 0.64 | 0.77 | N/A | 0.56 | 1.0 | |
| Number of weeks participating | −0.03* | 0.10* | 0.69 | 0.81 | 0.66 | N/A | 0.29* | 0.72 | 1.0 |

*P > .05; all other values P < .05.

†Cases not available to the control practices.

In the control group, study engagement was significantly lower than in the intervention group. In addition, engagement measures neither differed across quartiles of A1c control nor correlated with A1c control (Table 1). The sensitivity analysis using hemoglobin A1c as a continuous variable yielded similar results for the quartile and correlation analyses in both the intervention and control groups (data not shown).

Study engagement measures correlated with each other in the intervention group (correlations 0.45–0.89) and in the control group (0.56–0.89, except number of CME credits obtained; Table 2).

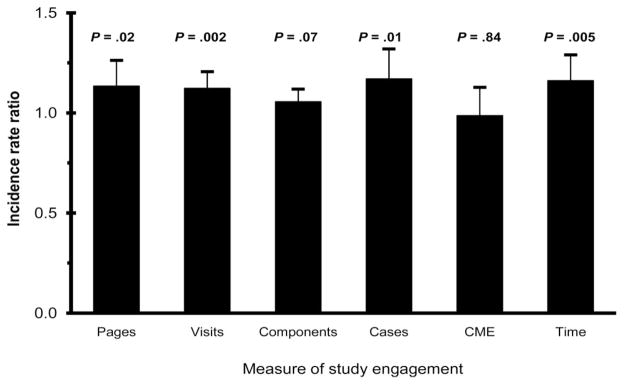

The IRR for study engagement measures in the intervention group is shown in Figure 1. For any given 10% increase in proportion of patients with A1c controlled, the physician viewed 1.13 times more pages (95% CI: 1.02–1.26; P = .02). A similar finding was observed for other measures of engagement, with the exception of number of CME credits obtained.
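Because the model is log-linear, the reported IRR compounds over larger differences. The short sketch below, assuming one unit equals a 10-percentage-point difference in the share of patients with A1c ≤7%, shows the implied multiplier for other differences. It is arithmetic on the reported point estimate only, ignores the confidence interval, and the function name is ours.

```python
def irr_for_change(irr_per_unit: float, units: float) -> float:
    """Multiplicative change in the expected count for a change of `units` in x."""
    return irr_per_unit ** units


print(irr_for_change(1.13, 4))   # about 1.63: 40 percentage points more patients controlled
print(irr_for_change(1.13, -1))  # about 0.885: 10 percentage points fewer patients controlled
```

Whether the log-linear form holds across the full range of practice performance, for example from the worst to the best quartile in Table 1, is itself an assumption of the model.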

Figure 1.

Incidence rate ratios (IRRs) for measures of study engagement, intervention group (n = 64). The IRR is interpreted as a direct multiplier of the count outcome. For example, for any given 10% increase in % A1c controlled, the physician viewed 1.13 times more pages (95% CI: 1.02–1.26; P = .02). IRRs were obtained using negative binomial regression between the dependent variable (study engagement) and the independent variable (proportion of patients with controlled diabetes; hemoglobin A1c ≤7%) while accounting for variable time of exposure to the intervention. “Pages” is defined as total number of pages viewed, “visits” as total number of visits, “components” as number of components viewed, “cases” as number of cases completed, “CME” as number of CME credits obtained, and “time” as aggregate duration of visits.

Discussion

In a population of physicians participating in the RDOC study, engagement with the study Web site varied significantly. Physicians randomized to the intervention group were more engaged with the study Web site than their control counterparts. Furthermore, within the group randomized to the intervention Web site, physicians serving patients with the best A1c control had the highest level of engagement, whereas physicians caring for patients with the worst A1c control had the lowest. This finding fails to support our hypothesis that lower-performing physicians would seek information at a higher rate than their higher-performing counterparts.

Because the intervention was designed to be more engaging than the control, offering a more interactive Web experience, performance feedback, and repeated e-mail reminders, differences in engagement between the intervention and control groups were anticipated. However, the pattern of engagement that emerged within the intervention group according to patients’ baseline A1c levels was not expected. Perhaps higher-performing physicians in the intervention group were more likely to be interested and adept in using technology to support patient care, a characteristic that may indicate that they are more up-to-date or otherwise better prepared to manage their patients. Research has shown an important relationship between the use of technology and quality of care.28 It is also possible that physicians of the patients with the worst A1c control were simply too busy caring for their patients to participate. For physicians in busy practices, seeking external resources to support patient care takes precious time away from seeing patients.

The level of engagement may also reflect the inaccuracy of physicians’ self-assessed learning needs; most people cannot independently identify areas for self-improvement as accurately as they can when working toward an objective standard.29 In Choo’s11 conceptual framework, cognitive, affective, and situational factors contribute to the process of identification and satisfaction of informational needs, where individuals recognize gaps in their knowledge, seek information to close the gap, and ultimately apply the information to their situation. However, if the process of identifying learning needs is not reliant on an objective measure, like patients’ blood glucose level, lower-performing physicians may not be aware of the gap and, thus, may not seek information that could help them improve.

Implications for research and practice

Our findings have important implications for Web-based randomized clinical trials and CME programs. With respect to research trials, this study highlights three points. First, an intervention’s beneficial outcomes emerge more clearly among higher-risk groups.30 Therefore, factoring risk categories into power and sample size calculations is warranted. Second, a study’s capacity to identify the benefits of an intervention increases as intended use of the intervention increases; low adherence to the intervention biases findings toward the null hypothesis. Finally, planning a priori for analyses stratified by level of risk, and for per-protocol exploratory analyses, may be necessary to identify the mechanisms or relationships at work. We are not aware of any similar study reporting that levels of engagement in Web-based interventions vary by patient risk.

Our data suggest that the physicians who might benefit the most from this Web-based CME program were also the least likely to engage in it. Extra or different efforts may be needed to engage the physicians with the lowest performance as defined by one diabetes quality indicator. Whether these findings are similar for other quality measures is unclear, but worthy of study. If CME is to be important and relevant in today’s health care environment, it must be focused on helping physicians identify opportunities to reduce gaps between their existing practice and optimum care and offering meaningful activities to help them achieve such performance improvements. Such activities should be designed to engage the learner and might take new forms, using spaced education31,32 models and performance improvement formats within electronic systems delivered at the point of care. It is more reasonable to expect an interactive, integrated, intense, and sustained program of professional development with customized outcomes feedback directed at the point of care to lead to improvements in practice outcomes over time.

Limitations

Our study was not designed to understand the mechanisms underlying these findings. Furthermore, we make no claim with these baseline data as to whether participants’ engagement in this study positively impacted their patients’ A1c control, adherence, or management plans at follow-up; future analysis of the complete RDOC dataset will explore this hypothesis.

Conclusions

We conclude that engagement in Web-based interventions and CME programs is, in fact, measurable and has implications for research and online education. Engagement in this Web-based study differed in important ways among physicians according to the nature of the Web-based activity and, among the participants assigned to the more interactive Web site, according to their patients’ glycemic control. Contrary to our hypothesis, lower-performing physicians using the more interactive Web site sought information at a lower rate than their higher-performing counterparts. Developers of online education should consider such factors and seek to understand what drives engagement so that physicians with the greatest opportunity for improvement are engaged in an effective and meaningful way. The reasons for these differences, and methods of designing learning activities that address them, should be the subject of future research.

Acknowledgments

Funding/Support: This study was funded by the National Institute of Diabetes and Digestive and Kidney Diseases (NIDDK) 5R18DK065001 to Dr. Allison. Dr. Salanitro was supported by a Veterans Affairs Quality Scholars fellowship. The NIDDK had no role in the design and conduct of the study; in the collection, management, analysis, and interpretation of the data; or in the preparation, review, or approval of the manuscript.

Footnotes

Other disclosures: None.

Ethical approval: This study was approved by the institutional review boards of the University of Alabama at Birmingham and the University of Alabama at Tuscaloosa.

Disclaimer: The opinions expressed in this article are those of the authors alone and do not reflect the views of the Department of Veterans Affairs.

Previous presentations: The abstract of an earlier version of this article was presented at the 2008 Southern Society of General Internal Medicine Meeting, New Orleans, Louisiana.

Contributor Information

Katie Crenshaw, Assistant director, Division of Continuing Medical Education, University of Alabama at Birmingham, Birmingham, Alabama.

William Curry, Professor of medicine, Division of General Internal Medicine, and associate dean for primary care and rural health, University of Alabama at Birmingham, Birmingham, Alabama.

Amanda H. Salanitro, Fellow, Veterans Affairs National Quality Scholars Program, Birmingham Veterans Affairs Medical Center, and instructor of medicine, Division of General Internal Medicine, University of Alabama at Birmingham, Birmingham, Alabama.

Monika M. Safford, Associate professor of medicine, Division of Preventive Medicine, and assistant dean for continuing medical education, University of Alabama at Birmingham, Birmingham, Alabama.

Thomas K. Houston, Scientist, Center for Health Quality, Outcomes & Economic Research, Bedford VAMC, Bedford, Massachusetts; professor of quantitative health sciences and medicine, chief, Division of Health Informatics and Implementation Science, and assistant dean for continuing medical education/medical education research, University of Massachusetts Medical School, Worcester, Massachusetts.

Jeroan J. Allison, Vice chair, Department of Quantitative Health Sciences, associate vice provost for health disparities, and professor of quantitative health sciences, University of Massachusetts Medical School, Worcester, Massachusetts.

Carlos A. Estrada, Director, Veterans Affairs National Quality Scholars Program, and scientist, Deep South Center on Effectiveness, REAP Center for Surgical, Medical Acute Care Research & Transitions, Birmingham Veterans Affairs Medical Center, Birmingham, Alabama, and professor of medicine and director, Division of General Internal Medicine, University of Alabama at Birmingham, Birmingham, Alabama.

References

1. Podichetty VK, Booher J, Whitfield M, Biscup RS. Assessment of Internet use and effects among health professionals: A cross sectional survey. Postgrad Med J. 2006;82:274–279. doi: 10.1136/pgmj.2005.040675.

2. Bennett NL, Casebeer LL, Kristofco RE, Strasser SM. Physicians’ Internet information-seeking behaviors. J Contin Educ Health Prof. 2004;24:31–38. doi: 10.1002/chp.1340240106.

3. Accreditation Council for Continuing Medical Education. ACCME Annual Report Data 2008. Available at: http://www.accme.org/dir_docs/doc_upload/1f8dc476-246a-4e8e-91d3-d24ff2f5bfec_uploaddocument.pdf. Accessed May 13, 2010.

4. Izkowitz M. Researchers track Web’s presence in physician learning. Med Mark Media. 2007;42:24.

5. Curran VR, Fleet L. A review of evaluation outcomes of Web-based continuing medical education. Med Educ. 2005;39:561–567. doi: 10.1111/j.1365-2929.2005.02173.x.

6. Casebeer L, Engler S, Bennett N, et al. A controlled trial of the effectiveness of Internet continuing medical education. BMC Med. 2008;6:37. doi: 10.1186/1741-7015-6-37.

7. Allison JJ, Kiefe CI, Wall T, et al. Multicomponent Internet continuing medical education to promote chlamydia screening. Am J Prev Med. 2005;28:285–290. doi: 10.1016/j.amepre.2004.12.013.

8. Fordis M, King JE, Ballantyne CM, et al. Comparison of the instructional efficacy of Internet-based CME with live interactive CME workshops: A randomized controlled trial. JAMA. 2005;294:1043–1051. doi: 10.1001/jama.294.9.1043.

9. Wantland JD, Portillo JC, Holzemer LW, Slaughter R, McGhee ME. The effectiveness of Web-based vs. non-Web-based interventions: A meta-analysis of behavioral change outcomes. J Med Internet Res. 2004;6:e40. doi: 10.2196/jmir.6.4.e40.

10. Houston TK, Funkhouser E, Allison JJ, Levine DA, Williams OD, Kiefe CI. Multiple measures of provider participation in Internet delivered interventions. Stud Health Technol Inform. 2007;129(pt 2):1401–1405.

11. Choo CW. The Knowing Organization: How Organizations Use Information to Construct Meaning, Create Knowledge, and Make Decisions. New York, NY: Oxford University Press; 1998.

12. Danaher BG, Boles SM, Akers L, Gordon JS, Severson HH. Defining participant exposure measures in Web-based health behavior change programs. J Med Internet Res. 2006;8:e15. doi: 10.2196/jmir.8.3.e15.

13. Beyer BK. How to Conduct a Formative Evaluation. Alexandria, Va: Association for Supervision and Curriculum Development; 1995.

14. Safford MM, Shewchuk R, Qu H, et al. Reasons for not intensifying medications: Differentiating “clinical inertia” from appropriate care. J Gen Intern Med. 2007;22:1648–1655. doi: 10.1007/s11606-007-0433-8.

15. American Board of Internal Medicine. Diabetes Practice Improvement Module. Available at: http://www.abim.org/moc/earning-points/productinfo-demo-ordering.aspx. Accessed May 13, 2010.

16. American Board of Family Medicine. Maintenance of Certification for Family Physicians. Available at: https://www.theabfm.org/moc/index.aspx. Accessed May 13, 2010.

17. Peabody JW, Luck J, Glassman P, et al. Measuring the quality of physician practice by using clinical vignettes: A prospective validation study. Ann Intern Med. 2004;141:771–780. doi: 10.7326/0003-4819-141-10-200411160-00008.

18. Casebeer L, Allison J, Spettell CM. Designing tailored Web-based instruction to improve practicing physicians’ chlamydial screening rates. Acad Med. 2002;77:929. doi: 10.1097/00001888-200209000-00032.

19. American Diabetes Association. Standards of medical care in diabetes—2008. Diabetes Care. 2008;31(suppl 1):S5–S11. doi: 10.2337/dc08-S012.

20. Chobanian AV, Bakris GL, Black HR, et al. The seventh report of the Joint National Committee on Prevention, Detection, Evaluation, and Treatment of High Blood Pressure: The JNC 7 report. JAMA. 2003;289:2560–2572. doi: 10.1001/jama.289.19.2560.

21. Houston TK, Wall T, Allison JJ, et al. Implementing achievable benchmarks in preventive health: A controlled trial in residency education. Acad Med. 2006;81:608–616. doi: 10.1097/01.ACM.0000232410.97399.8f.

22. Kiefe CI, Allison JJ, Williams OD, Person SD, Weaver MT, Weissman NW. Improving quality improvement using achievable benchmarks for physician feedback: A randomized controlled trial. JAMA. 2001;285:2871–2879. doi: 10.1001/jama.285.22.2871.

23. Jansen BJ, Spink A, Pedersen J. A temporal comparison of AltaVista Web searching. J Am Soc Inf Sci Technol. 2005;56:559–570.

24. Creese G, Burby J. Web Analytics Key Metrics and KPIs (Version 1.0). Available at: http://www.kaushik.net/avinash/waa-kpi-definitions-1-0.pdf. Accessed May 13, 2010.

25. Peterson ET. Website Measurement Hacks. Sebastopol, Calif: O’Reilly Media; 2005.

26. Coxe S, West SG, Aiken LS. The analysis of count data: A gentle introduction to Poisson regression and its alternatives. J Pers Assess. 2009;91:121–136. doi: 10.1080/00223890802634175.

27. Hutchinson MK, Holtman MC. Analysis of count data using Poisson regression. Res Nurs Health. 2005;28:408. doi: 10.1002/nur.20093.

28. Chaudhry B, Wang J, Wu S, et al. Systematic review: Impact of health information technology on quality, efficiency, and costs of medical care. Ann Intern Med. 2006;144:E-12–E-22. doi: 10.7326/0003-4819-144-10-200605160-00125.

29. Davis DA, Mazmanian PE, Fordis M, Van Harrison R, Thorpe KE, Perrier L. Accuracy of physician self-assessment compared with observed measures of competence: A systematic review. JAMA. 2006;296:1094–1102. doi: 10.1001/jama.296.9.1094.

30. Hayward RA, Hofer TP, Vijan S. Narrative review: Lack of evidence for recommended low-density lipoprotein treatment targets: A solvable problem. Ann Intern Med. 2006;145:520–530. doi: 10.7326/0003-4819-145-7-200610030-00010.

31. Kerfoot BP, Brotschi E. Online spaced education to teach urology to medical students: A multi-institutional randomized trial. Am J Surg. 2009;197:89–95. doi: 10.1016/j.amjsurg.2007.10.026.

32. Matzie KA, Kerfoot BP, Hafler JP, Breen EM. Spaced education improves the feedback that surgical residents give to medical students: A randomized trial. Am J Surg. 2009;197:252–257. doi: 10.1016/j.amjsurg.2008.01.025.