SUMMARY

Parameters for latent transition analysis (LTA) are easily estimated by maximum likelihood (ML) or by Bayesian methods via Markov chain Monte Carlo (MCMC). However, unusual features in the likelihood can cause difficulties in ML and Bayesian inference and estimation, especially with small samples. In this study we explore several problems in drawing inference for LTA in the context of a simulation study and a substance use example. We argue that when conventional ML and Bayesian estimates behave erratically with small samples, the problems can often be alleviated with a small amount of prior input. This paper proposes a dynamic data-dependent prior for LTA with small samples and compares the performance of the estimation methods with the proposed prior in drawing inference.

Keywords: latent transition analysis, small samples, MCMC, EM algorithm

1. INTRODUCTION

The analysis of stage-sequential development plays an important role in behavioral and biomedical applications, particularly in the area of substance use prevention and treatment. Using a stage-sequential process, prevention scientists are able to explore risk factors and thus find optimal opportunities for intervening in the substance use onset process. New developments in methods for the analysis of stage-sequential processes include latent transition analysis (LTA). LTA is a latent Markov model, where stage membership at each time is unobserved, but measured with a set of manifest items. In LTA the measurement model at each time point is a latent class model—the associations among manifest items are explained by the underlying categorical latent variable—and stage-sequential development is summarized in transition probability of latent classes over two consecutive times. LTA has been widely applied to the study of substance use behaviors, including: modeling yearly change in risk behavior among injection drug users [1]; exploring risk factors of adolescent substance use onset [2, 3]; assessing transitions between the stages of smoking behavior in the transtheoretical model of behavior change [4]; and exploring change in states of depression throughout adolescence [5].

Maximum likelihood (ML) estimates for LTA are easily obtained by using the EM algorithm [6]. Bayesian inference also has been applied by many researchers via Markov chain Monte Carlo (MCMC) [7, 8, 9, 10, 11, 12, 13]. However, the LTA likelihood contains some unusual features that can cause difficulties in ML and Bayesian inference [14, 15, 16, 17]. Specifically, when the sample size is small and many item-response probabilities are not close to zero or one, the conventional ML and Bayesian estimates behave erratically.

In what follows we explore difficulties in small-sample inference for LTA by comparing an ML approach with an MCMC approach in the context of a simulation study. The current study provides a detailed explanation of estimation strategies with possible complications, and their performance is evaluated in terms of estimates and intervals over repeated samples. We show that problems often may be alleviated with a small amount of prior information for LTA with small samples. We then examine stage-sequential patterns of female adolescent substance use and compare the results from the various proposed strategies.

2. LATENT TRANSITION MODEL AND ESTIMATION ALGORITHMS

Latent transition models are based on latent class theory, which posits that homogeneous subgroups (i.e., latent classes) of individuals can be identified based on their responses to manifest items. In LTA the manifest items are measured repeatedly over time to identify latent classes at each occasion, and the probabilities of transitions over time in latent class membership are estimated. For example, Chung, Park, and Lanza [2] used LTA to model stage-sequential patterns of cigarette and alcohol use in order to investigate the relation between pubertal status and substance use across a range of ages. They identified five classes (No-use, Alcohol only, Cigarettes only, Alcohol and cigarettes, and Cigarettes and drunk) and found that experiencing puberty is related to increased substance use for females in Grades 7–12.

Parameters in the LTA model include class membership probability at time 1, transition probabilities from time 1 to time 2, time 2 to time 3, and so on, and item-response probabilities conditional on latent class. Let L = (L1, …, LT) be the latent class membership from initial time t = 1 to time T, where Lt = 1, …, L. Correspondingly, let Yt = (Y1t, …, YMt) be a vector of M manifest items measuring the class variable Lt, where each variable Ymt takes values 1, …, rm for t = 1, …, T. The joint probability that the ith individual belongs to l = (l1, …, lT) and provides item responses yi1, …, yiT would be

$$P[L = l, Y_1 = y_{i1}, \ldots, Y_T = y_{iT}] = \delta_{l_1} \prod_{t=2}^{T} \tau^{(t)}_{l_t \mid l_{t-1}} \prod_{t=1}^{T} \prod_{m=1}^{M} \prod_{k=1}^{r_m} \rho_{mkt \mid l_t}^{I(y_{imt} = k)} \tag{1}$$

where $\delta_{l_1} = P[L_1 = l_1]$, $\tau^{(t)}_{l_t \mid l_{t-1}} = P[L_t = l_t \mid L_{t-1} = l_{t-1}]$, $\rho_{mkt \mid l_t} = P[Y_{mt} = k \mid L_t = l_t]$, and $I(\cdot)$ is the indicator function. We assume that Y1t, …, YMt are conditionally independent within each class of lt for t = 1, …, T. This assumption, called local independence, allows us to draw inference about the latent class variable [18]. We also assume that the sequence Lt constitutes a first-order Markov chain for t = 2, …, T. In (1), only the marginal probability of class membership at initial time t = 1, δl1, is estimated; the marginal probabilities of class membership at time t (≥ 2) are not directly estimated, but rather are a function of other parameters. The marginal prevalence of each class at time t (≥ 2) can be calculated as

$$P[L_t = l_t] = \sum_{l_1=1}^{L} \cdots \sum_{l_{t-1}=1}^{L} \delta_{l_1} \prod_{s=2}^{t} \tau^{(s)}_{l_s \mid l_{s-1}}.$$

Using (1), the contribution of the ith individual to the likelihood function of Y1, …, YT is given by

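$$P[Y_1 = y_{i1}, \ldots, Y_T = y_{iT}] = \sum_{l_1=1}^{L} \cdots \sum_{l_T=1}^{L} \delta_{l_1} \prod_{t=2}^{T} \tau^{(t)}_{l_t \mid l_{t-1}} \prod_{t=1}^{T} \prod_{m=1}^{M} \prod_{k=1}^{r_m} \rho_{mkt \mid l_t}^{I(y_{imt} = k)} \tag{2}$$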
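Because the class sequence is a first-order Markov chain, the class prevalences at later times can be obtained by propagating the time-1 prevalences through the transition matrices. The following is a minimal sketch of that calculation; the function name `marginal_prevalence` and the parameter values are hypothetical and chosen only for illustration.

```python
def marginal_prevalence(delta, taus):
    """Return the class prevalences at times 1, ..., T as a list of lists.

    delta[a]      : P[L_1 = a]
    taus[t][a][b] : P[L_{t+2} = b | L_{t+1} = a], one matrix per transition
    """
    prevalences = [list(delta)]
    for tau in taus:
        prev = prevalences[-1]
        n_classes = len(prev)
        # One step of the Markov chain: sum over the class at the previous time.
        prevalences.append(
            [sum(prev[a] * tau[a][b] for a in range(n_classes))
             for b in range(n_classes)]
        )
    return prevalences


# Hypothetical two-class, three-wave example with time-homogeneous transitions.
delta = [0.6, 0.4]
tau = [[0.9, 0.1], [0.2, 0.8]]
prev = marginal_prevalence(delta, [tau, tau])
```

Here `prev[0]` is the time-1 prevalence and `prev[1]`, `prev[2]` are the implied prevalences at times 2 and 3.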

For simplicity, consider a sample of n individuals who responded to M binary items measured at two time periods. We hereafter consider the constrained LTA model where the item-response probabilities (ρ-parameters) are constrained to be equal across time, although an extension to unconstrained LTA is straightforward. For our example, the likelihood function (2) is reduced to

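$$P[y_{i1}, y_{i2}] = \sum_{l_1=1}^{L} \sum_{l_2=1}^{L} \delta_{l_1} \tau_{l_2 \mid l_1} \prod_{t=1}^{2} \prod_{m=1}^{M} \prod_{k=1}^{2} \rho_{mk \mid l_t}^{I(y_{imt} = k)} \tag{3}$$

where τl2|l1 = P[L2 = l2 | L1 = l1]. Note that ρmkt|lt in (1) is reduced to ρmk|lt. In (3), the free parameters are θ = (δ, τ1, …, τL, ρ1, …, ρL), where δ = (δ1, …, δL−1), τl = (τ1|l, …, τL−1|l) and ρl = (ρ11|l, …, ρM1|l) for l = 1, …, L.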
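The two-time likelihood contribution in (3) can be evaluated directly by summing over all pairs of classes. The sketch below assumes binary items coded 1 and 2 and stores only the probability of response 1 for each item and class; the function names are hypothetical, and the parameter values are those of the two-class, two-item illustration used in the simulations of this paper.

```python
from itertools import product


def response_prob(y_t, rho_l):
    """P[Y_t = y_t | L_t = l] under local independence.

    y_t   : responses to the M binary items at one time (each 1 or 2)
    rho_l : rho_l[m] = P[Y_m = 1 | class l]
    """
    p = 1.0
    for resp, r in zip(y_t, rho_l):
        p *= r if resp == 1 else 1.0 - r
    return p


def likelihood(y1, y2, delta, tau, rho):
    """Contribution of one individual to the two-time likelihood."""
    n_classes = len(delta)
    return sum(
        delta[a] * tau[a][b]
        * response_prob(y1, rho[a]) * response_prob(y2, rho[b])
        for a, b in product(range(n_classes), repeat=2)
    )


delta = [0.5, 0.5]
tau = [[0.5, 0.5], [0.5, 0.5]]
rho = [[0.2, 0.4], [0.6, 0.8]]

# The contributions of all 16 response patterns should sum to one.
patterns = list(product([1, 2], repeat=2))
total = sum(likelihood(y1, y2, delta, tau, rho)
            for y1 in patterns for y2 in patterns)
```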

Under ordinary circumstances, the ML estimates for θ solve the score equation, ∂ log Πi P[yi1, yi2]/∂θ = 0. As with many finite mixtures, ML estimates for LTA can be computed using an EM algorithm [6]. For the E-step, we compute the conditional probability that each individual is a member of class l1 at t = 1 and class l2 at t = 2 given their item responses yi = (yi1, yi2) and current estimates θ̂ for the parameters,

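$$\hat{\eta}_i(l_1, l_2) = P[L_1 = l_1, L_2 = l_2 \mid y_i, \hat{\theta}] = \frac{\hat{\delta}_{l_1} \hat{\tau}_{l_2 \mid l_1} \prod_{t=1}^{2} \prod_{m=1}^{M} \prod_{k=1}^{2} \hat{\rho}_{mk \mid l_t}^{I(y_{imt} = k)}}{\sum_{l_1'=1}^{L} \sum_{l_2'=1}^{L} \hat{\delta}_{l_1'} \hat{\tau}_{l_2' \mid l_1'} \prod_{t=1}^{2} \prod_{m=1}^{M} \prod_{k=1}^{2} \hat{\rho}_{mk \mid l_t'}^{I(y_{imt} = k)}} \tag{4}$$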

In the M-step, we update the parameter estimates by

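$$\hat{\delta}_{l_1} = \frac{\hat{n}^{(1)}_{l_1}}{n}, \qquad \hat{\tau}_{l_2 \mid l_1} = \frac{\hat{n}(l_1, l_2)}{\hat{n}^{(1)}_{l_1}}, \qquad \hat{\rho}_{mk \mid l} = \frac{\hat{n}_{mk \mid l}}{\hat{n}^{(1)}_{l} + \hat{n}^{(2)}_{l}} \tag{5}$$

where $\hat{n}(l_1, l_2) = \sum_i \hat{\eta}_i(l_1, l_2)$, $\hat{n}^{(1)}_{l_1} = \sum_{l_2} \hat{n}(l_1, l_2)$, $\hat{n}^{(2)}_{l_2} = \sum_{l_1} \hat{n}(l_1, l_2)$, and $\hat{n}_{mk \mid l} = \sum_i \left[ I(y_{im1} = k) \sum_{l_2} \hat{\eta}_i(l, l_2) + I(y_{im2} = k) \sum_{l_1} \hat{\eta}_i(l_1, l) \right]$. Iterating between these two steps produces a sequence of parameter estimates that converges reliably to a local or global maximum of the likelihood function [16, 17, 19].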
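The E-step and M-step described above can be sketched for the constrained two-time model with binary items as follows. This is an illustration under stated assumptions, not the authors' implementation: the function names, the toy data set, the starting values, the stopping rule on the change in log-likelihood, and the fallback used when a class is empty are all hypothetical choices.

```python
import math
from itertools import product


def response_prob(y_t, rho_l):
    """P[Y_t = y_t | class l]; rho_l[m] = P[Y_m = 1 | class l]."""
    p = 1.0
    for resp, r in zip(y_t, rho_l):
        p *= r if resp == 1 else 1.0 - r
    return p


def e_step(data, delta, tau, rho):
    """Posterior class probabilities eta[i][(a, b)] and the log-likelihood."""
    pairs = list(product(range(len(delta)), repeat=2))
    eta, loglik = [], 0.0
    for y1, y2 in data:
        joint = {(a, b): delta[a] * tau[a][b]
                 * response_prob(y1, rho[a]) * response_prob(y2, rho[b])
                 for a, b in pairs}
        total = sum(joint.values())
        loglik += math.log(total)
        eta.append({pair: v / total for pair, v in joint.items()})
    return eta, loglik


def m_step(data, eta, n_classes, n_items):
    """Update delta, tau and rho from the expected counts."""
    n = len(data)
    n_joint = [[sum(e[(a, b)] for e in eta) for b in range(n_classes)]
               for a in range(n_classes)]
    n1 = [sum(row) for row in n_joint]
    delta = [c / n for c in n1]
    # Assumption: an empty class at time 1 gets a uniform transition row.
    tau = [[n_joint[a][b] / n1[a] if n1[a] > 0 else 1.0 / n_classes
            for b in range(n_classes)] for a in range(n_classes)]
    rho = []
    for l in range(n_classes):
        yes, weight = [0.0] * n_items, 0.0
        for (y1, y2), e in zip(data, eta):
            w1 = sum(e[(l, b)] for b in range(n_classes))  # class l at time 1
            w2 = sum(e[(a, l)] for a in range(n_classes))  # class l at time 2
            weight += w1 + w2
            for m in range(n_items):
                yes[m] += w1 * (y1[m] == 1) + w2 * (y2[m] == 1)
        rho.append([yes[m] / weight if weight > 0 else 0.5
                    for m in range(n_items)])
    return delta, tau, rho


def em(data, delta, tau, rho, tol=1e-7, max_iter=500):
    """Iterate E- and M-steps until the log-likelihood gain falls below tol."""
    trace = []
    for _ in range(max_iter):
        eta, loglik = e_step(data, delta, tau, rho)
        trace.append(loglik)
        if len(trace) > 1 and trace[-1] - trace[-2] < tol:
            break
        delta, tau, rho = m_step(data, eta, len(delta), len(rho[0]))
    return delta, tau, rho, trace


# Toy data: (time-1 responses, time-2 responses), coded 1 = yes, 2 = no.
data = [((1, 1), (1, 1)), ((2, 2), (2, 2)), ((1, 2), (1, 1)), ((2, 2), (1, 2)),
        ((2, 1), (2, 2)), ((1, 1), (2, 1)), ((2, 2), (2, 1)), ((1, 1), (1, 2))]
start = ([0.6, 0.4], [[0.7, 0.3], [0.3, 0.7]], [[0.3, 0.3], [0.7, 0.7]])
delta_hat, tau_hat, rho_hat, trace = em(data, *start)
```

The list `trace` holds the log-likelihood at each iteration and should never decrease, which is a convenient check on an implementation of this kind.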

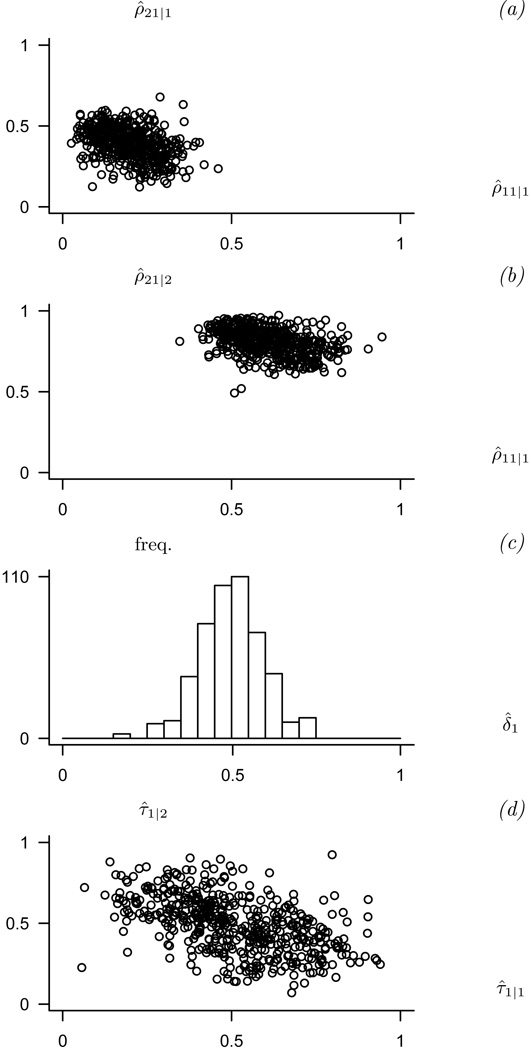

The challenges for ML inference in small-sample LTA are mainly due to parameters being estimated on the boundary of the parameter space (i.e., zero or one), causing difficulties in obtaining adequate standard errors. Although item-response probabilities close to zero or one are highly desirable from a measurement perspective, when some of these parameters are estimated on the boundary, it is impossible to obtain standard errors from the inverted Hessian matrix. To illustrate the problem of a boundary solution, we drew 500 samples of n = 100 from a two-time, two-class LTA measured by two binary items with parameters δ1 = 0.5, τ1|1 = 0.5, τ1|2 = 0.5, ρ1 = (ρ11|1, ρ21|1) = (0.2, 0.4), and ρ2 = (ρ11|2, ρ21|2) = (0.6, 0.8), and used the EM algorithm to compute ML estimates. Approximately 45% of the samples in Figures 1(a) and (b) and about 20% of the samples in Figure 1(d) converged to the boundary solution with a convergence criterion of 10⁻⁷. The non-normal shape of the sampling distribution of model parameters in Figure 1 suggests that usual large-sample approximations would be inaccurate.

Figure 1.

Maximum likelihood estimates for (a) ρ1 = (ρ11|1, ρ21|1), (b) ρ2 = (ρ11|2, ρ21|2), (c) δ1, and (d) (τ1|1, τ2|2) from 500 samples with ρ1 = (0.2, 0.4), ρ2 = (0.6, 0.8), δ1 = 0.5, and τ1|1 = τ1|2 = 0.5.
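A sample from the two-time, two-class model used in this illustration can be generated by drawing the class at time 1, then the class at time 2, then the item responses given each class. The sketch below is one way to do this with the Python standard library; the function name `simulate_lta` and the seed are hypothetical.

```python
import random


def simulate_lta(n, delta, tau, rho, rng):
    """Draw n individuals from a two-time LTA with binary items (1 = yes, 2 = no).

    delta[a]  : P[L_1 = a]
    tau[a][b] : P[L_2 = b | L_1 = a]
    rho[l][m] : P[Y_m = 1 | class l], equal across time
    """
    classes = list(range(len(delta)))
    data = []
    for _ in range(n):
        l1 = rng.choices(classes, weights=delta)[0]
        l2 = rng.choices(classes, weights=tau[l1])[0]
        y1 = tuple(1 if rng.random() < r else 2 for r in rho[l1])
        y2 = tuple(1 if rng.random() < r else 2 for r in rho[l2])
        data.append((y1, y2))
    return data


rng = random.Random(2024)  # arbitrary seed, used only for reproducibility
sample = simulate_lta(100, [0.5, 0.5], [[0.5, 0.5], [0.5, 0.5]],
                      [[0.2, 0.4], [0.6, 0.8]], rng)
```

Repeating the call 500 times and fitting each sample would reproduce the design of the experiment, although not necessarily the exact figures reported here.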

To avoid a boundary solution, Bayesian analysis via Markov chain Monte Carlo (MCMC) has been applied widely over the last decade. The most popular MCMC method for finite mixtures is closely related to EM; it may be viewed either as a Gibbs sampler [20] or a variant of data augmentation [21]. In Bayesian analysis for LTA, we are interested in describing the posterior, P[θ | y1, y2]. The MCMC algorithm treats the class membership of each individual as missing data, and simulates the augmented posterior P[θ | y1, y2, z] as if class membership were known. Here, each element zi of z = (z1, …, zn) is a two-dimensional array indicating the latent classes to which the ith individual belongs, so that zi(l1, l2) ∈ {0, 1} and Σl1 Σl2 zi(l1, l2) = 1. That is, if individual i belongs to class l1 at time 1 and l2 at time 2, then zi(l1, l2) equals 1 and 0 otherwise. In the first step of the MCMC procedure, the Imputation or I-step, we generate a random draw zi from Multinomial$(1; \eta_i(1, 1), \ldots, \eta_i(L, L))$ independently for all individuals, where $\eta_i(l_1, l_2)$ is the posterior probability given in (4) with the observed data (yi1, yi2) and current parameter draws $\theta^{(j)}$. We then calculate marginal counts $n(l_1, l_2) = \sum_i z_i(l_1, l_2)$, $n^{(1)}_{l_1} = \sum_{l_2} n(l_1, l_2)$, and $n_{mk \mid l} = \sum_i \left[ I(y_{im1} = k) \sum_{l_2} z_i(l, l_2) + I(y_{im2} = k) \sum_{l_1} z_i(l_1, l) \right]$. In the second step, the Posterior or P-step, we draw new random values for the parameters from the augmented posterior distribution, which regards the latent class membership zi(l1, l2) as known. Applying the Jeffreys priors to δ, τl, and ρl, new random values for the parameters are drawn from posterior distributions

$$\begin{aligned} \delta \mid z &\sim \text{Dirichlet}\left(n^{(1)}_{1} + \tfrac{1}{2}, \ldots, n^{(1)}_{L} + \tfrac{1}{2}\right), \\ \tau_{l_1} \mid z &\sim \text{Dirichlet}\left(n(l_1, 1) + \tfrac{1}{2}, \ldots, n(l_1, L) + \tfrac{1}{2}\right), \\ \rho_{m1 \mid l} \mid z, y_1, y_2 &\sim \text{Beta}\left(n_{m1 \mid l} + \tfrac{1}{2},\; n_{m2 \mid l} + \tfrac{1}{2}\right) \end{aligned} \tag{6}$$

for l1, l = 1, …, L and m = 1, …, M. Repeating this two-step procedure creates a sequence of iterates converging to the stationary joint posterior distribution for θ = (δ, τ1, …, τL, ρ1, …, ρL) given the data. This stream of parameter values (after a suitable burn-in period) is summarized in various ways to produce approximate Bayesian estimates, intervals, tests, etc. [14, 16, 22, 23]. The application of MCMC methods for the LTA model is discussed in [12].
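The two-step procedure can be sketched as a small Gibbs sampler for the two-time model with binary items. This is a hedged illustration rather than the authors' code: the function names, toy data, starting values, seed, and run length are hypothetical, and the Dirichlet draws are generated by normalizing independent gamma variates.

```python
import random
from itertools import product


def response_prob(y_t, rho_l):
    """P[Y_t = y_t | class l]; rho_l[m] = P[Y_m = 1 | class l]."""
    p = 1.0
    for resp, r in zip(y_t, rho_l):
        p *= r if resp == 1 else 1.0 - r
    return p


def dirichlet(alphas, rng):
    """One Dirichlet draw obtained by normalizing gamma variates."""
    draws = [rng.gammavariate(a, 1.0) for a in alphas]
    total = sum(draws)
    return [d / total for d in draws]


def i_step(data, delta, tau, rho, rng):
    """Impute the class pair (l1, l2) of every individual."""
    pairs = list(product(range(len(delta)), repeat=2))
    z = []
    for y1, y2 in data:
        # Unnormalized posterior probabilities; choices() normalizes them.
        weights = [delta[a] * tau[a][b]
                   * response_prob(y1, rho[a]) * response_prob(y2, rho[b])
                   for a, b in pairs]
        z.append(rng.choices(pairs, weights=weights)[0])
    return z


def p_step(data, z, n_classes, n_items, rng, prior=0.5):
    """Draw parameters given imputed classes (prior=0.5 is the Jeffreys prior)."""
    n_joint = [[0] * n_classes for _ in range(n_classes)]
    yes = [[0] * n_items for _ in range(n_classes)]
    no = [[0] * n_items for _ in range(n_classes)]
    for (y1, y2), (a, b) in zip(data, z):
        n_joint[a][b] += 1
        for cls, y_t in ((a, y1), (b, y2)):
            for m, resp in enumerate(y_t):
                if resp == 1:
                    yes[cls][m] += 1
                else:
                    no[cls][m] += 1
    delta = dirichlet([sum(row) + prior for row in n_joint], rng)
    tau = [dirichlet([c + prior for c in row], rng) for row in n_joint]
    rho = [[rng.betavariate(yes[l][m] + prior, no[l][m] + prior)
            for m in range(n_items)] for l in range(n_classes)]
    return delta, tau, rho


rng = random.Random(1)  # arbitrary seed
data = [((1, 1), (1, 1)), ((2, 2), (2, 2)), ((1, 2), (1, 1)), ((2, 2), (1, 2)),
        ((2, 1), (2, 2)), ((1, 1), (2, 1)), ((2, 2), (2, 1)), ((1, 1), (1, 2))]
delta, tau, rho = [0.5, 0.5], [[0.5, 0.5], [0.5, 0.5]], [[0.3, 0.3], [0.7, 0.7]]
draws = []
for _ in range(200):
    z = i_step(data, delta, tau, rho, rng)
    delta, tau, rho = p_step(data, z, 2, 2, rng)
    draws.append((delta, tau, rho))
```

In practice the early part of `draws` would be discarded as burn-in before any summaries are computed.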

Although the simplicity of EM and MCMC estimation has made the LTA model popular, the likelihood function of an LTA model may have unusual characteristics which can adversely affect inference. For example, there may be certain continuous regions of the parameter space for which the log-likelihood is constant, leading to indeterminacy for some parameters. In the two-class LTA given in (3), suppose that the prevalence of Class 1 is zero at time 1 but then Class 1 emerges at time 2 (i.e., δ1 = 0 and Σl1 δl1τ1|l1 > 0). Then the transition probabilities of the individuals in Class 1 at time 1 (i.e., τ1|1 and τ2|1) can take any value from zero to one without any effect on the value of log-likelihood. Because of this unusual geometry, likelihood-ratio test (LRT) statistics for testing hypotheses about number of classes L are not asymptotically distributed as chi-square [16, 24, 25]. In lieu of standard testing methods for choosing an appropriate L, statisticians have turned to penalized likelihood measures such as the Akaike or Bayesian information criteria (AIC or BIC), to the Lo-Mendell-Rubin LRT [26], to bootstrapping the LRTs [27], and to Bayesian posterior predictive check distributions [9, 28].

3. INFERENCE FOR A SMALL-SAMPLE LATENT TRANSITION ANALYSIS

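Rubin and Schenker [29] applied the Bayesian approach to the standard ML method for a single binomial variable using the Jeffreys prior. They showed that the maximum posterior estimator (MPE) generally outperformed the standard maximum likelihood estimator (MLE). For LTA, it is convenient to choose priors that cause δ, τ, and ρ to be a posteriori independent given η̂ = (η̂1, …, η̂n), where η̂i = (η̂i(1, 1), …, η̂i(L, L)). One way to achieve this is to impose the Dirichlet priors on the joint probabilities of class membership and the item-response probabilities, respectively, as given by

$$P[\delta, \tau] \propto \prod_{l_1=1}^{L} \prod_{l_2=1}^{L} \left(\delta_{l_1} \tau_{l_2 \mid l_1}\right)^{\alpha(l_1, l_2)}, \qquad P[\rho_l] \propto \prod_{m=1}^{M} \prod_{k=1}^{2} \rho_{mk \mid l}^{\beta(m, k)}.$$

Then, the joint posterior for θ = (δ, τ1, …, τL, ρ1, …, ρL) given η̂ may be expressed as

$$P[\theta \mid \hat{\eta}] \propto \prod_{l_1=1}^{L} \prod_{l_2=1}^{L} \left(\delta_{l_1} \tau_{l_2 \mid l_1}\right)^{\hat{n}(l_1, l_2) + \alpha(l_1, l_2)} \prod_{l=1}^{L} \prod_{m=1}^{M} \prod_{k=1}^{2} \rho_{mk \mid l}^{\hat{n}_{mk \mid l} + \beta(m, k)} \tag{7}$$

where $\hat{n}(l_1, l_2) = \sum_i \hat{\eta}_i(l_1, l_2)$ and $\hat{n}_{mk \mid l} = \sum_i \left[ I(y_{im1} = k) \sum_{l_2} \hat{\eta}_i(l, l_2) + I(y_{im2} = k) \sum_{l_1} \hat{\eta}_i(l_1, l) \right]$. The EM algorithm maximizing (7) is straightforward: the updated parameters for the MPE are obtained by

$$\hat{\delta}_{l_1} = \frac{\hat{n}^{(1)}_{l_1} + \alpha(l_1, \cdot)}{n + \alpha(\cdot, \cdot)}, \qquad \hat{\tau}_{l_2 \mid l_1} = \frac{\hat{n}(l_1, l_2) + \alpha(l_1, l_2)}{\hat{n}^{(1)}_{l_1} + \alpha(l_1, \cdot)}, \qquad \hat{\rho}_{mk \mid l} = \frac{\hat{n}_{mk \mid l} + \beta(m, k)}{\hat{n}^{(1)}_{l} + \hat{n}^{(2)}_{l} + \beta(m, \cdot)} \tag{8}$$

where $\hat{n}^{(1)}_{l_1} = \sum_{l_2} \hat{n}(l_1, l_2)$, $\hat{n}^{(2)}_{l_2} = \sum_{l_1} \hat{n}(l_1, l_2)$, $\alpha(l_1, \cdot) = \sum_{l_2} \alpha(l_1, l_2)$, $\alpha(\cdot, \cdot) = \sum_{l_1} \alpha(l_1, \cdot)$, and $\beta(m, \cdot) = \sum_k \beta(m, k)$.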

The effect of constant hyper-parameters for δ and τ is to smooth the parameter estimates toward equal class sizes. For example, the use of ωl1 = α(l1, 1) = ⋯ = α(l1, L) has a flattening effect on the elements of τl1 = (τ1|l1, …, τL|l1) by adding the equivalent of ωl1 observations to each class at time 2 among those who belonged to class l1 at time 1. For δ-parameters, this prior is equivalent to adding L × ωl1 fictitious observations to class l1 at time 1. The hyper-parameters β(m, k) could possibly depend on the data. For example, we can select β(m, k) ∝ Σi [I(yim1 = k) + I(yim2 = k)] /2n so that the prior distribution smooths ρ toward an ML estimate of the raw distribution of the mth item. We drew 500 samples from the LTA model used in Figure 1, and computed maximum posterior estimates with hyper-parameters α(l1, l2) = 1/L and β(m, k) = Σi [I(yim1 = k) + I(yim2 = k)] /2n. Note that this prior is equivalent to adding just one observation to each class at time 1. Plots of the sampling distribution of the MPE are displayed in Figure 2. The boundary solutions evident in Figure 1 are now non-existent.
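The smoothing effect of the hyper-parameters can be seen in a small numerical sketch. It assumes that the posterior-mode update simply adds α(l1, l2) and β(m, k) to the corresponding expected counts before normalizing, which is how the prior is described above; the function names and the expected counts are hypothetical.

```python
def data_dependent_beta(data, n_items, n_categories=2):
    """beta[m][k-1] = sum_i [I(y_im1 = k) + I(y_im2 = k)] / (2n)."""
    n = len(data)
    beta = [[0.0] * n_categories for _ in range(n_items)]
    for y1, y2 in data:
        for y_t in (y1, y2):
            for m, resp in enumerate(y_t):
                beta[m][resp - 1] += 1.0 / (2 * n)
    return beta


def mp_update(n_joint, n_item, alpha, beta):
    """Posterior-mode update: expected counts plus hyper-parameters.

    n_joint[a][b]   : expected count in class a at time 1 and class b at time 2
    n_item[l][m][k] : expected count of response k+1 to item m in class l,
                      pooled over both times
    """
    n_classes = len(n_joint)
    row = [sum(n_joint[a]) + sum(alpha[a]) for a in range(n_classes)]
    delta = [r / sum(row) for r in row]
    tau = [[(n_joint[a][b] + alpha[a][b]) / row[a] for b in range(n_classes)]
           for a in range(n_classes)]
    rho = [[[(n_item[l][m][k] + beta[m][k])
             / (sum(n_item[l][m]) + sum(beta[m]))
             for k in range(len(beta[m]))]
            for m in range(len(beta))] for l in range(n_classes)]
    return delta, tau, rho


# Hypothetical expected counts for n = 100; class 1 never endorses item 1,
# so the unpenalized estimate of that item-response probability would be 0.
n_joint = [[30.0, 20.0], [10.0, 40.0]]
n_item = [[[0.0, 90.0], [30.0, 60.0]], [[60.0, 50.0], [80.0, 30.0]]]
alpha = [[0.5, 0.5], [0.5, 0.5]]     # alpha(l1, l2) = 1/L with L = 2
beta = [[0.30, 0.70], [0.55, 0.45]]  # raw item distributions implied by n_item
delta, tau, rho = mp_update(n_joint, n_item, alpha, beta)

# The data-dependent beta computed from two hypothetical respondents.
toy_beta = data_dependent_beta([((1, 2), (1, 2)), ((2, 2), (1, 1))], n_items=2)
```

With these counts the smoothed estimate `rho[0][0][0]` is small but strictly positive, so it no longer sits on the boundary.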

Figure 2.

Maximum posterior estimates for (a) ρ1 = (ρ11|1, ρ21|1), (b) ρ2 = (ρ11|2, ρ21|2), (c) δ1, and (d) (τ1|1, τ2|2) from 500 samples with ρ1 = (0.2, 0.4), ρ2 = (0.6, 0.8), δ1 = 0.5, and τ1|1 = τ1|2 = 0.5.

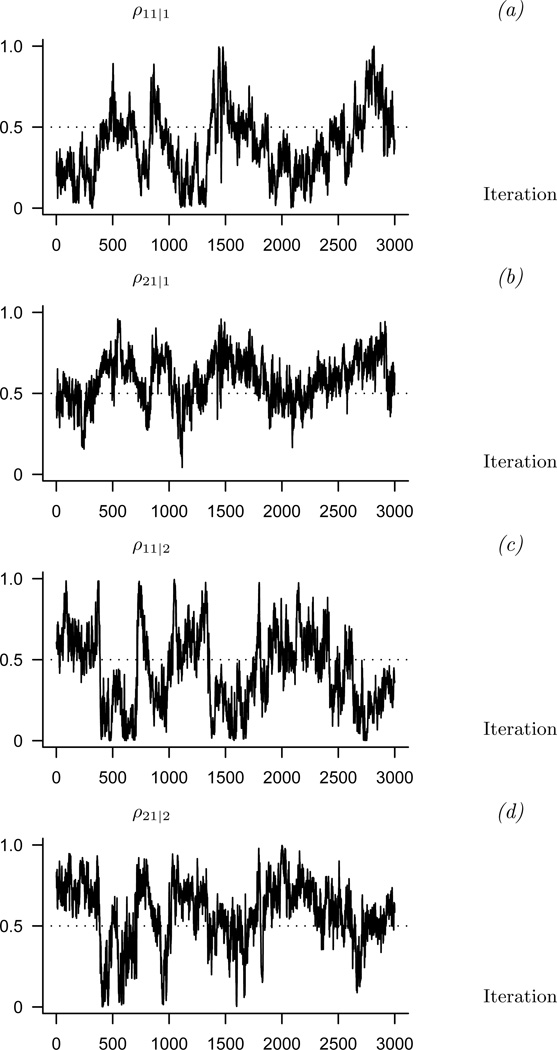

The likelihood function of the LTA model has multiple equivalent modes which are invariant to permutations of the class labels. When we have two classes in (3) (i.e., L = 2), P[yi1, yi2] achieves exactly the same value at (δ1, τ1|1, τ1|2, ρ1, ρ2) and (1 − δ1, 1 − τ1|2, 1 − τ1|1, ρ2, ρ1). This invariance property may cause the most troubling aspect of MCMC for LTA, called label switching. In many applications of MCMC, the analyst runs the algorithm for a “burn-in” period to eliminate dependence on the starting values, and then saves the subsequent output to estimate meaningful posterior summaries. For certain LTA models, however, the interpretation of long-run averages becomes dubious if the class labels switch during the MCMC run. Label switching can be particularly problematic in applications with smaller samples. To illustrate, we ran MCMC using one sample of n = 100 from the previous example. We set the prior hyper-parameters to 1/2 for all parameters, so that all priors become Jeffreys. Time-series plots for ρ11|1, ρ11|2, ρ21|1, and ρ21|2 over the first 3,000 iterations of MCMC are shown in Figure 3. In Figure 3, notice that the order of ρ-parameters reverses many times, requiring the tedious reordering of latent classes before meaningful averages for the parameters can be calculated.

Figure 3.

Time-series plots of ρ11|1, ρ21|1, ρ11|2, and ρ21|2 over 3000 iterations of MCMC.
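The invariance of the two-class likelihood to relabeling, which is the source of label switching, can be checked numerically. The sketch below evaluates the likelihood contribution at a parameter point and at its relabeled counterpart for every response pattern; the function names and parameter values are hypothetical.

```python
from itertools import product


def response_prob(y_t, rho_l):
    """P[Y_t = y_t | class l]; rho_l[m] = P[Y_m = 1 | class l]."""
    p = 1.0
    for resp, r in zip(y_t, rho_l):
        p *= r if resp == 1 else 1.0 - r
    return p


def likelihood(y1, y2, delta1, tau11, tau12, rho1, rho2):
    """Two-class likelihood contribution written in its free parameters."""
    delta = [delta1, 1.0 - delta1]
    tau = [[tau11, 1.0 - tau11], [tau12, 1.0 - tau12]]
    rho = [rho1, rho2]
    return sum(
        delta[a] * tau[a][b]
        * response_prob(y1, rho[a]) * response_prob(y2, rho[b])
        for a, b in product(range(2), repeat=2)
    )


# (delta_1, tau_1|1, tau_1|2, rho_1, rho_2) and its relabeled counterpart
# (1 - delta_1, 1 - tau_1|2, 1 - tau_1|1, rho_2, rho_1).
theta = (0.3, 0.7, 0.2, (0.2, 0.4), (0.6, 0.8))
swapped = (1 - 0.3, 1 - 0.2, 1 - 0.7, (0.6, 0.8), (0.2, 0.4))

patterns = list(product([1, 2], repeat=2))
max_gap = max(abs(likelihood(y1, y2, *theta) - likelihood(y1, y2, *swapped))
              for y1 in patterns for y2 in patterns)
```

The largest discrepancy `max_gap` is zero up to rounding error, so the data alone cannot distinguish the two labelings.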

An efficient strategy to handle label switching is to pre-assign the class membership of one or more subjects to break the symmetry of the posterior distribution and dampen the posterior density over the nuisance modes [15, 30]. This approach applies constraints to P[Z | θ, y1, y2] that result in asymmetric, data-dependent priors for P[θ | y1, y2]. By pre-classifying a subset of individuals, label switching in the parameter space can be greatly reduced or eliminated. Chung et al. [15] showed that the pre-classification method performed well over repeated samples for exponential finite-mixture models. As the number of classes grows in an LTA model, the choice of which subjects to pre-classify may not be clear. Therefore, we generalize the pre-classification technique and propose that the choice of which individuals to classify be automated and dynamically adapted throughout the posterior simulation.

4. DYNAMIC DATA-DEPENDENT PRIOR

We face the challenge of determining which individuals to pre-classify in order to achieve good MCMC performance. Although substantive knowledge often can inform this decision, analysts will sometimes need to pre-classify cases blindly. However, some individuals may be particularly informative: consider an individual whose posterior probability of belonging to the first class at both times, given his/her item responses, is 0.97 at one iteration during MCMC. This individual will “almost always” be imputed into the first class for both times in the I-step, and should “almost always” have the same label across iterations. It would be a very strong indication that label switching had occurred if imputed class labels for this individual changed between iterations. A promising strategy is to assign a class label to a particular individual that has a high posterior probability of being in a specific class. A natural criterion is the posterior probability calculated on the basis of the running posterior mean of model parameters θ.

Let θ(j) represent the generated LTA parameters from the posterior distribution at the jth MCMC iteration, and let $\bar{\theta}^{(N)} = N^{-1} \sum_{j=1}^{N} \theta^{(j)}$ estimate the posterior mean at the Nth iteration. Consider the cumulative posterior probabilities at the Nth cycle,

$$\bar{\eta}^{(N)}_i(l_1, l_2) = P[L_1 = l_1, L_2 = l_2 \mid y_i, \bar{\theta}^{(N)}] \tag{9}$$

We can evaluate $\bar{\eta}^{(N)}_i(l_1, l_2)$ over all subjects for each combination of classes l1 and l2 in order to choose the subject with the largest value. By repeating this procedure independently for all combinations of classes for the two time periods, we can choose L² subjects to be identified. We then assign one or more of these L² subjects to their respective classes with certainty at the Nth cycle. When the latent classes are well differentiated, pre-classifying a small number of the L² subjects may suffice to break the symmetry without introducing serious subjectivity. However, in situations where certain classes are not well differentiated, pre-classifying up to L² − 1 subjects may be preferable. We can pre-classify subjects by a trivial modification of MCMC at the imputation step: if the ith subject is chosen for l1 and l2 at cycle N, we deterministically set zi(l1, l2) = 1 at that cycle. This asymmetric penalty to the likelihood, which will be referred to here as a “dynamic data-dependent prior”, tends to dampen the nuisance mode while having very little effect on the mode of interest.
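The selection step can be sketched as follows. The running mean is updated one draw at a time, the subject with the largest cumulative posterior probability is found for each class pair, and a chosen number of those candidates is then fixed at the imputation step. The rule used here for deciding which candidates to fix (the k candidates with the largest probabilities, at most one class pair per subject) is an assumption made for the illustration, as are the function names and the probabilities.

```python
def running_mean(mean, draw, n):
    """Update the mean of n - 1 draws with the n-th draw (flat lists)."""
    return [m + (d - m) / n for m, d in zip(mean, draw)]


def pick_candidates(post):
    """For each class pair, find the subject with the largest probability.

    post[i][(a, b)] : cumulative posterior probability that subject i is in
                      class a at time 1 and class b at time 2
    Returns {(a, b): (subject index, probability)}.
    """
    best = {}
    for i, probs in enumerate(post):
        for pair, p in probs.items():
            if pair not in best or p > best[pair][1]:
                best[pair] = (i, p)
    return best


def forced_assignments(post, k):
    """Assumed rule: fix the k candidates with the largest probabilities."""
    ranked = sorted(pick_candidates(post).items(), key=lambda kv: -kv[1][1])
    chosen = {}
    for pair, (i, _) in ranked:
        if i not in chosen and len(chosen) < k:
            chosen[i] = pair  # subject i is set to this pair in the I-step
    return chosen


# Hypothetical cumulative posterior probabilities for four subjects.
post = [
    {(0, 0): 0.97, (0, 1): 0.01, (1, 0): 0.01, (1, 1): 0.01},
    {(0, 0): 0.05, (0, 1): 0.10, (1, 0): 0.05, (1, 1): 0.80},
    {(0, 0): 0.30, (0, 1): 0.40, (1, 0): 0.20, (1, 1): 0.10},
    {(0, 0): 0.25, (0, 1): 0.25, (1, 0): 0.35, (1, 1): 0.15},
]
candidates = pick_candidates(post)
fixed = forced_assignments(post, k=2)
theta_bar = running_mean([1.0, 2.0], [3.0, 4.0], 2)
```

In a full sampler, `post` would be recomputed from the running mean at every cycle, so the set of fixed subjects can change from one iteration to the next.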

To see how this forced classification translates into an asymmetric prior, we can consider a sequence of draws from the P-step of the data augmentation algorithm. In the previous example (i.e., n = 100 from a two-time, two-class LTA with parameters δ1 = 0.5, τ1|1 = 0.5, τ1|2 = 0.5, (ρ11|1, ρ21|1) = (0.2, 0.4), and (ρ11|2, ρ21|2) = (0.6, 0.8)), suppose the ith individual has a maximum cumulative posterior probability of belonging to the first class at both times at the Nth cycle. Therefore, we classify him/her to the first class for both times (i.e., zi(1, 1) = 1). Suppose this individual’s responses to the items are yi = (yi11, yi21, yi12, yi22) = (2, 2, 2, 2). In the absence of label switching in the space ρm1|1 < ρm1|2 for m = 1, 2, this is equivalent to draws ρm1|1 from a posterior distribution with a prior Beta(1/2, 2 + 1/2),

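$$\rho_{m1 \mid 1} \mid z, y_1, y_2 \sim \text{Beta}\left(n^{*}_{m1 \mid 1} + \tfrac{1}{2},\; n^{*}_{m2 \mid 1} + 2 + \tfrac{1}{2}\right) \tag{10}$$

where the imputations have been carried out on the remaining n − 1 individuals, and $n^{*}_{mk \mid 1}$ counts the responses k to item m, pooled over both times, among those individuals imputed to the first class. Compared to (6), marginal counts are partitioned as $n_{m1 \mid 1} = n^{*}_{m1 \mid 1}$ and $n_{m2 \mid 1} = n^{*}_{m2 \mid 1} + 2$. Although this may seem like a stronger prior compared to that given in (6), it is actually just a reordering of the terms that (almost) always occurred in the unconstrained MCMC draws without label switching. Therefore it has very little impact on the posterior distribution in the space ρm1|1 < ρm1|2. All that has occurred is that the fixed individual i is never imputed to be in the second class, whereas he/she was “almost never” imputed there in the unconstrained case.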

In the event of label switching, where the meanings of ρm1|1 and ρm1|2 are reversed, the situation is quite different. The prior for ρm1|1 is now the prior for the class with ρm1|1 > ρm1|2, and in general it would be very rare for responses yi = (2, 2, 2, 2) to be imputed to zi(1, 1) = 1. But the prior distribution Beta(1/2, 2 + 1/2) does not switch, amounting to a very strong requirement that the class with the smaller ρ-parameter always contains an observation whose responses are yi = (2, 2, 2, 2). The impact on the MCMC is to discourage draws from the space ρm1|1 > ρm1|2, thereby restricting the draws to the region of interest and ignoring the symmetric nuisance mode.

This pre-classifying technique can be used to classify more than one individual, although the assumptions on the prior become stronger. For example, suppose we identify the jth individual whose cumulative posterior probability is the largest for the second class at both times, and classify him/her into zj(2, 2) = 1 accordingly. Suppose this individual’s responses are yj = (1, 1, 1, 1). This provides an “identification” of the second class as the one containing the jth individual. It also stipulates that the ith and jth individuals are never imputed to be in the same class, although draws with both those individuals in the same class are extremely rare in the unconstrained MCMC. With two assigned individuals with responses (1, 1, 1, 1) and (2, 2, 2, 2), time-series plots for parameters over the first 3,000 iterations are shown in Figure 4. Comparing these new plots to those of Figure 3, it can be seen that label switching is now almost non-existent. We now evaluate the performance of our procedure over repeated samples.

Figure 4.

Time-series plots of ρ11|1, ρ21|1, ρ11|2, and ρ21|2 over 3,000 iterations of modified MCMC.

5. SIMULATION STUDY

The purpose of our simulation study is to investigate the performance of the maximum likelihood (ML), maximum posterior (MP), and Bayesian methods using a dynamic data-dependent prior over repeated samples. These methods represent alternative ways of handling the problems of estimates on the boundary and label switching during an MCMC run. We drew 500 samples of n = 100 observations each from the previous example (i.e., two-class LTA with two binary items measured at two time points with parameters δ1 = 0.5, τ1|1 = 0.5, τ1|2 = 0.5, (ρ11|1, ρ21|1) = (0.2, 0.4), and (ρ11|2, ρ21|2) = (0.6, 0.8)). We assessed the performance of point estimates and nominal 95% confidence intervals for parameters. Four types of estimates were calculated: the standard MLE using the EM algorithm, MPE with hyper-parameters α(l1, l2) = 0.5 and β(m, k) = Σi [I(yim1 = k) + I(yim2 = k)] /2n defined in (8), MCMC estimates based on two subjects assigned dynamically across iterations (DYN-2), and MCMC estimates based on four subjects assigned dynamically (DYN-4). For MLE and MPE, we calculated and inverted the Hessian of the log-likelihood and log-posterior, respectively, to obtain interval estimates. For estimates close to zero or one, symmetric interval estimates (estimates plus or minus two standard errors) would not be appropriate as they may stray outside the unit interval. We solved this problem by applying the normal approximation on the logistic scale. For Bayesian methods, we ran MCMC for a burn-in period of 1,000 iterations followed by 2,000 further iterations, assigning two or four observations to classes for each time period.
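The interval construction on the logistic scale can be illustrated with a short sketch. It assumes that the standard error is transferred to the logit scale by the delta method, which is one common way to apply a normal approximation there; the function name and the numerical values are hypothetical.

```python
import math


def logit_interval(estimate, std_error, z=2.0):
    """Interval for a probability built on the logit scale and mapped back.

    Assumption: the standard error on the logit scale is obtained by the
    delta method, std_error / (p * (1 - p)). The estimate must lie strictly
    between 0 and 1.
    """
    eta = math.log(estimate / (1.0 - estimate))
    se_eta = std_error / (estimate * (1.0 - estimate))
    lower, upper = eta - z * se_eta, eta + z * se_eta
    return 1.0 / (1.0 + math.exp(-lower)), 1.0 / (1.0 + math.exp(-upper))


# Hypothetical estimate close to the boundary.
p_hat, se = 0.95, 0.05
symmetric = (p_hat - 2 * se, p_hat + 2 * se)  # upper limit exceeds one
lower, upper = logit_interval(p_hat, se)
```

The symmetric interval strays above one, whereas the back-transformed interval stays inside the unit interval and is asymmetric around the estimate.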

Distributions of the Bayesian estimates from the MCMC procedure are shown in Figure 5. Recall that distributions of MLE and MPE were displayed in Figures 1 and 2. Comparing distributions of MLE to those of the Bayesian estimates from DYN-2 and DYN-4 in Figure 5, we see that the Bayesian method achieved an obvious improvement over MLE.

Figure 5.

Bayesian estimates for (a) ρ1 = (ρ11|1, ρ21|1), (b) ρ2 = (ρ11|2, ρ21|2), (c) δ1, and (d) (τ1|1, τ2|2) from 500 samples with ρ1 = (0.2, 0.4), ρ2 = (0.6, 0.8), δ1 = 0.5, and τ1|1 = τ1|2 = 0.5.

The average and root-mean-square error (RMSE) of the parameter estimates under each estimation method are shown in Table I. All methods except standard ML performed well: the point estimates from MP, DYN-2 and DYN-4 tend to be unbiased, and the values of RMSE are smaller than those from ML. The performance of interval estimates is summarized in Table II, which shows the percentage of intervals that covered their targets and the average interval width. The ML and MP methods failed to produce standard errors for the estimates from 17 and 20 samples out of 500, respectively. The coverages in Table II are based on the samples that provided standard errors successfully. ML performed poorly, with coverage lower than the nominal rate of 95% and with wide intervals. Among the MP and Bayesian methods, the intervals from MP tended to be wider and their coverage lower, except for the τ-parameters. DYN-2 and DYN-4, however, were conservative, exhibiting higher rates of coverage, yet narrower intervals for the parameters than the MP method.

Table I.

Average (RMSE) of point estimates over 500 repetitions.

| Parameter | True value | ML | MP | MCMC (DYN-2) | MCMC (DYN-4) |
|---|---|---|---|---|---|
| δ1 | 0.5 | 0.495 (0.237) | 0.503 (0.094) | 0.500 (0.094) | 0.500 (0.075) |
| τ1\|1 | 0.5 | 0.504 (0.303) | 0.507 (0.177) | 0.447 (0.141) | 0.502 (0.103) |
| τ1\|2 | 0.5 | 0.476 (0.293) | 0.491 (0.170) | 0.547 (0.134) | 0.483 (0.113) |
| ρ11\|1 | 0.2 | 0.172 (0.143) | 0.199 (0.077) | 0.199 (0.057) | 0.180 (0.060) |
| ρ21\|1 | 0.4 | 0.326 (0.225) | 0.389 (0.098) | 0.366 (0.086) | 0.342 (0.100) |
| ρ11\|2 | 0.6 | 0.664 (0.218) | 0.609 (0.100) | 0.627 (0.087) | 0.651 (0.097) |
| ρ21\|2 | 0.8 | 0.833 (0.135) | 0.807 (0.082) | 0.804 (0.057) | 0.821 (0.060) |

Table II.

Percent coverage (average width) of nominal 95% interval estimates over 500 repetitions.

| Parameter | ML | MP | MCMC (DYN-2) | MCMC (DYN-4) |
|---|---|---|---|---|
| δ1 | 78.7 (0.861) | 99.8 (0.801) | 99.6 (0.669) | 99.8 (0.593) |
| τ1\|1 | 71.0 (0.918) | 95.6 (0.735) | 98.4 (0.806) | 99.8 (0.697) |
| τ1\|2 | 74.7 (0.925) | 95.8 (0.738) | 99.8 (0.810) | 99.0 (0.700) |
| ρ11\|1 | 93.2 (0.753) | 97.5 (0.564) | 99.8 (0.375) | 99.0 (0.357) |
| ρ21\|1 | 88.8 (0.779) | 96.2 (0.614) | 99.6 (0.559) | 99.0 (0.554) |
| ρ11\|2 | 87.4 (0.769) | 95.6 (0.615) | 99.0 (0.554) | 99.0 (0.554) |
| ρ21\|2 | 94.4 (0.752) | 96.7 (0.570) | 99.6 (0.375) | 99.6 (0.354) |
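The summaries reported in Tables I and II (average, RMSE, percent coverage, and average interval width) can be computed from the repeated-sample output as in the following sketch. The function name and the toy inputs are hypothetical and are not taken from the simulation itself.

```python
import math


def summarize(estimates, intervals, truth):
    """Average, RMSE, percent coverage and average width over repetitions.

    estimates : point estimates, one per repetition
    intervals : (lower, upper) interval limits, one pair per repetition
    truth     : true parameter value used to generate the samples
    """
    n_est, n_int = len(estimates), len(intervals)
    average = sum(estimates) / n_est
    rmse = math.sqrt(sum((e - truth) ** 2 for e in estimates) / n_est)
    coverage = 100.0 * sum(lo <= truth <= hi for lo, hi in intervals) / n_int
    width = sum(hi - lo for lo, hi in intervals) / n_int
    return average, rmse, coverage, width


# Toy input: two repetitions for a parameter whose true value is 0.5.
average, rmse, coverage, width = summarize(
    estimates=[0.4, 0.6],
    intervals=[(0.3, 0.7), (0.55, 0.9)],
    truth=0.5,
)
```

Allowing `estimates` and `intervals` to have different lengths mirrors the situation in which some repetitions yield a point estimate but no standard error.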

In summary, in the two-class LTA model, using data-dependent prior information through the MP and Bayesian methods resulted in superior estimation compared to the standard ML. The Bayesian methods with the dynamic allocation based on the maximum posterior probabilities perform slightly better than the MP method, but the difference is inconsequential.

6. AN APPLICATION TO ADOLESCENT SUBSTANCE USE DATA

Our main focus in this paper is to describe the difficulties in inference with small-sample LTA. In order to demonstrate our approach, we draw data for a limited case example from The National Longitudinal Study of Adolescent Health (Add Health) [31]. The Add Health study was conducted to explore the causes of the health-related behaviors of adolescents in grades 7 through 12. The first survey, conducted from September 1994 through December 1995, employed a nationally representative sample of 11,796 high-school students. The second wave included the same participants interviewed again between April and August of 1996. The third wave, surveyed from those respondents between August 2001 and April 2002, was designed to collect data useful to examine the transitions from adolescence to young adulthood.

Early pubertal timing has been identified as a risk factor in relation to early adolescent substance use [3, 32, 33]. We investigate the latent structure of substance use onset in early maturers based on the model presented by Chung et al. [2] (i.e., an LTA is specified to include five classes of substance use). They examined stage-sequential patterns of substance use, focusing on measures of cigarette and alcohol use. Using the sample of 3,356 females in grades 7 through 12 from waves 1 and 2, they found that among 12-year-old non-substance users, those who had experienced puberty were approximately three times more likely to advance in substance use than those who had not experienced puberty. The sample used in the current study only includes females age 12 or 13 who had experienced puberty by wave 1. To identify females who had experienced puberty, we used two items: how developed your breasts are compared to grade school, and how curvy your body is compared to grade school. We included females who reported significant changes in both items at wave 1, resulting in a sample of 202 females.

Four items are used to define adolescent substance use at each year: alcohol use in the past 12 months (AlcUse), cigarette use in the past 30 days (CigUse), five or more drinks at once in the past 12 months (5+Drinks), and drunkenness in the past 12 months (Drunk). All items are rescaled as binary indicators so that possible responses are 1 = yes and 2 = no. Table III shows the parameter estimates from the ML and MP methods for an LTA model with five latent classes of substance use; estimates represent the class prevalence at wave 1 (i.e., δ-parameters) and the probability of responding ‘yes’ to each of the four items for a given class of substance use (i.e., ρ-parameters). Based on the pattern of these probabilities, the meaning of the five classes can be interpreted and appropriate class labels can be assigned. The point estimates for ML and MP reported in Table III are nearly identical. Table IV gives the transition probabilities of moving from one class of substance use at wave 1 to another class at wave 2 (i.e., τ-parameters). The diagonal values in Table IV are the probabilities of membership in the same class over time. The estimates shown in Tables III and IV are computed by the ML and MP methods using the EM algorithm, but standard errors are not available because the Hessian matrix cannot be inverted.

Table III.

Estimated class prevalence at wave 1 (δ-parameters) and the probabilities of responding ‘yes’ to items for each substance use class (ρ-parameters) from the maximum likelihood (ML) and maximum posterior likelihood (MP) methods.

| Method | Class of substance use | Prevalence at wave 1 | AlcUse | CigUse | 5+Drinks | Drunk |
|---|---|---|---|---|---|---|
| ML | 1. No-use | 0.574 | 0.000 | 0.000 | 0.000 | 0.000 |
| ML | 2. Alcohol | 0.125 | 1.000 | 0.000 | 0.044 | 0.000 |
| ML | 3. Cigarettes | 0.076 | 0.354 | 1.000 | 0.000 | 0.000 |
| ML | 4. Drunk | 0.114 | 1.000 | 0.087 | 0.686 | 0.798 |
| ML | 5. Cigarettes + drunk | 0.112 | 1.000 | 0.677 | 0.805 | 0.873 |
| MP | 1. No-use | 0.561 | 0.013 | 0.011 | 0.001 | 0.002 |
| MP | 2. Alcohol | 0.131 | 0.924 | 0.019 | 0.042 | 0.037 |
| MP | 3. Cigarettes | 0.081 | 0.355 | 0.880 | 0.010 | 0.012 |
| MP | 4. Drunk | 0.098 | 0.988 | 0.042 | 0.712 | 0.768 |
| MP | 5. Cigarettes + drunk | 0.129 | 0.991 | 0.932 | 0.773 | 0.860 |

Table IV.

Transition probabilities from wave 1 to wave 2 based on the maximum likelihood (ML) and maximum posterior likelihood (MP) methods.

| Method | Class at wave 1 | Wave 2: Class 1 | Wave 2: Class 2 | Wave 2: Class 3 | Wave 2: Class 4 | Wave 2: Class 5 |
|---|---|---|---|---|---|---|
| ML | 1. No-use | 0.594 | 0.142 | 0.080 | 0.116 | 0.068 |
| ML | 2. Alcohol | 0.042 | 0.344 | 0.049 | 0.334 | 0.231 |
| ML | 3. Cigarettes | 0.210 | 0.000 | 0.274 | 0.228 | 0.288 |
| ML | 4. Drunk | 0.051 | 0.000 | 0.069 | 0.570 | 0.310 |
| ML | 5. Cigarettes + drunk | 0.000 | 0.000 | 0.163 | 0.000 | 0.837 |
| MP | 1. No-use | 0.599 | 0.148 | 0.081 | 0.098 | 0.074 |
| MP | 2. Alcohol | 0.018 | 0.346 | 0.055 | 0.302 | 0.279 |
| MP | 3. Cigarettes | 0.158 | 0.017 | 0.303 | 0.202 | 0.320 |
| MP | 4. Drunk | 0.059 | 0.024 | 0.076 | 0.529 | 0.311 |
| MP | 5. Cigarettes + drunk | 0.010 | 0.010 | 0.142 | 0.022 | 0.817 |

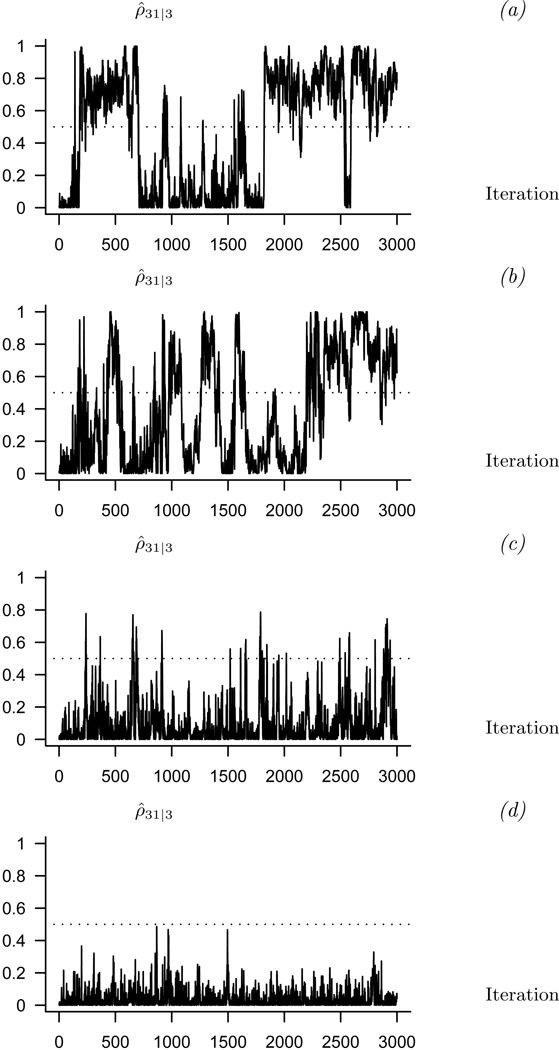

The Bayesian method has no difficulty producing intervals, but the class labels may switch during an MCMC run, making the output difficult to interpret. To illustrate, we analyze the same data with small amounts of data-dependent prior information to reduce the label switching. Using Jeffreys priors, we perform 2,000 iterations after a burn-in period of 1,000 cycles. Note that we can identify up to 5² = 25 subjects who have the largest value in the cumulative posterior probabilities for each combination of classes for the two time periods. Four sets of estimates are calculated using a Bayesian approach: the MCMC with no subject assigned (MCMC), the MCMC with one subject assigned dynamically across iterations (DYN-1), the MCMC with five subjects assigned dynamically across iterations (DYN-5), and the MCMC with ten subjects assigned dynamically across iterations (DYN-10). The time-series plots for the probability of reporting 5+Drinks given membership in Class 3 (i.e., ρ31|3) for each MCMC procedure are displayed in Figure 6, showing that the label switching is no longer apparent in DYN-5 and DYN-10. A summary of the assigned individuals’ cumulative posterior probabilities is displayed in Table V. For example, during 3,000 iterations of DYN-1, the average value of the cumulative posterior probabilities for assigned subjects is close to one (i.e., 0.942), and therefore assigning one subject at each iteration has very little impact on the posterior distribution. However, label switching still occurs because the data-dependent prior given in DYN-1 is not sufficient to break the symmetry (see Figure 6 (b)). Pre-classifying more subjects dampens the nuisance mode of the posterior distribution further, but it introduces more subjectivity (i.e., the average value of the cumulative posterior probabilities decreases).

Figure 6.

Time-series plots of endorsing 5+Drinks for Class 3 (i.e., ρ31|3) from (a) MCMC, (b) DYN-1, (c) DYN-5, and (d) DYN-10 over 3,000 iterations of MCMC.

Table V.

Summary of the cumulative posterior probabilities for the assigned subjects.

| Modified MCMC | DYN-1 | DYN-5 | DYN-10 |
|---|---|---|---|
| Min. | 0.869 | 0.843 | 0.755 |
| Max. | 0.995 | 0.989 | 0.991 |
| Mean | 0.942 | 0.908 | 0.889 |

Tables VI and VII show the parameter estimates and their 95% confidence intervals based on DYN-5 and DYN-10. Point estimates from DYN-5 and DYN-10 are nearly identical except for AlcUse in Class 3 (0.542 versus 0.305). Given the small size of Class 3, it is possible that label switching still occurs in Class 3 under DYN-5. However, the point estimates clearly reveal the nature of each of the five classes.

Table VI.

Estimated class prevalence at wave 1 (δ-parameters), the probabilities of responding ‘yes’ to items given substance use class (ρ-parameters), and their 95% confidence intervals based on DYN-5 and DYN-10.

| Method | Class | Prevalence at wave 1 | AlcUse | CigUse | 5+Drinks | Drunk |
|---|---|---|---|---|---|---|
| DYN-5 | 1. No-use | 0.608 (0.50, 0.71) | 0.037 (0.00, 0.13) | 0.063 (0.00, 0.13) | 0.003 (0.00, 0.02) | 0.003 (0.00, 0.02) |
| | 2. Alcohol | 0.141 (0.07, 0.23) | 0.917 (0.53, 1.00) | 0.093 (0.00, 0.29) | 0.076 (0.00, 0.27) | 0.112 (0.00, 0.32) |
| | 3. Cigarettes | 0.042 (0.00, 0.12) | 0.542 (0.04, 0.97) | 0.836 (0.40, 1.00) | 0.090 (0.00, 0.49) | 0.089 (0.00, 0.46) |
| | 4. Drunk | 0.094 (0.02, 0.18) | 0.989 (0.94, 1.00) | 0.229 (0.00, 0.56) | 0.781 (0.56, 1.00) | 0.792 (0.59, 0.99) |
| | 5. Cig. + Drunk | 0.114 (0.04, 0.19) | 0.988 (0.94, 1.00) | 0.910 (0.67, 1.00) | 0.783 (0.58, 0.97) | 0.884 (0.70, 1.00) |
| DYN-10 | 1. No-use | 0.575 (0.48, 0.66) | 0.016 (0.00, 0.07) | 0.028 (0.00, 0.08) | 0.003 (0.00, 0.01) | 0.003 (0.00, 0.02) |
| | 2. Alcohol | 0.153 (0.09, 0.23) | 0.938 (0.72, 1.00) | 0.076 (0.00, 0.23) | 0.076 (0.00, 0.22) | 0.116 (0.00, 0.28) |
| | 3. Cigarettes | 0.066 (0.02, 0.14) | 0.305 (0.01, 0.63) | 0.870 (0.49, 1.00) | 0.034 (0.00, 0.17) | 0.030 (0.00, 0.15) |
| | 4. Drunk | 0.091 (0.03, 0.17) | 0.989 (0.94, 1.00) | 0.259 (0.00, 0.63) | 0.830 (0.60, 1.00) | 0.817 (0.61, 0.99) |
| | 5. Cig. + Drunk | 0.115 (0.04, 0.18) | 0.988 (0.94, 1.00) | 0.896 (0.57, 1.00) | 0.725 (0.42, 0.89) | 0.848 (0.63, 0.99) |

Table VII.

Transition probabilities from wave 1 to wave 2 and 95% confidence intervals based on DYN-5 and DYN-10.

| Method | Class at wave 1 | Wave 2: Class 1 | Wave 2: Class 2 | Wave 2: Class 3 | Wave 2: Class 4 | Wave 2: Class 5 |
|---|---|---|---|---|---|---|
| DYN-5 | 1. No-use | 0.613 (0.47, 0.73) | 0.155 (0.04, 0.31) | 0.052 (0.00, 0.15) | 0.123 (0.00, 0.15) | 0.056 (0.03, 0.24) |
| | 2. Alcohol | 0.039 (0.00, 0.17) | 0.309 (0.02, 0.59) | 0.077 (0.00, 0.31) | 0.367 (0.00, 0.58) | 0.209 (0.02, 0.76) |
| | 3. Cigarettes | 0.141 (0.00, 0.57) | 0.104 (0.00, 0.52) | 0.269 (0.00, 0.75) | 0.207 (0.00, 0.77) | 0.278 (0.00, 0.69) |
| | 4. Drunk | 0.070 (0.00, 0.29) | 0.086 (0.00, 0.39) | 0.077 (0.00, 0.31) | 0.553 (0.17, 0.90) | 0.214 (0.00, 0.57) |
| | 5. Cig. + Drunk | 0.029 (0.00, 0.15) | 0.036 (0.00, 0.16) | 0.170 (0.02, 0.42) | 0.048 (0.39, 0.24) | 0.716 (0.00, 0.93) |
| DYN-10 | 1. No-use | 0.600 (0.49, 0.15) | 0.179 (0.09, 0.57) | 0.057 (0.00, 0.22) | 0.115 (0.03, 0.65) | 0.048 (0.00, 0.48) |
| | 2. Alcohol | 0.036 (0.00, 0.15) | 0.347 (0.14, 0.57) | 0.060 (0.00, 0.22) | 0.361 (0.10, 0.65) | 0.196 (0.00, 0.48) |
| | 3. Cigarettes | 0.123 (0.00, 0.39) | 0.062 (0.00, 0.31) | 0.341 (0.08, 0.71) | 0.230 (0.00, 0.64) | 0.244 (0.00, 0.64) |
| | 4. Drunk | 0.090 (0.01, 0.27) | 0.083 (0.00, 0.37) | 0.061 (0.00, 0.26) | 0.543 (0.17, 0.89) | 0.223 (0.00, 0.60) |
| | 5. Cig. + Drunk | 0.027 (0.00, 0.13) | 0.039 (0.00, 0.19) | 0.133 (0.01, 0.37) | 0.074 (0.00, 0.45) | 0.727 (0.31, 0.94) |

7. DISCUSSION

Many research questions in medical or behavioral science require methods that can classify individuals into pragmatically meaningful groups according to their item-response patterns. The popularity of latent transition analysis is increasing because the model can identify population subgroups and their transition probabilities over time. LTA models the structure of subjects’ item responses forming discrete classes based on similar item-response profiles. Unlike models for continuous latent variables (e.g., factor analysis and structural equation modeling), this categorical latent variable model does not require further assumptions about the nature of classes. Subjects classified into a particular class have both dimensional and configurational similarity in the distribution of item-response probabilities.

In this study, we explored possible complications in inference for small-sample LTA. In addition, we proposed plausible strategies that can alleviate these problems by using data-dependent priors, and evaluated their performance over repeated samples. We analyzed Add Health data using the maximum-likelihood and maximum-posterior methods and demonstrated that Bayesian inference by MCMC may be an attractive alternative. However, new challenges, such as label switching, emerge with MCMC, particularly when using a small sample. Recently, Chung et al. [15] showed that label switching could be reduced in the Bayesian analysis of exponential mixture models by pre-classifying one or more cases. We have extended their work by developing a dynamic algorithm for selecting subjects to pre-classify in LTA models. We also provided a justification of the procedure to highlight the theoretical contrast with the more traditional approach of relabeling the parameter draws.

Although we illustrated our technique in a specific modeling context (LTA), it generalizes immediately to other mixture models: the automated strategy dynamically assigns class membership of one or more subjects, and thus can be used with mixtures from any family of distributions. The dynamic pre-classification algorithm is also easily applicable to the extended version of LTA, where the transition probabilities are modeled as a function of covariates. From a computational standpoint, including covariates is a trivial matter, requiring only a minor modification to the posterior mean of the transition probability in (9): the posterior mean can be related to covariates using the logistic link function. The pre-classification technique is easy to implement, and performs at least as well as the standard approach of applying constraints [15]. Other researchers have noted that the choice of constraint can affect inference [14, 23]. As the number of classes increases in LTA, the difficulty of identifying a unique mode with an appropriate set of constraints compounds.
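To make the covariate extension mentioned above concrete, here is a minimal Python sketch of a baseline-category (multinomial) logistic link that maps a subject's covariates to a matrix of transition probabilities. The function name `transition_matrix`, the coefficient array `beta`, and the choice of the first class as the reference category are illustrative assumptions, not the parameterization used in the paper.

```python
import numpy as np

def transition_matrix(x, beta):
    """Row-stochastic transition matrix for one subject.

    x: (p,) covariate vector (include a leading 1 for an intercept).
    beta: (C, C, p) coefficients; beta[a, b] governs the move from class a
    at wave 1 to class b at wave 2. Setting beta[a, 0] = 0 makes class 0
    the reference category (an assumption of this sketch).
    """
    eta = beta @ x                                # (C, C) linear predictors
    eta = eta - eta.max(axis=1, keepdims=True)    # stabilize the exponentials
    expo = np.exp(eta)
    return expo / expo.sum(axis=1, keepdims=True) # each row sums to one

# Two classes, intercept plus one covariate.
beta = np.zeros((2, 2, 2))
beta[0, 1] = [0.0, 1.0]          # covariate raises the odds of moving 0 -> 1
x = np.array([1.0, 2.0])
print(transition_matrix(x, beta))
```

With all coefficients set to zero every row is uniform, so the covariates enter only through departures of `beta` from zero.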

The technique of pre-classifying a subset of subjects may have some limitations. Pre-classifying k observations into different classes does more than identify k classes—it also assigns these subjects to different classes with certainty. The pre-classification depends on the posterior probabilities of class membership given the parameters, and thus the amount of impact it has on the mode of interest depends on these probabilities. It is expected, however, that the nuisance mode is more adversely affected than the mode of interest. More research is required to compare the pre-classifying technique with traditional constraints across models with different distributions and larger numbers of classes.
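The symmetry argument behind this discussion can be checked numerically. The Python sketch below uses a two-class latent class model with binary items (not the full LTA model) and hypothetical parameter values. It shows that the log-likelihood is unchanged when the class labels are permuted, and that treating one subject's class as known favors one labeling over the other. The function `loglik` and its `fixed` argument are assumptions introduced for illustration.

```python
import numpy as np

def loglik(y, delta, rho, fixed=None):
    """Log-likelihood of a latent class model with binary items.

    y: (n, J) 0/1 responses; delta: (C,) class prevalences;
    rho: (C, J) probabilities of responding 'yes' given class.
    fixed: optional dict {subject index: class}; a pre-classified subject
    contributes only the term for its assigned class.
    """
    fixed = fixed or {}
    # log_joint[i, c] = log delta_c + sum_j log P(y_ij | class c)
    log_joint = (np.log(delta)[None, :]
                 + y @ np.log(rho).T
                 + (1 - y) @ np.log(1 - rho).T)
    total = 0.0
    for i in range(y.shape[0]):
        if i in fixed:
            total += log_joint[i, fixed[i]]
        else:
            total += np.logaddexp.reduce(log_joint[i])  # sum over classes
    return total

y = np.array([[0, 0], [0, 1], [1, 1], [1, 1], [0, 0]])
delta = np.array([0.7, 0.3])
rho = np.array([[0.1, 0.2], [0.8, 0.9]])

# Permuting the class labels leaves the likelihood unchanged.
same = np.isclose(loglik(y, delta, rho), loglik(y, delta[::-1], rho[::-1]))
# Pre-classifying subject 0 (a non-user pattern) into class 0 breaks the tie.
kept = loglik(y, delta, rho, fixed={0: 0})
swapped = loglik(y, delta[::-1], rho[::-1], fixed={0: 0})
print(same, kept > swapped)
```

The size of the gap between the two labelings depends on how clearly the pre-classified subject belongs to its assigned class, which matches the point made above about the posterior probabilities of class membership.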

Although many substantive interpretations could be pursued in light of the model, we mentioned only a few in this presentation. Note that our exploration was intended to demonstrate a possible solution to the difficulties in small-sample LTA inference and to provide practitioners with a well-worked and plausible example. We provided a limited demonstration using real data to elucidate the model. Our hope is that substantive researchers will be able to identify possible difficulties in estimation and inference for LTA with small samples, and consider using the proposed solution in their research.

Acknowledgments

Contract/grant sponsor: National Institute on Drug Abuse; contract/grant number: 5-R03-DA021639, 1-P50-DA10075

REFERENCES

1. Posner SF, Collins LM, Longshore D, Anglin D. The acquisition and maintenance of safer sexual behaviors among injection drug users. Substance Use and Misuse. 1996;31:1995–2015. doi: 10.3109/10826089609066448.
2. Chung H, Park Y, Lanza ST. Latent transition analysis with covariates: Pubertal timing and substance use behaviors in adolescent females. Statistics in Medicine. 2005;24:2895–2910. doi: 10.1002/sim.2148.
3. Lanza ST, Collins LM. Pubertal timing and the onset of substance use in females during early adolescence. Prevention Science. 2002;3:69–82. doi: 10.1023/a:1014675410947.
4. Velicer WF, Martin RA, Collins LM. Latent transition analysis for longitudinal data. Addiction. 1996;91:S197–S209.
5. Lanza ST, Flaherty BP, Collins LM. Latent class and latent transition analysis. In: Schinka JA, Velicer WF, editors. Handbook of Psychology: Vol. 2. Research Methods in Psychology. Hoboken, NJ: Wiley; 2003. pp. 663–685.
6. Dempster AP, Laird NM, Rubin DB. Maximum likelihood from incomplete data via the EM algorithm (with discussion). Journal of the Royal Statistical Society, Series B. 1977;39:1–38.
7. Chung H, Flaherty BP, Schafer JL. Latent class logistic regression: application to marijuana use and attitudes among high school seniors. Journal of the Royal Statistical Society, Series A. 2006;169:723–743.
8. Chung H, Walls TA, Park Y. A latent transition model with logistic regression. Psychometrika. 2007;72
9. Garrett ES, Zeger SL. Latent class model diagnosis. Biometrics. 2000;56:1055–1067. doi: 10.1111/j.0006-341x.2000.01055.x.
10. Garrett ES, Eaton WW, Zeger SL. Methods for evaluating the performance of diagnostic tests in the absence of a gold standard: a latent class model approach. Statistics in Medicine. 2002;21:1289–1307. doi: 10.1002/sim.1105.
11. Hoijtink H. Constrained latent class analysis using the Gibbs sampler and posterior predictive p-values: applications to educational testing. Statistica Sinica. 1998;8:691–711.
12. Lanza ST, Collins LM, Schafer JL, Flaherty BP. Using data augmentation to obtain standard errors and conduct hypothesis tests in latent class and latent transition analysis. Psychological Methods. 2005;10:84–100. doi: 10.1037/1082-989X.10.1.84.
13. Loken E. Using latent class analysis to model temperament types. Multivariate Behavioral Research. 2004;39:625–652. doi: 10.1207/s15327906mbr3904_3.
14. Celeux G, Hurn M, Robert CP. Computational and inferential difficulties with mixture posterior distributions. Journal of the American Statistical Association. 2000;95:957–970.
15. Chung H, Loken E, Schafer JL. Difficulties in drawing inferences with finite-mixture models: a simple example with a simple solution. The American Statistician. 2004;58:152–158.
16. McLachlan G, Peel D. Finite Mixture Models. New York: Wiley; 2000.
17. Titterington DM, Smith AFM, Makov UE. Statistical Analysis of Finite Mixture Distributions. New York: Wiley; 1985.
18. Lazarsfeld PF, Henry NW. Latent Structure Analysis. Boston: Houghton-Mifflin; 1968.
19. Little RJA, Rubin DB. Statistical Analysis with Missing Data. second edition. New York: Wiley; 1987.
20. Gelfand AE, Smith AFM. Sampling-based approaches to calculating marginal densities. Journal of the American Statistical Association. 1990;85:398–409.
21. Tanner MA, Wong WH. The calculation of posterior distributions by data augmentation. Journal of the American Statistical Association. 1987;82:528–550.
22. Robert CP. Mixtures of distributions: inference and estimation. In: Gilks WR, Richardson S, Spiegelhalter DJ, editors. Markov Chain Monte Carlo in Practice. London: Chapman & Hall; 1996. pp. 441–464.
23. Richardson S, Green PJ. On Bayesian analysis of mixtures with an unknown number of components. Journal of the Royal Statistical Society, Series B. 1997;59:731–792.
24. Ghosh JK, Sen PK. On the asymptotic performance of the likelihood ratio statistic for the mixture model and related results. In: Proceedings of the Berkeley Conference in Honor of Jerzy Neyman and Jack Kiefer, Vol. 2. Monterey: Wadsworth; 1985. pp. 789–806.
25. Lindsay BG. Mixture Models: Theory, Geometry and Applications. Hayward: Institute of Mathematical Statistics; 1995.
26. Lo Y, Mendell NR, Rubin DB. Testing the number of components in a normal mixture. Biometrika. 2001;88:767–778.
27. McLachlan GJ. On bootstrapping the likelihood ratio test statistic for the number of components in a normal mixture. Applied Statistics. 1987;36:318–324.
28. Rubin DB, Stern HS. Testing in latent class models using a posterior predictive check distribution. In: von Eye A, Clogg CC, editors. Latent Variables Analysis: Applications for Developmental Research. Thousand Oaks: Sage; 1994. pp. 420–438.
29. Rubin DB, Schenker N. Logit-based interval estimation for binomial data. In: Clogg CC, editor. Sociological Methodology 1987. Washington, D.C: American Sociological Association; 1987. pp. 131–144.
30. Loken E. Multimodality in mixture models and latent trait models. In: Gelman A, Meng X, editors. Applied Bayesian Modeling and Causal Inference from Incomplete-data Perspectives: An Essential Journey with Donald Rubin’s Statistical Family. New York: Wiley; 2004. pp. 202–213.
31. Udry JR. The National Longitudinal Study of Adolescent Health (Add Health), Waves I & II, 1994–1996; Wave III, 2001–2002. Chapel Hill, NC: Carolina Population Center, University of North Carolina at Chapel Hill; 2003.
32. Brooks-Gunn J, Petersen AC, Eichorn D. The study of maturational timing effects in adolescence. Journal of Youth and Adolescence. 1985;14:149–161. doi: 10.1007/BF02090316.
33. Brooks-Gunn J, Reiter EO. The role of pubertal processes. In: Feldman S, Elliott GR, editors. At the Threshold. Cambridge: Harvard University Press; 1985. pp. 16–53.