Abstract

This study investigated navigation with route instructions generated by digital-map software and delivered through synthetic speech. Participants, either visually impaired or sighted and blindfolded, successfully located rooms in an unfamiliar building. Users with visual impairment showed better route-finding performance when the technology gave distances in number of steps rather than in walking time or feet.

A common problem faced by individuals with visual impairment is independent navigation (Loomis, Golledge & Klatzky, 2001). Although canes and guide dogs aid in obstacle avoidance, it is still difficult to plan and follow routes in unfamiliar environments (Golledge, Marston, Loomis & Klatzky, 2004). Navigation is especially problematic in indoor environments because Global Positioning System (GPS) signals are unavailable. Our laboratory is developing an indoor wayfinding technology, called the Building Navigator, that contains a route-planning feature. This paper describes user tests of this feature.

Successful wayfinding technology should provide two main pieces of information: 1) the current location and heading of the individual, and 2) the route to the destination (Loomis, et al., 2001; Golledge, et al., 2004; Loomis, Golledge, Klatzky & Marston, 2007). Routes consist of waypoints, locations where the navigator changes direction. To follow a route, the navigator needs real-time access to the distance and direction to each waypoint until the destination is reached.

Thus far, several indoor wayfinding technologies for travelers with visual impairment have addressed the first issue of localization (Loomis, et al., 2001; Giudice & Legge, 2008). Braille signs are now commonly used in buildings, but they are sometimes difficult to locate because of sporadic placement. Also, the majority of people with visual impairment do not read braille (JVIB, 1996). Various other positioning technologies have been explored, such as Talking Signs (www.talkingsigns.com), Talking Lights (www.talking-lights.com), RFID tags, and systems using wireless signals, but there are still limitations in the accuracy and the cost of installing and maintaining these systems (Loomis, et al., 2001; Giudice & Legge, 2008). Our Building Navigator software has been designed to be integrated with positioning technologies while also providing information about the layout and other salient features of indoor spaces.

The Building Navigator

The Building Navigator provides information about the spatial layout of rooms, hallways, and other important features in buildings through synthetic speech output. This software was designed to be part of a portable system, perhaps installed on a cell phone or PDA. The following paragraphs describe the major components of the system: the Building Database that contains information about the layout of the floor (digital map), the Floor Builder used to input building layout information into the database, and two interface components for exploration and route finding.

The Building Database

The Building Database stores information about the physical features of the building as well as the spatial layout of these features. Stored features include spaces, such as rooms and lobbies, as well as important objects such as water fountains. Features are encoded into the database by first dividing a floor layout into meaningful spatial regions. These regions are assigned to feature types (e.g., door, room, hallway, window, stairs, elevator), and feature types are grouped into broader categories (e.g. physical space, connecting space, utility features) to facilitate fast searches for layout information.
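To make the grouping of feature types into categories concrete, here is a minimal Python sketch of a category index. The names `Feature`, `FeatureIndex`, and the `CATEGORY_OF_TYPE` assignments are hypothetical: the text names example types and categories but does not say exactly which type belongs to which category or how the database indexes them.

```python
from collections import defaultdict
from dataclasses import dataclass
from typing import Dict, List

# Hypothetical assignment of feature types to broader categories.
CATEGORY_OF_TYPE = {
    "room": "physical space",
    "lobby": "physical space",
    "hallway": "connecting space",
    "door": "connecting space",
    "stairs": "connecting space",
    "elevator": "connecting space",
    "water fountain": "utility features",
}


@dataclass(frozen=True)
class Feature:
    name: str
    feature_type: str


class FeatureIndex:
    """Keeps features grouped by type and by category for quick lookup."""

    def __init__(self) -> None:
        self.by_type: Dict[str, List[Feature]] = defaultdict(list)
        self.by_category: Dict[str, List[Feature]] = defaultdict(list)

    def add(self, feature: Feature) -> None:
        self.by_type[feature.feature_type].append(feature)
        category = CATEGORY_OF_TYPE[feature.feature_type]
        self.by_category[category].append(feature)

    def in_category(self, category: str) -> List[Feature]:
        # .get avoids creating empty entries for unknown categories.
        return list(self.by_category.get(category, []))
```

A query for all connecting spaces then touches only that category's list rather than scanning every feature on the floor.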

Features that are spatially adjacent in the layout are associated with each other in the database through a set of logical relationships. For example, to indicate that a room is accessible from a particular hallway, a door feature is associated with both the hallway and room features. This makes the door logically accessible from both the hallway and the room.
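The door-based relationships can be sketched as a symmetric association table. `LayoutGraph` and its methods are illustrative names, not the Building Database's actual schema; the sketch only shows how associating a door with two spaces makes it reachable from both.

```python
from collections import defaultdict
from typing import Dict, Set


class LayoutGraph:
    """Hypothetical store of logical relationships between features."""

    def __init__(self) -> None:
        self.links: Dict[str, Set[str]] = defaultdict(set)
        self.doors: Set[str] = set()

    def associate(self, a: str, b: str) -> None:
        # Relationships are symmetric: a touches b and b touches a.
        self.links[a].add(b)
        self.links[b].add(a)

    def add_door(self, door: str, space_a: str, space_b: str) -> None:
        # Linking the door to both spaces makes it accessible from either side.
        self.doors.add(door)
        self.associate(door, space_a)
        self.associate(door, space_b)

    def accessible_from(self, space: str) -> Set[str]:
        return set(self.links.get(space, set()))

    def spaces_through_doors(self, space: str) -> Set[str]:
        # Spaces reachable by passing through exactly one door.
        result: Set[str] = set()
        for neighbor in self.accessible_from(space):
            if neighbor in self.doors:
                result |= self.links[neighbor] - {space}
        return result
```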

The Building Database also includes functions for acquiring information (e.g., getting a list of known buildings, the floors within a building, or the types of features present within a building) and for managing information requests from input and output plugins. Input plugins are intended to handle environmental sensors such as a wireless network location device, dead-reckoning systems, or rotation/orientation sensors. Their primary purpose is to gather information about the user's location, heading, and movements. Output plugins provide an interface through which the user interacts with the Building Database and the rest of the navigation device. This paper discusses two types of speech-enabled output plugins, for use in exploration and in route finding.
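The plugin arrangement resembles a simple publish/subscribe design. The Python sketch below uses abstract base classes to show the two roles; `Pose`, `InputPlugin`, `OutputPlugin`, and `Dispatcher` are hypothetical names, and the policy of trusting the most recently registered sensor is an assumption made for brevity (a real system might fuse several sensors).

```python
from abc import ABC, abstractmethod
from dataclasses import dataclass
from typing import List, Optional


@dataclass
class Pose:
    x_ft: float
    y_ft: float
    heading_deg: float  # 0 = north, increasing clockwise


class InputPlugin(ABC):
    """Wraps a sensor such as a wireless locator or dead-reckoning unit."""

    @abstractmethod
    def read_pose(self) -> Pose: ...


class OutputPlugin(ABC):
    """Presents information to the user, e.g. through synthetic speech."""

    @abstractmethod
    def present(self, message: str) -> None: ...


class Dispatcher:
    def __init__(self) -> None:
        self.inputs: List[InputPlugin] = []
        self.outputs: List[OutputPlugin] = []

    def current_pose(self) -> Optional[Pose]:
        # Ask the most recently registered sensor; None if there is no sensor.
        return self.inputs[-1].read_pose() if self.inputs else None

    def broadcast(self, message: str) -> None:
        # Send the same message to every registered output plugin.
        for plugin in self.outputs:
            plugin.present(message)
```

With no input plugin registered, as in the user tests reported later in this paper, `current_pose` returns `None` and the output side must work from the digital map alone.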

Entering Building Information into the Database

A separate software program, called Floor Builder, is used for data entry. Conventional spatial mapping applications do not encode the range of features used by the Building Database, nor their spatial relations, so a custom application was needed. First, a map of the building is digitized and segmented into features by a human operator, currently an experimenter. In the future, this person would be someone trained in the use of the software, not the end user. The Floor Builder software has a graphical user interface that guides the operator through a series of simple point-and-click steps to parse the map into features. The segmented map is converted into the necessary data structures and uploaded into the Building Database. With the initial version of this software, an experienced operator can complete the mapping of a floor with 50 rooms in about two hours. A later version of the software, which automates several of the component procedures, has reduced this time to approximately twenty minutes.

User Interfaces for Navigation

The Building Navigator presents information via synthetic speech output, but in principle the same verbal information could be sent to a braille display. A significant challenge was developing verbal descriptions of the space that were concise, informative, and easy to understand. These descriptions benefited from prior work on verbal descriptions for outdoor wayfinding, but differ in important ways. For instance, unlike streets, indoor hallways are not typically named. Also, more information needs to be conveyed within a smaller area of space in indoor environments compared to outdoor environments. Previous studies have found that individuals who are blind are able to effectively learn and navigate through buildings using consistent and structured verbal descriptions of layout geometry (Giudice, 2004; Giudice, Bakdash & Legge, 2007).

Upon entering an unfamiliar building, a user may have two possible goals: 1) to become familiar with the overall building layout through exploration, or 2) to find a specific location by following a route. The Building Navigator currently supports these two types of navigation.

Exploration Mode

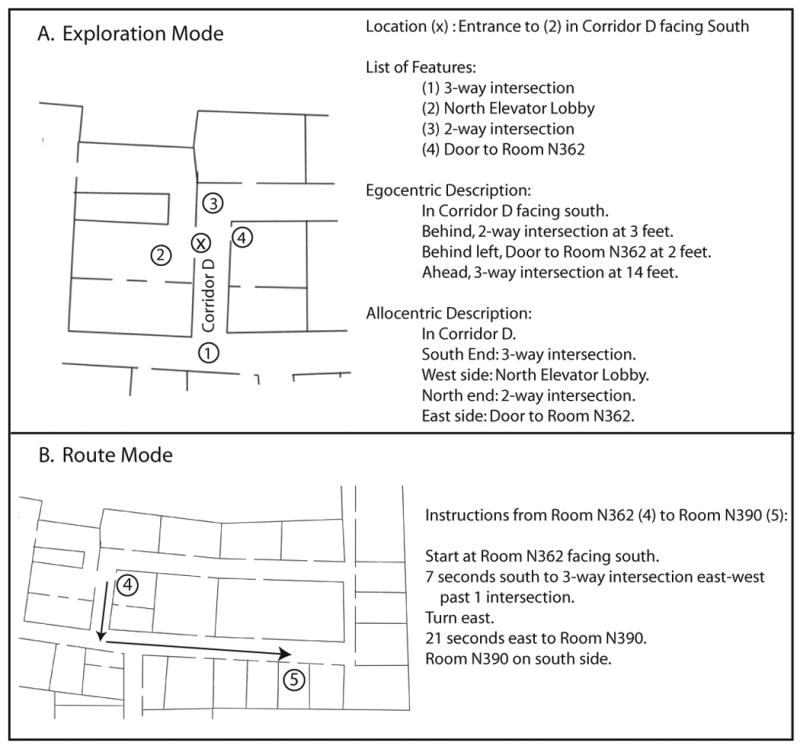

In the Exploration Mode, speech output describes the layout of features near the user's current location. For example, if the user is standing in a lobby, the set of features described in this mode would include the doors and hallways located on the perimeter of the lobby. Users can also receive egocentric and allocentric descriptions of these features. An egocentric description provides the direction to a feature relative to the user's current location and chosen heading. Allocentric descriptions present information with respect to a set of absolute reference directions such as North, South, East and West. These descriptions also provide distances to features. See Figure 1A for an example of a feature list and the corresponding egocentric and allocentric descriptions.
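The geometry behind the two kinds of description can be sketched in a few lines of Python. The sketch assumes a floor plan in feet with x increasing east and y increasing north, compass bearings measured clockwise from north, and four coarse direction labels; the function names and the phrasing of the output strings are hypothetical and need not match Figure 1A.

```python
import math
from typing import List, Tuple

CARDINALS = ["north", "east", "south", "west"]
RELATIVE = ["ahead", "to the right", "behind", "to the left"]


def bearing_deg(x0: float, y0: float, x1: float, y1: float) -> float:
    """Compass bearing from (x0, y0) to (x1, y1): 0 = north, clockwise."""
    return math.degrees(math.atan2(x1 - x0, y1 - y0)) % 360.0


def sector(angle_deg: float, labels: List[str]) -> str:
    """Label for angle_deg when 360 degrees is split into equal sectors
    centered on labels[0], labels[1], ... in clockwise order."""
    width = 360.0 / len(labels)
    return labels[int(((angle_deg + width / 2) % 360.0) // width)]


def describe(user: Tuple[float, float], heading_deg: float,
             name: str, feature: Tuple[float, float]) -> Tuple[str, str]:
    """Return (allocentric, egocentric) descriptions of one feature."""
    feet = round(math.hypot(feature[0] - user[0], feature[1] - user[1]))
    bearing = bearing_deg(user[0], user[1], feature[0], feature[1])
    relative = (bearing - heading_deg) % 360.0  # angle measured from the heading
    allocentric = f"{name}, {feet} feet {sector(bearing, CARDINALS)}"
    egocentric = f"{name}, {feet} feet {sector(relative, RELATIVE)}"
    return allocentric, egocentric
```

For a user at (0, 0) facing east (heading 90), `describe((0, 0), 90.0, "Door 426", (0, 40))` returns `("Door 426, 40 feet north", "Door 426, 40 feet to the left")`: the allocentric phrase ignores heading, while the egocentric phrase depends on it.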

Figure 1.

A) Example of a feature list, egocentric, and allocentric descriptions provided by the Exploration Mode of the Building Navigator. B) Example of route instructions provided by the Route Mode of the Building Navigator.

The Exploration Mode can be used for virtual exploration of a space, meaning a user can simulate navigation through the layout without setting foot in the physical building. The interaction is similar to having a guide provide detailed layout information at the user's request. This ability to virtually explore and learn a space before actually going there, called pre-journey learning, has proven beneficial to pedestrians with blindness and visual impairment (Holmes, Jansson & Jansson, 1996).

Route Mode

The Route Mode produces a list of instructions for navigating from a start to goal location via a series of waypoints (Figure 1B). The route is computed using Dijkstra's Shortest Path algorithm (Dijkstra, 1959), a well-known graph search algorithm that finds the shortest path between nodes. The user listens to one route instruction at a time, and selects the instruction by moving up or down in the list with key presses. The first instruction always indicates the user's starting location and heading. The subsequent instructions describe the distance and direction of travel to a series of waypoints, and the direction of the turns. The final instruction indicates the distance to the goal location and on which side of the space it is located (for example, south side of the hallway). Currently, all directions use an allocentric frame of reference (i.e. North, South, East, and West). To provide egocentric directions, the system must incorporate sensors or otherwise obtain information about the user's current heading.
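Dijkstra's algorithm is standard, so a sketch mainly clarifies what the route graph might contain. The Python version below assumes, for illustration, that nodes are rooms and hallway intersections and that edge weights are hallway distances in feet; the paper does not describe the graph's exact construction, and `shortest_route` is a hypothetical name. The returned node list corresponds to the waypoints that route instructions would step through.

```python
import heapq
import math
from typing import Dict, List, Tuple

Graph = Dict[str, List[Tuple[str, float]]]  # node -> [(neighbor, feet), ...]


def shortest_route(graph: Graph, start: str, goal: str) -> Tuple[float, List[str]]:
    """Dijkstra's shortest path; returns (total feet, [start, ..., goal]).

    Returns (math.inf, []) when the goal cannot be reached.
    """
    dist = {start: 0.0}
    previous: Dict[str, str] = {}
    queue = [(0.0, start)]
    visited = set()
    while queue:
        d, node = heapq.heappop(queue)
        if node in visited:
            continue  # stale heap entry for an already-settled node
        visited.add(node)
        if node == goal:
            break
        for neighbor, feet in graph.get(node, []):
            candidate = d + feet
            if candidate < dist.get(neighbor, math.inf):
                dist[neighbor] = candidate
                previous[neighbor] = node
                heapq.heappush(queue, (candidate, neighbor))
    if goal not in dist:
        return math.inf, []
    # Walk the predecessor links back from the goal to rebuild the path.
    path = [goal]
    while path[-1] != start:
        path.append(previous[path[-1]])
    path.reverse()
    return dist[goal], path
```

The heap may hold outdated entries for a node; the `visited` check skips them, which is simpler than implementing a decrease-key operation.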

A sample verbal description to a waypoint is “104 feet south to 3-way intersection west past 2 intersections.” The descriptions contain three critical parts in a standard format. First, the distance and direction to the waypoint are given (“104 feet south”). Distance is provided in one of three units: feet, number of steps, or travel time in seconds. Distances in steps and seconds are individually calibrated according to each user's step length and walking speed.
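Converting a stored metric distance into the three units is simple arithmetic once each user's calibration is known. The sketch below divides feet by step length or by walking speed; rounding to whole units and the names `Calibration`, `format_distance`, and `leg_description` are assumptions, since the text does not state how the system rounds.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Calibration:
    step_length_ft: float  # user's mean step length, feet per step
    speed_ft_per_s: float  # user's mean walking speed, feet per second


def format_distance(feet: float, unit: str, cal: Calibration) -> str:
    """Express a metric distance in one of the three supported units."""
    if unit == "feet":
        return f"{round(feet)} feet"
    if unit == "steps":
        return f"{round(feet / cal.step_length_ft)} steps"
    if unit == "seconds":
        return f"{round(feet / cal.speed_ft_per_s)} seconds"
    raise ValueError(f"unknown distance unit: {unit!r}")


def leg_description(feet: float, direction: str, unit: str, cal: Calibration) -> str:
    """First part of a route instruction, e.g. '104 feet south'."""
    return f"{format_distance(feet, unit, cal)} {direction}"
```

With the fully-sighted group's mean calibration reported in the Calibration section (1.85 feet/step, 2.92 feet/second), the 104-foot leg above would be announced as about 56 steps or 36 seconds.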

The second part of the instruction describes the geometry of the waypoint intersection (“3-way intersection west”). The waypoint can be a 2-, 3-, or 4-way intersection. For 2- and 3-way intersections, the directions of the branching arms are also described in an allocentric frame of reference. Consequently, the user is responsible for translating the description into an egocentric frame of reference (e.g., “3-way intersection west” means there is a hallway branching to the left if the user is facing north, or to the right if the user is facing south). Lastly, the verbal description indicates how many intersections the user will pass before reaching the waypoint (“past 2 intersections”). Although these descriptions may seem hard to parse, their structured format supports rapid learning: participants learned to comprehend them with modest practice.
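The mental translation that users had to perform can be written as a quarter-turn lookup. This Python sketch (function name hypothetical) counts clockwise quarter turns from the user's facing direction to a branch direction, and it reproduces the two cases in the text: `to_egocentric("west", "north")` gives `"left"` and `to_egocentric("west", "south")` gives `"right"`.

```python
CARDINALS = ["north", "east", "south", "west"]  # clockwise order
EGOCENTRIC = ["ahead", "right", "behind", "left"]


def to_egocentric(branch: str, facing: str) -> str:
    """Translate an allocentric branch direction into ahead/right/behind/left."""
    # Clockwise quarter turns needed to rotate from `facing` to `branch`.
    quarter_turns = (CARDINALS.index(branch) - CARDINALS.index(facing)) % 4
    return EGOCENTRIC[quarter_turns]
```

A heading sensor would let the system apply this translation itself, which is what providing egocentric directions would require.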

The remainder of this paper describes user testing of the Route Mode of the Building Navigator. The goal was to validate our method of coding and presenting information from a building plan as a step towards developing a useful application for improving wayfinding by people with visual impairment.

User Testing of the Route Mode of the Building Navigator

We tested the ability of blindfolded, fully-sighted individuals and of individuals with visual impairment to use the Route Mode of the Building Navigator interface without positioning sensors. The primary goal was to determine whether the route information provided by the Building Navigator improved wayfinding performance. A second goal was to compare route-following performance across three metrics for describing distances to waypoints (feet, steps, or seconds). Fully-sighted participants were tested to evaluate how visual experience affects the ability to code spatial and distance information from the Building Navigator instructions. We anticipated that differences in performance between people with and without visual impairment would reveal improvements that could be made to the technology.

Methods

Participants

Twelve fully-sighted participants (6 males and 6 females, age range 19-29) and eleven participants with visual impairment were tested. All participants except one person with visual impairment were unfamiliar with the building floors used for testing. This participant (S9 in Table 1) had only limited familiarity with the test floors and reported not having a good “cognitive map” of the room locations and layout. Participants with visual impairment were selected if their impairment limited or prevented access to visual signs, they were no older than 60 years of age, and they had no other deficits that impaired mobility. Table 1 describes additional characteristics of these participants. All participants provided informed consent and were compensated either monetarily or with extra credit in their introductory psychology course. This study was approved by the Institutional Review Board at the University of Minnesota.

Table 1. Description of the Eleven Participants with Visual Impairment.

| Participant | Gender | Age | Age of onset | Low vision (LV) or blind | Mobility aid | logMAR acuity | Diagnosis |
|---|---|---|---|---|---|---|---|
| 1 | F | 35 | not available | Blind | Dog | NA | not available |
| 2 | F | 35 | Birth | Blind | Dog | NA | Clouded Cornea, Microphthalmos |
| 3 | M | 34 | 6 months | Blind | Dog | NA | Retrolental Fibroplasia |
| 4 | M | 43 | 19 years | LV | Cane | 1.77 | Retinitis Pigmentosa |
| 5 | F | 26 | Birth | LV | Cane | 1.32 | Advanced Retinitis Pigmentosa |
| 6 | F | 41 | 18 months | Blind | Dog | NA | Retinoblastoma |
| 7 | M | 24 | Birth | Blind | Cane | NA | Retinopathy of Prematurity |
| 8 | M | 47 | Birth | LV | none | 1.18 | Congenital Cataracts |
| 9 | M | 60 | 6 years | LV | none | 1.7 | Secondary Corneal Opacification |
| 10 | M | 52 | Birth | LV | none | 1.44 | Glaucoma, Congenital Cataracts |
| 11 | F | 55 | Birth | Blind | Dog | NA | Retinopathy of Prematurity |

Apparatus and Materials

The Building Navigator software was installed on an Acer TravelMate 3000 laptop carried by participants in a backpack. Participants wore headphones connected to the laptop to hear the speech output, and used a wireless numeric keypad to communicate with the laptop. The experimenter could also communicate with the user's laptop (via a Bluetooth connection to a second laptop) for the purpose of entering start and goal locations for wayfinding trials. Fully-sighted users wore a blindfold during testing and were guided by the experimenter. Participants with visual impairment used their preferred mobility aid (Table 1).

Procedure

Participants were tested in four conditions using a within-subjects design. In three conditions participants used the technology, once in each distance mode (feet, steps or seconds). In a baseline condition, participants were not allowed access to the Building Navigator technology.

In all conditions, participants were allowed to ask “bystanders,” played by the experimenter, for information. Bystander queries could only be made at office doors. The “bystander” provided participants with their current location and the egocentric direction to travel to reach the destination (e.g., “You are at Room 426. Go right.”). Participants were instructed to minimize the number of bystander queries and to only make them when necessary, as if they were interrupting people in their offices. In the real world, when signage is not accessible, individuals with visual impairment have no recourse but to seek information from bystanders. We simulated bystanders, rather than relying on actual bystanders, to equalize the access to information across participants and conditions.

Each condition was tested in a different building layout, and the condition-layout pairings and the order of the conditions were counterbalanced. Participants were first trained to use the system, as described below, before proceeding with testing.
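The text states that condition order and condition-layout pairings were counterbalanced but not which scheme was used. One common option for four conditions is a balanced Latin square (Williams design), sketched below purely as an illustration: every condition appears once in each serial position, and every condition immediately follows every other condition exactly once.

```python
from typing import List


def balanced_latin_square(n: int) -> List[List[int]]:
    """Williams design: n condition orders for an even number n of conditions."""
    if n % 2:
        raise ValueError("this construction needs an even number of conditions")
    # First row follows the pattern 0, 1, n-1, 2, n-2, ...
    first = [0]
    low, high = 1, n - 1
    while len(first) < n:
        first.append(low)
        low += 1
        if len(first) < n:
            first.append(high)
            high -= 1
    # Each later row shifts every entry by one condition (mod n).
    return [[(c + r) % n for c in first] for r in range(n)]
```

For `n = 4` it yields the orders [0, 1, 3, 2], [1, 2, 0, 3], [2, 3, 1, 0], and [3, 0, 2, 1], which could be mapped onto the feet, steps, seconds, and baseline conditions.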

Calibration

To individually calibrate distances given in steps and seconds, we obtained an accurate estimate of each participant's step length and walking speed. Participants were asked to wear the backpack with the laptop inside, as during testing. Sighted participants practiced walking blindfolded while guided by an experimenter until they felt comfortable. All participants were asked to walk a thirty-foot length of hallway three times while the experimenter counted their steps and timed them. The average number of steps and time walked were then entered into the system software. Fully-sighted participants had a mean step length of 1.85 feet/step and a mean velocity of 2.92 feet/second. Participants with visual impairment had a mean step length of 1.98 feet/step and a mean velocity of 3.43 feet/second. T-tests indicated no significant difference between groups for step length, but a significant difference in velocity (p = 0.02). The use of step length and walking speed to compute travel distances relies on consistency in a subject's walking characteristics. Previous work in our lab has shown that step-length variability is small for individuals with and without blindness (Mason, Legge & Kallie, 2005).
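The calibration arithmetic can be sketched as follows, assuming step length is the 30-foot course length divided by the mean step count and walking speed is the course length divided by the mean time. The function name is hypothetical, and dividing by the mean count, rather than averaging three per-trial ratios, is one reasonable reading of “the average number of steps and time walked were then entered.”

```python
from statistics import mean
from typing import Sequence, Tuple


def calibrate(steps: Sequence[int], seconds: Sequence[float],
              course_ft: float = 30.0) -> Tuple[float, float]:
    """Return (step length in feet/step, speed in feet/second)."""
    if len(steps) != len(seconds) or not steps:
        raise ValueError("need one step count and one time per trial")
    # Average the repeated walks, then convert to per-step and per-second rates.
    return course_ft / mean(steps), course_ft / mean(seconds)
```

For instance, three walks of 15, 16, and 17 steps lasting 9, 10, and 11 seconds give 1.875 feet/step and 3.0 feet/second.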

Training

Participants were first introduced to the structure of route descriptions produced by the system. This included explicit training on verbal descriptions used to convey the geometry of intersections. The experimenter showed an example of each type of intersection, using tactile maps for participants with visual impairment, and explained the corresponding verbal description given by the system. The experimenter then tested participants on their understanding by asking them to describe the geometry of intersections corresponding to verbal descriptions.

Participants were also trained on the functions of the keypad. The “2” and “8” keys were used to move up and down in the list of instructions. In the “seconds” distance mode, the “4” key started and paused a timer that beeped until the number of seconds indicated by the selected instruction had elapsed, and the “6” key stopped and reset the timer. Lastly, the “/” key repeated an instruction. Participants practiced using the keys with a sample list of instructions, which also familiarized them with the speech output.
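The key bindings can be modeled as a small state machine. This Python sketch is hypothetical in its details: the text does not say which of “2” and “8” moves up, so it assumes “8” moves to the previous instruction and “2” to the next (matching a numeric keypad's layout), and it stands in for speech and beeping with a list of spoken strings and a boolean return value.

```python
from typing import List, Optional


class RouteKeypad:
    """Hypothetical model of the Route Mode keypad bindings."""

    def __init__(self, instructions: List[str],
                 timer_targets_s: Optional[List[float]] = None) -> None:
        self.instructions = instructions
        # Seconds to time for each instruction (used in the "seconds" mode).
        self.targets = timer_targets_s or [0.0] * len(instructions)
        self.index = 0
        self.timer_running = False
        self.elapsed = 0.0
        self.spoken: List[str] = []  # stands in for synthetic speech

    def _speak_current(self) -> None:
        self.spoken.append(self.instructions[self.index])

    def press(self, key: str) -> None:
        if key == "8":  # assumed: previous instruction
            self.index = max(0, self.index - 1)
            self._speak_current()
        elif key == "2":  # assumed: next instruction
            self.index = min(len(self.instructions) - 1, self.index + 1)
            self._speak_current()
        elif key == "4":  # start or pause the timer
            self.timer_running = not self.timer_running
        elif key == "6":  # stop and reset the timer
            self.timer_running = False
            self.elapsed = 0.0
        elif key == "/":  # repeat the current instruction
            self._speak_current()

    def tick(self, dt_s: float) -> bool:
        """Advance a running timer; return True while it should keep beeping."""
        if not self.timer_running:
            return False
        self.elapsed += dt_s
        if self.elapsed >= self.targets[self.index]:
            self.timer_running = False  # target reached, so the beeping stops
        return self.timer_running
```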

To further familiarize the participant with the task and technology, participants completed three practice routes, one in each distance mode. The practice routes were located on a floor other than those used for testing.

Testing

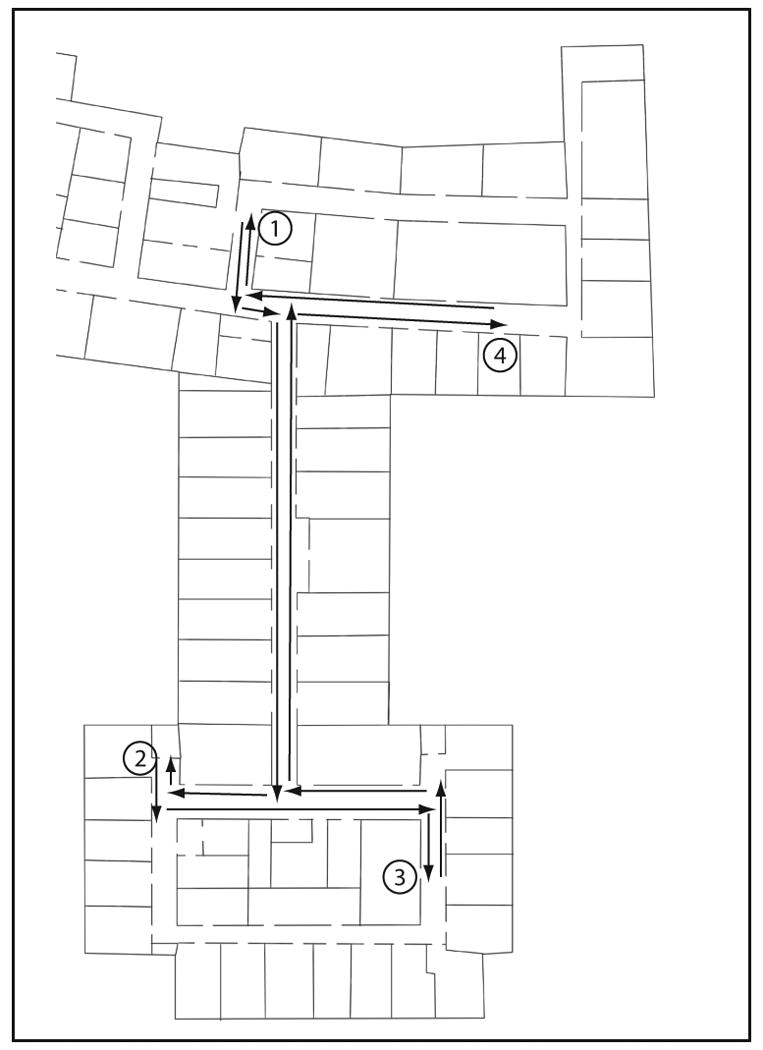

Participants were tested on four routes in a novel layout for each condition. The routes for each layout were chosen to be of similar difficulty. The average distance per route was 144.8 feet (SD = 8.3) and the average number of turns required was 2 (SD = 0.24). The complexity of the routes was chosen to test the effectiveness of the system while limiting the time required to test multiple routes in the experiment. Figure 2 shows a sample set of four routes used for testing.

Figure 2.

Example of a layout with four routes used for testing. The routes are from locations 1 to 2, 2 to 3, 3 to 4, and 4 to 1. The arrows between the locations depict the segments of the routes described by the Building Navigator.

At the beginning of a trial, participants were escorted to the starting location and instructed to face a specific direction. The experimenter then stated their current location, the direction they were facing, and the goal location (e.g., “You are at room N362 facing south. Go to room N349.”). Participants then attempted to find the goal location, using bystander queries when necessary, and indicated when they thought they had arrived. The trial ended when participants correctly found the goal location or gave up. In the technology conditions, participants with visual impairment also ended trials when they felt the system was no longer helpful and chose to rely exclusively on bystander queries. This option was meant to keep these participants, who were on average older than the fully-sighted participants, from becoming too frustrated or tired during the experiment. Each subsequent trial began at the previous goal location.

Participants with visual impairment were allowed to detect intersections with any information they would normally use, including their residual vision, echolocation, cane, or guide dog. Blindfolded sighted participants were much less able to detect intersections, owing to their lack of mobility aids (e.g., a white cane), lack of visual cues, and unfamiliarity with sound cues, so the experimenter told them when they passed an intersection. If participants deviated from the prescribed route, they did not receive a new set of instructions from the Building Navigator; it was up to them to find their way back to the route or to end the trial. A second experimenter timed each trial with a stopwatch, recorded the participant's trajectory through the layout, and noted the location of bystander queries. At the end of the experiment, participants completed a survey asking them to evaluate the technology and to rank the conditions from most to least preferred.

Data Analysis

Wayfinding performance was evaluated using several measures. For each condition, we computed the average number of turns made, distance traveled, and time taken to complete a route. We also measured the average number of bystander queries made in each condition as an indicator of how independently participants could locate the rooms.

The results for participants with full sight and visual impairment were analyzed separately. The dependent measures (number of turns, distance traveled, and travel time) were analyzed using Analyses of Variance (ANOVA) blocked on subject. Because the data were not normally distributed, Box-Cox power transformations were applied. A nonparametric version of the ANOVA was used for the bystander query measure because those data could not be normalized by transformation. For all measures, contrasts comparing the technology conditions with the baseline condition were performed. Bonferroni-corrected pairwise comparisons were also conducted to evaluate whether one of the three distance modes provided by the technology resulted in better performance.
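For readers unfamiliar with the two named procedures, this Python sketch shows the Box-Cox transform for a given exponent λ and the Bonferroni adjustment. It is illustrative only: the paper does not report the λ values or how they were chosen (maximum likelihood is common, and `scipy.stats.boxcox` estimates λ that way when none is supplied), and Box-Cox needs strictly positive data, so counts that can be zero would require a shift first. The paper also does not name its nonparametric test; for a subject-blocked design the Friedman test is the usual analogue.

```python
import math
from typing import List, Sequence


def box_cox(values: Sequence[float], lam: float) -> List[float]:
    """Box-Cox power transform: (x**lam - 1) / lam, or log(x) when lam == 0."""
    if any(v <= 0 for v in values):
        raise ValueError("Box-Cox requires strictly positive data")
    if lam == 0:
        return [math.log(v) for v in values]
    return [(v ** lam - 1.0) / lam for v in values]


def bonferroni(p_values: Sequence[float]) -> List[float]:
    """Adjust p-values for m comparisons: min(1, m * p)."""
    m = len(p_values)
    return [min(1.0, p * m) for p in p_values]
```

With three pairwise comparisons among the distance modes, a raw p of 0.01 becomes 0.03 after adjustment.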

Results

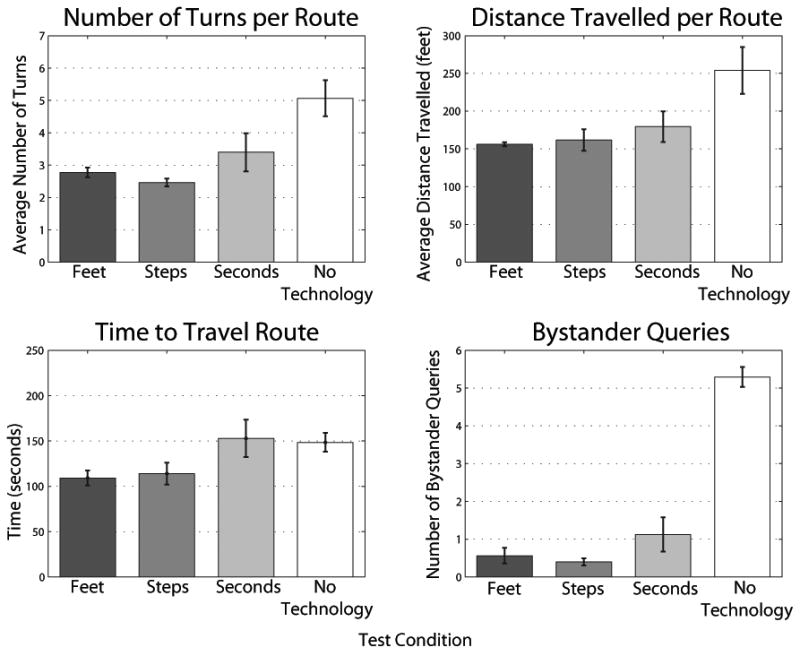

Participants with Full Vision

Participants were able to find 100% of the rooms across conditions. For all measures (Figure 3), the ANOVAs were highly significant (p < 0.004) and performance was significantly better with the technology than in the baseline condition (p < 0.007). For three of the four measures (number of turns made, distance traveled, and number of bystander queries), performance in each distance mode was significantly better than the baseline condition (p < 0.001). When distance was given in feet or steps, participants took significantly less time finding rooms compared to baseline (p < 0.003). There were no significant differences between distance modes for any of the measures. As shown by the median rankings displayed in Table 2, there was no clear preference for a particular distance mode when using the technology.

Figure 3. Route-Finding Performance of Fully-Sighted Participants.

Performance of fully-sighted participants in the route-finding task as measured by the number of turns made, distance traveled, travel time, and number of bystander queries made.

Table 2. Median Participant Preference Rankings of the Four Test Conditions.

Rankings for each condition (1 = most preferred).

| Group | Building Navigator: distance in feet | Building Navigator: distance in steps | Building Navigator: distance in seconds | No technology |
|---|---|---|---|---|
| Full vision | 2.5 | 2 | 3 | 4 |
| Impaired vision | 3 | 1 | 3 | 3 |

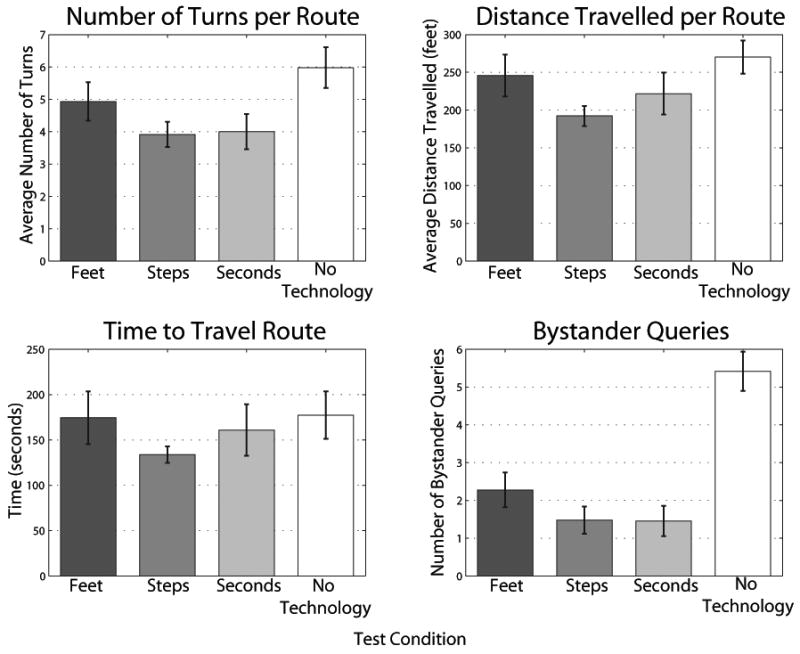

Participants with Visual Impairment

Across all conditions, participants were able to find 93% of the target rooms. ANOVAs revealed significant effects for the number of turns made, distance traveled, and number of bystander queries (p < 0.04) (Figure 4). For these measures, performance was significantly better with the technology compared to the baseline condition (p < 0.02).

Figure 4. Route-Finding Performance of Participants with Visual Impairment.

Performance of participants with visual impairment in the route-finding task as measured by the number of turns made, distance traveled, travel time, and number of bystander queries made.

Participants made significantly fewer bystander queries in all the distance modes compared to the baseline condition (p < 0.001). They made significantly fewer turns with distance in steps and seconds (p < 0.008). When distance was given in steps, they also traveled a significantly shorter distance compared to baseline (p = 0.006). There were no significant differences between distance modes in any of the measures. Table 2 indicates that participants preferred distance in steps when using the technology.

Discussion

The goals of this experiment were to test 1) whether the route-finding technology improved the ability to find rooms in an unfamiliar building, and 2) whether one of the three distance modes (feet, steps or seconds) provided by the technology resulted in better performance. By testing both blindfolded sighted participants and individuals with visual impairment, we also evaluated how visual experience affected the use of the technology. Four measures were used to evaluate route-finding performance: the number of turns made, the distance traveled, the time taken to find a room, and the number of bystander queries.

For fully-sighted participants, use of the technology improved performance on all measures. For participants with visual impairment, the technology improved the ability to take the shortest route to the target room, as indicated by a reduction in the number of turns and the distance traveled. The technology also allowed these participants to navigate more independently, as shown by fewer bystander queries. Unlike for the fully-sighted participants, the technology did not significantly decrease the time needed to complete the routes. Because our participants with visual impairment were older on average, they might have required more time to interact with the technology (e.g., scrolling through the list of waypoints and absorbing the verbal instructions). Compared with fully-sighted participants, participants with visual impairment also had greater difficulty, and thus required more time, when distances were provided in feet or seconds.

The rankings provided by fully-sighted participants in the post-experiment survey did not reflect a strong preference among the distance modes. Participants with visual impairment preferred distance in steps to distance in seconds or feet. These participants also performed better in the route-finding task with distance in steps, as shown by fewer turns and shorter distances traveled. Distance in seconds, although individually calibrated, was not always reliable because walking speed varied, especially for participants with guide dogs. According to participants' comments and observations during testing, some participants with visual impairment did not have a good understanding of distance in feet. The difference in performance between individuals with full vision and impaired vision suggests that visual experience may improve understanding of metric distances, and people with visual impairment may benefit from additional training to use metric distance information effectively. Most participants with visual impairment preferred distance in steps because it was consistently accurate, likely due to the low variability of step length (Mason, et al., 2005), and because they had more control over the counting than when distance was given in seconds.

Step counting has typically been discouraged by mobility instructors. First, step counting requires cognitive effort and may distract travelers from attending to other sources of information in the environment. It is also not feasible to memorize steps for a large number of routes. Furthermore, step counting is not a reliable strategy in changing environments, for example outdoors where objects such as cars may change positions from one day to the next. The participants with visual impairment in our study demonstrated improved performance and a preference for distance in steps because we remedied the limitations of step counting in several ways. In our application, the technology contains the digital map, removing the need for users to learn and memorize the step counts between locations. Therefore, the cognitive burden of step counting is reduced. Also, step counting is more reliable in building environments because the building structure is stable. With these considerations, we believe that providing distances in steps is a viable way for technologies to communicate route information for navigation inside buildings.

Some participants with visual impairment found it useful to know how many intersections they would pass before arriving at a waypoint, while others did not seem to use the information. The usefulness of this information seemed to depend on whether the user could detect intersections using residual vision, echolocation, a cane, or a guide dog. Several participants commented that it was difficult to maintain orientation in allocentric coordinates (North, South, East, West) and that they would have preferred egocentric directions. Indeed, most of the mistakes participants made were turns in the wrong direction when following the route instructions. We expect these orientation issues to be resolved when the Route Mode is integrated with positioning and heading sensors.

In this study, the baseline condition required wayfinding without the Building Navigator technology. This baseline provided participants with access to bystander information at every doorway in the layout. We expect the assistive technology to be even more advantageous in realistic situations when access to bystander information is less reliable.

We conclude that route instructions can improve wayfinding by individuals with visual impairment in unfamiliar buildings. Even without additional positioning sensors, participants with visual impairment were able to successfully follow instructions to locate rooms. Additional findings indicate that distances to waypoints can be conveyed effectively by converting metric distance into an estimate of the number of steps by the user. The preference for step counting to estimate distance and the improved performance with this metric is an important finding for the design of wayfinding technology for people with visual impairment.

Acknowledgments

This study was supported by the U.S. Department of Education (H133A011903) via a subcontract with SenderoGroup LLC, and by NIH grants T32 HD007151 (Interdisciplinary Training Program in Cognitive Science), T32 EY07133 (Training Program in Visual Neuroscience), EY02857, and EY017835.

We would like to thank Joann Wang, Julie Yang, Ameara Aly-youssef, and Ryan Solinsky for their help with data collection and analysis.

References

- Dijkstra EW. A note on two problems in connexion with graphs. Numerische Mathematik. 1959;1:269–271.

- Giudice NA. Navigating novel environments: A comparison of verbal and visual learning [doctoral dissertation]. University of Minnesota; 2004. Dissertation Abstracts International B. 2004;65:6064.

- Giudice NA, Bakdash JZ, Legge GE. Wayfinding with words: Spatial learning and navigation using dynamically-updated verbal descriptions. Psychological Research. 2007;71(3):347–358. doi: 10.1007/s00426-006-0089-8.

- Giudice NA, Legge GE. Blind navigation and the role of technology. In: Helal A, Mokhtari M, Abdulrazak B, editors. The Engineering Handbook on Smart Technology for Aging, Disability and Independence. Hoboken, NJ: John Wiley & Sons, Inc.; 2008. pp. 479–500.

- Golledge RG, Marston JR, Loomis JM, Klatzky RL. Stated preferences for components of a Personal Guidance System for nonvisual navigation. Journal of Visual Impairment & Blindness. 2004;98(3):135–147.

- Holmes E, Jansson G, Jansson A. Exploring auditorily enhanced tactile maps for travel in new environments. New Technologies in the Education of the Visually Handicapped. 1996;237:191–196.

- JVIB. Estimated number of adult braille readers in the United States. Journal of Visual Impairment & Blindness. 1996;90(3):287.

- Loomis JM, Golledge RG, Klatzky RL. GPS-based navigation systems for the visually impaired. In: Barfield W, Caudell T, editors. Fundamentals of Wearable Computers and Augmented Reality. Mahwah, NJ: Lawrence Erlbaum Associates; 2001. pp. 429–446.

- Loomis JM, Golledge RG, Klatzky RL, Marston JR. Assisting wayfinding in visually impaired travelers. In: Allen G, editor. Applied spatial cognition: From research to cognitive technology. Mahwah, NJ: Lawrence Erlbaum Associates; 2007. pp. 179–202.

- Mason SJ, Legge GE, Kallie CS. Variability in the length and frequency of steps of sighted and visually impaired walkers. Journal of Visual Impairment & Blindness. 2005;99:741–754.