Abstract

Objective

The full benefit of bilateral cochlear implants may depend on the unilateral performance with each device, the speech materials, processing ability of the user, and/or the listening environment. In this study, bilateral and unilateral speech performances were evaluated in terms of recognition of phonemes and sentences presented in quiet or in noise.

Design

Speech recognition was measured for unilateral left, unilateral right, and bilateral listening conditions; speech and noise were presented at 0° azimuth. The “binaural benefit” was defined as the difference between bilateral performance and unilateral performance with the better ear.

Study Sample

Nine adults with bilateral cochlear implants participated.

Results

On average, results showed a greater binaural benefit in noise than in quiet for all speech tests. More importantly, the binaural benefit was greater when unilateral performance was similar across ears. As the difference in unilateral performance between ears increased, the binaural advantage decreased; this functional relationship was observed across the different speech materials and noise levels even though there was substantial intra- and inter-subject variability.

Conclusions

The results indicate that subjects who show symmetry in speech recognition performance between implanted ears in general show a large binaural benefit.

Keywords: cochlear implant, speech perception, binaural hearing, signal-to-noise ratio

Introduction

Bilateral cochlear implants (CIs) have been shown to provide a significant advantage for speech perception in noise (Litovsky, Parkinson, & Arcaroli, 2009; van Hoesel & Tyler, 2003), relative to unilateral CIs. When speech and noise are presented from different sources, binaural advantages are driven primarily by head shadow and/or binaural squelch effects (Litovsky, Parkinson, & Arcaroli, 2009; Ramsden et al., 2005). When speech and noise are presented from the same source, the redundancy of information with two ears provides an advantage relative to either ear alone, i.e., the benefit of “binaural redundancy.” Some studies have shown consistent binaural benefits for bilateral CI users’ sentence recognition in noise, while others have shown benefits for only a small subset of CI subjects. The source of variability in binaural benefits is unclear.

In contrast to normal-hearing listeners, each implanted ear may process sounds quite differently because of differences in electrode placement, insertion depth, and/or the number of surviving ganglion cells in each ear. Such “asymmetric” hearing is quite common in hearing-impaired listeners, due to asymmetric binaural hearing losses (Davis & Haggard, 1982; Nabelek, Letowski, & Mason, 1980). The effect of unaided and aided asymmetric hearing on speech recognition with hearing aids has been well documented. A substantial benefit from bilateral amplification has been consistently observed for people with symmetrical unaided and aided hearing thresholds (Davis & Haggard, 1982; Firszt, Reeder, & Skinner, 2008; Gatehouse & Haggard, 1986; Markides, 1977). In contrast, substantial differences in suprathreshold indices (such as most comfortable level and loudness discomfort level) and in hearing loss configuration (flat in one ear and steeply sloping in the other) have been cited as contraindications for binaural amplification (Day et al., 1988; Nabelek, Letowski, & Mason, 1980; Robinson & Summerfield, 1996; Pittman & Stelmachowicz, 2003).

In bilateral CI users, many cases of asymmetric speech performance have been reported (Buss et al., 2008; Dunn et al., 2008; Eapen et al., 2009; Litovsky et al., 2009; Ricketts et al., 2006; Schleich et al., 2004; Tyler et al., 2007; Wackym et al., 2007). In particular, two studies investigated the effect of asymmetric or symmetric unilateral performance on binaural benefit (e.g., Litovsky et al., 2006; Mosnier et al., 2009). Litovsky et al. (2006) grouped 37 simultaneously implanted recipients into two distinct subgroups, based on their speech reception thresholds (SRTs) - defined as the signal-to-noise ratio (SNR) needed for 50% correct whole sentence recognition - when both speech and noise were presented at 0° (directly in front of the listeners). For asymmetric subjects (n=10), the difference in SRT between ears was ≥ 3.1 dB; for symmetric subjects (n=24), the difference in SRT between ears was < 3.1 dB. All of the symmetric subjects showed significant binaural benefits, but none of 10 asymmetric subjects showed significant binaural benefit over the better performing ear.

The impact of asymmetric hearing on binaural CI benefit was recently studied by Mosnier et al. (2009), who tested 27 bilateral CI users using disyllabic word recognition in noise and quiet. Subjects were grouped as “good” (n=19) or “poor” (n=8) performers, according to unilateral performance with the better ear; the mean difference between the good and poor subject groups was 60 percentage points. Subjects were also grouped as asymmetric (≥20 percentage point difference in unilateral performance between ears; n=11) or symmetric (<20 percentage point difference between ears; n=16) performers. The authors found a significant binaural benefit for the symmetric subject group (11 percentage points for good performers and 19 percentage points for poor performers) in quiet, but no significant binaural benefit for the asymmetric subject group. While these two previous studies (Litovsky et al., 2006; Mosnier et al., 2009) demonstrate that binaural benefit may depend on the difference in unilateral performance, the criterion for asymmetric and symmetric performance was not defined consistently across them. The speech materials and listening conditions used to measure performance also differed across studies, further complicating matters.

In all of the studies discussed above, binaural benefit was evaluated using meaningful words (e.g., Buss et al., 2008; Eapen et al., 2009; Laszig et al., 2004; Muller et al., 2002; Zeitler et al., 2008) or sentences (e.g., Dunn et al., 2008; Litovsky et al., 2009; Muller et al., 2002; Ricketts et al., 2006; Schleich et al., 2004; Tyler et al., 2007; van Hoesel & Tyler, 2003; Wackym et al., 2007). As such, listeners may make strong use of contextual cues (e.g., meaning, grammar, prosody, etc.). These cues can increase speech understanding, particularly in noisy conditions, while not necessarily improving speech perception (Boothroyd & Nittrouer, 1988). The binaural benefit observed in previous studies may well depend on the availability of contextual cues. Alternatively, binaural benefit may be demonstrated when contextual cues are diminished. To date, the relationship between bilateral and unilateral performance has not been systematically evaluated using consonants and vowels. Phoneme discrimination depends more strongly on the reception of acoustic features. By comparing binaural benefits for phoneme and sentence recognition tasks, we may gain insight into the factors that may contribute to binaural benefits.

The use of background noise is also common to previous studies’ evaluation of binaural benefit. Many previous studies reported SRTs, i.e., the SNR needed for 50% correct whole sentence recognition (Laszig et al., 2004; Litovsky et al., 2006, 2009; Schleich et al., 2004; van Hoesel & Tyler, 2003; Zeitler et al., 2008). While this criterion level of performance helps to avoid floor or ceiling performance effects, the SRT does not provide information at other criterion levels (e.g., 30% or 80% correct), at which bilateral and unilateral performance may differ (and to different degrees). Indeed, the SRT metric may not quantify binaural benefit well if the performance-intensity (PI) functions are not parallel between bilateral and unilateral listening conditions. Due to these constraints, other studies have used fixed SNRs to evaluate binaural benefits (Buss et al., 2008; Eapen et al., 2009; Muller et al., 2002; Ramsden et al., 2005; Wackym et al., 2007; Tyler et al., 2007). While using fixed SNRs allows bilateral and unilateral performance to be compared at relevant points along the PI function, it is often difficult to select appropriate SNRs because of floor or ceiling effects and the large variability in CI performance. To avoid ceiling effects, Gifford, Shallop, & Peterson (2008) suggested using more difficult materials when assessing speech perception performance in quiet for both pre- and post-implant patients. In some previous studies, binaural benefit data were excluded when performance was <20% correct or >80% correct with the poor ear alone to avoid floor and ceiling effects (Buss et al., 2008; Eapen et al., 2009; Ramsden et al., 2005; Tyler et al., 2007). To address the weaknesses associated with SRTs and fixed SNRs, some studies have used a relatively wide range of fixed SNRs (Mosnier et al., 2009; Ricketts et al., 2006). Using a range of fixed SNRs is necessary to determine whether binaural benefits are constant across noise levels or SNR-specific.
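The concern about non-parallel PI functions can be illustrated with a minimal sketch. It assumes logistic PI functions with made-up SRTs and slopes; none of these parameters come from the present data, and `pi_function` and `benefit_at` are hypothetical helper names.

```python
import math


def pi_function(snr_db, srt_db, slope):
    """Logistic PI function: percent correct at a given SNR (50% at the SRT)."""
    return 100.0 / (1.0 + math.exp(-slope * (snr_db - srt_db)))


def benefit_at(snr_db):
    """Bilateral minus unilateral score (percentage points) at one fixed SNR."""
    # Hypothetical parameters: the bilateral SRT is 2 dB lower and its slope is steeper.
    bilateral = pi_function(snr_db, srt_db=3.0, slope=0.5)
    unilateral = pi_function(snr_db, srt_db=5.0, slope=0.3)
    return bilateral - unilateral


# The SRT difference is a single number (2 dB), but the benefit in
# percentage points changes with the SNR at which it is measured.
for snr in (0.0, 5.0, 10.0):
    print(f"SNR {snr:+.0f} dB: benefit {benefit_at(snr):.1f} points")
```

With these assumed parameters, the two functions cross near 0 dB SNR, so a fixed-SNR test at that level would show essentially no benefit even though the SRTs differ.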

The previous studies show that binaural benefits in CI users have been evaluated using context-heavy speech materials, and at noise levels that are difficult to compare across studies. The criteria used to define asymmetric and symmetric performance have also varied with the test conditions across studies. Because of these differences, the factors contributing to the binaural benefit shown in some subjects remain unclear. In this study, we measured vowel, consonant, and sentence recognition in quiet and over a wide range of fixed SNRs; performance was compared across bilateral and unilateral listening conditions. By testing both phonemes and sentences, we were able to see the influence of contextual cues on binaural benefit. By testing with a wide range of SNRs, we were able to accommodate the variability in CI performance and to see whether binaural benefit was SNR-specific.

Methods

Subjects

Nine bilateral CI users (all post-lingually deafened and sequentially implanted) participated in the study. Subjects were native speakers of American English. Eight subjects were between 55 and 82 years old, and one subject was 22 years old. Four of the nine subjects had used their second implant for six months, four had used it for less than 5 years, and one had used it for 7 years. Subject demographics are shown in Table 1. All subjects provided informed consent, and all procedures were approved by the local Institutional Review Board. Bilateral CI users were recruited with no particular consideration of implantation method (sequential or simultaneous), age at implantation, duration of profound deafness, length of bilateral experience, etiology, CI type, CI configuration, or processing strategy, because no significant difference in binaural benefit has been reported for these variables (Laszig et al., 2004; Ramsden et al., 2005).

Table 1.

CI subject demographics. SPEAK=Spectral PEAK, ACE=Advanced Combination Encoder, CIS=Continuous Interleaved Sampling, and HiRes=High Resolution. Additional SNRs used for consonant discrimination are given in the first column.

| ID (age, gender) (additional SNRs, dB) | Ear | Years with CI | Etiology | Device, processor, strategy | Acoustic level, dB(A) SPL |
|---|---|---|---|---|---|
| S1 | Good | 19 | Ototoxicity | Nucleus 24, Freedom, ACE | 70 |
| (63, F) | Poor | 7 | Ototoxicity | Nucleus 24, Freedom, ACE | 70 |
| (0, 15, 20) | Both | 7 | | | 70 |
| S2 | Good | 2 | Unknown | Nucleus 24, Freedom, SPEAK | 65 |
| (58, M) | Poor | 15 | Unknown | Nucleus 22, Freedom, SPEAK | 70 |
| (0) | Both | 2 | | | 65 |
| S3 | Good | 2 | Unknown | Nucleus 22, Freedom, ACE | 65 |
| (56, F) | Poor | 14 | Unknown | Nucleus 22, Freedom, SPEAK | 70 |
| (15, 20, 25) | Both | 2 | | | 65 |
| S4 | Good | 3 | Ototoxicity | C2, Harmony, HiRes | 65 |
| (59, F) | Poor | 15 | Ototoxicity | C2, Clarion, CIS | 65 |
| (0) | Both | 3 | | | 65 |
| S5 | Good | 17 | Hereditary | Nucleus 22, Freedom, ACE | 65 |
| (82, F) | Poor | 0.5 | Hereditary | Nucleus 24, Freedom, ACE | 70 |
| (15, 20, 25) | Both | 0.5 | | | 65 |
| S6 | Good | 8 | Hereditary | C2, Harmony, HiRes | 70 |
| (72, F) | Poor | 0.5 | Hereditary | C2, Harmony, HiRes | 70 |
| (0, 15, 20) | Both | 0.5 | | | 65 |
| S7 | Good | 21 | Otosclerosis | Nucleus 22, Freedom, ACE | 65 |
| (76, F) | Poor | 0.5 | Otosclerosis | Nucleus, system 5, ACE | 70 |
| | Both | 0.5 | | | 65 |
| S8 | Good | 14 | Hereditary | Nucleus 22, Freedom, ACE | 65 |
| (22, M) | Poor | 0.5 | Hereditary | Nucleus 22, Freedom, ACE | 65 |
| | Both | 0.5 | | | 65 |
| S9 | Good | 4 | Hereditary | C2, Harmony, HiRes | 65 |
| (53, F) | Poor | 3 | Hereditary | C2, Harmony, HiRes | 65 |
| | Both | 3 | | | 65 |

Stimuli and testing

Consonant discrimination was measured in quiet and in noise at +5 dB and +10 dB SNR. For six of the nine subjects, consonant discrimination was measured at three additional SNRs (see Table 1). Medial consonants included /b/, /d/, /g/, /p/, /t/, /k/, /m/, /n/, /f/, /s/, /ʃ/, /v/, /ð/, /z/, /ʤ/, and /tʃ/, presented in an /a/-consonant-/a/ context and produced by 5 male and 5 female talkers (Shannon et al., 1999). Presentation was balanced across talker gender. Tokens and talkers were randomized at each SNR. Each consonant syllable was presented 10 times per run, once by each talker, for a total of 20 presentations (10 talkers × 2 runs) at each SNR. Consonant discrimination was measured using a 16-alternative, forced-choice (16AFC) paradigm. During testing, a stimulus was randomly selected (without replacement) from the stimulus set. The subject responded by pressing one of the 16 response buttons, each labeled in an /a/-consonant-/a/ context. No training or trial-by-trial feedback was provided during testing.
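The randomization described above can be sketched as follows. This is a minimal illustration rather than the software used in the study: the ASCII consonant labels, the talker IDs, and the function name `build_run` are all hypothetical.

```python
import random

# ASCII stand-ins for the 16 medial consonants (illustrative labels only).
CONSONANTS = ["b", "d", "g", "p", "t", "k", "m", "n",
              "f", "s", "sh", "v", "dh", "z", "j", "ch"]
# Hypothetical talker IDs: 5 male (M1-M5) and 5 female (F1-F5).
TALKERS = [f"M{i}" for i in range(1, 6)] + [f"F{i}" for i in range(1, 6)]


def build_run(seed=None):
    """Return one run: each consonant produced once by each talker, in random order."""
    rng = random.Random(seed)
    trials = [(consonant, talker) for consonant in CONSONANTS for talker in TALKERS]
    # Presenting a shuffled list is equivalent to sampling without replacement.
    rng.shuffle(trials)
    return trials


run = build_run(seed=1)
# Percent correct expected from guessing among 16 alternatives.
chance_level = 100.0 / len(CONSONANTS)
print(len(run), "trials per run; chance level =", chance_level, "%")
```

Two such runs at each SNR give the 20 presentations per consonant described above; with 16 response alternatives, chance performance is 6.25% correct.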

Vowel discrimination was measured in quiet and in noise at +5 dB and +10 dB SNR. Medial vowels included 10 monophthongs (/i/, /ɪ/, /ɛ/, /æ/, /u/, /ʊ/, /ɑ/, /ʌ/, /ɔ/, /ɝ/) and 2 diphthongs (/əʊ/, /eɪ/), presented in an /h/-vowel-/d/ context (‘heed’, ‘hid’, ‘head’, ‘had’, ‘who’d’, ‘hood’, ‘hod’, ‘hud’, ‘hawed’, ‘heard’, ‘hoed’, ‘hayed’) and produced by 5 male and 5 female talkers (Hillenbrand et al., 1995). Presentation was balanced across talker gender. The order of tokens and talkers was randomized at each SNR. Each vowel syllable was presented 20 times (10 talkers × 2 runs) at each SNR. Vowel discrimination was measured using a 12-alternative, forced-choice (12AFC) paradigm. During testing, a stimulus was randomly selected (without replacement) from the stimulus set. The subject responded by pressing one of the 12 response buttons, each labeled in an /h/-vowel-/d/ context. No training or trial-by-trial feedback was provided during testing.

Sentence recognition was measured in quiet and in noise at 0 dB, +5 dB, and +10 dB SNR, using hearing-in-noise test (HINT) sentences (Nilsson et al., 1994). The HINT sentences consist of 26 lists of ten sentences each, produced by a male talker; the level of difficulty of the HINT sentences is moderate. During testing, a sentence list was selected (without replacement), and sentences were randomly selected from within the list (without replacement) and presented to the subject, who repeated the sentence as accurately as possible. The experimenter calculated the percentage of words correctly identified in the sentences. Each listening condition was tested three times with different sets of sentences (30 sentences in total) at each SNR. No training or trial-by-trial feedback was provided during testing.

Consonant, vowel, and sentence recognition were measured under three CI listening conditions: unilateral left, unilateral right, and bilateral. For testing in noise, speech-shaped steady noise (1000-Hz cutoff frequency, −12 dB/oct) was used. The SNR was calculated in terms of the long-term RMS of the speech signal and noise. Speech and noise were mixed at the target SNR, and the combined signal was then scaled to the most comfortable level in dB(A) sound pressure level (Fluke True RMS voltmeter). The combined signal was delivered via an audio interface (Edirol UA 25) and amplifier (Crown D75A) to a single loudspeaker (Tannoy Reveal). Subjects were seated in a double-walled, sound-treated booth (IAC), directly facing the loudspeaker (0° azimuth) one meter away. Subjects were tested using their clinical speech processors and settings. The output level of the amplifier was adjusted independently for each CI condition (left CI alone, right CI alone, and both) and corresponded to “most comfortable” loudness according to the Cox loudness rating scale (Cox, 1995), in response to 10 sentences in quiet. The output levels for each subject and CI condition are listed in Table 1.
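One way to mix speech and noise at a target SNR defined on long-term RMS is sketched below. The toy signals and the function names (`rms`, `mix_at_snr`, `measured_snr_db`) are assumptions for illustration; the subsequent scaling of the mixture to each subject's most comfortable level is not modeled.

```python
import math
import random


def rms(samples):
    """Long-term root-mean-square amplitude of a sequence of samples."""
    return math.sqrt(sum(s * s for s in samples) / len(samples))


def measured_snr_db(speech, noise):
    """SNR in dB computed from the long-term RMS of speech and noise."""
    return 20.0 * math.log10(rms(speech) / rms(noise))


def mix_at_snr(speech, noise, target_snr_db):
    """Scale the noise to reach the target SNR, then add it to the speech.

    Returns the mixture and the gain that was applied to the noise.
    """
    gain = rms(speech) / (rms(noise) * 10.0 ** (target_snr_db / 20.0))
    mixture = [s + gain * n for s, n in zip(speech, noise)]
    return mixture, gain


# Toy signals standing in for real recordings (assumption: equal length).
rng = random.Random(0)
speech = [math.sin(2.0 * math.pi * 220.0 * t / 16000.0) for t in range(16000)]
noise = [rng.uniform(-1.0, 1.0) for _ in range(16000)]

mixed, gain = mix_at_snr(speech, noise, target_snr_db=5.0)
```

Scaling the noise rather than the speech keeps the speech level fixed across SNRs; either choice yields the same SNR once the mixture is rescaled to a common presentation level.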

For each subject, unilateral performance was categorized in terms of a “good ear” and a “poor ear”. The good ear was defined as the ear with the higher performance score for HINT sentences (30 sentences for each SNR) averaged over SNRs including quiet condition. This categorization remained true for consonant and vowel discrimination as well. Throughout this article, “binaural benefit” refers to the difference between bilateral performance and unilateral performance with the good ear.
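These two definitions can be expressed as a short sketch. The scores below are made up for illustration, the function names are hypothetical, and the handling of an exact tie between ears (left ear chosen) is an assumption not addressed in the text.

```python
def mean(values):
    return sum(values) / len(values)


def good_ear(left_hint, right_hint):
    """Pick the ear with the higher HINT score averaged over all conditions."""
    # Assumption: ties go to the left ear.
    return "left" if mean(left_hint) >= mean(right_hint) else "right"


def binaural_benefit(bilateral, good):
    """Bilateral minus good-ear score (percentage points), per condition."""
    return [b - g for b, g in zip(bilateral, good)]


# Hypothetical HINT scores (% words correct) in quiet, +10, +5, and 0 dB SNR.
left = [95.0, 80.0, 60.0, 30.0]
right = [90.0, 70.0, 45.0, 20.0]
both = [96.0, 88.0, 70.0, 33.0]

ear = good_ear(left, right)
benefit = binaural_benefit(both, left if ear == "left" else right)
print(ear, benefit)
```

Because the good ear is fixed per subject from the averaged HINT scores, the benefit at an individual condition can be negative if the poor ear happens to score higher there.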

Results

Speech Recognition Performance

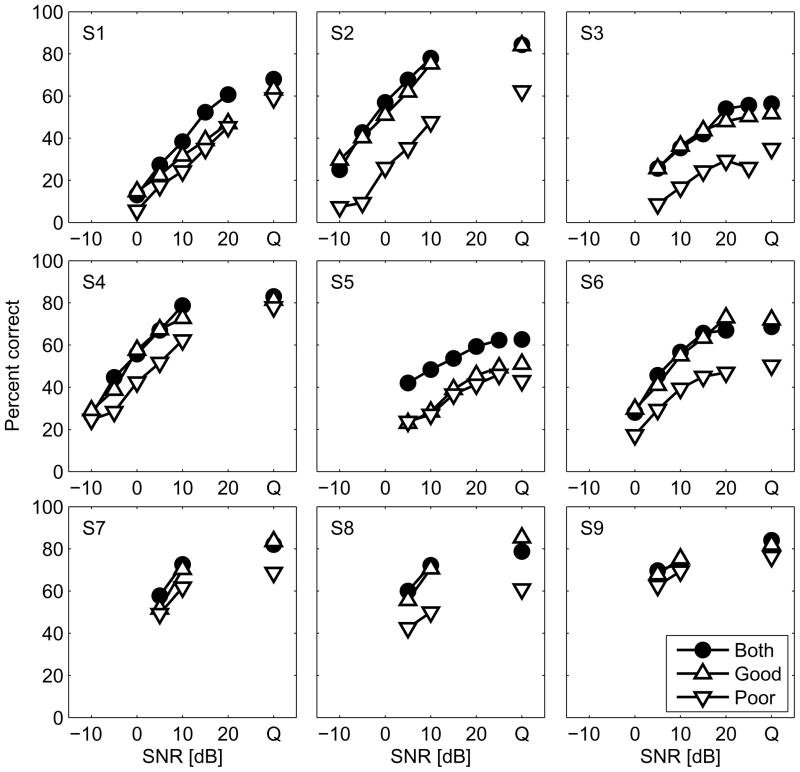

Figure 1 shows individual consonant discrimination scores for both ears, the good ear, and the poor ear as a function of SNR. Note that for subjects S1 – S6, consonant discrimination was measured over a wider range of SNRs. In general, binaural performance was similar to unilateral performance with the good ear. Only two of the nine subjects (S1 and S5) exhibited substantial binaural benefit; for these subjects, the difference between both unilateral performances was small. In seven of the nine subjects, the binaural benefit was almost nonexistent, and the difference in unilateral performance between the good and poor ear was larger than 15 percentage points across SNRs.

Figure 1.

Individual consonant discrimination performance (percent correct) as a function of SNR with unilateral good ear, unilateral poor ear, and both ears.

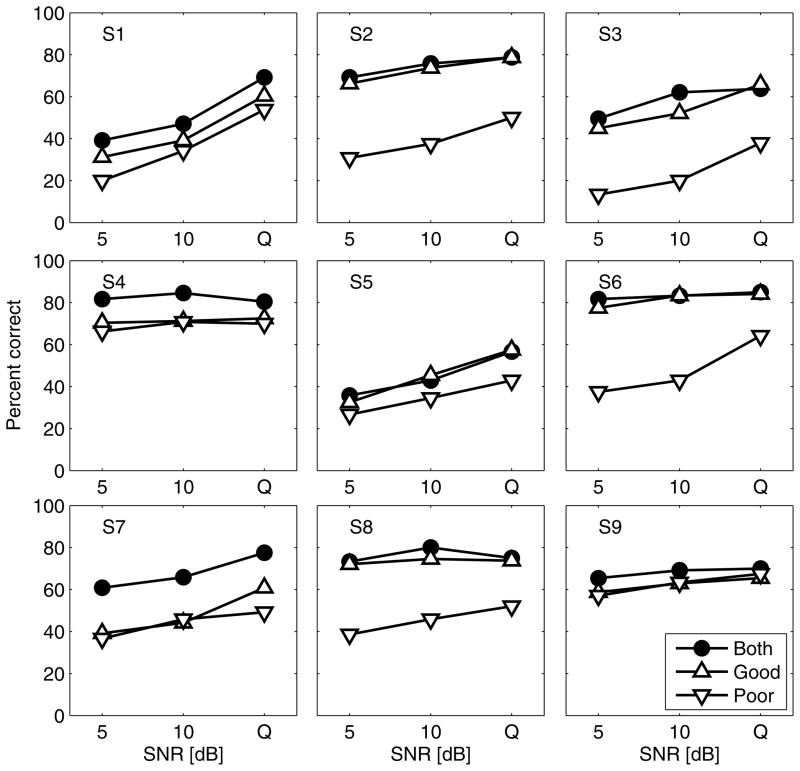

Figure 2 shows individual vowel discrimination scores for both ears, the good ear, and the poor ear as a function of SNR. Similar to consonant discrimination (Fig. 1), the binaural benefit, if any, was small for most subjects. Substantial binaural benefits were observed for two subjects (S4 and S7); for these subjects, the difference between the two unilateral performances was small. Another subject (S1) exhibited a binaural benefit, albeit smaller than that for subjects S4 and S7; unlike for subjects S4 and S7, there was a difference between the two unilateral performances. The subjects who exhibited binaural benefits for consonant discrimination (S1 and S5) were not the subjects who exhibited large binaural benefits for vowel discrimination (S4 and S7). The difference between the two unilateral performances was large for four of the subjects (S2, S3, S6, and S8); for these subjects, binaural benefits were consistently minimal across SNRs.

Figure 2.

Individual vowel discrimination performance (percent correct) as a function of SNR with the good ear alone, the poor ear alone, and both ears.

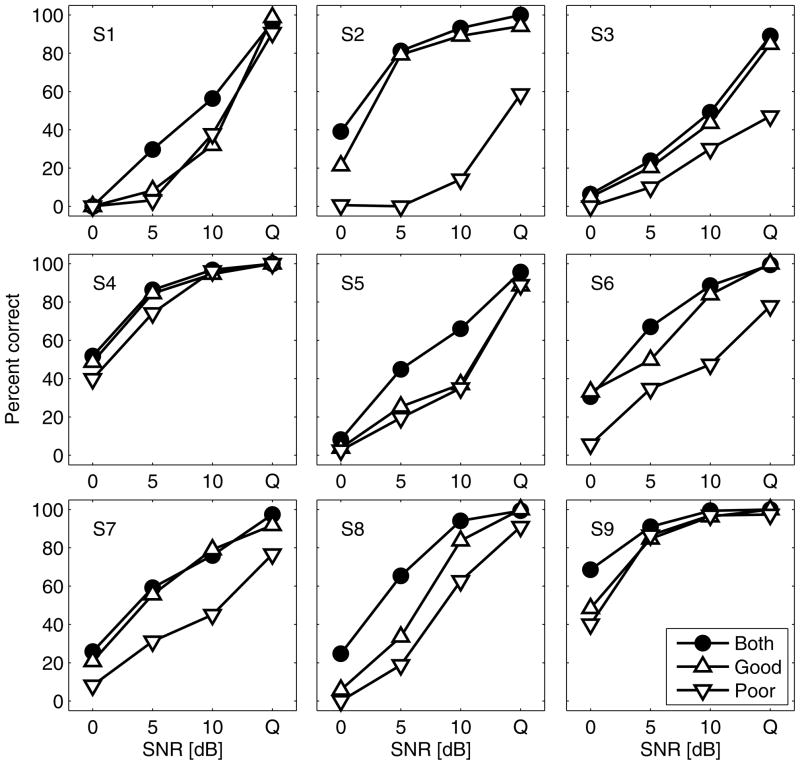

Figure 3 shows HINT sentence recognition as a function of SNR for both ears, the good ear, and the poor ear. As with consonant (Fig. 1) and vowel discrimination (Fig. 2), binaural benefits were observed in a subset of subjects (i.e., S1, S5, S6, S8, S9), and then only at some SNRs, which differed across subjects. For example, subjects S1, S5, and S8 exhibited binaural benefits at the +5 dB and +10 dB SNRs, while subjects S6 and S9 exhibited binaural benefits at the +5 dB and 0 dB SNRs. In all these cases, the difference between the two unilateral performances was small. Some subjects did not show improvement in the binaural condition at certain SNRs. For example, subjects S1, S4, S5, S8, and S9 showed ceiling effects in quiet for all listening conditions, in which case any improvement in the binaural condition would not be apparent, particularly for S1 and S5. Subjects S1 and S5 showed no improvement in the binaural condition at 0 dB SNR; however, this may reflect floor effects for these individuals. Three subjects (S6, S8, and S9) who exhibited no binaural benefit for consonant or vowel discrimination did exhibit a benefit for sentence recognition.

Figure 3.

Sentence recognition scores in percent correct as a function of SNR for individual subjects in unilateral and binaural listening conditions.

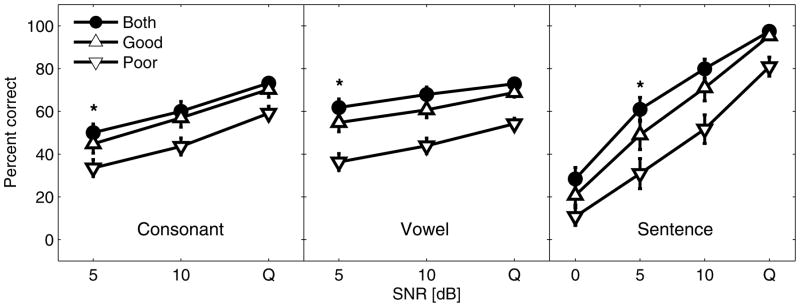

Figure 4 shows group mean speech performance and standard errors for consonant (left panel, n=9), vowel (middle panel, n=9), and sentence (right panel, n=9) for both ears, the good ear, and the poor ear, as a function of SNR. There was a small, but consistent binaural benefit across speech materials and SNR conditions. Specifically, binaural benefit in noise (averaged across two SNRs) was 4%, 7%, and 10%, but 3%, 4%, and 2% in quiet for consonant, vowel, and sentence, respectively. The magnitude of the binaural benefit was slightly larger in noise than in quiet for all speech test materials. Performance difference between good and poor ears averaged across two SNRs was 12%, 17.5%, and 16% for consonant, vowel, and sentence, respectively. In quiet, these interaural performance differences were similar to those in noise.

Figure 4.

Group means and standard errors for consonant (left panel), vowel (middle panel), and sentence (right panel) recognition scores. The asterisks show significant difference in performance between both ears and good ear alone (p< 0.05).

A two-way repeated-measures analysis of variance with SNR (+5 dB, +10 dB, and quiet) and CI listening condition (good ear, poor ear, both ears) as factors showed a significant main effect of SNR for consonant [F(2,32)=51.9, p<0.001], vowel [F(2,32)=19.73, p<0.001], and sentence recognition [F(3,48)=75.6, p<0.001], and a significant main effect of CI listening condition for consonant [F(2,32)=31.1, p<0.001], vowel [F(2,32)=19.8, p<0.001], and sentence recognition [F(2,48)=15.58, p<0.001]. There were no significant interactions for any of the speech tests (p>0.05). Post-hoc pair-wise comparisons (Sidak method) showed that a significant binaural benefit occurred only at the +5 dB SNR for all three speech test materials (p<0.05).

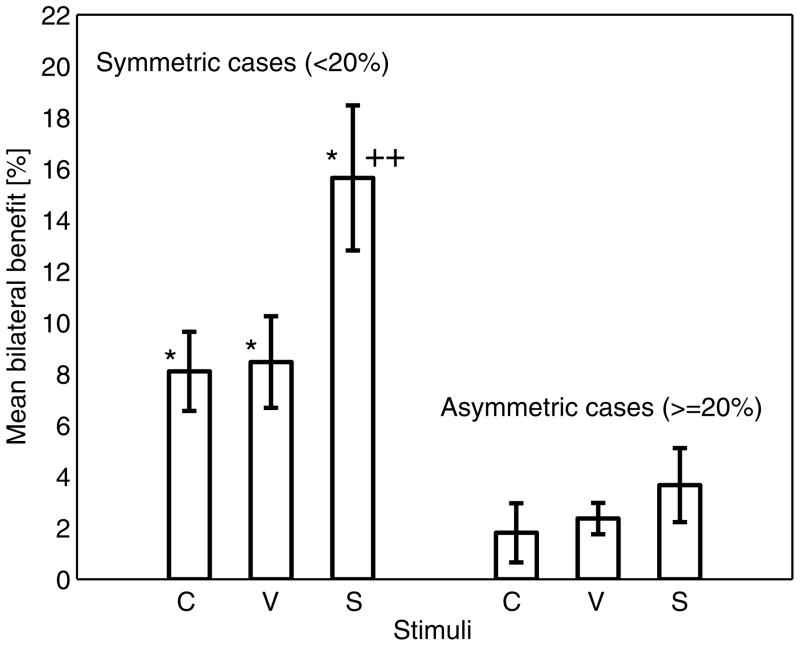

Functional Relationship between Binaural Advantage and Difference in Unilateral Performance

Unilateral performance for each speech measure was further categorized as being symmetric (<20 point difference between ears) or asymmetric (≥20 point difference between ears), as in Mosnier et al. (2009). Note that within the same subject, performance might be symmetric for one speech test and asymmetric for another. Figure 5 shows the mean binaural benefit for symmetric and asymmetric performance cases, as a function of speech measure (C: consonant; V: vowel; S: sentence). Binaural benefits were calculated for all available SNR conditions (note that some subjects were tested at additional SNRs for consonant discrimination). However, to avoid floor and ceiling effects, binaural benefit data were excluded when performance was <20% correct or >80% correct with the poor ear alone (Buss et al., 2008; Eapen et al., 2009; Ramsden et al., 2005; Tyler et al., 2007). That is, there were originally 45 data points for consonant perception [(6 subjects × 6 SNRs) + (3 subjects × 3 SNRs)], 27 points for vowel perception (9 subjects × 3 SNRs) and 36 data points (9 subjects × 4 SNRs) for sentence recognition. After exclusion, the numbers of data points used in the analyses were reduced to 36 (24 for symmetric case and 12 for asymmetric case), 26 (16 for symmetric case and 10 for asymmetric case), and 22 (14 for symmetric case and 8 for asymmetric case) data points for consonants, vowels, and sentences, respectively.
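The exclusion rule and the symmetric/asymmetric split can be sketched as a single function applied to each data point. The function name `classify` and the example scores are hypothetical; the 20-point criterion and the 20% and 80% poor-ear limits are those stated above.

```python
def classify(good, poor, bilateral, criterion=20.0, floor=20.0, ceiling=80.0):
    """Classify one data point (scores in percent correct).

    Returns None if the point is excluded for floor or ceiling effects,
    otherwise a (label, binaural_benefit) pair.
    """
    # Exclusion is based on the poor ear alone: <20% or >80% correct.
    if poor < floor or poor > ceiling:
        return None
    # Symmetric: <20-point difference between ears; asymmetric: >=20 points.
    label = "symmetric" if (good - poor) < criterion else "asymmetric"
    return label, bilateral - good


# Hypothetical data points: (good ear, poor ear, both ears).
points = [(70.0, 60.0, 82.0), (75.0, 40.0, 77.0), (30.0, 10.0, 31.0), (95.0, 90.0, 96.0)]
results = [classify(g, p, b) for g, p, b in points]
print(results)

# Number of consonant data points before exclusion, as described in the text.
n_consonant = 6 * 6 + 3 * 3
```

Because the classification is applied per data point rather than per subject, the same listener can contribute to the symmetric group for one test and to the asymmetric group for another.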

Figure 5.

Mean binaural benefits and standard errors for each speech test for symmetric cases (difference in performance between the two ears < 20 percentage points) and asymmetric cases (performance difference ≥ 20 percentage points). The left three bars show the results for symmetric cases for each stimulus type (C: consonant; V: vowel; S: sentence), while the right three bars show the results for asymmetric cases. Asterisks indicate a significant difference between symmetric and asymmetric cases for each matched stimulus type, while the double-plus symbol indicates a significant difference between sentences and phonemes in symmetric cases.

For the symmetric case, the mean binaural benefit was 8.1, 8.4, and 15.6 percentage points for consonants, vowels, and HINT sentences, respectively. For the asymmetric case, the mean binaural benefit was 1.8, 2.3, and 3.6 points for consonants, vowels, and HINT sentences, respectively. For both cases, the binaural benefit was larger for sentence recognition than for phoneme discrimination. The mean benefits for symmetric cases were significantly higher than those for asymmetric cases (asterisk symbols) for each corresponding speech material (paired t-test, p<0.05). For symmetric cases, the benefit for sentences was significantly larger than that for phonemes (double-plus symbol; paired t-test, p<0.05), while for asymmetric cases, none of the mean binaural advantages differed significantly from the others (paired t-test, p>0.05).

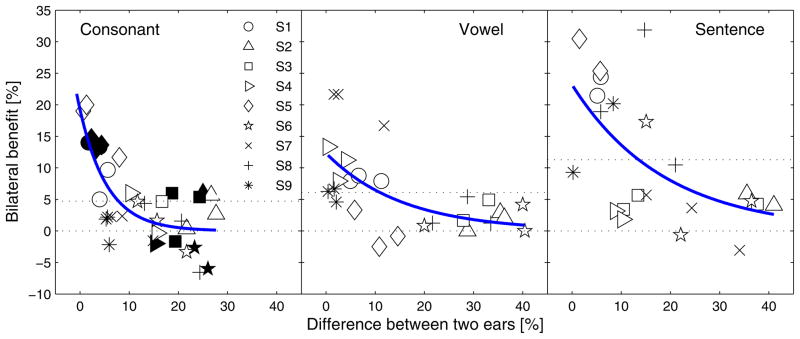

To further quantify this functional relationship, individual subject data were plotted across SNRs for each test material, along with the mean binaural advantage, as shown in Fig. 6. As above, to avoid floor and ceiling effects, binaural benefit data were excluded when performance was <20% correct or >80% correct with the poor ear alone. Additional consonant data measured at the wider range of SNRs were included as well and are indicated by filled symbols (Fig. 6, left panel). As for Fig. 5, 36, 26, and 22 data points (out of 45, 27, and 36 original data points) remained after exclusion for consonant, vowel, and sentence recognition, respectively. The mean binaural benefit was 4.7, 6.1, and 11.3 percentage points for consonants, vowels, and sentences, respectively. Binaural benefits were greater than 10 percentage points when the difference between the two unilateral performances was small, i.e., less than 10, 12, and 16 percentage points for consonants, vowels, and sentences, respectively.

Figure 6.

Binaural benefit function for each speech material. The ordinate indicates the difference in performance between the binaural condition and the better ear alone, while the abscissa indicates the difference in performance between the two ears. Data for each subject are indicated by different symbols, and consonant data points measured at additional SNRs are indicated by filled symbols. The exponential decay fit is shown as a solid line, and the mean binaural benefit and 0% benefit are indicated by dotted lines.

Binaural Benefit Function for Speech Test Material

This functional trend was similar across speech materials. Two subjects (S1 and S5) received binaural benefits greater than 10 percentage points for consonant discrimination (Fig. 6, left panel), while two other subjects (S4 and S7) received benefits greater than 10 percentage points for vowel discrimination. For sentence recognition (Fig. 6, right panel), three additional subjects (S6, S8, and S9), along with S1 and S5, received benefits greater than 10 percentage points. Mean binaural benefits did not differ significantly between consonants and vowels (paired t-test, p>0.05) or between vowels and sentences (paired t-test, p>0.05), but differed significantly between consonants and sentences (paired t-test, p<0.05). This result suggests some effect of context, even though the functional relationship holds across test materials.

The functions shown in Fig. 6 were fit with an exponential decay function, $f = ae^{-bx}$, where x is the difference between the two unilateral performances, f is the binaural benefit, a is the intercept, and b is the decay constant. For each test material, the difference between the two unilateral performances (abscissa) and the binaural benefit (ordinate) were entered listener by listener. Table 2 shows the goodness of fit (R²) along with the coefficients (b = 0.18 for consonants, 0.06 for vowels, and 0.05 for sentences) and significance levels (p<0.05) for each speech measure. The difference between the two unilateral performances accounted for 74%, 68%, and 71% of the variance in binaural benefit for consonants, vowels, and sentences, respectively.

Table 2.

Fitting coefficients, significance levels, and goodness of fit (R²). An exponential decay function was used for all conditions.

| Condition | a, p value | b, p value | R² |
|---|---|---|---|
| Consonants | 19.3, p<0.0001 | 0.18, p<0.0001 | 0.74 |
| Vowels | 12.3, p<0.0001 | 0.06, p<0.02 | 0.68 |
| Sentences | 23.2, p<0.0001 | 0.05, p<0.01 | 0.71 |
| +5 dB SNR | 12.4, p<0.0001 | 0.03, p>0.05 | 0.54 |
| +10 dB SNR | 23.4, p<0.0001 | 0.14, p<0.001 | 0.72 |
| Quiet | 7.56, p<0.01 | 0.06, p>0.05 | 0.43 |
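To make the fitted curves concrete, the sketch below evaluates the exponential decay function with the coefficients reported for the three speech materials. It does not refit the data; the function names are hypothetical, and the "half-benefit difference" is a derived quantity introduced here for illustration only.

```python
import math

# (a, b) coefficients for the three speech materials, as reported in Table 2.
FITS = {
    "consonants": (19.3, 0.18),
    "vowels": (12.3, 0.06),
    "sentences": (23.2, 0.05),
}


def predicted_benefit(material, difference):
    """Fitted binaural benefit (points) at a given interaural difference (points)."""
    a, b = FITS[material]
    return a * math.exp(-b * difference)


def half_benefit_difference(material):
    """Interaural difference at which the fitted benefit falls to a/2, i.e. ln(2)/b."""
    _, b = FITS[material]
    return math.log(2.0) / b


for material in FITS:
    print(material,
          round(predicted_benefit(material, 20.0), 2),
          round(half_benefit_difference(material), 1))
```

Under these fits, the predicted benefit halves for every ln(2)/b points of interaural difference, which is roughly 3.9, 11.6, and 13.9 percentage points for consonants, vowels, and sentences, respectively. These values describe the fitted curves only and should be read alongside the scatter in the underlying data.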

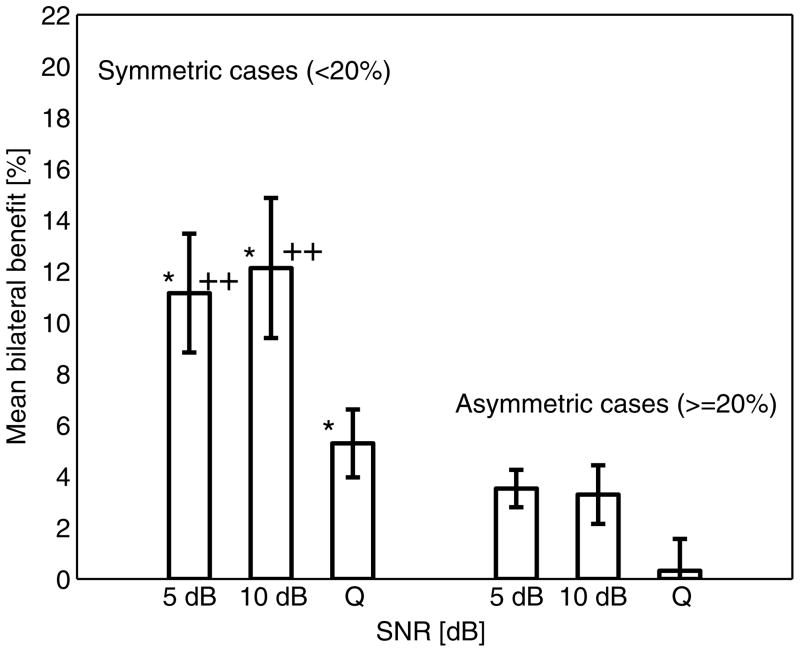

Binaural Benefit Function for SNR

Similar to Fig. 5, the mean binaural benefit for symmetric and asymmetric performance cases is shown in Fig. 7 as a function of SNR. Symmetric and asymmetric performance was defined as above. Note that within the same subject, performance might be symmetric at one SNR and asymmetric at another. Binaural benefits were calculated for all available speech tests. As above, to avoid floor and ceiling effects, binaural benefit data were excluded when performance was <20% correct or >80% correct with the poor ear alone. Because only data points measured at the three SNRs common to all speech materials were included in the analyses, the total number of data points was reduced from 84 to 68; 14 data points measured at additional SNRs for consonants and 2 data points measured at 0 dB SNR for sentences were excluded. Consequently, the final numbers of data points included in the analysis were 22 (17 symmetric and 5 asymmetric), 23 (14 symmetric and 9 asymmetric), and 23 (14 symmetric and 9 asymmetric) for +5 dB SNR, +10 dB SNR, and quiet, respectively. For the symmetric case, the mean binaural benefit was 11.1 percentage points for +5 dB SNR, 12.1 points for +10 dB SNR, and 5.3 points for quiet. For the asymmetric case, the mean binaural benefit was 3.5 percentage points for +5 dB SNR, 3.3 points for +10 dB SNR, and 0.3 points for quiet. The mean benefits for symmetric cases were significantly higher than those for asymmetric cases at each matched SNR (paired t-test, p<0.05). For the symmetric cases, the benefit measured in noise was significantly larger than the benefit in quiet (paired t-test, p<0.05). For the asymmetric cases, none of the mean binaural advantages differed significantly from the others (paired t-test, p>0.05).

Figure 7.

Mean binaural benefits and standard errors for each SNR. The left three bars show the results of symmetric cases for three SNRs, while right three bars show the results of asymmetric cases. Asterisk symbols represent significant difference between symmetrical and asymmetrical scores for each matched SNR, while double-plus symbol represents significant difference between noise and quiet in symmetric cases.

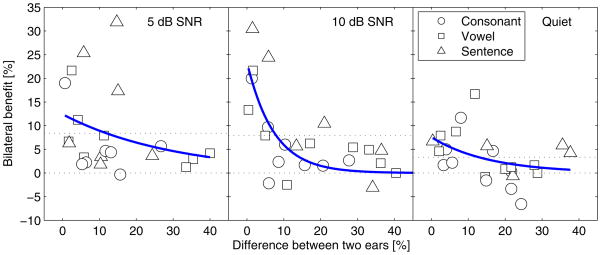

Similar to Fig. 6, binaural benefit for the +5 dB SNR, +10 dB SNR, and quiet listening conditions is shown in Fig. 8 as a function of the unilateral performance difference. Within each panel, individual subject data are shown for the different speech tests. As above, to avoid floor and ceiling effects, binaural benefit data were excluded when performance was <20% correct or >80% correct with the poor ear alone. As for the analysis in Fig. 7, the total numbers of data points included were 22, 23, and 23 for the +5 dB SNR, +10 dB SNR, and quiet conditions, respectively. The mean binaural benefit was 8.5, 7.9, and 3.1 percentage points for the +5 dB SNR, +10 dB SNR, and quiet listening conditions, respectively. Binaural benefits were greater than 10 percentage points when the difference between the two unilateral performances was less than 15 percentage points. The functions shown in Fig. 8 were again fit with an exponential decay function. The functional relationship was significant at +10 dB SNR (b=0.14, p<0.001), but not at +5 dB SNR (b=0.03, p>0.05) or in quiet (b=0.06, p>0.05). The goodness of fit (R²) along with the coefficients and significance levels for each SNR condition are shown in Table 2. The difference between the two unilateral performances accounted for 54%, 72%, and 43% of the variance for the +5 dB SNR, +10 dB SNR, and quiet listening conditions, respectively.

Figure 8.

Binaural benefit function at each SNR. Each speech test is indicated by a different symbol. The solid line shows the exponential decay fit, while the dotted lines show the mean binaural benefit and no benefit.
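As an illustration of how a decay constant b and a goodness of fit R² of the kind reported above might be obtained, the sketch below fits benefit = a·exp(−b·difference) to a set of points. The exact functional form and fitting procedure used in the study are not stated in this section, so both are assumptions here: the sketch uses ordinary least squares on the logarithm of the benefit, which only handles positive benefits, whereas a nonlinear least-squares routine would be needed to include zero or negative values. The data are synthetic and generated from known parameters, and the function names are hypothetical.

```python
import math


def fit_exponential_decay(diffs, benefits):
    """Fit benefit = a * exp(-b * diff) by least squares on log(benefit).

    Points with non-positive benefit are dropped because their log is undefined.
    Returns the pair (a, b).
    """
    pairs = [(d, math.log(y)) for d, y in zip(diffs, benefits) if y > 0]
    if len(pairs) < 2:
        raise ValueError("need at least two points with positive benefit")
    n = len(pairs)
    mean_x = sum(d for d, _ in pairs) / n
    mean_ly = sum(ly for _, ly in pairs) / n
    sxx = sum((d - mean_x) ** 2 for d, _ in pairs)
    if sxx == 0:
        raise ValueError("all differences are identical")
    sxy = sum((d - mean_x) * (ly - mean_ly) for d, ly in pairs)
    slope = sxy / sxx
    intercept = mean_ly - slope * mean_x
    return math.exp(intercept), -slope


def r_squared(diffs, benefits, a, b):
    """Proportion of variance in benefit explained by the fitted curve."""
    predicted = [a * math.exp(-b * d) for d in diffs]
    mean_y = sum(benefits) / len(benefits)
    ss_res = sum((y - p) ** 2 for y, p in zip(benefits, predicted))
    ss_tot = sum((y - mean_y) ** 2 for y in benefits)
    return 1.0 - ss_res / ss_tot


# Synthetic, noise-free points generated with a = 20 and b = 0.1 (not study data).
diffs = [0.0, 5.0, 10.0, 20.0, 30.0]
benefits = [20.0 * math.exp(-0.1 * d) for d in diffs]

a, b = fit_exponential_decay(diffs, benefits)
print(round(a, 3), round(b, 3), round(r_squared(diffs, benefits, a, b), 3))
```

With noise-free input the fit recovers the generating parameters. With real data, a larger b means that the benefit falls off more steeply as the interaural difference grows.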

Discussion

The present data reveal an interesting functional relationship between the magnitude of binaural benefit and the interaural difference in performance. While binaural benefits were not consistent across subjects, speech tests, or listening conditions, substantial binaural benefits were observed when unilateral performance was similar across ears. When unilateral performance was asymmetric (≥20 point difference between ears), binaural benefits were small or non-existent. Indeed, symmetric unilateral performance seemed to underlie binaural benefit, rather than absolute performance level, speech measure (high or low context), or noise level. Below we discuss the results in greater detail.

Dependence of Binaural Benefit on Unilateral Performance

The primary finding of the present study was that the largest advantage (>10%) with bilateral CIs, relative to scores with the better performing ear alone, was seen when unilateral speech recognition scores were similar between the two ears (Fig. 6). These results are in agreement with previous studies in larger patient groups (Laszig et al., 2004; Litovsky et al., 2006). Laszig et al. (2004) reported the greatest binaural benefit in 20 bilateral CI users when SRT differences for sentences between ears were not significant. Litovsky et al. (2006) also demonstrated an improvement in SRT performance for bilateral relative to unilateral conditions in 24 symmetric patients (SRT difference between ears < 3.1 dB SNR), but no benefit of bilateral implantation relative to the better ear in 10 asymmetric patients (SRT difference between ears > 3.1 dB SNR).

In these previous studies, such a functional relationship was discussed but not quantified. As shown in Fig. 6, the functional relationship is well fit by an exponential decay function, and the decay constant b, which relates binaural benefit to the difference between the two unilateral scores, was significant for all speech test materials (see Table 2).

To a large extent, the results of the present study cannot be compared directly with those reported by Laszig et al. (2004) and Litovsky et al. (2006). One issue is the difference in methodology, such as the use of adaptive or fixed SNRs and the speech test materials. In the present study, recognition of consonants, vowels, and sentences was measured at fixed SNRs of +5 dB and +10 dB and in quiet, whereas Laszig et al. (2004) and Litovsky et al. (2006) measured only sentence recognition at an adaptive SNR (SRT measure). Despite these differences, a functional relationship between the binaural benefit and the interaural difference in performance was observed.

A similar functional relationship has been documented for bilateral amplification. The benefit of binaural amplification for speech discrimination in noise is generally greater for those with more symmetrical hearing loss (<15 dB hearing level) between 1 and 4 kHz (Davis & Haggard, 1982; Firszt, Reeder, & Skinner, 2008; Gatehouse & Haggard, 1986; Markides, 1977). Boymans et al. (2008) also reported that bilateral amplification provided not only a greater binaural benefit in speech perception in noise but also subjective benefits such as reduced listening effort (Noble, 2006).

Effect of Speech Materials

In this study, unilateral and bilateral performance was evaluated in terms of phoneme and sentence recognition to see whether contextual cues contributed to binaural benefit. The present results showed a consistent functional relationship between binaural benefit and the unilateral performance difference across speech measures, even though binaural benefit varied within and across subjects for the different speech measures.

Because the two ears may differ in many respects, it is not surprising that binaural benefit varies across test materials. Symmetrical performance between the two implanted ears with one test material may not necessarily translate to other test materials because different cues are used. For example, vowel recognition requires more spectral cues, while consonant recognition is less affected by spectral mismatch. With very different insertion depths for the two implants, CI patients could still have similar consonant recognition scores even though vowel recognition scores could be very different across the two implanted ears. The functional relationship documented in the present study is all the more important because it is less dependent on speech test materials.

As shown in Fig. 4, in quiet, the mean binaural benefit was 3–4 percentage points for phoneme discrimination, much less than the 6–12 percentage points observed in previous studies (e.g., Buss et al., 2008; Dunn et al., 2008; Litovsky et al., 2006; Wackym et al., 2007). Note that CNC monosyllabic word recognition (Tillman & Carhart, 1966) was measured in these previous studies, which may explain the greater binaural benefit. Across the +5 dB and +10 dB SNR noise conditions, the mean binaural benefit was 4–7 percentage points for phoneme discrimination, somewhat lower than the 10 percentage points reported by Eapen et al. (2009), who measured CNC word recognition at a fixed SNR tailored to each subject to yield performance in the range of 40% to 80%. For HINT sentence recognition, there was little binaural benefit in quiet, most likely due to ceiling effects. Across the 0 dB, +5 dB, and +10 dB SNR noise conditions, the mean binaural benefit was 10 percentage points, similar to previous results from Wackym et al. (2007) and Tyler et al. (2007). The generally lower binaural benefit for phoneme discrimination in the present study may have been due to the greater asymmetry in unilateral phoneme discrimination. Differences in unilateral performance may be more pronounced when attending to acoustic cues rather than to the contextual/linguistic cues available with words and sentences.

While the mean binaural benefit differed somewhat across test materials, the majority of the data were accounted for by the exponential decay function (Fig. 6), suggesting that the binaural benefit function was less dependent on speech materials and more dependent on relative performance across ears. However, one distinction should be made regarding the effect of context on the binaural benefit function: the magnitude of binaural benefit is somewhat related to contextual cues. For example, for sentence recognition, five subjects (S1, S5, S6, S8, and S9) received a binaural advantage larger than 10%, with a maximum benefit of 32 percentage points, while for phonemes only two subjects showed a binaural benefit higher than 10%, with a maximum advantage of 21 percentage points (Fig. 6). In addition, the mean binaural advantage for sentences was significantly higher than that for phonemes in the symmetric case (Fig. 5). This result suggests that perception of running discourse may take advantage of increased information from complex signals and the contextual and linguistic properties of speech, as well as the linguistic experience of the listener (Boothroyd & Nittrouer, 1988), but that the auditory mechanisms for binaural benefit are less dependent on the increased information from contextual cues.

Effects of SNR levels

Unlike the consistent binaural benefit function across different speech materials (Fig. 6), the binaural benefit function was distinctly affected by SNR (Fig. 8). In noise, the largest binaural benefit clearly occurred when the difference between the two unilateral scores was small, but this pattern was ambiguous in quiet. Furthermore, the mean binaural advantage in noise was approximately 5 percentage points larger than that in quiet (statistically significant, Fig. 8), and for symmetric performers the mean benefit in noise was also significantly higher than that measured in quiet (Fig. 7). These results suggest that bilateral implantation improves the ability to understand speech in noise, rather than the ability to understand speech in general. This result also suggests that, under optimal listening conditions, speech information is fully extracted by the better performing ear, so that binaural redundancy and/or integration mechanisms generate no additional temporal or spectral representation of the incoming signal.

This result is inconsistent with that of Mosnier et al. (2009), who found such a relationship between symmetric and asymmetric performers in quiet but not in noise. Their 16 symmetric patients (performance difference between ears <20% correct) received a binaural advantage of 12% to 17% in quiet for disyllabic word recognition (5.3% in the present study, Fig. 7), whereas their 9 asymmetric patients (performance difference between ears ≥20% correct) received a binaural benefit of only 1% (0.33% in the present study, Fig. 7). In noise, because there was no statistically significant binaural benefit over the better unilateral performance for either symmetric or asymmetric patients, they did not analyze the functional relationship. In contrast, the present study found a significant difference between the benefits measured in noise and in quiet (Fig. 7).

This inconsistency might be due to the different arrangement of noise sources in the two studies. In the present study, speech and speech-weighted noise were both presented from the front, while in Mosnier et al. (2009) speech was presented at 0° azimuth and cocktail party noise was simultaneously presented from 5 loudspeakers, including the central one that presented the speech target. When speech and noise are presented at 0° azimuth, the source of the binaural benefit is limited to binaural redundancy. In contrast, when noise is presented from multiple locations, binaural benefit can arise from both binaural redundancy and squelch (Buss et al., 2008; Litovsky et al., 2006, 2009; Ricketts et al., 2006). The squelch effect refers to the ability of central auditory structures to suppress interfering noise while focusing on the speech of one talker in a noisy environment. It is possible that the binaural benefit function relative to the interaural performance difference is affected by the binaural squelch effect.

Asymmetric Performances between Two Ears

The results of the present study also showed a substantial asymmetry in performance for some subjects on some materials, although this was not consistent for any subject across all materials. The difference in performance between the better and poorer performing ears ranged from 0% to 41%. The differences averaged across SNRs were 12%, 17.5%, and 16% for consonants, vowels, and sentences, respectively. Indeed, one problem with comparisons of bilateral and unilateral performance is that binaural benefits are commonly measured by having bilateral CI users switch off one of their CIs. This arrangement may be confusing for subjects, as it does not reflect their everyday listening experience and, in essence, changes how their auditory system codes perceptual cues for speech recognition. Consequently, unilateral performance in one ear, particularly the second implanted ear, may be much poorer than binaural performance because of the lack of unilateral listening experience. However, there was no clear pattern in the present data. While subjects S1, S5, S6, S7, and S8 performed better with the ear that was implanted first, subjects S2, S3, S4, and S9 performed better with the ear that was implanted second.

Another possible reason for this interaural asymmetry in performance is related to differences in subject demographics, such as the duration of bilateral listening experience and the mode of implantation (simultaneous or sequential). In the present study, experience with the second implant could be a factor in the binaural benefit, given that four of the nine subjects (S5, S6, S7, and S8) had only six months of experience with their second implant, which was the poorer performing implant for each of them. However, one of the four patients with six months of use of the second implant showed symmetrical vowel recognition performance, and two of the five patients with longer use of the second implant also showed symmetrical vowel recognition performance across the two ears. Similarly, one of the four patients with shorter experience with the second implant had symmetrical consonant recognition performance, and one of the patients with longer experience with the second implant had symmetrical consonant recognition performance. We did not see any significant difference between patients with six months of use and patients with longer use of the second implant in terms of the probability of symmetrical performance across the two ears. Previous studies showed that, when speech and noise were presented at 0° azimuth, binaural benefit for sentence recognition emerged early (usually between one month and six months after activation) and remained stable over time (Buss et al., 2008; Eapen et al., 2009).

Bilateral patients who were simultaneously implanted may have more similar unilateral performance across ears than bilateral CI users who were sequentially implanted. However, previous studies have not shown a significant correlation between binaural benefit and CI users’ duration of deafness, time between implants, or age at implantation of the second CI (Buss et al., 2008; Eapen et al., 2009; Litovsky et al., 2009; Ramsden et al., 2005; Wolfe et al., 2007). It is also possible that subjects were not matched based on the type of CI they have, and thus subjects have various electrode configurations and signal processing strategies. In addition, subjects might have varying nerve survival patterns between the two ears.

One or some combination of these differences in nerve survival patterns, subject demographics, cochlear implantation method, and primary technical features of the CI might be a source of the variability in the magnitude of binaural benefit observed in the present study. A subset of the 9 bilateral CI users received a binaural benefit larger than 10 percentage points at certain SNRs for some test materials. In previous studies with larger subject populations, it was not uncommon for only a subset of bilateral CI subjects to receive a significant benefit in speech perception tests. Litovsky et al. (2006) showed that 44% of their 34 subjects benefited from binaural listening for sentence recognition in noise. Tyler et al. (2007) also found that only one of 7 subjects showed a significant binaural benefit in word recognition in quiet.

Possible Neural Mechanism of Binaural Benefits

This functional relationship between binaural benefit and the difference in unilateral performance may be due to a redundant neural mechanism. That is, the speech information coded by each peripheral auditory system is similar, so that double neural coding could be achieved by central auditory structures such as the superior olivary complex, resulting in a synergistic effect (Dunn et al., 2008; Tyler et al., 2007; van Hoesel & Tyler, 2003). It is also possible that the speech information transmitted by two differently damaged peripheral auditory systems differs, so that each ear, owing to its own pattern of neural survival, contributes different speech information to be integrated centrally. This integration most likely results in a better representation of spectral and temporal information. For bilateral users with asymmetric performance between the two ears, the poorer ear did not seem to contribute to speech understanding, implying that speech cues were maximally utilized by the better ear and that additional speech cues provided by the poorer ear were not utilized.

Potential Clinical Application

If binaural benefits require similar unilateral performance in each ear, then this functional characterization can be used to maximize the benefits. Two approaches are possible: providing specific auditory training and optimizing bilateral mapping. For auditory training, perceptual confusion analyses for consonants and vowels can first be used to document which consonants or vowels are easy, difficult, or of intermediate difficulty in the unilateral and binaural listening conditions. Target-oriented auditory training would then be given for the sounds identified as being of intermediate difficulty with the poorer ear alone. The probability of successful training should be highest for these sounds, as auditory training is likely to provide little benefit for sounds categorized as either easy or difficult.
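The selection of training targets described above can be sketched as follows. This is a hypothetical illustration only: the text does not specify how "easy", "intermediate", and "difficult" would be defined, so the 80% and 40% cut-offs, the function names, and the confusion counts below are all assumptions made for the example.

```python
def percent_correct(confusions):
    """Per-phoneme percent correct from a {target: {response: count}} table."""
    scores = {}
    for target, responses in confusions.items():
        total = sum(responses.values())
        if total > 0:
            scores[target] = 100.0 * responses.get(target, 0) / total
    return scores


def categorize(scores, easy_min=80.0, difficult_max=40.0):
    """Sort phonemes into difficulty bins using assumed (hypothetical) cut-offs."""
    categories = {"easy": [], "intermediate": [], "difficult": []}
    for phoneme, score in sorted(scores.items()):
        if score >= easy_min:
            categories["easy"].append(phoneme)
        elif score < difficult_max:
            categories["difficult"].append(phoneme)
        else:
            categories["intermediate"].append(phoneme)
    return categories


# Invented confusion counts for the poorer ear alone (not data from the study).
poor_ear_confusions = {
    "b": {"b": 9, "d": 1},          # 90% correct
    "d": {"b": 3, "d": 5, "g": 2},  # 50% correct
    "g": {"d": 7, "g": 3},          # 30% correct
}

bins = categorize(percent_correct(poor_ear_confusions))
training_targets = bins["intermediate"]
print(bins)
print("Candidate training targets:", training_targets)
```

In this toy example only "d" falls in the intermediate bin and would be selected for training. In practice the cut-offs would need to be chosen and validated empirically.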

Even though adding a second CI is becoming more widespread, mapping procedures developed for unilateral CI users are still widely used for bilateral CI users, for whom they may not be optimal. Given the functional relationship characterized in the present study, in which binaural benefits require similar unilateral performance in each ear, it may be possible, by remapping the better performing ear, to set the PI functions of the two ears within the range that provides the largest binaural benefit. That is, a situation would be established in which the speech information processed at one ear is similar to that processed at the other ear, allowing the central auditory system to code the same speech cues twice. However, this approach assumes that binaural performance with the new map for the better performing ear would be significantly higher than performance with the better performing CI alone.

Conclusions

The present study showed a greater binaural benefit in noise than in quiet for all speech tests. More importantly, the largest binaural benefits occurred when the interaural difference in performance was small; this functional relationship was observed across the different speech materials and noise levels. The results suggest that large differences in auditory processing between ears may reduce binaural benefit, and that binaural benefit depends more strongly on the listening environment than on the speech materials. If benefits require similar unilateral performance in each ear, then the interesting question is whether the benefits are due to double coding (redundancy) of the same speech cues by each ear or to integration of different cues between ears. Further experiments are needed to distinguish between these two possibilities.

Acknowledgments

We thank our participants for their time and effort. We thank John J. Galvin III, Jaesook Gho, and Joseph Crew for their comments and editorial assistance. This study was presented in part at the 33rd Association for Research in Otolaryngology (ARO), under the title "The Benefits and Perceptual Mechanism by Bilateral and Bimodal Cochlear Implant Users," in February 2010 in Anaheim, California.

Abbreviations

- SNR: Signal-to-noise ratio

- CI: Cochlear implant

- SRT: Speech reception threshold

- HINT: Hearing-in-noise test

- AFC: Alternative forced choice

- dB: Decibel

- PI: Performance intensity

Footnotes

Declaration of Interest

This work was supported by NIH grant 5R01DC004993.

Contributor Information

Yang-soo Yoon, Email: yyoon@hei.org.

Yongxin Li, Email: lyxent@hotmail.com.

Hou-Yong Kang, Email: hkang@hei.org.

Qian-Jie Fu, Email: qfu@hei.org.

References

- Boothroyd A, Nittrouer S. Mathematical treatment of context effects in phoneme and word recognition. J Acoust Soc Am. 1988;84:101–114. doi: 10.1121/1.396976.

- Boymans M, Goverts ST, Kramer SE, Festen JM, Dreschler WA. A prospective multi-centre study of the benefits of bilateral hearing aids. Ear Hear. 2008;29(6):930–941. doi: 10.1097/aud.0b013e31818713a8.

- Buss E, Pillsbury HC, Buchman CA, et al. Multicenter U.S. bilateral MED-EL cochlear implantation study: speech perception over the first year of use. Ear Hear. 2008;29:20–32. doi: 10.1097/AUD.0b013e31815d7467.

- Cox RM. Using loudness data for hearing aid selection: the IHAFF approach. Hearing J. 2005;48:39–44.

- Davis AC, Haggard MP. Some implications of audiological measures in the population for binaural aiding strategies. Scand Audiol Suppl. 1982;15:167–179.

- Day GA, Browning GG, Gatehouse S. Benefit from binaural hearing aids in individuals with a severe hearing impairment. Br J Audiol. 1988;22:273–277. doi: 10.3109/03005368809076464.

- Dunn CC, Tyler RS, Oakley S, Gantz BJ, Noble W. Comparison of speech recognition and localization performance in bilateral and unilateral cochlear implant users matched on duration of deafness and age at implantation. Ear Hear. 2008;29:352–359. doi: 10.1097/AUD.0b013e318167b870.

- Eapen RJ, Buss E, Adunka MC, Pillsbury HC, Buchman CA. Hearing-in-noise benefits after bilateral simultaneous cochlear implantation continue to improve 4 years after implantation. Otol Neurotol. 2009;30:153–159. doi: 10.1097/mao.0b013e3181925025.

- Firszt JB, Reeder RM, Skinner MW. Restoring hearing symmetry with two cochlear implants or one cochlear implant and a contralateral hearing aid. J Rehabil Res Dev. 2008;45:749–767. doi: 10.1682/jrrd.2007.08.0120.

- Gatehouse S, Haggard M. The influence of hearing asymmetries on benefits from binaural amplification. Hear J. 1986;39(11):15–20.

- Gifford RH, Shallop JK, Peterson AM. Speech recognition materials and ceiling effects: Considerations for cochlear implant programs. Audiol Neurotol. 2008;13:193–205. doi: 10.1159/000113510.

- Hillenbrand J, Getty L, Clark M, Wheeler K. Acoustic characteristics of American English vowels. J Acoust Soc Am. 1995;97:3099–3111. doi: 10.1121/1.411872.

- Laszig R, Aschendorff A, Stecker M, et al. Benefits of bilateral electrical stimulation with the Nucleus cochlear implant in adults: 6-month postoperative results. Otol Neurotol. 2004;25:958–968. doi: 10.1097/00129492-200411000-00016.

- Litovsky R, Parkinson A, Arcaroli J, Sammeth C. Simultaneous bilateral cochlear implantation in adults: a multicenter clinical study. Ear Hear. 2006;27:714–731. doi: 10.1097/01.aud.0000246816.50820.42.

- Litovsky RY, Parkinson A, Arcaroli J. Spatial hearing and speech intelligibility in bilateral cochlear implant users. Ear Hear. 2009;30:419–431. doi: 10.1097/AUD.0b013e3181a165be.

- Markides A. Binaural Hearing Aids. Academic Press; N.Y: 1977.

- Mosnier I, Sterkers O, Bebear JP, et al. Speech performance and sound localization in a complex noisy environment in bilaterally implanted adult patients. Audiol Neurotol. 2009;14:106–114. doi: 10.1159/000159121.

- Müller J, Schön F, Helms J. Speech understanding in quiet and noise in bilateral users of the MED-EL COMBI 40/40+ cochlear implant system. Ear Hear. 2002;23:198–206. doi: 10.1097/00003446-200206000-00004.

- Nabelek AK, Letowski T, Mason D. An influence of binaural hearing aids on positioning of sound images. J Speech Hear Res. 1980;23:670–687.

- Nilsson M, Soli SD, Sullivan JA. Development of the Hearing in Noise Test for the measurement of speech reception thresholds in quiet and in noise. J Acoust Soc Am. 1994;95:1085–1099. doi: 10.1121/1.408469.

- Noble W. Bilateral hearing aids: a review of self-reports of benefit in comparison with unilateral fitting. Int J Audiol. 2006;45:S63–S71. doi: 10.1080/14992020600782873.

- Pittman AL, Stelmachowicz PG. Hearing loss in children and adults: audiometric configuration, asymmetry, and progression. Ear Hear. 2003;24:198–205. doi: 10.1097/01.AUD.0000069226.22983.80.

- Ramsden R, Greenhan P, O’Driscoll M, Mawman D, Proops D, Craddock L, et al. Evaluation of bilaterally implanted adult subjects with the Nucleus 24 cochlear implant system. Otol Neurotol. 2005;26:988–998. doi: 10.1097/01.mao.0000185075.58199.22.

- Ricketts TA, Grantham DW, Ashmead DH, Saynes DS, Haynes DS, Labadie RF. Speech recognition for unilateral and bilateral cochlear implant modes in the presence of uncorrelated noise sources. Ear Hear. 2006;27:763–773. doi: 10.1097/01.aud.0000240814.27151.b9.

- Robinson K, Summerfield AQ. Adult auditory learning and training. Ear Hear. 1996;17:51S–65S. doi: 10.1097/00003446-199617031-00006.

- Schleich P, Nopp P, D’Haese P. Head shadow, squelch, and summation effects in bilateral users of the MED-EL COMBI 40/40+ cochlear implant. Ear Hear. 2004;25:197–204. doi: 10.1097/01.aud.0000130792.43315.97.

- Shannon RV, Jensvold A, Padilla M, Robert M, Wang X. Consonant recordings for speech testing. J Acoust Soc Am, (ARLO) 1999;106:L71–L74. doi: 10.1121/1.428150.

- Tillman TW, Carhart R. An expanded test for speech discrimination utilizing CNC monosyllabic words (Northwestern University Auditory Test No. 6, Technical Report No. SAM-TR-66-55) Brooks Air Force Base, Texas: USAF School of Aerospace Medicine; 1966.

- Tyler RS, Dunn CC, Witt SA, Noble WG. Speech perception and localization with adults with bilateral sequential cochlear implants. Ear Hear. 2007;28:86S–90S. doi: 10.1097/AUD.0b013e31803153e2.

- van Hoesel RJ, Tyler RS. Speech perception, localization, and lateralization with bilateral cochlear implants. J Acoust Soc Am. 2003;113:1617–1630. doi: 10.1121/1.1539520.

- Wackym PA, Runge-Samuelson CL, Firszt JB, Alkaf FM, Burg LS. More challenging speech-perception tasks demonstrate binaural benefit in bilateral cochlear implant users. Ear Hear. 2007;28:80S–85S. doi: 10.1097/AUD.0b013e3180315117.

- Wolfe J, Baker S, Caraway T, et al. 1-Year postactivation results for sequentially implanted bilateral cochlear implant users. Otol Neurotol. 2007;28:589–596. doi: 10.1097/MAO.0b013e318067bd24.

- Zeitler DM, Kessler MA, Terushkin V, Roland TJ, Jr, Svirsky MA, Lalwani AK, Waltzman SB. Speech perception benefits of sequential bilateral cochlear implantation in children and adults: a retrospective analysis. Otol Neurotol. 2008;29:314–325. doi: 10.1097/mao.0b013e3181662cb5.