Abstract

Dermoscopy is a non-invasive skin imaging technique that permits visualization of features of pigmented melanocytic neoplasms that are not discernible by examination with the naked eye. One of the most important features for the diagnosis of melanoma in dermoscopy images is the blue-white veil (irregular, structureless areas of confluent blue pigmentation with an overlying white “ground-glass” film). In this article, we present a machine learning approach to the detection of blue-white veil and related structures in dermoscopy images. The method involves contextual pixel classification using a decision tree classifier. The percentage of blue-white areas detected in a lesion, combined with a simple shape descriptor, yielded a sensitivity of 69.35% and a specificity of 89.97% on a set of 545 dermoscopy images. The sensitivity rises to 78.20% for detection of blue-white veil in those cases where it is a primary feature for melanoma recognition.

Keywords: Melanoma, dermoscopy, blue-white veil, contextual pixel classification, decision tree classifier

1. INTRODUCTION

Malignant melanoma, the most deadly form of skin cancer, is one of the most rapidly increasing cancers in the world, with an estimated 59,940 new cases and 8,110 deaths in the United States in 2007 alone [1]. Dermoscopy is a non-invasive skin imaging technique that permits visualization of features of pigmented melanocytic neoplasms that are not discernible by examination with the naked eye. When practiced by experienced observers, this imaging modality offers higher diagnostic accuracy than observation without magnification [2–5]. Dermoscopy allows the identification of dozens of morphological features, one of which is the blue-white veil (irregular, structureless areas of confluent blue pigmentation with an overlying white “ground-glass” film) [6]. This feature is one of the most significant dermoscopic indicators of invasive malignant melanoma, with a sensitivity of 51% and a specificity of 97% [7]. Figure 1 shows a melanoma with blue-white veil.

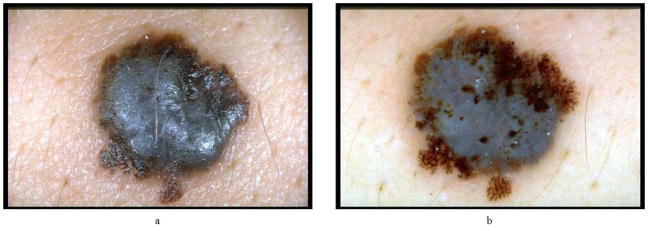

Figure 1.

Melanoma with blue-white veil (a) clinical image and (b) dermoscopy image. The steps of the blue-white veil detection procedure will be demonstrated on image (b).

Numerous methods for extracting features from clinical skin lesion images have been proposed in the literature [8–10]. However, feature extraction in dermoscopy images is relatively unexplored. The dermoscopic feature extraction studies to date include two pilot studies on pigment networks [11][12] and globules [11], and three systematic studies on dots [13] and blotches [14][15]. To the best of our knowledge, there is no published systematic study on the detection of blue-white veil.

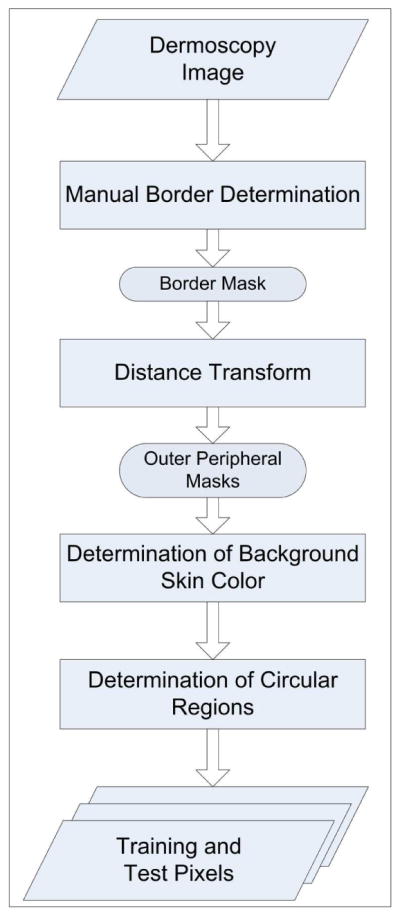

In this article, we present a machine learning approach to the detection of blue-white veil in dermoscopy images. Figure 2 shows an overview of the approach. The rest of the paper is organized as follows. Section 2 describes the image set and the preprocessing phase. Section 3 discusses the feature extraction. Section 4 presents the pixel classification. Section 5 describes the classification of lesions based on the blue-white veil feature. Finally, Section 6 gives the conclusions.

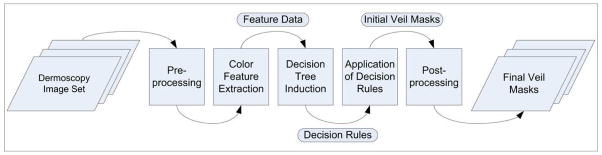

Figure 2.

Overview of the approach

2. IMAGE SET DESCRIPTION AND PREPROCESSING

2.1. Image Set Description

The image set used in this study consists of 545 digital dermoscopy images obtained from two atlases. The first is the CD-ROM Interactive Atlas of Dermoscopy [6], which is a collection of images acquired in three institutions: University Federico II of Naples, Italy, University of Graz, Austria, and University of Florence, Italy. The second atlas is a pre-publication version of the American Academy of Dermatology DVD on Dermoscopy, edited by Harold Rabinovitz et al. These were true-color images with a typical resolution of 768 × 512 pixels. The diagnosis distribution of the cases was as follows: 299 dysplastic nevi, 186 melanomas, 28 blue nevi, 14 Reed/Spitz nevi, 8 combined nevi, 8 basal cell carcinomas, and 2 intradermal nevi. The lesions were biopsied and diagnosed histopathologically in cases where significant risk for melanoma was present; otherwise, they were diagnosed by follow-up examination.

2.2. Preprocessing

Prior to feature extraction, two preprocessing steps, namely the determination of the background skin color and the selection of the training and test pixels, were performed on the images. Figure 3 shows an overview of this procedure.

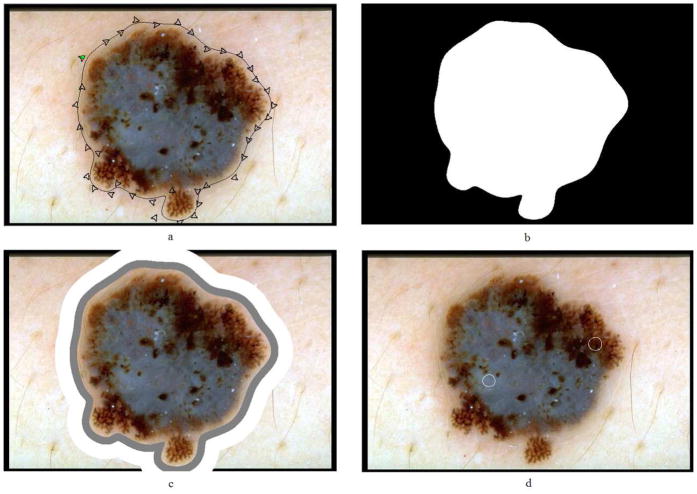

Figure 3.

Preprocessing

The lesion borders were obtained manually under the supervision of an experienced dermatologist (WVS). The motivation for using manual borders rather than computer-detected borders [16][17] was to separate the problem of feature extraction from the problem of automated border detection. The procedure for manual border determination was as follows. First, a number of points were selected along the lesion border. These points were then connected using a second-order B-spline function. Finally, the resulting closed curve was filled using a flood-fill algorithm to obtain the binary border mask. Figure 4a–b illustrates this procedure.
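The border-mask procedure can be sketched in Python with SciPy. This is a minimal sketch, not the authors' code: it assumes that “second-order” means a quadratic (degree-2) closed B-spline passing through the selected points, and it uses `scipy.ndimage.binary_fill_holes` in place of whatever flood-fill routine was actually used. The function name `border_mask` and the `samples` density are illustrative choices.

```python
import numpy as np
from scipy.interpolate import splprep, splev
from scipy.ndimage import binary_fill_holes


def border_mask(points, shape, degree=2, samples=4000):
    """Binary lesion mask from manually selected (row, col) border points.

    Assumption: a closed (periodic) B-spline of the given degree is passed
    through the points, densely sampled, rasterized, and then filled.
    """
    pts = np.asarray(points, dtype=float)
    pts = np.vstack([pts, pts[:1]])  # repeat the first point to close the curve
    tck, _ = splprep([pts[:, 0], pts[:, 1]], s=0, k=degree, per=1)
    r, c = splev(np.linspace(0.0, 1.0, samples), tck)
    # Round the densely sampled curve to pixel coordinates and draw the outline.
    rr = np.clip(np.round(r).astype(int), 0, shape[0] - 1)
    cc = np.clip(np.round(c).astype(int), 0, shape[1] - 1)
    outline = np.zeros(shape, dtype=bool)
    outline[rr, cc] = True
    # Fill the region enclosed by the outline (stands in for a flood fill).
    return binary_fill_holes(outline)
```

Dense sampling keeps the rasterized outline 8-connected, so the 4-connected background used by the fill cannot leak into the lesion.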

Figure 4.

Preprocessing steps (a) B-spline approximation of the border, (b) binary border mask, (c) 10% (gray) and 20% (white) areas outside the lesion, and (d) manually selected veil (left circle) and non-veil (right circle) regions

For the extraction of the color features, the background skin color must be determined. First, the region outside the border with an area equal to 10% of the lesion area was omitted to reduce the effects of peripheral inflammation and errors in border determination. The background skin color was then calculated as the average color over the next region outside the border, with an area equal to 20% of the lesion area. Non-skin pixels (black image frames, rulers, hairs, and bubbles) were excluded from this calculation; these were the pixels that did not satisfy the empirical rule (R > 90) ∧ (R > B) ∧ (R > G) [18], where R, G, and B denote the red, green, and blue values, respectively, of the pixel under consideration. The 10% and 20% areas outside the lesion were determined from the binary border mask using the Euclidean distance transform. Figure 4c shows these areas for a sample lesion.
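The background-skin computation can be sketched as follows. This is a hedged illustration, not the published implementation: it ranks non-lesion pixels by their Euclidean distance to the lesion (via `scipy.ndimage.distance_transform_edt`), skips the nearest 10% of the lesion area, averages the next 20%, and applies the skin rule quoted above. Tie-breaking among equidistant pixels (raster order here) and the name `background_skin_color` are assumptions.

```python
import numpy as np
from scipy.ndimage import distance_transform_edt


def background_skin_color(rgb, lesion_mask, skip_frac=0.10, ring_frac=0.20):
    """Mean (R, G, B) of skin pixels in a ring just outside the lesion."""
    lesion_mask = lesion_mask.astype(bool)
    area = int(lesion_mask.sum())
    # Distance of every non-lesion pixel to the nearest lesion pixel.
    dist = distance_transform_edt(~lesion_mask)
    outside = np.flatnonzero(~lesion_mask)
    # Rank outside pixels from nearest to farthest (stable: ties in raster order).
    order = outside[np.argsort(dist.ravel()[outside], kind="stable")]
    n_skip = int(round(skip_frac * area))  # omitted 10% band
    n_ring = int(round(ring_frac * area))  # 20% band used for the average
    ring = order[n_skip:n_skip + n_ring]
    pixels = rgb.reshape(-1, 3)[ring].astype(float)
    r, g, b = pixels[:, 0], pixels[:, 1], pixels[:, 2]
    # Empirical skin rule from the text: R > 90 and R > B and R > G.
    skin = (r > 90) & (r > b) & (r > g)
    return pixels[skin].mean(axis=0)
```

If no pixel in the ring passes the rule, the mean is undefined (NumPy returns NaN with a warning); a production version would need a fallback.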

In order to select training and test pixels for classification, 100 images were chosen from the entire image set. Forty-three of these images had sizeable pure veil regions and 62 had sizeable pure non-veil regions. In each image, a number of small circular regions that contain either veil or non-veil pixels were manually determined. Training and test pixels were then randomly selected from these manually determined regions. The selection method was designed to ensure a balanced distribution of the two classes (veil and non-veil) in the training set [19]. Figure 4d shows two manually selected regions on a sample image.
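A balanced selection of training pixels can be sketched as drawing the same number of pixels from the veil and non-veil pools and keeping the remainder for testing. The text does not give the sampling sizes or the exact procedure, so `balanced_split` and `n_train_per_class` are hypothetical names and the scheme is an assumption.

```python
import numpy as np


def balanced_split(veil_idx, nonveil_idx, n_train_per_class, rng=None):
    """Randomly split pixel indices so the training set is class-balanced.

    Returns (train, test) lists of (pixel_index, label) with label 1 = veil.
    Assumes each pool holds at least n_train_per_class pixels.
    """
    rng = np.random.default_rng(rng)
    train, test = [], []
    for label, idx in ((1, veil_idx), (0, nonveil_idx)):
        shuffled = rng.permutation(np.asarray(idx))
        train += [(int(i), label) for i in shuffled[:n_train_per_class]]
        test += [(int(i), label) for i in shuffled[n_train_per_class:]]
    return train, test
```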

For each lesion, two additional features, primary blue-white veil and veil-related structures, were determined by a dermatologist (WVS). A feature such as the veil is said to be a primary feature if it is the feature most characteristic of melanoma, i.e., the feature present in the lesion that is most recognizable and specific for melanoma. Some structures related to blue-white veils were also considered in this study. These included gray or blue-gray veils, or any veils that lacked the whitish film seen in the classic veil, and were identified as veil-related structures.

3. FEATURE EXTRACTION

After the training and test pixels are selected, the features used to classify them must be extracted. There are two main approaches to pixel classification: non-contextual and contextual [20]. In non-contextual pixel classification, each pixel is treated in isolation from its spatial neighborhood during feature extraction, which often leads to noisy results. In contextual pixel classification, on the other hand, the spatial neighborhood of the pixel is also taken into account. This study follows the latter approach. Several features were extracted in the 5 × 5 neighborhood of each pixel, and for each feature the median value in the neighborhood was taken as the value of that feature for the center pixel. To speed up the median search in a 5 × 5 neighborhood, a minimum exchange network algorithm that performs a partial sort was employed instead of fully sorting the 25 values [21]. Fifteen color features and three texture features were used to characterize the image pixels.
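The contextual step can be sketched as a 5 × 5 median over a per-pixel feature map. As a stand-in for the minimum exchange network of [21] (a fixed compare-and-swap network), this sketch uses `np.partition`, which likewise avoids a full sort but is a different selection algorithm. Reflective border padding is an assumption; the text does not say how image borders were handled.

```python
import numpy as np
from numpy.lib.stride_tricks import sliding_window_view


def contextual_median(feature, size=5):
    """Replace each pixel's feature value by the median of its size x size window."""
    pad = size // 2
    padded = np.pad(feature, pad, mode="reflect")  # border handling: assumption
    windows = sliding_window_view(padded, (size, size))  # shape (H, W, size, size)
    flat = windows.reshape(*feature.shape, size * size)
    k = (size * size) // 2  # index 12 of 25 values is the median
    # Partial sort: only guarantees the k-th element is in its sorted position.
    return np.partition(flat, k, axis=-1)[..., k]
```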

3.1. Absolute color features

The absolute color of a pixel was quantified by its chromaticity coordinates F1, F2, and F3 (see Table 1). An advantage of F1, F2, and F3 over the raw R, G, and B values is that while the former are invariant to illumination direction and intensity [22], the latter are not. This invariance is essential for dealing with images that are acquired in uncontrolled imaging conditions.
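A short sketch, assuming F1, F2, and F3 are the standard normalized rgb chromaticities, F1 = R/(R+G+B), F2 = G/(R+G+B), F3 = B/(R+G+B). It shows the intensity invariance claimed above: scaling all three channels by the same factor leaves the coordinates unchanged. The `eps` guard for black pixels is an added assumption.

```python
import numpy as np


def chromaticity(rgb, eps=1e-12):
    """Normalized rgb chromaticity (F1, F2, F3) of one pixel or an array of pixels."""
    rgb = np.asarray(rgb, dtype=float)
    total = rgb.sum(axis=-1, keepdims=True)
    # Guard against division by zero for pure black pixels.
    return rgb / np.maximum(total, eps)
```

For example, `chromaticity([100, 50, 50])` and `chromaticity([200, 100, 100])` both give (0.5, 0.25, 0.25).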

Table 1.

Description of the color features

| Feature Group | Features |
|---|---|
| Chromaticity Coordinates | F1 = R/(R+G+B), F2 = G/(R+G+B), F3 = B/(R+G+B) |
| Relative R, G, B Ratio | F4, F5, F6 |
| Normalized Relative R, G, B Ratio | F7, F8, F9 |
| Relative R, G, B Difference | F10, F11, F12 |
| Normalized Relative R, G, B Difference | F13, F14, F15 |

3.2. Relative color features

Relative color refers to the color of a lesion pixel when compared to the average color of the background skin. A total of 12 relative color features were extracted from each pixel (see Table 1). In the table, the lesion pixel and the average background skin color in the RGB color space are denoted as (RL, GL, BL) and (RS, GS, BS), respectively. The relative color features offer several advantages. First, they compensate for variations in the images caused by illumination and/or digitization. Second, they equalize variations in normal skin color among individuals. Third, relative color is more natural from a perceptual point of view. Recent studies [15][23][24] have confirmed the usefulness of relative color features in skin lesion image analysis.
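The exact formulas of the relative color features did not survive in Table 1, so the following is only a sketch under stated assumptions. It assumes the ratio group is (RL/RS, GL/GS, BL/BS) and the difference group is (RL − RS, GL − GS, BL − BS); the sign of the difference is a guess, and the normalized groups are omitted. It illustrates the first advantage listed above: a common multiplicative change in illumination cancels in the ratio features.

```python
import numpy as np


def relative_color(lesion_rgb, skin_rgb):
    """Relative ratio and difference of lesion pixel(s) w.r.t. background skin.

    Assumed definitions (not confirmed by the text):
      ratio      = (RL/RS, GL/GS, BL/BS)
      difference = (RL - RS, GL - GS, BL - BS)
    """
    lesion = np.asarray(lesion_rgb, dtype=float)
    skin = np.asarray(skin_rgb, dtype=float)
    ratio = lesion / skin        # broadcasts over an (N, 3) array of pixels
    difference = lesion - skin
    return ratio, difference
```

With lesion color (50, 60, 90) and skin color (200, 150, 120), the ratio is (0.25, 0.4, 0.75); multiplying both colors by 1.5 leaves the ratio unchanged, whereas the difference scales with the illumination.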

3.3. Texture features

In order to quantify the texture in the 5 × 5 neighborhood of a pixel, a set of statistical texture descriptors based on the Gray Level Co-occurrence Matrix (GLCM) was employed [25]. Although many statistics can be derived from the GLCM, only three gray-level shift-invariant statistics (entropy F16, contrast F17, and correlation F18) were used in this study, to obtain a non-redundant texture characterization that is robust to linear shifts in the illumination intensity [26]. To achieve rotation invariance, the normalized GLCM was computed for each of the four directions {0°, 45°, 90°, 135°}, and the statistics calculated from these matrices were averaged.
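A minimal NumPy sketch of the texture step, assuming the 5 × 5 patch has already been quantized to integer gray levels in `[0, levels)` (the quantization used in the study is not stated), a pixel distance of 1, and a symmetric GLCM. Entropy, contrast, and correlation are computed per direction and averaged over the four directions. Setting the correlation of a uniform patch to 1.0 is a convention chosen here, since it is otherwise undefined.

```python
import numpy as np

# (row, col) offsets at distance 1 for 0°, 45°, 90°, and 135°.
OFFSETS = [(0, 1), (-1, 1), (-1, 0), (-1, -1)]


def glcm(patch, dr, dc, levels):
    """Symmetric, normalized co-occurrence matrix for one pixel offset."""
    P = np.zeros((levels, levels))
    rows, cols = patch.shape
    for r in range(rows):
        for c in range(cols):
            r2, c2 = r + dr, c + dc
            if 0 <= r2 < rows and 0 <= c2 < cols:
                P[patch[r, c], patch[r2, c2]] += 1
                P[patch[r2, c2], patch[r, c]] += 1
    return P / P.sum()


def glcm_stats(P):
    """Entropy, contrast, and correlation of a normalized GLCM."""
    i, j = np.indices(P.shape)
    nz = P > 0
    entropy = -np.sum(P[nz] * np.log2(P[nz]))
    contrast = np.sum(P * (i - j) ** 2)
    mu_i, mu_j = np.sum(i * P), np.sum(j * P)
    sd_i = np.sqrt(np.sum(P * (i - mu_i) ** 2))
    sd_j = np.sqrt(np.sum(P * (j - mu_j) ** 2))
    if sd_i == 0 or sd_j == 0:
        correlation = 1.0  # undefined for a uniform patch; convention only
    else:
        correlation = np.sum(P * (i - mu_i) * (j - mu_j)) / (sd_i * sd_j)
    return entropy, contrast, correlation


def texture_features(patch, levels=8):
    """(entropy, contrast, correlation) averaged over the four directions."""
    patch = np.asarray(patch, dtype=int)
    stats = [glcm_stats(glcm(patch, dr, dc, levels)) for dr, dc in OFFSETS]
    return tuple(np.mean(stats, axis=0))
```

All three statistics depend only on gray-level differences or on normalized deviations, so adding a constant to every gray level (within range) leaves them unchanged, which is the shift invariance referred to above.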

4. PIXEL CLASSIFICATION

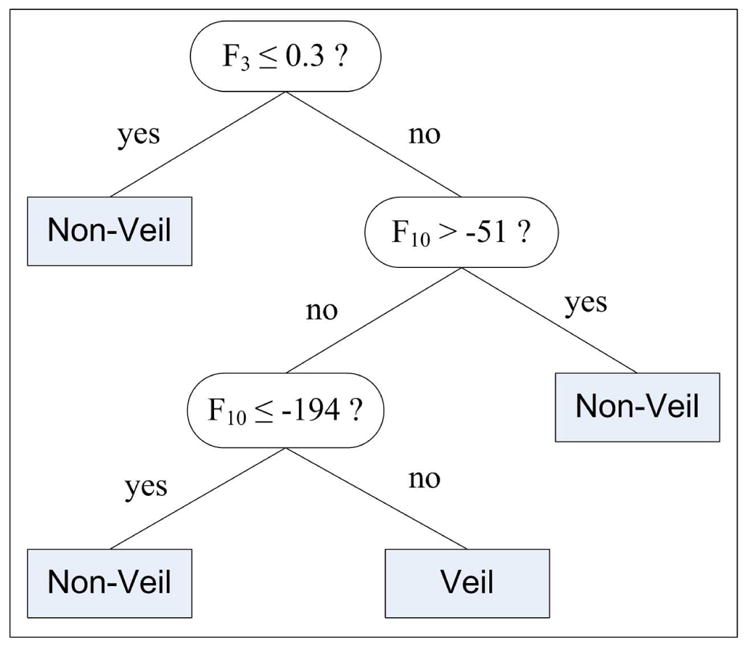

Popular classifiers used in pixel classification tasks include k-nearest neighbor [27], Bayesian [28], artificial neural networks [29], and support vector machines [28]. In this study, a decision tree classifier was used to classify the image pixels into 2 classes: veil and non-veil. The motivation for this choice was two-fold. First, decision tree classifiers generate easy-to-understand rules, which is important for the clinical acceptance of a computer-aided diagnosis system. Second, they are fast to train and apply. The well-known C4.5 algorithm [30] was used for decision tree induction.

Given a large training set, decision tree classifiers tend to produce complex decision rules that perform well on the training data but do not generalize well to unseen data [31]. In such cases, the classifier model is said to have overfit the training data. The C4.5 algorithm reduces overfitting by pruning the initial tree, that is, by identifying subtrees that contribute little to predictive accuracy and replacing each with a leaf [30]. The confidence factor (C) parameter controls the level of pruning and has a default value of 0.25. Another parameter that influences the complexity of the induced tree is the minimum number of samples per leaf (M), whose default value is 2. To induce a simple tree that generalizes better, C and M were set to 0.1 and 100, respectively. Using these parameter values, the C4.5 algorithm was trained with the manually selected training pixels (Section 2.2). Figure 5 shows the induced decision tree. Only 2 of the 18 features were included in the classification model: an absolute color feature (F3) and a relative color feature (F10). On the manually selected test pixels, the tree achieved a sensitivity (percentage of correctly detected veil pixels) of 84.33% and a specificity (percentage of correctly detected non-veil pixels) of 96.19%.
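To make the role of M concrete, the sketch below searches for the best binary threshold on one continuous feature using the gain-ratio criterion associated with C4.5, while requiring at least `min_leaf` samples on each side. It is a simplified illustration only: it does not reproduce C4.5's attribute-selection heuristics, recursive tree growth, or confidence-factor (C) pruning. The function names are hypothetical, and placing the threshold at the largest left-side value is a choice made here.

```python
import numpy as np


def entropy(labels):
    """Shannon entropy (bits) of a label array."""
    _, counts = np.unique(labels, return_counts=True)
    p = counts / counts.sum()
    return -np.sum(p * np.log2(p))


def best_threshold(x, y, min_leaf=2):
    """Best split x <= t by gain ratio, with >= min_leaf samples per side.

    Returns (t, gain_ratio), or (None, -inf) if no admissible split exists.
    """
    order = np.argsort(x, kind="stable")
    x, y = np.asarray(x)[order], np.asarray(y)[order]
    n = len(y)
    base = entropy(y)
    best = (None, -np.inf)
    for k in range(min_leaf, n - min_leaf + 1):
        if x[k - 1] == x[k]:
            continue  # a threshold can only fall between distinct values
        wl, wr = k / n, (n - k) / n
        gain = base - wl * entropy(y[:k]) - wr * entropy(y[k:])
        split_info = -(wl * np.log2(wl) + wr * np.log2(wr))
        ratio = gain / split_info
        if ratio > best[1]:
            best = (x[k - 1], ratio)
    return best
```

Raising `min_leaf` shrinks the set of admissible splits. With M = 100, as in the study, small groups of atypical pixels cannot form leaves of their own, which is one reason the induced tree stays small.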

Figure 5.

Pixel classification tree

In order to evaluate the effectiveness of the classification model, the induced decision rules were applied to the entire image set. In the classifier training phase, 18 features were extracted from the training pixels. In contrast, in the rule application phase, only the two features that appear in the decision tree, namely F3 and F10, need to be extracted from the pixels. For each image, an initial binary veil mask was generated as a result of the rule application. To smooth the borders, a 5 × 5 majority filter [32] was applied to the initial masks. This filter replaces each pixel’s value with the majority class label in its 5 × 5 neighborhood. Figure 6 shows the initial and final veil masks for a sample image.
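The 5 × 5 majority filter can be sketched in NumPy: a pixel is labeled veil when more than half of the 25 pixels in its window are veil (at least 13). Zero padding at the image border, which treats pixels outside the image as non-veil, is an assumption, as is the name `majority_filter`.

```python
import numpy as np
from numpy.lib.stride_tricks import sliding_window_view


def majority_filter(mask, size=5):
    """Binary majority filter: True where most pixels in the window are True."""
    pad = size // 2
    # Pixels outside the image are treated as non-veil (assumption).
    padded = np.pad(mask.astype(np.uint8), pad, mode="constant")
    counts = sliding_window_view(padded, (size, size)).sum(axis=(-2, -1))
    return counts > (size * size) // 2  # > 12 of 25 for a 5 x 5 window
```

Isolated veil pixels are removed and small holes inside veil regions are filled, which smooths the region borders.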

Figure 6.

Postprocessing (a) initial veil mask and (b) final veil mask

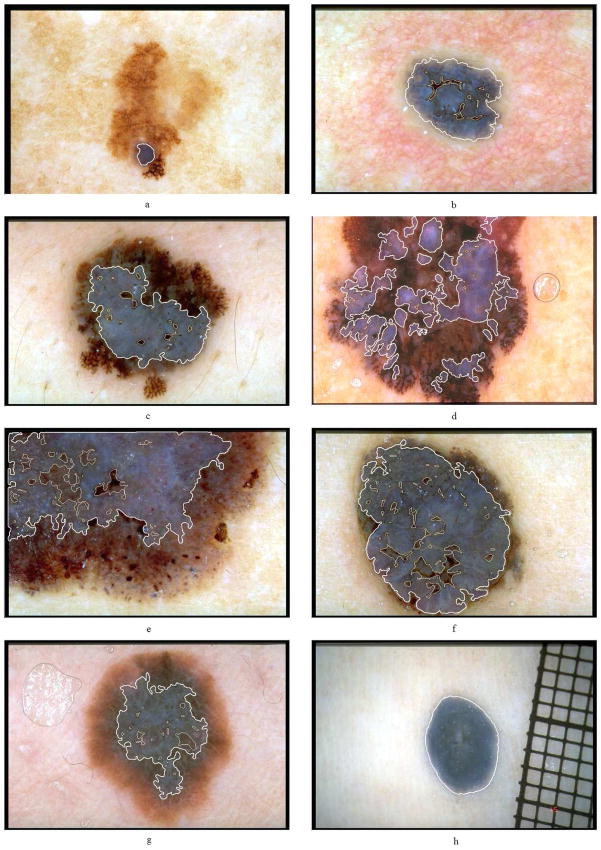

Figure 7 shows a sample of the detection results. In this figure, parts (a) through (f) are melanomas, (g) is a Reed/Spitz nevus, and (h) is a blue nevus. It can be seen that the presented method detects most of the blue-white areas accurately.

Figure 7.

Sample blue-white veil detection results. The veil and non-veil region borders are delineated with thick and thin lines, respectively.

5. LESION CLASSIFICATION BASED ON THE BLUE-WHITE VEIL FEATURE

In the second part of the study, we developed a second classifier to discriminate between melanoma and benign lesions based on the presence/absence of the blue-white veil feature. In order to characterize the detected blue-white areas, we used a numerical feature defined as follows:

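$$S_1 = 100 \times \frac{\text{number of lesion pixels detected as blue-white veil}}{\text{number of lesion pixels}} \qquad (1)$$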

The problem with using S1 alone is that a blue nevus (such as the one in Fig. 7h) might be misclassified as melanoma because of its high percentage of blue-white areas. This problem can be addressed by using additional features that characterize the circularity and/or ellipticity of the lesion. The circularity of a lesion can be characterized by [33]:

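$$S_2 = \frac{\mu_R}{\sigma_R}, \quad \mu_R = \frac{1}{P}\sum_{k=1}^{P} \left\| (r_k, c_k) - (\bar{r}, \bar{c}) \right\|, \quad \sigma_R = \left( \frac{1}{P}\sum_{k=1}^{P} \left( \left\| (r_k, c_k) - (\bar{r}, \bar{c}) \right\| - \mu_R \right)^2 \right)^{1/2} \qquad (2)$$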

where P is the number of points on the lesion boundary, (rk, ck) is the spatial coordinate of the kth boundary point, and (r̄, c̄) is the centroid of the lesion object (see Fig. 4b). The ellipticity of a lesion can be measured by [34]:

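$$S_3 = \begin{cases} 16\pi^2 I_1 & \text{if } I_1 \le \dfrac{1}{16\pi^2} \\ \dfrac{1}{16\pi^2 I_1} & \text{otherwise} \end{cases}, \quad I_1 = \frac{\mu_{20}\mu_{02} - \mu_{11}^2}{\mu_{00}^4}, \quad \mu_{pq} = \sum_{r=1}^{N_r}\sum_{c=1}^{N_c} (r - \bar{r})^p (c - \bar{c})^q \, I(r, c) \qquad (3)$$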

where I is the binary lesion image (see Fig. 4b), and Nr and Nc are the number of rows and number of columns in I, respectively.
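Both shape descriptors can be computed from the binary lesion mask. This is a sketch under two assumptions: S2 follows Haralick's circularity [33] (mean over standard deviation of the boundary-to-centroid distances), and S3 follows Rosin's moment-based ellipticity [34], which equals 1 for a perfect ellipse. Boundary pixels are taken as lesion pixels removed by a 4-connected erosion, which is an implementation choice made here.

```python
import numpy as np
from scipy.ndimage import binary_erosion


def circularity(mask):
    """Haralick-style circularity: mean / std of boundary-to-centroid distances."""
    mask = mask.astype(bool)
    rows, cols = np.nonzero(mask)
    r_bar, c_bar = rows.mean(), cols.mean()  # centroid of the lesion object
    boundary = mask & ~binary_erosion(mask)  # lesion pixels touching background
    br, bc = np.nonzero(boundary)
    d = np.hypot(br - r_bar, bc - c_bar)
    sigma = d.std()  # population std, i.e. the 1/P normalization
    return d.mean() / sigma if sigma > 0 else np.inf


def ellipticity(mask):
    """Rosin's moment-based ellipticity: 1 for an ellipse, smaller otherwise."""
    I = mask.astype(float)
    r, c = np.indices(I.shape)
    m00 = I.sum()
    r_bar, c_bar = (r * I).sum() / m00, (c * I).sum() / m00
    mu20 = ((r - r_bar) ** 2 * I).sum()
    mu02 = ((c - c_bar) ** 2 * I).sum()
    mu11 = ((r - r_bar) * (c - c_bar) * I).sum()
    I1 = (mu20 * mu02 - mu11 ** 2) / m00 ** 4  # affine moment invariant
    k = 16 * np.pi ** 2 * I1  # equals 1 for an ideal ellipse
    return k if k <= 1 else 1.0 / k
```

Because the ellipticity measure is affine invariant, any digitized ellipse scores close to 1, whereas a square scores roughly 0.91, well below the 0.979 threshold discussed next.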

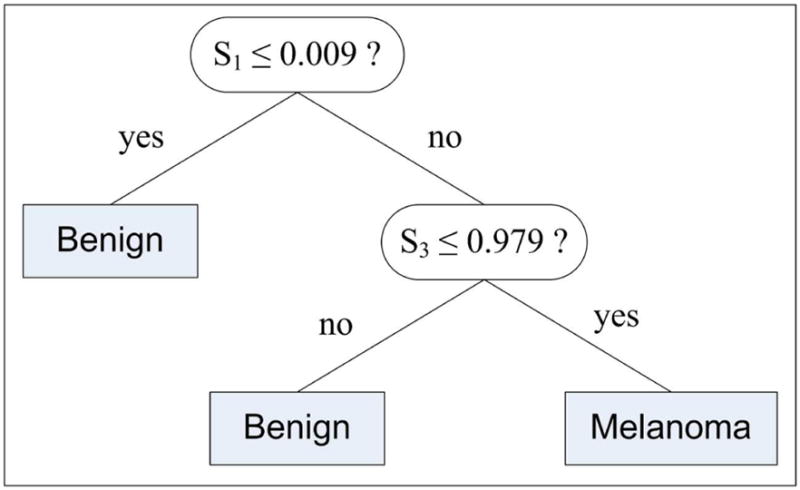

The rationale behind the inclusion of S2 and S3 is that benign lesions with blue-white areas might be distinguished from melanomas by their highly circular (S2) and/or elliptical (S3) shapes. As in the pixel classification procedure, we used the C4.5 algorithm with 10-fold cross-validation to generate a classification model based on the features S1, S2, and S3. Figure 8 shows the induced decision tree.
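The 10-fold cross-validation can be sketched as a fold assignment over the 545 images. The text does not say whether the folds were stratified or how they were seeded; stratifying by diagnosis (so that each fold has roughly the same melanoma proportion) is an assumption here, and `stratified_folds` is a hypothetical helper. The C4.5 training itself is not shown.

```python
import numpy as np


def stratified_folds(labels, k=10, seed=0):
    """Assign each sample to one of k folds, spreading each class evenly."""
    rng = np.random.default_rng(seed)
    labels = np.asarray(labels)
    fold = np.empty(len(labels), dtype=int)
    for cls in np.unique(labels):
        idx = rng.permutation(np.flatnonzero(labels == cls))
        fold[idx] = np.arange(len(idx)) % k  # deal class members round-robin
    return fold
```

With 186 melanomas and 359 other lesions, every fold receives 18 or 19 melanomas. Each fold serves once as the test set while the model is trained on the other nine.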

Figure 8.

Image classification tree

As expected, a lesion is classified as benign if it contains no blue-white areas or only very small ones (S1 < 0.9%). Otherwise, the ellipticity of the lesion is checked: if the lesion is highly elliptical (S3 > 0.979), it is classified as benign; otherwise, it is classified as melanoma. Note that the circularity feature (S2) was discarded by the induction algorithm, possibly because its characteristics are captured by the more general ellipticity feature (S3).
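The lesion-level rule described above can be written as a few lines of Python. S1 is computed as the percentage of lesion pixels in the final veil mask. The thresholds 0.9 and 0.979 come from the text, but whether each boundary case is inclusive is not stated, so the strict comparisons used here are an assumption. The function names are hypothetical.

```python
import numpy as np


def veil_percentage(veil_mask, lesion_mask):
    """S1: percentage of lesion pixels detected as blue-white veil."""
    lesion = lesion_mask.astype(bool)
    veil = veil_mask.astype(bool) & lesion  # count only veil pixels inside the lesion
    return 100.0 * veil.sum() / lesion.sum()


def classify_lesion(s1, s3):
    """Decision rule as described in the text (S2 is not used)."""
    if s1 < 0.9:        # no or very little blue-white area
        return "benign"
    if s3 > 0.979:      # highly elliptical shape, e.g. a blue nevus
        return "benign"
    return "melanoma"
```

For example, a lesion with S1 = 5% and S3 = 0.90 is labeled melanoma, while the same veil percentage with S3 = 0.99 is labeled benign.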

The performance of this decision tree on the entire image set (545 images) was a sensitivity (percentage of correctly classified melanomas) of 69.35% and a specificity (percentage of correctly classified benign lesions) of 89.97%. The overall classification accuracy was 82.94%; this figure covers all detected areas, including structures closely related to the blue-white veil, such as blue-gray or gray veils. On the subset of images known to contain blue-white veil areas (44 benign lesions and 134 melanomas), the sensitivity and specificity were 76.87% and 75.00%, respectively. Finally, on the subset of melanomas (133 cases) for which the blue-white veil is the primary feature, the sensitivity was 78.20%.
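As an arithmetic check, the reported percentages are consistent with the class totals in Section 2.1 (186 melanomas and 545 − 186 = 359 other lesions). The counts used below (129 of 186 melanomas and 323 of 359 non-melanomas correctly classified) are inferred from the percentages, not stated in the text.

```python
def rates(tp, fn, tn, fp):
    """Sensitivity, specificity, and accuracy in percent from confusion counts."""
    sensitivity = 100.0 * tp / (tp + fn)
    specificity = 100.0 * tn / (tn + fp)
    accuracy = 100.0 * (tp + tn) / (tp + fn + tn + fp)
    return sensitivity, specificity, accuracy
```

`rates(129, 57, 323, 36)` gives 69.35%, 89.97%, and 82.94% after rounding. Likewise, 103 of 134 and 33 of 44 reproduce the subset figures of 76.87% and 75.00%, and 104 of 133 gives 78.20%.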

6. CONCLUSIONS

In this article, a machine learning approach to the detection of blue-white veil in dermoscopy images was described. The method comprises several steps: preprocessing, feature extraction, decision tree induction, rule application, and postprocessing. The detected blue-white areas were characterized using a numerical feature, which, in conjunction with an ellipticity measure, yielded a sensitivity of 69.35% and a specificity of 89.97% on a set of 545 dermoscopy images. The presented blue-white veil detector takes a fraction of a second to process a 768 × 512 image on a 2.66 GHz Intel Pentium D computer.

Acknowledgments

This work was supported by grants from NIH (SBIR #2R44 CA-101639-02A2), NSF (#0216500-EIA), Texas Workforce Commission (#3204600182), and James A. Schlipmann Melanoma Cancer Foundation. The permissions to use the images from the CD-ROM Interactive Atlas of Dermoscopy and American Academy of Dermatology DVD on Dermoscopy are gratefully acknowledged.

Biographies

Dr. M. Emre Celebi received his BSc degree in computer engineering from Middle East Technical University (Ankara, Turkey) in 2002. He received his MSc and PhD degrees in computer science and engineering from the University of Texas at Arlington (Arlington, TX, USA) in 2003 and 2006, respectively. He is currently an assistant professor in the Department of Computer Science at the Louisiana State University in Shreveport (Shreveport, LA, USA). His research interests include medical image analysis, color image processing, content-based image retrieval, and open-source software development.

Dr. Hitoshi Iyatomi is a research associate at Hosei University, Tokyo, Japan. He received his BE and ME degrees in electrical engineering and his PhD degree in science for open and environmental systems from Keio University in 1998, 2000, and 2004, respectively. From 2000 to 2004, he was employed by Hewlett Packard Japan. His research interests include intelligent image processing and the development of practical computer-aided diagnosis systems.

William V. Stoecker, MD received the BS degree in mathematics in 1968 from the California Institute of Technology, the MS in systems science in 1971 from the University of California, Los Angeles, and the MD in 1977 from the University of Missouri, Columbia. He is adjunct assistant professor of computer science at the Missouri University of Science and Technology and clinical assistant professor of Internal Medicine-Dermatology at the University of Missouri-Columbia. His interests include computer-aided diagnosis and applications of computer vision in dermatology and development of handheld dermatology databases.

Dr. Randy H. Moss received his PhD in electrical engineering from the University of Illinois, and his BS and MS degrees from the University of Arkansas in the same field. He is now professor of electrical and computer engineering at the Missouri University of Science and Technology. He is an associate editor of both Pattern Recognition and Computerized Medical Imaging and Graphics. His research interests emphasize medical applications, but also include industrial applications of machine vision and image processing. He is a senior member of IEEE and a member of the Pattern Recognition Society and Sigma Xi. He is a past recipient of the Society of Automotive Engineers Ralph R. Teetor Educational Award and the Society of Manufacturing Engineers Young Manufacturing Engineer Award. He is a past National Science Foundation Graduate Fellow and National Merit Scholar.

Footnotes

Publisher's Disclaimer: This is a PDF file of an unedited manuscript that has been accepted for publication. As a service to our customers we are providing this early version of the manuscript. The manuscript will undergo copyediting, typesetting, and review of the resulting proof before it is published in its final citable form. Please note that during the production process errors may be discovered which could affect the content, and all legal disclaimers that apply to the journal pertain.

Contributor Information

Dr. M. Emre Celebi, Email: ecelebi@lsus.edu.

Dr. Hitoshi Iyatomi, Email: iyatomi@hosei.ac.jp.

William V. Stoecker, Email: wvs@mst.edu.

Dr. Randy H. Moss, Email: rhm@mst.edu.

Harold S. Rabinovitz, Email: harold@admcorp.com.

Giuseppe Argenziano, Email: argenziano@tin.it.

H. Peter Soyer, Email: peter.soyer@telederm.eu.

References

- 1. Jemal A, Siegel R, Ward E, et al. Cancer Statistics 2007. CA: A Cancer Journal for Clinicians. 2007;57(1):43–66. doi: 10.3322/canjclin.57.1.43.

- 2. Nachbar F, Stolz W, Merkle T, et al. The ABCD Rule of Dermatoscopy. High Prospective Value in the Diagnosis of Doubtful Melanocytic Skin Lesions. Journal of the American Academy of Dermatology. 1994;30(4):551–559. doi: 10.1016/s0190-9622(94)70061-3.

- 3. Menzies SW, Zalaudek I. Why perform Dermoscopy? The evidence for its role in the routine management of pigmented skin lesions. Archives of Dermatology. 2006;142(9):1211–1222. doi: 10.1001/archderm.142.9.1211.

- 4. Argenziano G, Fabbrocini G, Carli P, et al. Epiluminescence Microscopy for the Diagnosis of Doubtful Melanocytic Skin Lesions: Comparison of the ABCD rule of Dermatoscopy and a New 7-Point Checklist Based on Pattern Analysis. Archives of Dermatology. 1998;134(12):1563–1570. doi: 10.1001/archderm.134.12.1563.

- 5. Argenziano G, Soyer HP, Chimenti S, Talamini R, Corona R, Sera F, et al. Dermoscopy of Pigmented Skin Lesions: Results of a Consensus Meeting via the Internet. Journal of the American Academy of Dermatology. 2003;48(5):679–693. doi: 10.1067/mjd.2003.281.

- 6. Argenziano G, Soyer HP, De Giorgi V, et al. Interactive Atlas of Dermoscopy. Milan, Italy: EDRA Medical Publishing & New Media; 2002.

- 7. Menzies SW, Crotty KA, Ingvar C, McCarthy WH. An Atlas of Surface Microscopy of Pigmented Skin Lesions: Dermoscopy. Australia: McGraw-Hill; 2003.

- 8. Stoecker WV, Li WW, Moss RH. Automatic Detection of Asymmetry in Skin Tumors. Computerized Medical Imaging and Graphics. 1992;16(3):191–197. doi: 10.1016/0895-6111(92)90073-i.

- 9. Lee TK, McLean DI, Atkins MS. Irregularity Index: A New Border Irregularity Measure for Cutaneous Melanocytic Lesions. Medical Image Analysis. 2003;7(1):47–64. doi: 10.1016/s1361-8415(02)00090-7.

- 10. She Z, Fish PJ. Analysis of Skin Line Pattern for Lesion Classification. Skin Research and Technology. 2003;9(1):73–80. doi: 10.1034/j.1600-0846.2003.00370.x.

- 11. Fleming MG, Steger C, Zhang J, et al. Techniques for a Structural Analysis of Dermatoscopic Imagery. Computerized Medical Imaging and Graphics. 1998;22(5):375–389. doi: 10.1016/s0895-6111(98)00048-2.

- 12. Caputo B, Panichelli V, Gigante GE. Toward a Quantitative Analysis of Skin Lesion Images. Studies in Health Technology and Informatics. 2002;90:509–513.

- 13. Yoshino S, Tanaka T, Tanaka M, Oka H. Application of Morphology for Detection of Dots in Tumor. Proc of the SICE Annual Conf. 2004;1:591–594.

- 14. Pellacani G, Grana C, Cucchiara R, Seidenari S. Automated Extraction and Description of Dark Areas in Surface Microscopy Melanocytic Lesion Images. Dermatology. 2003;208(1):21–26. doi: 10.1159/000075041.

- 15. Stoecker WV, Gupta K, Stanley RJ, et al. Detection of Asymmetric Blotches in Dermoscopy Images of Malignant Melanoma Using Relative Color. Skin Research and Technology. 2005;11(3):179–184. doi: 10.1111/j.1600-0846.2005.00117.x.

- 16. Celebi ME, Aslandogan YA, Stoecker WV, et al. Unsupervised Border Detection in Dermoscopy Images. Skin Research and Technology. 2007;13(4):454–462. doi: 10.1111/j.1600-0846.2007.00251.x.

- 17. Celebi ME, Kingravi HA, Iyatomi H, et al. Border Detection in Dermoscopy Images Using Statistical Region Merging. Skin Research and Technology. To appear.

- 18. Hance GA, Umbaugh SE, Moss RH, Stoecker WV. Unsupervised Color Image Segmentation with Application to Skin Tumor Borders. IEEE Engineering in Medicine and Biology. 1996;15(1):104–111.

- 19. Weiss GM, Provost FJ. Learning When Training Data are Costly: the Effect of Class Distribution on Tree Induction. Journal of Artificial Intelligence Research. 2003;19:315–354.

- 20. Sonka M, Hlavac V, Boyle R. Image Processing, Analysis, and Machine Vision. 2nd ed. Pacific Grove, CA: PWS Publishing; 1999.

- 21. Smith JL. Implementing Median Filters in XC4000E FPGAs. Xcell Articles. 1996; Quarter 4.

- 22. Gevers T, Smeulders AWM. Color-Based Object Recognition. Pattern Recognition. 1999;32(3):453–464.

- 23. Stanley RJ, Moss RH, Stoecker WV, Aggarwal C. A Fuzzy-based Histogram Analysis Technique for Skin Lesion Discrimination in Dermatology Clinical Images. Computerized Medical Imaging and Graphics. 2003;27(5):387–396. doi: 10.1016/s0895-6111(03)00030-2.

- 24. Celebi ME, Kingravi HA, Uddin B. A Methodological Approach to the Classification of Dermoscopy Images. Computerized Medical Imaging and Graphics. 2007;31(6):362–373. doi: 10.1016/j.compmedimag.2007.01.003.

- 25. Haralick RM, Shanmugam K, Dinstein I. Textural Features for Image Classification. IEEE Transactions on Systems, Man, and Cybernetics. 1973;SMC-3(6):610–621.

- 26. Clausi DA. An Analysis of Co-occurrence Texture Statistics as a Function of Grey Level Quantization. Canadian Journal of Remote Sensing. 2002;28(1):45–62.

- 27. Sampat MP, Bovik AC, Aggarwal JK, Castleman KR. Supervised Parametric and Non-parametric Classification of Chromosome Images. Pattern Recognition. 2005;38(8):1209–1223.

- 28. Meurie C, Lebrun G, Lezoray O, Elmoataz A. A Comparison of Supervised Pixel-based Color Image Segmentation Methods. Application in Cancerology. WSEAS Transactions on Computers. 2003;2(3):739–744.

- 29. Lawson SW, Parker GA. Intelligent Segmentation of Industrial Radiographs Using Neural Networks. Proc of the SPIE. 1994;2347:245–255.

- 30. Quinlan JR. C4.5: Programs for Machine Learning. San Francisco, CA: Morgan Kaufmann; 1993.

- 31. Oates T, Jensen D. Large Datasets Lead to Overly Complex Models: An Explanation and a Solution. Proc of the 4th Int Conf on Knowledge Discovery and Data Mining. 1998:294–298.

- 32. Pratt WK. Digital Image Processing: PIKS Inside. New York, NY: John Wiley & Sons; 2001.

- 33. Haralick RM. A Measure for Circularity of Digital Figures. IEEE Transactions on Systems, Man, and Cybernetics. 1974;SMC-4(4):394–396.

- 34. Rosin PL. Measuring Shape: Ellipticity, Rectangularity, and Triangularity. Machine Vision and Applications. 2003;14(3):172–184.