Abstract

Background

To improve patient safety, organisations must systematically measure avoidable harms. Clinical surveillance—consisting of prospective case finding and peer review—could improve identification of adverse events (AEs), preventable AEs and potential AEs. The authors sought to describe and compare findings of clinical surveillance on four clinical services in an academic hospital.

Methods

Clinical surveillance was performed by a nurse observer who monitored patients for prespecified clinical events and collected standard information about each event. A multidisciplinary, peer-review committee rated causation for each event. Events were subsequently classified in terms of severity and type.

Results

The authors monitored 1406 patients during their admission to four hospital services: Cardiac Surgery Intensive Care (n=226), Intensive Care (n=211), General Internal Medicine (n=453) and Obstetrics (n=516). The authors detected 245 AEs during 9300 patient days of observation (2.6 AEs per 100 patient days). Of these, 88 AEs (36%) were preventable. The proportion of patients experiencing at least one AE, preventable AE or potential AE was 13.7%, 6.1% and 5.3%, respectively. The risk of experiencing at least one preventable AE varied between services, ranging from 1.4% of Obstetrics patients to 11% of Internal Medicine and Intensive Care patients. The proportion of patients experiencing AEs resulting in permanent disability or death also varied between services, ranging from 0.2% on Obstetrics to 4.9% on Cardiac Surgery Intensive Care. No services shared the most frequent AE type.

Conclusions

Using clinical surveillance, the authors identified a high risk of AE and significant variation in AE risks and subtypes between services. These findings suggest that institutions will need to evaluate service-specific safety problems to set priorities and design improvement strategies.

Keywords: Medical errors, adverse events, prospective surveillance, patient safety, near miss

Introduction

Improving patient safety requires the minimisation of treatment-related harm. This is typically measured as adverse events (AEs) (harms caused by medical care), preventable AEs (harm caused by errors) and potential AEs (errors with the potential for harm). Numerous studies have demonstrated a high incidence of AEs and preventable AEs in hospitalised patients.1–8 These studies have prompted significant investments to improve patient safety.

Detection of AEs and preventable AEs is a primary step to achieving a safe healthcare system. By systematically measuring these outcomes and analysing their causes, healthcare planners may design system changes to prevent them.9–14 Unfortunately, most hospitals and health systems have rudimentary approaches to identify AEs. These approaches include the use of voluntary reporting, chart reviews and scanning of administrative data.15 Each of these methods has important limitations including a failure to report, inconsistent peer review and poor specificity for patient safety problems.13 15–23

Detecting AEs is especially challenging because treatment-related harm is often invisible. When a patient experiences a poor outcome, it is commonly assumed that the outcome was caused by the underlying disease process. While this is frequently true, careful peer review will often determine that medical care was at least partially responsible. Furthermore, adverse outcomes and the circumstances leading to them are often not systematically documented, which can lead to disagreement between peers when chart review is used to identify AEs.24 25

Clinical surveillance is a promising method of AE detection. In this method, a trained observer prospectively monitors patients and providers, both directly and indirectly.26–29 As soon as clinical events are identified, the observer records prespecified information to facilitate subsequent peer review. This avoids the information gaps that arise from incomplete documentation in the patient record.

Clinical surveillance has several advantages over existing AE detection methods. It consists of active surveillance rather than relying solely on voluntary reporting; it ensures timely collection of information relevant to case classification rather than retrospectively relying on what is documented in charts, and it enhances peer review through timely review of cases when reviewers still remember the case. On the other hand, although it has been used successfully for years in tracking surgical complications and healthcare-associated infections, it has not been widely adopted for all AE types in multiple, diverse settings.

In this study, we describe our findings from implementing clinical surveillance in our healthcare facility. We describe a general process and the results obtained during its application in four diverse services at an academic hospital. The comparison of results between services was of particular interest, as it would validate our ability to learn useful information from the programme and would direct strategies for improvement.

Methods

This study took place at a 1000-bed multicampus urban-based academic hospital. The hospital provides tertiary-level patient care, including organ transplants, and is a Level 1 trauma centre. We performed our clinical surveillance activities as part of a research study, which was approved by the Ottawa Hospital Research Ethics Board.

Overview

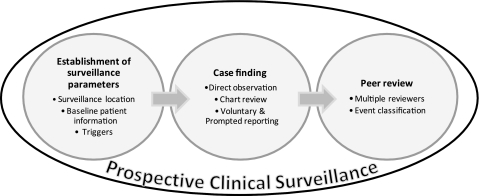

We conducted surveillance sequentially on the following four services: General Internal Medicine, Obstetrics, Intensive Care, and Cardiac Surgery Intensive Care. For each service, we monitored care for 12 weeks using a common method, which was adapted for the service to ensure relevancy to the patients treated. The general method included establishment of surveillance parameters, case finding, peer review and event classification (figure 1).

Figure 1. Prospective clinical surveillance overview.

Establishment of surveillance parameters

Before starting surveillance, we identified several service-specific surveillance parameters describing surveillance location, baseline patient information and triggers.

We prespecified the surveillance location to ensure consistent and representative case identification. On the Obstetrics service, for example, we monitored patients in the case-room, the obstetrical operating room, and the postpartum ward. For Medicine, however, we monitored patients on the ward only. We also prespecified whether we would include care preceding the observation period. For example, for Intensive Care patients we included events leading to unit admission.

We prespecified variables describing baseline patient information to enable consistent case reviews and permit future risk adjustments. For example, when we implemented surveillance on the Medicine service, we collected information regarding the presence of diagnoses associated with poor outcomes.30

Most importantly, we prespecified triggers, which represented the factors we used to prompt case reviews. Trigger tool methodology has been used successfully in other institutions to address specific AE types such as adverse drug events,18 19 31–37 hospital-acquired infections38 and surgical complications,39–41 so we adapted the concept to create a more generalised model for the identification of all types of AEs in various settings. For each service, we reviewed the literature to identify previously published risk factors for adverse outcomes. A multidisciplinary team specific to each service then determined the face validity of these triggers and discussed additional triggers. Consensus was used to define the final set of triggers. The triggers represent clinical phenomena, pharmacy orders and system events relevant to the patients treated. For example, ‘stat Caesarean section’ was a system event on the Obstetrics service, while ‘unplanned OR return’ was used on the Cardiac Surgery Intensive Care unit. For all services, we had an ‘Other’ category to account for events that were not predicted a priori. Online appendix 1 contains the triggers we used for the four services.

Case finding

Case finding was performed daily Monday to Friday between 08:00 and 16:00 by a trained nurse (observer). The observer training consisted of a patient safety presentation, relevant literature, practice case report generation and reviews, familiarisation with triggers and service-specific integration. We integrated the observer into the daily activities of the specific service to ensure maximal interaction with the providers and opportunities to observe care yet with minimal disruption to the providers' routine. This meant there were slight differences in how the observations were performed. For example, in the Intensive Care Unit, observations occurred at the bedside during morning team rounds, while on the Medicine service they occurred at the nursing station. The observer was encouraged to ask providers for details if they were not volunteered or documented in the chart.

All patients in the surveillance locations were eligible for case finding. The observer captured baseline information on all patients admitted to the service. Once data were entered, the observer monitored the patient until discharge. The observer identified triggers using a combination of three common AE detection methods: daily interactions with clinicians and administrators to enquire about or discuss trigger events (prompted and voluntary reporting), daily chart reviews and direct observation. If triggers occurred when our observer was not present, we identified them based on information volunteered by providers or from the chart.

Once a trigger was identified, we captured standard information describing the case. The observer generated a case summary using a standard format describing the patient and event in detail, the response to the event, the impact of the response and the documented cause of the response if there was one. This information was compiled by the observer through discussions with the relevant staff on the service.

Peer review

Peer review took place weekly. For all reviews, we used the same definitions and classification systems as those used in the Harvard Medical Practice Study and other patient safety studies (see online appendix 2).1–7 We used a majority opinion to consolidate the ratings of the multiple reviewers.42 Cases were initially classified as outcomes (in which a patient experienced some form of harm, including pain, other distressing symptoms, such as dyspnoea, or reasonable markers for such symptoms such as increases in respiratory rate) or process problems (in which a task did not occur as planned). For example, if a patient experienced a sudden drop in blood pressure, the event was classified as an outcome. On the other hand, if a patient missed a dose of an antibiotic but had no discernible change in status, then the event was classified as a process problem. For outcomes, we first assessed whether they were caused by medical care (ie, an AE). If so, reviewers determined whether the AE was preventable (ie, it was caused by error or was avoidable in some other way). We used six-point Likert scales with a cut-point of 3 for both of these assessments. For processes, reviewers determined whether the procedural or process problem could have caused harm (ie, a potential AE). Again, we used a six-point Likert scale with a cut-point of 3 for this assessment (ie, a score of 4, 5 or 6 indicated a potential AE).
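The rating logic described above can be sketched in a few lines of Python. This is an illustration only, not the study's actual procedure: the function names are hypothetical, and the sketch assumes that each reviewer's six-point rating is dichotomised at the cut-point before the majority is taken, and that a tie counts as not meeting the threshold.

```python
from typing import Sequence

CUT_POINT = 3  # ratings of 4, 5 or 6 on the six-point scale count as positive


def rating_is_positive(rating: int) -> bool:
    """Dichotomise one reviewer's six-point Likert rating at the cut-point."""
    if not 1 <= rating <= 6:
        raise ValueError(f"rating must be between 1 and 6, got {rating}")
    return rating > CUT_POINT


def majority_positive(ratings: Sequence[int]) -> bool:
    """Return True when more than half of the reviewers rate above the cut-point.

    Assumption: a tie is treated as negative.
    """
    if not ratings:
        raise ValueError("at least one rating is required")
    positives = sum(rating_is_positive(r) for r in ratings)
    return positives * 2 > len(ratings)


def classify_outcome(causation: Sequence[int], preventability: Sequence[int]) -> str:
    """Classify an outcome from the causation and preventability ratings."""
    if not majority_positive(causation):
        return "not an AE"
    if majority_positive(preventability):
        return "preventable AE"
    return "AE"


def classify_process_problem(potential_harm: Sequence[int]) -> str:
    """Classify a process problem from the potential-for-harm ratings."""
    if majority_positive(potential_harm):
        return "potential AE"
    return "process problem without potential for harm"


if __name__ == "__main__":
    # Three hypothetical reviewers rating one outcome and one process problem.
    print(classify_outcome(causation=[5, 4, 2], preventability=[2, 3, 5]))
    print(classify_process_problem(potential_harm=[4, 6, 3]))
```

Dichotomising before voting keeps a single extreme rating from outweighing two reviewers who fall on the other side of the cut-point. Averaging the raw scores would be a different design choice and could give different classifications.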

Event classification

Event classification occurred following categorisation of cases as AEs, preventable AEs or potential AEs. For these cases, the observer defined the event's severity and type. For severity, events caused one of the following levels of increasing harm: none; laboratory abnormalities only; symptoms only; temporary disability; permanent disability; or death. Events were also classified according to one or more of the following types: hospital-acquired infection; adverse drug event; procedural complication; operative complication; diagnostic error; management error; anaesthetic complication; obstetrical injury; and system design flaw. As almost all errors could be argued to be system errors, only those events that could not be assigned to another type were deemed to be system design flaws.

Analysis

We described patient baseline characteristics using median and IQR for continuous variables and frequency distributions for categorical variables. We described events in terms of preventability, severity and type, both overall and for each service. We calculated the risk of experiencing at least one event per hospital encounter. We also calculated the event rate in terms of events per patient day of observation. We used SAS version 9.0 for all data management and analyses.

Results

Table 1 describes the patient populations in terms of their baseline characteristics. There were important differences between services. We observed many more patients in Medicine (n=453) and Obstetrics (n=516) than Cardiac Surgery (n=226) or Intensive Care (n=211). Individual patients spent more days on Medicine than Intensive Care, Obstetrics and Cardiac Surgery, with median lengths of stay on the monitored unit of 8 days, 4.8 days, 2.4 days and 2.1 days, respectively. The indications for admission varied extensively between the four services. Medicine patients were older (median age on Medicine, Cardiac Surgery, Intensive Care and Obstetrics was 74, 70, 66 and 30 years, respectively) and frailer (proportion of patients with a Charlson Index of 3 on Medicine, Cardiac Surgery and Intensive Care was 24%, 3% and 7%, respectively).

Table 1. Patients participating in the surveillance development cohort study

| | Cardiac surgery | Intensive care | Internal medicine | Obstetrics |
| --- | --- | --- | --- | --- |
| Patients | N=226 | N=211 | N=453 | N=516 |
| Age, median (IQR) | 70 (61 to 76) | 66 (54 to 75) | 74 (61 to 84) | 30 (26 to 33) |
| Length of stay, median (IQR) | 2.1 (1.1 to 5.0) | 4.8 (2.6 to 9.3) | 8 (5 to 14) | 2.4 (1.9 to 3.1) |
| Female | 68 (30%) | 79 (37%) | 225 (50%) | 516 (100%) |
| Charlson index | | | | |
| 0 | 69 (31%) | 63 (30%) | 78 (17%) | – |
| 1 | 113 (50%) | 94 (45%) | 165 (36%) | – |
| 2 | 37 (16%) | 40 (19%) | 103 (23%) | – |
| 3 | 7 (3%) | 14 (7%) | 107 (24%) | – |
| Diabetes | | | | |
| Mild* | 61 (27%) | 19 (9%) | 63 (14%) | – |
| Severe* | 19 (8%) | 9 (4%) | 76 (17%) | – |
| Cancer | | | | |
| Local | 0 (0%) | 23 (11%) | 61 (13%) | – |
| Metastatic | 0 (0%) | 3 (1%) | 20 (4%) | – |
| Congestive heart failure | 32 (14%) | 17 (8%) | 95 (21%) | – |
| Chronic obstructive pulmonary disease | 31 (14%) | 33 (16%) | 84 (19%) | – |
| Renal disease | 29 (13%) | 17 (8%) | 82 (18%) | – |
| Admit indication (top 10) | CABG: 123 (54%) | Neurological disease: 39 (19%) | Infection: 122 (27%) | Labour: 324 (63%) |
| | Other: 28 (12%) | Elective surgery: 34 (16%) | Fluid, electrolyte: 51 (11%) | Induction: 87 (17%) |
| | AVR: 26 (12%) | Respiratory failure: 30 (14%) | Pulmonary: 45 (10%) | Elective Caesarean section: 56 (11%) |
| | AVR+CABG: 23 (10%) | Other: 25 (12%) | Gastrointestinal: 34 (8%) | Ruptured membranes: 34 (7%) |
| | MVR: 12 (5%) | Trauma: 24 (11%) | Vascular: 34 (8%) | Pregnancy-induced hypertension: 10 (2%) |
| | MVR+CABG: 6 (3%) | Sepsis syndrome: 23 (11%) | Congestive heart failure: 28 (6%) | Maternal illness: 3 (1%) |
| | Multiple valves: 5 (2%) | Emergency surgery: 19 (9%) | Cognitive: 24 (5%) | Antepartum bleeding: 1 (0%) |
| | Multiple valves+CABG: 3 (1%) | Cardiogenic shock: 7 (3%) | Social: 16 (4%) | |
| | | Shock other: 6 (3%) | Haematological: 14 (3%) | |
| | | Dysrhythmia: 3 (1%) | Diabetes mellitus complications: 11 (2%) | |

*Diabetes was classified as mild or severe based on the presence of end organ damage.

AVR, aortic valve replacement; CABG, coronary artery bypass graft; MVR, mitral valve replacement.

Table 2 demonstrates the overall and service-specific AE risk and rate. 192 patients (13.7%) encountered 245 AEs (2.6 events per 100 patient days), 86 patients (6.1%) encountered 88 preventable AEs (0.9 events per 100 patient days), and 75 patients (5.3%) encountered 81 potential AEs (0.9 events per 100 patient days). The greatest number of events was observed on the Medicine service, which accounted for 39% (75/192) of patients with AEs, 56% (48/86) of patients with preventable AEs and 53% (40/75) of patients with potential AEs.

Table 2. Adverse event (AE) risk and rate

| | Overall | Cardiac surgery | Intensive care | Internal medicine | Obstetrics | p value (χ2 test) |
| --- | --- | --- | --- | --- | --- | --- |
| Risk*, N (%) | | | | | | |
| Patients observed | 1406 | 226 | 211 | 453 | 516 | |
| Patients with at least one AE | 192 (13.7%) | 45 (19.9%) | 52 (24.6%) | 75 (16.6%) | 20 (3.9%) | <0.001 |
| Patients with at least one preventable AE | 86 (6.1%) | 8 (3.5%) | 23 (10.9%) | 48 (10.6%) | 7 (1.4%) | <0.001 |
| Patients with at least one potential AE | 75 (5.3%) | 6 (2.7%) | 17 (8.1%) | 40 (8.8%) | 12 (2.3%) | <0.001 |
| Rate†, N (per 100 patient days) | | | | | | |
| Days of observation | 9300 | 1234 | 1592 | 5026 | 1448 | |
| AE rate | 245 (2.6) | 62 (5.0) | 72 (4.5) | 89 (1.8) | 22 (1.5) | <0.001 |
| Preventable AE rate | 88 (0.9) | 8 (0.6) | 23 (1.4) | 50 (1.0) | 7 (0.5) | 0.03 |
| Potential AE rate | 81 (0.9) | 7 (0.6) | 20 (1.3) | 42 (0.8) | 12 (0.8) | 0.24 |

*Risk was calculated as the number of patients with at least one event divided by the total number of patients observed.

†Rate reported as events per 100 patient days, which was calculated as the number of events divided by the number of patient days of observation multiplied by 100.
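The two definitions in the table footnotes can be checked directly against the reported counts. The following Python sketch recomputes the AE risk and rate for each column of Table 2. The function and variable names are illustrative and are not taken from the study's SAS code.

```python
def risk_percent(patients_with_event: int, patients_observed: int) -> float:
    """Risk: patients with at least one event per 100 patients observed."""
    return 100.0 * patients_with_event / patients_observed


def rate_per_100_patient_days(events: int, patient_days: int) -> float:
    """Rate: events per 100 patient days of observation."""
    return 100.0 * events / patient_days


# Counts copied from Table 2 (all AEs).
TABLE_2_AE = {
    "Overall": {"patients": 1406, "patients_with_ae": 192, "events": 245, "days": 9300},
    "Cardiac surgery": {"patients": 226, "patients_with_ae": 45, "events": 62, "days": 1234},
    "Intensive care": {"patients": 211, "patients_with_ae": 52, "events": 72, "days": 1592},
    "Internal medicine": {"patients": 453, "patients_with_ae": 75, "events": 89, "days": 5026},
    "Obstetrics": {"patients": 516, "patients_with_ae": 20, "events": 22, "days": 1448},
}

if __name__ == "__main__":
    for service, row in TABLE_2_AE.items():
        risk = risk_percent(row["patients_with_ae"], row["patients"])
        rate = rate_per_100_patient_days(row["events"], row["days"])
        print(f"{service}: risk {risk:.1f}%, rate {rate:.1f} per 100 patient days")
```

Risk counts each patient once, however many events they had, while the rate counts every event and scales by time observed. This is why a service with short stays can have a modest risk and a high rate.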

The risk of AEs and the risk and rate of preventable AEs were highest for the Intensive Care unit, while the rate of all AEs was highest on Cardiac Surgery (5.0 per 100 patient days). Of the 211 patients observed on Intensive Care, 52 (24.6%) experienced at least one AE, and 23 (10.9%) experienced a preventable AE. The corresponding event rates were 4.5 and 1.4 per 100 patient days for AEs and preventable AEs, respectively. The lowest risk and rate of events occurred on the Obstetrics service. Of the 516 patients observed on Obstetrics, 20 (3.9%) experienced at least one AE, and seven (1.4%) experienced a preventable AE. The corresponding event rates were 1.5 and 0.5 per 100 patient days for AEs and preventable AEs, respectively.
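The between-service comparison of AE risk can be illustrated with a Pearson χ2 test. The Python sketch below rests on an assumption: the paper reports χ2 p values but does not describe the exact test set-up, so the sketch applies the test to the 4×2 table of patients with and without at least one AE. The helper names are hypothetical, and the closed-form tail probability used here is valid only for 3 degrees of freedom (four services, two outcomes).

```python
import math
from typing import Sequence


def chi_square_2xk(events: Sequence[int], totals: Sequence[int]) -> float:
    """Pearson chi-square statistic for event versus no event across k groups."""
    pooled = sum(events) / sum(totals)  # pooled proportion under the null
    statistic = 0.0
    for observed, n in zip(events, totals):
        expected_yes = n * pooled
        expected_no = n * (1.0 - pooled)
        statistic += (observed - expected_yes) ** 2 / expected_yes
        statistic += ((n - observed) - expected_no) ** 2 / expected_no
    return statistic


def chi_square_sf_df3(x: float) -> float:
    """Upper-tail probability of a chi-square variable with 3 degrees of freedom."""
    return math.erfc(math.sqrt(x / 2.0)) + math.sqrt(2.0 * x / math.pi) * math.exp(-x / 2.0)


# Patients with at least one AE and patients observed, from Table 2.
# Order: Cardiac surgery, Intensive care, Internal medicine, Obstetrics.
patients_with_ae = [45, 52, 75, 20]
patients_observed = [226, 211, 453, 516]

statistic = chi_square_2xk(patients_with_ae, patients_observed)
p_value = chi_square_sf_df3(statistic)

if __name__ == "__main__":
    print(f"chi-square = {statistic:.1f}, df = 3, p = {p_value:.2e}")
```

Under these assumptions the resulting p value is far below 0.001, in line with the value reported for this row of Table 2.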

Table 3 describes the severity of all AEs detected. Of all 245 events detected, six (2.5%) were associated with death, and 16 (6.6%) led to permanent disability. Most events resulted in symptoms only (n=75, 30.7%) or led to temporary disability (n=112, 45.9%). The majority of events that led to death or permanent disability occurred in the two critical care areas (Cardiac Surgery Intensive Care and Intensive Care). The risk of AEs causing permanent disability or death varied about 25-fold across services: Cardiac Surgery Intensive Care (11/226=4.9%), Intensive Care (8/211=3.8%), Medicine (2/453=0.4%), Obstetrics (1/516=0.2%).
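The spread in severe-harm risk across services can be recomputed from the counts given in parentheses above. The Python sketch below uses illustrative variable names. It follows the text in dividing the number of severe events by the number of patients observed, which assumes each severe event occurred in a different patient.

```python
# (AEs causing permanent disability or death, patients observed) per service,
# copied from the paragraph above.
SEVERE_AE_COUNTS = {
    "Cardiac Surgery Intensive Care": (11, 226),
    "Intensive Care": (8, 211),
    "Medicine": (2, 453),
    "Obstetrics": (1, 516),
}

# Risk per patient, using unrounded values so the ratio is not distorted.
severe_risk = {
    service: events / patients
    for service, (events, patients) in SEVERE_AE_COUNTS.items()
}

highest = max(severe_risk, key=severe_risk.get)
lowest = min(severe_risk, key=severe_risk.get)
fold_difference = severe_risk[highest] / severe_risk[lowest]

if __name__ == "__main__":
    for service, risk in severe_risk.items():
        print(f"{service}: {100 * risk:.1f}%")
    print(f"{highest} versus {lowest}: {fold_difference:.1f}-fold")
```

Using the unrounded proportions matters here, because the lowest risk is small enough that rounding it to one decimal place shifts the ratio noticeably.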

Table 3. Adverse event severity and type

| | Overall N (%) | Cardiac surgery N (%) | Intensive care N (%) | Medicine N (%) | Obstetrics N (%) | p value (χ2 test) |
| --- | --- | --- | --- | --- | --- | --- |
| Total | 245 (100) | 62 (100) | 72 (100) | 89 (100) | 22 (100) | |
| Severity | | | | | | <0.001 |
| Lab/physiological | 36 (14.7) | 2 (3.2) | 10 (13.9) | 23 (25.8) | 1 (4.6) | |
| Symptoms | 75 (30.7) | 9 (14.5) | 18 (25.0) | 38 (42.7) | 10 (45.5) | |
| Temporary | 112 (45.9) | 40 (64.5) | 36 (50.0) | 26 (29.2) | 10 (45.5) | |
| Permanent | 16 (6.6) | 8 (12.9) | 7 (9.7) | 0 (0.0) | 1 (4.6) | |
| Death | 6 (2.5) | 3 (4.8) | 1 (1.4) | 2 (2.3) | 0 (0.0) | |
| Type | | | | | | <0.001 |
| Procedural complication | 42 (17.1) | 7 (11.3) | 17 (23.6) | 11 (12.4) | 7 (31.8) | |
| Infection | 37 (15.1) | 12 (19.4) | 18 (25.0) | 7 (7.9) | 0 (0.0) | |
| Adverse drug event | 46 (18.8) | 4 (6.5) | 9 (12.5) | 27 (30.3) | 6 (27.3) | |
| Therapeutic error | 49 (20.0) | 5 (8.1) | 14 (19.4) | 22 (24.7) | 8 (36.4) | |
| Surgical complication | 37 (15.1) | 29 (46.8) | 7 (9.7) | 0 (0.0) | 1 (4.6) | |
| Diagnostic error | 10 (4.1) | 3 (4.8) | 4 (5.6) | 3 (3.4) | 0 (0.0) | |
| Falls | 10 (4.1) | 0 (0.0) | 0 (0.0) | 10 (11.2) | 0 (0.0) | |
| System problem | 11 (4.5) | 2 (3.2) | 3 (4.2) | 6 (6.7) | 0 (0.0) | |
| Pressure ulcer | 3 (1.2) | 0 (0.0) | 0 (0.0) | 3 (3.4) | 0 (0.0) | |

Table 3 also describes the types of AEs identified. All AE types were represented, but overall the most common types were therapeutic errors, adverse drug events and procedural complications. The top three AE types differed between services, and no two services shared the same most frequent AE type. For Cardiac Surgery, the top three types were surgical complications (47%), hospital-acquired infections (19%) and procedural complications (11%). For Intensive Care, the top three types were hospital-acquired infections (25%), procedural complications (24%) and therapeutic errors (19%). For Medicine, the top three types were adverse drug events (30%), therapeutic errors (25%) and procedural complications (12%). For Obstetrics, the top three types were therapeutic errors (36%), procedural complications (32%) and adverse drug events (27%).

Discussion

We used clinical surveillance to detect AEs on four hospital services. AE risk per encounter was 14% with a range of 4% to 25% depending on service. Overall, 36% of AEs were preventable, though this was highly variable between services, ranging from 13% to 56%. General Internal Medicine had the greatest number of AEs but also had the highest number of patients and comorbidities. After controlling for length of stay and the number of patients observed, the risk of AEs, including those with the most severe consequences, was greatest in the two critical care areas. There were differences in the types of AEs identified; however, therapeutic errors were common in all subgroups studied. Finally, clinical surveillance identified an important number of potential AEs.
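The preventable share of AEs quoted above follows from the event counts in Table 2. A short Python sketch, with illustrative names, shows the arithmetic for each service and overall.

```python
# (preventable AEs, all AEs) per service, copied from the rate rows of Table 2.
EVENT_COUNTS = {
    "Cardiac surgery": (8, 62),
    "Intensive care": (23, 72),
    "Internal medicine": (50, 89),
    "Obstetrics": (7, 22),
}


def preventable_share(preventable: int, total: int) -> float:
    """Percentage of AEs that were judged preventable."""
    return 100.0 * preventable / total


overall_preventable = sum(p for p, _ in EVENT_COUNTS.values())
overall_total = sum(t for _, t in EVENT_COUNTS.values())

if __name__ == "__main__":
    for service, (preventable, total) in EVENT_COUNTS.items():
        print(f"{service}: {preventable_share(preventable, total):.0f}% preventable")
    print(f"Overall: {preventable_share(overall_preventable, overall_total):.0f}% preventable")
```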

These findings are important because they identify ample opportunities for quality improvement. We found a greater AE risk than most previous studies of AEs, which have tended to rely solely on chart review.1–7 We used a clinical observer to identify events within 24 h of their occurrence, and reviewed them within 7 days of their identification. The timely identification of problems would ultimately mean a more rapid response to rectify them. The reviews were performed by clinicians who knew the clinical setting and were well respected by their local peers. We believe that these factors resulted in classifications with a much greater degree of face validity than existing methods to identify AEs (such as incident reports and chart reviews). These factors also led us to identify a significantly greater proportion of events attributed to diagnostic and therapeutic errors than prior studies. Furthermore, the systematic nature of our data collection ensured our methods were less biased than morbidity and mortality reviews or closed-claims analysis.15 43 44

Our study supports the concept that improvement strategies must be locally developed and must target specific problems. We found that AE risk and type varied by service. The underlying explanation for this finding likely relates to the frequency of underlying processes occurring within these areas that in turn lead to more opportunities to cause harm. Regardless of the cause, it follows that solutions will also necessarily vary. For example, in the Cardiac Surgery Intensive Care unit, interventions should be directed to preventing certain types of surgical complications. On the Medicine service, interventions that focus specifically on reducing errors related to the medication administration process would be most beneficial. The conclusion that errors and their solutions vary by patient service is intuitively obvious but is often not incorporated in the ‘top down’ approaches to improve quality and safety taken by accreditation organisations or other regulators.

Our study also supports the conclusion that priority setting, as it pertains to safety improvement, must occur in a highly strategic manner. The greatest number of AEs occurred on the service with the frailest patients. Effective interventions to improve safety in these patients would produce the largest reduction in the institutional number of events. However, after controlling for the number of patients and length of observation, these patients had a risk of events similar to the least frail patients. The highest event risk and the most severe events occurred in services that had very invasive therapies and where patients were most acutely ill. Therefore, efforts to prevent injuries in these groups might be more efficient and associated with a greater overall impact in terms of reducing the economic burden of AEs. For all these reasons, organisations will have to be very clear about their goals when targeting the safety problems they hope to solve.

Finally, this research has highlighted the feasibility of clinical surveillance. We used a comprehensive strategy involving the use of direct observation, voluntary and prompted reporting, as well as daily chart reviews. This combination of AE detection methods has been recommended by others15 and serves to increase the overall performance of the monitoring system. Our programme was well accepted in the four services studied and required very little financial support: the clinical observer was the only funded position, all other functions required to maintain the process were voluntary, and very little infrastructure support was required. There was no evidence that providers who were being observed were overtly or covertly averse to the programme or changed their behaviours. In fact, the methodology was so well received that our institution is currently taking steps to implement it on other services.

Our findings are important in that we used a reproducible method of case detection and case review applying a standard method of classification to facilitate comparison. However, there are three important limitations which we would like to highlight. First, we are uncertain of the programme's reliability. Threats to reliability include the observer and peer-review processes. We attempted to mitigate these concerns through standardisation of the process of observation using triggers and case report forms; and, for the peer review, the use of multiple reviewers. These approaches have been successful in improving reliability in prior settings. However, we recommend further research before our method be adopted by health systems to compare institutions. Second, the generalisability of our surveillance feasibility is unknown, particularly because it was performed using a single observer. Even though we successfully implemented our programme within multiple settings in different facilities, it is possible that other settings may create challenges. Further work is recommended to establish whether the programme can be successfully adapted within non-teaching hospitals and in different health systems. Third, the observer cannot be everywhere at once and may miss some events as they are happening. This means the programme cannot be used to intervene systematically and directly in patient care when it does not meet the standard. However, this is not the stated purpose of the programme. Furthermore, if cases are detected in which an immediate intervention is warranted, then this appropriate response should be easily performed. For example, we identified a few instances when a critical laboratory abnormality had not been recognised and simply brought it to the attention of the treating team.

In summary, our study supports the conclusion that clinical surveillance appears to be an effective means of detecting patient safety issues. Before making a general recommendation for widely adopting this method, we recommend further evaluations to address four issues. First, we recommend comparing the findings of clinical surveillance with other methods of identifying patient safety events. Second, we recommend an economic analysis to determine the most efficient method of adverse event detection. Third, we would recommend evaluations of this method in different hospitals. Finally, and most importantly, we recommend studies to determine if adverse event detection leads to improvement in patient outcomes.

Acknowledgments

We would like to thank A Jennings for her assistance throughout the study and in the preparation of the manuscript. This research received financial support from the Canadian Patient Safety Institute, the Canadian Institute for Health Research, the Healthcare Insurance Reciprocal of Canada, the University of Ottawa Heart Institute, and the Ottawa Hospital Centre for Patient Safety.

Footnotes

Funding: This research received financial support from the Canadian Patient Safety Institute, the Canadian Institute for Health Research, the Healthcare Insurance Reciprocal of Canada, the University of Ottawa Heart Institute and the Ottawa Hospital Centre for Patient Safety.

Competing interests: None.

Ethics approval: Ethics approval was provided by the Ottawa Hospital Research Ethics Board.

Provenance and peer review: Not commissioned; externally peer reviewed.

References

- 1.Baker GR, Norton PG, Flintoft V, et al. The Canadian Adverse Events Study: the incidence of adverse events among hospital patients in Canada. CMAJ 2004;170:1678–86 [DOI] [PMC free article] [PubMed] [Google Scholar]

- 2.Forster AJ, Asmis TR, Clark HD, et al. Ottawa Hospital Patient Safety Study: incidence and timing of adverse events in patients admitted to a Canadian teaching hospital. CMAJ 2004;170:1235–40 [DOI] [PMC free article] [PubMed] [Google Scholar]

- 3.Brennan TA, Leape LL, Laird NM, et al. Incidence of adverse events and negligence in hospitalized patients. Results of the Harvard Medical Practice Study I. N Engl J Med 1991;324:370–6 [DOI] [PubMed] [Google Scholar]

- 4.Thomas EJ, Studdert DM, Burstin HR, et al. Incidence and types of adverse events and negligent care in Utah and Colorado. Med Care 2000;38:261–71 [DOI] [PubMed] [Google Scholar]

- 5.Vincent C, Neale G, Woloshynowych M. Adverse events in British hospitals: preliminary retrospective record review. BMJ 2001;322:517–19 [DOI] [PMC free article] [PubMed] [Google Scholar]

- 6.Wilson RM, Runciman WB, Gibberd RW, et al. The Quality in Australian Health Care Study. Med J Aust 1995;163:458–71 [DOI] [PubMed] [Google Scholar]

- 7.Davis P, Lay-Yee R, Briant R, et al. Adverse events in New Zealand public hospitals I: occurrence and impact. New Zeal Med J 2002;115:U271. [PubMed] [Google Scholar]

- 8.O'Neil AC, Petersen LA, Cook EF, et al. Physician reporting compared with medical-record review to identify adverse medical events. Ann Intern Med 1993;119:370–6 [DOI] [PubMed] [Google Scholar]

- 9.Cullen DJ, Bates DW, Small SD, et al. The incident reporting system does not detect adverse drug events: a problem for quality improvement. Jt Comm J Qual Improv 1995;21:541–8 [DOI] [PubMed] [Google Scholar]

- 10.Beckmann U, Bohringer C, Carless R, et al. Evaluation of two methods for quality improvement in intensive care: facilitated incident monitoring and retrospective medical chart review. Crit Care Med 2003;31:1006–11 [DOI] [PubMed] [Google Scholar]

- 11.Flynn EA, Barker KN, Pepper GA, et al. Comparison of methods for detecting medication errors in 36 hospitals and skilled-nursing facilities. Am J Health Syst Pharm 2002;59:436–46 [DOI] [PubMed] [Google Scholar]

- 12.Berwick DM, Leape LL. Reducing errors in medicine. BMJ 1999;319:136–7 [DOI] [PMC free article] [PubMed] [Google Scholar]

- 13.Leape LL. Reporting of adverse events. N Engl J Med 2002;347:1633–8 [DOI] [PubMed] [Google Scholar]

- 14. Leape LL. A systems analysis approach to medical error. J Eval Clin Pract 1997;3:213–22

- 15. Thomas EJ, Petersen LA. Measuring errors and adverse events in health care. J Gen Intern Med 2003;18:61–7

- 16. Kellogg VA, Havens DS. Adverse events in acute care: an integrative literature review. Res Nurs Health 2003;26:398–408

- 17. Walshe K. Adverse events in health care: issues in measurement. Qual Health Care 2000;9:47–52

- 18. Murff HJ, Patel VL, Hripcsak G, et al. Detecting adverse events for patient safety research: a review of current methodologies. J Biomed Inform 2003;36:131–43

- 19. Karson AS, Bates DW. Screening for adverse events. J Eval Clin Pract 1999;5:23–32

- 20. Allan EL, Barker KN. Fundamentals of medication error research. Am J Hosp Pharm 1990;47:555–71

- 21. Iezzoni LI. Assessing quality using administrative data. Ann Intern Med 1997;127:666–74

- 22. Bates DW, Evans RS, Murff H, et al. Detecting adverse events using information technology. J Am Med Inform Assoc 2003;10:115–28

- 23. Weinger MB, Slagle J, Jain S, et al. Retrospective data collection and analytical techniques for patient safety studies. J Biomed Inform 2003;36:106–19

- 24. Thomas EJ, Lipsitz SR, Studdert DM, et al. The reliability of medical record review for estimating adverse event rates. Ann Intern Med 2002;136:812–16

- 25. Hayward RA, Hofer TP. Estimating hospital deaths due to medical errors: preventability is in the eye of the reviewer. JAMA 2001;286:415–20

- 26. Forster AJ, Halil RB, Tierney MG. Pharmacist surveillance of adverse drug events. Am J Health Syst Pharm 2004;61:1466–72

- 27. Bates DW, Cullen DJ, Laird N, et al. Incidence of adverse drug events and potential adverse drug events. Implications for prevention. ADE Prevention Study Group. JAMA 1995;274:29–34

- 28. Andrews LB, Stocking C, Krizek T, et al. An alternative strategy for studying adverse events in medical care. Lancet 1997;349:309–13

- 29. Donchin Y, Gopher D, Olin M, et al. A look into the nature and causes of human errors in the intensive care unit. 1995. Qual Saf Health Care 2003;12:143–8

- 30. Charlson ME, Pompei P, Ales KL, et al. A new method of classifying prognostic comorbidity in longitudinal studies: development and validation. J Chronic Dis 1987;40:373–83

- 31. Classen DC, Pestotnik SL, Evans RS, et al. Description of a computerized adverse drug event monitor using a hospital information system. Hosp Pharm 1992;27:774–9

- 32. Rozich JD, Haraden CR, Resar RK. Adverse drug event trigger tool: a practical methodology for measuring medication related harm. Qual Saf Health Care 2003;12:194–200

- 33. Honigman B, Lee J, Rothschild J, et al. Using computerized data to identify adverse drug events in outpatients. J Am Med Inform Assoc 2001;8:254–66

- 34. Hope C, Overhage JM, Seger A, et al. A tiered approach is more cost effective than traditional pharmacist-based review for classifying computer-detected signals as adverse drug events. J Biomed Inform 2003;36:92–8

- 35. Jha AK, Kuperman GJ, Teich JM, et al. Identifying adverse drug events: development of a computer-based monitor and comparison with chart review and stimulated voluntary report. J Am Med Inform Assoc 1998;5:305–14

- 36. Jha AK, Kuperman GJ, Rittenberg E, et al. Identifying hospital admissions due to adverse drug events using a computer-based monitor. Pharmacoepidemiol Drug Saf 2001;10:113–19

- 37. Rothschild JM, Landrigan CP, Cronin JW, et al. The Critical Care Safety Study: The incidence and nature of adverse events and serious medical errors in intensive care. Crit Care Med 2005;33:1694–700

- 38. Haley RW, Culver DH, White JW, et al. The efficacy of infection surveillance and control programs in preventing nosocomial infections in US hospitals. Am J Epidemiol 1985;121:182–205

- 39. Neumayer L, Mastin M, Vanderhoof L, et al. Using the Veterans Administration National Surgical Quality Improvement Program to improve patient outcomes. J Surg Res 2000;88:58–61

- 40. Khuri SF, Daley J, Henderson WG. The comparative assessment and improvement of quality of surgical care in the Department of Veterans Affairs. Arch Surg 2002;137:20–7

- 41. Rowell KS, Turrentine FE, Hutter MM, et al. Use of national surgical quality improvement program data as a catalyst for quality improvement. J Am Coll Surg 2007;204:1293–300

- 42. Forster AJ, O'Rourke K, Shojania KG, et al. Combining ratings from multiple physician reviewers helped to overcome the uncertainty associated with adverse event classification. J Clin Epidemiol 2007;60:892–901

- 43. Vincent C, Taylor-Adams S, Chapman EJ, et al. How to investigate and analyse clinical incidents: clinical risk unit and association of litigation and risk management protocol. BMJ 2000;320:777–81

- 44. Vincent C, Stanhope N, Crowley-Murphy M. Reasons for not reporting adverse incidents: an empirical study. J Eval Clin Pract 1999;5:13–21