Abstract

Can people react to objects in their visual field that they do not consciously perceive? We investigated how visual perception and motor action respond to moving objects whose visibility is reduced, and we found a dissociation between motion processing for perception and for action. We compared motion perception and eye movements evoked by two orthogonally drifting gratings, each presented separately to a different eye. The strength of each monocular grating was manipulated by inducing adaptation to one grating prior to the presentation of both gratings. Reflexive eye movements tracked the vector average of both gratings (pattern motion) even though perceptual responses followed one motion direction exclusively (component motion). Observers almost never perceived pattern motion. This dissociation implies the existence of visual-motion signals that guide eye movements in the absence of a corresponding conscious percept.

Keywords: motion perception, eye movements, visual perception, motor processes

Human brains use visual information to guide conscious perception and motor action, such as eye or hand movements. Whether visual information is processed in the same way for perception and for motor action is much debated. In this study, we investigated the dissociation between motion perception and eye movements with a new approach that allowed us to ask whether eye movements are guided by conscious perception or whether they can reflect unconscious visual processing. We operationally defined conscious perception as explicit perceptual report and assessed unconscious visual processing by measuring reflexive eye movements.

One view in the debate about visual information processing is that visual signals for perception and for motor action are processed in two separate but interacting streams: a vision-for-perception pathway in ventral cortical areas and a vision-for-action pathway in dorsal cortical areas (Goodale & Milner, 1992). Compelling evidence for this view comes from a case study on a patient with damage to the ventral stream. Although this patient was unable to identify the size, shape, or orientation of an object, she could grasp it by opening her hand the correct amount and rotating her wrist to the correct angle in anticipation, actions that require size and orientation information (Goodale, Milner, Jakobson, & Carey, 1991). Neuroimaging and psychophysics studies in humans, and neurophysiology studies in monkeys, support the theory of perception-action dissociation. Milner and Goodale (2006) proposed a separation between the two pathways on the basis of awareness, suggesting that vision-for-perception information is more accessible to consciousness than is vision-for-action information.

Recent evidence for the dissociation between conscious and unconscious processing of visual information comes from binocular-rivalry studies. Almeida, Mahon, Nakayama, and Caramazza (2008) presented images of tools to one eye and images of animals or vehicles to the other eye in an experiment using continuous flash suppression. Although not consciously perceived, suppressed images of tools—manipulable objects related to motor action—produced faster reaction times in a subsequent categorization task than did suppressed images of either animals or vehicles. Moreover, when paired with suppressed images of human faces, suppressed images of tools produced stronger activity in dorsal than in ventral cortical areas (Fang & He, 2005). These results imply that action-related visual information can be processed in the absence of explicit perceptual experience.

It is questionable whether the dissociation between perception and action holds for the processing of motion information. Some psychophysical studies have found a dissociation between speed perception and the speed of smooth-pursuit eye movements, which track moving objects (Spering & Gegenfurtner, 2007; Tavassoli & Ringach, 2010). However, neuronal activity in the middle temporal cortical area (area MT) has been closely linked to both the perception of visual motion (Newsome, Britten, & Movshon, 1989) and the control of pursuit eye movements (Komatsu & Wurtz, 1988). These electrophysiological findings are paralleled in the results of human neuroimaging and patient studies (Huk & Heeger, 2001; Marcar, Zihl, & Cowey, 1997). Furthermore, psychophysical studies show similarities between perception and pursuit eye movements in the precision of direction (Stone & Krauzlis, 2003) and speed judgments (Gegenfurtner, Xing, Scott, & Hawken, 2003; Kowler & McKee, 1987). These results suggest that motion information for perception and motion information for action may be processed in the same cortical areas. They further imply that perception and action are driven by the same visual signals, which should therefore be equally accessible to conscious perception.

Can observers react to a visual object that they do not consciously perceive? In this study, we used a new approach to investigate the dissociation between motion perception and eye movements. We compared perceptual responses and eye movement responses to two orthogonally drifting gratings whose perceptual strength was manipulated through adaptation. The prolonged presentation of a first stimulus—a grating moving in one direction—to one eye resulted in adaptation to that stimulus. The adapted eye was subsequently exposed to the same stimulus again, while a second, unadapted stimulus—a grating whose orientation and motion direction were orthogonal to those of the first stimulus—was presented simultaneously to the other eye. We adapted this procedure from the paradigm of binocular-rivalry flash suppression (Wolfe, 1984; see Method), and it resulted in a stimulus composed of two superimposed gratings differing in perceptual strength: The adapted information was usually perceived as weaker. This approach offers the advantage of presenting two stimuli with the same physical strength to the two eyes, yet producing a percept in which the unadapted stimulus is stronger than the adapted stimulus.

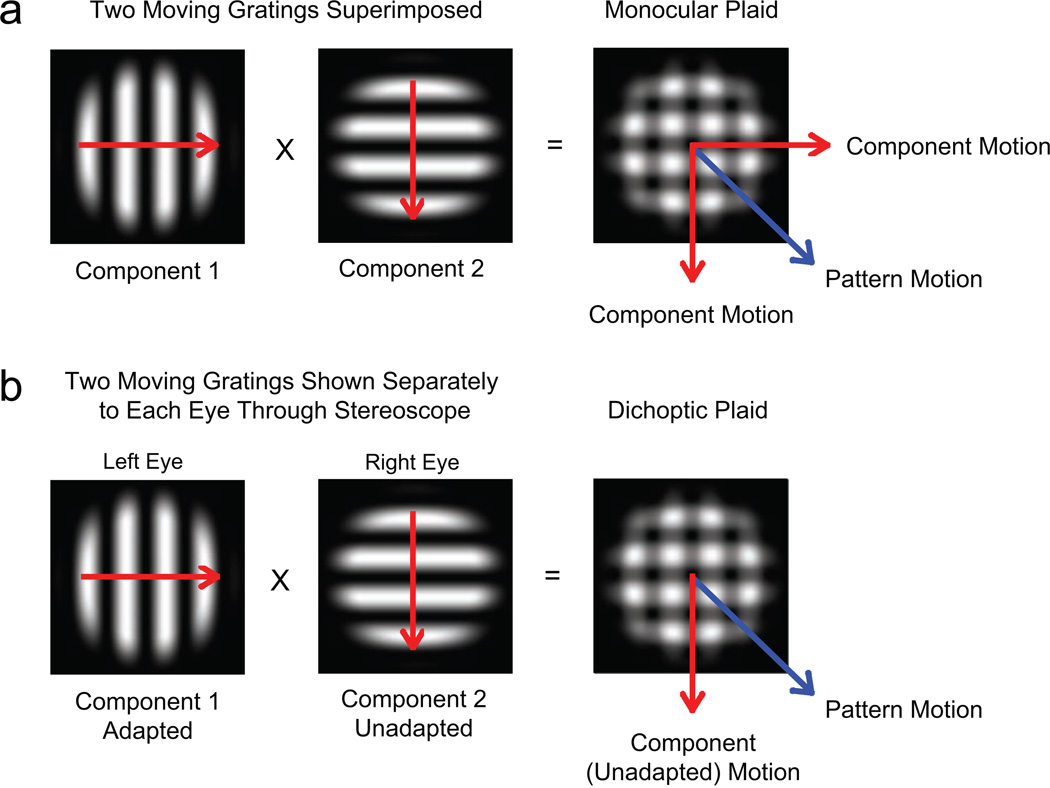

In general, when two gratings that drift in different directions are superimposed in the same eye, the resulting stimulus—a monocular plaid—can be perceived as drifting either in two independent component-motion directions or in a single intermediate pattern-motion direction (Fig. 1a; see Adelson & Movshon, 1982). In our experiments, observers viewed an unadapted motion component with one eye and an adapted motion component with the other eye; this resulted in the perception of a dichoptic plaid stimulus (Fig. 1b). If motion signals from the two components compete, responses should follow the direction of the stronger, unadapted image (component motion); if the two signals are integrated, responses should follow the vector average of the two directions in which the components are moving (pattern motion). In two main and four control experiments, we tested whether perception and eye movements dissociate.
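The two candidate predictions can be made concrete with a small sketch. It assumes a direction convention that the text does not specify (0° = rightward, 90° = upward, angles increasing counterclockwise) and equal component speeds. Under those assumptions, the vector average of one horizontally and one vertically drifting grating always points along a diagonal (the pattern-motion prediction), whereas the component prediction is simply the direction of the unadapted grating.

```python
import math
from itertools import product


def pattern_direction(dir_a_deg: float, dir_b_deg: float) -> float:
    """Direction (deg, 0-360) of the vector average of two equal-speed motions.

    Averaging and summing the two unit vectors give the same direction,
    so the sum is used directly.
    """
    x = math.cos(math.radians(dir_a_deg)) + math.cos(math.radians(dir_b_deg))
    y = math.sin(math.radians(dir_a_deg)) + math.sin(math.radians(dir_b_deg))
    # Rounding removes floating-point residue such as 44.99999999999999.
    return round(math.degrees(math.atan2(y, x)) % 360.0, 6)


# Assumed convention: 0 = right, 90 = up, 180 = left, 270 = down.
HORIZONTAL = {"right": 0, "left": 180}
VERTICAL = {"up": 90, "down": 270}

predictions = {}
for (h_name, h_deg), (v_name, v_deg) in product(HORIZONTAL.items(), VERTICAL.items()):
    predictions[(h_name, v_name)] = pattern_direction(h_deg, v_deg)

for pair, direction in predictions.items():
    print(pair, "pattern-motion direction:", direction)
```

The four pattern-motion predictions are the four diagonals, the same set of directions used for the diagonal control plaids (45°, 135°, 225°, 315°).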

Fig. 1.

Monocular and dichoptic plaid stimuli. A monocular moving plaid stimulus is composed of two superimposed gratings with different orientations and motion directions (a). Observers perceive plaid motion either in two component-motion directions or in the pattern-motion direction. When two gratings with different orientations and directions are presented separately, one to each eye, the images fuse into a dichoptic plaid (b). If one eye is presented with an adapted stimulus, and the other eye is presented with an unadapted stimulus, perceived motion of the resulting dichoptic plaid could be either in the component-motion direction (with a bias toward the direction of the unadapted stimulus) or in the pattern-motion direction.

Method

Observers

Eleven observers (5 females and 6 males; mean age = 33.8 years, SD = 9.1 years) with normal visual acuity were recruited for the study. Eight observers participated in Experiment 1, 5 participated in Experiment 2, and 3 or 4 observers participated in each of the four control experiments. Nine of the observers, graduate students at New York University’s Department of Psychology, were unaware of the purpose of the experiments; the other 2 observers, authors M.S. and M.P., participated in all experiments.

Visual stimuli and setup

In all six experiments, there were two sets of stimuli. The first set consisted of horizontally (90°) or vertically (0°) oriented sine-wave gratings drifting orthogonally to their orientation. The second set consisted of diagonally drifting plaids composed of two superimposed cardinal gratings whose direction and orientation differed by 90°. Spatial frequency (0.5 cycles/degree) and speed (5°/s) were the same for both types of stimuli. All stimuli were multiplied by a two-dimensional, flat-topped Gaussian window to reduce border artifacts, which resulted in a visible stimulus size of 6.7° of visual angle.

Stimuli were presented at 100% contrast on a black background (0.01 cd/m²) on a 21-in. calibrated CRT monitor (Sony Trinitron CPD-G520; 100-Hz refresh rate; 1,280 × 1,024 pixels; 39.8 cm wide × 29.5 cm high). Observers viewed the display from a distance of 48 cm through a chin-rest-mounted four-mirror stereoscope (OptoSigma, Santa Ana, CA). For mirror adjustment and accurate binocular alignment, two sets of white nonius lines (diameter = 1.5°) surrounded by a texture-framed square (13.4° × 13.4°) were presented on each side of the visual field (Fig. 2).
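As a worked example, the reported display geometry can be converted into pixel units. The sketch below uses only the screen width, resolution, viewing distance, refresh rate, spatial frequency, and speed given above. It assumes square pixels, treats the 48-cm viewing distance as the effective optical distance through the stereoscope, and evaluates visual angle at the screen center, so the values are approximate toward the edges.

```python
import math

# Values reported in the Method section
SCREEN_WIDTH_CM = 39.8
SCREEN_WIDTH_PX = 1280
VIEWING_DISTANCE_CM = 48.0   # assumed to be the effective optical distance
REFRESH_HZ = 100
SPATIAL_FREQ_CPD = 0.5       # cycles per degree
SPEED_DEG_PER_S = 5.0
STIMULUS_SIZE_DEG = 6.7


def pixels_per_degree(width_cm: float, width_px: int, distance_cm: float) -> float:
    """Pixels spanned by 1 deg of visual angle at the screen center."""
    cm_per_degree = 2.0 * distance_cm * math.tan(math.radians(0.5))
    return cm_per_degree * width_px / width_cm


ppd = pixels_per_degree(SCREEN_WIDTH_CM, SCREEN_WIDTH_PX, VIEWING_DISTANCE_CM)
period_px = ppd / SPATIAL_FREQ_CPD                     # one grating cycle in pixels
temporal_freq_hz = SPATIAL_FREQ_CPD * SPEED_DEG_PER_S  # cycles passing per second
phase_step = temporal_freq_hz / REFRESH_HZ             # cycles advanced per frame
shift_px_per_frame = SPEED_DEG_PER_S / REFRESH_HZ * ppd
stimulus_px = STIMULUS_SIZE_DEG * ppd

print(f"{ppd:.1f} px/deg, period {period_px:.1f} px, "
      f"{temporal_freq_hz} Hz, {phase_step} cycles/frame, "
      f"{shift_px_per_frame:.2f} px/frame, stimulus {stimulus_px:.0f} px")
```

With these numbers, one degree spans roughly 27 pixels, and each grating drifts at a temporal frequency of 2.5 Hz, advancing by about 1.35 pixels (0.025 cycles) per 10-ms frame.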

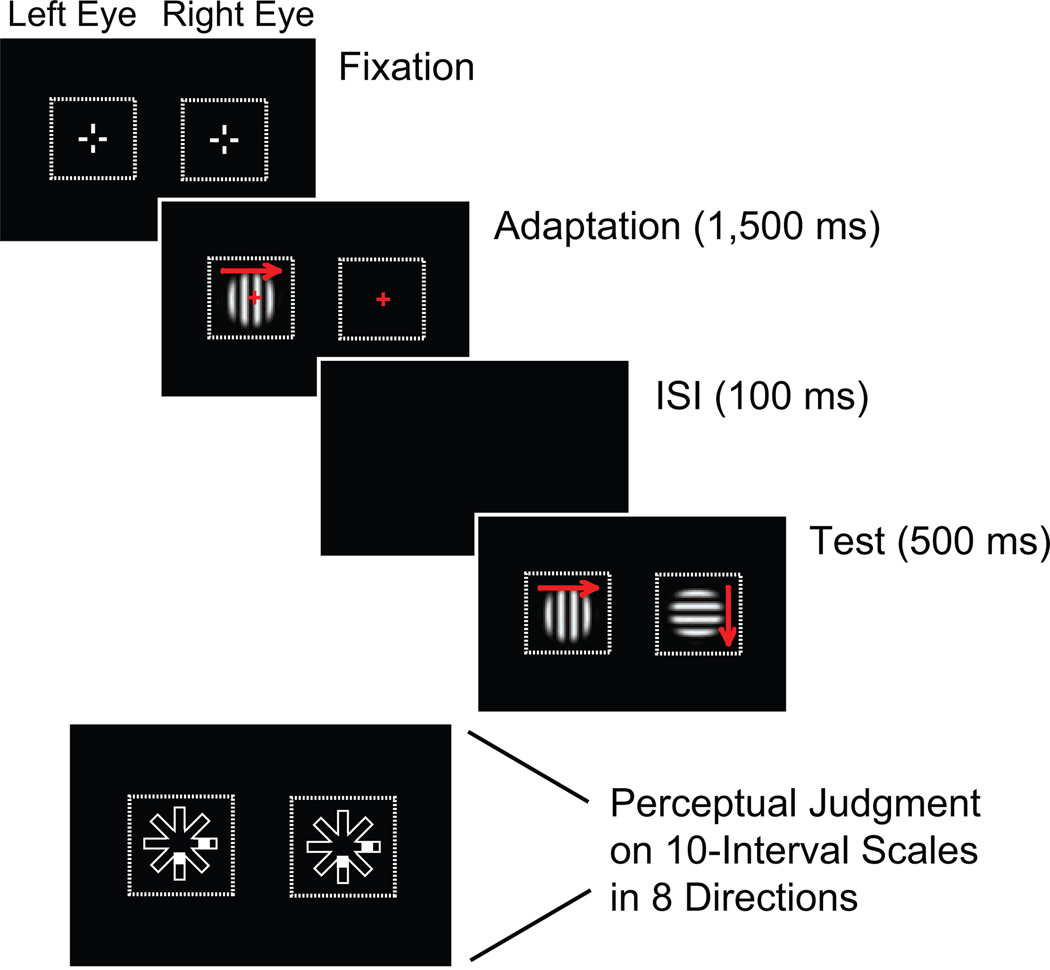

Fig. 2.

Trial timeline showing monocular adaptation for one example condition. At the start of a trial, two sets of nonius lines surrounded by a texture-framed square outlined the target area on each side of the visual field. A grating was then presented to one eye (in this case, the left eye) in order to encourage adaptation to that stimulus while the observer was fixating. After a 100-ms interstimulus interval (ISI), the adapted stimulus was presented again to the same eye, and a different grating was presented to the other eye. In this illustration, red arrows (not present in the actual experiment) show the motion direction of the stimuli. At the end of a trial, a 10-interval, eight-direction judgment scale appeared, and observers recorded their perceptual judgments of motion direction.

Procedure and design

In each of the six experiments, responses in experimental conditions were compared with responses in control conditions. To give the two stimuli presented on experimental trials different perceptual strengths, we used a monocular-adaptation procedure adapted from binocular-rivalry flash suppression (Wolfe, 1984) in all six experiments (Fig. 2). In every trial, regardless of condition, one motion stimulus was first presented to one eye for 1,500 ms (adaptation interval); whether the left or the right eye was adapted was counterbalanced across trials. Adaptation was followed by a 100-ms interstimulus interval. Then, in the test interval, both eyes were stimulated for 500 ms. In the experimental conditions, the adapted eye received the same stimulus again, while the other eye received an unadapted stimulus whose orientation and motion direction were orthogonal to those of the adapted stimulus. In the control conditions, both eyes received the same stimulus that had been shown in the adaptation interval.

In experimental conditions, a grating drifting horizontally (left or right) was presented to one eye, and a grating drifting vertically (up or down) was presented to the other. The adapted eye (left or right) received either the horizontally or the vertically drifting stimulus. There were thus 16 experimental conditions: 2 horizontal directions (left, right) × 2 vertical directions (up, down) × 2 adapted stimuli (horizontal, vertical) × 2 adapted eyes (left, right). In control conditions, the identical stimuli shown to the two eyes were either cardinally drifting gratings (cardinal control directions: 0°, 90°, 180°, 270°) or diagonally drifting plaids (diagonal control directions: 45°, 135°, 225°, 315°), for a total of eight control stimuli. In each trial block, each control stimulus was repeated twice as often as each experimental stimulus combination, so that equal numbers of experimental and control trials were presented. Both cardinal and diagonal control conditions were used in Experiments 1 and 2; only cardinal control conditions were used in Experiments 3 through 6.
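The condition counts can be checked with a short enumeration. This is a sketch under an assumption: it takes the 16 experimental conditions to be the full crossing of horizontal direction, vertical direction, adapted stimulus, and adapted eye, and it treats the eight control stimuli as the units that are doubled. The factor names are hypothetical labels, not the authors' code.

```python
from itertools import product

# Hypothetical factor levels for the experimental conditions.
HORIZONTAL_DIRS = ("left", "right")
VERTICAL_DIRS = ("up", "down")
ADAPTED_STIMULUS = ("horizontal", "vertical")
ADAPTED_EYE = ("left", "right")

experimental = list(product(HORIZONTAL_DIRS, VERTICAL_DIRS, ADAPTED_STIMULUS, ADAPTED_EYE))

# Control stimuli: identical images to both eyes, cardinal gratings or diagonal plaids.
CARDINAL_CONTROLS = (0, 90, 180, 270)
DIAGONAL_CONTROLS = (45, 135, 225, 315)
controls = CARDINAL_CONTROLS + DIAGONAL_CONTROLS

# Each control stimulus is repeated twice per pass through the experimental set,
# which balances experimental and control trials.
control_trials = [direction for direction in controls for _ in range(2)]

print(len(experimental), "experimental conditions")
print(len(controls), "control stimuli ->", len(control_trials), "control trials")
```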

At the end of each trial, observers were asked to report the motion direction of the stimulus. In Experiments 1 and 3 through 6, observers pressed assigned keys to move up to three rectangular markers along 10-interval judgment scales pointing in each of the eight possible physical motion directions (Fig. 2). Observers were instructed to move one marker if they perceived a single component or coherent plaid motion, or two markers if they perceived two individual components; they could also move all three markers to indicate a mixed percept. Greater distance from the center of the scales indicated greater strength of the directional vector. In Experiment 2, observers used a trackball mouse to rotate an arrow and indicate a single perceived motion direction between 1° and 360°. In each experiment, observers completed eight blocks of 72 to 96 trials each over two 60-min sessions. Experimental and control conditions were evenly divided in each set of trials.

Eye movement recordings and preprocessing

In all experiments, observers were instructed to fixate on a red fixation cross in the center of the stimulus during the adaptation interval. Instructions for the test interval differed across experiments. In Experiments 1, 2, 5, and 6, observers received no explicit instruction regarding eye movements, although moving stimuli usually elicit tracking eye movements. In Experiment 3, observers were asked to fixate; in Experiment 4, they were asked to actively track the motion direction of the stimulus. To evaluate eye movements during the test interval, we recorded eye position signals from the left eye with a video-based eye tracker (EyeLink 1000; SR Research, Kanata, Ontario, Canada). Eye movements were analyzed off-line following standard procedures for saccade and onset detection (e.g., Spering & Gegenfurtner, 2007). We excluded trials with blinks (< 0.5% of trials in all experiments) and trials in which fixation was broken (eye position outside a 1° fixation window and eye velocity > 1°/s) during the 500 ms before the start of the test interval (< 2% of trials). This exclusion prevented any systematic influence of larger eye movements that might have occurred in the adaptation interval.
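The fixation-break criterion can be expressed as a per-trial check. The sketch below is illustrative only. It assumes positions in degrees relative to the fixation cross, samples that end at test-interval onset, a circular 1° window, and a 1,000-Hz sampling rate (the rate used is not stated). It also estimates velocity with raw sample-to-sample differences, whereas real pipelines usually filter the signal first.

```python
import math
from typing import Sequence


def broke_fixation(x_deg: Sequence[float], y_deg: Sequence[float],
                   sample_rate_hz: float = 1000.0, window_deg: float = 1.0,
                   velocity_limit_deg_s: float = 1.0,
                   pre_interval_ms: float = 500.0) -> bool:
    """Return True if fixation was broken before test onset.

    A sample counts as a break when the eye is outside the fixation window
    AND moving faster than the velocity limit. Only the final
    `pre_interval_ms` of samples (assumed to end at test onset) are checked.
    """
    n = int(round(pre_interval_ms * sample_rate_hz / 1000.0))
    xs, ys = list(x_deg)[-n:], list(y_deg)[-n:]
    dt = 1.0 / sample_rate_hz
    for i in range(1, len(xs)):
        speed = math.hypot(xs[i] - xs[i - 1], ys[i] - ys[i - 1]) / dt
        outside = math.hypot(xs[i], ys[i]) > window_deg
        if outside and speed > velocity_limit_deg_s:
            return True
    return False


steady = [0.0] * 600                           # eye resting on the fixation cross
drift = [i * 0.01 for i in range(600)]         # 10 deg/s drift leaving the window
print(broke_fixation(steady, steady))          # trial kept
print(broke_fixation(drift, [0.0] * 600))      # trial excluded
```

Because both conditions must hold, an eye resting just outside the window but not moving is not flagged by this rule.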

Analysis of oculomotor and perceptual responses

To determine eye movement direction, we computed the mean point (center of gravity) of the two-dimensional eye position trace from eye movement onset (fixation) to the end of either the trial or the first saccade, whichever occurred earlier. Eye movement direction was defined as the angle of the vector connecting the eye position at eye movement onset to the center of gravity. Eye movement direction was classified as component when it fell within 22.5° of a given cardinal direction (horizontal or vertical) and as pattern when it fell within 22.5° of a diagonal direction. Each perceptual response in Experiment 2 was binned and classified in the same way as eye movement responses. Each perceptual response in Experiments 1 and 3 through 6 was classified as component or pattern depending on whether observers moved a marker in a cardinal or in a diagonal direction, respectively. Judgments in each direction were averaged across trials.
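The direction measure and the 22.5° classification rule can be sketched as follows. The code assumes that the input trace already runs from eye movement onset to the end of the trial or the first saccade (onset and saccade detection are not shown), that positions are in degrees, and that angles use a counterclockwise convention with 0° = rightward, which the text does not specify. The function names are illustrative.

```python
import math
from typing import Sequence


def eye_movement_direction(xs: Sequence[float], ys: Sequence[float]) -> float:
    """Angle (deg, 0-360) of the vector from the onset position to the
    center of gravity (mean point) of the 2-D eye position trace."""
    cx = sum(xs) / len(xs)
    cy = sum(ys) / len(ys)
    return math.degrees(math.atan2(cy - ys[0], cx - xs[0])) % 360.0


def classify(direction_deg: float, half_width_deg: float = 22.5) -> str:
    """'component' if within half_width of a cardinal axis, else 'pattern'.

    Cardinal axes lie at multiples of 90 deg and diagonals halfway between,
    so every angle falls into exactly one of the two classes. An angle
    exactly 22.5 deg from a cardinal is assigned to 'pattern' here.
    """
    offset = direction_deg % 90.0
    distance_to_cardinal = min(offset, 90.0 - offset)
    return "component" if distance_to_cardinal < half_width_deg else "pattern"


# A trace drifting down and to the right along the diagonal
xs = [0.1 * i for i in range(10)]
ys = [-0.1 * i for i in range(10)]
direction = eye_movement_direction(xs, ys)
print(round(direction, 1), classify(direction))
```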

Results

Experiment 1: perception and eye movements are dissociated

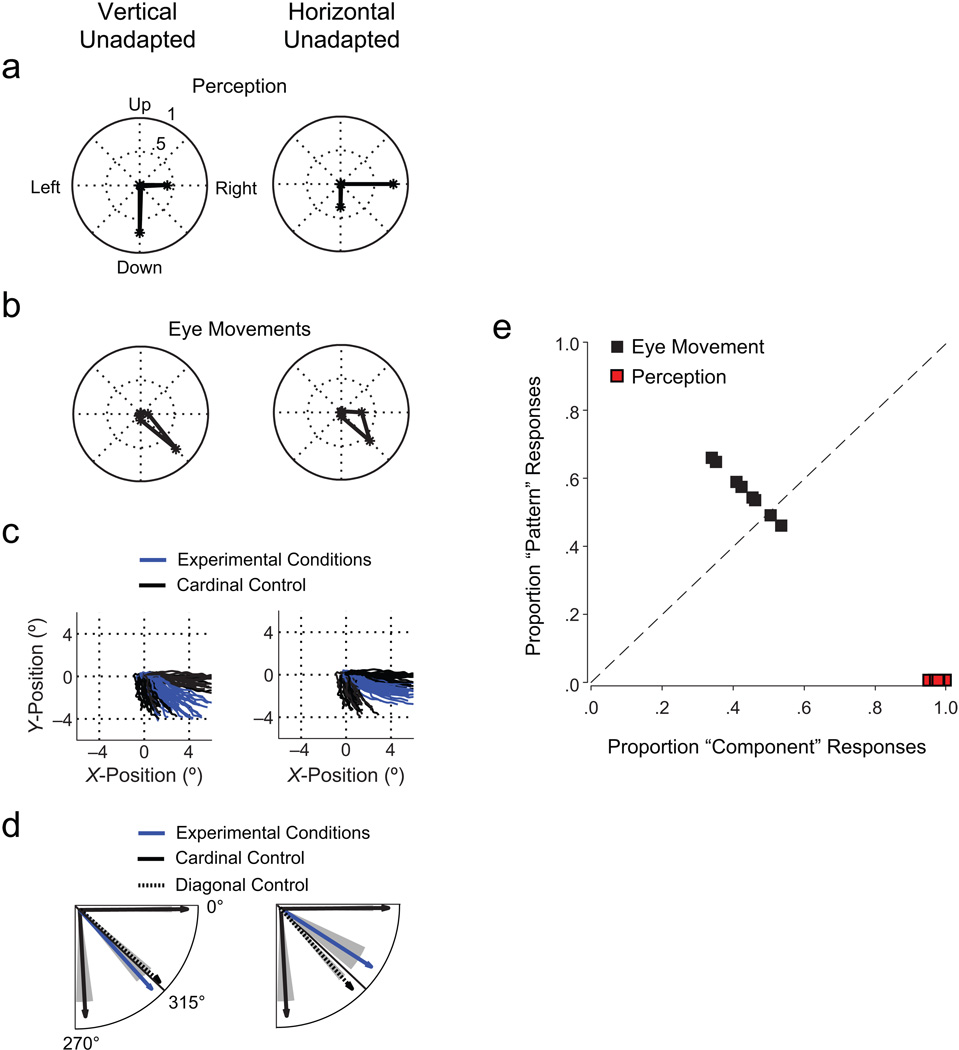

In the experimental conditions, the adaptation procedure successfully weakened the adapted stimulus once the unadapted stimulus was presented. Observers perceived a dichoptic plaid consisting of two independently moving components, a strong (unadapted) grating and a weak (adapted) grating, as if two gratings of different contrast were superimposed. Observers (n = 8) reported mostly perceiving motion in the direction of the unadapted stimulus (component motion). Figure 3a shows examples of directional judgments as mean proportions of trials with a given directional response across observers. Unadapted-stimulus motion was reported more than twice as often as adapted-stimulus motion. It is important to note that observers never reported perceiving pattern motion (see also Figs. S1a and S1b in the Supplemental Material available online).

Fig. 3.

Results from Experiment 1 (n = 8). Panels (a) through (d) show results for two example experimental conditions (vertical unadapted: unadapted stimulus moving down and adapted stimulus moving right; horizontal unadapted: unadapted stimulus moving right and adapted stimulus moving down); panel (e) shows results across all experimental conditions. The graph in (a) shows the mean proportions of perceptual judgments in eight directions (number of trials with judgments in a given direction divided by the total number of trials). The length of the line in each direction indicates the magnitude of the proportion. Note that proportions for unadapted-stimulus and adapted-stimulus motion directions add up to more than 1 because more than one motion direction could be indicated. In (b), the proportions of eye movement directions are shown for eight binned intervals. The graph in (c) shows 1 representative observer’s gaze trajectories in experimental conditions and cardinal control conditions (two identical stimuli moving right or down). Panel (d) shows mean eye movement directions for the same experimental and cardinal control conditions as in (c), as well as for diagonal control conditions; shaded areas show standard errors of the mean. Proportions of perceptual judgments and eye movement responses in the component- and pattern-motion directions for each observer are shown in (e).

Conversely, eye movements followed a motion direction intermediate between the directions of the unadapted and adapted stimuli (pattern motion; Fig. 3b, see also Figs. S1a and S1b in the Supplemental Material). Although observers received no eye movement instruction for the test interval, they made smooth tracking movements in 98% of all trials (latency: M = 156.7 ms, SD = 47.8 ms). The results shown in Figures 3a and 3b are based on classifying each eye movement vector as representing either component or pattern motion according to its angle. Eye position traces (Fig. 3c) revealed that eye movements followed an equally weighted average of the motion vectors from the unadapted and adapted gratings.

We compared these results with eye movements in two control conditions that we expected to yield component and pattern motion, respectively. In these conditions, identical stimuli were shown binocularly in the test phase: either two gratings moving in the same cardinal direction (cardinal control) or two plaids moving in the same diagonal direction (diagonal control). As expected (Adelson & Movshon, 1982; Masson & Castet, 2002), eye movements in response to cardinal control stimuli always followed component motion, whereas eye movements in response to diagonal plaids always followed pattern motion (Fig. 3d; see also Fig. S1c in the Supplemental Material). Eye movement responses in the experimental conditions differed significantly from responses to cardinal control stimuli in seven out of eight comparisons (two-tailed paired t tests, p < .001; mean Cohen's d = 3.2), but they differed from responses to diagonal control stimuli in only one out of eight comparisons of motion directions (mean d = 0.4 across all comparisons). These differences can be seen in Figure 3d by comparing eye movement responses in the experimental conditions with those to cardinal and diagonal control stimuli.
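For readers who want to reproduce this kind of comparison, the sketch below computes a paired t statistic and one common paired-samples effect size, Cohen's d_z (mean difference divided by the standard deviation of the differences). The text does not say which variant of d was used, so d_z is an assumption, and the example values are made up for illustration. The sketch uses only the standard library; a two-tailed p value for the same test is available from scipy.stats.ttest_rel.

```python
import math
import statistics
from typing import Sequence, Tuple


def paired_t_and_dz(a: Sequence[float], b: Sequence[float]) -> Tuple[float, float, int]:
    """Paired t statistic, Cohen's d_z, and degrees of freedom.

    a and b hold one value per observer (e.g., mean eye movement direction
    in an experimental and a control condition), in the same observer order.
    """
    if len(a) != len(b) or len(a) < 2:
        raise ValueError("need two equal-length samples with at least 2 pairs")
    diffs = [x - y for x, y in zip(a, b)]
    n = len(diffs)
    mean_diff = statistics.mean(diffs)
    sd_diff = statistics.stdev(diffs)          # sample SD with n - 1 denominator
    dz = mean_diff / sd_diff
    t = mean_diff / (sd_diff / math.sqrt(n))   # equivalently dz * sqrt(n)
    return t, dz, n - 1


# Hypothetical per-observer mean directions (deg), for illustration only
experimental = [312.0, 318.5, 309.2, 321.0]
cardinal_control = [271.0, 268.4, 272.9, 270.1]
t, dz, df = paired_t_and_dz(experimental, cardinal_control)
print(f"t({df}) = {t:.2f}, d_z = {dz:.2f}")
```

Subtracting raw angles is only safe when no value wraps around 0°/360°, as in these example values.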

We classified perceptual and oculomotor responses in each trial for each observer as component motion or pattern motion. Across observers, oculomotor responses corresponded to pattern motion in 57% of all trials (Fig. 3e), even though conscious perceptual responses corresponded to component motion in 100% of all trials. Observers did, however, always report diagonal motion when shown diagonal control stimuli, which indicates that they reported pattern motion whenever they perceived it (Fig. S1d in the Supplemental Material).

In summary, the crucial finding of Experiment 1 is that perception and eye movements were dissociated: Perception followed component motion, and pattern motion was unperceived. Eye movements followed pattern motion in the absence of a corresponding conscious percept. Apparently, motion signals of unequal perceptual strength were integrated equally for the control of eye movements.

Experiment 2: comparable judgments in perception and action

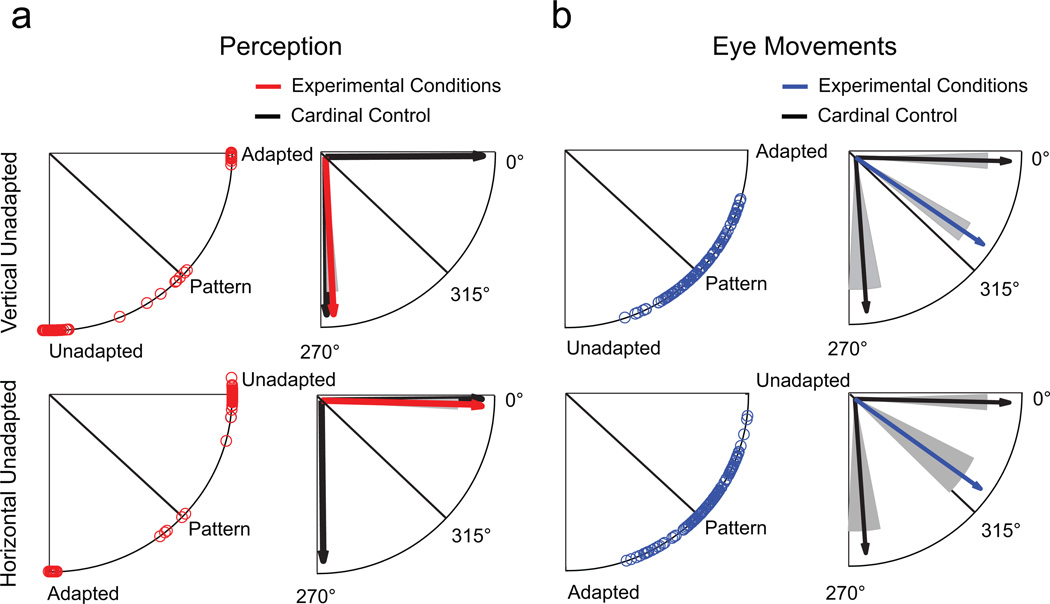

The perceptual index in Experiment 1 allowed observers to indicate the motion directions of both the unadapted and the adapted images. This method has the advantage of yielding more than one response, thereby closely matching observers’ perception of a plaid with two components moving in different directions; it reflects the perceived strength of each component rather than a single integrated motion direction of the plaid. However, eye movements are mostly conjugate: The two eyes move together and can follow only one direction at a time within a continuous 360° range. To compare perceptual and oculomotor responses directly, we therefore asked observers in Experiment 2 (n = 5) to choose one perceived motion direction (as opposed to up to three in Experiment 1) by using a trackball mouse to rotate an arrow around a 360° circle.

Eye movement results were similar to those in Experiment 1: Eye movements followed pattern motion in 61% of all trials. Figure 4b shows that the circular distribution of individual eye movement responses was clearly clustered around the pattern-motion direction. Responses in the experimental conditions differed from responses to cardinal control stimuli in all eight t tests (p < .001; mean d = 4.8) but differed significantly from responses to diagonal control stimuli in only two out of eight comparisons (p < .05; mean d = 0.6 across all comparisons). When forced to report only one motion direction, participants favored unadapted-stimulus motion in 91.3% of trials (Fig. 4a). By contrast, they reported the adapted-stimulus motion direction in 6.5% of trials and the pattern-motion direction in only 1.7% of trials (the remaining 0.5% were error trials). We conclude that the findings of Experiment 1 were not due to differences in the judgment options available for perceptual and eye movement responses; rather, they reflect a true dissociation between perception and action.
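Because response directions live on a circle, averaging them as ordinary numbers can fail near 0°/360°: responses of 350° and 10° have an arithmetic mean of 180° but point rightward on average. The text does not say how the mean angles in Figure 4 were computed; the sketch below shows the standard circular mean (the direction of the summed unit vectors) together with the mean resultant length, a 0-to-1 index of how tightly responses cluster. The function names are illustrative.

```python
import math
from typing import Optional, Sequence


def circular_mean_deg(angles_deg: Sequence[float]) -> Optional[float]:
    """Mean direction in degrees (0-360), or None if the vectors cancel."""
    s = sum(math.sin(math.radians(a)) for a in angles_deg)
    c = sum(math.cos(math.radians(a)) for a in angles_deg)
    if math.hypot(s, c) < 1e-9:
        return None
    return math.degrees(math.atan2(s, c)) % 360.0


def resultant_length(angles_deg: Sequence[float]) -> float:
    """Mean resultant length R: 1 = identical directions, 0 = no clustering."""
    n = len(angles_deg)
    s = sum(math.sin(math.radians(a)) for a in angles_deg) / n
    c = sum(math.cos(math.radians(a)) for a in angles_deg) / n
    return math.hypot(s, c)


responses = [350.0, 10.0]
print(sum(responses) / len(responses))   # naive mean, misleading here
print(circular_mean_deg(responses))      # rightward (0 deg, modulo rounding near 360)
```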

Fig. 4.

Results in Experiment 2 for the same two example conditions as in Figure 3. The upper four illustrations show results for vertically moving unadapted stimuli, and the lower four show results for horizontally moving unadapted stimuli. The circular distribution of individual perceptual responses is shown on the left side of (a), and mean angles for experimental conditions and cardinal control conditions are shown on the right side. The circular distribution of individual eye movement responses is shown on the left side of (b), and mean angles in the experimental conditions and control conditions are shown on the right side. Shaded areas indicate standard errors of the mean.

In all six experiments, we recorded movements of only the left eye, which received the unadapted stimulus on half of the trials and the adapted stimulus on the other half; data were averaged across both cases. We therefore needed to rule out the possibility that eye movements followed pattern motion merely as an artifact of this averaging. If the adapted eye had simply been driven by the input to the eye receiving the unadapted stimulus (because eye movements are conjugate), both eyes should have followed component (unadapted) motion. Correspondingly, the pattern of results should differ when the data are analyzed separately according to whether the recorded eye received the unadapted or the adapted stimulus. We found that eye movements followed pattern motion in all conditions, regardless of the stimulus viewed by the recorded eye (see Fig. S2 in the Supplemental Material). This result further supports our claim that motion perception and eye movements were truly dissociated.

Control experiments

To study where in the visuomotor processing stream a separation of visual signals for perception and for motor action might arise, we conducted two control experiments to determine which type of eye movement was elicited by adapted visual-motion information. In Experiment 3, observers had to fixate throughout the trial; in Experiment 4, they had to actively track the motion direction of the dichoptic plaid. If the elicited eye movements were voluntary, observers in Experiment 3 should be able to suppress them; if the eye movements were reflexive, the instructions should not matter in either experiment. In these two experiments, both the perceptual judgment and the eye movements were addressed explicitly within the same task, thereby matching the level of scrutiny required from both systems.

Eye movements were elicited more often when observers were asked to track motion (Experiment 4; eye movements in 99% of all trials) than when they were asked to fixate (Experiment 3; eye movements in 84% of trials). The main results of both experiments were similar to those of Experiments 1 and 2: Perception followed component motion, whereas eye movements followed pattern motion, regardless of the instructions given (Fig. S3 in the Supplemental Material; proportions of trials in each direction are based on the total number of trials with detected eye movements). Because observers largely could not suppress them even when instructed to fixate, the elicited eye movements can be classified as reflexive. With a latency of around 150 ms, these eye movements resemble the initial phase of optokinetic nystagmus, an involuntary tracking movement that is driven by large, moving visual patterns and relies on many of the same cortical-processing structures as smooth-pursuit eye movements do.

We conducted two other control experiments to evaluate whether our findings generalize to stimuli of different size (1.6°, Experiment 5) or speed (10°/s, Experiment 6). The results (illustrated in Fig. S4 in the Supplemental Material) were similar to the results of Experiments 1 and 2; perception followed component motion, whereas eye movements followed pattern motion.

Discussion

We compared motion processing in perception and eye movements using dichoptic plaids, whose components differed in perceptual but not in physical strength. Our data showed that perception almost always followed component motion rather than pattern motion and was strongly biased in the direction of the unadapted, stronger image. However, eye movements followed pattern motion more often than component motion, presumably averaging the available motion signals from the unadapted and adapted stimuli. This pattern of results held regardless of whether observers received explicit eye movement instructions.

Taken together, these findings indicate that observers’ eye movements were driven by a motion signal that did not produce a corresponding conscious percept: They moved their eyes in one motion direction while consciously perceiving another. These findings have two major implications: First, motion perception and reflexive eye movements can be dissociated, which indicates that there are differences in motion processing for perception and action. Second, reflexive eye movements can potentially serve as objective indicators of unconscious visual processing.

Are there separate motion-processing pathways for perception and action?

On the one hand, the present results are consistent with the proposition that perception and motor action are processed in two separate but interacting visual pathways (Goodale & Milner, 1992). On the other hand, physiological evidence strongly suggests that there is a common locus of motion processing in area MT for perception and for eye movements—both smooth-pursuit eye movements and reflexive optokinetic nystagmus (e.g., Groh, Born, & Newsome, 1997; Komatsu & Wurtz, 1988; Newsome, Wurtz, Dürsteler, & Mikami, 1985; Newsome et al., 1989). In addition, psychophysical studies suggest similar processing mechanisms for perception and eye movements (Gegenfurtner et al., 2003; Stone & Krauzlis, 2003). Our results indicate that either processing pathways or processing mechanisms are different. How can we reconcile these findings?

Physiological studies on motion processing have focused on area MT and the adjacent middle superior temporal area. However, a possible separation between perception and action might well occur before or after the cortical motion-processing stage. The present results suggest that information from a weakened visual-motion signal is more likely to reach brain areas responsible for reflexive motor action than areas mediating explicit motion perception. Two anatomically separate but interconnected pathways, the retino-geniculo-striate pathway and the retinotectal pathway, process visual signals that drive reflexive eye movements. The retinotectal pathway directly connects the retina to the superior colliculus and brainstem through the nucleus of the optic tract, as well as through the pulvinar nucleus of the thalamus. Both the nucleus of the optic tract and the pulvinar have connections to area MT (Berman & Wurtz, 2008; Distler & Hoffmann, 2008) and are involved in the initiation of the optokinetic nystagmus (Büttner-Ennever, Cohen, Horn, & Reisine, 1996). Recent studies suggest that this pathway may carry partially suppressed motion information that is not processed to the same extent in the retino-geniculo-striate pathway. In patients with blindsight, the retinotectal pathway has been associated with residual visual abilities (Huxlin et al., 2009; Weiskrantz, 2004) and, in the case of the superior colliculus, with the translation of unperceived visual signals into motor outputs (Tamietto et al., 2010). Moreover, neuronal activity in the pulvinar has been found to be uncorrelated with perceptual reports of stimulus visibility (Wilke, Müller, & Leopold, 2009).

Are there different motion-processing mechanisms for perception and action?

Alternatively, motion information for perception and for eye movements may not be processed separately but rather by the same neuronal populations, with motion information weighted differently for perception and action. Whereas perceptual judgments in our experiments were consistent with a weighted average biased toward the unadapted stimulus, eye movements were consistent with an equally weighted average.
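The contrast between a weighted and an equally weighted average can be illustrated numerically. In this hypothetical sketch, the unadapted grating moves down (270°) and the adapted grating moves right (0°), using a counterclockwise convention with 0° = rightward; the weight given to the adapted component is a free parameter, not a value estimated in the study.

```python
import math


def weighted_direction(unadapted_deg: float, adapted_deg: float, w_adapted: float) -> float:
    """Direction (deg, 0-360) of a weighted vector average of two unit motions.

    The adapted component gets weight w_adapted, the unadapted one 1 - w_adapted.
    """
    w_unadapted = 1.0 - w_adapted
    x = (w_unadapted * math.cos(math.radians(unadapted_deg))
         + w_adapted * math.cos(math.radians(adapted_deg)))
    y = (w_unadapted * math.sin(math.radians(unadapted_deg))
         + w_adapted * math.sin(math.radians(adapted_deg)))
    return math.degrees(math.atan2(y, x)) % 360.0


# Hypothetical weights: 0.5 is the equally weighted (pattern-motion) case.
for w in (0.0, 0.1, 0.25, 0.5):
    print(f"w_adapted = {w:.2f} -> {weighted_direction(270.0, 0.0, w):.1f} deg")

# Largest adapted weight that keeps the average within 22.5 deg of 270 deg
w_limit = math.tan(math.radians(22.5)) / (1.0 + math.tan(math.radians(22.5)))
print(f"component bin kept while w_adapted < {w_limit:.3f}")
```

Under these assumptions, any adapted weight below about 0.29 yields a direction that the 22.5° classification rule would still place in the component bin, whereas equal weights give the diagonal pattern-motion direction.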

One possible explanation for this finding is that perception and action have different contrast-sensitivity thresholds. In our study, adaptation may have reduced the apparent contrast of the adapted grating (Gutnisky & Dragoi, 2008; Kohn, 2007; Pestilli, Viera, & Carrasco, 2007), so that its motion content may have been strong enough to influence eye movements but not perception. The threshold for combining information from both eyes would then be lower for eye movements than for perception, resulting in reflexive eye movements toward unperceived pattern motion and perception of component motion. Our results suggest that adapted motion information carries more weight for eye movements than for motion perception, which indicates that eye movements might be more sensitive to low-visibility motion information.
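The threshold account can be expressed as a toy model. In this sketch, every number is hypothetical: the adapted grating has an effective strength of 0.4 (relative to 1.0 for the unadapted grating), and each system includes the adapted component in an equally weighted average only if that strength exceeds the system's threshold, which is assumed to be lower for eye movements than for perception. It illustrates the logic of the explanation, not a fitted model.

```python
import math

ADAPTED_STRENGTH = 0.4      # hypothetical effective strength after adaptation
PERCEPTION_THRESHOLD = 0.6  # hypothetical
EYE_THRESHOLD = 0.2         # hypothetical, lower than the perceptual threshold


def gated_direction(unadapted_deg: float, adapted_deg: float,
                    adapted_strength: float, threshold: float) -> float:
    """Equally weighted vector average if the adapted component passes the
    threshold; otherwise the unadapted (component) direction alone."""
    directions = [unadapted_deg]
    if adapted_strength > threshold:
        directions.append(adapted_deg)
    x = sum(math.cos(math.radians(d)) for d in directions) / len(directions)
    y = sum(math.sin(math.radians(d)) for d in directions) / len(directions)
    return math.degrees(math.atan2(y, x)) % 360.0


# Unadapted grating moving down (270 deg), adapted grating moving right (0 deg)
percept = gated_direction(270.0, 0.0, ADAPTED_STRENGTH, PERCEPTION_THRESHOLD)
eye = gated_direction(270.0, 0.0, ADAPTED_STRENGTH, EYE_THRESHOLD)
print(f"perception: {percept:.1f} deg, eye movements: {eye:.1f} deg")
```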

Another possible explanation is that perception and action could have different underlying noise levels. Motion information for the two behaviors could be processed either by different neuronal subpopulations in area MT with different signal-to-noise properties (Spering & Gegenfurtner, 2007; Tavassoli & Ringach, 2010) or by the same neuronal population, with noise added closer to the output stage, where sensory and motor pathways are separate (Gegenfurtner et al., 2003). Either alternative could explain our results. In any case, our findings cannot be explained by the traditional idea that motion perception and eye movements are processed in the same way and share the same neuronal pathways.
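The second alternative, in which one neuronal population is read out by two pathways that each add their own noise downstream, can be expressed in standard signal detection terms. In the minimal sketch below, a common signal passes through a shared noisy stage and then through pathway-specific late noise; because independent Gaussian noise sources add in variance, the sensitivity (d') of each readout is the signal divided by the square root of the summed variances. All parameter values are hypothetical and serve only to show that a single shared signal can support different sensitivities.

```python
import math


def dprime(signal, shared_sd, late_sd):
    """Sensitivity of a readout that sees signal + shared noise + its own late noise.

    Independent Gaussian noise sources add in variance, so the total SD is
    sqrt(shared_sd**2 + late_sd**2); d' is the signal divided by that SD.
    """
    return signal / math.sqrt(shared_sd ** 2 + late_sd ** 2)


weak_signal = 1.0       # hypothetical strength of the adapted component
shared_sd = 0.5         # hypothetical noise in the shared sensory stage
eye_late_sd = 0.5       # hypothetical late noise in the oculomotor readout
percept_late_sd = 2.0   # hypothetical late noise in the perceptual readout

d_eye = dprime(weak_signal, shared_sd, eye_late_sd)
d_percept = dprime(weak_signal, shared_sd, percept_late_sd)

print(round(d_eye, 2))      # 1.41
print(round(d_percept, 2))  # 0.49
```

In this sketch the two readouts share every bit of sensory information; their sensitivity to the weak component differs only because of the noise added after the shared stage.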

Can reflexive eye movements indicate unperceived motion?

We showed that reflexive eye movements followed unperceived pattern motion and were therefore more sensitive than conscious perception to weak visual-motion signals. This result differs from the findings of several previous studies of reflexive eye movements during binocular rivalry, which reported that perception and eye movements both followed the same motion direction, either component motion (Enoksson, 1963; Fox, Todd, & Bettinger, 1975; Logothetis & Schall, 1990; Wei & Sun, 1998) or pattern motion (Sun, Tong, Yang, Tian, & Hung, 2002; for perceptual reports of pattern motion in binocular rivalry, see Andrews & Blakemore, 1999; Cobo-Lewis, Gilroy, & Smallwood, 2000; Tailby, Majaj, & Movshon, 2010).

Three differences in stimuli and timing between binocular rivalry and our adaptation paradigm may explain the discrepancy between these reports and our findings. First, whereas binocular rivalry usually produces complete dominance of one stimulus over the other (suppressed) stimulus, our large stimulus size produced a stronger unadapted stimulus and a weaker adapted stimulus. Second, in binocular rivalry, stimuli are usually presented for 1.5 s to 30 s, whereas we used a shorter presentation time (500 ms). Third, some of these previous studies used oppositely drifting gratings, for which vector averaging predicts zero net velocity, whereas we used orthogonally drifting gratings, for which vector averaging predicts pattern motion. Vector averaging is a dominant mechanism for integrating multiple motion signals in area MT (Groh et al., 1997; Lisberger & Ferrera, 1997) and for guiding eye movements (Lisberger & Ferrera, 1997; Spering & Gegenfurtner, 2008), and the eye movement data in the present study are consistent with these previous findings. In summary, reflexive eye movements, which are not under voluntary control, can serve as an objective indicator of unconscious visual processing following monocular adaptation.
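The different predictions for the two stimulus geometries follow directly from vector averaging. The minimal sketch below averages two equal-speed component velocities; the unit speed and the specific directions are illustrative assumptions. Oppositely drifting gratings cancel to (near) zero velocity, whereas orthogonal gratings yield a pattern direction midway between the components, at about 0.71 times the component speed.

```python
import math


def velocity(speed, direction_deg):
    """Cartesian velocity (vx, vy) for a given speed and direction in degrees."""
    rad = math.radians(direction_deg)
    return speed * math.cos(rad), speed * math.sin(rad)


def vector_average(velocities):
    """Unweighted mean of a list of 2-D velocity vectors."""
    n = len(velocities)
    return (sum(v[0] for v in velocities) / n,
            sum(v[1] for v in velocities) / n)


# Oppositely drifting gratings (0 and 180 deg): the average is ~zero velocity.
opposite = vector_average([velocity(1.0, 0.0), velocity(1.0, 180.0)])

# Orthogonally drifting gratings (0 and 90 deg): pattern motion at 45 deg.
orthogonal = vector_average([velocity(1.0, 0.0), velocity(1.0, 90.0)])

print(round(math.hypot(*opposite), 6))                                   # 0.0
print(round(math.degrees(math.atan2(orthogonal[1], orthogonal[0])), 1))  # 45.0
print(round(math.hypot(*orthogonal), 3))                                 # 0.707
```

With oppositely drifting gratings, a vector-averaging eye movement system would therefore predict no net tracking, so such stimuli cannot distinguish averaging from rivalry-like alternation as clearly as orthogonal gratings can.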

Is the perception-action dissociation adaptive?

Our findings suggest that motion perception and eye movements might be driven by different computational mechanisms (weighted and linear averaging, respectively) and may be mediated to some degree by separate neuronal populations, either within the same pathway or within partly separate pathways. What is the utility of this dissociation? We speculate that perception and eye movements respond optimally to different situational and task requirements. On the one hand, the perceptual system may be best suited for situations that require a detailed analysis of local elements, and such analyses would lead to a decision that weights the available information according to signal strength. On the other hand, reflexive eye movements are a fast, involuntary, and potentially imprecise orienting response to averaged global motion, based on information that observers do not always consciously perceive. Reflexive motor responses may reflect a tighter sensation-action coupling, which would result in faster processing and therefore be more suitable when time is critical. The ability to act on unperceived visual information while simultaneously evaluating consciously perceived components may facilitate successful interaction with a complex and dynamic visual environment.

Acknowledgments

We thank Mike Landy, Larry Maloney, Brian McElree, Eli Merriam, Gregory Murphy, Ulrike Rimmele, and members of the Carrasco laboratory for helpful comments. Part of this study was presented at the 9th Annual Meeting of the Vision Sciences Society in Naples, FL (Spering, Pomplun, & Carrasco, 2009).

Funding

This work was supported by a German Research Foundation fellowship (DFG 1172/1-1) to M.S., by National Eye Institute Grant NEI-R15EY017988 to M.P., and by National Institutes of Health Grant R01-EY016200 to M.C.

Footnotes

Declaration of Conflicting Interests

The authors declared that they had no conflicts of interest with respect to their authorship or the publication of this article.

Supplemental Material

Additional supporting information may be found at http://pss.sagepub.com/content/by/supplemental-data

References

- Adelson EH, Movshon JA. Phenomenal coherence of moving visual patterns. Nature. 1982;300:523–525. doi: 10.1038/300523a0.

- Almeida J, Mahon BZ, Nakayama K, Caramazza A. Unconscious processing dissociates along categorical lines. Proceedings of the National Academy of Sciences, USA. 2008;105:15214–15218. doi: 10.1073/pnas.0805867105.

- Andrews TJ, Blakemore C. Form and motion have independent access to consciousness. Nature Neuroscience. 1999;2:405–406. doi: 10.1038/8068.

- Berman RA, Wurtz RH. Exploring the pulvinar path to visual cortex. Progress in Brain Research. 2008;171:467–473. doi: 10.1016/S0079-6123(08)00668-7.

- Büttner-Ennever JA, Cohen B, Horn AKE, Reisine H. Efferent pathways of the nucleus of the optic tract in monkeys and their role in eye movements. Journal of Comparative Neurology. 1996;373:90–107. doi: 10.1002/(SICI)1096-9861(19960909)373:1<90::AID-CNE8>3.0.CO;2-8.

- Cobo-Lewis AB, Gilroy LA, Smallwood TB. Dichoptic plaids may rival but their motions can integrate. Spatial Vision. 2000;13:415–429. doi: 10.1163/156856800741298.

- Distler C, Hoffmann KP. Private lines of cortical visual information to the nucleus of the optic tract and dorsolateral pontine nucleus. Progress in Brain Research. 2008;171:363–368. doi: 10.1016/S0079-6123(08)00653-5.

- Enoksson P. Binocular rivalry and monocular dominance studied with optokinetic nystagmus. Acta Ophthalmologica. 1963;41:544–563. doi: 10.1111/j.1755-3768.1963.tb03568.x.

- Fang F, He S. Cortical responses to invisible objects in the human dorsal and ventral pathways. Nature Neuroscience. 2005;8:1380–1385. doi: 10.1038/nn1537.

- Fox R, Todd S, Bettinger LA. Optokinetic nystagmus as an indicator of binocular rivalry. Vision Research. 1975;15:849–853. doi: 10.1016/0042-6989(75)90265-5.

- Gegenfurtner KR, Xing D, Scott BH, Hawken MJ. A comparison of pursuit eye movement and perceptual performance in speed discrimination. Journal of Vision. 2003;3(11):19. doi: 10.1167/3.11.19. Retrieved January 29, 2008, from http://journalofvision.org/3/11/19/

- Goodale MA, Milner AD. Separate visual pathways for perception and action. Trends in Neurosciences. 1992;15:20–25. doi: 10.1016/0166-2236(92)90344-8.

- Goodale MA, Milner AD, Jakobson LS, Carey DP. A neurological dissociation between perceiving objects and grasping them. Nature. 1991;349:154–156. doi: 10.1038/349154a0.

- Groh JM, Born RT, Newsome WT. How is a sensory map read out? Effects of microstimulation in visual area MT on saccades and smooth pursuit eye movements. Journal of Neuroscience. 1997;17:4312–4330. doi: 10.1523/JNEUROSCI.17-11-04312.1997.

- Gutnisky DA, Dragoi V. Adaptive coding of visual information in neural populations. Nature. 2008;452:220–224. doi: 10.1038/nature06563.

- Huk AC, Heeger DJ. Pattern motion responses in human visual cortex. Nature Neuroscience. 2001;5:72–75. doi: 10.1038/nn774.

- Huxlin KR, Martin T, Kelly K, Riley M, Friedman DI, Burgin WS, Hayhoe M. Perceptual relearning of complex visual motion after V1 damage in humans. Journal of Neuroscience. 2009;29:3981–3991. doi: 10.1523/JNEUROSCI.4882-08.2009.

- Kohn A. Visual adaptation: Physiology, mechanisms, and functional benefits. Journal of Neurophysiology. 2007;97:3155–3164. doi: 10.1152/jn.00086.2007.

- Komatsu H, Wurtz RH. Relation of cortical areas MT and MST to pursuit eye movements: I. Localization and visual properties of neurons. Journal of Neurophysiology. 1988;60:580–603. doi: 10.1152/jn.1988.60.2.580.

- Kowler E, McKee SP. Sensitivity of smooth eye movement to small changes in target velocity. Vision Research. 1987;27:993–1015. doi: 10.1016/0042-6989(87)90014-9.

- Lisberger SG, Ferrera VP. Vector averaging for smooth pursuit eye movements initiated by two moving targets in monkeys. Journal of Neuroscience. 1997;17:7490–7502. doi: 10.1523/JNEUROSCI.17-19-07490.1997.

- Logothetis NK, Schall JD. Binocular motion rivalry in macaque monkeys: Eye dominance and tracking eye movements. Vision Research. 1990;30:1409–1419. doi: 10.1016/0042-6989(90)90022-d.

- Marcar VL, Zihl J, Cowey A. Comparing the visual deficits of a motion blind patient with the visual deficits of monkeys with area MT removed. Neuropsychologia. 1997;35:1459–1465. doi: 10.1016/s0028-3932(97)00057-2.

- Masson GS, Castet E. Parallel motion processing for the initiation of short-latency ocular following in humans. Journal of Neuroscience. 2002;22:5149–5163. doi: 10.1523/JNEUROSCI.22-12-05149.2002.

- Milner AD, Goodale MA. The visual brain in action. 2nd ed. Oxford, England: Oxford University Press; 2006.

- Newsome WT, Britten KH, Movshon JA. Neuronal correlates of a perceptual decision. Nature. 1989;341:52–54. doi: 10.1038/341052a0.

- Newsome WT, Wurtz RH, Dürsteler MR, Mikami A. Deficits in visual motion processing following ibotenic acid lesions of the middle temporal visual area of the macaque monkey. Journal of Neuroscience. 1985;5:825–840. doi: 10.1523/JNEUROSCI.05-03-00825.1985.

- Pestilli F, Viera G, Carrasco M. How do attention and adaptation affect contrast sensitivity? Journal of Vision. 2007;7(7):9. doi: 10.1167/7.7.9. Retrieved March 17, 2010, from http://www.journalofvision.org/content/7/7/9

- Spering M, Gegenfurtner KR. Contrast and assimilation in motion perception and smooth pursuit eye movements. Journal of Neurophysiology. 2007;98:1355–1363. doi: 10.1152/jn.00476.2007.

- Spering M, Gegenfurtner KR. Contextual effects on motion perception and smooth pursuit eye movements. Brain Research. 2008;1225:76–85. doi: 10.1016/j.brainres.2008.04.061.

- Spering M, Pomplun M, Carrasco M. Differential effects of suppressed visual motion information on perception and action during binocular rivalry flash suppression. Journal of Vision. 2009;9(8):285. Retrieved April 22, 2010, from http://www.journalofvision.org/content/9/8/285

- Stone LS, Krauzlis RJ. Shared motion signals for human perceptual decisions and oculomotor actions. Journal of Vision. 2003;3(11):7. doi: 10.1167/3.11.7. Retrieved January 29, 2008, from http://www.journalofvision.org/content/3/11/7

- Sun F, Tong J, Yang Q, Tian J, Hung GK. Multidirectional shifts of optokinetic responses to binocular-rivalrous motion stimuli. Brain Research. 2002;944:56–64. doi: 10.1016/s0006-8993(02)02706-3.

- Tailby C, Majaj NJ, Movshon JA. Binocular integration of pattern motion signals by MT neurons and by human observers. Journal of Neuroscience. 2010;30:7344–7349. doi: 10.1523/JNEUROSCI.4552-09.2010.

- Tamietto M, Cauda F, Corazzini LL, Savazzi S, Marzi CA, Goebel R, et al. Collicular vision guides nonconscious behavior. Journal of Cognitive Neuroscience. 2010;22:888–902. doi: 10.1162/jocn.2009.21225.

- Tavassoli A, Ringach DL. When your eyes see more than you do. Current Biology. 2010;20:93–94. doi: 10.1016/j.cub.2009.11.048.

- Wei M, Sun F. The alternation of optokinetic responses driven by moving stimuli in humans. Brain Research. 1998;813:406–410. doi: 10.1016/s0006-8993(98)01046-4.

- Weiskrantz L. Roots of blindsight. Progress in Brain Research. 2004;144:229–241. doi: 10.1016/s0079-6123(03)14416-0.

- Wilke M, Müller K-M, Leopold DA. Neural activity in the visual thalamus reflects perceptual suppression. Proceedings of the National Academy of Sciences, USA. 2009;106:9465–9470. doi: 10.1073/pnas.0900714106.

- Wolfe JM. Reversing ocular dominance and suppression in a single flash. Vision Research. 1984;24:471–478. doi: 10.1016/0042-6989(84)90044-0.