Abstract

There is some evidence that faces with a happy expression are recognized better than faces with other expressions. However, little is known about whether this happy-face advantage also applies to perceptual face matching, and whether similar differences exist among other expressions. Using a sequential matching paradigm, we systematically compared the effects of seven basic facial expressions on identity recognition. Identity matching was quickest when a pair of faces had an identical happy, sad, or neutral expression, slower when they had a fearful, surprised, or angry expression, and slowest when they had a disgusted expression. Faces with a happy, sad, fearful, or surprised expression were matched faster than those with an angry or disgusted expression when the second face in a pair had a neutral expression. These results demonstrate that effects of facial expression on identity recognition are not limited to happy-faces when a learned face is immediately tested. The results suggest different influences of expression in perceptual matching and long-term recognition memory.

Keywords: facial expression, identity recognition, face matching

Introduction

Most studies on face recognition have employed images of faces displaying a neutral expression. However, it has been demonstrated that faces with different expressions may not be equally memorable. For example, there have been numerous reports that happy-faces are remembered better than faces with a neutral (Baudouin et al., 2000) or angry expression (D’Argembeau et al., 2003; Savaskan et al., 2007). In addition, happy-faces are recognized more accurately than faces with a surprise or fear expression (Shimamura et al., 2006). The happy-face advantage is not limited to unfamiliar faces. Famous or familiar people are also recognized faster with a happy than with a neutral or angry expression (Endo et al., 1992; Kaufmann and Schweinberger, 2004; Gallegos and Tranel, 2005). Furthermore, facial expression has been shown to influence familiarity ratings, such that faces with a happy expression are perceived as being more familiar than the same faces with a neutral or negative expression (Baudouin et al., 2000; Lander and Metcalfe, 2007). D’Argembeau et al. (2003) attributed the advantage to the social meaning of emotional expressions in relation to the self. D’Argembeau and Van Der Linden (2007) further suggested that the affective meaning of facial expressions automatically modulates the encoding of facial identity in memory. Together, these results suggest that the happy-face advantage may reflect a higher-level appraisal of emotionally positive and negative signals.

There are, however, a few disparate findings (Johansson et al., 2004; Sergerie et al., 2005), where memory for faces with negative expressions is better than for faces with a positive or neutral expression. A potential cause of the discrepancy may be the way the face stimuli have been manipulated. Some studies have compared expressions on different faces, producing results that reflect differences between the faces (for example, distinctiveness, Valentine, 1991) rather than the expressions themselves. Thus, it is important to compare the effects of the expressions on the same face identities. Nevertheless, even when the contribution from individual face identities is controlled, significantly more angry face identities can be stored in visual short-term memory than happy or neutral face identities (Jackson et al., 2009). In the Jackson et al. (2009) study, the task mainly involved working memory; thus, emotional valence may serve different functions in short- and long-term memory. Indeed, the authors interpreted the angry face advantage in terms of its special behavioral relevance for short-term memory, and attempted to exclude alternative accounts such as face discriminability and low-level feature recognition. If negative emotions enhance short-term memory, and positive emotions facilitate long-term memory, then the different roles of these emotions are consistent with a valence theory.

Because most studies to date have focused on the effect of expression on long-term face memory, it is difficult to determine whether the happy-face advantage is due to encoding or memory consolidation processes. If it is only due to consolidation, the effect of expression may be different for a short-term memory task. To investigate this, we employed a matching task that relies mainly on a direct perceptual comparison between two face images. A study by Levy and Bentin (2008; Experiment 2) assessed the effect of facial expression on identity matching, but only employed two expressions (happiness and disgust). They found no difference between the results for the two. However, to fully evaluate the effects of expression on identity processing, it is necessary to include a full range of basic emotional expressions such as sadness, fear, and surprise, because results for happy and disgust expressions alone cannot determine whether identity recognition for faces with these two expressions is superior or inferior to recognition for faces with other expressions.

A major alternative to the valence account for the advantage of an expression on identity recognition concentrates on distinctive physical characteristics of the expression. For example, after quantifying the changes in the configuration of facial features (i.e., mouth, nose, eyes, and eyebrows) associated with different facial expressions, Johnston et al. (2001) noted that happy expressions involve relatively fewer changes that overlap with those of other emotional expressions. This observation is consistent with results of computational studies, where face representations with a smile were found to create finer discrimination among faces (Yacoob and Davis, 2002; Li et al., 2008). The happy advantage could therefore be attributed to the physical shape of smiling faces, rather than the emotional valence of happiness per se. Goren and Wilson (2006) suggested that the magnitude of emotion category boundaries may arise from geometric similarity between them. They also pointed out that the category boundaries of some emotions are clear (e.g., happiness and anger), while others are very small (e.g., fear and sadness).

Given that effects of expression on identity recognition can be explained by valence as well as physical features, comparing identity matching across seven basic expressions can be a useful test of the two theories. The valence-based theory would predict an order of advantage correlated with the valence dimension, where the effect of a positive emotion could be stronger than that of a neutral expression, followed by the negative emotions, which should lead to lower performance. On the other hand, identity recognition may be based on physical features that are not necessarily correlated with emotional valence. That is, two emotional expressions, irrespective of their valence, should result in similar identity recognition performance as long as their within-expression discriminability based on physical features is similar. To determine whether the advantage of a particular expression for identity matching can be explained by this account, we measured physical similarity to find out whether image pairs with this expression are more similar to each other than image pairs for other expressions. To define the physical similarity between the two faces in each trial, we employed an objective measure, the Structural SIMilarity Index (SSIM), developed by Wang et al. (2004). SSIM takes into account perceived changes in global structural information. We adopted SSIM because it is an improved measure over traditional measurements of similarity such as peak signal-to-noise ratio and mean squared error. Moreover, the design of SSIM is inspired by human vision and has been shown to be highly consistent with subjective ratings of image similarity (Wang et al., 2004).

To fully understand the influence of expression on identity matching, we tested identity matching not only when a pair of faces had the same expression (Experiment 1) but also when a face changed from an emotional to a neutral expression (Experiment 2). It is known that image differences created by facial expressions affect face recognition. Relative to a baseline where no change of expression occurs, changing from one expression to another can impair recognition of an unfamiliar face in both memory and matching tasks (Bruce, 1982; Chen and Liu, 2009). Apart from investigating whether identity recognition is affected differently by facial expressions in each experiment, this study allowed us to assess, by comparing the results of the two experiments, whether certain expressions produce less impairment from expression change in identity recognition.

Similar to expression change, it is also well known that face recognition is susceptible to errors when a face is trained and tested in different poses (see Liu et al., 2009, for a review). Recognition becomes less pose dependent or more image invariant when a face is well learned. We examined whether faces with some expressions transfer better to another pose than faces with other expressions. To assess whether recognition of some emotional faces is less affected by pose change than others, we presented the face pairs either in the same pose or a different pose in our experiments.

In the face recognition literature, expression, pose, and other sources of image transformation are often used to test the extent to which face recognition is image invariant. One of our primary interests is to find out whether effects of expression on identity recognition are image invariant. If an observer can only identify a face in the same picture, the behavior would be more adequately classified as picture recognition rather than face recognition. This distinction is equally important for understanding how expression processing affects identity recognition. In reality, a face displaying emotional expression routinely projects different poses and sizes on the retina. It is therefore important to study whether the effect of expression is consistent under these conditions.

To evaluate how general expression processing affects identity matching, we employed both own-race and other-race face stimuli. Although it is well known that both identity matching and emotion recognition are worse for faces of different ethnicity (Meissner and Brigham, 2001; Elfenbein and Ambady, 2002; Hayward et al., 2008), no research to date has investigated whether the effect of expression on identity recognition generalizes to other-race faces. According to a recent social categorization account of the other-race effect, merely classifying faces as out-group members could impair configural face processing (Bernstein et al., 2007). If the same social categorization bias weakens processing of facial expression of out-group faces, the advantage of certain expressions in identity processing may not apply to other-race faces. Our study was able to test this prediction based on the theory. We were also able to assess whether perceptual expertise modulates the role of expression processing on face recognition.

In sum, the present research intends to contribute to the ongoing debate about the relationship between identity and expression processing in face perception (Bruce and Young, 1986; Calder and Young, 2005). However, unlike most studies, whose primary concern is to determine whether facial expression influences face recognition, our primary aim is to determine whether the seven major categories of expression impact face recognition differently. In particular, we attempt to address the following new questions about the way facial expressions influence identity recognition. First, apart from the happy expression advantage, are there any differences among other basic categories of expression for identity recognition? Second, given that the happy-face advantage for unfamiliar faces has mainly been reported in recognition memory tasks, can the effect be generalized to perceptual matching, which does not involve long-term memory? Lastly, we ask whether the happy-face and any other expression advantages for identity recognition are best explained by emotional valence or by their special physical features.

Materials and Methods

Participants

Participants were undergraduate students from the University of Hull and the University of Manchester. All participants were Caucasian. Written consent was acquired from each participant prior to each experiment. Experiment 1 had 149 participants with a mean age of 21.1 years, SD = 3.3. Experiment 2 had 94 participants with a mean age of 22.2 years, SD = 4.3. All had normal or corrected-to-normal vision.

Design

We utilized a counterbalanced design, such that each face was viewed in each expression across participants. We did not unduly repeat the same face to any participant, as this may facilitate matching performance. We decided that it was not practical to ask each participant to make identity matching decisions for each emotional expression, due to timing constraints and the availability of suitable images (to avoid repeatedly showing the same identity). Thus, the six emotional expressions for each face were randomly assigned across three participants: for example, one participant would match the face with the happy or sad expression, whereas another would match the same face with the fear or surprise expression, and the third would match the face with the disgust or angry expression. The neutral expression was tested in all participants as a baseline. In this way, identity matching decisions were made for each face in each expression across participants. Thus, we used an item-based (by face) analysis, rather than a participant-based analysis, to compare all expressions. Such item-based ANOVAs are popular in the area of psycholinguistics (e.g., Shapiro et al., 1991).
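The assignment scheme described above can be sketched as follows. This is a minimal illustration rather than the procedure actually used: the function name `assign_expressions`, the fixed random seed, and the decision to deal the six expressions into random pairs (instead of the fixed pairs given in the example) are all assumptions made for the sketch.

```python
import random

EMOTIONS = ["happiness", "sadness", "fear", "surprise", "anger", "disgust"]
NEUTRAL = "neutral"


def assign_expressions(face_ids, n_groups=3, seed=0):
    """Deal the six emotional expressions of each face across participant groups.

    Returns {face_id: [expressions for group 0, group 1, group 2]}.
    Every group also receives the neutral baseline for that face.
    """
    rng = random.Random(seed)  # hypothetical fixed seed, for reproducibility only
    per_group = len(EMOTIONS) // n_groups
    plan = {}
    for face in face_ids:
        shuffled = EMOTIONS[:]
        rng.shuffle(shuffled)
        plan[face] = [
            shuffled[g * per_group:(g + 1) * per_group] + [NEUTRAL]
            for g in range(n_groups)
        ]
    return plan


# Hypothetical face labels, used only to show the shape of the output.
plan = assign_expressions(["face_01", "face_02"])
```

Under these assumptions, each face is seen in every emotional expression across the three groups, while no single group sees the same face in more than two emotional expressions plus the neutral baseline.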

Finally, because our stimuli contained Asian faces that could be processed differently by British participants, we included face race as a factor (Chen and Liu, 2009). To summarize, our independent variables were studied using an item-based ANOVA with a mixed design, where expression (happiness, sadness, fear, surprise, anger, disgust, and neutral) and pose change (same vs. different) were within factors, and face race (own-race vs. other-race) was a between factor. The dependent variables were percentage accuracy and reaction time.
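To make the item-based analysis concrete, the sketch below pools trials over participants and averages them per face, expression, and pose before any ANOVA is run. The record layout, the function name `item_means`, and the choice to average RT over all trials (rather than correct trials only) are assumptions; the trial records are made up for illustration and are not data from the study.

```python
from collections import defaultdict


def item_means(trials):
    """Average accuracy and RT per (face, expression, pose) cell, pooling participants."""
    cells = defaultdict(list)
    for t in trials:
        cells[(t["face"], t["expression"], t["pose"])].append(t)
    summary = {}
    for key, rows in cells.items():
        accuracy = 100.0 * sum(r["correct"] for r in rows) / len(rows)
        mean_rt = sum(r["rt_ms"] for r in rows) / len(rows)
        summary[key] = {"accuracy": accuracy, "rt_ms": mean_rt, "n": len(rows)}
    return summary


# Made-up trial records purely for illustration (not data from the study).
trials = [
    {"participant": 1, "face": "F01", "expression": "happiness",
     "pose": "same", "correct": 1, "rt_ms": 900},
    {"participant": 2, "face": "F01", "expression": "happiness",
     "pose": "same", "correct": 0, "rt_ms": 1100},
    {"participant": 3, "face": "F01", "expression": "disgust",
     "pose": "same", "correct": 1, "rt_ms": 1200},
]
summary = item_means(trials)
```

Each resulting cell is one observation for a face, so faces rather than participants serve as the random factor, with face race varying between items.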

Materials

The face database was obtained from Binghamton University, USA. It contained 100 3D faces and texture maps without facial hair or spectacles. Details about this database can be found in Yin et al. (2006). In each experiment, we used all 51 Caucasian and 24 Asian models in the database. Nine additional models were used in the practice session. Each face model was rendered against a black background in three poses, namely the full frontal (0°), one left and one right pose (±60°). Each pose had seven facial expressions (happiness, sadness, disgust, surprise, anger, fear, and neutral). Each emotional expression had four levels of intensity. We employed only the strongest intensity level in this study. The rendered faces were saved as gray-level bitmap images. To minimize the low-level image cues for the task, the luminance and root-mean-square contrast of the images were scaled to the grand means. The learning face and test face were also presented in different sizes, with half of these sized 512 by 512 pixels, whereas the other half were sized 384 by 384 pixels. We used two different image sizes to discourage pixel-wise matching based on screen locations. Although research has shown that face recognition is on the whole size invariant (Lee et al., 2006), size variation can make matching more difficult when a longer time interval is introduced between a pair of face images (Kolers et al., 1985). A limitation of using only different image sizes is that our findings may not apply to a condition where the two matching faces have the same image size. However, it would be equally difficult to ascertain whether an effect of expression can generalize to faces of different sizes if we used the same image size.
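The luminance and contrast scaling mentioned above can be sketched as follows. This is an illustration under stated assumptions, not the authors' code: root-mean-square contrast is taken here as the standard deviation of the gray levels, images are represented as flat lists of pixel values, and the function name `equalize` is hypothetical. The sketch does not clip values back into a displayable range.

```python
from statistics import fmean, pstdev


def equalize(images):
    """Rescale each image to the grand mean luminance and grand mean RMS contrast.

    `images` is a list of flat lists of gray levels (one list per image).
    """
    means = [fmean(img) for img in images]
    contrasts = [pstdev(img) for img in images]  # RMS contrast as pixel SD (assumption)
    grand_mean = fmean(means)
    grand_contrast = fmean(contrasts)
    out = []
    for img, m, c in zip(images, means, contrasts):
        # A perfectly uniform image has no contrast to rescale.
        gain = grand_contrast / c if c > 0 else 0.0
        out.append([(p - m) * gain + grand_mean for p in img])
    return out
```

After this step every image has the same mean gray level and the same pixel standard deviation, so neither cue can be used to tell images apart.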

Using the SSIM algorithm (https://ece.uwaterloo.ca/~z70wang/research/ssim/), we computed the physical similarity among images for each facial expression. The SSIM output ranges from −1 (entirely different) to 1 (identical). It is not meaningful to compute similarity for identical images because the SSIM score for this is always 1. Because the paired images in the same identity, same-pose condition of Experiment 1 were identical (apart from a size difference), we only calculated the image similarity for conditions that involved different images. The difference between a pair of images in our experiments was either due to a pose change (from frontal to side), an expression change (from emotional to neutral), an identity change (for unmatched trials), or a combination of these. Table 1 shows the mean similarity scores for each expression where paired images involved these types of image difference. The resulting rank order of similarity for each expression provided a basis for predicting matching performance based on a physical similarity account. As Table 1 shows, the rank order of these expressions defined by SSIM is highly consistent over these image transformations. The only exception appears to be the surprise expression, whose rank position fluctuates by 1–3 places across the columns.
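For readers unfamiliar with the index, the sketch below computes the SSIM formula of Wang et al. (2004) from whole-image statistics. It is a deliberate simplification: the reference implementation evaluates the same formula in local Gaussian-weighted windows and averages the resulting map, which this sketch does not do. The function name `global_ssim` and the flat-list image representation are assumptions, and both images must already have the same number of pixels.

```python
from statistics import fmean


def global_ssim(x, y, dynamic_range=255.0, k1=0.01, k2=0.03):
    """Single-window SSIM between two equally sized gray-level images.

    x, y: flat sequences of pixel values. Returns a value between -1 and 1.
    """
    if len(x) != len(y) or len(x) == 0:
        raise ValueError("images must be non-empty and of equal size")
    # Small constants that stabilize the ratio when means or variances are near zero.
    c1 = (k1 * dynamic_range) ** 2
    c2 = (k2 * dynamic_range) ** 2
    mx, my = fmean(x), fmean(y)
    var_x = fmean([(a - mx) ** 2 for a in x])
    var_y = fmean([(b - my) ** 2 for b in y])
    cov_xy = fmean([(a - mx) * (b - my) for a, b in zip(x, y)])
    numerator = (2 * mx * my + c1) * (2 * cov_xy + c2)
    denominator = (mx ** 2 + my ** 2 + c1) * (var_x + var_y + c2)
    return numerator / denominator
```

With this definition an image compared with itself scores 1, which is why similarity was not computed for identical image pairs, and an image compared with a contrast-reversed copy yields a negative score.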

Table 1.

Mean similarity scores between paired images.

Columns indicate the source of image difference between the paired images.

| Expression | Same identity: diff. pose, same expr. (Exp. 1) | Same identity: same pose, diff. expr. (Exp. 2) | Same identity: diff. pose, diff. expr. (Exp. 2) | Different identity: same pose, same expr. (Exp. 1) | Different identity: diff. pose, same expr. (Exp. 1) | Different identity: same pose, diff. expr. (Exp. 2) | Different identity: diff. pose, diff. expr. (Exp. 2) |
|---|---|---|---|---|---|---|---|
| Happiness | 0.29 (0.22) | 0.32 (0.30) | 0.25 (0.22) | 0.37 (0.22) | 0.29 (0.22) | 0.35 (0.28) | 0.30 (0.23) |
| Surprise | 0.30 (0.16) | 0.19 (0.17) | 0.17 (0.15) | 0.43 (0.17) | 0.30 (0.17) | 0.22 (0.21) | 0.20 (0.18) |
| Disgust | 0.30 (0.22) | 0.36 (0.32) | 0.28 (0.23) | 0.33 (0.23) | 0.34 (0.23) | 0.42 (0.30) | 0.35 (0.24) |
| Fear | 0.40 (0.23) | 0.54 (0.31) | 0.41 (0.22) | 0.38 (0.27) | 0.39 (0.21) | 0.49 (0.29) | 0.41 (0.23) |
| Sadness | 0.57 (0.08) | 0.76 (0.11) | 0.56 (0.08) | 0.69 (0.10) | 0.56 (0.09) | 0.69 (0.10) | 0.59 (0.09) |
| Anger | 0.58 (0.08) | 0.76 (0.11) | 0.56 (0.08) | 0.69 (0.10) | 0.58 (0.08) | 0.70 (0.09) | 0.58 (0.07) |
| Neutral | 0.60 (0.04) | – | – | 0.73 (0.04) | 0.59 (0.04) | – | – |

Values in parentheses represent SDs.

ANOVAs on the SSIM scores showed that the main effect of expression for all conditions in Table 1 was significant, Fs > 43, ps < 0.001. Pair-wise comparisons were performed on the means. We only report significant results here. For the same identity, same expression, different poses (second column in Table 1), scores were higher for neutral, anger, and sadness than for other expressions. Fear scored higher than happiness but lower than sadness. When an emotional expression was paired with a neutral expression or when the pose and expression changes were combined (third and fourth columns), scores for anger and sadness were higher than for other expressions. Fear scored higher than happiness and surprise. The score for surprise was lower than for all other expressions. When different face identities with the same pose and expression were paired (fifth column), all mean scores were different from each other, except for those between happiness and fear, and between sadness and anger. When identity and pose were different (sixth column), all scores were different except for the ones between happiness and surprise. Similar results were found for same pose but different identity and expression (seventh column), where all comparisons were significant except for the difference between sadness and anger. When identity, pose, and expression were all different (eighth column), the similarity scores for all expressions were different from each other.

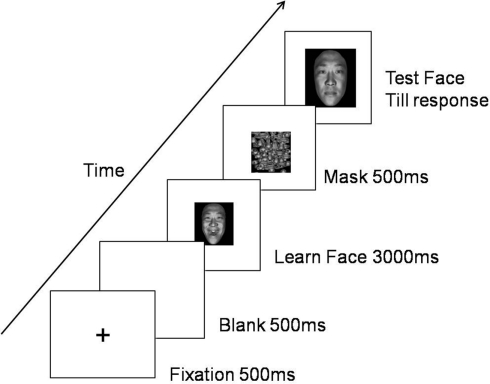

Procedure

Each matching trial began with a 500 ms central fixation cross and a 500 ms blank screen. Following this, a learning face was presented at the center of the screen for 3 s, followed by a 500 ms mask, which was a random arrangement of scrambled face parts. Finally, a test face was presented (see Figure 1) and participants were instructed to judge whether the learning face and test face were of the same person. They were told to give their answer as quickly and accurately as possible by pressing one of the two keys labeled “Yes” or “No.” The test face remained on screen until the participant responded.

Figure 1.

Illustration of the procedure. The test face was either shown in a frontal pose or a side pose. The procedure in the two experiments was identical except that the test face was shown in the same expression as the learning face in Experiment 1, but in a neutral expression in Experiment 2.

Each participant completed two blocks of trials (counterbalanced order), one where the learning and test faces showed the same pose, and another where they displayed a different pose. Specifically, in the same-pose block, the learning and test face were both shown in a frontal pose. In the different-pose block, the learning face was shown in a full frontal pose and the test face was shown in a side pose (60° to the left or right, assigned randomly). Each block consisted of six practice trials and 50 experimental trials (112 trials per participant). In half of the trials, the test face showed the same person as the learning face, and in the remaining half it showed a different person. In Experiment 1, the test face had the same expression as the learning face. In Experiment 2, the test face had a neutral expression.
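The trial timeline and block structure described in this section can be summarized in a short sketch. It is illustrative only: the names `TRIAL_EVENTS` and `build_block`, the dictionary layout, the random seeds, and the assumption that practice trials are also split evenly between matching and non-matching pairs are not taken from the original procedure, and block order counterbalancing is not modeled.

```python
import random

# (event, duration in ms); None means the display stays until a response is made.
TRIAL_EVENTS = [
    ("fixation", 500),
    ("blank", 500),
    ("learning_face", 3000),
    ("mask", 500),
    ("test_face", None),
]


def build_block(pose, n_practice=6, n_experimental=50, seed=0):
    """Build one block of trials for pose = 'same' or 'different'."""
    rng = random.Random(seed)

    def make(n, phase):
        # Half of the trials pair two images of the same person.
        matches = [True] * (n // 2) + [False] * (n - n // 2)
        rng.shuffle(matches)
        trials = []
        for same_identity in matches:
            # The learning face is always frontal; the test face is frontal (0)
            # or turned 60 degrees left or right, chosen at random.
            test_pose = rng.choice([-60, 60]) if pose == "different" else 0
            trials.append({"phase": phase, "pose": pose,
                           "same_identity": same_identity,
                           "test_pose_deg": test_pose})
        return trials

    return make(n_practice, "practice") + make(n_experimental, "experimental")


session = build_block("same", seed=1) + build_block("different", seed=2)
```

Two blocks of 56 trials give the 112 trials per participant stated above, and the fixed events before the test face add up to 4.5 s per trial.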

Results

Experiment 1

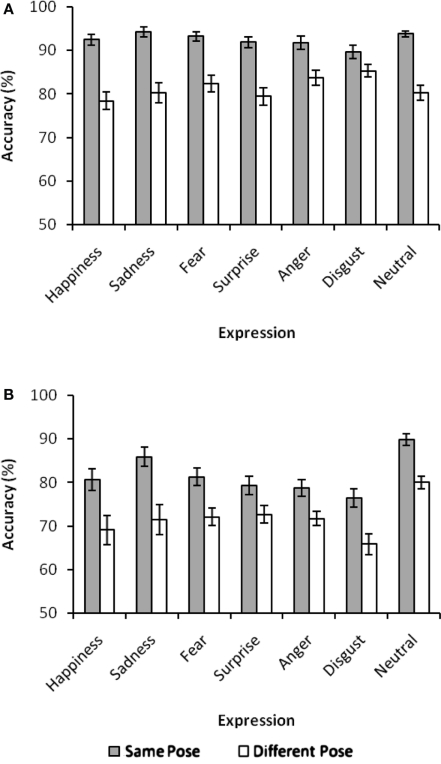

The mean accuracies in Experiment 1 are shown in Figure 2A. An item-based ANOVA revealed a significant main effect of pose change, F (1, 48) = 75.73, p < 0.001, partial η² = 0.61, where performance for same pose was better than for different pose. There was also a significant interaction between pose change and expression, F (6, 288) = 4.45, p < 0.001, partial η² = 0.09. Simple main effect analyses revealed a significant effect of expression for both same- and different-pose conditions, Fs (6, 294) > 2.57, ps < 0.05. Post hoc tests showed lower matching performance for disgust relative to the neutral and sad expressions under the same-pose condition, whereas performance for disgust was marginally higher relative to the happy expression under the different-pose condition, p = 0.06. The main effects of face race and expression, and other interactions were all not significant, ps > 0.36.

Figure 2.

(A) Matching accuracy in Experiment 1. (B) Matching accuracy in Experiment 2.

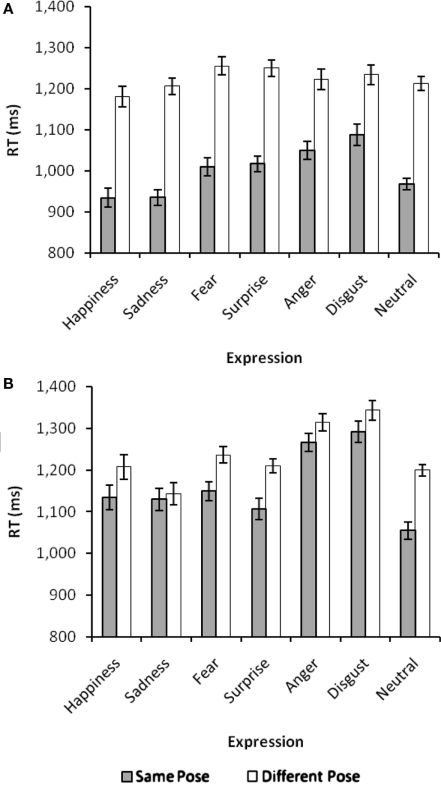

Results of reaction time are shown in Figure 3A. The ANOVA showed a significant main effect of pose change, F (1, 48) = 179.55, p < 0.001, partial η² = 0.79, where the same-pose trials were matched faster than different-pose trials. There was also a significant main effect of face race, F (1, 48) = 4.24, p < 0.05, partial η² = 0.08, where the same-race faces were matched faster than other-race faces. There was also a significant main effect of expression, F (1, 48) = 6.73, p < 0.001, partial η² = 0.12. There were no significant two-way or three-way interactions, all ps > 0.17, apart from that between pose change and expression, F (6, 288) = 3.61, p < 0.01, partial η² = 0.07. Simple main effect analysis suggested significant differences among the results of the seven expressions when the faces were matched in the same pose, p < 0.001, but no difference among results of these expressions when the faces were matched in different poses, p = 0.23. Post hoc comparisons revealed that under the same-pose condition the faces with an expression of fear, surprise, and anger were matched more slowly than faces with happy, sad, and neutral expressions, but quicker than faces with a disgust expression, ps < 0.01.

Figure 3.

(A) Mean reaction time in Experiment 1. (B) Mean reaction time in Experiment 2.

Because the similarity between paired images was measured by SSIM (see Materials), it was possible to control for the influence image similarity had on matching performance by including it as a covariate in analyses of covariance (ANCOVA). One of the assumptions of ANCOVA is that the covariate must be correlated with the dependent variable. Hence we first tested whether this assumption was met. None of the scores for the same or different identity conditions in this experiment correlated significantly with the RT data. Only the similarity scores for images of different poses of the same identity correlated significantly with matching accuracy, r = −0.21, p < 0.01. ANCOVA for this condition showed a significant effect of the similarity, F (1, 167) = 4.01, p < 0.05, partial η² = 0.02. The effect of expression remained significant after controlling for the effect of similarity, F (1, 167) = 2.40, p < 0.05, partial η² = 0.06.

To recap, both the accuracy and RT data revealed a lower performance for the disgust expression. The RT results further revealed that faces with some other expressions (fear, surprise, and anger) were matched more slowly than faces with the rest of the expressions. These effects were found when the faces were presented in an identical frontal pose (same-pose condition). The lack of significant expression results in the different-pose condition may be due to the strong cost of pose change. Because face race only had a main effect (where the other-race faces were matched more slowly) without interacting with other variables, the results suggest that facial expression equally affected identity matching for other-race faces. Image similarity had little effect on matching performance. The only condition affected by this variable was when different poses of the same identity were paired. The effect of expression in this condition remained significant after the effect of similarity was partialed out by ANCOVA.

Experiment 2

The mean accuracies are shown in Figure 2B. There were significant main effects of pose change, F (1, 48) = 37.76, p < 0.001, partial η² = 0.44, and expression, F (6, 288) = 6.80, p < 0.001, partial η² = 0.12. Post hoc tests showed matching performance was significantly poorer for all expressions compared with neutral, except sadness. The main effect of face race and all interactions were not significant, all ps > 0.14.

The results of reaction time are shown in Figure 3B. There were significant main effects of pose change, F (1, 48) = 30.99, p < 0.001, partial η² = 0.39, and expression, F (1, 48) = 17.21, p < 0.001, partial η² = 0.26. The main effect of face race approached significance, F (1, 48) = 3.28, p < 0.08, partial η² = 0.06, where same-race faces were matched faster than other-race faces. All interactions were not significant (all ps > 0.70), apart from that between pose change and expression, F (6, 288) = 2.42, p < 0.05, partial η² = 0.05. Simple main effect analyses showed a significant effect of expression for both same- and different-pose conditions, F (6, 294) = 11.87, ps < 0.001. Post hoc comparisons revealed that under the same-pose condition faces with happy, sad, fear, or surprise expressions were matched quicker than faces with an anger or disgust expression, but slower than faces with a neutral expression. The speed advantage for neutral faces is hardly surprising, because no expression change was involved in this condition. Under the different-pose condition, faces with happy, fear, surprise, or neutral expressions were matched more quickly than faces with anger or disgust expressions, but more slowly than faces with a sad expression.

Again, we assessed the contribution of image similarity to matching performance in this experiment. However, our regression analyses found no linear relationship between this measure and RT or accuracy data in any condition of this experiment. Because this violates the assumption of ANCOVA, we did not carry out this analysis for this experiment.

To summarize, transfer from an emotional expression to a neutral expression impaired matching performance relative to the baseline condition, where both faces in a pair had the same neutral expression. When the faces had an identical pose, faces with disgust and angry expressions were matched more slowly relative to other expressions. Both pose conditions showed similar impairment for the two expressions. However, results for the sad expression varied between the two pose conditions, where matching was faster than for faces with other expressions when a face pair was shown in different poses, but not when the face pair was shown in the same pose. Consistent with Experiment 1, although response times were slower for other-race faces, this did not interact with other variables. This suggests that facial expression influenced identity matching for other-race faces in the same fashion. Our analysis also shows that image similarity contributed very little to matching performance.

Cost of pose and expression change in Experiments 1 and 2

Pose change appeared to have a smaller effect for faces with certain expressions than others. This issue is worth exploring because it suggests that some expressions create stronger invariant recognition (across pose change) than others. To explore this issue, we subtracted the result for different pose from the result for same pose for each expression condition. For example, disgust expression accuracy in same pose (Experiment 1, same expression in learning and test faces) was 90%, disgust expression accuracy in different pose (Experiment 1, same expression) was 85% (see Figure 2A), difference = 5%. The resulting costs are shown in Table 2. The ANOVA showed a significant difference among these costs, F (6, 294) = 4.22, p < 0.001, partial η² = 0.08. There was no significant main effect of face race, F (1, 49) = 0.36, p = 0.55, or interaction between the two factors, F (6, 294) = 0.88, p = 0.51. Post hoc comparisons showed lower cost for disgust than for happy, sad, and neutral expressions. Using the same procedure, we also calculated the accuracy cost of pose for each expression in Experiment 2 (Figure 2B). Here, the cell for neutral expression in Table 2 is empty because this expression was paired with itself in the different-expression condition. The rest of the column for neutral expression is also empty for the same reason. No significant difference of pose cost among the six emotional expressions was found in this experiment, F (5, 245) = 1.22, p = 0.30, partial η² = 0.02. Neither was there a significant effect of face race, F (1, 49) = 0.07, p = 0.79, or interaction between the two factors, F (6, 294) = 0.47, p = 0.83.

Apart from the cost of pose, we also calculated the cost of expression change. Some expressions are more difficult to generalize to a neutral expression compared to others. This cost is evident when the results from the two experiments are compared. The difference between these results was calculated and treated as the cost. This was done separately for the two pose conditions and the results are shown in the last two rows of Table 2. The difference among the six emotional expressions was not significant for same pose, F (5, 245) = 0.83, p = 0.53, partial η² = 0.02, but was significant for different pose, F (5, 245) = 2.78, p < 0.05, partial η² = 0.06. Neither condition showed any effect of face race, Fs (1, 49) < 3, ps > 0.10, or interaction between this and expression, Fs (6, 294) < 0.70, ps > 0.66. Post hoc comparisons showed that the cost of expression change for disgust was greater than for surprise.

Table 2.

Percent accuracy cost of transfer for each expression.

| Type of cost | Condition | Happiness | Sadness | Fear | Surprise | Anger | Disgust | Neutral |
|---|---|---|---|---|---|---|---|---|
| A. Pose | Same expression | 13.2 | 13.7 | 10.2 | 11.9 | 8.5 | 5.0 | 14.0 |
| B. Pose | Different expression | 12.3 | 13.7 | 9.5 | 7.1 | 7.7 | 10.0 | |
| C. Expression | Same pose | 11.1 | 8.0 | 11.9 | 11.2 | 12.1 | 13.5 | |
| D. Expression | Different pose | 10.2 | 8.0 | 11.2 | 6.3 | 11.3 | 18.5 | |

A. Pose transfer cost in Experiment 1 = same-pose results − different pose results.

B. Pose transfer cost in Experiment 2 = same-pose results − different pose results.

C. Expression transfer cost = same-pose results (Experiment 1 − Experiment 2).

D. Expression transfer cost = different pose results (Experiment 1 − Experiment 2).

Using the same method, we calculated the cost of pose and expression change for the reaction time data and the resulting values are shown in Table 3. Consistent with the accuracy data, none of the RT analyses produced a significant effect for face race, ps > 0.50, or interaction between this factor and expression, ps > 0.38. For the cost of pose change, ANOVA revealed a significant difference among the seven expressions in Experiment 1, F (6, 294) = 4.00, p < 0.001, partial η2 = 0.08. Post hoc comparisons showed that the cost for anger and disgust was significantly lower than for sadness. In Experiment 2, where an emotional face was paired with a neutral face, no difference among the six emotional expressions was found, F (5, 245) = 1.42, p = 0.22, partial η2 = 0.03.

Table 3.

The RT cost of transfer for each expression.

| Type of cost | Condition | Happiness | Sadness | Fear | Surprise | Anger | Disgust | Neutral |
|---|---|---|---|---|---|---|---|---|
| A. Pose | Same expression | 239 | 271 | 247 | 232 | 167 | 149 | 233 |
| B. Pose | Different expression | 50 | 37 | 97 | 115 | 39 | 55 | |
| C. Expression | Same pose | 202 | 170 | 144 | 88 | 218 | 214 | |
| D. Expression | Different pose | 12 | −64 | −6 | −30 | 89 | 120 | |

A. Pose transfer cost in Experiment 1 = same-pose results − different pose results.

B. Pose transfer cost in Experiment 2 = same-pose results − different pose results.

C. Expression transfer cost = same-pose results (Experiment 1 − Experiment 2).

D. Expression transfer cost = different pose results (Experiment 1 − Experiment 2).
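Because rows A to D in Tables 2 and 3 are all differences among the same four condition means per expression (same or different pose, in Experiment 1 or Experiment 2), the quantity A − B should equal C − D apart from rounding. The sketch below checks this using the values transcribed from the two tables; the dictionary and function names are hypothetical, and the neutral column is left out because rows B to D are empty for it.

```python
# Tuple order per expression: (A, B, C, D), transcribed from the tables.
TABLE_2 = {  # accuracy cost (%)
    "Happiness": (13.2, 12.3, 11.1, 10.2),
    "Sadness": (13.7, 13.7, 8.0, 8.0),
    "Fear": (10.2, 9.5, 11.9, 11.2),
    "Surprise": (11.9, 7.1, 11.2, 6.3),
    "Anger": (8.5, 7.7, 12.1, 11.3),
    "Disgust": (5.0, 10.0, 13.5, 18.5),
}
TABLE_3 = {  # RT cost
    "Happiness": (239, 50, 202, 12),
    "Sadness": (271, 37, 170, -64),
    "Fear": (247, 97, 144, -6),
    "Surprise": (232, 115, 88, -30),
    "Anger": (167, 39, 218, 89),
    "Disgust": (149, 55, 214, 120),
}


def max_discrepancy(table):
    """Largest |(A - B) - (C - D)| across the expressions in a table."""
    return max(abs((a - b) - (c - d)) for a, b, c, d in table.values())


acc_gap = max_discrepancy(TABLE_2)
rt_gap = max_discrepancy(TABLE_3)
print(f"Table 2 largest discrepancy: {acc_gap:.1f}")
print(f"Table 3 largest discrepancy: {rt_gap:.0f}")
```

Each tabled value is rounded, so four rounded entries can disagree by up to two rounding units in the worst case; the discrepancies found here stay within that bound, which suggests the two tables are internally consistent.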

For the cost of expression change, there was a significant difference among the six emotional expressions when the face pairs were shown in the same frontal pose, F (5, 245) = 3.06, p < 0.01, partial η2 = 0.06. Post hoc comparisons showed that the cost for anger and disgust was greater than for surprise. There was also a significant effect when the face pairs were shown in different poses, F (5, 245) = 6.19, p < 0.001, partial η2 = 0.11, where the cost for anger and disgust was greater than for sadness and surprise.

Discussion

Consistent with the literature, facial expression clearly affected identity recognition in our study. However, unlike the happy-face effect in the recognition memory research, results from our sequential matching task are more complex. It was not the case that happy-faces were the only expression that enjoyed better matching performance. Rather, faces with several expressions were matched more effectively than those showing a disgust or angry expression. A disgust expression consistently made a face identity more difficult to match, regardless of whether the task required matching faces in the same expression (Experiment 1), or different expressions (Experiment 2). The effects were found in both accuracy and reaction time measures when the pair of faces was shown in the same pose. Along with disgust, an angry expression slowed identity matching more than other expressions regardless of whether faces were shown in the same frontal pose (Experiments 1 and 2) or in different poses (Experiment 2).

Although the identity of happy-faces was matched faster than disgust and angry faces, the speed for happy-faces was indistinguishable from that for sad and neutral faces in Experiment 1. In addition, happy-faces were not matched more quickly than sad, surprise, and fearful faces in Experiment 2. Because of different face stimuli, it is difficult to compare our results with prior studies. However, it is interesting to note that identities of happy-faces were better recognized than neutral, surprise, or fearful faces in prior face memory research (Baudouin et al., 2000; Shimamura et al., 2006). The difference between the results of the face matching and recognition memory tasks could mean that happy-faces linger longer in memory, although perceptually they are not necessarily easier to match than faces with some other expressions.

Our results demonstrate that the effects of expression on identity recognition can occur when emotional expressions are shown in different poses, or when they are changed to a neutral expression. These results show that the effects are to some extent image invariant even though identity recognition of unfamiliar faces is strongly affected by image variation. Our results also reveal that a disgust or angry expression equally affected recognition of own-race and other-race faces. This may imply race-invariant processing of emotional signals. Namely, perceptual expertise or social categorization does not change the way processing of facial expression influences face recognition.

It is not entirely clear why faces displaying a disgust or angry expression are more difficult for identity matching. However, as other researchers speculated previously, it is possible that an angry face directs attention to an emotional situation and hence leaves fewer resources for identity processing (D’Argembeau and Van Der Linden, 2007). Perhaps other facial expressions require fewer resources and hence produce less competition with identity processing. The slower matching performance for angry faces in our study may appear to contradict the recent finding that more angry faces can be stored in short-term memory than happy or neutral faces (Jackson et al., 2009). Although our matching paradigm also engages short-term memory, it mainly measures identity discrimination between two face images rather than the capacity limit of short-term memory for faces. It is conceivable that faces with an angry expression are more difficult to discriminate but easier to maintain in short-term memory once the identification process is complete. Furthermore, because positive stimuli tend to recruit conceptual processes whereas negative stimuli recruit more sensory processes (Kensinger, 2009), the subvocal rehearsal procedure that Jackson et al. (2009) employed to suppress verbal short-term memory could also have suppressed conceptual processing of the happy-faces and diminished the potential happy-face effect. When the short-term memory task was changed into a matching task in Jackson et al. (2009; see their Experiment 4), the angry face advantage was abolished. This result is fairly close to ours, although the happy and neutral faces were not matched faster than angry faces in their study. The discrepancy may be due to the levels of difficulty involved in these matching tasks. Our matching task was more difficult because each pair of images was presented sequentially with an intervening mask, whereas the pair of images in Jackson et al. (2009) was presented next to each other simultaneously. Furthermore, because each face identity in their study was repeated many times, it could have made the task easier when participants became more familiar with the small number of face identities. Perhaps the difference between effects of anger and other expressions disappears when a matching task is too easy.

Apart from the effects of expression in an identical frontal pose, we also investigated whether identity recognition in certain expressions is less impaired by pose or expression change. Our cost analysis reveals that faces with different expressions were not equally affected by pose or expression variation. Interestingly, although faces with a disgust expression were difficult to match in the same-pose condition, they were nevertheless relatively less susceptible to a pose change. This was reflected in both accuracy and response time data in Experiment 1. The reaction time data also revealed a smaller cost of pose transfer for anger. In Experiment 2, however, neither accuracy nor RT results revealed any significant difference among the costs of pose change. The greater robustness of disgust and angry expressions against pose change found in Experiment 1 may be explained by better preserved identity information across different poses for these expressions. The lack of the same effect in Experiment 2 may be due to the absence of such preserved features across poses when an emotional face in a frontal pose was changed to a neutral face in another pose.

The overall poorer performance in Experiment 2 relative to Experiment 1 (cf. Figures 2 and 3) reflected a cost of expression change. The effect is consistent with past observations (e.g., Bruce, 1982; Chen and Liu, 2009). However, the more interesting and novel finding in this study was that the cost of expression change depended on the expression of the face at the time of encoding. The accuracy data showed that when the pose of the test face was different, the cost created by expression change was particularly high for disgust. In addition, the RT data showed higher costs of expression change for disgust and anger in both same-pose and different-pose conditions. These findings demonstrate that the cost of pose and expression variation in face identity matching is modulated by facial expression.

Our results present a mixed picture for the valence account. Because happiness was among the expressions that produced faster matching performance than expressions of disgust and anger, the pattern fits with the valence account based on the contrast of positive (happy) vs. negative emotions. However, it is difficult to explain why the happy expression is not also more effective than sadness or fear, which are classified as negative emotions. It is possible that face matching is affected by the specific content of an expression rather than by a valence metric that categorizes different expressions into a dichotomous positive–negative dimension. It is also possible that the effects in our study depended on relatively extreme negative valence such as disgust and anger. On the whole, although our data are not easily predicted by a theory based on a simple divide between positive and negative emotions, they do not rule out potential contributions from expression processing.

The account that relies on the physical advantage of certain expressions seems to meet greater challenges from our data. According to this account, happy-faces are easier to discriminate because they create greater shape variation than other expressions. Following this logic, the happy-faces in our study should have created better performance than sad and neutral faces. Although our SSIM analysis also shows that happy-faces created large image differences (see Table 1), faces with this expression only produced comparable matching performance to faces with sad or neutral expressions. It is equally difficult to explain why the happy-face advantage is found in recognition tasks but not in matching tasks, because the physical shape of an expression does not change from one task to another. Moreover, if the poorer performance for faces with expressions of disgust and anger were due to more confusable physical features created by these expressions, it would be difficult to explain why faces with these expressions were more robust to pose change. The behavioral data are not easily predicted by the rank order of similarity in Table 1. For example, the similarity scores for disgusted faces (range: 0.22–0.37) were much closer to those for happy-faces (range: 0.28–0.34) than to those for angry faces (range: 0.56–0.76). This would predict similar performance for disgusted and happy-faces. Yet the matching performance for disgusted faces was very different from the result for happy-faces. On the other hand, the results for disgusted and angry faces were comparable, even though their similarity scores were very different. Although the physical similarity ranking among the seven categories of expression is highly consistent across image conditions, the difference between the effects of these expressions on identity matching was not reflected by the ranking. Our ANCOVA results were consistent with this observation. Out of 14 ANCOVAs on the RT and accuracy data for the seven conditions listed in Table 1, only the accuracy data in the Experiment 1 condition where the same identity with the same expression was shown in different poses was influenced by the similarity measure. Given the low level of correlation (r = −0.21) between similarity and accuracy in this analysis, however, it is difficult to conclude that image similarity made any important contribution to the face matching performance. A theory based on higher-level appraisal of expression could offer a more plausible explanation, because recognizing faces with these emotions in different poses could be more important in social interactions.
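To put the size of the reported correlation in perspective, a back-of-the-envelope calculation squares it to obtain the proportion of shared variance. This assumes that r is a Pearson correlation, which the text does not state explicitly.

```latex
r^{2} = (-0.21)^{2} \approx 0.044
```

Under that assumption, image similarity would share roughly 4% of the variance in accuracy in that single condition.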

Using the SSIM metric, we were able to assess the contribution of image similarity in face matching. The way the method evaluates structural information resembles the properties of human vision. Its correlation with subjective ratings has been tested with images of scenes/objects (Wang et al., 2004). Some authors have also used it to measure facial features (Adolphs et al., 2008). In our study, the method allowed us to separate effects of processing high-level emotional signals from effects of processing lower-level image properties. Alternative measures of image similarity such as Gabor wavelets (Lades et al., 1993) may also accomplish the same purpose. Some authors have used the Gabor method to control physical image similarity (e.g., Russell et al., 2007). The relative advantage of these methods for research in human vision still awaits systematic investigation.
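For readers unfamiliar with SSIM, the sketch below computes the index of Wang et al. (2004) over a single global window, using the commonly cited constants K1 = 0.01 and K2 = 0.03. It is a simplification and not the procedure used in this study: the standard index averages the same quantity over local windows, this section does not describe how the analysis was configured, and the function name and toy pixel values are hypothetical.

```python
def global_ssim(x, y, dynamic_range=255.0):
    """Single-window SSIM between two equal-length lists of pixel intensities."""
    if len(x) != len(y) or not x:
        raise ValueError("inputs must be non-empty and of equal length")
    n = len(x)
    c1 = (0.01 * dynamic_range) ** 2  # stabilizes the luminance term
    c2 = (0.03 * dynamic_range) ** 2  # stabilizes the contrast/structure term
    mu_x = sum(x) / n
    mu_y = sum(y) / n
    # Population (divide-by-n) variance and covariance, chosen for simplicity.
    var_x = sum((a - mu_x) ** 2 for a in x) / n
    var_y = sum((b - mu_y) ** 2 for b in y) / n
    cov_xy = sum((a - mu_x) * (b - mu_y) for a, b in zip(x, y)) / n
    numerator = (2 * mu_x * mu_y + c1) * (2 * cov_xy + c2)
    denominator = (mu_x ** 2 + mu_y ** 2 + c1) * (var_x + var_y + c2)
    return numerator / denominator


# Toy 2 x 2 "images" flattened to lists; the values are made up for illustration.
patch = [52, 55, 61, 59]
inverted = [255 - p for p in patch]

same_score = global_ssim(patch, patch)
inverted_score = global_ssim(patch, inverted)
print(same_score, inverted_score)
```

Identical images score 1, and the score falls as luminance, contrast, or structure diverge, which is why lower scores in such an analysis indicate larger image differences.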

In summary, results in this study reveal a strong influence of facial expression on identity matching. This is consistent with the literature that supports an interactive rather than dual-route hypothesis of identity and expression processing (e.g., Ganel and Goshen-Gottstein, 2004; Calder and Young, 2005). However, unlike the previously reported happy-face advantage, our results appear to suggest a sharp contrast between the effects of facial expression in a matching task that involves more direct perceptual comparison of two face images and a recognition task that requires long-term memory. Facial expressions may have different influences on face identity recognition depending on whether a newly learned emotional face is tested immediately or with some time delay. The advantage of certain expressions for identity recognition may only manifest in an immediate matching test, whereas the advantage of happy expression may last longer in memory. The matching task may be more affected by rapid category-specific expression processing, where a potentially urgent situation signaled by angry or disgusted faces requires immediate attention. The lingering effect of a happy expression could be due to memory consolidation or other processing strategies. This selective consolidation for faces with a happy expression may reflect the adaptive value of the socially “friendly” faces for long-term memory. This hypothetical difference between social functions of facial expression for perceptual matching and face memory awaits future direct comparisons of the two tasks with the same set of face stimuli.

Conflict of Interest Statement

The authors declare that the research was conducted in the absence of any commercial or financial relationships that could be construed as a potential conflict of interest.

Acknowledgments

This study was supported by grants from the Royal Society, the British Academy, and National Basic Research Program of China (2011CB302201). We thank Emily Hopgood, Melissa Huxley-Grantham, and Evangelia Protopapa for their help in data collection. Correspondence concerning this article should be addressed to Wenfeng Chen (e-mail: chenwf@psych.ac.cn) or Chang Hong Liu (e-mail: c.h.liu@hull.ac.uk).

References

- Adolphs R., Spezio M. L., Parlier M., Piven J. (2008). Distinct face-processing strategies in parents of autistic children. Curr. Biol. 18, 1090–1093. doi: 10.1016/j.cub.2008.10.007

- Baudouin J. Y., Gilibert D., Sansone D., Tiberghien G. (2000). When the smile is a cue to familiarity. Memory 8, 285–292. doi: 10.1080/09658210050117717

- Bernstein M. J., Young S. G., Hugenberg K. (2007). The cross-category effect: mere social categorization is sufficient to elicit an own-group bias in face recognition. Psychol. Sci. 18, 706–712. doi: 10.1111/j.1467-9280.2007.01964.x

- Bruce V. (1982). Changing faces: Visual and non-visual coding processes in face recognition. Br. J. Psychol. 73, 105–116. doi: 10.1111/j.2044-8295.1982.tb01795.x

- Bruce V., Young A. W. (1986). Understanding face recognition. Br. J. Psychol. 77, 305–327. doi: 10.1111/j.2044-8295.1986.tb02199.x

- Calder A. J., Young A. W. (2005). Understanding the recognition of facial identity and facial expression. Nat. Rev. Neurosci. 6, 641–651. doi: 10.1038/nrn1724

- Chen W., Liu C. H. (2009). Transfer between pose and expression training in face recognition. Vision Res. 49, 368–373. doi: 10.1016/j.visres.2009.03.008

- D’Argembeau A., Van Der Linden M. (2007). Facial expressions of emotion influence memory for facial identity in an automatic way. Emotion 7, 507–515. doi: 10.1037/1528-3542.7.3.507

- D’Argembeau A., Van der Linden M., Comblain C., Etienne A.-M. (2003). The effects of happy and angry expressions on identity and expression memory for unfamiliar faces. Cogn. Emot. 17, 609–622. doi: 10.1080/02699930302303

- Elfenbein H. A., Ambady N. (2002). On the universality and cultural specificity of emotion recognition: a meta-analysis. Psychol. Bull. 128, 203–235. doi: 10.1037/0033-2909.128.2.203

- Endo N., Endo M., Kirita T., Maruyama K. (1992). The effects of expression on face recognition. Tohoku Psychol. Folia 51, 37–44.

- Gallegos D. R., Tranel D. (2005). Positive facial affect facilitates the identification of famous faces. Brain Lang. 93, 338–348. doi: 10.1016/j.bandl.2004.11.001

- Ganel T., Goshen-Gottstein Y. (2004). Effects of familiarity on the perceptual integrality of the identity and expression of faces: the parallel-route hypothesis revisited. J. Exp. Psychol. Hum. Percept. Perform. 30, 583–597. doi: 10.1037/0096-1523.30.3.583

- Goren D., Wilson H. R. (2006). Quantifying facial expression recognition across viewing conditions. Vision Res. 46, 1253–1262. doi: 10.1016/j.visres.2005.10.028

- Hayward W. G., Rhodes G., Schwaninger A. (2008). An own-race advantage for components as well as configurations in face recognition. Cognition 106, 1017–1027. doi: 10.1016/j.cognition.2007.04.002

- Jackson M. C., Wu C. Y., Linden D. E. J., Raymond J. E. (2009). Enhanced visual short-term memory for angry faces. J. Exp. Psychol. Hum. Percept. Perform. 35, 363–374. doi: 10.1037/a0013895

- Johansson M., Mecklinger A., Treese A.-C. (2004). Recognition memory for emotional and neutral faces: an event-related potential study. J. Cogn. Neurosci. 16, 1840–1853. doi: 10.1162/0898929042947883

- Johnston P. J., Katsikitis M., Carr V. J. (2001). A generalised deficit can account for problems in facial emotion recognition in schizophrenia. Biol. Psychol. 58, 203–227. doi: 10.1016/S0301-0511(01)00114-4

- Kaufmann J. M., Schweinberger S. R. (2004). Expression influences the recognition of familiar faces. Perception 33, 399–408. doi: 10.1068/p5083

- Kensinger E. A. (2009). Remembering the details: effects of emotion. Emot. Rev. 1, 99–113. doi: 10.1177/1754073908100432

- Kolers P. A., Duchnicky R. L., Sundstroem G. (1985). Size in the visual processing of faces and words. J. Exp. Psychol. Hum. Percept. Perform. 11, 726–751. doi: 10.1037/0096-1523.11.6.726

- Lades M., Vorbruggen J. C., Buhmann J., Lange J., von der Malsburg C., Wurtz R. P., Konen W. (1993). Distortion invariant object recognition in the dynamic link architecture. IEEE Trans. Comput. 42, 300–311. doi: 10.1109/12.210173

- Lander K., Metcalfe S. (2007). The influence of positive and negative facial expressions on face familiarity. Memory 15, 63–70. doi: 10.1080/09658210601108732

- Lee Y., Matsumiya K., Wilson H. R. (2006). Size-invariant but viewpoint-dependent representation of faces. Vision Res. 46, 1901–1910. doi: 10.1016/j.visres.2005.12.008

- Levy Y., Bentin S. (2008). Interactive processes in matching identity and expressions of unfamiliar faces: evidence for mutual facilitation effects. Perception 37, 915–930. doi: 10.1068/p5925

- Li Q., Kambhamettu C., Ye J. (2008). An evaluation of identity representability of facial expressions using feature distributions. Neurocomputing 71, 1902–1912. doi: 10.1016/j.neucom.2007.05.001

- Liu C. H., Bhuiyan M. A., Ward J., Sui J. (2009). Transfer between pose and illumination training in face recognition. J. Exp. Psychol. Hum. Percept. Perform. 35, 939–947. doi: 10.1037/a0013710

- Meissner C. A., Brigham J. C. (2001). Thirty years of investigating the own-race bias in memory for faces: a meta-analytic review. Psychol. Public Policy Law 7, 3–35. doi: 10.1037/1076-8971.7.1.3

- Russell R., Biederman I., Nederhouser M., Sinha P. (2007). The utility of surface reflectance for the recognition of upright and inverted faces. Vision Res. 47, 157–165. doi: 10.1016/j.visres.2006.11.002

- Savaskan E., Müller S. E., Böhringer A., Philippsen C., Müller-Spahn F., Schächinger H. (2007). Age determines memory for face identity and expression. Psychogeriatrics 7, 49–57. doi: 10.1111/j.1479-8301.2007.00179.x

- Sergerie K., Lepage M., Armony J. L. (2005). A face to remember: emotional expression modulates prefrontal activity during memory formation. Neuroimage 24, 580–585. doi: 10.1016/j.neuroimage.2004.08.051

- Shapiro L. P., Brookins B., Gordon B., Nagel N. (1991). Verb effects during sentence processing. J. Exp. Psychol. Learn. Mem. Cogn. 17, 983–996. doi: 10.1037/0278-7393.17.5.983

- Shimamura A. P., Ross J., Bennett H. (2006). Memory for facial expressions: the power of a smile. Psychon. Bull. Rev. 13, 217–222. doi: 10.3758/BF03193833

- Valentine T. (1991). A unified account of the effects of distinctiveness, inversion and race in face recognition. Q. J. Exp. Psychol. 43A, 161–204.

- Wang Z., Bovik A. C., Sheikh H. R., Simoncelli E. P. (2004). Image quality assessment: from error visibility to structural similarity. IEEE Trans. Image Process. 13, 600–612. doi: 10.1109/TIP.2004.823822

- Yacoob Y., Davis L. (2002). “Smiling faces are better for face recognition,” in International Conference on Face Recognition and Gesture Analysis, Washington, DC, 59–64.

- Yin L., Wei X., Sun Y., Wang J., Rosato M. J. (2006). “A 3D facial expression database for facial behavior research,” in IEEE 7th International Conference on Automatic Face and Gesture Recognition, Southampton, 211–216.