Abstract

The last decade has produced an explosion in neuroscience research examining young children’s early processing of language that has implications for education. Noninvasive, safe functional brain measurements have now been proven feasible for use with children starting at birth. In the arena of language, the neural signatures of learning can be documented at a remarkably early point in development, and these early measures predict performance in children’s language and pre-reading abilities in the second, third, and fifth year of life, a finding with theoretical and educational import. There is evidence that children’s early mastery of language requires learning in a social context, and this finding also has important implications for education. Evidence relating socio-economic status (SES) to brain function for language suggests that SES should be considered a proxy for the opportunity to learn and that the complexity of language input is a significant factor in developing brain areas related to language. The data indicate that the opportunity to learn from complex stimuli and events is vital early in life, and that success in school begins in infancy.

Introduction

Developmental studies suggest that children learn more and learn earlier than previously thought. In the arena of language development, our studies show that children’s early learning is complex and multifaceted. For example, research shows that young children rely on what has been called “statistical learning,” a form of implicit learning that occurs as children interact in the world, to acquire the language spoken in their culture. However, new data also indicate that children require a social setting and social interaction with another human being to trigger their computational skills to learn from exposure to language. These data are challenging brain scientists to discover how brains actually work: how, in this case, computational brain areas and social brain areas mature during development and interact during learning. The results also challenge educational scientists to incorporate these findings about the social brain into teaching practices.

Behavioral and brain studies on developing children indicate that children’s skills, measured very early in infancy, predict their later performance and learning. For example, measures of phonetic learning in the first year of life predict language skills between 18 and 30 months of age, and also predict language abilities and pre-literacy skills at the age of 5 years. Moreover, by the age of 5, prior to formal schooling, our studies show that activation in brain areas related to language and literacy is strongly correlated with the socio-economic status (SES) of the children’s families. The implication of these findings is that children’s learning trajectories regarding language are influenced by their experiences well before the start of school.

In the next decade neuroscientists, educators, biologists, computer scientists, speech and hearing scientists, psychologists, and linguists will increasingly work together to understand how children’s critical “windows of opportunity” for learning work, what triggers their inception, and how learning can be encouraged once the optimal period for learning has passed. The ultimate goal is to alter the trajectories of learning to maximize language and literacy skills in all children.

To explore these topics, this review focuses on research in my laboratory on the youngest language learners—infants in the first year of life—and on the most elementary units of language—the consonants and vowels that make up words. Infants’ responses to the basic building blocks of speech provide an experimentally accessible porthole through which we can observe the interaction of nature and nurture in language acquisition. Recent studies show that infants’ early processing of the phonetic units in their language predicts future competence in language and literacy, contributing to theoretical debates about the nature of language and emphasizing the practical implications of the research as well as the potential for early interventions to support language and literacy. We are also beginning to discover how exposure to two languages early in infancy produces bilingualism and the effects of dual-language input on the brain. Bilingual children inform debates on the critical period, with implications for education, given the increasing number of bilingual children in the nation’s schools.

In this review I will also describe a current working hypothesis about the relationship between the “social brain” and learning, and its implications for brain development. I will argue that to “crack the speech code” infants combine a powerful set of domain-general computational and cognitive skills with their equally extraordinary social skills (Kuhl, 2007; 2011)—and explore what that means for theory and practice. Moreover, social experience with more than one language, either long-term experience as a simultaneous bilingual or short-term experience with a second language in the laboratory, is associated with increases in cognitive flexibility, in adults (Bialystok, 1991), in children (Carlson and Meltzoff, 2008), and in young infants (Conboy, Sommerville, and Kuhl, 2008; Conboy, Sommerville, Wicha, Romo, and Kuhl, 2011). Experience alters the trajectory of development in the young brain.

I have suggested that the social brain—in ways we have yet to understand—“gates” the computational mechanisms underlying learning in the domain of language (Kuhl, 2007; 2011). The assertion that social factors gate language learning may explain not only how typically developing children acquire language, but also why children with autism exhibit twin deficits in social cognition and language (see Kuhl, Coffey-Corina, Padden, and Dawson, 2005b for discussion). Moreover, this gating hypothesis may explain why social factors play a far more significant role than previously realized in human learning across domains throughout our lifetimes (Meltzoff, Kuhl, Movellan, and Sejnowski, 2009).

Windows to the Young Brain

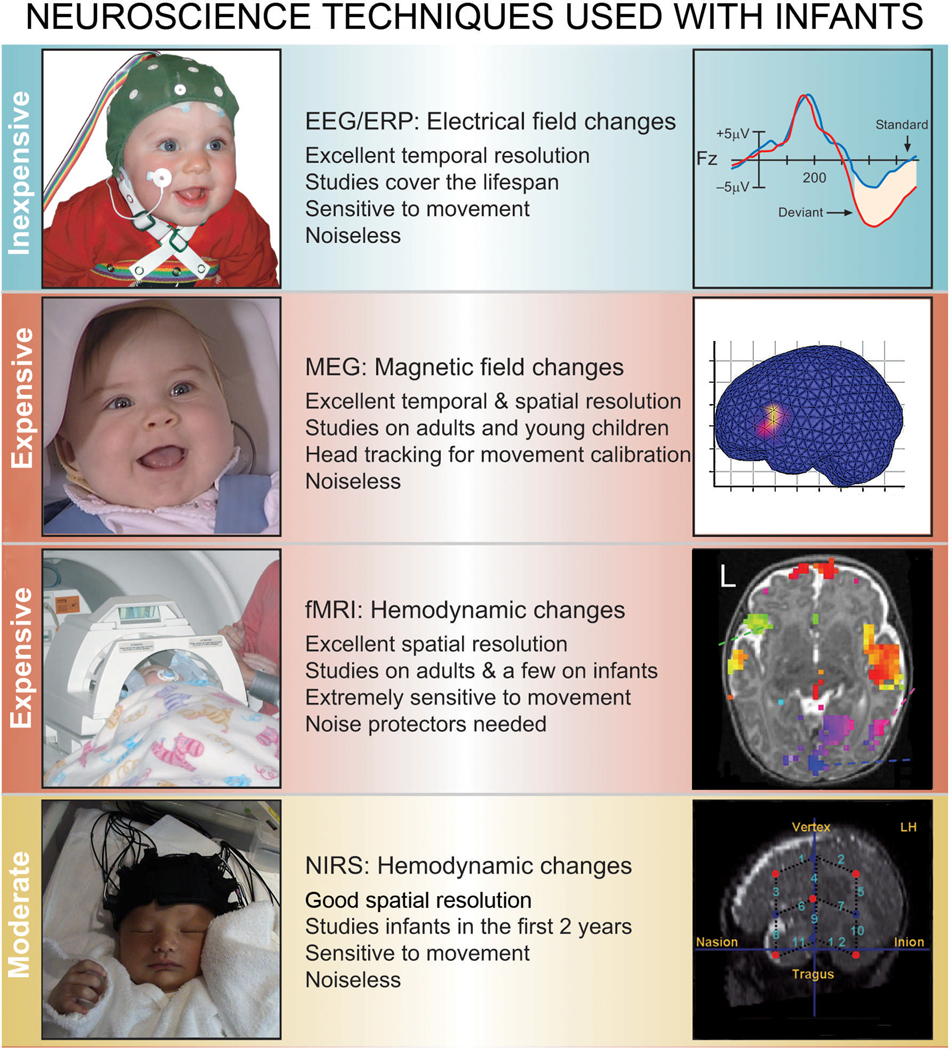

Rapid advances have been made in noninvasive techniques that examine language processing in young children (Figure 1). They include Electroencephalography (EEG)/Event-related Potentials (ERPs), Magnetoencephalography (MEG), functional Magnetic Resonance Imaging (fMRI), and Near-Infrared Spectroscopy (NIRS).

Figure 1.

Four techniques now used extensively with infants and young children to examine their responses to linguistic signals (From Kuhl and Rivera-Gaxiola, 2008).

Event-related Potentials (ERPs) have been widely used to study speech and language processing in infants and young children (for reviews, see Conboy, Rivera-Gaxiola, Silva-Pereyra, and Kuhl, 2008; Dehaene-Lambertz, Dehaene, and Hertz-Pannier, 2002; Friederici, 2005; Kuhl, 2004). ERPs, a part of the EEG, reflect electrical activity that is time-locked to the presentation of a specific sensory stimulus (for example, syllables or words) or a cognitive process (for example, recognition of a semantic violation within a sentence or phrase). With sensors placed on a child’s scalp, the activity of neural networks firing in a coordinated and synchronous fashion in open field configurations can be measured, and voltage changes occurring as a function of cortical neural activity can be detected. ERPs provide precise time resolution (milliseconds), making them well suited for studying the high-speed and temporally ordered structure of human speech. ERP experiments can also be carried out in populations who cannot provide overt responses because of age or cognitive impairment, such as children with autism (Coffey-Corina, Padden, Estes, and Kuhl, 2011; Kuhl et al., 2005b). However, EEG has limited spatial resolution for localizing the sources of brain activation.
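Because an ERP is time-locked to the stimulus while the background EEG is not, averaging many stimulus-locked windows keeps the evoked response and shrinks the noise roughly by the square root of the number of trials. The sketch below illustrates that averaging step on a synthetic recording. Everything in it is an assumption made for illustration: the 250 Hz sampling rate, the Gaussian-shaped deflection at 200 ms, the white Gaussian noise (real EEG noise is not white), and the hypothetical helper names `simulate_recording` and `average_epochs`. It is not the analysis pipeline of any study cited here.

```python
import math
import random

FS = 250  # hypothetical sampling rate (samples per second)


def erp_template(n_samples, latency_s=0.2, width_s=0.03, amp_uv=-5.0):
    """Hypothetical evoked response: a Gaussian-shaped deflection at a fixed latency."""
    return [amp_uv * math.exp(-((i / FS - latency_s) ** 2) / (2 * width_s ** 2))
            for i in range(n_samples)]


def simulate_recording(onsets, template, total_samples, noise_uv=10.0, seed=0):
    """Synthetic continuous 'EEG': white noise plus the template added at each stimulus onset."""
    rng = random.Random(seed)
    signal = [rng.gauss(0.0, noise_uv) for _ in range(total_samples)]
    for onset in onsets:
        for i, value in enumerate(template):
            signal[onset + i] += value
    return signal


def average_epochs(signal, onsets, n_samples):
    """Cut a fixed window starting at each onset (time-locking) and average sample by sample."""
    epochs = [signal[o:o + n_samples] for o in onsets]
    return [sum(column) / len(epochs) for column in zip(*epochs)]


def rms_error(a, b):
    """Root-mean-square difference between two equal-length waveforms."""
    return math.sqrt(sum((x - y) ** 2 for x, y in zip(a, b)) / len(a))


n_trials = 300
window = round(0.6 * FS)                    # 600 ms epoch after each stimulus
onsets = [k * FS for k in range(n_trials)]  # one stimulus per second
template = erp_template(window)
eeg = simulate_recording(onsets, template, (n_trials + 1) * FS)

erp = average_epochs(eeg, onsets, window)
single = eeg[onsets[0]:onsets[0] + window]
peak_latency_s = min(range(window), key=lambda i: erp[i]) / FS
print(f"single-trial RMS error: {rms_error(single, template):.2f} uV")
print(f"{n_trials}-trial average RMS error: {rms_error(erp, template):.2f} uV")
print(f"estimated peak latency: {peak_latency_s * 1000:.0f} ms")
```

A single trial is dominated by noise, while the 300-trial average recovers the deflection and its latency, which is why ERP studies present many repetitions of each syllable or word.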

Magnetoencephalography (MEG) is another brain imaging technique that tracks activity in the brain with exquisite temporal resolution. The SQUID (superconducting quantum interference device) sensors located within the MEG helmet measure the minute magnetic fields associated with electrical currents that are produced by the brain when it is performing sensory, motor, or cognitive tasks. MEG allows precise localization of the neural currents that give rise to the magnetic fields. Cheour et al. (2004) and Imada et al. (2006) used new head-tracking methods and MEG to show phonetic discrimination in newborns and in infants during the first year of life. Sophisticated head-tracking software and hardware enable investigators to correct for infants’ head movements and allow the examination of multiple brain areas as infants listen to speech (Imada et al., 2006). Travis et al. (2011) used MEG to examine the spatiotemporal cortical dynamics of word understanding in 12–18-month-old infants and adults, and showed that both groups encode lexico-semantic information in left frontotemporal brain areas, suggesting that neural mechanisms are established in infancy and operate across the lifespan. MEG (as well as EEG) techniques are completely safe and noiseless.

Magnetic resonance imaging (MRI) provides static structural/anatomical pictures of the brain, and can be combined with MEG and/or EEG. Structural MRIs show anatomical differences in brain regions across the lifespan, and have recently been used to predict second-language phonetic learning in adults (Golestani, Molko, Dehaene, LeBihan, and Pallier, 2007). Structural MRI measures in young infants identify the size of various brain structures and these measures correlate with later language abilities (Ortiz-Mantilla, Choe, Flax, Grant, and Benasich, 2010). When structural MRI images are superimposed on the physiological activity detected by MEG or EEG, the spatial localization of brain activities recorded by these methods can be improved.

Functional magnetic resonance imaging (fMRI) is a popular method of neuroimaging in adults because it provides high spatial-resolution maps of neural activity across the entire brain (e.g., Gernsbacher and Kaschak, 2003). Unlike EEG and MEG, fMRI does not directly detect neural activity, but rather the changes in blood-oxygenation that occur in response to neural activation. Neural events happen in milliseconds; however, the blood-oxygenation changes that they induce are spread out over several seconds, thereby severely limiting fMRI's temporal resolution. Few studies have attempted fMRI with infants because the technique requires infants to be perfectly still, and because the MRI device produces loud sounds making it necessary to shield infants' ears. fMRI studies allow precise localization of brain activity and a few pioneering studies show remarkable similarity in the structures responsive to language in infants and adults (Dehaene-Lambertz et al., 2002; 2006).
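The temporal-resolution problem can be made concrete by modeling the blood-oxygenation response to a single brief neural event. The sketch below assumes the double-gamma "canonical" hemodynamic response function popularized by the SPM software (a gamma curve with shape 6, minus one-sixth of a gamma undershoot with shape 16, time in seconds). These parameters are an adult-based convention chosen for illustration; infant hemodynamics may differ. The sketch reports when the response peaks, how long it stays above half its maximum, and how little the response changes when the neural event is shifted by 100 ms.

```python
import math


def gamma_pdf(t, shape, scale=1.0):
    """Gamma probability density; zero for t <= 0."""
    if t <= 0:
        return 0.0
    return t ** (shape - 1) * math.exp(-t / scale) / (math.gamma(shape) * scale ** shape)


def canonical_hrf(t):
    """Assumed SPM-style double-gamma HRF: positive response minus a later undershoot."""
    return gamma_pdf(t, 6.0) - gamma_pdf(t, 16.0) / 6.0


dt = 0.1  # seconds between samples
times = [i * dt for i in range(301)]  # 0 to 30 s after a brief neural event at t = 0
response = [canonical_hrf(t) for t in times]

peak = max(response)
peak_time = times[response.index(peak)]
above_half = [t for t, r in zip(times, response) if r >= peak / 2]
fwhm = above_half[-1] - above_half[0]
print(f"peak at ~{peak_time:.1f} s; above half-maximum for ~{fwhm:.1f} s")

# A second neural event 100 ms later yields an almost indistinguishable response.
shifted = [canonical_hrf(t - 0.1) for t in times]
max_gap = max(abs(a - b) for a, b in zip(response, shifted))
print(f"largest difference between the two responses: {max_gap / peak:.1%} of peak")
```

Under these assumptions a millisecond-scale event produces a response that peaks about 5 s later and lasts several seconds, so events separated by a fraction of a second are hard to tell apart.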

Near-Infrared Spectroscopy (NIRS) also measures cerebral hemodynamic responses in relation to neural activity, but utilizes the absorption of light, which is sensitive to the concentration of hemoglobin, to measure activation (Aslin and Mehler, 2005). NIRS measures changes in blood oxy- and deoxy-hemoglobin concentrations in the brain as well as total blood volume changes in various regions of the cerebral cortex using near infrared light. The NIRS system can determine the activity in specific regions of the brain by continuously monitoring blood hemoglobin level. Reports have begun to appear on infants in the first two years of life, testing infant responses to phonemes as well as longer stretches of speech such as “motherese” and forward versus reversed sentences (Bortfeld, Wruck, and Boas, 2007; Homae, Watanabe, Nakano, Asakawa, and Taga, 2006; Peña, Bonatti, Nespor, and Mehler, 2002; Taga and Asakawa, 2007). As with other hemodynamic techniques such as fMRI, NIRS typically does not provide good temporal resolution. However, event-related NIRS paradigms are being developed (Gratton and Fabiani, 2001a,b). One of the most important potential uses of the NIRS technique is possible co-registration with other testing techniques such as EEG and MEG.
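The step from measured light to hemoglobin changes is commonly done with the modified Beer-Lambert law: at each wavelength λ, the change in optical density is ΔOD(λ) = DPF(λ) · d · [ε_HbO(λ) ΔHbO + ε_HbR(λ) ΔHbR], where d is the source-detector distance and DPF a differential pathlength factor. Measuring at two wavelengths gives two equations in two unknowns. The sketch below solves that system. The extinction coefficients, distance, and DPF are placeholders chosen for illustration (real analyses use tabulated coefficients and age-appropriate pathlength factors), and the function names are hypothetical.

```python
def delta_od(d_hbo, d_hbr, eps, distance_cm, dpf):
    """Forward model: optical-density change at one wavelength (modified Beer-Lambert law)."""
    eps_hbo, eps_hbr = eps
    return dpf * distance_cm * (eps_hbo * d_hbo + eps_hbr * d_hbr)


def hemoglobin_changes(dod1, dod2, eps1, eps2, distance_cm, dpf1, dpf2):
    """Invert the two-wavelength system for (delta HbO, delta HbR) using Cramer's rule."""
    # Divide out the effective optical path length at each wavelength.
    b1 = dod1 / (distance_cm * dpf1)
    b2 = dod2 / (distance_cm * dpf2)
    (a11, a12), (a21, a22) = eps1, eps2
    det = a11 * a22 - a12 * a21
    if abs(det) < 1e-12:
        raise ValueError("the two wavelengths do not separate HbO from HbR")
    d_hbo = (b1 * a22 - a12 * b2) / det
    d_hbr = (a11 * b2 - a21 * b1) / det
    return d_hbo, d_hbr


# Placeholder coefficients (arbitrary units): HbR absorbs more at the shorter
# wavelength and HbO more at the longer one, so the two equations are independent.
EPS_SHORT = (0.6, 1.5)  # (eps_HbO, eps_HbR)
EPS_LONG = (1.1, 0.8)
DISTANCE_CM, DPF = 3.0, 6.0

true_change = (0.5, -0.2)  # simulated activation: HbO rises, HbR falls
dod_short = delta_od(*true_change, EPS_SHORT, DISTANCE_CM, DPF)
dod_long = delta_od(*true_change, EPS_LONG, DISTANCE_CM, DPF)
recovered = hemoglobin_changes(dod_short, dod_long, EPS_SHORT, EPS_LONG, DISTANCE_CM, DPF, DPF)
print(recovered)
```

Because the conversion is done sample by sample, the time course of the recovered hemoglobin signal inherits the slow hemodynamics, which is why NIRS, like fMRI, has limited temporal resolution.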

Language Exhibits a “Critical Period” for Learning

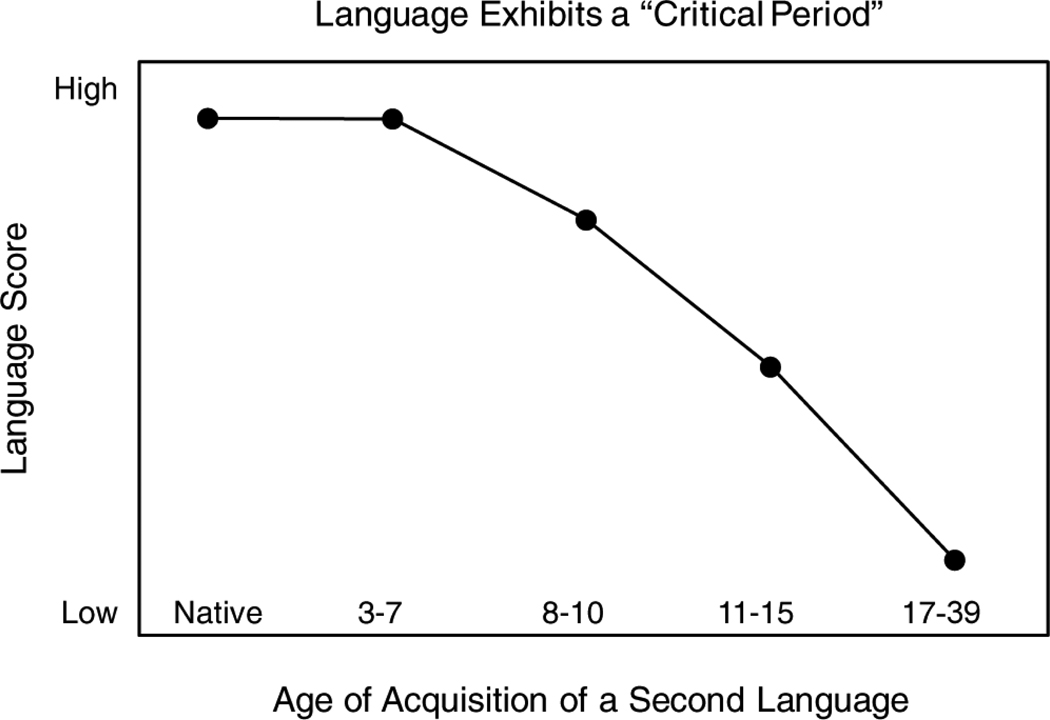

A stage-setting concept for human language learning is the graph shown in Figure 2, redrawn from Johnson and Newport’s (1989) study of English grammar in native speakers of Korean learning English as a second language. The graph, as rendered, is a simplified schematic of second-language competence as a function of the age of second-language acquisition.

Figure 2.

The relationship between age of acquisition of a second language and language skill (adapted from Johnson and Newport, 1989).

Figure 2 is surprising from the standpoint of more general human learning. In the domain of language, infants and young children are superior learners when compared to adults, in spite of adults’ cognitive superiority. Language is one of the classic examples of a “critical” or “sensitive” period in neurobiology (Bruer, 2008; Johnson and Newport, 1989; Knudsen, 2004; Kuhl, 2004; Newport, Bavelier, and Neville, 2001).

Does the “critical period” diagram mean that it is impossible to learn a new language after childhood? Both anecdotal and experimental evidence support the idea that the answer to this question is “No.” A new language can be learned at any age, but most agree that the level of expertise will differ from that of a native speaker if exposure to the new language occurs after puberty. After puberty, mastery of pronunciation and grammar is unlikely to be identical to that of a native speaker, though word learning does not appear to be as sensitive to age and remains good throughout life.

Scientists generally agree that the “critical period” learning curve is representative of data across a wide variety of second-language learning studies (Bialystok and Hakuta, 1994; Birdsong and Molis, 2001; Flege, Yeni-Komshian, and Liu, 1999; Johnson and Newport, 1989; Mayberry and Lock, 2003; Neville et al., 1997; Weber-Fox and Neville, 1999; Yeni-Komshian, Flege, and Liu, 2000; though see Birdsong, 1992; Snow and Hoefnagel-Hohle, 1978; White and Genesee, 1996). However, only a few studies have examined language learning in both children and adults. Snow and Hoefnagel-Hohle (1978) made such a comparison and report that English speakers learning Dutch in Holland over a one-year period learned it most quickly and efficiently if they were between 12–15 years of age, rather than younger or older, which is a very intriguing result. More second-language learning studies are needed that vary the age of the learner, and assess both the speed of initial learning as well as the final level of proficiency.

One important point is that not all aspects of language exhibit the same temporally defined critical “window” as that shown in Figure 2. The developmental timing of critical periods for learning phonetic, lexical, and syntactic levels of language varies, though studies cannot yet document the precise timing at each individual level. Studies in typically developing monolingual children indicate, for example, that an important period for phonetic learning occurs prior to the end of the first year, whereas syntactic learning flourishes between 18 and 36 months of age. Vocabulary development “explodes” at 18 months of age. One goal of future research is to identify the optimum learning periods for phonological, lexical, and grammatical levels of language, so that we understand how they overlap and differ. This in turn will assist in the development of novel methods that improve second-language learning at all ages.

Given the current state of research, there is widespread agreement that we do not learn equally well over the lifespan. Theoretical work is therefore focused on attempts to explain the phenomenon. What accounts for adults’ inability to learn a new language with the facility of an infant? One of the candidate explanations was Lenneberg’s hypothesis that development of the corpus callosum affected language learning (Lenneberg, 1967; Newport et al., 2001). More recent hypotheses take a different perspective. Newport raised a “less is more” hypothesis, which suggests that infants’ limited cognitive capacities actually allow superior learning of the simplified language spoken to infants (Newport, 1990). Work in my laboratory led me to advance the concept of neural commitment, the idea that neural circuitry and overall architecture develop early in infancy to detect the phonetic and prosodic patterns of speech (Kuhl, 2004; Zhang, Kuhl, Imada, Kotani, and Tohkura, 2005; Zhang et al., 2009). This architecture is designed to maximize the efficiency of processing for the language(s) experienced by the infant. Once established, the neural architecture arising from French or Tagalog, for example, impedes learning of new patterns that do not conform. I will return to the concept of the critical period for language learning, and the role that computational, cognitive, and social skills may play in accounting for the relatively poor performance of adults attempting to learn a second language.

Phonetic Learning

Perception of the phonetic units of speech—the vowels and consonants that make up words—is one of the most widely studied linguistic skills in infancy and adulthood. Phonetic perception and the role of experience in learning can be studied in children at birth, during development as they are bathed in a particular language, in adults from different cultures, in children with developmental disabilities, and in nonhuman animals. Phonetic perception studies provide critical tests of theories of language development and its evolution. An extensive literature on developmental speech perception exists and brain measures are adding substantially to our knowledge of phonetic development and learning (see Kuhl, 2004; Kuhl et al., 2008; Werker and Curtin, 2005).

The world’s languages contain approximately 600 consonants and 200 vowels (Ladefoged, 2001). Each language uses a unique set of about 40 distinct elements, phonemes, which change the meaning of a word (e.g., from bat to pat in English). But phonemes are actually groups of non-identical sounds, phonetic units, which are functionally equivalent in the language. Japanese-learning infants have to group the phonetic units r and l into a single phonemic category (Japanese r), whereas English-learning infants must uphold the distinction to separate rake from lake. Similarly, Spanish-learning infants must distinguish phonetic units critical to Spanish words (baño and paño), whereas English-learning infants must combine them into a single category (English b). If infants were exposed only to the subset of phonetic units that will eventually be used phonemically to differentiate words in their language, the problem would be trivial. But infants are exposed to many more phonetic variants than will be used phonemically, and have to derive the appropriate groupings used in their specific language. The baby’s task in the first year of life, therefore, is to make some progress in figuring out the composition of the 40-odd phonemic categories in their language(s) before trying to acquire words that depend on these elementary units.
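The grouping problem can be pictured as a language-specific mapping from phonetic units onto phonemic categories: two sounds distinguish words only if the listener’s language maps them to different categories. The sketch below encodes the two examples from the paragraph above as a lookup table. The unit labels, the table, and the function name are simplified, hypothetical stand-ins, not a phonological analysis of any language.

```python
# Hypothetical, simplified maps from phonetic units (what infants hear)
# to phonemic categories (what distinguishes words in each language).
PHONEME_MAP = {
    "English": {"r-like": "/r/", "l-like": "/l/",
                "prevoiced b": "/b/", "unaspirated p": "/b/"},
    "Japanese": {"r-like": "Japanese r", "l-like": "Japanese r"},
    "Spanish": {"prevoiced b": "/b/", "unaspirated p": "/p/"},
}


def distinguishes_words(language, unit_a, unit_b):
    """True if the language assigns the two phonetic units to different phonemes."""
    categories = PHONEME_MAP[language]
    return categories[unit_a] != categories[unit_b]


for language, a, b in [("English", "r-like", "l-like"),
                       ("Japanese", "r-like", "l-like"),
                       ("Spanish", "prevoiced b", "unaspirated p"),
                       ("English", "prevoiced b", "unaspirated p")]:
    print(f"{language}: {a} vs {b} -> {distinguishes_words(language, a, b)}")
```

In these terms, the infant is not handed the table; the task in the first year is to infer the table for the language(s) being heard.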

Learning to produce the sounds that will characterize infants as speakers of their “mother tongue” is equally challenging, and is not completely mastered until the age of 8 years (Ferguson, Menn, and Stoel-Gammon, 1992). Yet, by 10 months of age, differences can be discerned in the babbling of infants raised in different countries (de Boysson-Bardies, 1993), and in the laboratory, vocal imitation can be elicited by 20 weeks (Kuhl and Meltzoff, 1982). The speaking patterns we adopt early in life last a lifetime (Flege, 1991). My colleague and I have suggested that this kind of indelible learning stems from a linkage between sensory and motor experience; sensory experience with a specific language establishes auditory patterns stored in memory that are unique to that language, and these representations guide infants’ successive motor approximations until a match is achieved (Kuhl and Meltzoff, 1996). This ability to imitate vocally may also depend on the brain’s social understanding mechanisms which form a human mirroring system for seamless social interaction (Hari and Kujala, 2009).
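The sensory-motor account can be read as an error-correcting loop: a stored auditory target is compared with the sound the infant hears itself produce, and the mismatch guides the next attempt. The toy sketch below is not a model from Kuhl and Meltzoff (1996); it assumes a two-dimensional auditory target (two formant-like values in hertz), a hypothetical linear "vocal tract" that the learner never inspects directly, and a correction proportional to the remaining mismatch.

```python
def hypothetical_vocal_tract(command):
    """Hypothetical mapping from two motor settings to two formant-like values (Hz)."""
    return [300.0 + 0.8 * command[0], 900.0 + 0.8 * command[1]]


def imitate(target, produce, step=0.5, tol_hz=1.0, max_attempts=100):
    """Adjust a motor command until the self-heard output matches the stored target."""
    command = [0.0] * len(target)
    for attempt in range(1, max_attempts + 1):
        heard = produce(command)  # auditory feedback from the infant's own vocalization
        error = [t - h for t, h in zip(target, heard)]
        if max(abs(e) for e in error) < tol_hz:
            return command, attempt
        # Successive approximation: nudge each motor setting in proportion to the mismatch.
        command = [c + step * e for c, e in zip(command, error)]
    return command, max_attempts


stored_target = [700.0, 1200.0]  # hypothetical auditory pattern held in memory
command, attempts = imitate(stored_target, hypothetical_vocal_tract)
print(f"matched after {attempts} attempts: {hypothetical_vocal_tract(command)}")
```

The loop converges here only because the step size suits the hypothetical mapping (each attempt removes 40% of the remaining mismatch); a much larger step would overshoot the target.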

What enables the kind of learning we see in infants for speech? No machine in the world can derive the phonemic inventory of a language from natural language input (Rabiner and Huang, 1993), though models improve when exposed to “motherese,” the linguistically simplified and acoustically exaggerated speech that adults universally use when speaking to infants (de Boer and Kuhl, 2003). The variability in speech input is simply too enormous; Japanese adults produce both English r- and l- like sounds, exposing Japanese infants to both sounds (Lotto, Sato, and Diehl, 2004; Werker et al., 2007). How do Japanese infants learn that these two sounds do not distinguish words in their language, and that these differences should be ignored? Similarly, English speakers produce Spanish b and p, exposing American infants to both categories of sound (Abramson and Lisker, 1970). How do American infants learn that these sounds are not important in distinguishing words in English? An important discovery in the 1970s was that infants initially hear all these phonetic differences (Eimas, 1975; Eimas, Siqueland, Jusczyk, and Vigorito, 1971; Lasky, Syrdal-Lasky, and Klein, 1975; Werker and Lalonde, 1988). What we must explain is how infants learn to group phonetic units into phonemic categories that make a difference in their language.

The Timing of Phonetic Learning

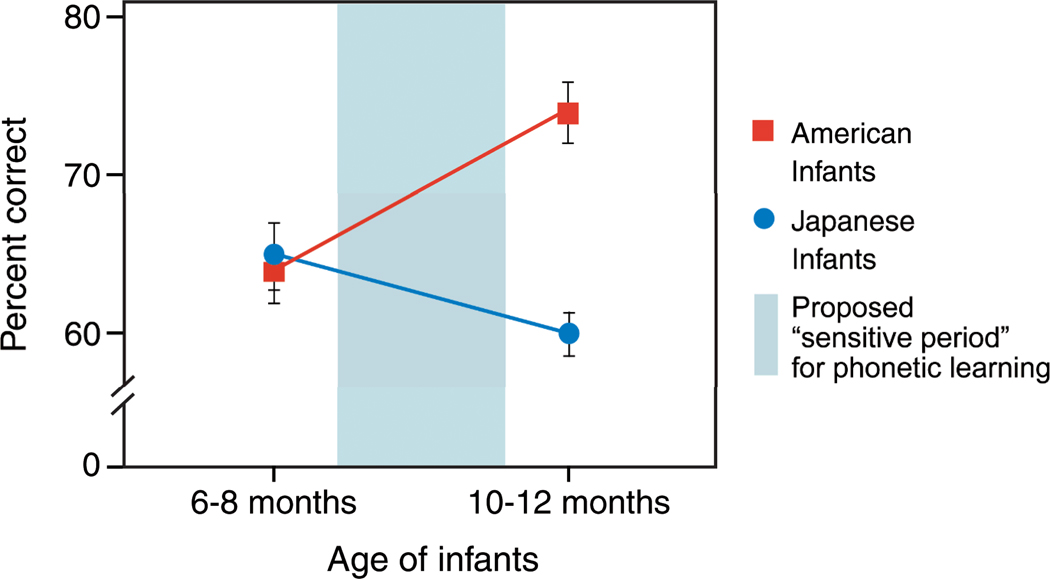

An important discovery in the 1980s identified the timing of a crucial change in infant perception. The transition from an early universal perceptual ability to distinguish all the phonetic units of all languages to a more language-specific pattern of perception occurred very early in development, between 6 and 12 months of age (Werker and Tees, 1984), and initial work demonstrated that infants’ perception of nonnative distinctions declines during the second half of the first year of life (Best and McRoberts, 2003; Rivera-Gaxiola, Silva-Pereyra, and Kuhl, 2005a; Tsao, Liu, and Kuhl, 2006; Werker and Tees, 1984). Work in this laboratory also established a new fact: at the same time that nonnative perception declines, native-language speech perception shows a significant increase. Japanese infants’ discrimination of English r-l declines between 8 and 10 months of age, while at the same time in development American infants’ discrimination of the same sounds shows an increase (Kuhl et al., 2006) (Figure 3).

Figure 3.

Effects of age on discrimination of the American English /ra-la/ phonetic contrast by American and Japanese infants at 6–8 and 10–12 months of age. Mean percent correct scores are shown with standard errors indicated (adapted from Kuhl et al., 2006).

We argued that the increase observed in native-language phonetic perception represented a critical step in initial language learning (Kuhl et al., 2006). In later studies testing the same infants on both native and nonnative phonetic contrasts, we showed a significant negative correlation between discrimination of the two kinds of contrasts: as native speech perception abilities increased in a particular child, that child’s nonnative abilities declined (Kuhl, Conboy, Padden, Nelson, and Pruitt, 2005a). This was precisely what our model of early language predicted (Kuhl, 2004; Kuhl et al., 2008). In the early period, our data showed that while better discrimination on a native contrast predicts rapid growth in later language abilities, better discrimination on nonnative contrasts predicts slower language growth (Kuhl et al., 2005a; Kuhl et al., 2008). Better native abilities enhanced infants’ skills in detecting words and this vaulted them towards language, whereas better nonnative abilities indicated that infants remained in an earlier phase of development, still sensitive to all phonetic differences. Infants’ ability to learn which phonetic units are relevant in the language(s) they experience, while decreasing or inhibiting their attention to the phonetic units that do not distinguish words in their language, is the necessary step required to begin the path toward language. Auditory acuity per se is not the critical factor, since better sensory abilities would be expected to improve both native and nonnative speech discrimination.

These data led to a theoretical model (Native Language Magnet, expanded, or NLM-e; see Kuhl et al., 2008 for details) which argues that an implicit learning process commits the brain’s neural circuitry to the properties of native-language speech, and that this neural commitment has bi-directional effects: it increases learning for patterns (such as words) that are compatible with the learned phonetic structure, while decreasing perception of nonnative patterns that do not match the learned scheme.

What Enables Phonetic Learning Between 8 and 10 Months of Age?

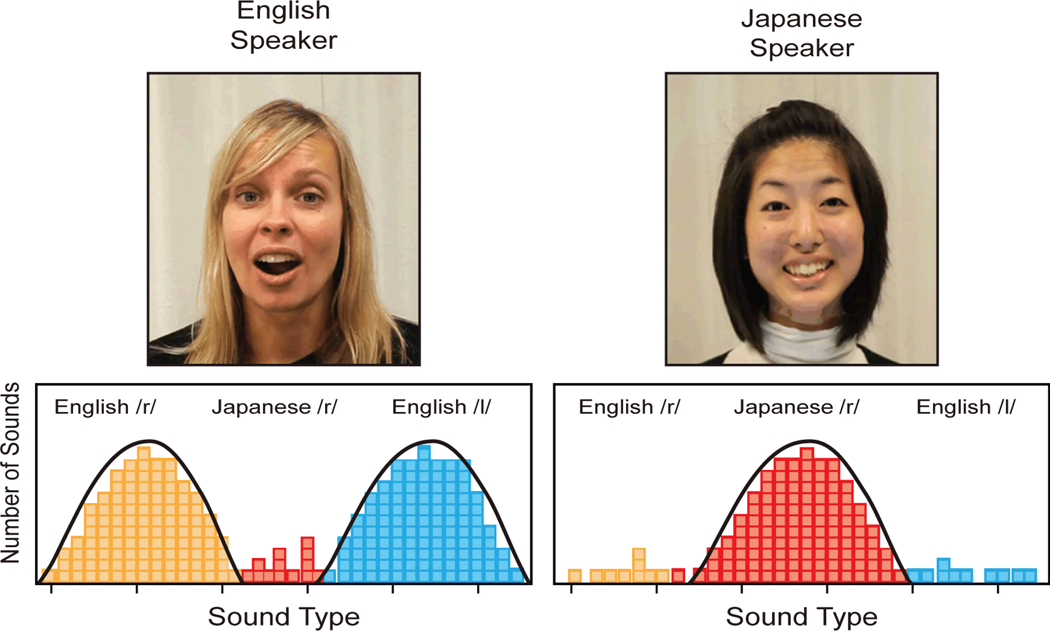

Children’s Computational Skills

An implicit form of computational learning, referred to as “statistical learning” (Saffran, Aslin, and Newport, 1996), was discovered to play a role in infants’ transition from universal to language-specific listeners. For example, studies show that adult speakers of English and Japanese produce both English r- and l-like sounds, so it is not the mere presence of the sound in language spoken to infants that accounts for learning, but instead the patterns of distributional frequency of sounds across the two languages. The idealized model of learning is shown in Figure 4. When infants listen to English and Japanese, they take into account the distributional properties of the phonetic units contained in the two languages. Infants are sensitive to these distributional frequency differences in language input, and the distributional data affect their perception (Kuhl, Williams, Lacerda, Stevens, and Lindblom, 1992; Maye, Weiss, and Aslin, 2008; Maye, Werker, and Gerken, 2002; Teinonen, Fellman, Näätänen, Alku, and Huotilainen, 2009). In fact, it has been shown that these distributional differences are exaggerated in “motherese,” the prosodically and phonetically stretched utterances that are near-universal in language spoken to children around the world (Kuhl et al., 1997; Vallabha, McClelland, Pons, Werker, and Amano, 2007; Werker et al., 2007).

Figure 4.

Idealized case of distributional learning. Two women speak “motherese,” one in English and the other in Japanese. Distributions of English /r/ and /l/, as well as Japanese /r/, are tabulated. Infants are sensitive to these distributional cues, and during the critical period plasticity is induced, but only in a social context (modified from Kuhl, 2010).

As illustrated in the idealized case (Figure 4), the distributions of English and Japanese differ: English motherese contains many English /r/ and /l/ sounds and very few of the Japanese retroflex /r/ sounds, while the reverse is true for Japanese motherese. A variety of studies show that infants pick up the distributional frequency patterns in ambient speech, whether they experience them during short-term laboratory experiments or over months in natural environments, and that this experience alters phonetic perception. Statistical learning from the distributional properties in speech thus supports infants’ transition in early development from the “universal” state of perception they exhibited at birth to the “native” state of phonetic listening exhibited at the end of the first year of life.
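An idealized version of this distributional account fits in a few lines: tally incoming tokens along an acoustic dimension and count how many separate clusters the tallies form. In the sketch below, the single 0-to-1 acoustic scale, the category means and spreads, the token counts, and the rule that a cluster is a run of bins above 25% of the tallest bin are all hypothetical choices. Placing the Japanese category midway between English /r/ and /l/ is a simplification in the spirit of the idealized case in Figure 4, not real acoustic data, and the code is not the procedure used in any of the studies cited.

```python
import random


def sample_tokens(categories, n_per_category, seed=0):
    """Draw tokens from Gaussian categories given as (mean, sd) on a 0-1 acoustic scale."""
    rng = random.Random(seed)
    return [rng.gauss(mean, sd) for mean, sd in categories for _ in range(n_per_category)]


def histogram(tokens, n_bins=50, lo=0.0, hi=1.0):
    """Tally how often tokens fall in each interval of the acoustic dimension."""
    counts = [0] * n_bins
    width = (hi - lo) / n_bins
    for x in tokens:
        if lo <= x < hi:
            counts[min(int((x - lo) / width), n_bins - 1)] += 1
    return counts


def count_clusters(counts, threshold_frac=0.25):
    """Count separate runs of bins whose tally exceeds a fraction of the tallest bin."""
    threshold = threshold_frac * max(counts)
    clusters, inside = 0, False
    for c in counts:
        if c > threshold and not inside:
            clusters += 1
            inside = True
        elif c <= threshold:
            inside = False
    return clusters


# Idealized input: English motherese is bimodal (r and l), Japanese unimodal (Japanese r).
english_input = sample_tokens([(0.3, 0.06), (0.7, 0.06)], 10_000)
japanese_input = sample_tokens([(0.5, 0.08)], 20_000)
print("English categories inferred:", count_clusters(histogram(english_input)))
print("Japanese categories inferred:", count_clusters(histogram(japanese_input)))
```

Counting runs above a threshold, rather than every local maximum, keeps sampling noise from creating spurious peaks; the same sounds can thus support two categories for one learner and one category for another, depending only on their frequency distribution.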

From a theoretical standpoint, brain plasticity for speech could be described as a statistical process. Infants build up statistical distributions of the sounds contained in the language they hear, and at some point these distributional properties become stable—additional language input does not cause the overall statistical distribution of sounds to change substantially and, according to NLM-e, this would cause the organism to become less sensitive to language input. This change in plasticity with experience may be due to a statistical process: when experience produces a stable distribution of sounds, plasticity is reduced. Hypothetically, a child’s distribution of the vowel “ah” might stabilize when she hears her one-millionth token of the vowel “ah,” and this stability could instigate a closure of the critical period. On this account, plasticity is independent of time, and instead dependent on the amount and the variability of input provided by experience. The NLM-e account lends itself to testable hypotheses, and empirical tests of the tenets of the model are underway. Studies of bilingual infants, reviewed later in this chapter, provide one example of an empirical test of the model. In the case of bilingualism, increased variability in language input may affect the rate at which distributional stability is achieved in development. In other words, NLM-e proposes that bilingual children stay “open” longer to the effects of language experience, and therefore that bilingual children will show distinct patterns in early development, different from their monolingual peers (see Bilingual Language Learners, below). From a statistical standpoint, bilingual children may achieve a stable distribution of the sounds in their two languages at a later point in development when compared to monolingual children, therefore exhibiting greater plasticity than monolingual children at the same age. Experience drives plasticity.
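The NLM-e idea that plasticity depends on the amount and variability of input, rather than on time, can be illustrated with a toy stopping rule. In the sketch below, a learner updates a running estimate of one category’s center with each token (using Welford’s online algorithm) and declares the estimate "stable" once its standard error falls below a tolerance. The tolerance, the minimum token count, and the modeling of bilingual input simply as input with twice the spread are hypothetical assumptions for illustration, not parameters of NLM-e.

```python
import math
import random


def tokens_until_stable(spread, tolerance=0.05, seed=0, min_tokens=30, max_tokens=1_000_000):
    """Number of tokens heard before the running estimate of a category's center stabilizes."""
    rng = random.Random(seed)
    n, mean, sum_sq = 0, 0.0, 0.0
    while n < max_tokens:
        x = rng.gauss(0.0, spread)
        n += 1
        # Welford's online update of the running mean and sum of squared deviations.
        delta = x - mean
        mean += delta / n
        sum_sq += delta * (x - mean)
        if n >= min_tokens:
            standard_error = math.sqrt(sum_sq / (n - 1) / n)
            if standard_error < tolerance:
                return n  # judged stable: in this toy, plasticity would close here
    return max_tokens


monolingual = tokens_until_stable(spread=1.0)
bilingual = tokens_until_stable(spread=2.0)  # hypothetical: more variable input
print(f"less variable input stabilizes after {monolingual} tokens")
print(f"more variable input stabilizes after {bilingual} tokens")
print(f"rough expectation (spread / tolerance) ** 2: {(1.0 / 0.05) ** 2:.0f} vs {(2.0 / 0.05) ** 2:.0f}")
```

Because the stopping point scales roughly with (spread / tolerance) squared, doubling the variability of the input roughly quadruples the number of tokens needed before the toy learner "closes," which is one way to picture bilingual learners staying open longer.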

The Social Component: A Possible Trigger for Critical Period Onset?

New studies suggested that infants’ computational abilities alone could not account for phonetic learning. Our studies demonstrated that infant language learning in complex natural environments required something more than raw computation. Laboratory studies testing infant phonetic and word learning from exposure to complex natural language demonstrated limits on statistical learning, and provided new information suggesting that social brain systems are integrally involved and, in fact, may be necessary to trigger natural language learning (Kuhl, Tsao, and Liu, 2003; Conboy and Kuhl, 2011).

The new experiments tested infants in the following way: At 9 months of age, when the initial universal pattern of infant perception is changing to one that is more language-specific, infants were exposed to a foreign language for the first time (Kuhl et al., 2003). Nine-month-old American infants listened to 4 different native speakers of Mandarin during 12 sessions scheduled over 4–5 weeks. The foreign language “tutors” read books and played with toys in sessions that were unscripted. A control group was also exposed for 12 sessions but heard only English from native speakers. After infants in the experimental Mandarin exposure group and the English control group completed their sessions, they were tested with a Mandarin phonetic contrast that does not occur in English. Both behavioral and ERP methods were used. The results indicated that infants showed a remarkable ability to learn from the “live-person” sessions – after exposure, they performed significantly better on the Mandarin contrast when compared to the control group that heard only English. In fact, they performed equivalently to infants of the same age tested in Taiwan who had been listening to Mandarin for 10 months (Kuhl et al., 2003).

The study revealed that infants can learn from first-time natural exposure to a foreign language at 9 months, and answered what was initially the experimental question: Can infants learn the statistical structure of phonemes in a new language given first-time exposure at 9 months of age? If infants required a long-term history of listening to that language, as would be the case if infants needed to build up statistical distributions over the initial 9 months of life, the answer to our question would have been no. However, the data clearly showed that infants are capable of learning at 9 months when exposed to a new language. Moreover, learning was durable. Infants returned to the laboratory for their behavioral discrimination tests between 2 and 12 days after the final language exposure session, and between 8 and 33 days for their ERP measurements. No “forgetting” of the Mandarin contrast occurred during the 2- to 33-day delay.

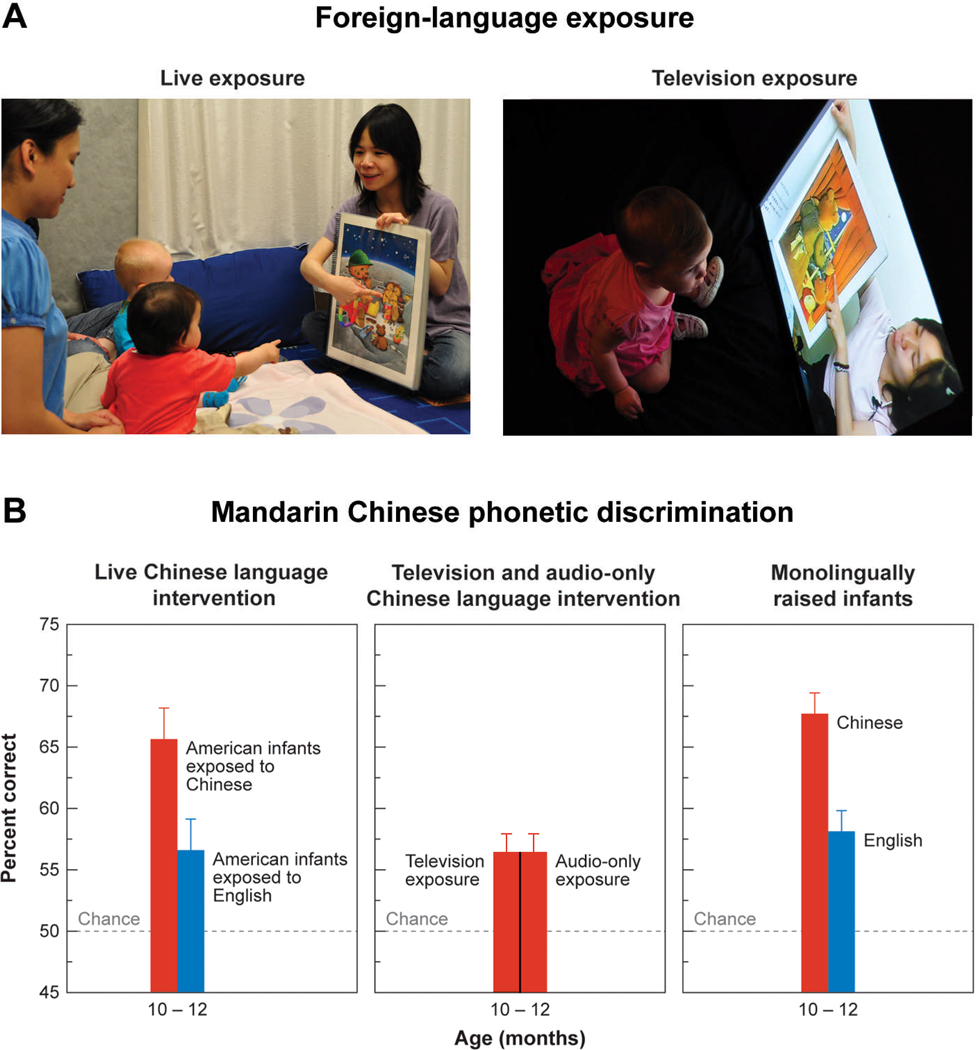

We were struck by the fact that infants exposed to Mandarin were socially very engaged in the language sessions and began to wonder about the role of social interaction in learning. Would infants learn if they were exposed to the same information in the absence of a human being, say, via television or an audiotape? If statistical learning is sufficient, the television and audio-only conditions should produce learning. Infants who were exposed to the same foreign-language material at the same time and at the same rate, but via standard television or audiotape only, showed no learning—their performance equaled that of infants in the control group who had not been exposed to Mandarin at all (Figure 5).

Figure 5.

The need for social interaction in language acquisition is shown by foreign-language learning experiments. Nine-month-old infants experienced 12 sessions of Mandarin Chinese through (A) natural interaction with a Chinese speaker (left) or the identical linguistic information delivered via television (right) or audiotape (not shown). (B) Natural interaction resulted in significant learning of Mandarin phonemes when compared with a control group who participated in interaction using English (left). No learning occurred from television or audiotaped presentations (middle). Data for age-matched Chinese and American infants learning their native languages are shown for comparison (right) (adapted from Kuhl et al., 2003).

Thus, the presence of a human being interacting with the infant during language exposure, while not required for simpler statistical-learning tasks (Maye et al., 2002; Saffran et al., 1996), is critical for learning in complex natural language-learning situations (Kuhl et al., 2003). Using the same experimental design, this work has been extended to Spanish and to measures beyond those used in Kuhl et al. (2003). These studies demonstrated that infants learn not only Spanish phonemes (Conboy and Kuhl, 2011) but also the Spanish words they heard during the language-exposure sessions (Conboy and Kuhl, 2010). Moreover, our work demonstrates that individual differences in infants’ social behaviors during the Spanish exposure sessions are significantly correlated with the degree to which infants learn both phonemes and words, as shown by the relationship between social behaviors during the sessions and post-exposure brain measures of learning (Conboy, Brooks, Meltzoff, and Kuhl, submitted).

These studies show that infants’ computational abilities are enabled by social interaction, a situation mirrored in neurobiological studies of vocal communication learning in other species, such as birds (Doupe and Kuhl, 2008). The notion that social interaction acts as a “gate” for infants’ initial language learning has important implications for children with autism that we are beginning to explore (see Kuhl, 2011; Kuhl et al., 2005b; Kuhl). The broader role of socio-cultural context in language learning is also illustrated by studies of language and brain in children from families with low socio-economic status (see Raizada, Richards, Meltzoff, and Kuhl, 2008; Stevens, Lauinger, and Neville, 2009).

The model we have developed suggests that it may be the interaction between computational skills and social cognition that opens plasticity for language learning. Infants have computational skills from birth (Teinonen et al., 2009), yet effects of linguistic experience on phonetic perception are not observed until 8 months of age, a fact of theoretical interest. We speculated that infants might require 8 months of listening to build up reliable statistical distributions of the sounds in the language they experienced. This idea was in fact the impetus for the Kuhl et al. (2003) investigation, which examined whether infants could learn from first-time foreign-language exposure at 9 months of age. Our results showed that 9-month-old infants did not require 8 months of listening to learn from a new language: they learned after less than 5 hours of exposure, but only in a social context.

These data raise the prospect that infants’ social skills, which develop at about this time (their ability to track eye movements, achieve joint visual attention, and begin to understand others’ communicative intentions), serve as a plasticity trigger. Social understanding might be the “gate” that initiates plasticity for phonetic learning in human infants (Kuhl, 2011). There is a neurobiological precedent for a social-interaction plasticity trigger in songbirds: it is well established that a more natural social setting prompts learning and that manipulating social factors can either shorten or extend the optimum period for learning (Knudsen, 2004; Woolley and Doupe, 2008). The possibility of a social-interaction plasticity trigger in humans raises many new questions and also has implications for developmental disabilities (see Kuhl, 2011 for discussion).

Bilingual Language Learning

According to our model, bilingual language learners should follow the same principles as monolingual learners: both computational and social factors influence the period of plasticity. We argue, however, that this process might produce a developmental transition that occurs later in bilingual infants than in monolingual infants learning either language. Infants learning two first languages simultaneously would remain “open” to experience for a longer period because they are mapping language input in two forms, each with distinct statistical distributions. If this reasoning is correct, more time may be required to begin closing the critical period in bilinguals, because infants must receive sufficient data from both languages to reach distributional stability. This in turn depends on factors such as the number of people in the infant’s environment who speak each language to the child and the amount of input each speaker provides. Remaining perceptually “open” longer would therefore be highly adaptive for bilingual infants. Social interaction would play a role as well. People in a bilingual child’s home often speak in their preferred language, so social experience tends to link the statistical distributions of each language to particular social partners, and this may help infants assign the statistical properties of the input to different languages. If an infant’s mother speaks English and the father speaks Japanese, for example, social information could allow the child to mentally separate the properties of the two languages.

Only a few studies have addressed the timing of the perceptual transition in bilingual infants, and results have been mixed, perhaps because of differences in methodology, in the amount of exposure individual bilingual participants had to each language, and in the specific characteristics of the languages and speech contrasts studied. Some studies have reported that bilingual infants show different developmental patterns from monolingual infants. Bosch and Sebastián-Gallés (2003a) compared 4-, 8-, and 12-month-old infants from Spanish monolingual, Catalan monolingual, and Spanish-Catalan bilingual households on a vowel contrast that is phonemic in Catalan but not in Spanish (/ε/ vs. /e/). At 4 months, infants discriminated the vowel contrast, but at 8 months only infants from Catalan monolingual households succeeded. Interestingly, the same group of bilinguals regained the ability to discriminate the contrast at 12 months of age. The authors reported the same developmental pattern in bilingual infants in a study of consonants (Bosch and Sebastián-Gallés, 2003b) and interpreted the results as evidence that different processes may underlie bilingual and monolingual phoneme category formation, at least for speech sounds with different distributional properties in the two languages. Sebastián-Gallés and Bosch (2009) reproduced this pattern with two vowels (/o/ vs. /u/) that are common to and contrastive in both languages. However, they also tested bilingual and monolingual infants from Spanish and Catalan households on a second vowel pair that is common to and contrastive in both languages but acoustically more salient (/e/ vs. /u/), and 8-month-old bilinguals were able to discriminate this acoustically distant contrast. The authors interpreted these data as supporting the idea that monolingual and bilingual phonetic development may differ, and that factors beyond the distributional frequency of phonetic units in language input, such as lexical similarity, may play an important role.

Other investigations have found that bilingual infants discriminate phonetic contrasts in their native languages on the same timetable as monolingual infants. For example, Burns, Yoshida, Hill, and Werker (2007) tested discrimination of English and French consonant contrasts at 6–8, 10–12, and 14–20 months in English monolingual and English-French bilingual infants. As expected, 6–8-month-old English monolingual infants discriminated both contrasts, while 10–12- and 14–20-month-old English monolingual infants discriminated only the English contrast. Bilingual infants in all age groups discriminated both contrasts. Sundara, Polka, and Molnar (2008; see also Sundara and Scutellaro, 2011) produced similar findings.

We conducted the first longitudinal study of English-Spanish bilingual infants that combined a brain measure of discrimination for phonetic contrasts in both languages (event-related potentials, or ERPs) at 6–9 and 10–12 months of age with concurrent measures of language input to the child in the home and a follow-up examination of word production in both languages months later (Garcia-Sierra et al., in press). The study addressed three questions. Do bilingual infants show the ERP components indicative of neural discrimination of the phonetic units of both languages on the same timetable as monolingual infants? Is there a relationship between brain measures of phonetic discrimination and the amount of exposure to the two languages? Is later word production in the infants’ two languages predicted by early ERP responses to speech sounds in both languages, by the amount of early exposure to each language, or by both?

As predicted, bilingual infants displayed a developmental pattern distinct from that of monolingual infants previously tested using the same stimuli and methods (Rivera-Gaxiola et al., 2005a; Rivera-Gaxiola, Klarman, Garcia-Sierra, and Kuhl, 2005b). Our studies indicated that bilingual infants show patterns of neural discrimination for their two native languages later in development than monolingual infants do (Garcia-Sierra et al., in press). The data suggest that bilingual infants remain “open” to language experience longer than monolingual infants, a response that is highly adaptive given the increased variability in the language input they experience.

We also showed that neural discrimination of English and Spanish in these bilingual infants was related to the amount of exposure to each language in the home—infants who heard more Spanish at home had larger brain responses to Spanish sounds, and the reverse was found in infants who heard more English at home. Finally, we hypothesized a relationship between the early brain measures and later word production, as well as relationships between early language exposure and later word production. Both hypotheses were confirmed. Children who were English dominant in word production at 15 months had shown relatively better early neural discrimination of the English contrast, as well as stronger early English exposure in the home. Similarly, children who were Spanish dominant in word production at 15 months had earlier shown relatively better neural discrimination of the Spanish contrast and stronger Spanish exposure in the home.

Taken as a whole, the results suggest that bilingual infants tested with phonetic units from both of their native languages stay perceptually “open” longer than monolingual infants—indicating perceptual narrowing at a later point in time, which is highly adaptive for bilingual infants. The results reinforce the view that experience alters the course of brain structure and function early in development. We also show that individual differences in infants’ neural responses to speech, as well as their later word production, are influenced by the amount of exposure infants have to each of their native languages at home.

Language, Cognition, and Bilingual Language Experience

Specific cognitive abilities, particularly the executive control of attention and the ability to inhibit a prepotent response (cognitive control), are affected by language experience. Bilingual adults are more cognitively flexible when given novel problems to solve (Bialystok, 1999, 2001; Bialystok and Hakuta, 1994; Wang, Kuhl, Chen, and Dong, 2009), as are young school-aged bilingual children (Carlson and Meltzoff, 2008). We have recent evidence that cognitive flexibility is also increased in infants and young children who have short-term or long-term exposure to more than one language. In the short-term Spanish exposure studies described earlier, a median split of infants based on their post-exposure brain measures of Spanish language learning revealed that the best learners (top half of the distribution) had post-exposure cognitive flexibility scores significantly higher than those of infants in the bottom half (Conboy et al., 2008). In a second experiment, we linked brain measures of word recognition in both languages to cognitive flexibility scores in 24–29-month-old bilingual children: those with enhanced brain responses to words in both of their languages showed the highest cognitive flexibility scores (Conboy et al., 2011).
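The median-split comparison can be sketched in a few lines of Python. This is a minimal illustration under stated assumptions: the per-infant learning and flexibility scores are hypothetical placeholders, not data from Conboy et al. (2008), infants falling exactly at the median are simply dropped, and a real analysis would follow the split with a significance test rather than a bare comparison of group means.

```python
from statistics import mean, median

def median_split(learning_scores, flexibility_scores):
    """Split infants at the median of a learning measure and return the
    mean cognitive-flexibility score of the top and bottom halves.

    Both inputs are hypothetical per-infant scores listed in the same order.
    Infants exactly at the median are excluded so the halves do not overlap.
    """
    cut = median(learning_scores)
    pairs = list(zip(learning_scores, flexibility_scores))
    top = [flex for learn, flex in pairs if learn > cut]
    bottom = [flex for learn, flex in pairs if learn < cut]
    return mean(top), mean(bottom)

# Hypothetical example: six infants' post-exposure learning and flexibility scores.
learning = [0.2, 0.9, 0.4, 0.7, 0.1, 0.8]
flexibility = [3, 7, 4, 6, 2, 6]
top_mean, bottom_mean = median_split(learning, flexibility)
print(top_mean, bottom_mean)
```

A median split is easy to explain but discards information about where each infant falls within a half; correlating the two continuous measures is a common complementary analysis.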

These data provide further evidence that experience shapes the brain; bilingual adults and children have advanced skills when coping with tasks that require the ability to “reverse the rules” and think flexibly. These skills could aid various forms of learning in school, and educators should be aware of them. At the same time, the cognitive flexibility induced by experience with two languages may play a role in bilingual children remaining open to language experience longer in development, which changes the timetable of learning.

Further research is needed to examine in detail the implications for bilingual language learning and literacy—studies on bilingual learning have just begun and will be a strong focus for neuroscience and education in the next decade.

Early Language Learning Predicts Later Language Skills

Early language learning is a complex process. Our working hypothesis is the following: infants’ computational skills, modulated by social interaction, open a window of increased plasticity at about 8 months of age. Between 8 and 10 months, monolingual infants show an increase in native-language phonetic perception and a decrease in nonnative phonetic perception, yet remain open to phonetic learning from a new language when it is induced by social experience with a speaker of that language (though not by standard television exposure). Learning in this early phase is far from trivial, and that complexity might explain why our laboratory studies show wide individual differences in the early phonetic transition. These data suggest an important question, especially for practice: Does an individual child’s success at this early transition predict future language skills or literacy?

We began studies to determine whether the variability observed in measures of early phonetic learning predicted children’s language skills measured at later points in development. We recognized that the variability we observed might simply be “noise,” in other words, random variation in a child’s skill on the particular day that we measured that child in the laboratory. We were therefore pleased when our first studies demonstrated that infants’ discrimination of two simple vowels at 6 months of age was significantly correlated with their language skills at 13, 16, and 24 months of age (Tsao, Liu, and Kuhl, 2004). Later studies confirmed the connection between early speech perception and later language skills using both brain (Rivera-Gaxiola et al., 2005b; Kuhl et al., 2008) and behavioral (Kuhl et al., 2005a) measures in monolingual infants, and using brain measures in bilingual infants (Garcia-Sierra et al., in press). Other laboratories have also produced data indicating strong links between the speed of speech processing and later language function (Fernald, Perfors, and Marchman, 2006) and between various measures of statistical learning and later language measures (Newman, Ratner, Jusczyk, Jusczyk, and Dow, 2006).

Recent data from our laboratory indicate long-term associations between early measures of infants’ phonetic perception and later language and reading skills. The new work measures vowel perception at 7 and 11 months and shows that the trajectory of learning between those two ages predicts children’s language abilities and pre-literacy skills at 5 years of age. The association holds regardless of socio-economic status and of the level of children’s language skills at 18 and 24 months of age (Cardillo Lebedeva and Kuhl, 2009).

Infants tested at 7 and 11 months of age show three patterns of speech perception development: (1) infants who show excellent native discrimination at 7 months and maintain that ability at 11 months, the high-high group, (2) infants who show poor abilities at 7 months but excellent performance at 11 months, the low-high group, and (3) infants who show poor abilities to discriminate at both 7 and 11 months of age, the low-low group. We followed these children until the age of 5, assessing language skills at 18 months, 24 months, and 5 years of age. Strong relationships were observed between infants’ early speech perception performance and their later language skills at 18 and 24 months. At 5 years of age, significant relationships were shown between infants’ early speech perception performance and both their language skills and the phonological awareness skills associated with success in learning to read. In all cases, the earlier in development that infants showed excellent skills in detecting phonetic differences in native language sounds, the better their later performance in measures of language and pre-literacy skills (Cardillo Lebedeva and Kuhl, 2009).
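The three trajectory groups amount to a simple two-timepoint classification rule. The Python sketch below is illustrative only: it assumes a single hypothetical cutoff on a discrimination score, because the text does not state how “excellent” and “poor” discrimination were defined, and the function name `trajectory_group` and the example scores are likewise invented. The fourth logical combination (high at 7 months, low at 11 months) is not among the groups described, so the sketch flags it rather than forcing it into a group.

```python
def trajectory_group(score_7mo, score_11mo, cutoff=0.5):
    """Label an infant's native-discrimination trajectory.

    `cutoff` is a hypothetical threshold separating 'high' from 'low'
    discrimination; it is not taken from the study.
    """
    level_7 = "high" if score_7mo >= cutoff else "low"
    level_11 = "high" if score_11mo >= cutoff else "low"
    label = f"{level_7}-{level_11}"
    # Only three groups are described in the text; a high-low pattern is flagged.
    if label in {"high-high", "low-high", "low-low"}:
        return label
    return "unclassified"

# Hypothetical discrimination scores (7 months, 11 months) for three infants.
infants = {"A": (0.8, 0.9), "B": (0.3, 0.7), "C": (0.2, 0.4)}
groups = {name: trajectory_group(*scores) for name, scores in infants.items()}
print(groups)
```

In this framing, the finding reported above is that earlier entry into the “high” category (high-high before low-high before low-low) goes with better later language and pre-literacy scores.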

These results are theoretically interesting and also highly relevant to early learning practice. They show that the initial steps infants take toward language learning matter for their development of language and literacy years later. Our data also suggest that these early differences in performance are strongly related to experience: the early measures of speech discrimination that predict future language and literacy are strongly correlated with infants’ early experience of “motherese” (Liu, Kuhl, and Tsao, 2003). Motherese exaggerates the critical acoustic cues in speech (Kuhl et al., 1997; Werker et al., 2007), and infants’ social interest in speech is, we believe, important to the social learning process. Thus, talking and reading to children early in life, and interacting socially with them around language and literacy activities, create the milieu in which plasticity during the critical period can be maximized for all children.

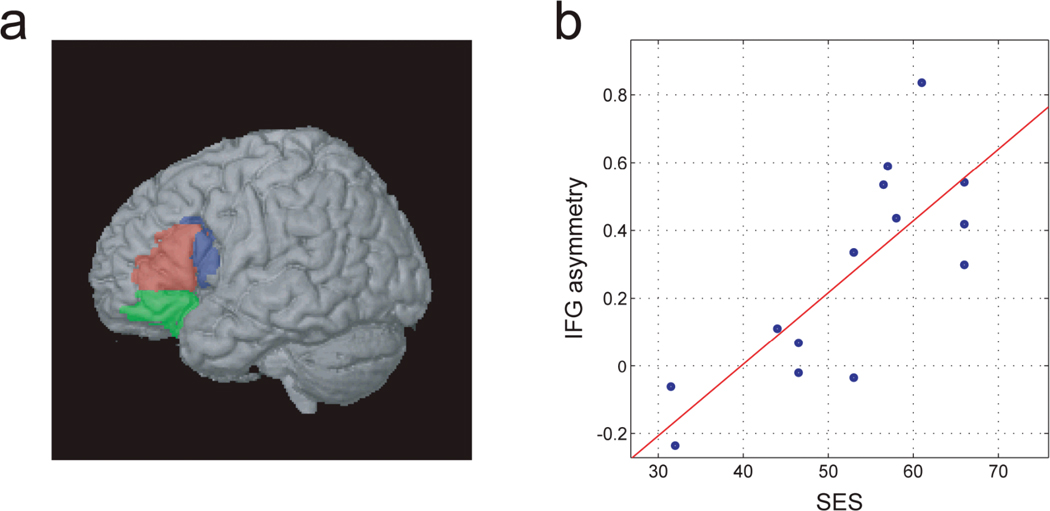

There is increasing evidence that children raised in families with lower socio-economic status (SES) show deficits in language measured either behaviorally or in brain studies (for an extensive review, see Raizada and Kishiyama, 2010). In one of the first studies of 5-year-old children combining behavioral and brain measures, Raizada et al. (2008) examined the associations among SES, standardized test scores of language, social cognition, and intelligence, and fMRI-measured brain activity while the children performed a rhyming task. The results showed correlations between SES, language performance, and the degree of hemispheric specialization in Broca’s area, as measured by left-minus-right fMRI activation (Figure 6). The link between SES and Broca’s-area specialization remained highly significant after the effects of the language scores were removed, indicating that the relationship cannot be attributed to both measures’ correlations with the language scores. The study shows a correlational link, which of course we cannot assume to be causal.

Figure 6.

Relationship between left hemisphere specialization in Broca’s area (a) and SES in 5-year-old children (b) (From Raizada et al., 2008).

The authors concluded that fMRI is a more sensitive measure of the development of Broca’s area than any of the behavioral tests: each behavioral score is a compound function of perception, cognition, attention, and motor control, whereas fMRI probes Broca’s area more directly. Thus, neuroimaging studies, especially early in development, may be able to provide highly sensitive measures of competence.

We assumed that SES is not itself the variable driving these effects on the brain—SES is likely a proxy for the opportunity to learn. We learned in a follow-up study that SES could be removed from the equation if language input itself was measured. The complexity of language input is the more direct factor influencing development of brain areas that code language. When measures of the complexity of maternal language were assessed across the entire sample of children in the study, we observed a correlation with structural measures of the brain in Broca’s area. These measures indicated that greater grey matter in the left hemisphere language areas was related to the complexity of maternal language in conversations between the mothers and their 5-year-old children.

In summary, our results suggested that language input to the child—its complexity and diversity—was the factor affecting brain development in the language areas, not SES per se. The implication is that children’s brains literally depend on input for development. Though these results are correlational, we believe that the connection between experience with language and brain development is potentially causal and that further research will allow us to develop causal explanations.

Discussion

These data have two important implications. The first is that early language learning is highly social. Children do not compute statistics indiscriminately; social cues “gate” what and when children learn from language input. Machines are not sufficient as instructors, at least early in development and when the instructor is a standard device such as a television set. Further studies are needed to test whether our finding that language learning must be social to “stick” applies to other learning domains. Must cognitive learning, or learning about numbers, also be social?

Studies will also be needed to determine the ages to which the social-context conclusion applies. Infants are predisposed to attend to people and do not learn from television, but that conclusion may not apply to older children, teenagers, or adult learners. What is it about social learning that is so important to the young? Animal models show that neurosteroids modulate brain activity during social interactions (Remage-Healey, Maidment, and Schlinger, 2008), and previous work has shown that social interaction can extend the “critical period” for learning in birds (Brainard and Knudsen, 1998). Social factors play a role in learning throughout life. The prevalence, across age, of new social technologies (text messaging, Facebook, Twitter) indicates a strong human drive for social communication. Technology used for teaching increasingly embodies the principles of social interaction to enhance student learning (Koedinger and Aleven, 2007). A true understanding of the relationship between social interaction and learning requires more research, and the NSF-funded LIFE Center is focused on this question across domains and ages (see LIFE Website: http://life-slc.org).

The fact that young children use implicit and automatic mechanisms to learn and that children’s brains are influenced by the ambient information they experience is a sobering thought. Opportunities for learning of the right kind must be available for children to maximize the unique learning abilities that their brains permit during the early period of life. Scientists of course do not yet know how much or what kinds of experience are necessary for learning—we cannot write an exact prescription for success. Nonetheless, the data on language and literacy indicate a potent and necessary role for ample early experience, in social settings, in which complex language is used to encourage children to express themselves and explore the world of books. Further data will refine these conclusions and, hopefully, allow us to develop concrete recommendations that will enhance the probability that all children the world over maximize their brain development and learning.

Acknowledgements

The author and research reported here were supported by a grant from the National Science Foundation’s Science of Learning Program to the LIFE Center (SBE-0354453), and by grants from the National Institutes of Health (HD37954, HD55782, HD02274, DC04661). The review updates information in Kuhl (2010).

References

- Abramson AS, Lisker L. Discriminability along the voicing continuum: Cross-language tests. Proceedings of the International Congress of Phonetics Science. 1970;6:569–573.

- Aslin RN, Mehler J. Near-infrared spectroscopy for functional studies of brain activity in human infants: Promise, prospects, and challenges. Journal of Biomedical Optics. 2005;10:11009. doi: 10.1117/1.1854672.

- Best CC, McRoberts GW. Infant perception of non-native consonant contrasts that adults assimilate in different ways. Language and Speech. 2003;46:183–216. doi: 10.1177/00238309030460020701.

- Bialystok E. Letters, sounds, and symbols: Changes in children's understanding of written language. Applied Psycholinguistics. 1991;12:75–89.

- Bialystok E. Cognitive complexity and attentional control in the bilingual mind. Child Development. 1999;70:636–644.

- Bialystok E. Bilingualism in development: Language, literacy, and cognition. New York: Cambridge University Press; 2001.

- Bialystok E, Hakuta K. In other words: The science and psychology of second-language acquisition. New York: Basic Books; 1994.

- Birdsong D. Ultimate attainment in second language acquisition. Language. 1992;68:706–755.

- Birdsong D, Molis M. On the evidence for maturational constraints in second-language acquisitions. Journal of Memory and Language. 2001;44:235–249.

- de Boer B, Kuhl PK. Investigating the role of infant-directed speech with a computer model. ARLO. 2003;4:129–134.

- Bortfeld H, Wruck E, Boas DA. Assessing infants' cortical response to speech using near-infrared spectroscopy. NeuroImage. 2007;34:407–415. doi: 10.1016/j.neuroimage.2006.08.010.

- Bosch L, Sebastián-Gallés N. Simultaneous bilingualism and the perception of a language-specific vowel contrast in the first year of life. Language and Speech. 2003a;46:217–243. doi: 10.1177/00238309030460020801.

- Bosch L, Sebastián-Gallés N. Language experience and the perception of a voicing contrast in fricatives: Infant and adult data. In: Recasens D, Solé MJ, Romero J, editors. Proceedings of the 15th international conference of phonetic sciences; Barcelona: UAB/Casual Prods; 2003b. pp. 1987–1990.

- de Boysson-Bardies B. Ontogeny of language-specific syllabic productions. In: de Boysson-Bardies B, Schonen S, Jusczyk P, McNeilage P, Morton J, editors. Developmental neurocognition: Speech and face processing in the first year of life. Dordrecht, Netherlands: Kluwer; 1993. pp. 353–363.

- Brainard MS, Knudsen EI. Sensitive periods for visual calibration of the auditory space map in the barn owl optic tectum. The Journal of Neuroscience. 1998;18:3929–3942. doi: 10.1523/JNEUROSCI.18-10-03929.1998.

- Bruer JT. Critical periods in second language learning: Distinguishing phenomena from explanation. In: Mody M, Silliman E, editors. Brain, behavior and learning in language and reading disorders. New York, NY: The Guilford Press; 2008. pp. 72–96.

- Burns TC, Yoshida KA, Hill K, Werker JF. The development of phonetic representation in bilingual and monolingual infants. Applied Psycholinguistics. 2007;28:455–474.

- Carlson SM, Meltzoff AN. Bilingual experience and executive functioning in young children. Developmental Science. 2008;11:282–298. doi: 10.1111/j.1467-7687.2008.00675.x.

- Cardillo Lebedeva GC, Kuhl PK. Individual differences in infant speech perception predict language and pre-reading skills through age 5 years; Paper presented at the Annual Meeting of the Society for Developmental & Behavioral Pediatrics; Portland, OR. 2009.

- Cheour M, Imada T, Taulu S, Ahonen A, Salonen J, Kuhl PK. Magnetoencephalography is feasible for infant assessment of auditory discrimination. Experimental Neurology. 2004;190:S44–S51. doi: 10.1016/j.expneurol.2004.06.030.

- Coffey-Corina S, Padden D, Estes A, Kuhl PK. ERPs to words in toddlers with ASD predict behavioral measures at 6 years of age; Poster presented at the annual meeting of the International Meeting for Autism Research; San Diego, CA; May 2011.

- Conboy BT, Brooks R, Meltzoff AN, Kuhl PK. Infants’ social behaviors predict phonetic learning. (submitted) [Google Scholar]

- Conboy BT, Kuhl PK. Brain responses to words in 11-month-olds after second language exposure; Poster presented at the American Speech-Language-Hearing Association; Philadelphia, PA. Nov, 2010. [Google Scholar]

- Conboy BT, Kuhl PK. Impact of second-language experience in infancy: Brain measures of first- and second-language speech perception. Developmental Science. 2011;14:242–248. doi: 10.1111/j.1467-7687.2010.00973.x. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Conboy BT, Rivera-Gaxiola M, Silva-Pereyra J, Kuhl PK. Event-related potential studies of early language processing at the phoneme, word, and sentence levels. In: Friederici AD, Thierry G, editors. Early language development: Vol. 5. Bridging brain and behavior, trends in language acquisition research. Amsterdam, The Netherlands: John Benjamins; 2008. pp. 23–64. [Google Scholar]

- Conboy BT, Sommerville J, Kuhl PK. Cognitive control skills and speech perception after short term second language experience during infancy. Journal of the Acoustical Society of America. 2008;123:3581. [Google Scholar]

- Conboy BT, Sommerville JA, Wicha N, Romo HD, Kuhl PK. Word processing and cognitive control skills in two-year-old bilingual children; Symposium presentation at the 2011 Biennial Meeting for the Society for Research in Child Development; Montreal, Canada. March-April, 2011. [Google Scholar]

- Dehaene-Lambertz G, Dehaene S, Hertz-Pannier L. Functional neuroimaging of speech perception in infants. Science. 2002;298:2013–2015. doi: 10.1126/science.1077066.

- Dehaene-Lambertz G, Hertz-Pannier L, Dubois J, Meriaux S, Roche A, Sigman M, Dehaene S. Functional organization of perisylvian activation during presentation of sentences in preverbal infants. Proceedings of the National Academy of Sciences (USA). 2006;103:14240–14245. doi: 10.1073/pnas.0606302103.

- Doupe AJ, Kuhl PK. Birdsong and human speech: Common themes and mechanisms. In: Zeigler HP, Marler P, editors. Neuroscience of birdsong. Cambridge, England: Cambridge University Press; 2008. pp. 5–31.

- Eimas PD. Auditory and phonetic coding of the cues for speech: Discrimination of the /r-l/ distinction by young infants. Perception and Psychophysics. 1975;18:341–347.

- Eimas PD, Siqueland ER, Jusczyk P, Vigorito J. Speech perception in infants. Science. 1971;171:303–306. doi: 10.1126/science.171.3968.303.

- Fernald A, Perfors A, Marchman VA. Picking up speed in understanding: Speech processing efficiency and vocabulary growth across the 2nd year. Developmental Psychology. 2006;42:98–116. doi: 10.1037/0012-1649.42.1.98.

- Ferguson CA, Menn L, Stoel-Gammon C, editors. Phonological development: Models, research, implications. Timonium, MD: York Press; 1992.

- Flege JE. Age of learning affects the authenticity of voice onset time (VOT) in stop consonants produced in a second language. Journal of the Acoustical Society of America. 1991;89:395–411. doi: 10.1121/1.400473.

- Flege JE, Yeni-Komshian GH, Liu S. Age constraints on second-language acquisition. Journal of Memory and Language. 1999;41:78–104.

- Friederici AD. Neurophysiological markers of early language acquisition: From syllables to sentences. Trends in Cognitive Sciences. 2005;9:481–488. doi: 10.1016/j.tics.2005.08.008.

- Garcia-Sierra A, Rivera-Gaxiola M, Conboy BT, Romo H, Percaccio CR, Klarman L, Ortiz S, Kuhl PK. Bilingual language learning: An ERP study relating early brain responses to speech, language input, and later word production. Journal of Phonetics. In press.

- Gernsbacher MA, Kaschak MP. Neuroimaging studies of language production and comprehension. Annual Review of Psychology. 2003;54:91–114. doi: 10.1146/annurev.psych.54.101601.145128.

- Golestani N, Molko N, Dehaene S, LeBihan D, Pallier C. Brain structure predicts the learning of foreign speech sounds. Cerebral Cortex. 2007;17:575–582. doi: 10.1093/cercor/bhk001.

- Gratton G, Fabiani M. Shedding light on brain function: The event-related optical signal. Trends in Cognitive Sciences. 2001a;5:357–363. doi: 10.1016/s1364-6613(00)01701-0.

- Gratton G, Fabiani M. The event-related optical signal: A new tool for studying brain function. International Journal of Psychophysiology. 2001b;42:109–121. doi: 10.1016/s0167-8760(01)00161-1.

- Hari R, Kujala M. Brain basis of human social interaction: From concepts to brain imaging. Physiological Reviews. 2009;89:453–479. doi: 10.1152/physrev.00041.2007.

- Homae F, Watanabe H, Nakano T, Asakawa K, Taga G. The right hemisphere of sleeping infant perceives sentential prosody. Neuroscience Research. 2006;54:276–280. doi: 10.1016/j.neures.2005.12.006.

- Imada T, Zhang Y, Cheour M, Taulu S, Ahonen A, Kuhl PK. Infant speech perception activates Broca’s area: A developmental magnetoencephalography study. NeuroReport. 2006;17:957–962. doi: 10.1097/01.wnr.0000223387.51704.89.

- Johnson J, Newport E. Critical period effects in second language learning: The influence of maturational state on the acquisition of English as a second language. Cognitive Psychology. 1989;21:60–99. doi: 10.1016/0010-0285(89)90003-0.

- Knudsen EI. Sensitive periods in the development of the brain and behavior. Journal of Cognitive Neuroscience. 2004;16:1412–1425. doi: 10.1162/0898929042304796.

- Koedinger KR, Aleven V. Exploring the assistance dilemma in experiments with cognitive tutors. Educational Psychology Review. 2007;19:239–264.

- Kuhl PK. Early language acquisition: Cracking the speech code. Nature Reviews Neuroscience. 2004;5:831–843. doi: 10.1038/nrn1533.

- Kuhl PK. Is speech learning 'gated' by the social brain? Developmental Science. 2007;10:110–120. doi: 10.1111/j.1467-7687.2007.00572.x.

- Kuhl PK. The linguistic genius of babies. TED Talk; October 2010. Retrieved from http://www.ted.com/talks/lang/eng/patricia_kuhl_the_linguistic_genius_of_babies.html

- Kuhl PK. Social mechanisms in early language acquisition: Understanding integrated brain systems supporting language. In: Decety J, Cacioppo J, editors. The handbook of social neuroscience. Oxford, UK: Oxford University Press; 2011. pp. 649–667.

- Kuhl PK, Andruski JE, Chistovich IA, Chistovich LA, Kozhevnikova EV, Ryskina VL, Stolyarova EI, Sundberg U, Lacerda F. Cross-language analysis of phonetic units in language addressed to infants. Science. 1997;277:684–686. doi: 10.1126/science.277.5326.684.

- Kuhl PK, Coffey-Corina S, Padden D, Dawson G. Links between social and linguistic processing of speech in preschool children with autism: Behavioral and electrophysiological evidence. Developmental Science. 2005b;8:1–12. doi: 10.1111/j.1467-7687.2004.00384.x.

- Kuhl PK, Conboy BT, Coffey-Corina S, Padden D, Rivera-Gaxiola M, Nelson T. Phonetic learning as a pathway to language: New data and native language magnet theory expanded (NLM-e). Philosophical Transactions of the Royal Society B: Biological Sciences. 2008;363:979–1000. doi: 10.1098/rstb.2007.2154.

- Kuhl PK, Conboy BT, Padden D, Nelson T, Pruitt J. Early speech perception and later language development: Implications for the ‘critical period.’ Language Learning and Development. 2005a;1:237–264.

- Kuhl PK, Meltzoff AN. The bimodal perception of speech in infancy. Science. 1982;218:1138–1141. doi: 10.1126/science.7146899.

- Kuhl PK, Meltzoff AN. Infant vocalizations in response to speech: Vocal imitation and developmental change. Journal of the Acoustical Society of America. 1996;100:2425–2438. doi: 10.1121/1.417951.

- Kuhl PK, Rivera-Gaxiola M. Neural substrates of language acquisition. Annual Review of Neuroscience. 2008;31:511–534. doi: 10.1146/annurev.neuro.30.051606.094321.

- Kuhl PK, Stevens E, Hayashi A, Deguchi T, Kiritani S, Iverson P. Infants show facilitation for native language phonetic perception between 6 and 12 months. Developmental Science. 2006;9:13–21. doi: 10.1111/j.1467-7687.2006.00468.x.

- Kuhl PK, Tsao F-M, Liu H-M. Foreign-language experience in infancy: Effects of short-term exposure and social interaction on phonetic learning. Proceedings of the National Academy of Sciences (USA). 2003;100:9096–9101. doi: 10.1073/pnas.1532872100.

- Kuhl PK, Williams KA, Lacerda F, Stevens KN, Lindblom B. Linguistic experience alters phonetic perception in infants by 6 months of age. Science. 1992;255:606–608. doi: 10.1126/science.1736364.

- Ladefoged P. Vowels and consonants: An introduction to the sounds of language. Oxford: Blackwell Publishers; 2001.

- Lasky RE, Syrdal-Lasky A, Klein RE. VOT discrimination by four to six and a half month old infants from Spanish environments. Journal of Experimental Child Psychology. 1975;20:215–225. doi: 10.1016/0022-0965(75)90099-5.

- Lenneberg E. Biological foundations of language. New York: John Wiley and Sons; 1967.

- Liu H-M, Kuhl PK, Tsao F-M. An association between mothers’ speech clarity and infants’ speech discrimination skills. Developmental Science. 2003;6:F1–F10.

- Lotto AJ, Sato M, Diehl R. Mapping the task for the second language learner: The case of Japanese acquisition of /r/ and /l/. In: Slifka J, Manuel S, Matthies M, editors. From Sound to Sense. Cambridge, MA: MIT Press; 2004. pp. C181–C186.

- Mayberry RI, Lock E. Age constraints on first versus second language acquisition: Evidence for linguistic plasticity and epigenesis. Brain and Language. 2003;87:369–384. doi: 10.1016/s0093-934x(03)00137-8.

- Maye J, Werker JF, Gerken L. Infant sensitivity to distributional information can affect phonetic discrimination. Cognition. 2002;82:B101–B111. doi: 10.1016/s0010-0277(01)00157-3.

- Maye J, Weiss D, Aslin R. Statistical learning in infants: Facilitation and feature generalization. Developmental Science. 2008;11:122–134. doi: 10.1111/j.1467-7687.2007.00653.x.

- Meltzoff AN, Kuhl PK, Movellan J, Sejnowski T. Foundations for a new science of learning. Science. 2009;325:284–288. doi: 10.1126/science.1175626.

- Neville HJ, Coffey SA, Lawson DS, Fischer A, Emmorey K, Bellugi U. Neural systems mediating American Sign Language: Effects of sensory experience and age of acquisition. Brain and Language. 1997;57:285–308. doi: 10.1006/brln.1997.1739.

- Newman R, Ratner NB, Jusczyk AM, Jusczyk PW, Dow KA. Infants’ early ability to segment the conversational speech signal predicts later language development: A retrospective analysis. Developmental Psychology. 2006;42:643–655. doi: 10.1037/0012-1649.42.4.643.

- Newport E. Maturational constraints on language learning. Cognitive Science. 1990;14:11–28.

- Newport EL, Bavelier D, Neville HJ. Critical thinking about critical periods: Perspectives on a critical period for language acquisition. In: Dupoux E, editor. Language, brain, and cognitive development: Essays in honor of Jacques Mehler. Cambridge, MA: MIT Press; 2001. pp. 481–502.

- Ortiz-Mantilla S, Choe MS, Flax J, Grant PE, Benasich AA. Association between the size of the amygdala in infancy and language abilities during the preschool years in normally developing children. NeuroImage. 2010;49:2791–2799. doi: 10.1016/j.neuroimage.2009.10.029.

- Peña M, Bonatti L, Nespor M, Mehler J. Signal-driven computations in speech processing. Science. 2002;298:604–607. doi: 10.1126/science.1072901.

- Rabiner LR, Juang BH. Fundamentals of speech recognition. Englewood Cliffs, NJ: Prentice Hall; 1993.

- Raizada RDS, Richards TL, Meltzoff AN, Kuhl PK. Socioeconomic status predicts hemispheric specialization of the left inferior frontal gyrus in young children. NeuroImage. 2008;40:1392–1401. doi: 10.1016/j.neuroimage.2008.01.021.

- Raizada RDS, Kishiyama M. Effects of socioeconomic status on brain development, and how cognitive neuroscience may contribute to levelling the playing field. Frontiers in Human Neuroscience. 2010;4:3. doi: 10.3389/neuro.09.003.2010.

- Remage-Healey L, Maidment NT, Schlinger BA. Forebrain steroid levels fluctuate rapidly during social interactions. Nature Neuroscience. 2008;11:1327–1334. doi: 10.1038/nn.2200.

- Rivera-Gaxiola M, Klarman L, Garcia-Sierra A, Kuhl PK. Neural patterns to speech and vocabulary growth in American infants. NeuroReport. 2005b;16:495–498. doi: 10.1097/00001756-200504040-00015.

- Rivera-Gaxiola M, Silva-Pereyra J, Kuhl PK. Brain potentials to native and non-native speech contrasts in 7- and 11-month-old American infants. Developmental Science. 2005a;8:162–172. doi: 10.1111/j.1467-7687.2005.00403.x.

- Saffran J, Aslin R, Newport E. Statistical learning by 8-month-old infants. Science. 1996;274:1926–1928. doi: 10.1126/science.274.5294.1926.

- Sebastián-Gallés N, Bosch L. Developmental shift in the discrimination of vowel contrasts in bilingual infants: Is the distributional account all there is to it? Developmental Science. 2009;12:874–887. doi: 10.1111/j.1467-7687.2009.00829.x.

- Snow CE, Hoefnagel-Hohle M. The critical period for language acquisition: Evidence from second language learning. Child Development. 1978;49:1114–1128.

- Stevens C, Lauinger B, Neville H. Differences in the neural mechanisms of selective attention in children from different socioeconomic backgrounds: An event-related brain potential study. Developmental Science. 2009;12:634–646. doi: 10.1111/j.1467-7687.2009.00807.x.

- Sundara M, Polka L, Molnar M. Development of coronal stop perception: Bilingual infants keep pace with their monolingual peers. Cognition. 2008;108:232–242. doi: 10.1016/j.cognition.2007.12.013.

- Sundara M, Scutellaro A. Rhythmic distance between languages affects the development of speech perception in bilingual infants. Journal of Phonetics. In press.

- Taga G, Asakawa K. Selectivity and localization of cortical response to auditory and visual stimulation in awake infants aged 2 to 4 months. NeuroImage. 2007;36:1246–1252. doi: 10.1016/j.neuroimage.2007.04.037.

- Teinonen T, Fellman V, Naatanen R, Alku P, Huotilainen M. Statistical language learning in neonates revealed by event-related brain potentials. BMC Neuroscience. 2009;10:21. doi: 10.1186/1471-2202-10-21.