Summary

There is growing evidence that genomic and proteomic research holds great potential for irrevocably changing the practice of medicine. The ability to identify important genomic and biological markers for risk assessment can have a great impact on public health, from disease prevention to detection to treatment selection. However, the potentially large number of markers and the complexity of the relationship between the markers and the outcome of interest pose a major challenge in developing accurate risk prediction models. The standard approach to identifying important markers often assesses the marginal effects of individual markers on a phenotype of interest. When multiple markers relate to the phenotype simultaneously through a complex structure, such marginal analysis may not be effective. To overcome such difficulties, we employ a kernel machine Cox regression framework and propose an efficient score test to assess the overall effect of a set of markers, such as genes within a pathway or a network, on survival outcomes. The proposed test has the advantage of capturing potentially non-linear effects without explicitly specifying a particular non-linear functional form. To approximate the null distribution of the score statistic, we propose a simple resampling procedure that can be easily implemented in practice. Numerical studies suggest that the test performs well with respect to both empirical size and power, even when the number of variables in a gene set is not small compared to the sample size.

Keywords: Genetic Association, Gene-set analysis, Genetic Pathways, Kernel Machine, Kernel PCA, Risk Prediction, Score Test, Survival Analysis

1. Introduction

Genomic technologies permit systematic approaches to discovery that have begun to have a profound impact on biological research, pharmacology, and medicine. The ability to obtain quantitative information about the complete transcription profile of cells promises to be a powerful means to explore basic biology, diagnose disease, facilitate drug development, and tailor therapeutics to specific pathologies (Young, 2000). The standard approach to analyzing genetic data is to identify important genes by assessing the marginal effects of individual genes on the phenotype of interest. However, when multiple genes are related to the phenotype simultaneously through a complex structure, such single-gene analysis may not be effective: it may produce a large number of false positives and false negatives, especially when the signals are weak, and results that are not reproducible (Vo et al., 2007). To overcome such difficulties, biological-knowledge-based learning methods have been advocated to integrate biological knowledge into statistical learning (Brown et al., 2000). One important approach is through the use of genetic pathways or networks. Results from pathway/network analysis are often more reliable and reproducible (Goeman et al., 2004, 2005).

To identify pathways that are associated with disease progression, one may test for the overall effect of a pathway on the risk of developing a clinical event. Goeman et al. (2005) proposed a score test under the standard proportional hazards model framework, where linear covariate effects are assumed. However, the functionality of the genes within a pathway is often complicated and is likely to yield non-linear and non-additive effects on disease progression. We propose to incorporate such complex joint pathway effects through kernel machine learning. The kernel machine framework has been employed in various settings as a powerful machine learning tool for incorporating complex feature spaces (Vapnik, 1998; Scholkopf and Smola, 2002). For example, support vector machines have been successfully used for classifying and validating cancer subtypes (Furey et al., 2000; Ramaswamy et al., 2001; Lee and Lee, 2003). Linear and logistic kernel machine methods have been proposed to model pathway effects on continuous and binary outcomes (Liu et al., 2007, 2008). However, little work using kernel methods has been done for survival data. Li and Luan (2003) considered a kernel Cox regression model for relating gene expression profiles to survival outcomes, but no inference procedures were provided for the resulting estimates of the gene expression effects.

We propose in this paper the use of kernel machine Cox regression to derive a score test for assessing the effect of a pathway on survival outcomes. The proposed test has the advantage of capturing both linear and non-linear effects; for non-linear effects, it does not require the specification of any particular parametric functional form. To approximate the null distribution of the score statistic, we propose a simple resampling procedure that can be easily implemented in practice. Numerical studies suggest that the test performs well with respect to both empirical size and power, even when the number of variables in a gene set is not small compared to the sample size.

The rest of the paper is organized as follows. In Section 2, we introduce the Cox proportional hazards kernel machine model. In Section 3, we present the score test and procedures for approximating its null distribution. Simulation results are presented in Section 4.1, and the proposed procedures are illustrated by assessing the effects of various canonical pathways on breast cancer survival using the breast cancer gene expression study conducted by van de Vijver et al. (2002). In the example section, we also discuss simultaneous testing procedures that adjust for multiple comparisons when there is more than one pathway of interest.

2. The Cox Proportional Hazard Kernel Machine Model

Suppose we are interested in assessing the association between the genetic measurements Z, a p × 1 vector, and the survival outcome T, adjusting for covariates U. For example, Z could represent the gene expression levels within a pathway, and U may represent additional covariates such as age and gender. Due to censoring, the survival time T is not always observable. Instead, we observe a bivariate vector (X, Δ), where X = min(T, C), Δ = I(T ≤ C), and C is the censoring time. We make the standard assumption that C is independent of T conditional on Z and U. The data for analysis consist of n i.i.d. copies of the random vectors, {(Xi, Δi, Zi, Ui), i = 1, …, n}. We assume that the survival time T relates to Z and U through the proportional hazards model (Cox, 1972):

$$\lambda_{Z,U}(t) = \lambda_0(t)\exp\{\gamma^T U + h(Z)\},$$

where λZ,U(t) is the conditional hazard function given Z and U, λ0(t) is the baseline hazard function, γ is an unknown covariate effect for U, and h(Z) is an unknown centered smooth function of Z.

Here, we are particularly interested in testing the null hypothesis H0 : h(Z) = 0. If Z represents a genetic pathway/network, the null hypothesis states that none of the genes in the pathway/network is associated with survival time conditional on U, i.e., there is no pathway/network effect. To test such a hypothesis, one may consider a parametric or non-parametric specification for h(·). For example, if h(z) = βTz, the model becomes the standard Cox proportional hazards model (Cox, 1972). A score test of h(·) = 0 under this framework was considered by Goeman et al. (2005). However, such a test for linear effects may have limited power when the covariate effect is non-linear.

We consider a general parametric/non-parametric setting in which h(·) is allowed to belong to a function space $\mathcal{H}_K$ generated by a given positive definite kernel function K(·, ·; ρ), where ρ is a possibly unknown scale parameter of the kernel function. By Mercer's theorem (Cristianini and Shawe-Taylor, 2000), under regularity conditions, a kernel function K(·, ·; ρ) implicitly specifies a unique function space spanned by a particular set of orthogonal basis functions $\{\phi_l(z;\rho)\}_{l=1}^{L}$, where $\phi_l(z;\rho) = \sqrt{\lambda_l(\rho)}\,\psi_l(z;\rho)$, and $\{\lambda_l(\rho)\}$ and $\{\psi_l(z;\rho)\}$ are the eigenvalues and eigenfunctions of K(·, ·; ρ), such that $K(z_1, z_2;\rho) = \sum_{l=1}^{L}\lambda_l(\rho)\psi_l(z_1;\rho)\psi_l(z_2;\rho)$ and λ1(ρ) ≥ λ2(ρ) ≥ ⋯ ≥ λL(ρ) ≥ 0. The function space is essentially $\mathcal{H}_K = \mathrm{span}\{\phi_l(z;\rho), l = 1, \ldots, L\}$, where span{a} denotes the function space spanned by the basis functions a. This gives a primal representation of h, $h(z) = \sum_{l=1}^{L}\beta_l\phi_l(z;\rho)$. Writing $z_{(k)}$ for the kth element of z, one popular kernel function is the rth-order polynomial kernel $K(z_1, z_2;\rho) = (z_1^T z_2 + \rho)^r$, where ρ is the intercept. In the present setting h(z) is centered, so ρ may be set to 0. The first-order polynomial kernel with r = 1 corresponds to the linear effect h(z) = βTz, and thus $\mathcal{H}_K = \mathrm{span}\{z_{(k)}: 1 \le k \le p\}$. If r = 2, $\mathcal{H}_K = \mathrm{span}\{z_{(k)}, z_{(k)}z_{(k')}: 1 \le k \le k' \le p\}$, i.e., a model with main effects, quadratic effects and two-way interactions. The kernel function $K(z_1, z_2) = \prod_{k=1}^{p}\{1 + z_{1(k)}z_{2(k)}\}$ corresponds to the model with linear effects and all multi-way interactions, i.e., $\mathcal{H}_K = \mathrm{span}\{\prod_{k \in S} z_{(k)}: S \subseteq \{1, \ldots, p\}, S \neq \emptyset\}$. Another popular kernel is the Gaussian kernel $K(z_1, z_2;\rho) = \exp\{-\|z_1 - z_2\|^2/\rho\}$, where $\|z_1 - z_2\|^2 = \sum_{k=1}^{p}\{z_{1(k)} - z_{2(k)}\}^2$ and ρ is an unknown parameter. The Gaussian kernel generates the function space spanned by radial basis functions; see Buhmann (2003) for the mathematical properties of this kernel function. Other kernel functions include the sigmoid, spline, Fourier and B-spline kernels (Vapnik, 1998; Burges, 1998; Scholkopf and Smola, 2002). The kernel function can be viewed as a measure of the similarity between two subjects' gene profiles within the same pathway. The choice of kernel specifies the particular parametric/non-parametric model one is interested in fitting.
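The kernels above are straightforward to evaluate on an n × p expression matrix. The following NumPy sketch is an illustration rather than the authors' code; the function names are hypothetical. It builds the Gaussian, polynomial, and product kernel matrices and checks that, for p = 2, the product kernel reproduces the feature map {1, z(1), z(2), z(1)z(2)}.

```python
import numpy as np


def gaussian_kernel(Z, rho):
    """Gaussian kernel matrix with entries exp(-||Z_i - Z_j||^2 / rho)."""
    sq = np.sum(Z**2, axis=1)
    d2 = sq[:, None] + sq[None, :] - 2.0 * Z @ Z.T
    np.fill_diagonal(d2, 0.0)                       # exact zeros on the diagonal
    return np.exp(-np.maximum(d2, 0.0) / rho)       # clip tiny negative round-off


def polynomial_kernel(Z, rho=0.0, r=1):
    """r-th order polynomial kernel (Z_i^T Z_j + rho)^r; r = 1, rho = 0 is the linear kernel."""
    return (Z @ Z.T + rho) ** r


def product_kernel(Z):
    """prod_k (1 + Z_ik Z_jk): linear effects plus all multi-way interactions."""
    K = np.ones((Z.shape[0], Z.shape[0]))
    for k in range(Z.shape[1]):
        K *= 1.0 + np.outer(Z[:, k], Z[:, k])
    return K


rng = np.random.default_rng(0)
Z = rng.standard_normal((6, 3))        # 6 subjects, 3 genes (simulated values)
KG = gaussian_kernel(Z, rho=3.0)
KL = polynomial_kernel(Z)              # linear kernel
KP = product_kernel(Z)

# For p = 2 the product kernel is the inner product of the features (1, z1, z2, z1*z2).
Phi = np.column_stack([np.ones(6), Z[:, 0], Z[:, 1], Z[:, 0] * Z[:, 1]])
assert np.allclose(product_kernel(Z[:, :2]), Phi @ Phi.T)
```

Each kernel matrix is symmetric and positive semi-definite, which is what the dual representation and the score test below rely on.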

The explicit forms of the basis functions corresponding to K are generally difficult, if not impossible, to specify, especially when p is not small and the function h is complex. Thus, it is generally difficult to estimate h based on its primal representation. On the other hand, h can be estimated through a dual representation with a given dataset. By the representer theorem (Kimeldorf and Wahba, 1970), any regularized estimator of h ∈ $\mathcal{H}_K$ with penalty $\|h\|^2_{\mathcal{H}_K}$ can be represented as $h(z) = \sum_{i=1}^{n}\alpha_i K(z, Z_i;\rho)$, so that $\{h(Z_1), \ldots, h(Z_n)\}^T = \mathbb{K}(\rho)\alpha$, where α = (α1, …, αn)T is the unknown regression parameter and $\mathbb{K}(\rho)$ is the n × n matrix with (i, j)th element Kij(ρ) = K(Zi, Zj; ρ). The dual representation of h provides a convenient way of assessing the effects of z on the outcome of interest without specifying the basis functions.
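The dual representation can be checked numerically. In the sketch below (hypothetical function names, illustrative random α), h is evaluated as a weighted sum of kernel columns. The squared RKHS norm is computed as αᵀKα. With the linear kernel, the dual form collapses to the primal form h(z) = βᵀz with β = Zᵀα, which is exactly the link between the two representations described above.

```python
import numpy as np


def gaussian_cross_kernel(A, B, rho):
    """Matrix with entries K(A_i, B_j; rho) = exp(-||A_i - B_j||^2 / rho)."""
    d2 = np.sum(A**2, 1)[:, None] + np.sum(B**2, 1)[None, :] - 2.0 * A @ B.T
    return np.exp(-np.maximum(d2, 0.0) / rho)


def linear_cross_kernel(A, B):
    """Linear kernel K(A_i, B_j) = A_i^T B_j."""
    return A @ B.T


def h_dual(z_new, Z, alpha, kernel):
    """Dual representation h(z) = sum_i alpha_i K(z, Z_i), evaluated at each row of z_new."""
    return kernel(z_new, Z) @ alpha


rng = np.random.default_rng(1)
n, p, rho = 8, 4, 2.0
Z = rng.standard_normal((n, p))
alpha = rng.standard_normal(n)          # dual coefficients (illustrative values)

K = gaussian_cross_kernel(Z, Z, rho)
h_train = K @ alpha                     # (h(Z_1), ..., h(Z_n))^T = K(rho) alpha
norm_sq = alpha @ K @ alpha             # squared RKHS norm ||h||^2 = alpha^T K alpha

# With the linear kernel the dual form equals the primal form with beta = Z^T alpha.
z_new = rng.standard_normal((3, p))
beta = Z.T @ alpha
assert np.allclose(h_dual(z_new, Z, alpha, linear_cross_kernel), z_new @ beta)
```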

Under the dual representation, the estimation of the unknown parameters {α, γ} could be facilitated by maximizing the penalized partial likelihood (PPL) function

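$$\ell_P(\alpha, \gamma) = \sum_{i=1}^{n} \Delta_i \Big[\gamma^T U_i + \mathbb{K}_i^T(\rho)\alpha - \log\Big\{\sum_{j=1}^{n} Y_j(X_i)\exp\big(\gamma^T U_j + \mathbb{K}_j^T(\rho)\alpha\big)\Big\}\Big] - \frac{c}{2}\,\alpha^T\mathbb{K}(\rho)\alpha, \qquad (1)$$

where $\mathbb{K}_i^T(\rho)$ is the ith row of $\mathbb{K}(\rho)$, $Y_j(t) = I(X_j \ge t)$ is the at-risk indicator, and c > 0 is a penalty parameter. The PPL function (1) is closely related to the random effect Cox model: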

| (2) |

where ∊ = (∊1, ⋯, ∊n)T are the unobserved random effects, τ is the unknown variance component, and $\mathbb{K}^{-}(\rho)$ is the Moore-Penrose generalized inverse of $\mathbb{K}(\rho)$. Specifically, if ∊ is further assumed to follow $N\{0, \tau^2\mathbb{K}(\rho)\}$, equation (1) corresponds to fitting (2) using the penalized quasi-likelihood (PQL) approximation (Breslow and Clayton, 1993), and the penalty parameter c corresponds to the reciprocal of τ2. Similar connections between kernel machine penalized regression and mixed effects models have been discussed by Liu et al. (2007, 2008) for analyzing uncensored data.
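The PPL (1) can be coded directly. The sketch below is a minimal NumPy version under two stated assumptions: there are no tied event times, so no tie correction is needed, and the function name is our own. The linear predictor for subject i is γᵀUi plus the ith element of K(ρ)α. The log risk-set sum is computed with a max-shift for numerical stability.

```python
import numpy as np


def penalized_partial_loglik(alpha, gamma, K, U, X, delta, c):
    """Penalized partial log-likelihood of the form (1), assuming no tied times:
    sum_i delta_i [eta_i - log sum_j Y_j(X_i) exp(eta_j)] - (c/2) alpha^T K alpha,
    with eta_i = gamma^T U_i + (K alpha)_i and Y_j(t) = I(X_j >= t)."""
    eta = U @ gamma + K @ alpha
    at_risk = X[None, :] >= X[:, None]             # at_risk[i, j] = Y_j(X_i)
    m = eta.max()                                  # shift for numerical stability
    log_risk = m + np.log((at_risk * np.exp(eta - m)[None, :]).sum(axis=1))
    return np.sum(delta * (eta - log_risk)) - 0.5 * c * alpha @ K @ alpha


rng = np.random.default_rng(2)
X = np.array([2.0, 0.5, 3.1, 1.2, 4.0])           # observed times min(T, C)
delta = np.array([1, 1, 0, 1, 1])                  # event indicators
U = rng.standard_normal((5, 1))                    # one adjustment covariate
Z = rng.standard_normal((5, 3))
K = Z @ Z.T                                        # linear kernel, for illustration

value = penalized_partial_loglik(np.zeros(5), np.zeros(1), K, U, X, delta, c=1.0)
```

At α = 0 and γ = 0 every linear predictor is zero, so the value reduces to minus the sum of the log risk-set sizes at the event times (3, 5, 4 and 1 here). A larger c shrinks the fit toward h = 0, matching the interpretation of c as 1/τ2.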

3. Score Test for the Parametric/Non-parametric Function

The above connection between the PPL and the mixed effects model motivates us to employ the mixed effects model (2) and to test the null hypothesis of no pathway effect by testing

$$H_0: \tau = 0,$$

since, for a centered h, h(·) = 0 if and only if var{h(z)} = 0 for all z. Under the mixed effects framework, testing that this variance is 0 for all z is equivalent to testing τ = 0.

3.1 Score Statistic

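Since the null value τ = 0 lies on the boundary of the parameter space and the kernel matrix $\mathbb{K}(\rho)$ is not block diagonal, the distribution of the likelihood ratio statistic for H0 : τ = 0 is non-standard. Here, we propose a score test based on the working model (2), which can be derived along the lines of Commenges and Andersen (1995). Specifically, with $Y_j(t) = I(X_j \ge t)$ denoting the at-risk indicator, let the partial likelihood function conditional on ε be

$$PL(\gamma, \epsilon) = \prod_{i=1}^{n}\left\{\frac{\exp(\gamma^T U_i + \epsilon_i)}{\sum_{j=1}^{n} Y_j(X_i)\exp(\gamma^T U_j + \epsilon_j)}\right\}^{\Delta_i}.$$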

If γ is known, then the score statistic is , where denotes the observed data. When γ is unknown as in most practical settings, we obtain the score statistic as , where is the maximum partial likelihood estimator of γ under H0 : h(z) = 0. It is straightforward to see that the resulting score statistic takes the form

where , , , , , and

In the Appendix, we show that which centers around 0, where which is the limiting mean of ,

| (3) |

, , , , , , , , and .

For the linear kernel $K(z_1, z_2) = z_1^T z_2$, the score statistic is equivalent to the test statistic proposed in equation (4) of Goeman et al. (2005). In general, if the orthogonal basis functions for K(·, ·; ρ) with a given ρ are known and L is finite, then one may derive the score statistic directly from the primal representation $h(z) = \sum_{l=1}^{L}\beta_l\phi_l(z;\rho)$. Specifically, assuming var(βl) = τ2 and cov(βl, βl′) = 0 for l ≠ l′, one may obtain the same score statistic based on the arguments given in Goeman et al. (2005, 2006). Furthermore, based on Lemma 4 of Goeman et al. (2006), one may argue that the resulting score test is locally most powerful. However, deriving tests directly from the basis functions may not be feasible, as the basis functions often cannot be specified explicitly and the parameter ρ is often unknown and not estimable under the null. Moreover, Goeman et al. (2006) indicated that it is unclear how to approximate the null distribution of the score test statistic when L is large. Note that for many non-linear kernels, such as the Gaussian kernel, L can be infinite even if p is fixed. The kernel machine framework avoids the explicit specification of basis functions and allows us to derive the asymptotic null distribution of the proposed test as a process in ρ for a general kernel function.
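The exact centering and variance terms in (3) are not reproduced here. As a simplified illustration only, the sketch below makes several assumptions. There are no adjustment covariates U, as in the simulations of Section 4, and no tied times. The null cumulative hazard is estimated by Nelson-Aalen. The statistic is the quadratic form Q(ρ) = M̂ᵀK(ρ)M̂/n in the null martingale residuals M̂i = Δi − Λ̂0(Xi). A quadratic form of this type is typical of kernel machine score statistics, but the function names and the 1/n scaling are our assumptions.

```python
import numpy as np


def martingale_residuals(X, delta):
    """Null-model martingale residuals M_i = delta_i - Lambda0_hat(X_i), where Lambda0_hat is
    the Nelson-Aalen estimator (no covariates, no tied times assumed)."""
    n_at_risk = (X[None, :] >= X[:, None]).sum(axis=1)      # number at risk at X_i
    jumps = delta / n_at_risk                               # hazard increment at X_i
    Lambda0 = ((X[None, :] <= X[:, None]) * jumps[None, :]).sum(axis=1)
    return delta - Lambda0


def gaussian_kernel(Z, rho):
    sq = np.sum(Z**2, axis=1)
    d2 = np.maximum(sq[:, None] + sq[None, :] - 2.0 * Z @ Z.T, 0.0)
    return np.exp(-d2 / rho)


def kernel_score(M, K):
    """Quadratic-form statistic Q = M^T K M / n."""
    return M @ K @ M / len(M)


rng = np.random.default_rng(3)
n, p = 100, 5
Z = rng.standard_normal((n, p))
T = rng.exponential(1.0, n)                    # null model: T independent of Z
C = rng.exponential(3.0, n)
X, delta = np.minimum(T, C), (T <= C).astype(float)

M = martingale_residuals(X, delta)
Q = kernel_score(M, gaussian_kernel(Z, rho=float(p)))
```

With the Nelson-Aalen estimator the residuals sum exactly to zero, so a kernel that is constant across subjects (no heterogeneity) gives Q = 0. This is one way to see why a degenerate kernel matrix carries no information about h.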

3.2 Approximating the Score Test Statistic and Its Null Distribution

In the Appendix, we show that the score statistic, suitably centered and scaled and viewed as a process in ρ, converges weakly to the process defined in (A.3). For a fixed ρ, one may use the Satterthwaite method to approximate the distribution of the score statistic by a scaled χ2 distribution, $c_0\chi^2_{d_0}$. The scale parameter c0 and the degrees of freedom (DF) d0 can be estimated by matching the first two moments of the score statistic. The scaled χ2 distribution has been shown to provide a good approximation to the distribution of the score statistic for continuous and binary outcomes (Liu et al., 2007, 2008).
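The moment matching has a closed form. Since E(c0χ²d0) = c0d0 and var(c0χ²d0) = 2c0²d0, we get c0 = var/(2 mean) and d0 = 2 mean²/var. The sketch below uses hypothetical names. Its stand-in "null draws" are simulated as 2χ²3, in place of the resampled statistics a real analysis would use. To stay NumPy-only, it computes the tail probability by Monte Carlo; `scipy.stats.chi2.sf(q / c0, d0)` would give it exactly.

```python
import numpy as np


def satterthwaite(mean, var):
    """Moment-matched scaled chi-square c0 * chi2_{d0}:
    E = c0 * d0 and Var = 2 * c0**2 * d0 give c0 = Var / (2E), d0 = 2E**2 / Var."""
    return var / (2.0 * mean), 2.0 * mean**2 / var


def scaled_chi2_pvalue(q_obs, c0, d0, draws=200_000, seed=0):
    """Monte Carlo estimate of P(c0 * chi2_{d0} >= q_obs); numpy accepts non-integer df."""
    rng = np.random.default_rng(seed)
    return np.mean(c0 * rng.chisquare(d0, size=draws) >= q_obs)


# Stand-in null draws of a statistic (simulated here as 2 * chi2_3); estimate its moments.
rng = np.random.default_rng(4)
q_null = 2.0 * rng.chisquare(3, size=50_000)
c0, d0 = satterthwaite(q_null.mean(), q_null.var(ddof=1))
p_value = scaled_chi2_pvalue(15.0, c0, d0)
```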

The effective DF d0 decreases as the correlation between covariates increases. Furthermore, d0 increases slowly with p when the correlation is moderate or high, but more rapidly when the correlation is low. Thus, for the same h(z), a higher correlation among the components of Z is likely to yield higher power for the score test and a more gradual loss of power as p increases. This suggests that the kernel machine score test improves the power for detecting a pathway effect by effectively borrowing information across genes and accounting for between-gene correlation through a data-adaptive DF, which often yields a low-DF test with increased power. Specifically, consider the case h(z) = βTz. The null hypothesis of no pathway effect, H0 : h(z) = 0, is equivalent to H0 : β = 0. The traditional approach based on a p-DF test would suffer from power loss if p is large, whereas the kernel machine score test accounts for the correlation among the genes and is often based on a much smaller DF d0. For example, in the extreme case of 20 highly correlated genes within a pathway (say, pairwise correlation > 0.95), the traditional 20-DF test of H0 : β = 0 will have little power. One can show that d0 is then close to 1, because the test accounts for the correlation between genes, and the score test is hence much more powerful.
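A rough way to see the "d0 close to 1" claim is to look at an idealized stand-in for a linear-kernel statistic, the quadratic form xᵀx with x ~ N(0, Σ). Here Σ is the gene correlation matrix, and its Satterthwaite DF is (tr Σ)²/tr(Σ²). This is our simplification, not the paper's formula for d0. The sketch below, with hypothetical names, evaluates it for 20 equicorrelated genes.

```python
import numpy as np


def equicorrelation(p, r):
    """p x p correlation matrix with unit variances and common correlation r."""
    return (1.0 - r) * np.eye(p) + r * np.ones((p, p))


def satterthwaite_df(Sigma):
    """d0 = (tr Sigma)^2 / tr(Sigma^2), the moment-matched DF of x^T x with x ~ N(0, Sigma)."""
    return np.trace(Sigma) ** 2 / np.trace(Sigma @ Sigma)


for r in (0.0, 0.5, 0.95):
    print(f"correlation {r:.2f}: d0 = {satterthwaite_df(equicorrelation(20, r)):.2f}")
```

For 20 genes this gives d0 = 20.00 with independent genes, about 3.48 at correlation 0.5, and about 1.10 at correlation 0.95. A single dominant eigenvalue of Σ drives the DF toward 1.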

For the linear kernel $K(z_1, z_2;\rho) = z_1^T z_2 + \rho$, the score test can be based on the statistic with ρ = 0, since ρ corresponds to an intercept, which is absorbed by the unknown baseline hazard function. For the Gaussian kernel, the score test may depend on ρ. It is not difficult to see that under H0 the kernel matrix disappears, and hence the scale parameter ρ is not estimable. Davies (1987) studied the problem of a parameter that disappears under H0 and proposed a score test that treats the score statistic as a Gaussian process indexed by the nuisance parameter ρ. We take a similar approach by considering the score test statistic

where is the range of ρ to be considered and is a consistent estimator of To approximate the limiting distributions of and in finite samples, we consider a resampling procedure which has been successfully used in the literature (Parzen et al., 1994; Cai et al., 2000; Park and Wei, 2003). Specifically, let be a vector of n i.i.d standard normal random variables generated independent of the data. For each set of , we obtain

where , , , and are the empirical counterparts of , , , and , , , , and the empirical counterparts of WΛi defined in (A.1). It follows from arguments similar to those given in Cai et al. (2000) that, conditional on the data, converges weakly to . To calculate the p-value for testing H0, one may generate a large number B of realizations of and obtain realizations of , . For any fixed ρ, the p-value of the test can be obtained as where and is the empirical mean of . Alternatively, one may estimate the first two moments of based on and then calculate the p-value based on the scaled χ2 approximation. When ρ is unknown in practice, one may test H0 based on the sup-statistic. The null distribution of can be approximated by the empirical distribution of given the data, where is the empirical variance of . To assess the significance for testing H0, one may compute the p-value based on as .
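The sup-statistic and its resampling null distribution can be sketched as follows. This is a deliberately simplified multiplier scheme, not the authors' procedure. The perturbed residuals are M* = G ⊙ M̂ with Gi iid N(0, 1), and the correction terms that account for estimating Λ0 and γ (the WΛi terms above) are ignored. Each Q(ρ) is standardized by the resampling mean and standard deviation. The p-value is the proportion of resampled sups that are at least the observed sup. The ρ grid, the function names, and the absence of covariates are all illustrative assumptions.

```python
import numpy as np


def gaussian_kernel(Z, rho):
    sq = np.sum(Z**2, axis=1)
    d2 = np.maximum(sq[:, None] + sq[None, :] - 2.0 * Z @ Z.T, 0.0)
    return np.exp(-d2 / rho)


def martingale_residuals(X, delta):
    """delta_i - Nelson-Aalen cumulative hazard at X_i (no covariates, no ties)."""
    n_at_risk = (X[None, :] >= X[:, None]).sum(axis=1)
    jumps = delta / n_at_risk
    return delta - ((X[None, :] <= X[:, None]) * jumps[None, :]).sum(axis=1)


def sup_test(M, Z, rho_grid, B=1000, seed=0):
    """Multiplier-resampling p-value for S = sup_rho {Q(rho) - mean*(rho)} / sd*(rho),
    where Q(rho) = M^T K(rho) M / n and the resampled draws use M* = G * M with
    G_i iid N(0, 1). Simplification: terms for estimating Lambda0 are ignored."""
    rng = np.random.default_rng(seed)
    n = len(M)
    Ks = [gaussian_kernel(Z, rho) for rho in rho_grid]
    q_obs = np.array([M @ K @ M / n for K in Ks])
    M_star = rng.standard_normal((B, n)) * M[None, :]          # B perturbed residual vectors
    q_star = np.column_stack(
        [np.einsum("bi,ij,bj->b", M_star, K, M_star) / n for K in Ks]
    )                                                           # B x len(rho_grid)
    mu, sd = q_star.mean(axis=0), q_star.std(axis=0, ddof=1)
    s_obs = np.max((q_obs - mu) / sd)                           # observed sup-statistic
    s_star = np.max((q_star - mu) / sd, axis=1)                 # resampled sup-statistics
    return s_obs, np.mean(s_star >= s_obs)


rng = np.random.default_rng(5)
n, p = 100, 5
Z = rng.standard_normal((n, p))
T, C = rng.exponential(1.0, n), rng.exponential(3.0, n)        # data generated under H0
X, delta = np.minimum(T, C), (T <= C).astype(float)
s_obs, p_value = sup_test(martingale_residuals(X, delta), Z, rho_grid=[1.0, 5.0, 25.0])
```

Standardizing each Q(ρ) before taking the supremum keeps any single ρ, whose statistic may live on a much larger scale, from dominating the sup.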

3.3 Kernel PCA Approximation

An appropriate choice of kernel function could potentially lead to improved power in detecting the signal. However, especially in finite samples, the complexity of the feature space corresponding to the kernel function may introduce a larger number of nuisance parameters and thus result in a loss of power. On the other hand, there are often correlations among the covariates, so dimension reduction, or so-called feature extraction, may allow us to restrict the entire feature space to a subspace of lower dimensionality. One approach to achieving this goal is kernel principal component analysis (PCA) (Scholkopf et al., 1998). We investigate the effect of such dimension reduction on the power of the score test. To this end, we take a singular value decomposition of the kernel matrix, $\mathbb{K}(\rho) = \sum_{l=1}^{n}\hat\lambda_l(\rho)\hat{\mathbf{u}}_l(\rho)\hat{\mathbf{u}}_l(\rho)^T$, where $\hat\lambda_1(\rho) \ge \cdots \ge \hat\lambda_n(\rho) \ge 0$ are the eigenvalues and $\hat{\mathbf{u}}_l(\rho)$ are the corresponding eigenvectors. We assume that, with a properly chosen ρ, the eigenvalues decay quickly, so that there exists an ℓ0 such that the leading ℓ0 eigenvalues account for at least a prespecified proportion of their total sum. Consider the kernel PCA approximation to the original kernel matrix, $\tilde{\mathbb{K}}(\rho) = \sum_{l=1}^{\ell_0}\hat\lambda_l(\rho)\hat{\mathbf{u}}_l(\rho)\hat{\mathbf{u}}_l(\rho)^T$. Let and be and with $\mathbb{K}(\rho)$ replaced by $\tilde{\mathbb{K}}(\rho)$, respectively. It is not difficult to show that , where is the ith element of , and are the respective limits of and . Based on the convergence of the eigenvectors to the eigenfunctions (Bengio et al., 2004; Zwald and Blanchard, 2006), one may view $\tilde{\mathbb{K}}(\rho)$ as an empirical kernel matrix corresponding to , the ℓ0-degenerate approximation of K (Braun, 2005). Thus, treating $\tilde{\mathbb{K}}(\rho)$ as a new kernel matrix, the same arguments as given in the Appendix can be used to establish the limiting distribution of and justify the resampling method. For a given range of ρ, the final score test based on could be obtained as , where is the estimated variance of .
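The truncation step can be written in a few lines. This NumPy sketch uses a hypothetical function name. It eigendecomposes a symmetric positive semi-definite kernel matrix and picks ℓ0 as the smallest number of leading eigenvalues whose sum reaches a given proportion of the total, 90% as in the simulations of Section 4. It then rebuilds the rank-ℓ0 approximation from the retained eigenpairs.

```python
import numpy as np


def kernel_pca_approx(K, prop=0.9):
    """Rank-ell0 approximation sum_{l <= ell0} lambda_l u_l u_l^T of a symmetric PSD kernel
    matrix, where ell0 is the smallest number of leading eigenvalues whose sum is at least
    `prop` times the total."""
    evals, evecs = np.linalg.eigh(K)                   # ascending order
    evals = np.clip(evals[::-1], 0.0, None)            # descending; drop round-off negatives
    evecs = evecs[:, ::-1]
    cum = np.cumsum(evals) / evals.sum()
    ell0 = min(int(np.searchsorted(cum, prop)) + 1, len(evals))
    U = evecs[:, :ell0]
    return (U * evals[:ell0]) @ U.T, ell0


def gaussian_kernel(Z, rho):
    sq = np.sum(Z**2, axis=1)
    d2 = np.maximum(sq[:, None] + sq[None, :] - 2.0 * Z @ Z.T, 0.0)
    return np.exp(-d2 / rho)


rng = np.random.default_rng(6)
Z = rng.standard_normal((60, 10))
K = gaussian_kernel(Z, rho=20.0)
K_tilde, ell0 = kernel_pca_approx(K, prop=0.9)
```

The approximated matrix can then be passed wherever the original kernel matrix is used, for example in the score statistic or the resampling step.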

The kernel PCA approximation is equivalent to approximating by . Based on the truncated set of basis functions, one may approximate the leading term of the score statistic by . Based on the Satterthwaite method, one may approximate the distribution of as under H0 and as under the alternative. Both the DF d0(ℓ0) and the non-centrality parameter δ(ℓ0) are dominated by the larger eigenvalues. Thus, we expect the majority of the information about h to be captured by . Similar findings based on eigenvalue decomposition have been discussed by Goeman et al. (2006) for the linear model.
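To see why the DF is driven by the leading eigenvalues, consider an idealization that is our assumption rather than a result stated here. Suppose that under H0 the truncated leading term behaves like a weighted sum of independent χ²1 variables, with weights λ1 ≥ ⋯ ≥ λℓ0 related to the leading kernel eigenvalues. Matching the first two moments of c0χ²d0 then gives:

```latex
\begin{aligned}
Q(\ell_0) &\approx \sum_{l=1}^{\ell_0}\lambda_l W_l^2, \qquad W_l \overset{\text{iid}}{\sim} N(0,1),\\
E\{Q(\ell_0)\} &= \sum_{l=1}^{\ell_0}\lambda_l = c_0 d_0, \qquad
\operatorname{var}\{Q(\ell_0)\} = 2\sum_{l=1}^{\ell_0}\lambda_l^2 = 2c_0^2 d_0,\\
c_0(\ell_0) &= \frac{\sum_{l=1}^{\ell_0}\lambda_l^2}{\sum_{l=1}^{\ell_0}\lambda_l}, \qquad
d_0(\ell_0) = \frac{\bigl(\sum_{l=1}^{\ell_0}\lambda_l\bigr)^2}{\sum_{l=1}^{\ell_0}\lambda_l^2}.
\end{aligned}
```

Adding one small trailing weight ε changes d0 by roughly a factor 1 + 2ε/Σλl, so eigenvalues far below the leading ones contribute little to either the DF or the signal.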

3.4 Practical Issues with the Choice of ρ

Our simulation results show that the score test is not sensitive to the choice of ρ within a reasonable range. However, when ρ → 0 or ρ → ∞, the kernel matrix may become degenerate, and it may then be infeasible to draw inference. For example, when K is the Gaussian kernel, ρ → 0 corresponds to no similarity between subjects (the kernel matrix tends to the identity matrix) and ρ → ∞ corresponds to no heterogeneity between subjects (the kernel matrix tends to a matrix of ones). In both extreme cases, one may be unable to draw conclusions about the association between T and Z based on the given kernel. Note that the eigenvalues of the kernel matrix do not decay when ρ → 0 and are all 0 except for the first one when ρ → ∞.

For the Gaussian kernel, to prevent the kernel matrix from becoming degenerate while including a sufficiently wide range of ρ, one may determine the range of ρ based on kernel PCA. Specifically, let denote the smallest ℓ such that . Then the range of ρ can be chosen as , . In practice, we recommend with . The constants are chosen to ensure that the rank of is not close to 1 and that its eigenvalues have a reasonable decay rate. See the discussion section for more on the choice of ℓ0.
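The degeneracy at the two ends of the ρ scale is easy to observe. The sketch below uses hypothetical names, simulated data, and an arbitrary ρ grid. For each ρ it computes ℓ(ρ), the smallest number of leading eigenvalues of the Gaussian kernel matrix whose sum reaches 90% of the total, which is the kind of quantity the range selection above is based on.

```python
import numpy as np


def gaussian_kernel(Z, rho):
    sq = np.sum(Z**2, axis=1)
    d2 = np.maximum(sq[:, None] + sq[None, :] - 2.0 * Z @ Z.T, 0.0)
    np.fill_diagonal(d2, 0.0)
    return np.exp(-d2 / rho)


def n_leading(K, prop=0.9):
    """Smallest ell such that the top-ell eigenvalues make up at least `prop` of the total."""
    evals = np.clip(np.linalg.eigvalsh(K)[::-1], 0.0, None)
    cum = np.cumsum(evals) / evals.sum()
    return min(int(np.searchsorted(cum, prop)) + 1, len(evals))


rng = np.random.default_rng(7)
Z = rng.standard_normal((50, 5))
for rho in (1e-3, 1.0, 10.0, 100.0, 1e6):
    print(f"rho = {rho:g}: ell = {n_leading(gaussian_kernel(Z, rho))}")
```

As ρ → 0 the kernel matrix approaches the identity, and ℓ approaches 0.9n (about 45 here). As ρ → ∞ it approaches the all-ones matrix, and ℓ drops to 1. A workable range of ρ lies between these extremes. The specific cutoffs recommended above are not reproduced in this sketch.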

4. Simulation Studies

We conducted simulation studies to assess the performance of the proposed score test. Throughout, we generated Z from a multivariate normal distribution with mean 0, unit variance and correlation ℘. We considered ℘ = 0.5, 0.2, and 0 to represent moderate, weak, and zero correlation among the Z's, respectively. For simplicity, no additional covariates were included in the numerical studies. The censoring time was generated from an exponential distribution with mean μC. For each configuration, we generated 2000 datasets to calculate the empirical size and 1000 datasets to calculate the empirical power. For each simulated dataset, we carried out the score test with two types of kernels: (1) the Gaussian kernel, $K_G(z_1, z_2;\rho) = \exp\{-\|z_1 - z_2\|^2/\rho\}$; and (2) the linear kernel, $K_L(z_1, z_2) = z_1^T z_2$. For comparison, we evaluated the performance of the score test using both the original kernel matrix and its kernel PCA approximation retaining 90% of the eigenvalues. We considered testing based on (a) the sup-statistics with KG, using the original and the approximated kernel matrices; (b) the score statistics at ρ = ρ2 with KG, where ρ2 is the upper bound of the range of ρ, using the original and the approximated kernel matrices; and (c) the score statistics with KL, using the original and the approximated kernel matrices. For the resampling procedure, we let B = 1000. We considered n = 100 and 200; censoring proportions of 25% (μC = 3) and 50% (μC = 1); and p = 5, 10, and 100.

First, to examine the validity of the proposed testing procedure in finite samples, we generated data under the null model to assess the size of the score test. Specifically, we generated log T from an extreme value distribution, so that the survival time is independent of the covariates. The empirical sizes of the proposed tests are summarized in Table 1 for ℘ = 0.5 and Table 2 for ℘ = 0.2. We evaluated the performance of testing procedures based on three approximations to the null distribution: (i) the resampling procedure; (ii) the scaled χ2 approximation; and (iii) the normal approximation suggested in the literature. Across all configurations, the empirical sizes of the proposed tests are close to the nominal level when the null distribution is approximated by resampling or by the scaled χ2. The PCA-based tests appear to perform slightly better when p is large, in part because of the reduction in the DF. In contrast, the normal approximation does not appear to work well in many of the settings. For example, when n = 100 and p = 5 with 50% censoring, the empirical sizes of the score tests at ρ2 (original kernel, kernel PCA) were (7.4%, 8.4%) based on the normal approximation, (5.8%, 5.5%) based on the resampling procedure, and (5.5%, 6.2%) based on the χ2 approximation. Similar patterns were observed for ℘ = 0.2 and for larger p.
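The null simulation design can be reproduced as follows. This NumPy sketch, with a hypothetical function name, assumes that "extreme value distribution" means the standard minimum extreme value law, P(log T > x) = exp(−e^x). Under that law T is unit exponential and independent of Z. With C exponential with mean μC, the censoring probability is P(C < T) = 1/(1 + μC). This gives 25% for μC = 3 and 50% for μC = 1, matching the proportions stated above.

```python
import numpy as np


def simulate_null(n, p, corr, mu_c, rng):
    """Null data: Z ~ N(0, equicorrelation(corr)), log T standard minimum extreme value
    (equivalently T ~ Exp(1), independent of Z), and C ~ exponential with mean mu_c."""
    Sigma = (1.0 - corr) * np.eye(p) + corr * np.ones((p, p))
    Z = rng.multivariate_normal(np.zeros(p), Sigma, size=n)
    log_T = np.log(rng.exponential(1.0, size=n))    # P(log T > x) = exp(-e^x)
    T = np.exp(log_T)
    C = rng.exponential(mu_c, size=n)               # numpy's `scale` is the mean
    X = np.minimum(T, C)
    delta = (T <= C).astype(int)
    return Z, X, delta


# One dataset from the design: n = 200, p = 10, correlation 0.5, 25% censoring (mu_c = 3).
rng = np.random.default_rng(8)
Z, X, delta = simulate_null(n=200, p=10, corr=0.5, mu_c=3.0, rng=rng)
```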

Table 1.

Empirical sizes (%) at a type I error rate of 0.05 when the genes are moderately correlated (℘ = 0.5). Testing was performed based on both the Gaussian kernel and the linear kernel. For the Gaussian kernel, we compared (i) the sup-statistic with the original kernel (sup, original); (ii) the sup-statistic with the kernel PCA including 90% of the eigenvalues (sup, PCA); (iii) the score test with ρ fixed at ρ2, the upper bound of the range of ρ, with the original kernel (ρ2, original); and (iv) the score test at ρ2 with the kernel PCA (ρ2, PCA). For the linear kernel, testing was performed with the original kernel and with its kernel PCA approximation. The null distributions were approximated by (a) the resampling procedure (P); (b) the χ2 approximation (χ2); and (c) the normal approximation (N).

| | | p = 5 | | | | p = 10 | | | | p = 100 | | | |
|---|---|---|---|---|---|---|---|---|---|---|---|---|---|
| | Censoring % | 50% | | 25% | | 50% | | 25% | | 50% | | 25% | |
| Kernel | n | 100 | 200 | 100 | 200 | 100 | 200 | 100 | 200 | 100 | 200 | 100 | 200 |
| Gaussian | sup, original (P) | 5.8 | 4.1 | 4.5 | 4.2 | 4.0 | 5.2 | 4.0 | 4.3 | 5.1 | 5.2 | 5.1 | 4.0 |
| | sup, PCA (P) | 5.3 | 4.6 | 5.1 | 4.24 | 4.24 | 5.7 | 4.2 | 4.8 | 5.4 | 5.4 | 5.8 | 4.6 |
| | sup, original (N) | 7.8 | 6.4 | 6.4 | 6.2 | 6.0 | 7.6 | 5.4 | 6.1 | 6.4 | 6.8 | 7.0 | 5.6 |
| | sup, PCA (N) | 8.7 | 7.0 | 7.4 | 6.9 | 6.8 | 8.1 | 6.0 | 6.8 | 7.0 | 7.0 | 7.9 | 6.0 |
| | ρ2, original (P) | 5.8 | 4.5 | 5.0 | 4.5 | 4.0 | 5.4 | 4.0 | 4.6 | 5.0 | 5.2 | 5.0 | 4.1 |
| | ρ2, PCA (P) | 5.5 | 4.7 | 5.4 | 4.6 | 4.8 | 6.0 | 4.4 | 4.9 | 5.6 | 5.2 | 5.9 | 4.6 |
| | ρ2, original (N) | 7.4 | 5.9 | 6.4 | 5.8 | 5.8 | 7.3 | 5.2 | 5.8 | 6.4 | 6.8 | 7.1 | 5.6 |
| | ρ2, PCA (N) | 8.4 | 7.0 | 7.1 | 6.4 | 6.2 | 7.8 | 6.6 | 6.9 | 7.5 | 7.1 | 8.0 | 6.3 |
| | ρ2, original (χ2) | 5.5 | 4.4 | 4.8 | 4.4 | 4.0 | 5.5 | 4.1 | 4.6 | 5.2 | 5.2 | 5.2 | 4.4 |
| | ρ2, PCA (χ2) | 6.2 | 4.8 | 5.4 | 4.5 | 4.6 | 5.9 | 4.4 | 4.9 | 5.4 | 5.2 | 6.0 | 4.7 |
| Linear | original (P) | 4.5 | 6.0 | 3.9 | 5.6 | 5.2 | 4.0 | 4.7 | 4.8 | 5.8 | 4.9 | 6.0 | 4.6 |
| | PCA (P) | 4.2 | 5.9 | 4.4 | 5.3 | 5.2 | 4.2 | 4.8 | 4.6 | 5.8 | 5.0 | 6.1 | 4.6 |
| | original (N) | 6.0 | 7.2 | 5.8 | 6.8 | 7.0 | 5.2 | 6.2 | 6.4 | 7.5 | 6.9 | 7.6 | 6.0 |
| | PCA (N) | 5.7 | 7.5 | 5.8 | 6.7 | 6.8 | 5.4 | 6.2 | 6.8 | 7.6 | 7.0 | 7.8 | 6.0 |
| | original (χ2) | 4.5 | 5.9 | 3.8 | 5.6 | 5.2 | 4.1 | 4.6 | 4.6 | 6.0 | 5.2 | 6.2 | 4.9 |
| | PCA (χ2) | 4.2 | 5.8 | 4.3 | 5.2 | 5.2 | 4.2 | 4.7 | 4.7 | 6.0 | 5.2 | 6.2 | 5.0 |

Table 2.

Empirical sizes (%) at a type I error rate of 0.05 when the genes are weakly correlated (℘ = 0.2). For the Gaussian kernel, testing was performed based on (i) the sup-statistic with the original kernel (sup, original); (ii) the sup-statistic with the kernel PCA including 90% of the eigenvalues (sup, PCA); (iii) the score test with ρ fixed at ρ2, the upper bound of the range of ρ, with the original kernel (ρ2, original); and (iv) the score test at ρ2 with the kernel PCA (ρ2, PCA). For the linear kernel, testing was performed with the original kernel and with its kernel PCA approximation. The null distributions were approximated by (a) the resampling procedure (P); (b) the χ2 approximation (χ2); and (c) the normal approximation (N).

| | | p = 5 | | | | p = 10 | | | | p = 100 | | | |
|---|---|---|---|---|---|---|---|---|---|---|---|---|---|
| | Censoring % | 50% | | 25% | | 50% | | 25% | | 50% | | 25% | |
| Kernel | n | 100 | 200 | 100 | 200 | 100 | 200 | 100 | 200 | 100 | 200 | 100 | 200 |
| Gaussian | sup, original (P) | 4.8 | 3.6 | 3.4 | 4.0 | 3.3 | 4.5 | 3.0 | 3.6 | 3.5 | 4.6 | 3.0 | 3.0 |
| | sup, PCA (P) | 5.4 | 4.5 | 4.9 | 4.6 | 4.2 | 5.4 | 4.2 | 4.1 | 5.2 | 5.3 | 5.4 | 4.4 |
| | sup, original (N) | 6.9 | 5.4 | 5.4 | 6.1 | 4.8 | 6.5 | 4.1 | 4.9 | 4.3 | 5.4 | 4.1 | 4.0 |
| | sup, PCA (N) | 10. | 7.4 | 8.2 | 7.8 | 7.1 | 8.2 | 7.1 | 7.3 | 7.0 | 7.1 | 7.6 | 6.2 |
| | ρ2, original (P) | 5.4 | 3.7 | 3.8 | 4.0 | 3.5 | 4.4 | 3.2 | 4.0 | 3.6 | 4.6 | 3.0 | 3.2 |
| | ρ2, PCA (P) | 6.2 | 4.7 | 5.3 | 4.5 | 4.4 | 5.8 | 4.6 | 4.8 | 5.6 | 5.3 | 5.8 | 4.7 |
| | ρ2, original (N) | 7.2 | 5.3 | 5.5 | 6.0 | 4.8 | 6.4 | 4.6 | 4.9 | 4.4 | 5.3 | 4.0 | 4.1 |
| | ρ2, PCA (N) | 8.4 | 6.7 | 7.0 | 6.5 | 6.2 | 7.2 | 6.2 | 6.8 | 7.4 | 7.2 | 8.0 | 6.2 |
| | ρ2, original (χ2) | 5.2 | 3.6 | 3.6 | 4.0 | 3.4 | 4.8 | 3.3 | 4.0 | 3.6 | 4.8 | 3.2 | 3.3 |
| | ρ2, PCA (χ2) | 6.0 | 4.6 | 5.3 | 4.4 | 4.4 | 6.0 | 4.6 | 4.8 | 5.5 | 5.3 | 5.9 | 5.0 |
| Linear | original (P) | 4.1 | 5.6 | 4.2 | 5.1 | 4.3 | 3.4 | 3.2 | 3.6 | 4.0 | 4.3 | 4.0 | 3.4 |
| | PCA (P) | 4.4 | 5.5 | 4.1 | 5.4 | 4.4 | 3.7 | 3.7 | 4.1 | 4.4 | 4.5 | 4.5 | 3.4 |
| | original (N) | 6.0 | 7.1 | 5.8 | 6.7 | 5.8 | 4.9 | 5.1 | 4.8 | 5.4 | 5.4 | 5.0 | 4.4 |
| | PCA (N) | 6.2 | 7.3 | 5.9 | 6.6 | 6.2 | 5.2 | 5.3 | 5.4 | 5.6 | 5.4 | 5.4 | 4.6 |
| | original (χ2) | 4.0 | 5.4 | 4.2 | 4.8 | 4.2 | 3.3 | 3.3 | 3.6 | 4.4 | 4.6 | 4.2 | 3.4 |
| | PCA (χ2) | 4.2 | 5.3 | 4.2 | 5.2 | 4.4 | 3.7 | 3.8 | 4.0 | 4.6 | 4.8 | 4.4 | 3.6 |

Results reported in Tables 1 and 2 suggest that the χ2 approximation can be used to assess the DF of the score test. For example, when the correlation is weak (℘ = 0.2), the estimated effective DFs of the score test are about 3.9, 6.1, and 11.4 for p = 5, 10, and 100, respectively. When ℘ = 0.5, the DFs are 2.5, 2.9, and 3.7, respectively. Thus, as the correlation among the components of Z increases, the effective DFs of the score test become much lower and increase only slightly with the covariate dimension. This suggests that the kernel machine test effectively borrows information across genes within a pathway, accounting for their correlation to construct a low-DF test and thereby increasing the power of the test. This is an attractive feature of the kernel machine test as a powerful approach to detecting pathway effects in high-dimensional problems.

The tests based on the sup-statistics also perform well in maintaining the size. As expected, the performance improves as n increases and/or the censoring proportion decreases. When p = 100 and ℘ = 0.2, the empirical sizes based on the original kernel tend to be slightly lower than the nominal level. In such settings, the effective DF is large relative to n, so the standard large-sample distribution theory may not hold for the statistics based on a general K. On the other hand, kernel PCA seems to reduce the effective DF and thus leads to tests with reasonable empirical sizes even with p = 100.

To assess the power of the proposed tests, we generated data from the proportional hazards model log T = h(Z) + ε, where ε follows an extreme value distribution. Two forms of h(·) were considered: (1) a linear h(·), corresponding to a standard Cox model with linear effects; and (2) a complex non-linear functional form. For each model, we generated p covariates with p = 5, 10, and 100, and the event time T depends only on the first 5 covariates. The empirical power is summarized in Table 3 for the standard Cox model and in Table 4 for the non-linear model.
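The claim that log T = h(Z) + ε is a proportional hazards model can be checked directly. The check assumes that ε follows the standard minimum extreme value distribution, P(ε > x) = exp(−e^x):

```latex
\begin{aligned}
P(T > t \mid Z) &= P\{\varepsilon > \log t - h(Z)\} = \exp\{-e^{\log t - h(Z)}\} = \exp\{-t\,e^{-h(Z)}\},\\
\lambda(t \mid Z) &= -\frac{d}{dt}\log P(T > t \mid Z) = e^{-h(Z)} = \lambda_0(t)\exp\{-h(Z)\}, \qquad \lambda_0(t) \equiv 1.
\end{aligned}
```

The simulated data therefore follow the Cox model of Section 2 with a unit exponential baseline hazard and log relative hazard −h(Z). The sign change does not affect testing H0 : h(Z) = 0.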

Table 3.

Empirical power (%) at a type I error rate of 0.05 when h(Z) is linear and p = 5, 10, or 100 genes are used to fit the model, while the true number of genes is 5. For the Gaussian kernel, testing was performed based on (i) the sup-statistic with the original kernel (sup, original); (ii) the sup-statistic with the kernel PCA including 90% of the eigenvalues (sup, PCA); (iii) the score test with ρ fixed at ρ2, the upper bound of the range of ρ, with the original kernel (ρ2, original); and (iv) the score test at ρ2 with the kernel PCA (ρ2, PCA). For the linear kernel, testing was performed with the original kernel and with its kernel PCA approximation. The null distributions were approximated by the resampling procedure.

| | | p = 5 | | | | p = 10 | | | | p = 100 | | | |
|---|---|---|---|---|---|---|---|---|---|---|---|---|---|
| | Censoring % | 50% | | 25% | | 50% | | 25% | | 50% | | 25% | |
| Kernel | n | 100 | 200 | 100 | 200 | 100 | 200 | 100 | 200 | 100 | 200 | 100 | 200 |
| Correlation = 0.5 | | | | | | | | | | | | | |
| Gaussian | sup, original | 67 | 94 | 83 | 99 | 68 | 94 | 83 | 99 | 67 | 92 | 82 | 98 |
| | sup, PCA | 68 | 94 | 85 | 99 | 69 | 94 | 85 | 99 | 68 | 92 | 83 | 99 |
| | ρ2, original | 69 | 95 | 86 | 99 | 69 | 94 | 84 | 100 | 67 | 92 | 82 | 98 |
| | ρ2, PCA | 72 | 96 | 88 | 99 | 71 | 94 | 86 | 100 | 69 | 92 | 84 | 99 |
| Linear | original | 74 | 97 | 88 | 100 | 71 | 96 | 86 | 100 | 66 | 94 | 81 | 99 |
| | PCA | 75 | 96 | 88 | 100 | 72 | 96 | 87 | 100 | 66 | 94 | 81 | 99 |
| Correlation = 0.2 | | | | | | | | | | | | | |
| Gaussian | sup, original | 42 | 72 | 57 | 89 | 37 | 66 | 49 | 83 | 28 | 57 | 37 | 73 |
| | sup, PCA | 49 | 78 | 65 | 92 | 42 | 70 | 56 | 86 | 34 | 61 | 48 | 76 |
| | ρ2, original | 43 | 74 | 59 | 90 | 38 | 67 | 50 | 84 | 28 | 57 | 37 | 73 |
| | ρ2, PCA | 54 | 82 | 69 | 94 | 45 | 72 | 60 | 87 | 34 | 62 | 48 | 76 |
| Linear | original | 44 | 77 | 59 | 89 | 39 | 69 | 54 | 86 | 29 | 58 | 39 | 73 |
| | PCA | 44 | 78 | 61 | 90 | 40 | 70 | 54 | 86 | 30 | 58 | 40 | 74 |

Table 4.

Empirical power when h(Z) is non-linear at the type I error rate of 0.05 with p =5, 10, and 100 genes used to fit the model while the true number of genes is 5. Testing was performed based on (i) sup-statistic with the original kernel (subscript ); (ii) sup-statistic with the kernel PCA including 90% of eigenvalues (subscript ); (iii) score test with ρ fixed at ρ2 (upper bound of) with original kernel (subscript ); (iv) score test at ρ2 with kernel PCA (subscript ). The null distributions were generated based on the resampling procedure (indexed by P).

| Kernel | Statistic | p=5, 50% cens., n=100 | p=5, 50% cens., n=200 | p=5, 25% cens., n=100 | p=5, 25% cens., n=200 | p=10, 50% cens., n=100 | p=10, 50% cens., n=200 | p=10, 25% cens., n=100 | p=10, 25% cens., n=200 | p=100, 50% cens., n=100 | p=100, 50% cens., n=200 | p=100, 25% cens., n=100 | p=100, 25% cens., n=200 |
|---|---|---|---|---|---|---|---|---|---|---|---|---|---|
| Correlation = 0.5 | | | | | | | | | | | | | |
| Gaussian | sup, original kernel | 94 | 100 | 94 | 100 | 78 | 100 | 76 | 100 | 30 | 75 | 29 | 73 |
| | sup, kernel PCA | 84 | 100 | 85 | 100 | 70 | 99 | 69 | 98 | 31 | 75 | 31 | 73 |
| | ρ2, original kernel | 54 | 97 | 56 | 96 | 32 | 81 | 34 | 82 | 21 | 55 | 22 | 56 |
| | ρ2, kernel PCA | 19 | 36 | 21 | 40 | 13 | 21 | 17 | 26 | 10 | 17 | 12 | 18 |
| Linear | original kernel | 8 | 10 | 9 | 10 | 9 | 11 | 10 | 14 | 8 | 10 | 9 | 10 |
| | kernel PCA | 7 | 9 | 9 | 10 | 9 | 11 | 9 | 13 | 8 | 10 | 9 | 10 |
| Correlation = 0.2 | | | | | | | | | | | | | |
| Gaussian | sup, original kernel | 65 | 99 | 61 | 99 | 21 | 60 | 19 | 54 | 5 | 7 | 4 | 6 |
| | sup, kernel PCA | 30 | 89 | 29 | 87 | 10 | 20 | 10 | 16 | 6 | 6 | 5 | 6 |
| | ρ2, original kernel | 17 | 35 | 16 | 33 | 8 | 15 | 9 | 13 | 5 | 6 | 4 | 6 |
| | ρ2, kernel PCA | 11 | 16 | 12 | 15 | 8 | 9 | 8 | 9 | 5 | 6 | 5 | 6 |
| Linear | original kernel | 6 | 8 | 7 | 8 | 8 | 7 | 7 | 7 | 4 | 6 | 4 | 6 |
| | kernel PCA | 7 | 8 | 7 | 8 | 8 | 8 | 7 | 7 | 4 | 7 | 5 | 6 |

First, consider the case with moderate correlation, ℘ = 0.5. For the standard Cox model (linear h(Z), Table 3), the best strategy would be based on KL with p = 5 (i.e., using the true 5 genes). With n = 100 and 50% censoring, this best strategy achieves about 74% power. When we fit the model with p = 100 by including 95 additional noise covariates, the power decreases to 66%. Thus, the score test appears to have reasonable power to detect the signal even in the presence of a large number of noise features. The use of kernel PCA does not appear to affect the power for the linear kernel. When KG is used, the sup-statistic achieves about 67% power with both p = 5 and p = 100. When kernel PCA retaining 90% of the eigenvalues is applied, the power remains almost identical. There appears to be little loss in power from using KG rather than KL for either Gaussian-kernel statistic. This can in part be attributed to the fact that KG is approximately KL when ρ is relatively large (Liu et al., 2008). When h(z) is non-linear (Table 4), the use of KG results in a substantial improvement in power compared with KL. For example, with p = 5, n = 100 and 50% censoring, the power is about 94% for the Gaussian sup-statistic and 8% for the linear kernel. Even when p = 100 and n = 200, the power is 75% for the Gaussian sup-statistic and merely 10% for the linear kernel. This suggests a drastic gain in power from employing KG over KL when the true effects are highly non-linear. Again, the power does not appear to decrease dramatically as we increase the number of noise covariates. This is likely because the kernel machine test effectively accounts for the correlation between the covariates, which results in little increase in the effective DF as p increases.
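The remark that KG is approximately KL for large ρ can be checked numerically. The sketch below assumes the common Gaussian-kernel parameterization K(z1, z2, ρ) = exp(−‖z1 − z2‖²/ρ); the data and function names are hypothetical. A first-order expansion gives KG ≈ 1 − ‖z1 − z2‖²/ρ, and double centering with H = I − 11′/n removes the constant and the terms that depend on only one subject, leaving (2/ρ) times the centered linear kernel. The code measures how close (ρ/2)·H KG H is to H KL H as ρ grows.

```python
import numpy as np

def linear_kernel(Z):
    """Linear kernel matrix: K_L[i, j] = z_i' z_j."""
    return Z @ Z.T

def gaussian_kernel(Z, rho):
    """Gaussian kernel matrix: K_G[i, j] = exp(-||z_i - z_j||^2 / rho) (assumed form)."""
    sq = np.sum(Z ** 2, axis=1)
    d2 = np.maximum(sq[:, None] + sq[None, :] - 2.0 * Z @ Z.T, 0.0)  # clip rounding noise
    return np.exp(-d2 / rho)

def double_center(K):
    """Return H K H with H = I - 11'/n, i.e. subtract row and column means."""
    n = K.shape[0]
    H = np.eye(n) - np.ones((n, n)) / n
    return H @ K @ H

def relative_error(Z, rho):
    """Frobenius distance between (rho/2) H K_G H and H K_L H, relative to ||H K_L H||."""
    target = double_center(linear_kernel(Z))
    approx = 0.5 * rho * double_center(gaussian_kernel(Z, rho))
    return np.linalg.norm(approx - target) / np.linalg.norm(target)

rng = np.random.default_rng(0)
Z = rng.standard_normal((50, 5))  # hypothetical: 50 subjects, 5 genes
for rho in (5.0, 50.0, 5000.0):
    print(f"rho = {rho:>7}: relative error = {relative_error(Z, rho):.4f}")
```

Once ρ is large, the leading error term shrinks like 1/ρ. Centering is harmless for a quadratic form r′Kr whenever the residual vector r sums to zero (as Cox martingale residuals do), because then r′Kr = r′HKHr; the factor 2/ρ cancels when the null distribution is generated with the same kernel.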

We next summarize how the results may be affected by the covariate correlation ℘. As ℘ decreases, the effective DF of the score statistic under the null increases, which is likely to yield a loss of power. This is consistent with the results shown in Tables 3 and 4. For example, under the standard Cox model with p = 10, n = 100 and 25% censoring, the power of the Gaussian sup-statistic decreased from 83% to 49% when ℘ was decreased from 0.5 to 0.2. Moreover, the power appears to decrease more rapidly with p when ℘ is small. With n = 100 and 25% censoring, as p increases from 5 to 100, the power of the Gaussian sup-statistic decreases from 83% to 82% when ℘ = 0.5 but from 57% to 37% when ℘ = 0.2. This is also expected: when ℘ is small, the effective DF increases more rapidly with p, since little information can be borrowed across genes to improve power. In the extreme case where all genes are independent, the best test would need to be based on p DF. In pathway analysis, since genes within a pathway are often correlated, one would expect the kernel machine test to have high power, as it effectively borrows information across genes. It is also worth noting that when the underlying effects are non-linear, there can be a substantial power loss from using kernel PCA that includes only the principal components accounting for 90% of the total variation. Thus, for such settings, a higher threshold value should be considered to minimize the information loss. When the threshold was increased to 99%, the difference in power between the tests based on the original kernel and on kernel PCA became minimal.
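Why the effective DF grows with p more slowly under stronger correlation can be seen in a simplified setting. This is an illustrative assumption, not the paper's exact statistic: the null statistic is taken to behave approximately like Σk λk χ²₁, with λk the eigenvalues of the gene covariance matrix (roughly the large-sample situation for a linear kernel), and the genes are assumed exchangeable with common correlation ℘. The Satterthwaite effective DF is then (Σλ)²/Σλ².

```python
import numpy as np

def exchangeable_cov(p, corr):
    """p x p covariance matrix with unit variances and common correlation corr."""
    return (1.0 - corr) * np.eye(p) + corr * np.ones((p, p))

def effective_df(weights):
    """Satterthwaite DF for sum_k w_k * chi2_1 (independent terms): (sum w)^2 / sum w^2."""
    w = np.asarray(weights, dtype=float)
    return w.sum() ** 2 / np.sum(w ** 2)

for corr in (0.5, 0.2, 0.0):
    dfs = [effective_df(np.linalg.eigvalsh(exchangeable_cov(p, corr))) for p in (5, 10, 100)]
    print(f"corr = {corr}: effective DF for p = 5, 10, 100 -> " + ", ".join(f"{d:.1f}" for d in dfs))
```

Under these assumptions the effective DF is 2.5, 3.1 and 3.9 for p = 5, 10 and 100 when ℘ = 0.5, but 4.3, 7.4 and 20.2 when ℘ = 0.2, and it equals p when the genes are independent.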

5. Example: Breast Cancer Gene Expression Study

Findings from genomic research hold great potential to improve disease outcomes for breast cancer patients. For example, the discovery that mutations in the BRCA1 and BRCA2 genes increase the risk of breast cancer has radically transformed our understanding of the genetic basis of breast cancer, leading to improved management of high-risk women. A number of genomic biomarkers have been developed for clinical use, and pharmacogenetic end points are increasingly being incorporated into clinical trial design (Olopade et al., 2008). Despite recent advances in understanding genetic susceptibility to breast cancer, it remains important to identify and understand the molecular pathways of pathogenesis (Nathanson et al., 2001). Here, we are particularly interested in assessing whether various canonical pathways from the molecular signature database are related to breast cancer survival. Examples of these pathways include AKAP13, EGFR, SMRTE and p53. Genetic alterations within AKAP13 are expected to provoke constitutive Rho signaling, thereby facilitating the development of cancer (Wirtenberger et al., 2006). Epidermal growth factor receptor (EGFR) is a receptor tyrosine kinase that is expressed in a wide variety of epithelial malignancies, including non-small-cell lung cancer, head and neck cancer, and breast cancer (Kuwahara et al., 2004; Nicholson et al., 2001). EGFR activation promotes tumor growth by increasing cell proliferation, motility, or angiogenesis, and by blocking apoptosis (Holbro et al., 2003). p53 mutation remains the most common genetic change identified in human neoplasia and is associated with more aggressive disease and worse overall survival in breast cancer (Gasco et al., 2002).

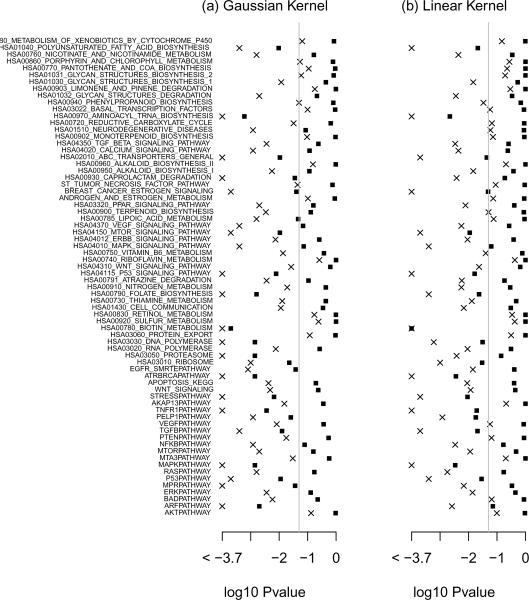

Here, we apply the proposed score test to assess the overall effect of various canonical pathways using a recent breast cancer study by van de Vijver et al. (2002). The primary goal of that study was to evaluate the performance of prognostic rules constructed from the microarray gene expression data of Van't Veer et al. (2002). There are a total of 260 patients with primary breast carcinomas from the Netherlands Cancer Institute. The median follow-up time was 8.8 years, and about 75% of the observations were censored. For illustration, we consider 70 pathways that are potentially related to breast cancer survival. The number of genes in a pathway ranges from 2 to 235, with a median of 22. We applied the proposed score test with both the Gaussian kernel and the linear kernel. The p-values for the 70 pathways are shown in Figure 1. With the Gaussian kernel, the score test identifies 56 (80%) pathways as significantly associated with breast cancer survival at p < 0.05; with the linear kernel, it identifies 46 (66%). For example, the AKAP13 pathway is significant with a p-value of 0.015 based on the Gaussian kernel but not significant with a p-value of 0.21 based on the linear kernel. This pathway contains 10 genes, and their effects appear to be non-linear: when comparing the fitted Cox model with linear effects only to the model with both linear and quadratic effects, the inclusion of quadratic effects yields an increase of 19.5 in the log partial likelihood with a p-value of 0.035. This also suggests that the underlying effects of these genes are potentially non-linear.
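The AKAP13 likelihood comparison can be checked with a short calculation. Two assumptions are made here, neither stated in the text: the quadratic model adds one squared term per gene (10 extra parameters), and the reported 19.5 is the likelihood ratio statistic, i.e., twice the gain in log partial likelihood. Under these assumptions the χ²₁₀ upper tail is about 0.034, close to the reported 0.035; if 19.5 were the gain in log partial likelihood itself, the statistic would be 39 and the p-value far smaller. The helper uses the closed-form tail for even degrees of freedom, so no statistics library is needed.

```python
import math

def chi2_sf_even_df(x, df):
    """Upper tail P(chi2_df > x) for even df: exp(-x/2) * sum_{i<df/2} (x/2)^i / i!."""
    if df <= 0 or df % 2:
        raise ValueError("df must be a positive even integer")
    half = x / 2.0
    term, total = 1.0, 1.0
    for i in range(1, df // 2):
        term *= half / i
        total += term
    return math.exp(-half) * total

# Assumed reading: 19.5 is the LR statistic on 10 extra parameters.
p_if_lr = chi2_sf_even_df(19.5, 10)
# Alternative reading: 19.5 is the log partial likelihood gain, so LR = 2 * 19.5.
p_if_loglik = chi2_sf_even_df(2 * 19.5, 10)
print(f"{p_if_lr:.4f}  {p_if_loglik:.2e}")  # about 0.0344 and 2.54e-05
```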

Figure 1.

log10 p-values for testing the overall effect of the 70 genetic pathways on breast cancer survival based on the kernel machine score test with the Gaussian kernel and with the linear kernel. The crosses and the squares represent p-values before and after adjusting for multiple comparisons, respectively. The results are based on B = 5000 perturbations.

In settings where multiple pathways are under examination, it is important to adjust for multiple comparisons. The proposed procedure can easily be extended to incorporate such an adjustment. Specifically, for the jth pathway, consider the observed score statistic and its null counterpart. The adjusted p-value can then be obtained by comparing the observed score statistic to the joint null distribution of the statistics across all pathways. In practice, realizations from this joint null distribution can be easily generated via the aforementioned resampling procedure: for b = 1, …, B, we generate a single set of standard normal random variables and use it to calculate the null statistics for all pathways simultaneously, which preserves the correlation between pathways. The adjusted p-value for each pathway is then calculated from these B realizations. After adjusting for multiple comparisons, 23 pathways remain significant when the Gaussian kernel is used and 17 when the linear kernel is used. For example, without adjusting for multiple comparisons, the EGFR pathway is significantly associated with breast cancer survival, with a p-value of 0.0008 based on the Gaussian kernel and 0.014 based on the linear kernel. However, after accounting for the fact that we tested 70 pathways, it remains significant with an adjusted p-value of 0.038 when the Gaussian kernel is used but is no longer significant when the linear kernel is used.
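The text does not spell out how the resampled statistics are combined across pathways. One standard choice, shown here as an assumption rather than the authors' exact rule, is the single-step min-P adjustment of Westfall and Young. Because pathway sizes range from 2 to 235 genes, the raw statistics are on different scales, so each resampled statistic is first converted to a p-value within its own pathway; the minimum over pathways in each resampling draw then gives the reference distribution. The function name and input layout are hypothetical.

```python
import numpy as np

def minp_adjust(observed, null):
    """Single-step min-P adjusted p-values.

    observed: shape (J,) observed statistics, one per pathway.
    null:     shape (B, J) resampled null statistics; row b must be computed from the
              same draw of standard normal multipliers for every pathway so that the
              between-pathway correlation is preserved.
    Returns (raw p-values, adjusted p-values), each of shape (J,).
    """
    observed = np.asarray(observed, dtype=float)
    null = np.asarray(null, dtype=float)
    B, J = null.shape
    raw = np.empty(J)
    null_p = np.empty((B, J))
    for j in range(J):
        col = np.sort(null[:, j])
        # B - searchsorted(..., side="left") counts resampled values >= x
        raw[j] = (B - np.searchsorted(col, observed[j], side="left")) / B
        null_p[:, j] = (B - np.searchsorted(col, null[:, j], side="left")) / B
    min_p = null_p.min(axis=1)  # smallest per-pathway p-value within each draw
    adjusted = np.array([(min_p <= raw[j]).mean() for j in range(J)])
    return raw, adjusted

# Toy usage: two perfectly correlated pathways, so the adjustment changes nothing.
toy_null = np.array([[1.0, 1.0], [2.0, 2.0], [3.0, 3.0], [4.0, 4.0]])
raw, adj = minp_adjust([3.5, 2.5], toy_null)
print(raw, adj)  # raw and adjusted p-values are both 0.25 and 0.5
```

When pathways are strongly correlated, the adjustment is mild; when they are nearly independent, it approaches a Bonferroni-type correction.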

6. Discussions

We have developed a powerful kernel machine test for the parametric or nonparametric effect of a pathway on survival. A key advantage of the kernel machine test is that it can effectively borrow information across genes within a pathway by accounting for between-gene correlation, yielding a powerful test with low DF when the genes within a pathway are moderately correlated. Its power is shown to be little affected as the number of genes within a pathway, including noise genes, increases, as long as the genes are correlated. When the underlying effects of Z are complex, testing based on the Gaussian kernel can lead to a substantial power gain compared with testing based on the linear kernel, which corresponds to the procedure of Goeman et al. (2005). The R code for implementing the proposed testing procedures will be available upon request.

The scaled χ2 approximation to the null distribution of the score statistic provides a way to assess the effective DF of the proposed test. As expected, the DF does not increase quickly with p when the Z are moderately or highly correlated; under such settings, the proposed test works well even for large p. On the other hand, when there is little correlation between the genes, the effective DF would be of the same order as p, and the test may perform poorly when p is of similar magnitude to n. In this case, resampling methods such as the permutation procedure could be used to estimate the null distribution of the test statistic.
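One common way to obtain a scaled χ² approximation aχ²ν, sketched here as an illustration rather than the paper's derivation, is to match the first two moments: the mean is aν and the variance is 2a²ν. The sketch assumes a sample of null draws of the statistic is available (for example, from a resampling procedure). The toy check uses a weighted sum of independent χ²₁ variables, for which ν = (Σw)²/Σw² is known exactly.

```python
import numpy as np

def scaled_chi2_fit(null_draws):
    """Fit a * chi2_nu to null draws by matching the first two moments.

    E = a * nu and Var = 2 * a^2 * nu give a = Var / (2 E) and nu = 2 E^2 / Var.
    """
    x = np.asarray(null_draws, dtype=float)
    m, v = x.mean(), x.var(ddof=1)
    return v / (2.0 * m), 2.0 * m * m / v

# Toy check with a known answer: draws of sum_k w_k * chi2_1 for fixed weights w.
rng = np.random.default_rng(2)
w = np.array([3.0, 1.0, 0.5, 0.5])
draws = (rng.standard_normal((200_000, w.size)) ** 2) @ w
a, nu = scaled_chi2_fit(draws)
# Theory: nu = (sum w)^2 / sum w^2 = 25 / 10.5 (about 2.38) and a = sum w^2 / sum w = 2.1.
print(f"a = {a:.2f}, effective DF = {nu:.2f}")
```

A fitted ν much smaller than the number of genes indicates that correlation among the genes is keeping the effective DF low.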

For the Gaussian kernel, the selection of ρ plays an important role in the score test. The large-sample approximation to the null distribution may perform poorly when ρ is too small or too large. We determine the range of ρ based on kernel PCA. It is important to note the trade-off in selecting ℓ0: a small ℓ0 might result in a significant power loss from approximating the kernel by only a few leading principal components, while a large ℓ0 may result in a higher effective DF and thus may also lead to a loss of efficiency. From Theorem 5.541 of Braun (2005), the projection error due to kernel PCA is approximately of order for some a > 0 when the eigenvalues of the kernel function K decay at a polynomial rate and of order for some b > 0 when the eigenvalues decay exponentially. It would be interesting to investigate the optimal threshold value ℓ0. Our simulation results suggest that in most cases, the score test with with respect to power and size.
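The thresholding by ℓ0 ("kernel PCA including 90% of eigenvalues" in Tables 3 and 4) can be illustrated with a minimal sketch. It assumes that kernel PCA is applied to the double-centered kernel matrix, that ℓ0 is the fraction of the eigenvalue sum retained, and the Gaussian parameterization exp(−‖z1 − z2‖²/ρ); the function names and data are hypothetical. A larger ℓ0 keeps more components, which is the trade-off described above: less approximation error but a larger effective DF.

```python
import numpy as np

def gaussian_kernel(Z, rho):
    """Gaussian kernel matrix exp(-||z_i - z_j||^2 / rho) (assumed parameterization)."""
    sq = np.sum(Z ** 2, axis=1)
    d2 = np.maximum(sq[:, None] + sq[None, :] - 2.0 * Z @ Z.T, 0.0)
    return np.exp(-d2 / rho)

def kernel_pca_truncate(K, ell0):
    """Rank-r approximation of the centered kernel matrix that keeps the leading
    eigen-components whose eigenvalues make up at least a fraction ell0 of the total."""
    n = K.shape[0]
    H = np.eye(n) - np.ones((n, n)) / n
    vals, vecs = np.linalg.eigh(H @ K @ H)        # eigenvalues in ascending order
    vals, vecs = vals[::-1], vecs[:, ::-1]         # reorder to descending
    vals = np.clip(vals, 0.0, None)                # remove tiny negative rounding errors
    share = np.cumsum(vals) / vals.sum()
    r = min(int(np.searchsorted(share, ell0)) + 1, n)  # smallest r with share >= ell0
    K_r = (vecs[:, :r] * vals[:r]) @ vecs[:, :r].T     # V_r diag(lambda_r) V_r'
    return K_r, r

rng = np.random.default_rng(3)
Z = rng.standard_normal((40, 10))  # hypothetical: 40 subjects, 10 genes
K = gaussian_kernel(Z, rho=10.0)
for ell0 in (0.9, 0.99):
    _, r = kernel_pca_truncate(K, ell0)
    print(f"ell0 = {ell0}: {r} of {K.shape[0]} components retained")
```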

Our proposed test adjusts for covariates U under a linearity assumption on the effects of U. When the true effect of U is non-linear, the test derived under this assumption may have incorrect size. Thus, it is important to examine the appropriateness of the linearity assumption. In practice, since U involves clinical variables that are typically well studied, prior information is often available to pre-specify potentially non-linear functional forms for U. More robust procedures, such as regression splines, could also be used to incorporate non-linear effects of U.
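One way to relax linearity in U, sketched here under stated assumptions, is to replace a continuous clinical covariate by a cubic regression spline basis and include those columns as adjusting covariates in the null Cox model. The truncated-power basis and quantile knots below are illustrative choices, not the authors' procedure, and the age values are hypothetical. No intercept column is included because the Cox partial likelihood has none.

```python
import numpy as np

def cubic_spline_basis(u, n_knots=3):
    """Truncated-power cubic spline basis for one covariate.

    Columns: u, u^2, u^3, then (u - k)_+^3 for each interior knot k placed at
    equally spaced quantiles of u. No intercept column (Cox models have none).
    """
    u = np.asarray(u, dtype=float)
    probs = np.linspace(0.0, 1.0, n_knots + 2)[1:-1]  # interior quantile levels
    knots = np.quantile(u, probs)
    cols = [u, u ** 2, u ** 3] + [np.clip(u - k, 0.0, None) ** 3 for k in knots]
    return np.column_stack(cols), knots

# Hypothetical covariate: age in years, standardized first for numerical stability.
age = np.array([38.0, 45.0, 51.0, 47.0, 62.0, 55.0, 41.0, 58.0])
z = (age - age.mean()) / age.std()
basis, knots = cubic_spline_basis(z, n_knots=2)
print(basis.shape)  # (8, 5)
```

The resulting columns would replace the single linear term for that covariate when the null model is fitted.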

Acknowledgement

Cai's research is supported by grants R01-GM079330-03 from the National Institute of General Medical Sciences and DMS 0854970 from the National Science Foundation. Lin's research is supported by the grants R37-CA076404 and P01-CA134294 from the National Cancer Institute. The authors thank the editor and the reviewers for their helpful comments for improving the manuscript.

Appendix: Asymptotic Distribution for the Score Test Statistic

Throughout, we assume that , Z is bounded, K(z1, z2, ρ) is continuously differentiable with respect to all of its arguments and is symmetric about z1 and z2, the conditional density of C given Z is continuous and bounded and the marginal density of C is bounded away from 0 on [0, τ]. We assume that the upper bound of the support of X, denoted by τ, is finite.

To derive the asymptotic distribution for , we first note that (Fleming and Harrington, 1991), , , where γ0 is the true value of γ,

| (A.1) |

, , for any vector u, , , and . It follows that

| (A.2) |

We next write , where

From (A.2), . By a uniform law of large numbers (ULLN, Pollard, 1990), . This, together with Lemma A1 of Bilias et al (1997), implies that

Furthermore, is asymptotically equivalent to

This, together with a ULLN for U-processes (Nolan and Pollard, 1987) and Lemma A1 of Bilias et al. (1997), implies that . Lastly, by a ULLN and the consistency of , , . This, together with the approximations for and , implies that .

We next derive the asymptotic distribution of . First, both I(Z ≤ z) and Mi(t) have finite pseudo-dimensions, which implies that converge jointly to zero-mean Gaussian processes , and W. Since is a smooth functional of , and W, it then follows from a strong representation theorem (Pollard, 1990) and Lemma A1 of Bilias et al. (1997) that converges weakly to the process

| (A.3) |

References

- Bengio Y, Delalleau O, Le Roux N, Paiement J, Vincent P, Ouimet M. Learning Eigenfunctions Links Spectral Embedding and Kernel PCA. Neural Computation. 2004;16:2197–2219. doi: 10.1162/0899766041732396.

- Bilias Y, Gu M, Ying Z. Towards a general asymptotic theory for Cox model with staggered entry. The Annals of Statistics. 1997;25:662–682.

- Braun M. Spectral Properties of the Kernel Matrix and their Application to Kernel Methods in Machine Learning. PhD thesis. University of Bonn; 2005.

- Breslow N, Clayton D. Approximate inference in generalized linear mixed models. Journal of the American Statistical Association. 1993;88:9–25.

- Brown M, Grundy W, Lin D, Cristianini N, Sugnet C, Furey T, Ares M, Haussler D. Knowledge-based analysis of microarray gene expression data by using support vector machines. Proceedings of the National Academy of Sciences. 2000;97:262–267. doi: 10.1073/pnas.97.1.262.

- Buhmann M. Radial Basis Functions: Theory and Implementations. Cambridge University Press; 2003.

- Burges C. A Tutorial on Support Vector Machines for Pattern Recognition. Data Mining and Knowledge Discovery. 1998;2:121–167.

- Cai T, Wei L, Wilcox M. Semiparametric regression analysis for clustered failure time data. Biometrika. 2000;87:867–878.

- Commenges D, Andersen P. Score test of homogeneity for survival data. Lifetime Data Analysis. 1995;1:145–156. doi: 10.1007/BF00985764.

- Cox D. Regression models and life tables (with Discussion). Journal of the Royal Statistical Society, Series B. 1972;34:187–220.

- Cristianini N, Shawe-Taylor J. An Introduction to Support Vector Machines. Cambridge University Press; 2000.

- Davies R. Hypothesis testing when a nuisance parameter is present only under the alternative. Biometrika. 1987;74:33–43.

- Fleming T, Harrington D. Counting Processes and Survival Analysis. John Wiley and Sons; NY: 1991.

- Furey T, Cristianini N, Duffy N, Bednarski D, Schummer M, Haussler D. Support vector machine classification and validation of cancer tissue samples using microarray expression data. Bioinformatics. 2000;16:906–914. doi: 10.1093/bioinformatics/16.10.906.

- Gasco M, Shami S, Crook T. The p53 pathway in breast cancer. Breast Cancer Research. 2002;4:70–76. doi: 10.1186/bcr426.

- Goeman J, Oosting J, Cleton-Jansen A, Anninga J, van Houwelingen H. Testing association of a pathway with survival using gene expression data. Bioinformatics. 2005;21:1950–1957. doi: 10.1093/bioinformatics/bti267.

- Goeman J, Van De Geer S, De Kort F, Van Houwelingen H. A global test for groups of genes: testing association with a clinical outcome. Bioinformatics. 2004;20:93–99. doi: 10.1093/bioinformatics/btg382.

- Goeman J, Van De Geer S, Van Houwelingen H. Testing against a high dimensional alternative. Journal of the Royal Statistical Society: Series B (Statistical Methodology). 2006;68:477–493.

- Holbro T, Civenni G, Hynes N. The ErbB receptors and their role in cancer progression. Experimental Cell Research. 2003;284:99–110. doi: 10.1016/s0014-4827(02)00099-x.

- Kimeldorf G, Wahba G. A correspondence between Bayesian estimation on stochastic processes and smoothing by splines. Ann. Math. Statist. 1970;41:495–502.

- Kuwahara Y, Hosoi H, Osone S, Kita M, Iehara T, Kuroda H, Sugimoto T. Antitumor activity of gefitinib in malignant rhabdoid tumor cells in vitro and in vivo. Clinical Cancer Research. 2004;10:5940–5948. doi: 10.1158/1078-0432.CCR-04-0192.

- Lee Y, Lee C. Classification of multiple cancer types by multicategory support vector machines using gene expression data. Bioinformatics. 2003;19:1132–1139. doi: 10.1093/bioinformatics/btg102.

- Li H, Luan Y. Kernel Cox regression models for linking gene expression profiles to censored survival data. Biocomputing. 2003:65.

- Liu D, Ghosh D, Lin X. Estimation and testing for the effect of a genetic pathway on a disease outcome using logistic kernel machine regression via logistic mixed models. BMC Bioinformatics. 2008;9:292. doi: 10.1186/1471-2105-9-292.

- Liu D, Lin X, Ghosh D. Semiparametric Regression of Multidimensional Genetic Pathway Data: Least-Squares Kernel Machines and Linear Mixed Models. Biometrics. 2007;63:1079–1088. doi: 10.1111/j.1541-0420.2007.00799.x.

- Nathanson K, Wooster R, Weber B. Breast cancer genetics: what we know and what we need. Nature Medicine. 2001;7:552–556. doi: 10.1038/87876.

- Nicholson R, Gee J, Harper M. EGFR and cancer prognosis. European Journal of Cancer. 2001;37:9–15. doi: 10.1016/s0959-8049(01)00231-3.

- Nolan D, Pollard D. U-processes: rates of convergence. The Annals of Statistics. 1987:780–799.

- Olopade O, Grushko T, Nanda R, Huo D. Advances in Breast Cancer: Pathways to Personalized Medicine. Clinical Cancer Research. 2008;14:7988. doi: 10.1158/1078-0432.CCR-08-1211.

- Park Y, Wei L. Estimating subject-specific survival functions under the accelerated failure time model. Biometrika. 2003;90:717–723.

- Parzen M, Wei L, Ying Z. A resampling method based on pivotal functions. Biometrika. 1994;81:341–350.

- Pollard D. Empirical processes: theory and applications. Institute of Mathematical Statistics; 1990.

- Ramaswamy S, Tamayo P, Rifkin R, et al. Multiclass cancer diagnosis using tumor gene expression signatures. Proceedings of the National Academy of Sciences. 2001;98:15149. doi: 10.1073/pnas.211566398.

- Scholkopf B, Smola A. Learning with kernels. MIT Press; Cambridge, Mass: 2002.

- Scholkopf B, Smola A, Muller K. Nonlinear Component Analysis as a Kernel Eigenvalue Problem. Neural Computation. 1998;10:1299–1319.

- van de Vijver M, He Y, van't Veer L, et al. A gene-expression signature as a predictor of survival in breast cancer. New England Journal of Medicine. 2002;347:1999–2009. doi: 10.1056/NEJMoa021967.

- Van't Veer L, Dai H, Van de Vijver M, et al. Gene expression profiling predicts clinical outcome of breast cancer. Nature. 2002;415:530. doi: 10.1038/415530a.

- Vapnik V. Statistical learning theory. Wiley; NY: 1998.

- Vo T, Phan J, Huynh K, Wang M. Reproducibility of Differential Gene Detection across Multiple Microarray Studies. Engineering in Medicine and Biology Society, 2007. EMBS 2007; 29th Annual International Conference of the IEEE; 2007. pp. 4231–4234.

- Wirtenberger M, Tchatchou S, Hemminki K, et al. Association of genetic variants in the Rho guanine nucleotide exchange factor AKAP13 with familial breast cancer. Carcinogenesis. 2006;27:593. doi: 10.1093/carcin/bgi245.

- Young R. Biomedical discovery with DNA arrays. Cell. 2000;102:9–15. doi: 10.1016/s0092-8674(00)00005-2.

- Zwald L, Blanchard G. On the Convergence of Eigenspaces in Kernel Principal Component Analysis. Advances In Neural Information Processing Systems. 2006;18:1649.