Abstract

We investigated the functional organization of neural systems supporting language production when the primary language articulators are also used for meaningful, but non-linguistic, expression such as pantomime. Fourteen hearing non-signers and 10 deaf native users of American Sign Language (ASL) participated in an H₂¹⁵O PET study in which they generated action pantomimes or ASL verbs in response to pictures of tools and manipulable objects. For pantomime generation, participants were instructed to “show how you would use the object.” For verb generation, signers were asked to “generate a verb related to the object.” The objects for this condition were selected to elicit handling verbs that resemble pantomime (e.g., TO-HAMMER, in which the hand configuration and movement mimic the act of hammering) and non-handling verbs that do not (e.g., POUR-SYRUP, produced with a “Y” handshape). For the baseline task, participants viewed pictures of manipulable objects and an occasional non-manipulable object and decided whether the objects could be handled, gesturing “yes” (thumbs up) or “no” (hand wave). Relative to baseline, generation of ASL verbs engaged left inferior frontal cortex, but when non-signers produced pantomimes for the same objects, no frontal activation was observed. Both groups recruited left parietal cortex during pantomime production. However, for deaf signers the activation was more extensive and bilateral, which may reflect a more complex and integrated neural representation of hand actions. We conclude that the production of pantomime versus ASL verbs (even those that resemble pantomime) engages partially segregated neural systems that support praxic versus linguistic functions.

1.1 Introduction

For sign languages, the primary language articulators (the hands) are also used for meaningful, but non-linguistic, expression, e.g., pantomime production or emblematic gestures such as “thumbs up/down.” In contrast, the use of the speech articulators to express meaningful non-linguistic information is relatively limited, e.g., vocal imitation or a few emblematic facial gestures such as sticking out one’s tongue. Furthermore, the sign articulators perform everyday actions that are clearly related to many symbolic gestures (e.g., object manipulation), whereas everyday actions of the speech articulators (e.g., chewing, swallowing) are ordinarily unrelated to symbolic gesture. There is also convincing evidence for a diachronic relationship between certain symbolic gestures and lexical signs within a signing community. For example, the “departure” gesture used across the Mediterranean was incorporated into French Sign Language as the verb PARTIR, “to leave” (Janzen & Shaffer, 2002), and the Central American gesture indicating a small animal has been incorporated into Nicaraguan Sign Language as the classifier form meaning “small animal” (Kegl, Senghas, & Coppola, 1999). Although signs may have a gestural origin, they differ in systematic ways from gesture. For example, signs exhibit duality of patterning and hierarchical phonological structure (e.g., Sandler & Lillo-Martin, 2006), whereas gestures are holistic with no internal structure (McNeill, 1992). Signs belong to grammatical categories, and gestures do not. Sign production involves lemma selection and phonological code retrieval (Emmorey, 2007), whereas pantomimic gesture involves visual-motoric imagery of action production. Here, we investigate the engagement of neural substrates that might differentiate sign production from gesture production.

We focus on verbs in American Sign Language (ASL) that resemble pantomimic actions: handling classifier verbs in which the hand configuration depicts how the human hand holds and manipulates an instrument. For example, the sign BRUSH-HAIR is made with a grasping handshape and a “brushing” motion at the head. Such verbs are most often referred to as classifier verbs because the handshape is morphemic and refers to a property of the referent object (e.g., the handle of a brush; Supalla, 1986; see papers in Emmorey, 2003, for discussion). The form of handling classifier verbs is quite iconic, depicting the hand configuration used to grasp and manipulate an object and the movement that is typically associated with the object’s manipulation. Given the pantomime-like quality of these verbs, we investigated the neural bases for the production of pantomimes, handling classifier verbs (hereafter referred to as handling verbs), and ASL verbs that do not exhibit such sensory-motoric iconicity (hereafter referred to as non-handling verbs). We restricted our investigation to pantomimes and excluded emblems because both signs and pantomimes can easily be elicited with picture stimuli and because pantomimes are similar in form to handling verbs.

1.2 Dissociations between sign and pantomime production

Evidence that the ability to sign can be dissociated from pantomime ability comes from two case studies of deaf aphasic signers (Corina et al., 1992; Marshall et al., 2004). Corina et al. (1992) described the case of WL, who had a large fronto-temporo-parietal lesion in the left hemisphere. WL exhibited poor sign comprehension, and his signing was characterized by phonological and semantic errors with reduced grammatical structure. A relevant example of a phonological error by WL is his production of the sign SCREWDRIVER, which iconically depicts the shaft of a screwdriver (index and middle fingers extended) twisting into the palm of the non-dominant hand. He substituted an A-bar handshape (fist with thumb extended) for the target handshape. In contrast to his sign production, WL was unimpaired in his ability to produce pantomime. For example, instead of signing DRINK (a curved “C” hand moves toward the mouth, with wrist rotation, as if drinking), WL cupped his hands together to form a small bowl. WL was able to produce stretches of pantomime and tended to substitute pantomimes for signs, even when pantomime required more complex movements. Similarly, Marshall et al. (2004) report another case of a deaf aphasic signer, “Charles,” who also had a left temporo-parietal lesion and exhibited sign anomia that was parallel to speech anomia. For example, his sign-finding difficulties were sensitive to sign frequency and to cueing, and he produced both semantic and phonological errors. However, his gesture production was intact and superior to his sign production even when the forms of the signs and gestures were very similar. These lesion data indicate that the neural systems for sign language and pantomime production are not identical, but it is unlikely that these neural systems are completely distinct and independent.
To our knowledge, there are no existing reports of brain-damaged patients with intact signing ability but impaired ability to produce pantomimic gestures, which suggests that the neural systems supporting pantomimic communication might also be involved in sign language processing (MacSweeney, Capek, Campbell, & Woll, 2008).

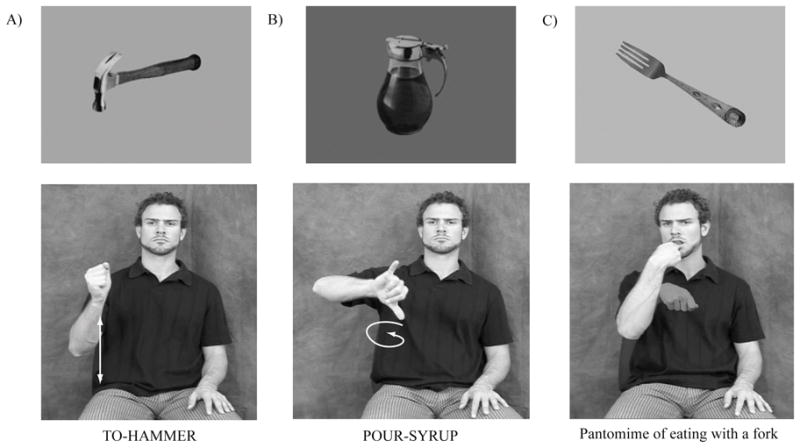

To identify overlapping neural substrates as well as those that might differentiate between sign and gesture production, we elicited signs and pantomimes from neurologically-intact deaf signers and from hearing non-signers. In the study reported here, participants viewed pictures of manipulable objects (e.g., a saw, a fork) and were asked to generate either an associated ASL verb (deaf signers only) or a pantomime showing how the object is typically used (see Figure 1). In a control task, participants viewed manipulable objects and an occasional non-manipulable object (e.g., a house) and were asked to decide whether the objects could be held in the hand, gesturing either “yes” (thumbs up) or “no” (hand wave). The manual response controlled for hand motion, and the decision task controlled for semantic processing of manipulable objects. This relatively high-level control task allowed us to examine the specific neural regions that are engaged when participants pantomime an action or produce an ASL verb associated with an object, as distinct from recognizing the object and its manipulability.

Figure 1.

Illustration of sample object pictures and the verbs or pantomimes generated in response to these stimuli.

1.3 Neural substrates for pantomime production by hearing non-signers vs. sign production by deaf signers

Previous studies of pantomime production in hearing non-signers point to a critical role for left parietal cortices. In a positron emission tomography (PET) study, Rumiati et al. (2004) reported increased neural activity in a dorsal region of the inferior parietal lobule (IPL) when participants pantomimed the use of a pictured object compared to naming that object. Using fMRI, Imazu et al. (2007) also found increased activity in left IPL when Japanese participants pantomimed picking up objects with chopsticks, compared to the actual use of chopsticks. Rumiati et al. (2004) hypothesize that left IPL is involved in the retrieval of object-related action schemata during pantomime execution (see also Buxbaum, 2001). In addition, several studies report increased neural activity in the left superior parietal lobule (SPL) when participants pantomime using tools compared to a motoric baseline task (Choi et al., 2001; Moll et al., 2000). Left SPL may be involved in the on-line organization of arm and hand actions in relation to an imagined object in space. Superior parietal cortex has been implicated in the representation of dynamic body schemas that monitor the location of the hand and arm during execution of movements (Khan et al., 2005; Wolpert, Goodbody, & Husain, 1998).

Left parietal cortices are also recruited during sign language production (for reviews see Corina & Knapp, 2006; MacSweeney et al., 2008). Greater activation has been reported in left inferior and superior parietal lobules for signing compared to speaking (Braun et al., 2001; Emmorey, Mehta, & Grabowski, 2007). Emmorey et al. (2007) suggested that the left superior parietal lobule may be involved in proprioceptive monitoring during sign language production. The inferior parietal lobule, specifically the supramarginal gyrus (SMG), has been implicated in lexical-phonological processing for sign language. Corina et al. (1999) found that direct stimulation of SMG resulted in semantic errors and handshape substitutions in a picture naming task.

In addition, phonological similarity judgments in sign language elicited activation in the superior portion of left SMG, extending into SPL (MacSweeney, Waters, Brammer, Woll, & Goswami, 2008). Beyond left parietal cortex, sign language production also depends upon left inferior frontal cortex. Damage to this region causes sign language aphasia characterized by non-fluent signing and production errors (Poizner, Bellugi, & Klima, 1987; Hickok et al., 1996). During overt signing, several studies report significant neural activity in left inferior frontal cortex (BA 44, 45, 46, and 47; Corina et al., 2003; Braun et al., 2001; Emmorey et al., 2004; Petitto et al., 2000). In a conjunction analysis, Emmorey et al. (2007) found that Broca’s area (BA 45) was equivalently engaged for both sign and word production. The lexical-level functions of the left inferior frontal cortex are varied but include phonological encoding, syntactic computations, and lexical semantic processing.

It is unclear whether the left inferior frontal gyrus plays a critical role in pantomime production (Frey, 2008). Neuroimaging results are mixed, with some studies reporting left IFG activation (Hermsdörfer et al., 2007; Peigneux et al., 2004; Rumiati et al., 2004), while others report no left IFG activation during pantomime production (Choi et al., 2001; Imazu et al., 2007; Moll et al., 2000). Activation in left IFG is generally attributed to the retrieval and processing of object semantics associated with tool use pantomime. However, an impairment of the semantic knowledge of tools and manipulable objects can occur in the face of an intact ability to produce tool-use pantomimes (Buxbaum, Schwartz, & Carew, 1997; Rosci, Chiesa, Laiacona, & Capitani, 2003). Impairments in pantomime production are most frequently observed with damage to left parietal cortex (e.g., Buxbaum, Johnson-Frey, & Bartlett-Williams, 2005; Rothi, Heilman, & Watson, 1985), although there is some evidence that damage to left IFG is also associated with poor pantomime performance (Goldenberg et al., 2007).

Thus, there is evidence that both sign and pantomime production engage left fronto-parietal regions. Semantic processes are associated with inferior frontal cortex, while parietal regions are associated with manual articulation, object manipulation, and visually guided movements. By directly comparing sign and pantomime production by deaf signers, we can examine the extent to which distinct frontal and parietal regions are engaged during lexical verb retrieval and production compared to the production of familiar, non-linguistic actions. We predict that both verb generation and pantomime generation will engage fronto-parietal regions, but each may have a distinct neural signature. Specifically, we predict that verb generation will engage the left inferior frontal cortex to a greater extent than pantomime production because key linguistic processes are associated with this area (e.g., lexical search, phonological encoding, semantic processing) and because tool-use pantomime does not always entail semantic processing of the object (e.g., Rosci et al., 2003). We also predict that verb and pantomime generation will exhibit distinct patterns or levels of activation within parietal cortex, reflecting differences in the lexical versus praxic functions that underlie these tasks.

To further investigate the neural systems that underlie pantomime versus sign production, we included a group of hearing non-signers in the study. These participants produced pantomimes for two sets of manipulable objects. For one set, the deaf signers were asked to generate linguistic responses (ASL handling verbs related to the object), while the hearing non-signers were asked to produce pantomimes (likely to be similar in form to the ASL handling verbs). The between-group comparison for this set of objects provides an additional measure of the distinction between the neural systems that support sign language versus pantomime production. For the second set of objects, both deaf and hearing participants were asked to generate pantomimes related to object use. The between-group comparison for these objects will reveal whether pantomime production by deaf signers and by hearing non-signers engages similar cortical regions. It is possible that knowledge and use of ASL may alter the neural systems that are recruited for pantomime production.

Recently, Corina et al. (2007) reported that deaf signers did not engage frontal-parietal cortices when passively viewing manual actions that were self-oriented (e.g., scratch neck, lick lips, rub shoulder) or object-oriented (e.g., bite an apple, read a book, pop a balloon; the model handled the objects). In contrast, hearing non-signers showed robust activation in frontal-parietal regions when viewing these actions. Corina et al. (2007) hypothesized that life-long experience with a visual language shifts neural processing of human actions to extrastriate association areas, regions that were particularly active for the deaf signers. Corina et al. (2007) suggested that this shift arises because signers must actively filter human actions to quickly distinguish communicative from non-communicative actions for further linguistic processing. Such preprocessing of human action is not required for non-signers. Similarly, Emmorey, Xu, Gannon, Goldin-Meadow, and Braun (2009) found that passively viewing pantomimes strongly activated frontal-parietal regions in hearing non-signers, whereas activation was primarily observed in bilateral middle temporal regions in deaf signers. Emmorey et al. (2009) hypothesized that deaf signers recognize pantomimes quickly and relatively automatically and that this ease of processing leads to a substantial reduction of neuronal firing within frontal-parietal cortices.

1.4 Handling (“pantomimic”) verbs vs. non-handling verbs

Finally, given that ASL handling verbs are nearly identical in form to pantomimic gestures (see Figure 1), it was important to also elicit non-handling ASL verbs in order to assess whether the pantomimic properties of handling verbs affect the pattern of neural activity in frontal or parietal cortices. We did not anticipate differences between handling and non-handling verbs because we previously found very similar results for the production of handling verbs (e.g., BRUSH-HAIR, ERASE-BLACKBOARD) and non-handling “general verbs” (e.g., YELL, SLEEP) (Emmorey et al., 2004). Specifically, the production of both verb types in a picture-naming task engaged left inferior frontal cortex and the left superior parietal lobule (the baseline comparison was a face-orientation judgment task), and there were no significant differences in neural activation between the two verb types. However, the contrast between pantomimes and non-handling verbs may reveal differences that are not observed when pantomimes are contrasted with handling verbs. Specifically, because the phonological form of non-handling verbs does not involve reaching movements or grasping-type hand configurations (unlike object-use pantomimes or handling verbs), we may find a greater difference within parietal cortices between pantomimes and non-handling verbs than between pantomimes and handling verbs.

In sum, we investigated whether deaf signers and hearing non-signers differ with respect to neural regions engaged during the production of pantomimed actions and whether distinct patterns of neural activity are observed for the production of pantomimed actions, pantomimic-like ASL verbs, and non-pantomimic ASL verbs by deaf signers.

2. Methods

2.1. Participants

Twenty-four right-handed adults participated in the study. Ten participants (six males) were native deaf signers, aged 20–27 years (mean age = 23.2 years). All had deaf parents and acquired ASL as their first language from birth. Eight participants had severe to profound hearing loss, and hearing loss level was unknown for two participants. All deaf participants used ASL as their primary and preferred language. The other fourteen participants (seven males) reported normal hearing, had no knowledge of ASL, and were native English speakers (mean age = 28.7 years; range 22–41 years). All deaf and hearing participants had 12 or more years of formal education, and all gave informed consent in accordance with Federal and institutional guidelines.

2.2 Materials

To select objects that would elicit handling verbs as well as non-handling verbs, 166 photographs of manipulable objects were presented to an independent group of 16 deaf signers. Pictures were presented using Psyscope software (Cohen et al., 1993) at the same rate as for the neuroimaging experiment (see below). Participants were asked to “produce a verb that names an action you would perform with that object.” Instructions were given in ASL by a native signer. We selected 32 object pictures that consistently elicited handling classifier verbs and 32 objects that consistently elicited non-handling classifier verbs (i.e., a majority of participants produced the desired response). In addition, the object pictures that elicited handling verbs were selected because they also elicited consistent and easily performed pantomimic gestures from hearing non-signers, as described below. For example, objects in the handling verb elicitation set included a scrub brush (TO-SCRUB), a dart (THROW-DART), and a mug (TO-DRINK). Objects in the non-handling verb elicitation set included a ruler (TO-MEASURE), a paint brush (TO-PAINT), and syrup (TO-POUR-SYRUP). Example stimuli and verbs are presented in Figure 1.

To select object pictures that would elicit clear and appropriate pantomimic gestures, we presented the same object photographs to a separate group of 20 hearing non-signers and 16 deaf signers. Participants were asked “to pantomime how you would use that object or what you would do with that object.” We selected 32 object pictures that elicited consistent and easily performed pantomimes from both participant groups (i.e., the majority of participants produced appropriate pantomimes with little hesitation). Objects in the pantomime elicitation set included a fork, a drill, a key, and scissors (see Figure 1 for an example target pantomime). In addition, these object pictures were selected because they elicited pantomimes from deaf signers that were distinct from ASL verbs associated with the objects. For example, given a picture of a broom, participants produced a pantomime of sweeping with a broom, which does not resemble the ASL verb TO-SWEEP. Thus, we could determine whether deaf participants in the neuroimaging study were producing the expected pantomimes, rather than generating ASL verbs.
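Both norming steps keep an item only if a majority of participants produced the desired response. The filter can be sketched in Python as below, assuming responses have already been coded into discrete labels. The function name, the strict-majority cut-off (more than half), and the tie-break that prefers the most consistent items are illustrative assumptions, not the authors' documented procedure, and the example responses are invented.

```python
from collections import Counter


def select_consistent_items(responses, targets, n_items):
    """Return up to n_items items whose target response was produced by a
    strict majority of norming participants (hypothetical helper).

    responses: dict mapping item name -> list of coded responses, one per participant
    targets:   dict mapping item name -> the desired response for that item
    """
    qualifying = []
    for item, given in responses.items():
        proportion = Counter(given)[targets[item]] / len(given)
        if proportion > 0.5:  # "a majority of participants produced the desired response"
            qualifying.append((proportion, item))
    # Assumed tie-break: most consistent items first, then alphabetical order.
    qualifying.sort(key=lambda pair: (-pair[0], pair[1]))
    return [item for _, item in qualifying[:n_items]]


# Hypothetical coded responses from 16 signers (not the study's data).
responses = {
    "mug": ["TO-DRINK"] * 15 + ["CUP"],
    "dart": ["THROW-DART"] * 12 + ["DART"] * 4,
    "ruler": ["TO-MEASURE"] * 7 + ["RULER"] * 9,
}
targets = {"mug": "TO-DRINK", "dart": "THROW-DART", "ruler": "TO-MEASURE"}
print(select_consistent_items(responses, targets, n_items=32))  # ['mug', 'dart']
```

Here "ruler" is dropped because only 7 of 16 hypothetical responses match the target.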

Finally, to assess the iconicity and meaningfulness of the elicited handling verbs, pantomimic gestures, and non-handling ASL verbs, we presented video of the target ASL verbs and pantomimes produced by a deaf native signer to another group of 10 hearing non-signers. Participants were asked to rate each gesture as having no meaning (rated as 0), weak meaning (rated as 1), moderate/fairly clear meaning (rated as 2), or absolute/strong direct meaning (rated as 3) and to write down the meaning of each gesture. The non-handling verbs (mean rating = 0.70; SD = 0.30) were rated as less meaningful than the handling verbs (mean rating = 1.73; SD = 0.39) and the pantomimic gestures (mean rating = 1.60; SD = 0.33). The majority of participants were also able to identify the general meaning of the target ASL handling verbs and the pantomimic gestures.

2.3 Procedure

Image Acquisition

All participants underwent MR scanning in a 3.0 T Siemens TIM Trio scanner to obtain a 3D T1-weighted structural scan with isotropic 1 mm resolution using the following protocol: MP-RAGE, TR = 2530 ms, TE = 3.09 ms, TI = 800 ms, FOV = 25.6 cm, matrix 256 × 256 × 208. The MR scans were used to confirm the absence of structural abnormalities, aid in anatomical interpretation of results, and facilitate registration of PET data to a Talairach-compatible atlas.

Positron emission tomography (PET) data were acquired with a Siemens/CTI HR+ PET system using the following protocol: 3D, 63 image planes, 15 cm axial FOV, 4.5 mm transaxial and 4.2 mm axial FWHM resolution. Participants performed the experimental tasks during the intravenous bolus injection of 15 mCi of [¹⁵O]water. Arterial blood sampling was not performed.

Images of rCBF were computed using the [¹⁵O]water autoradiographic method (Herscovitch et al., 1983; Hichwa et al., 1995) as follows. Dynamic scans were initiated with each injection and continued for 100 seconds, during which 20 five-second frames were acquired. To determine the time course of bolus transit from the cerebral arteries, time-activity curves were generated for regions of interest placed over major vessels at the base of the brain. The eight frames representing the first 40 seconds immediately after transit of the bolus from the arterial pool were summed to make an integrated 40-second count image. These summed images were reconstructed into 2 mm pixels in a 128 × 128 matrix.
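The frame-integration step can be sketched in Python with NumPy, assuming the 20 dynamic frames are already available as an array and that a single arterial time-activity curve has been extracted. The text does not say how the start of the 40 s window was read from the curves, so the fraction-of-peak arrival criterion below, like the helper names, is an illustrative assumption.

```python
import numpy as np

FRAME_SECONDS = 5      # 20 five-second frames span the 100 s dynamic scan
N_SUMMED_FRAMES = 8    # number of frames in the integration window
INTEGRATION_SECONDS = FRAME_SECONDS * N_SUMMED_FRAMES  # 40 s


def bolus_arrival_frame(vessel_tac, fraction=0.5):
    """First frame at which the vessel time-activity curve reaches `fraction`
    of its peak (assumed arrival criterion, for illustration only)."""
    tac = np.asarray(vessel_tac, dtype=float)
    return int(np.argmax(tac >= fraction * tac.max()))


def integrated_count_image(frames, vessel_tac):
    """Sum the 8 frames that start at bolus arrival into one 40 s count image.

    frames: array of shape (n_frames, ...) with one dynamic PET frame per entry.
    """
    frames = np.asarray(frames, dtype=float)
    start = bolus_arrival_frame(vessel_tac)
    stop = start + N_SUMMED_FRAMES
    if stop > frames.shape[0]:
        raise ValueError("fewer than 8 frames remain after bolus arrival")
    return frames[start:stop].sum(axis=0)


# Synthetic example: frame k has value k in every voxel; the bolus arrives at frame 6.
frames = np.arange(20, dtype=float)[:, None, None] * np.ones((20, 2, 2))
tac = [0.0] * 6 + [10.0] * 14
image = integrated_count_image(frames, tac)  # each voxel sums frames 6..13 = 76
```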

Tasks

While undergoing PET scanning, participants performed the following tasks when presented with object photographs: a) generate an associated ASL verb (deaf only), b) generate an object-related pantomime, or c) make a handling decision. For the verb generation task, one set of objects elicited handling verbs and another elicited non-handling verbs. For both sets of objects, deaf participants were instructed in ASL by a deaf native signer to produce a verb that named an action performed with the object. They were not specifically told to produce handling or non-handling verbs. For the pantomime generation task, both participant groups were instructed to “show me how you would use the pictured object.” Hearing participants were also instructed to generate pantomimes for the same object stimuli that were used to elicit handling verbs from the deaf participants. For the control task, participants were instructed to decide whether the pictured object could be held in the hand and to indicate their response with either a “yes” gesture (thumbs up) or a “no” gesture (palm down, horizontal hand wave). Three to four objects in each block of 16 were not manipulable (e.g., a highway, a radio tower, a dam). Four practice items preceded each target task.

For all tasks, picture stimuli were presented for 2 s followed by a 500 ms interstimulus interval. For each task condition, 32 picture stimuli were presented to participants using I-glasses SVGA Pro goggles (I-O Display Systems; Sacramento, CA) in two separate blocks of 16 pictures. For each block, the picture stimuli were presented from 5 s after the injection (approximately 7–10 s before the bolus arrived in the brain) until 35 s after injection.

Participants’ responses were recorded during the PET study by either a native ASL signer or a native English speaker. Responses were also digitally recorded for confirmation and later analysis; however, due to equipment malfunction, the responses of five deaf and three hearing participants were not recorded. Participants were allowed to produce verbs and/or gestures with both hands (a flexible intravenous cannula was used for the [¹⁵O]water injection, which permitted arm movement after the injection).

Spatial normalization

PET data were spatially normalized to a Talairach-compatible atlas through a series of coregistration steps (see Damasio et al., 2004; Grabowski et al., 1995, for details). Prior to registration, the MR data were manually traced to remove extracerebral voxels. Talairach space was constructed directly for each participant via user identification of the anterior and posterior commissures and the midsagittal plane on the 3D MRI data set in Brainvox. An automated planar search routine defined the bounding box, and a piecewise linear transformation was applied (Frank et al., 1997), as defined in the Talairach atlas (Talairach & Tournoux, 1988). After Talairach transformation, the MR data sets were warped (AIR 5th-order nonlinear algorithm) to an atlas space constructed by averaging 50 normal Talairach-transformed brains, rewarping each brain to the average, and finally averaging them again, analogous to the procedure described by Woods et al. (1999). Additionally, the MR images were segmented using a validated tissue segmentation algorithm (Grabowski et al., 2000), and the gray matter partition images were smoothed with a 10 mm kernel. These smoothed gray matter images served as the target for registering participants’ PET data to their MR images.

For each participant, PET data from each injection were coregistered to each other using Automated Image Registration (AIR 5.25, Roger Woods, UCLA). The coregistered PET data were averaged, and the mean PET image was then registered to the smoothed gray matter partition using FSL’s linear registration tool, FLIRT (Jenkinson & Smith, 2001; Jenkinson et al., 2002). The deformation fields computed for the MR images were then applied to the PET data to bring them into register with the Talairach-compatible atlas. After spatial normalization, the PET data were smoothed with a 16 mm FWHM Gaussian kernel using complex multiplication in the frequency domain. The final calculated image resolution (FWHM) was 17.9 × 17.9 × 18.9 mm. PET data from each injection were normalized to a global mean of 1000 counts per voxel.
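The last two steps of this paragraph can be sketched with NumPy. Smoothing by complex multiplication in the frequency domain multiplies the image's Fourier transform by the Gaussian's transfer function, exp(−2π²σ²f²), with σ = FWHM / (2√(2 ln 2)). The global normalization is assumed here to be proportional scaling over in-brain voxels. The 2 mm voxel size, the brain mask, and the helper names are assumptions for illustration. Because the FFT treats the volume as periodic, a real pipeline would pad the data to avoid wrap-around at the edges.

```python
import numpy as np


def fwhm_to_sigma(fwhm_mm):
    """Convert a Gaussian FWHM to its standard deviation."""
    return fwhm_mm / (2.0 * np.sqrt(2.0 * np.log(2.0)))


def smooth_fft(volume, fwhm_mm, voxel_mm):
    """Gaussian smoothing via multiplication in the frequency domain.

    Applies the analytic transfer function of a unit-area Gaussian,
    exp(-2 * pi^2 * sigma^2 * f^2), separably along each axis.
    Boundaries wrap around (circular convolution).
    """
    volume = np.asarray(volume, dtype=float)
    sigma = fwhm_to_sigma(fwhm_mm)
    transfer = np.ones(volume.shape)
    for axis, (n, spacing) in enumerate(zip(volume.shape, voxel_mm)):
        freqs = np.fft.fftfreq(n, d=spacing)  # cycles per mm along this axis
        shape = [1] * volume.ndim
        shape[axis] = n
        transfer = transfer * np.exp(-2.0 * (np.pi * sigma * freqs) ** 2).reshape(shape)
    return np.real(np.fft.ifftn(np.fft.fftn(volume) * transfer))


def normalize_global_mean(image, brain_mask, target=1000.0):
    """Proportionally scale an image so its mean over in-brain voxels is `target`."""
    image = np.asarray(image, dtype=float)
    mask = np.asarray(brain_mask, dtype=bool)
    return image * (target / image[mask].mean())


# Synthetic example: a point source on a 32^3 grid with assumed 2 mm voxels.
volume = np.zeros((32, 32, 32))
volume[16, 16, 16] = 1.0
smoothed = smooth_fft(volume, fwhm_mm=16.0, voxel_mm=(2.0, 2.0, 2.0))
scaled = normalize_global_mean(smoothed + 1.0, np.ones(volume.shape, dtype=bool))
```

Because the transfer function equals 1 at zero frequency, smoothing preserves the total counts; only their spatial distribution changes.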

Regression Analyses

PET data were analyzed with a pixelwise general linear model (Friston et al., 1995). Regression analyses were performed using tal_regress, a customized software module based on Gentleman’s least squares routines (Miller, 1991) and cross-validated against SAS (Grabowski et al., 1996). Regression analyses were performed separately for data from the deaf signers and the hearing non-signers. Models for both regression analyses included participant and task condition effects. For the deaf participants, six contrasts were tested: 1) pantomime – decision, 2) handling verbs – decision, 3) non-handling verbs – decision, 4) pantomime – handling verbs, 5) pantomime – non-handling verbs, and 6) handling verbs – non-handling verbs. For the hearing participants, only contrasts 1, 2, and 4 were tested, and contrasts 2 and 4 differed for this group because these participants produced pantomimes, rather than verbs, in response to the handling-verb stimuli.
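A pixelwise general linear model with participant and condition effects, together with a t statistic for a condition contrast, can be sketched with NumPy as below. This is a generic ordinary-least-squares sketch, not tal_regress. The indicator coding, the use of a pseudoinverse to absorb the redundancy between participant and condition columns, and the helper names are assumptions. For contrasts whose condition weights sum to zero, as in all six contrasts listed above, the estimate does not depend on how that redundancy is resolved.

```python
import numpy as np


def design_matrix(participant_ids, condition_ids, n_participants, n_conditions):
    """Indicator columns for each participant, followed by one per condition."""
    participant_ids = np.asarray(participant_ids)
    condition_ids = np.asarray(condition_ids)
    n_scans = participant_ids.size
    X = np.zeros((n_scans, n_participants + n_conditions))
    rows = np.arange(n_scans)
    X[rows, participant_ids] = 1.0
    X[rows, n_participants + condition_ids] = 1.0
    return X


def fit_glm(Y, X):
    """Ordinary least squares at every pixel. Y has shape (n_scans, n_pixels)."""
    X_pinv = np.linalg.pinv(X)
    beta = X_pinv @ Y
    residuals = Y - X @ beta
    df = X.shape[0] - np.linalg.matrix_rank(X)
    sigma2 = (residuals ** 2).sum(axis=0) / df
    return beta, sigma2, df, X_pinv


def contrast_t(weights, beta, sigma2, X_pinv):
    """t = c'b / sqrt(sigma^2 * c'(X'X)^+ c), using (X'X)^+ = X^+ (X^+)'."""
    c = np.asarray(weights, dtype=float)
    variance_factor = c @ X_pinv @ X_pinv.T @ c
    return (c @ beta) / np.sqrt(sigma2 * variance_factor)


def condition_contrast(plus, minus, n_participants, n_conditions):
    """Weights for 'condition plus minus condition minus' (e.g., pantomime - decision)."""
    c = np.zeros(n_participants + n_conditions)
    c[n_participants + plus] = 1.0
    c[n_participants + minus] = -1.0
    return c


# Synthetic data: 3 participants x 2 conditions x 2 injections, 10 pixels.
rng = np.random.default_rng(0)
participants = np.repeat(np.arange(3), 4)   # 0 0 0 0 1 1 1 1 2 2 2 2
conditions = np.tile([0, 0, 1, 1], 3)       # two injections per condition
Y = (10.0 * participants[:, None]           # participant offsets
     + 5.0 * (conditions == 0)[:, None]     # condition 0 raises activity by 5
     + rng.normal(0.0, 0.1, size=(12, 10)))
X = design_matrix(participants, conditions, 3, 2)
beta, sigma2, df, X_pinv = fit_glm(Y, X)
c = condition_contrast(0, 1, 3, 2)
t = contrast_t(c, beta, sigma2, X_pinv)     # residual df = 12 scans - rank 4 = 8
```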

In addition, two random effects analyses were performed to assess differences between the deaf signing and hearing non-signing groups in the pantomime and handling verb conditions. For these analyses, each participant’s data were averaged within a task condition (either the pantomime task or the handling verbs/“handling verb” pantomime task). For each participant, the difference image between the target task and the control task was used as the dependent measure to test for differences between deaf and hearing participants.
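A random effects comparison of this kind reduces to one difference image per participant followed by a between-group test at each pixel. The sketch below assumes a pooled-variance two-sample t test, which with all 10 deaf and 14 hearing participants would give df = 10 + 14 − 2 = 22. The text does not state which variant of the test was used, and the helper names are hypothetical.

```python
import numpy as np


def difference_images(task_scans, control_scans):
    """Per-participant mean task image minus mean control image.

    Both inputs have shape (n_participants, n_injections, n_pixels).
    """
    return np.mean(task_scans, axis=1) - np.mean(control_scans, axis=1)


def two_sample_t(group_a, group_b):
    """Pooled-variance two-sample t at every pixel; returns (t, df)."""
    a = np.asarray(group_a, dtype=float)
    b = np.asarray(group_b, dtype=float)
    n_a, n_b = a.shape[0], b.shape[0]
    df = n_a + n_b - 2
    pooled_var = ((n_a - 1) * a.var(axis=0, ddof=1)
                  + (n_b - 1) * b.var(axis=0, ddof=1)) / df
    t = (a.mean(axis=0) - b.mean(axis=0)) / np.sqrt(pooled_var * (1.0 / n_a + 1.0 / n_b))
    return t, df


# Toy example with one pixel: three "deaf" and three "hearing" difference values.
deaf = np.array([[1.0], [2.0], [3.0]])
hearing = np.array([[0.0], [1.0], [2.0]])
t, df = two_sample_t(deaf, hearing)  # means differ by 1, pooled variance 1, df 4
```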

Contrasts were tested with t-tests and thresholded using random field theory (Worsley, 1994), with the family-wise Type I error rate set at α < 0.05. In accordance with our hypotheses, an a priori search volume was used that included left frontal lobe, left temporal lobe, and bilateral parietal lobes. The search volume was delineated by manual tracing on the averaged MR and measured 258 cm³, calculated to comprise 43 resels. A whole-brain search was also performed to look for areas of activation outside of this restricted search volume.
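The resel count and the corrected threshold can be checked with a short calculation. Resels are the search volume divided by the product of the smoothness FWHMs. Under random field theory, the corrected threshold is the t at which the expected Euler characteristic of the thresholded field, approximately resels × ρ₃(t), falls to α. The sketch keeps only the three-dimensional EC density of a t field and ignores lower-dimensional (boundary) terms, so it is an approximation, and whether tal_regress computes the threshold exactly this way is an assumption. With 258 cm³ and the 17.9 × 17.9 × 18.9 mm resolution given under Spatial normalization, it yields about 42.6 resels and thresholds near the critical values quoted for Tables 1 and 2 (t(86) = 4.03, t(121) = 3.96).

```python
import math


def resel_count(volume_mm3, fwhm_mm):
    """Number of resolution elements: volume / (FWHM_x * FWHM_y * FWHM_z)."""
    fx, fy, fz = fwhm_mm
    return volume_mm3 / (fx * fy * fz)


def t_ec_density_3d(t, df):
    """3D Euler-characteristic density of a t field with `df` degrees of freedom:
    (4 ln 2)^(3/2) / (2 pi)^2 * ((df - 1)/df * t^2 - 1) * (1 + t^2/df)^(-(df - 1)/2)
    """
    scale = (4.0 * math.log(2.0)) ** 1.5 / (2.0 * math.pi) ** 2
    return scale * ((df - 1) / df * t * t - 1.0) * (1.0 + t * t / df) ** (-(df - 1) / 2.0)


def rft_threshold(resels, df, alpha=0.05, lo=2.0, hi=10.0, iterations=100):
    """Bisection for t such that resels * rho_3(t) = alpha.

    For large df (as here) the density decreases monotonically on [2, 10],
    so bisection on that bracket is safe.
    """
    for _ in range(iterations):
        mid = 0.5 * (lo + hi)
        if resels * t_ec_density_3d(mid, df) > alpha:
            lo = mid  # expected false positives still too high: raise threshold
        else:
            hi = mid
    return 0.5 * (lo + hi)


resels = resel_count(258_000.0, (17.9, 17.9, 18.9))  # 258 cm^3 = 258,000 mm^3
t_deaf = rft_threshold(resels, df=86)
t_hearing = rft_threshold(resels, df=121)
print(f"{resels:.1f} resels, t(86) ~ {t_deaf:.2f}, t(121) ~ {t_hearing:.2f}")
```

More residual degrees of freedom give a slightly lower threshold, which matches the ordering of the two critical values reported.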

3.1 Results

For the behavioral results, non-responses were scored as incorrect for both verbs and pantomimes. Incorrect verb responses included naming the object (rather than generating a verb) or producing a non-target verb. Incorrect pantomime responses included producing a non-target pantomime or a non-pantomimic gesture, such as a hesitation gesture (e.g., waving the hand as if searching for an idea). Deaf participants were more accurate when generating handling verbs (93.13% correct) than when generating non-handling verbs (77.12% correct), t(9) = −3.414, p < .01. Incorrect responses for the non-handling verbs were primarily non-target verb responses in which the participant produced a handling, rather than a non-handling verb. Response accuracy for handling verbs did not differ significantly from response accuracy for generating pantomimes (91.88%), t(9) = 0.612, p = .55. Hearing participants were as accurate as deaf participants when generating pantomimes (94.01%), and response accuracy was similar for the “handling verb” object pantomimes (92.89%; deaf participants generated ASL verbs for these objects).
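The within-group accuracy comparisons are paired t tests, which is why ten deaf participants yield t(9). A minimal Python sketch using only the standard library follows. The per-participant accuracies are hypothetical, since only group means are reported, and the sign of t simply reflects the order of subtraction.

```python
import math
from statistics import mean, stdev


def paired_t(x, y):
    """Paired-samples t statistic for x - y, with df = n - 1."""
    if len(x) != len(y) or len(x) < 2:
        raise ValueError("need two equal-length samples with at least 2 pairs")
    diffs = [a - b for a, b in zip(x, y)]
    n = len(diffs)
    t = mean(diffs) / (stdev(diffs) / math.sqrt(n))
    return t, n - 1


# Hypothetical proportions correct for four participants (not the study's data).
handling = [1.0, 0.9, 0.95, 0.85]
non_handling = [0.8, 0.75, 0.7, 0.8]
t, df = paired_t(handling, non_handling)
```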

Tables 1 and 2 present the local maxima of areas of increased activity for each target task contrasted with the control task for deaf and hearing participants, respectively. As shown in Figure 2, when deaf participants generated pantomimes, they exhibited extensive bilateral activation in the superior parietal lobule (SPL) and along the precentral gyrus; in contrast, when hearing participants generated pantomimes for the same stimuli, activation was left lateralized within SPL. The hearing participants also exhibited activation within left middle/inferior temporal cortex (BA 37) when producing pantomimes for the same objects that elicited pantomimes from the deaf signers and when producing pantomimes for the objects that elicited handling verbs from signers (“handling verb” pantomimes). Finally, for both deaf and hearing participants, pantomime generation resulted in significant activation within the right cerebellum. For deaf signers, right cerebellar activation was also observed during the generation of non-handling verbs.

Table 1.

Summary of PET activation results for deaf participants. Local maxima of areas with increased activation for the target tasks in contrast to the control task and for pantomime production contrasted with ASL verb production. Results are from the a priori search volume (critical t(86) = 4.03) and the whole brain analysis (critical t(86) = 4.60; indicated by an asterisk).

| Region | Side | X | Y | Z | T |
|---|---|---|---|---|---|
| Pantomime | | | | | |
| Superior frontal gyrus (BA 6) | L/R | 0 | 0 | +66 | 5.02* |
| Supplementary motor area (BA 6) | R | +5 | −1 | +58 | 5.05* |
| Precentral gyrus (BA 6) | R | +26 | −9 | +49 | 5.13* |
| Precentral gyrus (BA 4) | L | −29 | −29 | +48 | 6.54* |
| Superior parietal lobule (BA 7) | L | −9 | −66 | +58 | 4.76 |
| | R | +24 | −55 | +60 | 4.77 |
| Cerebellum | L | −28 | −49 | −26 | 5.32* |
| | R | +24 | −48 | −28 | 5.24* |
| Handling verbs | | | | | |
| Inferior frontal gyrus (BA 46) | L | −43 | +37 | +11 | 4.50 |
| | L | −33 | +36 | +3 | 4.53 |
| Inferior frontal gyrus (BA 44) | L | −42 | +9 | +18 | 4.34 |
| Cerebellum | R | +22 | −52 | −28 | 5.74* |
| Non-handling verbs | | | | | |
| Inferior frontal gyrus (BA 46) | L | −52 | +33 | +13 | 3.36‡ |
| Cerebellum | R | +4 | −58 | −19 | 5.50* |
| | R | +22 | −51 | −27 | 5.71* |
| | R | +10 | −69 | −28 | 5.43* |
| Pantomime > handling verbs | | | | | |
| Superior parietal lobule (BA 7) | R | +28 | −55 | +54 | 4.62 |
| Pantomime > non-handling verbs | | | | | |
| Precentral gyrus (BA 6) | R | +25 | −7 | +49 | 5.22* |
| Intraparietal sulcus | L | −41 | −38 | +47 | 5.40 |
| Inferior parietal lobule (BA 40) | R | +45 | −33 | +41 | 5.55 |
| Superior parietal lobule (BA 7) | R | +29 | −53 | +54 | 5.64 |

‡ Significant only at an uncorrected level (critical t = 3.2, p < 0.001).

Table 2.

Summary of PET activation results for hearing participants. Local maxima of areas with increased activation for the target tasks in comparison to the control task. Results are from the a priori search volume (critical t(121) = 3.96) and the whole brain analysis (critical t(121) = 4.51; indicated by an asterisk).

| Region | Side | X | Y | Z | T |
|---|---|---|---|---|---|
| Pantomime | | | | | |
| Superior parietal lobule (BA 7) | L | −30 | −43 | +60 | 4.84 |
| Inferior temporal gyrus (BA 37) | L | −55 | −65 | +2 | 4.05 |
| “Handling verb” pantomime | | | | | |
| Superior parietal lobule (BA 7) | L | −26 | −51 | +63 | 4.44 |
| Intraparietal sulcus | L | −31 | −33 | +32 | 4.94 |
| Inferior temporal gyrus (BA 37) | L | −49 | −61 | +1 | 4.17 |
| Cerebellum | R | +26 | −52 | −29 | 4.90* |

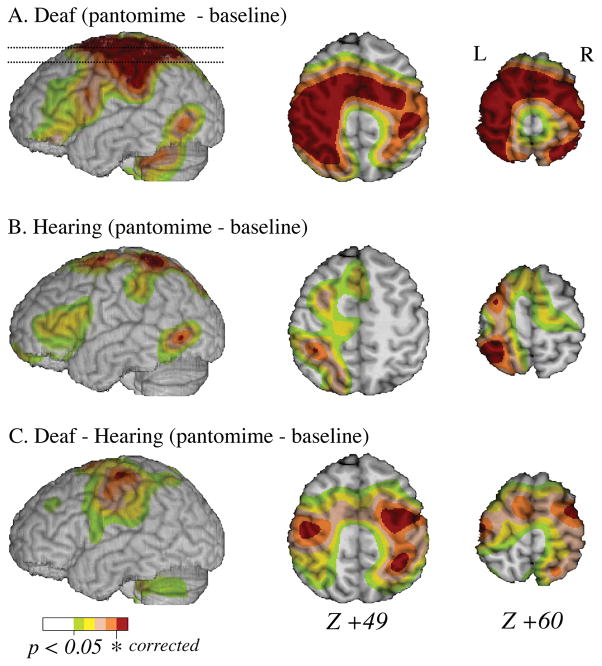

Figure 2.

Results of the contrast between pantomime production and baseline task in the deaf participants (row A) and hearing non-signers (row B). Row C shows the results for the between-subjects contrast (deaf – hearing non-signers) for the same task comparisons. The color overlay reflects significant increased activity at an uncorrected level, and regions in red reflect increased activity at an RFT-corrected level (p < 0.05). Compared to the baseline task, pantomime production by both deaf and hearing participants resulted in greater activity in left superior parietal lobule. For the deaf participants, the increased activity was more extensive, encompassing the right parietal lobule, the left and right precentral gyrus, and right supplementary motor area.

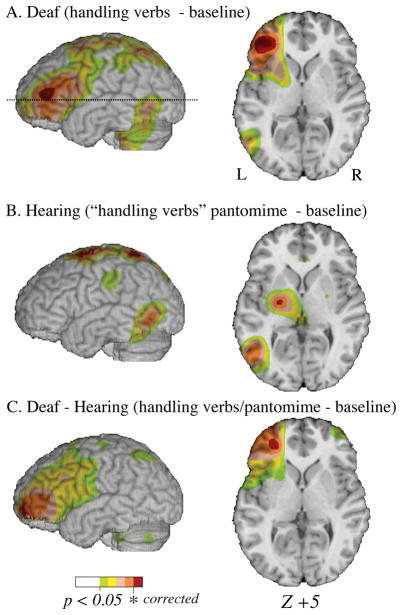

Unlike pantomime generation, when deaf signers produced ASL handling verbs, activation was observed at two local maxima within the left inferior frontal gyrus (IFG) (see Figure 3). Similarly, when deaf participants generated ASL non-handling verbs, activation was observed in left IFG, but this neural activity was weaker, reaching significance only at an uncorrected level. When pantomime production was directly contrasted with ASL handling or non-handling verb production, greater neural activity was observed for pantomimes within the right SPL (see Table 1). The contrast between pantomime production and the non-handling ASL verbs also revealed additional regions of greater neural activity within the right precentral gyrus and bilateral inferior parietal lobule. There were no neural regions that were significantly more active for handling verb than for pantomime production. For non-handling verbs compared to pantomimes, greater activation was observed in a small region within the posterior right cerebellum (+28, −85, −25; t = 4.68). Finally, a direct contrast between the generation of handling verbs and non-handling verbs revealed no differences in neural activity.

Figure 3.

Results of the contrast between handling verb generation and baseline task in the deaf participants (row A) and between pantomime production (for the same ‘handling verb’ stimuli) and baseline task in hearing non-signers (row B). Row C shows the results for the between-subjects contrast (deaf – hearing non-signers) for the same task comparisons. The color overlay reflects significant increased activity at an uncorrected level, and regions in red reflect increased activity at an RFT-corrected level (p < 0.05). Increased activity in the inferior frontal gyrus is observed in the deaf signers but not the hearing participants.

We next conducted random-effects analyses comparing the deaf and hearing groups (see Table 3). For pantomime production, greater activation was observed in right SPL and right precentral gyrus for deaf signers compared to hearing non-signers (see Figure 4); greater activation was observed within the retrosplenial area and hippocampus/parahippocampal gyrus for hearing non-signers compared to deaf signers. When deaf signers generated ASL verbs and hearing non-signers generated pantomimes to the same objects, we observed greater activation within left IFG and the middle frontal gyrus (BA 10) for deaf signers (see Figure 5). There were no regions that were significantly more active for hearing non-signers than for deaf signers for this contrast.
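The critical t(22) values reported for these group comparisons are consistent with a two-sample comparison of the 10 deaf and 14 hearing participants, each contributing one contrast image per analysis. Assuming a standard pooled-variance two-sample t-test at each voxel (an assumption; the random-effects model is not detailed in this section), the statistic and its degrees of freedom would be:

$$
t = \frac{\bar{x}_{D} - \bar{x}_{H}}{s_p\sqrt{\frac{1}{n_D} + \frac{1}{n_H}}}, \qquad
s_p^2 = \frac{(n_D - 1)\,s_D^2 + (n_H - 1)\,s_H^2}{n_D + n_H - 2}, \qquad
\mathrm{df} = n_D + n_H - 2 = 10 + 14 - 2 = 22,
$$

where $\bar{x}$ and $s^2$ are the group mean and variance of the contrast values, and the subscripts $D$ and $H$ denote the deaf and hearing groups.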

Table 3.

Summary of PET activation results for the two random effects analyses. Local maxima of areas with increased activation for the target tasks in comparison to the control task. Results are from the a priori search volume (critical t(22) = 4.88) and the whole brain analysis (critical t(22) = 5.82; indicated by an asterisk).

| Region | Side | X | Y | Z | T |
|---|---|---|---|---|---|
| Pantomime | | | | | |
| Deaf > hearing | | | | | |
| Precentral gyrus (BA 6) | R | +31 | −12 | +48 | 6.68* |
| Superior parietal lobule (BA 7) | R | +31 | −46 | +49 | 5.82 |
| Hearing > deaf | | | | | |
| Retrosplenium (BA 23) | L | −2 | −56 | +15 | 5.35 |
| Hippocampus/parahippocampal gyrus | L | −32 | −27 | −4 | 6.42* |
| Handling verbs/pantomime | | | | | |
| Deaf > hearing | | | | | |
| Inferior/middle frontal gyrus (BA 46/10) | L | −26 | +40 | +5 | 5.21 |
| Middle frontal gyrus (BA 10) | L | −30 | +54 | −11 | 6.24* |
| Hearing > deaf | | | | | |
| No regions were more active for hearing participants | | | | | |

4.1 Discussion

The production of ASL verbs by deaf signers engaged the left inferior frontal gyrus (Figure 3A), replicating several previous studies of sign language production (e.g., Braun et al., 2001; Corina et al., 2003; Emmorey et al., 2007; Petitto et al., 2000). Verb generation tasks for spoken language also consistently elicit activation in left IFG (e.g., Buckner, Raichle, & Petersen, 1995; Klein et al., 1999). In contrast, activation in left inferior frontal cortex was not observed when either deaf signers or hearing non-signers generated pantomimic gestures in response to pictures of manipulable objects (Figures 2 and 3B). During verb generation, left IFG activation has been argued to reflect semantic search and lexical selection processes (e.g., Thompson-Schill et al., 1997), and such lexical processes are not required for pantomime generation. However, the direct contrast between ASL verb and pantomime generation did not reveal greater left IFG activation for ASL verbs, which suggests that deaf signers may have engaged in some covert verb generation during pantomime production. That is, signers may have occasionally retrieved an ASL verb related to the pictured object, even though their task was to generate a pantomime of the object’s use. Such covert lexical retrieval may have reduced the difference in left IFG activity between ASL verb and pantomime generation.

Further evidence that ASL verb generation engages frontal regions to a greater extent than pantomime is found in the random effects analysis comparing deaf participants’ production of handling verbs and hearing participants’ production of pantomimes in response to the same objects (Table 3). Deaf signers’ production of ASL verbs engaged left anterior frontal cortex (BA 46/10) to a greater extent than hearing participants’ production of pantomimes that resembled ASL handling verbs (Figure 3C).

As predicted, in comparison to the baseline task, pantomime production engaged the left superior parietal lobule for both hearing and deaf participants (Figure 2). Activation within the left inferior parietal lobule may not have been observed because the baseline task involved a determination of whether an object could be held in the hand, and the planning and representation of grasp configuration is associated with inferior parietal cortex (e.g., Grafton et al., 1996; Jeannerod, Arbib, Rizzolatti, & Sakata, 1995). Consistent with this explanation, we observed increased activity in IPL when contrasting pantomime production with non-handling verb generation (but not with handling verb generation).

Activation within SPL was bilateral and more extensive for deaf signers than for hearing non-signers, which may be due to the richer and more precisely articulated pantomimes that were produced by the deaf signers. Deaf signers produced crisp and clear hand configurations in their pantomimes (e.g., depicting a specific type of grip on a paint brush handle), while the hearing participants produced more lax, ambiguous handshapes. Deaf signers tended to produce longer pantomimes; for example, 60% of their pantomimes repeated the depicted action, compared to 46% for hearing participants. Signers also produced somewhat more complex pantomimes. In particular, the signers produced more two-handed pantomimes (80% vs. 41% for hearing non-signers), and their pantomimes represented more aspects of an action. For example, in response to a picture of a teapot, both groups tended to produce a pouring gesture, but the deaf signers were more likely to simultaneously produce a gesture representing the teacup with their other hand. Deaf signers may be more practiced in pantomime generation than hearing people because ASL narratives and stories often include pantomimic gestures (Emmorey, 1999; Liddell & Metzger, 1998), and they also sometimes need to pantomime when communicating with hearing people. Recent studies have shown that expertise can lead to an expanded neural representation for a given task or ability (e.g., Beilock et al., 2008; Landau & D’Esposito, 2006), and we hypothesize that the more extensive SPL activation observed for the deaf signers may reflect experience-dependent changes in the neural network that supports pantomime production.

When hearing non-signers produced pantomimes, we observed significant activation in left inferior temporal cortex (Figures 2 and 3), which was not observed for deaf signers. Activation within the ventral stream may reflect more extensive visual analysis of the object to be manipulated by the hearing non-signers as they planned their object pantomimes. The group comparison revealed greater activation in the retrosplenial cortex and the hippocampus/parahippocampal gyrus for the hearing group than the deaf group. These regions are known to be involved in the recall of episodic information, and we suggest that activation in these regions arises because hearing participants may be more likely to generate pantomimes by retrieving a specific memory of how they used the object in the past. In contrast, deaf signers may be more likely to generate a pantomimed action on the fly without attempting to recall a particular instance of using the object.

The direct contrast between pantomime production and the generation of both handling and non-handling verbs revealed activation in the right superior parietal lobule specific to pantomimes (Table 1). Króliczak et al. (2007) reported that pantomimed grasping of an object recruited right SPL in comparison to pantomimed reaching toward the object, whereas the comparison between real grasping and real reaching did not show a difference in right SPL activation. Króliczak et al. (2007) hypothesized that right parietal cortex may be involved in less familiar pantomimes that require additional spatial transformations and/or reprogramming of movement kinematics that depend upon right-hemisphere processing. Chaminade, Meltzoff, and Decety (2005) also argue that right superior parietal cortex is involved in the visuospatial analysis of gestures, particularly when spatial features are necessary to identify an action to be imitated. Such visuospatial analyses of an object to be grasped, or of the movements needed to pantomime how an object is used, are not required for the generation of ASL verbs. During verb generation, hand configuration and movement are retrieved as part of the phonological representation of a lexical sign; the form of the sign is not directly computed from an analysis of the visuospatial characteristics of the stimulus object.

The contrast between pantomime production and the generation of non-handling verbs revealed additional activation in the left inferior parietal lobule for pantomimes (Table 1). Left IPL activation is likely due to the grasping hand configurations and movements that were used for the pantomimes. By definition, the non-handling verbs did not involve hand configurations or motions that resembled how objects are grasped. The contrast between handling verb and pantomime generation revealed no differential activation within left IPL. This pattern of results suggests that left IPL may play a role in the production of both pantomimes and ASL signs that involve similar grasping hand configurations and movements and/or that convey semantic information about object manipulation.

These results are relevant to the hypothesis that motor systems are involved in the semantic processing and representation of action words (e.g., Boulenger, Hauk, & Pulvermüller, 2009; Hauk, Johnsrude, & Pulvermüller, 2004; Rizzolatti & Craighero, 2004). The fronto-parietal regions engaged during the generation of ASL handling verbs and pantomimic gestures were not identical, despite very similar motoric patterns and action semantics. The production of “pantomimic” ASL verbs does not appear to involve the embodied cognitive processes involved in demonstrating object manipulation or use. The findings are consistent, however, with the hypothesis that motor areas play a role in the conceptual representation of action verbs, as evidenced by the pattern of contrasts within left IPL described above. In addition, Emmorey et al. (2004) found very similar activation peaks in left premotor and left inferior parietal cortices for both ASL signers and English speakers when naming pictures of tool-based actions (e.g., stir, hammer, scrub). Emmorey et al. (2004) proposed that activation within these regions reflects retrieval of knowledge about the sensory- and motor-based attributes that partially constitute conceptual representations for these types of actions.

Compared to baseline, the generation of both handling and non-handling verbs activated left inferior frontal cortex; however, activation was weaker for the non-handling verbs. It is not clear why left frontal activation was reduced for the non-handling verbs. Weaker left IFG activation during verb generation has been associated with lower demands on lexical selection and with less lexical interference (e.g., Nelson et al., 2009; Thompson-Schill et al., 1997). However, more lexical competition may have occurred for non-handling verbs because participants sometimes produced non-target handling verbs, which suggests more possible responses existed for the non-handling verbs. Nonetheless, it is possible that retrieval of these verbs was less demanding because they are morphologically less complex than handling classifier verbs. Specifically, non-handling verbs are monomorphemic, whereas the handshape of classifier verbs represents a distinct morpheme that specifies either an instrument or handling agent (Benedicto & Brentari, 2004; see also papers in Emmorey, 2003).

In sum, the production of pantomime and ASL verbs relies on partially distinct neural systems. Pantomime production engaged superior parietal cortex bilaterally for deaf signers, whereas verb production engaged left inferior frontal cortex. Pantomime production by hearing non-signers also did not engage left inferior frontal cortex, suggesting that left inferior frontal cortex is not critical to the execution of pantomimes. Interestingly, the neural networks for pantomime generation were not identical for the deaf and hearing groups. Deaf signers recruited more extensive regions within superior parietal cortex, perhaps reflecting more practice with pantomime generation, whereas hearing non-signers recruited neural regions associated with episodic memory retrieval, perhaps reflecting a recall-based strategy for pantomime generation. In line with previous research, we suggest that left inferior frontal cortex subserves lexical retrieval functions associated with verb generation (even for verbs that mimic hand actions), whereas superior parietal cortex subserves the execution of praxic motor movements involved in the pantomimed use of an object.

Acknowledgments

This research was supported by a grant from the National Institute on Deafness and other Communication Disorders (R01 DC006708). We thank Jocelyn Cole, Jarret Frank, Franco Korpics, and Heather Larrabee for their assistance with the study.

Contributor Information

Karen Emmorey, San Diego State University.

Stephen McCullough, San Diego State University.

Sonya Mehta, University of Iowa.

Laura L. B. Ponto, University of Iowa.

Thomas J. Grabowski, University of Iowa.

References

- Beilock SL, Lyons IM, Mattarella-Micke A, Nusbaum HC, Small SL. Sports experience changes the neural processing of action language. Proc Natl Acad Sci U S A. 2008;105(36):13269–13273. doi: 10.1073/pnas.0803424105.

- Benedicto E, Brentari D. Where did all the arguments go?: Argument-changing properties of classifiers in ASL. Natural Language and Linguistic Theory. 2004;22(4):743–810.

- Boulenger V, Hauk O, Pulvermüller F. Grasping ideas with the motor system: Semantic somatotopy in idiom comprehension. Cerebral Cortex. 2009;19:1905–1914. doi: 10.1093/cercor/bhn217.

- Braun AR, Guillemin A, Hosey L, Varga M. The neural organization of discourse: An H2 15O-PET study of narrative production in English and American Sign Language. Brain. 2001;124:2028–2044. doi: 10.1093/brain/124.10.2028.

- Buckner RL, Raichle ME, Petersen SE. Dissociation of human prefrontal cortical areas across different speech production tasks and gender groups. J Neurophysiol. 1995;74(5):2163–2173. doi: 10.1152/jn.1995.74.5.2163.

- Buxbaum LJ, Schwartz MF, Carew T. The role of semantic memory in object use. Cognitive Neuropsychology. 1997;14:219–254.

- Buxbaum LJ. Ideomotor apraxia: a call to action. Neurocase. 2001;7(6):445–458. doi: 10.1093/neucas/7.6.445.

- Buxbaum LJ, Johnson-Frey SH, Bartlett-Williams M. Deficient internal models for planning hand-object interactions in apraxia. Neuropsychologia. 2005;43(6):917–929. doi: 10.1016/j.neuropsychologia.2004.09.006.

- Chaminade T, Meltzoff AN, Decety J. An fMRI study of imitation: action representation and body schema. Neuropsychologia. 2005;43(1):115–127. doi: 10.1016/j.neuropsychologia.2004.04.026.

- Choi SH, Na DL, Kang E, Lee KM, Lee SW, Na DG. Functional magnetic resonance imaging during pantomiming tool-use gestures. Exp Brain Res. 2001;139(3):311–317. doi: 10.1007/s002210100777.

- Cohen JD, MacWhinney B, Flatt M, Provost J. PsyScope: A new graphic interactive environment for designing psychology experiments. Behavior Research Methods, Instruments, and Computers. 1993;25:257–271.

- Corina D, Chiu YS, Knapp H, Greenwald R, San Jose-Robertson L, Braun A. Neural correlates of human action observation in hearing and deaf subjects. Brain Res. 2007;1152:111–129. doi: 10.1016/j.brainres.2007.03.054.

- Corina DP, Knapp H. Sign language processing and the mirror neuron system. Cortex. 2006;42(4):529–539. doi: 10.1016/s0010-9452(08)70393-9.

- Corina DP, McBurney SL, Dodrill C, Hinshaw K, Brinkley J, Ojemann G. Functional roles of Broca's area and SMG: evidence from cortical stimulation mapping in a deaf signer. Neuroimage. 1999;10(5):570–581. doi: 10.1006/nimg.1999.0499.

- Corina DP, Poizner H, Bellugi U, Feinberg T, Dowd D, O’Grady-Batch L. Dissociation between linguistic and non-linguistic gestural systems: A case for compositionality. Brain and Language. 1992;43:414–447. doi: 10.1016/0093-934x(92)90110-z.

- Corina DP, San Jose-Robertson L, Guillemin A, High J, Braun AR. Language lateralization in a bimanual language. Journal of Cognitive Neuroscience. 2003;15(5):718–730. doi: 10.1162/089892903322307438.

- Damasio H, Tranel D, Grabowski T, Adolphs R, Damasio A. Neural systems behind word and concept retrieval. Cognition. 2004;92(1–2):179–229. doi: 10.1016/j.cognition.2002.07.001.

- Emmorey K. Do signers gesture? In: Messing LS, Campbell R, editors. Gesture, Speech and Sign. New York: Oxford University Press; 1999. pp. 133–157.

- Emmorey K, editor. Perspectives on classifier constructions in signed languages. Mahwah, NJ: Lawrence Erlbaum Associates; 2003.

- Emmorey K, Grabowski T, McCullough S, Damasio H, Ponto LL, Hichwa RD, et al. Neural systems underlying lexical retrieval for sign language. Neuropsychologia. 2004;41(1):85–95. doi: 10.1016/s0028-3932(02)00089-1.

- Emmorey K, Mehta S, Grabowski TJ. The neural correlates of sign versus word production. Neuroimage. 2007;36(1):202–208. doi: 10.1016/j.neuroimage.2007.02.040.

- Emmorey K, Xu J, Gannon P, Goldin-Meadow S, Braun A. CNS activation and regional connectivity during pantomime observation: No engagement of the mirror neuron system for deaf signers. 2009. Manuscript under review.

- Frank RJ, Damasio H, Grabowski TJ. Brainvox: an interactive, multimodal visualization and analysis system for neuroanatomical imaging. Neuroimage. 1997;5(1):13–30. doi: 10.1006/nimg.1996.0250.

- Frey SH. Tool use, communicative gesture and cerebral asymmetries in the modern human brain. Philosophical Transactions of the Royal Society B. 2008;363:1951–1957. doi: 10.1098/rstb.2008.0008.

- Friston KJ, Holmes AP, Worsley KJ, Poline JB, Frith CD, Frackowiak RSJ. Statistical parametric maps in functional imaging: a general linear approach. Human Brain Mapping. 1995;2:189–210.

- Goldenberg G, Hermsdörfer J, Glindemann R, Rorden C, Karnath HO. Pantomime of tool use depends on integrity of left inferior frontal cortex. Cereb Cortex. 2007;17(12):2769–2776. doi: 10.1093/cercor/bhm004.

- Grabowski TJ, Damasio H, Frank R, Hichwa RD, Ponto LL, Watkins GL. A new technique for PET slice orientation and MRI-PET coregistration. Hum Brain Mapp. 1995;2:123–133.

- Grabowski TJ, Frank RJ, Szumski NR, Brown CK, Damasio H. Validation of partial tissue segmentation of single-channel magnetic resonance images. NeuroImage. 2000;12:640–656. doi: 10.1006/nimg.2000.0649.

- Grabowski TJ, Frank R, Brown CK, Damasio H, Boles Ponto LL, Watkins GL, et al. Reliability of PET activation across statistical methods, subject groups, and sample sizes. Hum Brain Mapp. 1996;4:23–46. doi: 10.1002/(SICI)1097-0193(1996)4:1<23::AID-HBM2>3.0.CO;2-R.

- Grafton ST, Arbib MA, Fadiga L, Rizzolatti G. Localization of grasp representations in humans by positron emission tomography. 2. Observation compared with imagination. Exp Brain Res. 1996;112(1):103–111. doi: 10.1007/BF00227183.

- Hauk O, Johnsrude I, Pulvermüller F. Somatotopic representation of action words in human motor and premotor cortex. Neuron. 2004;41(2):301–307. doi: 10.1016/s0896-6273(03)00838-9.

- Hermsdörfer J, Terlinden G, Mühlau M, Goldenberg G, Wohlschläger AM. Neural representations of pantomimed and actual tool use: evidence from an event-related fMRI study. Neuroimage. 2007;36(Suppl 2):T109–118. doi: 10.1016/j.neuroimage.2007.03.037.

- Hichwa RD, Ponto LL, Watkins GL. Clinical blood flow measurement with [15O]water and positron emission tomography (PET). In: Emran AM, editor. Chemists' views of imaging centers, symposium proceedings of the International Symposium on "Chemists' Views of Imaging Centers". New York: Plenum Publishing; 1995.

- Hickok G, Kritchevsky M, Bellugi U, Klima ES. The role of the left frontal operculum in sign language aphasia. Neurocase. 1996;2:373–380.

- Imazu S, Sugio T, Tanaka S, Inui T. Differences between actual and imagined usage of chopsticks: an fMRI study. Cortex. 2007;43(3):301–307. doi: 10.1016/s0010-9452(08)70456-8.

- Janzen T, Shaffer B. Gesture as the substrate in the process of ASL grammaticization. In: Meier R, Quinto D, Cormier K, editors. Modality and structure in signed and spoken languages. Cambridge University Press; 2002. pp. 199–223.

- Jeannerod M, Arbib MA, Rizzolatti G, Sakata H. Grasping objects: the cortical mechanisms of visuomotor transformation. Trends in Neurosciences. 1995;18(7):314–320.

- Jenkinson M, Bannister PR, Brady JM, Smith SM. Improved optimisation for the robust and accurate linear registration and motion correction of brain images. NeuroImage. 2002;17(2):825–841. doi: 10.1016/s1053-8119(02)91132-8.

- Jenkinson M, Smith SM. A global optimisation method for robust affine registration of brain images. Medical Image Analysis. 2001;5(2):143–156. doi: 10.1016/s1361-8415(01)00036-6.

- Kegl J, Senghas A, Coppola M. Creation through contact: Sign language emergence and sign language change in Nicaragua. In: DeGraff M, editor. Language creation and language change: Creolization, diachrony, and development. Cambridge, MA: The MIT Press; 1999. pp. 179–237.

- Khan AZ, Pisella L, Vighetto A, Cotton F, Luauté J, Boisson D, Salemme R, Crawford JD, Rossetti Y. Optic ataxia errors depend on remapped, not viewed, target location. Nature Neuroscience. 2005;8(4):418–420. doi: 10.1038/nn1425.

- Klein D, Milner B, Zatorre RJ, Zhao V, Nikelski J. Cerebral organization in bilinguals: a PET study of Chinese-English verb generation. Neuroreport. 1999;10(13):2841–2846. doi: 10.1097/00001756-199909090-00026.

- Króliczak G, Cavina-Pratesi C, Goodman DA, Culham JC. What does the brain do when you fake it? An fMRI study of pantomimed and real grasping. J Neurophysiol. 2007;97(3):2410–2422. doi: 10.1152/jn.00778.2006.

- Landau SM, D'Esposito M. Sequence learning in pianists and nonpianists: an fMRI study of motor expertise. Cogn Affect Behav Neurosci. 2006;6(3):246–259. doi: 10.3758/cabn.6.3.246.

- Liddell S, Metzger M. Gesture in sign language discourse. Journal of Pragmatics. 1998;30:657–697.

- MacSweeney M, Capek CM, Campbell R, Woll B. The signing brain: the neurobiology of sign language. Trends in Cognitive Sciences. 2008;12(11):432–440. doi: 10.1016/j.tics.2008.07.010.

- MacSweeney M, Waters D, Brammer MJ, Woll B, Goswami U. Phonological processing in deaf signers and the impact of age of first language acquisition. Neuroimage. 2008;40(3):1369–1379. doi: 10.1016/j.neuroimage.2007.12.047.

- Marshall J, Atkinson J, Smulovitch E, Thacker A, Woll B. Aphasia in a user of British Sign Language: Dissociation between sign and gesture. Cognitive Neuropsychology. 2004;21(5):537–554. doi: 10.1080/02643290342000249.

- McNeill D. Hand and mind: What gestures reveal about thought. Chicago, IL: University of Chicago Press; 1992.

- Miller AJ. Least Squares Routines to Supplement those of Gentleman. Applied Statistics Algorithm. 1991:274. (AS274)

- Moll J, de Oliveira-Souza R, Passman LJ, Cunha FC, Souza-Lima F, Andreiuolo PA. Functional MRI correlates of real and imagined tool-use pantomimes. Neurology. 2000;54(6):1331–1336. doi: 10.1212/wnl.54.6.1331.

- Nelson JK, Reuter-Lorenz PA, Persson J, Sylvester CYC, Jonides J. Mapping interference resolution across task domains: A shared control process in left inferior frontal gyrus. Brain Res. 2009;1256:92–100. doi: 10.1016/j.brainres.2008.12.001.

- Peigneux P, Van der Linden M, Garraux G, Laureys S, Degueldre C, Aerts J, et al. Imaging a cognitive model of apraxia: the neural substrate of gesture-specific cognitive processes. Hum Brain Mapp. 2004;21(3):119–142. doi: 10.1002/hbm.10161.

- Petitto LA, Zatorre RJ, Gauna K, Nikelski EJ, Dostie D, Evans AC. Speech-like cerebral activity in profoundly deaf people processing signed languages: implications for the neural basis of human language. Proc Natl Acad Sci U S A. 2000;97(25):13961–13966. doi: 10.1073/pnas.97.25.13961.

- Poizner H, Klima ES, Bellugi U. What the hands reveal about the brain. Cambridge, MA: MIT Press; 1987.

- Rizzolatti G, Craighero L. The mirror-neuron system. Annu Rev Neurosci. 2004;27:169–192. doi: 10.1146/annurev.neuro.27.070203.144230.

- Rosci C, Chiesa V, Laiacona M, Capitani E. Apraxia is not associated to a disproportionate naming impairment for manipulable objects. Brain and Cognition. 2003;53:412–415. doi: 10.1016/s0278-2626(03)00156-8.

- Rothi LJ, Heilman KM, Watson RT. Pantomime comprehension and ideomotor apraxia. J Neurol Neurosurg Psychiatry. 1985;48(3):207–210. doi: 10.1136/jnnp.48.3.207.

- Rumiati RI, Weiss PH, Shallice T, Ottoboni G, Noth J, Zilles K, et al. Neural basis of pantomiming the use of visually presented objects. Neuroimage. 2004;21(4):1224–1231. doi: 10.1016/j.neuroimage.2003.11.017.

- Sandler W, Lillo-Martin D. Sign language and linguistic universals. Cambridge: Cambridge University Press; 2006.

- Supalla T. The classifier system in American Sign Language. In: Craig C, editor. Noun Classes and Categorization. Philadelphia: John Benjamins; 1986. pp. 181–214.

- Talairach J, Tournoux P. Co-planar stereotaxic atlas of the human brain. New York: Thieme; 1988.

- Thompson-Schill SL, D'Esposito M, Aguirre GK, Farah MJ. Role of left inferior prefrontal cortex in retrieval of semantic knowledge: a reevaluation. Proc Natl Acad Sci U S A. 1997;94(26):14792–14797. doi: 10.1073/pnas.94.26.14792.

- Wolpert DM, Goodbody SJ, Husain M. Maintaining internal representations: the role of the human superior parietal lobe. Nature Neuroscience. 1998;1(6):529–533. doi: 10.1038/2245.

- Woods RP, Dapretto M, Sicotte NL, Toga AW, Mazziotta JC. Creation and use of a Talairach-compatible atlas for accurate, automated, nonlinear intersubject registration, and analysis of functional imaging data. Hum Brain Mapp. 1999;8(2–3):73–79. doi: 10.1002/(SICI)1097-0193(1999)8:2/3<73::AID-HBM1>3.0.CO;2-7.

- Worsley KJ. Local maxima and the expected Euler characteristic of excursion sets of chi-squared, F and t fields. Advances in Applied Probability. 1994;26:13–42.