Abstract

Objective

We assessed the usability of a health information exchange (HIE) in a densely populated metropolitan region. This grant-funded HIE was deployed rapidly to meet the immediate needs of the patient population and to encourage wider participation by regional entities.

Design

We conducted a cross-sectional survey of individuals given access to the HIE at participating organizations and examined some of the usability and usage factors related to the technology acceptance model.

Measurements

We probed user perceptions using the Questionnaire for User Interaction Satisfaction, an author-generated Trust scale, and user characteristic questions (eg, age, weekly system usage time).

Results

Overall, users viewed the system favorably: ratings for all usability items were greater than neutral (one-sample Wilcoxon test, p<0.0014, Bonferroni-corrected for 35 tests). System usage was regressed on usability, trust, and demographic and user characteristic factors. Three usability factors were positively predictive of system usage: overall reactions (p<0.01), learning (p<0.05), and system functionality (p<0.01). Although trust is an important component of collaborative relationships, user trust of other participating healthcare entities did not significantly predict usage. An analysis of respondents' comments revealed ways to improve the HIE.

Conclusion

We used a rapid deployment model to develop an HIE and found that perceptions of system usability were positive. We also found that system usage was predicted well by some aspects of usability. Results from this study suggest that a rapid development approach may serve as a viable model for developing usable HIEs serving communities with limited resources.

Keywords: Information dissemination, medical records system, regional health planning, usability, usage, biomedical informatics, evaluation, Qualitative/ethnographic field study, Improving the education and skills training of health professionals, System implementation and management issues, Social/organizational study, Collaborative technologies, Methods for integration of information from disparate sources, Demonstrating return on IT investment, distributed systems, agents, Software engineering: architecture, Supporting practice at a distance (telehealth), Data exchange, communication, and integration across care settings (inter- and intra-enterprise), Visualization of data and knowledge, policy, Legal, historical, ethical study methods, clinical informatics, biomedical informatics, pediatrics, e-prescribing, human factors

Introduction and background

The need for electronic health records with information from multiple sites has steadily grown since 1990, but there have been and continue to be many challenges to the implementation of information technology in healthcare. Early attempts to develop health information exchange (HIE) systems were plagued by problems that temporarily halted progress.1 However, a federal report published in 2004 calling for a national health information network prompted renewed interest and catalyzed efforts to establish regional health information organizations.2

Most HIE systems are in the early stages of development; therefore, not surprisingly, studies have focused on anticipated healthcare outcomes,3 4 and few have directly addressed perceptions of usability.5 As defined by the ISO 9241-11 (1998) standard, usability is the ‘extent to which a product can be used by specified users to achieve specified goals with effectiveness, efficiency, and satisfaction in a specified context of use.’ Usability is an important consideration in any study evaluating outcomes, because such studies presume a high level of use. System adoption and use are generally tied to usability: if a system is difficult to use, people will be less likely to use it.6–10 Moreover, because HIE use implies a willingness to trust data from remote facilities when making decisions, its adoption may depend on trust in remotely entered data. Therefore, usage may also be related to attributes of the data, such as the types of data available and user perceptions of their reliability.11–14

In 2004, the MidSouth eHealth Alliance (MSeHA) introduced an HIE to three counties surrounding Memphis, Tennessee. MSeHA is an initiative funded by the Agency for Healthcare Research and Quality, the State of Tennessee, and Vanderbilt University.15 Its goal was to create an HIE that meets the basic needs of an underserved metropolitan region through exchange of clinical data among hospital emergency departments and community-based ambulatory clinics. To achieve this goal, MSeHA implemented a rapid deployment model that consolidated data from multiple facilities while preserving the autonomy of participating entities.16 We adopted this approach to meet our goal of having an operational system within 2 years of receiving grant funding, and because we believed that becoming operational quickly would lead to wider participation among regional sites.

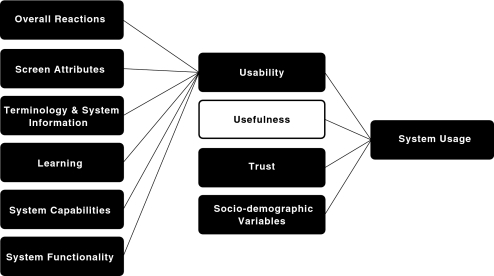

As part of a larger study,17 and to better understand how end users perceived the system, we assessed user impressions of the HIE's usability. We hypothesized that system usage is linked to usability, an idea consistent with the widely accepted technology acceptance model (TAM), which describes usage as a function of user perceptions of a system's usability and usefulness.7 18 Although we did not test the TAM directly, we included some of the factors and relationships it models, such as usability, trust, and socio-demographic variables.11 19 Multiple dimensions of usability were measured with the Questionnaire for User Interaction Satisfaction (QUIS 7.0), a validated instrument that assesses perceptions of technological tools.20 21 Variables of interest included user impressions of the following system attributes: the system overall, screen attributes, terminology and system information, learnability, system capabilities, system functionality, and trust of data sources. We also examined socio-demographic variables (eg, age, gender, professional role) for potential influence on usage. We defined usage as the self-reported average weekly time spent using the system, with ordinal response categories pre-defined according to QUIS: <1 h, 1 to <4 h, 4 to <10 h, and over 10 h. Figure 1 shows how we adapted the TAM to illustrate the relationship between usage and usability (using QUIS variables), trust, and socio-demographic variables.

Figure 1.

Technology acceptance model (TAM) adapted to illustrate the relationship between system usage and usability (using QUIS variables), usefulness, trust, and socio-demographic variables. Shaded boxes indicate our variables of interest.

Methods

Study setting

The HIE design was based on four prominent models of interoperability used in four states.22 Vanderbilt University led the HIE design and development using a model with four primary components: (1) a patient-centered framework with a ‘decentralized’ system architecture, in which secure ‘vaults’ of patient information are designated for and managed by each participating organization; (2) the ability of participating organizations to maintain current representations of each data input, viewable by users via high-level identifiers; (3) minimal cost, because organizations do not have to map their data and their systems can therefore evolve; and (4) a strong emphasis on security and privacy, with two-factor authentication coupled with binding data sharing and participation agreements.16

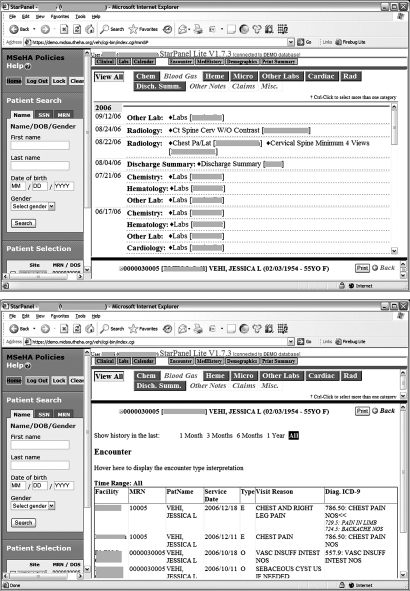

Early in the HIE's design phase, the MSeHA Board approved a platform and a plan for rapid data exchange and system dissemination, because the urgent needs of the community precluded the standardized product development lifecycle followed for other HIE systems. As a result, we used an existing electronic health record platform and made only minimal changes to its interface. These changes included modifying the patient selection screen; displaying a facility name next to each test result, procedure note, or encounter summary; creating an aggregated view of laboratory data across facilities; and strengthening the login process by adding random number tokens. We also made minor changes to simplify navigation and remove functionality not required for the HIE system. Figure 2 shows examples of HIE interfaces. For more details, see Frisse et al.16
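The aggregated laboratory view described above amounts to merging each facility's results into one list while keeping the facility name attached to every result. The sketch below illustrates that idea under assumed data structures: the `LabResult` record, the `aggregate_lab_view` and `render` functions, and the facility names are all hypothetical and are not the platform's actual implementation.

```python
from dataclasses import dataclass
from datetime import date


# Hypothetical record type; the source does not describe the HIE's data model.
@dataclass(frozen=True)
class LabResult:
    test: str
    value: float
    units: str
    collected: date
    facility: str  # displayed next to each result, as in the modified interface


def aggregate_lab_view(results_by_facility: dict[str, list[LabResult]]) -> list[LabResult]:
    """Merge per-facility result lists into one view, grouped by test name
    and ordered newest first within each test."""
    merged = [r for results in results_by_facility.values() for r in results]
    return sorted(merged, key=lambda r: (r.test, -r.collected.toordinal()))


def render(view: list[LabResult]) -> list[str]:
    """Format each result as one line ending with its source facility."""
    return [
        f"{r.collected.isoformat()}  {r.test:<10} {r.value:g} {r.units}  [{r.facility}]"
        for r in view
    ]


if __name__ == "__main__":
    vaults = {
        "Hospital A": [LabResult("Potassium", 4.1, "mmol/L", date(2009, 5, 1), "Hospital A")],
        "Clinic B": [LabResult("Potassium", 3.8, "mmol/L", date(2009, 6, 2), "Clinic B")],
    }
    for line in render(aggregate_lab_view(vaults)):
        print(line)
```

Sorting by test name first keeps like results together, which loosely mirrors the cross-facility grouping the interface change was meant to provide.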

Figure 2.

Screenshots of the health information exchange interface for a test patient: Clinical History (top) and Encounters (bottom).

Our system serves 1.25 million patients in southwestern Tennessee. It shares patient data (eg, demographics, ICD-9 discharge codes, laboratory results, encounter data, and dictated reports) among nine hospital emergency departments, 15 ambulatory clinics, and one university medical group. A patient record locator matches records across all vaults and displays an aggregate view of data in response to a user's query. Patients may opt out and keep their records local. The system logs all logons and user activity. Johnson and colleagues reported that the HIE was used in 3% of all encounters after its first year.16 At the time of this study, usage was steady at 7% despite staff and faculty turnover, a rate that is high relative to some other recently reported usage rates.23
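The record-locator step can be illustrated as a query-time lookup across per-organization vaults that skips patients who have opted out. This is a minimal sketch, assuming exact matching on normalized name, date of birth, and sex; the `Vault` class, `match_key`, and `locate` are hypothetical names, and the source does not describe the matching algorithm MSeHA actually uses.

```python
from dataclasses import dataclass, field


# Hypothetical sketch: each participating organization keeps its own "vault",
# and a record locator links a patient's records across vaults at query time.
@dataclass
class Vault:
    organization: str
    records: dict[str, dict] = field(default_factory=dict)  # local MRN -> demographics
    opted_out: set[str] = field(default_factory=set)          # local MRNs kept local


def match_key(record: dict) -> tuple:
    # Assumption: exact match on normalized demographics. Production record
    # locators typically use more robust (often probabilistic) matching.
    return (
        record["last"].strip().lower(),
        record["first"].strip().lower(),
        record["dob"],
        record["sex"].upper(),
    )


def locate(vaults: list[Vault], query: dict) -> list[tuple[str, str]]:
    """Return (organization, local MRN) pairs matching the query,
    excluding patients who opted out of sharing."""
    key = match_key(query)
    hits = []
    for vault in vaults:
        for mrn, record in vault.records.items():
            if mrn in vault.opted_out:
                continue
            if match_key(record) == key:
                hits.append((vault.organization, mrn))
    return hits
```

Because each vault is searched in place, no organization has to map its data into a shared schema, which is the property the decentralized design emphasizes.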

Sampling and subject recruitment

An email requesting participation in our study was sent to 345 healthcare professionals at participating MSeHA organizations in May 2009. This group included physicians, nurse practitioners, registered nurses, physician's assistants, and other medical staff. Candidates who agreed to participate were asked to indicate their preferred method of survey delivery. In June, according to these preferences, we sent each participant either a link to the electronic version of the survey or a copy by email, postal mail, or fax. Persons with invalid or missing email addresses were contacted by telephone or via the best contact method provided by the site supervisor. We informed prospective participants that those who completed the survey would have up to a 1% chance of winning an iPod Touch. Seventy-six candidates could not be located (eg, because of workplace relocation). From June to November 2009, one of our coauthors (CMC) sent periodic reminder emails about the survey to the remaining 269 candidates. Recruitment ceased when our sample was large enough to achieve sufficient statistical power.

Survey content

We evaluated user perceptions of the system with selected items from QUIS 7.0.20 21 Our survey had three sections: (1) demographics (age, job, gender) and system usage characteristics; (2) familiarity with technology (source: QUIS); and (3) user perceptions across seven scales: Overall Reactions, Screen, Terminology and System Information, Learning, System Capabilities, System Functionality (source: QUIS), and an author-generated Trust scale. The Trust scale probed beliefs about other participating organizations and the integrity of their data. Members of our evaluation team reviewed the items for face validity. QUIS responses were collected on a scale of 1 to 9; Trust items used a five-point scale from strongly disagree to strongly agree. Participants could also leave comments in each section. The online and paper versions of the survey were made as similar as possible; however, some notable differences remained (see the Data analysis section for details).

Data collection

We used REDCap (Research Electronic Data Capture, https://redcap.vanderbilt.edu/), a secure web-based tool, to create and administer the electronic version of the survey.24 In addition to the delivery methods noted above, we hand-delivered surveys to providers who were unable to receive them in any other form. The survey was distributed to health professionals with access to the HIE, including a subset of participants, whom we refer to as ‘non-users,’ who had access to the HIE but reported that they did not use it. Data were collected from June to November 2009.

Data analysis

We analyzed data from the online and paper versions of the survey together; however, there were some notable differences between the two versions. The paper version of QUIS was scaled from 1 to 9, whereas the online version was scaled from 0 to 9. We compensated by transforming online scores so that both scales ranged from 1 to 9. In addition, QUIS items in the paper version included a Not Applicable (NA) option. The online version did not. Because the online survey did not disambiguate between missing and NA responses, we treated NA responses as missing. This approach is addressed in the Discussion section. Finally, the Terminology and System Information scale in the online version included ‘exploration of features by trial and error,’ which is typically considered a Learning item in QUIS. We analyzed it as part of the Learning scale to be consistent with QUIS. We calculated the internal reliability of each QUIS scale using Cronbach's α.
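The score harmonization and reliability steps above can be sketched as follows. The paper states only that online 0 to 9 scores were transformed to the 1 to 9 range; the linear map in `rescale_online` is our assumption, not necessarily the transform the authors used. `cronbach_alpha` implements the standard formula, alpha = k/(k-1) × (1 - Σ item variances / variance of the total score), for complete responses; both function names are hypothetical.

```python
from statistics import variance


def rescale_online(score: float) -> float:
    """Map an online 0-9 QUIS response onto the paper 1-9 range.
    Assumption: a linear rescaling; the exact transform is not specified."""
    return 1 + score * 8 / 9


def cronbach_alpha(responses: list[list[float]]) -> float:
    """Cronbach's alpha for one scale.
    responses: one row per respondent, one column per item, no missing values."""
    k = len(responses[0])
    if k < 2:
        raise ValueError("alpha needs at least two items")
    item_vars = [variance([row[j] for row in responses]) for j in range(k)]
    total_var = variance([sum(row) for row in responses])
    return k / (k - 1) * (1 - sum(item_vars) / total_var)
```

Perfectly consistent items give alpha = 1, and uncorrelated items give alpha near 0; the 0.74 to 0.91 range reported for the QUIS scales lies between these extremes.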

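We used Stata/SE 10.1 for quantitative analyses. A Wilcoxon rank sum test was used to investigate survey method effects on QUIS responses. We also compared descriptive statistics for both methods across demographics and usage characteristics. Data were summarized using mean, median, and SD, and we used a sign test to determine whether individual subscale items differed significantly from neutral. To determine the effects of our independent variables (age, training role, system access time, computer literacy, trust, and QUIS scales) on our dependent outcome, average system use per week, we used the ordinal (proportional odds) logistic regression model shown below, in which κ_j is the cutpoint for usage category j (j = 1, 2 for the three usage categories analyzed):

$$\operatorname{logit}\left[P(\text{use} \le j)\right] = \kappa_j - \left(\beta_1 Q_i + \beta_2\,\text{system access time} + \beta_3\,\text{training role} + \beta_4\,\text{age} + \beta_5\,\text{computer literacy} + \beta_6\,\text{trust index}\right)$$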

where ‘Qi’ is one of the six QUIS scale scores averaged across its subscale items, ‘system access time’ is an ordinal variable (<1 year, 1 year–<2 years, 2 years–<3 years, ≥3 years), ‘training role’ is a nominal category (Registered Nurse (RN)/Physician's Assistant (PA)/Licensed Practical Nurse (LPN) vs Nurse Practitioner (NP)/Physician (MD)), ‘age’ is a continuous variable, ‘computer literacy’ is scored from 0 to 25, and the trust index is the sum of the Trust subscale scores, yielding a value from 5 to 25. On average, 10% of all responses per subscale item were missing or not interpretable (NA). We used multiple imputation with five imputations via the Stata ice package.25 26 The imputation model included our outcome, average system use per week, and 40 independent variables: age, training role, system access time, computer literacy, trust, and the 35 QUIS items. Although the missing-at-random assumption underlying multiple imputation is not directly testable, we evaluated it by calculating the C-statistic (area under the ROC curve) from a logistic regression model in which a binary missing-data indicator was regressed on the variables used to impute missing values. Averaged imputed QUIS scales and summed Trust scores were entered into the regression as single terms. A test of the proportional odds assumption for each of the six models (one for each QUIS scale) supported the use of ordinal logistic regression (p values ranged from 0.080 to 0.154).
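Estimates from the five imputed data sets must be combined into one OR and CI. The source does not spell out the pooling step; a common choice is Rubin's rules, sketched below on the log-OR scale. For simplicity, `pool_rubin` (a hypothetical name) uses a normal critical value instead of Rubin's t reference distribution with adjusted degrees of freedom.

```python
import math
from statistics import mean, variance


def pool_rubin(log_ors: list[float], ses: list[float], z: float = 1.96) -> tuple[float, float, float]:
    """Pool one coefficient across m imputed data sets with Rubin's rules.
    Returns (pooled OR, lower limit, upper limit).
    Simplification: normal critical value z instead of a t distribution."""
    m = len(log_ors)
    q_bar = mean(log_ors)                    # pooled log-OR (average across imputations)
    w = mean(se ** 2 for se in ses)          # within-imputation variance
    b = variance(log_ors) if m > 1 else 0.0  # between-imputation variance
    t = w + (1 + 1 / m) * b                  # total variance
    se = math.sqrt(t)
    return math.exp(q_bar), math.exp(q_bar - z * se), math.exp(q_bar + z * se)
```

When the imputations disagree, the between-imputation term widens the interval, which is how multiple imputation reflects uncertainty about the missing values.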

User comments were analyzed qualitatively by three coauthors using a thematic coding approach whereby emergent themes in perceptions of usability were identified via inductive analysis and a consensus process.27

Results

Of 237 distributed surveys, we received 165 responses (70% response rate): 105 were submitted via REDCap and 60 were completed on paper. Three were excluded from analysis because they contained no responses in any QUIS section and the respondents had not identified themselves as non-users (self-identified non-users could exit the survey before reaching the QUIS sections). Of the remaining 162 completed surveys, 151 respondents identified themselves as system users and 11 as non-users.
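The response counts above can be checked with a few lines of arithmetic; all numbers come from the text.

```python
distributed, received, excluded = 237, 165, 3
online, paper = 105, 60
users, non_users = 151, 11

response_rate = received / distributed   # 165 / 237
analyzed = received - excluded

print(f"response rate: {response_rate:.1%}")  # 69.6%, reported as 70%
print(f"analyzed surveys: {analyzed}")         # 162
assert online + paper == received
assert analyzed == users + non_users
```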

The Wilcoxon rank sum test showed no significant difference between QUIS responses to the online and paper versions (significance threshold p<0.0014, Bonferroni-corrected for 35 tests), except for three subscale items: ‘I need to scroll to review information’ in Screen, and ‘Terminology relates well to the work you are doing: Never–Always’ and ‘Messages which appear on the screen are: Inconsistent–Consistent’ in Terminology and System Information. We also found no remarkable differences in demographics or usage characteristics between online and paper survey respondents. For details about our respondents by survey method, see Table S1 of our online supplement at www.jamia.org.

Respondent profile

The mean respondent age was 43.8 years (SD 10.8; range: 23–76); 83 females (51.2%) and 76 males (46.9%) participated. Respondents identified themselves as follows (number per role is in parentheses): LPN (10), MD (85), NP (14), PA (3), RN (36), and Other (10).

We asked the 11 respondents who identified themselves as non-users to indicate a reason for non-use. Reasons included the following (frequency of responses is indicated in parentheses): Never been trained to use it (2); I do not find it useful (1); I do not have access to it (1); It is too difficult to use during my work (1); and Other (6). We thematically organized respondents' comments into the following categories of non-use: Someone else accesses the system when I need it (4); Do not know about the system (1); and Do not need the system (3). Comments included: ‘Don't know what it's about nor what to use it for’; ‘I have not worked in the emergency department since getting access’; ‘Had someone else get me information from it when I needed it (rarely)’; and ‘Info had already been pulled up before I saw patient needing this info, found it very helpful but haven't had a need/chance to use it myself.’

Table 1 shows users' average weekly time spent using the HIE. Overall, 43% of users reported using the system for <1 h a week. On average, users had personally used or were familiar with 15 of the 25 technologies listed, which ranged from ‘email’ to ‘CAD computer-aided design.’

Table 1.

Summary of health information exchange system usage

| Average system use per week (N=150, 1 missing) | Number of respondents |
|---|---|
| <1 h | 65 (43%) |
| 1 h–<4 h | 58 (39%) |
| ≥4 h | 27 (18%) |

The table shows the number of users who reported using the HIE for given lengths of time per week; the percentage of total users is shown in parentheses. Because only six respondents reported over 10 h of use, the ‘4 h to <10 h’ and ‘over 10 h’ categories were merged into a single ‘≥4 h’ category.
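The category merge described in the note can be reproduced from the reported counts. The split of the ≥4 h row (21 respondents at 4 h to <10 h, 6 over 10 h) follows directly from Table 1 and its note; the `COLLAPSE` mapping and `collapse` function are illustrative names.

```python
from collections import Counter

# Counts by original QUIS usage category, derived from Table 1 and its note:
# 27 respondents reported >=4 h, 6 of whom reported over 10 h.
raw_counts = Counter({"<1 h": 65, "1 h-<4 h": 58, "4 h-<10 h": 21, ">10 h": 6})

# Sparse top categories are merged before fitting the ordinal model.
COLLAPSE = {"<1 h": "<1 h", "1 h-<4 h": "1 h-<4 h", "4 h-<10 h": ">=4 h", ">10 h": ">=4 h"}


def collapse(counts: Counter) -> Counter:
    merged = Counter()
    for category, n in counts.items():
        merged[COLLAPSE[category]] += n
    return merged


table1 = collapse(raw_counts)
total = sum(table1.values())
for category in ["<1 h", "1 h-<4 h", ">=4 h"]:
    print(f"{category:<9} {table1[category]:>3} ({table1[category] / total:.0%})")
```

Run as is, it prints 65 (43%), 58 (39%), and 27 (18%), matching Table 1.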

There was no significant difference between the ages of users and non-users (p=0.86) or their genders (p=0.52).

Perceived system usability

A QUIS score of 5 was neutral, a score of >5 was favorable, and a score of <5 was unfavorable. The mean score across all 35 items of the six QUIS scales was 6.5 (SD 1.4). Internal consistency was high (Cronbach's α ranged from 0.74 to 0.91), indicating a high degree of measurement reliability within each scale.28 29 For every item, over 50% of scores were above neutral (>5), suggesting that the system was perceived positively. A one-sample Wilcoxon test showed that all QUIS subscale item scores were significantly greater than 5 (p<0.0014, Bonferroni-corrected for 35 tests). For details about the distribution of all user QUIS scale scores, see Table S2 of our online supplement at www.jamia.org.
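The Bonferroni threshold used above is 0.05/35 ≈ 0.0014. The Methods mention a sign test against the neutral score of 5, and the Results report a one-sample Wilcoxon test. The sketch below shows only the simpler exact one-sided sign test, as an illustration of testing items against neutral; it is not the Wilcoxon signed-rank procedure, and the function names are hypothetical.

```python
from math import comb


def bonferroni_threshold(alpha: float, n_tests: int) -> float:
    """Per-test significance level that keeps the family-wise error rate at alpha."""
    return alpha / n_tests


def sign_test_greater(scores: list[float], neutral: float = 5.0) -> float:
    """One-sided exact sign test of H0: median == neutral vs H1: median > neutral.
    Scores equal to the neutral point are dropped, as is conventional."""
    above = sum(s > neutral for s in scores)
    below = sum(s < neutral for s in scores)
    n = above + below
    # P(X >= above) for X ~ Binomial(n, 0.5)
    return sum(comb(n, k) for k in range(above, n + 1)) / 2 ** n


threshold = bonferroni_threshold(0.05, 35)
print(round(threshold, 4))  # 0.0014
```

For example, if ten non-neutral responses were all above 5, the one-sided p value would be 1/1024 ≈ 0.00098, which falls below the corrected threshold.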

There was a relatively small number of NA values in the paper surveys received from users (117, or 5% of all potential NA responses on the paper version), and these were treated as missing data in our analysis. Items with 10 or more NA responses belonged to the Terminology and System Information scale (ie, ‘help messages appear on screen,’ ‘messages which appear on screen,’ and ‘error messages’) and the System Capabilities scale (ie, ‘correcting your mistakes’). AUC values ranged from 0.67 to 0.88, suggesting that missing data were likely to be missing at random. We therefore assumed that there was likely no systematic pattern of missing data and report the following results from ordinal logistic regression models fit with multiple imputation.
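The AUC (C-statistic) reported above measures how well the observed variables predict whether an item is missing. Given predicted probabilities of missingness from a logistic model, the C-statistic equals the Mann-Whitney probability that a randomly chosen missing case scores higher than a randomly chosen observed case. The sketch below computes that quantity; the input scores are hypothetical, and `c_statistic` is an illustrative name, not a Stata command.

```python
def c_statistic(scores_missing: list[float], scores_observed: list[float]) -> float:
    """Area under the ROC curve via the Mann-Whitney formulation.
    scores_*: predicted probabilities of missingness for cases whose value
    was missing vs observed. Ties count one half."""
    wins = 0.0
    for a in scores_missing:
        for b in scores_observed:
            if a > b:
                wins += 1.0
            elif a == b:
                wins += 0.5
    return wins / (len(scores_missing) * len(scores_observed))
```

A value of 0.5 means missingness is unrelated to the predictors. Values above 0.5, such as the 0.67 to 0.88 observed here, mean missingness is partly explained by observed variables, which is the situation multiple imputation under missing at random is designed to handle.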

In multivariate analyses, higher average weekly system use was associated with higher scores in Overall Reactions (OR 1.50, p<0.01), Learning (OR 1.32, p<0.05), and System Functionality (OR 1.34, p<0.01). Trust was not significantly predictive of system usage. Table 2 shows details of our regression model.
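Under the proportional odds model, an OR such as 1.50 for Overall Reactions means that each one-point increase in the scale score multiplies the odds of reporting a higher usage category by about 1.5, at either cutpoint, with other covariates held fixed. The sketch below demonstrates this property numerically. It uses the parameterization logit P(Y ≤ j) = κ_j − η, and the cutpoints and linear-predictor values are hypothetical, not fitted values from this study.

```python
import math


def category_probs(eta: float, cutpoints: list[float]) -> list[float]:
    """Category probabilities under a proportional-odds model with
    logit P(Y <= j) = kappa_j - eta and increasing cutpoints kappa_j."""
    cum = [1 / (1 + math.exp(-(k - eta))) for k in cutpoints] + [1.0]
    return [cum[0]] + [cum[j] - cum[j - 1] for j in range(1, len(cum))]


def odds_higher(probs: list[float], j: int) -> float:
    """Odds of being above category j (0-based): P(Y > j) / P(Y <= j)."""
    below = sum(probs[: j + 1])
    return (1 - below) / below


if __name__ == "__main__":
    cuts = [-0.3, 1.5]        # hypothetical cutpoints for <1 h | 1 h-<4 h | >=4 h
    beta = math.log(1.50)     # OR reported for Overall Reactions
    p0 = category_probs(0.0, cuts)
    p1 = category_probs(beta, cuts)  # one-point higher scale score
    for j in (0, 1):
        print(j, round(odds_higher(p1, j) / odds_higher(p0, j), 6))
```

The ratio is the same at both cutpoints, and that constancy is exactly what the proportional odds assumption test in the Methods checks.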

Table 2.

Results of ordinal logistic regression models fit for each QUIS scale predictor

| QUIS scale | Scale score | Age | Professional role | System access time | Computer literacy | Trust |
|---|---|---|---|---|---|---|
| Overall Reactions | 1.50** (1.17 to 1.93) | 0.97 (0.93 to 1.01) | 0.87 (0.44 to 1.75) | 1.16 (0.80 to 1.66) | 1.02 (0.96 to 1.08) | 1.02 (0.88 to 1.18) |
| Screen | 1.30 (0.98 to 1.74) | 0.97 (0.93 to 1.01) | 0.89 (0.04 to 1.78) | 1.14 (0.79 to 1.63) | 1.01 (0.96 to 1.07) | 1.07 (0.94 to 1.22) |
| Terminology and System Information | 1.25 (0.99 to 1.57) | 0.97 (0.93 to 1.01) | 0.93 (0.47 to 1.87) | 1.14 (0.79 to 1.63) | 1.02 (0.96 to 1.07) | 1.06 (0.93 to 1.21) |
| Learning | 1.32* (1.04 to 1.67) | 0.97 (0.93 to 1.01) | 0.97 (0.48 to 1.96) | 1.19 (0.82 to 1.72) | 1.02 (0.96 to 1.08) | 1.05 (0.92 to 1.21) |
| System Capabilities | 1.28 (0.96 to 1.70) | 0.97 (0.93 to 1.01) | 0.93 (0.47 to 1.87) | 1.13 (0.79 to 1.62) | 1.02 (0.97 to 1.09) | 1.05 (0.92 to 1.20) |
| System Functionality | 1.34** (1.08 to 1.68) | 0.97 (0.93 to 1.01) | 0.88 (0.43 to 1.76) | 1.23 (0.85 to 1.78) | 1.02 (0.96 to 1.08) | 1.06 (0.93 to 1.20) |

Values are OR estimates (95% CIs) and are derived from five imputed data sets.

Average OR estimates are shown for ordinal logistic regression models of the system usage outcome (average weekly time spent using the system), each fit separately with one QUIS scale predictor and five other independent variables (system access time, training role, computer literacy, age, and trust). Only the QUIS scales Overall Reactions, Learning, and System Functionality were significant predictors of system usage.

*p<0.05; **p<0.01.

QUIS, Questionnaire for User Interaction Satisfaction 7.0.

A total of 98 comments were collected for the QUIS scales and the Trust scale (number per scale is in parentheses): Overall Reactions (29), Screen (13), Terminology and System Information (6), Learning (10), System Capabilities (7), System Functionality (21), and Trust (12). Table 3 shows themes that emerged and their frequencies. Representative quotes are also shown.

Table 3.

Themes in user comments

| Theme (n) | Example comment |
|---|---|
| HIE has great positive value (23) | ‘Invaluable, please keep this system operational! It cannot be replaced when patients jump from hospital to hospital. It saves time and resources.’ |
| HIE could be made more user-friendly (17) | ‘I love e-health but find it is very cumbersome to use.’ |
| | ‘I think the way you search for information and view different locations' records could be more user-friendly. Switching between providers needs to be easier.’ |
| Technical difficulties with system functionalities (eg, logon, logoff, timeout) (15) | ‘I loved being able to get labs and information on my patients from other facilities… The information was very valuable. However, my log in NEVER worked. It was beyond frustrating to stay on.’ |
| Need for HIE varies by user (12) | ‘This system is good in an office-based practice, not the ER. We do not have enough time to navigate in the program!! We have sick patients in the room. We see almost 40 patients per shift!’ |
| | ‘Since I am a pediatrician and have access to (my own hospital's) computer system (that includes most pediatric patients in the region), I have stopped using this system entirely.’ |
| HIE provides data in a timely manner (9) | ‘This system has allowed our staff to retrieve important medical reports in a timely manner.’ |
| HIE can be improved with some additional features (7) | ‘Would be nice to separate labs from tests from summaries.’ |
| | ‘Desirable elements would be search by result type, tabular display of like results, graphical display of like results, transcriptions from all hospitals.’ |
| HIE needs more tech support (5) | ‘NEED to have a formal tutorial online and/or in person.’ |
| HIE has reliable tech support (1) | ‘Whenever I have had to call for tech support they always call right back - I work nights so this is important to me and the physicians I work with.’ |
| HIE is easy to learn (2) | ‘I feel most could learn this system quickly.’ |
| HIE could use more data (eg, history and physical checklists, discharge summaries) (23) | ‘Labs, radiology, ultrasound almost always there, but 90% of the time have to call for a discharge summary following hospitalization.’ |
| HIE needs more data from other organizations (17) | ‘It would be helpful if all organizations had discharge summaries and every hospital used the network.’ |
| Shared information with other facilities is helpful (8) | ‘It is often helpful to see what was done at other facilities; can usually rely on results unless physical findings prove to contradict other facilities’ results.’ |
| HIE data are reliable (4) | ‘I believe the info is reliable and helpful.’ |

Frequencies are shown in parentheses next to each theme.

Trust

We conducted an exploratory analysis of the Trust variable and found an internal consistency of 0.62 (Cronbach's α for five items). The mean Trust scale score for all respondents was 3.6 on a scale of 1 to 5, where 3 was neutral and 5 was very favorable (median 3.8, SD 0.56). Table 4 shows the distribution of responses to each Trust item as a percentage of total responses.

Table 4.

Trust scale results

| Trust item (Cronbach's α=0.62) | Strongly disagree | Disagree | Neutral | Agree | Strongly agree |
|---|---|---|---|---|---|
| I trust the care plans performed by outside institutions (145) | 3% | 2% | 36% | 48% | 11% |
| I can rely on test results from outside institutions (147) | 1% | 1% | 11% | 68% | 19% |
| There is a high level of risk to delivering high quality care when using data from an outside institution (146) | 11% | 45% | 24% | 16% | 4% |
| Many institutions that provide data to MSeHA are not as competent as we are to deliver high quality care (147) | 12% | 46% | 31% | 9% | 3% |
| My use of MSeHA is impacted by my concerns about data from outside institutions (144) | 14% | 44% | 26% | 13% | 3% |

We reverse-coded the last three statements in our analyses so that all statements were consistent in direction. Summed subscale scores were used in our regression model; responses ranged from strongly disagree (score=1) to strongly agree (score=5), and higher levels of trust correspond to higher Trust index scores. The total number of respondents for each Trust item is indicated in parentheses.

MSeHA, MidSouth eHealth Alliance.
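The Trust index described in the note to Table 4 sums five 1 to 5 responses after reverse-coding the three negatively worded statements. The sketch assumes the usual reverse-coding rule on a 1 to 5 scale (6 − x) and the Table 4 item order; `trust_index` and `REVERSED` are illustrative names.

```python
# 0-based positions of the last three (negatively worded) statements in Table 4.
REVERSED = {2, 3, 4}


def trust_index(responses: list[int]) -> int:
    """Sum five Likert responses (1 = strongly disagree ... 5 = strongly agree),
    reverse-coding negatively worded items as 6 - x so that higher = more trust.
    The result ranges from 5 to 25."""
    if len(responses) != 5 or any(r not in range(1, 6) for r in responses):
        raise ValueError("expected five responses coded 1-5")
    return sum(6 - r if i in REVERSED else r for i, r in enumerate(responses))
```

For example, a respondent who agrees (4) with both positively worded statements and disagrees (2) with all three negatively worded ones scores 20.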

Discussion

Health information exchange systems are a cutting-edge component of healthcare, and recent legislation has catalyzed state-level collaborations to implement this technology. This study is one of the first to formally assess the usability of a regional HIE in emergency department and ambulatory clinic settings and to identify opportunities for enhancing HIEs.

Overall, we found an encouraging level of usability across all users. Their reactions to system usability in general and to specific aspects of usability (effort required to learn the system, system functionality) were good predictors of the average weekly time that they engaged with the system. The relationship between learning effort and system usage was also supported by qualitative comments about training and the need for ‘just in time’ online or in-person tutorials.

A previous study of this HIE noted its potential to reduce testing and admissions;16 here, survey respondents believed that additional data, such as discharge summaries and information from additional medical facilities, could further increase the system's utility. One user wished that ‘more of the state of Tennessee was included in the database… also Arkansas and Mississippi.’

Importantly, our results suggest that an HIE can achieve a high level of usability with relatively simple groupings of data, as produced by a rapid development approach,16 which allows a usable system to be implemented relatively quickly in clinical settings.30 31 We believe that using an information model that consolidates data, rather than aggregating it, may allow an HIE to be implemented quickly with reasonably high usability.

We hypothesized that usage would be partially driven by user trust of other participating organizations. Surprisingly, this hypothesis was not confirmed: trust was not an important determinant of usage, although most users reported that they could trust the HIE. Results from a validated trust scale may have differed. We also found no significant difference between the ages or genders of users and non-users, in contrast to previous studies.19 32

Our findings have several limitations. First, more than half of the respondents had used the system for ≤2 years, and 43% used it for <1 h per week. Second, although the model we chose to examine the relationship between usability and usage assumes that usability predicts usage, the relationship likely runs in both directions. Usability may have no impact on measurable use in settings where use is a job requirement or where the system under study is the only way to meet a user's need (ie, ‘captive use’).33 Third, the usage patterns reported in this survey may not accurately represent those of non-respondents. For example, a leadership change during the study period caused high physician turnover at two or more sites, which limited our ability to obtain responses from experienced users at those sites. However, the number of affected physicians was small relative to our sample size; therefore, our findings were likely not adversely affected. Regarding the method of survey distribution, we chose to maximize our response rate by disseminating the survey according to respondent preferences. To minimize differences between the online and paper versions of the survey, we made the two versions as similar as possible and reconciled any remaining differences before analysis. For example, we transformed the online 10-point scale to the paper version's 9-point scale to maintain consistency with the standard QUIS scale; it is unlikely that this adjustment affected the validity or reliability of our results.34 We also treated NA responses on paper as missing, which may have inflated the prevalence of missing responses to some questions. However, because the number of NA responses was small, it is unlikely that this treatment significantly affected our results.

Lessons learned

The HIE reported here is one of an increasing number of regional data-sharing initiatives deployed since 2001.35 Unlike failed attempts at building similar networks in the 1990s, our HIE was built on a relatively low-cost data consolidation model designed to evolve with changes in patient data management.16 We learned that if an HIE meets basic expectations shared among members of its user base, an acceptable level of usability is achievable. This finding is encouraging for developers with limited budgets who need to create HIEs. Although evaluating an HIE from the standpoint of its users is only one measure of a system's success, it is a critical one for determining how to improve the system and promote continued adoption.

Conclusions

Health information exchange systems are an emergent component of the healthcare landscape. This formal usability assessment of a regional HIE has documented overall encouraging levels of usability that, perhaps not surprisingly, were associated with the weekly exposure of the user to the system. This study also uncovered additional data that could enhance the meaningful use of the exchange, and some alternative instructional approaches to help maintain comfort with the system in the face of infrequent use. Overall, this study supports the rapid development approach to implementing an HIE.

Supplementary Material

Footnotes

Funding: This project was financially supported by the Agency for Healthcare Research and Quality contract 290-04-0006, the State of Tennessee, and Vanderbilt University. Funding for REDCap is provided by 1 UL1 RR024975 from the National Center for Research Resources/National Institutes of Health.

Competing interests: None.

Ethics approval: This study was approved by the Vanderbilt University Institutional Review Board.

Provenance and peer review: Commissioned; externally peer reviewed.

References

1. Lorenzi N. Strategies for Creating Successful Local Health Information Infrastructure Initiatives. Washington DC, USA: US Department of Health and Human Services, 2003:1–27

2. Thompson T, Brailer D. The Decade of Health Information Technology: Delivering Consumer-centric and Information-rich Health Care. Framework for Strategic Action. Washington DC, USA: Department of Health and Human Services, 2004:178

3. Shapiro JS, Kannry J, Lipton M, et al. Approaches to patient health information exchange and their impact on emergency medicine. Ann Emerg Med 2006;48:426–32

4. Finnell JT, Overhage JM, Dexter PR, et al. Community clinical data exchange for emergency medicine patients. AMIA Annu Symp Proc 2003:235–8

5. Cheng PH, Chen HS, Lai F, et al. The strategic use of standardized information exchange technology in a university health system. Telemed J E Health 2010;16:314–26

6. Weir CR, Crockett R, Gohlinghorst S, et al. Does user satisfaction relate to adoption behavior? An exploratory analysis using CPRS implementation. Proc AMIA Symp 2000:913–17

7. Davis F. Perceived usefulness, perceived ease of use, and user acceptance of information technology. MIS Quarterly 1989;13:319–40

8. Johnson KB. Barriers that impede the adoption of pediatric information technology. Arch Pediatr Adolesc Med 2001;155:1374–9

9. Verhoeven F, Steehouder MF, Hendrix RM, et al. Factors affecting health care workers' adoption of a website with infection control guidelines. Int J Med Inform 2009;78:663–78

10. Gould DJ, Terrell MA, Fleming J. A usability study of users' perceptions toward a multimedia computer-assisted learning tool for neuroanatomy. Anat Sci Educ 2008;1:175–83

11. Gefen D, Karahanna E, Straub D. Trust and TAM in online shopping: an integrated model. MIS Quarterly 2003;27:51–90

12. Miller RH, Sim I. Physicians' use of electronic medical records: barriers and solutions. Health Aff (Millwood) 2004;23:116–26

13. Anderson JG, Aydin CE. Evaluating the impact of health care information systems. Int J Technol Assess Health Care 1997;13:380–93

14. Koivunen M, Hatonen H, Valimaki M. Barriers and facilitators influencing the implementation of an interactive Internet-portal application for patient education in psychiatric hospitals. Patient Educ Couns 2008;70:412–19

15. Midsouth e-Health Alliance, 2004. http://www.midsoutheha.org (accessed 1 Feb 2011).

16. Frisse ME, King JK, Rice WB, et al. A regional health information exchange: architecture and implementation. AMIA Annu Symp Proc 2008:212–16

17. Johnson KB, Gadd C. Playing smallball: approaches to evaluating pilot health information exchange systems. J Biomed Inform 2007;40(6 Suppl):S21–6

18. Davis F, Bagozzi R, Warshaw P. User acceptance of computer technology: a comparison of two theoretical models. Manag Sci 1989;35:982–1003

19. Venkatesh V, Morris MG. Why don't men ever stop to ask for directions? Gender, social influence, and their role in technology acceptance and usage behavior. MIS Quarterly 2000;24:115–39

20. Chin JP, Diehl VA, Norman KL. Development of an instrument measuring user satisfaction of the human–computer interface. Proc CHI 1988:213–21

21. Harper B, Slaughter L, Norman KL. Questionnaire administration via the WWW: a validation and reliability study for a user satisfaction questionnaire. In: Proc WebNet, 1997:808–10

22. Frisse ME. State and community-based efforts to foster interoperability. Health Aff (Millwood) 2005;24:1190–6

23. Vest JR, Zhao H, Jasperson J, et al. Factors motivating and affecting health information exchange usage. J Am Med Inform Assoc 2011;18:143–9

24. Harris PA, Taylor R, Thielke R, et al. Research electronic data capture (REDCap)–a metadata-driven methodology and workflow process for providing translational research informatics support. J Biomed Inform 2009;42:377–81

25. Royston P. Multiple imputation of missing values. Stata J 2004;4:227–41

26. Royston P. Multiple imputation of missing values: further update of ice, with an emphasis on categorical variables. Stata J 2009;9:466–77

27. Boyatzis R. Transforming Qualitative Information: Thematic Analysis and Code Development. Thousand Oaks, CA: SAGE, 1998

28. Landauer T. Research methods in human-computer interaction. In: Helander M, Landauer T, Prabhu P, eds. Handbook of Human-Computer Interaction. New York, NY: Elsevier, 1998:203–27

29. Nunnally J, Bernstein I. Psychometric Theory. New York, NY: McGraw-Hill, 1978

30. Miller RH, Miller BS. The Santa Barbara County Care Data Exchange: what happened? Health Aff (Millwood) 2007;26:568–80

31. DeBor G, Diamond C, Grodecki D, et al. A tale of three cities—where RHIOs meet the NHIN. J Healthc Inf Manag 2006;20:63–70

32. Morris MG, Venkatesh V. Age differences in technology adoption decisions: implications for a changing work force. Pers Psychol 2000;53:375–403

33. Adams DA, Nelson RR, Todd PA. Perceived usefulness, ease of use, and usage of information technology: a replication. MIS Quarterly 1992;16:227–47

34. Matell MS, Jacoby J. Is there an optimal number of alternatives for Likert scale items? Study I: reliability and validity. Educ Psychol Meas 1971;31:657–74

35. Halamka J, Aranow M, Ascenzo C, et al. E-Prescribing collaboration in Massachusetts: early experiences from regional prescribing projects. J Am Med Inform Assoc 2006;13:239–44
