Abstract

A large-scale study of 484 elementary school children (6–10 years) performing word repetition tasks in their native language (L1, Japanese) and a second language (L2, English) was conducted using functional near-infrared spectroscopy. Three factors presumably associated with cortical activation were investigated: language (L1/L2), word frequency (high/low), and hemisphere (left/right). L1 words elicited significantly greater brain activation than L2 words, regardless of semantic knowledge, particularly in the superior/middle temporal and inferior parietal regions (angular/supramarginal gyri). The greater L1-elicited activation in these regions suggests that they are phonological loci reflecting processes tuned to the phonology of the native language, whereas phonologically unfamiliar L2 words were processed like nonword auditory stimuli. Activation was bilateral in the auditory and superior/middle temporal regions. Hemispheric asymmetry was observed in the inferior frontal region (right-dominant) and in the inferior parietal region, where laterality interacted with word frequency: low-frequency words elicited more right-hemispheric activation (particularly in the supramarginal gyrus), whereas high-frequency words elicited more left-hemispheric activation (particularly in the angular gyrus). The present results reveal strong involvement of a bilateral language network in children’s brains, with greater reliance on right-hemispheric processing while unfamiliar or low-frequency words are being acquired. They further suggest that laterality in the inferior parietal region shifts from right to left as lexical knowledge increases, irrespective of language.

Keywords: foreign language, functional near-infrared spectroscopy (fNIRS), learning, native language, phonology

Introduction

Native, or first, language (L1) acquisition is a natural phenomenon, and it occurs even without intervention. Skinner (1957) suggested that a child acquires L1 through imitating the language of its parents or caregivers. Children do imitate adults, and repetition of new words and phrases is a basic feature of a child’s speech. A body of studies in various research domains, including psychology, linguistics, and anthropology, has intensively discussed the role of repetition (often referred to as imitation) in language acquisition, reporting that repetition facilitates grammatical and lexical development (Corrigan 1980; Snow 1981, 1983; Kuczaj 1982; Speidel and Nelson 1989; Perez-Pereira 1994).

On the other hand, learning a nonnative, or second, language (L2) is not always as easy as acquiring L1. Repetition in a foreign language is more difficult than repetition in L1 because it requires learners to process unfamiliar speech sounds; in particular, it demands auditory perception skills as well as memory and articulation skills. Previous studies suggest that the ability to replicate unfamiliar foreign pronunciation and intonation is associated with the capacity to learn foreign languages (Tahta et al. 1981; Service 1992). Therefore, the ability to repeat unfamiliar foreign sounds can be considered an indicator of a predisposition for foreign language learning and of the robustness of some of the neurofunctional processes involved in speech.

In recent years, a large body of neuroimaging and neurophysiological studies has been devoted to the neural organization of language (Hickok and Poeppel 2000; Kutas and Federmeier 2000; Ullman 2001; Friederici 2002; Kaan and Swaab 2002). These studies have not only converged with the findings of clinical aphasiology but have also begun to broaden our understanding of the neural basis of language processing. The left perisylvian region of the human cortex is known to play a major role in language processing (Galaburda et al. 1978; Caplan and Waters 1999; Geschwind and Miller 2001). At the same time, it has become increasingly clear that individual brain regions within and outside the traditional left perisylvian areas, as well as language networks encompassing frontal, temporal, and/or parietal regions, contribute differentially to specific aspects of linguistic computation, such as syntax, semantics, and phonology, from the word level to sentence processing (Grodzinsky 2000; Price 2000; Friederici 2002; Indefrey and Levelt 2004; Poeppel and Hickok 2004; Szaflarski et al. 2006).

Recent research has also demonstrated that both the left and right hemispheres (LH, RH) contribute to various aspects of language processing in the normal brain (Beeman and Chiarello 1998; Gandour et al. 2000; Friederici 2002; Zatorre et al. 2002; Friederici and Alter 2004), although both historical and current work still assigns the LH the primary role in language processing. Because previous neuroimaging work has indicated that word repetition tasks elicit widespread bilateral activation in areas associated with auditory processing of speech (Howard et al. 1992; Castro-Caldas et al. 1998; McCrory et al. 2000; Price 2000; Liégeois et al. 2003), in the present study we employed a word repetition task, a robust predictor of language learning ability in children (Tahta et al. 1981; Service 1992), and explored its neural substrate.

To date, positron emission tomography (PET), functional magnetic resonance imaging (fMRI), event-related potentials, and magnetoencephalography have been used extensively to build a detailed picture of the brain–language relationship. In addition, a relatively new brain imaging technique, functional near-infrared spectroscopy (fNIRS), has proved to be an effective tool for monitoring local changes in cerebral oxygenation and hemodynamics during functional brain activation. Functional NIRS has a major advantage in developmental studies with children, especially large-scale studies: unlike PET, which requires injection of a radioactive substance, or fMRI, which uses strong magnetic fields and is physically restrictive, fNIRS is a fully noninvasive and unrestrictive neuroimaging technique that enables real-time monitoring of brain hemodynamics in children (Hoshi and Chen 2002), infants (Meek et al. 1998; Taga et al. 2003; Homae et al. 2006, 2007; Bortfeld et al. 2007, 2009; Minagawa-Kawai et al. 2007, 2009), and even neonates (Sakatani et al. 1999; Peña et al. 2003), as well as adults (Maki et al. 1995; Watanabe et al. 1998). Its components and setup are compact compared with those of fMRI and PET, and the measurement probes can be applied quickly and easily, allowing large datasets to be acquired efficiently. In addition, fNIRS tolerates participant motion during measurement to a greater degree than fMRI and PET (Watanabe et al. 1998; Ikegami and Taga 2008; Hull et al. 2009), in which the head position must be strictly fixed and vocalization may induce severe motion artifacts (Hinke et al. 1993; Yetkin et al. 1995; Birn et al. 1998, 1999; Barch et al. 1999; Wilson et al. 2004). Given that elicited imitation is necessarily accompanied by articulation and small head motions, this advantage makes fNIRS particularly well suited to the language task employed in the current study.

In general, functional neuroimaging studies of children pose unique scientific, ethical, and technical challenges. Although there are numerous lesion and neuroimaging studies on the brain–language relationship, most have small samples. In addition, unavoidable differences in age, tasks, culture, L2-learning environments, and so on make it difficult to see the overall picture across studies. Small samples also tend to reduce statistical power, limiting the interpretation of results. In practice, however, it is often difficult for researchers to recruit participants and acquire data, especially from children. Recruitment is particularly challenging in studies of normally developing children because they receive no direct benefit from the research, and this difficulty increases for longitudinal studies. Moreover, data acquisition from children is restricted by many factors, including restlessness, motion, and a lack of child-friendly language tasks, as children are unable to comply with complicated tasks for long periods of time. For these reasons, most studies focus on adults, infants, or patients. As language skills continue to develop rapidly during the school-age years, systematic observation of functional brain development (in both L1 and L2) is crucial. While behavioral studies are abundant, only a few neuroimaging studies have dealt with normally developing school-aged children (ca. 6–12 years) (Gaillard et al. 2003a, 2003b; Sachs and Gaillard 2003; Szaflarski et al. 2006), and neuroimaging literature on L2 acquisition in this age range is even scarcer, although studies of older children have been conducted (Sakai 2005; Tatsuno and Sakai 2005).
Furthermore, previous neuroimaging studies regarding children focused mainly on perception or comprehension rather than articulation or production because of instrumental limitations including articulation-induced motion artifacts.

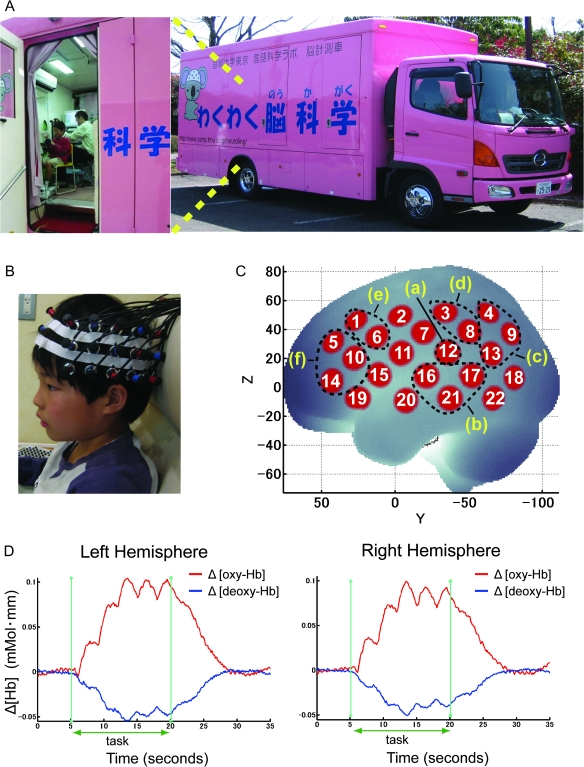

To overcome these limitations, we conducted a large-scale 3-year cohort study enrolling approximately 500 normally developing elementary school children (6–10 years of age) per year in Japan. In this paper, we report a cross-sectional analysis of the data obtained in the middle year of the cohort study. We divided the children into 3 age groups for the initial analyses and pooled them for the subsequent analyses. We used fNIRS as the data acquisition tool and a basic word repetition task as a predictor of language learning ability. To fully exploit the merits of fNIRS in a large-scale neuroimaging study of elementary school children, we installed an fNIRS system in a mobile laboratory, shown in Figure 1A, so that the neuroimaging facility could be transported to the elementary schools.

Figure 1.

fNIRS measurements. (A) Our original neuroimaging vehicle. (B) Close-up view of the fNIRS equipment. fNIRS data were obtained using a 44-channel spectrometer (Hitachi ETG-4000). A 3 × 5 array of 8 laser diodes and 7 light detectors was applied, resulting in 22 channels on each side of the participant’s head. (C) Cortical projection points of the fNIRS measurements (locations of the 22 channels) and the 6 ROIs defined for language processing, mapped onto the MNI standard brain coordinate system by spatial registration. This panel shows the left hemisphere; the locations of the 22 channels and 6 ROIs on the RH are symmetrical to those on the LH. The 6 defined ROIs: (a) the primary and auditory association cortices (BA 41, 42) with channel 12, (b) the vicinity of Wernicke’s area, the posterior part of the superior/middle temporal gyri (BA 21, 22) with channels 16, 17, and 21, (c) the angular gyrus (BA 39) with channels 4, 9, and 13, (d) the supramarginal gyrus (BA 40) with channels 3 and 8, (e) the pars opercularis, part of Broca’s area (BA 44), with channels 1 and 6, and (f) the pars triangularis, part of Broca’s area (BA 45), with channels 5, 10, and 14. (D) An example time course of [oxy-Hb] and [deoxy-Hb] in the grand-averaged data of the 392 participants for the channel that showed the highest t-value in [oxy-Hb] signals during the word repetition tasks (channel 6 on the LH; the time course of the hemodynamic response at the homologous channel on the RH is also shown). Red line: Δ[oxy-Hb]; blue line: Δ[deoxy-Hb]; vertical green lines: task onset and end. Increases in [oxy-Hb] and decreases in [deoxy-Hb] indicate brain activation.

Because the language system develops dramatically during childhood, we expected brain functions and structures to develop as well. With this in mind, we first investigated whether cortical activation during a word repetition task changes with age. Because age differences among our participants produced no salient differences, we then investigated the factors that may influence cortical representation (language: L1/L2; word frequency: high/low). We also explored the characteristics of language-related regions of interest (ROIs) and hemispheric laterality with respect to L1 and L2 processing in the developing brains of school-age children. In addition, the characteristics of [oxy-Hb] and [deoxy-Hb] signals were compared. At this stage, presenting a broad view is of great importance because previous studies of this age group have been small, and building a systematic picture from them is difficult because of differences in tasks, neuroimaging methods, participants’ cultural backgrounds, and languages or L2-learning environments. Hence, we have omitted the details of group and/or individual differences, which will be presented in subsequent reports. Unlike previous studies, which focused on either L1 or L2 but not both, this study addresses L1 and L2 processing in the same individuals at the same time, enabling us to compare different facets of language processing in the young developing brain.

Materials and Methods

Participants

The present study was carried out on 484 children (248 girls and 236 boys) from 7 elementary schools in Japan. Their mean age was 8.93 ± 0.89 years (mean ± standard deviation [SD]), with an age range of 6–10 years. All participants completed a questionnaire before the study began. Nonnationals and participants with psychiatric disorders were excluded from the analyses. The Edinburgh Handedness Inventory (Oldfield 1971) was used to determine hand dominance. Left-handed (8) and ambidextrous (38) children were excluded, and only right-handed participants (438) were analyzed further. Each participant’s parent gave written informed consent before the child’s participation, and each participant received a token of gratitude after the experiment. All procedures in this study were approved by the Human Subject Ethics Committee of Tokyo Metropolitan University.

Children’s Exposure to English

Participants of the same age differed in English proficiency because they had had different amounts of exposure to L2. Some public schools provided 45-min English lessons (11–35 school h/year), whereas others did not. Children attending public schools that did not provide English lessons had been exposed to English through commercial language schools and/or home study. The frequency of English lessons at commercial language schools was similar to that at public schools. For home study, parents or caretakers exposed their children to English using videos, CDs, and other learning materials. A few children with at least one parent who was a native English speaker took part in the project, but their data were excluded from the analyses because English was not a foreign language for them. The study also included some children from a private school that ran an immersion program, in which English was not a subject of study but the language through which other subjects, such as arithmetic, were taught. Immersion programs are often associated with bilingual societies such as the Province of Quebec in Canada, but this Japanese private school is located in a monolingual city and is not an international school; hence, these children were not excluded.

As described above, our participants had different levels of exposure to L2. The effect of L2 proficiency or exposure will be analyzed in a subsequent paper; the present report aims to provide an overall view of the cortical representation of L1 and L2.

Experimental Tasks

We employed a word repetition task; recordings of speech samples from a female native speaker of Japanese and a female native speaker of English were used as the experimental stimuli. We used 120 single words: 30 Japanese high-frequency words (Jpn_HF), 30 Japanese low-frequency words (Jpn_LF), 30 English high-frequency words (Eng_HF), and 30 English low-frequency words (Eng_LF). High-frequency words were defined as words with >50 occurrences per million, and low-frequency words as those with <5 occurrences per million. All words used in this experiment were emotionally neutral and taken from 2 corpora: that of Amano and Kondo (2000) for Japanese and that of Kučera and Francis (1967) for English. A list of all the words used in this study is provided in Supplementary Table 1. All Japanese words contained 4 morae (the Japanese syllabic unit), and all English words consisted of 2 syllables. The length of Japanese and English words was kept approximately equal (within ±10% difference). The mean durations of the words used in each task (30 words per task) were 643.0 ms (Jpn_HF), 648.4 ms (Jpn_LF), 737.5 ms (Eng_HF), and 725.9 ms (Eng_LF).

After the procedure was described to the children, they were seated in a chair and instructed to repeat aloud the words presented from a loudspeaker, as they heard them. The stimuli were presented at a comfortable volume (around 65 dB SPL). The order of the 4 tasks (Jpn_HF, Jpn_LF, Eng_HF, and Eng_LF) was counterbalanced, and the stimuli within each task were presented in blocks of 5 words. Each task consisted of 6 blocks presented in random order, while the order of stimuli within each block was kept fixed. Each block lasted 35 s: a 5-s prestimulus period, a 15-s stimulus period, a 10-s recovery period, and a 5-s poststimulus period. Before the experiment, children completed a brief practice session of the word repetition task using word stimuli that were not used in the experiment. Each experimental stimulus was presented only once per participant. During fNIRS measurement, children were instructed to look at a fixation point and, to minimize head motion, to hold their body as still as possible during the tasks. Their oral repetition responses were recorded. During the practice session, an experimenter checked whether each child’s utterances were clear and head movement was within tolerance. When a participant’s utterances were so loud that vocalization might induce severe motion artifacts, or so soft that the voice might not be recorded, the participant was asked to adjust until his/her performance fell within tolerance. Children took a short rest between tasks.
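The text states that task order was counterbalanced and that the 6 blocks of each task were presented in random order with a fixed word order inside each block, but it does not name the counterbalancing scheme. The following minimal Python sketch assumes a balanced (Williams) Latin square for the 4 tasks; the helper names `balanced_latin_square` and `session_schedule` and the seeded shuffle are illustrative assumptions, not the procedure actually used.

```python
import random

TASKS = ["Jpn_HF", "Jpn_LF", "Eng_HF", "Eng_LF"]


def balanced_latin_square(n):
    """Williams-style balanced Latin square for an even number of conditions.

    Row r is the task order for participant group r. Every condition occupies
    every serial position once, and every ordered pair of adjacent conditions
    occurs exactly once across the rows.
    """
    if n % 2:
        raise ValueError("this construction assumes an even number of conditions")
    first = [0]
    for i in range(1, n):
        # Builds the first row 0, 1, n-1, 2, n-2, ...
        first.append((i + 1) // 2 if i % 2 else n - i // 2)
    return [[(c + r) % n for c in first] for r in range(n)]


def session_schedule(participant_index, blocks_per_task=6, seed=None):
    """Hypothetical helper: task order from the Latin square, then a random
    order of the blocks within each task (word order inside a block is fixed)."""
    rng = random.Random(seed)
    square = balanced_latin_square(len(TASKS))
    schedule = []
    for t in square[participant_index % len(TASKS)]:
        blocks = list(range(blocks_per_task))
        rng.shuffle(blocks)
        schedule.append((TASKS[t], blocks))
    return schedule
```

For example, participant 0 would receive the tasks in the order Jpn_HF, Jpn_LF, Eng_LF, Eng_HF. A Williams square is chosen here only because it balances both serial position and immediate carryover with 4 rows.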

For the behavioral data, whether each word was correctly repeated was evaluated phoneme by phoneme by a native Japanese and bilingual (Japanese and English) speaker. Repetition success rates were calculated and compared across the 4 tasks (Jpn_HF, Jpn_LF, Eng_HF, and Eng_LF) using a 2 × 2 repeated-measures analysis of variance (ANOVA) (2 languages × 2 word frequencies). Children were also asked to judge whether they knew each word heard in the 4 repetition tasks according to the following criteria: 1) I know the word and its meaning; 2) the word is familiar, but I do not know its meaning; or 3) the word is not familiar at all. The children’s semantic knowledge of the word stimuli (i.e., the relative frequency of rating 1) was compared across the 4 tasks using a 2 × 2 repeated-measures ANOVA (2 languages × 2 word frequencies). When a significant interaction was found, the effects were examined further using paired t-tests.
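For a 2 × 2 fully within-subject design, each effect has 1 numerator degree of freedom, so its repeated-measures F(1, n − 1) equals the squared one-sample t statistic of a per-participant contrast. The sketch below illustrates this equivalence in plain Python for data such as repetition success rates. It is not the SPSS procedure used in the study, the function names are hypothetical, and it returns test statistics only (P values would come from the F or t distribution, e.g., `scipy.stats.f.sf`).

```python
import math
from statistics import mean, stdev


def one_sample_t(scores):
    """t statistic for H0: mean(scores) == 0."""
    return mean(scores) / (stdev(scores) / math.sqrt(len(scores)))


def rm_anova_2x2(data):
    """data: per-participant tuples (L1_HF, L1_LF, L2_HF, L2_LF).

    Returns {effect: (F, (df1, df2))}, with F = t**2 of the contrast.
    """
    lang = [(a + b - c - d) / 2 for a, b, c, d in data]   # L1 minus L2
    freq = [(a - b + c - d) / 2 for a, b, c, d in data]   # HF minus LF
    inter = [a - b - c + d for a, b, c, d in data]         # HF-LF effect in L1 minus in L2
    df = (1, len(data) - 1)
    return {name: (one_sample_t(s) ** 2, df)
            for name, s in (("language", lang), ("frequency", freq),
                            ("interaction", inter))}


def paired_t(x, y):
    """Follow-up paired t-test between two conditions (t statistic only)."""
    return one_sample_t([a - b for a, b in zip(x, y)])
```

The language F is, by construction, the squared paired t comparing each child’s mean over the two L1 tasks with the mean over the two L2 tasks.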

The duration and intensity of the children’s utterances may have differed between L1 and L2, and a longer and louder utterance could conceivably lead to greater brain activation. To examine this possibility, an acoustic analysis was conducted and the results were compared across the 4 tasks. For the acoustic analysis, the root mean square (RMS; an estimate of sound intensity) was calculated from the amplitude of the speech signal; the RMS amplitude is the square root of the mean of the squared deviations of the waveform from the baseline. Because the amount of sound to which a child was exposed depends not only on sound intensity but also on its duration, the total sound exposure during the word repetition periods (6 blocks) was integrated and defined as TASK-RMS. The total for the rest periods (7 rest blocks interleaved with the 6 task blocks) was integrated in the same way and defined as REST-RMS. The ratio of TASK-RMS to REST-RMS was calculated for each child and expressed in decibels (dB). Thus, the temporal integration of acoustic intensity during the task periods (intensity × duration of speech sound, i.e., TASK-RMS/REST-RMS in dB) was determined for the 4 tasks (Jpn_HF, Jpn_LF, Eng_HF, and Eng_LF) and compared using a 2 × 2 repeated-measures ANOVA (2 languages × 2 word frequencies).
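The TASK-RMS/REST-RMS measure can be sketched as follows. The text does not specify the analysis frame length or whether the decibel conversion used 20·log10 (amplitude ratio) or 10·log10 (power ratio); this sketch assumes 20-ms frames and 20·log10, and the helper names are hypothetical.

```python
import math


def rms(samples):
    """Root mean square of waveform samples relative to a zero baseline."""
    return math.sqrt(sum(s * s for s in samples) / len(samples))


def integrated_rms(signal, fs, periods, frame_s=0.02):
    """Sum of frame-wise RMS x frame duration over (start_s, end_s) periods.

    frame_s (20 ms) is an assumed analysis frame; the paper does not give one.
    """
    frame = max(1, int(round(frame_s * fs)))
    total = 0.0
    for start_s, end_s in periods:
        seg = signal[int(start_s * fs):int(end_s * fs)]
        for k in range(0, len(seg) - frame + 1, frame):
            total += rms(seg[k:k + frame]) * frame / fs  # intensity x duration
    return total


def task_rest_ratio_db(task_rms, rest_rms):
    """TASK-RMS / REST-RMS in dB, treated as an amplitude ratio (assumption)."""
    return 20 * math.log10(task_rms / rest_rms)
```

With a constant 0.5 amplitude during a 1-s "task" and 0.05 during a 1-s "rest", the integrated values are 0.5 and 0.05 and the ratio is 20 dB; under a power-ratio convention it would be 10 dB.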

Data Acquisition—fNIRS

Functional NIRS data were obtained using a multichannel spectrometer (ETG-4000, Hitachi Medical Co., Tokyo, Japan). A 3 × 5 array of optodes consisting of 8 laser diodes and 7 light detectors, placed alternately at an interoptode distance of 3 cm to yield 22 channels, was applied on each side of the participant’s head (Fig. 1B). The middle column of the 3 × 5 array was placed along the coronal reference curve (T3-C3-Cz-C4-T4) of the international 10–20 system (Jurcak et al. 2005, 2007) so that the lower edge of the array was directly above the ear. Sensitivity to hemodynamic changes in the lateral cortical region beneath a pair of optodes is expected to be highest at the midpoint between the optodes (Okada et al. 1997), and this point is defined as the location of a channel. Optical data from each channel were collected at 2 wavelengths (695 and 830 nm) and analyzed using the modified Beer–Lambert law for a highly scattering medium (Cope et al. 1988). Changes in oxygenated ([oxy-Hb]), deoxygenated ([deoxy-Hb]), and total hemoglobin ([total-Hb]) signals were calculated in units of millimolar–millimeter (Maki et al. 1995). Optical signals were sampled at 10 Hz.
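The channel count and the hemoglobin conversion described above can be checked with a short sketch. It assumes a checkerboard arrangement of sources and detectors (one reading of "placed alternately"), in which every horizontally or vertically adjacent optode pair forms a channel; this yields 8 sources, 7 detectors, and 22 channels for a 3 × 5 array. The channel ordering here is arbitrary and need not match the ETG-4000 numbering. The modified Beer–Lambert step solves the 2-wavelength system without dividing by the optical path length, which is why results stay in mM·mm; the extinction coefficients are inputs, and any numbers used with it here are placeholders, not published values for 695 and 830 nm.

```python
import math


def grid_channels(rows=3, cols=5, spacing_cm=3.0):
    """Enumerate source-detector channels of a checkerboard optode grid.

    Optodes with (row + col) even are treated as sources (8 in a 3 x 5 grid)
    and the rest as detectors (7). Each horizontally or vertically adjacent
    source-detector pair is a channel located at the pair's midpoint.
    """
    channels = []
    for r in range(rows):
        for c in range(cols):
            if (r + c) % 2:  # detector: its channels are collected from the source side
                continue
            for dr, dc in ((0, 1), (1, 0), (0, -1), (-1, 0)):
                rr, cc = r + dr, c + dc
                if 0 <= rr < rows and 0 <= cc < cols:
                    midpoint = ((r + rr) / 2 * spacing_cm, (c + cc) / 2 * spacing_cm)
                    channels.append(((r, c), (rr, cc), midpoint))
    return channels


def mbll(i0, i, eps):
    """Modified Beer-Lambert law for two wavelengths.

    i0, i: baseline and current detected intensities, one per wavelength.
    eps:   [(eps_oxy, eps_deoxy), ...] per wavelength, decadic, per mM per mm.
    Returns (d_oxy, d_deoxy) as concentration change x path length (mM*mm).
    """
    d_od = [math.log10(a / b) for a, b in zip(i0, i)]  # change in optical density
    (a11, a12), (a21, a22) = eps
    det = a11 * a22 - a12 * a21
    d_oxy = (d_od[0] * a22 - a12 * d_od[1]) / det      # Cramer's rule
    d_deoxy = (a11 * d_od[1] - a21 * d_od[0]) / det
    return d_oxy, d_deoxy
```

A [total-Hb] change would then be the sum of the two returned values.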

Spatial Registration

After each participant had completed all 4 tasks, the positions of the optodes and scalp landmarks (i.e., nasion, right and left preauricular points, and Oz and Cz of the international 10–20 system) were measured using an electromagnetic 3D digitizer system (ISOTRAK II, Polhemus Inc.).

We employed virtual registration (Tsuzuki et al. 2007) to register fNIRS data to Montreal Neurological Institute (MNI) standard brain space (Brett et al. 2002). Briefly, using the position of each channel relative to anatomical landmarks, this method places a virtual probe holder on the scalp by simulating the holder’s deformation and thereby registers probes and channels onto reference brains, in place of the participant’s own brain, in a probabilistic manner. The optodes and channels were registered onto the surface of an averaged reference brain in MNI space (Okamoto et al. 2004), and the most likely coordinates of the channels were anatomically labeled using a Matlab function (Okamoto et al. 2009; available at http://brain.job.affrc.go.jp, last accessed February 18, 2011) that reads anatomical labeling information coded in the macroanatomical brain atlas of Tzourio-Mazoyer et al. (2002) and the Brodmann cytoarchitectonic area atlas available in the MRIcro program (Rorden and Brett 2000). Specifically, for each surface voxel of the atlas brains, the function scanned the anatomical labels of surface voxels within a sphere of 10-mm radius around the voxel corresponding to a channel location and reassigned the most frequent label to that voxel.
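The labeling step, which assigns the most frequent atlas label within a 10-mm sphere, can be illustrated with the toy function below. It is a hypothetical re-expression of the idea in Python, not the Matlab function of Okamoto et al. (2009), and the voxel lists stand in for a real atlas.

```python
import math
from collections import Counter


def sphere_mode_label(voxels, labels, center, radius_mm=10.0):
    """Most frequent atlas label among surface voxels within radius_mm of center.

    voxels: (x, y, z) MNI coordinates in mm of atlas surface voxels
    labels: anatomical label of each voxel, in the same order
    Returns (label, share of in-sphere voxels carrying it), or (None, 0.0).
    """
    inside = [lab for v, lab in zip(voxels, labels)
              if math.dist(v, center) <= radius_mm]
    if not inside:
        return None, 0.0
    label, count = Counter(inside).most_common(1)[0]
    return label, count / len(inside)
```

Ties are broken by `Counter.most_common`, which keeps first-encountered order; how the original function resolves ties is not stated.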

Based on the macroanatomical labels thus acquired, we combined channels into ROIs according to the mode macroanatomical label of each channel (Fig. 1C). For example, channels 3 and 8, whose mode anatomical label was the left supramarginal gyrus (at 67% and 85%, respectively), were combined to form the left supramarginal gyrus ROI. Because a recent study showed that optical properties, including optical path length, do not differ significantly between corresponding channels on the RH and LH (Katagiri et al. 2010), brain activation was compared between the two hemispheres.

Verification of Anatomical Information for Representative Data with MRI

Although Okamoto’s method is based on the adult brain, we applied it to the children in our study because there is evidence of minimal anatomical differences between children aged 7 and 8 years and adults relative to the resolution of fMRI data (Burgund et al. 2002) and of minimal differences in functional foci between adults and children (Kang et al. 2003). Other research has also indicated that adult standard brain atlases are valid for children over 6 years of age (Talairach and Tournoux 1988; Muzik et al. 2000; Schlaggar et al. 2002). For confirmation, the probe positions from 30 representative cases (10 representative participants × 3 images, one from each of the 3 years of our cohort study) were measured using a 3D digitizer and transferred onto the same children’s MRI images using a 3D Composite Display Unit (Hitachi Medical Co., Japan). Probe and channel positions were projected onto each participant’s cortical surface to examine the cortical structures underlying each measurement position. The anatomical information obtained by spatial registration was compared with that obtained from the MR images, and the two were confirmed to be consistent.

fNIRS Data Analysis

First, participants whose task performance or behavior did not meet our criteria were excluded from further analyses. For each participant, we evaluated phoneme by phoneme whether the words were correctly repeated, and participants with a repetition success rate below 70% were excluded. Note that the present study focused on differences in the cortical representation of L1 and L2; whether the words were correctly pronounced was not a main issue here. A repetition was considered complete when the participant’s performance (pronunciation) could be evaluated from the oral recording. Absence of any repetition, and vocalization that was too soft or unclear to evaluate, were considered repetition failures. fNIRS data were preprocessed using the Platform for Optical Topography Analysis Tools (Adv. Res. Lab., Hitachi Ltd.), a plug-in-based analysis platform that runs on Matlab (The MathWorks, Inc.). To remove components originating from slow fluctuations of cerebral blood flow and heartbeat noise, the Hb signals were bandpass filtered between 0.02 and 1 Hz. Blocks affected by movement artifacts were then identified by detecting rapid changes in the [total-Hb] signal (signal variations >0.1 mmol·mm over 2 consecutive samples) and removed. After this elimination, data from participants who retained at least 3 of the 6 blocks for each task were used. In addition, by visual inspection, we discarded an entire task when the optical signal was insufficient (i.e., when the peak [oxy-Hb] signal during the task period was lower than approximately 0.01 mmol·mm, as judged with reference to the SD of the rest period) owing to obstruction by hair or other reasons. We used only channels with a data survival rate >60% after the motion check. Channels 15 and 20 did not reach this criterion because of temporal muscle movement and were excluded from further analyses.
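The artifact-rejection and retention rules can be expressed as a few small predicates. This sketch assumes that "over 2 consecutive samples" means the absolute difference between neighbouring samples (lag 1, i.e., 0.1 s at 10 Hz) and that channel survival is the proportion of blocks retained; the function names are hypothetical, and the bandpass filtering step is omitted.

```python
MOTION_THRESHOLD = 0.1      # mmol*mm change in [total-Hb], as stated in the text
MIN_BLOCKS = 3              # of the 6 blocks per task
MIN_CHANNEL_SURVIVAL = 0.6  # channels need > 60% surviving data


def block_has_motion(total_hb, threshold=MOTION_THRESHOLD, lag=1):
    """True if [total-Hb] jumps by more than threshold between samples lag apart."""
    return any(abs(total_hb[k + lag] - total_hb[k]) > threshold
               for k in range(len(total_hb) - lag))


def surviving_blocks(blocks_total_hb, **kw):
    """Indices of blocks without a detected motion artifact."""
    return [k for k, b in enumerate(blocks_total_hb) if not block_has_motion(b, **kw)]


def keep_task(blocks_total_hb, min_blocks=MIN_BLOCKS, **kw):
    """A task is retained if at least min_blocks blocks survive the motion check."""
    return len(surviving_blocks(blocks_total_hb, **kw)) >= min_blocks


def channel_usable(n_surviving, n_total, min_rate=MIN_CHANNEL_SURVIVAL):
    """A channel is kept only if its survival rate is strictly above min_rate."""
    return n_surviving / n_total > min_rate
```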

From each participant’s hemoglobin time course, we extracted data blocks, each consisting of 5 s before stimulus onset, 15 s of stimulation, 10 s of recovery, and a 5-s poststimulus period. For each channel in the nonrejected blocks, a first-degree (linear) baseline was fitted to the means of the 5-s prestimulus and 5-s poststimulus periods and used for baseline correction.

For statistical analyses, we focused on the [oxy-Hb] signal because it is more sensitive to changes in cerebral blood flow than the [deoxy-Hb] and [total-Hb] signals (Hoshi et al. 2001; Strangman et al. 2002; Hoshi 2003), has a higher signal-to-noise ratio (Strangman et al. 2002), and has higher test–retest reliability (Plichta et al. 2006). However, the [oxy-Hb] signal has also been shown to be sensitive to extracerebral blood volume changes and is more prone to contamination from extracerebral artifacts (Boden et al. 2007). Moreover, a recent fNIRS study found that word frequency produced significant differences between low- and high-frequency words in [deoxy-Hb] decreases, whereas [oxy-Hb] changes showed only a nonsignificant trend (Hofmann et al. 2008). We therefore also examined [deoxy-Hb] in the main (whole-group) analyses. Including [deoxy-Hb] changes also helps link fNIRS studies to the fMRI literature, because a decrease in [deoxy-Hb] corresponds well to an increase in blood oxygen level–dependent contrast (Kleinschmidt et al. 1996). For each child, the mean change in [oxy-Hb] and [deoxy-Hb] concentration over the 25 s after stimulus onset was calculated for each task and each channel.
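The block timing, baseline correction, and 25-s mean described above can be sketched as follows at the stated 10-Hz sampling rate (350 samples per 35-s block). The text does not say exactly how the first-degree baseline was fitted; this sketch assumes a straight line through the mean of the prestimulus window and the mean of the poststimulus window, each placed at its window centre, which is then subtracted from the whole block. Function names are hypothetical.

```python
FS = 10                              # Hz, sampling rate stated in the text
PRE, STIM, REC, POST = 5, 15, 10, 5  # seconds; one 35-s block


def baseline_correct(block, fs=FS):
    """Subtract a straight line through the prestimulus and poststimulus means."""
    if len(block) != (PRE + STIM + REC + POST) * fs:
        raise ValueError("expected one 35-s block")
    n_pre, n_post = PRE * fs, POST * fs
    m_pre = sum(block[:n_pre]) / n_pre
    m_post = sum(block[-n_post:]) / n_post
    t_pre = (n_pre - 1) / 2                          # centre of prestimulus window
    t_post = len(block) - n_post + (n_post - 1) / 2  # centre of poststimulus window
    slope = (m_post - m_pre) / (t_post - t_pre)
    return [v - (m_pre + slope * (i - t_pre)) for i, v in enumerate(block)]


def task_mean(block, fs=FS):
    """Mean baseline-corrected signal over the 25 s (stimulus + recovery) from onset."""
    corrected = baseline_correct(block, fs)
    start = PRE * fs
    window = corrected[start:start + (STIM + REC) * fs]
    return sum(window) / len(window)


def participant_task_mean(blocks, fs=FS):
    """Average of task_mean over the surviving blocks of one task and channel."""
    return sum(task_mean(b, fs) for b in blocks) / len(blocks)
```

A pure linear drift is removed entirely by this correction, while a response confined to the 25-s window is preserved.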

All statistical analyses were carried out using the SPSS statistical package (SPSS Inc.). First, Student’s t-tests (P < 0.05, Bonferroni-corrected for familywise error) were conducted to examine activation in each channel separately (22 channels in the LH and 22 in the RH) for each of the 4 tasks. Activity during the stimulus and recovery periods (25 s) was compared with that during the baseline periods (5 s prestimulus and 5 s poststimulus).
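A per-channel version of the activation test might look like the sketch below. It assumes a two-sided paired t-test of each participant’s 25-s task mean against his/her baseline mean, Bonferroni-corrected over the channels tested; the study’s exact SPSS settings are not given. To stay within the Python standard library, the P value uses a normal approximation to the t distribution, which is reasonable only for large samples such as n = 392; a real analysis would use the exact t distribution.

```python
import math
from statistics import NormalDist, mean, stdev


def paired_t(task, baseline):
    """Paired t statistic for per-participant task vs baseline means."""
    d = [a - b for a, b in zip(task, baseline)]
    return mean(d) / (stdev(d) / math.sqrt(len(d)))


def channel_tests(task_by_ch, base_by_ch, alpha=0.05):
    """Test every channel; the Bonferroni threshold is alpha / number of channels."""
    n_tests = len(task_by_ch)
    results = {}
    for ch, task in task_by_ch.items():
        t = paired_t(task, base_by_ch[ch])
        # Two-sided P from a normal approximation to t(n - 1); large n only.
        p = 2 * (1 - NormalDist().cdf(abs(t)))
        results[ch] = (t, p, p < alpha / n_tests)
    return results
```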

Second, we defined ROIs for language processing according to the results of spatial registration. Referring to an MNI-compatible macroanatomical atlas (Automated Anatomical Labeling), 6 ROIs were selected bilaterally from the channels that showed a statistically significant [oxy-Hb] increase in at least one of the 4 tasks (Jpn_HF, Jpn_LF, Eng_HF, and Eng_LF) in either the LH or the RH. We adopted this criterion because, even when [oxy-Hb] did not show significant activation in 3 of the 4 tasks, comparing the one task that did show a significant [oxy-Hb] increase with the other 3 is informative. The overall [oxy-Hb] signal level in each ROI was obtained as the unweighted mean [oxy-Hb] signal level of all channels within the ROI. Figure 1C shows the locations of the channels and the 6 defined ROIs mapped onto the MNI standard brain: a) the primary and auditory association cortices (BA 41, 42) with channel 12; b) the vicinity of Wernicke’s area, the posterior part of the superior/middle temporal gyri (BA 21, 22), with channels 16, 17, and 21; c) the angular gyrus (BA 39) with channels 4, 9, and 13; d) the supramarginal gyrus (BA 40) with channels 3 and 8; e) the pars opercularis, part of Broca’s area (BA 44), with channels 1 and 6; and f) the pars triangularis, part of Broca’s area (BA 45), with channels 5, 10, and 14. MNI coordinates of the estimated cortical projection points for these channels are also shown in Table 1.
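ROI averaging uses the channel lists given above. In the sketch below, the ROI labels follow the abbreviations of Table 1; how missing (rejected) channels were handled is not stated, so averaging over the remaining channels is an assumption, as is the helper name `roi_means`.

```python
# Channel lists per ROI as given in the text; labels follow Table 1 abbreviations.
ROI_CHANNELS = {
    "PAAC": [12],
    "SMTG": [16, 17, 21],
    "AG": [4, 9, 13],
    "SMG": [3, 8],
    "POP": [1, 6],
    "PTR": [5, 10, 14],
}


def roi_means(channel_values):
    """Unweighted mean of channel values within each ROI (one hemisphere).

    channel_values: {channel number: mean [oxy-Hb] change} for one participant
    and task. Channels missing from the dict are skipped (an assumption).
    """
    out = {}
    for roi, chans in ROI_CHANNELS.items():
        vals = [channel_values[c] for c in chans if c in channel_values]
        out[roi] = sum(vals) / len(vals) if vals else None
    return out
```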

Table 1.

MNI coordinates of the estimated cortical projection points for the channels used for ROIs

| LH channel | X | Y | Z | RH channel | X | Y | Z | Brain area |
|---|---|---|---|---|---|---|---|---|
| CH01 | −45 | 26 | 45 | CH01 | 48 | 27 | 43 | POP (BA 44) |
| CH03 | −60 | −34 | 50 | CH03 | 64 | −31 | 49 | SMG (BA 40) |
| CH04 | −51 | −62 | 50 | CH04 | 56 | −57 | 48 | AG (BA 39) |
| CH05 | −45 | 41 | 30 | CH05 | 48 | 42 | 29 | PTR (BA 45) |
| CH06 | −58 | 12 | 34 | CH06 | 60 | 13 | 33 | POP (BA 44) |
| CH08 | −63 | −50 | 38 | CH08 | 66 | −45 | 37 | SMG (BA 40) |
| CH09 | −48 | −76 | 36 | CH09 | 52 | −72 | 34 | AG (BA 39) |
| CH10 | −56 | 27 | 20 | CH10 | 58 | 29 | 19 | PTR (BA 45) |
| CH12 | −68 | −35 | 24 | CH12 | 71 | −32 | 24 | PAAC (BA 41, 42) |
| CH13 | −60 | −64 | 23 | CH13 | 63 | −60 | 22 | AG (BA 39) |
| CH14 | −52 | 42 | 4 | CH14 | 54 | 44 | 4 | PTR (BA 45) |
| CH16 | −69 | −21 | 8 | CH16 | 72 | −19 | 8 | Posterior part of SMTG (BA 21, 22) |
| CH17 | −67 | −51 | 8 | CH17 | 70 | −48 | 8 | Posterior part of SMTG (BA 21, 22) |
| CH21 | −70 | −37 | −7 | CH21 | 72 | −34 | −7 | Posterior part of SMTG (BA 21, 22) |

Note: Names of the channels shown in Figure 1C are indicated in the first (LH) and fifth (RH) columns. All values are in millimeters. PAAC = primary and auditory association cortices, SMTG = superior/middle temporal gyri, AG = angular gyrus, SMG = supramarginal gyrus, POP = pars opercularis, part of Broca’s area, and PTR = pars triangularis, part of Broca’s area.

We first investigated the relationship between age and brain response during L1 task performance for the 6 ROIs. We analyzed only the Japanese task data because, as mentioned above, participants of the same age differed in English proficiency (exposure to L2), which makes developmental effects hard to observe with L2 tasks. All 392 children who satisfied our entry criteria (as described earlier) were divided into 3 age groups (age 8 group: n = 130, mean age ± SD = 8.0 ± 0.4 years; age 9 group: n = 130, 8.9 ± 0.2 years; age 10 group: n = 132, 9.9 ± 0.4 years). A 2 × 2 × 3 three-way ANOVA was performed for each defined ROI with the within-subject factors of hemisphere (LH and RH) and word frequency (high and low) and the between-subject factor of age group (ages 8–10). Familywise errors were Bonferroni corrected for 6 tests, and a significance level of P < 0.05 was applied after correction. For confirmation, we also conducted regression analyses of the relation between age and brain activation (relative [oxy-Hb] changes) during the high-frequency L1 word repetition task. The results were Bonferroni corrected for 12 tests with a significance level of P < 0.05.

Next, whole-study group analyses were conducted to produce an overall view of L1 and L2 processing. Statistical analyses using a 3-way repeated-measures ANOVA were conducted for each ROI to evaluate the effects of 3 within-subject factors: the 2 languages (Japanese: L1, and English: L2), 2 word-frequencies (high and low), and 2 hemispheres (LH and RH). P values were Bonferroni corrected for 6 tests with a significance level of P < 0.05 after correction for multiple testing.
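Every factor in this design has exactly 2 levels. As a result, each main effect and interaction has 1 numerator degree of freedom, and its repeated-measures F(1, n − 1) equals the square of a one-sample t statistic computed on a per-participant contrast score. That contrast score is a signed sum of the participant's 8 cell means. The Python sketch below illustrates this equivalence. It is not the SPSS routine used in the study. The ±1 coding of the levels, the input format, and the `simulate_subjects` helper (synthetic data only) are assumptions. Participants with any missing cell would have to be excluded beforehand.

```python
import math
import random
import statistics
from itertools import product

# Each cell is (language, frequency, hemisphere), coded +1/-1.
# Hypothetical coding: L1=+1/L2=-1, HF=+1/LF=-1, LH=+1/RH=-1.
CELLS = list(product((+1, -1), repeat=3))

EFFECTS = {
    "language": (0,),
    "frequency": (1,),
    "hemisphere": (2,),
    "language x frequency": (0, 1),
    "language x hemisphere": (0, 2),
    "frequency x hemisphere": (1, 2),
    "language x frequency x hemisphere": (0, 1, 2),
}


def contrast_score(subject, factors):
    """Signed sum of one participant's 8 cell means for one effect."""
    return sum(math.prod(cell[f] for f in factors) * subject[cell] for cell in CELLS)


def rm_anova_2x2x2(subjects):
    """Return {effect: (F, df1, df2)} for a fully within-subject 2 x 2 x 2 design."""
    n = len(subjects)
    table = {}
    for name, factors in EFFECTS.items():
        scores = [contrast_score(s, factors) for s in subjects]
        t = statistics.mean(scores) / (statistics.stdev(scores) / math.sqrt(n))
        table[name] = (t * t, 1, n - 1)  # F(1, n - 1) = t squared
    return table


def simulate_subjects(n, language_effect=0.5, seed=0):
    """Synthetic data for illustration only (not data from this study)."""
    rng = random.Random(seed)
    return [
        {cell: language_effect * cell[0] + rng.gauss(0.0, 1.0) for cell in CELLS}
        for _ in range(n)
    ]
```

With this design, the error df reported for each ROI is simply the number of participants with complete data minus 1.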

Results

Behavioral Results

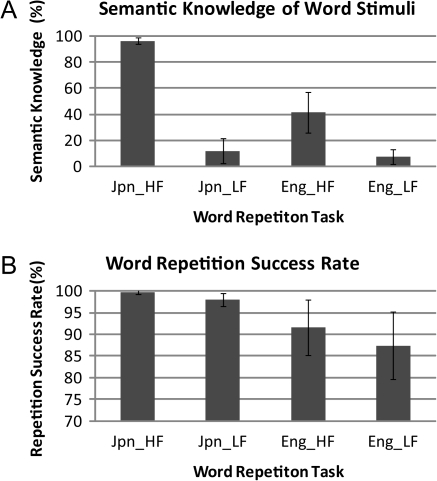

A comparison of the children’s ratings of their semantic knowledge of the word stimuli among the 4 repetition tasks is shown in Figure 2A. Mean semantic knowledge of the Japanese high-frequency words (96%) was much higher than that of the Japanese low-frequency words (12%), the English high-frequency words (42%), and the English low-frequency words (8%). Children also rarely had semantic knowledge of low-frequency words, irrespective of language. A 2 × 2 repeated-measures ANOVA on these ratings revealed highly significant main effects of language (F [1, 29] = 253.76, P < 0.001) and word frequency (F [1, 29] = 1492.75, P < 0.001), and a significant language × word-frequency interaction (F [1, 29] = 209.79, P < 0.001). Post hoc pairwise comparisons using paired t-tests showed significant differences in the ratings between the tasks: Jpn_HF > Eng_HF, Jpn_HF > Jpn_LF, and Eng_HF > Eng_LF (corrected P < 0.001), but the difference between Jpn_LF and Eng_LF failed to reach significance.

Figure 2.

Behavioral results. (A) A comparison of the semantic knowledge between the 4 tasks (Jpn_HF, Jpn_LF, Eng_HF, and Eng_LF) and (B) a comparison of word repetition success rate between the 4 tasks.

A comparison of word repetition success rates between the 4 tasks is shown in Figure 2B. A 2 × 2 repeated-measures ANOVA on repetition success rates showed highly significant main effects of language (F [1, 391] = 853.62, P < 0.001) and word frequency (F [1, 391] = 398.73, P < 0.001), and a significant language × word-frequency interaction (F [1, 391] = 63.74, P < 0.001). Post hoc pairwise comparisons using paired t-tests showed significant differences in success rates for all pairs tested (Jpn_HF > Eng_HF, Jpn_LF > Eng_LF, Jpn_HF > Jpn_LF, and Eng_HF > Eng_LF; corrected P < 0.001).

As a comparison of Figure 2A,B makes clear, semantic knowledge was not strongly associated with word repetition success rate. Rather, language familiarity (the difference in phonological familiarity between L1 and L2) is likely to be the dominant factor.

To determine whether longer and stronger utterances during repetition could lead to greater brain activation, we statistically compared the children’s oral responses (see the Material and Methods section) between the 4 tasks. A 2 × 2 repeated-measures ANOVA showed significant main effects of language (F [1, 391] = 199.96, P < 0.001) and word frequency (F [1, 391] = 21.71, P < 0.001), and a significant language × word-frequency interaction (F [1, 391] = 49.77, P < 0.001). Post hoc pairwise comparisons using paired t-tests showed significant differences in the children’s oral responses between the tasks (Jpn_HF < Eng_HF, Jpn_LF < Eng_LF, and Jpn_HF > Jpn_LF; corrected P < 0.001), but not between Eng_HF and Eng_LF.

Functional Imaging Results

Figure 1D shows an example time course of grand-averaged [oxy-Hb] and [deoxy-Hb] data for the 392 participants, taken from the channel with the highest t-value for [oxy-Hb] signals (channel 6). In this channel, [oxy-Hb] increased and [deoxy-Hb] decreased. This response pattern indicates brain activation and is similar to those reported in a number of previous studies.

A Developmental Perspective (Age Factor)

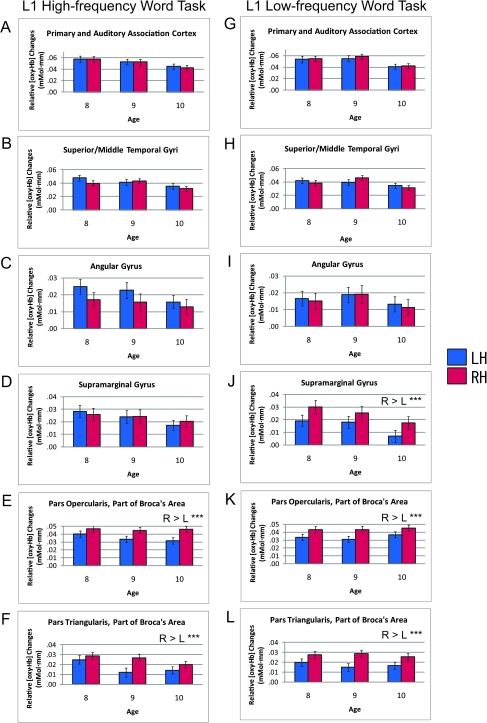

Figure 3 shows descriptive statistics of brain activation for the 3 age groups. In general, brain activation decreased with increasing age in all 6 ROIs, especially in the high-frequency word task. To examine the differences in brain activation between the 3 groups quantitatively, a 2 × 2 × 3 three-way mixed-design ANOVA was carried out for each defined ROI. The within-subject factors were hemisphere (LH and RH) and word frequency (high and low), and the between-subject factor was age group (ages 8–10). The results of the 3-way ANOVAs for the L1 word repetition tasks grouped by age are shown in Supplementary Table 2. A main effect of age group (age 8 > age 9 > age 10) was found before correction for multiple comparisons in the brain regions involved in early stages of cortical auditory processing (i.e., the primary and auditory association cortices and the superior/middle temporal gyri). However, none of the 6 ROIs reached significance after Bonferroni correction.

Figure 3.

Bar graphs of average brain activations of children during L1 word repetition tasks. ROI analyses were employed. The results of the L1 high-frequency word task are shown in (A–F) and those of the L1 low-frequency word task in (G–L): (A,G) primary and auditory association cortices (BA 41, 42), (B,H) superior/middle temporal gyri (BA 21, 22), (C,I) angular gyrus (BA 39), (D,J) supramarginal gyrus (BA 40), (E,K) pars opercularis, part of Broca’s area (BA 44), and (F,L) pars triangularis, part of Broca’s area (BA 45). Each panel compares the 3 age groups (ages 8–10). The bar graphs show the relative changes in [oxy-Hb], and error bars indicate standard error. Asterisks indicate statistically significant results (*** corrected P < 0.001).

For the within-subject factors, the ANOVA revealed a main effect of hemisphere for the supramarginal gyrus (corrected P < 0.05, RH > LH). The angular gyrus showed a nonsignificant trend toward the opposite dominance (LH > RH). There was also a significant frequency × hemisphere interaction for the supramarginal gyrus. Intriguingly, higher activation in the right supramarginal gyrus was prominent for the low-frequency L1 word task (Fig. 3D,J), whereas a nonsignificant trend toward higher activation in the left angular gyrus was observed for the high-frequency L1 word task (Fig. 3C,I). A post hoc simple main-effect analysis for the supramarginal gyrus with Bonferroni correction revealed a significant effect of hemisphere for low-frequency L1 words (corrected P < 0.001, RH > LH) but not for high-frequency L1 words. For Broca’s area, the ANOVA revealed a main effect of hemisphere for both the pars opercularis (F [1,381] = 33.527, corrected P < 0.001, RH > LH) and the pars triangularis (F [1,381] = 34.969, corrected P < 0.001, RH > LH).

In the high-frequency word task, activation in all 6 ROIs tended to decrease moderately with age, and the slopes of the fitted linear trends were all negative. Nevertheless, statistical analyses showed no significant difference in brain activation between age groups.

For confirmation, we also conducted regression analyses of the relation between age and brain activation (relative [oxy-Hb] changes) during the high-frequency L1 word repetition task. The results are shown in Supplementary Table 3. As in the age group analyses, the regression slopes were negative in most brain regions (all regions in the LH). However, the decrease in brain activation was small, and none of the 6 ROIs reached significance after Bonferroni correction for 12 tests.
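A minimal sketch of this regression for a single ROI and hemisphere follows. It computes an ordinary least-squares slope of relative [oxy-Hb] change on age, together with the slope's t statistic on n − 2 degrees of freedom. The function name and input format are illustrative assumptions. The two-sided P value for t would then be Bonferroni adjusted for 12 tests, presumably corresponding to 6 ROIs × 2 hemispheres.

```python
import math
import statistics


def slope_and_t(ages, activations):
    """OLS slope of activation on age and its t statistic (df = n - 2).

    ages, activations: one value per participant (hypothetical input format).
    """
    n = len(ages)
    mx, my = statistics.mean(ages), statistics.mean(activations)
    sxx = sum((x - mx) ** 2 for x in ages)
    sxy = sum((x - mx) * (y - my) for x, y in zip(ages, activations))
    slope = sxy / sxx
    intercept = my - slope * mx
    residuals = [y - (intercept + slope * x) for x, y in zip(ages, activations)]
    residual_var = sum(r * r for r in residuals) / (n - 2)
    se_slope = math.sqrt(residual_var / sxx)
    return slope, slope / se_slope
```

A negative slope corresponds to the decrease in activation with age described above. The t statistic indicates whether that slope is distinguishable from zero before correction.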

Whole-Group Analyses

Because age had no significant effect in our study group, whole-group analyses were conducted to obtain an overall view of the neural substrates of L1 and L2 processing, and to compare them, in the young population as a whole.

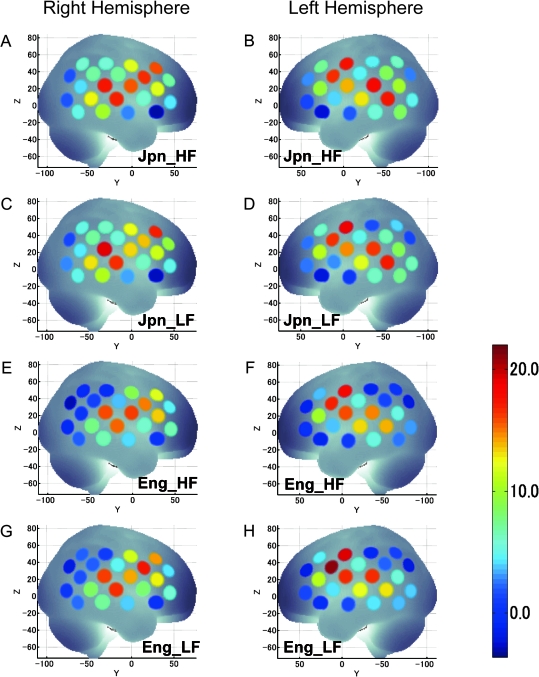

Figure 4 shows the positions of the measurement channels together with mean cortical activation in t-values (uncorrected) for both the RH and LH. Broadly, the overall activation patterns, encompassing the frontal, temporal, and parietal lobes, were similar in L1 and L2 irrespective of word frequency. The activated regions included the primary auditory area, the classical Wernicke’s and Broca’s areas, the angular gyrus, and the supramarginal gyrus. These similar, widespread activation patterns indicate that children used largely overlapping neural substrates when processing L1 and L2 words.

Figure 4.

Cortical activations during word repetition tasks. Average fNIRS data obtained from 392 participants were projected onto the MNI standard brain space by spatial registration. The position of the measurement channels together with cortical activation of both RH and LH are shown in the figures: high-frequency Japanese (Jpn_HF) RH (A) and LH (B); low-frequency Japanese (Jpn_LF) RH (C) and LH (D); high-frequency English (Eng_HF) RH (E) and LH (F); and low-frequency English (Eng_LF) RH (G) and LH (H). The color scale indicates t-values (uncorrected).

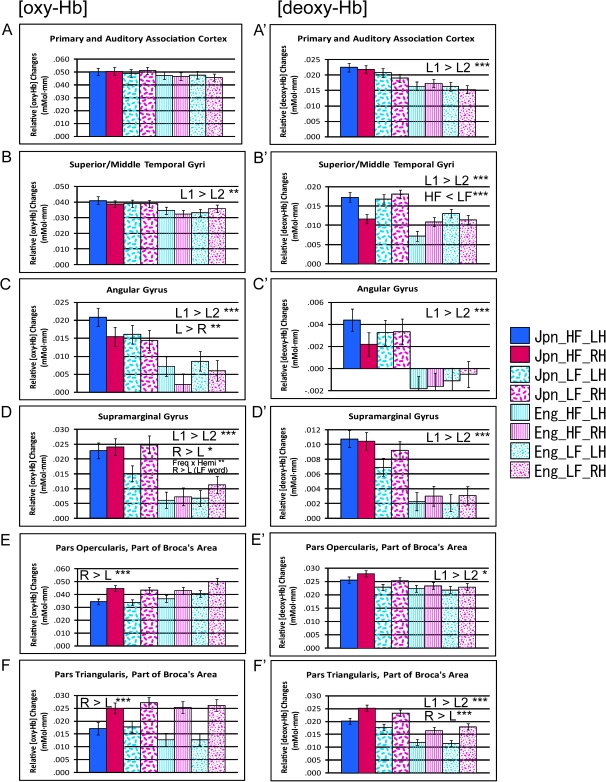

Cortical activations during the word repetition tasks for each ROI are shown in Figure 5. A basic assumption of fNIRS measurements is that an increase in the [oxy-Hb] signal and a decrease in the [deoxy-Hb] signal indicate cortical activation (Villringer and Chance 1997; Obrig et al. 2000; Seiyama et al. 2004). For this reason, relative changes in the [deoxy-Hb] signals are shown with reversed sign in the figure, so that decreases appear as positive values for comparison with the [oxy-Hb] signals. Broadly, the [oxy-Hb] and [deoxy-Hb] results are quite similar, as the figure shows. Three-way repeated-measures ANOVAs with within-subject factors (language [L1 and L2] × word frequency [low and high] × hemisphere [LH and RH]) were conducted on both [oxy-Hb] and [deoxy-Hb] to reveal the characteristic features of each defined ROI, and the results are summarized in Table 2.

Figure 5.

Average brain activation in children during word repetition tasks. ROI analyses were employed: primary and auditory association cortices (BA 41, 42) [oxy-Hb] (A) and [deoxy-Hb] (A′); superior/middle temporal gyri (BA 21, 22) [oxy-Hb] (B) and [deoxy-Hb] (B′), angular gyrus (BA 39) [oxy-Hb] (C) and [deoxy-Hb] (C′), supramarginal gyrus (BA 40) [oxy-Hb] (D) and [deoxy-Hb] (D′), pars opercularis, part of Broca’s area (BA 44) [oxy-Hb] (E) and [deoxy-Hb] (E′), and pars triangularis, part of Broca’s area (BA 45) [oxy-Hb] (F) and [deoxy-Hb] (F′). The bar graphs show the relative changes in [oxy-Hb] and [deoxy-Hb]. Error bars indicate standard error. Since an increase in the [oxy-Hb] signal and a decrease in the [deoxy-Hb] signal indicate cortical activation, the relative changes in the [deoxy-Hb] signals are indicated as positive values in the figure for comparison with [oxy-Hb] signals. Abbreviations: Jpn_HF_LH = Japanese high-frequency words (LH), Jpn_HF_RH = Japanese high-frequency words (RH), Jpn_LF_LH = Japanese low-frequency words (LH), Jpn_LF_RH = Japanese low-frequency words (RH), Eng_HF_LH = English high-frequency words (LH), Eng_HF_RH = English high-frequency words (RH), Eng_LF_LH = English low-frequency words (LH), Eng_LF_RH = English low-frequency words (RH). Abbreviations in the bar graphs: L1 = native language (Japanese), L2 = second language (English), L = left hemisphere, R = right hemisphere, Freq = word frequency, Hemi = hemisphere. Asterisks indicate statistically significant results (* P < 0.05, **P < 0.01, ***P < 0.001; all corrected).

Table 2.

ANOVA results

| Brain area | Source of variation | df | F | P (uncorrected) | P (Bonferroni corrected) | Remarks |
|---|---|---|---|---|---|---|
| (a) Oxy-Hb | | | | | | |
| PAAC (BA 41, 42) | Language | 1, 352 | 3.190 | 0.075 | | |
| | Word frequency | 1, 352 | 0.027 | 0.870 | | |
| | Hemisphere | 1, 352 | 0.000 | 0.988 | | |
| | Language × frequency | 1, 352 | 0.004 | 0.950 | | |
| | Language × hemisphere | 1, 352 | 1.974 | 0.161 | | |
| | Frequency × hemisphere | 1, 352 | 0.019 | 0.891 | | |
| | Language × frequency × hemisphere | 1, 352 | 0.523 | 0.470 | | |
| SMTG (BA 21, 22) | Language | 1, 376 | 11.658 | 0.001 | <0.005** | L1 > L2 |
| | Word frequency | 1, 376 | 0.000 | 0.997 | | |
| | Hemisphere | 1, 376 | 0.083 | 0.774 | | |
| | Language × frequency | 1, 376 | 0.270 | 0.604 | | |
| | Language × hemisphere | 1, 376 | 0.504 | 0.478 | | |
| | Frequency × hemisphere | 1, 376 | 3.232 | 0.073 | | |
| | Language × frequency × hemisphere | 1, 376 | 0.510 | 0.476 | | |
| AG (BA 39) | Language | 1, 389 | 23.660 | 0.000 | <0.001*** | L1 > L2 |
| | Word frequency | 1, 389 | 0.004 | 0.951 | | |
| | Hemisphere | 1, 389 | 10.003 | 0.002 | <0.01** | L > R |
| | Language × frequency | 1, 389 | 1.515 | 0.219 | | |
| | Language × hemisphere | 1, 389 | 0.055 | 0.815 | | |
| | Frequency × hemisphere | 1, 389 | 4.265 | 0.040 | | cf. footnote a |
| | Language × frequency × hemisphere | 1, 389 | 0.202 | 0.654 | | |
| SMG (BA 40) | Language | 1, 383 | 35.164 | 0.000 | <0.001*** | L1 > L2 |
| | Word frequency | 1, 383 | 0.069 | 0.793 | | |
| | Hemisphere | 1, 383 | 8.483 | 0.004 | <0.05* | R > L |
| | Language × frequency | 1, 383 | 1.682 | 0.195 | | |
| | Language × hemisphere | 1, 383 | 2.822 | 0.094 | | |
| | Frequency × hemisphere | 1, 383 | 12.813 | 0.000 | <0.005** | R > L (LF word) |
| | Language × frequency × hemisphere | 1, 383 | 2.201 | 0.139 | | |
| POP (BA 44) | Language | 1, 380 | 3.732 | 0.054 | | |
| | Word frequency | 1, 380 | 2.214 | 0.138 | | |
| | Hemisphere | 1, 380 | 26.347 | 0.000 | <0.001*** | R > L |
| | Language × frequency | 1, 380 | 3.760 | 0.053 | | |
| | Language × hemisphere | 1, 380 | 1.853 | 0.174 | | |
| | Frequency × hemisphere | 1, 380 | 0.605 | 0.437 | | |
| | Language × frequency × hemisphere | 1, 380 | 1.721 | 0.190 | | |
| PTR (BA 45) | Language | 1, 378 | 2.056 | 0.152 | | |
| | Word frequency | 1, 378 | 0.358 | 0.550 | | |
| | Hemisphere | 1, 378 | 60.631 | 0.000 | <0.001*** | R > L |
| | Language × frequency | 1, 378 | 0.082 | 0.774 | | |
| | Language × hemisphere | 1, 378 | 5.691 | 0.018 | | |
| | Frequency × hemisphere | 1, 378 | 0.513 | 0.474 | | |
| | Language × frequency × hemisphere | 1, 378 | 0.042 | 0.839 | | |
| (b) Deoxy-Hb | | | | | | |
| PAAC (BA 41, 42) | Language | 1, 342 | 28.500 | 0.000 | <0.001*** | L1 > L2 |
| | Word frequency | 1, 342 | 4.267 | 0.040 | | HF > LF |
| | Hemisphere | 1, 342 | 0.318 | 0.573 | | |
| | Language × frequency | 1, 342 | 0.466 | 0.495 | | |
| | Language × hemisphere | 1, 342 | 1.778 | 0.183 | | |
| | Frequency × hemisphere | 1, 342 | 3.431 | 0.065 | | |
| | Language × frequency × hemisphere | 1, 342 | 0.260 | 0.610 | | |
| SMTG (BA 21, 22) | Language | 1, 372 | 43.317 | 0.000 | <0.001*** | L1 > L2 |
| | Word frequency | 1, 372 | 15.561 | 0.000 | <0.001*** | HF < LF |
| | Hemisphere | 1, 372 | 0.469 | 0.494 | | |
| | Language × frequency | 1, 372 | 0.027 | 0.869 | | |
| | Language × hemisphere | 1, 372 | 8.474 | 0.004 | <0.05* | |
| | Frequency × hemisphere | 1, 372 | 0.572 | 0.450 | | |
| | Language × frequency × hemisphere | 1, 372 | 32.913 | 0.000 | <0.001*** | |
| AG (BA 39) | Language | 1, 386 | 28.607 | 0.000 | <0.001*** | L1 > L2 |
| | Word frequency | 1, 386 | 0.336 | 0.563 | | |
| | Hemisphere | 1, 386 | 0.331 | 0.565 | | |
| | Language × frequency | 1, 386 | 0.241 | 0.624 | | |
| | Language × hemisphere | 1, 386 | 4.770 | 0.030 | | |
| | Frequency × hemisphere | 1, 386 | 4.053 | 0.045 | | cf. footnote a |
| | Language × frequency × hemisphere | 1, 386 | 2.196 | 0.139 | | |
| SMG (BA 40) | Language | 1, 377 | 53.687 | 0.000 | <0.001*** | L1 > L2 |
| | Word frequency | 1, 377 | 2.250 | 0.134 | | |
| | Hemisphere | 1, 377 | 1.597 | 0.207 | | |
| | Language × frequency | 1, 377 | 1.700 | 0.193 | | |
| | Language × hemisphere | 1, 377 | 0.020 | 0.887 | | |
| | Frequency × hemisphere | 1, 377 | 3.323 | 0.069 | | |
| | Language × frequency × hemisphere | 1, 377 | 1.950 | 0.163 | | |
| POP (BA 44) | Language | 1, 374 | 9.146 | 0.003 | <0.05* | L1 > L2 |
| | Word frequency | 1, 374 | 3.703 | 0.055 | | HF > LF |
| | Hemisphere | 1, 374 | 4.430 | 0.036 | | R > L |
| | Language × frequency | 1, 374 | 1.194 | 0.275 | | |
| | Language × hemisphere | 1, 374 | 2.742 | 0.099 | | |
| | Frequency × hemisphere | 1, 374 | 0.001 | 0.978 | | |
| | Language × frequency × hemisphere | 1, 374 | 0.002 | 0.964 | | |
| PTR (BA 45) | Language | 1, 374 | 64.363 | 0.000 | <0.001*** | L1 > L2 |
| | Word frequency | 1, 374 | 0.945 | 0.332 | | |
| | Hemisphere | 1, 374 | 58.095 | 0.000 | <0.001*** | R > L |
| | Language × frequency | 1, 374 | 2.151 | 0.143 | | |
| | Language × hemisphere | 1, 374 | 0.080 | 0.777 | | |
| | Frequency × hemisphere | 1, 374 | 2.199 | 0.139 | | |
| | Language × frequency × hemisphere | 1, 374 | 0.564 | 0.453 | | |

Note: 3-way repeated-measures ANOVAs were conducted for the 6 ROIs to evaluate the effects of 3 within-subject factors: the 2 languages (Japanese: L1 and English: L2), 2 word frequencies (high: HF and low: LF), and 2 hemispheres (left: L and right: R). df = degrees of freedom, PAAC = primary and auditory association cortices, SMTG = superior/middle temporal gyri, AG = angular gyrus, SMG = supramarginal gyrus, POP = pars opercularis, part of Broca’s area, and PTR = pars triangularis, part of Broca’s area.

a Compare the laterality (hemisphere effects) for the angular gyrus with that for the supramarginal gyrus. Additional paired t-tests on the [oxy-Hb] signals showed LH > RH for the high-frequency L1 (corrected P < 0.01) and L2 (corrected P < 0.05) word tasks. Additional paired t-tests on the [deoxy-Hb] signals showed LH > RH for the high-frequency L1 word task (corrected P < 0.05).

P values were Bonferroni corrected for 6 tests with a significance level of P < 0.05 after correction for multiple testing. Asterisks indicate statistically significant results (*P < 0.05, **P < 0.01, ***P < 0.001).
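As a worked illustration of this Bonferroni step, the Python sketch below multiplies each uncorrected P value by the number of tests (6 ROIs) and caps the result at 1. An effect is declared significant when the adjusted value falls below 0.05, which is equivalent to comparing the uncorrected P with 0.05/6 ≈ 0.0083. The example inputs are uncorrected [oxy-Hb] P values taken from Table 2. Because the table rounds these values, adjusted values computed from them are only approximate.

```python
def bonferroni(p_values, n_tests, alpha=0.05):
    """Bonferroni-adjust P values: multiply by n_tests, cap at 1, compare with alpha."""
    results = []
    for p in p_values:
        p_adj = min(1.0, p * n_tests)
        results.append((p_adj, p_adj < alpha))
    return results


# Uncorrected [oxy-Hb] P values copied from Table 2 (rounded in the table).
table2_oxy = {
    "SMG, hemisphere": 0.004,
    "AG, frequency x hemisphere": 0.040,
    "PTR, language x hemisphere": 0.018,
}

if __name__ == "__main__":
    adjusted = bonferroni(table2_oxy.values(), n_tests=6)
    for label, (p_adj, significant) in zip(table2_oxy, adjusted):
        print(f"{label}: corrected P = {p_adj:.3f}, significant = {significant}")
```

The script prints corrected values of 0.024, 0.240, and 0.108. Only the first falls below 0.05, consistent with the markings in Table 2.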

In the primary and auditory association cortices, the ANOVA for [oxy-Hb] showed neither a significant main effect nor an interaction after the conservative Bonferroni correction (Fig. 5A). However, the ANOVA for [deoxy-Hb] demonstrated a significant main effect of language (F [1,342] = 28.500, corrected P < 0.001, L1 > L2) (Fig. 5A′).

In the superior/middle temporal gyri, the ANOVA for [oxy-Hb] exhibited a significant main effect of language (F [1,376] = 11.658, corrected P < 0.01, L1 > L2) (Fig. 5B). The ANOVA for [deoxy-Hb] also demonstrated a significant main effect of language (F [1,372] = 43.317, corrected P < 0.001, L1 > L2) (Fig. 5B′). In addition, there was a significant main effect of word frequency (F [1,372] = 15.561, corrected P < 0.001, HF < LF). The language × hemisphere (F [1,372] = 8.474, corrected P < 0.05) and language × frequency × hemisphere (F [1,372] = 32.913, corrected P < 0.001) interactions were also significant. A post hoc simple main-effect analysis with Bonferroni correction revealed a significant effect of hemisphere for the L1 tasks (corrected P < 0.05), with opposite trends for the 2 word frequencies (LH >> RH for the HF task and RH > LH for the LF task).

In the angular gyrus, the ANOVA for [oxy-Hb] exhibited significant main effects of language (F [1,389] = 23.660, corrected P < 0.001, L1 > L2) and hemisphere (F [1,389] = 10.003, corrected P < 0.01, LH > RH) (Fig. 5C). There was also a marginal interaction between frequency and hemisphere, which failed to reach significance after Bonferroni correction. Similarly, the ANOVA for [deoxy-Hb] revealed a significant main effect of language (F [1,386] = 28.607, corrected P < 0.001, L1 > L2; the increase in [deoxy-Hb] for L2 was not statistically significant) and a marginal interaction between frequency and hemisphere, which also failed to reach significance after Bonferroni correction. These marginal frequency × hemisphere interactions appeared in the omnibus 3-way ANOVAs for both [oxy-Hb] and [deoxy-Hb] in the angular gyrus, as well as in the [oxy-Hb] ANOVA by age group (Supplementary Table 2). We therefore conducted additional paired t-tests to characterize the hemisphere effect for each of the 4 tasks. The [oxy-Hb] results showed significantly greater activation in the LH than in the RH for both the high-frequency L1 (corrected P < 0.01) and L2 (corrected P < 0.05) word tasks (Fig. 5C). The [deoxy-Hb] results showed significantly greater LH than RH activation for the high-frequency L1 word task (corrected P < 0.05) (Fig. 5C′). In contrast, neither the [oxy-Hb] nor the [deoxy-Hb] results showed a significant hemispheric difference for the low-frequency word tasks.

In the supramarginal gyrus, the ANOVA for [oxy-Hb] demonstrated significant main effects of language (F [1,383] = 35.164, corrected P < 0.001, L1 > L2) and hemisphere (F [1,383] = 8.483, corrected P < 0.05, RH > LH), and a significant interaction between frequency and hemisphere (F [1,383] = 12.813, corrected P < 0.01) (Fig. 5D). A post hoc simple main-effect analysis applying the Bonferroni correction revealed a significant effect of hemisphere for low-frequency words (corrected P < 0.001, RH > LH). As for the [deoxy-Hb] analyses, there was only a significant main effect of language (F [1,377] = 53.687, corrected P < 0.001, L1 > L2), but, as shown in Figure 5D′, RH > LH activations similar to those seen in [oxy-Hb] were observed for the low-frequency word tasks.
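The post hoc simple main-effect analysis for a 2-level factor can be sketched as paired comparisons of RH versus LH within each word-frequency level. In this Python sketch, the per-participant ROI values are assumed to be averaged over language. The number of comparisons used for the Bonferroni adjustment (2, one per frequency level) and the input format are also assumptions. P values come from a large-sample normal approximation to the t distribution rather than from the exact t distribution. With several hundred participants this approximation is reasonable, but it remains an approximation.

```python
import math
import statistics


def paired_t(a, b):
    """Paired t statistic for a - b (positive when a > b on average)."""
    diffs = [x - y for x, y in zip(a, b)]
    return statistics.mean(diffs) / (statistics.stdev(diffs) / math.sqrt(len(diffs)))


def normal_two_sided_p(t):
    """Two-sided P value from a standard normal approximation to t."""
    return math.erfc(abs(t) / math.sqrt(2.0))


def hemisphere_simple_effects(data, n_comparisons=2):
    """Simple main effect of hemisphere within each frequency level.

    data: {"HF": (lh_values, rh_values), "LF": (lh_values, rh_values)}, where each
    list holds one value per participant (hypothetical input format).
    Returns {level: (t, bonferroni_p)}; t > 0 means RH > LH.
    """
    results = {}
    for level, (lh, rh) in data.items():
        t = paired_t(rh, lh)
        p = min(1.0, normal_two_sided_p(t) * n_comparisons)
        results[level] = (t, p)
    return results
```

A positive, significant t for the "LF" level would correspond to the RH > LH effect reported for low-frequency words.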

In the pars opercularis, part of Broca’s area, the ANOVA for [deoxy-Hb] exhibited a significant main effect of language (F [1,374] = 9.146, corrected P < 0.05, L1 > L2), while that for [oxy-Hb] did not. On the other hand, while the ANOVA for [oxy-Hb] showed a significant main effect of hemisphere (F [1,380] = 26.347, corrected P < 0.001, RH > LH), that for [deoxy-Hb] did not survive Bonferroni correction (Fig. 5E,E′).

The results for the pars triangularis, part of Broca’s area, are similar to those for the pars opercularis. Only the ANOVA for [deoxy-Hb] exhibited a significant main effect of language (F [1,374] = 64.363, corrected P < 0.001, L1 > L2); the ANOVA for [oxy-Hb] did not (Fig. 5F,F′). A significant main effect of hemisphere was found for both [oxy-Hb] (F [1,378] = 60.631, corrected P < 0.001, RH > LH) and [deoxy-Hb] changes (F [1,374] = 58.095, corrected P < 0.001, RH > LH) (Fig. 5F,F′).

Discussion

In this study, we revealed that the cortical activation pattern associated with language processing in elementary school children involves a bilateral network of regions in the frontal, temporal, and parietal lobes.

Here, we list the major findings:

Although not statistically significant, a trend toward lower hemodynamic responses with increasing age (from 6 to 10 years) was observed, especially in the auditory and temporal regions.

L2 words were processed like nonword auditory stimuli in the brain as indicated by lower activation than that elicited by L1 words in the superior/middle temporal and inferior parietal regions.

Low-frequency words elicited more right-hemispheric activation (particularly in the supramarginal gyrus), and high-frequency words elicited more left-hemispheric activation (particularly in the angular gyrus).

The importance of the RH temporo-parieto-frontal network as well as the traditional LH language network was suggested especially at the early stages of language acquisition/learning both in L1 and L2.

Differences in sensitivity between [oxy-Hb] and [deoxy-Hb] signals for detecting language, frequency, and hemisphere effects were observed.

Details of the major findings are described in subsequent sections. We will first discuss the age factor in L1 tasks. Next, we will move on to the whole-group analyses to explore language difference (language effect), then we will examine the function of each ROI in relation to phonological versus semantic processing and LH versus RH, and, finally, we will note the different characteristics of [oxy-Hb] and [deoxy-Hb] signals.

A Developmental Perspective (Age Factor)

To summarize, both the age group and regression analyses revealed weak trends toward decreasing cortical activation with age in the L1 tasks, but these changes were not statistically significant. Because the human language system develops dramatically during childhood, one might expect brain function and structure to change dramatically in this period. However, we did not detect significant differences in brain response. This is probably because the age range of our participants was narrow (6–10 years, with very few 6-year-olds).

Alternatively, the absence of significant differences in cortical activation may be due to the tasks we employed. Since we focused on L2 learning in children, the single-word repetition task was tailored to measure the level of L2 acquisition rather than to detect developmental changes in the mother tongue. Thus, the mere repetition of L1 words was presumed to be easy for elementary school children regardless of their semantic knowledge of the presented words; that is, we assume that elementary school children repeat L1 words automatically. The “dual-process” information-processing model of Schneider and Shiffrin (1977) and Shiffrin and Schneider (1977) offered compelling evidence for the distinction between “automatic detection” and “controlled search,” 2 qualitatively different human information-processing operations. In their view, the execution of cognitive tasks changes with training. Acquiring a new skill primarily requires controlled search. Gradually, as the skill is mastered, it becomes more automatic, enabling the participant to carry out another task simultaneously (dual-task performance). In fact, the cortical activations observed during the L1 tasks in our study showed a marginal, nonsignificant decrease with age. This may suggest that L1 words are repeated more automatically as age increases.

Whole-Group Analyses

To summarize the language effect, there was less overall cortical activation for L2 than for L1, but the statistical significance differed between [oxy-Hb] and [deoxy-Hb] analyses. The [deoxy-Hb] analysis showed greater sensitivity for detecting language effects than the widely used [oxy-Hb] analysis in our study, and this will be discussed later.

Using acoustic analysis, we examined whether longer and stronger utterances during word repetition could account for greater hemodynamic responses. Children’s brain responses during word repetition were significantly greater for L1 than L2, whereas their oral responses were greater for L2 than L1. Therefore, the greater brain responses for L1 cannot be attributed to longer or stronger utterances during the L1 tasks.

As for the hemisphere effect, the [oxy-Hb] analyses showed no significant differences in brain activation between the LH and RH in the superior/middle temporal gyri or in the primary and auditory association cortices. In contrast, significant differences were found in the angular/supramarginal gyri. Interestingly, the LH showed greater activation than the RH in the angular gyrus, whereas the RH showed greater activation than the LH in the supramarginal gyrus. The [oxy-Hb] statistics detected activation laterality in the angular/supramarginal gyri, and the bar graphs show roughly similar trends for [oxy-Hb] and [deoxy-Hb] (Fig. 5). Nevertheless, the [deoxy-Hb] statistics did not detect significant differences in activation between the LH and RH. Thus, in contrast to the language effect mentioned above, the [oxy-Hb] analysis was more sensitive than the [deoxy-Hb] analysis for detecting hemisphere effects.

Language Difference and Lexicality

We detected equivalent bilateral activation in the primary and auditory association cortices, but less activation for L2 tasks than for L1 tasks in the superior/middle temporal gyri and in the inferior parietal region (angular/supramarginal gyri). Language processing involves lexical versus nonlexical processing and phonological versus semantic processing. For auditory processing, lexical processing has been reported to activate the bilateral primary auditory cortex, the anterior superior temporal region, and the left-lateralized inferior parietal region near the angular and supramarginal gyri (Petersen et al. 1988). By contrast, presentation of nonword auditory stimuli failed to activate the anterior superior temporal and the inferior parietal regions (Roland et al. 1980; Mazziotta et al. 1982; Lauter et al. 1985). It has also been reported that the human superior temporal region, consisting primarily of the auditory sensory cortex, is activated bilaterally and symmetrically by a variety of speech and nonspeech auditory stimuli (Binder et al. 2000; Patterson et al. 2002). The response at the level of the superior temporal sulcus is not considered to be speech specific; rather, it arises from the complex frequency and amplitude modulations that characterize speech. Speech-specific lexical and semantic processing, in contrast, is thought to be a function of the cortex ventral to the superior temporal sulcus (Binder et al. 1996; Binder and Frost 1998). For the inferior parietal region (angular/supramarginal gyri), PET studies have also shown a greater response to words than to pseudowords during a feature detection task (Brunswick et al. 1999; Price 2000). A recent fNIRS study revealed a lexicality effect in which words elicited a larger focal hyperoxygenation than pseudowords in the left inferior parietal gyrus (Hofmann et al. 2008).
Taken together, these findings suggest that the superior/middle temporal gyri and the inferior parietal region are associated with lexicality.

Considering our results together with previous findings, the language effect is likely to correspond to the lexicality effect (word versus nonword). In other words, L2 words were processed like nonword auditory stimuli. Because the children were at a very early stage of L2 learning and many of the L2 words were unfamiliar to them, the lexicality effect should be more pronounced in L1 than in the unfamiliar L2, regardless of whether the children had semantic knowledge of the words. Cortical activation in the superior/middle temporal gyri and angular/supramarginal gyri may not simply depend on the acoustic complexity of speech sounds but may also reflect processes tuned to the phonology of the native language, which would explain why activation in these regions is stronger for L1 than for L2.

Whether the superior/middle temporal gyri and angular/supramarginal gyri are related to phonological or semantic processing will be discussed in subsequent sections.

Phonological Versus Semantic Processing: Temporal Region (Superior/Middle Temporal Gyri)

Although the superior/middle temporal gyri and the inferior parietal region were shown to be associated with lexicality, whether the observed lexicality effect arose from the semantic or the phonological content of words, or both, warrants closer examination.

As for the superior/middle temporal gyri, the [oxy-Hb] analyses did not reveal any significant effect other than that of language. In contrast, the [deoxy-Hb] analyses revealed a word-frequency effect (HF < LF) in addition to a language effect. Post hoc simple main-effect analyses revealed left-hemispheric dominance for high-frequency words and right-hemispheric dominance for low-frequency words in the L1 tasks. As a recent fNIRS study showed (Hofmann et al. 2008), the [deoxy-Hb] signal may be more sensitive than the [oxy-Hb] signal for detecting the word-frequency effect. Based on both the [oxy-Hb] and [deoxy-Hb] results, the lexicality effect in the superior/middle temporal gyri should be more pronounced in L1 than in the unfamiliar L2, since L1 words are expected to be perceived more lexically than L2 words regardless of the participants' semantic knowledge of the words. That is, the effect in this region more likely reflects phonological than semantic processing. Importantly, the [deoxy-Hb] results showed that during the repetition of L1 words, significantly greater activation was observed in the LH for high-frequency words (96% semantic knowledge), whereas greater activation was observed in the RH for low-frequency words (12% semantic knowledge) (Fig. 5B′). These results suggest that the left temporal region is engaged in semantic processing to some extent, whereas unknown words elicit more activation in the RH.

Phonological Versus Semantic Processing: Inferior Parietal Region (Angular/Supramarginal Gyri)

Another interesting observation was that the LH showed greater activation than the RH in the angular gyrus, whereas the RH showed greater activation than the LH in the supramarginal gyrus. The right-hemispheric dominance in the supramarginal gyrus was prominent for low-frequency word tasks in both L1 and L2.

An intriguing issue is the respective roles of the angular gyrus and the supramarginal gyrus in semantic and phonological processing. Lesion studies have reported that the inferior parietal region is associated with phonological deficits (Shallice 1981; Roeltgen et al. 1983), making it a good candidate for a phonological coding region, yet a role of the angular gyrus in semantic processing that supports "word meanings" has also been identified (Mesulam 1990; Binder et al. 1997; Inui et al. 1998; Niznikiewicz et al. 2000; Obleser et al. 2007).

The roles of the LH and RH in these regions are also worth discussing. Activation of the left angular gyrus has for many years been reported to be associated with reading (Dejerine 1892; Damasio AR and Damasio H 1983; Henderson 1986; Horwitz et al. 1998). Horwitz et al. (1998) found functional connectivity of the angular gyrus during single-word reading in normal readers, consistent with lesion studies (Dejerine 1892; Damasio AR and Damasio H 1983; Henderson 1986). In particular, they demonstrated strong functional linkages of the left angular gyrus with areas of the visual association cortex in the occipital and temporal lobes that are known to be activated by words and word-like stimuli (Petersen et al. 1989; Howard et al. 1992; Price et al. 1994; Bookheimer et al. 1995; Rumsey et al. 1997). They also reported that during pseudoword reading, where explicit grapheme-to-phoneme conversion is required, the left angular gyrus is functionally linked to a region in the left superior and middle temporal gyri that is part of Wernicke's area, and to an area in or near Broca's area in the frontal region. This finding suggests that the left angular gyrus is involved not only in semantic processing but also in phonological processing.

In the present study, by far the highest activation was observed in the left angular gyrus when children performed the high-frequency L1 task, in which they knew the meanings of an average of 96% of the words; this rating is much higher than those of the other 3 tasks, as shown in Figure 2A. This result is consistent with previous findings that the left angular gyrus is involved in semantic processing. On the other hand, the mean ratings of semantic knowledge were much lower for low-frequency L1 words (12%), high-frequency L2 words (42%), and low-frequency L2 words (8%) than for high-frequency L1 words (96%); nevertheless, the magnitude of brain activation during both the high- and low-frequency L1 tasks was much higher than that during the L2 tasks, regardless of semantic knowledge of the words. Similarly, the mean ratings of semantic knowledge were low for both low-frequency L1 (12%) and L2 words (8%), and, importantly, the statistical analysis showed no significant difference in the ratings between these 2 tasks. Nevertheless, cortical activation differed significantly between the low-frequency L1 and L2 word tasks. These facts suggest that the left angular gyrus is involved not only in semantic processing but also in phonological processing. In other words, processing the familiar phonology of L1 induces higher brain activation than processing the unfamiliar phonology of a foreign language, independent of semantic knowledge.

With respect to the word repetition tasks we employed, we postulate that phonological processing is the main process and that the activations in the angular gyrus arose mainly from phonological familiarity (phonological analysis of novel relative to familiar stimuli), which is reflected in differences in word repetition success rates. A comparison of the bar graphs for semantic knowledge of words (Fig. 2A), repetition success rate (Fig. 2B), and brain activation in the angular gyrus (Fig. 5C,C′) shows that activation in the angular gyrus is more closely associated with word repetition success rate than with semantic knowledge of words. More specifically, we observed significant differences in brain activation between the L1 and L2 tasks regardless of the children's semantic knowledge of the words; correspondingly, we observed significant differences in word repetition success rates between the L1 and L2 tasks, which would reflect differences in phonological familiarity. In addition, a complex interaction of semantic and phonological information processing was observed. Although semantic and phonological processing are difficult to separate clearly, the correspondence between the exceptionally high semantic knowledge of high-frequency L1 words and the activation in the left angular gyrus suggests that semantic processing is also involved to some extent, especially in the left angular gyrus.

In contrast to the angular gyrus, the supramarginal gyrus exhibited a rather different pattern. During the L1 high-frequency word task, we did not observe pronounced LH activation in this region as we did in the left angular gyrus. Instead, right-dominant activation was noticeable, especially for low-frequency word tasks.

Lesions to the left supramarginal gyrus are often associated with conduction aphasia (Green and Howes 1978), which is characterized by relatively preserved comprehension, impaired repetition, and paraphasic and otherwise disordered speech. The results of an MRI study of lesions in aphasic patients by Caplan et al. (1995) indicated that the left supramarginal gyrus is the principal site of phonemic processing in speech perception. Moreover, previous studies have shown that the left supramarginal gyrus is more strongly activated by phonological tasks than by semantic tasks, supporting its role in phonological processing (Démonet et al. 1994; Caplan et al. 1995; Celsis et al. 1999).

Binder et al. (1996) demonstrated in an fMRI study that the left supramarginal gyrus was more strongly activated by nonlinguistic stimuli (tone sequences) than by words when subjects performed active listening tasks requiring analysis of tone sequences rather than of words. Although the ROIs in that study were defined only in the LH and no information about the RH was reported, the same group showed in another paper that the supramarginal gyrus was activated bilaterally by a tone decision task relative to a semantic decision task and, importantly, that this activation was much more extensive in the RH than in the LH (Binder et al. 1997).

Furthermore, lesions of the left inferior parietal lobe in the region of the supramarginal gyrus have been reported to give rise to deficits in auditory–verbal short-term memory (Shallice and Vallar 1990; Vallar et al. 1997). Specific activation of the left supramarginal gyrus by a short-term memory task was also demonstrated by Paulesu et al. (1993), who considered this region to be the location of the phonological store; similar results were obtained by Salmon et al. (1996), Smith and Jonides (1998), and Smith et al. (1998). Studies with normal and brain-damaged subjects have indicated that there are semantic as well as phonological contributions to verbal short-term memory. Taken together, these findings suggest that the supramarginal gyrus is involved in phonological processing of both linguistic and nonlinguistic stimuli and is the principal site of phonological representation and the phonological store (verbal short-term memory).

Given that the children in our study did not know the meanings of an average of 88% and 92% of the low-frequency Japanese and English words, respectively, and that the supramarginal gyrus was activated by all the tasks irrespective of language or semantic knowledge, the bilateral, right-dominant activation in the supramarginal gyrus is likely to reflect phonological processing and storage. Moreover, given that repetition success rates differed significantly between the L1 and L2 tasks and that phonologically familiar words are easier to memorize than phonologically unfamiliar words, the greater brain activation during auditory word processing in L1 than in L2, regardless of semantic knowledge, is likely related to phonological familiarity, consistent with a role of the phonological store.

Taken together, these findings could be explained if linguistic (mainly LH) and nonlinguistic (mainly RH) processing, including the phonological store, are executed in parallel, with the children depending more on nonlinguistic processing for unfamiliar or low-frequency words in repetition tasks.

In sum, a complex interaction of semantic and phonological information processing was observed, especially in the angular gyrus. Because the repetition task employed in this study demands phonological and prosodic analyses far more than semantic analyses, semantic and phonological processing are difficult to separate clearly. However, the present results show that left-hemispheric activation is dominant for high-frequency tasks, especially in the angular gyrus, whereas right-hemispheric activation is dominant for low-frequency tasks in the supramarginal gyrus. These results suggest that a right-to-left shift in laterality occurs in the inferior parietal region as lexical knowledge increases, irrespective of language.

Inferior Frontal Region

With respect to the inferior frontal region, the statistical results for [oxy-Hb] changes demonstrate that brain activation in the RH was significantly greater than that in the LH in both the pars opercularis and the pars triangularis, and the statistics for [deoxy-Hb] changes confirmed the same results, although with slightly lower statistical significance.

Price et al. (1996) demonstrated that Broca's area is involved in both auditory word perception and repetition. The peak of frontal activation in response to hearing words is anterior to that associated with repeating words: roughly, the former corresponds to the pars triangularis and the latter to the pars opercularis and the adjacent precentral sulcus. We observed greater activation in the RH, and this right-hemispheric dominance was more prominent in the pars triangularis than in the pars opercularis (Fig. 5E,E′,F,F′), which may indicate that the rightward asymmetry is more pronounced when hearing words (auditory word perception) than when repeating them.

Broca's area in the left inferior frontal gyrus has traditionally been considered a language area. However, there is not yet a consensus on the anatomical demarcation of this region, and its functional characterization remains a matter of debate. This region is often discussed in the context of language, working memory, episodic memory, or implicit memory. It has been suggested that the left inferior prefrontal region serves as a crossroads between meaning in language and memory (for a review, see Gabrieli et al. 1998). In the adult brain, syntactic and working memory-related functions may be more pronounced in superior portions of the inferior frontal lobe (pars opercularis), whereas the inferior portions, including the pars triangularis, may be more involved in lexicosemantic function (Dapretto and Bookheimer 1999; Friederici 2002).