Abstract

Background:

Health technology assessments (HTA) generally, and economic evaluations (EE) more specifically, have become an integral part of health care decision making around the world. However, these assessments are time consuming and expensive to conduct. Because evaluation resources are scarce, priorities need to be set for these assessments, and the ability to use information from one country or region in another (geographic transferability) is an increasingly important consideration.

Objectives:

To review the existing approaches, systems, and tools for assessing the geographic transferability potential or guiding the conduct of transferring HTAs and EEs.

Methods:

A systematic literature review of several databases was conducted, supplemented with web searching, hand searching of journals, and bibliographic searching of identified articles. Systems, tools, checklists, and flow charts to assess, evaluate, or guide the conduct of transferability of HTAs and EEs were identified.

Results:

Of 282 references identified, 27 articles were reviewed in full text and, of these, seven proposed unique systems, tools, checklists, or flow charts specifically for geographic transferability. All seven articles identified a checklist of transferability factors to consider, and most identified a subset of ‘critical’ factors for assessing transferability potential. Most of these critical factors related to study quality, transparency of methods, the level of reporting of methods and results, and the applicability of the treatment comparators to the target country. Some authors proposed a sequenced, flow-chart-type approach, while others proposed an assessment of critical criteria first, followed by an assessment of other noncritical factors. Finally, some authors proposed a quantitative score or index to measure transferability potential.

Conclusion:

Despite a number of publications on the topic, the proposed approaches and the factors used for assessing geographic transferability potential have varied substantially across the papers reviewed. Most promising is the identification of an extensive checklist of critical and noncritical factors for determining transferability potential, which may form the basis for consensus on a future tool. Given the complexity of identifying appropriate weights for each of the noncritical factors, it is still uncertain whether the assessment and calculation of an overall transferability score or index will be practical or useful for transferability considerations in the future.

Keywords: costs and cost analysis, economic evaluation, health technology assessment, geographic transferability, portability, generalizability

Introduction

Over the past two decades, decision-makers at all levels of the health care system have faced increasing pressure to make more efficient use of existing health care resources. As a result, public and private agencies worldwide have turned to evidence-based processes to better assess the clinical and economic benefits of both new and existing health care technologies. Although safety and efficacy are essential first considerations, health technology assessment (HTA), in general, and economic evaluation (EE), specifically, have become an integral component of the overall decision-making process regarding the assessment and adoption of both new and existing health care technologies. Full EE, whether conducted alongside a trial or as a modeling study, assesses both the costs and consequences of a health care intervention in comparison with at least one other intervention. The general rule when assessing programs is that the difference in costs is compared with the difference in outcomes achieved, typically in an incremental analysis. Therefore, the basic tasks of economic evaluation are to identify, measure, value, and compare the costs and consequences of the alternatives under consideration.1
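The incremental comparison described above is commonly summarized as an incremental cost-effectiveness ratio (ICER): the difference in costs divided by the difference in outcomes. The Python sketch below is illustrative only; the function name `icer` and the figures in the example are hypothetical, and it omits refinements such as checks for dominance.

```python
def icer(cost_new: float, effect_new: float,
         cost_comparator: float, effect_comparator: float) -> float:
    """Incremental cost-effectiveness ratio of a new intervention vs a comparator.

    Costs must share one currency unit and effects one outcome unit
    (e.g. life-years gained or QALYs).
    """
    delta_cost = cost_new - cost_comparator
    delta_effect = effect_new - effect_comparator
    if delta_effect == 0:
        # With equal outcomes the ratio is undefined; the comparison
        # then rests on costs alone, as in a cost-minimization analysis.
        raise ValueError("Incremental effect is zero; ICER is undefined.")
    return delta_cost / delta_effect


# Hypothetical example: the new intervention costs 12,000 and yields 5.5
# outcome units; the comparator costs 8,000 and yields 5.0 units.
print(icer(12_000, 5.5, 8_000, 5.0))  # 8000.0
```

Read this as "8,000 currency units per additional unit of outcome"; whether that is acceptable is a judgment the ratio itself does not make.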

The basic types of economic evaluation include cost-benefit analysis (CBA), cost-effectiveness analysis (CEA), and cost-utility analysis (CUA). CBA measures and values both the benefits and the costs of a program or intervention in monetary terms. For example, a CBA may require that a dollar value be placed on expected years of life gained or on expected improvements in health and wellbeing. The benefits and costs counted include not only those directly attributable to the program or intervention but also any indirect benefits or costs arising through externalities or other third-party effects.2 However, much of the controversy surrounding the use of CBA stems from the fact that consumers of health care are not used to placing monetary values on health outcomes and other intangible benefits. Given these difficulties, CEA provides a more practical approach to health care decision-making. It compares the costs of achieving particular health effects that are measured in natural units related to the objective of the program, such as cases of disease avoided or years of life gained. The results of such comparisons are stated in terms of cost per unit of effect. CUA is often seen as a special form of CEA that introduces measures of benefit reflecting individuals’ preferences over the health consequences of the alternative programs that affect them. CUAs use a global measure of health outcome, such as the quality-adjusted life-years (QALYs) gained by undertaking one program instead of another, and the results are often expressed as a cost per QALY gained.1 Because this outcome measure is common across programs, CUA enables the comparison of different types of programs, which makes it more practical for decision-makers. A cost-minimization analysis (CMA) may sometimes be performed if the alternatives under consideration are judged to achieve the given outcomes to the same extent. However, CMA is usually not viewed as a separate form of full economic evaluation, because the original intent of such a study was to conduct either a CEA or a CUA.1
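To make the cost-per-QALY summary concrete, the following minimal sketch computes undiscounted QALYs as the utility weight multiplied by the time spent in each health state, then divides the incremental cost by the incremental QALYs. It assumes utilities on a 0 (dead) to 1 (full health) scale and omits discounting; the function names and example values are hypothetical.

```python
from typing import Sequence, Tuple

# A health profile is a sequence of (utility weight, years in that state) pairs.
# Simplifying assumption: utilities lie on the 0 (dead) to 1 (full health)
# scale, so states valued as worse than death are not handled here.
HealthProfile = Sequence[Tuple[float, float]]


def qalys(profile: HealthProfile) -> float:
    """Undiscounted QALYs: utility weight multiplied by time, summed over states."""
    total = 0.0
    for utility, years in profile:
        if not 0.0 <= utility <= 1.0:
            raise ValueError(f"utility {utility} is outside the assumed 0-1 scale")
        total += utility * years
    return total


def cost_per_qaly_gained(cost_new: float, profile_new: HealthProfile,
                         cost_old: float, profile_old: HealthProfile) -> float:
    """Incremental cost divided by incremental QALYs, the usual CUA summary."""
    delta_qalys = qalys(profile_new) - qalys(profile_old)
    if delta_qalys == 0:
        raise ValueError("No QALY difference; cost per QALY gained is undefined.")
    return (cost_new - cost_old) / delta_qalys


# Hypothetical programs: 4 years at utility 0.75 versus 4 years at utility 0.5.
print(cost_per_qaly_gained(30_000, [(0.75, 4.0)], 10_000, [(0.5, 4.0)]))  # 20000.0
```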

Approaches to conducting full economic evaluations can be categorized as either trial-based studies using patient-level data or decision-analytic modeling based on secondary data. Because randomized clinical trials are typically a necessary condition for the successful licensing of a pharmaceutical, economic data are sometimes collected by piggybacking onto the same trials. This approach is potentially attractive for its internal validity, but its main limitation is that the results may have limited external generalizability. Decision-analytic modeling brings together a range of evidence sources and allows the set of comparators to be expanded and the time horizon to be extended beyond the trial period. In addition, decision-analytic modeling provides a framework for informing specific decisions under conditions of uncertainty, allowing more convenient assessment of modeling assumptions, structural uncertainty, and different patient subgroups (heterogeneity).1

These evaluations, whether conducted as a trial or a modeling study and whether as a stand-alone EE or as part of a broader HTA report, are usually time-consuming, expensive, and demanding in terms of both statistical sophistication and the skilled researchers required. As a result, it is not possible to conduct an EE or HTA on every intervention. Fortunately, health care decision-makers often have access to previously published EEs or HTAs on the topic of interest. Unfortunately, these EEs or HTAs are commonly from another jurisdiction, and decision-makers need to assess whether, and to what extent, the assessment and analysis from that jurisdiction apply to their own. Several terms, such as geographic transferability, generalizability, portability, and extrapolation, have been used to describe the process of applying the analyses and results of EEs or HTAs from one jurisdiction to another. Before conducting an EE or HTA for the jurisdiction of interest, it is important first to consider whether an EE or HTA on the topic may already exist from another jurisdiction and, if so, whether its results could be transferred to or used in the country of interest. This is especially useful for decision-makers who face budget or time constraints in health care resource allocation decisions, given the increasing number of technologies that need to be assessed. As a result of the growing demand in many jurisdictions to use evidence as part of the decision-making process, there is growing interest in assessing the transferability of EEs and HTAs, with the aim of deciding the extent to which the results of a study from another jurisdiction can be adapted locally.

There has been a series of important contributions to the published literature in recent years addressing the geographic transferability of EE and HTA results. Several efforts were made to identify potential factors causing variability in EE and HTA data between locations. One of the first researchers to advance the debate about transferability was O’Brien,3 who identified six threats to the transferability of economic data across countries. Since then, the list of factors affecting transferability has been further expanded through a number of review papers on the topic. For example, in a systematic review by Goeree,4 a comprehensive classification system of over 80 factors was developed, grouped into five broad categories of variability factors, including characteristics of the patient, the disease, the health care provider, and the health care system. In addition to identifying factors that may raise transferability concerns, authors have proposed systems, checklists, or even indices to assess or measure the transferability potential of EEs and HTAs conducted in other jurisdictions to the jurisdiction of interest. However, no previous study has summarized these papers. Therefore, the purpose of this study was to review and summarize the existing approaches, systems, and tools for assessing transferability potential or guiding the actual conduct of transferability of HTAs and EEs.

Methods

Literature search strategy

Targeted literature search strategies were developed for each of the PubMed, Ovid MEDLINE, and EMBASE databases. Detailed search terms are provided in the Appendix. The search focused on relevant papers reflecting the methods, systems, or tools used to assess the transferability or generalizability of economic evaluation results across jurisdictions. Studies published in English were considered, without restriction on publication year. The literature search was supplemented with online searching using the Google search engine, hand searching of relevant journals, and review of the bibliographies of identified articles. The search was run on 22 November 2010. All references obtained were incorporated into a reference manager database to check for duplicates and to perform title and abstract screening.

Literature selection criteria

Studies meeting our selection criteria were those that focused on methods or tools applied to assess or evaluate transferability. The tools could consist of a checklist, toolkit, system, practice guideline, set of criteria, decision chart, or index. Articles were excluded if they were general narrative reviews, if they focused on identifying sources of variability in EEs or HTAs or factors likely to affect transferability between locations, or if they addressed the analytical approaches of adapting cost-effectiveness results to a particular jurisdiction of interest.

Synthesis and reporting

It was determined ‘a priori’ that due to the nature of the different proposed systems, a quantitative summary (eg, meta-analysis) of retrieved studies was not feasible. As a result, each paper is presented sequentially by year of publication with a qualitative description of the tool, checklist, criteria, system, or flow chart. Generalizations and conclusions across these approaches are provided following a description of each approach.

Results

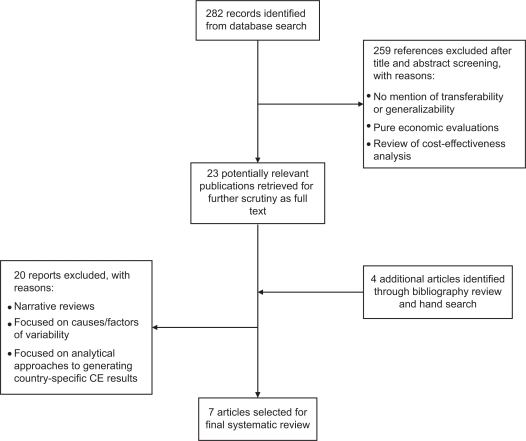

In total, the comprehensive search identified 282 citations. Of these, 230 articles were identified from PubMed, and 52 additional articles were identified from Ovid MEDLINE and EMBASE. A Google search did not identify any additional citations. Title and abstract screening for relevance to the topic resulted in the inclusion of 23 articles, which were retrieved in full text and reviewed in detail. Four additional references were identified during bibliography searching. Of the 27 articles reviewed in full text, seven papers with a specific focus on approaches to assess the transferability of economic evaluations across geographic locations were included in the review. Please refer to Figure 1 for a flow diagram of study selection. A description of the system or approach from each of the seven papers, by year of publication, is provided below, along with a summary of the application of each system or approach, where available.

Figure 1.

Flow diagram of literature selection for systematic review.

Abbreviation: CE, cost effectiveness.
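The selection counts reported above can be cross-checked with simple arithmetic. The sketch below only restates the numbers given in the text; the variable names are illustrative.

```python
# Counts as reported in the text of this review.
pubmed = 230
other_databases = 52   # additional citations from Ovid MEDLINE and EMBASE
google = 0             # the Google search found no additional citations
identified = pubmed + other_databases + google

after_screening = 23       # retained after title and abstract screening
from_bibliographies = 4    # added from reference lists of retrieved articles
full_text = after_screening + from_bibliographies
included = 7

excluded_at_screening = identified - after_screening
excluded_at_full_text = full_text - included

print(identified, full_text, excluded_at_screening, excluded_at_full_text)
# prints: 282 27 259 20
```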

Heyland’s generalizability criteria (1996)

Heyland5 introduced a list of criteria for assessing the generalizability of economic evaluations to the authors’ own setting, with the aim of improving the efficiency of their critical care unit. The criteria were based on ten key questions about variability factors, covering clinical generalizability and health care system generalizability (see Table 1). Before these criteria are applied, selected studies must meet a minimum methodological standard, which requires a comprehensive description of competing alternatives, sufficient evidence of clinical effectiveness or efficacy, appropriate identification, measurement, and valuation of all important costs, and an appropriate sensitivity analysis that accounts for uncertainty in all estimates.

Table 1.

Heyland’s generalizability criteria

Clinical generalizability

Systems generalizability


Source: Heyland DK, et al. Crit Care Med. 1996;24(9):15.

The authors identified 29 critical care studies (19 CEAs, 6 CMAs, and 4 partial economic evaluations that only compared the costs of alternative interventions) and assessed them against the minimum standard of methodological rigor before evaluating their generalizability to the authors’ own intensive care unit in a tertiary care hospital in Hamilton, ON. Of the 29 studies, 14 adequately described both competing alternatives, 17 provided sufficient evidence of clinical effectiveness, and six identified, measured, and valued costs appropriately. Overall, none of the 29 papers met the minimum methodological standard on all criteria. Four papers that provided adequate cost and clinical effectiveness/efficacy data were further evaluated from a hospital perspective using the proposed generalizability criteria. Differences in costing methods precluded the generalizability of three of these studies; however, for two of them, the direction (qualitative result) was probably generalizable, whereas the magnitude (quantitative result) was not.
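Heyland’s approach works as a gate: a study is examined against the generalizability questions only after it meets every minimum methodological standard. A minimal sketch of that gate follows, with hypothetical field and function names. It does not encode the ten generalizability questions themselves, and it applies the standards as stated; in the application described above, the authors went on to evaluate papers that met only the cost and effectiveness standards.

```python
from dataclasses import dataclass
from typing import Dict, List


@dataclass
class MinimumStandards:
    """Heyland's minimum methodological standards for one study (hypothetical field names)."""
    alternatives_described: bool  # comprehensive description of competing alternatives
    effectiveness_shown: bool     # sufficient evidence of clinical effectiveness or efficacy
    costs_appropriate: bool       # important costs identified, measured, and valued appropriately
    sensitivity_analysis: bool    # appropriate sensitivity analysis of uncertain estimates

    def all_met(self) -> bool:
        return all((self.alternatives_described, self.effectiveness_shown,
                    self.costs_appropriate, self.sensitivity_analysis))


def pass_minimum_standards(studies: Dict[str, MinimumStandards]) -> List[str]:
    """Names of studies that may proceed to the generalizability questions."""
    return [name for name, standards in studies.items() if standards.all_met()]


candidates = {
    "study A": MinimumStandards(True, True, True, True),
    "study B": MinimumStandards(True, True, False, True),  # costing inadequate
}
print(pass_minimum_standards(candidates))  # ['study A']
```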

Späth’s transferability indicators (1999)

Späth6 defined five indicators to assess the eligibility of economic evaluations for transfer to a given health care system (see Table 2). Similar to the approach proposed by Heyland,5 Späth proposed four criteria critical for internal methodological validity that must be satisfied before the transferability indicators are considered: the study adopts a national perspective, two or more options are compared, the evaluated therapies are described, and the assessed therapies and/or their comparators are used in the health system of interest. Studies fulfilling the four critical criteria are then assessed for eligibility for transfer using a five-indicator checklist. The five indicators cover three dimensions: the local setting in which the studies might be used (potential users of the economic evaluation and characteristics of the patient population), the transferability of health outcome data, and the transferability of resource utilization data (health care resources used and their unit prices). Studies from other health systems and settings must fulfill all five indicators to be considered transferable.

Table 2.

Späth’s transferability indicators


Source: Späth HM, et al. Health Policy. 1999;49:165–166.

To test the proposed checklist, the authors identified 26 published economic evaluations of adjuvant therapy in women with breast cancer and assessed them for transfer to the French health care system. Six of these studies passed the critical appraisal of internal validity and were assessed further for transferability. These studies covered different types of economic evaluation (4 CUAs, 1 CEA, and 1 CMA; 3 decision analyses and 3 clinical trials with cost estimates). All of the evaluations included direct medical costs, but the studies included no other resource utilization information, and the related unit prices were not reported. Therefore, none of the six studies was determined to be eligible for transfer to the French health care system.
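Späth’s screen can be read as two sequential all-or-nothing tests: four critical internal-validity criteria, then five transferability indicators, all of which must be satisfied. The sketch below encodes only that structure; answers are supplied as booleans in the order of the criteria, and the function name and result strings are hypothetical.

```python
from typing import Sequence

PASSES_TRANSFER = "eligible for transfer"
FAILS_VALIDITY = "excluded: fails a critical internal-validity criterion"
FAILS_INDICATOR = "not eligible: at least one transferability indicator unmet"


def spath_assessment(critical: Sequence[bool], indicators: Sequence[bool]) -> str:
    """Two-stage screen: 4 critical criteria first, then 5 indicators, all required."""
    if len(critical) != 4 or len(indicators) != 5:
        raise ValueError("expected 4 critical criteria and 5 indicators")
    if not all(critical):
        # Indicators are not considered for studies failing internal validity.
        return FAILS_VALIDITY
    return PASSES_TRANSFER if all(indicators) else FAILS_INDICATOR


# Hypothetical study: internally valid, but one indicator (e.g. unit prices
# reported) is not met, so the study is not eligible for transfer.
print(spath_assessment([True] * 4, [True, True, True, False, True]))
```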

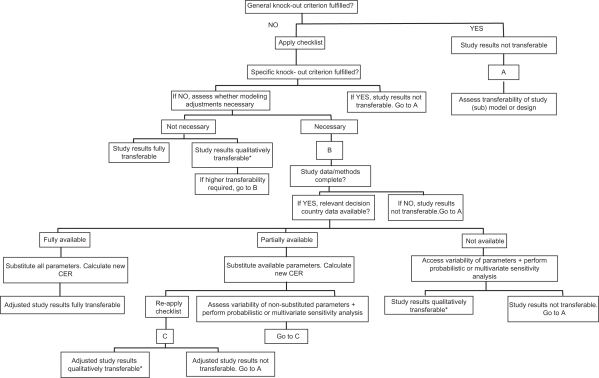

Welte’s transferability decision chart (2004)

One of the best known transferability assessment tools was published by Welte7 a few years later. As shown in Figure 2, Welte proposed the use of a transferability decision chart that takes into account ‘knock-out’ criteria, a transferability checklist, and methods for improving transferability and for assessing the uncertainty of transferred results. The decision chart starts with general ‘knock-out’ criteria to identify studies that are deemed not transferable. These general ‘knock-out’ criteria include:

The relevant technology is not comparable to the one that shall be used in the decision country.

The comparator is not comparable to the one that is relevant to the decision country.

The study does not possess an acceptable quality.

Figure 2.

Welte’s transferability decision chart.

Note: *Indicates order of magnitude can be transferred.

Source: Welte R, et al. Pharmacoeconomics. 2004;22(13):863.

Abbreviation: CER, cost-effectiveness ratio.

If the general ‘knock-out’ criteria have been passed, a transferability checklist is then applied to test for specific ‘knock-out’ criteria and to determine whether modeling adjustments are necessary. The checklist is based on 14 factors relating to methodological, health care system, and population characteristics. Although it is not a general ‘knock-out’ criterion, each relevant transferability factor on the checklist can become a specific ‘knock-out’ criterion if it cannot be assessed because of a lack of data from the study or the decision country. The specific criteria checklist is listed below:

Perspective

Discount rate

Medical cost approach

Productivity cost approach

Absolute and relative prices in health care

Practice variation

Technology availability

Disease incidence/prevalence

Case-mix

Life expectancy

Health-status preference

Acceptance, compliance, incentives to patients

Productivity and work-loss time

Disease spread

For each transferability factor, it has to be determined 1) to what extent it is relevant for the investigated technology; 2) the correspondence between the study country and the decision country; and 3) the likely effect of the transferability factor on the results.

If any of the general or specific ‘knock-out’ criteria applies, users should examine the transferability of the study design rather than the study results. For studies that pass all the ‘knock-out’ criteria, the need for model-based adjustment to improve transferability is evaluated based on data availability. For studies in which modeling adjustments are necessary, the decision chart also guides the user in determining whether the study results are transferable after adjustment, either descriptively (by reapplying the decision chart) or through probabilistic or multivariate sensitivity analysis.
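The flow through Welte’s decision chart can be approximated in code. This is a simplified sketch under stated assumptions, not a reproduction of Figure 2: each checklist factor is reduced to whether it is relevant and whether the study and decision countries correspond, the third question (likely effect on results) is left out, and all class, field, and result names are hypothetical.

```python
from dataclasses import dataclass, field
from enum import Enum
from typing import List

KNOCK_OUT = "knock-out: examine transferability of the study design, not its results"
TRANSFER_AS_IS = "results transferable without adjustment"
ADJUST = "adjust with local data, then reassess (reapply chart or sensitivity analysis)"
NOT_TRANSFERABLE = "adjustment needed but not feasible: results not transferable"


class Correspondence(Enum):
    SIMILAR = "similar"
    DIFFERENT = "different"
    UNKNOWN = "unknown"  # cannot be assessed for lack of data


@dataclass
class Factor:
    name: str                       # one of the 14 checklist factors, e.g. "Discount rate"
    relevant: bool                  # relevant for the investigated technology?
    correspondence: Correspondence  # study country versus decision country


@dataclass
class CandidateStudy:
    technology_comparable: bool
    comparator_comparable: bool
    acceptable_quality: bool
    factors: List[Factor] = field(default_factory=list)
    adjustment_data_available: bool = False  # enough detail to substitute local data?


def welte_decision(study: CandidateStudy) -> str:
    # General knock-out criteria: technology, comparator, and study quality.
    if not (study.technology_comparable and study.comparator_comparable
            and study.acceptable_quality):
        return KNOCK_OUT
    relevant = [f for f in study.factors if f.relevant]
    # A relevant factor that cannot be assessed becomes a specific knock-out criterion.
    if any(f.correspondence is Correspondence.UNKNOWN for f in relevant):
        return KNOCK_OUT
    if all(f.correspondence is Correspondence.SIMILAR for f in relevant):
        return TRANSFER_AS_IS
    return ADJUST if study.adjustment_data_available else NOT_TRANSFERABLE


# Hypothetical study whose health care prices differ from the decision country's.
example = CandidateStudy(
    True, True, True,
    factors=[Factor("Absolute and relative prices in health care", True,
                    Correspondence.DIFFERENT)],
    adjustment_data_available=True,
)
print(welte_decision(example))
```

The last branch mirrors a situation like the Cohen example below, where adjustment was needed but the study did not report enough detail to carry it out.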

The transferability decision chart was then applied to several international cost-effectiveness studies in the areas of interventional cardiology, vaccination, and screening. Application of the transferability checklist to an American multicenter study of stenting versus percutaneous transluminal coronary angioplasty (PTCA) by Cohen8 revealed that modeling adjustments would be necessary for the German Ministry of Health; however, because resource valuation was not presented in enough detail, the results of this study could not be transferred. A second study, the Belgium Netherlands Stent Study by Serruys,9 also showed that modeling adjustments were needed for Germany. This study presented sufficient detail to enable the adjustment of resource valuation using data derived from the German study. The adjusted study results were then evaluated for transferability by applying the transferability checklist again, and the authors concluded that transferring the order of magnitude of the adjusted EE result seemed defensible, but not the exact value. The third case was an example in which Denmark was interested in a Dutch EE that used a dynamic model, instead of the common static decision-analysis model, to project the cost effectiveness of a large-scale chlamydial screening program. General ‘knock-out’ criteria were met, so the transferability of the study model, rather than its results, was examined. Although transferring the economic model was found to be laborious, the progression component of the disease model and the productivity cost module could easily be transferred. Because such models take much more time to build than to adapt from other contexts, it was estimated that transferring the model saved approximately 6 years of work.

Boulenger’s transferability information checklist (2005)

In the article by Boulenger,10 the authors developed a transferability information checklist and used it to generate a score representing the percentage of applicable items that were adequately or partially addressed in economic studies. The checklist is shown in Table 3. The full checklist comprises 42 questions in two parts: the first relates to the overall methodological quality and internal validity of the studies; the second (the transferability sub-checklist) contains 16 items that focus more specifically on judging the transferability of a study. The extended checklist is divided into six main sections: the subject and key elements of the study (Q1-M2), characteristics of the methods used to measure clinical outcomes (E1-E7), the measure of health benefits used in the economic analysis (B1-B5), the costs (C1-C11), discounting (D1-D4), and discussion by the authors (S1, O1). To evaluate quantitatively how thoroughly key methodological items regarding transferability are addressed and reported in each study, a percentage transferability information score can be derived for both the full checklist and the sub-checklist after assigning each item one of the following values: 1 for ‘yes’, 0.5 for ‘partially’, and 0 for ‘no/no information’.11 Because each item contributes equal weight to the overall score, Boulenger’s system has no critical criteria.
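The percentage score can be written as 100 × (sum of item values) / (number of applicable items). A minimal sketch follows, assuming that ‘not applicable’ items are dropped from the denominator, consistent with the score being a percentage of applicable items. The function name and answer codes are hypothetical; the sub-checklist set lists the 16 items flagged in Table 3.

```python
from typing import Iterable, Mapping, Optional

# Item values as described by Boulenger; "not applicable" items are excluded.
ANSWER_VALUES = {"yes": 1.0, "partially": 0.5, "no": 0.0, "no information": 0.0}
NOT_APPLICABLE = "not applicable"

# The 16 items flagged as the transferability sub-checklist in Table 3.
SUB_CHECKLIST = frozenset({
    "HT1", "HT2", "SE2", "P1", "SP1", "SP3", "E5", "E7",
    "B5", "C1", "C5", "C6", "C7", "C9", "S1", "O1",
})


def transferability_score(answers: Mapping[str, str],
                          items: Optional[Iterable[str]] = None) -> float:
    """Equal-weight percentage score over the applicable items.

    If `items` is given (e.g. SUB_CHECKLIST), only those items are scored.
    """
    selected = set(items) if items is not None else None
    applicable = [answer for item, answer in answers.items()
                  if (selected is None or item in selected) and answer != NOT_APPLICABLE]
    if not applicable:
        raise ValueError("no applicable items to score")
    return 100.0 * sum(ANSWER_VALUES[a] for a in applicable) / len(applicable)


# Hypothetical answers for four items of one study.
answers = {"Q1": "yes", "HT1": "partially", "C5": "no information", "M1": "not applicable"}
print(transferability_score(answers))                 # 50.0
print(transferability_score(answers, SUB_CHECKLIST))  # 25.0
```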

Table 3.

Boulenger’s transferability information checklist

| Item | Question |
|---|---|
| Q1 | Is the study question clearly stated? |
| Q2 | Are the alternative technologies justified by the author(s)? |
| HT1ᵃ | Is the intervention described in sufficient detail? |
| HT2ᵃ | Is (are) the comparator(s) described in sufficient detail? |
| SE1 | Did the authors correctly specify the setting in which the study took place (eg, primary care, community)? |
| SE2ᵃ | Is (are) the country(ies) in which the economic study took place clearly specified? |
| P1ᵃ | Did the authors correctly state which perspective they adopted for the economic analysis? |
| SP1ᵃ | Is the target population of the health technology clearly stated by the authors or, when it is not, can it be inferred by reading the article? |
| SP2 | Are the population characteristics described (eg, age, sex, health status, socio-economic status, inclusion/exclusion criteria)? |
| SP3ᵃ | Does the article provide sufficient detail about the study sample(s)? |
| SP4 | Does the paper provide sufficient information to assess the representativeness of the study sample with respect to the target population? |
| M1 | If a model is used, is it described in detail? |
| M2 | Are the origins of the parameters used in the model given? |
| E1 | If a single study is used, is the study design described (sample selection, study design, allocation, follow-up)? |
| E2 | If a single study is used, are the methods of data analysis described (ITT/per protocol or observational data)? |
| E3 | If based on a review/synthesis of previously published studies, are the review methods described (search strategy, inclusion criteria, sources, judgment criteria, combination, investigation of differences)? |
| E4 | If based on opinion, are the methods used to derive estimates described? |
| E5ᵃ | Have the principal estimates of the effectiveness measure been reported? |
| E6 | Are the side effects or adverse effects addressed in the analysis? |
| E7ᵃ | Does the article provide the results of a statistical analysis of the effectiveness results? |
| B1 | Do the authors specify any summary benefit measure(s) used in the economic analysis? |
| B2 | Do the authors report the basic method of valuation of health states or interventions? |
| B3 | Do the authors specify the source(s) of health states (eg, specific patient populations or the general public)? |
| B4 | Do the authors specify the valuation tool used? |
| B5ᵃ | Is the level of reporting of benefit data adequate (incremental analysis, statistical analyses)? |
| C1ᵃ | Are the cost components/items used in the economic analysis presented? |
| C2 | Are the methods used to measure cost components/items provided? |
| C3 | Are the sources of resource consumption data provided? |
| C4 | Are the sources of unit price data provided? |
| C5ᵃ | Are the unit prices for resources given? |
| C6ᵃ | Are costs and quantities reported separately? |
| C7ᵃ | Is the price year given? |
| C8 | Is the time horizon given for each element of the cost analysis? |
| C9ᵃ | Is the currency unit reported? |
| C10 | Is a currency conversion rate given? |
| C11 | Does the article provide the results of a statistical analysis of cost results? |
| D1 | Was the summary benefit measure(s) discounted? |
| D2 | Were the cost data discounted? |
| D3 | Do the authors specify the rate(s) used in discounting costs and benefits? |
| D4 | Were discounted and undiscounted results reported? |
| S1ᵃ | Are quantitative and/or descriptive analyses conducted to explore variability from place to place? |
| O1ᵃ | Did the authors discuss caveats regarding the generalizability of their results? |

Note: Each question is answered with ‘yes’, ‘partially’, ‘no/no information provided’, or ‘not applicable’.

ᵃItems comprising the transferability sub-checklist.

Source: Boulenger S, et al. Eur J Health Econom. 2005;4:337–338.

The checklist was applied to 25 studies to assess the level of transferability of EEs of health care programs between the UK and France. The selected studies comprised 17 multi-country studies with study sites in both France and the UK, along with eight single-country studies from France or the UK that evaluated the same health technology using the same methodology. Costs and/or cost-effectiveness ratios in these studies were converted using purchasing power parities (PPP), with the Euro as the common currency, which enabled the results to be compared between the two countries. The mean transferability information score was 66.9% ± 13.6% for the full checklist and 68.8% ± 15.1% for the sub-checklist; the similarity of the two scores suggests a high correlation between overall study quality and a good transferability score. The potential relationship between the transferability information score and study characteristics (multi-country vs single-country) was explored using a χ2 test; however, no statistically significant differences were found. Comparison of the 25 economic evaluations revealed that, in the vast majority of cases, the cost-effectiveness ratio for France was more favorable than that for the UK. The authors therefore pointed out that, if this finding were confirmed by further studies, a decision-maker in France, on seeing a study performed in the UK, could be fairly confident that even more favorable results would be obtained for France. However, the extent to which the results would be more favorable is difficult to determine, given the wide range of differences (1.5%–250%). Of the 17 multi-country studies, five enabled direct comparisons, and in these the difference was due solely to a price effect (unit costs).
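The PPP conversion step can be illustrated as follows. The sketch assumes a PPP conversion factor expressed as local currency units per unit of the common currency, so dividing a local amount by the factor gives a PPP-adjusted value. The function names, factors, and cost-effectiveness figures are hypothetical and are not taken from Boulenger’s study.

```python
def to_common_currency(amount_local: float, ppp_factor: float) -> float:
    """Convert a local-currency amount using a PPP factor expressed as
    local currency units per unit of the common (PPP-adjusted) currency."""
    if ppp_factor <= 0:
        raise ValueError("PPP factor must be positive")
    return amount_local / ppp_factor


def relative_difference_pct(reference: float, other: float) -> float:
    """How far `other` lies from `reference`, as a percentage of `reference`."""
    return 100.0 * (other - reference) / reference


# Hypothetical cost per QALY values and PPP factors, for illustration only.
uk = to_common_currency(15_000, 0.75)     # 20000.0
france = to_common_currency(18_000, 1.0)  # 18000.0
print(relative_difference_pct(uk, france))  # -10.0
```

A negative difference here means the French ratio is lower, ie, more favorable, than the UK ratio once both are expressed in the common currency.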

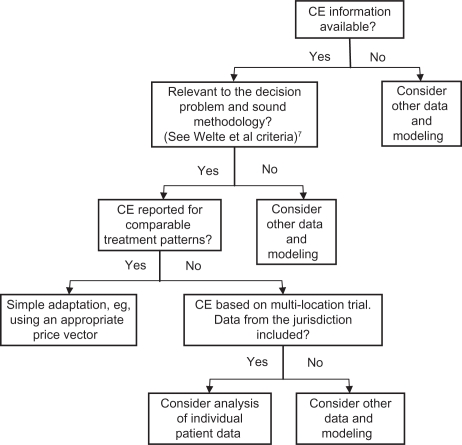

Drummond’s application algorithm (2009)

An International Society for Pharmacoeconomics and Outcomes Research (ISPOR) Task Force on Good Research Practices led by Drummond12 proposed a four-step application algorithm that determines the appropriate methods for adjusting cost-effectiveness estimates based on data availability. The authors considered three situations in which cost-effectiveness results are not transferable; in other words, there are three critical criteria: 1) either the experimental technology or the comparator(s) are not relevant in the jurisdiction of interest; 2) the methodological quality of the studies does not meet local standards, which is similar to Welte’s general ‘knock-out’ criteria; and 3) the study population differs between jurisdictions. Furthermore, they developed an application algorithm (see Figure 3) to assess whether the study results can be transferred through simple adjustment procedures for practice variations, unit health care costs, settings, time horizon, discount rates, and productivity/time costs (ie, similar to Welte’s specific ‘knock-out’ criteria), or whether more elaborate adjustment is needed, either by analyzing individual patient data or by using decision-analytic modeling techniques, depending on whether the jurisdiction of interest was included in a multi-location study. The authors further addressed analytical methods for using trial-based patient data and the circumstances under which decision-analytic models should be considered.

Figure 3.

Drummond’s steps for determining appropriate methods for adjusting cost-effectiveness information.

Source: Drummond MF, et al. Value Health. 2009;12:411.

Abbreviation: CE, cost effectiveness.
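The branching described for Drummond’s algorithm can be sketched as a short decision function. This is a simplified reading of the text, not a reproduction of the four steps in Figure 3, and the class, field, and result names are hypothetical.

```python
from dataclasses import dataclass

NOT_TRANSFERABLE = "not transferable"
SIMPLE = "transfer with simple adjustments"
PATIENT_DATA = "reanalyze individual patient data"
MODELING = "use decision-analytic modeling"


@dataclass
class TransferQuestion:
    technology_and_comparators_relevant: bool
    quality_meets_local_standards: bool
    population_similar: bool
    simple_adjustment_sufficient: bool         # e.g. unit costs, discount rate, time horizon
    jurisdiction_in_multilocation_study: bool  # patient-level data from the jurisdiction exist


def drummond_route(q: TransferQuestion) -> str:
    # Three critical criteria: failing any one means the results are not transferable.
    if not (q.technology_and_comparators_relevant
            and q.quality_meets_local_standards
            and q.population_similar):
        return NOT_TRANSFERABLE
    if q.simple_adjustment_sufficient:
        return SIMPLE
    # More elaborate adjustment: the route depends on where the data come from.
    return PATIENT_DATA if q.jurisdiction_in_multilocation_study else MODELING


# Hypothetical case: relevant, good-quality study whose jurisdiction was not a trial site.
print(drummond_route(TransferQuestion(True, True, True, False, False)))  # use decision-analytic modeling
```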

Turner’s transferability checklist (2009)

The next system was proposed by Turner13 as part of the European network for Health Technology Assessment (EUnetHTA) project.14 These authors and contributors developed an adaptation toolkit containing a series of checklists, questions, and resources to guide users through the process of selecting potentially relevant material, assessing the relevance, reliability, and transferability of HTA reports from other settings or jurisdictions, and adapting the information to the context of their own setting. The toolkit is structured into two sections: 1) speedy sifting, a screening tool that enables rapid screening of existing HTA reports to assess their relevance for adaptation; and 2) the main toolkit, a more comprehensive tool with questions on reliability and transferability issues. The speedy sifting section contains eight questions:

Are the policy and research questions being addressed relevant to your questions? (Yes/No)

What is the language of this HTA report? Is it possible to translate this report into your language? (Yes/No)

Is there a description of the health technology being assessed? (Judgment needed)

Is the scope of the assessment specified? (Judgment needed)

Has the report been externally reviewed? (Judgment needed)

Is there any conflict of interest? (Judgment needed)

When was the work that underpins this report done? Does this make it out of date for your purposes? (Judgment needed)

Have the methods of the assessment been described in the HTA report? (Judgment needed)

Users are expected to undertake the speedy sifting as a quick assessment of the report's relevance for adaptation before using the more comprehensive tool. Based on the answers to the eight questions, users decide for themselves whether to: 1) proceed to the main part of the toolkit, 2) seek further information, or 3) end the adaptation process and consider creating a new local HTA report. In that respect, these eight questions resemble the critical criteria proposed in earlier systems.
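Because the toolkit leaves the final decision to the user's judgment, any automated rule is necessarily an interpretation. The sketch below shows one hypothetical way to record the eight answers and map them to the three outcomes: each question is rephrased so that a "favourable" answer supports adaptation (for example, "no conflict of interest"), any unfavourable answer ends the process, and any unclear answer prompts a search for more information. The question keys and the rule itself are our assumptions, not part of the EUnetHTA toolkit.

```python
from enum import Enum


class Answer(Enum):
    FAVOURABLE = "favourable"      # answer supports using the report
    UNFAVOURABLE = "unfavourable"  # answer argues against using the report
    UNCLEAR = "unclear"            # judgment cannot be made from the report


# Hypothetical keys for the eight speedy-sifting questions, each phrased so that
# a favourable answer supports adaptation.
QUESTIONS = (
    "questions_relevant",
    "language_usable",
    "technology_described",
    "scope_specified",
    "externally_reviewed",
    "no_conflict_of_interest",
    "work_up_to_date",
    "methods_described",
)


def speedy_sift(answers: dict) -> str:
    """Apply one possible (hypothetical) decision rule to the eight answers."""
    missing = [q for q in QUESTIONS if q not in answers]
    if missing:
        raise ValueError(f"unanswered questions: {missing}")
    values = [answers[q] for q in QUESTIONS]
    # Treat every question like a critical criterion: one unfavourable answer stops.
    if Answer.UNFAVOURABLE in values:
        return "end adaptation; consider a new local HTA report"
    if Answer.UNCLEAR in values:
        return "seek further information"
    return "proceed to main toolkit"


answers = {q: Answer.FAVOURABLE for q in QUESTIONS}
answers["externally_reviewed"] = Answer.UNCLEAR
print(speedy_sift(answers))  # prints: seek further information
```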

The main part of the toolkit (see Table 4) comprises five domains in the form of a checklist: technology use, safety, effectiveness or efficacy, economic evaluation, and organizational aspects. Each domain includes a series of questions that enable assessment of a report's reliability, specific relevance, and transferability. Answers to these questions help users extract information from the corresponding sections of the HTA report and incorporate it into an HTA report for their own setting; the data may need to be updated and supplemented with local contextual data. The main toolkit can be used in its entirety across all five domains or to adapt information in one or more individual domains. If, after working through the main toolkit, users find that no part of the HTA report is reliable and/or transferable, they should not pursue transfer and should instead consider creating a new local HTA report.
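The domain-by-domain use of the main toolkit can be pictured as filtering the five domains and falling back to a new local report when nothing survives. A minimal sketch, assuming (our simplification) that the answers for each domain are summarized as three yes/no judgments and that a domain is adapted only when all three are positive; the class and function names are hypothetical.

```python
from dataclasses import dataclass

# The five domains of the main toolkit, in the order listed in the text.
DOMAINS = (
    "technology use",
    "safety",
    "effectiveness",
    "economic evaluation",
    "organizational aspects",
)


@dataclass
class DomainJudgment:
    """Hypothetical summary of the answers to one domain's questions."""
    reliable: bool
    relevant: bool
    transferable: bool


def domains_to_adapt(judgments: dict) -> list:
    """Domains judged reliable, relevant and transferable, in toolkit order.

    Domains the user chose not to assess are simply absent from `judgments`.
    """
    return [
        d for d in DOMAINS
        if d in judgments
        and judgments[d].reliable
        and judgments[d].relevant
        and judgments[d].transferable
    ]


def next_step(judgments: dict) -> str:
    usable = domains_to_adapt(judgments)
    if not usable:
        return "create a new local HTA report"
    return "adapt: " + ", ".join(usable)


print(next_step({
    "safety": DomainJudgment(True, True, True),
    "economic evaluation": DomainJudgment(True, True, False),
}))  # prints: adapt: safety
```

Passing only a subset of domains reflects the option of using the toolkit for one or more domains rather than all five.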

Table 4.

EUnetHTA adaptation toolkit

Technology use domain

Safety domain

Effectiveness (including efficacy) domain

Economic evaluation domain

To assess relevance and reliability:

To assess transferability:

Organizational aspects domain (organizational aspects matrix)

| Organizational aspects dimensions | Inter-organizational level | Intra-organizational level | Health care system |
|---|---|---|---|
| Utilization | | | |
| Work processes | | | |
| Centralization/decentralization | | | |
| Staff | | | |
| Job satisfaction | | | |
| Communication | | | |
| Finances | | | |
| Stakeholders | | | |

Type of data and methods of analysis: data from research (quantitative and qualitative); literature reviews; routine data; informal knowledge and anecdotes; judgments; models.

Organizational aspects domain additional questions:

Source: Turner S, et al. EUnetHTA Adaptation Toolkit Work Package 5, v4, 2008.

Abbreviations: HRQoL, health related quality of life; HTA, health technology assessments.

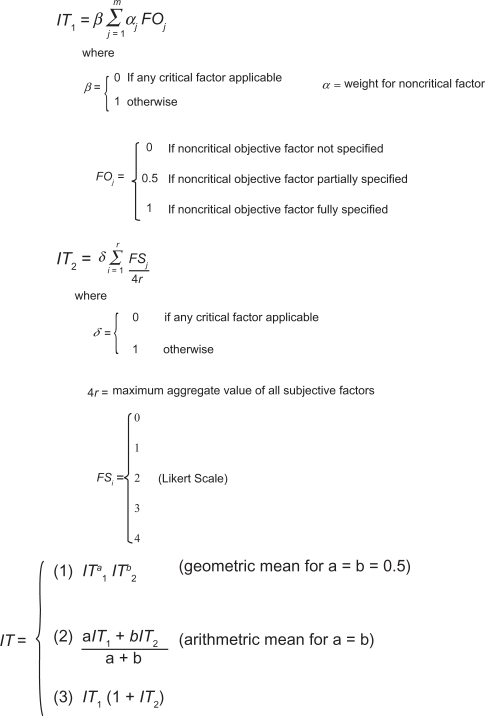

Antonanzas’ transferability index (2009)

Finally, Antonanzas et al15 constructed a numerical global transferability index, based on weighted objective and subjective elements, to measure the degree of transferability of EE results. The global index is derived through a mathematical formula that combines factors and weights from a general index and a specific index. Both partial indices consider a range of critical and noncritical factors similar to those considered by Boulenger10 and Welte.7 The factors used in the general and specific indices are shown in Table 5, and the index formulas are shown in Figure 4. In the first phase of this system, a general transferability index considers seven critical objective factors and then the same 16 noncritical objective factors proposed by Boulenger10 (see Table 5). The formula for the general index (IT1), shown in the first panel of Figure 4, is intended first to evaluate the methodological quality of the studies and then to exclude studies deemed not transferable. The specific index, which is considered subjective, measures the applicability of each study to the specific setting of the decision-maker; it comprises four critical and eight noncritical criteria. The formula for the specific index (IT2) is shown in the second panel of Figure 4. The global transferability index (third panel of Figure 4) then combines the general and specific indices as a simple or weighted arithmetic mean, a geometric mean, or a combination of the indices. The overall approach for the objective factors is similar to that of Boulenger;10 however, Boulenger assigned equal weights to all noncritical items in that checklist, whereas Antonanzas derived weights for the noncritical objective factors by averaging the answers of seven HTA agencies in Spain.

The theoretical value of the global index lies between 0 and 1, or between 0 and 2, depending on the formulation chosen. The authors do not provide a threshold above which a study would be deemed transferable: because the same study can have different index values in different jurisdictions, they considered that health authorities in each jurisdiction should select the threshold value that best fits their purposes.
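Figure 4 is not reproduced here, so the exact published formulas cannot be restated. The sketch below shows only the general structure described in the text, under our own assumptions: each partial index multiplies 0/1 critical factors (so a single failed critical factor yields zero) by a weighted mean of noncritical scores on a 0 to 1 scale, and the global index is a weighted arithmetic or geometric mean of IT1 and IT2 with weights a and b. The function names, scoring scale, and weighting scheme are hypothetical and may differ from Antonanzas' formulas.

```python
import math


def partial_index(critical: list, scores: list, weights: list) -> float:
    """Illustrative partial index (general IT1 or specific IT2).

    critical: 0/1 values for the critical factors (0 = not satisfied).
    scores:   noncritical factor scores, each assumed to lie in [0, 1].
    weights:  non-negative weights for the noncritical factors.
    """
    if any(c not in (0, 1) for c in critical):
        raise ValueError("critical factors must be scored 0 or 1")
    if len(scores) != len(weights) or sum(weights) <= 0:
        raise ValueError("need one weight per score and a positive weight total")
    # The product of the critical factors acts as a knock-out switch:
    # a single 0 sets the whole index to 0.
    knock_out = math.prod(critical)
    weighted_mean = sum(w * s for w, s in zip(weights, scores)) / sum(weights)
    return knock_out * weighted_mean


def global_index(it1: float, it2: float, a: float = 0.5, b: float = 0.5,
                 kind: str = "arithmetic") -> float:
    """Combine the two partial indices; with a + b = 1 the result stays in [0, 1]."""
    if kind == "arithmetic":
        return a * it1 + b * it2
    if kind == "geometric":
        return it1 ** a * it2 ** b
    raise ValueError(f"unknown combination: {kind}")


# The same study scored with two jurisdictions' weights for the noncritical factors.
scores = [1.0, 0.5]
print(partial_index([1, 1], scores, weights=[1, 1]))  # 0.75
print(partial_index([1, 1], scores, weights=[1, 3]))  # 0.625
```

The two calls at the end illustrate the point made above: identical study scores combined with different jurisdiction-specific weights give different index values, which is one reason a single universal threshold is hard to justify.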

Table 5.

Antonanzas’ general and specific transferability index factors

Global index (IT)

General index (IT1)

Critical objective factors:

Specific index (IT2)

Critical subjective factors:

Noncritical subjective factors:

Source: Antonanzas F, et al. Health Econ. 2009;18:629–643.

Figure 4.

Antonanzas’ transferability indices – detailed formulas.

Source: Antonanzas F, et al. Health Econ. 2009;18:629–643.

The index was applied to 27 Spanish studies on infectious diseases from the perspective of a hypothetical regional agency in charge of evaluating health technologies. Infectious diseases were chosen because the studies covered both preventive and curative technologies and included models with diverse time horizons, which allowed the different aspects of the proposed index to be checked. In total, 11 of the 27 studies were deemed not transferable because one or more critical factors received a value of 0, and they were therefore excluded. A global transferability index was generated for each remaining study using the arithmetic and geometric means (Figure 4, formulas 1 and 2, with a = b = 0.5), although the computed index values hardly varied with the formulation used. The transferability of the analyzed studies was relatively low: the mean value of the index was 0.534–0.543, against a theoretical maximum of 1. The authors also compared the average index value with the average score generated using Boulenger's10 approach and found no statistically significant difference.
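One way to see why the arithmetic and geometric formulations gave nearly identical results: for weights a + b = 1, the weighted geometric mean never exceeds the weighted arithmetic mean, and the two coincide when IT1 equals IT2, so they differ little whenever the two partial indices are similar. The sketch below uses hypothetical values chosen for illustration; they are not taken from the Spanish studies.

```python
def combine(it1: float, it2: float, a: float = 0.5) -> tuple:
    """Weighted arithmetic and geometric means of two indices, with b = 1 - a."""
    b = 1 - a
    arithmetic = a * it1 + b * it2
    geometric = it1 ** a * it2 ** b
    return arithmetic, geometric


# Similar partial indices: the two means almost coincide.
am, gm = combine(0.55, 0.53)
print(f"{am:.4f} {gm:.4f}")  # 0.5400 0.5399

# Very different partial indices: the gap widens.
am2, gm2 = combine(0.90, 0.20)
print(f"{am2:.4f} {gm2:.4f}")  # 0.5500 0.4243
```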

Summary of approaches

Although seven publications to date have proposed alternative systems, processes, or approaches for assessing the geographic transferability potential of EEs and HTAs, or for guiding the transfer of EEs and HTAs across jurisdictions, the proposed approaches and the factors used for assessing transferability potential have varied substantially across these publications. Some approaches consist simply of a short list of study quality issues, while others include a much more extensive list of factors for consideration. Some authors have taken a completely different direction, proposing a sequenced flow chart to guide either the conduct of a transferability study or the decision on whether a primary study is needed in the target country.

More common among the proposed systems or approaches is an assessment of critical criteria for transferability potential first, followed by an assessment of other noncritical factors. Although there is some agreement on what these critical and noncritical transferability factors are, there are considerable discrepancies in both sets of factors across the proposed systems and approaches. Finally, some authors have gone a step further by proposing a quantitative score or index to measure transferability potential, with a higher score indicating a greater likelihood of transferability. A variant of this approach builds the critical factors into the index so that, if any critical factor is not satisfied, the index returns zero, indicating that the EE or HTA is not transferable.

Discussion

The increasing pressure on health care decision-makers to make more efficient use of existing health care resources has resulted in a substantial increase in the global demand for EEs and HTAs. However, conducting EEs and HTAs on every new intervention in every jurisdiction is not only infeasible but would also likely result in a very inefficient use of scarce global assessment and evaluation resources. As a result, decision-making bodies will increasingly look at the applicability and feasibility of using EE and HTA evidence from other jurisdictions for their own local needs. Geographic transferability is a promising way to make more efficient use of existing assessment and evaluation resources, and it may be the only option for jurisdictions that want to follow an evidence-based decision-making process but have limited resources or expertise to conduct these assessments.

The most promising outcome of research to date on geographic transferability is the identification of factors that can affect transferability. This has led to systems and approaches with checklists for assessing and conducting transferability, and specifically to the development of an extensive checklist of critical and noncritical factors for determining transferability potential. Although the list of factors has varied substantially across publications, some patterns have emerged that might form the basis for consensus in the development of a future tool, checklist, or system. For example, the critical transferability factors proposed so far focus on study quality, transparency of methods, the level of reporting of methods and results, and the applicability of the treatment comparators to the target country. The proposed list of noncritical factors has been much more extensive, and future research might focus on narrowing or refining it. More recently, indices have been proposed to measure transferability potential. However, due to the complexities of identifying appropriate weights for each of the noncritical factors, it is still uncertain whether the assessment and calculation of an overall transferability score or index will be practical or useful for transferability considerations in the future.

Researchers need to continue working toward consensus on a list of critical factors that prevent geographic transferability and a list of noncritical factors that may prevent geographic transferability of EEs or HTAs across jurisdictions. Consensus will lead to good practice guidelines, and perhaps eventually to a general scoring system or index that could be tailored to each jurisdiction using jurisdiction-specific weights for each transferability factor. Whether or not an index-based approach proves practical or useful for decision-makers, an essential starting point is consensus across jurisdictions on the list (eg, checklist) of critical and noncritical factors that are important for determining transferability potential.

Acknowledgments

Jean-Eric Tarride and Daria O’Reilly are supported by Ministry of Health and Long Term Care (MOHLTC) Career Scientist awards.

Appendix

Search details for PubMed

1. Chart*[tiab] OR toolkit*[tiab] OR checklist*[tiab] OR index*[tiab] OR indices*[tiab] OR methodologic*[tiab] OR practice*[tiab] OR guideline*[tiab]

2. Models, Economic[mh]

3. Economic evaluation*[tiab]

4. Technology transfer[mh]

5. Transferab*[tiab] OR transferrab*[tiab] OR transfer[tiab] OR transportable[tiab] OR transportability[tiab] OR portable[tiab] OR portability[tiab] OR adapt*[tiab] OR generali*[tiab]

1 and (2 or 3) and (4 or 5)
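For readers who want to rerun the PubMed strategy as a single query, the combination line can be expanded by substituting the text of each numbered search line. A minimal sketch, assuming the five search lines above are numbered 1 to 5 in the order listed and that each is wrapped in parentheses before being combined; PubMed expects Boolean operators in upper case, so AND and OR are written that way. The helper name `group` is hypothetical.

```python
# Search lines 1-5 as listed above (PubMed field tags unchanged).
LINES = {
    1: "Chart*[tiab] OR toolkit*[tiab] OR checklist*[tiab] OR index*[tiab] OR indices*[tiab] "
       "OR methodologic*[tiab] OR practice*[tiab] OR guideline*[tiab]",
    2: "Models, Economic[mh]",
    3: "Economic evaluation*[tiab]",
    4: "Technology transfer[mh]",
    5: "Transferab*[tiab] OR transferrab*[tiab] OR transfer[tiab] OR transportable[tiab] "
       "OR transportability[tiab] OR portable[tiab] OR portability[tiab] OR adapt*[tiab] "
       "OR generali*[tiab]",
}


def group(*numbers: int) -> str:
    """OR together the given search lines, each wrapped in parentheses."""
    return "(" + " OR ".join(f"({LINES[n]})" for n in numbers) + ")"


# Expand the combination line: 1 and (2 or 3) and (4 or 5)
query = " AND ".join([group(1), group(2, 3), group(4, 5)])
print(query)
```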

Search details for Ovid MEDLINE and EMBASE

1. (chart* or toolkit* or checklist* or index* or indices* or methodologic* or practice* or guideline*).ti,ab.

2. Exp Models, Economic/use prmz

3. Economic evaluation*.ti,ab.

4. Economic Evaluation/use emez

5. Technology Transfer/

6. (transferab* or transferrab* or transfer or transportable or transportability or portable or portability or adapt* or generali*).ti,ab.

1 and (2 or 3) and (5 or 6 use prmz)

1 and (3 or 4) and (5 or 6 use emez)

Footnotes

Disclosure

No funding was obtained for this research and the authors have no financial conflicts of interest to declare.

References

- 1. Drummond MF, Sculpher MJ, Torrance GW, O'Brien BJ, Stoddart GL. Methods for the economic evaluation of health care programmes. New York: Oxford University Press; 2005.

- 2. Folland S, Goodman AC, Stano M. The economics of health and health care. Upper Saddle River, NJ: Prentice-Hall, Inc; 2001.

- 3. O'Brien BJ. A tale of two (or more) cities: geographic transferability of pharmacoeconomic data. Am J Manag Care. 1997;3(Suppl):S33–S39.

- 4. Goeree R, Burke N, O'Reilly D, Manca A, Blackhouse G, Tarride JE. Transferability of economic evaluations: approaches and factors to consider when using results from one geographic area for another. Curr Med Res Opin. 2007;23:671–682. doi: 10.1185/030079906x167327.

- 5. Heyland DK, Kernerman P, Gafni A, Cook DJ. Economic evaluations in the critical care literature: do they help us improve the efficiency of our unit? Crit Care Med. 1996;24:1591–1598. doi: 10.1097/00003246-199609000-00025.

- 6. Späth HM, Carrere MO, Fervers B, Philip T. Analysis of the eligibility of published economic evaluations for transfer to a given health care system. Methodological approach and application to the French health care system. Health Policy. 1999;49:161–177. doi: 10.1016/s0168-8510(99)00057-3.

- 7. Welte R, Feenstra T, Jager H, Leidl R. A decision chart for assessing and improving the transferability of economic evaluation results between countries. Pharmacoeconomics. 2004;22:857–876. doi: 10.2165/00019053-200422130-00004.

- 8. Cohen DJ, Breall JA, Ho KK, et al. Evaluating the potential cost-effectiveness of stenting as a treatment for symptomatic single-vessel coronary disease: use of a decision-analytic model. Circulation. 1994;89:1859–1894. doi: 10.1161/01.cir.89.4.1859.

- 9. Serruys P, van Hout B, Bonnier H, et al. Randomized comparison of implantation of heparin-coated stents with balloon angioplasty in selected patients with coronary artery disease (Benestent II). Lancet. 1998;352:673–681. doi: 10.1016/s0140-6736(97)11128-x.

- 10. Boulenger S, Nixon J, Drummond M, Ulmann P, Rice S, de Pouvourville G. Can economic evaluations be made more transferable? Eur J Health Econ. 2005;6:334–346. doi: 10.1007/s10198-005-0322-1.

- 11. Nixon J, Rice S, Drummond M, Boulenger S, Ulmann P, de Pouvourville G. Guidelines for completing the EURONHEED transferability information checklists. Eur J Health Econ. 2009;10:157–165. doi: 10.1007/s10198-008-0115-4.

- 12. Drummond M, Barbieri M, Cook J, et al. Transferability of economic evaluations across jurisdictions: ISPOR Good Research Practices Task Force report. Value Health. 2009;12:409–418. doi: 10.1111/j.1524-4733.2008.00489.x.

- 13. Turner S, Chase DL, Milne R, et al. The health technology assessment adaptation toolkit: description and use. Int J Technol Assess Health Care. 2009;25(Suppl 2):37–41. doi: 10.1017/S0266462309990663.

- 14. EUnetHTA. Work Package 5 Adaptation Toolkit v4. 2008. Available at: http://www.eunethta.net/upload/WP5/EUnetHTA_HTA_Adaptation_Toolkit_October08.pdf.

- 15. Antonanzas F, Rodriguez-Ibeas R, Juarez C, Hutter F, Lorente R, Pinillos M. Transferability indices for health economic evaluations: methods and applications. Health Econ. 2009;18:629–643. doi: 10.1002/hec.1397.