Abstract

Although the ultimate aim of neuroscientific enquiry is to gain an understanding of the brain and how its workings relate to the mind, most current efforts focus on small questions using increasingly detailed data. However, it might be possible to successfully address the larger question of mind–brain mechanisms if the cumulative findings from these neuroscientific studies are coupled with complementary approaches from physics and philosophy. The brain, we argue, can be understood as a complex system or network, in which mental states emerge from the interaction between multiple physical and functional levels. Achieving further conceptual progress will crucially depend on broad-scale discussions regarding the properties of cognition and the tools that are currently available or must be developed in order to study mind–brain mechanisms.

The state of the mind–brain dilemma

The human mind is a complex phenomenon built on the physical scaffolding of the brain [1–3], which neuroscientific investigation continues to examine in great detail. However, the nature of the relationship between the mind and the brain is far from understood [4]. In this article we argue that recent advances in complex systems theory (see Glossary) might provide crucial new insights into this problem. We first examine what is presently known about the complexity of the brain and review recent applications of complex network theory to the study of brain connectivity [2,3] (Box 1 and Figure 1). We then discuss the philosophical concept of emergence as a potential framework for the investigation of mind–brain mechanisms. We delineate currently available investigative tools for the examination of this problem, from quantitative statistical physics to qualitative metaphors, and discuss their relative advantages and limitations. Finally, we highlight crucial areas where further work is necessary to achieve progress, including both detailed modeling and large-scale theoretical frameworks.

Box 1. Complex network theory.

Complex network theory draws from advances in statistical physics, mathematics, computer science and the social sciences to provide a principled framework in which to examine complex systems that are composed of unique components and display nontrivial component-to-component relations. This framework has been applied to systems as varied as metabolic networks, food webs, gene–gene interactions, social networks and more recently the human brain.

The simplest application of the theory to these systems is in the use of mathematical graph theory to describe the statistical properties of the system’s connectivity, which can provide important insights into underlying organizational principles. The graphical properties of systems can be directly related to characteristics of the system’s function and to external constraints that might have shaped the system’s growth, development and operation. Graphical models can be extended to create more complicated models in which simple connectivity maps are supplemented with additional information on the characteristics of individual components, functional algorithms and so on. An additional important avenue of inquiry is the construction of generative models of network organization that can shed light on the structural predictors of altered function, for example in disease states.

Complex network theory is particularly applicable to the study of the human brain – a complex system on multiple scales of space and time that can be decomposed into subcomponents and the interactions between them. In addition to applicability, the framework is generalizable across neuroimaging modalities and provides results that can be intuitively interpreted in relation to large bodies of previous neuroscientific and theoretical work [79]. Importantly, graphical properties of human brain networks have been directly linked to system function through correlations with behavioral and cognitive variables including verbal fluency, IQ and working memory accuracy [30]. Altered function, such as that present in disease states, has also been correlated with underlying structure in clinical states as diverse as schizophrenia and Alzheimer’s disease [80].

Complementary avenues of inquiry have uncovered evidence that metabolic properties of the brain can be mapped to network organization [81], suggesting energetic constraints on underlying architecture. These results are consistent with recent work characterizing the physical embedding of brain network organization into the three-dimensional space of the skull – a process that seems to have been done in a cost-efficient manner characteristic of other highly constrained physical systems [7].

In summary, there exists a wealth of emerging evidence that complex network theory, applied to neuroimaging data, can uniquely facilitate neuroscientific inquiry.

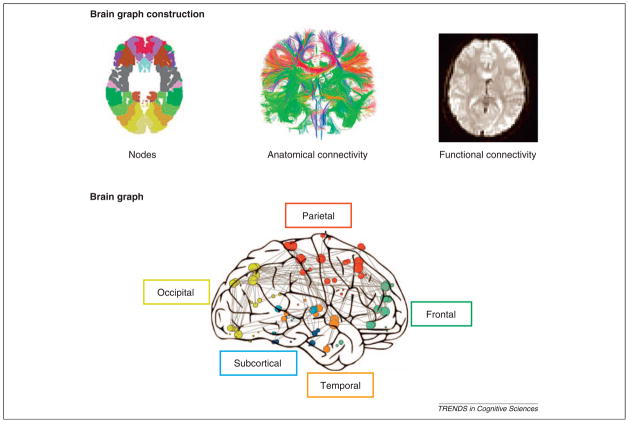

Figure 1. Brain graph construction.

One of the recent applications of complex network theory in neuroscience has been in the creation of brain graphs from neuroimaging data [30,79,80]. In this process, brain regions are represented by nodes in a graph and connections between those regions, whether anatomical (using diffusion imaging) or functional (using fMRI, electroencephalography or magnetoencephalography), are represented by edges between those nodes. In this way a graph can be constructed that characterizes the entire brain system according to its components (nodes) and their relations with one another (edges).
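The construction described in this caption can be sketched in a few lines. The example below is only an illustration under stated assumptions: the region names, the toy time series and the correlation cutoff `threshold` are all hypothetical, and thresholding the absolute Pearson correlation is just one simple way of deciding which functional edges to keep.

```python
import math

def pearson(x, y):
    """Pearson correlation between two equal-length sequences."""
    n = len(x)
    mx, my = sum(x) / n, sum(y) / n
    cov = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sx = math.sqrt(sum((a - mx) ** 2 for a in x))
    sy = math.sqrt(sum((b - my) ** 2 for b in y))
    return cov / (sx * sy)

def build_brain_graph(signals, threshold):
    """Return an undirected graph as {region: set of neighboring regions}.

    signals:   dict mapping a region name to its time series (toy data here).
    threshold: hypothetical cutoff on |correlation| for drawing an edge.
    """
    regions = sorted(signals)
    graph = {r: set() for r in regions}
    for i, a in enumerate(regions):
        for b in regions[i + 1:]:
            if abs(pearson(signals[a], signals[b])) >= threshold:
                graph[a].add(b)
                graph[b].add(a)
    return graph

# Toy "regional" time series: A and B co-fluctuate, C does not.
signals = {
    "A": [1.0, 2.0, 3.0, 4.0, 5.0, 6.0],
    "B": [1.1, 2.1, 2.9, 4.2, 4.8, 6.1],
    "C": [3.0, 1.0, 4.0, 1.0, 5.0, 2.0],
}
graph = build_brain_graph(signals, threshold=0.8)
print(graph)
```

With these toy signals only the A–B pair exceeds the cutoff, so the resulting graph has a single edge and one isolated node.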

Complexity and multiscale organization

A first step in understanding mind–brain mechanisms is to characterize what is known about the structure of the brain and its organizing principles. The brain is a complex temporally and spatially multiscale structure that gives rise to elaborate molecular, cellular, and neuronal phenomena [3] that together form the physical and biological basis of cognition. Furthermore, the structure within any given scale is organized into modules – for example anatomically or functionally defined cortical regions – that form the basis of cognitive functions that are optimally adaptable to perturbations in the external environment.

Spatial and temporal scaling

In the spatial domain alone, the brain displays similar organization at multiple resolutions (Figure 2). In addition to the spatial distributions of molecules inside neuronal and non-neuronal cells, the cells themselves are heterogeneously distributed throughout the brain [5]. Minicolumns – vertical columns through the cortical layers of the brain – contain on average 100 neurons, are roughly 30 microns in diameter and form the anatomical basis of columns, subareas (e.g. V1), areas (e.g. visual cortex), lobes (e.g. the occipital lobe), and thereby the complete cerebral cortex. Not only is the structural distribution of components heterogeneous within the cortex, but recent evidence also suggests that so, in fact, is the connectivity between those components [6–8].

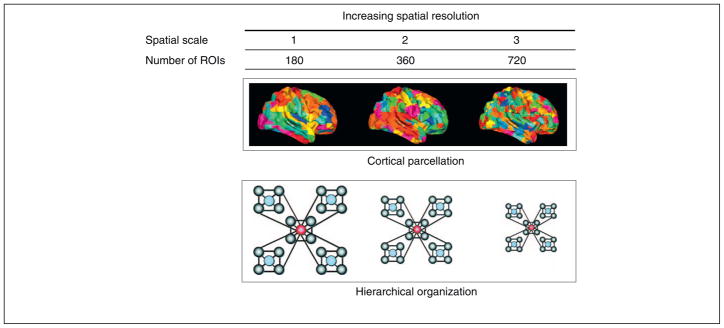

Figure 2.

Spatial scaling indicates that an organizational principle characterizes the structure of the human cortex over multiple spatial scales. An example of spatial scaling is the mathematical principle of network hierarchy [8]. Hierarchical structure is defined as a relation between network nodes whereby hubs (or highly connected nodes) are connected to nodes that are not otherwise connected to one another; in other words, the neighbors of a hub are not clustered together. This structure facilitates global communication and is thought to play a role in the modular organization of connectivity in the cortex [33,78]. Hierarchical network structure is consistently displayed at increasing spatial resolutions in which the brain is parcellated into more and more regions of interest (ROIs).
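The definition of hierarchy given here (the neighbors of a hub are not clustered together) can be checked on a toy graph by comparing each node's degree with its local clustering coefficient, that is, the fraction of pairs of its neighbors that are themselves connected. The sketch below is a minimal illustration; the graph and its node names are invented for the example and do not represent brain data.

```python
from itertools import combinations

def add_edge(graph, u, v):
    """Insert an undirected edge into a {node: set of neighbors} graph."""
    graph.setdefault(u, set()).add(v)
    graph.setdefault(v, set()).add(u)

def local_clustering(graph, node):
    """Fraction of pairs of a node's neighbors that are directly connected."""
    nbrs = graph[node]
    k = len(nbrs)
    if k < 2:
        return 0.0
    links = sum(1 for u, v in combinations(sorted(nbrs), 2) if v in graph[u])
    return 2.0 * links / (k * (k - 1))

# Hypothetical toy network: one hub joined to four separate triangles.
graph = {}
for m in "abcd":
    add_edge(graph, "hub", m + "1")
    add_edge(graph, m + "1", m + "2")
    add_edge(graph, m + "1", m + "3")
    add_edge(graph, m + "2", m + "3")

# Map each node to (degree, local clustering coefficient).
profile = {n: (len(graph[n]), local_clustering(graph, n)) for n in graph}
for node, (degree, clustering) in sorted(profile.items()):
    print(node, degree, round(clustering, 3))
```

In this toy network the hub has the highest degree and zero clustering, whereas the low-degree peripheral nodes are fully clustered, which is the inverse relation between degree and clustering that the hierarchical definition implies.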

Temporal scaling is evident across the inherent rhythms of brain activity [9–11] that vary in frequency and relate to different cognitive capacities. The highest frequency gamma band (>30 Hz) is thought to be necessary for the cognitive binding of information from disparate sensory and cognitive modalities [12] whereas the beta (12–30 Hz), alpha (8–12 Hz), theta (4–8 Hz) and delta (1–4 Hz) bands each modulate other distinct yet complementary functions [13]. A broad range of temporal scales also characterizes human cognitive functions. For example, the pattern of neuronal connections in the brain changes with learning and memory through the process of synaptic plasticity on both short (seconds to minutes) and long (hours to days to months) timescales [14]. In addition to synapses between neurons, connections between large swaths of cortex can also be altered by learning and memory processes (e.g. after long-term training in a motor skill such as juggling) as indicated by white matter fiber structure measured by diffusion imaging [15]. Furthermore, functional [16], structural [17] and connectivity [18,19] signatures of short-term development and longer-term aging are evident, supporting the view that brain organization dynamically changes over multiple temporal and spatial scales.

Modularity

Although this hierarchy of scales characterizes brain organization both functionally and structurally in both time and space, organization within a single scale also displays a nontrivial ‘nearly decomposable’ [20] or modular nature (Figure 3). That is, the entire brain system can be decomposed into subsystems or modules. In the temporal domain, example modules might be short- and long-term memory whereas in the spatial domain anatomical modules are present in cortical minicolumns or columns. Importantly, each of these modules is composed of elements that are more highly connected to other elements within the same module than to elements in other modules, thereby providing a compartmentalization that reduces the interdependence of modules and enhances robustness [21,22]. Combined with the principle of hierarchy, modularity allows for the formation of complex architectures composed of subsystems within subsystems within subsystems that facilitate a high degree of functional specificity.

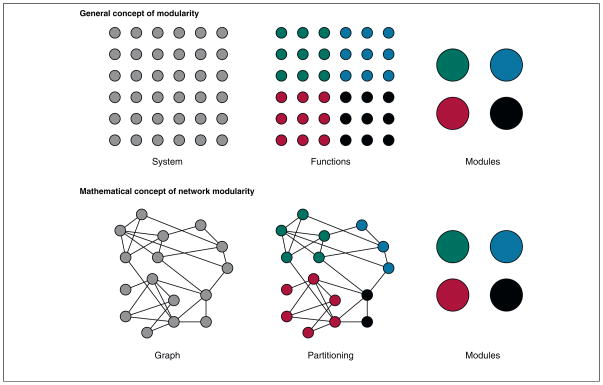

Figure 3. Modularity.

The general concept of modularity is that the components of a system can be categorized according to their functions. Components that subserve a similar function are said to belong to a single module, whereas components that subserve a second function are said to belong to another module. Modularity can also be defined mathematically in terms of network organization [30,33,70]. Nodes that share many common links are said to belong to a module, whereas nodes that do not share many links are likely to be assigned to different modules. The categorization of nodes into partitions is a process known as ‘community detection’ because – rather appropriately – it detects communities or modules composed of highly connected nodes and delineates the boundaries between those communities. This categorization procedure is an important current area of network science research.
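One widely used way to make this mathematical definition concrete is the Newman–Girvan modularity score Q, which sums over modules the fraction of edges falling inside the module minus the fraction expected from node degrees alone. Using Q here is an assumption made for illustration (the caption does not say which definition the cited studies use), and the six-node graph and both partitions below are hypothetical.

```python
def modularity(edges, community_of):
    """Newman-Girvan modularity Q of a partition of an undirected graph.

    Q = sum over communities c of  L_c / m - (d_c / (2 m)) ** 2
    where m is the number of edges, L_c the number of edges inside c,
    and d_c the summed degree of the nodes assigned to c.
    """
    m = len(edges)
    internal = {}    # community -> number of within-community edges
    degree_sum = {}  # community -> total degree of its nodes
    for u, v in edges:
        cu, cv = community_of[u], community_of[v]
        degree_sum[cu] = degree_sum.get(cu, 0) + 1
        degree_sum[cv] = degree_sum.get(cv, 0) + 1
        if cu == cv:
            internal[cu] = internal.get(cu, 0) + 1
    return sum(internal.get(c, 0) / m - (d / (2 * m)) ** 2
               for c, d in degree_sum.items())

# Two triangles joined by a single bridging edge (2, 3).
edges = [(0, 1), (1, 2), (0, 2), (3, 4), (4, 5), (3, 5), (2, 3)]

# A partition that follows the triangles, and one that cuts across them.
good = {0: "left", 1: "left", 2: "left", 3: "right", 4: "right", 5: "right"}
bad = {0: "x", 1: "y", 2: "x", 3: "y", 4: "x", 5: "y"}

q_good = modularity(edges, good)
q_bad = modularity(edges, bad)
print(round(q_good, 3), round(q_bad, 3))
```

Community detection methods search for the partition that scores well on a quality function of this kind; the sketch only evaluates two fixed partitions and does not perform that search.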

In addition to enhancing robustness and specificity, modularity facilitates behavioral adaptation [21] because each module can both function and change its function without adversely perturbing the remainder of the system. The principle of modularity, by reducing constraints on change [21,23,24], therefore forms the structural basis on which subsystems can evolve and adapt [25] in a highly variable environment [26]. Although a limited conception of modularity of function has influenced neuroscientific thought since the early years of phrenology [27], the more contemporary understanding admits the nonarbitrary, nonrandom, nonhomogeneous nature of the brain as evidenced by soft boundaries between coherently operating groups of cortical and subcortical brain regions such as the motor network and the visual network.

One experimental approach that has recently highlighted the modular nature of cortical function is resting state functional magnetic resonance imaging (fMRI), in which spontaneous low frequency fluctuations of the blood-oxygen-level dependent (BOLD) signal in specific groups of brain regions are more highly correlated with one another than with fluctuations of regions in other groups [28]. These spatially specific modules have temporally dynamic interconnections both within and between themselves and include the control, visual, auditory, default mode, dorsal attention and sensorimotor networks [29].

More formal descriptions of modular architecture have become possible with the recent application of complex network theory to neuroimaging data [30], which has provided direct evidence for both functional [31] and structural [7,8,32] hierarchical modularity of human brain connectivity [33,34]. Within these modular structures, brain regions perform distinct roles either as hubs of high connectivity or as provincial nodes of local processing. This stratification of regional roles is in fact evident in both structural [6] and functional [35] connectivity networks and might have neurophysiological correlates: each region of the brain is differentially energetically active, sustaining varying amounts of synapse development and redevelopment or plasticity [36].

Anatomically, this organizational principle of hierarchical modularity is thought to be compatible with an evolutionary pressure for the minimization of energy consumption in developing and maintaining wiring [7,37]. In fact the large majority of the energy budget in the human brain is used for the function of synapses [38]. The physicality of wiring constraints is also compatible with the inherent spatiality of the observed connectivity: regions close together in physical space interact strongly [39] whereas long-range anatomical connections or functional interactions connect disparate modules [7,8,32]. In fact, the folding of the cortical sheet as well as its placement on the outside of the brain allows the complex wiring diagram of the brain to contain short-range energetically efficient connections [40]. In light of the physical geometry of the brain and its connections, an interesting complementary avenue of research will be to study the inherent metric geometry of the brain’s connectivity (independent of physical constraints) as measured by, for example, topological network curvature [41]: a description of the inherently curved space in which a network’s organization exists.

Relationship between structure and function

Mounting evidence suggests that the physical constraints on the anatomical organization of the human brain constrain its function. For example, two regions of the brain that are coherently active (functionally connected) with one another are often connected by a direct white matter pathway [6,42]. The Human Connectome Project (http://www.humanconnectomeproject.org/) [43] parallels similar efforts in the mouse [44,45], worm [39] and fly [46,47] in its attempt to comprehensively map the wiring diagram of the human brain at increasing levels of spatial resolution (Figure 4). Importantly, this and similar efforts in future will need to be supplemented by investigations into neuromodulatory networks acting in parallel with structural connectivity networks or wiring diagrams but which are independent predictors of brain function [48].

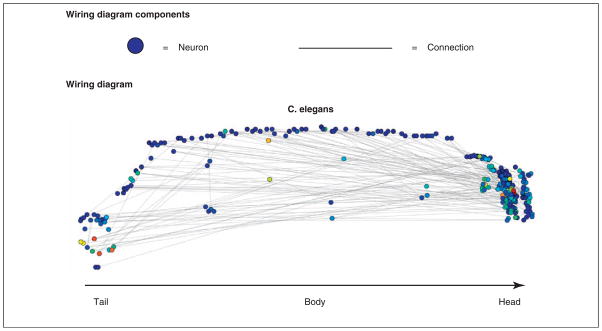

Figure 4. Wiring diagram of Caenorhabditis elegans.

The wiring diagram of the nematode worm, C. elegans, is composed of nodes (neurons) and connections between those nodes (electrical and chemical synapses). The worm is known to contain 302 neurons and here only a small fraction of the connections known to exist between these neurons are displayed (the full connectivity would be too dense to visualize clearly in this way). The color of each node represents the number of connections emanating from that node (red indicating many and blue indicating few). The analysis of wiring diagrams can be used to assess important organizational principles of biological system structure and might provide insight into system function [46].

The mapping of neuronal connectomes from many species represents an extensive effort in data collection and will require significant advances in data acquisition [49], processing [50] and neuroinformatics [51]. The resulting wiring diagrams, however, can be very useful for exploratory analysis to characterize the organizational principles underlying structural connectivity (e.g. [6,8]) or to test specific hypotheses about the structural underpinnings of function [52]. However, although the functional interpretation of the connectome is potentially immensely powerful it is also fraught with caveats. It is plausible that structural connectivity might enable us to predict function but it is not yet clear how to make that prediction. Efforts to this end are complicated by an inherent functional degeneracy that might facilitate robustness and adaptability [53]: the brain performs many (degenerate) functions on the identical anatomical substrate. Furthermore, the cortex is characterized by multipotentiality: anatomical structures specific to vision in the seeing adult might develop into auditory processing units in the early blind [54]. These characteristics of the human brain suggest that anatomical connectivity alone does not uniquely specify the global functionality of a circuit or brain system. Rather structure–function mappings are many-to-many and inherently degenerate because they depend on both network interactions and context. Therefore, although a one-to-one relationship between structure and function might be inconsistent with our current understanding of the brain, a more complicated emergence of function from multiscale structure is plausible [55].

Emergence

Multiscale organization is one hallmark of complex systems and provides the structural basis for another defining phenomenon; this is the concept of emergence in which the behavior, function and/or other properties of the system (e.g. consciousness or the subjective features of consciousness – qualia) are more than the sum of the system’s parts at any particular level or across levels [4]. In fact, such system properties can emerge from complex patterns of underlying subsystems.

Perhaps most simply, emergence – of consciousness or otherwise – in the human brain can be thought of as characterizing the interaction between two broad levels: the mind and the physical brain. To visualize this dichotomy, imagine that you are walking with Leibniz through a mill [56]. Consider that you can blow the mill up in size such that all components are magnified and you can walk among them. All that you find are mechanical components that push against each other but there is little if any trace of the function of the whole mill represented at this level. This analogy points to an important disconnect in the mind–brain interface: although the material components of the physical brain might be highly decomposable, mental properties seem to be fundamentally indivisible [4].

Although emergence can be conceptualized most broadly as occurring between the two levels of the mind and the brain, emergence might be a more fundamental property of the human brain system occurring between multiple physical and functional levels [57]. This idea represents a fundamental paradigm shift away from the so-called reductionist perspective in which the strongest explanatory power lies at the lowest level of investigation: that is, system phenomena are explained by breaking or reducing the system down into molecules, atoms, particles and then subparticles. However, biological systems such as the brain are fundamentally nonreducible in the sense that nonfundamental components have significant causal power: causation seems to occur both upwards and downwards between multiple levels (either neighboring or distant) of the system [58], creating a complementarity or mutually constraining environment of mental and physical functions [59]. This nonreducibility of the brain might be predicated on its inherent organization, which is not a simple sum of the parts with which it is organized. Nonfundamental causality, unlike correlation or determinism [60], allows for mutual manipulability over levels and multiple realizability of system function. It also provides a framework in which to place human responsibility [61] and relate neuroscientific advances to ethics and law [59,62], a process that poses significant difficulties in the context of deterministic reductionism.

The study of altered minds is perhaps one of the greatest tools currently available to assess the mind–brain interface and the characteristics of emergence. Indeed, some of the strongest lines of evidence for nonfundamental causality in the human brain come from psychiatry, for example in the treatment of clinical depression. The most effective therapy for depression combines the administration of selective serotonin reuptake inhibitors (SSRIs) (pharmacotherapy, i.e. molecular intervention) and cognitive behavioral therapy (psychotherapy, i.e. mental intervention). Interestingly, evidence suggests that neither intervention alone is as effective as the two interventions together [63]. These results support the view that intervention at the phenomenological level of thought processes and beliefs is an appropriate co-solution to an inherently neurophysiological abnormality.

Finally, these properties of emergence and causation are inherent to complex systems that show multiscale organization. In addition to emergence and multiscale structure, however, complex systems often display so-called ‘threshold behavior’: a phenomenon studied for example in the context of the behavior of finite automata [64]. Von Neumann noted that simple machines might generate simpler machines. However, at a given threshold of complexity, a machine might generate a machine as complex as itself. Furthermore, above that threshold, a machine might generate another machine more complex than itself. The human brain is an example of a machine that can generate a plethora of other machines with functions other (and possibly more complicated) than its own. Human-made machines can see farther, can hear more sounds and can compute more swiftly than their makers, suggesting that human brains display a critical level of complexity consistent with their emergent functions.

Types of emergence

Understanding the brain depends significantly on understanding its emergent properties. What type of emergence characterizes the brain system? Are different types of emergence present between different levels of the multiscale system? When is the interaction between levels simply correlational and when is it causal?

In describing emergence, several different categories are often used including substance (a baby emerges from a mother), conjunction (two parts can perform a different function than either part separately), property (wetness is not a property of a molecule but of a group of molecules), structural (three lines make a triangle), functional (letters form words) and real (a cell is alive unlike the molecules of which it is made) emergence. The mind emerges from the brain in a way that is arguably unlike any of these weak types of emergence. The mind–brain emergence therefore requires a tailored definition. Mental states emerge from physical states by strong emergence, that is in a nonreducible and highly dependent manner: mental properties do not exist or change unless physical properties exist or change.

Measurement of emergence

Empirical measurement of the mind–brain continuum might be beneficial in characterizing mind–brain emergence. Neuroimaging tools such as fMRI provide coarse-grained statistical evidence for relationships between (indirect) measurements of physical and mental states [28,42]. However, in most cases, these studies provide information about correlations between mental and physical states but do not necessarily provide insight into the underlying emergent phenomena.

The interpretation of results from this experimental line of inquiry is complicated by the fact that it is not yet understood whether the mapping from mind states to brain states is one-to-one (one mind state corresponds to one brain state), or – like genotypes and phenotypes – is one-to-many (one brain state corresponds to many mental states or vice versa). Without such a map, there is no way to know how a human traverses mind-space as it passes through brain states.

Bidirectional causation and complementarity

Emergence is characterized by a higher-level phenomenon stemming from a lower system level; that is, emergence is upward. However, an important property of the brain, as opposed to some other complex systems, is that emergent phenomena can feed back to lower levels, causing lower-level changes through what is called downward causation. The combination of upward emergence and downward causation suggests a simple bidirectionality or more nuanced mutual complementarity [59] that adds to the complexity of the system, and underscores the fact that the emergence of mental properties cannot be understood using fundamental reductionism.

Defining causation for the mind–brain system, however, might not be simple. The mind–brain interface might be characterized by complex conditional causation in which causes are neither necessary nor sufficient under all circumstances. The lighting of a match can serve as an example. The strike of a match does often light a match. However, it is not necessarily a sufficient cause for the lighting of the match (e.g. the match was wet) and neither is it a necessary cause (e.g. there are other ways to light a match). Furthermore, it is important to describe cause and effect at the right level of generality and to determine which levels of data are pertinent. Because a high-level causal phenomenon can be realized in many different ways, the range of realizers that can lead to the same or similar effects must be determined. Clarifying these variables might enable a better understanding of causation and complementarity between the mind and the body, and potentially might facilitate the formulation of experiments for testing such inter-level causal relations.

Investigative tools

Although it is important to philosophically define emergence, the question of how the mind and the brain relate to one another is one for which there are few quantitative tools and researchers often turn to simple intuitive metaphors. To date, efforts have touched on the theoretical application of physics and complexity theory, the construction of physical models, and the development of both technical and social metaphors. However, relatively neglected areas of inquiry that might prove highly fruitful include the analysis of evolutionary pressures on brain development and the construction of detailed multiscale multidisciplinary theories and models that coalesce current distributed patterns of knowledge.

Fields

Initial work has capitalized on principles derived from several branches of classical physics in which a common quantitative definition of emergence is related to the concept of phase transitions: thresholds above which the system displays a new characteristic behavior. A classic example from statistical physics is the Ising model, which, although it has little to do with neurons and everything to do with electron spins, has served as a scientific metaphor for the mind–brain phenomenon.
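To make the notion of a phase transition concrete, the following is a minimal sketch of a two-dimensional Ising model simulated with the Metropolis algorithm. The lattice size, temperatures, number of sweeps and random seed are arbitrary illustrative choices, and the units assume a coupling constant and Boltzmann constant of 1; nothing here is specific to neurons.

```python
import math
import random

def simulate_ising(size, temperature, sweeps, seed=0):
    """Mean absolute magnetization per spin of a 2D Ising lattice.

    Assumes coupling J = 1, k_B = 1, periodic boundaries and a fully
    aligned starting state; averages over the second half of the run.
    """
    rng = random.Random(seed)
    spins = [[1] * size for _ in range(size)]
    total, measured = 0.0, 0
    for sweep in range(sweeps):
        for _ in range(size * size):
            i, j = rng.randrange(size), rng.randrange(size)
            neighbors = (spins[(i + 1) % size][j] + spins[(i - 1) % size][j]
                         + spins[i][(j + 1) % size] + spins[i][(j - 1) % size])
            delta_e = 2 * spins[i][j] * neighbors  # energy cost of flipping
            # Metropolis rule: always accept downhill moves, otherwise
            # accept with probability exp(-delta_e / T).
            if delta_e <= 0 or rng.random() < math.exp(-delta_e / temperature):
                spins[i][j] = -spins[i][j]
        if sweep >= sweeps // 2:
            total += abs(sum(map(sum, spins))) / (size * size)
            measured += 1
    return total / measured

ordered = simulate_ising(size=10, temperature=1.5, sweeps=200)
disordered = simulate_ising(size=10, temperature=5.0, sweeps=200)
print(round(ordered, 2), round(disordered, 2))
```

Below the critical temperature (about 2.27 in these units for an infinite square lattice) the spins remain collectively aligned, whereas above it the alignment is lost: an aggregate property that no single spin possesses. The sketch illustrates the metaphor only and is not a model of neural tissue.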

However, to establish more than a metaphor might require inherently new physics to deal with functional (thought) states of aggregate systems. Complementary advances in the newer fields of complexity science and network theory might also shed light on the emergence debate. Importantly, complexity theory attempts to provide a compact representation of the dynamics of an aggregate adaptive system by collapsing the system’s many degrees of freedom into only those necessary for a description of the principal dynamics. Complex systems that can be distilled in this way are relatively robust to environmental perturbations. Network theory, by contrast, provides a new framework in which to study the organizational structure of complex systems made up of many interacting parts and can therefore be thought of as an extension of statistical physics. Recent applications of network theory have focused on descriptive statistics of the brain’s structural and functional organization [30], which can provide insight into the fundamental principles of mind–brain phenomena.

Additional insights (Box 2) will need to be provided by dynamic network models of brain development and function that can be linked to underlying cellular mechanisms such as synaptogenesis, proliferation, pruning, retraction and absorption. These network models could vary from the simple and stylized to the multiscale and complex [65]. In both exploratory and model-based investigations, empirical results are necessarily compared to stated null models that are often based on purely random organizational structure and function. However, the development of more nuanced null models in future will provide the basis for specific hypothesis testing of neurophysiological mechanisms of brain and behavior.
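The comparison against a purely random null model mentioned above can be sketched as follows. This is a hedged illustration, not a method taken from the cited work: the "observed" network is a hypothetical ring lattice, the statistic is mean local clustering, and the null ensemble consists of random graphs with the same number of nodes and edges.

```python
import random
from itertools import combinations

def mean_clustering(n, edges):
    """Average local clustering coefficient over nodes 0..n-1."""
    nbrs = {i: set() for i in range(n)}
    for u, v in edges:
        nbrs[u].add(v)
        nbrs[v].add(u)
    total = 0.0
    for i in range(n):
        k = len(nbrs[i])
        if k >= 2:
            links = sum(1 for u, v in combinations(nbrs[i], 2) if v in nbrs[u])
            total += 2.0 * links / (k * (k - 1))
    return total / n

def random_null(n, n_edges, rng):
    """Random graph with the same node and edge counts as the observed one."""
    return rng.sample(list(combinations(range(n), 2)), n_edges)

n = 20
# Hypothetical "observed" network: each node linked to its 2 nearest
# neighbors on either side of a ring.
observed_edges = {tuple(sorted((i, (i + step) % n)))
                  for i in range(n) for step in (1, 2)}
observed = mean_clustering(n, observed_edges)

rng = random.Random(1)
null_values = [mean_clustering(n, random_null(n, len(observed_edges), rng))
               for _ in range(200)]
null_mean = sum(null_values) / len(null_values)
# Empirical p-value: share of null graphs at least as clustered as observed.
p_value = sum(v >= observed for v in null_values) / len(null_values)
print(round(observed, 3), round(null_mean, 3), p_value)
```

A more nuanced null model would preserve additional properties of the observed network, for example each node's degree, so that a significant result points to a more specific organizational mechanism than mere departure from randomness.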

Box 2. Crucial frontiers.

Structure–function

To understand mind–brain mechanisms it is necessary to characterize relations between multiple levels of the multiscale human brain system, including the interactions between temporal scales [55,69] and the relationship between the anatomical organization and cognitive function [42,65]. Complex network theory provides one important context in which to address this question: connectivity at the anatomical level can be directly and mathematically compared to connectivity at the functional level [82], and consistent organizational principles can be identified.

Statics to dynamics

Although recent applications of complex network theory to neuroimaging data have provided unique insights into brain structure and function, the majority of studies to date have focused on representing the brain system as a static network. A crucial advance in this area will require an assessment of dynamic changes in that architecture with cognitive function (e.g. see [83] for a potential mathematical framework to assess dynamic changes in modular organization and [84] for a recent application in the context of human learning).

Dynamical systems theory

To complement a focus on an understanding of the underlying topological architecture of cortical systems provided by complex network theory, it is important to characterize the function of the brain on this architecture as a dynamical system [65,85]. For example, recent work in this area has suggested that the brain functions as an optimal controller [86] that uses feedforward modeling [87,88] for precise motor control [89].

Metaphors

In addition to the development of theoretical models, there is a need to identify principles that are shared across systems as well as those that are unique to a given system. In this context, we often turn to both biological and nonbiological metaphors of the brain.

For example, large-scale physical models can be constructed that attempt to mimic brain organization or structure. Such efforts might range from the construction of a computer whose connections mimic those of the brain (e.g. neuromorphic computing [66]), to the construction of a robot whose behavior mimics that of a human. In both cases, the instantiation of current hypotheses regarding mind–brain mechanisms can be directly tested for realistic behaviors. For example, the recent dynamical systems engineering of a brain-inspired robot with a continuous sensory motor flow experience highlighted several fundamental organizational principles necessary for complex motor behaviors [67]. To produce behavioral complexity, the system required a hierarchically modular architecture in which each module operated at a different time scale, sparsity of connections between neurons and between modules, and feedback loops to allow learning to occur. The distributed architecture also allows for impressive generalizability, where the robot can respond to commands it has not heard before if they are made up of isolated concepts with which it is familiar [68]. Importantly, these properties are consistent with current understanding of human brain structure and the role of cross-frequency couplings of neuronal oscillations [69], and therefore support current hypotheses regarding brain–behavior mechanisms.

The concept that emergence of complex behaviors might occur through the interaction of multiple temporal scales is one that, perhaps unsurprisingly, is not confined to neuroscience. Recent work characterizing power structures in animal societies suggests that emergence, or the development of aggregates, is a direct consequence of temporally dependent system uncertainty which, in social systems, can be based on misaligned interests [70]. The social system in these animals is composed of an interaction network, a fight network and a submissive–dominant signal network that change on short, medium and long temporal scales, respectively. The submissive–dominant signal given by an animal to other animals reduces uncertainty in the social system, defines that animal’s place in the emergent power structure, and therefore allows for logical tuning and adaptation of the system [71]. The fact that this signal occurs over longer time scales allows the decision to create that signal to be based on a long history or memory of animal–animal interactions; by contrast, decisions based on faster variables might only increase system uncertainty and thereby decrease both predictive and adaptive power. Some neurophysiological evidence for the emergence of slow time scales from faster time scales does exist: for example, when V1 is recruited, retinal response times might occur at 40 Hz, whereas when higher order association areas are recruited, responses might drop to 5 Hz. Further, given the implications of low-frequency oscillations in the BOLD signal, it is plausible that slower time scales are important for the understanding of cognitive function and control. However, further work is necessary to elucidate the possible convergence between these two disparate systems.

Historically, many non-neurophysiological systems have been used as explanatory metaphors for the brain, perhaps the most recent examples of which are the computer and the Internet [72]. Mind–brain dualism has been likened to the relation between software and hardware in a computer system. Owing to recent advances in diffusion-weighted imaging, the large-scale wiring diagram of the human brain has been estimated and its organizational structure has been directly compared to that of computer chips, specifically very large-scale integrated circuits [7]. Striking similarities are evident, suggesting that both technological innovation and natural selection have discovered similar solutions to the problems of wiring efficiency in information processing systems.

Although the majority of metaphors have been drawn from systems that are constructed (e.g. robots, computers, the Internet and social systems), it is possible that some important insights can only be gleaned by examining the system that is doing the constructing. That is, to understand brains it might be necessary to understand the evolutionary forces of natural selection and the environment [73]. Recalling von Neumann’s observations [64] (see above), it is plausible that these forces are at least as complicated as the brain itself. In fact, mounting evidence supports the combined notions that the brain’s development is in part a form of selection dynamic (e.g. neural Darwinism), whereas selection itself is a form of development (e.g. niche construction) [74]. The complexity of these evolutionary forces can be reconciled with the intuitive simplicity of natural selection by viewing evolution in essence as the selection of construction rules. In this case, selection must be complex enough to discriminate among the products of these rules.

One area of evolutionary theory that might be particularly useful in understanding the human brain is multilevel selection theory [75], which seeks to explain how payoffs to members of a fused aggregate can overcome the advantage of fissioning into independent replicators. A canonical example is the evolution of multicellular life and the subsequent emergence of specialized cell types, many of which have forfeited the ability to replicate. It has been hypothesized that major transitions of this sort are associated with novel mechanisms for storing and transmitting information. Increasingly inclusive ‘levels’ are able to capture and then transmit information that grows nonlinearly in the number of individual components. In this way, higher levels can offset the replicative cost of social living. It is reasonable, therefore, that one way to understand the brain, one of the most complex multicellular structures in biology, is in terms of a nonlinear increase in the efficiency of information processing. The amount of adaptive information available to cells in which subpopulations specialize in sensing, integration and mobility is expected to vastly outweigh the information available to individual cells that must accumulate information independently. A better understanding of the sequence of selective pressures leading to these advantages should lead to an improved understanding of the functional role of a brain and its relationship to an information-rich environment.

Models and theory

Despite the usefulness of theoretical and physical metaphors of the brain, the drawing of links between disparate systems has its limitations and confounds. These are perhaps most troubling at the more detailed, less lofty levels of description. The field of neuroscience is flooded with highly detailed data produced by a plethora of different measurement techniques all measuring the same system. Although researchers often point to studies using other measurement streams, spatial resolutions, subsystems, species or animal models to support their own results, the empirical compatibility of these data is often tenuous. It is difficult to parse whether it is the same phenomenon that is measured, but with different tools, or a different phenomenon altogether. This predicament stems from the fact that the relations between present technologies, or between the data that they provide, are not well understood, and is further exacerbated by the lack of detailed neurophysiological models that combine data and results from many experimental modalities [76], investigators and scientific communities. For example, in the study of visual plasticity, a software modeling environment could be developed in which information from single unit physiology, informed by molecular pathways and anatomical connectivity derived from tract tracing or diffusion imaging, could be used to predict the expected BOLD response. In a multidimensional endeavor of this size, it might not be clear at the outset what the lowest relevant level of data should be; what is imperative is that connections exist between levels to provide a means of quantifying whether results are consistent with standards of the field and collective understanding. Similarly, issues of neuroinformatics [51], storage, computational power and resources, and common standards will become increasingly important but do not negate the necessity of modeling itself. The success of such an endeavor (similar to that of the Large Hadron Collider or the Human Genome Project) will depend on consistent collaborative efforts between diverse investigators to a degree unprecedented in the field of neuroscience [77].
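One small link in such a modeling chain, going from a neural activity time course to a predicted BOLD response, can be sketched as follows. This is an illustration only and not a method proposed in the text: it assumes a linear model in which activity is convolved with a double-gamma hemodynamic response function, and the shape parameters (6 and 16), the undershoot ratio (1/6), the sampling step and all function names are assumptions chosen to match commonly used defaults.

```python
import math


def double_gamma_hrf(t, peak_shape=6.0, under_shape=16.0, under_ratio=1.0 / 6.0):
    """Double-gamma hemodynamic response at time t (seconds).

    Each term is a gamma density with unit scale; the second term models the
    late undershoot. All parameter values are assumptions for illustration.
    """
    if t < 0:
        return 0.0
    peak = t ** (peak_shape - 1) * math.exp(-t) / math.gamma(peak_shape)
    undershoot = t ** (under_shape - 1) * math.exp(-t) / math.gamma(under_shape)
    return peak - under_ratio * undershoot


def predict_bold(neural_activity, dt=0.5, hrf_duration=32.0):
    """Predict a BOLD time course by discrete convolution with the HRF.

    `neural_activity` is a list of samples spaced `dt` seconds apart.
    """
    kernel = [double_gamma_hrf(i * dt) for i in range(int(hrf_duration / dt))]
    bold = []
    for n in range(len(neural_activity)):
        total = 0.0
        for k, h in enumerate(kernel):
            if n - k < 0:
                break  # no activity before the start of the recording
            total += h * neural_activity[n - k]
        bold.append(total * dt)
    return bold


if __name__ == "__main__":
    dt = 0.5
    # Hypothetical input: a 2 s burst of activity followed by 30 s of silence.
    activity = [1.0] * 4 + [0.0] * 60
    bold = predict_bold(activity, dt=dt)
    peak_time = bold.index(max(bold)) * dt
    print("predicted BOLD peak at", peak_time, "s after burst onset")
```

The point of the sketch is the structure rather than the numbers: each level of description (here, activity and hemodynamics) is connected to the next by an explicit, testable mapping, so that a prediction at one level can be checked against data at another.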

Although extensive detailed neurophysiological models will be important for further understanding of the brain, perhaps an even more pressing issue is the lack of computational theoretical neuroscience. The majority of work being performed in the field falls largely within the confines of data analysis. However, until consistent insightful theories are posited for the many larger-scale questions of mind–brain mechanisms, progress will be limited.

Concluding remarks

Neuroscience desperately needs a stronger theoretical framework to solve the problems that it has taken on for itself. Complexity science has been posited as a potentially powerful explanation for a broad range of emergent phenomena in human neuroscience [78]. However, it is still unclear whether or not a program could be articulated that would develop new tools for understanding the nervous system by considering its inherent complexities. Is it possible to answer the questions discussed in this article? Are current tools the right ones? Is it necessary to draw from other quantitative fields or consider other metaphors? What theories need to be developed to guide further research? Although many questions remain unanswered, the next few years will likely see a revolution in the study of the mind–brain interface as tools from mathematics and complex systems, which have as yet only scratched the surface, take hold of the field of neuroscience.

Acknowledgments

This document grew out of a series of thoughtful discussions with Walter Sinnott-Armstrong, Jessica C. Flack, Scott T. Grafton, David C. Krakauer, George R. Mangun, William T. Newsome, Marcus E. Raichle, Daniel N. Rockmore, H. Sebastian Seung, Olaf Sporns, Jun Tani, and Brian A. Wandell at a two-day workshop held in Santa Barbara, CA on November 3–4, 2010.

Glossary

- Complex network theory

a modeling framework that defines a complex system in terms of its subcomponents and their interactions, which together form a network

- Complex system

a system whose overall behavior can be characterized as more than the sum of its parts

- Connectome

a complete connectivity map of a system. In neuroscience, the structural connectome is defined by the anatomical connections between subunits of the brain whereas the functional connectome is defined by the functional relations between those subunits

- Emergence

the manner in which complex phenomena arise from a collection of relatively simple interactions between system components

- Ising model

a historically important mathematical model of a phenomenon in physics known as ferromagnetism that displays several characteristics of complex systems including phase transitions and the emergence of collective phenomena

- Modularity

a property of a system that can be decomposed into subcomponents or ‘modules’, which can perform unique functions and can adapt or evolve to external demands

- Nonfundamental causality

the concept that parts of a system that are not its smallest parts (i.e. nonfundamental) can have significant causal power in terms of system function, facilitating mutual manipulability between multiple levels of the system and multiple realizability of system function

- Reductionism

in philosophy, a view that a complex system can be modeled and understood simply by reducing the examination to a study of the system’s constituent parts

- Scaling

the term scaling indicates a similarity of some organizational structure or phenomenon across multiple scales of a system. Spatial scaling therefore indicates that a principle or phenomenon is consistently displayed at multiple spatial resolutions. Temporal scaling indicates that a principle or phenomenon is consistently displayed at multiple temporal resolutions

- Wiring diagram

a map of the hard-wired connectivity of a system. In neuroscience, the term is usually used to refer to the map of connections (e.g. synapses) between neurons specifically but can also be used to refer to the map of larger scale white matter connections between brain regions
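As an illustration of the Ising model entry above, the following minimal sketch simulates a two-dimensional Ising model with the Metropolis algorithm and compares the magnetization at a low and a high temperature. The lattice size, number of sweeps, units (coupling and Boltzmann constant set to 1, no external field) and function name are assumptions chosen for illustration. For the infinite square lattice the phase transition occurs near a temperature of 2.27 in these units.

```python
import math
import random


def simulate_ising(size=10, temperature=2.0, sweeps=200, seed=0):
    """Metropolis simulation of a 2D Ising model with periodic boundaries.

    Returns the mean absolute magnetization per spin, averaged over the
    second half of the sweeps. Coupling J = 1, k_B = 1, no external field.
    """
    rng = random.Random(seed)
    spins = [[1] * size for _ in range(size)]  # start fully aligned
    samples = []
    for sweep in range(sweeps):
        for _ in range(size * size):
            i = rng.randrange(size)
            j = rng.randrange(size)
            neighbors = (spins[(i + 1) % size][j] + spins[(i - 1) % size][j]
                         + spins[i][(j + 1) % size] + spins[i][(j - 1) % size])
            # Energy change if spin (i, j) is flipped.
            delta_e = 2 * spins[i][j] * neighbors
            if delta_e <= 0 or rng.random() < math.exp(-delta_e / temperature):
                spins[i][j] = -spins[i][j]
        if sweep >= sweeps // 2:
            total = sum(sum(row) for row in spins)
            samples.append(abs(total) / (size * size))
    return sum(samples) / len(samples)


if __name__ == "__main__":
    print("|m| at T = 1.0:", simulate_ising(temperature=1.0))
    print("|m| at T = 5.0:", simulate_ising(temperature=5.0))
```

Each spin interacts only with its four nearest neighbors, yet at low temperature the lattice as a whole remains ordered, whereas at high temperature the order is lost. This collective behavior arising from local interactions is what makes the model a standard example of emergence.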

References

- 1. Nunez PL. Brain, Mind, and the Structure of Reality. Oxford University Press; 2010.

- 2. Sporns O. Networks of the Brain. MIT Press; 2010.

- 3. Bullmore E, et al. Generic aspects of complexity in brain imaging data and other biological systems. Neuroimage. 2009;47:1125–1134. doi: 10.1016/j.neuroimage.2009.05.032.

- 4. Gazzaniga MS. Neuroscience and the correct level of explanation for understanding mind. Trends Cogn Sci. 2010;14:291–292. doi: 10.1016/j.tics.2010.04.005.

- 5. Cajal SR. Histology of the Nervous System of Man and Vertebrates. Oxford University Press; 1995.

- 6. Hagmann P, et al. Mapping the structural core of human cerebral cortex. PLoS Biol. 2008;6:e159. doi: 10.1371/journal.pbio.0060159.

- 7. Bassett DS, et al. Efficient physical embedding of topologically complex information processing networks in brains and computer circuits. PLoS Comput Biol. 2010;6:e1000748. doi: 10.1371/journal.pcbi.1000748.

- 8. Bassett DS, et al. Conserved and variable architecture of human white matter connectivity. Neuroimage. 2011;54:1262–1279. doi: 10.1016/j.neuroimage.2010.09.006.

- 9. He BJ, et al. The temporal structures and functional significance of scale-free brain activity. Neuron. 2010;66:353–369. doi: 10.1016/j.neuron.2010.04.020.

- 10. Kitzbichler MG, et al. Broadband criticality of human brain network synchronization. PLoS Comput Biol. 2009;5:e1000314. doi: 10.1371/journal.pcbi.1000314.

- 11. Bassett DS, et al. Adaptive reconfiguration of fractal small-world human brain functional networks. Proc Natl Acad Sci USA. 2006;103:19518–19523. doi: 10.1073/pnas.0606005103.

- 12. Herrmann CS, et al. Human gamma-band activity: a review on cognitive and behavioral correlates and network models. Neurosci Biobehav Rev. 2010;34:981–992. doi: 10.1016/j.neubiorev.2009.09.001.

- 13. Uhlhaas PJ, et al. The role of oscillations and synchrony in cortical networks and their putative relevance for the pathophysiology of schizophrenia. Schizophr Bull. 2008;34:927–943. doi: 10.1093/schbul/sbn062.

- 14. Abbott LF, Regehr WG. Synaptic computation. Nature. 2004;431:796–803. doi: 10.1038/nature03010.

- 15. Scholz J, et al. Training induces changes in white-matter architecture. Nat Neurosci. 2009;12:1370–1371. doi: 10.1038/nn.2412.

- 16. Lippe S, et al. Differential maturation of brain signal complexity in the human auditory and visual system. Front Hum Neurosci. 2009;3:48. doi: 10.3389/neuro.09.048.2009.

- 17. Madden DJ, et al. Cerebral white matter integrity and cognitive aging: contributions from diffusion tensor imaging. Neuropsychol Rev. 2009;19:415–435. doi: 10.1007/s11065-009-9113-2.

- 18. Meunier D, et al. Age-related changes in modular organization of human brain functional networks. Neuroimage. 2008;44:715–723. doi: 10.1016/j.neuroimage.2008.09.062.

- 19. Fair DA, et al. Functional brain networks develop from a ‘local to distributed’ organization. PLoS Comput Biol. 2009;5:e1000381. doi: 10.1371/journal.pcbi.1000381.

- 20. Simon H. The architecture of complexity. Proc Am Phil Soc. 1962;106:467–482.

- 21. Kirschner M, Gerhart J. Evolvability. Proc Natl Acad Sci USA. 1998;95:8420–8427. doi: 10.1073/pnas.95.15.8420.

- 22. Felix MA, Wagner A. Robustness and evolution: concepts, insights and challenges from a developmental model system. Heredity. 1998;100:132–140. doi: 10.1038/sj.hdy.6800915.

- 23. Kashtan N, Alon U. Spontaneous evolution of modularity and network motifs. Proc Natl Acad Sci USA. 2005;102:13773–13778. doi: 10.1073/pnas.0503610102.

- 24. Schlosser G, Wagner GP, editors. Modularity in Development and Evolution. The University of Chicago; 2004.

- 25. Wagner GP, et al. The road to modularity. Nat Rev Genet. 2007;8:921–931. doi: 10.1038/nrg2267.

- 26. Lipson H, et al. On the origin of modular variation. Evolution. 2002;56:1549–1556. doi: 10.1111/j.0014-3820.2002.tb01466.x.

- 27. Fodor JA. Modularity of Mind: An Essay on Faculty Psychology. MIT Press; 1983.

- 28. Raichle ME, Snyder AZ. A default mode of brain function: a brief history of an evolving idea. Neuroimage. 2007;37:1083–1090. doi: 10.1016/j.neuroimage.2007.02.041.

- 29. Damoiseaux JS, et al. Consistent resting-state networks across healthy subjects. Proc Natl Acad Sci USA. 2006;103:13848–13853. doi: 10.1073/pnas.0601417103.

- 30. Bullmore E, Sporns O. Complex brain networks: graph theoretical analysis of structural and functional systems. Nat Rev Neurosci. 2009;10:186–198. doi: 10.1038/nrn2575.

- 31. Meunier D, et al. Hierarchical modularity in human brain functional networks. Front Neuroinformatics. 2009;3:37. doi: 10.3389/neuro.11.037.2009.

- 32. Chen ZJ, et al. Revealing modular architecture of human brain structural networks by using cortical thickness from MRI. Cereb Cortex. 2008;18:2374–2381. doi: 10.1093/cercor/bhn003.

- 33. Meunier D, et al. Modular and hierarchically modular organization of brain networks. Front Neurosci. 2010;4:200. doi: 10.3389/fnins.2010.00200.

- 34. Werner G. Fractals in the nervous system: conceptual implications for theoretical neuroscience. Front Physiol. 2010;1:1–28. doi: 10.3389/fphys.2010.00015.

- 35. Achard S, et al. A resilient, low-frequency, small-world human brain functional network with highly connected association cortical hubs. J Neurosci. 2006;26:63–72. doi: 10.1523/JNEUROSCI.3874-05.2006.

- 36. Vaishnavi SN, et al. Regional aerobic glycolysis in the human brain. Proc Natl Acad Sci USA. 2010;107:17757–17762. doi: 10.1073/pnas.1010459107.

- 37. Niven JE, Laughlin SB. Energy limitation as a selective pressure on the evolution of sensory systems. J Exp Biol. 2008;211:1792–1804. doi: 10.1242/jeb.017574.

- 38. Lennie P. The cost of cortical computation. Curr Biol. 2003;13:493–497. doi: 10.1016/s0960-9822(03)00135-0.

- 39. Chen BL, et al. Wiring optimization can relate neuronal structure and function. Proc Natl Acad Sci USA. 2006;103:4723–4728. doi: 10.1073/pnas.0506806103.

- 40. Ruppin E, et al. Examining the volume efficiency of the cortical architecture in a multi-processor network model. Biol Cybern. 1993;70:89–94. doi: 10.1007/BF00202570.

- 41. Narayan O, Saniee I. The large scale curvature of networks. 2010. arXiv:0907.1478v1.

- 42. Damoiseaux JS, Greicius MD. Greater than the sum of its parts: a review of studies combining structural connectivity and resting-state functional connectivity. Brain Struct Funct. 2009;213:525–533. doi: 10.1007/s00429-009-0208-6.

- 43. Sporns O, et al. The human connectome: a structural description of the human brain. PLoS Comput Biol. 2005;1:e42. doi: 10.1371/journal.pcbi.0010042.

- 44. Lu J, et al. Semi-automated reconstruction of neural processes from large numbers of fluorescence images. PLoS ONE. 2009;4:e5655. doi: 10.1371/journal.pone.0005655.

- 45. Li A, et al. Micro-optical sectioning tomography to obtain a high-resolution atlas of the mouse brain. Science. 2010;330:1404–1408. doi: 10.1126/science.1191776.

- 46. Chklovskii DB, et al. Semi-automated reconstruction of neural circuits using electron microscopy. Curr Opin Neurobiol. 2010;20:667–675. doi: 10.1016/j.conb.2010.08.002.

- 47. Chiang AS, et al. Three-dimensional reconstruction of brain-wide wiring networks in Drosophila at single-cell resolution. Curr Biol. 2011;21:1–11. doi: 10.1016/j.cub.2010.11.056.

- 48. Brezina V. Beyond the wiring diagram: signaling through complex neuromodulator networks. Philos Trans R Soc Lond B Biol Sci. 2010;365:2363–2374. doi: 10.1098/rstb.2010.0105.

- 49. Sherbondy AJ, et al. Con-Track: finding the most likely pathways between brain regions using diffusion tractography. J Vis. 2008;8:15.1–16. doi: 10.1167/8.9.15.

- 50. Jain V, et al. Machines that learn to segment images: a crucial technology for connectomics. Curr Opin Neurobiol. 2010;20:653–666. doi: 10.1016/j.conb.2010.07.004.

- 51. Swanson LW, Bota M. Foundational model of structural connectivity in the nervous system with a schema for wiring diagrams, connectome, and basic plan architecture. Proc Natl Acad Sci USA. 2010;107:20610–20617. doi: 10.1073/pnas.1015128107.

- 52. Seung HS. Reading the book of memory: sparse sampling versus dense mapping of connectomes. Neuron. 2009;62:17–29. doi: 10.1016/j.neuron.2009.03.020.

- 53. Tononi G, et al. Measures of degeneracy and redundancy in biological networks. Proc Natl Acad Sci USA. 1999;96:3257–3262. doi: 10.1073/pnas.96.6.3257.

- 54. Levin N, et al. Cortical maps and white matter tracts following long period of visual deprivation and retinal image restoration. Neuron. 2010;65:21–31. doi: 10.1016/j.neuron.2009.12.006.

- 55. Breakspear M, Stam CJ. Dynamics of a neural system with a multiscale architecture. Philos Trans R Soc Lond B Biol Sci. 2005;360:1051–1074. doi: 10.1098/rstb.2005.1643.

- 56. Leibniz GW. La Monadologie. Paris: LGF; 1991.

- 57. Craver CF. Explaining the Brain: Mechanisms and the Mosaic Unity of Neuroscience. Oxford University Press; 2007.

- 58. Noble D. The Music of Life: Biology Beyond Genes. Oxford University Press; 2008.

- 59. Gazzaniga MS. Who’s in Charge? Free Will and the Science of the Brain. Harper (Ecco); New York: 2011.

- 60. Nichols MJ, Newsome WT. The neurobiology of cognition. Nature. 1999;402:C35–C38. doi: 10.1038/35011531.

- 61. Sinnott-Armstrong W, Nadel L, editors. Conscious Will and Responsibility. Oxford University Press; 2010.

- 62. Sinnott-Armstrong W, et al. Brain images as legal evidence. Episteme. 2008;5:359–373.

- 63. March J, et al. Fluoxetine, cognitive-behavioral therapy, and their combination for adolescents with depression: Treatment for Adolescents with Depression Study (TADS) randomized controlled trial. JAMA. 2004;292:807–820. doi: 10.1001/jama.292.7.807.

- 64. von Neumann J. The Theory of Self-reproducing Automata. University of Illinois Press; 1966.

- 65. Deco G, et al. Emerging concepts for the dynamical organization of resting-state activity in the brain. Nat Rev Neurosci. 2011;12:43–56. doi: 10.1038/nrn2961.

- 66. Zhao WS, et al. Nanotube devices based crossbar architecture: toward neuromorphic computing. Nanotechnology. 2010;21:175202. doi: 10.1088/0957-4484/21/17/175202.

- 67. Yamashita Y, Tani J. Emergence of functional hierarchy in a multiple timescale neural network model: a humanoid robot experiment. PLoS Comput Biol. 2008;4:e1000220. doi: 10.1371/journal.pcbi.1000220.

- 68. Tani J, et al. Self-organization of distributedly represented multiple behavior schemata in a mirror system: reviews of robot experiments using RNNPB. Neural Netw. 2004;17:1273–1289. doi: 10.1016/j.neunet.2004.05.007.

- 69. Canolty RT, Knight RT. The functional role of cross-frequency coupling. Trends Cogn Sci. 2010;14:506–515. doi: 10.1016/j.tics.2010.09.001.

- 70. Flack JC, et al. Policing stabilizes construction of social niches in primates. Nature. 2006;439:426–429. doi: 10.1038/nature04326.

- 71. Flack JC, de Waal F. Context modulates signal meaning in primate communication. Proc Natl Acad Sci USA. 2007;104:1581–1586. doi: 10.1073/pnas.0603565104.

- 72. Graham D, Rockmore D. The packet switching brain. J Cogn Neurosci. 2011;23:267–276. doi: 10.1162/jocn.2010.21477.

- 73. Krakauer DC, de Zanotto AP. Viral individuality and limitations of the life concept. In: Rasmussen S, et al., editors. Protocells: Bridging Non-living and Living Matter. MIT Press; 2009. pp. 513–536.

- 74. Krakauer DC, et al. Diversity, dilemmas and monopolies of niche construction. Am Nat. 2009;173:26–40. doi: 10.1086/593707.

- 75. Wilson DS. Introduction: multilevel selection theory comes of age. Am Nat. 1997;150:S1–S4. doi: 10.1086/286046.

- 76. Teeters JL, et al. Data sharing for computational neuroscience. Neuroinformatics. 2008;6:47–55. doi: 10.1007/s12021-008-9009-y.

- 77. Van Horn JD, Ball CA. Domain-specific data sharing in neuroscience: what do we have to learn from each other? Neuroinformatics. 2008;6:117–121. doi: 10.1007/s12021-008-9019-9.

- 78. Gazzaniga MS, et al. Looking toward the future: perspectives on examining the architecture and function of the human brain as a complex system. In: Gazzaniga MS, editor. The Cognitive Neurosciences IV. MIT Press; 2010. pp. 1245–1252.

- 79. Bullmore ET, Bassett DS. Brain graphs: graphical models of the human brain connectome. Annu Rev Clin Psychol. 2010. doi: 10.1146/annurev-clinpsy-040510-143934.

- 80. Bassett DS, Bullmore ET. Human brain networks in health and disease. Curr Opin Neurol. 2009;22:340–347. doi: 10.1097/WCO.0b013e32832d93dd.

- 81. Buckner RL, et al. Cortical hubs revealed by intrinsic functional connectivity: mapping, assessment of stability, and relation to Alzheimer’s disease. J Neurosci. 2009;29:1860–1873. doi: 10.1523/JNEUROSCI.5062-08.2009.

- 82. Honey CJ, et al. Can structure predict function in the human brain? Neuroimage. 2010;52:766–776. doi: 10.1016/j.neuroimage.2010.01.071.

- 83. Mucha PJ, et al. Community structure in time-dependent, multiscale, and multiplex networks. Science. 2010;328:876–878. doi: 10.1126/science.1184819.

- 84. Bassett DS, et al. Dynamic reconfiguration of human brain networks during learning. Proc Natl Acad Sci USA. 2011. doi: 10.1073/pnas.1018985108. In press.

- 85. Churchland MM, et al. Cortical preparatory activity: representation of movement or first cog in a dynamical machine? Neuron. 2010;68:387–400. doi: 10.1016/j.neuron.2010.09.015.

- 86. Nagengast AJ, et al. Optimal control predicts human performance on objects with internal degrees of freedom. PLoS Comput Biol. 2009;5:e1000419. doi: 10.1371/journal.pcbi.1000419.

- 87. Desmurget M, Grafton ST. Forward modeling allows feedback control for fast reaching movements. Trends Cogn Sci. 2000;4:423–431. doi: 10.1016/s1364-6613(00)01537-0.

- 88. Mulliken GH, et al. Forward estimation of movement state in posterior parietal cortex. Proc Natl Acad Sci USA. 2008;105:8170–8177. doi: 10.1073/pnas.0802602105.

- 89. Tunik E, et al. Beyond grasping: representation of action in human anterior intraparietal sulcus. Neuroimage. 2007;36:T77–T86. doi: 10.1016/j.neuroimage.2007.03.026.