Abstract

Although ears capable of detecting airborne sound have arisen repeatedly and independently in different species, most animals that are capable of hearing have a pair of ears. We review the advantages that arise from having two ears and discuss recent research on the similarities and differences in the binaural processing strategies adopted by birds and mammals. We also ask how these different adaptations for binaural and spatial hearing might inform and inspire the development of techniques for future auditory prosthetic devices.

The Hindu mother goddess Durga has eight hands. At times, this must earn her the envy of ordinary, mortal human mothers, who must confront the hundredfold challenges of motherhood with only two hands each. The considerable advantages that could spring from extra sets of hands are easy to imagine. And who has not occasionally wished for extra eyes, in the back of the head? Or what about ears? Is two ears a good number? Would one be enough, or should we really have more? It seems unlikely that evolution has independently ‘fine-tuned’ the number not just of hands but also of hind limbs, lungs, kidneys, gonads, eyes and ears, and found in each case that animals with two of each of these organs were invariably better adapted to their environments than those with one or three. That we, like most animals, have two ears may be due more to embryological constraints related to a bilaterally symmetric body plan than to evolutionary selection pressures. Whether two constitutes an ‘optimal’ number of ears, either for modern humans or for the many other binaural animal species in their diverse ecological niches, is uncertain.

It may seem peculiar to ask how many ears a person ideally should have. However, the advent of cochlear implant technology is turning this seemingly odd question into a point of serious and controversial debate of considerable practical importance. Possessing more than one functioning ear can certainly bring significant advantages. For example, binaural hearing greatly improves our ability to determine the direction of a sound source1. Without binaural cues, we must rely solely on monaural ‘spectral cues’ provided by the directional filtering of sounds by our outer ears2 to judge the direction of a sound source. Relying only on spectral cues results in much-reduced localization ability, whereas combining spectral and binaural cues results in remarkably accurate sound localization3. Binaural information can also improve our ability to separate sound signals from ambient background noise, a phenomenon called ‘binaural unmasking’4. Consequently, people with just a single cochlear implant often have great difficulty understanding speech in noisy, acoustically cluttered environments, whereas bilaterally implanted individuals may do better at these challenging acoustic tasks5,6. But cochlear implantation is an expensive procedure and not without risk. It is not obvious that the potential binaural advantages that might accrue from fitting two cochlear implants, rather than just one, warrant doubling the costs and the risks for each person, particularly because current cochlear implants are not optimized for binaural hearing.

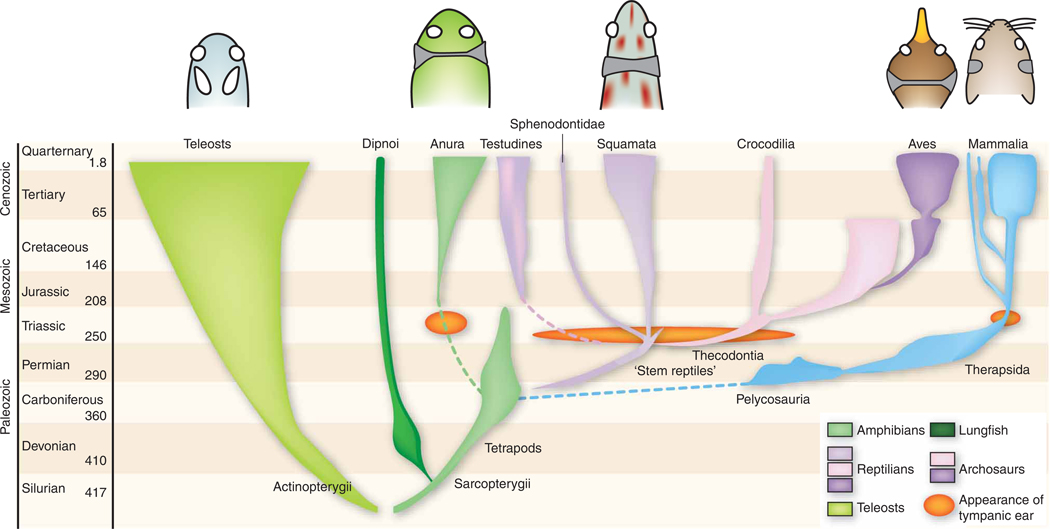

The problem is that the acoustic cues that need to be exploited to reap binaural advantages are often very subtle. For example, we, like many other vertebrates, use tiny differences in the time of arrival or the intensity of sounds at each ear to help us determine sound source direction. The sound will arrive slightly earlier, and be slightly louder, in the near ear—the emphasis here is on “slightly,” as natural interaural time differences (ITDs), for example, usually amount to only a small fraction of a millisecond. We are also very sensitive to changes in the correlation of inputs to the left and right ears, a prerequisite for binaural unmasking7. To process these minimal binaural cues, our ancestors evolved sensitive tympanic ears and highly specialized auditory brainstem circuits (Fig. 1). It seems that such tympanic ears capable of receiving airborne sound evolved separately and repeatedly among the ancestors of modern frogs, turtles, lizards, birds and mammals8–10. Their ancestors, the earliest land-dwelling vertebrates, were probably sensitive to bone conduction and sound waves traveling through the ground. Thus, each of these tetrapod groups constitutes an independent ‘evolutionary experiment in hearing’. Some notably similar principles have emerged, presumably due to similar selection pressures for localizing and identifying auditory targets.
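To give a sense of scale for these interaural time differences, the short sketch below evaluates Woodworth's classic rigid-sphere approximation, ITD(θ) = (r/c)(θ + sin θ), for a distant source at azimuth θ. The formula is a standard textbook approximation rather than anything taken from this article, and the head radius (8.75 cm) and speed of sound (343 m/s) are assumed typical values for an adult human.

```python
import math

SPEED_OF_SOUND = 343.0  # m/s in air at about 20 degrees C (assumed)
HEAD_RADIUS = 0.0875    # m, assumed typical adult human head radius


def woodworth_itd(azimuth_rad: float, radius: float = HEAD_RADIUS,
                  c: float = SPEED_OF_SOUND) -> float:
    """Interaural time difference (s) for a distant source on a rigid sphere.

    Woodworth's approximation; valid for |azimuth| <= pi/2.
    """
    return (radius / c) * (azimuth_rad + math.sin(azimuth_rad))


for deg in (0, 15, 45, 90):
    itd_us = woodworth_itd(math.radians(deg)) * 1e6
    print(f"{deg:>2} deg: {itd_us:6.1f} us")
```

Even for a source directly to one side, this approximation gives an ITD of only about 0.66 ms, consistent with the ‘small fraction of a millisecond’ described in the text.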

Figure 1.

Evolution of vertebrate ears. Bony fish ears contain sensory maculae that respond to underwater sound in a directional fashion47. During the transition from water to land, tympanic middle ears capable of receiving airborne sound evolved separately among the ancestors of modern frogs, turtles, lizards, archosaurs (birds and crocodilians) and mammals. Above each clade, diagrams of the head show middle ears (gray fill) and tympana (thin lines). Coupled middle ears are inherently directional because they are acoustically connected by a continuous airspace9. Extinct forms, non-anuran amphibians, coelacanths and many actinopterygian groups are omitted from this diagram. Modified from Walker and Liem49. Reproduced by permission. www.cengage.com/permissions.

Parallel evolution of binaural pathways in birds and mammals

In both birds and mammals, early stages of the auditory pathways contain synaptic relays designed to preserve the temporal fine structure of incoming acoustic signals with great accuracy, and neural processing stages that compare inputs from the left and right ears arise early, immediately after the first synaptic relay in the cochlear nucleus. Both groups also have nuclei specialized for computing either ITDs or interaural level differences (ILDs). ILD-sensitive neurons are excited by input from one ear and inhibited by input from the other. These ‘EI neurons’ respond most strongly when sounds come from the side of the excitatory ear: the excitatory ear then receives the full sound intensity, whereas the inhibitory ear sits in a ‘sound shadow’ on the far side of the head, so the resulting inhibition is small. If the sound source moves closer to the inhibitory ear, firing rates decline because of greater inhibition, resulting in a rate code for sound source position. This ILD sensitivity arises in the lateral superior olive (LSO) in mammals and in EI neurons of the avian nucleus of the lateral lemniscus10. However, the head casts a significant sound shadow only if it is large compared to the wavelength of the sound, so ILD cues are most effective at relatively high frequencies; accordingly, neurons tuned to high frequencies are overrepresented in the ILD-sensitive nuclei.
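The EI rate code can be illustrated with a deliberately simple model. In the sketch below, the head-shadow function `ild_for_azimuth` and the sigmoidal neuron `ei_rate` are hypothetical; the 20 dB maximal ILD, 60 dB mean level, sigmoid slope and 200 spikes/s ceiling are illustrative assumptions, not measured values. Real ILDs also grow with frequency, which this sketch ignores.

```python
import math


def ild_for_azimuth(azimuth_deg, max_ild_db=20.0):
    """Crude, hypothetical head-shadow model: ILD in dB, positive when the
    source is on the side of the neuron's excitatory ear."""
    return max_ild_db * math.sin(math.radians(azimuth_deg))


def ei_rate(level_exc_db, level_inh_db, r_max=200.0, slope_per_db=0.3):
    """Hypothetical EI neuron: a sigmoid of excitatory minus inhibitory level."""
    ild = level_exc_db - level_inh_db
    return r_max / (1.0 + math.exp(-slope_per_db * ild))


for az in (90, 45, 0, -45, -90):
    ild = ild_for_azimuth(az)
    # Split the ILD symmetrically around an assumed 60 dB average level.
    rate = ei_rate(60.0 + ild / 2, 60.0 - ild / 2)
    print(f"azimuth {az:+3d} deg  ILD {ild:+5.1f} dB  rate {rate:5.1f} spikes/s")
```

Firing rate falls monotonically as the source moves from the excitatory side toward the inhibitory side, so rate alone can serve as a code for azimuth over this range.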

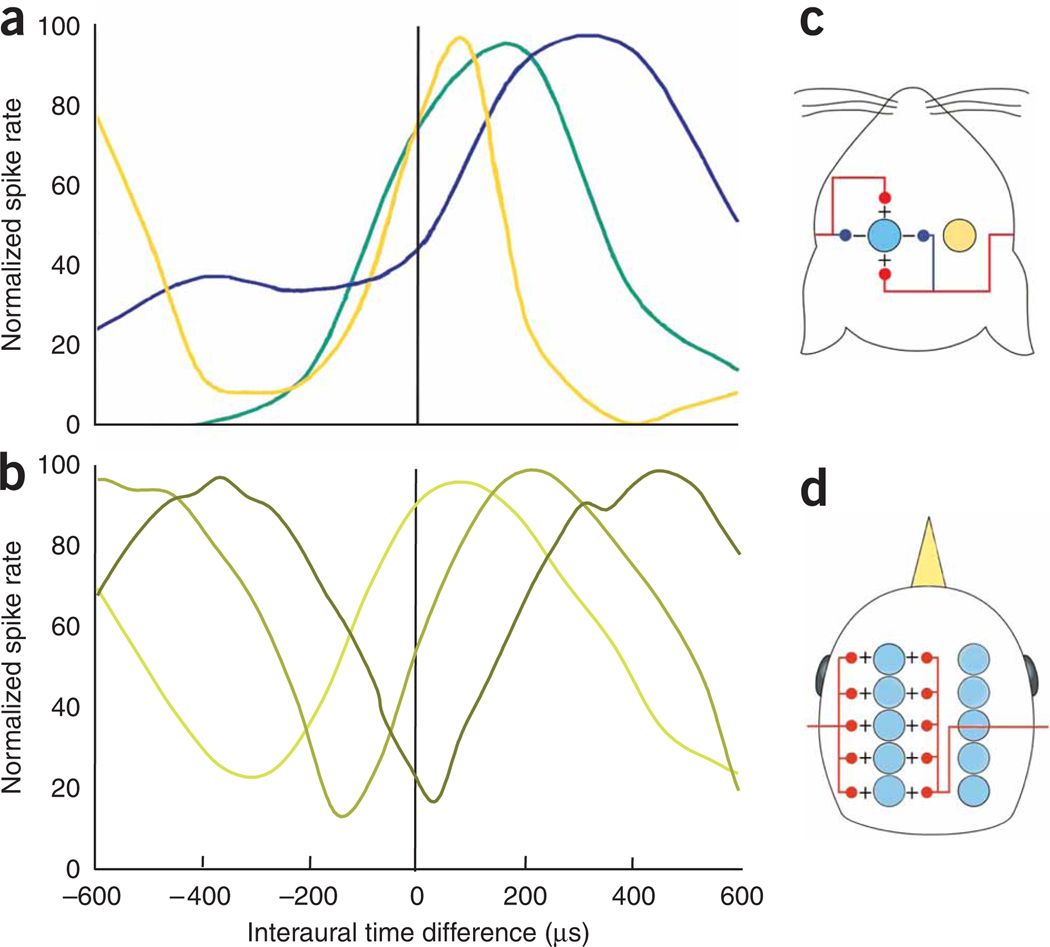

In contrast, most neurons that are sensitive to ITDs are excited by input from both ears (‘EE neurons’; see Fig. 2a). The strength of the excitation depends on the exact relative timing of the inputs. These neurons, found in the mammalian medial superior olive (MSO) or the avian nucleus laminaris, were classically thought to be organized in a ‘delay line and coincidence detector’ arrangement, known as the ‘Jeffress model’11 (see below). The model posits that individual neurons fire in response to precisely synchronized excitation from both ears, and systematically varied axonal conduction delays along the length of the nucleus serve to offset ITDs, so that each neuron is ‘tuned’ to a best ITD value that cancels the signal delays from the left and right ear (Fig. 3a,b). The Jeffress model has been particularly influential, partly because initial experimental evidence from birds provided strong support for the existence of such a delay line arrangement12,13, but also because many researchers find the manner in which this simple scheme turns systematic variations in ITD into a topographic map of sound source location very elegant and appealing. However, although it is widely thought that the Jeffress model is a good description of the avian ITD processing pathway, its relevance to the mammalian system has increasingly been questioned.
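The delay-line-and-coincidence-detector arrangement can be sketched numerically. Below, each hypothetical detector in `jeffress_array` applies a different internal delay to one input and reports the summed product of its two inputs, a crude stand-in for coincidence firing; the sample rate, delay range and noise stimulus are assumptions chosen for illustration. The most active detector is the one whose internal delay cancels the acoustic ITD, which is how the array turns ITD into a place code.

```python
import numpy as np

FS = 100_000  # Hz; one sample = 10 microseconds (assumed resolution)


def jeffress_array(left, right, max_delay_samples):
    """Responses of a hypothetical row of Jeffress-style coincidence detectors.

    Detector d delays the right-ear input by d samples (negative d delays
    the left-ear input instead) and reports the summed product of its two
    inputs, a crude stand-in for its coincidence-driven firing rate.
    """
    n = len(left)
    delays = np.arange(-max_delay_samples, max_delay_samples + 1)
    responses = []
    for d in delays:
        if d >= 0:
            # Left sample at time t + d meets the right sample from time t.
            pair = np.dot(left[d:], right[:n - d])
        else:
            pair = np.dot(left[:n + d], right[-d:])
        responses.append(pair)
    return delays, np.array(responses)


# Demo: broadband noise from the right, so the left ear lags by 30 samples.
rng = np.random.default_rng(0)
source = rng.standard_normal(5000)
itd_samples = 30  # 300 microseconds at FS
right = source
left = np.concatenate([np.zeros(itd_samples), source[:-itd_samples]])

delays, responses = jeffress_array(left, right, max_delay_samples=60)
best = delays[np.argmax(responses)]
print(f"most active detector compensates {best / FS * 1e6:.0f} us")
```

Here the detector with a 30-sample (300 µs) internal delay responds most strongly. Evaluating the products for all delays at once is a cross-correlation, which is why Jeffress-type models are often described as neural cross-correlators.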

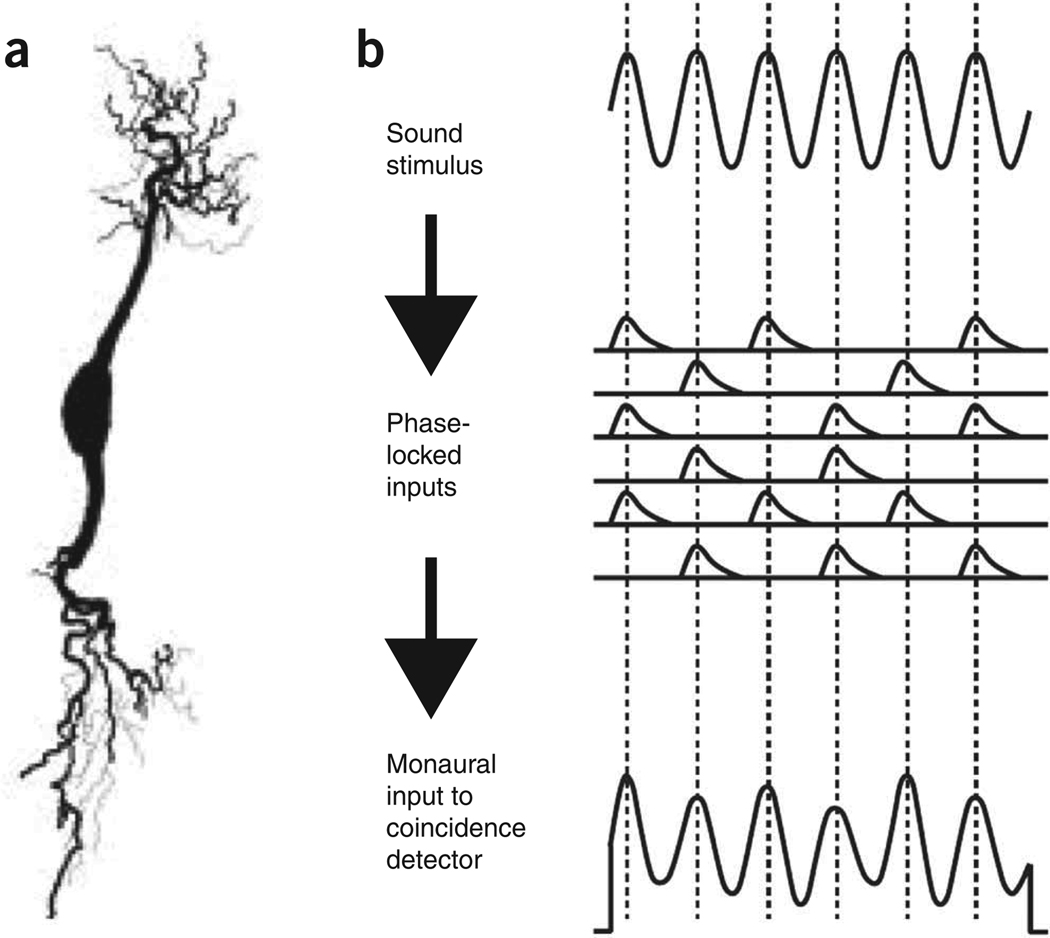

Figure 2.

Coincidence detection. (a) Anatomy of an ‘EE-type’ coincidence detector neuron from the nucleus laminaris of the emu (from ref. 48). Inputs driven by the left and right ears arrive on the two prominent dendrites, one ear per dendrite. Mammalian MSO neurons show a similar bipolar morphology. (b) Encoding of sound waves as sinusoidal membrane potentials. ‘Primary-like’ afferent nerve fibers ‘phase lock’ to the sound stimulus (that is, they are most likely to fire near the peaks of the sound wave), and their excitatory synaptic potentials sum to produce fluctuating membrane potentials in each of the EE neuron’s dendrites that resemble the stimulus waveform. Redrawn from ref. 20.

Figure 3.

ITD computations in birds and mammals. (a) Examples of ITD tuning curves from the gerbil MSO (redrawn from ref. 27). The three examples are from neurons with different characteristic frequencies: blue, lowest; green, intermediate; yellow, highest. (b) Examples of ITD tuning curves from the chicken nucleus laminaris (data from ref. 25). The three examples shown were recorded along one isofrequency lamina. The position of the peak (and of the steepest slope) of the ITD tuning curve varies systematically with anatomical position. (c,d) Highly simplified, schematic models of the mammalian MSO (c) and the avian nucleus laminaris (d), as proposed by Grothe30. Arrays of coincidence detectors (colored circles) on both sides of the brainstem receive excitatory inputs (red lines) from the two ears. The avian system is essentially an implementation of Jeffress’s delay line model, whereas the mammalian model relies on an interplay of precisely timed excitation and inhibition (blue lines) to achieve similar aims.

For starters, anatomical evidence for systematic delay lines in mammals is not definitive14,15. Of course, the internal delays would not necessarily have to be set up through axonal conduction delay lines, and one alternative hypothesis is that the delays might actually be of cochlear origin16. Hearing begins when the cochlea mechanically filters incoming sounds to separate out various frequency components. The mechanical filters that transduce sound into neural signals cannot respond infinitely fast and therefore introduce small ‘group delays’. The group delays for low sound frequencies are somewhat larger than those for higher frequencies. Thus, if a signal from a higher-frequency channel of the left ear arrives at an EE neuron at exactly the same time as a low-frequency input from the right ear, then this would indicate that the sound came from the right, so that the extra time taken by the sound traveling to the farther (left) ear was offset by the larger group delay in the right cochlea.
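The cochlear-delay alternative (sometimes called ‘stereausis’16) reduces to simple arithmetic. The sketch assumes, purely for illustration, that each cochlear filter's group delay equals a fixed number of cycles at its characteristic frequency (the hypothetical parameter `n_cycles=4`); real cochlear delays are more complex. An EE neuron combining a higher-CF input from the left ear with a lower-CF input from the right ear is then tuned to a nonzero ITD favoring the right.

```python
def group_delay_s(cf_hz, n_cycles=4.0):
    """Hypothetical cochlear group delay: a fixed number of cycles at CF."""
    return n_cycles / cf_hz


def best_itd_from_cochlear_delays(cf_left_hz, cf_right_hz):
    """ITD (s) at which inputs from two cochlear places coincide.

    ITD is defined as left-ear arrival time minus right-ear arrival time,
    so positive values mean the sound reached the right ear first.
    Coincidence requires t_left + d(cf_left) == t_right + d(cf_right).
    """
    return group_delay_s(cf_right_hz) - group_delay_s(cf_left_hz)


itd = best_itd_from_cochlear_delays(cf_left_hz=1100.0, cf_right_hz=1000.0)
print(f"best ITD: {itd * 1e6:.0f} us (positive = source on the right)")
```

Under these assumptions, a 10% mismatch in characteristic frequency (1,100 Hz on the left, 1,000 Hz on the right) yields a best ITD of about 364 µs, with the sound reaching the right ear first.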

However, the implementation of delay lines (axonal or cochlear) does not change the fundamental nature of Jeffress’s delay-line-and-coincidence-detector model. A more crucial question is how neurons achieve coincidence detection at the phenomenally fine temporal resolution that is required to account for behaviorally measured ITD detection thresholds. Both birds and mammals can detect ITDs as small as a few microseconds. MSO and nucleus laminaris neurons have similar anatomical and biophysical specializations, such as stereotypical bipolar dendrites, with inputs from each ear segregated onto separate dendrites, allowing nonlinear integration between the inputs from left and right ears17. These neurons have a high density of low voltage–activated potassium channels, which speed up their synaptic dynamics, yielding excitatory postsynaptic potentials that are typically around 400 µs wide at half amplitude18,19.

Coincidence detectors seem to work by ‘cross-correlating’ sinusoidal synaptic conductances, which mirror the stimulus waveform, as seen through the ‘narrow-band filters’ that provide the input to the MSO. MSO neurons receive band-pass-filtered input that is relayed from the cochlea through the cochlear nuclei. The band-pass filtering makes them sensitive only to frequencies close to their own characteristic frequency. In other words, all sounds ‘look’ to them more or less like a sine wave at their own characteristic frequency20 (see Fig. 2b). Models suggest that when these sinusoidal inputs from each ear arrive in phase, they interfere constructively, and the binaural conductance sum becomes maximal, but when they arrive totally out of phase (worst ITD), they interfere destructively. This probably explains why ITD tuning curves measured in the auditory brainstem and midbrain have a cosine-like shape, with a period that depends on the neuron’s characteristic frequency, since this tuning curve arises as a sum of roughly sinusoidal inputs with frequencies close to the neuron’s characteristic frequency. The range of ITDs spanned between each neuron’s most and least preferred ITD value is consequently always approximately equal to half the period of the neuron’s characteristic frequency, and it is more appropriate to think of MSO neurons as sensitive to interaural phase differences rather than to ITDs.
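The claim that two sinusoidal inputs at the characteristic frequency (CF) produce a cosine-shaped ITD tuning curve, with best and worst ITDs half a period apart, can be checked numerically. In this minimal sketch, two unit sinusoids stand in for the band-pass-filtered inputs, and squaring their sum is an assumed stand-in for the neuron's nonlinear output; the mean then equals 1 + cos(2π·CF·ITD).

```python
import numpy as np


def binaural_drive(itd_s, cf_hz, n_periods=20, samples_per_period=200):
    """Mean squared sum of two unit sinusoids at CF offset by an ITD.

    A crude stand-in for the summed, band-pass-filtered synaptic input of
    an MSO or nucleus laminaris neuron; averaging over whole periods gives
    1 + cos(2 * pi * cf * itd).
    """
    t = np.arange(n_periods * samples_per_period) / (samples_per_period * cf_hz)
    left = np.sin(2 * np.pi * cf_hz * t)
    right = np.sin(2 * np.pi * cf_hz * (t - itd_s))
    return np.mean((left + right) ** 2)


for itd_us in (0, 250, 500, 1000, 2000):
    print(f"ITD {itd_us:4d} us: drive {binaural_drive(itd_us * 1e-6, 500.0):.3f}")
```

For a 500 Hz neuron the drive peaks at ITD = 0, falls to zero at 1 ms (half of the 2 ms period) and recovers at 2 ms, so the separation between best and worst ITDs is set by the characteristic frequency rather than by any ITD-specific wiring.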

A recent modeling study21 illustrated that this interdependence between the shape of the ITD tuning curve and a neuron’s frequency tuning is problematic. Jeffress envisaged arrays of ITD detectors for each frequency band, each tuned to a different preferred ITD, so that the whole array could implement a sort of ‘labeled line’ population code22. For most low-frequency neurons, however, the ITD tuning curves are too broad and their peaks too blunt to make such an arrangement efficient (Fig. 3). Consequently, from an ‘optimal coding’ perspective, the peaks of the ITD tuning curves may be less relevant, and what matters is that the steepest slopes of the ITD tuning curves cover the animal’s behaviorally relevant ITD range21,23. Steep slopes mean that a small change in the stimulus causes a relatively large, easily detectable change in the neuron’s response. But tuning curves cannot be infinitely ‘tall’, and if ITD tuning curves are very steep over some part of the possible range of ITDs, then other parts of that range may have to fall on the less steep and hence less informative ‘plateaus’. Thus, a Jeffress-like arrangement, with systematically spread out tuning curve peaks, becomes computationally efficient when ITD tuning curves are so sharp and narrow that their slopes can no longer cover the range of ITDs that an animal experiences. This would be the case for the barn owl, which has ITD-tuned neurons with characteristic frequencies as high as 9 kHz and widely separated ears, and therefore large maximal ITDs, but not for the gerbil, for which ITD-sensitive neurons in the MSO rarely have characteristic frequencies greater than 2 kHz and the separation between the ears is much smaller.
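The slope argument can be made concrete with idealized cosine tuning curves. For a cosine, the steepest flank lies a quarter period from the peak, so placing the steepest slope at the midline (ITD = 0) puts the peak at 1/(4·CF). The sketch below checks this numerically; the ±150 µs physiological range is a hypothetical value, and this single-curve calculation is a simplification, not the population-level optimization analyzed by Harper and McAlpine21.

```python
import numpy as np

ASSUMED_RANGE_S = 150e-6  # hypothetical physiological ITD range: +/-150 us


def tuning_curve(itd_s, best_itd_s, cf_hz):
    """Idealized cosine ITD tuning curve (arbitrary units, between 0 and 2)."""
    return 1.0 + np.cos(2 * np.pi * cf_hz * (itd_s - best_itd_s))


def best_itd_for_midline_slope(cf_hz):
    """Best ITD that places the curve's steepest flank at ITD = 0.

    A cosine is steepest a quarter period away from its peak.
    """
    return 1.0 / (4.0 * cf_hz)


itds = np.linspace(-ASSUMED_RANGE_S, ASSUMED_RANGE_S, 301)
for cf in (300.0, 500.0, 1000.0, 2000.0):
    best = best_itd_for_midline_slope(cf)
    slope = np.gradient(tuning_curve(itds, best, cf), itds)
    steepest = itds[np.argmax(np.abs(slope))]
    print(f"CF {cf:5.0f} Hz: peak at {best * 1e6:4.0f} us "
          f"(outside range: {best > ASSUMED_RANGE_S}), "
          f"steepest slope near {steepest * 1e6:+.0f} us")
```

Under these assumptions, the slope-optimal peaks for 300, 500 and 1,000 Hz neurons (about 833, 500 and 250 µs) all lie outside the assumed range and shift systematically with characteristic frequency, whereas the 2,000 Hz peak (125 µs) falls inside it.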

Is there selection for the most efficient neural code?

Given these differences, it is an open question whether optimally efficient coding is a substantial constraint on the evolution of neural circuits. Natural selection often produces local maxima, and solutions need not be optimal as long as they are good enough24,25. In the auditory system, the narrowest information bottleneck presumably occurs in the auditory nerve. Thereafter, diverging connections in the ascending auditory pathway could mean that ever greater numbers of neurons are available to encode finite information. This would create some redundancy and reduce the necessity to make neural coding at subsequent stages optimally efficient. Nevertheless, the ‘optimality’ arguments put forward by Harper et al.21 provide a plausible, even elegant, explanation for recent experimental findings in rodents, wherein the peaks in ITD tuning curves were often found to lie outside the animal’s physiological range and tended to depend systematically on the neuron’s characteristic frequency26,27 (Fig. 3a). These observations do not fit the classic Jeffress model, in which coincidence detectors are organized to form a place map. Forming such a topographic map requires ITD detectors in each frequency band to be tuned to the full range of physiological ITDs, and their tuning should therefore not depend on characteristic frequency. The new data thus argue for a population rate code rather than a map.
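One way to read ITD from a population rate code without any map is to compare the summed activity of two broadly tuned channels, for example the populations on the two sides of the brainstem. The two-channel decoder below is an illustrative assumption rather than a mechanism established in the text; it uses idealized cosine tuning with peaks at ±1/(4·CF), so that the normalized rate difference equals sin(2π·CF·ITD) and can be inverted over the central part of the range.

```python
import numpy as np


def channel_rates(itd_s, cf_hz):
    """Mean rates of two hypothetical hemispheric populations.

    Positive ITD = right ear leads. The left-side population prefers
    right-leading sounds (best ITD +1/(4*CF)); the right-side population
    is its mirror image (best ITD -1/(4*CF)).
    """
    b = 1.0 / (4.0 * cf_hz)
    w = 2 * np.pi * cf_hz
    left_pop = 1.0 + np.cos(w * (itd_s - b))
    right_pop = 1.0 + np.cos(w * (itd_s + b))
    return left_pop, right_pop


def decode_itd(left_pop, right_pop, cf_hz):
    """Read ITD from the normalized rate difference between the channels."""
    index = (left_pop - right_pop) / (left_pop + right_pop)  # = sin(w * itd)
    return np.arcsin(np.clip(index, -1.0, 1.0)) / (2 * np.pi * cf_hz)


for itd in (-200e-6, 0.0, 120e-6):
    lp, rp = channel_rates(itd, 500.0)
    print(f"true {itd * 1e6:+5.0f} us -> decoded {decode_itd(lp, rp, 500.0) * 1e6:+5.0f} us")
```

Within ±1/(4·CF) (±500 µs at 500 Hz), two numbers suffice to recover the ITD in this noise-free sketch; no neuron needs to be tuned to the particular ITD being reported.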

Are the two models for encoding ITDs irreconcilable? Not entirely: both depend on coincidence detection and convey ITDs to the midbrain through the distribution of firing rates across the population of neurons. Barn owls seem to use the information in both the peaks and the slopes of the tuning curves28, and theoretical analyses suggest that the two codes are not mutually exclusive29. Whether peaks or slopes of the tuning curves are better suited to representing ITDs from an information-theoretic perspective may depend on the factors that constrain the shape of the tuning curves21,29. Animals also need not adopt the same optimal solution. For example, chickens and gerbils have similar head sizes and abilities to encode temporal information, and both use ITDs at relatively low frequencies (Fig. 3), so the constraints on their codes should be similar. But chickens have a place map of ITD in the nucleus laminaris25 (Fig. 3b), fitting Jeffress’s model closely, whereas gerbils may not.

The anatomical organization of the MSO is also more complicated than required by the basic Jeffress model. MSO neurons receive excitatory inputs from each ear, but they also receive inhibition that is precisely time-locked to the incoming auditory signals30. This inhibition is important for shaping ITD tuning curves in the MSO. Brand and colleagues27, for example, recorded responses in gerbil MSO before and after pharmacological suppression of inhibitory inputs and noted substantial changes in the shape of the ITD tuning curves, including shifts of the peak in the curve by several hundred microseconds. Several modeling studies30–32 have since explored how the interplay of excitation and inhibition might produce these shifts in the ITD tuning curve. Joris and Yin33 have recently argued against such a central role for inhibition in ITD processing. Consideration of the data published by Brand and colleagues27 led them to conclude that inhibition in the MSO might mostly affect the early ‘onset’ but not the sustained response. This conclusion may, however, be premature. Many important sound signals (for example, footsteps) are highly transient in nature and contain little or no sustained sound, meaning that the onset is arguably the most important part of the neural response34. Moreover, data recently published by Pecka et al.35 indicate that the effect of glycinergic inhibition on the shape of ITD tuning curves can persist during the sustained part of the response in the MSO. Thus, synaptic dynamics based on the interplay of precisely timed excitation and inhibition remain a credible additional or alternative mechanism to either axonal or cochlear delays in the inputs to the mammalian MSO (Fig. 3c,d).

Perhaps we are so attached to the systematic axonal delay line arrangement in Jeffress’s model because it alone automatically leads to a topographic, ‘space-mapped’ representation of best ITDs. In the barn owl, where the evidence for the implementation of a Jeffress model in the nucleus laminaris is strongest, the resulting topographic map of ITDs is passed on and maintained in subsequent processing stations, such as the central and external nuclei of the inferior colliculus and the optic tectum, where auditory and visual information is combined to direct the animal’s direction of gaze22. Mammals too have a topographic mapping of auditory space in the superior colliculus (the mammalian homolog of the optic tectum), but the evidence for place coding in the mammalian MSO looks increasingly weak, and there is no topographic space map in the central nucleus of the inferior colliculus. Rather, the mammalian auditory space map in the superior colliculus emerges gradually, under visual guidance36, as the information passes from the central nucleus of the inferior colliculus by way of the nucleus of the brachium to the superior colliculus37. Furthermore, the mammalian map in the superior colliculus is, as far as we know, based mostly on ILDs and monaural spatial cues, not ITDs38, and no topographic arrangement of spatial tuning or ITD sensitivity has ever been found in the areas of mammalian cortex thought to be involved in the perception of sound source location. The generation of a topographic map of ITD is considered by some to be a “defining feature” of Jeffress’s model33, and although it serves a clear function in the barn owl brain (where this topography is brought into register with a retinotopic representation of visual space), it is unclear whether such an ITD map exists in the mammalian brainstem or what purpose it would serve. Why would the mammalian auditory pathway establish a topographic representation of ITD in the MSO, only to abandon it again at the next stage of the ascending pathway?

The strategies for encoding ITDs may differ somewhat between birds and mammals because different species combine binaural cues in different ways. For example, barn owls have highly asymmetric external ears and can use ILDs to detect sound source elevation22. They then combine ITD and ILD information to create neurons sharply tuned for location in both azimuth (sound source direction in the horizontal plane) and elevation (direction in the vertical plane)39. This is very different from mammals, which typically use both ITDs and ILDs as cues to sound source azimuth but tend to rely on ITDs mostly for low-frequency sound and on ILDs for high frequencies40. Sounds generate large ILDs only when the wavelength of the sound is small compared to the head diameter, which limits the usefulness of ILDs at low frequencies. ITDs are in principle present at all frequencies, but limits on the ability of neurons to represent and process very rapid changes in the sound (so-called phase-locking limits and phase ambiguity) make it difficult for the brain to exploit ITDs in high-frequency sound. Therefore, our judgment of the azimuthal location of a sound source is dominated by ITDs at low frequencies and by ILDs at high frequencies. However, there is a considerable ‘overlap’, where information from both cues is combined. Presumably, this cue combination occurs as ITD and ILD information streams converge, and it would be very straightforward if ITD and ILD were both encoded using a similar population rate-coding scheme41.
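Phase ambiguity, and one way around it, can be shown with a few lines of code. A phase-locked channel effectively registers the ITD only modulo one period of its frequency, so at high frequencies several ITDs within the physiological range become indistinguishable. Summing phase-match scores across channels, in the spirit of the across-frequency integration attributed to barn owls22, singles out the one ITD consistent with every channel. The ±700 µs range, the 150 µs test ITD and the channel frequencies are assumptions, and the cosine scoring function is an illustrative choice.

```python
import numpy as np


def candidate_itds(true_itd_s, freq_hz, max_itd_s):
    """ITDs within +/-max_itd_s that share the interaural phase of true_itd_s.

    A phase-locked channel at freq_hz only 'sees' the ITD modulo one period,
    so ITDs differing by whole periods are indistinguishable to it.
    """
    period = 1.0 / freq_hz
    base = true_itd_s % period  # the ITD as seen modulo one period
    k_lo = int(np.floor(-max_itd_s / period)) - 1
    k_hi = int(np.ceil(max_itd_s / period)) + 1
    cands = [base + k * period for k in range(k_lo, k_hi + 1)]
    return [c for c in cands if abs(c) <= max_itd_s]


def across_frequency_itd(true_itd_s, freqs_hz, max_itd_s, n_grid=1401):
    """Pick the ITD whose predicted phases best match all channels at once."""
    grid = np.linspace(-max_itd_s, max_itd_s, n_grid)
    score = np.zeros_like(grid)
    for f in freqs_hz:
        observed_phase = (true_itd_s * f) % 1.0  # cycles, as one channel sees it
        score += np.cos(2 * np.pi * (grid * f - observed_phase))
    return grid[np.argmax(score)]


print(len(candidate_itds(150e-6, 500.0, 700e-6)), "candidate(s) at 500 Hz")
print(len(candidate_itds(150e-6, 4000.0, 700e-6)), "candidate(s) at 4 kHz")
est = across_frequency_itd(150e-6, [4000.0, 5000.0, 6000.0], 700e-6)
print(f"combined estimate: {est * 1e6:.0f} us")
```

With these values, a 500 Hz channel admits a single candidate ITD, a 4 kHz channel admits six, and combining 4, 5 and 6 kHz channels recovers 150 µs.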

Lessons for bionic hearing

The noteworthy similarities and the differences between the avian and the mammalian ITD processing pathways clearly suggest that there are many ways of localizing sounds and separating sources from background noise; these different strategies can provide inspiration for the development of new technologies such as cochlear implants. Current cochlear implant speech processors simply encode the spectral profile of incoming sounds in a train of amplitude-modulated electric pulses. In binaural implants, present-day processors work independently, so that the timing of pulses is not synchronized or coordinated between the ears, and much of the fine-grained temporal structure required for effective ITD processing is not preserved. Also, the range of different intensities that can be delivered through cochlear implants (the ‘dynamic range’) is very limited compared to natural hearing, which may affect the delivery of ILD cues. The first technical challenge will therefore be to try to overcome these limitations on the delivery of ‘natural’ ILD and ITD information, already an active research area42.
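A single channel of an envelope-based processor can be sketched as full-wave rectification, smoothing and sampling at a fixed pulse rate, loosely in the spirit of widely used continuous-interleaved-sampling strategies. The sketch omits the band-pass filter bank and compression stages of real devices, and the 16 kHz sampling rate, 4 ms smoothing window and 1,000 pulses/s rate are assumptions. It illustrates why an ITD carried by the fine structure largely disappears from the stimulation pattern.

```python
import numpy as np

FS = 16_000        # Hz, assumed audio sampling rate
PULSE_RATE = 1000  # pulses per second per electrode (assumed)


def envelope_pulses(band_signal, fs=FS, pulse_rate=PULSE_RATE,
                    smooth_ms=4.0, clock_offset_s=0.0):
    """Return (pulse_times_s, pulse_amplitudes) for one electrode channel.

    Only the smoothed envelope survives; the carrier's fine structure is
    discarded, and pulse timing follows this processor's own clock.
    """
    rectified = np.abs(band_signal)  # full-wave rectification
    win = int(round(smooth_ms * 1e-3 * fs))
    envelope = np.convolve(rectified, np.ones(win) / win, mode="same")
    duration = len(band_signal) / fs
    times = np.arange(clock_offset_s, duration, 1.0 / pulse_rate)
    idx = np.minimum(np.round(times * fs).astype(int), len(envelope) - 1)
    return times, envelope[idx]


# Demo: a 1 kHz tone reaching the right ear 300 microseconds earlier.
t = np.arange(int(0.1 * FS)) / FS
right = np.sin(2 * np.pi * 1000 * t)
left = np.sin(2 * np.pi * 1000 * (t - 300e-6))
_, amp_left = envelope_pulses(left)
_, amp_right = envelope_pulses(right)
print(np.max(np.abs(amp_left[10:-10] - amp_right[10:-10])))
```

Away from the edges, the left and right pulse amplitudes differ by less than 2% even though the carriers are offset by 300 µs; setting `clock_offset_s` differently for each ear mimics two processors whose pulse clocks are not synchronized.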

However, in the longer term, it may be beneficial to try to incorporate cues that are ‘supranatural’, at least for humans. Humans normally rely mostly on ITDs for low-frequency sounds, but the low-frequency region of the mammalian cochlea lies near its apex, far from the round window, which makes it very difficult to access with current cochlear implant techniques. Unlike humans, barn owls can extract valuable ITD information for frequencies up to 9 kHz, and they overcome the phase ambiguity that arises when the largest possible ITDs exceed half the period of the sound wave by integrating information across frequency channels22. In principle, sophisticated bionic devices could similarly extract both ITD and ILD information at high precision over a very wide frequency range and recode it in the manner appropriate for each individual. For example, in cases where cochlear implantation failed to restore low-frequency hearing, this might involve merely translating ITD cues into enhanced ILDs, but more sophisticated approaches could also be developed.
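Translating ITD cues into enhanced ILDs could, in the simplest case, mean estimating the ITD and re-expressing it as a level difference. In the hypothetical `itd_to_ild` below, the ITD is estimated by cross-correlation over an assumed ±700 µs range and mapped linearly onto an assumed maximal ILD of 20 dB, split evenly between the ears; a practical device would need running, frequency-specific estimates and fitting to each listener.

```python
import numpy as np


def estimate_itd(left, right, fs, max_itd_s):
    """ITD (s) by cross-correlation; positive means the right ear leads."""
    max_lag = int(round(max_itd_s * fs))
    lags = np.arange(-max_lag, max_lag + 1)
    n = len(left)

    def xc(lag):
        # lag > 0 pairs left[t + lag] with right[t], i.e. the left ear lags.
        if lag >= 0:
            return np.dot(left[lag:], right[:n - lag])
        return np.dot(left[:n + lag], right[-lag:])

    scores = np.array([xc(k) for k in lags])
    return lags[np.argmax(scores)] / fs


def itd_to_ild(left, right, fs, max_itd_s=700e-6, max_ild_db=20.0):
    """Hypothetical recoding: re-express the estimated ITD as an exaggerated ILD."""
    itd = estimate_itd(left, right, fs, max_itd_s)
    ild_db = max_ild_db * np.clip(itd / max_itd_s, -1.0, 1.0)
    # Half of the ILD boosts the leading ear, half attenuates the lagging ear.
    g_right = 10 ** (ild_db / 40)
    g_left = 10 ** (-ild_db / 40)
    return left * g_left, right * g_right, itd, ild_db


rng = np.random.default_rng(1)
fs = 48_000
source = rng.standard_normal(4800)
lag = 20  # right ear leads by 20 samples (about 417 microseconds)
right = source
left = np.concatenate([np.zeros(lag), source[:-lag]])
out_l, out_r, itd, ild_db = itd_to_ild(left, right, fs)
print(f"estimated ITD {itd * 1e6:.0f} us -> imposed ILD {ild_db:.1f} dB")
```

For this example the sketch boosts the right channel and attenuates the left by a total of roughly 12 dB, so a listener without usable low-frequency ITD access would receive the same lateral information as a level cue.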

In an age where many personal stereo systems already pack powerful microprocessors, future cochlear implant processors and hearing aids could become more sophisticated and incorporate various spatial filtering and preprocessing techniques, not necessarily modeled on designs normally found in mammals. Future designs could incorporate pressure gradient receivers, as used by some insects43 and other terrestrial vertebrates (lizards, frogs and some birds; Fig. 1). The ears of these animals are inherently directional because they are acoustically connected by a continuous airspace between the eardrums, either through the mouth cavity or through interaural canals8. This acoustical connection allows sound to reach both sides of the eardrum, which is then driven by the pressure difference between the external and internal sounds. Pressure gradient receiver ears have highly directional eardrum motion, provided the interaural coupling is strong enough. These ears perform so well that they raise the question of why mammals and some hearing specialists such as owls have independent ears at all. Many explanations have been advanced: increased breathing rates interfere with tympanum motion when ears are coupled through the mouth, and even the best pressure gradient receivers have nulls; that is, they may become insensitive to certain frequencies if sound waves arising from either side interfere destructively44. Nevertheless, given that this design of a directional receiver is also used in insect hearing, it is clearly amenable to miniaturization, and potentially, it could be incorporated in directional receivers for auditory prostheses.
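The directionality of a pressure gradient receiver follows from the fact that the eardrum is driven by the difference between the outside pressure and the pressure arriving internally from the other ear. The lumped model below treats the interaural canal as a single path with a fixed gain and delay; the 2 cm ear separation, the gain of 0.8 and the assumption that the internal delay equals the external travel time across the head are all hypothetical, and real coupled ears have frequency-dependent transmission.

```python
import numpy as np

C = 343.0  # m/s, speed of sound in air (assumed)


def eardrum_drive(azimuth_deg, freq_hz, ear_separation_m=0.02,
                  internal_gain=0.8, internal_delay_s=None):
    """Relative drive of the LEFT eardrum of a hypothetical coupled-ear head.

    External pressure acts on the outside of the drum; sound that entered
    the right ear reaches the inside after crossing the interaural canal.
    Positive azimuth = source on the left (same side as this drum).
    """
    if internal_delay_s is None:
        # Assumed: the canal delay equals the external travel time across the head.
        internal_delay_s = ear_separation_m / C
    # Extra external travel time to the right ear for a distant source.
    ext_delay = (ear_separation_m / C) * np.sin(np.radians(azimuth_deg))
    w = 2 * np.pi * freq_hz
    # Net drive ~ outside pressure minus the delayed, attenuated inside pressure.
    total_delay = ext_delay + internal_delay_s
    return np.abs(1.0 - internal_gain * np.exp(-1j * w * total_delay))


for az in (90, 45, 0, -45, -90):
    print(f"azimuth {az:+3d} deg: drive {eardrum_drive(az, 2000.0):.3f}")
```

At 2 kHz, the drive for a source on the same side (about 1.2) far exceeds that for a source on the opposite side (0.2). Setting `internal_gain=1.0` produces exact nulls: the contralateral drive vanishes at every frequency, and the ipsilateral drive vanishes wherever 2d/c equals a whole number of periods, first at about 8.6 kHz for d = 2 cm.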

Of course, artificial directional hearing designs would not necessarily have to be binaural. Even insects rarely have more than two ears, and sometimes only one45, which is perhaps unexpected, given that a separation (‘unmixing’) of sounds from different simultaneous sound sources can in theory easily be achieved using techniques such as independent component analysis46, provided that the number of sound receivers (ears or microphones) is as large as the number of sound sources. Perhaps bionic ears of the future will interface to elaborate cocktail-party hats that sport as many miniature microphones as there are guests at the party. The basic algorithm for independent component analysis requires that the relationship between sources and receivers be stable over time. To adapt this to mobile speakers and listeners, methods would have to be developed to track auditory streams when the sound sources and receivers move relative to each other, but that may well be a solvable problem. If so, many-eared, rather than merely binaural, devices might ultimately turn out to be optimal solutions for bionic hearing.
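Independent component analysis can be demonstrated with a compact FastICA implementation (symmetric decorrelation, tanh nonlinearity). The sketch assumes the idealized case the text describes: as many microphones as sources, and a fixed, instantaneous mixing matrix; real rooms add echoes and propagation delays, which require convolutive extensions. The hand-written `whiten` and `fast_ica` functions, the example sources and the mixing gains are illustrative, not a production implementation.

```python
import numpy as np


def whiten(x):
    """Center and whiten the rows of x (channels x samples)."""
    x = x - x.mean(axis=1, keepdims=True)
    d, e = np.linalg.eigh(np.cov(x))
    return e @ np.diag(1.0 / np.sqrt(d)) @ e.T @ x


def fast_ica(x, n_iter=200, seed=0):
    """Symmetric FastICA with a tanh nonlinearity; returns estimated sources."""
    z = whiten(x)
    n, m = z.shape
    w = np.random.default_rng(seed).standard_normal((n, n))
    for _ in range(n_iter):
        g = np.tanh(w @ z)
        g_prime = 1.0 - g ** 2
        # Fixed-point update: E{z g(w^T z)} - E{g'(w^T z)} w, for every row.
        w_new = (g @ z.T) / m - np.diag(g_prime.mean(axis=1)) @ w
        # Symmetric decorrelation: W <- (W W^T)^(-1/2) W.
        d, e = np.linalg.eigh(w_new @ w_new.T)
        w = e @ np.diag(1.0 / np.sqrt(d)) @ e.T @ w_new
    return w @ z


rng = np.random.default_rng(0)
n = 5000
sources = np.vstack([rng.uniform(-1, 1, n), rng.laplace(size=n)])
mixing = np.array([[1.0, 0.6], [0.4, 1.0]])  # hypothetical microphone gains
mics = mixing @ sources
estimates = fast_ica(mics)
corr = np.corrcoef(np.vstack([sources, estimates]))[:2, 2:]
print(np.round(np.abs(corr), 3))
```

Each recovered signal should correlate almost perfectly with one of the original sources; ICA cannot determine the order, sign or scale of the sources, which is why the comparison uses absolute correlations.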

ACKNOWLEDGMENTS

Supported by UK Biotechnology and Biological Sciences Research Council grant BB/D009758/1, UK Engineering and Physical Sciences Research Council grant EP/C010841/1, a European Union FP6 grant to J.W.H.S., and US National Institutes of Health grants DCD000436 to C.E.C. and P30 DC0466 to the University of Maryland Center for the Evolutionary Biology of Hearing.

References

- 1. King AJ, Schnupp JWH, Doubell TP. The shape of ears to come: dynamic coding of auditory space. Trends Cogn. Sci. 2001;5:261–270. doi: 10.1016/s1364-6613(00)01660-0.

- 2. Wightman FL, Kistler DJ. Monaural sound localization revisited. J. Acoust. Soc. Am. 1997;101:1050–1063. doi: 10.1121/1.418029.

- 3. Carlile S, Martin R, McAnally K. Spectral information in sound localization. Int. Rev. Neurobiol. 2005;70:399–434. doi: 10.1016/S0074-7742(05)70012-X.

- 4. Stern RM, Trahiotis C. Models of binaural interaction. In: Moore BCJ, editor. Hearing. San Diego: Academic; 1995. pp. 347–380.

- 5. van Hoesel RJ. Exploring the benefits of bilateral cochlear implants. Audiol. Neurootol. 2004;9:234–246. doi: 10.1159/000078393.

- 6. Long CJ, Carlyon RP, Litovsky RY, Downs DH. Binaural unmasking with bilateral cochlear implants. J. Assoc. Res. Otolaryngol. 2006;7:352–360. doi: 10.1007/s10162-006-0049-4.

- 7. Grantham DW, Wightman FL. Detectability of a pulsed tone in the presence of a masker with time-varying interaural correlation. J. Acoust. Soc. Am. 1979;65:1509–1517. doi: 10.1121/1.382915.

- 8. Christensen-Dalsgaard J, Carr CE. Evolution of a sensory novelty: tympanic ears and the associated neural processing. Brain Res. Bull. 2008;75:365–370. doi: 10.1016/j.brainresbull.2007.10.044.

- 9. Clack JA. The evolution of tetrapod ears and the fossil record. Brain Behav. Evol. 1997;50:198–212. doi: 10.1159/000113334.

- 10. Grothe B, Carr CE, Casseday JH, Fritzsch B, Köppl C. The evolution of central pathways and their neural processing patterns. In: Manley GA, Popper AN, Fay RR, editors. Evolution of the Vertebrate Auditory System. New York: Springer; 2004.

- 11. Jeffress LA. A place theory of sound localization. J. Comp. Physiol. Psychol. 1948;41:35–39. doi: 10.1037/h0061495.

- 12. Carr CE, Konishi M. Axonal delay lines for time measurement in the owl’s brainstem. Proc. Natl. Acad. Sci. USA. 1988;85:8311–8315. doi: 10.1073/pnas.85.21.8311.

- 13. Parks TN, Rubel EW. Organization and development of brain stem auditory nuclei of the chicken: organization of projections from n. magnocellularis to n. laminaris. J. Comp. Neurol. 1975;164:435–448. doi: 10.1002/cne.901640404.

- 14. Beckius GE, Batra R, Oliver DL. Axons from anteroventral cochlear nucleus that terminate in medial superior olive of cat: observations related to delay lines. J. Neurosci. 1999;19:3146–3161. doi: 10.1523/JNEUROSCI.19-08-03146.1999.

- 15. Smith PH, Joris PX, Yin TC. Projections of physiologically characterized spherical bushy cell axons from the cochlear nucleus of the cat: evidence for delay lines to the medial superior olive. J. Comp. Neurol. 1993;331:245–260. doi: 10.1002/cne.903310208.

- 16. Shamma SA, Shen NM, Gopalaswamy P. Stereausis: binaural processing without neural delays. J. Acoust. Soc. Am. 1989;86:989–1006. doi: 10.1121/1.398734.

- 17. Agmon-Snir H, Carr CE, Rinzel J. The role of dendrites in auditory coincidence detection. Nature. 1998;393:268–272. doi: 10.1038/30505.

- 18. Scott LL, Mathews PJ, Golding NL. Posthearing developmental refinement of temporal processing in principal neurons of the medial superior olive. J. Neurosci. 2005;25:7887–7895. doi: 10.1523/JNEUROSCI.1016-05.2005.

- 19. Kuba H, Yamada R, Ohmori H. Evaluation of the limiting acuity of coincidence detection in nucleus laminaris of the chicken. J. Physiol. (Lond.) 2003;552:611–620. doi: 10.1113/jphysiol.2003.041574.

- 20. Ashida G, Abe K, Funabiki K, Konishi M. Passive soma facilitates submillisecond coincidence detection in the owl’s auditory system. J. Neurophysiol. 2007;97:2267–2282. doi: 10.1152/jn.00399.2006.

- 21. Harper NS, McAlpine D. Optimal neural population coding of an auditory spatial cue. Nature. 2004;430:682–686. doi: 10.1038/nature02768.

- 22. Konishi M. Coding of auditory space. Annu. Rev. Neurosci. 2003;26:31–55. doi: 10.1146/annurev.neuro.26.041002.131123.

- 23. Skottun BC, Shackleton TM, Arnott RH, Palmer AR. The ability of inferior colliculus neurons to signal differences in interaural delay. Proc. Natl. Acad. Sci. USA. 2001;98:14050–14054. doi: 10.1073/pnas.241513998.

- 24. Marder E, Goaillard JM. Variability, compensation and homeostasis in neuron and network function. Nat. Rev. Neurosci. 2006;7:563–574. doi: 10.1038/nrn1949.

- 25. Köppl C, Carr CE. Maps of interaural time difference in the chicken’s brainstem nucleus laminaris. Biol. Cybern. 2008;98:541–559. doi: 10.1007/s00422-008-0220-6.

- 26. McAlpine D, Jiang D, Palmer AR. A neural code for low-frequency sound localization in mammals. Nat. Neurosci. 2001;4:396–401. doi: 10.1038/86049.

- 27. Brand A, Behrend O, Marquardt T, McAlpine D, Grothe B. Precise inhibition is essential for microsecond interaural time difference coding. Nature. 2002;417:543–547. doi: 10.1038/417543a.

- 28. Takahashi TT, et al. The synthesis and use of the owl’s auditory space map. Biol. Cybern. 2003;89:378–387. doi: 10.1007/s00422-003-0443-5.

- 29. Butts DA, Goldman MS. Tuning curves, neuronal variability, and sensory coding. PLoS Biol. 2006;4:e92. doi: 10.1371/journal.pbio.0040092.

- 30. Grothe B. New roles for synaptic inhibition in sound localization. Nat. Rev. Neurosci. 2003;4:540–550. doi: 10.1038/nrn1136.

- 31. Zhou Y, Carney LH, Colburn HS. A model for interaural time difference sensitivity in the medial superior olive: interaction of excitatory and inhibitory synaptic inputs, channel dynamics, and cellular morphology. J. Neurosci. 2005;25:3046–3058. doi: 10.1523/JNEUROSCI.3064-04.2005.

- 32. Leibold C, van Hemmen JL. Spiking neurons learning phase delays: how mammals may develop auditory time-difference sensitivity. Phys. Rev. Lett. 2005;94:168102. doi: 10.1103/PhysRevLett.94.168102.

- 33. Joris P, Yin TC. A matter of time: internal delays in binaural processing. Trends Neurosci. 2007;30:70–78. doi: 10.1016/j.tins.2006.12.004.

- 34. Chase SM, Young ED. First-spike latency information in single neurons increases when referenced to population onset. Proc. Natl. Acad. Sci. USA. 2007;104:5175–5180. doi: 10.1073/pnas.0610368104.

- 35. Pecka M, Brand A, Behrend O, Grothe B. Interaural time difference processing in the mammalian medial superior olive: the role of glycinergic inhibition. J. Neurosci. 2008;28:6914–6925. doi: 10.1523/JNEUROSCI.1660-08.2008.

- 36. King AJ, Schnupp JWH, Thompson ID. Signals from the superficial layers of the superior colliculus enable the development of the auditory space map in the deeper layers. J. Neurosci. 1998;18:9394–9408. doi: 10.1523/JNEUROSCI.18-22-09394.1998.

- 37. Schnupp JWH, King AJ. Coding for auditory space in the nucleus of the brachium of the inferior colliculus of the ferret. J. Neurophysiol. 1997;78:2717–2731. doi: 10.1152/jn.1997.78.5.2717.

- 38. Campbell RA, Doubell TP, Nodal FR, Schnupp JW, King AJ. Interaural timing cues do not contribute to the map of space in the ferret superior colliculus: a virtual acoustic space study. J. Neurophysiol. 2006;95:242–254. doi: 10.1152/jn.00827.2005.

- 39. Peña JL, Konishi M. Auditory spatial receptive fields created by multiplication. Science. 2001;292:249–252. doi: 10.1126/science.1059201.

- 40. Macpherson EA, Middlebrooks JC. Listener weighting of cues for lateral angle: the duplex theory of sound localization revisited. J. Acoust. Soc. Am. 2002;111:2219–2236. doi: 10.1121/1.1471898.

- 41. Hancock KE, Delgutte B. A physiologically based model of interaural time difference discrimination. J. Neurosci. 2004;24:7110–7117. doi: 10.1523/JNEUROSCI.0762-04.2004.

- 42. Laback B, Majdak P. Binaural jitter improves interaural time-difference sensitivity of cochlear implantees at high pulse rates. Proc. Natl. Acad. Sci. USA. 2008;105:814–817. doi: 10.1073/pnas.0709199105.

- 43. Yager DD. Structure, development, and evolution of insect auditory systems. Microsc. Res. Tech. 1999;47:380–400. doi: 10.1002/(SICI)1097-0029(19991215)47:6<380::AID-JEMT3>3.0.CO;2-P.

- 44. Christensen-Dalsgaard J, Manley GA. Acoustical coupling of lizard eardrums. J. Assoc. Res. Otolaryngol. 2008;9:407–416. doi: 10.1007/s10162-008-0130-2.

- 45. Yager DD, Hoy RR. The cyclopean ear: a new sense for the praying mantis. Science. 1986;231:727–729. doi: 10.1126/science.3945806.

- 46. Hyvärinen A, Karhunen J, Oja E. Independent Component Analysis. New York: Wiley; 2001.

- 47. Fay RR, Edds-Walton PL. Directional encoding by fish auditory systems. Phil. Trans. R. Soc. Lond. B. 2000;355:1281–1284. doi: 10.1098/rstb.2000.0684.

- 48. MacLeod KM, Soares D, Carr CE. Interaural timing difference circuits in the auditory brainstem of the emu (Dromaius novaehollandiae). J. Comp. Neurol. 2006;495:185–201. doi: 10.1002/cne.20862.

- 49. Walker WF, Liem KF. Functional Anatomy of Vertebrates: An Evolutionary Perspective. Saunders College Publishing; 1994.