Abstract

Two commonly used ideas in the development of citation-based research performance indicators are the idea of normalizing citation counts based on a field classification scheme and the idea of recursive citation weighing (like in PageRank-inspired indicators). We combine these two ideas in a single indicator, referred to as the recursive mean normalized citation score indicator, and we study the validity of this indicator. Our empirical analysis shows that the proposed indicator is highly sensitive to the field classification scheme that is used. The indicator also has a strong tendency to reinforce biases caused by the classification scheme. Based on these observations, we advise against the use of indicators in which the idea of normalization based on a field classification scheme and the idea of recursive citation weighing are combined.

Keywords: Bibliometric indicator, Citation impact, Field normalization, Recursive indicator

Introduction

In bibliometric and scientometric research, there is a trend towards developing more and more sophisticated citation-based research performance indicators. In this paper, we are concerned with two streams of research. One stream of research focuses on the development of indicators that aim to correct for the fact that the density of citations (i.e., the average number of citations per publication) differs among fields. Two basic approaches can be distinguished. One approach is to normalize citation counts for field differences based on a classification scheme that assigns publications to fields (e.g., Braun and Glänzel 1990; Moed et al. 1995; Waltman et al. 2011). The other approach is to normalize citation counts based on the number of references in citing publications or citing journals (e.g., Moed 2010; Zitt and Small 2008). The latter approach, which is sometimes referred to as source normalization (Moed 2010), does not need a field classification scheme.

A second stream of research focuses on the development of recursive indicators, typically inspired by the well-known PageRank algorithm (Brin and Page 1998). In the case of recursive indicators, citations are weighed differently depending on the status of the citing publication (e.g., Chen et al. 2007; Ma et al. 2008; Walker et al. 2007), the citing journal (e.g., Bollen et al. 2006; Pinski and Narin 1976), or the citing author (e.g., Radicchi et al. 2009; Życzkowski 2010). The underlying idea is that a citation from an influential publication, a prestigious journal, or a renowned author should be regarded as more valuable than a citation from an insignificant publication, an obscure journal, or an unknown author. It is sometimes argued that non-recursive indicators measure popularity while recursive indicators measure prestige (e.g., Bollen et al. 2006; Yan and Ding 2010).

Based on the above discussion, we have two binary dimensions along which we can distinguish citation-based research performance indicators, namely the dimension of normalization based on a field classification scheme versus source normalization and the dimension of non-recursive mechanisms versus recursive mechanisms. These two dimensions yield four types of indicators. This is shown in Table 1, in which we list some examples of the different types of indicators. It is important to note that all currently existing indicators that use a classification scheme for normalizing citation counts are of a non-recursive nature. Hence, there currently are no recursive indicators that make use of a classification scheme.1 Instead, the currently existing recursive indicators can best be regarded as belonging to the family of source-normalized indicators. This is because these indicators, like non-recursive source-normalized indicators, are based in one way or another on the idea that each unit (i.e., each publication, journal, or author) has a certain weight which it distributes over the units it cites. We refer to Waltman and Van Eck (2010a) for a detailed analysis of the close relationship between a source-normalized indicator (i.e., the audience factor) and two recursive indicators (i.e., the Eigenfactor indicator and the influence weight indicator).

Table 1.

A classification of some citation-based research performance indicators based on their normalization approach and the presence or absence of a recursive mechanism

| | Normalization based on classification scheme | Source normalization |
|---|---|---|
| Non-recursive mechanism | Citation z-score (Lundberg 2007), CPP/FCSm (Moed et al. 1995), MNCS (Waltman et al. 2011), NMCR (Braun and Glänzel 1990) | Audience factor (Zitt 2010; Zitt and Small 2008), Fractional counting (Glänzel et al. 2011; Leydesdorff and Bornmann 2011), SNIP (Moed 2010), Source-normalized MNCS (Waltman and Van Eck 2010b) |
| Recursive mechanism | | CiteRank (Walker et al. 2007), Eigenfactor (Bergstrom 2007; West et al. 2010), Influence weight (Pinski and Narin 1976), Science author rank (Radicchi et al. 2009), SCImago journal rank (González-Pereira et al. 2010), Weighted PageRank (Bollen et al. 2006) |

In this paper, we focus on the empty cell in the lower left of Table 1, that is, on recursive indicators that use a field classification scheme for normalizing citation counts. We first propose a recursive variant of the mean normalized citation score (MNCS) indicator (Waltman et al. 2011). We then present an empirical analysis of this recursive MNCS indicator, in which the indicator is used to study the citation impact of journals and research institutes in the field of library and information science. Our aim is to gain insight into the validity of recursive indicators that use a classification scheme for normalizing citation counts. We pay special attention to the sensitivity of such indicators to the classification scheme that is used.

Recursive mean normalized citation score

The ordinary non-recursive MNCS indicator for a set of publications equals the average number of citations per publication, where for each publication the number of citations is normalized for differences among fields (Waltman et al. 2011). The normalization is performed by dividing the number of citations of a publication by the publication’s expected number of citations. The expected number of citations of a publication is defined as the average number of citations per publication in the field in which the publication was published. An example of the calculation of the non-recursive MNCS indicator is provided in Table 2.

Table 2.

Example of the calculation of the ordinary non-recursive MNCS indicator

| Publication | No. of citations | Field | Expected no. of citations | Normalized citation score |
|---|---|---|---|---|
| A | 3 | X | 4.32 | 0.69 |
| B | 8 | X | 4.32 | 1.85 |
| C | 10 | Y | 12.17 | 0.82 |

MNCS = (0.69 + 1.85 + 0.82)/3 = 1.12

There are three publications. For each publication, the table lists the number of citations, the field, the expected number of citations, and the normalized citation score. The normalized citation score of a publication is obtained by dividing the number of citations by the expected number of citations. The MNCS indicator equals the average of the normalized citation scores of the three publications.
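The arithmetic in Table 2 can be reproduced with a few lines of Python. This is a minimal sketch: the expected numbers of citations are taken as given from Table 2 rather than computed from a full field, and the function name `mncs` is our own.

```python
# Table 2 data: (publication, citations, field, expected citations)
publications = [
    ("A", 3, "X", 4.32),
    ("B", 8, "X", 4.32),
    ("C", 10, "Y", 12.17),
]

def mncs(pubs):
    """First-order MNCS: mean of (citations / expected citations)."""
    scores = [cits / expected for _, cits, _, expected in pubs]
    return sum(scores) / len(scores)

for name, cits, field, expected in publications:
    print(name, round(cits / expected, 2))    # normalized citation score
print("MNCS =", round(mncs(publications), 2))
```

Averaging the unrounded scores gives 1.1227, which also rounds to the 1.12 reported in the table.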

The non-recursive MNCS indicator can also be referred to as the first-order MNCS indicator. We define the second-order MNCS indicator in the same way as the first-order MNCS indicator except that citations are weighed differently. In the first-order MNCS indicator, all citations have the same weight. In the second-order MNCS indicator, on the other hand, the weight of a citation is given by the value of the first-order MNCS indicator for the citing journal. Hence, citations from journals with a high value for the first-order MNCS indicator are regarded as more valuable than citations from journals with a low value for the first-order MNCS indicator. We have now defined the second-order MNCS indicator in terms of the first-order MNCS indicator. In the same way, we define the third-order MNCS indicator in terms of the second-order MNCS indicator, the fourth-order MNCS indicator in terms of the third-order MNCS indicator, and so on. This yields the recursive MNCS indicator that we study in this paper.

To make the above definition of the recursive MNCS indicator more precise, we formalize it mathematically. We use i, j, k, and l to denote, respectively, a publication, a journal, a field, and an institute. We define

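$$a_{il} = \begin{cases} 1 & \text{if publication } i \text{ has been produced by institute } l \\ 0 & \text{otherwise} \end{cases} \tag{1}$$

$$b_{ik} = \begin{cases} 1 & \text{if publication } i \text{ belongs to field } k \\ 0 & \text{otherwise} \end{cases} \tag{2}$$

$$c_{ii'} = \begin{cases} 1 & \text{if publication } i \text{ is cited by publication } i' \\ 0 & \text{otherwise} \end{cases} \tag{3}$$

$$d_{ij} = \begin{cases} 1 & \text{if publication } i \text{ has been published in journal } j \\ 0 & \text{otherwise} \end{cases} \tag{4}$$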

For α = 1, 2,…, the αth-order citation score of publication i is defined as

$$s_i^{(\alpha)} = \sum_{i'} c_{ii'}\, w_{i'}^{(\alpha)} \tag{5}$$

where $w_{i'}^{(\alpha)}$ denotes the weight of a citation from publication $i'$. For α = 1, $w_{i'}^{(\alpha)} = 1$ for all $i'$. For α = 2, 3, …, $w_{i'}^{(\alpha)}$ is given by

$$w_{i'}^{(\alpha)} = \sum_j d_{i'j}\, \mathrm{MNCS}_j^{(\alpha-1)} \tag{6}$$

It follows from (5) and (6) that the αth-order citation score of a publication equals a weighted sum of the citations received by the publication. For α = 1, all citations have the same weight. For α = 2, 3,…, the weight of a citation is given by the (α − 1)th-order MNCS of the citing journal.
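Equations (5) and (6) amount to a weighted count of incoming citations. The sketch below is an illustration, not the authors' implementation: it assumes a hypothetical data layout (a dict mapping each publication to its journal and a list of (cited, citing) pairs), a hypothetical function name `citation_scores`, and made-up journal values for the second order.

```python
def citation_scores(citations, journal_of, prev_journal_mncs=None):
    """alpha-th order citation score of every publication (Eqs. (5) and (6)).

    citations: (cited, citing) publication pairs, one pair per citation.
    journal_of: maps each publication to the journal it appeared in.
    prev_journal_mncs: (alpha - 1)th-order MNCS of each journal, or None
    for alpha = 1, in which case every citation has weight 1.
    """
    scores = {pub: 0.0 for pub in journal_of}  # uncited publications score 0
    for cited, citing in citations:
        if prev_journal_mncs is None:
            weight = 1.0
        else:
            # weight = previous-order MNCS of the citing publication's journal
            weight = prev_journal_mncs[journal_of[citing]]
        scores[cited] += weight
    return scores

journal_of = {"p1": "J1", "p2": "J1", "p3": "J2"}
citations = [("p1", "p3"), ("p2", "p3"), ("p3", "p1")]
print(citation_scores(citations, journal_of))                          # alpha = 1
print(citation_scores(citations, journal_of, {"J1": 0.5, "J2": 2.0}))  # alpha = 2
```

With the hypothetical journal values shown, each citation from J2 counts four times as much as the citation from J1.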

We define the αth-order mean citation score of a field as the average αth-order citation score of all publications belonging to the field, that is,

$$\mu_k^{(\alpha)} = \frac{\sum_i b_{ik}\, s_i^{(\alpha)}}{\sum_i b_{ik}} \tag{7}$$

The αth-order expected citation score of a publication is defined as the αth-order mean citation score of the field to which the publication belongs,2 and the αth-order normalized citation score of a publication is defined as the ratio of the publication’s αth-order citation score and its αth-order expected citation score. This yields

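$$e_i^{(\alpha)} = \sum_k b_{ik}\, \mu_k^{(\alpha)} \tag{8}$$

$$n_i^{(\alpha)} = \frac{s_i^{(\alpha)}}{e_i^{(\alpha)}} \tag{9}$$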

If the αth-order normalized citation score of a publication is greater (less) than one, this indicates that the αth-order citation score of the publication is greater (less) than the average αth-order citation score of all publications in the field.
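Equations (7) to (9) can be sketched as follows. The function name and toy data are hypothetical; the sketch assumes, as in the equations, that each publication belongs to exactly one field and that every field has a positive mean score.

```python
def normalized_scores(scores, field_of):
    """Eqs. (7)-(9): divide each score by the mean score of its field.

    Assumes each publication belongs to exactly one field and that every
    field has a positive mean score (otherwise the division fails).
    """
    totals, counts = {}, {}
    for pub, s in scores.items():
        k = field_of[pub]
        totals[k] = totals.get(k, 0.0) + s
        counts[k] = counts.get(k, 0) + 1
    field_mean = {k: totals[k] / counts[k] for k in totals}                   # Eq. (7)
    return {pub: s / field_mean[field_of[pub]] for pub, s in scores.items()}  # Eqs. (8)-(9)

scores = {"a": 3.0, "b": 1.0, "c": 4.0}
field_of = {"a": "X", "b": "X", "c": "Y"}
print(normalized_scores(scores, field_of))
```

By construction, the normalized scores within each field average to one, which is why a value above one reads as above the field average.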

We define the αth-order MNCS of a set of publications as the average αth-order normalized citation score of the publications in the set. In the case of journals and institutes, we obtain, respectively

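$$\mathrm{MNCS}_j^{(\alpha)} = \frac{\sum_i d_{ij}\, n_i^{(\alpha)}}{\sum_i d_{ij}} \tag{10}$$

$$\mathrm{MNCS}_l^{(\alpha)} = \frac{\sum_i \tilde{a}_{il}\, n_i^{(\alpha)}}{\sum_i \tilde{a}_{il}} \tag{11}$$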

where

$$\tilde{a}_{il} = \frac{a_{il}}{\sum_{l'} a_{il'}} \tag{12}$$

It follows from (11) and (12) that in the case of institutes we take a fractional counting approach. That is, a publication resulting from a collaboration of, say, three institutes is counted for each institute as 1/3 of a full publication. Alternatively, a full counting approach could have been taken. A collaborative publication would then be counted as a full publication for each of the institutes involved. A full counting approach is obtained by replacing $\tilde{a}_{il}$ by $a_{il}$ in (11).
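The difference between fractional counting, as in (11) and (12), and full counting can be illustrated with a small sketch. The function name, the data layout, and the two-publication example are hypothetical.

```python
def institute_mncs(norm, institutes_of, fractional=True):
    """Eqs. (11)-(12): MNCS per institute.

    norm maps a publication to its normalized citation score.
    institutes_of maps a publication to the distinct institutes that produced it.
    fractional=True gives each institute the weight 1/(number of institutes),
    i.e. tilde a_il; fractional=False gives each institute the weight a_il = 1.
    """
    sums, weights = {}, {}
    for pub, insts in institutes_of.items():
        if not insts:
            continue  # publications without an institute are skipped
        w = 1.0 / len(insts) if fractional else 1.0
        for inst in insts:
            sums[inst] = sums.get(inst, 0.0) + w * norm[pub]
            weights[inst] = weights.get(inst, 0.0) + w
    return {inst: sums[inst] / weights[inst] for inst in sums}

norm = {"a": 3.0, "b": 1.0}
institutes_of = {"a": ["U1", "U2", "U3"], "b": ["U1"]}
print(institute_mncs(norm, institutes_of))                    # fractional counting
print(institute_mncs(norm, institutes_of, fractional=False))  # full counting
```

For U1, the three-institute publication counts as 1/3 under fractional counting, giving (1 + 1)/(4/3) = 1.5, whereas full counting gives (3 + 1)/2 = 2.0.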

Until now, we have only discussed the issue of normalization for the field in which a publication was published. We have not discussed the issue of normalization for the age of a publication. The latter type of normalization can be used to correct for the fact that older publications have had more time to earn citations than younger publications. Normalization for the age of a publication can easily be incorporated into indicators that make use of a field classification scheme, such as the MNCS indicator. It is more difficult to incorporate into (recursive or non-recursive) source-normalized indicators (but see Waltman and Van Eck 2010b). In the empirical analysis presented later on in this paper, the recursive MNCS indicator performs a normalization both for the field in which a publication was published and for the age of a publication. This means that in the above mathematical description of the recursive MNCS indicator, $k$ in fact represents not just a field but a combination of a field and a publication year. As a consequence, $b_{ik}$ indicates whether a publication was published in a certain field and year, and $\mu_k^{(\alpha)}$ indicates the average αth-order citation score of all publications published in a certain field and year.
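Treating $k$ as a combination of a field and a publication year only changes the grouping key. A minimal sketch, with a hypothetical helper name and toy data:

```python
def expected_scores(scores, field_of, year_of):
    """Expected score of each publication when k is a (field, year) pair.

    Hypothetical helper: groups publications by (field, publication year)
    and returns, for every publication, the mean score of its group.
    """
    groups = {}
    for pub, s in scores.items():
        groups.setdefault((field_of[pub], year_of[pub]), []).append(s)
    group_mean = {k: sum(v) / len(v) for k, v in groups.items()}
    return {pub: group_mean[(field_of[pub], year_of[pub])] for pub in scores}

scores = {"a": 4.0, "b": 2.0, "c": 1.0}
field_of = {"a": "LIS", "b": "LIS", "c": "LIS"}
year_of = {"a": 2000, "b": 2000, "c": 2005}
print(expected_scores(scores, field_of, year_of))  # a and b share one group
```

The older publications a and b are compared only with each other, so the younger publication c is not penalized for having had less time to be cited.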

Data

To test our recursive MNCS indicator, we use the indicator to study the citation impact of journals and research institutes in the field of library and information science (LIS). We focus on the period from 2000 to 2009. Our analysis is based on data from the Web of Science database.

We first needed to delineate the LIS field. We used the Journal of the American Society for Information Science and Technology (JASIST) as the ‘seed’ journal for our delineation and selected the 47 journals that, based on co-citation data, are most strongly related to JASIST. Only journals in the Web of Science subject category Information Science & Library Science were considered. JASIST together with the 47 selected journals constituted our delineation of the LIS field. From these journals, we selected all 12,202 publications in the period 2000–2009 that are of the document type ‘article’ or ‘review’. It is important to emphasize that in our analysis we only take into account citations within this set of 12,202 publications. Citations given by publications outside this set are not considered.3 Self-citations are also excluded.

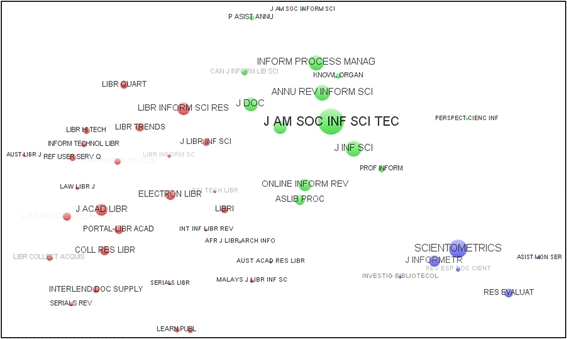

In our analysis, we also study the effect of splitting up the LIS field into a number of subfields. To do so, we first collected bibliographic coupling data for the 48 journals in our analysis.4 Based on these data, we created a clustering of the journals using the VOS clustering technique (Waltman et al. 2010), which is available in the VOSviewer software (Van Eck and Waltman 2010). We tried out different numbers of clusters and found that a solution with three clusters yielded the most satisfactory interpretation in terms of well-known subfields of the LIS field. We therefore decided to use this solution. The three clusters can roughly be interpreted as follows. The largest cluster (27 journals) deals with library science, the smallest cluster (7 journals) deals with scientometrics, and the third cluster (14 journals) deals with general information science topics. The assignment of the 48 journals to the three clusters is shown in Table 3. The clustering is also shown in the journal map in Fig. 1, which was produced using the VOSviewer software and is based on bibliographic coupling relations between the journals.

Table 3.

48 LIS journals and their assignment to three clusters

| Cluster | Journals |
|---|---|
| Library science (27 journals) | African Journal of Library Archives and Information Science; Australian Library Journal; College & Research Libraries; Electronic Library; Information Technology and Libraries; Interlending & Document Supply; International Information & Library Review; Journal of Academic Librarianship; Journal of Librarianship and Information Science; Journal of Scholarly Publishing; Law Library Journal; Learned Publishing; Library & Information Science Research; Library and Information Science; Library Collections Acquisitions & Technical Services; Library Hi Tech; Library Quarterly; Library Resources & Technical Services; Library Trends; Libri; Malaysian Journal of Library & Information Science; Portal-Libraries and the Academy; Program-Electronic Library and Information Systems; Reference & User Services Quarterly; Science & Technology Libraries; Serials Librarian; Serials Review |
| Information science (14 journals) | Annual Review of Information Science and Technology; Aslib Proceedings; Canadian Journal of Information and Library Science; Information Processing & Management; Information Research; Journal of Documentation; Journal of Information Science; Journal of the American Society for Information Science; Journal of the American Society for Information Science and Technology; Knowledge Organization; Online Information Review; Perspectivas Em Ciencia Da Informacao; Proceedings of the ASIST Annual Meeting; Profesional De La Informacion |
| Scientometrics (7 journals) | ASIST Monograph Series; Australian Academic & Research Libraries; Investigacion Bibliotecologica; Journal of Informetrics; Research Evaluation; Revista Espanola De Documentacion Cientifica; Scientometrics |

Fig. 1.

Journal map of 48 LIS journals based on bibliographic coupling data. The color of a journal indicates the cluster to which it belongs. The map was produced using the VOSviewer software

Results

As discussed in the previous section, we can treat LIS either as a single integrated field or as a field consisting of three separate subfields (i.e., library science, information science, and scientometrics). In the latter case, the recursive MNCS indicator normalizes for differences among the three subfields in the average number of citations per publication. Below, we first present the results obtained when LIS is treated as a single integrated field. We then present the results obtained when LIS is treated as a field consisting of three separate subfields. We also present a comparison of the results obtained using the two approaches. We note that all correlations that we report are Spearman rank correlations. Furthermore, we emphasize once more that in our analysis citations given by publications outside our set of 12,202 publications are not taken into account.
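For readers who want to reproduce rank correlations of this kind, a minimal sketch of Spearman's rank correlation follows: the Pearson correlation of the ranks, with tied values receiving their average rank. The function names are our own; in practice, `scipy.stats.spearmanr` computes the same statistic.

```python
def average_ranks(values):
    """Rank values from 1 to n, giving tied values their average rank."""
    order = sorted(range(len(values)), key=lambda i: values[i])
    ranks = [0.0] * len(values)
    i = 0
    while i < len(order):
        j = i
        # extend j over the run of values tied with values[order[i]]
        while j + 1 < len(order) and values[order[j + 1]] == values[order[i]]:
            j += 1
        avg = (i + j) / 2 + 1  # sorted positions i..j share ranks i+1..j+1
        for m in range(i, j + 1):
            ranks[order[m]] = avg
        i = j + 1
    return ranks

def spearman(x, y):
    """Spearman's rho as the Pearson correlation of the average ranks."""
    rx, ry = average_ranks(x), average_ranks(y)
    n = len(x)
    mx, my = sum(rx) / n, sum(ry) / n
    cov = sum((a - mx) * (b - my) for a, b in zip(rx, ry))
    vx = sum((a - mx) ** 2 for a in rx)
    vy = sum((b - my) ** 2 for b in ry)
    return cov / (vx * vy) ** 0.5

print(spearman([1, 2, 3, 4, 5], [5, 6, 7, 8, 7]))  # one tie in the second list
```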

Single integrated LIS field

We first consider the case of a single integrated LIS field. The recursive MNCS indicator is said to have converged for a certain α if there is virtually no difference between values of the αth-order MNCS indicator and values of the (α + 1)th-order MNCS indicator. For our data, convergence of the recursive MNCS indicator can be observed for α = 20. In our analysis, our main focus therefore is on comparing the first-order MNCS indicator (i.e., the ordinary non-recursive MNCS indicator) with the 20th-order MNCS indicator.
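The recursion as a whole, including the convergence check, can be sketched by chaining (5) to (10) and stopping once two successive orders agree up to a tolerance. This is a sketch under stated assumptions, not the authors' implementation: the tolerance `tol`, the cap `max_order`, the data layout, and the four-publication network are all hypothetical, and every field is assumed to have a positive mean score. The paper itself only reports that convergence was observed for α = 20 on its data.

```python
def recursive_journal_mncs(pubs, citations, max_order=100, tol=1e-6):
    """Iterate the journal MNCS order by order until successive orders agree.

    pubs: dict publication -> (journal, field); citations: (cited, citing) pairs.
    Returns (alpha, {journal: MNCS}) for the first alpha whose values differ
    from the (alpha - 1)th order by less than tol, or for alpha = max_order.
    Assumes every field has a positive mean score at every order.
    """
    prev = None  # (alpha - 1)th-order journal MNCS; None at alpha = 1
    for alpha in range(1, max_order + 1):
        # Eqs. (5)-(6): citations weighted by the citing journal's previous MNCS
        score = {p: 0.0 for p in pubs}
        for cited, citing in citations:
            score[cited] += 1.0 if prev is None else prev[pubs[citing][0]]
        # Eq. (7): mean score per field
        tot, cnt = {}, {}
        for p, (_, k) in pubs.items():
            tot[k] = tot.get(k, 0.0) + score[p]
            cnt[k] = cnt.get(k, 0) + 1
        mean = {k: tot[k] / cnt[k] for k in tot}
        # Eqs. (8)-(10): normalized scores averaged per journal
        jsum, jcnt = {}, {}
        for p, (j, k) in pubs.items():
            jsum[j] = jsum.get(j, 0.0) + score[p] / mean[k]
            jcnt[j] = jcnt.get(j, 0) + 1
        cur = {j: jsum[j] / jcnt[j] for j in jsum}
        if prev is not None and max(abs(cur[j] - prev[j]) for j in cur) < tol:
            return alpha, cur
        prev = cur
    return max_order, prev

# Hypothetical toy network: two journals in a single field
pubs = {"p1": ("J1", "LIS"), "p2": ("J1", "LIS"),
        "p3": ("J2", "LIS"), "p4": ("J2", "LIS")}
cites = [("p1", "p3"), ("p1", "p4"), ("p3", "p2"),
         ("p4", "p1"), ("p2", "p3"), ("p2", "p1")]
print(recursive_journal_mncs(pubs, cites, max_order=1))  # first-order MNCS
print(recursive_journal_mncs(pubs, cites))
```

For this made-up network, the first-order values are 4/3 and 2/3, after which the two journal values alternate around 1.2 and 0.8 with a shrinking gap, so the loop stops well before `max_order`. This is a property of the toy data only, not a claim about the LIS data.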

In Table 4, we list the top 10 journals according to both the first-order MNCS indicator and the 20th-order MNCS indicator. In the case of the first-order MNCS indicator, the top 10 consists of journals from all three subfields. However, journals from the information science and scientometrics subfields seem to slightly dominate journals from the library science subfield. There are three library science journals in the top 10, at ranks 4, 8, and 10. Given that more than half of the journals in our analysis belong to the library science subfield (27 of the 48 journals), the library science journals seem to be underrepresented in the top 10. Also, within the top 10, the three library science journals have relatively low ranks.

Table 4.

Top 10 journals according to both the first-order MNCS indicator and the 20th-order MNCS indicator

| Journal | MNCS (α = 1) | Journal | MNCS (α = 20) |
|---|---|---|---|
| Journal of Informetrics | 4.49 | Journal of Informetrics | 12.32 |
| Annual Review of Information Science and Technology | 2.97 | Annual Review of Information Science and Technology | 3.79 |
| Journal of the American Society for Information Science | 2.35 | Scientometrics | 3.17 |
| Interlending & Document Supply | 1.94 | Journal of the American Society for Information Science | 2.72 |
| Journal of the American Society for Information Science and Technology | 1.84 | Journal of the American Society for Information Science and Technology | 2.36 |
| Scientometrics | 1.72 | Journal of Documentation | 1.36 |
| Journal of Documentation | 1.58 | Information Processing & Management | 1.21 |
| College & Research Libraries | 1.33 | Journal of Information Science | 0.96 |
| Information Processing & Management | 1.21 | Library & Information Science Research | 0.82 |
| Library & Information Science Research | 1.17 | Research Evaluation | 0.81 |

LIS is treated as a single integrated field in the calculation of the indicators

We now turn to the top 10 journals according to the 20th-order MNCS indicator. This top 10 provides a much more extreme picture: it is almost completely dominated by information science and scientometrics journals. Only one library science journal is left, at rank 9. Moreover, the values of the MNCS indicator differ greatly within the top 10. The extremely high value for Journal of Informetrics, the highest ranked journal, is especially striking. At 12.32, it is more than three times the value of 3.79 for Annual Review of Information Science and Technology, the second-highest ranked journal.

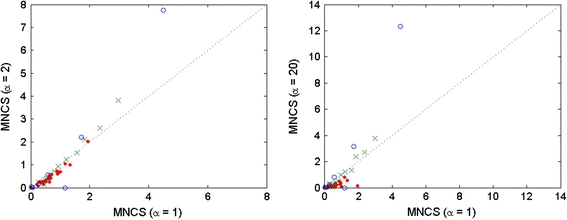

In Fig. 2, the first-order MNCS indicator is compared with the second-order MNCS indicator (left panel) and the 20th-order MNCS indicator (right panel) for the 48 LIS journals in our data set. As can be seen in the figure, the differences between the first- and the second-order MNCS indicator are relatively small, although one journal (Journal of Informetrics) benefits considerably from moving from the first-order to the second-order MNCS indicator. The differences between the first- and the 20th-order MNCS indicator are much larger. As was also seen in Table 4, a number of journals have a higher value for the 20th-order MNCS indicator than for the first-order MNCS indicator. These are all information science and scientometrics journals. The library science journals all turn out to have a lower value for the 20th-order MNCS indicator than for the first-order MNCS indicator.

Fig. 2.

Comparison of the first-order MNCS indicator with the second-order MNCS indicator (left panel; ρ = 0.90) and the 20th-order MNCS indicator (right panel; ρ = 0.75) for 48 LIS journals. LIS is treated as a single integrated field in the calculation of the indicators. Library science, information science, and scientometrics journals are indicated by, respectively, red points, green crosses, and blue circles. (Color figure online)

In addition to journals, we also consider research institutes in LIS. We restrict our analysis to the 86 institutes that have at least 25 publications in our data set. (Recall that publications are counted fractionally.) The top 10 institutes according to both the first-order MNCS indicator and the 20th-order MNCS indicator are listed in Table 5. Comparing the results of the two MNCS indicators, it is clear that institutes that are mainly active in the scientometrics subfield benefit a lot from the use of a higher-order MNCS indicator. For these institutes, the value of the 20th-order MNCS indicator tends to be much higher than the value of the first-order MNCS indicator. This is consistent with our above analysis for LIS journals, where we found that the two most important scientometrics journals (Journal of Informetrics and Scientometrics) benefit considerably from the use of a higher-order MNCS indicator.

Table 5.

Top 10 research institutes according to both the first-order MNCS indicator and the 20th-order MNCS indicator

| Institute | MNCS (α = 1) | Institute | MNCS (α = 20) |
|---|---|---|---|
| Leiden Univ | 3.85 | Univ Antwerp | 8.64 |
| Univ Antwerp | 3.77 | Hungarian Acad Sci | 7.92 |
| Hungarian Acad Sci | 3.70 | Univ Amsterdam | 7.45 |
| Univ Amsterdam | 3.53 | Leiden Univ | 7.43 |
| Royal Sch Lib & Informat Sci | 3.20 | Limburg Univ Ctr | 5.94 |
| Indiana Univ | 2.52 | Kathol Univ Leuven | 5.19 |
| Hebrew Univ Jerusalem | 2.51 | Indiana Univ | 3.56 |
| Kathol Univ Leuven | 2.48 | Univ Wolverhampton | 3.13 |
| Univ Tennessee, Knoxville | 2.39 | Royal Sch Lib & Informat Sci | 3.07 |
| Univ Bar Ilan | 2.35 | Hebrew Univ Jerusalem | 2.72 |

LIS is treated as a single integrated field in the calculation of the indicators

Three separate LIS subfields

We now consider the case in which LIS is divided into three separate subfields (i.e., library science, information science, and scientometrics). In the calculation of the recursive MNCS indicator, a normalization is performed to correct for differences among the three subfields in the average number of citations per publication. As in the analysis above, we focus mainly on comparing the first-order MNCS indicator with the 20th-order MNCS indicator.

Table 6 shows the top 10 journals according to both the first-order MNCS indicator and the 20th-order MNCS indicator. This table is similar to Table 4, except that in the calculation of the indicators LIS is treated as a field consisting of three separate subfields rather than as a single integrated field. Comparing Table 6 with Table 4, it can be seen that library science journals now play a much more prominent role, both in the case of the first-order MNCS indicator and in the case of the 20th-order MNCS indicator. As a consequence, the top 10 now looks much more balanced for both MNCS indicators. Also, unlike in Table 4, no journal in Table 6 has an extremely high value for the 20th-order MNCS indicator.

Table 6.

Top 10 journals according to both the first-order MNCS indicator and the 20th-order MNCS indicator

| Journal | MNCS (α = 1) | Journal | MNCS (α = 20) |
|---|---|---|---|
| Journal of Informetrics | 3.07 | Interlending & Document Supply | 5.25 |
| Annual Review of Information Science and Technology | 2.62 | Journal of Informetrics | 4.20 |
| Interlending & Document Supply | 2.46 | Annual Review of Information Science and Technology | 3.40 |
| College & Research Libraries | 1.90 | College & Research Libraries | 1.83 |
| Library & Information Science Research | 1.67 | Journal of the American Society for Information Science and Technology | 1.81 |
| Journal of the American Society for Information Science and Technology | 1.66 | Library & Information Science Research | 1.75 |
| Journal of the American Society for Information Science | 1.44 | Serials Review | 1.52 |
| Serials Review | 1.39 | Journal of the American Society for Information Science | 1.52 |
| Portal-Libraries and the Academy | 1.36 | Learned Publishing | 1.37 |
| Journal of Documentation | 1.35 | Journal of Documentation | 1.26 |

LIS is treated as a field consisting of three separate subfields in the calculation of the indicators

As can be seen in Table 6, the journal with the highest value for the 20th-order MNCS indicator is Interlending & Document Supply. We investigated this journal in more detail and found that each issue of the journal contains a review article entitled “Interlending and document supply: A review of the recent literature”. These review articles refer to other articles published in the same issue of the journal. Clearly, the practice of publishing these review articles is an important contributing factor to the journal’s top ranking in Table 6.

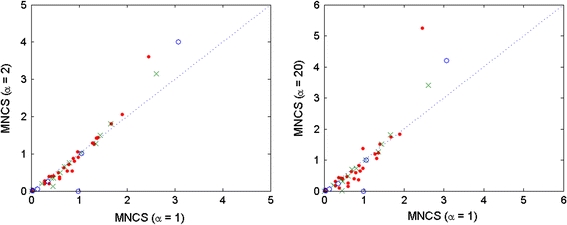

In Fig. 3, a comparison is presented of the first-order MNCS indicator with the second-order MNCS indicator (left panel) and the 20th-order MNCS indicator (right panel) for the 48 LIS journals in our data set. The figure shows that most of the differences between the first-order MNCS indicator and the higher-order MNCS indicators are not very large, especially when compared with the differences shown in Fig. 2. The journal that is most sensitive to the use of a higher-order MNCS indicator is Interlending & Document Supply. As pointed out above, this journal has quite special citation characteristics, which explains its sensitivity to the use of a higher-order MNCS indicator.

Fig. 3.

Comparison of the first-order MNCS indicator with the second-order MNCS indicator (left panel; ρ = 0.93) and the 20th-order MNCS indicator (right panel; ρ = 0.89) for 48 LIS journals. LIS is treated as a field consisting of three separate subfields in the calculation of the indicators. Library science, information science, and scientometrics journals are indicated by, respectively, red points, green crosses, and blue circles. (Color figure online)

Results for research institutes in LIS are reported in Table 7, which shows the top 10 institutes according to both the first-order MNCS indicator and the 20th-order MNCS indicator. As in Table 5, the top of the ranking is dominated by institutes with a strong focus on scientometrics research. This is the case both for the first-order MNCS indicator and for the 20th-order MNCS indicator. However, comparing Table 7 with Table 5, it can be seen that the MNCS values of the scientometrics institutes have decreased considerably, especially for the 20th-order MNCS indicator. Hence, although the scientometrics institutes still occupy the top positions in the ranking, the differences with the other institutes have become smaller.

Table 7.

Top 10 research institutes according to both the first-order MNCS indicator and the 20th-order MNCS indicator

| Institute | MNCS (α = 1) | Institute | MNCS (α = 20) |
|---|---|---|---|
| Univ Amsterdam | 3.04 | Univ Amsterdam | 3.64 |
| Univ Antwerp | 2.79 | Univ Antwerp | 3.63 |
| Royal Sch Lib & Informat Sci | 2.67 | Limburg Univ Ctr | 2.84 |
| Leiden Univ | 2.60 | Leiden Univ | 2.74 |
| Hungarian Acad Sci | 2.44 | Hungarian Acad Sci | 2.58 |
| Cornell Univ | 2.34 | Indiana Univ | 2.54 |
| Indiana Univ | 2.26 | Univ Tennessee, Knoxville | 2.46 |
| Univ Tennessee, Knoxville | 2.23 | Univ Coll Dublin | 2.40 |
| Hebrew Univ Jerusalem | 2.02 | Cornell Univ | 2.34 |
| Univ Wolverhampton | 1.97 | Royal Sch Lib & Informat Sci | 2.32 |

LIS is treated as a field consisting of three separate subfields in the calculation of the indicators

Comparison

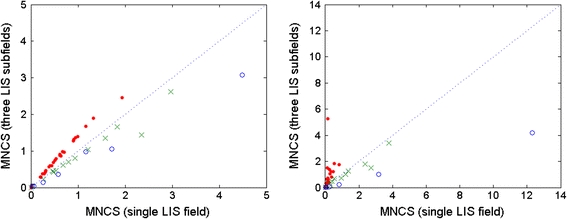

In Fig. 4, we directly compare the results obtained when LIS is treated as a single integrated field with those obtained when LIS is treated as a field consisting of three separate subfields. The comparison is made for the 48 LIS journals in our data set. Figure 4 clearly shows how treating LIS as a single integrated field benefits the scientometrics journals and harms the library science journals. In addition, the figure shows that this effect is strongly reinforced when the 20th-order MNCS indicator is used instead of the first-order MNCS indicator.

Fig. 4.

Comparison of the MNCS indicator when LIS is treated as a single integrated field with the MNCS indicator when LIS is treated as a field consisting of three separate subfields. The comparison is made for 48 LIS journals, and either the first-order MNCS indicator (left panel; ρ = 0.96) or the 20th-order MNCS indicator (right panel; ρ = 0.77) is used. Library science, information science, and scientometrics journals are indicated by, respectively, red points, green crosses, and blue circles. (Color figure online)

Discussion and conclusion

Recursive bibliometric indicators are based on the idea that citations should be weighed differently depending on the source from which they originate. Citations from a prestigious journal, for instance, should have more weight than citations from an obscure journal. It is sometimes argued that by weighing citations differently depending on their source it is possible to measure not just the popularity of publications but also their prestige.

In this paper, we have combined the idea of recursive citation weighing with the idea of using a classification scheme to normalize citation counts for differences among fields. The combination of these two ideas has not been explored before. Although each idea can be quite useful on its own, our empirical analysis for the field of LIS indicates that combining them does not yield satisfactory results. Our analysis leads to two main observations. First, our proposed recursive MNCS indicator is highly sensitive to the way in which fields are defined in the classification scheme that one uses. Second, if within a field there are subfields with significantly different citation characteristics, the recursive MNCS indicator will be strongly biased in favor of the subfields with the highest density of citations.

The sensitivity of bibliometric indicators to the field classification scheme that is used for normalizing citation counts has been investigated in various studies (Adams et al. 2008; Bornmann et al. 2008; Neuhaus and Daniel 2009; Van Leeuwen et al. 2009; Zitt et al. 2005). Our empirical results for the first-order MNCS indicator (i.e., the ordinary non-recursive MNCS indicator) are in line with earlier studies that have reported a significant sensitivity of bibliometric indicators to the classification scheme that is used. For the 20th-order MNCS indicator, this sensitivity turns out to be much higher still. Treating LIS as a single integrated field or as a field consisting of three separate subfields yields very different results for the 20th-order MNCS indicator.

If a field as defined in the classification scheme is heterogeneous in terms of citation characteristics, bibliometric indicators will be biased in favor of subfields with a higher citation density and against subfields with a lower citation density. This is a general problem of bibliometric indicators that use a classification scheme to normalize citation counts. In the case of the recursive MNCS indicator, our empirical results show that recursive citation weighing strongly reinforces biases caused by the classification scheme. Within the field of LIS, the scientometrics subfield has the highest citation density, followed by the information science subfield, while the library science subfield has the lowest. When LIS is treated as a single integrated field, the differences in citation density among the three subfields bias both the first-order and the 20th-order MNCS indicator in favor of scientometrics and against library science. However, the bias is much stronger for the 20th-order MNCS indicator than for the first-order one. For instance, in the case of the 20th-order MNCS indicator, library science journals are completely dominated by journals in scientometrics and information science.
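The reinforcement effect can be reproduced on a small hypothetical example. The Python sketch below assumes a recursion in which each citation is weighed by the previous-order MNCS value of the citing journal and weighted citation counts are normalized by their mean within a field. The functions `recursive_mncs` and `toy_network`, the journals A1, A2 (a densely citing subfield A) and B1, B2 (a sparsely citing subfield B), and all citations are invented for illustration; they are not the LIS data analyzed in this paper. The indicator is computed once with A and B merged into a single field and once with A and B treated as separate fields.

```python
from collections import defaultdict


def recursive_mncs(journal_of, field_of, citations, order):
    """Return the order-th-order MNCS of every journal (hypothetical sketch).

    Assumed definition: each citation is weighed by the previous-order MNCS of
    the citing journal, and weighted citation counts are divided by their mean
    within the publication's field. Order 0 gives every journal the value 1.

    journal_of: dict publication -> journal
    field_of:   dict publication -> field (exactly one field per publication)
    citations:  list of (citing, cited) pairs; both ends must be in journal_of,
                so that the citing journal's MNCS value is known
    """
    journals = set(journal_of.values())
    mncs = {j: 1.0 for j in journals}  # order 0: every citation has weight 1
    for _ in range(order):
        # Weighted citation count: each citation adds the citing journal's score.
        weighted = {p: 0.0 for p in journal_of}
        for citing, cited in citations:
            weighted[cited] += mncs[journal_of[citing]]
        # Expected value: mean weighted citation count within each field.
        total, size = defaultdict(float), defaultdict(int)
        for p, f in field_of.items():
            total[f] += weighted[p]
            size[f] += 1
        expected = {f: total[f] / size[f] for f in total}
        # Journal score: mean normalized score of the journal's publications.
        score_sum, n_pubs = defaultdict(float), defaultdict(int)
        for p, j in journal_of.items():
            e = expected[field_of[p]]
            score_sum[j] += (weighted[p] / e) if e > 0 else 0.0  # uncited field -> 0
            n_pubs[j] += 1
        mncs = {j: score_sum[j] / n_pubs[j] for j in journals}
    return mncs


def toy_network():
    """Hypothetical data: two journals per subfield, two publications per journal."""
    journal_of = {f"{j}-{k}": j for j in ("A1", "A2", "B1", "B2") for k in range(2)}
    citations = []
    # Subfield A (dense): every A1 publication cites both A2 publications, and vice versa.
    for src, dst in (("A1", "A2"), ("A2", "A1")):
        citations += [(f"{src}-{s}", f"{dst}-{d}") for s in range(2) for d in range(2)]
    # Subfield B (sparse): only B1-0 and B2-0 cite each other.
    citations += [("B1-0", "B2-0"), ("B2-0", "B1-0")]
    return journal_of, citations


journal_of, citations = toy_network()
merged = {p: "AB" for p in journal_of}  # A and B classified as one field
split = {p: p[0] for p in journal_of}   # A and B classified as separate fields
for order in (1, 2, 3, 20):
    m = recursive_mncs(journal_of, merged, citations, order)
    s = recursive_mncs(journal_of, split, citations, order)
    print(f"order {order:2d}  merged: A1={m['A1']:.4f} B1={m['B1']:.2e}  "
          f"split: A1={s['A1']:.4f} B1={s['B1']:.4f}")
```

Under these assumptions, the merged classification gives A journals an MNCS four times that of B journals at first order, and the ratio is multiplied by four at each further order, so B journals are driven toward zero. With the split classification every journal has an MNCS of 1 at every order, because the two journals within each subfield are symmetric. The recursion thus amplifies a bias that the first-order indicator already contains, rather than creating a new one.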

Based on the above observations, we advise against introducing recursiveness into bibliometric indicators that use a field classification scheme for normalizing citation counts (such as the MNCS indicator). Instead of providing more sophisticated measurements of citation impact (measurements of ‘prestige’ rather than ‘popularity’), the main effect of introducing recursiveness is to reinforce biases caused by the way in which fields are defined in the classification scheme. Although our negative results may partly relate to specific characteristics of the MNCS indicator, we expect our general conclusion to hold for all indicators that normalize citation counts based on a field classification scheme.

Open Access

This article is distributed under the terms of the Creative Commons Attribution Noncommercial License which permits any noncommercial use, distribution, and reproduction in any medium, provided the original author(s) and source are credited.

Footnotes

However, a first step in the direction of such indicators was taken by Van Leeuwen et al. (2003). They proposed an indicator that weighs citations by the average field-normalized number of citations per publication of the citing journal.

For simplicity, we assume that fields are non-overlapping. A publication therefore always belongs to exactly one field.

In the case of citations given by publications outside the set of 12,202 publications, we do not know the MNCS value of the citing journal. We need to know the MNCS value of the citing journal in order to calculate the recursive MNCS indicator. In general, when calculating recursive indicators, the set of citing journals and the set of cited journals need to coincide.

We also tried using co-citation data, but this gave less satisfactory results.

Contributor Information

Ludo Waltman, Email: waltmanlr@cwts.leidenuniv.nl.

Erjia Yan, Email: eyan@indiana.edu.

Nees Jan van Eck, Email: ecknjpvan@cwts.leidenuniv.nl.

References

- Adams J, Gurney K, Jackson L. Calibrating the zoom—A test of Zitt’s hypothesis. Scientometrics. 2008;75(1):81–95. doi: 10.1007/s11192-007-1832-7.

- Bergstrom CT. Eigenfactor: Measuring the value and prestige of scholarly journals. College and Research Libraries News. 2007;68(5):314–316.

- Bollen J, Rodriguez MA, Van de Sompel H. Journal status. Scientometrics. 2006;69(3):669–687. doi: 10.1007/s11192-006-0176-z.

- Bornmann L, Mutz R, Neuhaus C, Daniel H-D. Citation counts for research evaluation: Standards of good practice for analyzing bibliometric data and presenting and interpreting results. Ethics in Science and Environmental Politics. 2008;8(1):93–102. doi: 10.3354/esep00084.

- Braun T, Glänzel W. United Germany: The new scientific superpower? Scientometrics. 1990;19(5–6):513–521. doi: 10.1007/BF02020712.

- Brin S, Page L. The anatomy of a large-scale hypertextual Web search engine. Computer Networks and ISDN Systems. 1998;30(1–7):107–117. doi: 10.1016/S0169-7552(98)00110-X.

- Chen P, Xie H, Maslov S, Redner S. Finding scientific gems with Google’s PageRank algorithm. Journal of Informetrics. 2007;1(1):8–15. doi: 10.1016/j.joi.2006.06.001.

- Glänzel W, Schubert A, Thijs B, Debackere K. A priori vs. a posteriori normalisation of citation indicators. The case of journal ranking. Scientometrics. 2011;87(2):415–424. doi: 10.1007/s11192-011-0345-6.

- González-Pereira B, Guerrero-Bote VP, Moya-Anegón F. A new approach to the metric of journals’ scientific prestige: The SJR indicator. Journal of Informetrics. 2010;4(3):379–391. doi: 10.1016/j.joi.2010.03.002.

- Leydesdorff L, Bornmann L. How fractional counting of citations affects the impact factor: Normalization in terms of differences in citation potentials among fields of science. Journal of the American Society for Information Science and Technology. 2011;62(2):217–229. doi: 10.1002/asi.21450.

- Lundberg J. Lifting the crown—Citation z-score. Journal of Informetrics. 2007;1(2):145–154. doi: 10.1016/j.joi.2006.09.007.

- Ma N, Guan J, Zhao Y. Bringing PageRank to the citation analysis. Information Processing and Management. 2008;44(2):800–810. doi: 10.1016/j.ipm.2007.06.006.

- Moed HF. Measuring contextual citation impact of scientific journals. Journal of Informetrics. 2010;4(3):265–277. doi: 10.1016/j.joi.2010.01.002.

- Moed HF, De Bruin RE, Van Leeuwen TN. New bibliometric tools for the assessment of national research performance: Database description, overview of indicators and first applications. Scientometrics. 1995;33(3):381–422. doi: 10.1007/BF02017338.

- Neuhaus C, Daniel H-D. A new reference standard for citation analysis in chemistry and related fields based on the sections of Chemical Abstracts. Scientometrics. 2009;78(2):219–229. doi: 10.1007/s11192-007-2007-2.

- Pinski G, Narin F. Citation influence for journal aggregates of scientific publications: Theory, with application to the literature of physics. Information Processing and Management. 1976;12(5):297–312. doi: 10.1016/0306-4573(76)90048-0.

- Radicchi F, Fortunato S, Markines B, Vespignani A. Diffusion of scientific credits and the ranking of scientists. Physical Review E. 2009;80(5):056103. doi: 10.1103/PhysRevE.80.056103.

- Van Eck NJ, Waltman L. Software survey: VOSviewer, a computer program for bibliometric mapping. Scientometrics. 2010;84(2):523–538. doi: 10.1007/s11192-009-0146-3.

- Van Leeuwen TN, Calero Medina C. Redefining the field of economics: Improving field normalization for the application of bibliometric techniques in the field of economics. In: Larsen B, Leta J, editors. Proceedings of the 12th International Conference on Scientometrics and Informetrics. 2009. p. 410–420.

- Van Leeuwen TN, Visser MS, Moed HF, Nederhof TJ, Van Raan AFJ. The Holy Grail of science policy: Exploring and combining bibliometric tools in search of scientific excellence. Scientometrics. 2003;57(2):257–280. doi: 10.1023/A:1024141819302.

- Walker D, Xie H, Yan K-K, Maslov S. Ranking scientific publications using a model of network traffic. Journal of Statistical Mechanics: Theory and Experiment. 2007:P06010.

- Waltman L, Van Eck NJ. The relation between Eigenfactor, audience factor, and influence weight. Journal of the American Society for Information Science and Technology. 2010;61(7):1476–1486. doi: 10.1002/asi.21354.

- Waltman L, Van Eck NJ. A general source normalized approach to bibliometric research performance assessment. In: Book of Abstracts of the 11th International Conference on Science and Technology Indicators. 2010b. p. 298–299.

- Waltman L, Van Eck NJ, Noyons ECM. A unified approach to mapping and clustering of bibliometric networks. Journal of Informetrics. 2010;4(4):629–635. doi: 10.1016/j.joi.2010.07.002.

- Waltman L, Van Eck NJ, Van Leeuwen TN, Visser MS, Van Raan AFJ. Towards a new crown indicator: Some theoretical considerations. Journal of Informetrics. 2011;5(1):37–47. doi: 10.1016/j.joi.2010.08.001.

- West JD, Bergstrom TC, Bergstrom CT. The Eigenfactor metrics: A network approach to assessing scholarly journals. College and Research Libraries. 2010;71(3):236–244.

- Yan E, Ding Y. Weighted citation: An indicator of an article’s prestige. Journal of the American Society for Information Science and Technology. 2010;61(8):1635–1643.

- Zitt M. Citing-side normalization of journal impact: A robust variant of the Audience Factor. Journal of Informetrics. 2010;4(3):392–406. doi: 10.1016/j.joi.2010.03.004.

- Zitt M, Ramanana-Rahary S, Bassecoulard E. Relativity of citation performance and excellence measures: From cross-field to cross-scale effects of field-normalisation. Scientometrics. 2005;63(2):373–401. doi: 10.1007/s11192-005-0218-y.

- Zitt M, Small H. Modifying the journal impact factor by fractional citation weighting: The audience factor. Journal of the American Society for Information Science and Technology. 2008;59(11):1856–1860. doi: 10.1002/asi.20880.

- Życzkowski K. Citation graph, weighted impact factors and performance indices. Scientometrics. 2010;85(1):301–315. doi: 10.1007/s11192-010-0208-6.