Abstract

One approach to gauge the complexity of the computational problem underlying haptic perception is to determine the number of dimensions needed to describe it. In vision, the number of dimensions can be estimated to be seven. This observation raises the question of what is the number of dimensions needed to describe touch. Only with certain simplified representations of mechanical interactions can this number be estimated, because it is in general infinite. Organisms must be sensitive to considerably reduced subsets of all possible measurements. These reductions are discussed by considering the sensing apparatuses of some animals and the underlying mechanisms of two haptic illusions.

Keywords: haptic perception, plenhaptic function, tactile illusions

1. Introduction

The haptic system is astonishingly capable. It operates on time and length scales that overlap those accessible to vision or audition [1–3], and performs functions that may be compared with those of vision and audition [4–7]. Research has produced many results regarding the perceptual capabilities of touch, and indications regarding its underlying mechanisms, but the computational nature of haptic perception has not yet been considered. A first step in scoping the computational problem solved by the nervous system during haptic processing is to evaluate the number of coordinates that must be considered, a question that is examined in the present article.

Early authors who considered this question agree that the number of coordinates needed to describe mechanical sensory interactions is many times larger than three or four [8,9]. They have further noted that the experience that we derive from touching objects seems to take place in a space that has only a few dimensions. As far as haptic shape is concerned, for instance, objects seem to exist in three dimensions. Similar observations could be made about object attributes such as heaviness, roughness, silkiness or any other perceptual aspect of things we touch, all of which seem to exist in a few dimensions.

To evaluate the number of coordinates of the space in which haptic interaction takes place, one option would be to count the number of sensory and motor units in an organism that can independently respond to commands and to stimulation, and to assign one coordinate to each unit. This approach, however, does not directly address the question of how difficult the task of perception is. It refers rather to the motor and sensory capacities of an organism. Another approach is to enumerate the coordinates needed to describe all possible sensorimotor interactions. In vision, this approach leads to the notion of the ‘plenoptic function’ [10]. This function can be found by asking ‘what can potentially be seen’.

If one assumes that the intensity of a light ray is all that there is to measure, then considering the intensity of all the possible light rays captured from all directions inside a volume gives a scalar function written p(l, v, λ, t), where l ∈ S² indicates a viewing direction, v ∈ ℝ³ a viewing position, λ ∈ ℝ⁺ a wavelength and t ∈ ℝ time, and that has the value of an intensity.

Seeing and looking at everything, therefore, requires a space of at least seven dimensions, a very large space that is much larger than the space with four dimensions that is sometimes assumed. If we do not account for the polarization of light, the measurements are along one dimension. Yet, we do not perceive optical objects in these seven coordinates on which intensity depends. With touch, like with vision or audition, we are not at all aware of the nearly instantaneous reduction of dimensions taking place in the nervous system as we move around, seeing, feeling and hearing objects, but this reduction, clearly, is considerable.
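The count of seven can be checked by adding the dimensions of the arguments of p(l, v, λ, t). The tally below only restates that arithmetic, using the standard facts that a direction on the unit sphere takes two angles and a position in a volume takes three coordinates.

```latex
\[
\underbrace{2}_{\text{direction } l \,\in\, S^{2}}
+ \underbrace{3}_{\text{position } v}
+ \underbrace{1}_{\text{wavelength } \lambda}
+ \underbrace{1}_{\text{time } t}
= 7 .
\]
```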

The manner in which the visual space is sampled is a characteristic of each seeing organism, or machine, which is evident in the great variety of visual organs observed in animals, providing them with a multiplicity of perceptual options. All seeing organisms, nevertheless, sense a sampling of low-dimensional projections of the plenoptic function spanned by an intensity and a direction. The space of all that they can see still has seven dimensions.

Similar concepts applied to determining the dimensionality of haptic perception through a ‘plenhaptic function’, and its possible reductions, require a different approach because mechanics are different from optics. The scope of this article is limited to a discussion of this problem from the viewpoint of mechanics, and excludes the direct investigation of the perceptual processes presumably taking place in the nervous system. Doing justice to them would require a different level of analysis.

2. What can potentially be felt

It is thus reasonable to ask ‘what can potentially be felt’. With haptics, sensory interaction comes from the contact between probes and objects. All probes and objects deform and are displaced by such interactions, and it is their movement and deformations that make sensing possible. If probes are assumed to be rigid, then they are useless as mechanical sensors.

When considering all that can be felt, difficulties arise if only a finite set of coordinates is allowed. The problem is rooted in the fact that, although the movements of rigid bodies can be described with a finite set of coordinates, the mechanical world, including the perceiving organism, is also made of deformable solids, liquids, gases and of things in-between, such as sand, mud, slime and materials having complex rheological properties.

(a). Initial assumption: no interpenetration

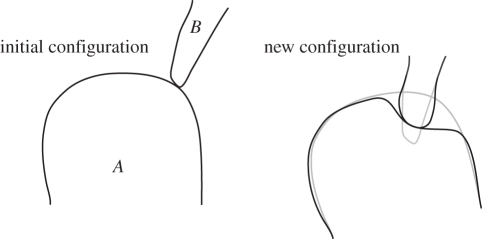

Absence of interpenetration during interaction makes it possible to suggest a first version of the general description of all that can potentially be felt. By analogy with the Lagrangian description of continuum mechanics, the expression a = h_{A,B}(b) represents the displacements of all the points of an object contained in a domain, A, such that it becomes a displaced and deformed object once touched by a probe contained in a domain, B (figure 1). The probing object, conversely, displaces and deforms from configuration B to end up in a new configuration, so we also have b = h_{B,A}(a).

Figure 1.

Interaction between two solid objects through contact. The grey outline represents the shape of the undeformed objects at the instant of initial contact. During interaction, both objects displace and deform from an initial configuration to another configuration.

In its general form, h maps a continuum of point trajectories, b, into another continuum of point trajectories, a, in almost arbitrary ways, which requires consideration of an infinite number of coordinates. Moreover, we neglect the possibility that an object can deform under its own agency or under the effect of forces acting at a distance, such as gravity, as the ‘plenhaptic function’ is meant to represent the consequences of contact only.

The perceptual problem, from the probe's perspective, may be viewed to be the computation of some aspects of the mechanics of A from the measurements made by the probe's sensors, such measurements relating to b, only. For example, the perceiver may wish to obtain an estimate of the shape of A, that is, of its frontier. Because this form of the plenhaptic function is far too general to be practically useful, it is natural to consider simplifying assumptions.

(b). Possible assumption: local deformation

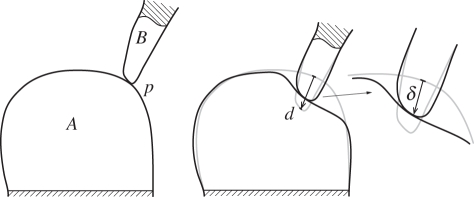

The assumption that deformations vanish sufficiently far away from a contact allows the plenhaptic function to be simplified (an assumption known as Saint-Venant's principle). The relative movements of the objects in contact can then be separated into a rigid component and a deformation component, as in figure 2. The deformation component occurs in a volume that is much smaller than the domain considered. The validity of this assumption depends in particular on a restriction to small displacements, which, at a proper scale, is applicable to many tactile situations.

Figure 2.

Interaction that is separated into global and local components. In this instance, the rigid displacement, d, transports the point of initial contact from one undeformed object to the other.

The crucial step here is to approximate the relationship between the movements of an infinite number of points of the probe and the movements of an infinite number of points of the touched object with a simpler relationship. This approximation can relate the rigid displacement of the probe to the deflection of a single point of the object. The relative movement of the probe and the object is reduced to a rigid displacement and the deformation of the touched object no longer depends on a continuum of points, but is represented by a single vector, δ, that represents the displacement of the initial point of contact, p, on the touched object (figure 2).

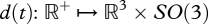

Since the result depends on where the objects come into contact, both on the probe and on the touched object, we have a simplified plenhaptic function of the form δ = h(p, d(t)), where d(t) represents the relative rigid displacement trajectories of the two bodies and p where they touch. This approximation is somewhat arbitrary and similar alternatives are possible. For instance, the displacement of points other than the point of contact could be used to represent the consequences of contact. Alternatively, it would be possible to report the displacement of the initial point of contact relative to the probe. To be sufficiently general, it is necessary to consider a multiplicity of simultaneous contacts according to the length scale at which the analysis is performed.

A simplified plenhaptic function of the form δ = h(p, d(t)) has the advantage of involving a finite number of coordinates. To further simplify, we can replace the trajectory, d(t), by a local approximation comprising a displacement and a velocity, giving h(p, d, ḋ, t), or h(p, d, t) for the quasi-static version. It is only at this level of simplification, the validity of which depends on the length and time scale considered, that the plenhaptic function could possibly be compared with the plenoptic function.
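As a rough illustration of how the coordinates of the quasi-static form h(p, d, t) might add up, the tally below makes two assumptions that are not stated in the text: that p is given by two surface coordinates on each of the two bodies, and that d is a general rigid displacement with six coordinates (three translations and three rotations).

```latex
\[
\underbrace{2 + 2}_{\text{contact location } p}
+ \underbrace{6}_{\text{rigid displacement } d}
+ \underbrace{1}_{\text{time } t}
= 11 .
\]
```

Under the same assumptions, including a velocity alongside the displacement would contribute six further coordinates. Other parametrizations of p and d would give different totals.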

(c). Possible alternatives using local deformation

Other approximations should be invoked to obtain more tractable descriptions. For instance, if the interaction is assumed to have no memory, which is rare in the mechanical world, then the simpler version of the plenhaptic function, with proper restrictions, could be viewed as a function in the ordinary sense. Such simplification is not even one-to-one, as the phenomenon of buckling, for instance, can cause different values to be obtained from the same displacements, even for purely elastic materials. Buckling is omnipresent in the behaviour of fabrics, foams and other common materials, during seemingly innocuous haptic interactions.
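The role of memory can be illustrated with a toy model. The sketch below uses a textbook play (backlash) operator, a stand-in chosen for simplicity rather than a model of buckling or of any tissue; the function name `play_operator` and its parameters are hypothetical. It shows two probe paths that end at the same displacement yet leave the touched element in different states, so the outcome is not a function of the current displacement alone.

```python
def play_operator(path, half_width, y0=0.0):
    """Return the final output y after the input x has followed `path`.

    The output only moves when the input pushes against either end of a
    dead band of width 2 * half_width centred on the input (backlash).
    """
    y = y0
    for x in path:
        # Clamp y into the interval [x - half_width, x + half_width].
        y = max(x - half_width, min(x + half_width, y))
    return y


if __name__ == "__main__":
    overshoot_then_return = [0.0, 2.0, 1.0]  # goes past 1.0 and comes back
    direct = [0.0, 1.0]                      # goes straight to 1.0
    print(play_operator(overshoot_then_return, 0.5))
    print(play_operator(direct, 0.5))
```

Both paths end with the input at 1.0, but the outputs differ because the first path dragged the element further before returning.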

Another approximation, yet a questionable one, is ignoring the pronounced viscoelastic and hysteretic properties of the tissues engaged in haptic interaction. In spite of all these simplifications, a large number of dimensions is still required to express the plenhaptic function, exceeding 10 in most practical situations, and justifying the further examination of special cases.

Clearly, there is an entire hierarchy of possible simplifications. It can be argued that approximating the continuum, b, by a rigid displacement, d, and the continuum, a, by a deflection, δ, among other possibilities, may cause an irremediable loss of potentially available sensory information. Less drastic simplifications could consider, for example, the movements of surfaces or lines instead of volumes, although then the function would remain infinite-dimensional.

(d). Neglecting the influence of the initial point of contact

Large mutual displacements of solid objects yield rolling, sliding or damaging interactions. Rolling is defined as those mutual movements and deformations such that each pair of coinciding points, one on each object, has an identically zero relative velocity, inside a finite region of contact. Sliding is when there are no points in the mutual contact having zero relative velocity. Damage is when there are new surfaces created in the object, in the probe or in both.

Simplifications of the plenhaptic function, including where the value of h is reduced to a finite-dimensional displacement of the surface points, can be obtained by assuming that the effect of the initial point of contact can partially or completely vanish when mutual displacements are large. These simplifications are possible only under strict assumptions, as it is easy to eliminate some of the most informative aspects of an interaction, for instance, if deformations propagate at a distance inside the objects in contact.

In cases of rolling and sliding, reduced forms of the plenhaptic function can be obtained by replacing the true point of initial contact, p, by a fictitious point of contact and the displacement, d(t), by a truncation which would have the same effect as the true version at a given instant. Such simplification is not valid when there is damage or when there is plastic deformation. Also, truncating trajectories too early in the past can be detrimental to an accurate description of an interaction (see for instance [11]).

The case of wielding or moving objects may be viewed as a case where there is neither sliding nor rolling between the hand and a held object. It suggests a further simplification of the plenhaptic function where perceivers have access to simplifications that do not depend on p.

(e). Rigid objects and rigid probe

The case of a rigid object touched by a rigid probe does not seem to have immediate biological relevance, except perhaps with a hoof (or a shoe) against a rock. Yet, it has industrial importance as it is the basis of calipers, profilometers and coordinate measurement machines that are engineered such that contact deformations may be neglected. Then, the function simplifies and its value can be restricted; that is, the object can be found by determining the portion of space where there exists a small interference with the probe.

If the probe is a sphere of curvature greater than the curvature of the concave regions of the touched object, then the shape can be recovered from d given appropriate assumptions regarding the surface of the unknown object. If these assumptions do not hold, the shape is difficult or impossible to recover owing to the possibility of multiple points of contact. In any case, the task of recovering shape is bound to be time-consuming as, even in the case of continuous contact, the information collected is at best curves on the surface of the probed object from which shape cannot be extracted without special assumptions.
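The condition on probe curvature can be illustrated in two dimensions. The sketch below is a simplified stand-in, not a method from the article: a rigid disc of radius `r` rests on a height profile `z`, the recorded centre height is the largest height the profile forces on the disc, and the shape estimate is the lower envelope of all recorded disc positions. All function names and the sampled profiles are hypothetical. A wide valley that is less curved than the disc is recovered to within the grid resolution, whereas a slot narrower than the disc is almost invisible.

```python
import math


def disc_half_chords(r, dx):
    """Vertical distance from disc centre to rim at lateral offsets k * dx."""
    m = int(r / dx)
    chords = [math.sqrt(max(0.0, r * r - (k * dx) ** 2)) for k in range(-m, m + 1)]
    return m, chords


def centre_heights(z, dx, r):
    """Height of the disc centre when the disc rests on profile z."""
    m, s = disc_half_chords(r, dx)
    n = len(z)
    return [
        max(z[i + k] + s[k + m] for k in range(-m, m + 1) if 0 <= i + k < n)
        for i in range(n)
    ]


def recovered_profile(c, dx, r):
    """Lower envelope of the disc over all recorded centre positions."""
    m, s = disc_half_chords(r, dx)
    n = len(c)
    return [
        min(c[i + k] - s[k + m] for k in range(-m, m + 1) if 0 <= i + k < n)
        for i in range(n)
    ]


if __name__ == "__main__":
    # Wide valley: radius of curvature 4 at the bottom, probe radius 1.
    dx, r = 0.05, 1.0
    valley = [((i - 40) * dx) ** 2 / 8.0 for i in range(81)]
    estimate = recovered_profile(centre_heights(valley, dx, r), dx, r)
    print("largest valley error:", max(abs(a - b) for a, b in zip(valley, estimate)))

    # Narrow slot of depth 1 in a flat surface, probe radius 5 grid steps.
    slot = [0.0] * 21
    slot[10] = -1.0
    estimate = recovered_profile(centre_heights(slot, 1.0, 5.0), 1.0, 5.0)
    print("true slot depth:", slot[10], "estimated:", estimate[10])
```

The estimate never lies below the true profile, which reflects the fact that a rigid probe can only report where it was stopped.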

The sharp ends of the vibrissae of whisking animals are well adapted to speed up the sampling of the plenhaptic function for this purpose, provided that their deformation is minimized and that they are sufficiently numerous to provide information at the length scale given by their mutual separation. On the other hand, if the task asked from the users of force-feedback devices is to experience shape, then this task is close to impossible to perform at perceptual speeds.

(f). Rigid objects relative to the probe

This case also has common practical importance, including that of the human fingers. In the simplifying case of a stationary object, the displacements of the points of the surface of the touched object are zero regardless of the movements of the probe. If the perceptual task is to determine the shape of a touched object, then the problem is difficult. Yet, because the perceptual problem simplifies dramatically when an object can be determined to be stationary and rigid, this determination is a problem that comes before that of perceiving its shape [12]. Then, the task is to find those objects that are the most likely to satisfy 0 = h(b) (see [13] for an approach to this problem).

If the object in question is mobile, the perceptual problem becomes much more complicated as the perceiving organism must distinguish, in the modifications made to its own anatomy, those owing to the external object's properties from those owing to relative movement. It must be noted that the case of the rigid object relative to the probe does not preclude simplifications similar to those mentioned in §2e.

(g). Rigid probe and deformable object

As a rule, humans use rigid tools. Rigid implements could be thought to simplify the perceptual problem. If the probe is sufficiently sharp, we could model the interaction by δ = h(p, d), where d is the displacement of the tip. If local deformation with no slip is assumed, then the plenhaptic function can take an even simpler form, h(p, d) = d–p, which says that the touched object tracks the probe at the place of contact. Thus, with a rigid probe, it is not possible to simultaneously sense material properties, gained through large δ, and shape, requiring δ to be small.

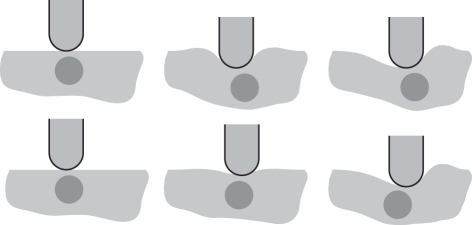

Surgeons manipulating instruments against soft tissues, for instance, must not only contend with this alternative, but must cope with, and take advantage of, the fact that the plenhaptic function may not be single-valued. An example is illustrated in figure 3 and its caption.

Figure 3.

Consider a rigid probe touching an elastic solid with a hard inclusion. A first probe trajectory first indents the object and then moves sideways (top row). A second trajectory first moves sideways and then indents the object (bottom row). For the same initial and final displacements of the probe, the resulting distribution of displacements in the object is different, yet not excluding the possibility that further straining of the object might eventually give the same final configuration (example loosely adapted from [14]).

(h). Pastes, sand, liquids, etc.

When a or b exceeds certain thresholds, most interactions with solids give rise to irreversible interactions, such as those involving plastic deformations. Some solids have dominant irreversible properties, such as pastes, or aggregate materials, such as sand. Interactions with these solids have a propensity to resist reductions of the plenhaptic function. These interactions give different deformations for the same movements, as these values potentially depend on all past trajectories, and the same deformations can be achieved with different movements.

An interesting case is that of touching a liquid. Quasi-statically, and ignoring the meniscus, we can consider this to be a limiting case as liquids displace to copy the shape of the probe, which is to say that a ≈ b in a domain. A major difference between sands and liquids, however, is related to the length scale of local deformations at the surface of the probe. A similar observation can be made regarding the notion of roughness of a surface [15].

(i). Differences with vision or audition

At this point, it is worth returning to the comparison of touch with vision or audition. Each eye may be different, but the plenoptic function does not depend on each eye. The plenhaptic function, in contrast, depends on the shape and on the mechanics of the probe, as what can potentially be felt depends on it. With vision or audition, the sensitive probes do not change what can potentially be seen or heard. An ear changes the acoustic field only by a tiny amount. Another difference with vision and audition is the possibility for irreversible interactions as commented above. Irreversibility is of no concern with vision and audition: we do not change an object by looking at it. This is not to say, however, that the perceived object could not change its state through cognitive awareness [16].

3. What can potentially be measured

Whereas in vision the question of what can be measured can be settled by supposing that what is sensed is light intensity, the haptic sense does not lend itself to straightforward analysis.

(a). Mechanical sensing

Mechanical sensors operate on the basis of the detection of movement. A most relevant type of movement is deformation, that is, small relative displacements in a solid. In the simplest case of a homogeneous solid undergoing a small deformation, to a first order, each infinitesimal sphere surrounding every point, when strained, becomes a rotated ellipsoid. According to continuum mechanics theory, small strain can be represented by the so-called deformation tensor, expressing elongation and shear.

These dimensional changes, in general, cause modifications of other non-mechanical characteristics that trigger transduction from the mechanical domain to the electrical or chemical domain. Therefore, what can potentially be measured is at least a field of deformation tensors in a volume requiring nine coordinates to specify. It must be stressed that it is not forces, more generally not stresses, that are at the basis of measurements, but relative displacements inside a volume.
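The standard small-strain construction can be sketched as follows. It takes a 3×3 displacement gradient, keeps its symmetric part, and lists the six entries that determine it. The function names are hypothetical, and this is textbook continuum mechanics rather than anything specific to tissue. The example shows that a small rigid rotation produces no strain, consistent with the remark that relative displacements, not movement as such, are what a sensor can register.

```python
def small_strain(grad_u):
    """Symmetric part of a 3x3 displacement gradient (list of lists)."""
    return [
        [0.5 * (grad_u[i][j] + grad_u[j][i]) for j in range(3)]
        for i in range(3)
    ]


def independent_components(strain):
    """The six entries that determine a symmetric 3x3 tensor."""
    return [strain[i][j] for i in range(3) for j in range(i, 3)]


if __name__ == "__main__":
    w = 0.01  # small rotation about the third axis
    rotation = [[0.0, -w, 0.0], [w, 0.0, 0.0], [0.0, 0.0, 0.0]]
    g = 0.02  # simple shear
    shear = [[0.0, g, 0.0], [0.0, 0.0, 0.0], [0.0, 0.0, 0.0]]
    print(independent_components(small_strain(rotation)))
    print(independent_components(small_strain(shear)))
```

One reading of the coordinate count, offered here as an assumption, is that these six components are specified at each point of a volume, which itself takes three position coordinates.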

(b). What is not likely to be measured

The notion of tensor of strain, in turn, depends on the notion of homogeneity, which specifies that material properties must vary smoothly throughout a volume. At most length scales, however, tissues lack homogeneity as is apparent in the structure of cells, or networks of connective fibres. The notion of homogeneity relates to a sensing function rather than to how a sensor is made. Accordingly, the direct applicability of continuum mechanics to the analysis of the sensory function of tissue may be put into question. The highly organized nature of tissues may be thought to privilege certain modes of deformation deviating considerably from the picture painted by continuum mechanics. This organization is expected to yield drastic simplifications in the measurements.

The question of function can be well illustrated by means of the commonplace notion that touch is the sense of pressure. Pressure at the surface of a solid corresponds to the distribution of normal forces per unit of area. Inside a solid, pressure is the invariant trace of the stress tensor, ε, which corresponds to a change of volume in the material. It is quite apparent that we do not sense pressure, as we can dive without feeling it; nevertheless, our ears hurt if we do not equalize pressure in the ear's inner compartment with ambient pressure. Fishes, in contrast, have the ability to sense hydrostatic pressure [17], exemplifying the functional specialization of what is measured. If humans are insensitive to hydrostatic pressure, then the receptors embedded in them must be ‘sensorially incompressible’, which makes them insensitive to certain aspects of what can be sensed.
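One way to picture a ‘sensorially incompressible’ receptor, under the assumption (made here only for illustration) that it responds to the shape-changing part of a deformation and not to its volume-changing part, is the usual split of a symmetric 3×3 tensor into a mean part and a deviatoric part. The function names below are hypothetical. Adding a uniform compression changes the trace but leaves the deviatoric part, and therefore the modelled response, untouched.

```python
def trace(tensor):
    """Sum of the diagonal entries of a 3x3 tensor (list of lists)."""
    return sum(tensor[i][i] for i in range(3))


def deviatoric(tensor):
    """Remove the mean (volume-changing) part, keeping shape change only."""
    mean = trace(tensor) / 3.0
    return [
        [tensor[i][j] - (mean if i == j else 0.0) for j in range(3)]
        for i in range(3)
    ]


def add_uniform(tensor, amount):
    """Superimpose the same amount on every diagonal entry."""
    return [
        [tensor[i][j] + (amount if i == j else 0.0) for j in range(3)]
        for i in range(3)
    ]


if __name__ == "__main__":
    local = [[0.02, 0.01, 0.0], [0.01, -0.01, 0.0], [0.0, 0.0, 0.0]]
    submerged = add_uniform(local, -0.05)  # uniform compression on top
    print(deviatoric(local))
    print(deviatoric(submerged))
```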

(c). Some examples

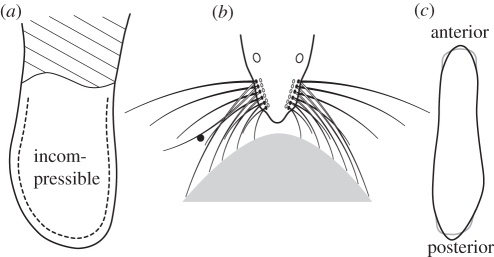

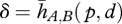

It can be speculated that the functional organization of mechanical sensing goes a long way to selectively simplify sensing. In other words, should each mechanoreceptor have the ability to distinguish all the individual components of deformation? Most likely not. In this section, we discuss three examples to illustrate the idea of sensing reduction: the human finger, the whisker system and a haptically skilled, single-cell organism represented in figure 4.

Figure 4.

Haptic samplers. (a) Schematic of a primate finger. The hatched region represents (intentionally without metric accuracy) the regions that move rigidly during local contact. These regions include the bone and any other parts of the body that are not affected by the contact other than by a statically equivalent system of forces. It is crucial, in contrast, that the tissues are incompressible and that receptors are located just beneath the surface, at a small distance compared with other characteristic distances. (b) Schematic of the rat whisking system. Rats have at least two behavioural patterns involving repeatedly tapping objects with the tips (in grey) through synchronized whisking [18], or engaging a subset of whiskers that interact with an edge or with a small object (black circle) at some location along the whisker, inverting its curvature [19]. (c) Sensitive regions of the paramoecium's membrane. Anterior stimulation triggers backward, turning swimming. Posterior stimulation triggers forward swimming, after delay, and at lower thresholds [20].

(i). The human finger

In the human finger, one type of shallow touch receptor exhibits an axisymmetric shape organized in stacks of discoids connectively attached in all directions to the walls of encapsulating pits with axes oriented orthogonally to the surface [21–24]. Another type of shallow receptor has the shape of arborescent cell-neurite complexes located at the basal epidermal layer. Its function is still obscure, but it is not found deeper than 700 µm [25,26]. The distribution of these receptors in a thin sheet beneath the surface (see figure 4a and caption) begs the question of what could be sensed by the superficial layers of the skin.

For a moment, let us ignore the fact that the sheet of receptors is at a distance beneath the skin surface, that is to say, let us consider contacting objects at sufficiently large spatial frequencies. It is known that the finger skin responds physiologically to the curvature of such objects [27]. It is however wholly unlikely that curvature be sensed, as the ability to measure curvature decreases with the thickness of a shell. If curvature is not sensed, then it must be that it is the consequences of curvature that are sensed.

As a relaxed fingertip resembles a minimal surface, any contact with an object of greater curvature will result in an increase in its surface. This is not to say that the change of surface is the only cue that allows one to sense shape. There might be other cues. For instance, the gross shape of the contact area itself is a cue to the shape of a contacting object [12,28]. See §4 for a refutation that all components of deformation determine the perception of shape.

Besides its round shape, the human finger does not exhibit any obvious feature to simplify the plenhaptic function (which makes it a versatile organ) but, by necessity, certainly relies on simplified sensing. Under the assumption that only changes of skin surface are sensed in the low temporal frequencies, the dimensional reduction of the sensory space would be from a six-dimensional tensor field in a volume to a one-dimensional tensor field on a surface. In the previous discussion, any reference to time and time dependencies induced by the recovery of tissues is absent. Time dependencies, however, are certainly essential to increasing sensing options and resolving ambiguities, pointing to the probable importance of the finger's biomechanics.

(ii). Whiskers

Many mammals have whiskers that are represented in figure 4b and commented on in the caption. In §2 we found that touching objects with rigid probes simplified the plenhaptic function greatly to the point of rendering it uninformative, unless many contacts are made simultaneously. The behaviour and the anatomy of certain animals, such as rats, could be interpreted in terms of the efficient sampling of the plenhaptic function.

During exploratory whisking, interaction timing is driven by the contact with an object [29]. The small size of the contact region owing to active retraction after contact shows that, in this case, the interaction is to be seen as that of a rigid probe against a rigid object as in §2e, justifying the need to increase the density of individual contacts in space and in time.

A second type of behaviour in the rat, in contrast, involves bending a whisker against an object, causing the interaction to fall into the case examined in §2f, 0 = h(b). It has been shown that rats can determine the point of contact of the shaft of the whisker with an object [19]. This performance implies that the rats must be using a simplified version of the plenhaptic function of the form δ = h(p, d(t)), as noted in §2b, with which they can find p through the knowledge of the mechanical properties of the whiskers and given a trajectory, d, resulting from the active movement of the root of the whisker.

Another possibility is the use of the whiskers as a tuned harp where the plenhaptic function would be sampled to discriminate textures during brushing [30,31].

(iii). Paramoecium

The paramoecium is a unicellular organism which has found a way to sample the plenhaptic function with the resources of a single cell [20,32]. This organism swims freely by oscillating its cilia, which can propel the animal forward or backward. Mechanical stimulation in the anterior region triggers fast backward swimming, including a turning component. Posterior stimulation, owing to hydrodynamic pressure, triggers forward swimming. The differences between anterior and posterior sensing are that anterior sensing is less sensitive than posterior sensing and that anterior sensing has a shorter reaction time than its posterior counterpart. The result of such ‘one bit’ sensing is the automatic sampling of a paramoecium's plenhaptic function through stereotypical sensorimotor behaviour.
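The stereotyped behaviour described above can be written as a small decision rule. The sketch below is only a caricature: the thresholds and delays are hypothetical numbers in arbitrary units, chosen so that their ordering matches the text (anterior sensing is less sensitive but faster than posterior sensing), and the names `Response` and `respond` are invented for the example.

```python
from dataclasses import dataclass
from typing import Optional

# Hypothetical values in arbitrary units; only their ordering follows the text.
ANTERIOR_THRESHOLD = 2.0   # less sensitive: needs a stronger stimulus
POSTERIOR_THRESHOLD = 1.0  # more sensitive: responds to a weaker stimulus
ANTERIOR_DELAY = 0.1       # shorter reaction time
POSTERIOR_DELAY = 0.5      # forward swimming starts after a delay


@dataclass(frozen=True)
class Response:
    action: str
    delay: float


def respond(site: str, intensity: float) -> Optional[Response]:
    """Map a stimulus (site, intensity) to a stereotyped swimming response."""
    if site == "anterior" and intensity >= ANTERIOR_THRESHOLD:
        return Response("swim backward and turn", ANTERIOR_DELAY)
    if site == "posterior" and intensity >= POSTERIOR_THRESHOLD:
        return Response("swim forward", POSTERIOR_DELAY)
    return None  # below threshold: keep swimming as before


if __name__ == "__main__":
    for site in ("anterior", "posterior"):
        for intensity in (0.5, 1.5, 3.0):
            print(site, intensity, respond(site, intensity))
```

Each region contributes a single above-threshold or below-threshold decision, which is the sense in which the sensing can be called ‘one bit’.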

4. Tactile illusions

Like all haptic systems, the human haptic system has, in essence, access to low-dimensional simplifications of the plenhaptic function that are determined by its motoric and sensory capabilities. These projections are in turn sampled in time and space, notably through relatively small contact surfaces, giving the nervous system the task to recover the desired object attributes that are needed to accomplish a desired manipulative or perceptual task.

Tactile illusions, which correspond to percepts that seem to defy expectations [33], can be discussed in terms of the sampling of the plenhaptic function. Owing to space limitations, only two examples follow, appealing to different aspects of tactile perception. They may be viewed as resulting from the processes used by the nervous system to convert a complex problem into a manageable set of computational tasks, such that these problems can be solved at perceptual speeds.

(a). Illusion resulting from locally stretching the skin

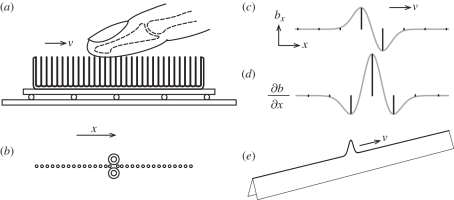

The hypothesis that small-scale shape can be sensed through the measurement of small changes of the finger surface, and not through the measurement of curvature, can be tested as follows. The test involves an apparatus, sketched in figure 5a, like that described by Hayward [33]. Its purpose is to deform the skin locally by differential traction, as further described in the caption of figure 5. Barring the discretization introduced by the experimental contraption, the function, h, represents the case of a frictionless, time-invariant interaction between a deformable probe and a deformable, stationary object. The perceptual problem is to determine the nature of the interacting object from measurements resulting from the surface strain variations illustrated in figure 5d [34].

Figure 5.

Apparatus to cause surface changes. (a) A plastic fine-pitched comb is attached to a linear guide allowing motion relative to two miniature wheels (<5 mm) along a direction, x, at velocity, v, but importantly without relative slip with a finger. (b) Miniature wheels deflect the bristles sideways. (c) Deflection pattern (discretized, black) and skin deflection smoothed by the tissue mechanics (grey). (d) Smoothed surface strain (grey) results from the spatial gradient of displacement. The simplified plenhaptic function is projected onto one single stretch component moving at apparent velocity, v. (e) Resulting percept.

The illusion is likely to result from the nervous system's attempt to solve a shape problem by assuming that the touched object is stationary and rigid. The problem is to find those shapes that satisfy 0 ≃ h(b). The result is a percept, as in figure 5e, that does not depend on whether the relative displacement is caused by the subject or by an external agent. It does not depend on pressure either, which is uniform along the line, and is unrelated to the movements along x.
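The chain described in figure 5c,d, in which a discretized deflection pattern is smoothed by the tissue and the surface strain follows from the spatial gradient of displacement, can be sketched numerically. The following is a minimal illustration under stated assumptions: a Gaussian kernel stands in for the tissue mechanics, the deflection profile is an arbitrary bump, and the names `surface_strain`, `pitch` and `sigma`, as well as all numerical values, are hypothetical rather than taken from the apparatus.

```python
import numpy as np


def surface_strain(x, displacement, sigma):
    """Smooth a tangential displacement profile and differentiate it.

    A Gaussian kernel of width `sigma` (same units as `x`) is an assumed
    stand-in for the smoothing performed by the tissue. Returns the
    smoothed displacement and its spatial gradient (the surface strain).
    """
    dx = x[1] - x[0]
    half = int(np.ceil(4.0 * sigma / dx))
    offsets = np.arange(-half, half + 1) * dx
    kernel = np.exp(-0.5 * (offsets / sigma) ** 2)
    kernel /= kernel.sum()
    # Edge padding keeps the output the same length as the input.
    padded = np.pad(displacement, half, mode="edge")
    smoothed = np.convolve(padded, kernel, mode="valid")
    strain = np.gradient(smoothed, dx)
    return smoothed, strain


x = np.linspace(-10.0, 10.0, 401)            # position along the skin (mm)
pitch = 0.5                                   # assumed bristle pitch (mm)
bristle = np.round(x / pitch) * pitch         # nearest bristle position
deflection = 0.2 * np.exp(-(bristle / 2.0) ** 2)  # staircase bump (mm)
smoothed, strain = surface_strain(x, deflection, sigma=0.5)
```

In this sketch, a localized sideways deflection yields stretch on one side of the deflected region and compression on the other, which is the single strain component onto which the simplified plenhaptic function is projected.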

(b). Curved plate

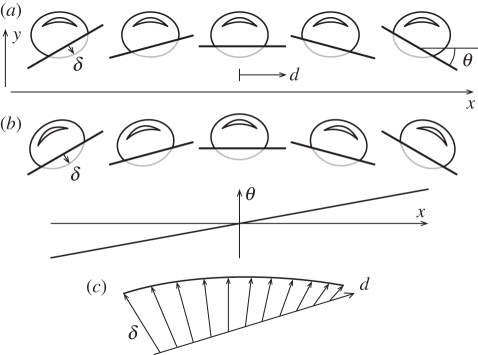

Some reductions of the plenhaptic function can be illustrated using an apparatus that artificially enforces a correlation between the orientation of a plate and the finger's rigid displacement [35]. See figure 6 for its representation along a single direction, x.

Figure 6.

Stimuli causing the experience of curvature. (a) Correlation between the orientation, θ, of a plate and the finger's rigid displacement, d, along a single direction, x. The interaction description is simplified to a single deflection, δ. A first motor strategy maintains the probe at a fixed orientation. (b) Another sensorimotor strategy maintains the contact invariant. Barring differences in sensing, this strategy provides the same artificially dimension-reduced plenhaptic function, here a single curve, as in (c).

A motor strategy that maintains the probe at a fixed orientation, as in figure 6a, results in a projected plenhaptic function, as in figure 6c, that provides a robust percept of curvature [36]. A different sensorimotor strategy, as in figure 6b, that maintains the contact invariant provides equivalent sensory information, up to sensing constraints, by means of distant deformation, i.e. by proprioception [37].

Here, the rigid displacement, d, owing to the localization of the contact, can be viewed as a one-dimensional variety in the three-dimensional group of x–y–θ displacements in the plane, with one direction, y, constrained by the contact, leaving freedom in an x–θ subspace. Barring differences in sensing, this strategy provides the same artificially dimension-reduced plenhaptic mapping as in figure 6c. When experiencing a curved object, the nervous system similarly solves 0 ≃ h(d) assuming, again, that the touched object is rigid, stationary and frictionless [12].
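The enforced correlation between plate orientation and displacement can be illustrated with a short numerical sketch. It assumes, for illustration only, that the plate is driven to follow the tangent of a circular arc, so that sin θ = d/R, and that curvature is read out as the slope of θ against d in the small-angle regime. The function names and numerical values are hypothetical and do not describe the apparatus of [35].

```python
import numpy as np


def plate_orientation(d, radius):
    """Orientation (rad) mimicking the tangent of a circular arc.

    Assumes the contact travels along a circle of the given radius, so
    that sin(theta) = d / radius.
    """
    return np.arcsin(d / radius)


def apparent_curvature(d, theta):
    """Least-squares slope of theta against d (1/length units).

    In the small-angle regime this slope approximates the curvature of
    the simulated surface.
    """
    slope, _intercept = np.polyfit(d, theta, 1)
    return slope


d = np.linspace(-0.02, 0.02, 41)       # displacement along x (m)
theta = plate_orientation(d, radius=0.5)
kappa = apparent_curvature(d, theta)   # close to 1 / 0.5 = 2 per metre
```

The single curve θ(d) carries the curvature information here, which is consistent with the idea that a one-dimensional projection of the plenhaptic function can suffice for the percept.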

5. Conclusion

The task of haptics, which is to know and manipulate objects by touch, is thus formidable. The sheer number of dimensions in which it operates allows many ambiguities to arise, and these can be constructed as easily as in vision. Ambiguities arise in the dynamics of wielded objects [38], but other types of ambiguities can be created by introducing symmetries in low-dimensional projections of the plenhaptic function, as in earlier studies [39,40], or from the basic laws of mechanics [34]. By the same token, a single, isolated moving object can create very different projections of the plenhaptic function [41].

One task of the nervous system is to sort out these ambiguities at speeds that are compatible with survival. To succeed, the nervous system must use something that could be compared with David Marr's visual bag of tricks [42], except that the haptic bag may be considerably larger than the vision bag, and may be quite different, as it would be very difficult for an organism to have perfect knowledge of its own mechanical state. It would not be surprising if the nervous system, at all levels of its hierarchy, deployed good tricks that are robust to the difficulties arising from the impractically high dimensionality of the plenhaptic function, not to mention the unavoidable noise introduced by the afferent and efferent organs.

Acknowledgements

The idea of the existence of the plenhaptic objects owes much to the work of the author's former collaborators, specifically Mohsen Mahvash who used it to perform synthesis, the inverse of perception, Andrew H. Gosline who explored the argument ḋ, and Gianni Campion who actually suggested its name. It appeared implicitly in an article by Philip Fong, but in a simplified form [43]. The author is indebted to Alexander V. Terekhov, Irene Fasiello and Jonathan Platkiewicz for illuminating discussions and help leading to the present draft. Helpful comments from the reviewers are also gratefully acknowledged. This work was supported by the European Research Council (FP7 Programme) ERC Advanced Grant agreement no. 247300.

References

- 1. Goff G. D. 1967. Differential discrimination of frequency of cutaneous mechanical vibration. J. Exp. Psychol. 74, 294–299. doi:10.1037/h0024561

- 2. van Doren C. L. 1989. A model of spatiotemporal tactile sensitivity linking psychophysics to tissue mechanics. J. Acoust. Soc. Am. 85, 2065–2080. doi:10.1121/1.397859

- 3. Louw S., Kappers A. M. L., Koenderink J. J. 2000. Haptic detection thresholds of Gaussian profiles over the whole range of spatial scales. Exp. Brain Res. 132, 369–374. doi:10.1007/s002210000350

- 4. Gurfinkel V. S., Levik Y. S. 1993. The suppression of cervico-ocular response by the haptokinetic information about the contact with a rigid, immobile object. Exp. Brain Res. 95, 359–364. doi:10.1007/BF00229794

- 5. Norman J. F., Norman H. F., Clayton A. M., Lianekhammy J., Zielke G. 2004. The visual and haptic perception of natural object shape. Percept. Psychophys. 66, 342–351. doi:10.3758/BF03194883

- 6. Lederman S. J., Klatzky R. L., Abramowicz A., Salsman K., Kitada R., Hamilton C. 2007. Haptic recognition of static and dynamic expressions of emotion in the live face. Psychol. Sci. 18, 158–164. doi:10.1111/j.1467-9280.2007.01866.x

- 7. Giordano B. L., McAdams S., Visell Y., Cooperstock J., Yao H. Y., Hayward V. 2008. Non-visual identification of walking grounds. J. Acoust. Soc. Am. 123, 3412. doi:10.1121/1.2934136

- 8. Poincaré H. 1905. La science et l'hypothèse. Paris, France: Champs-Flammarion.

- 9. Mach E. 1906. Space and geometry. Chicago, IL: The Open Court Publishing Company.

- 10. Adelson E. H., Bergen J. R. 1991. The plenoptic function and the elements of early vision. In Computational models of visual processing (eds Landy M., Movshon J. A.), pp. 3–20. Cambridge, MA: MIT Press.

- 11. André T., Levesque V., Hayward V., Lefèvre P., Thonnard J. L. 2011. Effect of skin hydration on the dynamics of fingertip gripping contact. J. R. Soc. Interface 8. doi:10.1098/rsif.2011.0086

- 12. Hayward V. 2008. Haptic shape cues, invariants, priors and interface design. In Human haptic perception: basics and applications (ed. Grunwald M.), pp. 381–392. Basel, Switzerland: Birkhauser Verlag.

- 13. Ferrier N. J., Brockett R. W. 2000. Reconstructing the shape of a deformable membrane from image data. Int. J. Robot. Res. 19, 795–816. doi:10.1177/02783640022067184

- 14. Mahvash M., Hayward V. 2004. High fidelity haptic synthesis of contact with deformable bodies. IEEE Comput. Graphics Appl. 24, 48–55.

- 15. Wiertlewski M., Lozada J., Hayward V. 2011. The spatial spectrum of tangential skin displacement can encode tactual texture. IEEE Trans. Robot. 27, 461–472. doi:10.1109/TRO.2011.2132830

- 16. Auvray M., Lenay C., Stewart J. 2009. Perceptual interactions in a minimalist virtual environment. New Ideas Psychol. 27, 32–47. doi:10.1016/j.newideapsych.2007.12.002

- 17. Fraser P. J., Shelmerdine R. L. 2002. Dogfish hair cells sense hydrostatic pressure. Nature 415, 495–496. doi:10.1038/415495a

- 18. Grant R. A., Mitchinson B., Fox C. W., Prescott T. J. 2009. Active touch sensing in the rat: anticipatory and regulatory control of whisker movements during surface exploration. J. Neurophysiol. 101, 862–874. doi:10.1152/jn.90783

- 19. Ahissar E., Knutsen P. M. 2008. Object localization with whiskers. Biol. Cybernet. 98, 449–458. doi:10.1007/s00422-008-0214-4

- 20. Naitoh Y., Eckert R. 1969. Ionic mechanisms controlling behavioral responses of paramecium to mechanical stimulation. Science 164, 963–965. doi:10.1126/science.164.3882.963

- 21. Cauna N. 1954. Nature and functions of the papillary ridges of the digital skin. Anat. Rec. 119, 449–468. doi:10.1002/ar.1091190405

- 22. Paré M., Elde R., Mazurkiewicz J. E., Smith A. M., Rice F. L. 2001. The Meissner corpuscle revised: a multiafferented mechanoreceptor with nociceptor immunochemical properties. J. Neurosci. 21, 7236–7246.

- 23. Takahashi-Iwanaga H., Shimoda H. 2003. The three-dimensional microanatomy of Meissner corpuscules in monkey palmar skin. J. Neurocytol. 32, 363–371. doi:10.1023/B:NEUR.0000011330.57530.2f

- 24. Herrmann D. H., Boger J. N., Jansen C., Alessi-Fox C. 2007. In vivo confocal microscopy of Meissner corpuscules as a measure of sensory neuropathy. Neurology 69, 2121–2127. doi:10.1212/01.wnl.0000282762.34274.94

- 25. Halata Z., Grim M., Bauman K. I. 2003. Friedrich Sigmund Merkel and his ‘Merkel cell’, morphology, development, and physiology: review and new results. Anat. Rec. A 271A, 225–239. doi:10.1002/ar.a.10029

- 26. Lumpkin E. A., Caterina M. J. 2007. Mechanisms of sensory transduction in the skin. Nature 445, 858–865. doi:10.1038/nature05662

- 27. Goodwin A. W., Macefield V. G., Bisley J. W. 1997. Encoding object curvature by tactile afferents from human fingers. J. Neurophysiol. 78, 2881–2888.

- 28. Fearing R. S., Binford T. O. 1991. Using a cylindrical tactile sensor for determining curvature. IEEE Trans. Robot. Autom. 7, 806–817. doi:10.1109/70.105389

- 29. Mitchinson B., Martin C. J., Grant R. A., Prescott T. J. 2007. Feedback control in active sensing: rat exploratory whisking is modulated by environmental contact. Proc. R. Soc. B 274, 1035–1041. doi:10.1098/rspb.2006.0347

- 30. Ritt J. T., Andermann M. L., Moore C. I. 2008. Embodied information processing: vibrissa mechanics and texture features shape micromotions in actively sensing rats. Neuron 57, 599–613. doi:10.1016/j.neuron.2007.12.024

- 31. Zuo Y., Perkon I., Diamond M. E. 2011. Whisking and whisker kinematics during a texture classification task. Phil. Trans. R. Soc. B 366, 3058–3069. doi:10.1098/rstb.2011.0161

- 32. Jennings H. S. 1906. Behavior of lower animals. Bloomington, IN: Indiana University Press.

- 33. Hayward V. 2008. A brief taxonomy of tactile illusions and demonstrations that can be done in a hardware store. Brain Res. Bull. 75, 742–752. doi:10.1016/j.brainresbull.2008.01.008

- 34. Wang Q., Hayward V. 2008. Tactile synthesis and perceptual inverse problems seen from the view point of contact mechanics. ACM Trans. Appl. Percept. 5, 1–19. doi:10.1145/1279920.1279921

- 35. Dostmohamed H., Hayward V. 2005. Trajectory of contact region on the fingerpad gives the illusion of haptic shape. Exp. Brain Res. 164, 387–394. doi:10.1007/s00221-005-2262-5

- 36. Wijntjes M. W. A., Sato A., Hayward V., Kappers A. M. L. 2009. Local surface orientation dominates haptic curvature discrimination. IEEE Trans. Haptics 2, 94–102. doi:10.1109/TOH.2009.1

- 37. Sanders A. F. J., Kappers A. M. L. 2009. A kinematic cue for active haptic shape perception. Brain Res. 1267, 25–36. doi:10.1016/j.brainres.2009.02.038

- 38. Shockley K., Carello C., Turvey M. T. 2004. Metamers in the haptic perception of heaviness and moveableness. Percept. Psychophys. 66, 731–742. doi:10.3758/BF03194968

- 39. Robles De La Torre G. 2002. Comparing the role of lateral force during active and passive touch: lateral force and its correlates are inherently ambiguous cues for shape perception. In Proc. Eurohaptics 2002, pp. 159–164. See http://www.interaction-design.org/references/conferences/proceedings_of_eurohaptics_2002.html.

- 40. Smith A. M., Chapman C. E., Donati F., Fortier-Poisson P., Hayward V. 2009. Perception of simulated local shapes using active and passive touch. J. Neurophysiol. 102, 3519–3529. doi:10.1152/jn.00043.2009

- 41. Wexler M., Hayward V. 2011. Weak spatial constancy in touch. In Proc. 2011 IEEE World Haptics Conference (WHC), pp. 605–607. IEEE-Wiley eBooks Library. doi:10.1109/WHC.2011.5945554

- 42. Marr D. 1982. Vision. New York, NY: Freeman.

- 43. Fong P. 2004. Sensing, acquisition, and interactive playback of data-based models for elastic deformable objects. Int. J. Robot. Res. 28, 630–655. doi:10.1177/0278364908100326